

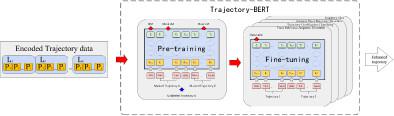

Trajectory-BERT: Pre-training and fine-tuning bidirectional transformers for crowd trajectory enhancement

Computer Animation and Virtual Worlds (IF 1.1), Pub Date: 2023-05-28, DOI: 10.1002/cav.2190

Lingyu Li, Tianyu Huang, Yihao Li, Peng Li



To address trajectory fragmentation and ID switches caused by occlusion in dense crowds, this paper proposes a space-time trajectory encoding method and a point-line-group division method, which together are used to construct Trajectory-BERT. Leveraging the spatiotemporal context dependencies of trajectories, we design pre-training and fine-tuning tasks for Trajectory-BERT that repair occluded trajectories. Experimental results show that data augmented with Trajectory-BERT achieves a higher MOTA than the raw annotated data and reduces the ID switches present in the raw labeled data, demonstrating the feasibility of our method.
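The evaluation claim above rests on two standard tracking quantities, MOTA and ID switches. As a reference point only, the sketch below computes both under the usual CLEAR MOT definitions, MOTA = 1 - (FN + FP + IDSW) / GT with all counts summed over frames. It is not the authors' evaluation code. The function names `count_id_switches` and `mota`, and the per-frame dictionaries mapping a ground-truth ID to its matched predicted track ID, are hypothetical inputs assumed to come from an upstream detection-to-ground-truth matcher.

```python
from typing import Dict, List


def count_id_switches(frames: List[Dict[int, int]]) -> int:
    """Count identity switches across a sequence of frames.

    Hypothetical input format: each element of `frames` maps a ground-truth
    object ID to the predicted track ID it was matched to in that frame.
    Unmatched (e.g. occluded) objects are simply absent from that frame.
    A switch is counted when an object is matched to a different predicted
    ID than the one it was last matched to, even across unmatched gaps.
    """
    last_match: Dict[int, int] = {}
    switches = 0
    for matches in frames:
        for gt_id, pred_id in matches.items():
            prev = last_match.get(gt_id)
            if prev is not None and prev != pred_id:
                switches += 1
            last_match[gt_id] = pred_id
    return switches


def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """MOTA = 1 - (FN + FP + IDSW) / GT, all counts summed over frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + idsw) / num_gt


if __name__ == "__main__":
    # Toy data, not from the paper: pedestrian 7 is tracked as track 1,
    # is missed while occluded in frame 2, then reappears as track 4.
    raw = [{7: 1}, {7: 1}, {}, {7: 4}]
    # After an (assumed) repair, the occluded frame is filled in and the
    # identity stays consistent throughout.
    repaired = [{7: 1}, {7: 1}, {7: 1}, {7: 1}]

    print(count_id_switches(raw))       # 1
    print(count_id_switches(repaired))  # 0
    print(mota(fn=1, fp=0, idsw=1, num_gt=4))  # 0.5
    print(mota(fn=0, fp=0, idsw=0, num_gt=4))  # 1.0
```

The toy case shows why repairing an occluded segment can improve both quantities at once: filling the gap removes a false negative, and keeping the identity continuous removes the switch that would otherwise be counted when the pedestrian reappears.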


Updated: 2023-05-28


