
Neural representation in active inference: Using generative models to interact with—and understand—the lived world

Annals of the New York Academy of Sciences (IF 5.2), Pub Date: 2024-03-25, DOI: 10.1111/nyas.15118

Giovanni Pezzulo 1, Leo D'Amato 1,2, Francesco Mannella 1, Matteo Priorelli 3, Toon Van de Maele 4, Ivilin Peev Stoianov 3, Karl Friston 5,6

Annals of the New York Academy of Sciences ( IF 5.2 ) Pub Date : 2024-03-25 , DOI: 10.1111/nyas.15118 Giovanni Pezzulo 1 , Leo D'Amato 1, 2 , Francesco Mannella 1 , Matteo Priorelli 3 , Toon Van de Maele 4 , Ivilin Peev Stoianov 3 , Karl Friston 5, 6

Affiliation

|

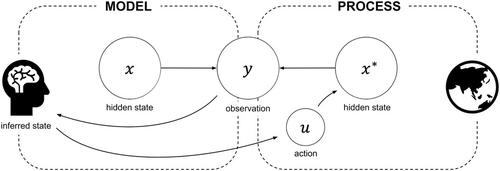

This paper considers neural representation through the lens of active inference, a normative framework for understanding brain function. It delves into how living organisms employ generative models to minimize the discrepancy between predictions and observations (as scored with variational free energy). The ensuing analysis suggests that the brain learns generative models to navigate the world adaptively, not (or not solely) to understand it. Different living organisms may possess an array of generative models, spanning from those that support action-perception cycles to those that underwrite planning and imagination; namely, from explicit models that entail variables for predicting concurrent sensations, like objects, faces, or people—to action-oriented models that predict action outcomes. It then elucidates how generative models and belief dynamics might link to neural representation and the implications of different types of generative models for understanding an agent's cognitive capabilities in relation to its ecological niche. The paper concludes with open questions regarding the evolution of generative models and the development of advanced cognitive abilities—and the gradual transition from pragmatic to detached neural representations. The analysis on offer foregrounds the diverse roles that generative models play in cognitive processes and the evolution of neural representation.


Updated: 2024-03-25
