Evolutionary Search via channel attention based parameter inheritance and stochastic uniform sampled training

Computer Vision and Image Understanding (IF 4.5), Pub Date: 2024-03-28, DOI: 10.1016/j.cviu.2024.104000. Authors: Yugang Liao, Junqing Li, Shuwei Wei, Xiumei Xiao

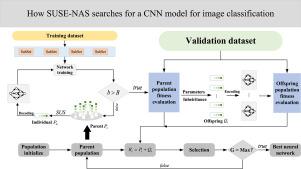

The performance of convolutional neural networks is strongly affected by their architecture, and various algorithms have been developed to automate the selection of network structures. Recently, evolutionary neural architecture search (ENAS) methods have attracted increasing attention because of their strong global search capability. However, ENAS can be computationally expensive, because the evolutionary process typically requires many performance evaluations. This study introduces a framework that uses evolutionary search to design a lightweight channel attention convolutional network, and it addresses this cost with stochastic uniform sampled training and parameter inheritance. The lightweight channel attention method is applied to the model so that it extracts features more effectively while using fewer parameters. In this framework, parent individuals are stochastically sampled and repeatedly trained on each mini-batch of the training dataset. The parameter inheritance strategy makes it possible to assess the fitness of offspring individuals without fully training each offspring candidate network. Compared with existing methods on standard benchmarks (CIFAR-10, CIFAR-100, ImageNet) and real-world datasets (NEU-CLS, Chest Xray2017), our model achieved search efficiency that is superior by several orders of magnitude. Our experiments showed that the Stochastic Uniform Sampled Evolutionary Network (SUSE-Net) achieved the lowest error rate among state-of-the-art NAS models, with 2.55% on CIFAR-10, 14.8% on CIFAR-100, and 23.2% on ImageNet. These results indicate that the proposed algorithm is not only more computationally efficient but also competitive with existing methods in terms of learning performance.
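The abstract describes two cost-saving ideas: each mini-batch trains a parent drawn uniformly at random from the population, and offspring are scored with inherited parameters instead of being trained. The toy sketch below illustrates both under stated assumptions and is not the authors' implementation. The search space (`NUM_LAYERS` positions, `NUM_OPS` candidate ops), the scalar "network", the y = 3x regression data and all function names are hypothetical. Inheritance is modelled here as a shared weight table keyed by (layer, op), so a mutated child reuses whatever its parent trained; the paper may instead copy weights from parent to child.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical toy search space (not from the paper): each architecture picks
# one of NUM_OPS candidate operations at each of NUM_LAYERS positions.
NUM_LAYERS = 4
NUM_OPS = 3

Arch = Tuple[int, ...]                   # one op index per layer
Weights = Dict[Tuple[int, int], float]   # shared parameters keyed by (layer, op)
Sample = Tuple[float, float]             # (input x, target y)


def forward(arch: Arch, weights: Weights, x: float) -> float:
    """Toy 'network': sum of the selected ops' scalar weights, times x."""
    return sum(weights[(layer, op)] for layer, op in enumerate(arch)) * x


def train_step(arch: Arch, weights: Weights, batch: List[Sample], lr: float = 0.01) -> None:
    """One SGD step on mean squared error; only the weights `arch` uses are updated."""
    grad = sum(2.0 * (forward(arch, weights, x) - y) * x for x, y in batch) / len(batch)
    for layer, op in enumerate(arch):
        weights[(layer, op)] -= lr * grad


def fitness(arch: Arch, weights: Weights, val: List[Sample]) -> float:
    """Negative validation MSE, computed with inherited weights and no training."""
    return -sum((forward(arch, weights, x) - y) ** 2 for x, y in val) / len(val)


def mutate(parent: Arch, rng: random.Random) -> Arch:
    """Offspring = parent with the op at one random layer resampled."""
    child = list(parent)
    child[rng.randrange(NUM_LAYERS)] = rng.randrange(NUM_OPS)
    return tuple(child)


def evolve(generations: int = 5, pop_size: int = 6, batches_per_gen: int = 20, seed: int = 0):
    rng = random.Random(seed)
    weights: Weights = {(layer, op): 0.0 for layer in range(NUM_LAYERS) for op in range(NUM_OPS)}
    data = [(i / 10.0, 3.0 * i / 10.0) for i in range(1, 21)]  # toy target: y = 3x
    train, val = data[:15], data[15:]
    population = [tuple(rng.randrange(NUM_OPS) for _ in range(NUM_LAYERS))
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Stochastic uniform sampled training: each mini-batch trains one parent
        # drawn uniformly at random from the current population.
        for _ in range(batches_per_gen):
            batch = rng.sample(train, 4)
            train_step(rng.choice(population), weights, batch)
        # Parameter inheritance: offspring reuse the shared weights directly,
        # so their fitness is estimated without training them.
        offspring = [mutate(rng.choice(population), rng) for _ in range(pop_size)]
        candidates = list(dict.fromkeys(population + offspring))  # dedupe, keep order
        candidates.sort(key=lambda a: fitness(a, weights, val), reverse=True)
        population = candidates[:pop_size]
    best = population[0]
    return best, fitness(best, weights, val), weights
```

Calling `best, score, _ = evolve()` returns the fittest architecture and its validation score. The point of the design is that only `batches_per_gen` training steps are spent per generation, however many offspring are evaluated.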

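The abstract does not name its lightweight channel attention method. As an assumption, the sketch below uses an ECA-style (Efficient Channel Attention) gate, a well-known lightweight design: average-pool each channel, mix neighbouring channel descriptors with a small 1-D convolution, squash with a sigmoid, and rescale the channels. It uses plain nested lists instead of a tensor library, and the function name `eca_channel_attention` and its `kernel` argument are hypothetical.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [channel][row][col]


def eca_channel_attention(x: FeatureMap, kernel: List[float]) -> FeatureMap:
    """ECA-style channel attention (assumed): GAP -> 1-D conv over channels -> sigmoid -> rescale."""
    if len(kernel) % 2 == 0:
        raise ValueError("kernel size must be odd so padding is symmetric")
    num_channels, pad = len(x), len(kernel) // 2
    # 1) Squeeze: global average pooling gives one scalar descriptor per channel.
    desc = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    gates = []
    for i in range(num_channels):
        # 2) Local cross-channel interaction: zero-padded 1-D convolution over
        #    neighbouring channel descriptors (k weights, no fully connected layers).
        s = sum(w * desc[i + j - pad]
                for j, w in enumerate(kernel)
                if 0 <= i + j - pad < num_channels)
        gates.append(1.0 / (1.0 + math.exp(-s)))
    # 3) Excite: rescale every activation in channel i by gate i.
    return [[[v * gates[i] for v in row] for row in ch] for i, ch in enumerate(x)]
```

A squeeze-and-excitation block needs about 2C²/r weights in its two fully connected layers (C channels, reduction ratio r). This gate has only `len(kernel)` weights, which is one way a channel attention module can add feature reweighting while keeping the parameter count low.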
Updated: 2024-03-28
Beijing Public Security Network Filing No. 11010802027423