Machine Learning ( IF 7.5 ) Pub Date : 2024-04-10 , DOI: 10.1007/s10994-024-06526-x Susobhan Ghosh , Raphael Kim , Prasidh Chhabria , Raaz Dwivedi , Predrag Klasnja , Peng Liao , Kelly Zhang , Susan Murphy

|

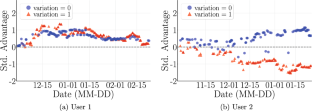

There is a growing interest in using reinforcement learning (RL) to personalize sequences of treatments in digital health to support users in adopting healthier behaviors. Such sequential decision-making problems involve decisions about when to treat and how to treat based on the user’s context (e.g., prior activity level, location, etc.). Online RL is a promising data-driven approach for this problem as it learns based on each user’s historical responses and uses that knowledge to personalize these decisions. However, to decide whether the RL algorithm should be included in an “optimized” intervention for real-world deployment, we must assess the data evidence indicating that the RL algorithm is actually personalizing the treatments to its users. Due to the stochasticity in the RL algorithm, one may get a false impression that it is learning in certain states and using this learning to provide specific treatments. We use a working definition of personalization and introduce a resampling-based methodology for investigating whether the personalization exhibited by the RL algorithm is an artifact of the RL algorithm stochasticity. We illustrate our methodology with a case study by analyzing the data from a physical activity clinical trial called HeartSteps, which included the use of an online RL algorithm. We demonstrate how our approach enhances data-driven truth-in-advertising of algorithm personalization both across all users as well as within specific users in the study.

中文翻译:

我们个性化了吗?使用重采样通过在线强化学习算法评估个性化

人们越来越有兴趣使用强化学习 (RL) 来个性化数字健康中的治疗序列,以支持用户采取更健康的行为。这种顺序决策问题涉及基于用户的背景(例如,之前的活动水平、位置等)关于何时治疗以及如何治疗的决策。在线强化学习是解决此问题的一种很有前途的数据驱动方法,因为它根据每个用户的历史响应进行学习,并利用这些知识来个性化这些决策。然而,为了决定是否应将强化学习算法纳入现实世界部署的“优化”干预中,我们必须评估表明强化学习算法实际上正在为其用户提供个性化治疗的数据证据。由于强化学习算法的随机性,人们可能会产生一种错误的印象,即它正在某些状态下学习,并利用这种学习来提供特定的治疗。我们使用个性化的工作定义,并引入基于重采样的方法来研究 RL 算法所表现出的个性化是否是 RL 算法随机性的产物。我们通过案例研究来说明我们的方法,通过分析名为 HeartSteps 的身体活动临床试验的数据,其中包括使用在线强化学习算法。我们展示了我们的方法如何在所有用户以及研究中的特定用户中增强数据驱动的算法个性化广告的真实性。
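The resampling idea can be illustrated with a toy simulation. The sketch below is hypothetical and is not the authors' procedure or the HeartSteps algorithm. It makes several assumptions:

- The context is binary.
- The algorithm keeps a per-state Bayesian linear model of the treatment effect.
- The algorithm treats with the clipped posterior probability that the effect is positive.
- The personalization statistic is made up for illustration: the gap between the two states in the final posterior probability of a positive effect.
- The null environment refits the observed trajectory with a single common treatment effect and bootstraps the fitted residuals.

All constants and function names (`NOISE_SD`, `PRIOR_VAR`, `CLIP`, `run_algorithm`, `null_environment`, `resampling_pvalue`, ...) are illustrative. Rerunning the algorithm in the null environment shows how often its randomness alone produces a gap at least as large as the observed one.

```python
import random
from statistics import NormalDist, fmean

NOISE_SD = 1.0      # hypothetical: reward noise SD, assumed known to the algorithm
PRIOR_VAR = 1.0     # hypothetical: prior variance of each regression coefficient
CLIP = (0.1, 0.9)   # hypothetical: bounds on the action probability


def posterior_effect(stats):
    """Posterior mean and variance of theta in r = b + theta * a + noise
    (features [1, a], N(0, PRIOR_VAR * I) prior, known noise variance)."""
    n, sum_a, sum_r, sum_ar = stats
    s2 = NOISE_SD ** 2
    # Posterior precision = prior precision + X^T X / s2; a is binary, so sum(a^2) = sum(a).
    p11 = 1 / PRIOR_VAR + n / s2
    p12 = sum_a / s2
    p22 = 1 / PRIOR_VAR + sum_a / s2
    det = p11 * p22 - p12 * p12
    # Second row of the posterior covariance (inverse of the 2x2 precision matrix).
    c21, c22 = -p12 / det, p11 / det
    # Posterior mean of theta = (covariance @ X^T r / s2)[1]; the prior mean is zero.
    mean_theta = c21 * (sum_r / s2) + c22 * (sum_ar / s2)
    return mean_theta, c22


def prob_positive(stats):
    """Posterior probability that the treatment effect theta is positive."""
    mean, var = posterior_effect(stats)
    return 1.0 - NormalDist(mean, var ** 0.5).cdf(0.0)


def run_algorithm(reward_fn, T, rng):
    """Toy online algorithm: per-state posterior sampling with clipped probabilities.
    Returns the trajectory [(state, action, reward), ...] and final P(theta_s > 0)."""
    stats = {0: [0, 0, 0.0, 0.0], 1: [0, 0, 0.0, 0.0]}
    history = []
    for _ in range(T):
        s = rng.randint(0, 1)                                  # binary context
        p = min(max(prob_positive(stats[s]), CLIP[0]), CLIP[1])
        a = 1 if rng.random() < p else 0                       # randomized action
        r = reward_fn(s, a, rng)
        st = stats[s]
        st[0] += 1
        st[1] += a
        st[2] += r
        st[3] += a * r
        history.append((s, a, r))
    return history, {s: prob_positive(stats[s]) for s in (0, 1)}


def personalization_stat(final):
    # Hypothetical statistic: how differently the algorithm ends up treating the states.
    return final[1] - final[0]


def null_environment(history):
    """Refit the trajectory with state-specific baselines but ONE common treatment
    effect, and return a reward function that bootstraps the fitted residuals."""
    def cell(s, a):
        return [r for (hs, ha, r) in history if hs == s and ha == a]

    base, diffs, weights = {}, [], []
    for s in (0, 1):
        r0, r1 = cell(s, 0), cell(s, 1)
        base[s] = fmean(r0) if r0 else 0.0
        if r0 and r1:
            diffs.append(fmean(r1) - base[s])
            weights.append(len(r0) + len(r1))
    effect = sum(d * w for d, w in zip(diffs, weights)) / sum(weights) if weights else 0.0
    residuals = [r - base[s] - effect * a for (s, a, r) in history]

    def reward_fn(s, a, rng):
        # The effect does not depend on state, so any state gap comes from randomness.
        return base[s] + effect * a + rng.choice(residuals)

    return reward_fn


def resampling_pvalue(history, observed, T, B, seed=0):
    """Share of B null reruns whose statistic is >= observed (with +1 correction)."""
    rng = random.Random(seed)
    null_fn = null_environment(history)
    exceed = 0
    for _ in range(B):
        _, final = run_algorithm(null_fn, T, rng)
        if personalization_stat(final) >= observed:
            exceed += 1
    return (1 + exceed) / (1 + B)


if __name__ == "__main__":
    # Hypothetical "true" environment: treatment helps only in state 1.
    def true_env(s, a, rng):
        return (0.5 if s == 1 else 0.0) * a + rng.gauss(0.0, NOISE_SD)

    T = 200
    history, final = run_algorithm(true_env, T, random.Random(1))
    obs = personalization_stat(final)
    print(f"observed statistic = {obs:.3f}, "
          f"resampling p-value = {resampling_pvalue(history, obs, T, B=200):.3f}")
```

Under this null, a small p-value suggests the observed gap is unlikely to be produced by the algorithm's randomness alone. A large p-value suggests it could be. The null model is fitted from the trajectory itself rather than from an assumed distribution, which is what makes this a resampling check.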
