International Journal of Computer Vision (IF 19.5), Pub Date: 2024-04-24, DOI: 10.1007/s11263-024-02057-z

Lei Zhang, Xiaowei Fu, Fuxiang Huang, Yi Yang, Xinbo Gao


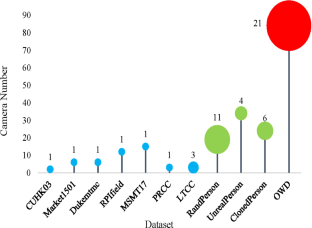

Person re-identification (ReID) has made great strides thanks to data-driven deep learning techniques. However, existing benchmark datasets lack diversity, and models trained on them do not generalize well to dynamic wild scenarios. To improve the explicit generalization of ReID models, we develop a new Open-World, Diverse, Cross-Spatial-Temporal dataset named OWD with several distinct features. (1) Diverse collection scenes: multiple independent, open-world, highly dynamic collection scenes, including streets, intersections, shopping malls, etc. (2) Diverse lighting variations: long time spans from daytime to nighttime with abundant illumination changes. (3) Diverse person status: multiple camera networks across all seasons, with normal and adverse weather conditions and diverse pedestrian appearances (e.g., clothes, personal belongings, poses). (4) Protected privacy: faces are not visible, for privacy-critical applications. To improve the implicit generalization of ReID, we further propose a Latent Domain Expansion (LDE) method that exploits the potential of the source data: it decouples discriminative identity-relevant features from trustworthy domain-relevant features and implicitly enforces a domain-randomized expansion of the identity feature space with richer domain diversity, which facilitates domain-invariant representations. Our comprehensive evaluations on most benchmark datasets in the community are crucial for progress, although this work remains far from the grand goal of open-world and dynamic wild applications. The project page is https://github.com/fxw13/OWD.

Title: An Open-World, Diverse, Cross-Spatial-Temporal Benchmark for Dynamic Wild Person Re-Identification

