Abstract

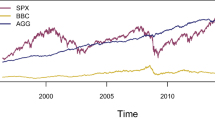

This paper develops a model for optimal portfolio allocation for an investor with quantile preferences, i.e., one who maximizes the \(\tau \)-quantile of the portfolio return, for \(\tau \in (0,1)\). Quantile preferences make it possible to study heterogeneity in individuals’ portfolio choice by varying the quantile, and they have a solid axiomatic foundation. The associated risk attitude is captured entirely by a one-dimensional parameter (the quantile \(\tau \)) instead of a utility function. We formally establish the properties of the quantile model. The presence of a risk-free asset in the portfolio produces an all-or-nothing optimal response to the risk-free asset that depends on the investor’s quantile preference. In addition, when both assets are risky, we derive conditions under which the optimal portfolio decision has an interior solution that guarantees diversification vis-à-vis fully investing in a single risky asset. We also derive conditions under which the optimal portfolio decision is characterized by two regions: full diversification for quantiles below the median and no diversification for upper quantiles. These results are illustrated in an exhaustive simulation study and an empirical application using a tactical portfolio of stocks, bonds and a risk-free asset. The results show heterogeneity in portfolio diversification across risk attitudes.

Notes

Some studies suggesting that individuals did not always employ objective probabilities resulted in, among others, Prospect Theory (Kahneman and Tversky 1979), Rank-Dependent Expected Utility Theory (Quiggin 1982), Cumulative Prospect Theory (Tversky and Kahneman 1992), Regret (Bell 1982), and Ambiguity Aversion (Gilboa and Schmeidler 1989). Rabin (2000) criticized EU theory arguing that EU would require unreasonably large levels of risk aversion to explain the data from some small-stakes laboratory experiments. See also Simon (1979), Tversky and Kahneman (1981), Payne et al. (1992) and Baltussen and Post (2011) as examples providing experimental evidence on the failure of the EU paradigm.

Rostek (2010) discusses other advantages of the QP, such as robustness, ability to deal with categorical (instead of continuous) variables, and the flexibility of offering a family of preferences indexed by quantiles.

Intuitively, the monotonicity of quantiles allows one to avoid modeling individuals’ utility function. This is because the maximization problem is invariant to monotonic transformations of the distribution of portfolio returns.

Our model can accommodate short sales, but we leave this to future work.

For instance, if the preference is given by EU, the belief is captured by the probability while the tastes by the utility function over outcomes or consequences (such as monetary payoffs). Beliefs and tastes are not completely separated, however, because if we take a monotonic transformation of the utility function, which maintains the same tastes over consequences, we may end up with a different preference. That is, the pair beliefs and tastes come together and are stable only under affine transformations of the utility function. In other words, the EU preferences, as many other preferences, do not allow a separation of tastes and beliefs.

See, e.g., Damodaran (2010) for an argument that every asset carries some risk.

The inferior limit m may be taken over a limited region, not over the whole support. That is, we can accommodate cases in which the support is infinite so that \(f(x,y) \rightarrow 0\) when \(y \rightarrow \infty \).

Of course, \(w^{*}=0\) when \({\underline{y}}>{\underline{x}}>-\infty \) and \({\underline{y}}- {\underline{x}} \geqslant \frac{M}{2m}\).

The two illustrations (a) and (b) correspond, respectively, to \(\tau \leqslant \Pr \left( \left[ \frac{X+Y}{2} \leqslant q \right] \right) \) and \(\tau > \Pr \left( \left[ \frac{X+Y}{2} \leqslant q \right] \right) \). In the first case (a), the line \(wX+(1-w)Y=q\) is below the dashed blue line joining (1, 0) to (0, 1). In case (b) \(\tau >\Pr \left( \left[ \frac{X+Y}{2} \leqslant q \right] \right) \), the line \(wX+(1-w)Y=q\) is above the dashed blue line.

The simulation algorithm reflects this issue by selecting either \(w=0\) or \(w=1\) for \(\tau _0=0.5\).

When \({\mathscr {I}}_{X}\) or \({\mathscr {I}}_{Y}\) is not \({\mathbb {R}}\), we have to consider limits that make the derivatives more complex.

The case of two risky assets with different means is available from the authors upon request.

References

Apiwatcharoenkul, W., Lake, L., Jensen, J.: Uncertainty in proved reserves estimates by decline curve analysis. In: SPE/IAEE Hydrocarbon Economics and Evaluation Symposium, Society of Petroleum Engineers (2016)

Artzner, P., Delbaen, F., Eber, J., Heath, D.: Coherent measures of risk. Math Finance 9, 203–228 (1999)

Baltussen, G., Post, G.T.: Irrational diversification: an examination of individual portfolio choice. J Financ Quant Anal 46, 1463–1491 (2011)

Basak, S., Shapiro, A.: Value-at-risk based risk management: optimal policies and asset prices. Rev Financ Stud 14, 371–405 (2001)

Bassett, G.W., Koenker, R., Kordas, G.: Pessimistic portfolio allocation and Choquet expected utility. J Financ Econom 2, 477–492 (2004)

Bell, D.E.: Regret in decision making under uncertainty. Oper Res 30, 961–981 (1982)

Bhattacharya, D.: Inferring optimal peer assignment from experimental data. J Am Stat Assoc 104, 486–500 (2009)

Brown, D.B., Sim, M.: Satisficing measures for analysis of risky positions. Manag Sci 55, 71–84 (2009)

Campbell, J.: Household finance. J Finance 61, 1553–1604 (2006)

Campbell, J.Y.: Financial Decisions and Markets: A Course in Asset Pricing. Princeton: Princeton University Press (2018)

Campbell, R., Huisman, R., Koedijk, K.: Optimal portfolio selection in a value-at-risk framework. J Bank Finance 25, 1789–1804 (2001)

Chambers, C.P.: Ordinal aggregation and quantiles. J Econ Theory 137, 416–431 (2007)

Chambers, C.P.: An axiomatization of quantiles on the domain of distribution functions. Math Finance 19, 335–342 (2009)

Cochrane, J.H.: Asset Pricing, Revised. Princeton: Princeton University Press (2005)

Damodaran, A.: Into the abyss: what if nothing is risk free? Available at SSRN: https://ssrn.com/abstract=1648164. https://doi.org/10.2139/ssrn.1648164 (2010)

de Castro, L., Galvao, A.F.: Dynamic quantile models of rational behavior. Econometrica 87, 1893–1939 (2019)

de Castro, L., Galvao, A.F.: Static and dynamic quantile preferences. Econ Theory (2021) (Forthcoming)

de Castro, L., Galvao, A.F., Noussair, C.N., Qiao, L.: Do people maximize quantiles? Games Econ Behav 132, 22–40 (2022)

Duffie, D., Pan, J.: An overview of value at risk. J Deriv 4, 7–49 (1997)

Engle, R.F., Manganelli, S.: CAViaR: conditional autoregressive value at risk by regression quantiles. J Bus Econ Stat 22, 367–381 (2004)

Fanchi, J.R., Christiansen, R.L.: Introduction to Petroleum Engineering. New York: Wiley (2017)

Fishburn, P.C.: Mean-risk analysis with risk associated with below-target returns. Am Econ Rev 67, 116–126 (1977)

Föllmer, H., Leukert, P.: Quantile hedging. Finance Stoch 3, 251–273 (1999)

Gaivoronski, A., Pflug, G.: Value-at-risk in portfolio optimization: properties and computational approach. J Risk 7(2), 1–31 (2005)

Garlappi, L., Uppal, R., Wang, T.: Portfolio selection with parameter and model uncertainty: a multi-prior approach. Rev Financ Stud 20, 41–81 (2007)

Ghirardato, P., Marinacci, M.: Ambiguity made precise: a comparative foundation. J Econ Theory 102, 251–289 (2002)

Ghirardato, P., Maccheroni, F., Marinacci, M.: Certainty independence and the separation of utility and beliefs. J Econ Theory 120, 129–136 (2005)

Gilboa, I., Schmeidler, D.: Maxmin expected utility with a non-unique prior. J Math Econ 18, 141–153 (1989)

Giovannetti, B.C.: Asset pricing under quantile utility maximization. Rev Financ Econ 22, 169–179 (2013)

He, X.D., Zhou, X.Y.: Portfolio choice via quantiles. Math Finance 21, 203–231 (2011)

Ibragimov, R., Walden, J.: The limits of diversification when losses may be large. J Bank Finance 31, 2551–2569 (2007)

Jorion, P.: Value at Risk. The New Benchmark for Managing Financial Risk, vol. 81, 3rd edn. New York: McGraw-Hill (2007)

Kahneman, D., Tversky, A.: Prospect theory: an analysis of decision under risk. Econometrica 47, 263–292 (1979)

Kempf, A., Ruenzi, S.: Tournaments in mutual-fund families. Rev Financ Stud 21, 1013–1036 (2007)

Krokhmal, P., Uryasev, S., Palmquist, J.: Portfolio optimization with conditional value-at-risk objective and constraints. J Risk 4(2), 43–68 (2001)

Kulldorff, M.: Optimal control of favorable games with a time limit. SIAM J Control Optim 31, 52–69 (1993)

Lintner, J.: The valuation of risk assets and the selection of risky investments in stock portfolios and capital budgets. Rev Econ Stat 47, 13–37 (1965)

Manski, C.: Ordinal utility models of decision making under uncertainty. Theor Decis 25, 79–104 (1988)

Markowitz, H.: Portfolio selection. J Finance 7, 77–91 (1952)

Mendelson, H.: Quantile-preserving spread. J Econ Theory 42, 334–351 (1987)

Mitton, T., Vorkink, K.: Equilibrium underdiversification and the preference for skewness. Rev Financ Stud 20, 1255–1288 (2007)

Payne, J.W., Bettman, J.R., Johnson, E.J.: Behavioral decision research: a constructive processing perspective. Annu Rev Psychol 43, 87–131 (1992)

Porter, R.B.: Semivariance and stochastic dominance: a comparison. Am Econ Rev 64, 200–204 (1974)

Quiggin, J.: A theory of anticipated utility. J Econ Behav Organ 3, 323–343 (1982)

Rabin, M.: Risk aversion and expected-utility theory: a calibration theorem. Econometrica 68, 1281–1292 (2000)

Rachev, S.T., Stoyanov, S., Fabozzi, F.J.: Advanced Stochastic Models, Risk Assessment, and Portfolio Optimization: The Ideal Risk, Uncertainty, and Performance Measures. New York: Wiley (2007)

Rostek, M.: Quantile maximization in decision theory. Rev Econ Stud 77, 339–371 (2010)

Rothschild, M., Stiglitz, J.E.: Increasing risk: I. A definition. J Econ Theory 2, 225–243 (1970)

Savage, L.: The Foundations of Statistics. New York: Wiley (1954)

Sharpe, W.F.: Capital asset prices: a theory of market equilibrium under conditions of risk. J Finance 19, 425–462 (1964)

Simon, H.A.: Rational decision making in business organizations. Am Econ Rev 69, 493–513 (1979)

Tobin, J.: Liquidity preference as behavior towards risk. Rev Econ Stud 25, 65–86 (1958)

Tversky, A., Kahneman, D.: The framing of decisions and the psychology of choice. Science 211, 453–458 (1981)

Tversky, A., Kahneman, D.: Advances in prospect theory: cumulative representation of uncertainty. J Risk Uncertain 5, 297–323 (1992)

Wu, G., Xiao, Z.: An analysis of risk measures. J Risk 4(4), 53–75 (2002)

Yaari, M.E.: The dual theory of choice under risk. Econometrica 55, 95–115 (1987)

Additional information

The authors would like to express their appreciation to the Editor-in-Chief Anne Villamil, an anonymous reviewer, Nabil Al-Najjar, Hide Ichimura, Derek Lemoine, Richard Peter, Tiemen Woutersen, María Florencia Gabrielli and seminar participants at the University of Iowa, INSPER, JOLATE Bahía Blanca, RedNIE, Southampton Econometrics Conference, and Universidad de Buenos Aires for helpful comments and discussions. Luciano de Castro acknowledges the support of the National Council for Scientific and Technological Development – CNPq. Computer programs to replicate the numerical analyses are available from the authors.

Appendices

Appendix

A Proofs

This Appendix collects the proofs for the results in the main text.

1.1 Proof of Lemma 3.1

Proof

The objective function of the primal problem in (4) is

Since \(u(\cdot )\) is continuous and increasing, and the quantile is invariant with respect to monotone transformations, the maximizer of the above objective is given by

\(\square \)
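The invariance used in this proof can be checked numerically. The sketch below is illustrative only and rests on assumptions that are not in the original: the Gaussian return draws, the CARA-type `utility` function, the weight grid, and the order-statistic definition of the empirical quantile are all hypothetical choices. It compares the grid maximizer of the \(\tau \)-quantile of \(u(S_{w})\) with that of \(S_{w}\) itself.

```python
import math
import random


def empirical_quantile(sample, tau):
    """Left-continuous empirical tau-quantile: the ceil(n*tau)-th order statistic."""
    ordered = sorted(sample)
    k = max(math.ceil(tau * len(ordered)) - 1, 0)
    return ordered[k]


def utility(c):
    """Hypothetical increasing utility (CARA form); any increasing u would do."""
    return 1.0 - math.exp(-2.0 * c)


random.seed(1)
n = 5000
x = [random.gauss(0.05, 0.20) for _ in range(n)]  # illustrative draws for X
y = [random.gauss(0.03, 0.10) for _ in range(n)]  # illustrative draws for Y
grid = [i / 20 for i in range(21)]                # candidate weights w
tau = 0.25


def best_weight(transform):
    """Grid maximizer of the empirical tau-quantile of transform(S_w)."""
    def objective(w):
        portfolio = [transform(w * a + (1.0 - w) * b) for a, b in zip(x, y)]
        return empirical_quantile(portfolio, tau)
    return max(grid, key=objective)


w_returns = best_weight(lambda s: s)  # maximize Q_tau[S_w]
w_utility = best_weight(utility)      # maximize Q_tau[u(S_w)]
print(w_returns, w_utility)
```

Because an increasing map preserves the ordering of the sample, the two maximizers coincide, which is why the utility function can be dropped from the quantile problem.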

1.2 Proof of Lemma 3.2

Proof

It is sufficient to show that \( w \mapsto \text {Q}_{\tau }\left[ w X + (1-w) Y \right] =\text {Q}_{\tau }[S_{w}] \) is continuous. But this follows from Assumption 1, which implies that the CDF of \(S_{w}=w X + (1-w) Y \) is strictly increasing, thus making its quantile continuous. \(\square \)

1.3 Proof of Theorem 1

Proof

(1) By appealing to translations if necessary, we may assume without loss of generality that \({\underline{x}}={\underline{y}}=0\); that is, if this is not the case, we may consider the random variables \(X' = X - {\underline{x}}\) and \(Y'= Y - {\underline{y}}\). We may also assume without loss of generality that \({\overline{x}}, {\overline{y}}< \infty \), for if \({\overline{x}}=\infty \) or \({\overline{y}}=\infty \), we may truncate the distribution at large \({\hat{x}}, {\hat{y}}\) so that \(\Pr ( \{ (x,y): x> {\hat{x}} \text { or } y> {\hat{y}} \} ) < \epsilon \) for some small \(\epsilon >0\). Thus, without loss of generality, we may assume that there exist \(m,M \in {\mathbb {R}}_{+}\) such that \(0< m< f(x,y)< M < \infty \) for all \((x,y) \in [0,{\overline{x}} ] \times [0,{\overline{y}} ]\).

We will prove that we can choose \(\tau \) small enough so that \(w=1\) is dominated by \(w = \frac{1}{2}\). The argument that \(w=0\) is also dominated by \(w= \frac{1}{2}\) for sufficiently small \(\tau \) is analogous. To this end, we assume that \(w=1\) is better than \(w= \frac{1}{2}\) and derive a contradiction for \(\tau \) small enough. Let \(x_{\tau }\) denote the \(\tau \)-quantile of X and assume that \(q=q\left( \frac{1}{2}, \tau \right) \leqslant x_{\tau }\). See Fig. 11, where \(q < x_{\tau }\). By definition, the probability under the red line \(\frac{X+Y}{2} \leqslant q\) is \(\tau \), which is the same as the probability of the vertical band from 0 to \(x_{\tau }\). Therefore, the probability of the blue area in the graph (light and dark blue together) has to equal that of the dark red area, which corresponds to the small triangle from \(x_{\tau }\) to 2q on the horizontal axis and from 0 to \(2q-x_{\tau }\) vertically. To better follow the argument, let \(A_{1}\) denote the probability of the dark blue area and \(A_{2}\) the probability of the light blue area. Let \(A_{3}\) denote the probability of the dark red area and \(A_{4}\) the probability of the light red area. Therefore, as observed above, by definition,

We will show, however, that we can choose \(\tau \) sufficiently small so that

Observe that (15) implies

which contradicts (14). In order to obtain (15), observe that

Since \(m< f(x,y) < M\) for \((x,y) \in [0,{\overline{x}}]^{2}\), we have

Therefore, we obtain (15) if

Since \({\overline{y}}\), M and m are given constants, the above inequality is satisfied if \(x_{\tau }\) is small enough, that is, if \(\tau \) is sufficiently close to 0. \(\square \)
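As a concrete check of the conclusion of Theorem 1, consider a worked example that is not part of the original text: let X and Y be independent and uniform on \([0,1]\), so that \(f \equiv 1\) on the unit square and the density bounds hold for any \(m< 1 < M\). Under this assumed specification the \(\tau \)-quantiles for \(w=\frac{1}{2}\) and \(w=1\) are available in closed form:

```latex
\begin{align*}
\Pr\left[\tfrac{X+Y}{2} \leqslant q\right] &= 2q^{2}
  \quad \text{for } 0 \leqslant q \leqslant \tfrac{1}{2},
  &&\text{so } q\left(\tfrac{1}{2},\tau\right) = \sqrt{\tau/2}
  \quad \text{for } \tau \leqslant \tfrac{1}{2}, \\
\Pr\left[X \leqslant q\right] &= q
  \quad \text{for } 0 \leqslant q \leqslant 1,
  &&\text{so } q(1,\tau) = x_{\tau} = \tau, \\
q\left(\tfrac{1}{2},\tau\right) > q(1,\tau)
  &\iff \tau/2 > \tau^{2}
  \iff \tau < \tfrac{1}{2}.
\end{align*}
```

In this example the equally weighted portfolio beats full investment in X at every quantile below the median, and by symmetry the same holds against \(w=0\).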

1.4 Proof of Theorem 2

Proof

Note that by translating the distribution (along the 45\(^{\circ }\) line) if necessary, we may assume without loss of generality that \({\underline{y}}=0\).

Let us fix any \(\tau \in (0,{\overline{\tau }}]\) and let \(x_{\tau }, y_{\tau }\) denote the \(\tau \)-quantiles of X and Y, respectively. Of course, \(x_{\tau }> {\underline{x}}\). Figure 12 below will be useful to illustrate the reasoning in this proof.

Let \( {\bar{M}}\) be the average \(f_{X}(x)\) density on the region \(x \in [{\underline{x}}, x_{\tau }]\), that is, \({\bar{M}} \equiv \frac{ \tau }{x_{\tau } - {\underline{x}} }\). Therefore, \(m \leqslant {\bar{M}} \leqslant M\) and \(m \leqslant f(x,y) \leqslant M\) for all \((x,y) \in [{\underline{x}}, x_{{\overline{\tau }}}]\times [ {\underline{y}},{\overline{y}}]\). We want to show that \(q(w,\tau ) < x_{\tau }\) for all \(w \in [0,1)\). To show this, it is enough to show that for any \(q\geqslant x_{\tau }\) and \( w \in [0,1)\), the probability under the line \(wx + (1-w) y \leqslant q\) is larger than \(\tau \), that is,

For this, it is sufficient to show that the area in blue in Fig. 12 is larger than \(\tau \) already for \(q=x_{\tau }\), that is,

Since \(f(x,y) >m\) for all \((x,y)\in [{\underline{x}},x_{{\overline{\tau }}}] \times [{\underline{y}},{\overline{y}}] \cup [{\underline{x}},{\overline{x}}] \times [{\underline{y}}, x_{{\overline{\tau }}}]\), we have

Thus, to establish (16), it is sufficient to show that

Let us define the quadratic polynomial:

Notice that \(p(0)=mx_{\tau }^{2}>0\) and for \(w=1\):

Let \(w_V\) denote the vertex of the quadratic p(w), which is given by

Note that \(w_V\) is always positive.

We can conclude that the quadratic form p(w) in (17) is positive if \(p(w_{V})>0\). To verify this, we can substitute \(w_{V}\) in the quadratic form to obtain:

which is positive as long as

Since \(\tau = {\bar{M}} (x_{\tau } - {\underline{x}})\), the above condition is equivalent to

Since \({\bar{M}} \leqslant M\) and \(x_{\tau } > {\underline{x}} \geqslant \frac{M}{2m}\), we have \(x_{\tau } > \frac{{\bar{M}}}{2m}\), thus proving the result. \(\square \)

Remark A.1

One can also conclude that \(p(w)>0\) for all \(w \in [0,1]\) if \(w_V > 1\). Unfortunately, the condition for this is stronger than the one given in Theorem 2. Indeed, \(w_V > 1\) if

which is equivalent to

Since \(\tau = {\bar{M}} (x_{\tau } - {\underline{x}})\), the above condition simplifies to

Since \({\bar{M}} \leqslant M\), if we have \({\underline{x}} \geqslant \frac{M}{m}\) then \({\underline{x}} \geqslant \frac{{\bar{M}}}{m}\). However, this assumption \({\underline{x}} \geqslant \frac{M}{m}\) is stronger than the condition in Theorem 2.

1.5 Proof of Theorem 3

Proof

Fix \(\tau \geqslant {\overline{\tau }}\) and assume that \({\underline{x}}={\underline{y}}\) and \({\overline{x}}={\overline{y}}\). We want to show that \(w^{*}(\tau )=1\) for sufficiently high \(\tau \), which is equivalent to showing that \(q(w,\tau ) < x_{\tau }\) for all \(w \in (0,1)\) and \(\tau \geqslant {\overline{\tau }}\). To show this, it is enough to show that for any \(q> x_{\tau }\) the probability under the line \(wx + (1-w) y \leqslant q\) is larger than \(\tau \), that is,

where \(A(w,q) \equiv \{(x,y) \in [ {\underline{x}}, {\overline{x}} ] \times [{\underline{x}},{\overline{x}}]: wx + (1-w) y \leqslant q \}\). This is equivalent to showing that

For estimating this integral, we have to consider three intervals for w: \((0,{\underline{w}})\), \([{\underline{w}}, {\overline{w}}]\) and \(( {\overline{w}}, 1)\), where \({\underline{w}}\) and \( {\overline{w}}\) correspond to the lines that pass by \(({\underline{x}},{\overline{x}})\) and \(({\overline{x}},{\underline{x}})\) respectively, that is,

Note that \({\underline{w}}=1-{\overline{w}} < {\overline{w}}\) for large \(\tau \).

Fig. 13 Illustration for the proof of Theorem 3: the case of \(\tau \) close to 1

Figure 13 shows the set \(A(w,x_{\tau })\) in blue, for \(w \in [{\underline{w}}, {\overline{w}}]\). Thus, if \(w \in [{\underline{w}}, {\overline{w}}]\),

For \(w \in (0, {\underline{w}})\),

and, for \(w \in ({\overline{w}},1)\),

We will consider each of the above cases.

Case 1: \(w \in [{\underline{w}},{\overline{w}}]\).

Since \(m \leqslant f(x,y) \leqslant M\) for all \((x,y) \in [ x_{{\overline{\tau }}}, {\overline{x}} ] \times [{\underline{x}},{\overline{x}}]\), we have

Using \({\overline{x}}={\overline{y}}\), the left-hand side becomes \(\frac{M \left( {\overline{x}} - x_{\tau } \right) ^{2 }}{2w(1-w)}\). Thus, it is enough to prove that

Since \(w\in [{\underline{w}},{\overline{w}}]\), we have

Thus, it is sufficient to show that:

We now define \({\bar{m}} = \frac{1-\tau }{({\overline{x}} - x_{\tau })({\overline{x}}-{\underline{x}})}\) so that \(1-\tau ={\bar{m}}({\overline{x}}-x_{\tau })({\overline{x}}-{\underline{x}})\). Since

we have \({\bar{m}} \geqslant m \implies \frac{M}{{\bar{m}}} \leqslant \frac{M}{m}\). Therefore, the assumption gives

as we wanted to show.

Case 2: \(w \in (0,{\underline{w}})\).

Since \(m \leqslant f(x,y) \leqslant M\) for all \((x,y) \in [ x_{{\overline{\tau }}}, {\overline{x}} ] \times [{\underline{x}},{\overline{x}}]\), we have

Thus, it is enough to prove

Since \(w\in (0,{\underline{w}})\), we have \(1-w > 1- {\underline{w}} = \frac{x_{\tau } - {\underline{x}} }{{\overline{x}}-{\underline{x}}}\). Thus, it is sufficient to show that:

This is exactly condition (18) above, which we have shown to be implied by the assumption.

Case 3: \(w \in ({\overline{w}},1)\).

Since \(m \leqslant f(x,y) \leqslant M\) for all \((x,y) \in [ x_{{\overline{\tau }}}, {\overline{x}} ] \times [{\underline{x}},{\overline{x}}]\), we have

Thus, it is enough to prove

Since \(w\in ({\overline{w}},1)\), \(w > {\overline{w}}=\frac{ x_{\tau } - {\underline{x}} }{{\overline{x}}-{\underline{x}}} \), it is sufficient to show again condition (18) above, which we have shown to be implied by the assumption of Theorem 3. \(\square \)
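The corner solution at upper quantiles can be illustrated with an assumed example that is not taken from the original: X and Y independent and uniform on \([0,1]\). In that case \(S_{w}=wX+(1-w)Y\) has a trapezoidal distribution whose quantile is available exactly, so no simulation is needed. The function name and the weight grid below are hypothetical, and the example is only meant to illustrate the conclusion, not to verify the specific condition of Theorem 3.

```python
def uniform_portfolio_quantile(w, tau):
    """Exact tau-quantile of w*X + (1-w)*Y for X, Y independent U[0, 1]."""
    a, b = sorted((w, 1.0 - w))  # a <= b and a + b = 1
    if a == 0.0:
        return tau  # all wealth in a single uniform asset
    edge = a / (2.0 * b)  # CDF level at which the quadratic lower tail ends
    if tau <= edge:
        return (2.0 * a * b * tau) ** 0.5          # lower (triangular) part
    if tau <= 1.0 - edge:
        return b * tau + a / 2.0                   # middle (linear) part
    return 1.0 - (2.0 * a * b * (1.0 - tau)) ** 0.5  # upper (triangular) part


grid = [i / 100 for i in range(101)]  # candidate weights w


def best_weight(tau):
    """Grid maximizer of the exact tau-quantile (first maximizer on ties)."""
    return max(grid, key=lambda w: uniform_portfolio_quantile(w, tau))


for tau in (0.10, 0.40, 0.60, 0.90):
    print(tau, best_weight(tau))
```

For this symmetric example the maximizer is \(w=\frac{1}{2}\) below the median and a corner (\(w=0\) or \(w=1\), which tie) above it.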

1.6 Proof of Proposition 3.10

Proof

Assume first that q(w) is differentiable at \(w\in (0,1)\). Taking the total derivative with respect to w on the equation \( h(w,q) = \tau \), we obtain

From (8), it is clear that h is differentiable and that \(\partial _{q} h(w,q)>0\). Therefore, by the Implicit Function Theorem, q is differentiable and given by (19). Now, we will calculate \(\partial _{w}h(w,q)\) and \(\partial _{q}h(w,q)\). Define the function \(y(w,q) \equiv \frac{q-wx}{1-w}\). Then,

Support on \({\mathbb {R}}\)

Here we consider first the case in which \({\mathscr {I}}_{X}={\mathscr {I}}_{Y} = {\mathbb {R}}\). The other two cases are similar and considered below (see footnote 15). It is clear from (8) and Assumption 1 that h is \(C^{1}\) and:

and

From this,

Therefore,

as we wanted to show. \(\square \)
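The displayed equations of this argument are not reproduced in this text. The following is a reconstruction sketch for the case of support on \({\mathbb {R}}\), under two assumptions: that \(h(w,q)\) in (8) denotes \(\Pr [wX+(1-w)Y \leqslant q]\), and that \(Z_{w,q}\) has the normalized density of f along the line \(y=y(w,q)\), as used in the proof of Proposition 3.13. The numbering and exact form of the original equations may differ.

```latex
\begin{align*}
h(w,q) &= \int_{\mathbb{R}} \int_{-\infty}^{y(w,q)} f(x,y)\,dy\,dx,
  \qquad y(w,q) = \frac{q-wx}{1-w}, \\
\partial_{q} h(w,q) &= \frac{1}{1-w} \int_{\mathbb{R}} f\bigl(x, y(w,q)\bigr)\,dx, \\
\partial_{w} h(w,q) &= \frac{1}{(1-w)^{2}} \int_{\mathbb{R}} (q-x)\, f\bigl(x, y(w,q)\bigr)\,dx, \\
q'(w) &= -\frac{\partial_{w} h(w,q)}{\partial_{q} h(w,q)}
       = \frac{E[Z_{w,q}] - q}{1-w},
  \qquad
  f_{Z}(x) = \frac{f\bigl(x, y(w,q)\bigr)}{\int_{\mathbb{R}} f\bigl(t, \tfrac{q-wt}{1-w}\bigr)\,dt}.
\end{align*}
```

The third line uses \(\partial _{w} y(w,q) = \frac{q-x}{(1-w)^{2}}\). Under this form, \(q'(w)=0\) exactly when \(E[Z_{w,q}]=q\).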

Support on \([0,\infty )\)

Now, we consider the case \({\mathscr {I}}_{X}={\mathscr {I}}_{Y}={\mathbb {R}}_{+}=[0, \infty )\). In this case, we have

Let us define:

for \(x < \frac{q}{w}\) and \( g \left( w,q, \frac{q}{w} \right) = 0\) so that g is continuous and differentiable. Also,

Then,

and

From this, we observe that the same expressions remain valid:

and

as before.

Support on [0, c]

We now consider the case \({\mathscr {I}}_{X}={\mathscr {I}}_{Y}=[0,c]\). There are two subcases to consider, as shown in Fig. 14.

Case (a)

In this case,

Let

Therefore,

Thus,

Since \(g(0,0, \cdot )=0\) and \(\partial _{w} g(0,c,x)=0\), and \(g \left( 0,c, \frac{q-(1-w)c}{w} \right) \partial _{w}\left[ \frac{q - (1-w)c}{1-w}\right] \) appear with + and - signs, we have

We have:

Analogously to the previous case,

Note that in this case,

so that

From this, we observe that the same expressions remain valid:

and

as before.

Case (b)

In this case,

and

Thus,

Since \(\partial _{w} g(0,c,x)=0\), and \(g \left( 0,c, \frac{q-(1-w)c}{w} \right) \partial _{w}\left[ \frac{q - (1-w)c}{1-w}\right] \) appear with + and - signs,

Also,

Therefore,

Note that in this case,

so that

From this, we observe that the same expressions remain valid:

and

as before. \(\square \)

1.7 Proof of Corollary 3.11

Proof

The proof of this result is immediate from imposing \(q'(w^*)=0\) in Proposition 3.10, and noting that \(q''(w)=\frac{\partial }{\partial w} E[Z_{w,q}]\), with \(q''(\cdot )\) the second derivative with respect to w. To show this, we take the first derivative of \(q'(w)\) in (10). Then,

Then, noting that \(E[Z]=q(w^*)\) and \(q'(w^*)=0\), we have

\(\square \)
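The first-order condition \(E[Z]=q(w^*)\) can be checked in a case where everything is available in closed form. The sketch below assumes two independent Gaussian returns with hypothetical parameters (not from the original) and assumes that the derivative in Proposition 3.10 takes the form \(q'(w) = \frac{E[Z_{w,q}]-q}{1-w}\), which is what implicit differentiation of \(h(w,q)=\tau \) yields when \(Z_{w,q}\) is distributed as X conditional on \(S_{w}=q\). It compares that expression with a finite-difference derivative and evaluates the first-order condition at the minimum-variance weight.

```python
from statistics import NormalDist

# Hypothetical parameters for two independent Gaussian returns (not from the paper).
MU_X, MU_Y = 0.05, 0.05
SD_X, SD_Y = 0.20, 0.10


def portfolio_quantile(w, tau):
    """Closed-form tau-quantile of S_w = w*X + (1-w)*Y for independent normals."""
    z = NormalDist().inv_cdf(tau)
    mean_s = w * MU_X + (1.0 - w) * MU_Y
    sd_s = (w**2 * SD_X**2 + (1.0 - w) ** 2 * SD_Y**2) ** 0.5
    return mean_s + z * sd_s


def conditional_mean_x(w, tau):
    """E[X | S_w = q(w, tau)], used here as E[Z_{w,q}] (an assumption)."""
    q = portfolio_quantile(w, tau)
    mean_s = w * MU_X + (1.0 - w) * MU_Y
    var_s = w**2 * SD_X**2 + (1.0 - w) ** 2 * SD_Y**2
    return MU_X + w * SD_X**2 / var_s * (q - mean_s)


def slope_from_formula(w, tau):
    """Assumed derivative: q'(w) = (E[Z_{w,q}] - q) / (1 - w)."""
    return (conditional_mean_x(w, tau) - portfolio_quantile(w, tau)) / (1.0 - w)


def slope_numeric(w, tau, step=1e-6):
    """Central finite difference of q(w, tau) with respect to w."""
    upper = portfolio_quantile(w + step, tau)
    lower = portfolio_quantile(w - step, tau)
    return (upper - lower) / (2.0 * step)


tau = 0.10
w_star = SD_Y**2 / (SD_X**2 + SD_Y**2)  # minimum-variance weight, about 0.2

for w in (0.10, 0.35, 0.60, 0.85):
    print(w, slope_from_formula(w, tau), slope_numeric(w, tau))
print(w_star, conditional_mean_x(w_star, tau) - portfolio_quantile(w_star, tau))
```

With equal means and \(\tau <\frac{1}{2}\), the weight satisfying the first-order condition is the one that minimizes the portfolio variance, and it is a maximum of \(q(w,\tau )\) in this Gaussian example.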

1.8 Proof of Proposition 3.13

Proof

The proof proceeds in three stages. First, we note from Corollary 3.11 that the first order condition characterizing an extremum of the optimization problem (7) is \(E[Z]=q(w^*)\), with \(w^* \in (0,1)\). Now, given that the density function \(f_Z(z)\) is evaluated over the line \(y=\frac{q(w^*)-w^* x}{1-w^*}\), it is not difficult to see that the projection of this line on the y-axis is \(y^*=E[Z]\) for \(x^*=E[Z]\). This result implies that for different quantile values \(q(w^*) \equiv q(w^*,\tau )\) indexed by \(\tau \in (0,1)\), the mean value of the random variable Z characterized by the density function \(f_Z(z)\) in (9) lies on the 45\(^{\circ }\) line, so that \((x^*,y^*)=(E[Z],E[Z])\).

Second, we prove that condition (11) evaluated at \(w^*\) guarantees the symmetry around E[Z] of the density function \(f_{Z}\) for \(x \in {\mathscr {I}}_{Z}={\mathscr {I}}_{Z}^{w^*,q}= \{x \in {\mathbb {R}}: \left( x, \frac{q-w^*x}{1-w^*} \right) \in {\mathscr {I}}_{X} \times {\mathscr {I}}_{Y} \}\). More formally, condition (11) implies \(f_{Z}(E[Z]+\varepsilon )=f_{Z}(E[Z]-\varepsilon )\) for all \(\varepsilon >0\). This is so by noting that \(f_{Z}(E[Z]+\varepsilon )= \frac{f \left( E[Z]+\varepsilon , E[Z] - \frac{w^*}{1-w^*} \varepsilon \right) }{\int _{{\mathscr {I}}_{Z}} f \left( t, \frac{E[Z]-tw^*}{1-w^*} \right) dt}\) and \(f_{Z}(E[Z]-\varepsilon )= \frac{f \left( E[Z]-\varepsilon , E[Z] + \frac{w^*}{1-w^*} \varepsilon \right) }{\int _{{\mathscr {I}}_{Z}} f \left( t, \frac{E[Z]-tw^*}{1-w^*} \right) dt}\).

Now, we show that under assumption 2, the density function \(f_{Z}\) is unimodal. To show this, we note that \(f_Z(z)=\frac{f \left( x, \frac{E[Z] - w^* x}{1-w^*} \right) }{\int _{{\mathscr {I}}_{Z}} f \left( t, \frac{E[Z]-tw^*}{1-w^*} \right) dt}\), such that under the change of variable \(x=E[Z]+\varepsilon \), we obtain \(f_Z(z)=\frac{f \left( E[Z]+\varepsilon , E[Z] - \frac{w^*}{1-w^*} \varepsilon \right) }{\int _{{\mathscr {I}}_{Z}} f \left( t, \frac{E[Z]-tw^*}{1-w^*} \right) dt}\). Assumption 2 evaluated at \(\mu =E[Z]\) implies that the numerator of this expression increases with \(\varepsilon \) up to \(\varepsilon =0\) and then decreases. This condition is sufficient to show that \(f_Z(z)\) is unimodal with mode at E[Z].

These findings (unimodality and symmetry of \(f_Z(\cdot )\)) apply to every \(\tau \in (0,1)\) and imply that the point \((x^*,y^*)=(E[Z],E[Z])\) divides the line \(\frac{q(w^*)-w^* x}{1-w^*}\) in two equal segments for all quantile values \(q(w^*) \equiv q(w^*,\tau )\) indexed by \(\tau \in (0,1)\). This property also implies that E[Z] is the median of the distribution of Z with support the projection of the line on the x-axis. In this scenario no other combination \({\widetilde{w}}\), with \({\widetilde{w}} \ne w^*\), and such that \(q({\widetilde{w}},\tau )\) and \(q(w^*,\tau )\) defines two different lines for the same \(\tau \in (0,1)\), yields a line \(y=\frac{q({\widetilde{w}})-{\widetilde{w}} x}{1-{\widetilde{w}}}\) that intersects \(y=x\) at \((x^*,y^*)=(E[Z],E[Z])\). Let \(({\widetilde{x}},{\widetilde{x}})\) denote such intersection, and let \(\tau _0 \in (0,1)\) be defined by the condition \(\tau \le P(Z \le q)\) for \(\tau \le \tau _0\), and \(P(Z \le q) < \tau \) for \(\tau \in (\tau _0,1]\). Then, the condition \(\tau \le P(Z \le q)\), for all \(\tau \in (0,\tau _0]\), implies that the projection of the crossing point \(({\widetilde{x}},{\widetilde{x}})\) on the x-axis, given by \({\widetilde{x}}\), is smaller than the corresponding projection for \(w^*\), that is \(x^*=E[Z]\). Then, for all \({\widetilde{w}} \in (0,1)\) with \({\widetilde{w}} \ne w^*\), it follows that \({\widetilde{x}} < E[Z]\), that is equivalent to the condition \(q({\widetilde{w}})<q(w^*)\) since \(q({\widetilde{w}})={\widetilde{w}} x + (1-{\widetilde{w}}) y\). Then, for \(x=y={\widetilde{x}}\), we have \(q({\widetilde{w}})={\widetilde{x}}\) and for \(x=y= E[Z]\), we have \(q(w^*) =E[Z]\). Hence, the quantity \(w^*\) maximizes the quantile function for all \(\tau \in (0,\tau _0]\).

It remains to see that the condition \(q(w^{*})=E[Z]\) characterizes a minimum of the optimization problem (7) for \(\tau > \tau _0\). In this scenario, the solution to the optimization problem (7) also lies on the 45\(^{\circ }\) line; however, for \(\tau \in (\tau _{0},1]\), it follows that \(P(Z \le q) < \tau \) (see Fig. 5b). Then, for any \({\widetilde{w}}=w^{*} \pm \varepsilon \), with \(\varepsilon >0\), the projection of the crossing point \(({\widetilde{x}},{\widetilde{x}})\) on the x-axis is larger than the projection of the crossing point (E[Z], E[Z]) associated with \(w^{*}\). Thus, for any \({\widetilde{w}}=w^{*} \pm \varepsilon \) with \(\varepsilon >0\), it follows that \({\widetilde{x}}>E[Z]\) and, using the above arguments, \(q({\widetilde{w}})>q(w^*)\) for all \({\widetilde{w}} \ne w^*\), with \(w^*, {\widetilde{w}} \in (0,1)\).

The continuity of q(w) with respect to w implies that the solution to (7) is a corner solution. If \(w^*=\frac{1}{2}\) in the region \(\tau \in (0,\tau _0]\), the solution for \(\tau >\tau _0\) is either zero or one, the two being equivalent by the symmetry of condition (11). Otherwise, if \(w^* \ne \frac{1}{2}\) in the region \(\tau \in (0,\tau _0]\), the solution to the maximization problem for \(\tau >\tau _0\) is given by the random variable with the largest upside potential. More formally, it will be one if \(F_X(z) < F_Y(z)\) uniformly over z, for z sufficiently large. In contrast, the solution will be zero for \(\tau >\tau _0\) if \(F_X(z) > F_Y(z)\) uniformly over z, for z sufficiently large. This property is determined by whether \(w^*<\frac{1}{2}\) in condition (11) or not. \(\square \)

1.9 Proof of Corollary 3.14

Proof

To prove the result in the corollary, it is sufficient to show that the iid assumption implies Assumptions 2 and 3 with \(w^*=\frac{1}{2}\). The result then follows by applying Proposition 3.13.

To verify Assumption 2, we note that under the iid condition, for any \(\mu \in {\mathbb {R}}\), it holds that \(f(\mu +\varepsilon , \mu -\varepsilon ) = f_X(\mu +\varepsilon ) f_X(\mu -\varepsilon )\). Now, taking the first derivative with respect to \(\varepsilon \), we obtain

Let \(m_X\) denote the mode of the random variable X. For \(\mu =m_X\), the unimodality of \(f_X(\cdot )\) implies that \(\frac{\partial f_X(\mu +\varepsilon )}{\partial \varepsilon }>0\) for \(\varepsilon <0\), \(\frac{\partial f_X(\mu +\varepsilon )}{\partial \varepsilon }=0\) at \(\varepsilon =0\) and \(\frac{\partial f_X(\mu +\varepsilon )}{\partial \varepsilon }<0\) for \(\varepsilon >0\). Under these conditions, expression (26) yields \(\frac{\partial f(\mu +\varepsilon , \mu -\varepsilon )}{\partial \varepsilon }>0\) for \(\varepsilon <0\); \(\frac{\partial f(\mu +\varepsilon , \mu -\varepsilon )}{\partial \varepsilon }=0\) at \(\varepsilon =0\) and \(\frac{\partial f(\mu +\varepsilon ,\mu -\varepsilon )}{\partial \varepsilon }<0\) for \(\varepsilon >0\), as stated in assumption 2.

The proof is a bit more complex for \(\mu \ne m_X\). In this case, for \(\mu <m_X\) there exists an interval \(|\varepsilon |< m_X - \mu \) that needs to be carefully evaluated. More specifically, for values of \(\varepsilon \) inside the interval, we note that expression (26) is positive if \(\varepsilon <0\) and negative if \(\varepsilon >0\). This follows from noting that for \(\varepsilon <0\) and \(\mu -m_X<\varepsilon \), the following condition is satisfied:

To show this condition it is sufficient to show that \(\frac{\partial f_X(\mu +\varepsilon )}{\partial \varepsilon }>\frac{\partial f_X(\mu -\varepsilon )}{\partial \varepsilon }\) given that \(f_X(\mu +\varepsilon )<f_X(\mu -\varepsilon )\) for \(\varepsilon <0\). This condition is, however, fulfilled for \(x \in (-\infty ,m_X)\) if the density function \(f_X\) is unimodal. Similarly, for \(\varepsilon >0\) and \(\varepsilon <m_X-\mu \), the following condition is satisfied:

This condition can be shown using the above arguments and noting that \(\varepsilon >0\).

For values of \(\varepsilon \) outside the interval \(|\varepsilon |< m_X-\mu \), the proof follows straightforwardly from the above arguments.

For \(\mu > m_X\), there exists an interval \(|\varepsilon |< \mu - m_X\) that needs to be carefully evaluated. Nevertheless, the proof in this case follows as in the previous case. Also, for values of \(\varepsilon \) outside the interval the above proof follows similarly.

To verify Assumption 3, it is sufficient to show that for \(w^*=\frac{1}{2}\) and \(\varepsilon >0\), Eq. (11) becomes

for all \(\mu \in {\mathbb {R}}\), with \(f(\cdot ,\cdot )\) the joint density function of the random variables X and Y. This condition is, however, satisfied by construction under the iid assumption. This is so because

for all \(\mu \in {\mathbb {R}}\). \(\square \)

B Numerical simulation study

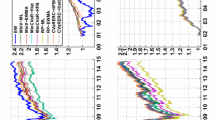

This Appendix presents additional results on the optimal asset allocation under quantile preferences (QP) for a portfolio \(S_{w}=w X+(1-w)Y\) given by the mixture of two continuous random variables X and Y, which are chosen from different distribution functions. For illustrative purposes, we consider pairs of Gaussian and Chi-squared random variables, both under mutual independence and under dependence. These results are also compared with the optimal asset allocation obtained under EU. The numerical computation of the portfolios is as described in Sect. 3.4.

1.1 B.1 Independent and identically distributed random variables

1.1.1 Two Chi-squared independent and identically distributed random variables

To illustrate the optimal portfolio allocation problem for asymmetric distributions, we investigate the case of two independent and identically distributed standard Chi-squared random variables, X and Y. Under risk aversion, the iid assumption is sufficient to guarantee that the optimal portfolio choice of an EU individual is the fully diversified portfolio \(w^*=0.5\). For QP, the same result holds for values of \(\tau \) identified with risk aversion. The left panel of Fig. 15 shows that the family of distribution functions \(F_{S_{w}}\) satisfies the single-crossing condition defined by Manski (1988); see also de Castro and Galvao (2021, Section 5). The right panel of Fig. 15 shows that the optimal portfolio allocation is divided into two regions: a first region given by \({\widehat{w}}_n^{*}(\tau )=0.5\), for \(\tau \le \tau _0\approx 0.80\), and a second region given by \({\widehat{w}}_n^{*}(\tau )\in \{0, 1\}\), for \(\tau >\tau _0\). The shift in the cut-off point from the symmetric-case value \(\tau _0=0.5\) to \(\tau _0\approx 0.80\) is due to the asymmetry of the Chi-squared distribution.
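
The two regions described above can be reproduced with a small simulation. The following is only a minimal sketch, not the procedure of Sect. 3.4: it assumes that "standard Chi-squared" means one degree of freedom, and the helper names `empirical_quantile` and `optimal_weight`, the grid size, the sample size and the seed are all illustrative choices. It grid-searches the weight \(w\) that maximizes the empirical \(\tau \)-quantile of \(S_w\).

```python
import random

def empirical_quantile(values, tau):
    """Return the order statistic approximating the tau-quantile."""
    ordered = sorted(values)
    return ordered[min(len(ordered) - 1, int(tau * len(ordered)))]

def optimal_weight(x, y, tau, grid_size=41):
    """Grid-search w in [0, 1] maximizing the empirical tau-quantile
    of the simulated portfolio w*x + (1-w)*y."""
    best_w, best_q = 0.0, float("-inf")
    for k in range(grid_size):
        w = k / (grid_size - 1)
        portfolio = [w * a + (1.0 - w) * b for a, b in zip(x, y)]
        q = empirical_quantile(portfolio, tau)
        if q > best_q:
            best_w, best_q = w, q
    return best_w

rng = random.Random(0)  # fixed seed, illustrative only
n = 50_000
# Assumption: "standard Chi-squared" is read as one degree of freedom,
# so each draw is the square of a standard normal.
x = [rng.gauss(0.0, 1.0) ** 2 for _ in range(n)]
y = [rng.gauss(0.0, 1.0) ** 2 for _ in range(n)]

w_low = optimal_weight(x, y, tau=0.20)   # lower quantile: diversified region
w_high = optimal_weight(x, y, tau=0.95)  # upper quantile: corner region
print(w_low, w_high)
```

Because the same simulated draws are reused for every \(w\) on the grid, comparisons across weights are less noisy than the individual quantile estimates.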

1.2 B.2 Independently distributed random variables

1.2.1 Two Gaussian independent random variables with different variances

We extend the Gaussian case discussed above and consider independent random variables X and Y with different variances. Let \(X\sim N(0,1)\) and \(Y\sim N(0,2)\), independent of each other. In this case Y is a mean-preserving spread of X. This observation implies that X second order stochastically dominates Y and, using Fishburn (1977), X is preferred to Y by EU individuals endowed with an increasing and concave utility function. We can extend this result to derive the optimal portfolio allocation of a risk-averse EU individual. The combination \(S_{w^*}\) with \(w^*=2/3\) has zero mean and minimizes the variance over the family of random variables \(S_{w}\). This combination second order stochastically dominates any other convex combination of X and Y, including X and Y themselves. Then, using Fishburn (1977), \(S_{w^*}\) is the optimal strategy for EU individuals endowed with an increasing and concave utility function.
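
The minimum-variance weight quoted above can be checked directly. The short derivation below assumes that the second argument of \(N(\cdot ,\cdot )\) denotes the variance, so that \(\mathrm {Var}(X)=1\) and \(\mathrm {Var}(Y)=2\); under independence,

\[ \mathrm {Var}(S_w) = w^2\,\mathrm {Var}(X) + (1-w)^2\,\mathrm {Var}(Y) = w^2 + 2(1-w)^2, \]

\[ \frac{d}{dw}\mathrm {Var}(S_w) = 2w - 4(1-w) = 0 \;\Longrightarrow \; w^* = \frac{2}{3}, \qquad \mathrm {Var}(S_{w^*}) = \frac{4}{9} + \frac{2}{9} = \frac{2}{3}. \]

The second derivative equals \(6>0\), so \(w^*=2/3\) is a minimum, and the resulting variance \(2/3\) is below both \(\mathrm {Var}(X)=1\) and \(\mathrm {Var}(Y)=2\).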

For individuals endowed with QP, we note that condition (11) is satisfied for \(w^*=2/3\). Then, Proposition 3.13 implies that the optimal combination of a QP individual is \(w^*=\frac{2}{3}\) for \(\tau \le \tau _0\), with \(\tau _0=\frac{1}{2}\). This result is illustrated in the numerical exercise on the right panel of Fig. 16. Diversification takes place for \(\tau \le \frac{1}{2}\), and no diversification is the optimal result for \(\tau >\frac{1}{2}\).
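
In this Gaussian case the quantile of \(S_w\) is available in closed form, \(\text {Q}_{\tau }[S_w]=\sigma (w)\,\Phi ^{-1}(\tau )\) with \(\sigma (w)^2=w^2+2(1-w)^2\), so the two regions can be illustrated without simulation. The sketch below is an illustration under the assumption that \(N(0,2)\) denotes variance 2; the function names and the grid size are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def gaussian_portfolio_quantile(w, tau, var_x=1.0, var_y=2.0):
    """tau-quantile of S_w = w*X + (1-w)*Y for independent zero-mean normals."""
    sigma = sqrt(w ** 2 * var_x + (1.0 - w) ** 2 * var_y)
    return sigma * NormalDist().inv_cdf(tau)

def optimal_weight(tau, grid_size=301):
    """Grid-search the weight in [0, 1] maximizing the closed-form quantile."""
    grid = [k / (grid_size - 1) for k in range(grid_size)]
    return max(grid, key=lambda w: gaussian_portfolio_quantile(w, tau))

w_low = optimal_weight(0.25)   # tau below the median: minimize sigma(w)
w_high = optimal_weight(0.75)  # tau above the median: maximize sigma(w)
print(round(w_low, 3), w_high)
```

For \(\tau <\frac{1}{2}\) the factor \(\Phi ^{-1}(\tau )\) is negative, so maximizing the quantile amounts to minimizing \(\sigma (w)\); for \(\tau >\frac{1}{2}\) it amounts to maximizing \(\sigma (w)\), which selects the corner with the higher-variance asset.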

1.3 Two Gaussian independent random variables with different means

Consider now the case of two independent Gaussian random variables with different means. Let \(X\sim N(1,1)\) and \(Y\sim N(1.5,1)\), independent of each other. In this case the random variable Y first order stochastically dominates the random variable X. In this scenario the optimal portfolio decision of an EU individual has to be calculated for each utility function separately because it depends on how the individual faces the trade-off between risk and return.

The results for the QP case are provided in Fig. 17. The results show the presence of an interior solution for \(\tau \le \tau _0\), with \(\tau _0\) around 0.30, and a corner solution \({\widehat{w}}_n^{*}(\tau )=0\) for \(\tau >0.30\). The solution in the right tail of the distribution is rationalized by the first order stochastic dominance of Y over any other convex combination of X and Y for \(\tau \) sufficiently large.
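
The cut-off reported above can be cross-checked analytically. The following sketch assumes unit variances and independence, as stated, and writes \(z_\tau =\Phi ^{-1}(\tau )\) for the standard Normal quantile (notation introduced here for illustration). Since \(S_w\sim N\big (1.5-0.5w,\; w^2+(1-w)^2\big )\),

\[ \text {Q}_{\tau }[S_w] = 1.5 - 0.5\,w + z_\tau \sqrt{w^2+(1-w)^2}, \qquad \frac{\partial \text {Q}_{\tau }[S_w]}{\partial w}\bigg |_{w=0} = -0.5 - z_\tau . \]

Moving away from the corner \(w=0\) raises the quantile only if \(-0.5-z_\tau >0\), that is, if

\[ \tau < \Phi (-0.5) \approx 0.309, \]

which is in line with the simulated value of \(\tau _0\) around 0.30.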

1.4 B.3 Dependent random variables

We now study the case of two dependent random variables. We differentiate between symmetric and asymmetric random variables.

1.4.1 Two Gaussian dependent random variables with same mean

In this exercise, we consider a bivariate Normal random variable (X, Y) with covariance matrix \(\Sigma =\begin{pmatrix} 1 & \rho \\ \rho & 1 \end{pmatrix}\), where \(\rho =0.5\) is the correlation parameter. This scenario is rationalized by Proposition 3.13. More specifically, condition (11) is satisfied for \({\widehat{w}}_n^{*}(\tau )=0.5\), such that an interior solution is obtained for \(\tau \le \tau _0=0.5\). This result is observed in Fig. 18. For values of \(\tau \) greater than 0.5 the optimal solution is either corner, \({\widehat{w}}_n^{*}(\tau )\in \{0,1\}\), and the individual is indifferent between the two.
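
A short calculation illustrates why the cut-off is at the median here. It assumes that both components have a common mean, denoted \(\mu \), and writes \(z_\tau =\Phi ^{-1}(\tau )\) (both symbols are introduced for illustration). With unit variances and correlation \(\rho \),

\[ \mathrm {Var}(S_w) = w^2 + (1-w)^2 + 2\rho \,w(1-w) = 1 - 2(1-\rho )\,w(1-w), \]

so that for \(\rho =0.5\) the variance is \(1-w(1-w)\), which is smallest at \(w=\frac{1}{2}\) (value \(\frac{3}{4}\)) and largest at the corners \(w\in \{0,1\}\) (value 1). Since

\[ \text {Q}_{\tau }[S_w] = \mu + z_\tau \sqrt{\mathrm {Var}(S_w)}, \]

the quantile is maximized by the minimum-variance weight when \(z_\tau <0\) (\(\tau <\frac{1}{2}\)) and by either corner when \(z_\tau >0\) (\(\tau >\frac{1}{2}\)).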

1.4.2 Chi-squared dependent random variables

We complete the case of dependent assets with asymmetric distribution functions. Suppose that \(X\sim \chi ^2_1+1\) and \(Y\sim \chi ^2_2+X-2\) are two dependent assets, each with expected value equal to two. For QP individuals, the optimal portfolio allocation has an interior solution for \(\tau \le \tau _0\), with \(\tau _0=0.60\). The presence of an interior solution for small values of \(\tau \) is rationalized by Theorem 1. However, in contrast to previous examples, Assumption 3 is not satisfied, implying that the optimal portfolio allocation varies over \(\tau \) and there is no separation between risk-averse and risk-loving behavior. Figure 19 reports the optimal portfolio allocation in this case.

1.5 B.4 Optimal portfolio allocation when there is a risk-free asset

Building upon previous insights of the QP theory in Sect. 3.2, we can extend the mutual fund separation theorem to the case of one risk-free asset with return \({\bar{r}}\) and two risky assets \(R_1\), \(R_2\) with distribution functions \(F_{R_1}, F_{R_2}\), respectively, and such that \(F_{R_1}\) crosses \(F_{R_2}\) from below at point \(x_{12}\). This assumption implies that \(R_2\) is riskier than \(R_1\). The portfolio return is defined by the convex combination \(R_p=w_0 {\bar{r}} + w_1 R_1+ w_2 R_2\), with \(w_0+w_1+w_2=1\). The investor’s optimization problem is

\[ \max _{w=\{w_0,w_1,w_2\}} \text {Q}_{\tau }[R_p(w)] \quad \text {subject to}\quad w_0+w_1+w_2=1,\;\; w_0,w_1,w_2\ge 0. \qquad (31) \]

In this case the individual’s optimal portfolio choice is given by the risk-free rate when \(\text {Q}_{\tau }[R_p(w)] < {\bar{r}}\), for any combination of weights \(w = \{w_0, w_1, w_2 \}\) that is different from \(w^* = \{w_0^*=1, w_1^*=w_2^*=0 \}\). For higher quantiles, the solution to the maximization problem (31) is, in principle, quantile-specific. We illustrate this scenario by simulating the returns on three assets with returns \({\bar{r}}\), \(R_1 \sim N(\mu _1,1)\) and \(R_2 \sim N(\mu _2,1)\). The solution for \(0 \le \tau \le 1\) is obtained by simulating \(n=10,000\) realizations of the random variables.

The left panel of Fig. 20 reports the case \({\bar{r}}=0.5\), \(\mu _1=\mu _2=0\). The right panel considers the case \({\bar{r}}=0.25\), \(\mu _1=\mu _2=0\). As discussed above, for \(\text {Q}_{\tau }[R_p(w)] < {\bar{r}}\), the optimal allocation is to invest fully in the risk-free asset. For the parameterization \({\bar{r}}=0.5\), \(\mu _1=\mu _2=0\) (left panel), the value of \(\tau \) that yields the condition \(\text {Q}_{\tau }[R_p(w^*)] = {\bar{r}}\) is \(\tau _0 \approx 0.7\). For values of \(\tau >0.7\), the optimal allocation to the risk-free asset is zero, and the QP individual is indifferent between assets 1 and 2. The right panel, characterized by a smaller risk-free return (\({\bar{r}}=0.25\)), presents a similar outcome. In this scenario the value of \(\tau \) that satisfies the condition \(\text {Q}_{\tau }[R_p(w^*)] = {\bar{r}}\) is \(\tau _0 \approx 0.6\). For higher quantiles, the optimal portfolio allocation is the same as for the left panel and entails a zero allocation to the risk-free asset (see footnote 16).
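
The two cut-offs can be cross-checked in closed form. The sketch below assumes that the risky returns are jointly Gaussian with zero mean and unit variance; any convex combination of them then has standard deviation at most one, so for \(\tau >\frac{1}{2}\) the largest risky \(\tau \)-quantile is \(\Phi ^{-1}(\tau )\), attained by a single asset, and it exceeds \({\bar{r}}\) exactly when \(\tau >\Phi ({\bar{r}})\). The function name `threshold_quantile` is illustrative.

```python
from statistics import NormalDist

def threshold_quantile(risk_free, mu=0.0, sigma=1.0):
    """Quantile index tau_0 at which a single risky asset's tau-quantile
    equals the risk-free return, i.e. Q_tau[R_i] = risk_free."""
    return NormalDist(mu, sigma).cdf(risk_free)

tau0_left = threshold_quantile(0.50)   # left panel: risk-free return 0.5
tau0_right = threshold_quantile(0.25)  # right panel: risk-free return 0.25
print(round(tau0_left, 3), round(tau0_right, 3))  # prints 0.691 0.599
```

These values agree with the reported \(\tau _0 \approx 0.7\) and \(\tau _0 \approx 0.6\).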

Cite this article

Castro, L.d., Galvao, A.F., Montes-Rojas, G. et al. Portfolio selection in quantile decision models. Ann Finance 18, 133–181 (2022). https://doi.org/10.1007/s10436-021-00405-4

DOI: https://doi.org/10.1007/s10436-021-00405-4