Abstract

Teachers’ noticing of students’ mathematical thinking plays an important role in supporting student learning. Yet little is known about how online professional development (PD)—a growing setting for PD in the USA—can cultivate this noticing. Here, we explore the potential of using two online tools for engaging video to support K-2 teachers’ noticing of students’ mathematical thinking in the context of a six-week online video-based PD program. While participating in the program, teachers used a commenting tool that allowed them to view video in its entirety and write a summary note, as well as a tagging tool that allowed them to mark moments of video while viewing and associate notes with those moments. We found that teachers’ regular use of the tagging tool promoted their increased noticing of students’ mathematical thinking in video. Further, the tagging tool and commenting tool appeared to function in complementary ways to support teachers’ noticing. These findings contribute to the field’s growing understanding of how technological advances can support the development and study of mathematics teacher noticing, with implications for the design of online teacher learning environments.

Introduction

Effective teaching requires close attention to students’ thinking in order to make in-the-moment decisions that are responsive to students’ strengths and needs (National Council of Teachers of Mathematics, 2014; Richards et al., 2020). Toward this end, a range of research suggests that cultivating teachers’ noticing can help them navigate students’ thinking during instruction, with numerous in-person video-based professional development (PD) programs showing promise in supporting teachers (Gaudin & Chaliès, 2015). Reflecting on classroom video, in particular, can provide teachers with an opportunity to examine teaching in a context that portrays the richness of instruction while providing needed time for examination (Santagata et al., 2021).

Teacher PD increasingly takes place in an online format, where teachers connect at a distance in both formal and informal ways (Elliott, 2017; Watkins & Portsmore, 2021). Less is known about how features of an online format may constrain or enable teachers’ noticing of students’ thinking while viewing classroom video. From a teacher education perspective, asynchronous online contexts, which offer no opportunity to interact in real time, pose particular challenges for mediating teachers’ noticing of students’ thinking evident in video.

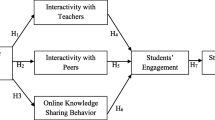

Here, we address this issue by investigating the use of two online tools to support teacher noticing of student thinking. Building on the idea that tools can scaffold productive engagement with video (Kang & van Es, 2019), we examine use of a commenting tool that allowed teachers to view a video clip in its entirety and then write a summary note about the clip, as well as use of a tagging tool that allowed teachers to mark a moment of video as it was viewed and add a note associated with that specific time in the video. This study explores how the tools, embedded and used in one online PD context, functioned to support teachers’ noticing of students’ mathematical thinking in video.

Background and theoretical framing

Noticing students’ mathematical thinking

Given the “blooming, buzzing confusion of sensory data” that are abundant in classrooms (Sherin & Star, 2011, p. 69), making sense of moment-to-moment classroom activity is a particularly complex task for teachers. We refer to this as teacher noticing, conceptualized as two interrelated processes: how teachers selectively attend to what is taking place in their classrooms and how teachers interpret or make sense of these stimuli (Sherin & van Es, 2009). Importantly, such noticing is both active and finite—teachers “direct” and “pay” attention toward some objects and not others (Erickson, 2011, p. 17). For instance, if teachers pay attention to pedagogy or classroom management, which are common foci (e.g., Jacobs et al., 2010; Star & Strickland, 2008), they may not simultaneously attend to students’ thinking.

Noticing students’ thinking is necessary to position teachers to make responsive in-the-moment decisions (Richards et al., 2020). Indeed, Schoenfeld (2011) characterizes highly accomplished teachers as those who “shape their lessons according to what they discover about their students” (p. 463). Yet noticing students’ thinking is not always a routine practice for teachers, and teaching experience alone does not guarantee that teachers develop skills to notice students’ thinking (Jacobs et al., 2010). While important research has explored affordances of different ways of noticing students’ thinking (e.g., Louie et al., 2021), our interest here is continuing to unearth ways teachers can notice students’ thinking, broadly speaking. Noticing students’ mathematical thinking is a significant achievement in itself, and recent research highlights how learning to do so in the context of teacher education and PD can positively impact teacher noticing during instruction (e.g., Stockero, 2020; van Es et al., 2017).

Video-based opportunities for learning to notice

Since the 1980s, and especially over the past two decades, video has been considered a key resource for helping teachers learn to notice (Marsh & Mitchell, 2014; Ramos et al., 2021; van Es et al., 2019). A range of studies across pre- and in-service settings have documented how viewing and discussing classroom video, particularly in collaborative settings such as video clubs, can promote noticing of students’ thinking (e.g., Stockero et al., 2017; van Es & Sherin, 2008; Watkins & Portsmore, 2021). Several affordances of video seem particularly supportive of learning to notice (Sherin, 2004). For instance, video can approximate classroom interactions but does not require an immediate response, enabling teachers to slow down and notice what students are contributing. Video also offers a lasting record of classroom activity, allowing teachers to review classroom interactions multiple times and for multiple purposes. Further, video can be collected, edited, segmented, organized, and scaffolded to suit particular goals.

Supporting video-based noticing

Of course, video in and of itself is not sufficient to induce learning. In fact, experienced teachers often “react and speculate” beyond the information available in the video itself, reflecting what they expect interactions “should” look like (Erickson, 2007, p. 152). Research syntheses highlight that teacher educators must structure teachers’ interactions with video to support teacher learning through the intentional design and selection of prompts, facilitation, video clips, tools, and other mediators (Baecher et al., 2018; Kang & van Es, 2019; van Es et al., 2019). For example, one way that designers have mediated teachers’ interactions with video is to embed video in a structured learning environment, with tasks and prompts that teachers respond to as they explore the video and/or supporting activities. An instantiation of this approach is described in Seago et al.'s (2018) depiction of “video in the middle,” with video viewing occurring in between selected pre- and post-activities. Here, teachers might work together on a math task, then view a video of students engaging with the same task, and then discuss how they might use the task in their own classrooms.

As teacher learning experiences are increasingly offered in online contexts, and in some cases asynchronously (Falk & Drayton, 2015), new questions arise about how to support learning to notice in such formats. Recent advances in technology play a key role here. Teachers today can easily record their own classrooms, and video editing and sharing tools are widely available. Online platforms provide forums through which teachers can easily access and explore videos with other teachers, as well. But mediating noticing seems particularly challenging in online, asynchronous contexts, where real-time interactions are not part of the design (Baecher et al., 2018; Watkins & Portsmore, 2021). We explore how different tools embedded and used in a designed context of online, asynchronous, video-based PD can function to promote teachers’ noticing of students’ mathematical thinking.

Tools to support video-based noticing

Sociocultural and situative perspectives point to the role of tools—broadly conceptualized as material or conceptual artifacts created and used to facilitate action—as important mediators of activity and learning (see, for example, Cole & Engeström, 1993; Greeno, 2006). Interactions with tools can afford or constrain forms of action, and as such can shape ways of participating in activities. In this study, we seek to build from the idea that tools can productively mediate teachers’ engagement with video-embedded activities, as coordinated with each other and other features of video-based activity systems (Kang & van Es, 2019). We consider roles and impacts of tools as they are embedded and used within the video-based activities of our PD, with associated tasks, prompts, and social interactions, which we will describe further in the study context.

Aligned with advances in technology, video reflection tools are increasingly used to support teacher learning with video (e.g., Brunvand, 2010; Rich & Hannafin, 2009). Such tools may range from electronic notebooks to written comments on a site to video annotation tools (Brunvand, 2010). The latter in particular has been a subject of limited but increasing study in teacher education (Evi-Colombo et al., 2020). Video annotation tools enable the linking of notes or other forms of annotation to specific video moments or segments; when used as part of educative video-based activities, such tools have been shown to support teachers in reflection and critical thinking (Evi-Colombo et al., 2020), recognizing participation structures (Burwell, 2010), and connecting reflections to evidence (Rich & Hannafin, 2009), as well as cultivating growth mindsets among teacher candidates (Chizhik & Chizhik, 2018).

However, less is currently known about how video reflection tools may support teacher noticing specifically, especially in online programs (Watkins & Portsmore, 2021). As a recent review of video-based mathematics teacher noticing studies claimed, “Despite the advances of digital video technologies in the last one or two decades, this review made apparent that video software was rarely used, video annotation features were seldom utilized, and the potential of technology for supporting the development and for studying mathematics teacher noticing was under-examined” (Santagata et al., 2021, p. 128). A few studies have drawn on video annotation tools to support and provide windows into teacher noticing (Castro Superfine et al., 2017; McFadden et al., 2014; Sherin & van Es, 2005; So et al., 2016; Walkoe et al., 2019; Watkins & Portsmore, 2021), though often in concert with in-person learning opportunities and generally focusing on single tools rather than multiple tools used together. For instance, Sherin and van Es (2005) explored the impact of using an online video annotation tool on preservice teachers’ subsequent analyses of their own classroom videos without the use of the annotation tool. Teachers who had previously used the tool selectively attended to more significant interactions in their videos and provided more evidence-based interpretations, beginning to “refer to specific student actions and comments in the video as representing ‘student thinking’” (Sherin & van Es, 2005, p. 487). These characteristics were not seen as widely in the reflections of teachers who had not engaged with the tool, suggesting that work with the video annotation tool productively mediated preservice teachers’ noticing.

Watkins and Portsmore's (2021) study of inservice teachers’ noticing and framing of online video discussions is most similar to the context and purpose of our present work, as they explored the possibilities of supporting noticing of students’ engineering thinking with a video annotation tool in an online, asynchronous course. They considered the role of several course design features intended to promote a framing of making sense of student thinking, including maintaining this focus across course activities and building on teachers’ contributions in varied ways as facilitators to model and highlight this focus. While our broader course design takes similar features into account, in this study we focus on the use and potential affordances of different video reflection tools for noticing in an online, asynchronous video-based PD.

Specifically, we explore teachers’ use of a commenting tool that functions similarly to a discussion board, a video annotation tool that we call a tagging tool, and the ways that teachers used them together. While work with video reflection tools to support teacher noticing in online settings is sparse, we anticipated that either or both tools could support teachers in selectively attending to the students and the mathematics in the video when the task is framed as working to notice students’ mathematical thinking, but we also imagined that the tools may serve complementary functions with respect to teacher noticing.

The commenting tool we examine here invites teachers to write a note about a video in its entirety on a discussion board-like platform (see “Tools for engaging video” for more on the specific tools). We anticipated that the tool’s positioning outside of the video player may afford more holistic discussions of noticings from across the video, and potentially selective elevation of the most noteworthy events from teachers’ perspectives, but may make concrete connections to specific moments in the video more challenging. Further, we envisioned that the format of the tool (with a large text box and threaded nature) may afford more elaborated and interconnected remarks from teachers (Cattaneo et al., 2019) and hence more extensive sense-making around stimuli from the video.

In contrast, like other video annotation tools, the tagging tool is integrated into the video player and offers teachers the opportunity to pause the video, mark particular moments, and attach notes to such moments. With its integration with the video, we anticipated that the tagging tool may promote teachers’ selective attention to specific occurrences in the video while viewing (e.g., Sherin & van Es, 2005), as the tool associates a note with a particular timestamp. We also imagined that the smaller text box may constrain the length of remarks and the amount of interpretation teachers would offer about what they noticed.

Additionally, we envisioned potential impacts of using the tools together for noticing in the online PD design. For instance, if teachers used both tools to support their video-based noticing, they could theoretically draw on what they noticed about a specific moment (a potential affordance of the tagging tool for noticing) to develop a more extensive and holistic interpretation of what took place (a potential affordance of the commenting tool for noticing), similar to what So et al. (2016) observed when they had teachers use a video annotation tool prior to engaging in face-to-face discussions with colleagues.

Here we examine these possibilities in the context of an online video-based PD for elementary teachers. Our primary research question was: How might the use of a commenting tool and a tagging tool in a PD activity system support teachers in noticing students’ mathematical thinking evident in video? We also asked: If teachers are prompted to use both tools, to what extent and how are their comments and tags related?

Study context and methods

Course context

The data we present come from an investigation of two iterations of a six-week online PD course on mathematical argumentation in the early grades, adapted from a course developed at the University of Washington (Lomax et al., 2017) and hosted by the Teaching Channel. Broadly speaking, the goal of the course was to support teachers in engaging their students in mathematical thinking and discourse, framed around a series of argumentation tasks that invited students to make claims and support them with evidence, and discussing these experiences using video with colleagues in the online platform. For the purposes of this study, we conceptualized the course activities as rich opportunities for teachers to notice students’ mathematical thinking.

Participants

Ten K-2 teachers from two neighboring suburban districts in the midwestern USA participated in each iteration of the course. All 20 teachers were women, 19 identified as White and one as Latinx. Cohort 1 teachers reported an average of 14.7 years of teaching experience, ranging from 4 to 28 years; three teachers reported having taken at least one online class about math teaching. Cohort 2 teachers reported an average of 10.3 years of teaching experience, ranging from 1 to 27 years, and none reported having taken online courses about math teaching.

Course structure and design

Our course design was informed by literature on effective practice- and video-based PD (e.g., Beisiegel et al., 2018; McDonald et al., 2013), adapted to an online asynchronous setting. The course was organized into six weekly modules, each of which had start and end dates but could be completed asynchronously anytime during a given week. In the first week of the course (which we call Week 0 because of its introductory nature), teachers learned about argumentation in mathematics broadly through example videos and blog posts depicting argumentation within elementary classrooms, as well as talk moves for facilitating productive discussion among students (Chapin & Anderson, 2013). In Weeks 1–4, teachers were introduced to a series of tasks designed to support students’ mathematical argumentation. For example, in Week 2 teachers learned about Which One Doesn’t Belong? (Danielson, 2016), in which students are shown a set of four images and asked to make claims about which image does not belong with the others and why. The images are selected so that there is not a single correct answer; there are reasons that any of the images might not belong.

With respect to video specifically, teachers were asked to video record themselves using the given task for the week in their classroom and select a four- to six-minute excerpt of video to upload to the online course along with a brief written reflection. Similar to the framing invited by Watkins and Portsmore (2021), teachers were consistently encouraged to focus their reflections on noticings about student thinking within the clip: ideas students shared, ways students engaged in argumentation, and what surprised or puzzled them about students’ thinking. Teachers were also asked to interact with at least two of their peers’ videos and reflections; as such, they had opportunities to observe both their own and others’ videos (Seidel et al., 2011) and engage in collaborative analysis with peers and researchers (Ramos et al., 2021). During Week 5 of the course, teachers engaged their students again in a previous task and reflected on their students’ learning during the course. These features and the roles of the researchers (see “The role of the researchers” section) remained consistent across iterations; what shifted was the ways in which course participants were asked to use online video reflection tools, described below.

Tools for engaging video

As noted, teachers had access to two tools for remarking on their own and their peers’ videos: a commenting tool and a tagging tool. The commenting tool allowed teachers to remark on a video outside of the video itself, in an environment akin to a discussion board as shown in Fig. 1. Subsequent remarks could either be in the form of a “Reply” to a posted comment or a new thread. (Note that all names here (e.g., "Jaime" in Fig. 1) and throughout the manuscript are pseudonyms.)

The tagging tool, in contrast, was integrated with the video player within the online course. Once teachers uploaded their video to the course site, the video subsequently played in a window with the tagging tool available to the right, as shown in Fig. 2. Teachers could click “Add Note” to add a tag while watching, and the video would pause in that moment for them to type their remarks, which were associated with the time that the video was paused. Tags were persistent and visible once made, so teachers could see and “Reply” to any tags that were already on the video, consistent with design suggestions to provide space for shared annotations (Cattaneo et al., 2019).

These two tools and their uses formed the basis of revisions we made to the course across iterations, as we intentionally sought to enhance teachers’ noticing of students’ mathematical thinking from the video.

Design for cohort 1

In the initial iteration of the course, we aimed to seed online discussion about student thinking in the videos by explicitly asking teachers to use the commenting tool to remark about at least two peers’ videos each week. The prompt for discussion each week read:

Choose two or three colleagues' videos to watch, and read their reflections as well. On the discussion board, start or enter a conversation about the videos, reflections, or existing responses. You might want to include what you notice about students’ thinking, the types of evidence students are using to support their ideas, anything that surprised you, questions you have, or how your ideas are similar to or different from those of your colleagues.

The tagging tool was available for use, but teachers were not given instructions on whether or how to use it. In retrospect, we considered it appropriate to afford teachers some flexibility in their use of the tools, and we were curious to see whether use of the commenting tool would support teachers' noticing of students' thinking.

Design shift for cohort 2

As we prepared for a second iteration of the course, we wondered if more explicit directions around use of the tagging tool might promote teachers’ noticing of students’ mathematical thinking in the video. In particular, we had noticed that Cohort 1 teachers all used the tagging tool at some point to remark on their own or their peers’ videos, and that their remarks seemed more attentive to students’ mathematical thinking evident in the video when using the tagging tool as compared to the commenting tool. We wondered if the tagging tool might orient teachers to students’ thinking overall—for instance, if they were required to use the tagging tool, would we see an increase in their noticing of students’ mathematical thinking from the video in comment threads as well? And if teachers were invited to use both tools together, would the tools function in distinct or redundant ways in their noticing? As described in the “Tools to support video-based noticing” section, we theoretically anticipated that the different natures of the tools and their positions with respect to the video itself could support complementary uses.

In the second iteration of the course, therefore, we explicitly integrated the tagging tool into the flow of teachers’ activity. Cohort 2 teachers were asked to tag two moments in their own posted videos each week and to tag two moments that stood out to them in the videos of two peers along with use of the commenting tool. The prompt for discussion looked similar across iterations, but now integrated the tagging tool (highlighted below in italics):

Choose at least two colleagues’ videos to watch. Use the “Add Note” tool to tag at least two moments in their videos that stand out to you. Then, read their reflections. On the discussion board, start or enter a conversation about the videos, reflections, or existing responses. You might want to include what you notice about students’ thinking, the types of evidence students are using to support their ideas, anything that surprised you, questions you have, or how your ideas are similar to or different from those of your colleagues.

This study examines how this shift in course design—from asking teachers to use the commenting tool in the first iteration of the course, to asking teachers to use the tagging tool along with the commenting tool in the second iteration—influenced teachers’ noticing of students' mathematical thinking in the video as evident in their remarks.

The role of the researchers

Prior to the start of the asynchronous course activities in both cohorts, teachers and researchers met for a kick-off event. The goal was to introduce the course structure and online system as well as to review the research aspects of the project. During course implementation, the researchers were available to assist teachers with any technical questions. A subset of the research team (including all co-authors of this study) served as facilitators for the course. Each week, one facilitator uploaded a video to introduce the topic for the week and reiterate the focus on exploring students’ thinking. In addition, facilitators remarked on videos using the tools in the same ways that participants were asked to do in each iteration of the course. Facilitators consistently modeled an inquiry stance and sustained attention to events in the video and students’ mathematical thinking (van Es et al., 2014) as they tagged and commented on videos, and they intentionally aimed not to be the first contributors to discussions about shared videos in order to invite teachers to set the stage (Beisiegel et al., 2018). However, similar to Watkins and Portsmore’s (2021) observation, facilitators’ remarks within the asynchronous interactions did not overall seem to prompt extended exchanges or shift the nature of the ongoing discussion threads.

Data sources

To understand the impacts of our different tool-based designs on teachers’ noticing, we examined the substance of all tags and comments teachers made about teacher-generated video clips throughout both iterations of the course. Note that teachers remarked less consistently than we anticipated, resulting in fewer total comments and tags than we might have expected. In Cohort 1, teachers made a total of 112 tags and 36 comments; 105 of the tags discussed their peers’ videos, 7 tags discussed their own videos, and all 36 comments discussed their peers’ videos. Cohort 2 made 233 tags and 57 comments; 125 of the tags discussed their peers’ videos, 98 discussed their own videos, and all 57 comments discussed their peers’ videos. Nine Cohort 1 teachers and all ten Cohort 2 teachers also participated in an exit interview with a researcher in which they discussed their experiences in the course.

Analysis

Our analytic process proceeded in several phases. Phases 1–3 facilitated exploration of our primary research question of how the use of the tools in the PD activity system supported noticing. Phases 4 and 5 addressed our secondary research question about relations between comments and tags when both tools were used. In these analyses, we treat the content of teachers’ remarks in tags and comments as proxies for their noticing (Sherin & Star, 2011).

Phase 1: segmenting tags and comments into idea units

The first stage of analysis included dividing tags and comments into idea units that had a singular focus or topic (Jacobs & Morita, 2002). We chose to do this because sometimes the tags, and often the comments, included multiple foci, and we wanted to ensure our initial analysis accounted for all foci teachers noticed.

The first two co-authors independently segmented 20% of the tags and 20% of the comments from the first iteration of the course into idea units. They repeated this process twice to reach 95% agreement. Disagreements were resolved through discussion. They then independently segmented the remaining data. The first iteration of the course yielded 123 tag units and 50 comment units for analysis. The second iteration of the course yielded 237 tag units and 97 comment units for analysis.

Phase 2: coding tag and comment units

In the second stage of analysis, we coded each idea unit across dimensions that allowed us to track and compare the focus of teachers’ noticing. Specifically, we drew on elements of a scheme that van Es and Sherin (2008) used to characterize teachers’ noticing within in-person video club settings. This scheme depicts who teachers noticed (“Actor”), what they noticed (“Topic”), and whether their remarks were based on the video or events outside the video (“Video-based”) (see Table 1).

For instance, consider the following tag unit from a Cohort 2 teacher early in the course: “I love how you said, ‘good observation.’ I noticed in my videos, I say ‘okay’ a lot.” Here, we coded “Actor” as teacher, since the person being centered was the teacher. We coded “Topic” as pedagogy because the tag focused on a specific approach the teacher used when interacting with students’ contributions. Finally, we coded “Video-based” as yes because the tag referred to an occurrence within the video. To establish interrater reliability, all three authors independently coded 20% of the idea units randomly selected across tools and course iterations. We found substantial agreement on all dimensions with Fleiss’ Kappa (Hartling et al., 2012) (Actor = 0.871; Topic = 0.778; Video-based = 0.795). Subsequently, we discussed and resolved all disagreements; then, two of the authors coded the remaining idea units.
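As an illustration of the agreement statistic named above, the following is a minimal sketch of Fleiss' Kappa for three raters assigning one categorical code per idea unit. The function name `fleiss_kappa` and the sample ratings are hypothetical and are not the study's data; the sketch only shows how such a value could be computed under the standard definition.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for a list of items, each a list with one label per rater.

    Assumes every item was rated by the same number of raters.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if any(len(item) != n_raters for item in ratings):
        raise ValueError("every item needs the same number of raters")

    categories = sorted({label for item in ratings for label in item})
    counts = [Counter(item) for item in ratings]

    # Observed agreement: share of agreeing rater pairs, averaged over items.
    per_item = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_item) / n_items

    # Chance agreement: based on the overall share of each category.
    shares = [
        sum(c[cat] for c in counts) / (n_items * n_raters) for cat in categories
    ]
    p_chance = sum(s ** 2 for s in shares)

    if p_chance == 1.0:
        return float("nan")  # undefined when only one category is ever used
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical "Actor" codes from three raters for four idea units.
actor_ratings = [
    ["teacher", "teacher", "teacher"],
    ["student", "student", "student"],
    ["student", "student", "teacher"],
    ["other", "other", "other"],
]
kappa_actor = fleiss_kappa(actor_ratings)
```

In practice the same function would be run once per coding dimension (Actor, Topic, Video-based) on the jointly coded subset of idea units.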

Finally, we distinguished idea units by whether they demonstrated the following cluster of codes—Actor: Student, Topic: Mathematical thinking, and Video-based: Yes. We called this cluster “Student Mathematical Thinking in the Video” (SMTV for short) and used it as our primary way of identifying idea units that demonstrated the characteristics we were striving for in teachers’ noticing. Any tag or comment that contained at least one SMTV tag unit or comment unit was considered to be an “SMTV tag” or “SMTV comment.”
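The SMTV rule described above can be sketched as a simple predicate. This is a hedged illustration only: the `IdeaUnit` class, the field names, and the string labels are hypothetical stand-ins for the coding scheme, not the authors' actual data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IdeaUnit:
    actor: str         # who was noticed, e.g. "student" or "teacher"
    topic: str         # what was noticed, e.g. "mathematical thinking"
    video_based: bool  # True if the remark refers to an event in the video


def is_smtv_unit(unit):
    """An idea unit is SMTV only if all three codes match the cluster."""
    return (
        unit.actor == "student"
        and unit.topic == "mathematical thinking"
        and unit.video_based
    )


def is_smtv_production(units):
    """A tag or comment is SMTV if at least one of its idea units is SMTV."""
    return any(is_smtv_unit(u) for u in units)


# The example tag unit from Phase 2: teacher / pedagogy / video-based.
praise_unit = IdeaUnit(actor="teacher", topic="pedagogy", video_based=True)
# A hypothetical unit about a student's reasoning seen in the clip.
reasoning_unit = IdeaUnit(
    actor="student", topic="mathematical thinking", video_based=True
)

tag_a = [praise_unit]                  # not an SMTV tag
tag_b = [praise_unit, reasoning_unit]  # SMTV tag: one unit qualifies
```

Note that the roll-up is deliberately permissive: a long comment with several non-SMTV units still counts as an SMTV comment if a single unit meets the cluster.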

Phase 3: conducting statistical analyses

In this phase of analysis, we first examined the total number of productions by teacher (both tags and comments) as well as the proportion of SMTV productions by teacher within each cohort and determined that there were no significant differences that would affect cross-cohort comparisons. We then conducted a Chi-square test of independence to examine the relationship between cohorts in producing SMTV comments and SMTV tags, respectively. However, the nature of our dataset is such that each data point (tag or comment) is not fully independent, as individual teachers made multiple tags and comments. Thus, we also calculated the proportion of SMTV tags produced by each teacher in each cohort, then used a Mann–Whitney U test to compare those proportions of SMTV tags by teacher across cohorts to examine whether the “average” teacher in Cohort 1 differed from the “average” teacher in Cohort 2 in terms of the proportion of SMTV tags that they made (Stock & Watson, 2012). We repeated the same process with the cohorts’ SMTV comments.
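The teacher-level comparison can be sketched as follows. The per-teacher flags below are invented for illustration (the source does not report per-teacher data), and the helper names `smtv_proportion` and `mann_whitney_u` are hypothetical. The sketch computes only the U statistic; a p-value would normally come from a statistics library such as SciPy's `scipy.stats.mannwhitneyu`.

```python
def smtv_proportion(flags):
    """Share of one teacher's productions (tags or comments) coded as SMTV."""
    return sum(flags) / len(flags)


def mann_whitney_u(xs, ys):
    """Return the smaller of the two U statistics, counting ties as 0.5."""
    u_x = sum(
        1.0 if x > y else 0.5 if x == y else 0.0
        for x in xs
        for y in ys
    )
    u_y = len(xs) * len(ys) - u_x
    return min(u_x, u_y)


# Hypothetical SMTV flags per production, keyed by (made-up) teacher ID.
cohort1 = {
    "T1": [True, False, False],
    "T2": [True, True, False, False],
    "T3": [False, True],
}
cohort2 = {
    "T4": [True, True, True],
    "T5": [True, True, False, True],
    "T6": [True, False],
}

# One proportion per teacher, so each teacher contributes a single data point.
props1 = [smtv_proportion(flags) for flags in cohort1.values()]
props2 = [smtv_proportion(flags) for flags in cohort2.values()]
u_stat = mann_whitney_u(props1, props2)
```

Collapsing each teacher to one proportion is what addresses the non-independence concern: the test then compares teachers rather than individual tags or comments.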

Phase 4: examining relations between SMTV comments and tags

Next, we considered relations between SMTV comments and tags for Cohort 2 teachers specifically, who more consistently used both tools. We used qualitative analyses to identify instances in which teachers in Cohort 2 produced a SMTV tag and a SMTV comment that referred to the same instance of student mathematical thinking in the video. To do so, we first noted those instances in which a Cohort 2 teacher tagged and commented on the same video (n = 33). From this set, we used bottom-up open coding (Miles et al., 2018) to determine if the same event was referred to in both the tag and the comment. If so, we referred to the tag and comment as “connected.” We then calculated the number of SMTV comments that connected to SMTV tags, and the number of SMTV comments and SMTV tags that connected to non-SMTV tags or comments, respectively.

Phase 5: analyzing teacher interviews

Finally, we used qualitative methods to analyze the exit interviews conducted with teachers in Cohort 2 to better understand their experiences using both tools. We first transcribed all the interviews, then located any remarks about the commenting and tagging tools, and specifically sought to characterize teachers’ perceptions of the affordances of the tools for noticing and/or perceived relations between the tools.

Findings

The goal of this study was to explore how a shift in course design that asked teachers to use both a commenting and a tagging tool to interact with classroom video in an online PD course influenced teachers’ noticing, as evident in their remarks with the tools. We were particularly interested in impacts on teachers' noticing of students' mathematical thinking. As discussed previously, the design shift was in part prompted by our observation that the remarks of teachers in Cohort 1 seemed more attentive to students’ mathematical thinking evident in the video (referred to as “SMTV” moving forward) when using the tagging tool as compared to the commenting tool. When we went back and coded systematically, our coding of tags and comments in Cohort 1 confirmed this observation: 71% of tags (80/112) focused on SMTV, whereas only 47% of comments (17/36) had this focus. We conjectured that asking Cohort 2 teachers to tag before commenting might enhance their noticing of SMTV.

Finding 1: Cohort 2 teachers produced proportionally more SMTV comments than did Cohort 1 teachers

As we conjectured, Cohort 2 teachers produced proportionally more SMTV comments than did Cohort 1 teachers. As shown in Table 2, 47% of teachers’ comments from Cohort 1 were coded as SMTV (17 of 36 comments), while 84% of teachers’ comments from Cohort 2 were coded as SMTV (48 of 57 comments). These differences were significant using a Chi-square test of independence, χ² (92, N = 93) = 14.35, p < 0.01. Furthermore, when we compared the proportions of SMTV comments by teacher across cohorts using the Mann–Whitney U test (Cohort 1 M = 0.45, SD = 0.33; Cohort 2 M = 0.82, SD = 0.19), we also found a statistically significant difference (U = 14.5; p = 0.014; p < 0.05). Together, these analyses illuminate the overall difference in SMTV comment generation between the cohorts at both the cohort level and teacher level.

While comments coded as SMTV varied, in general, they depicted either students' ideas or methods from the video. For example, after watching a video from a colleague’s class in which students discussed whether 5 + 3 = 5 − 3 was true or not, and subsequently what would make it true, Dorothy from Cohort 2 wrote in a comment:

… I love how Brodie said you could use the greater sign. He was right—that would make it true! So interesting. I love when they come up with an idea different than what I was expecting or thinking. (Actor: Student, Topic: Mathematical thinking, Video-based: Yes)

Here, Dorothy mentioned Brodie’s idea, noting that it was not something she expected to hear from a student. In another example comment, Patti, a Cohort 1 teacher, remarked on students’ efforts to rearrange an equation to make it a true statement. She wrote:

I found it interesting that some of your students, like mine, so desperately wanted to rearrange the equation! I wonder where this comes from? I was wondering if it was just because since we read from left to right, so for many, it seems natural that we should start at the left and just have to solve by writing what’s “missing” on the right? (Actor: Student, Topic: Mathematical thinking, Video-based: Yes)

Patti’s comment focused on a common method multiple students used and why they might have done so. As Table 2 shows, such SMTV comments were present in Cohort 1 but more common among Cohort 2 teachers.

We also found that Cohort 2 teachers produced proportionally more SMTV comments each subsequent week of the course. This was not the case for Cohort 1 teachers. Figure 3 shows that in Week 2 of the course, 78% of Cohort 2 teachers’ comments were coded as SMTV; this proportion rose to 88% in Week 3 and 90% in Week 4. In contrast, 53% of the comments that Cohort 1 teachers produced during Week 2 were coded as SMTV, and that proportion fell in subsequent weeks to 44% and 42%, respectively.

These differences in cohorts’ use of the commenting tool are notable. In the findings that follow, we explore the content of teachers’ tags and how teachers in Cohort 2 used the commenting and tagging tools together—reflecting the primary design distinction between cohorts.

Finding 2: preliminary evidence suggests that more consistent use of the tagging tool over time supported increased noticing of SMTV in tags

Turning to teachers’ use of the tagging tool in each cohort, we saw that as prompted, Cohort 2 teachers used the tagging tool more than Cohort 1 teachers (233 total tags in Cohort 2 compared to 112 total tags in Cohort 1). Cohort 2 teachers began using the tagging tool in Week 1 of the course and made more tags each week as the course progressed, whereas Cohort 1 teachers started using the tagging tool in Week 2 of the course and made fewer tags week to week. Here, we take a closer look at the focus of teachers’ tags in each cohort, given their differential use of the tagging tool.

Table 3 shows the proportion of tags coded as SMTV within each cohort: 71% of Cohort 1’s tags were coded as SMTV (80 of 112 tags), while 86% of Cohort 2’s tags were coded as SMTV (200 of 233 tags). These differences were statistically significant using a Chi-square test of independence, χ² (344, N = 345) = 10.27, p < 0.01. However, when we compared the proportions of SMTV tags by teacher across cohorts using the Mann–Whitney U test (Cohort 1 M = 0.67, SD = 0.28; Cohort 2 M = 0.85, SD = 0.14), the difference between cohorts was not statistically significant (U = 28.5; p = 0.112; p > 0.05). In other words, the average teacher in Cohort 2 did not produce proportionally more SMTV tags than did the average teacher in Cohort 1.
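The two cohort-level Chi-square statistics reported in Findings 1 and 2 can be reproduced from the counts given in the text. The sketch below uses the standard Pearson formula for a 2 × 2 table without a continuity correction; that the analysis used the uncorrected statistic is an assumption on our part, inferred only from the fact that the values match. The function name is ours.

```python
def pearson_chi_square_2x2(a: int, b: int, c: int, d: int) -> float:
    """Uncorrected Pearson chi-square statistic for the 2 x 2 table

        [[a, b],
         [c, d]]

    where rows are cohorts and columns are SMTV / non-SMTV productions.
    """
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator


# Comments: Cohort 1 had 17 SMTV of 36; Cohort 2 had 48 SMTV of 57.
chi2_comments = pearson_chi_square_2x2(17, 36 - 17, 48, 57 - 48)

# Tags: Cohort 1 had 80 SMTV of 112; Cohort 2 had 200 SMTV of 233.
chi2_tags = pearson_chi_square_2x2(80, 112 - 80, 200, 233 - 200)

print(round(chi2_comments, 2), round(chi2_tags, 2))  # 14.35 10.27
```

As noted in the analysis section, these cohort-level statistics treat every tag or comment as an independent observation, which is why the teacher-level Mann–Whitney comparison is reported alongside them.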

How might we understand the difference in proportions of SMTV tags across cohorts, if there were not significant differences by teacher? Figure 4 shows each cohort’s proportion of SMTV tags over time (see Note 2).

The trends in this graph are interesting to consider. Cohorts’ patterns of tagging tool use with respect to SMTV were similar; both cohorts produced proportionally more SMTV tags over time (until Cohort 2 teachers’ proportion of SMTV tags plateaued near 100% in Week 3 of tool use). While these patterns are similar, recall that Cohort 1 teachers made fewer tags week to week while Cohort 2 teachers made more. Thus, we suspect that the significant difference in the proportion of SMTV tags between cohorts (as seen with the Chi-square results) was connected to the extra week that Cohort 2 used the tool and the overall difference in total tag productions between the cohorts.

Together, these analyses suggest that the overall difference in cohorts’ SMTV tags may be best explained by the fact that Cohort 2 teachers used the tagging tool more consistently, and for a longer period of time, than Cohort 1 teachers did. We think the data presented suggest that ongoing use of the tagging tool, in the context of this course, supported teachers’ noticing of SMTV.

Finding 3: Cohort 2 teachers’ SMTV comments sometimes, but not always, directly connected to SMTV tags they made

Taken together, Findings 1 and 2 suggest that tags became increasingly focused on SMTV the more teachers used the tagging tool in the course, and that tagging may have had an effect on commenting. One possible mechanism for the latter is fairly direct: a teacher noticed SMTV while tagging and drew on that tag directly while commenting, resulting in an SMTV comment connected to the SMTV tag. Here, we examine relationships among Cohort 2’s tags and comments to explore whether this occurred.

Almost half of teachers’ SMTV comments connected directly to an SMTV tag they made about the same video moment.

Recall that Cohort 2 had 57 total comments, 48 of which we coded as SMTV. When we looked at the content of Cohort 2 teachers’ SMTV comments and whether they connected to SMTV tags that the same teacher made about the same video moment, we found that this occurred in 22 of the 48 SMTV comments (46%). There was only one instance in which an SMTV tag was connected to a comment that was not coded as SMTV, and only one instance in which an SMTV comment was connected to a tag that was not coded as SMTV. The remainder of the SMTV comments did not directly connect to an SMTV tag on the same moment by the same teacher.

In Table 4, we share two quick examples of what it looked like for an SMTV tag to be connected to an SMTV comment, with the italicized portions of the comments reflecting the specific connections we identified:

As illustrated in Table 4, an SMTV comment that was “connected” to an SMTV tag included remarks about the tagged moment of student thinking in the video. Teachers at times elaborated on what they noticed in the tag, as in the second example where the teacher unpacked that a “strong understanding” of the equal sign (to her) was reflected in the student’s explanation that one equation can be equal to another equation, not just a number.

Further, as Cohort 2 teachers used both tools, we saw that there were increased SMTV connections across tools later in the course. This is illustrated in Figs. 5 and 6, with Fig. 5 looking at the number of connected SMTV comments by week and Fig. 6 looking at the number of teachers in Cohort 2 who made such comments by week.

Figure 5 illustrates that week to week, Cohort 2 teachers produced more SMTV connected comments, with a notable difference between Week 1 (n = 1) and Week 4 (n = 13). Further, more teachers began to use the commenting tool in ways that were connected to tags over time (see Fig. 6). One teacher connected her SMTV comment to her SMTV tag in Week 1, whereas by Week 4 nine of the ten teachers had connected SMTV tags and comments. This pattern suggests that the way teachers used the tools in tandem may be part of what supported their noticing of SMTV in comments.

Teachers’ exit interviews also provided some evidence that they noticed student thinking when tagging and then drew on these noticings when commenting. When asked how they used the tools, eight of the ten teachers in Cohort 2 described using the tagging tool in relation to a peer’s video prior to writing a comment. Five teachers in their exit interviews described how they used the tagging tool to specifically mark moments of student thinking that caught their attention, and three teachers described extending these noticings with the commenting tool. For instance, one kindergarten teacher, Dorothy, noted that she “would tag it… and then maybe elaborate a little more in the post.” She described tags as observations, “noticing the student did this or the student did this, and then more elaboration in the comment part.” Similarly, a second-grade teacher, Heather, reflected how the tags are “very short… and then you can almost be a little more elaborative in your response when you comment on the reflection. And you might build on that, what you said on the tag and maybe elaborate a bit more…” However, for two teachers, the tags and comments felt somewhat redundant with each other. For instance, first-grade teacher Audrey noted, “I felt like the tagging, the tagging comment and the reflecting comments were… saying the same thing,” and she instead tended to use one tool or the other for a given video depending on the length of her response.

Yet about half of teachers’ SMTV comments were not connected to an SMTV tag they made about the same video moment.

In Cohort 2, there were also many SMTV comments (26 of 48 SMTV comments, 54%) that were not connected to SMTV tags the teacher made about the same video moment. Thus, direct connections between SMTV tags and comments were not the only mechanism supporting generation of an SMTV comment. Preliminary trends in our data and evidence from teachers' perspectives in their interviews point toward several other possibilities for why Cohort 2 produced more SMTV comments than Cohort 1.

One possibility is that noticing SMTV became an overall norm in the course context—that as Cohort 2 teachers watched and interacted with classroom videos using both tools, in the context of prompts and remarks from facilitators and peers that addressed SMTV, teachers focused more on SMTV in their comments. One second-grade teacher, Kendra, shared her perception that this is what occurred for her and potentially others:

I felt like my posts in the beginning, I wasn’t used to reflecting on students’ thinking… it was not our typical way of reflection… I was able to shift with practice on what the kids are saying and… why they’re saying it like that. It was hard… I think as the weeks went on, we got better at it.

In this reflection, Kendra positioned reflecting on students’ thinking as something that she “wasn’t used to,” and noted that others might not be used to doing so either. She perceived that she and other teachers got “better at” doing so “with practice.”

Another possibility is that Cohort 2 teachers’ noticing of SMTV in comments was cued by seeing SMTV tags on videos, even if they did not make them. Recall that all tags were persistently visible with the video as soon as they were made in the course platform. Eighteen of the 48 total SMTV comments (38%) occurred in response to videos on which the commenting teacher had not made any tags, but SMTV tags from other teachers existed on the video; there was only one SMTV comment made about a video without SMTV tags. In their exit interviews, five teachers discussed paying attention to existing tags on the video while watching.

Thus, the data suggest that teachers in Cohort 2 became increasingly attuned and socialized into noticing SMTV through multiple mechanisms involving the tagging tool. Direct connections between tags and comments mattered, but the very acts of tagging and being present to others’ tags may have also shaped collective noticing of SMTV in ways that permeated teachers’ comments.

Discussion

As teacher education and PD efforts increasingly move in the direction of online, asynchronous offerings (Elliott, 2017; Falk & Drayton, 2015), questions arise about how to support teachers' noticing of students’ mathematical thinking in video. How can teacher educators design for and promote such noticing without the real-time interactional possibilities present in in-person settings? How might technological advances support the development of mathematics teacher noticing (Santagata et al., 2021)? In this study, we examined the potential of using two video reflection tools for promoting elementary teachers’ noticing of SMTV in an online, video-based PD course. The commenting tool, located outside the video player, invited teachers to make summary remarks on what they noticed after viewing a video, while the tagging tool, located within the video player, prompted teachers to remark on specific moments in a video.

We found that two cohorts of participating teachers exhibited differences in their degrees of noticing SMTV with the tools. While the course stayed largely the same in design and consistently emphasized noticing students’ mathematical thinking in prompts and examples across cohorts, the cohorts were asked to interact differently with the available video reflection tools—Cohort 1 was asked to use the commenting tool after watching videos and given the option to use the tagging tool, whereas Cohort 2 was asked to tag and comment on videos. When we coded the focus of both cohorts’ comments and tags, we found that Cohort 2 teachers noticed significantly more SMTV in both comments and tags than Cohort 1 teachers (Findings 1 and 2). Both cohorts showed similarities in their increasing noticing of SMTV in tags across weeks, but Cohort 2 had higher numbers of tags (as it was a required part of the course) and an additional week to work with the tagging tool. In contrast, only Cohort 2 showed increasing noticing of SMTV in comments across weeks. A closer examination of relationships among Cohort 2’s tags and comments demonstrated that about half of teachers’ SMTV comments were connected to SMTV tags the teacher had made about the same video moment; the other half of SMTV comments were not (Finding 3).

We think that these findings point toward some important affordances of the video reflection tools for supporting teachers’ noticing of SMTV. For instance, prior studies point toward the importance of selectively attending to and focusing on specific moments in videos (e.g., van Es et al., 2014). It seems like the tagging tool may promote this process in asynchronous PD contexts, supporting noticing of specific moments in the video through either the tool’s direct association with and time-stamping of the video within the viewing platform and/or through tags that are already visible when a teacher views the video. A similar focusing role was identified for a video annotation tool in a study by So et al. (2016), which examined interactions between in-service secondary mathematics teachers’ use of a video annotation tool and their face-to-face discussions. Through examining the content of teachers’ annotations and discussions, the authors claimed that the video annotation tool supported teachers in descriptively identifying specific moments in the videos that they then interpreted or evaluated together in discussion. Further, teachers in our study did not use the tagging tool to identify just any moment—from the beginning, they used the tool fairly consistently to identify moments of SMTV as prompted in the course, even in Cohort 1 when they had the option but not the requirement to use the tool. This suggests that tagging moments of student thinking may be an accessible and generative entry point into noticing, specifically into selectively attending to student thinking in classroom videos.

Use of the tagging and commenting tools together also had interesting affordances. We originally imagined that the tagging tool would be used to notice specific moments of SMTV that teachers would engage with further using the commenting tool. While this played out as anticipated in some cases, approximately half of the SMTV comments in Cohort 2 were not clearly associated with SMTV tags. This finding leads us to think that SMTV tags may have also been useful in broadly attuning teachers to SMTV and, along with other aspects described in the “Course structure and design” section, in supporting an ongoing framing of teachers' activity as exploring student thinking (Watkins & Portsmore, 2021)—a focus which then showed up in their comments as well. Such attunement resonates with findings from prior studies (Sherin & van Es, 2005; van Es & Sherin, 2002) in which teachers who had previously used a video annotation tool to notice student thinking were more likely to refer to specific moments of student thinking later when writing reflections on their own classroom videos, despite not using the video annotation tool as part of this later activity. The authors discussed the tool-based experience as having “cognitive residue” (van Es & Sherin, 2002, p. 592) for the teachers, shaping their subsequent work with video. We anticipate that similar “residue,” afforded at least partly by use of the tagging tool, is part of the story here.

Taken together, these findings suggest that future PD designs for supporting teacher noticing of SMTV could integrate a tagging tool with prompts to focus on student thinking as a productive scaffold for drawing teachers’ attention selectively to specific moments of student thinking in classroom videos. We also see potential in being more explicit with teachers about ways of using the tagging and commenting tools in conjunction with each other and the different, complementary functions they might serve. In this study, we were fairly open in our tool use instructions with teachers, in part to explore how they would more organically take the tools up in relation to SMTV. We anticipate that more direct instructions to tag notable moments of SMTV and then unpack and synthesize tagged moments in connected SMTV comments may be useful for supporting teacher noticing.

Finally, there are numerous limitations, remaining questions, and future directions to explore with respect to supporting teachers’ noticing of SMTV with video reflection tools. We feel like we have just scratched the surface of this rich area for research and practice. Future work can build from these findings to explore how tools might differentially support teachers’ noticing of SMTV in particular ways, such as attending to strengths in students’ thinking (Jilk, 2016) or enacting anti-deficit ways of noticing (Louie et al., 2021). Further, while we examined the content of individual teachers’ comments and tags as they pertained to the specific tool that was used (and relations among them in Cohort 2), we recognize that other factors may also have shaped teachers’ remarks. These include but are not limited to the nature of the specific videos or tasks being discussed, the order in which videos and remarks were added to the online system as teachers completed the week’s asynchronous tasks, as well as teachers’ tendency to respond positively to videos from each other’s classrooms (Dobie & Anderson, 2015) and existing comments or tags present before a teacher contributed. Similarly, the participation of facilitators in the online course may have impacted teachers’ remarks; while we and other facilitators used the tools as teachers were invited to do and intentionally contributed after teachers, our contributions focused strongly on SMTV and may have shaped teachers’ use of the tools over time.

Additionally, the broader design of the argumentation course context and the videos that teachers collected may also have influenced the degree to which SMTV was visible to teachers as they used the video reflection tools, as the course was designed to make student thinking highly visible. Still, it is worth noting that our data suggest that differences among the teacher-uploaded videos and the tasks within the argumentation course were not a factor in our results. In other analyses, we found the teachers’ videos to be fairly similar along dimensions previously identified as impacting teacher noticing of SMTV (Richards et al., 2021); additionally, as mentioned in Finding 2, cohorts showed similar patterns of tool use even when engaging their students in different argumentation tasks.

Finally, we want to make one additional comment about future research. We noticed some interesting qualitative differences in how teachers connected SMTV comments to SMTV tags when they did so in Cohort 2. For instance, at times teachers seemed to deepen their account of what they noticed in the tag when they commented; at other times, they seemed to bridge from the noticing in the tag to something about their own classroom in the comment. We anticipate it would be productive to understand more about the varied ways in which teachers connect SMTV tags and comments and the learning affordances of different approaches. To do so, it would be valuable to know whether teachers first tag or first comment on a video. Due to the design of the course platform in this study, we were unable to access such information, though again most teachers self-reported tagging before commenting.

In conclusion, as teacher learning experiences are increasingly offered in online contexts, we believe that there is both much to learn and much in the way of opportunity to consider how to support teachers’ noticing and learning with video. Today teachers can easily record their own classrooms, and video editing and sharing tools are widely available. There are also online platforms where teachers can easily access and discuss videos with other teachers. We believe our claims about the affordances of the tagging tool to prompt teacher noticing of SMTV are an important advancement in understanding the development of teacher noticing in an online context. At the same time, we believe that future work would benefit from exploring how different designs for using video reflection tools individually and in combination might influence teachers’ engagement with SMTV, and we see a need for additional work focusing on other design features and interactional aspects of teachers’ noticing in the context of online professional development programs. Ongoing research into tools that can support teachers’ noticing with video can aid efforts to imagine and design video-based learning experiences that support teachers’ noticing and learning in online contexts.

Notes

A separate analysis of the teacher-generated video clips being viewed (Richards et al., 2021) showed that the vast majority across cohorts were consistently high in depth of mathematical thinking, windows into student thinking, and technical quality.

We chose to examine week of tool use rather than week of the course to compare patterns in how each cohort actually used the tool when they started to do so. Focusing on week of tool use rather than week of the course also mitigates a potential argument that observed patterns in teachers' noticing were strongly shaped by specific argumentation tasks, since cohorts showed similar patterns across offset weeks of the course (and hence tasks).

References

Baecher, L., Kung, S-C., Ward, S. L., & Kern, K. (2018). Facilitating video analysis for teacher development: A systematic review of the research. Journal of Technology and Teacher Education, 26(2), 185–216.

Beisiegel, M., Mitchell, R., & Hill, H. C. (2018). The design of video-based professional development: An exploratory experiment intended to identify effective features. Journal of Teacher Education, 69(1), 69–89.

Brunvand, S. (2010). Best practices for producing video content for teacher education. Contemporary Issues in Technology and Teacher Education, 10(2), 247–256.

Burwell, D. (2010). Using video annotation software to analyze classroom teaching events. In 2nd international conference on education and new learning technologies.

Castro Superfine, A., Fisher, A., Bragelman, J., & Amador, J. M. (2017). Shifting perspectives on preservice teachers’ noticing of children’s mathematical thinking. In E. O. Schack, M. H. Fisher, & J. A. Wilhelm (Eds.), Teacher noticing: bridging and broadening perspectives, contexts, and frameworks (pp. 409–426). Springer.

Cattaneo, A. A. P., van der Meij, H., Aprea, C., Sauli, F., & Zahn, C. (2019). A model for designing hypervideo-based instructional scenarios. Interactive Learning Environments, 27(4), 508–529.

Chapin, S. H., & Anderson, N. C. (2013). Talk moves: A teacher's guide for using classroom discussions in math, grades K-6. Math Solutions.

Chizhik, E. W., & Chizhik, A. W. (2018). Value of annotated video-recorded lessons as feedback to teacher-candidates. Journal of Technology and Teacher Education, 26(4), 527–552.

Cole, M., & Engeström, Y. (1993). A cultural-historical approach to distributed cognition. In G. Salomon (Ed.), Distributed cognitions: psychological and educational considerations (pp. 1–46). Cambridge University Press.

Danielson, C. (2016). Which one doesn’t belong?: A shapes book. Stenhouse Publishers.

Dobie, T. E., & Anderson, E. R. (2015). Interaction in teacher communities: Three forms teachers use to express contrasting ideas in video clubs. Teaching and Teacher Education, 47, 230–240.

Elliott, J. C. (2017). The evolution from traditional to online professional development: A review. Journal of Digital Learning in Teacher Education, 33(3), 114–125.

Erickson, F. (2007). Ways of seeing video: Toward a phenomenology of viewing minimally edited footage. In R. Goldman, R. Pea, B. Barron, & S. J. Derry (Eds.), Video Research in the Learning Sciences (pp. 145–155). Routledge.

Erickson, F. (2011). On noticing teacher noticing. In M. G. Sherin, V. R. Jacobs, & R. A. Philipp (Eds.), Mathematics teacher noticing: Seeing through teachers’ eyes (pp. 17–34). Routledge.

van Es, E. A., Tekkumru-Kisa, M., & Seago, N. (2019). Leveraging the power of video for teacher learning: A design framework for mathematics teacher educators. In S. Llinares & O. Chapman (Eds.), International handbook of mathematics teacher education (Vol. 2, pp. 23–54). Leiden: Brill Sense.

Evi-Colombo, A., Cattaneo, A., & Bétrancourt, M. (2020). Technical and pedagogical affordances of video annotation: A literature review. Journal of Educational Multimedia and Hypermedia, 29(3), 193–226.

Falk, J. K., & Drayton, B. (2015). Creating and sustaining online professional learning communities. Teachers College Press.

Gaudin, C., & Chaliès, S. (2015). Video viewing in teacher education and professional development: A literature review. Educational Research Review, 16, 41–67.

Greeno, J. G. (2006). Learning in activity. In R. Keith Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 79–96). Cambridge University Press.

Hartling, L., Hamm, M., Milne, A., Vandermeer, B., Santaguida, P. L., Ansari, M., Tsertsvadze, A., Hempel, S., Shekelle, P., & Dryden, D. M. (2012). Validity and inter-rater reliability testing of quality assessment instruments. (Prepared by the University of Alberta Evidence-based Practice Center under Contract No. 290–2007–10021-I.) AHRQ Publication No. 12-EHC039-EF. Agency for Healthcare Research and Quality.

Jacobs, J. K., & Morita, E. (2002). Japanese and American teachers’ evaluations of videotaped mathematics lessons. Journal for Research in Mathematics Education, 33(3), 154–175.

Jacobs, V. R., Lamb, L. L., & Philipp, R. A. (2010). Professional noticing of children’s mathematical thinking. Journal for Research in Mathematics Education, 41(2), 169–202.

Jilk, L. M. (2016). Supporting teacher noticing of students’ mathematical strengths. Mathematics Teacher Educator, 4(2), 188–199.

Kang, H., & van Es, E. A. (2019). Articulating design principles for productive use of video in preservice education. Journal of Teacher Education, 70(3), 237–250.

Lomax, K., Fox, A., Kazemi, E., & Shahan, E. (2017). Argumentation in mathematics. An online course developed in partnership with the Teaching Channel.

Louie, N., Adiredja, A. P., & Jessup, N. (2021). Teacher noticing from a sociopolitical perspective: The FAIR framework for anti-deficit noticing. ZDM Mathematics Education, 53(1), 95–107.

Marsh, B., & Mitchell, N. (2014). The role of video in teacher professional development. Teacher Development, 18(3), 403–417.

McDonald, M., Kazemi, E., & Kavanagh, S. S. (2013). Core practices and pedagogies of teacher education: A call for a common language and collective activity. Journal of Teacher Education, 64(5), 378–386.

McFadden, J., Ellis, J., Anwar, T., & Roehrig, G. (2014). Beginning science teachers' use of a digital video annotation tool to promote reflective practices. Journal of Science Education and Technology, 23(3), 458–470.

Miles, M. B., Huberman, A. M., & Saldaña, J. (2018). Qualitative data analysis: A methods sourcebook. SAGE.

National Council of Teachers of Mathematics. (2014). Principles to actions. National Council of Teachers of Mathematics.

Ramos, J. L., Cattaneo, A. A. P., de Jong, F. P. C. M., & Espadeiro, R. G. (2021). Pedagogical models for the facilitation of teacher professional development via video-supported collaborative learning. A review of the state of the art. Journal of Research on Technology in Education, 1–24.

Rich, P. J., & Hannafin, M. (2009). Video annotation tools: Technologies to scaffold, structure, and transform teacher reflection. Journal of Teacher Education, 60(1), 52–67.

Richards, J., Elby, A., Luna, M. J., Robertson, A. D., Levin, D. M., & Nyeggen, C. G. (2020). Reframing the responsiveness challenge: A framing-anchored explanatory framework to account for irregularity in novice teachers' attention and responsiveness to student thinking. Cognition and Instruction, 38(2), 116–152.

Richards, J., Altshuler, M., Sherin, B. L., Sherin, M. G., & Leatherwood, C. J. (2021). Complexities and opportunities in teachers' generation of videos from their own classrooms. Learning, Culture, and Social Interaction, 28(4), 100490.

Santagata, R., König, J., Scheiner, T., Nguyen, H., Adleff, A. K., Yang, X., & Kaiser, G. (2021). Mathematics teacher learning to notice: A systematic review of studies of video-based programs. ZDM Mathematics Education, 53, 119–134.

Schoenfeld, A. H. (2011). Toward professional development for teachers grounded in a theory of decision making. ZDM Mathematics Education, 43, 457–469.

Seago, N., Koellner, K., & Jacobs, J. (2018). Video in the middle: Purposeful design of video-based mathematics professional development. Contemporary Issues in Technology and Teacher Education, 18(1), 29–49.

Seidel, T., Stürmer, K., Blomberg, G., Kobarg, M., & Schwindt, K. (2011). Teacher learning from analysis of videotaped classroom situations: Does it make a difference whether teachers observe their own teaching or that of others? Teaching and Teacher Education, 27, 259–267.

Sherin, M. G. (2004). New perspectives on the role of video in teacher education. In J. Brophy (Ed.), Using video in teacher education (pp. 1–27). Elsevier Science.

Sherin, M. G., & van Es, E. A. (2005). Using video to support teachers' ability to notice classroom interactions. Journal of Technology and Teacher Education, 13(3), 475–491.

Sherin, M. G., & van Es, E. A. (2009). Effects of video club participation on teachers' professional vision. Journal of Teacher Education, 60(1), 20–37.

Sherin, B., & Star, J. R. (2011). Reflections on the study of teacher noticing. In M. G. Sherin, V. R. Jacobs, & R. A. Philipp (Eds.), Mathematics teacher noticing: Seeing through teachers’ eyes (pp. 66–78). Routledge.

So, H.-J., Lim, W., & Xiong, Y. (2016). Designing video-based teacher professional development: Teachers’ meaning making with a video annotation tool. Educational Technology International, 17(1), 87–116.

Star, J. R., & Strickland, S. K. (2008). Learning to observe: Using video to improve preservice mathematics teachers’ ability to notice. Journal of Mathematics Teacher Education, 11(2), 107–125.

Stock, J. H., & Watson, M. W. (2012). Introduction to econometrics (Vol. 3). Pearson.

Stockero, S. L. (2020). Transferability of teacher noticing. ZDM Mathematics Education, 1–12.

Stockero, S. L., Rupnow, R. L., & Pascoe, A. E. (2017). Learning to notice important student mathematical thinking in complex classroom interactions. Teaching and Teacher Education, 63, 384–395.

Tekkumru-Kisa, M., & Stein, M. K. (2017). Designing, facilitating, and scaling-up video-based professional development: Supporting complex forms of teaching in science and mathematics. International Journal of STEM Education, 4(1), 27.

van Es, E. A., Cashen, M., Barnhart, T., & Auger, A. (2017). Learning to notice mathematics instruction: Using video to develop preservice teachers’ vision of ambitious pedagogy. Cognition and Instruction, 35(3), 165–187.

van Es, E. A., Tunney, J., Goldsmith, L. T., & Seago, N. (2014). A framework for the facilitation of teachers’ analysis of video. Journal of Teacher Education, 65(4), 340–356.

van Es, E. A., & Sherin, M. G. (2002). Learning to notice: Scaffolding new teachers' interpretations of classroom interactions. Journal of Technology and Teacher Education, 10(4), 571–596.

van Es, E. A., & Sherin, M. G. (2008). Mathematics teachers "learning to notice" in the context of a video club. Teaching and Teacher Education, 24, 244–276.

Walkoe, J., Sherin, M., & Elby, A. (2019). Video tagging as a window into teacher noticing. Journal of Mathematics Teacher Education, 23(4), 385–405.

Watkins, J., & Portsmore, M. (2021). Designing for framing in online teacher education: Supporting teachers' attending to student thinking in video discussions of classroom engineering. Journal of Teacher Education, 73(4), 00224871211056577.

Acknowledgements

We would like to thank Mari Altshuler, Tracy Dobie, and Bruce Sherin for their integral contributions to this project, and particularly the professional development program described, and David Rapp and Alexis Papak for their generative feedback on previous drafts of the manuscript.

Funding

This work was supported by funding from the Spencer Foundation (Deeper Learning Labs: Digital Resources for Collaborative Teacher Learning) in the USA, Grant Award #201600137, and the US Department of Education, Institute of Education Sciences, Multidisciplinary Program in Education Sciences, Grant Award #R305B140042. All findings, opinions, and recommendations expressed herein are those of the authors.

Author information

Contributions

First authorship is shared between the first two authors, who made equal contributions to this publication. All authors contributed to the study conception and design, data collection, analysis, and writing. All authors read and approved the manuscript being submitted.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

Participants have given consent for their data to be used for research purposes, including publication.

Ethical approval

This study was reviewed and approved by Northwestern University’s Institutional Review Board.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Larison, S., Richards, J. & Sherin, M.G. Tools for supporting teacher noticing about classroom video in online professional development. J Math Teacher Educ 27, 139–161 (2024). https://doi.org/10.1007/s10857-022-09554-3
