Abstract

Fail-operational behavior of safety-critical software for autonomous driving is essential as there is no driver available as a backup solution. In a failure scenario, safety-critical tasks can be restarted on other available hardware resources. Here, graceful degradation can be used as a cost-efficient solution where hardware resources are redistributed from non-critical to safety-critical tasks at run-time. We allow non-critical tasks to actively use resources that are reserved as a backup for critical tasks, which would be otherwise unused and which are only required in a failure scenario. However, in such a scenario, it is of paramount importance to achieve a predictable timing behavior of safety-critical applications to allow a safe operation. Here, it has to be ensured that even after the restart of safety-critical tasks a guarantee on execution times can be given. In this paper, we propose a graceful degradation approach using composable scheduling. We use our approach to present, for the first time, a performance analysis which is able to analyze timing constraints of fail-operational distributed applications using graceful degradation. Our method can verify that even during a critical Electronic Control Unit failure, there is always a backup solution available which adheres to end-to-end timing constraints. Furthermore, we present a dynamic decentralized mapping procedure which performs constraint solving at run-time using our analytical approach combined with a backtracking algorithm. We evaluate our approach by comparing mapping success rates to state-of-the-art approaches such as active redundancy and an approach based on resource availability. In our experimental setup our graceful degradation approach can fit about double the number of critical applications on the same architecture compared to an active redundancy approach. Combined, our approaches enable, for the first time, a dynamic and fail-operational behavior of gracefully degrading automotive systems with cost-efficient backup solutions for safety-critical applications.

Similar content being viewed by others

1 Introduction

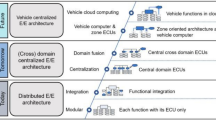

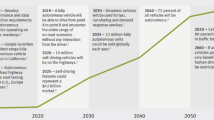

Fail-operational behaviour of safety-critical software is essential to enable autonomous driving. Without any driver as a backup solution, the failure of an ECU has to be handled by the software system. To manage increased software complexity, automotive electronic architectures are currently undergoing major changes. Instead of adding a new control unit for each new functionality, software is being integrated on a few, more powerful central control units [1].

By contrast, from a software perspective, this trend leads to a decentralization. In comparison to state-of-the-art monolithic ECU software, future software will be designed modular. Such a modular design will allow dynamic shifting of software components at run-time, including activation and deactivation of components on different ECUs. This perspective allows new strategies to enable fail-operational behaviour.

Additionally, automotive vendors are offering over-the-air software updates to regularly deliver the latest functionality. Here, customers will be able to purchase and enable features over an app store, which leads to unique and customized software systems.

Thus, a dynamic resource management is required, which maps applications at run-time as part of the software platform. A dynamic resource management allows to integrate new applications at run-time with unique solutions for an individual mix of applications. Furthermore, it enables a gracefully degrading system behaviour and allows the system to react to unplannable changes such as the defect of a hardware unit. Using a graceful degradation approach, safety-critical tasks can be restarted on other available hardware resources, while, in return, non-critical tasks are shut down to free resources. Therefore, instead of adding costly hardware redundancy to enable a fail-operational behaviour, existing resources can be repurposed. Decentralized run-time approaches have the advantage that there is no single-point-of-failure such that the system is still able to act after any ECU failure [2]. The advantage of using a passive backup solution compared to active redundancy is that almost no overhead is added in terms of required computational power.

The main challenge for such a system is to achieve a predictable system behavior. Most safety-critical applications have to meet real-time requirements, where a complete application execution has to finish within a deadline. To provide real-time guarantees, a performance analysis has to be based on a composable system such that the interference between applications can be bounded [3].

However, there is no approach yet that ensures a predictable timing behaviour of gracefully degrading systems where passive task instances are activated after a failure. To achieve a fail-operational behaviour it has to be ensured that the timing constraints are met under any circumstance. Finding a new task binding after a failure is not realistic as the backup solution has to be available immediately. Thus, an application binding can only be considered feasible if the deadline can be also met after restarting any of the passive task instances and a feasible backup solution is available for any possible failure.

In this paper, we present an agent-based mapping procedure based on a backtracking approach using a performance analysis to find feasible mappings at run-time. The agent-based system enables a decentralized control without a single point of failure. Each agent controls one task and is responsible for allocating resources and ensuring that constraints are met. The system is based on a composable scheduling technique that supports a gracefully degrading system behaviour. This allows to estimate an upper bound for the execution time not only for active tasks but also for passive tasks once they are started. Our approach includes passive tasks in the mapping search such that there is a backup solution available that fulfills the real-time constraints under any ECU failure.

The mapping approach is intended to be performed while the car is not actively in use such that most system resources are available for performing the search. Once the system is in a stable state and running, the agents do not perform any action and, therefore, put no additional strain on the system resources. During run-time, monitoring with heartbeat messages and watchdogs is performed to detect ECU failures. The solutions found by the mapping approach include valid backup solutions for critical applications to which a fast failover can be performed after a failure has been detected. Here, we follow our main hypothesis, such that when the failure occurs a safe state can be reached with a minimum amount of communication and computation. The timing analysis of a failover itself is out of scope of this work, we refer any reader interested in the topic to the work of [4]. After a failover has been performed, safety-critical applications are able to stay operational. However, to ensure a safe continuation, the fail-operational behavior has to be re-established. Depending on the amount of remaining resources, a re-mapping of the applications could be performed during a safe halt on a parking space.

In this work, we make the following contributions:

-

We analyze related work in Sect. 2 and provide an overview over related approaches in the fields of fail-operational systems, dynamic task mapping, graceful degradation and predictable timing behavior. We conclude that there is no work yet that allows an execution time analysis of gracefully degrading systems.

-

After introducing our system model in Sect. 3, we introduce and adapt a state-of-the-art performance analysis based on composable scheduling to our system model in Sect. 4.

-

We present our performance analysis for fail-operational systems which supports a gracefully degradable system behaviour in Sect. 5. This analysis also takes backup solutions into consideration and can evaluate whether the worst-case end-to-end application latency can still meet the deadline after switching to a backup solution during a failover. Furthermore, we introduce our gracefully degrading scheduling scheme.

-

We present our agent-based run-time mapping procedure in Sect. 6 using our performance analysis to find feasible mappings that meet real-time requirements. Our approach includes passive tasks in the search such that it is ensured that all backup solutions meet the real-time constraints. Here, we also introduce three strategies which can strongly influence the degradation behavior. A reconfiguration of the system can be performed after any failure to re-establish the fail-operational behaviour of safety-critical applications.

-

We evaluate our graceful degradation approach in our simulation framework in Sect. 7. Here, we compare our approach to an active redundancy approach by measuring the success rate and resource utilization over multiple experiments. Furthermore, we evaluate and discuss our three allocation and reservation strategies. Results show that around twice as much critical applications can be mapped onto the same architecture when using our graceful degradation approach compared to active redundancy approaches. We conclude that graceful degradation can greatly increase the success rate in scenarios where resources are limited if the risk of loosing non-critical functionality in a failure scenario is acceptable.

2 Related work

As our work is combining aspects from many different research areas, we organized the related work section into four subsections. First, we are presenting traditional fault-tolerant approaches in Sect. 2.1 which can be applied on device level but also on system level. Then we provide an overview over existing approaches in the field of dynamic mapping methodologies in Sect. 2.2. Afterwards, we discuss previous work on graceful degradation in Sect. 2.3. Last, we introduce work in Sect. 2.4 which has the goal of achieving predictable timing behavior using composable systems.

2.1 Fail-operational systems

In [5], the authors present an overview on existing fail-operational hardware approaches and introduce concepts for the implementation on a multi-core processor. The authors in [6] review common fault-tolerant architectures in System-on-a-Chip (SoC) solutions such as lock-step architectures, loosely synchronized processors or triple modular redundancy and perform a trade-off analysis. However, this work misses to apply fail-operational aspects on a system level instead of device level only.

The authors in [7] present a system-level simplex architecture to ensure a fail-operational behavior of applications but also to protect the underlying operating system, middleware, and microprocessor from failures. Here, the complex subsystem is driving the system as long as no failure occurs. A safety subsystem and a decision controller are running on a dedicated microcontroller. Similar authors in [8] present a fail-operational simplex architecture and address the problem of inconsistent states in Controller Area Network (CAN) controllers during a failover. As a solution, an atomic function stores the state and sends the message to avoid inconsistencies allowing to protect communication with peripherals. Although these approaches apply fail-operational capabilities on system level they are limited in flexibility and add a lot of cost as a dedicated hardware component is required.

Other work [9] addresses the automatic optimization of redundant message routings in automotive ethernet networks to enable fail-operational communication. In our work, redundant tasks are distributed over the system with redundant communication routes such that once a task is restarted there is always at least one communication path with preceding and succeeding tasks.

Overall, hardware components provided with failure detection and mitigation mechanisms are the base for enabling a dynamic fail-operational solution on system level. However, these solutions lack flexibility and are not dealing with the problem of providing a dynamic fail-operational behavior for an entire system consisting of many applications distributed over multiple ECUs.

2.2 Dynamic mapping

There has been a lot of work in the field of dynamic mapping approaches. The authors in [10] present a decentralized and dynamic mapping approach for Network-on-Chip (NoC) architectures. Their mapping approach takes bandwidth and load constraints into consideration and uses the best-neighbour strategy, which takes only the closest search space around a task into account. In [11] an agent-based run-time mapping approach for heterogeneous NoC architectures is presented. The system is based on global agents containing system state information and cluster agents which are responsible for assigning resources. Here, the main motivation is to reduce computational effort and global traffic for monitoring the system utilization when mapping the distributed applications. The authors in [12] present a centralized run-time mapping approach with the goal of reducing network load in NoC-based Multiprocessor-System-on-a-Chip (MPSoC) systems. Here, a dedicated manager processor is taking care of mapping initial tasks of each application to a cluster. As a dynamic workload is considered, all following tasks are mapped at run-time of the application upon communication requests. Multiple heuristics considering the channel load are presented and evaluated. The authors argue that it is reasonable to use greedy algorithms as they can provide quick results in exchange for lower search space exploration quality. They conclude that compared to static optimization methods the moderate overhead of solutions found by dynamic methods is acceptable considering the gain in flexibility.

Most of these approaches have the goal of increasing flexibility and at most consider being able to reconfigure the system when a faulty component is found. However, none of them take fail-operational or timing requirements into account.

2.3 Graceful degradation

The authors in [2] introduced an agent-based approach which finds task-mappings at run-time and ensures a fail-operational behavior of critical applications by applying graceful degradation. Here, agents can allocate and reserve parts of resources to ensure degradation. However, this work does not include any base for a timing analysis such that no guarantees to timing constraints can be given. Furthermore, we introduce a performance analysis for such a gracefully degrading system which we embed at run-time to ensure that timing constraints are met even in failure scenarios. The authors in [13] have presented a design-time analysis to find valid application mappings in mixed critical systems. Here, applications can have multiple redundancies based on their fail-operational level and the system can be degraded by shutting down optional software components. By contrast, in our approach, instead of having a limited amount of redundancy, we re-establish lost redundancy after a failover. The authors in [14] present a degradation-aware reliability analysis in which tasks are grouped according to different safety levels. Using design space exploration the reliability of the degradation modes is optimized. The work in [15] calculates the utility of a system by decomposing it into feature subsets, which may be defined by functional or non-functional attributes, such that the utility of the system in different degradation modes can be analyzed. However, they do not propose any approach on designing a gracefully degrading behavior. Other work such as [16] has been proposing to relax constraints once an anomaly occurs, leading potentially to degraded system behavior.

Overall, none of the mentioned work related to graceful degradation takes timing constraints into consideration such that a safe execution of time-critical applications can not be guaranteed.

2.4 Predictable timing behavior

The authors of [3] describe the two concepts of predictability and composability which can be used to reduce complexity and to verify real-time requirements. In composable systems, applications are isolated such that they do not influence each other allowing to verify their timing behavior independently. Furthermore, using formal analysis, lower bounds on performance can be guaranteed. The work of [17] uses hybrid application mapping to combine design-time analysis with run-time application mapping. The spatial and temporal isolation techniques together with a performance analysis are based on the concepts from [3]. At design-time a design space exploration with a formal performance analysis finds pareto-optimal configurations. A run-time manager then searches for suitable mappings of these optimized solutions. In contrast to their work, we extend the scheduling techniques and include passive tasks and messages in the performance analysis to enable graceful degradation. Furthermore, our application mapping is performed entirely at run-time with an agent-based approach.

In the real-time mixed-criticality systems community there has been work on guaranteeing reduced service to low criticality tasks after switching to a safety mode when a critical task can not meet its deadline [18]. By contrast, in our work we do not switch between two modes, but only degrade tasks if the resources of it have been reserved and are claimed by a critical task. Furthermore, the approaches in the literature are mostly not focusing on distributed systems and are not taking fail-operational aspects into consideration.

Previous work [4] has presented a formal analysis to derive the worst-case application failover time for distributed systems. The authors analyzed the impact of failure detection and recovery times on the timing behavior of distributed applications. This analysis guarantees an upper bound on the time that it would take for an application to generate a new output after the failure of one or multiple tasks. Our current work presented in the paper at hand could be used as a base for the analysis performed in [4] as an upper bound on task execution and message transmission is assumed to be given there and can now be calculated. Similar to this work the authors in [19] present a worst-case timing analysis for tasks with hot and passive standbys. However, the topic of graceful degradation is not addressed in this work.

Related to this topic authors in [20] have analyzed the timing behavior of task migration at run-time if a new mapping has to be found. Their deterministic mapping reconfiguration mechanism identifies efficient migration routes and determines the worst-case reconfiguration latency. To find new application mappings and to transition predictably to new configurations again, run-time mechanisms are combined with an off-line design space exploration. In [21] the same authors together with others present a general overview of hybrid application mapping techniques and composable many-core systems.

Overall, there has not been any work in the field of predictable timing behavior that considers a gracefully degrading system architecture. For this purpose we build mainly upon our previous work in [2] and the work from [17] to create, for the first time, a comprehensive approach that enables a safe and efficient fail-operational behavior of time-critical applications in distributed systems by predictably analyzing the execution time behavior and allowing a gracefully degrading system behavior.

3 System model

3.1 System

In the past, automotive vendors added a new ECU for each new functionality in the vehicle. Today, cars consist often of more than 100 ECUs to control functions in the domains like infotainment, chassis, powertrain or comfort [1]. Now the automotive industry is aiming towards zonal or more centralized architectures. Some vendors such as Tesla prefer a centralized architecture, where most of the functions are executed on a single ECU such as the FSD computer of Tesla [22]. Bosch is developing a vehicle-centralized, zone-oriented Electrical/Electronic (E/E) architecture with a few centralized powerful vehicle computers integrating cross-domain functionality similar as [23, 24]. These vehicle computers are connected to actuators and sensors via zone ECUs. This reduces the required wiring and weight in vehicles but also system complexity.

In our work we focus on the deployment of bigger applications on a future system architecture which consists of a set of a few ECUs \(e \in E\) which are interconnected via switches and a set of Ethernet links \(l \in L\). The ECUs and Ethernet links use Time-Division Multiplexing (TDM) scheduling with pre-determined time slices which can be allocated or reserved. To dynamically activate, deactivate, and move tasks on the platform at run-time, we implemented a middleware which is based on SOME/IP [25], an automotive middleware solution. This middleware includes a decentralized service-discovery to dynamically find services in the system and a publish/subscribe scheme to publish and subscribe to events.

3.2 Criticality

In our work we are exploring graceful degradation methodologies. Here, critical applications e.g. for autonomous driving can be restarted after a failure on another ECU. Instead of exclusively reserving resources for this scenario, non-critical applications e.g. from the infotainment domain can be shut down to free resources.

According to the ISO 26262 standard, applications can be assigned one of four Automotive Safety Integrity Levels (ASILs) (A to D) [26]. However, we do not differ between criticality levels of critical applications in our work as there is no justification in shutting down applications with an assigned ASIL of A for an application with an assigned ASIL of B as the failure of any critical application can have safety-critical consequences. Instead it has to be ensured that all safety goals are met for any critical application. Therefore, we only distinct between critical and non-critical applications. In our work we assume that each critical application has fail-operational requirements. This means that the application has to stay operational even if a failure occurs that affects this application. We define critical and non-critical applications as follows:

-

Critical application An application that has fail-operational requirements. To ensure a fail-operational behavior passive redundancy on task level is applied. In a failure scenario resources of non-critical applications might be used to keep critical applications operational.

-

Non-critical application An application without specific safety requirements. Non-critical applications can be shut down to free resources for critical applications even if they are not directly affected by a failure.

3.3 System software

Our system software consists of a set of applications \(a \in A\), which are composed of tasks \(t \in T\). The tasks t of an application a can be distributed across multiple ECUs. Applications are executed periodically with a period \(P_a\) and we assume each application has to meet a deadline \(\delta \), with the period \(P_a\) being at least as long as the deadline \(\delta \). For every task we assume that the Worst-Case Execution Time (WCET) W(t) is known.

We model each application a by an acyclic and directed application graph \(G_A(V,E)\). Safety-critical application have to fulfill fail-operational requirements and, thus, have to remain operational even during critical ECU failures. Therefore, we assume that redundant passive task instances are required for our safety-critical applications. The vertices \(V = T_a \cup T_b\) of the application graph \(G_A(V,E)\) are composed of the set of active task instances \(T_a\) and the set of passive task instances \(T_b\). The edges \(E = M_a \cup M_b\) of the application graph \(G_A(V,E)\) are composed of the set of active messages \(M_a\) and the set of backup messages \(M_b\). In the following are our definitions for active and passive task instances and active and backup message instances:

-

Active task instance A task instance of a critical or non-critical application. This is the default task instance actively executing any workload. A binding \(\alpha : T \rightarrow E\) assigns an active task instance \(t \in T\) to an ECU \(\alpha (t) \in E\).

-

Passive task instance A backup instance \(t \in T_b\) of an active task instance which is part of a critical application. The passive task instance is only activated if its active counterpart is affected by a failure. Here, the binding \(\beta : T \rightarrow E\) assigns a passive task instance \(t \in T_b\) to an ECU \(\beta (t) \in E\).

-

Active message instance A routing is required for each active message instance \(m \in M_a\) which is part of a critical or non-critical application. A routing \(\rho : M \rightarrow 2^L\) assigns each message \(m \in M_a\) to a set of connected links \(L' \subseteq L\) that establish a route \(\rho (m)\). We use the shortest path routing obtained through Dijkstra’s algorithm such that there is only a single route between two ECUs e available [27].

-

Backup message instance For critical applications three backup message instances \(m \in M_b\) are required of which one will get activated after an ECU failure depending on which passive task instances get activated. A routing \(\sigma : M \rightarrow 2^L\) assigns each backup message \(m \in M_b\) to a set of connected links \(L' \subseteq L\) that establish a route \(\sigma (m)\).

The application graph of non-critical applications consists only of active task instances \(t \in T_a\) and active messages \(m \in M_a\).

An exemplary application graph \(G_A(V,E)\) of a non-critical application (left) and a critical application (right). The application graph of the critical application consists of two active task instances \(t_{0,a}, t_{1,b} \in T_a\) and two passive task instances \(t_{0,b}, t_{1,b} \in T_b\). Furthermore, it contains one active message instance \(m_{0,aa} \in M_a\) and three backup message instances \(m_{0,ab}, m_{0,ba}, m_{0,bb} \in M_b\), which are required to ensure there is always a communication path between the tasks instances available. (Color figure online)

Figure presents a non-critical and a critical application according to our system model. The non-critical application consists of two tasks and one message being sent between the two tasks. For the safety-critical application the graph also includes two passive task instances and three backup message instances. Three backup message instances are required such that it can be ensured that always a communication between two task instances is possible regardless of which task instances are affected by a failure. The message instances \(m_{0,ab}\) and \(m_{0,ba}\) are required if only one of the active task instances is failing, while the message instance \(m_{0,bb}\) is required if both active task instances are affected by a failure, e.g. because they are mapped onto the same failing ECU.

Exemplary mapping of a safety-critical application onto a hardware architecture consisting of four ECUs \(e_0\), \(e_1\), \(e_2\), and \(e_3\), and three switches \(s_0\), \(s_1\), and \(s_2\). The green arrows indicate the active bindings of tasks \(t_0\) and \(t_1\), while the dashed yellow arrows indicate the passive task bindings. The routings of the message instances are indicated by the same arrow color and style as in the application graph. (Color figure online)

Figure shows the binding \(\alpha \) of the active task instances \(t \in T_a\) and the binding \(\beta \) of the passive task instances \(t \in T_b\) onto a system architecture. The routing \(\rho \) of the active message instance \(m \in M_a\) and the routings \(\sigma \) of the backup message instances \(m \in M_b\) are also marked by colored arrows.

3.4 Failures

In this work and our experiments we focus on mitigating ECU failures which are detected by watchdogs and heartbeats. Our graceful degradation approach ensures that safety-critical applications can keep running after an ECU failure while there is no guarantee for non-critical applications. As redundancy is used for all safety-critical tasks, the system could withstand any single ECU failure and perform a failover for affected task instances. After a failover, any critical application is still able to stay operational such that any hazards can be avoided. After a time-critical failover has been performed, fail-operational capabilities have to be re-established with a new mapping process. Applications might have to be stopped temporarily if active task instances have to be remapped such that a safe mapping could only be performed during a halt. To prevent this, methods such as proposed in [28] to perform a safe real-time task migration could be applied. Our approach could be also used to mitigate transient or software failures if the corresponding failure detection mechanisms are supported by hardware or software e.g. through a lock-step architecture. If possible, a failure should be handled locally. Our solution is intended to be used as a last resort, as a failover could lead to the shut down of non-critical applications when graceful degradation is applied. While our approach considers that redundant message instances are required to ensure that a communication is possible after a failover, the mitigation of network or switch failures are out of scope of this work. For the interested reader we recommend the work of [9] on this topic.

3.5 Failover

Our approach ensures that applications are executed withing a deadline \(\delta \) and that a backup mapping is available that is also meeting this timing constraints under regular operation. In the case of an ECU failure we consider that the current application execution might not finish if an active task instance is affected directly by the failure and that application execution might be interrupted for a certain time interval. Here, it is important that a failover within the Fault Tolerant Time Interval (FTTI) can be guaranteed [29]. In the work at hand, we do not focus on giving a failover timing guarantee but that a stable application execution within a timing constraint is always readily available once the failover is done. We refer any reader interested in the topic of failover timing analysis to the work of [4]. Furthermore, we consider that no critical states have to be transferred and recovered. However, if required checkpoints can be periodically transmitted from active to passive task instances to save important state data [30]. After the failure recovery computation can be continued with the latest transmitted checkpoints.

4 Performance analysis

In this section we contribute our concept of performance analysis for distributed applications based on the work from [3, 17]. Using this performance analysis we present our performance analysis of gracefully degrading systems in Sect. 5. Instead of targeting NoC architectures as in [17] we target a distributed electronic system consisting of multiple ECUs which are connected via switches and Ethernet links. The goal of the performance analysis is to find an upper bound for the end-to-end application latency such that it can be verified whether a mapping adheres to a timing constraint. Here, we first formally introduce the end-to-end application latency in Sect. 4.1 and present our derived analytical formulas for distributed and composable system. Afterwards, we present composable scheduling together with our adapted analytical formulas for both task and message scheduling in Sect. 4.2. Compared to the work in [17] we chose TDM instead of a Round-Robin (RR) scheme for task scheduling. The disadvantage of RR scheduling is that the execution latency depends on the number of other tasks in the schedule such that the execution latency might change once tasks are added to the schedule later. By contrast, when using a schedule based on TDM, an upper bound of service intervals that can be allocated by tasks is already predefined which can be used for a worst-case estimation of the execution latency. For message scheduling we use TDM as well where we design the system such that exactly one Ethernet frame can be sent per slot and assume that all messages can be sent within one frame. In case messages should be able to be split up into multiple slots we refer the interested reader to [31] and [17].

4.1 End-to-end application latency

For time-critical applications, a mapping can only be considered feasible if the worst-case end-to-end application latency \({\mathcal {L}}(\alpha ,\rho )\) does not exceed a given deadline \(\delta \) such that the following constraint has to be met:

The end-to-end latency of a distributed application is influenced by both executing computational tasks t and sending messages m between the tasks. However, task execution times and message transmission times will most certainly differ between each iteration. This highly depends on the paths being taken in a program and interference caused by other applications which are executed concurrently in the system. The interference time is mainly influenced by the scheduling and admission algorithms that are used for the Central Processing Unit (CPU), the network infrastructure and other shared resources. The worst-case end-to-end execution latency \({\mathcal {L}}(\alpha ,\rho )\) is determined by the critical path as

where the critical path is the path through an application with the highest aggregated latency. The path latency \(PL(path,\alpha ,\rho )\) itself can be calculated as

by summing up all task latencies \(TL(t,\alpha (t))\) and communication latencies \(CL(m,\rho (m))\) of tasks and messages which lie in this path. To predictably calculate these worst-case task latencies \(TL(t,\alpha (t))\) and worst-case communication latencies \(CL(m,\rho (m))\) it is required to analytically determine an upper bound. To reduce complexity, composability is required to ensure that applications have only a bounded effect on each other. Well-known scheduling approaches such as RR or TDM temporally isolate task execution or message transmission on a resource.

In Fig. an exemplary mapping of a non-critical application with annotated worst-case task and communication latencies is presented. The path latencies for the two paths \(p_0 = (t_{0} - m_{0} - t_{1})\) and \(p_1 = (t_{0} - m_{1} - t_{2})\) in the application graph can be calculated as \(PL(p_0,\alpha ,\rho ) = 30\) ms and \(PL(p_1,\alpha ,\rho ) = 35\) ms. The critical path \(p_1\) leads to a worst-case end-to end application latency of \({\mathcal {L}}(\alpha ,\rho ) = 35\) ms. With a deadline of \(\delta = 40\) ms Eq. 1 would be still fulfilled.

Exemplary mapping of a non-critical application onto a hardware architecture consisting of four ECUs \(e_0\), \(e_1\), \(e_2\), and \(e_3\), and three switches \(s_0\), \(s_1\), and \(s_2\). The green arrows indicate the binding of a task to an ECU. The message routings are marked in the color of the corresponding message in the application graph. There are two paths \(p_0 = (t_{0} - m_{0} - t_{1})\) and \(p_1 = (t_{0} - m_{1} - t_{2})\) in the application graph. Taking the task and communication latencies from the figure, the path latencies can be calculated as \(PL(p_0,\alpha ,\rho ) = 30\) ms an \(PL(p_1,\alpha ,\rho ) = 35\) ms. With p1 as the critical path, the worst-case end-to-end application latency can be calculate as \({\mathcal {L}}(\alpha ,\rho ) = 35\) ms. With a deadline of \(\delta = 40\) ms the constraint in Eq. 1 would be met. (Color figure online)

4.2 Composable scheduling

We use TDM scheduling for tasks and messages as this allows a partitioned analysis, where applications can be mapped and analyzed independent from each other.

4.2.1 Task scheduling

In general, the task latency \(TL(t,\alpha (t))\) consists of the actual task execution time \(TL_{exec}(t,\alpha (t))\) and the task interference time \(TL_{inter}(t,\alpha (t))\), which a task spends waiting e.g. due to scheduling:

Thus, the worst-case task execution time without interference \(TL_{exec}(t,\alpha (t))\) is a multiple of the service interval time \(\tau _{SI}\) such that we can calculate it using the WCET \(W(t,\alpha (t))\) as

Given a number of service intervals \(SI_a(t)\) that are allocated for a task t and a defined maximum number of service intervals \(SI_{max}\) we can determine the worst-case interference time \(TL_{inter}(t,\alpha (t))\) as

Here, \(SI_{max} - {|{SI_a(t)}|}\) reflects the number of service intervals that a task would have to wait until it is executed again. The first factor represents how many times the task would have to wait until its turn in the worst-case. An exemplary task schedule with the derivation of the worst-case task latency \(TL(t,\alpha (t))\) is presented in Fig. .

Example of a task schedule with a maximum amount of allocatable service intervals of \(SI_{max} = 5\). One service interval \({|{SI_a}|} = 1\) is allocated for the task \(t_0\). With a WCET of \(TL_{exec}(t_0,\alpha ) = W(t_0,\alpha ) = 2 \tau _{SI}\), two full execution cycles are required in the worst case for the task to finish execution. With the corresponding worst-case task interference time of \(TL_{inter}(t_0,\alpha ) = 8 \tau _{SI}\), the worst-case task latency can be calculated as \(TL_{inter}(t_0,\alpha ) = 10 \tau _{SI}\). (Color figure online)

4.2.2 Message scheduling

We assume that messages are sent over ethernet links oppose to the work of [17] where smaller links connect multiple processing elements on a NoC architecture. For the worst-case communication latency we can proceed likewise as for the task scheduling to calculate the worst-case communication latency \(CL(m,\rho )\) with the worst-case message transmission time \(CL_{trans}(m,\rho )\) and the worst-case communication interference time \(CL_{inter}(m,\rho )\):

For the message scheduling we use the notation SL to describe the time frame of a slot interval. We design the system such that exactly one ethernet frame with a maximum frame size of 1518 bytes can be sent in one time slot. We assume that message sizes do no exceed the Maximum Transmission Unit (MTU) of an ethernet frame such that only one slot has to be allocated per message. Using this we can calculate the tranmission time of a message over one ethernet link \(CL_{trans}(m,l)\) as

To calculate the interference time of a message over one link \(CL_{inter}(m,l)\) we assume that a maximum number of slots \(SL_{max}\) is defined. As the transmission of the message requires only one slot, a message has to wait one transmission round in the worst case:

Combining these two worst-case latencies we can calculate the worst-case communication latency \(CL_(m,l)\) of a message m over one link l as

This approach allows us to analyze the worst-case communication latency over each link individually as

Under the assumption that all links in the system are designed equally, the communication latency only depends on the number of links that the message m is passing on its route \(\rho \), further denoted as \(hops(\rho (m))\), which allows us to further simplify the formula to

Our formulas are based on the assumption that a message requires exactly one slot to be transmitted. In case the system should be designed more fine granularly such that a message could require multiple slots for transmission, we refer the interested reader to [31] and [17].

5 Performance analysis of gracefully degrading systems

Using a state-of-the-art analysis presented in Sect. 4 it is possible to analyze whether a configuration of an application consisting of active tasks is meeting a deadline \(\delta \). However, we need to ensure that critical applications are still able to continue full operation after any ECU failure without violating Eq. 1. Here, we add passive tasks as a backup solutions that are started once the active task is affected by a failure. Furthermore, we are enabling a gracefully degrading behaviour as in [2] such that resources that are allocated by non-critical applications can be used by critical applications if required. The advantage of using our passive backup solution compared to active redundancy is that during operation no overhead is added in terms of required computational power.

Theoretically, it would be possible to search for a new valid configuration which satisfies the timing constraint after an ECU failure occurred. However, this approach would have multiple disadvantages accompanied with highly unpredictable behaviours. First, it can not be guaranteed that sufficient resources are available for task computations and message transmissions after a failure even if a degradation approach is used. The ECU failure reduces the system-wide resource pool such that not every application might be able to find sufficient resources. Even if the system was over-designed, the next failure would increase the uncertainty further. Second, even if sufficient resources were still available, it is uncertain if a mapping could be found that would satisfy the timing constraint. Third, it could take a lot of time to find a valid solution. Even if a valid solution was available it could take an unknown amount of time to find one. Although the unavailability of an application might be tolerable for a certain amount of time (FTTI) during a failover, it would not be predictable how long it would take to find a solution and most likely it would not be found in time. Fourth, it would be unpredictable which non-critical applications would be shut down due to degradation as this would be only decided after the occurrence of the failure. Most importantly, this means it is uncertain if a solution can be found in time yet if one is existing, which is unarguably an unacceptable behaviour for safety-critical applications. Furthermore, it would be at least desirable to also allow a more predictable degradation behaviour of non-critical applications.

Therefore, it is necessary to improve uncertainty and achieve a more predictable behaviour. To bypass having to find new solutions after an ECU failure, we have to ensure that a suitable backup solution is already available for any ECU failure in the system. To ensure this we add redundant tasks such that there is at least one instance of each task available after an ECU failure that has sufficient resources to continue operation and to communicate with other tasks. Here, we present our performance analysis of gracefully degrading systems to verify that these backup solutions always meet timing constraints in Sect. 5.1. This solution allows a predictable system behaviour as it is known if a valid backup solution is available that meets resource and timing constraints before any failure and allows to quickly switch to this backup solution. Last, we present our composable scheduling of gracefully degrading systems in Sect. 5.2, which allows to independently derive worst-case latencies while also enabling a gracefully degrading system behavior. This solution also allows to predict in which failure scenario a non-critical application will be shut-down due to graceful degradation.

5.1 End-to-end application latency

A valid binding needs to ensure that the deadline \(\delta \) is not only met by the active part of the application, but also in case any of the backup solutions have to be used in a failure scenario. The activation of passive task instances could lead to a new critical path in the application which might not meet the deadline \(\delta \). The worst-case end-to-end application latency \({\mathcal {L}}(\alpha , \beta , \rho , \sigma )\) considers not only the worst-case end-to-end application latency \({\mathcal {L}}(\alpha , \rho )\) of currently active task instances but also of any possible future configurations, where passive task instances are activated. To achieve a fail-operational behaviour, a valid mapping of a safety-critical application has to fulfill the following constraint:

As any activation of a passive task instance could potentially lead to a violation of this constraint, any path through the instance graph \(G_B(V,E)\), including passive task instances \(t \in T_b\) and backup messages \(m \in M_b\), has to be considered. Therefore, we can define the worst-case end-to-end application latency \({\mathcal {L}}(\alpha , \beta , \rho , \sigma )\) as the critical path through the instance graph \(G_B(V,E)\), which also includes all possible backup solutions:

The path latency \(PL(path,\alpha ,\beta ,\rho ,\sigma )\) depends not only on the worst-case latency of active task instances \(TL(t,\alpha (t))\) and the worst-case communication latency of active messages \(CL(m,\rho (m))\), but also on the potential worst-case latency of passive task instances \(TL(t,\beta (t))\) and the potential worst-case latency of backup messages \(CL(m,\sigma (m))\), such that it can be calculated as

From this formula it can be observed that it is also necessary to find a bound on the potential worst-case latency of passive task instances \(TL(t,\beta (t))\) and the potential worst-case latency of backup messages \(CL(m,\sigma (m))\).

For illustration let us assume that a deadline \(\delta = 35\) ms is given for the safety-critical application as presented in Fig. . When disregrading passive task and message instances and using the annotated worst-case latencies from the figure, state-of-the-art performance analysis as presentend in [17] would conclude a worst-case end-to-end application latency of \({\mathcal {L}}(\alpha , \rho ) = 30\) ms which would meet the deadline \(\delta = 35\) ms. However, in a failure scenario where \(t_{0,a}\) is affected by a failure and \(t_{0,b}\) activated, the deadline can no longer be met leading to a configuration that violates the timing constraint. By constrast, our performance analysis takes passive task and messages instances into account and, therefore, is able to identify the highlighted critical path \(p_2 = (t_{0,b} - m_{0,ba} - t_{1,a})\) with a worst-case path latency of \(PL(p_2,\alpha ,\beta ,\rho ,\sigma ) = 40\) ms. A violation of the timing constraint with a worst-case end-to-end application latency of \({\mathcal {L}}(\alpha , \beta , \rho , \sigma ) = 40\) ms and the deadline \(\delta = 35\) ms is clearly visible. Here, our performance analysis can be used to quickly evaluate different configurations and help design automation algorithms to find a valid configuration.

Exemplary mapping of a safety-critical application onto a system architecture with annotated worst-case latencies. When using state-of-the-art performance analysis and disregarding the passive task and message instances the worst-case end-to-end application latency \({\mathcal {L}}(\alpha , \rho ) = 30\) ms would meet the deadline \(\delta = 35\) ms. However, in a failure scenario where \(t_{0,a}\) was affected by a failure and \(t_{0,b}\) activated, the deadline could no longer be met leading to a configuration that violates the timing constraint. Our performance analysis takes the highlighted critical path \(p_2 = (t_{0,b} - m_{0,ba} - t_{1,a})\) with a worst-case path latency of \(PL(p_2,\alpha ,\beta ,\rho ,\sigma ) = 40\) ms into account leading to a worst-case end-to-end application latency of \({\mathcal {L}}(\alpha , \beta , \rho , \sigma ) = 40\) ms. Here, a violation of the timing constraint with the deadline \(\delta = 35\) ms can be identified. Therefore, our performance analysis can be used to quickly evaluate different configurations and help design automation algorithms to find a valid configuration. (Color figure online)

5.2 Composable scheduling of gracefully degrading systems

We extend state-of-the-art scheduling such as presented in [17] by introducing the concept of graceful degradation. For our analysis and experiments we apply graceful degradation to CPU resources although the concept can also be applied to link resources as well. Applied to our composable schedules, this means that service intervals can not only be allocated for a task but also reserved. A reservation indicates that the corresponding service interval is currently not in use, but might be used and turned into an allocation once the backup instance is being used. Service intervals that can be reserved are empty service intervals that have not been allocated yet or service intervals which are already allocated by non-critical applications. The allocation of slots works vice-versa, non-critical applications can allocate service intervals that are free or which are already reserved by critical applications. Critical applications on the other hand can only allocate service intervals that are completely free. If a service interval is allocated by a non-critical application and also reserved by a critical application the graceful degradation approach is applied. In case there is a failure in the system and critical passive task instances have to be started to mitigate a failure, the reservation of the resources will be turned into an active allocation and any non-critical tasks which formerly held an allocation of the corresponding slots are shut down. Depending on the application, it could then be decided if the degraded non-critical application keeps running in a degraded mode or is completely shut down.

Example of a task schedule with a maximum amount of allocatable (lower) and reservable (upper) service intervals of \(SI_{max} = 5\). One service interval \({|{SI_r}|} = 1\) is reserved for the critical task instance \(t_{0,b}\). The same service interval is allocated by the non-critical task instance \(t_n\). In a failure scenario where \(t_{0,b}\) has to be activated, \(t_n\) is being degraded in a first step. Afterwards, \(t_{0,b}\) is taking over the allocation of the service interval. (Color figure online)

For the latency analysis of the backup solutions itself it is not relevant whether a reserved slot is also allocated and graceful degradation is applied or not as the reservation will be turned into an allocation. This procedure allows to predict before the occurrence of any failure if a valid backup solution can be found, but also allows a predictable behaviour of the graceful degradation approach. Furthermore, we can reuse our analytical formulas from Sect. 4.2 to calculate \(TL(t,\beta (t))\) based on the number of reserved service intervals \(SI_r(t)\) and the binding of the passive task instance \(\beta (t)\):

To calculate the worst-case communication latency \(CL(m,\sigma (m))\) of backup message instances, under the same assumptions as in Sect. 4.2, we can reuse Eq. 12 based on the routing of the backup message instances \(\sigma \):

In Fig. an exemplary task schedule with reservations and allocations is presented. The upper service intervals indicate a reservation, while the lower service intervals indicate an allocation of the same service interval. In this example the first service interval is allocated by the non-critical task \(t_n\) but also reserved by the critical task instance \(t_{0,b}\). In a failure scenario where the task instance \(t_{0,b}\) is activated to serve as a backup solution, the non-critical \(t_n\) first looses its allocation and, thus, is being degraded. Afterwards, the reservation of the task instance \(t_{0,b}\) is turned into an active allocation such that this resource can now be used exclusively by the critical task instance.

The decision which service interval should be allocated or reserved is not straightforward. When always a completely free service interval is taken first, then the schedule might run out of service intervals earlier such that not sufficient resources might be left for other tasks. On the other hand, when it is tried to overlap service intervals as much as possible but more than sufficient service intervals are available for all tasks, then an avoidable degradation might occur in a failure scenario. We present and discuss three different allocation and reservation strategies in Sect. 6.4 and evaluate them in Sect. 7. Before that, we introduce in the following section our agent-based mapping approach and the components required to implement such a gracefully degrading behavior at run-time.

6 Agents—finding feasible solutions at run-time

We use a dynamic agent-based approach to perform mapping and constraint checking at run-time. In the following we describe the components involved in the mapping process and the algorithms used by the agents. Each agent controls one task instance and is responsible for allocating resources and ensuring that constraints are met. Together the agents enable a decentralized control without a single point of failure. This concerns both active and passive task instances. The agents are able to communicate in a predefined order with each other to manage the mapping process of all task instances of an application. To dynamically activate, deactivate, and move tasks on the platform at run-time, we implemented a middleware which is based on SOME/IP [25]. This middleware includes a decentralized service-discovery to dynamically find services in the system and a publish/subscribe scheme to publish and subscribe to events.

We assume that most system resources are available for performing the search and that the mapping approach be performed while the car is not actively in use. Once a stable mapping is found, the agents do not perform any action and, thus, do not require any additional resources. As backup mappings are already found and readily available, we follow our main hypothesis, such that when the failure occurs a safe state can be reached with a minimum amount of communication and computation. After a failover, the fail-operational behavior has to be re-established to ensure a safe travel as further failures could lead to potential hazards. A re-mapping of the applications has to be performed during a safe halt.

Our system to find feasible mappings at run-time consists mainly of agents and resource managers. Resource managers manage the resources associated with an ECU or switch. The resource managers take care of the allocation or reservation requests and assign the corresponding service intervals or slots. By assigning a slot a resource manager implicitly decides how the system will be degraded in a failure scenario and which task instances will loose resources. Therefore, changing the algorithm for assigning the slots can impact the degradation behaviour and success chance of finding a mapping. The responsibility of an agent is to find a suitable mapping for its task that meets all mandatory constraints by allocating and reserving resources at the resource manager or by moving to another ECU. Furthermore, each agent also verifies if an application-wide constraint such as the end-to-end application latency is still met. As partially a sequential mapping order is required to fulfill or verify these constraints, agents can communicate with each other to synchronize the mapping flow.

In the following we first provide an overview and formalize the constraints that have to be respected to consider a given mapping as a valid solution in Sect. 6.1. We then present a solution how the timing constraints are evaluated at run-time using our performance analysis in Sect. 6.2. Afterwards, we present the mapping process of an application using agents in Sect. 6.3. Here, we first go into detail in which order the agents find a mapping for their task to be able to meet all constraints. Afterwards, we present a code listing and describe how the search space is searched for a valid solution by the agents which solves all constraints using a backtracking algorithm. Furthermore, we describe the role of resource managers in Sect. 6.4 which are responsible for performing allocations and reservations by choosing the corresponding service intervals. We also present our allocation and reservations strategies that can be used by a resource manager which has a direct effect on how the system will be degraded. Last, we describe the recovery and reconfiguration process with the immediate failure reaction to ensure a safe fail-operational behavior in Sect. 6.5.

6.1 Constraints

The following constraints have to be respected to consider any found mapping as a valid solution.

-

C.1

The application-wide worst-case end-to-end application latency must meet the deadline \(\delta \):

$$\begin{aligned} {\mathcal {L}}(\alpha , \beta , \rho , \sigma ) \le \delta \end{aligned}$$(20) -

C.2

An active task instance \(t_a\) and its corresponding passive task instance \(t_b\) may not be mapped onto the same ECU as this would contradict our fail-operational goal:

$$\begin{aligned} \alpha (t_a) \ne \beta (t_b). \end{aligned}$$(21) -

C.3

The number of allocated service intervals \(N_{SI,a}(e)\), reserved service intervals \(N_{SI,r}(e)\) and service intervals with both allocation and reservation \(N_{SI,ar}(e)\) of an ECU e must not exceed the number of maximum available service intervals \(SI_{max}\):

$$\begin{aligned} N_{SI,a}(e)+ N_{SI,r}(e) + N_{SI,ar}(e) \le SI_{max} \end{aligned}$$(22) -

C.4

The number of allocated slots \(N_{SL,a}(l)\), reserved slots \(N_{SL,r}(l)\) of a link l must not exceed the number of maximum available slots \(SL_{max}\):

$$\begin{aligned} N_{SL,a}(l) + N_{SL,r}(l) \le SL_{max} \end{aligned}$$(23) -

C.5

Service intervals which are allocated by a critical task t must not be allocated or reserved by any other task:

$$\begin{aligned} \forall SI \in SI_{a}(t) \wedge \forall t' \in T: SI \not \in SI_a(t') \wedge SI \not \in SI_r(t') \end{aligned}$$(24) -

C.6

Service intervals which are reserved by a critical task t must not be allocated or reserved by another critical task but may be allocated by a non-critical task:

$$\begin{aligned} \forall SI \in SI_{r}(t) \wedge \forall t' \in T_{c}: SI \not \in SI_a(t') \wedge SI \not \in SI_r(t') \end{aligned}$$(25) -

C.7

Service intervals which are allocated by a non-critical task t must not be allocated by any other task but may be reserved by a critical task:

$$\begin{aligned} \forall SI \in SI_{a}(t) \wedge \forall t' \in T: SI \not \in SI_a(t') \end{aligned}$$(26) -

C.8

Slots which are allocated or reserved by a message m must not be allocated or reserved by another message:

$$\begin{aligned} \forall SL \in SL_{a}(m) \wedge \forall m' \in M: SL \not \in SL_a(m') \wedge SL \not \in SL_r(m') \end{aligned}$$(27)$$\begin{aligned} \forall SL \in SL_{r}(m) \wedge \forall m' \in M: SL \not \in SL_a(m') \wedge SL \not \in SL_r(m') \end{aligned}$$(28)

Sect. 6.2 describes how solutions that respect Constraint C.1 can be found at run-time by splitting the validation problem up into smaller sub-problems which are solved by the agents. Constraint C.2 is respected by constraining the mapping order of the agents and by limiting the search space accordingly as described in Sect. 6.3. Constraints C.3 to C.8 are implicitly respected by the resource manager when assigning service intervals and slots to task and message instances as described in Sect. 6.4.

6.2 Run-time timing constraint solving

As described in Sect. 5.1 the critical path in the application graph has to be identified to verify whether Constraint C.1 can be met. Instead of verifying the timing constraint once all task instances are mapped, we continuously evaluate whether the application meets the timing constraint with the task instances mapped so far. This is done at run-time as the mapping of task instances and their predecessors is not known at design time. We split the bigger problem of verifying each single path into smaller sub-problems solved by the agents such that the constraint is continuously being verified and the search can be interrupted earlier if the mapping process is running into a dead-end. As only the critical path is of interest, each agent calculates and stores the worst-case path latency to its task \({\mathcal {L}}(t, \alpha , \beta , \rho , \sigma )\) as

Here, an agent requires only the worst-case path latencies \({\mathcal {L}}(t_p, \alpha , \beta , \rho , \sigma )\) of its predecessor tasks \(t_p\) and the corresponding worst-case communication latencies to its own task \(CL(m_{t_p - t},\rho ,\sigma ))\) together with the worst-case task latency \(TL(t, \alpha (t),\beta (t))\). The maximum of all paths from preceding tasks \(t_p\) to this task t is then the worst-case path latency to it task \({\mathcal {L}}(t, \alpha , \beta , \rho , \sigma )\). Accordingly, instead of verifying Constraint C.1 once all tasks are mapped, each agent can verify the following constraint on its own:

This has the advantage if there are multiple sink tasks in an application graph that each agent can verify the constraint on its own. Furthermore, if the constraint can not be met by any of the tasks during the mapping process, the process can be stopped and a backtracking algorithm can be applied saving the agents from evaluating invalid solutions.

6.3 Agent

In our work each task instance is assigned to an agent which is responsible for finding a mapping by allocating and reserving resources at resource managers and by coordinating the mapping process with other agents. In the following we first present a pre-defined mapping order in which the agents of an application find a mapping. Afterwards, we describe the search space exploration of an agent using a code listing. Last, we present the backtracking approach which agents require to explore different solutions.

6.3.1 Mapping order

For the mapping process of the tasks onto the ECUs a predefined mapping flow is required to ensure that constraints are met and only valid mappings are generated. Theoretically, it would be preferable to map all tasks in parallel. However, there are limitations to the level of parallelism due to constraints and their verification at run-time. To adhere to Constraint C.2 a sequential mapping flow between active and passive task instances is required to avoid mapping both task instances onto the same ECU. Therefore, we define that active task instances have to be mapped before passive task instances. Second, to allocate and reserve resources for communication, the routing from a predecessor task to its successor has to be known. We decided that the succeeding tasks allocate and reserve the resources for all incoming messages. As a consequence, a task instance can only be mapped onto an ECU once all preceding task instances are mapped. This sequential mapping order is also required to perform the run-time constraint solving described in Sect. 6.2.

An exemplary task instance graph with the corresponding mapping flow. Passive task instances are mapped after active task instances. Active task instances have to wait until the passive task instances of their predecessors are mapped. As the task instances of t1 and t2 do not have any dependencies on each other they can be mapped in parallel. However, the active task instance of t3 has to wait for both branches to finish to continue with the mapping process. (Color figure online)

Figure shows the mapping flow of an exemplary task instance graph consisting of four tasks. The mapping process starts with the active task instance of the source task \(t_{0,a}\). All other task instances are initially waiting until they received a message from all their mapping predecessors. Once the active task instance has found a mapping it will inform its passive counterpart \(t_{0,b}\) that it found a valid mapping. The passive task instance \(t_{0,b}\) that has been waiting then finds a mapping itself. It ensures that the ECU to which the active task instance is mapped to is removed from the search space of the passive task instance. Afterwards, the passive task instance informs all active task instances of directly succeeding tasks. In the example \(t_{0,b}\) informs \(t_{1,a}\) and \(t_{2,a}\) about a successful mapping. Active task instances might have to wait not only for one but multiple passive task instance of their preceding tasks depending on the dependencies in the application graph. In the figure \(t_{3,a}\) has to wait for both \(t_{1,b}\) and \(t_{2,b}\) to finish. By contrast, neighbouring tasks with shared predecessors can search for a mapping in parallel as they have no direct dependency and open a new branch as it is the case for the task instances of t1 and t2 in the example. Any tasks instances belonging to different branches can also work in parallel. Therefore, the level of parallelism in the mapping search is bound by the number of branches or the width of the application graph. Tasks which have predecessors in multiple different branches reduce the level of parallelism and represent a bottleneck.

6.3.2 Agent mapping

Once it is the turn of an agent to find a mapping, it is its responsibility to evaluate the mapping options and ensure that resources are allocated and reserved accordingly. Listing 1 shows the pseudo-code for the mapping process of an agent. Each agent starts in the Wait() function (Line 1) where they wait until all predecessors send a signal that they found a mapping and transmit their worst-case latencies and mappings. Afterwards, the agent becomes active and is searching for a mapping. Here, at first the search space is set up consisting of all available ECUs in the system (Line 6). Then, in case a passive task instance \(t_b\) is being mapped, the ECU to which the corresponding active task instance \(t_a\) is mapped to is removed from the search space \(B(t_{b})\) to adhere to Constraint C.2 (Lines 7 and 23):

To find the most suitable solution, the different options for the mapping of the task are evaluated (Lines 8 and 28). Here, the worst-case path latency \({\mathcal {L}}(t, e)\) for each available ECU \(e \in E\) is calculated. Afterwards, the search space can be filtered for invalid solutions that do not adhere to Eq. 6.2 and, therefore, would violate Constraint C.1 (Lines 9 and 34). Using a heuristic the possible solutions are then sorted by the calculated by an increasing worst-case latency (Line 10). The agent then tries to allocate resources for the most fitting solution by sending allocation and reservation requests to resource managers (Lines 11 and 40). Here, service intervals have to be allocated or reserved for the executing tasks and slots allocated or reserved for all incoming messages on the links on the corresponding routing paths. The resource managers receive a request from an agent to allocate or reserve a certain amount of service intervals for CPU resources or slots for a link. The resource manager then executes the allocation or reservation and answers the agent whether the allocation or reservation could be performed successfully or not. The resource manager also implicitly decides how the system will be degraded by choosing the corresponding service intervals for the agent. However, this behavior is completely transparent to the agent and it does not know whether the activation of its passive task instance will lead to a degradation of another task and vice versa. Multiple strategies of the resource manager for allocating and reserving resources are described in Sect. 6.4. In case all resources could be successfully allocated and reserved a valid solution has been found such that the agent can update the binding for its task, move to the corresponding ECU and inform all succeeding agents about its success (Lines 18-21).

6.3.3 Backtracking

In case that either all solutions in the search space have been filtered out or not sufficient resources could be allocated or reserved for any of the solutions, the mapping algorithm ran into a dead end. Here, a backtracking algorithm is performed. The agent informs its predecessor in the mapping flow that no solution could be found and then goes back into its initial waiting status (Lines 14–16 and 49). The informed agent then frees all resources it had previously allocated or reserved and the ECU to which it is currently mapped to is removed from the search space. Afterwards, this agent has to in turn inform all other succeeding agents in the mapping flow that its mapping has been invalidated. This prohibits the succeeding agents in other branches from wasting time and unnecessarily occupying resources. Even if the other branches were to find a valid solution, the solution would be based on a mapping of their predecessor that is no longer valid. Furthermore, they are informed that they have to wait again for their predecessor in the mapping flow. After invalidating its successors the backtracking agent tries to find another valid solution (Line 53) and will then again inform its predecessors about the success. If there is no valid solution left or no other solution can be found, it will itself start the backtracking process of its own predecessors.

6.4 Resource manager

In the following we describe how an allocation and reservation request of an agent is performed by a resource manager. Furthermore, we introduce the three allocation and reservation strategies Random, FreeFirst and FreeLast and describe their respective advantages and disadvantages over each other. These three strategies are evaluated in detail in Sect. 7.

6.4.1 Resource allocation and reservation

The resource managers receive a request from an agent to allocate or reserve a certain amount of service intervals for CPU resources or slots for a link. It is the job of the corresponding resource manager to execute the allocation or reservation and to answer whether the allocation or reservation could be performed successfully or not. The resource manager decides during this process which exact service intervals or slots will be allocated or reserved. Here, it ensures that constraints C.3 to C.8 are met as it is programmed to only operate within these bounds. This allocation and reservation process also decides how the system will be degraded in a failure scenario. Section 5.2 describes in detail how a degradation would be performed on the level of service intervals. If a service interval is both reserved by a critical passive task instance and allocated by a non-critical task instance, the service interval is automatically assigned to the critical task instance once a failure occurs. In our system a critical agent can pass the information in which failure scenarios this activation should occur. The resource manager is then informed by a watchdog about any failures occuring in the system such that it can immediately react. This behavior is completely transparent to the agent and it does not know whether the activation of its passive task instance will lead to a degradation of another task.

6.4.2 Strategies

In the following we discuss the three strategies we developed to allocate and reserve resource slots. By default the resource managers use the Random strategy which chooses the service intervals assigned to an allocation or reservation request randomly. Changing this algorithm can impact the degradation behaviour and success chance of finding a mapping. In the following we propose two alternative strategies FreeFirst and FreeLast for assigning service intervals.

The FreeFirst strategy aims at minimizing the overlap between reservations and allocations by assigning service intervals first that have not been allocated or reserved. Only if no free service interval is available the algorithm will allocate or reserve other service intervals. The algorithm has the advantage of reducing the degradation effect as allocations and reservations overlap as little as possible. The downside is that in scenarios where resources are constrained, the algorithm might lead to lower success rates of finding mappings as less free service intervals will be available. On the other hand, in more relaxed scenarios it uses all available resources to reduce the degradation effect with little effect on the success rate.

The FreeLast strategy aims at utilizing resources more efficiently by assigning service intervals first that already have been allocated or reserved, which maximizes the overlap between reservations and allocations. The advantage is that even in resource-constrained scenarios, the resources are used in an efficient way such that the success rate of finding mappings is increased. On the other hand, as the overlap between reservations and allocations is maximized, there will be a stronger degradation effect in case of a failure scenario. In scenarios where resources are less constrained, reservations and allocations will overlap even if additional free service intervals were available leading to avoidable degradation effects.

When choosing one of the two opposing strategies, a trade-off between degradation effect, success rate and resource-efficiency has to be made. In scenarios where degradation is desired or tolerated, the FreeLast strategy can lead to a lower resource utilization and higher success rates. In scenarios where many resources are available and degradation should be avoided as much as possible the FreeFirst strategy maximizes resource utilization to minimize degradation impact. We continue this discussion with our experimental results in Sect. 7, which gives further insights into these three strategies.

6.5 Recovery and reconfiguration

In a failure scenario with a critical application, an immediate failure reaction and failover to the backup solution is required to keep the application operational. Failures can be detected via watchdogs and heartbeats. In previous work [4], we have presented a formal analysis to derive the worst-case application failover time for distributed systems. Here, we analyzed the impact of failure detection and recovery times on the timing behavior of distributed applications. This analysis guarantees an upper bound on the time that it would take for an application to generate a new output after the failover of one or multiple task instances. Our current work can be used as a base for the analysis done in [4] as our system provides an upper bound on task execution and message transmission times.

In case an active task instance of a critical application is affected by the failure, the watchdogs notice a timeout and notify both the resource manager and the agent of the passive task instance. The resource manager immediately turns the reservation of the agent into an allocation and performs a degradation if required. The agent of the passive task instance then starts its task. As we use a service-oriented middleware with a publish/subscribe pattern, this task instance then has to subscribe to its predecessor task instances to receive the corresponding messages. In case the preceding task instances have been affected by the failure as well, they first have to advertise their service before succeeding task instances can subscribe to them. If a passive task instance of a critical application is affected by a failure no immediate failure reaction is required. In case a failover has been performed by a preceding task instance, a task instances also has to wait until the corresponding service is offered until it can re-subscribe to it.

If a task instance of a non-critical application is affected by a failure, no immediate reaction is required. In case a non-critical task instance is affected by a degradation the agent is automatically notified. The degraded non-critical task can then run in a degraded mode with less available resources or completely be shut down.

After the immediate failover, which ensures a safe fail-operational behavior of critical applications, a reconfiguration of the system can be performed. During this reconfiguration, resources can be freed by shutting down task instances of failed non-critical applications. Furthermore, it is important to re-establish the fail-operational behavior of critical applications. Here, the agent of the source task invalidates the mapping of all its successors in the mapping order which in turn also inform all their own successors. Afterwards, the mapping process can be repeated while the car is in a safe state until a valid configuration is found.

7 Evaluation

We evaluate our performance analysis and our agent-based approach using our in-house developed simulation framework. The framework has been developed to simulate automotive hardware architectures and the execution and communication of the system software according to our system model in Sect. 3. On top of the simulation framework we implemented the agents, resource managers and strategies as described in Sect. 6. For the simulation framework we chose a process-based Discrete-Event Simulation (DES) architecture based on the SimPy framework [32]. The hardware architecture and system software are described in a specification file using the XML schema from the OpenDSE framework [33]. The simulation framework supports any kind of hardware architecture consisting of ECUs, switches, and links. To allow a dynamic behavior where tasks and agents are moving between ECUs at run-time we use a communication middleware based on the SOME/IP standard [25]. The middleware consists of a service discovery which allows to dynamically find services at run-time. Communication participants are either modelled as clients or services. Furthermore, the middleware supports remote-procedure calls and includes a publish/subscribe scheme. The framework also offers the possibility to simulate ECU failures by shutting down ECUs. ECU failures are detected via heartbeats that are periodically sent between all ECUs. Once a watchdog does not receive the heartbeat within a certain timeout interval it reports the corresponding ECU failure.

7.1 Setup

The simulated hardware architecture used in our experiments. Ten homogeneous ECUs \(e \in E\) are connected in pairs via ethernet links \(l \in L\) to one switch \(s \in S\) forming a ring architecture. Messages are routed using Dijkstra’s algorithm [27]. In the worst case four hops are required to enable communication between two ECUs. Using ethernet links with a data rate of 1 Gbit/s, a maximum slot number of \(SL_{max} = 1000\) and a slot interval of \(\tau _{SL} = 12.5 \,\mu {\text {s}}\), the worst-case communication latency for sending a message m over one link is \(CL_(m,l) = 12.5\) ms