Abstract

AVATAR is an elegant and effective way to split clauses in a saturation prover using a SAT solver. But is it refutationally complete? And how does it relate to other splitting architectures? To answer these questions, we present a unifying framework that extends a saturation calculus (e.g., superposition) with splitting and that embeds the result in a prover guided by a SAT solver. The framework also allows us to study locking, a subsumption-like mechanism based on the current propositional model. Various architectures are instances of the framework, including AVATAR, labeled splitting, and SMT with quantifiers.

1 Introduction

One of the great strengths of saturation calculi such as resolution [26] and superposition [1] is that they avoid case analyses. Derived clauses hold unconditionally, and the prover can stop as soon as it derives the empty clause, without having to backtrack. The drawback is that these calculi often generate long, unwieldy clauses that slow down the prover. A remedy is to partition the search space by splitting a multiple-literal clause \(C_1 \vee \cdots \vee C_n\) into subclauses \(C_i\) that share no variables. Splitting approaches include splitting with backtracking [31, 32], splitting without backtracking [25], labeled splitting [15], and AVATAR [28].

The AVATAR architecture, which is based on a satisfiability (SAT) solver, is of particular interest because it is so successful. Voronkov reported that an AVATAR-enabled Vampire could solve 421 TPTP problems that had never been solved before by any system [28, Sect. 9], a mind-boggling number. Intuitively, AVATAR works well in combination with the superposition calculus because it combines superposition’s strong equality reasoning with the SAT solver’s strong clausal reasoning. It is also appealing theoretically, because it gracefully generalizes traditional saturation provers and yet degenerates to a SAT solver if the problem is propositional.

To illustrate the approach, we follow the key steps of an AVATAR-enabled resolution prover on the initial clause set containing \(\lnot \textsf{p}(\textsf{a}),\) \(\lnot \textsf{q}(z, z),\) and \(\textsf{p}(x)\vee \textsf{q}(y, \textsf{b}).\) The disjunction can be split into \(\textsf{p}(x)\mathbin {\leftarrow }\{[\textsf{p}(x)]\}\) and \(\textsf{q}(y, \textsf{b})\mathbin {\leftarrow }\{[\textsf{q}(y, \textsf{b})]\},\) where \(C \mathbin {\leftarrow }\{[C]\}\) indicates that the clause C is enabled only in models in which the associated propositional variable [C] is true. A SAT solver is then run to choose a model \(\mathcal {J}\) of \([\textsf{p}(x)] \vee [\textsf{q}(y, \textsf{b})]\). Suppose \(\mathcal {J}\) makes \([\textsf{p}(x)]\) true and \([\textsf{q}(y, \textsf{b})]\) false. Then resolving \(\textsf{p}(x)\mathbin {\leftarrow }\{[\textsf{p}(x)]\}\) with \(\lnot \textsf{p}(\textsf{a})\) produces \(\bot \mathbin {\leftarrow }\{[\textsf{p}(x)]\}\), meaning that \([\textsf{p}(x)]\) must be false. Next, the SAT solver makes \([\textsf{p}(x)]\) false and \([\textsf{q}(y, \textsf{b})]\) true. Resolving \(\textsf{q}(y, \textsf{b})\mathbin {\leftarrow }\{[\textsf{q}(y, \textsf{b})]\}\) with \(\lnot \textsf{q}(z, z)\) yields \(\bot \mathbin {\leftarrow }\{[\textsf{q}(y, \textsf{b})]\}\), meaning that \([\textsf{q}(y, \textsf{b})]\) must be false. Since both disjuncts of \([\textsf{p}(x)] \vee [\textsf{q}(y, \textsf{b})]\) are false, the SAT solver reports “unsatisfiable,” concluding the refutation.
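The interplay between the SAT solver and the prover in this example can be mimicked by a minimal sketch. It assumes a brute-force SAT solver (`find_model`) and a hard-coded table (`REFUTED_BY`) that stands in for the resolution steps; both names are hypothetical and do not come from AVATAR itself. The brute-force solver may pick a different first model from the one chosen in the text, but the propositional clauses learned are the same.

```python
from itertools import product

# Propositional variables standing for the two split components.
VARS = ["[p(x)]", "[q(y,b)]"]

def find_model(clauses):
    """Brute-force SAT: return a model (dict var -> bool) or None.
    A clause is a list of (var, polarity) literals, read disjunctively."""
    for values in product([True, False], repeat=len(VARS)):
        model = dict(zip(VARS, values))
        if all(any(model[v] == pol for v, pol in clause) for clause in clauses):
            return model
    return None

# Stand-in for the first-order prover (an assumption of this sketch):
# each component, once enabled, resolves with a unit clause of the input
# and yields the propositional clause bot <- {[C]}.
REFUTED_BY = {"[p(x)]": "~p(a)", "[q(y,b)]": "~q(z,z)"}

def avatar_loop():
    clauses = [[("[p(x)]", True), ("[q(y,b)]", True)]]  # [p(x)] or [q(y,b)]
    trace = []
    while True:
        model = find_model(clauses)
        if model is None:
            return "unsatisfiable", trace
        enabled = [v for v in VARS if model[v]]
        refuted = next((v for v in enabled if v in REFUTED_BY), None)
        if refuted is None:
            return "saturated", trace
        trace.append(refuted)
        clauses.append([(refuted, False)])  # learn: [C] must be false

result, trace = avatar_loop()
print(result, trace)
```

Each iteration adds one unit propositional clause, so the loop stops after two rounds, once both disjuncts have been refuted.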

What about refutational completeness? Far from being a purely theoretical concern, establishing completeness—or finding counterexamples—could yield insights into splitting and perhaps lead to an even stronger AVATAR. Before we can answer this open question, we must mathematize splitting. Our starting point is the saturation framework by Waldmann, Tourret, Robillard, and Blanchette [29], based on the work of Bachmair and Ganzinger [2]. It covers a wide array of techniques, but “the main missing piece of the framework is a generic treatment of clause splitting” [29, p. 332]. We provide that missing piece, in the form of a splitting framework, and use it to show the completeness of an AVATAR-like architecture. The framework is currently a pen-and-paper creature; a formalization using Isabelle/HOL [21] is underway.

Our framework has five layers, linked by refinement. The first layer consists of a base calculus, such as resolution or superposition. It must be presentable as an inference system and a redundancy criterion, as required by the saturation framework, and it must be refutationally complete.

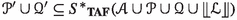

From a base calculus, our framework can be used to derive the second layer, which we call the splitting calculus (Sect. 3). This extends the base calculus with splitting and inherits the base’s completeness. It works on A-clauses or A-formulas of the form \(C \mathbin {\leftarrow }A\), where C is a base clause or formula and A is a set of propositional literals, called assertions (Sect. 2).

Using the saturation framework, we can prove the dynamic completeness of an abstract prover, formulated as a transition system, that implements the splitting calculus. However, this ignores a major component of AVATAR: the SAT solver. AVATAR considers only inferences involving A-formulas whose assertions are true in the current propositional model. The role of the third layer is to reflect this behavior. A model-guided prover operates on states of the form \((\mathcal {J}{,}\> {{\mathscr {N}}}),\) where \(\mathcal {J}\) is a propositional model and \({{\mathscr {N}}}\) is a set of A-formulas (Sect. 4). This layer is also dynamically complete.

The fourth layer introduces AVATAR’s locking mechanism (Sect. 5). With locking, an A-formula \(D \mathbin {\leftarrow }B\) can be temporarily disabled by another A-formula \(C \mathbin {\leftarrow }A\) if C subsumes D, even if \(A \not \subseteq B.\) Here we make a first discovery: AVATAR-style locking compromises completeness and must be curtailed.

Finally, the fifth layer is an AVATAR-based prover (Sect. 6). This refines the locking model-guided prover of the fourth layer with the given clause procedure, which saturates an A-formula set by distinguishing between active and passive A-formulas. Here we make another discovery: Selecting A-formulas fairly is not enough to guarantee completeness. We need a stronger criterion.

There are also implications for other architectures. In a hypothetical tête-à-tête with the designers of labeled splitting, they might gently point out that by pioneering the use of a propositional model, including locking, they almost invented AVATAR themselves. Likewise, developers of satisfiability modulo theories (SMT) solvers might be tempted to claim that Voronkov merely reinvented SMT. To investigate such questions, we apply our framework to splitting without backtracking, labeled splitting, and SMT with quantifiers (Sect. 7). This gives us a solid basis for comparison as well as some new theoretical results.

A shorter version of this article was presented at CADE-28 [14]. This article extends the conference paper with more explanations, examples, counterexamples, and proofs. We strengthened the definition of consequence relation to require compactness, which allowed us to simplify property (D4). The property (D4) from the conference paper is proved as Lemma 5. The definition of strongly finitary was also changed to include a stronger condition on the introduced assertions, which is needed for the proof of Lemma 72.

2 Preliminaries

Our framework is parameterized by abstract notions of formulas, consequence relations, inferences, and redundancy. We largely follow the conventions of Waldmann et al. [29]. A-formulas generalize Voronkov’s A-clauses [28].

2.1 Formulas

A set \({\textbf{F}}\) of formulas, ranged over by \(C, D \in {\textbf{F}},\) is a set that contains a distinguished element \(\bot \) denoting falsehood. A consequence relation \(\models \) over \({\textbf{F}}\) is a relation \({\models } \subseteq {({\mathscr {P}}({\textbf{F}}))}^2\) with the following properties for all sets \(M, M', N, N' \subseteq {\textbf{F}}\) and formulas \(C, D \in {\textbf{F}}\):

- (D1) \(\{\bot \} \models \emptyset \);
- (D2) \(\{C\} \models \{C\}\);
- (D3) if \(M' \subseteq M\) and \(N' \subseteq N,\) then \(M' \models N'\) implies \(M \models N\);
- (D4) if \(M \models N \cup \{C\}\) and \(M' \cup \{C\} \models N'\), then \(M \cup M' \models N \cup N'\);
- (D5) if \(M \models N,\) then there exist finite sets \(M' \subseteq M\) and \(N' \subseteq N\) such that \(M' \models N'.\)

The intended interpretation of \(M \models N\) is conjunctive on the left but disjunctive on the right: “\(\bigwedge M \longrightarrow \bigvee N\).” The disjunctive interpretation of N will be useful to define splittability abstractly in Sect. 3.1. Property (D4) is called the cut rule, and (D5) is called compactness.

For their saturation framework, Waldmann et al. instead consider a fully conjunctive version of the consequence relation, with different properties. The incompatibility can easily be repaired: Given a consequence relation \(\models ,\) we can obtain a consequence relation \({\models }'\) in their sense by defining \(M \mathrel {{\models }'} N\) if and only if \(M \models \{C\}\) for every \(C \in N.\) The two versions differ only when the right-hand side is not a singleton. The conjunctive version \({\models }'\) can then be used when interacting with the saturation framework.
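To make the two readings concrete, here is a small sketch over propositional logic with two atoms. It assumes that formulas are modeled as Python predicates on valuations; the names `entails` and `entails_conj` are illustrative and correspond to \(\models \) and \({\models }'\) only for this toy instance.

```python
from itertools import product

ATOMS = ("p", "q")

def valuations():
    """Enumerate all truth assignments to the atoms."""
    for bits in product([False, True], repeat=len(ATOMS)):
        yield dict(zip(ATOMS, bits))

# Formulas as predicates over valuations (an assumption of this sketch).
BOT = lambda v: False
P = lambda v: v["p"]
Q = lambda v: v["q"]
P_OR_Q = lambda v: v["p"] or v["q"]
NOT_P = lambda v: not v["p"]

def entails(M, N):
    """M |= N: every valuation satisfying all of M satisfies some member of N."""
    return all(any(D(v) for D in N)
               for v in valuations() if all(C(v) for C in M))

def entails_conj(M, N):
    """M |=' N: M |= {C} for every C in N (fully conjunctive reading)."""
    return all(entails(M, [C]) for C in N)

print(entails([BOT], []))             # instance of (D1)
print(entails([P], [P]))              # instance of (D2)
print(entails([P_OR_Q], [P, Q]))      # disjunctive right-hand side
print(entails_conj([P_OR_Q], [P, Q])) # conjunctive right-hand side
```

The last two calls differ, which illustrates that the two versions diverge once the right-hand side is not a singleton.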

The \(\models \) notation can be extended to allow negation on either side. Let \({\textbf{F}}_{\!{{\sim }}}\) be defined as \({\textbf{F}}\uplus \{{{\sim }C} \mid C \in {\textbf{F}}\}\) such that \({{\sim }{{\sim }C}} = C.\) Given \(M, N \subseteq {\textbf{F}}_{\!{{\sim }}},\) we set \(M \models N\) if and only if

\(\{C \in {\textbf{F}}\mid C \in M\} \cup \{C \in {\textbf{F}}\mid {{\sim }C} \in N\} \models \{C \in {\textbf{F}}\mid {{\sim }C} \in M\} \cup \{C \in {\textbf{F}}\mid C \in N\}.\)

We write \(M \models \!\!\!|N\) for the conjunction of \(M \models N\) and \(N \models M.\)

Lemma 1

Let \(C\in {\textbf{F}}_{\!{{\sim }}}\). Then \(\{C\}\cup \{{{\sim }C}\}\models \{\bot \}\).

Proof

This holds by (D2) and (D3) due to the definition of \(\models \) on \({\textbf{F}}_{\!{{\sim }}}\). \(\square \)

Following the saturation framework [29, p. 318], we distinguish between the consequence relation \({\models }\) used for stating refutational completeness and the consequence relation \({|\!\!\!\approx }\) used for stating soundness. For example, \({\models }\) could be entailment for first-order logic with equality, whereas \({|\!\!\!\approx }\) could also draw on linear arithmetic, or interpret Skolem symbols so as to make skolemization sound. Normally \({\models } \subseteq {|\!\!\!\approx }\), but this is not required.

Example 1

In clausal first-order logic with equality, as implemented in superposition provers, the formulas in \({\textbf{F}}\) consist of clauses over a signature \({\Sigma }.\) Each clause C is a finite multiset of literals \(L_1, \dotsc , L_n\) written \(C = L_1 \vee \cdots \vee L_n.\) The clause’s variables are implicitly quantified universally. Each literal L is either an atom or its negation (\(\lnot \)), and each atom is an unoriented equation \(s \approx t\). We define the consequence relation \(\models \) by letting \(M \models N\) if and only if every first-order \({\Sigma }\)-interpretation that satisfies all clauses in M also satisfies at least one clause in N.

2.2 Calculi and Derivations

A refutational calculus combines a set of inferences, which are a priori mandatory, and a redundancy criterion, which identifies inferences that a posteriori need not be performed as well as formulas that can be deleted.

Let \({\textbf{F}}\) be a set of formulas equipped with \(\bot .\) An \({\textbf{F}}\)-inference \(\iota \) is a tuple \((C_n,\dotsc ,C_1,D) \in {\textbf{F}}^{n+1}.\) The formulas \(C_n,\dotsc ,C_1\) are the premises, and D is the conclusion. Define \(\textit{prems}(\iota ) = \{C_n,\dotsc ,C_1\}\) and \(\textit{concl}(\iota ) = \{D\}.\) An inference \(\iota \) is sound w.r.t. \(|\!\!\!\approx \) if \(\textit{prems}(\iota ) |\!\!\!\approx \textit{concl}(\iota ).\) An inference system \(\textit{Inf}{}\) is a set of \({\textbf{F}}\)-inferences.

Given \(N \subseteq {\textbf{F}},\) we let \(\textit{Inf}{}(N)\) denote the set of all inferences in \(\textit{Inf}{}\) whose premises are included in N, and \(\textit{Inf}{}(N, M) = \textit{Inf}{}(N {\cup }M) \setminus \textit{Inf}{}(N \setminus M)\) for the set of all inferences in \(\textit{Inf}{}\) such that one or more premises are in M and the remaining premises are in N.
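The two notations can be illustrated by a short sketch. It assumes that an inference is a Python tuple whose last component is the conclusion and that formulas are plain strings; the toy inference system `INF` is hypothetical.

```python
def inf_of(inferences, N):
    """Inf(N): inferences whose premises all lie in N.
    An inference is a tuple (C_n, ..., C_1, D); the last entry is the conclusion."""
    N = set(N)
    return {i for i in inferences if set(i[:-1]) <= N}

def inf_between(inferences, N, M):
    """Inf(N, M): Inf(N union M) minus Inf(N minus M), that is, inferences
    with at least one premise in M and the remaining premises in N."""
    N, M = set(N), set(M)
    return inf_of(inferences, N | M) - inf_of(inferences, N - M)

# Toy propositional resolution steps, written as strings (hypothetical).
INF = {
    ("p | q", "~p", "q"),
    ("q", "~q", "bot"),
    ("p | q", "~q", "p"),
}

old = {"p | q", "~p", "~q"}
new = {"q"}
print(inf_of(INF, old))
print(inf_between(INF, old, new))
```

In a given clause loop, `inf_between` is the natural shape of the question "which inferences became possible because of the newly added formulas?"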

A redundancy criterion for an inference system \(\textit{Inf}{}\) and a consequence relation \({\models }\) is a pair \(\textit{Red}= (\textit{Red}_\text {I},\textit{Red}_\text {F}),\) where \(\textit{Red}_\text {I}: {\mathscr {P}}({\textbf{F}}) \rightarrow {\mathscr {P}}(\textit{Inf}{})\) and \(\textit{Red}_\text {F}:{\mathscr {P}}({\textbf{F}}) \rightarrow {\mathscr {P}}({\textbf{F}})\) enjoy the following properties for all sets \(M, N \subseteq {\textbf{F}}\):

- (R1) if \(N \models \{\bot \},\) then \(N {\setminus } \textit{Red}_\text {F}(N) \models \{\bot \}\);
- (R2) if \(M \subseteq N,\) then \(\textit{Red}_\text {F}(M) \subseteq \textit{Red}_\text {F}(N)\) and \(\textit{Red}_\text {I}(M) \subseteq \textit{Red}_\text {I}(N)\);
- (R3) if \(M \subseteq \textit{Red}_\text {F}(N),\) then \(\textit{Red}_\text {F}(N) \subseteq \textit{Red}_\text {F}(N {\setminus } M)\) and \(\textit{Red}_\text {I}(N) \subseteq \textit{Red}_\text {I}(N {\setminus } M)\);
- (R4) if \(\iota \in \textit{Inf}{}\) and \(\textit{concl}(\iota ) \in N,\) then \(\iota \in \textit{Red}_\text {I}(N).\)

Inferences in \(\textit{Red}_\text {I}(N)\) and formulas in \(\textit{Red}_\text {F}(N)\) are said to be redundant w.r.t. N. \(\textit{Red}_\text {I}\) indicates which inferences need not be performed, whereas \(\textit{Red}_\text {F}\) justifies the deletion of formulas deemed useless. The above properties make the passage from static to dynamic completeness possible: (R1) ensures that deleting a redundant formula preserves a set’s inconsistency, so as not to lose refutations; (R2) and (R3) ensure that arbitrary formulas can be added and redundant formulas can be deleted by the prover; and (R4) ensures that adding an inference’s conclusion to the formula set makes the inference redundant.

A pair \((\textit{Inf}{}, \textit{Red})\) forms a calculus. A set \(N \subseteq {\textbf{F}}\) is saturated w.r.t. \(\textit{Inf}{}\) and \(\textit{Red}_\text {I}\) if \(\textit{Inf}{}(N) \subseteq \textit{Red}_\text {I}(N).\) The calculus \((\textit{Inf}{}, \textit{Red})\) is statically (refutationally) complete (w.r.t. \(\models \)) if for every set \(N \subseteq {\textbf{F}}\) that is saturated w.r.t. \(\textit{Inf}{}\) and \(\textit{Red}_\text {I}\) and such that \(N \models \{\bot \},\) we have \(\bot \in N.\)

Lemma 2

Assume that the calculus \((\textit{Inf}{},\textit{Red})\) is statically complete. Then \(\bot \notin \textit{Red}_\text {F}(N)\) for every \(N \subseteq {\textbf{F}}.\)

Proof

By (R2), it suffices to show \(\bot \notin \textit{Red}_\text {F}({\textbf{F}}).\) Clearly, by (D2) and (D3), \({\textbf{F}}\models \{\bot \}.\) Thus, by (R1), \({\textbf{F}}{\setminus } \textit{Red}_\text {F}({\textbf{F}}) \models \{\bot \}.\) Moreover, by (R3) and (R4), \({\textbf{F}}{\setminus } \textit{Red}_\text {F}({\textbf{F}})\) is saturated. Hence, since \((\textit{Inf}{}, \textit{Red})\) is statically complete, \(\bot \in {\textbf{F}}\setminus \textit{Red}_\text {F}({\textbf{F}}).\) Therefore, \(\bot \notin \textit{Red}_\text {F}({\textbf{F}}).\) \(\square \)

Remark 3

Given a redundancy criterion \((\textit{Red}_\text {I}, \textit{Red}_\text {F}),\) where \(\bot \notin \textit{Red}_\text {F}({\textbf{F}}),\) we can make it stricter as follows. Define \(\textit{Red}_\text {I}'\) such that \(\iota \in \textit{Red}_\text {I}'(N)\) if and only if either \(\iota \in \textit{Red}_\text {I}(N)\) or \(\bot \in N.\) Define \(\textit{Red}_\text {F}'\) such that \(C \in \textit{Red}_\text {F}'(N)\) if and only if either \(C \in \textit{Red}_\text {F}(N)\) or else both \(\bot \in N\) and \(C \not = \bot .\) Obviously, \(\textit{Red}' = (\textit{Red}_\text {I}', \textit{Red}_\text {F}')\) is a redundancy criterion. Moreover, if N is saturated w.r.t. \(\textit{Inf}{}\) and \(\textit{Red}_\text {I},\) then N is saturated w.r.t. \(\textit{Inf}{}\) and \(\textit{Red}_\text {I}'\), and if the calculus \((\textit{Inf}{}, \textit{Red})\) is statically complete, then \((\textit{Inf}{}, \textit{Red}')\) is also statically complete. (In the last case, the condition \(\bot \notin \textit{Red}_\text {F}({\textbf{F}})\) holds by Lemma 2.)

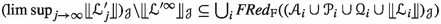

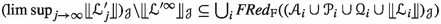

A sequence \((x_i)_i\) over a set X is a function from \({\mathbb {N}}\) to X that maps each \(i \in {\mathbb {N}}\) to \(x_i \in X.\) Let \((X_i)_i\) be a sequence of sets. Its limit inferior is \(X_\infty = \textstyle \liminf _{j\rightarrow \infty } X_j = \bigcup _i \bigcap _{j \ge i} X_j,\) and its limit superior is \(X^\infty = \textstyle \limsup _{j\rightarrow \infty } X_j = \bigcap _i \bigcup _{j \ge i} X_j.\) The elements of \(X_\infty \) are called persistent. A sequence \((N_i)_i\) of sets of \({\textbf{F}}\)-formulas is weakly fair w.r.t. \(\textit{Inf}{}\) and \(\textit{Red}_\text {I}\) if \(\textit{Inf}{}(N_\infty ) \subseteq \bigcup _i \textit{Red}_\text {I}(N_i)\) and strongly fair if \({\limsup _{i\rightarrow \infty } \textit{Inf}{}(N_i)} \subseteq \bigcup _i \textit{Red}_\text {I}(N_i).\) Weak fairness requires that all inferences possible from some index i and ever after eventually be performed or become redundant for another reason. Strong fairness requires the same from all inferences that are possible infinitely often, even if not continuously so. Both can be used to ensure that some limit is saturated.
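For intuition, the two limits can be computed exactly when the sequence is eventually periodic. The sketch below assumes such a sequence, given as a finite `prefix` followed by a nonempty `cycle` that repeats forever; this representation is an illustrative choice, not part of the framework.

```python
from functools import reduce

def lim_inf_sup(prefix, cycle):
    """Limits of the sequence prefix[0], ..., prefix[-1], cycle[0], ..., cycle[-1], cycle[0], ...
    The finite prefix does not affect either limit: an element is persistent
    iff it belongs to every set of the cycle, and it occurs infinitely often
    iff it belongs to at least one set of the cycle."""
    lim_inf = set(reduce(set.intersection, cycle))
    lim_sup = set(reduce(set.union, cycle))
    return lim_inf, lim_sup

prefix = [{"a", "b"}]
cycle = [{"b", "c"}, {"b", "d"}]
print(lim_inf_sup(prefix, cycle))
```

Here only `b` is persistent, whereas `c` and `d` each recur infinitely often without ever being present continuously. Inferences that depend on such recurring elements are covered by strong fairness but not by weak fairness.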

Given a relation \({\rhd } \subseteq X^2\) (pronounced “triangle”), a \(\rhd \)-derivation is a sequence of X elements such that \(x_i \rhd x_{i+1}\) for every i. Finite runs can be extended to derivations by repeating the final state infinitely. We must then ensure that \(\rhd \) supports such stuttering. Abusing language, and departing slightly from the saturation framework, we will say that a derivation \((x_i)_i\) terminates if \(x_i = x_{i+1} = \cdots \) for some index i.

Let \({\rhd _{\!\textit{Red}_\text {F}}} \subseteq ({\mathscr {P}}({\textbf{F}}))^2\) be the relation such that \(M \rhd _{\!\textit{Red}_\text {F}}N\) if and only if \(M {\setminus } N \subseteq \textit{Red}_\text {F}(N).\) Note that it is reflexive and hence supports stuttering. The relation is also transitive due to (R3). We could additionally require soundness (\(M |\!\!\!\approx N\)) or at least consistency preservation (\(M \not |\!\!\!\approx \{\bot \}\) implies \(N \not |\!\!\!\approx \{\bot \}\)), but this is unnecessary for proving completeness.

The calculus \((\textit{Inf}{}, \textit{Red})\) is dynamically (refutationally) complete (w.r.t. \(\models \)) if for every \(\rhd _{\!\textit{Red}_\text {F}}\)-derivation \((N_i)_i\) that is weakly fair w.r.t. \(\textit{Inf}{}\) and \(\textit{Red}_\text {I}\) and such that \(N_0 \models \{\bot \},\) we have \(\bot \in N_i\) for some i.

2.3 A-Formulas

We fix throughout a countable set \({{\textbf {V}}}\) of propositional variables \(\textsf{v}_0,\textsf{v}_1,\dots .\) For each \(\textsf{v} \in {{\textbf {V}}},\) let \(\lnot \textsf{v} \in \lnot {{\textbf {V}}}\) denote its negation, with \(\lnot \lnot \textsf{v} = \textsf{v}.\) We assume that a formula \(\textit{fml}(\textsf{v}) \in {\textbf{F}}\) is associated with each propositional variable \(\textsf{v} \in {{\textbf {V}}}.\) Intuitively, \(\textsf{v}\) approximates \(\textit{fml}(\textsf{v})\) at the propositional level. This definition is extended so that \(\textit{fml}(\lnot \textsf{v}) = {{\sim }\textit{fml}(\textsf{v})}.\) A propositional literal, or assertion, \(a \in {{\textbf {A}}}= {{\textbf {V}}}{\cup }\lnot {{\textbf {V}}}\) over \({{\textbf {V}}}\) is either a propositional variable \(\textsf{v}\) or its negation \(\lnot \textsf{v}.\)

A propositional interpretation \(\mathcal {J} \subseteq {{\textbf {A}}}\) is a set of assertions such that for every variable \(\textsf{v} \in {{\textbf {V}}}\), exactly one of \(\textsf{v} \in \mathcal {J}\) and \(\lnot \textsf{v} \in \mathcal {J}\) holds. We lift \(\textit{fml}\) to sets in an elementwise fashion: \(\textit{fml}(\mathcal {J}) = \{\textit{fml}(a) \mid a \in \mathcal {J}\}\). In the rest of this article, we will often implicitly lift functions elementwise to sets. The condition on the variables ensures that \(\mathcal {J}\) is propositionally consistent. \(\mathcal {J}\) might nevertheless be inconsistent for \(\models \), which takes into account the semantics of the formulas \(\textit{fml}(\textsf{v})\) associated with the variables \(\textsf{v}\); for example, we might have \(\mathcal {J} = \{\textsf{v}_0, \textsf{v}_1\}\), \(\textit{fml}(\textsf{v}_0) = \textsf{p}(x)\), and \(\textit{fml}(\textsf{v}_1) = \lnot \textsf{p}(\textsf{a})\), and \(\mathcal {J} \models \{\bot \}.\)

An A-formula over a set \({\textbf{F}}\) of base formulas and an assertion set \({{\textbf {A}}}\) is a pair \({{\mathscr {C}}} = (C, A) \in {\textbf {AF}}= {\textbf{F}}\times {{\mathscr {P}}}_\text {fin}({{\textbf {A}}}),\) written \(C \mathbin {\leftarrow }A,\) where C is a formula and A is a finite set of assertions \(\{a_1, \dotsc , a_n\}\) understood as an implication \(a_1 \wedge \cdots \wedge a_n \longrightarrow C\). We identify \(C \mathbin {\leftarrow }\emptyset \) with C and define the projections \(\lfloor C \mathbin {\leftarrow }A \rfloor = C\) and \(\lfloor (C_n \mathbin {\leftarrow }A_n, \dots , C_0 \mathbin {\leftarrow }A_0) \rfloor = (C_n, \dots , C_0)\). Moreover, \({{\mathscr {N}}}_\bot \) is the set consisting of all A-formulas of the form \(\bot \mathbin {\leftarrow }A \in {{\mathscr {N}}}\), where \(A \in {{\mathscr {P}}}_\text {fin}({{\textbf {A}}}).\) Since \(\bot \mathbin {\leftarrow }\{a_1,\dotsc ,a_n\}\) can be read as \(\lnot a_1 \vee \dots \vee \lnot a_n,\) we call such A-formulas propositional clauses. (In contrast, we call a variable-free base formula such as \(\textsf{p} \vee \textsf{q}\) a ground clause when \({\textbf{F}}\) is first-order logic.) The set \({{\mathscr {N}}}_\bot \) represents the clauses considered by the SAT solver in the original AVATAR [28]. Note the use of calligraphic letters (e.g., \({{\mathscr {C}}}, {{\mathscr {N}}}\)) to range over A-formulas and sets of A-formulas.

Model-guided provers only consider A-formulas whose assertions are true in the current interpretation. Thus we say that an A-formula \(C \mathbin {\leftarrow }A \in {\textbf {AF}}\) is enabled in a propositional interpretation \(\mathcal {J}\) if \(A \subseteq \mathcal {J}\). A set of A-formulas is enabled in \(\mathcal {J}\) if all of its members are enabled in \(\mathcal {J}.\) Given an A-formula set \({{\mathscr {N}}} \subseteq {\textbf {AF}},\) the enabled projection \({{\mathscr {N}}}_{\mathcal {J}} \subseteq \lfloor \mathscr {N} \rfloor \) consists of the projections \(\lfloor \mathscr {C} \rfloor \) of all A-formulas \(\mathscr {C}\) enabled in \(\mathcal {J}.\) Analogously, the enabled projection \(\textit{Inf}{}_{\mathcal {J}} \subseteq \lfloor \textit{Inf}{} \rfloor \) of a set \(\textit{Inf}{}\) of \({\textbf {AF}}\)-inferences consists of the projections \(\lfloor \iota \rfloor \) of all inferences \(\iota \in \textit{Inf}{}\) whose premises are all enabled in \(\mathcal {J}.\)
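The enabled projection can be sketched in a few lines. The encoding is an assumption made for illustration: an A-formula \(C \mathbin {\leftarrow }A\) is a pair `(C, A)` with `A` a `frozenset` of assertion strings, and a negated variable is written with a leading `~`.

```python
def neg(a):
    """Negate an assertion, with double negation collapsing."""
    return a[1:] if a.startswith("~") else "~" + a

def is_interpretation(J, variables):
    """Exactly one of v and ~v must belong to J, for every variable v."""
    return all((v in J) != (neg(v) in J) for v in variables)

def is_enabled(af, J):
    """C <- A is enabled in J iff A is a subset of J."""
    _, A = af
    return A <= J

def enabled_projection(N, J):
    """N_J: base formulas of all A-formulas of N that are enabled in J."""
    return {C for (C, A) in N if A <= J}

# A-formulas from the introductory example, with v0 = [p(x)] and v1 = [q(y,b)].
N = {
    ("p(x)", frozenset({"v0"})),
    ("q(y,b)", frozenset({"v1"})),
    ("~p(a)", frozenset()),
    ("~q(z,z)", frozenset()),
}
J = {"v0", "~v1"}
print(is_interpretation(J, ["v0", "v1"]))
print(enabled_projection(N, J))
```

With this `J`, the component \(\textsf{q}(y, \textsf{b})\) is switched off, and the prover sees only \(\textsf{p}(x)\) and the two assertion-free input clauses.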

A propositional interpretation \(\mathcal {J}\) is a propositional model of \({{\mathscr {N}}}_\bot ,\) written \(\mathcal {J} \models {{\mathscr {N}}}_\bot ,\) if \(\bot \notin ({{\mathscr {N}}}_\bot )_{\mathcal {J}}\) (i.e., \(({{\mathscr {N}}}_\bot )_{\mathcal {J}} = \emptyset \)). Moreover, we write \(\mathcal {J} |\!\!\!\approx {{\mathscr {N}}}_\bot \) if \(\bot \notin ({{\mathscr {N}}}_\bot )_{\mathcal {J}}\) or \(\textit{fml}(\mathcal {J}) |\!\!\!\approx \{\bot \}\). A set \({{\mathscr {N}}}_\bot \) is propositionally satisfiable if there exists an interpretation \(\mathcal {J}\) such that \(\mathcal {J} \models {{\mathscr {N}}}_\bot \). In contrast to consequence relations, propositional modelhood \(\models \) interprets the set \({{\mathscr {N}}}_\bot \) conjunctively: \(\mathcal J \models \mathscr {N}_\bot \) is informally understood as \(\mathcal {J} \models \bigwedge {{\mathscr {N}}}_\bot .\)
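Propositional modelhood amounts to a simple subset test, as the following sketch suggests. It reuses the illustrative encoding of A-formulas as pairs `(C, A)` with `A` a `frozenset` of assertions and writes \(\bot \) as the string `"bot"`; the helper names are hypothetical.

```python
BOT = "bot"

def prop_clauses(N):
    """N_bot: the A-formulas of N of the form bot <- A."""
    return {(C, A) for (C, A) in N if C == BOT}

def is_prop_model(J, N):
    """J |= N_bot iff no propositional clause bot <- A of N is enabled in J."""
    return all(not A <= J for (_, A) in prop_clauses(N))

# State after the first refutation in the introductory example:
# bot <- {v0} has been derived, where v0 = [p(x)] and v1 = [q(y,b)].
N = {
    (BOT, frozenset({"v0"})),
    ("p(x)", frozenset({"v0"})),
    ("q(y,b)", frozenset({"v1"})),
}
print(is_prop_model({"v0", "~v1"}, N))
print(is_prop_model({"~v0", "v1"}, N))
```

The first interpretation enables \(\bot \mathbin {\leftarrow }\{\textsf{v}_0\}\) and is therefore rejected, which is why the SAT solver must switch to a model that makes \(\textsf{v}_0\) false.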

Given consequence relations \(\models \) and \(|\!\!\!\approx \), we lift them from \({\mathscr {P}}({\textbf{F}})\) to \({\mathscr {P}}({\textbf {AF}})\): \({{\mathscr {M}}} \models {{\mathscr {N}}}\) if and only if \({{\mathscr {M}}}_{\mathcal {J}} \models \lfloor {{\mathscr {N}}} \rfloor \) for every \(\mathcal {J}\) in which \(\mathscr {N}\) is enabled, and \({{\mathscr {M}}} |\!\!\!\approx {{\mathscr {N}}}\) if and only if \(\textit{fml}(\mathcal {J}) {\cup }{{\mathscr {M}}}_{\mathcal {J}} |\!\!\!\approx \lfloor {{\mathscr {N}}} \rfloor \) for every \(\mathcal {J}\) in which \(\mathscr {N}\) is enabled. The consequence relation \(\models \) is used for the completeness of the splitting prover and only captures what inferences such a prover must perform. In contrast, \(|\!\!\!\approx \) captures a stronger semantics: For example, thanks to \(\textit{fml}(\mathcal {J})\) among the premises for \(|\!\!\!\approx ,\) the A-formula \(\textit{fml}(a) \mathbin {\leftarrow }\{a\}\) is always a \(|\!\!\!\approx \)-tautology. Also note that assuming \(\emptyset \not \models \emptyset \), then \({\models } \subseteq {|\!\!\!\approx }\) on sets that contain exclusively propositional clauses. When needed, we use \(|\!\!\!\approx _{\textbf{F}}\) to denote \(|\!\!\!\approx \) on \({\mathscr {P}}({\textbf{F}})\) and analogously for \(|\!\!\!\approx _{\textbf {AF}}\), as well as \(\models _{\textbf{F}}\) and \(\models _{\textbf {AF}}\).

Lemma 4

The relations \(\models \) and \(|\!\!\!\approx \) on \({\mathscr {P}}({\textbf {AF}})\) are consequence relations.

Proof

We consider only \({|\!\!\!\approx }\); the proof for \(\models \) is analogous. For (D1), we need to show \(\textit{fml}(\mathcal {J}) \cup \{\bot \} |\!\!\!\approx \emptyset \) for every \(\mathcal {J}\) because \(\emptyset \) is always enabled. This follows from (D1) and (D3). For (D2), we need to show \(\textit{fml}(\mathcal {J}) \cup \{C \mathbin {\leftarrow }A\}_{\mathcal {J}} |\!\!\!\approx \lfloor \{C \mathbin {\leftarrow }A\} \rfloor ,\) assuming \(C \mathbin {\leftarrow }A\) is enabled in \(\mathcal {J}.\) Hence it suffices to show \(\textit{fml}(\mathcal {J}) \cup \{C\} |\!\!\!\approx \{C\},\) which follows from (D2) and (D3). For (D3), it suffices to show \(\textit{fml}(\mathcal {J}) \cup \mathscr {M}_{\mathcal {J}}|\!\!\!\approx \lfloor \mathscr {N} \rfloor \) assuming that \(\mathscr {N}\) is enabled in \(\mathcal {J},\) and \(\textit{fml}(\mathcal {J}) \cup \mathscr {M}'_{\mathcal {J}} |\!\!\!\approx \lfloor \mathscr {N}' \rfloor \) for every \(\mathscr {M}' \subseteq \mathscr {M}\) and \(\mathscr {N}' \subseteq \mathscr {N}.\) This follows from (D3) and monotonicity of \(\lfloor \, \rfloor \) and \((\,)_{\mathcal {J}}\). For (D4), we need to show \(\textit{fml}(\mathcal J) \cup (\mathscr {M} \cup \mathscr {M}') _{\mathcal J} |\!\!\!\approx \lfloor \mathscr {N} \cup \mathscr {N}' \rfloor ,\) assuming \(\textit{fml}(\mathcal J) \cup \mathscr {M} _{\mathcal J} |\!\!\!\approx \lfloor \mathscr {N} \rfloor \cup \{C\}\) if \(C \mathbin {\leftarrow }A\) is enabled in \(\mathcal J,\) \(\textit{fml}(\mathcal J) \cup \mathscr {M}' _{\mathcal J} \cup \{C \mathbin {\leftarrow }A\} _{\mathcal J} |\!\!\!\approx \lfloor \mathscr {N}' \rfloor ,\) and \(\mathscr {N} \cup \mathscr {N}'\) is enabled in \(\mathcal J.\) This follows directly from (D4) if \(C \mathbin {\leftarrow }A\) is enabled in \(\mathcal J,\) and from (D3) if \(C \mathbin {\leftarrow }A\) is not enabled.

Finally, we show the compactness of \(|\!\!\!\approx _{\textbf {AF}}\) (D5), using the compactness of propositional logic. First we consider the case where \(\mathscr {N}\) is never enabled. Then the set of assertions in \(\mathscr {N}\), seen as conjunctions of propositional literals, is unsatisfiable. By compactness, there exists a finite subset of these assertions that is also unsatisfiable, i.e., there is a finite subset \(\mathscr {N}'\) of \(\mathscr {N}\) that is also never enabled. Thus for any finite subset \(\mathscr {M}'\) of \(\mathscr {M}\), \(\mathscr {M}'|\!\!\!\approx \mathscr {N}'\) as wanted.

Otherwise, there is at least one \(\mathcal J\) enabling \(\mathscr {N}.\) By abuse of notation, we write \(\mathscr {N}_A\) even if \(A \subseteq {{\textbf {A}}}\) is not an interpretation. For every interpretation \(\mathcal J\) in which \(\mathscr {N}\) is enabled, there exist by compactness of \({|\!\!\!\approx _{\textbf{F}}}\) finite sets \(\mathcal J' \subseteq \mathcal J,\) \(\mathscr {M}^\mathcal J \subseteq \mathscr {M},\) and \(\mathscr {N}^\mathcal J \subseteq \mathscr {N}\) such that \(\textit{fml}(\mathcal J') \cup {\mathscr {M}^{\mathcal J}} _{\mathcal J'} |\!\!\!\approx \lfloor {\mathscr {N}^{\mathcal J}} \rfloor .\) Define \(E = \{\bot \mathbin {\leftarrow }\{\lnot a\} \mid a \in A \text { for some } C \mathbin {\leftarrow }A \in \mathscr {N}\}.\) Note that \(\mathcal J \models E\) if and only if \(\mathscr {N}\) is enabled in \(\mathcal J.\) This observation implies that the sets of propositional clauses E and \(\{ \bot \mathbin {\leftarrow }\mathcal J' \mid \mathcal J\,\text {interpretation where}\,\mathscr {N}\,\text { is enabled} \} \cup E\) are, respectively, propositionally satisfiable and propositionally unsatisfiable. By compactness, there exists a finite unsatisfiable subset \(\{ \bot \mathbin {\leftarrow }\mathcal J_1', \dots , \bot \mathbin {\leftarrow }\mathcal J_n' \} \cup E'\) of the latter set.

Let \(\mathscr {M}' = \bigcup _i \mathscr {M}^{\mathcal J_i}\) and \(\mathscr {N}' = \bigcup _i \mathscr {N}^{\mathcal J_i} \cup \mathscr {N}'',\) where each \(\mathcal J_i\) is an interpretation enabling \(\mathscr {N}\) from which \(\mathcal J_i'\) was obtained, and \(\mathscr {N}''\) is a finite subset of \(\mathscr {N}\) such that all assertions in \(E'\) also occur negated in \(\mathscr {N}''.\) Note that both \(\mathscr {M}'\) and \(\mathscr {N}'\) are finite sets. It now suffices to show \(\mathscr {M}' |\!\!\!\approx \mathscr {N}'.\) Thus let \(\mathcal J\) be an interpretation in which \(\mathscr {N}'\) is enabled. Then \(\mathcal J \models E'\) because all assertions in \(E'\) also appear negated in \(\mathscr {N}'' \subseteq \mathscr {N}'.\) Thus, since \(\{ \bot \mathbin {\leftarrow }\mathcal J_1', \dots , \bot \mathbin {\leftarrow }\mathcal J_n' \} \cup E'\) is unsatisfiable, there must exist an index k such that \(\mathcal J \not \models \bot \mathbin {\leftarrow }\mathcal J_k'\), that is, \(\mathcal J_k' \subseteq \mathcal J\). We have \(\textit{fml}(\mathcal J_k') \cup {\mathscr {M} ^ {\mathcal J_k}} _{\mathcal J_k'} |\!\!\!\approx \lfloor \mathscr {N} ^ {\mathcal J_k} \rfloor \) by construction, and thus \(\smash {\textit{fml}(\mathcal J) \cup \bigcup _i {\mathscr {M} ^ {\mathcal J_i}} _{\mathcal J} |\!\!\!\approx \bigcup _i \lfloor \mathscr {N} ^ {\mathcal J_i} \rfloor \cup \lfloor \mathscr {N}'' \rfloor }\) by (D3). \(\square \)

Given sets \(M, N \subseteq {\mathscr {P}}({\textbf{F}}),\) the expression \(M \models N\) can refer to either the base consequence relation on \({\mathscr {P}}({\textbf{F}})\) or the lifted consequence relation on \({\mathscr {P}}({\textbf {AF}})\) (since \({\textbf{F}}\subseteq {\textbf {AF}}\)). Fortunately, there is no ambiguity. First, let us show a preparatory lemma:

Lemma 5

Let \(\models \) be a consequence relation on \({\textbf{F}},\) and \(M, N \subseteq {\textbf{F}}.\) If \(M' \models N'\) for all \(M' \supseteq M\) and \(N' \supseteq N\) such that \(M' \cup N' = {\textbf{F}}\), then \(M \models N.\)

Proof

By contraposition, we assume that \(M \not \models N\), and we need to find \(M' \supseteq M\) and \(N' \supseteq N\) such that \(M' \cup N' = {\textbf{F}}\) and \(M' \not \models N'.\) We apply Zorn’s lemma to obtain a maximal element \((M', N')\) of the set \(\{ (M'', N'') \mid M'' \supseteq M,\; N'' \supseteq N,\; M'' \not \models N'' \}\)

with the order \((M_1,N_1) \le (M_2,N_2)\) if and only if \(M_1 \subseteq M_2\) and \(N_1 \subseteq N_2\). Compactness of \(\models \), together with (D3), guarantees that every chain in this set has an upper bound; for nonempty chains, this is the pairwise union of all the elements in the chain. It remains to show that \(M' \cup N'= {\textbf{F}}.\) Assume to the contrary that \(C \not \in M' \cup N'\) for some C. Due to the maximality of \((M',N')\), we necessarily have \(M' \cup \{C\} \models N'\) and \(M' \models N' \cup \{C\}.\) Applying the cut rule for \({\models },\) we get \(M' \models N',\) a contradiction. \(\square \)

Lemma 6

The two versions of \(\models \) coincide on \({\textbf{F}}\)-formulas, and similarly for \(|\!\!\!\approx \).

Proof

The first property is obvious. For the second property, the argument is as follows. Let \(M, N \subseteq {\textbf{F}}\). Then we must show that \(M |\!\!\!\approx _{\textbf{F}}N\) if and only if \(M |\!\!\!\approx _{\textbf {AF}}N\). First assume that \(M |\!\!\!\approx _{\textbf{F}}N.\) Then clearly \(\textit{fml}(\mathcal {J}) \cup M |\!\!\!\approx _{\textbf{F}}N\) for any \(\mathcal {J}\) by (D3) and thus \(M |\!\!\!\approx _{\textbf {AF}}N.\) Assuming \(M |\!\!\!\approx _{\textbf {AF}}N,\) we show \(M |\!\!\!\approx _{\textbf{F}}N\) using Lemma 5. It thus suffices to show that \(M' |\!\!\!\approx _{\textbf{F}}N'\) for every \(M' \supseteq M\) and \(N' \supseteq N\) such that \(M' \cup N' = {\textbf{F}}.\) Set \(\mathcal J = \{ \textsf{v} \in {{\textbf {V}}} \mid \textit{fml}(\textsf{v}) \in M' \} \cup \{ \lnot \textsf{v} \mid \textsf{v} \in {{\textbf {V}}},\; \textit{fml}(\textsf{v}) \notin M' \}.\)

Then \(\textit{fml}(\mathcal J) \cup M \cup {{\sim }N} \subseteq M' \cup {{\sim }N'}.\) By the assumption \(M |\!\!\!\approx _{\textbf {AF}}N\) we have \(\textit{fml}(\mathcal {J}) \cup M |\!\!\!\approx _{\textbf{F}}N\) and thus \(M' |\!\!\!\approx _{\textbf{F}}N'\) via (D3). \(\square \)

Aside from resolving ambiguity, Lemma 6 justifies the use of splitting in provers without compromising soundness or completeness: When we prove a completeness theorem that claims that a given prover derives \(\bot \) from any initial \(\models _{\textbf {AF}}\)-unsatisfiable set \(M \subseteq {\textbf {AF}}\), Lemma 6 allows us to conclude that it also derives \(\bot \) when starting from any initial \(\models _{\textbf{F}}\)-unsatisfiable set \(M \subseteq {\textbf{F}}\).

Given a formula \(C \in {\textbf{F}}_{\!{{\sim }}},\) let \(\textit{asn}(C)\) denote the set of assertions \(a \in {{\textbf {A}}}\) such that \(\{\textit{fml}(a)\} |\!\!\!\approx \!\!\!|\{C\}.\) Normally, we would make sure that \(\textit{asn}(C)\) is nonempty for every formula C. Given \(a \in \textit{asn}(C),\) observe that if \(a \in \textit{asn}(D),\) then \(\{C\} |\!\!\!\approx \!\!\!|\{D\},\) and if \(\lnot a \in \textit{asn}(D),\) then \(\{C\} |\!\!\!\approx \!\!\!|\{{{\sim }D\}}.\)

Remark 7

Our propositional interpretations are always total. We could also consider partial interpretations—that is, \(\mathcal J \subseteq {{\textbf {A}}}\) such that at most one of \(\textsf{v} \in \mathcal J\) and \(\lnot \textsf{v} \in \mathcal J\) holds for every \(\textsf{v} \in V\). But this is not necessary, because partial interpretations can be simulated by total ones: For every variable \(\textsf{v}\) in the partial interpretation, we can use two variables \(\textsf{v}^+\) and \(\textsf{v}^-\) in the total interpretation and interpret \(\textsf{v}^+\) as true if \(\textsf{v}\) is true and \(\textsf{v}^-\) as true if \(\textsf{v}\) is false. By adding the propositional clause \(\bot \mathbin {\leftarrow }\{\textsf{v}^-, \textsf{v}^+\}\), every total model of the translated A-formulas corresponds to a partial model of the original A-formulas.
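The simulation in Remark 7 can be made concrete with a small Python sketch. The string-based variable names, the dictionary representation, and the helper names `encode`, `decode`, and `total_models` are illustrative assumptions, not part of the framework. The sketch checks that the total interpretations over \(\textsf{v}^+\) and \(\textsf{v}^-\) satisfying \(\bot \mathbin {\leftarrow }\{\textsf{v}^-, \textsf{v}^+\}\) correspond one-to-one to partial interpretations.

```python
from itertools import product

def encode(partial, variables):
    """Map a partial interpretation (dict: variable -> bool, missing = undefined)
    to a total interpretation over the fresh variables v+ and v-."""
    total = {}
    for v in variables:
        total[v + "+"] = partial.get(v) is True
        total[v + "-"] = partial.get(v) is False
    return total

def satisfies_exclusion(total, variables):
    """Check the clause  bot <- {v-, v+}  for every v: v+ and v- are never both true."""
    return all(not (total[v + "+"] and total[v + "-"]) for v in variables)

def decode(total, variables):
    """Read a partial interpretation back from a total one satisfying the exclusion clauses."""
    partial = {}
    for v in variables:
        if total[v + "+"]:
            partial[v] = True
        elif total[v + "-"]:
            partial[v] = False
    return partial

def total_models(variables):
    """Enumerate all total interpretations over v+ and v- that satisfy the exclusion clauses."""
    names = [v + sign for v in variables for sign in "+-"]
    for bits in product([False, True], repeat=len(names)):
        total = dict(zip(names, bits))
        if satisfies_exclusion(total, variables):
            yield total
```

With n variables, the enumeration yields 3^n total models, one per partial interpretation (true, false, or undefined for each variable).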

Example 8

In the original description of AVATAR [28], the connection between first-order clauses and assertions takes the form of a function \([\,]: {\textbf{F}}\rightarrow {{\textbf {A}}}.\) The encoding is such that \([\lnot C] = \lnot [C]\) for every ground unit clause C and \([C] = [D]\) if and only if C is syntactically equal to D up to variable renaming. This can be supported in our framework by letting \(\textit{fml}(\textsf{v}) = C\) for some C such that \([C] = \textsf{v}\), for every propositional variable \(\textsf{v}.\)

A different encoding is used to exploit the theories of an SMT solver [4]. With a notion of \(|\!\!\!\approx \)-entailment that gives a suitable meaning to Skolem symbols, we can go further and have \([\lnot C(\textsf{sk}_{\lnot C(x)})] = \lnot [C(x)].\) Even if the superposition prover considers \(\textsf{sk}_{\lnot C(x)}\) an uninterpreted symbol (according to \(\models \)), the SAT or SMT solver can safely prune the search space by assuming that C(x) and \(\lnot C(\textsf{sk}_{\lnot C(x)})\) are exhaustive (according to \(|\!\!\!\approx \)).

3 Splitting Calculi

Let \({\textbf{F}}\) be a set of base formulas equipped with \(\bot ,\) \(\models ,\) and \({|\!\!\!\approx }.\) The consequence relation \(|\!\!\!\approx \) is assumed to be nontrivial: (D6) \(\emptyset \not |\!\!\!\approx \emptyset .\) Let \({{\textbf {A}}}\) be a set of assertions over \({{\textbf {V}}}\), and let \({\textbf {AF}}\) be the set of A-formulas over \({\textbf{F}}\) and \({{\textbf {A}}}.\) Let \((\textit{FInf}{},\textit{FRed})\) be a base calculus for \({\textbf{F}}\)-formulas, where \(\textit{FRed}\) is a redundancy criterion that additionally satisfies

- (R5) \(\textit{FInf}{}({\textbf{F}}, \textit{FRed}_\text {F}(N)) \subseteq \textit{FRed}_\text {I}(N)\) for every \(N \subseteq {\textbf{F}}\);

- (R6) \(\bot \notin \textit{FRed}_\text {F}(N)\) for every \(N \subseteq {\textbf{F}}\);

- (R7) \(C \in \textit{FRed}_\text {F}(\{\bot \})\) for every \(C \not = \bot .\)

These requirements can easily be met by a well-designed redundancy criterion. Requirement (R5) is called reducedness by Waldmann et al. [30, Sect. 2.3]. Requirement (R6) must hold of any complete calculus (Lemma 2), and (R7) can be made without loss of generality (Remark 3). Bachmair and Ganzinger’s redundancy criterion for superposition [1, Sect. 4.3] meets (R1)–(R7).

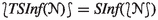

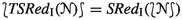

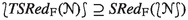

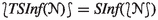

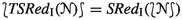

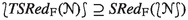

From a base calculus, we will define an induced splitting calculus \((\textit{SInf}{},\textit{SRed})\). We will show that the splitting calculus is sound w.r.t. \(|\!\!\!\approx \) and that it is statically and dynamically complete w.r.t. \({\models }.\) Furthermore, we will show two stronger results that take into account the switching of propositional models that characterizes most splitting architectures: strong static completeness and strong dynamic completeness.

3.1 The Inference Rules

We start with the mandatory inference rules.

Definition 9

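The splitting inference system \(\textit{SInf}{}\) consists of all instances of the following two rules:

\(\dfrac{(C_i \mathbin {\leftarrow }A_i)_{i=1}^n}{D \mathbin {\leftarrow }A_1 \cup \cdots \cup A_n}\;\text {Base} \qquad \dfrac{(\bot \mathbin {\leftarrow }A_i)_{i=1}^n}{\bot }\;\text {Unsat}\)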

For Base, the side condition is \((C_n,\dotsc ,C_1,D) \in \textit{FInf}{}.\) For Unsat, the side condition is that \(\{\bot \mathbin {\leftarrow }A_1,\dotsc ,\bot \mathbin {\leftarrow }A_n\}\) is propositionally unsatisfiable.

In addition, the following optional inference rules can be used if desired; the completeness proof does not depend on their application. Rules identified by double bars, such as Split, are simplifications; they replace their premises with their conclusions in the current A-formula set. The premises’ removal is justified by \(\textit{SRed}_\text {F},\) defined in Sect. 3.2.

In the Split rule, we require that \(C \ne \bot \) is splittable into \(C_1,\dotsc ,C_n\) and that \(a_i \in \textit{asn}(C_i)\) for each i. A formula C is splittable into formulas \(C_1,\dotsc ,C_n\) if \(n \ge 2\), \(\{C\} |\!\!\!\approx \{C_1,\dotsc ,C_n\}\), and \(C \in \textit{FRed}_\text {F}(\{C_i\})\) for each i.

Split performs an n-way case analysis on C. Each case \(C_i\) is approximated by an assertion \(a_i.\) The first conclusion expresses that the cases are exhaustive. The n other conclusions assume \(C_i\) if its approximation \(a_i\) is true.

In a clausal prover, typically \(C = C_1 \vee \cdots \vee C_n,\) where the subclauses \(C_i\) have mutually disjoint sets of variables and form a maximal split. For example, the clause \(\textsf{p}(x) \vee \textsf{q}(x)\) is not splittable because of the shared variable x, whereas \(\textsf{p}(x) \vee \textsf{q}(y)\) can be split into \(\{\textsf{p}(x){,}\;\textsf{q}(y)\}\).
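The maximal split of a clause into variable-disjoint subclauses amounts to computing connected components of literals that share variables. The following Python sketch illustrates this under simplifying assumptions: each literal is given as a pair of its text and its set of variables, and the function names `split_clause` and `is_splittable` are hypothetical. It is not taken from any particular prover.

```python
def split_clause(literals):
    """Group literals into maximal variable-disjoint components.

    literals: list of (text, set_of_variable_names) pairs.
    Returns a list of components, each a list of literal texts.
    """
    parent = list(range(len(literals)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    owner = {}  # variable -> index of the first literal in which it occurs
    for i, (_, variables) in enumerate(literals):
        for x in variables:
            if x in owner:
                parent[find(i)] = find(owner[x])  # merge the two components
            else:
                owner[x] = i

    groups = {}
    for i, (text, _) in enumerate(literals):
        groups.setdefault(find(i), []).append(text)
    return list(groups.values())

def is_splittable(literals):
    """A clause is splittable in this sense if it has at least two components."""
    return len(split_clause(literals)) >= 2
```

On the two clauses from the text, `p(x) ∨ q(x)` yields a single component and `p(x) ∨ q(y)` yields two. Ground literals have no variables, so each forms its own component.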

For Collect, we require \(C \not = \bot \) and \(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n |\!\!\!\approx \{\bot \mathbin {\leftarrow }A\}.\) For Trim, we require \(C \not = \bot \) and \(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n \cup \{\bot \mathbin {\leftarrow }A\} |\!\!\!\approx \{\bot \mathbin {\leftarrow }B\}.\)

Collect removes A-formulas whose assertions cannot be satisfied by any model of the propositional clauses—a form of garbage collection. Similarly, Trim removes assertions that are entailed by existing propositional clauses.

For StrongUnsat, we require \(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n |\!\!\!\approx \{\bot \}.\) For Approx, we require \(a \in \textit{asn}(C).\) For Tauto, we require \({|\!\!\!\approx }\; \{C \mathbin {\leftarrow }A\}.\)

StrongUnsat is a variant of Unsat that uses \(|\!\!\!\approx \) instead of \({\models }.\) A splitting prover may choose to apply StrongUnsat if desired, but only Unsat is necessary for completeness. In practice, \(|\!\!\!\approx \)-entailment can be much more expensive to decide, or even be undecidable. A splitting prover could invoke an SMT solver [4] (\(|\!\!\!\approx \)) with a time limit, falling back on a SAT solver (\(\models \)) if necessary.

Approx can be used to make any derived A-formula visible to \(|\!\!\!\approx \). It is similar to a one-way split. Tauto, which asserts a \(|\!\!\!\approx \)-tautology, allows communication in the other direction, from the SMT or SAT solver to the calculus.
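For reference, the shapes of the optional rules can be written out from the side conditions above and from the premises and conclusions named in the proofs of Theorems 14 and 19. The LaTeX sketch below is a reconstruction under that assumption rather than a verbatim copy of the original rule display. The arrow \(\Longrightarrow \) stands in for the double bar of a simplification rule, and \(\vdash \) for an ordinary inference.

```latex
% Reconstructed rule shapes (assumption: derived from the side conditions
% and from the proofs of Theorems 14 and 19, not copied from a rule figure).
\begin{align*}
\text{Split:}\quad
  & C \leftarrow A
  \;\Longrightarrow\;
  \bot \leftarrow \{\lnot a_1, \dots, \lnot a_n\} \cup A,\quad
  C_i \leftarrow \{a_i\}\ \ (1 \le i \le n) \\
\text{Collect:}\quad
  & (\bot \leftarrow A_i)_{i=1}^n,\ C \leftarrow A
  \;\Longrightarrow\;
  (\bot \leftarrow A_i)_{i=1}^n \\
\text{Trim:}\quad
  & (\bot \leftarrow A_i)_{i=1}^n,\ C \leftarrow A \cup B
  \;\Longrightarrow\;
  (\bot \leftarrow A_i)_{i=1}^n,\ C \leftarrow B \\
\text{StrongUnsat:}\quad
  & (\bot \leftarrow A_i)_{i=1}^n
  \;\vdash\; \bot \\
\text{Approx:}\quad
  & C \leftarrow A
  \;\vdash\; \bot \leftarrow \{\lnot a\} \cup A \\
\text{Tauto:}\quad
  & {}\;\vdash\; C \leftarrow A
\end{align*}
```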

Example 10

Suppose the base calculus is first-order resolution [2] and the initial clauses are \(\lnot \textsf{p}(\textsf{a}),\) \(\lnot \textsf{q}(z, z),\) and \(\textsf{p}(x)\vee \textsf{q}(y, \textsf{b}),\) as in Sect. 1. Split replaces the last clause by \(\bot \mathbin {\leftarrow }\{\lnot \textsf{v}_0, \lnot \textsf{v}_1\},\) \(\textsf{p}(x)\mathbin {\leftarrow }\{\textsf{v}_0\},\) and \(\textsf{q}(y, \textsf{b})\mathbin {\leftarrow }\{\textsf{v}_1\}.\) Two Base inferences then generate \(\bot \mathbin {\leftarrow }\{\textsf{v}_0\}\) and \(\bot \mathbin {\leftarrow }\{\textsf{v}_1\}.\) Finally, Unsat generates \(\bot .\)
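The final Unsat step of Example 10 can be checked by brute force. In the Python sketch below, a propositional clause \(\bot \mathbin {\leftarrow }A\) is represented by the set A of assertion strings, with a `~` prefix marking negation. This encoding and the function name `is_unsat` are assumptions made for illustration. A real prover would call a SAT solver instead of enumerating interpretations.

```python
from itertools import product

def is_unsat(clauses):
    """Decide whether the clauses  bot <- A  (each given as a set A of assertions)
    are propositionally unsatisfiable, by enumerating all total interpretations."""
    variables = sorted({a.lstrip("~") for A in clauses for a in A})
    for bits in product([True, False], repeat=len(variables)):
        # A total interpretation J contains v or ~v for every variable v.
        J = {v if b else "~" + v for v, b in zip(variables, bits)}
        # J falsifies  bot <- A  exactly when A is a subset of J.
        if not any(set(A) <= J for A in clauses):
            return False  # J is a model of all clauses
    return True

# The three propositional clauses derived in Example 10.
example_10 = [{"~v0", "~v1"}, {"v0"}, {"v1"}]
```

Dropping either of the two clauses produced by Base leaves a satisfiable set, so both branches must be closed before Unsat applies.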

Example 11

Consider a splitting calculus obeying the AVATAR conventions of Example 8. When splitting on \(C(x) \vee D(y),\) after closing the C(x) case, we can assume that C(x) does not hold when considering the D(y) case. This can be achieved by adding the A-clause \(\lnot C(\textsf{sk}_{\lnot C(x)}) \mathbin {\leftarrow }\{\lnot [C(x)]\}\) using Tauto. If we use an SMT solver that is strong enough to determine that \(\lnot C(\textsf{sk}_{\lnot C(x)})\) and D(y) are inconsistent, we can then apply StrongUnsat immediately, skipping the D(y) branch altogether. This would be the case if we took \(C(x):= \textsf{f}(x) \mathbin {>} 0\) and \(D(y):= \textsf{f}(y) \mathbin {>} 3\) with a solver that supports linear arithmetic and quantifiers. We are not aware of any prover that implements this idea, although a similar idea is described for ground C(x) in the context of labeled splitting [15, Sect. 2].

Example 12

Consider a splitting calculus whose propositional solver is an SMT solver supporting linear arithmetic. Suppose that we are given the inconsistent clause set \(\{\textsf{c} > 0{,}\; \textsf{c} < 0\}\). Two applications of Approx make these clauses visible to the SMT solver, as the propositional clause set \(\{\bot \mathbin {\leftarrow }\lnot (\textsf{c} > 0){,}\; \bot \mathbin {\leftarrow }\lnot (\textsf{c} < 0)\}\). Then the SMT solver, modeled by StrongUnsat, detects the unsatisfiability.

The splitting inference system commutes nicely with the enabled projection:

Lemma 13

\((\textit{SInf}{}(\mathscr {N}))_{\mathcal J} = \textit{FInf}{}(\mathscr {N} _{\mathcal J})\) if \(\bot \not \in \mathscr {N} _{\mathcal J}.\)

Proof

The condition \(\bot \not \in \mathscr {N} _{\mathcal J}\) rules out the Unsat inferences. It remains to show that the enabled projection of a Base inference is an \(\textit{FInf}{}\)-inference from enabled premises, and vice versa. \(\square \)

Theorem 14

(Soundness) The rules Unsat, Split, Collect, Trim, StrongUnsat, Approx, and Tauto are sound w.r.t. \({|\!\!\!\approx }.\) Moreover, if every rule in \(\textit{FInf}{}\) is sound w.r.t. \(|\!\!\!\approx \) (on \({\mathscr {P}}({\textbf{F}})\)), then the rule Base is sound w.r.t. \(|\!\!\!\approx \) (on \({\mathscr {P}}({\textbf {AF}})\)).

Proof

Cases Unsat, StrongUnsat, Tauto: Trivial.

Case Split: For the left conclusion, by definition of \(|\!\!\!\approx ,\) it suffices to show \(\textit{fml}(\mathcal {J}) {\cup }\{C\} |\!\!\!\approx \{\bot \}\) for every \(\mathcal {J} \supseteq A {\cup }\{\lnot a_1, \dotsc , \lnot a_n\}.\) By the side condition \(\{C\} |\!\!\!\approx \{C_1,\dotsc ,C_n\},\) it suffices in turn to show \(\textit{fml}(\mathcal {J}) {\cup }\{C_i\} |\!\!\!\approx \{\bot \}\) for every i. Notice that \({{\sim }C_i} \in \textit{fml}(\mathcal {J}).\) The entailment amounts to \(\bigl (\textit{fml}(\mathcal {J}) {\setminus } \{{{\sim }C_i}\}\bigr ) {\cup }\{C_i\} |\!\!\!\approx \{C_i\},\) which follows from (D2) and (D3).

For the right conclusions, we must show \(\textit{fml}(\mathcal {J}) {\cup }\lfloor \{C \mathbin {\leftarrow }A\}_{\mathcal {J}} \rfloor |\!\!\!\approx \{C_i\}\) for every \(\mathcal {J} \supseteq \{a_i\}.\) Notice that \(C_i \in \textit{fml}(\mathcal {J}).\) The desired result follows from (D2) and (D3).

Case Collect: We must show \(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n |\!\!\!\approx \{C \mathbin {\leftarrow }A\}.\) This follows from the stronger side condition \(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n |\!\!\!\approx \{\bot \mathbin {\leftarrow }A\}.\)

Case Trim: Only the right conclusion is nontrivial. Let \({{\mathscr {N}}} = \{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n.\) It suffices to show \({{\mathscr {N}}}_{\mathcal {J}} {\cup }\{C \mathbin {\leftarrow }A\}_{\mathcal {J}} |\!\!\!\approx \{C\}\) for every \(\mathcal {J} \supseteq B.\) Assume \(\mathcal {J} |\!\!\!\approx {{\mathscr {N}}}_{\mathcal {J}} {\cup }\{C \mathbin {\leftarrow }A\}_{\mathcal {J}}.\) By the side condition \({{\mathscr {N}}} \cup \{\bot \mathbin {\leftarrow }A\} |\!\!\!\approx \{\bot \mathbin {\leftarrow }B\},\) we get \({{\mathscr {N}}}_{\mathcal {J}} \cup \{\bot \mathbin {\leftarrow }A\}_{\mathcal {J}} |\!\!\!\approx \{\bot \}\), meaning that either \({{\mathscr {N}}}_{\mathcal {J}} |\!\!\!\approx \{\bot \}\) or \(\mathcal J \supseteq A\). The first case is trivial. In the other case, \(\mathcal {J} |\!\!\!\approx {{\mathscr {N}}}_{\mathcal {J}} {\cup }\{C\}\) and thus \(\mathcal {J} |\!\!\!\approx \{C\},\) as required.

Case Approx: The proof is as for the left conclusion of Split.

Case Base: To show \(\{C_i \mathbin {\leftarrow }A_i\}_{i=1}^n |\!\!\!\approx \{D \mathbin {\leftarrow }A_1 \cup \cdots \cup A_n\},\) by the definition of \(|\!\!\!\approx \) on \({\mathscr {P}}({\textbf {AF}})\), it suffices to show \(\{C_1,\dotsc ,C_n\} |\!\!\!\approx \{D\}.\) This follows from the soundness of the inferences in \(\textit{FInf}{}.\) \(\square \)

3.2 The Redundancy Criterion

Next, we lift the base redundancy criterion.

Definition 15

The splitting redundancy criterion \(\textit{SRed}= (\textit{SRed}_\text {I}, \textit{SRed}_\text {F})\) is specified as follows. An A-formula \(C \mathbin {\leftarrow }A \in {\textbf {AF}}\) is redundant w.r.t. \({{\mathscr {N}}}\), written \(C \mathbin {\leftarrow }A \in \textit{SRed}_\text {F}({{\mathscr {N}}}),\) if either of these conditions is met:

- (1) \(C \in \textit{FRed}_\text {F}({{\mathscr {N}}}_{\mathcal {J}})\) for every propositional interpretation \(\mathcal {J} \supseteq A\); or

- (2) there exists an A-formula \(C \mathbin {\leftarrow }B \in {{\mathscr {N}}}\) such that \(B \subset A.\)

An inference \(\iota \in \textit{SInf}{}\) is redundant w.r.t. \({{\mathscr {N}}}\), written \(\iota \in \textit{SRed}_\text {I}({{\mathscr {N}}}),\) if either of these conditions is met:

- (3) \(\iota \) is a Base inference and \(\{\iota \}_{\mathcal {J}} \subseteq \textit{FRed}_\text {I}({{{\mathscr {N}}}_{\mathcal {J}}})\) for every \(\mathcal {J}\); or

- (4) \(\iota \) is an Unsat inference and \(\bot \in {{\mathscr {N}}}.\)

Condition (1) lifts \(\textit{FRed}_\text {F}\) to A-formulas. It is used both as such and to justify the Split and Collect rules, as we will see below. Condition (2) is used to justify Trim. We will use \(\textit{SRed}_\text {F}\) to justify global A-formula deletion, but also \(\textit{FRed}_\text {F}\) for local A-formula deletion in the locking prover. Note that \(\textit{SRed}\) is not reduced. Inference redundancy partly commutes with the enabled projection:

Lemma 16

\((\textit{SRed}_\text {I}(\mathscr {N})) _{\mathcal J} \subseteq \textit{FRed}_\text {I}(\mathscr {N} _{\mathcal J})\) if \(\bot \not \in \mathscr {N}.\)

Proof

Since \(\bot \not \in \mathscr {N},\) condition (4) of the definition of \(\textit{SRed}_\text {I}\) cannot apply. The inclusion then follows directly from condition (3) applied to the interpretation \(\mathcal J.\) \(\square \)

Lemma 17

\(\bot \notin \textit{SRed}_\text {F}({{\mathscr {N}}})\) for every \({{\mathscr {N}}} \subseteq {\textbf {AF}}.\)

Proof

By Lemma 2, condition (1) of the definition of \(\textit{SRed}_\text {F}\) cannot apply. Nor can condition (2). \(\square \)

Lemma 18

\(\textit{SRed}\) is a redundancy criterion.

Proof

We will first show that the restriction \(\textit{ARed}\) of \(\textit{SRed}\) to Base inferences is a redundancy criterion. Then we will consider Unsat inferences.

We start by showing that \(\textit{ARed}\) is a special case of the redundancy criterion \(\textit{FRed}^{\cap {{\mathscr {G}}},\sqsupset }\) of Waldmann et al. [29, Sect. 3]—the intersection of lifted redundancy criteria with tiebreaker orders. Then we can simply invoke Theorem 37 and Lemma 19 from their technical report [30].

To strengthen the redundancy criterion, we define a tiebreaker order \(\sqsupset \) such that \(C \mathbin {\leftarrow }A \sqsupset D \mathbin {\leftarrow }B\) if and only if \(C = D\) and \(A \subset B.\) In this way, \(C \mathbin {\leftarrow }B\) is redundant w.r.t. \(C \mathbin {\leftarrow }A\) if \(A \subset B\), even though the base clause is the same. The only requirement on \(\sqsupset \) is that it must be well founded, which is the case since the assertion sets of A-formulas are finite. We also define a family of grounding functions \({{\mathscr {G}}}_{\mathcal {J}}\) indexed by a propositional model \(\mathcal {J}.\) Here, “grounding” will mean enabled projection. For A-formulas \({{\mathscr {C}}},\) we set \({{\mathscr {G}}}_{\mathcal {J}}({{\mathscr {C}}}) = {\{{{\mathscr {C}}}\}_{\mathcal {J}}}.\) For inferences \(\iota ,\) we set \({{\mathscr {G}}}_{\mathcal {J}}(\iota ) = {\{\iota \}_{\mathcal {J}}}.\)

We must show that \({{\mathscr {G}}}_{\mathcal {J}}\) satisfies the following characteristic properties of a grounding function: (G1) \({{\mathscr {G}}}_{\mathcal {J}}(\bot ) = \{\bot \}\); (G2) for every \({{\mathscr {C}}} \in {\textbf {AF}},\) if \(\bot \in {{\mathscr {G}}}_{\mathcal {J}}({{\mathscr {C}}}),\) then \({{\mathscr {C}}} = \bot \); and (G3) for every \(\iota \in \textit{SInf}{},\) \({{\mathscr {G}}}_{\mathcal {J}}(\iota ) \subseteq \textit{FRed}_\text {I}({{\mathscr {G}}}_{\mathcal {J}}(\textit{concl}(\iota ))).\)

Condition (G1) obviously holds, and (G3) holds by property (R4) of \(\textit{FRed}.\) However, (G2) does not hold, a counterexample being \(\bot \mathbin {\leftarrow }\{a\}.\) On closer inspection, Waldmann et al. use (G2) only to prove static completeness (Theorems 27 and 45 in their technical report) but not to establish that \(\textit{FRed}^{\cap {{\mathscr {G}}},\sqsupset }\) is a redundancy criterion, so we can proceed. It is a routine exercise to check that \(\textit{ARed}\) coincides with \(\textit{FRed}^{\cap {{\mathscr {G}}},\sqsupset } = (\textit{FRed}_\text {I}^{\cap {{\mathscr {G}}}},\textit{FRed}_\text {F}^{\cap {{\mathscr {G}}},\sqsupset }),\) which is defined as follows:

1. \(\iota \in \textit{FRed}_\text {I}^{\cap {{\mathscr {G}}}}({{\mathscr {N}}})\) if and only if for every propositional interpretation \(\mathcal {J},\) we have \({{\mathscr {G}}}_{\mathcal {J}}(\iota ) \subseteq \textit{FRed}_\text {I}({{\mathscr {G}}}_{\mathcal {J}}({{\mathscr {N}}}))\);

2. \({{\mathscr {C}}} \in \textit{FRed}_\text {F}^{\cap {{\mathscr {G}}},\sqsupset }({{\mathscr {N}}})\) if and only if for every propositional interpretation \(\mathcal {J}\) and every \({{\mathscr {D}}} \in {{\mathscr {G}}}_{\mathcal {J}}({{\mathscr {C}}}),\) either \({{\mathscr {D}}} \mathbin {\in } \textit{FRed}_\text {F}({{\mathscr {G}}}_{\mathcal {J}}({{\mathscr {N}}}))\) or there exists \({{\mathscr {C}}}' \in {{\mathscr {N}}}\) such that \({{\mathscr {C}}}' \sqsubset {{\mathscr {C}}}\) and \({{\mathscr {D}}} \in {{\mathscr {G}}}_{\mathcal {J}}({{\mathscr {C}}}').\)

We also need to check that the consequence relation \(\models \) used in \(\textit{SRed}\) coincides with the consequence relation \(\models ^{\cap }_{\mathscr {G}}\), which is defined as \(\mathscr {M} \models ^{\cap }_{\mathscr {G}} \{\mathscr {C}\}\) if and only if for every \(\mathcal J\) and \(D \in \mathscr {G}_\mathcal J(\{\mathscr {C}\})\), we have \(\mathscr {G}_\mathcal J(\mathscr {M}) \models \{ D \}\). After expanding \(\mathscr {G}_\mathcal J\), this is exactly the definition we used for lifting \(\models \) to \({\textbf {AF}}\).

To extend the above result to \(\textit{SRed},\) we must show the second half of conditions (R2) and (R3) as well as (R4) for Unsat inferences.

(R2) Given an Unsat inference \(\iota ,\) we must show that if \({{\mathscr {M}}} \subseteq {{\mathscr {N}}}\) and \(\iota \in \textit{SRed}_\text {I}({{\mathscr {M}}}),\) then \(\iota \in \textit{SRed}_\text {I}({{\mathscr {N}}}).\) This holds because if \(\bot \in {{\mathscr {M}}},\) then \(\bot \in {{\mathscr {N}}}.\)

(R3) Given an Unsat inference \(\iota ,\) we must show that if \({{\mathscr {M}}} \subseteq \textit{SRed}_\text {F}({{\mathscr {N}}})\) and \(\iota \in \textit{SRed}_\text {I}({{\mathscr {N}}}),\) then \(\iota \in \textit{SRed}_\text {I}({{\mathscr {N}}} {\setminus } {{\mathscr {M}}}).\) This amounts to proving that if \(\bot \in {{\mathscr {N}}},\) then \(\bot \in {{\mathscr {N}}} {\setminus } {{\mathscr {M}}},\) which follows from Lemma 17.

(R4) Given an Unsat inference \(\iota ,\) we must show that if \(\bot \in {{\mathscr {N}}},\) then \(\iota \in \textit{SRed}_\text {I}({{\mathscr {N}}}).\) This follows from the definition of \(\textit{SRed}_\text {I}.\) \(\square \)

\(\textit{SRed}\) is highly versatile. It can justify the deletion of A-formulas that are propositionally tautological, such as \(C \mathbin {\leftarrow }\{\textsf{v}, \lnot \textsf{v}\}\). It lifts the base redundancy criterion gracefully: If \(D \in \textit{FRed}_\text {F}(\{C_i\}_{i=1}^n),\) then \(D \mathbin {\leftarrow }A_1 \cup \cdots \cup A_n \in \textit{SRed}_\text {F}(\{C_i \mathbin {\leftarrow }A_i\}_{i=1}^n)\). It also allows other simplifications, as long as the assertions on A-formulas used to simplify a given \(C \mathbin {\leftarrow }A\) are contained in A. If the base criterion \(\textit{FRed}_\text {F}\) supports subsumption (e.g., following the lines of Waldmann et al. [29]), this also extends to A-formulas: \(D \mathbin {\leftarrow }B \in \textit{SRed}_\text {F}(\{C \mathbin {\leftarrow }A\})\) if D is strictly subsumed by C and \(B \supseteq A\), or if \(C = D\) and \(B \supset A.\) Finally, it is strong enough to justify case splits and the other simplification rules presented in Sect. 3.1.
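Two of the claims above can be illustrated with a small Python sketch. An A-formula \(C \mathbin {\leftarrow }A\) is modeled as a pair of a formula string and a frozenset of assertion strings, with `~` marking negation. The representation and the function names are assumptions made for illustration, and formulas are compared syntactically. The sketch covers the enabled projection, the observation that an assertion set containing \(\textsf{v}\) and \(\lnot \textsf{v}\) is never enabled (so condition (1) of Definition 15 holds vacuously), and condition (2).

```python
def neg(a):
    """Negate an assertion given as a string; '~' marks negation."""
    return a[1:] if a.startswith("~") else "~" + a

def enabled_projection(afmls, J):
    """N_J: the base formulas C of all A-formulas (C, A) in afmls with A a subset of J."""
    return {C for (C, A) in afmls if A <= J}

def never_enabled(A):
    """True if A contains an assertion and its negation, so no total J includes A."""
    return any(neg(a) in A for a in A)

def redundant_by_condition_2(afml, afmls):
    """Condition (2): some (C, B) in afmls has the same formula C and B strictly inside A."""
    C, A = afml
    return any(D == C and B < A for (D, B) in afmls)

# A small A-formula set: p(x) holds unconditionally, q holds under assertion v0.
N = {("p(x)", frozenset()), ("q", frozenset({"v0"}))}
```

Because the comparison is syntactic, `("p(y)", frozenset({"v0"}))` is not recognized as redundant w.r.t. `N`, whereas `("p(x)", frozenset({"v0"}))` is.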

Theorem 19

(Simplification) For every Split, Collect, or Trim inference, the conclusions collectively make the premises redundant according to \(\textit{SRed}_\text {F}.\)

Proof

Case Split: We must show \(C \mathbin {\leftarrow }A \in \textit{SRed}_\text {F}( \{\bot \mathbin {\leftarrow }\{\lnot a_1, \dotsc , \lnot a_n\} \cup A\} {\cup }\{C_i \mathbin {\leftarrow }\{a_i\}\}_{i=1}^n ).\) By condition (1) of the definition of \(\textit{SRed}_\text {F},\) it suffices to show \(C \in \textit{FRed}_\text {F}( \{\bot \mathbin {\leftarrow }\{\lnot a_1, \dotsc , \lnot a_n\} \cup A\}_{\mathcal {J}}{\cup }(\{C_i \mathbin {\leftarrow }\{a_i\}\}_{i=1}^n)_{\mathcal {J}} )\) for every \(\mathcal {J} \supseteq A.\) If \(a_i \in \mathcal {J}\) for some i, this follows from Split’s side condition \(C \in \textit{FRed}_\text {F}(\{C_i\}).\) Otherwise, this follows from (R7), the requirement that \(C \in \textit{FRed}_\text {F}(\{\bot \})\), since \(C \not = \bot .\)

Case Collect: We must show \(C \mathbin {\leftarrow }A \in \textit{SRed}_\text {F}( \{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n ).\) By condition (1) of the definition of \(\textit{SRed}_\text {F},\) it suffices to show \(C \in \textit{FRed}_\text {F}( {(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n)_{\mathcal {J}}} )\) for every \(\mathcal {J} \supseteq A.\) If \(A_i \subseteq \mathcal {J}\) for some i, this follows from Collect’s side condition that \(C \not = \bot \) and (R7). Otherwise, from the side condition \(\{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n |\!\!\!\approx \{\bot \mathbin {\leftarrow }A\},\) we obtain \(\emptyset |\!\!\!\approx \{\bot \},\) which contradicts (D6).

Case Trim: We must show \(C \mathbin {\leftarrow }A \cup B \in \textit{SRed}_\text {F}( \{\bot \mathbin {\leftarrow }A_i\}_{i=1}^n \cup \{C \mathbin {\leftarrow }B\} ).\) This follows directly from condition (2) of the definition of \(\textit{SRed}_\text {F}.\) \(\square \)

Annoyingly, the redundancy criterion \(\textit{SRed}\) does not mesh well with \(\alpha \)-equivalence. We would expect the A-formula \(\textsf{p}(x) \mathbin {\leftarrow }\{a\}\) to be subsumed by \(\textsf{p}(y) \mathbin {\leftarrow }\emptyset ,\) where x, y are variables, but this is not covered by condition (2) of \(\textit{SRed}_\text {F}\) because \(\textsf{p}(x) \not = \textsf{p}(y).\) The simplest solution is to take \({\textbf{F}}\) to be the quotient of some set of raw formulas by \(\alpha \)-equivalence. An alternative is to generalize the theory so that the projection operator \({\mathscr {G}_{\mathcal {J}}}\) generates entire \(\alpha \)-equivalence classes (e.g., \({\mathscr {G}_{\mathcal {J}} (\{\textsf{p}(x)\})} = \{\textsf{p}(x){,}\> \textsf{p}(y){,}\> \textsf{p}(z){,}\> \cdots \}\)) or groundings (e.g., \({\mathscr {G}_{\mathcal {J}}(\{\textsf{p}(x)\})} = \{\textsf{p}(\textsf{a}){,}\> \textsf{p}(\textsf{f}(\textsf{a})){,}\> \cdots \}\)). Waldmann et al. describe the second approach [29, Sect. 4].

3.3 Standard Saturation

We will now prove that the splitting calculus is statically complete and therefore dynamically complete. Unfortunately, derivations produced by most practical splitting architectures violate the fairness condition associated with dynamic completeness. Nevertheless, the standard completeness notions are useful stepping stones, so we start with them.

Lemma 20

Let \({{\mathscr {N}}} \subseteq {\textbf {AF}}\) be an A-formula set, and let \(\mathcal {J}\) be a propositional interpretation. If \({{\mathscr {N}}}\) is saturated w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I},\) then \({{{\mathscr {N}}}_{\mathcal {J}}}\) is saturated w.r.t. \(\textit{FInf}{}\) and \(\textit{FRed}_\text {I}.\)

Proof

Assuming \(\iota \in \textit{FInf}{}({{{\mathscr {N}}}_{\mathcal {J}}}),\) we must show \(\iota \in \textit{FRed}_\text {I}({{{\mathscr {N}}}_{\mathcal {J}}}).\) The argument follows that of the “folklore” Lemma 26 in the technical report of Waldmann et al. [30]. First note that any inference in \(\textit{FInf}{}\) is lifted, via Base, in \(\textit{SInf}{},\) so that we have \(\iota \in (\textit{SInf}{}({{\mathscr {N}}}))_{\mathcal {J}}.\) This means that there exists a Base inference \(\iota _0 \in \textit{SInf}{}({{\mathscr {N}}})\) such that \({\{\iota _0\}_{\mathcal {J}}} = \{\iota \}.\) By saturation of \({{\mathscr {N}}},\) we have \(\iota _0 \in \textit{SRed}_\text {I}({{\mathscr {N}}}).\) By definition of \(\textit{SRed}_\text {I},\) \({\{\iota _0\}_{\mathcal {J}}} = \{\iota \} \subseteq \textit{FRed}_\text {I}({{{\mathscr {N}}}_{\mathcal {J}}}),\) as required. \(\square \)

Theorem 21

(Static completeness) Assume \((\textit{FInf}{},\textit{FRed})\) is statically complete. Then \((\textit{SInf}{},\textit{SRed})\) is statically complete.

Proof

Suppose \({{\mathscr {N}}} \subseteq {\textbf {AF}},\) \({{\mathscr {N}}} \models \{\bot \},\) and \({{\mathscr {N}}}\) is saturated w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}.\) We will show \(\bot \in {{\mathscr {N}}}.\)

First, we show \(\bot \in {{{\mathscr {N}}}_{\mathcal {J}}}\) for every \(\mathcal {J}.\) From \({{\mathscr {N}}} \models \{\bot \},\) by the definition of \(\models \) on A-formulas, it follows that \({{{\mathscr {N}}}_{\mathcal {J}}} \models \{\bot \}.\) Moreover, by Lemma 20, \({{{\mathscr {N}}}_{\mathcal {J}}}\) is saturated w.r.t. \(\textit{FInf}{}\) and \(\textit{FRed}_\text {I}.\) By static completeness of \((\textit{FInf}{}, \textit{FRed}),\) we get \(\bot \in {{{\mathscr {N}}}_{\mathcal {J}}}.\)

Hence every \(\mathcal {J}\) falsifies some \(\bot \mathbin {\leftarrow }A \in {\mathscr {N}},\) so \(\mathscr {N}_\bot \) is propositionally unsatisfiable. By compactness of propositional logic, there exists a finite subset \(\mathscr {M}\subseteq {\mathscr {N}}_\bot \) that is propositionally unsatisfiable. By saturation w.r.t. Unsat, we obtain \(\bot \in {{\mathscr {N}}},\) as required. \(\square \)

Thanks to the requirements on the redundancy criterion, we obtain dynamic completeness as a corollary:

Corollary 22

(Dynamic completeness) Assume \((\textit{FInf}{},\textit{FRed})\) is statically complete. Then \((\textit{SInf}{},\textit{SRed})\) is dynamically complete.

Proof

This immediately follows from Theorem 21 by Lemma 6 in the technical report of Waldmann et al. [30]. \(\square \)

3.4 Local Saturation

The above completeness result, about \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivations, can be extended to prover designs based on the given clause procedure, such as the Otter, DISCOUNT, and Zipperposition loops, as explained by Waldmann et al. [29, Sect. 4]. But it fails to capture a crucial aspect of most splitting architectures. Since \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivations have no notion of current split branch or propositional model, they place no restrictions on which inferences may be performed when.

To fully capture splitting, we need to start with a weaker notion of saturation. If an A-formula set is consistent, it should suffice to saturate w.r.t. a single propositional model. In other words, if no A-formula \(\bot \mathbin {\leftarrow }A\) such that \(A \subseteq \mathcal {J}\) is derivable for some model \(\mathcal {J} \models {{\mathscr {N}}}_\bot ,\) the prover will never be able to apply the Unsat rule to derive \(\bot .\) It should then be allowed to deliver a verdict of “consistent.” We will call such model-specific saturations local and standard saturations global.

Definition 23

A set \({{\mathscr {N}}} \subseteq {\textbf {AF}}\) is locally saturated w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}\) if either \(\bot \in {{\mathscr {N}}}\) or there exists a propositional model \(\mathcal {J} \models {{\mathscr {N}}}_\bot \) such that \({{{\mathscr {N}}}_{\mathcal {J}}}\) is saturated w.r.t. \(\textit{FInf}{}\) and \(\textit{FRed}_\text {I}.\)
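The two ingredients of Definition 23, the propositional part \({{\mathscr {N}}}_\bot \) and the projection \({{{\mathscr {N}}}_{\mathcal {J}}},\) can be sketched as follows. This is an illustration under assumptions: A-formulas are encoded as pairs of a formula string and a frozenset of assertion literals, an interpretation is a frozenset of literals, and the A-clause set below is hypothetical. The saturation check on the projection is not implemented; it depends on the base calculus.

```python
BOT = "bot"  # stands for the false formula

def project(afs, interp):
    """N_J: base formulas of the A-formulas whose assertions all hold in J."""
    return {formula for (formula, assertions) in afs if assertions <= interp}

def bot_part(afs):
    """N_bot: the propositional clauses, i.e. A-formulas of the form bot <- A."""
    return {(formula, assertions) for (formula, assertions) in afs if formula == BOT}

def models_bot_part(afs, interp):
    """J |= N_bot: no bot <- A in N has every assertion of A true in J."""
    return all(not (assertions <= interp) for (_, assertions) in bot_part(afs))

# Hypothetical A-clause set (not taken from the paper).
afs = {
    ("p(x)", frozenset({"v0"})),
    ("q(y)", frozenset({"v1"})),
    ("~q(a)", frozenset()),
    (BOT, frozenset({"~v0", "~v1"})),
}
j = frozenset({"v0", "~v1"})
```

Here `models_bot_part(afs, j)` holds and `project(afs, j)` is `{"p(x)", "~q(a)"}`. No resolution inference applies between these two clauses, so `j` would serve as a witness for local saturation, even though resolving `q(y)` against `~q(a)` remains possible at the level of the full A-clause set.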

Local saturation works in tandem with strong static completeness:

Theorem 24

(Strong static completeness) Assume \((\textit{FInf}{},\textit{FRed})\) is statically complete. Given a set \({{\mathscr {N}}} \subseteq {\textbf {AF}}\) that is locally saturated w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}\) and such that \({{\mathscr {N}}} \models \{\bot \},\) we have \(\bot \in {{\mathscr {N}}}.\)

Proof

We show \(\bot \in {{\mathscr {N}}}\) by case analysis on the condition by which \({{\mathscr {N}}}\) is locally saturated. The first case is trivial. Otherwise, let \(\mathcal {J} \models {{\mathscr {N}}}_\bot \) be the witness, so that \({{{\mathscr {N}}}_{\mathcal {J}}}\) is saturated w.r.t. \(\textit{FInf}{}\) and \(\textit{FRed}_\text {I}.\) Since \({{\mathscr {N}}} \models \{\bot \},\) we have \({{{\mathscr {N}}}_{\mathcal {J}}} \models \{\bot \}.\) By static completeness of \((\textit{FInf}{}, \textit{FRed}),\) we get \(\bot \in {{{\mathscr {N}}}_{\mathcal {J}}},\) contradicting \(\mathcal {J} \models {{\mathscr {N}}}_\bot .\) \(\square \)

Example 25

Consider the following A-clause set expressed using AVATAR conventions:

It is not globally saturated for resolution, because the conclusion \(\bot \mathbin {\leftarrow }\{[\textsf{q}(y)]\}\) of resolving the last two A-clauses is missing, but it is locally saturated with \(\mathcal {J} \supseteq \{[\textsf{p}(x)], \lnot [\textsf{q}(y)]\}\) as the witness in Definition 23.

We also need a notion of local fairness that works in tandem with local saturation.

Definition 26

A sequence \(({{\mathscr {N}}}_i)_i\) of sets of A-formulas is locally fair w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}\) if either \(\bot \in {{\mathscr {N}}}_i\) for some i or there exists a propositional model \(\mathcal {J} \models ({{\mathscr {N}}}_\infty )_\bot \) such that \(\textit{FInf}{}({(\mathscr {N}_\infty )_{\mathcal {J}}}) \subseteq \bigcup _i \textit{FRed}_\text {I}({(\mathscr {N}_i)_{\mathcal {J}}}).\)

Lemma 27

Let \(({{\mathscr {N}}}_i)_i\) be a \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivation that is locally fair w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}.\) Then the limit inferior \({{\mathscr {N}}}_\infty \) is locally saturated w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}.\)

Proof

The proof is by case analysis on the condition by which \(({{\mathscr {N}}}_i)_i\) is locally fair. If \(\bot \in \mathscr {N}_i\) for some i, then \(\bot \in \mathscr {N}_\infty \) by Lemma 17, and \(\mathscr {N}_\infty \) is therefore locally saturated. In the remaining case, let \(\mathcal {J}\) be the witness of local fairness. We have \({\mathscr {N}}_i \subseteq {\mathscr {N}}_\infty \cup \textit{SRed}_\text {F}({\mathscr {N}}_\infty )\) by Lemma 4 in the technical report of Waldmann et al. [30], and we clearly have \({(\textit{SRed}_\text {F}(\mathscr {N}_\infty ))_{\mathcal {J}}} \subseteq \textit{FRed}_\text {F}({(\mathscr {N}_\infty )_{\mathcal {J}}}) \cup {(\mathscr {N}_\infty )_{\mathcal {J}}}.\) Therefore \(\bigcup _i \textit{FRed}_\text {I}({(\mathscr {N}_i)_{\mathcal {J}}}) \subseteq \textit{FRed}_\text {I}({(\mathscr {N}_\infty )_{\mathcal {J}}} \cup \textit{FRed}_\text {F}({(\mathscr {N}_\infty )_{\mathcal {J}}})) = \textit{FRed}_\text {I}({(\mathscr {N}_\infty )_{\mathcal {J}}}).\) Together with local fairness, this yields \(\textit{FInf}{}({(\mathscr {N}_\infty )_{\mathcal {J}}}) \subseteq \textit{FRed}_\text {I}({(\mathscr {N}_\infty )_{\mathcal {J}}}),\) so \({(\mathscr {N}_\infty )_{\mathcal {J}}}\) is saturated and \(\mathcal {J}\) witnesses that \(\mathscr {N}_\infty \) is locally saturated. \(\square \)

Local fairness works in tandem with strong dynamic completeness.

Theorem 28

(Strong dynamic completeness) Assume \((\textit{FInf}{},\textit{FRed})\) is statically complete. Given a \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivation \(({{\mathscr {N}}}_i)_i\) that is locally fair w.r.t. \(\textit{SInf}{}\) and \(\textit{SRed}_\text {I}\) and such that \({{\mathscr {N}}}_0 \models \{\bot \},\) we have \(\bot \in {{\mathscr {N}}}_i\) for some i.

Proof

We connect the dynamic and static points of view along the lines of the proof of Lemma 6 in the technical report of Waldmann et al. [30]. First, we show that the limit inferior is inconsistent: \({{\mathscr {N}}}_\infty \models \{\bot \}.\) We have \(\bigcup _i {{\mathscr {N}}}_i \supseteq {{\mathscr {N}}}_0 \models \{\bot \},\) and by (R1), it follows that \((\bigcup _i {{\mathscr {N}}}_i) {\setminus } \textit{SRed}_\text {F}(\bigcup _i {{\mathscr {N}}}_i) \models \{\bot \}.\) By their Lemma 2, \((\bigcup _i {{\mathscr {N}}}_i) {\setminus } \textit{SRed}_\text {F}(\bigcup _i {{\mathscr {N}}}_i) \subseteq {{\mathscr {N}}}_\infty .\) Hence \({{\mathscr {N}}}_\infty \supseteq (\bigcup _i {{\mathscr {N}}}_i) {\setminus } \textit{SRed}_\text {F}(\bigcup _i {{\mathscr {N}}}_i) \models \{\bot \}.\) By Lemma 27, \({{\mathscr {N}}}_\infty \) is locally saturated, so by Theorem 24, \(\bot \in {{\mathscr {N}}}_\infty .\) Thus, \(\bot \in {{\mathscr {N}}}_i\) for some i. \(\square \)

An alternative proof based on dynamic completeness follows:

Proof

We show \(\bot \in {{\mathscr {N}}}_i\) for some i by case analysis on the condition by which \(({{\mathscr {N}}}_i)_i\) is locally fair. The first case is trivial. Otherwise, we have a witness \(\mathcal {J} \models ({{\mathscr {N}}}_\infty )_\bot .\) Since \({{\mathscr {N}}}_0 \models \{\bot \},\) we have \({({{\mathscr {N}}}_0)_{\mathcal {J}}} \models \{\bot \}.\) By the definition of local fairness and Corollary 22, we get \(\bot \in {({{\mathscr {N}}}_i)_{\mathcal {J}}}\) for some i. By Lemma 2 and the definition of \(\rhd _{\!\textit{FRed}_\text {F}},\) we obtain \(\bot \in {({{\mathscr {N}}}_\infty )_{\mathcal {J}}},\) contradicting \(\mathcal {J} \models ({{\mathscr {N}}}_\infty )_\bot .\) \(\square \)

In Sects. 4 to 6, we will review three transition systems of increasing complexity, culminating with an idealized specification of AVATAR. They will be linked by a chain of stepwise refinements, like pearls on a string. All derivations using these systems will correspond to \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivations, and their fairness criteria will imply local fairness. Consequently, by Theorem 28, they will all be complete.

4 Model-Guided Provers

The transition system \(\rhd _{\!\textit{SRed}_\text {F}}\) provides a very abstract notion of splitting prover. AVATAR and other splitting architectures maintain a model of the propositional clauses, which represents the split tree’s current branch. We can capture this abstractly by refining \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivations to incorporate a propositional model.

4.1 The Transition Rules

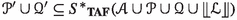

The states are now pairs \((\mathcal {J}, {{\mathscr {N}}}),\) where \(\mathcal {J}\) is a propositional interpretation and \({{\mathscr {N}}} \subseteq {\textbf {AF}}\). Initial states have the form \((\mathcal J, N),\) where \(N \subseteq {\textbf{F}}.\) The model-guided prover \({{\textsf{M}}}{{\textsf{G}}}\) is defined by the following transition rules:

The Derive rule can add new A-formulas (\({{\mathscr {M}}}'\)) and delete redundant A-formulas (\({{\mathscr {M}}}\)). In practice, Derive will perform only sound or consistency-preserving inferences, but we impose no such restriction. If soundness of a prover is desired, it can be derived easily from the soundness of the individual inferences. Similarly, \({{\mathscr {M}}}\) and \({{\mathscr {M}}}'\) will usually be enabled in \(\mathcal J\), but we do not require this.

The interpretation \(\mathcal {J}\) should be a model of \({{\mathscr {N}}}_\bot \) most of the time; when it is not, Switch can be used to switch interpretation or StrongUnsat to finish the refutation. Although the condition \(\mathcal {J}_i \models ({{\mathscr {N}}}_i)_\bot \) might be violated for some i, to make progress we must periodically check it and apply Switch as needed. Much of the work that is performed while the condition is violated will likely be wasted. To avoid this waste, Vampire invokes the SAT solver whenever it selects a clause as part of the given clause procedure.

Transitions can be combined to form \(\Longrightarrow _{{{\textsf{M}}}{{\textsf{G}}}}\)-derivations (pronounced “arrow-\({{\textsf{M}}}{{\textsf{G}}}\)-derivations”).

Lemma 29

If \((\mathcal {J}, {{\mathscr {N}}}) \Longrightarrow _{{{\textsf{M}}}{{\textsf{G}}}}(\mathcal {J}', {{\mathscr {N}}}'),\) then \({{\mathscr {N}}} \rhd _{\!\textit{SRed}_\text {F}}{{\mathscr {N}}}'.\)

Proof

The only rule that deletes A-formulas, Derive, exclusively takes out A-formulas that are redundant w.r.t. the next state, as mandated by \(\rhd _{\!\textit{SRed}_\text {F}}.\) \(\square \)

To develop our intuitions, we will study several examples of \(\Longrightarrow _{{{\textsf{M}}}{{\textsf{G}}}}\)-derivations. In all the examples in this section, the base calculus is first-order resolution, and \(\models \) is entailment for first-order logic with equality.

Example 30

Let us revisit Example 10. Initially, the propositional interpretation is \(\mathcal {J}_0 = \{ \lnot \textsf{v}_0, \lnot \textsf{v}_1 \}.\) After the split, we have the A-clauses \(\lnot \textsf{p}(\textsf{a}),\) \(\lnot \textsf{q}(z, z),\) \(\textsf{p}(x)\mathbin {\leftarrow }\{\textsf{v}_0\},\) \(\textsf{q}(y, \textsf{b})\mathbin {\leftarrow }\{\textsf{v}_1\},\) and \(\bot \mathbin {\leftarrow }\{\lnot \textsf{v}_0, \lnot \textsf{v}_1\}.\) The natural option is to switch interpretation. We take \(\mathcal {J}_1 = \{\textsf{v}_0, \lnot \textsf{v}_1\}.\) We then derive \(\bot \mathbin {\leftarrow }\{\textsf{v}_0\}.\) Since \(\mathcal {J}_1 \not \models \bot \mathbin {\leftarrow }\{\textsf{v}_0\},\) we switch to \(\mathcal {J}_2 = \{\lnot \textsf{v}_0, \textsf{v}_1\},\) where we derive \(\bot \mathbin {\leftarrow }\{\textsf{v}_1\}.\) Finally, we detect that the propositional clauses are unsatisfiable and generate \(\bot \). This corresponds to the transitions below, where arrows are annotated by transition names and light gray boxes identify enabled A-clauses:
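The propositional side of this example can be replayed with a small brute-force sketch. It tracks only the A-clauses of the form \(\bot \mathbin {\leftarrow }A\) and checks, at each step, whether the current interpretation is still a model. The encoding (assertion sets as frozensets of literal strings, with `~` for negation) and the helper names are assumptions made for illustration; a real prover would delegate these checks to a SAT solver.

```python
from itertools import product

VARS = ("v0", "v1")

def satisfies(interp, bot_clauses):
    """J |= N_bot: no assertion set A of a clause bot <- A is contained in J."""
    return all(not (assertions <= interp) for assertions in bot_clauses)

def all_interps():
    """Enumerate every total interpretation over VARS as a set of literals."""
    for bits in product([True, False], repeat=len(VARS)):
        yield frozenset(v if b else "~" + v for v, b in zip(VARS, bits))

def find_model(bot_clauses):
    """Return some model of the propositional clauses, or None if there is none."""
    return next((j for j in all_interps() if satisfies(j, bot_clauses)), None)

j0 = frozenset({"~v0", "~v1"})
j1 = frozenset({"v0", "~v1"})
j2 = frozenset({"~v0", "v1"})

n_bot = [frozenset({"~v0", "~v1"})]       # after the split
trace = [satisfies(j0, n_bot),            # J0 is no longer a model: switch
         satisfies(j1, n_bot)]            # J1 is a model
n_bot.append(frozenset({"v0"}))           # derived: bot <- {v0}
trace += [satisfies(j1, n_bot),           # J1 is no longer a model: switch
          satisfies(j2, n_bot)]           # J2 is a model
n_bot.append(frozenset({"v1"}))           # derived: bot <- {v1}
trace.append(find_model(n_bot))           # no model remains
```

The final `find_model` call returns `None`, which corresponds to the point where the propositional clauses are detected to be unsatisfiable and \(\bot \) is generated.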

4.2 Fairness

We need a fairness criterion for \({{\textsf{M}}}{{\textsf{G}}}\) that implies local fairness of the underlying \(\rhd _{\!\textit{SRed}_\text {F}}\)-derivation. The latter requires a witness \(\mathcal {J}\) but gives us no hint as to where to look for one. This is where basic topology comes into play.

Definition 31

A propositional interpretation \(\mathcal {J}\) is a limit point in a sequence \((\mathcal {J}_i)_i\) if there exists a subsequence \((\mathcal {J}'_i)_i\) of \((\mathcal {J}_i)_i\) such that \(\mathcal {J} = \mathcal {J}'_\infty = \mathcal {J}'^\infty .\)

Intuitively, a limit point is a propositional interpretation that is the limit of a family of interpretations that we revisit infinitely often. We will see that there always exists a limit point. To achieve fairness, we will focus on saturating a limit point.

Example 32

Let \((\mathcal {J}_i)_i\) be the sequence such that \(\mathcal {J}_{2i} \cap {{\textbf {V}}}= \{\textsf{v}_1,\textsf{v}_3,\ldots ,\textsf{v}_{2i-1} \}\) (i.e., \(\textsf{v}_1,\textsf{v}_3,\ldots ,\textsf{v}_{2i-1}\) are true and the other variables are false) and \(\mathcal {J}_{2i+1\!} = (\mathcal {J}_{2i} {\setminus } \{ \lnot \textsf{v}_{2i} \}) \cup \{\textsf{v}_{2i}\}.\) Although it is not in the sequence, the interpretation \(\mathcal {J} \cap {{\textbf {V}}}= \{\textsf{v}_1, \textsf{v}_3, \ldots \}\) is a limit point. The split tree of \((\mathcal {J}_i)_i\) is depicted in Fig. 1. The direct path from the root to a node labeled \(\mathcal {J}_i\) specifies the assertions that are true in \(\mathcal {J}_i.\) The limit point \(\mathcal {J}\) corresponds to the only infinite branch of the tree.
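The convergence behind this example can be observed on a finite prefix of the sequence. The sketch below encodes \(\mathcal {J}_n\) by the set of indices k such that \(\textsf{v}_k\) is true, following the definition above; the function names are illustrative. A finite prefix can only illustrate the limit, not establish it.

```python
def true_vars(n):
    """Indices k such that v_k is true in J_n, as defined in Example 32."""
    i = n // 2
    odd = {2 * j - 1 for j in range(1, i + 1)}     # v_1, v_3, ..., v_{2i-1}
    return (odd | {2 * i}) if n % 2 == 1 else odd  # J_{2i+1} also sets v_{2i}

def value_trace(k, horizon):
    """Truth value of v_k in J_0, J_1, ..., J_{horizon-1}."""
    return [k in true_vars(n) for n in range(horizon)]

def eventual_value(k, horizon=50):
    """Value of v_k at the end of the prefix (assumes horizon exceeds k + 1)."""
    return value_trace(k, horizon)[-1]
```

Each odd-indexed variable becomes true at some point and stays true, whereas each even-indexed variable is true in exactly one interpretation. The eventual values therefore agree with the limit point in which exactly \(\textsf{v}_1, \textsf{v}_3, \ldots \) are true.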

The above example hints at why the proof of \({{\textsf{M}}}{{\textsf{G}}}\)’s dynamic completeness is nontrivial: Some derivations might involve infinitely many split branches, making it difficult for the prover to focus on any single one and saturate it.

Example 33