Abstract

Amid the COVID-19 pandemic, an unprecedented number of higher education institutions adopted test-optional admissions policies. The proliferation of these policies and the criticism of standardized admissions tests as unreliable predictors of applicants’ postsecondary educational promise have prompted the reimagining of evaluative methodologies in college admissions. However, few institutions have designed and implemented new measures of applicants’ potential for success, opting instead to redistribute the weight given to other variables such as high school course grades and high school GPA. We use multiple regression to investigate the predictive validity of a measure of non-cognitive, motivational-developmental dimensions implemented as part of a test-optional admissions policy at a large urban research university in the United States. The measure, composed of four short-answer essay questions, was developed based on the social-cognitive motivational and developmental-constructivist perspectives. Our findings suggest that scores derived from the measure make a statistically significant but small contribution to the prediction of undergraduate GPA and 4-year bachelor’s degree completion. We also find that the measure makes neither a statistically significant nor a practical contribution to the prediction of 5-year graduation.

The current landscape of higher education, characterized by the changing demography of college students and public health concerns amid the COVID-19 pandemic, has prompted institutions to employ a more comprehensive approach to the evaluation of admissions applicants’ credentials, personal characteristics, and past experiences. The proliferation of test-optional admissions policies (FairTest National Center for Fair & Open Testing, 2023; Furuta, 2017) and holistic approaches to the evaluation of college admission applicants necessitates a more comprehensive understanding of applicants’ potential to succeed in postsecondary education. Admissions models vary widely depending on institutions’ mission and philosophical orientation. For example, some institutions hold that any student is entitled to a college education whereas others hold that college access is a reward for prior academic success (Perfetto et al., 1999). Regardless of an institution’s philosophical basis for admissions decision-making and the corresponding criteria it uses to determine applicants’ eligibility for admission, most admissions models can be improved by including measures that reliably, accurately, and comprehensively evaluate applicants’ potential for success in college with concern for the fair and equitable distribution of educational opportunities (Camara & Kimmel, 2005; Zwick, 2017).

Measures lack validity when they are not reproducible (or reliable, as defined in psychometrics) for applicants from all backgrounds (American Educational Research Association [AERA], American Psychological Association [APA], & National Council on Measurement in Education [NCME], 2014; Drost, 2011). For example, scholars have documented concerns about the use of standardized tests in college admissions, citing differential prediction by reported race (e.g., Blau et al., 2004), gender (e.g., Leonard & Jiang, 1999), and socioeconomic status (e.g., Rothstein, 2004; Sackett et al., 2012). Research has also demonstrated the limited predictive validity of tests beyond the first year of undergraduate study (e.g., Berry & Sackett, 2009; Sackett & Kuncel, 2018; Sackett et al., 2012).

Although an increasing number of institutions have adopted test-optional policies (FairTest National Center for Fair & Open Testing, 2023; Furuta, 2017) or embraced holistic review (Bastedo et al., 2018; Hossler et al., 2019), few institutions have replaced standardized tests with new, proprietary measures of applicants’ potential to succeed in college. Rather, many institutions have redistributed the weight given to standardized test scores to other aspects of the admissions application such as high school grade point average (HSGPA; Galla et al., 2019; Sweitzer et al., 2018), a practice which may perpetuate barriers to equitable access for students who are systemically marginalized and have limited access to educational opportunities such as enrollment in Advanced Placement courses (Kolluri, 2018). Accordingly, recent research has highlighted the need to provide admissions officers with richer information about prospective students. When used as part of a holistic admissions model, information about applicants’ high school and home context may mitigate bias against disadvantaged students and help to promote equitable access to postsecondary education (Bastedo et al., 2022).

As colleges and universities employ holistic assessments of applicants, the increased inclusion of motivational and developmental constructs in the admissions process stands out as a promising possibility. Sedlacek (2004) advanced the view that non-cognitive constructs, such as achievement motivation, may be used to expand the predictive potential of more frequently relied upon cognitive measures. Motivational constructs reflect the resources and abilities students need to effectively adapt to the various academic, emotional, and social challenges associated with attaining a postsecondary education (Allen et al., 2009; Camara & Kimmel, 2005; Kaplan et al., 2017; Sedlacek, 2017). Scholars suggest flexible admissions policies that incorporate a wide variety of student characteristics—including psychosocial and motivational constructs—will likely have the added benefit of increasing diversity among the student body by promoting more equitable access to postsecondary education (Soares, 2015; Zwick, 2002).

Despite evidence of considerable associations between several psychosocial constructs and college student outcomes, an adequately valid integrated inventory of these constructs has yet to be developed (Le et al., 2005; Sedlacek, 2004). Such an inventory could prove highly valuable, especially as student demographics shift and an increasing number of institutions adopt test-optional policies. We respond to the need for reliable and valid measures of postsecondary promise by investigating the predictive validity of a proprietary measure of admissions applicants’ motivational-developmental attributes. Our measure includes select non-cognitive constructs derived from the social-cognitive and developmental-constructivist domains. The measure was developed in 2015 to coincide with the implementation of a test-optional admissions policy at a large, public, urban research university located in the Mid-Atlantic region of the United States. Guided by motivational and student development theories, we addressed the following research questions:

1. Is the measure of motivational-developmental dimensions a reliable predictor of undergraduate GPA (UGPA), and 4- and 5-year bachelor’s degree completion?

2. Is the measure of motivational-developmental dimensions a statistically significant predictor of UGPA?

3. Is the measure of motivational-developmental dimensions a statistically significant predictor of 4- and 5-year bachelor’s degree completion?

4. Which specific motivational-developmental dimensions, if any, predict UGPA and four- and five-year bachelor’s degree completion?

Literature Review

Although evidence suggests that high-achieving, underserved students particularly benefit from post-college achievements such as increased lifetime earnings (Ma et al., 2019), admissions practices frequently benefit students with higher socioeconomic status (Bowman & Bastedo, 2018). As inequitable admissions practices have persisted, the criteria and methodologies colleges and universities use to evaluate admissions applicants have been the subject of substantial empirical investigation (e.g., Bowman & Bastedo, 2018; Hossler et al., 2019; National Association for College Admission Counseling, 2016; Robbins et al., 2004). Previous research has predominately focused on cognitive factors such as standardized test scores (i.e., SAT, ACT) and other variables with cognitive and non-cognitive attributes such as high school course grades, HSGPA, and class rank (Burton & Ramist, 2001; Galla et al., 2019; Sweitzer et al., 2018). However, these criteria have been criticized as unreliable and potentially biased. In recognition of these shortcomings, scholars have attempted to validate new measures of postsecondary educational promise (e.g., Le et al., 2005; Oswald et al., 2004; Robbins et al., 2006; Sedlacek, 2004; Thomas et al., 2007).

Predictive Validity of Traditional Criteria in College Admissions

Traditionally, the criteria most widely considered in the undergraduate admissions process measure cognitive and reasoning abilities and therefore may be used to assess subject-specific knowledge and skills (Camara & Kimmel, 2005). Research has consistently demonstrated that standardized test scores and HSGPA each contribute to the prediction of academic performance and student persistence (e.g., Allen et al., 2008; Bridgeman et al., 2008; Kobrin et al., 2008; Robbins et al., 2004, 2006; Westrick et al., 2015; Willingham et al., 2002; see also Mattern & Patterson, 2011a, 2011b for a series of reports on SAT validity for predicting grades and persistence). However, previous studies have identified limitations of these variables, suggesting, for example, that SAT predictions may overestimate first-year college GPA and obscure background characteristics that are more accurately predictive of college performance (e.g., Rothstein, 2004; Soares, 2015; Syverson, 2007). Others have identified problematic variability of high school grading standards and course rigor (Atkinson & Geiser, 2009; Bowers, 2011; Buckley et al., 2018; Burton & Ramist, 2001; Camara & Kimmel, 2005; Syverson, 2007; Thorsen & Cliffordson, 2012; Westrick et al., 2015; Zwick, 2002), which may diminish the reliability of HSGPA and high school course grades for high-stakes admissions decisions.

Personal essays are a common component of holistic admissions reviews, offering insight into applicants’ experiences, challenges, goals, and interests (Todorova, 2018). The inclusion of personal essays in college applications is often justified as a means by which applicants can demonstrate character strengths and talents that may not be evident in academic records or standardized test scores. Personal essays may be used to evaluate applicants’ non-cognitive attributes such as creativity and self-efficacy (Pretz & Kaufman, 2017). Although critics argue that personal essay assessments, like standardized tests, may reflect pervasive social class- and race-based inequities (Alvero et al., 2021; Rosinger et al., 2021; Todorova, 2018; Warren, 2013), foregoing sole reliance on high school grades and standardized test scores by including personal essays in admissions decision-making is often championed as a way to expand college access to traditionally underserved students (Hossler et al., 2019).

Predictive Validity of Psychosocial Factors in College Admissions

The use of holistic approaches to undergraduate admissions has emerged as a strategy for expanding the predictive potential of traditional admissions criteria while addressing the disparities these criteria present (Bastedo et al., 2018; Hossler et al., 2019). Incorporating non-cognitive psychosocial factors into the admissions process has the potential to incrementally enhance the predictive power achieved when relying solely on cognitive variables (Allen et al., 2009; Sedlacek, 2004, 2017), as these psychosocial constructs are largely distinct from commonly used cognitive measures (Camara & Kimmel, 2005). Additionally, many psychosocial factors are more malleable than student demographic characteristics and traditional measures of cognitive ability, allowing for the possibility of interventions that may make college success more likely for students once they are enrolled (Allen et al., 2009; Robbins et al., 2004).

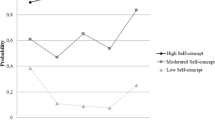

Prior research has identified associations between several non-cognitive psychosocial attributes and outcomes related to educational success (Robbins et al., 2006). Research pertaining to specific psychosocial factors has revealed positive associations between self-efficacy and various components of college success, including academic adjustment (Chemers et al., 2001), academic performance (Bandura, 1986; Krumrei-Mancuso et al., 2013; Robbins et al., 2006; Vuong et al., 2010; Zajacova et al., 2005), college satisfaction (Chemers et al., 2001; DeWitz & Walsh, 2002), and persistence and retention (Davidson & Beck, 2006; Robbins et al., 2004, 2006). Conscientiousness, a personality trait that is part of the “Big Five” factor model (Digman, 1990; Goldberg, 1993), has also consistently been shown to predict academic performance to an even greater extent than standardized test scores and HSGPA (Lounsbury et al., 2003; Nguyen et al., 2005; Noftle & Robins, 2007). Additionally, academic self-concept, or how well someone feels they can learn, has been identified as a significant predictor of academic performance, particularly among students from minoritized racial and ethnic groups and low-income backgrounds (Astin, 1992; Bailey, 1978; Gerardi, 2005; Sedlacek, 2004, 2017). Despite inconsistencies among the findings, scholars found coping and attributional styles to be both directly and indirectly associated with outcomes including student motivation, academic performance (LaForge & Cantrell, 2003; Martinez & Sewell, 2000; Rowe & Lockhart, 2005; Struthers et al., 2000; Yee et al., 2003), health status (Sasaki & Yamasaki, 2007), and happiness (O’Donnell et al., 2013). Additionally, prior studies sought to determine the extent to which psychosocial constructs reflect social inequities and thus become potentially biased criteria in college admissions. 
For instance, evidence suggests that the development of self-efficacy and self-concept are influenced by one’s social class (Easterbrook et al., 2020; Usher et al., 2019; Wiederkehr et al., 2015).

Theoretical Framework

We examined the validity of a measure of several motivational-developmental dimensions designed to predict undergraduate students’ academic performance and degree completion. These dimensions included causal attributions, coping strategies, relevant experiences, self-awareness, self-authorship, self-concept, and self-set goals. These constructs reflect the need for college students to have motivational resources to facilitate effort and persistence as well as developmental maturity to apply these resources adaptively in the context of college (Kaplan et al., 2017). The social-cognitive motivational (Bandura, 1986, 2006) and developmental-constructivist (Kegan, 1994) perspectives provide complementary theoretical frameworks from which the motivational-developmental dimensions we examined were drawn.

Social-Cognitive Motivational Perspective

The social-cognitive motivational perspective underscores the contribution of the combined influence of students’ high competence perceptions to persistence, adjustment, coping, and performance; attributions of success and failure to internal, malleable, and controllable causes; self-setting of autonomous, challenging, specific, and realistic goals; and coping with difficulties and failure by focusing on analyzing the problem, regulating negative emotions, and applying context-specific strategies (Bandura, 2006). The dimensions we examined include four constructs based on the social-cognitive motivational perspective: self-concept (i.e., individuals’ self-perceptions of ability; Marsh & Martin, 2011); self-set goals (i.e., an individual’s personally determined goals for themselves and for others; Locke & Latham, 2002; Vansteenkiste & Ryan, 2013); causal attributions (i.e., cognitive-affective explanations of the causes of success and failure; Hong et al., 1999; Weiner, 2010); and coping strategies (i.e., purposeful behavioral, emotional, and cognitive actions for responding to situations perceived to challenge an individual’s resources; Compas et al., 2001). These social-cognitive motivational constructs are related. However, they constitute distinct attributes that combine to form an adaptive motivational mindset (Dweck & Leggett, 1988).

Developmental-Constructivist Perspective

The developmental-constructivist perspective reflects the role of cross-contextual capacities for intentional and purposeful self-reflection and self-regulation of knowledge, relationships, goals, and actions related to coping and growth (Kegan, 1994). The motivational-developmental dimensions we examined include two constructs based on the developmental-constructivist perspective: self-awareness (i.e., the ability to consider oneself as an object for reflection, monitoring, and learning; Silvia & Duval, 2001) and self-authorship (i.e., the agentic capacity for an individual to generate and regulate their beliefs, decisions, identity, and social relationships; Baxter-Magolda et al., 2010).

Methodology

Informed by the literature as well as theoretical, empirical, ethical, and logistical factors, Kaplan et al. (2017) advanced the operational definitions of the motivational-developmental dimensions presented in Table 1. These definitions guided the development of an essay-based measure of motivational-developmental dimensions implemented as part of a test-optional admissions policy at a large, public, urban research university located in the Mid-Atlantic region of the United States. We investigated the predictive validity of this measure using multiple regression to analyze data collected from 886 first-year undergraduate students who applied for test-optional admissions and subsequently enrolled at the participating institution. Table 2 presents descriptive statistics on the demographic characteristics of these students.

Procedures

As part of the test-optional admissions process, students provided responses to four short-answer essay questions developed by Kaplan et al. (2017). Table 3 presents descriptions of the essay questions and the primary motivational-developmental dimensions measured within each question. These essays were presented to students as a part of their initial admissions application. Students did not have access to the essay questions in advance and were expected to complete them without preparation. Following several rounds of training, norming, and rubric calibration, two readers used a rubric created by Kaplan (2015) and Kaplan et al. (2017; Table 4) to independently score the primary motivational-developmental dimensions within each essay question according to the following scale: 1 point (does not articulate the dimension), 4 points (narrowly articulates the dimension), 7 points (generally articulates the dimension), 10 points (explicitly articulates the dimension). Each essay question received a total score between 4 and 40 points per reader. A third reader scored an essay response if there was a difference of 5 points or more between the scores produced by the two initial readers on a given essay question. For 11.3% of the essays, a third reader’s score was accepted and the scores produced by both initial readers were rejected. Using this methodology, a total motivational-developmental dimension score (MDS) from 4 to 40 was produced by averaging the scores produced for each essay question. The MDS was included as part of an admissions index that the institution used to make undergraduate admission decisions. This index included a high school academic performance rating (HSGPA and course grades), MDS or standardized test score (depending on whether the applicant applied under the test-optional policy), and an admissions counselor rating. We obtained all student data from the Institutional Research department at the participating institution. Recognizing that college participation and completion varies by family income (Ma et al., 2019), we collected estimated county-level median household income data from the U.S. Department of Commerce Bureau of the Census Small Area Income and Poverty Estimates Program (2022).
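The scoring procedure described above can be illustrated with a minimal Python sketch. It is not the institution's implementation. Two details are assumptions, because the text does not state them: that the two initial readers' scores are averaged when they differ by fewer than 5 points, and that a third reader's score replaces both initial scores whenever a third reading is triggered. The function names `question_score` and `mds` are hypothetical.

```python
from statistics import mean

DISCREPANCY_THRESHOLD = 5  # a gap of 5+ points triggers a third reader


def question_score(reader_a, reader_b, reader_c=None):
    """Resolve the score for one essay question (reader totals range 4-40)."""
    if abs(reader_a - reader_b) >= DISCREPANCY_THRESHOLD:
        if reader_c is None:
            raise ValueError("discrepant scores require a third reader")
        # Assumption: the third reader's score replaces both initial scores.
        return float(reader_c)
    # Assumption: scores within the threshold are averaged.
    return mean([reader_a, reader_b])


def mds(question_scores):
    """Average the four resolved question scores into an MDS (4-40)."""
    if len(question_scores) != 4:
        raise ValueError("expected scores for four essay questions")
    return mean(question_scores)


# Hypothetical applicant: the second question needs a third reader (19 vs. 34).
resolved = [
    question_score(28, 31),
    question_score(19, 34, 25),
    question_score(22, 22),
    question_score(37, 34),
]
applicant_mds = mds(resolved)
```

Under these assumptions the hypothetical applicant's four resolved scores are 29.5, 25, 22, and 35.5, which average to an MDS of 28.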

Variables

To reduce the possibility of omitted variable bias, the data we analyzed included a range of student demographic, financial, admissions, and academic information collected as part of the undergraduate admissions process at the participating institution. However, as is consistent with single institution studies, our data did not include all possible variables identified in the literature that may explain our outcomes of interest.

Our predictor variable MDS was a composite score of seven motivational-developmental dimensions (attributions of successes and failures, coping, relevant experiences, self-authorship, self-awareness, self-concept, and self-set goals). UGPA and 4- and 5-year graduation served as our outcome variables. The UGPA variable reflected the cumulative UGPA earned as of a student’s final semester enrolled. Four-year graduation was a dichotomous variable that represented bachelor’s degree completion within eight or fewer consecutive academic semesters. Five-year graduation was a dichotomous variable that represented bachelor’s degree completion in nine or ten consecutive academic semesters.

Based on previous studies that investigated the correlations between student characteristics (e.g., race, gender, and socioeconomic status), academic performance (e.g., HSGPA and UGPA), and baccalaureate degree completion (Mayhew et al., 2016; Pascarella & Terenzini, 2005), we included students’ race, gender, and socioeconomic status (approximated by Pell Grant receipt status and county-level median household income) as covariates in our regression analyses to account for the effects these variables may have on college outcomes. We dummy coded the categorical covariate for students’ race given the five racial groups included in our institutional data. Students with a self-reported race of American Indian, Multiple Ethnicities, Pacific Islander, or Unknown or a status of International were categorized as “Other Race” due to the limited racial representativeness of the sample (see Table 2). Additionally, we retained incongruent designations of race (e.g., African American and White) as these categories reflect those in the institutional dataset. We utilized Pell Grant receipt and a standard score of estimated median household income for the county in which students resided at the time of their application as an approximation of students’ socioeconomic status because Expected Family Contribution (EFC) data were missing for 75 students (8.5%) at the time of their matriculation. We also included HSGPA as a covariate in our regression analyses to account for students’ prior academic performance and an admissions counselor rating of students’ extracurricular activities, personal essay, and high school context. We included these variables in our analyses because of the associations between these commonly utilized admissions criteria and relevant postsecondary outcomes such as undergraduate GPA and graduation (Allensworth & Clark, 2020; Bastedo et al., 2018; Galla et al., 2019; Huang et al., 2017).

The admission counselor rating variable was recorded on a scale of 1 to 10, with 10 reflecting a counselor’s highest positive rating of the applicant. Additionally, we included a categorical variable for students’ academic program at matriculation to account for differences in the rigor and grading standards across academic disciplines and fields (Arcidiacono et al., 2012; Martin et al., 2017). The academic program categories in our dataset included Arts & Humanities, Business & Social Sciences, Health Sciences, and Sciences & Mathematics. Table 5 includes the means, standard deviations, and correlations for all variables.

Data Analysis

We used SPSS version 28 (IBM, 2020) to compute descriptive statistics and to conduct our correlation and regression analyses. We also used Lenhard and Lenhard’s (2014) calculator to compare correlations from independent samples. Prior to conducting our analyses, we examined our dataset to identify systematically missing cases and tested our data to ensure they met the assumptions associated with the analytical techniques we used (see “Appendix” for the results of our assumption tests). We removed five cases (0.6%) for students who matriculated at the participating institution but withdrew before earning course grades in their first semester. Additionally, we removed one case (0.1%) with a missing MDS, one case (0.1%) with a missing HSGPA, and 16 cases with missing admissions counselor ratings (1.8%). Accordingly, our analyses included all cases for which there were complete data. We indicate the analytical sample size for each analysis in table notes.

To test the reliability of the MDS measure, first we computed a Light’s kappa statistic (Light, 1971) to measure interrater reliability between the essay readers as there was not a fixed number of readers for each essay question. We computed Light’s kappa values by calculating Cohen’s kappa and averaging these values across all rater pairs. Second, we computed Pearson correlation coefficients to determine the strength and direction of the associations between the MDS scores and our outcome variables by student demographic characteristics. Third, we compared the resulting correlation coefficients to test for statistically significant differences across student subgroups (Diedenhofen & Musch, 2015; Lenhard & Lenhard, 2014). Specifically, we ran three separate tests to compare the nine correlation coefficients for each of our outcome variables across the student demographic characteristics in our dataset (race, gender, Pell Grant receipt). Fourth, we ran separate regression analyses using interaction terms to determine if the MDS was moderated by student demographic characteristics including race, gender, and Pell Grant receipt status. To create our interaction terms, we centered the MDS to reduce multicollinearity caused by higher-order terms. Lastly, to nuance our findings, we entered the individual motivational-developmental dimensions as separate variables in stepwise and combined regression models to examine which dimensions, if any, were statistically significant predictors of our outcome variables. We used multiple linear and logistic regression to investigate the accuracy of the MDS in predicting UGPA and four- and five-year degree completion, respectively. We used the following regression equation to predict our outcome variables:

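$$Y_i = \beta_0 + \beta_1 X_i + \beta_2 \mathrm{MDS}_i + \varepsilon_i$$

where $Y_i$ is our outcome variable of interest (UGPA, 4-year graduation, or 5-year graduation) for student $i$; $\beta_0$ is the intercept; $\beta_1$ is the coefficient of $X_i$, a given covariate in the model (e.g., HSGPA); $\beta_2$ is the slope of the line for the MDS, our coefficient of interest; $\mathrm{MDS}_i$ is the value of the MDS for student $i$; and $\varepsilon_i$ are the residuals or errors in the model.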
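The interrater reliability step described above, Cohen's kappa averaged across all rater pairs, can be sketched as follows. This is an illustrative sketch, not the SPSS procedure used in the study. It assumes unweighted kappa, which treats rubric points as nominal categories, and it assumes each rater pair is compared only on the essays both raters scored. The function names and the data layout are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(a, b):
    """Unweighted Cohen's kappa for two equal-length sequences of ratings."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: product of the raters' marginal proportions per category.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used the same single category throughout
    return (observed - expected) / (1 - expected)


def light_kappa(ratings):
    """Mean of Cohen's kappa over all rater pairs (Light, 1971).

    `ratings` maps a rater id to a list of scores, with None where that
    rater did not score the essay. Assumption: each pair is compared only
    on essays both raters scored.
    """
    kappas = []
    for r1, r2 in combinations(ratings, 2):
        shared = [(x, y) for x, y in zip(ratings[r1], ratings[r2])
                  if x is not None and y is not None]
        if shared:
            a, b = zip(*shared)
            kappas.append(cohen_kappa(a, b))
    if not kappas:
        raise ValueError("no rater pair shares any scored essay")
    return sum(kappas) / len(kappas)


# Hypothetical scores on one dimension for four essays and three readers.
example = {
    "reader_1": [1, 4, 7, 10],
    "reader_2": [1, 4, 7, 7],
    "reader_3": [1, 4, None, 10],
}
kappa = light_kappa(example)
```

Because the rubric points are ordered (1, 4, 7, 10), a weighted kappa would be a reasonable alternative. The unweighted form is used here only to keep the sketch short.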

Results

Research Question 1

For Research Question 1, we asked whether the MDS is a reliable predictor of UGPA and 4- and 5-year bachelor’s degree completion. The results of our interrater reliability analysis indicated slight agreement between readers across the individual motivational-developmental dimensions that comprise our measure. Light’s kappa values ranged from κ = .132 (Coping) to κ = .238 (Relevant Experiences). Table 6 presents these results.

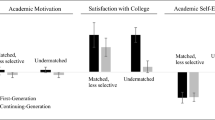

Pearson correlational analyses indicated statistically significant associations between the MDS score and the outcome variables for several student subgroups. We identified statistically significant correlations at the p < .01 level between the MDS and UGPA for female students (r = .12) and Pell Grant recipients (r = .14); 4-year graduation for female students (r = .14) and Pell Grant recipients (r = .17); and 5-year graduation for Asian students (r = .28), male students (r = .16), and Pell Grant recipients (r = .15). Table 7 presents these results.

Given these findings, we tested the equality of these correlation coefficients since they were obtained from independent samples (Cohen & Cohen, 1983; Preacher, 2002). None of the tests yielded a two-tailed p value less than .05 for any pair of correlation coefficients. By convention, this indicates that the differences between the correlation coefficients are not statistically significant (Cohen & Cohen, 1983).
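A common way to test the equality of two correlations from independent samples is the Fisher r-to-z transformation. The sketch below assumes that approach and is not a reproduction of the calculator used in the study. The function name and the subgroup sizes in the example are hypothetical, since subgroup sample sizes are not given in the text.

```python
import math


def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for two correlations from independent samples.

    Returns the z statistic and the two-tailed p value.
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher transformation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed normal p value
    return z, p


# Hypothetical subgroup sizes (100 and 500) paired with two of the reported
# correlation magnitudes (.28 and .12), for illustration only.
z_stat, p_value = compare_independent_correlations(0.28, 100, 0.12, 500)
```

With these hypothetical sample sizes the difference falls short of the .05 threshold, which shows how correlations that look quite different can still be statistically indistinguishable when one subgroup is small.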

Our moderator analyses using interaction terms between the MDS and student demographic characteristics (race, gender, Pell Grant recipient) yielded nonsignificant results except for the interaction between Asian students and the MDS for 5-year graduation, p = .009. Table 8 presents these results.

Research Question 2

For Research Question 2, we asked whether the MDS is a statistically significant predictor of UGPA. The MDS was statistically significant in the model (p = .013). However, the small β coefficient estimate (β = .022) suggests that a 1-point increase in the MDS is associated with a .022 increase in UGPA. The full linear regression model was statistically significant, R² = .165, F(13, 861) = 12.876, p < .001, adjusted R² = .152. Table 9 presents these results.

For robustness, we reran our analysis using stepwise regression to produce a covariate-only model and a model that includes the MDS. The addition of the MDS resulted in a statistically significant change in the F-statistic, F(1, 848) = 6.175, p = .013. This suggests that adding the MDS to the model marginally improved the prediction of UGPA compared to the covariate-only model, ΔR² = .006.
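The F-change statistic for nested linear models follows directly from the change in R². The sketch below shows the standard formula. The function name is hypothetical, and pairing the full-model R² of .165 with the stepwise run is an assumption, since the text does not report R² for the stepwise models.

```python
def f_change(delta_r2, r2_full, df_added, df_residual):
    """F statistic for the R-squared change between nested OLS models.

    delta_r2    -- increase in R-squared when the new predictors are added
    r2_full     -- R-squared of the larger model
    df_added    -- number of predictors added
    df_residual -- residual degrees of freedom of the larger model
    """
    return (delta_r2 / df_added) / ((1 - r2_full) / df_residual)


# Rounded values from the text; r2_full = .165 is an assumption (see lead-in).
f_value = f_change(delta_r2=0.006, r2_full=0.165, df_added=1, df_residual=848)
```

With these rounded inputs the function returns about 6.09, close to the reported F of 6.175. A gap of that size is consistent with rounding in the reported change in R².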

Research Question 3

For Research Question 3, we asked whether the MDS is a statistically significant predictor of four- and five-year bachelor’s degree completion. To nuance the results, we reran our analyses for graduation using stepwise regression to produce a covariate-only model and a model that includes the MDS.

Four-Year Graduation

The full logistic regression model to predict four-year graduation was statistically significant, χ²(13) = 109.061, p < .001. The model explained 15.8% (Nagelkerke R² = .158) of the variance in four-year graduation and correctly classified 64.6% of cases. Sensitivity was 50.5%, specificity was 45.6%, positive predictive value was 62.9%, and negative predictive value was 33.6%. The MDS was statistically significant in the model (p = .013). An increase in the MDS score was associated with a small increase in the likelihood of 4-year graduation (Exp(β) = 1.076). The addition of the MDS to the regression model resulted in a statistically significant yet minimal contribution to the explanation of the variance in four-year graduation, Nagelkerke ΔR² = .009. Table 10 presents these results.
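To make the size of the reported odds ratio concrete, the following sketch shows how Exp(β) compounds over several MDS points and what that implies for a probability, holding the other covariates fixed. The function names are hypothetical, and the 50% baseline probability is an illustrative assumption rather than a figure from the study.

```python
def odds_multiplier(exp_b, points):
    """Multiplicative change in the odds for a `points`-unit increase."""
    return exp_b ** points


def shifted_probability(baseline_prob, exp_b, points):
    """Probability after scaling the baseline odds by exp_b ** points."""
    odds = baseline_prob / (1 - baseline_prob) * odds_multiplier(exp_b, points)
    return odds / (1 + odds)


# Exp(B) = 1.076 is the reported value; the 5-point increase and the
# 0.50 baseline probability are hypothetical.
multiplier = odds_multiplier(1.076, 5)
new_prob = shifted_probability(0.50, 1.076, 5)
```

Under these assumptions, a 5-point higher MDS multiplies the odds of 4-year graduation by about 1.44, which moves a hypothetical applicant from a 50% to roughly a 59% predicted probability.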

Five-Year Graduation

The full logistic regression model to predict 5-year graduation was statistically significant, χ²(13) = 75.464, p < .001. The model explained 11.4% (Nagelkerke R² = .114) of the variance in 5-year graduation and correctly classified 69.2% of cases. Sensitivity was 89.8%, specificity was 32.3%, positive predictive value was 70.4%, and negative predictive value was 63.7%. The MDS was not statistically significant in the model (p = .114). An increase in the MDS was associated with a small, nonsignificant increase in the likelihood of 5-year graduation (Exp(β) = 1.05). The addition of the MDS to the regression model made a minimal contribution, which was not statistically significant, to the explanation of the variance in 5-year graduation, Nagelkerke ΔR² = .003. Table 11 presents these results.
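The classification statistics reported for the logistic models all derive from a single 2 × 2 table of predicted versus observed graduation. The sketch below shows those definitions. The function name and the cell counts are hypothetical, since the underlying classification tables are not reproduced in the text.

```python
def classification_metrics(tp, fp, tn, fn):
    """Summary statistics from a 2x2 classification table.

    tp/fn -- graduates predicted to graduate / predicted not to graduate
    tn/fp -- non-graduates predicted not to graduate / predicted to graduate
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical counts for 200 students, half of whom graduated.
metrics = classification_metrics(tp=90, fp=70, tn=30, fn=10)
```

Overall accuracy is the prevalence-weighted average of sensitivity and specificity, so it always lies between those two values. In the hypothetical table, sensitivity is .90, specificity is .30, and accuracy is .60.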

Research Question 4

For Research Question 4, we asked whether any specific motivational-developmental dimensions predicted UGPA and 4- and 5-year bachelor’s degree completion. Among the individual motivational-developmental dimensions we examined, only coping was a statistically significant predictor of UGPA (p = .009), 4-year graduation (p = .014), and 5-year graduation (p = .016). Table 12 presents these results.

Discussion

Summary of the Findings

The results of our reliability tests indicated slight agreement between raters on the scoring of the motivational-developmental dimensions that comprise our measure. This finding suggests that variation in students’ MDS may be a function of variation in raters’ scores rather than true differences across students in the motivational-developmental constructs our measure was designed to assess. The Coping dimension illustrates this concern: agreement between raters on Coping was only slight, yet Coping was the only dimension identified as a statistically significant predictor of our outcomes of interest. Therefore, readers should interpret our results with caution, as potential measurement error may lead to incorrect conclusions regarding the reliability and efficacy of our measure.
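For readers less familiar with chance-corrected agreement, the following sketch computes Cohen's (1960) kappa for two raters. The ratings are hypothetical binary codes (1 = dimension articulated, 0 = not articulated) for ten essays; they are not drawn from our data, and the binary coding is an assumption made only to keep the example short.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must score the same non-empty set of items.")

    n = len(rater_a)
    # Observed proportion of items on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        raise ValueError("Kappa is undefined when both raters use a single identical category.")
    return (observed - expected) / (1.0 - expected)


# Hypothetical ratings for ten essays (NOT the study's data).
rater_a = [1, 1, 1, 0, 0, 1, 0, 1, 0, 0]
rater_b = [1, 0, 1, 1, 0, 0, 0, 1, 1, 0]

kappa = cohens_kappa(rater_a, rater_b)
print(f"kappa = {kappa:.2f}")
```

In this illustration the raters agree on 6 of 10 essays, but because 5 agreements would be expected by chance alone, kappa is far lower than the raw agreement rate suggests. The same logic explains why raw agreement can overstate the reliability of essay scores.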

Our between-group analyses did not identify statistically significant subgroup differences in the relationships between the MDS and our outcomes of interest. Likewise, entering interaction terms of student demographic variables and the MDS into our regression models did not yield statistically significant results, except for Asian students. The relationships that do exist are small in magnitude, as demonstrated by the correlations between the MDS and 4-year graduation (r = .21) and 5-year graduation (r = .28) among Asian students. Despite the absence of statistically significant differences in the correlation coefficients between student subgroups, the MDS is associated with varying levels of validity across student racial groups, as suggested by the results of our moderation analysis.
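A common way to test whether two independent subgroups differ in a correlation is Fisher's r-to-z transformation. The sketch below is a minimal illustration of that test and is not a reproduction of our analysis: the r = .21 value is the correlation reported above for Asian students, whereas the comparison correlation (r = .10) and both subgroup sizes are hypothetical.

```python
import math
from typing import Tuple


def compare_independent_correlations(
    r1: float, n1: int, r2: float, n2: int
) -> Tuple[float, float]:
    """Fisher z test for the difference between two independent correlations.

    Returns the z statistic and its two-tailed p-value.
    """
    if n1 <= 3 or n2 <= 3:
        raise ValueError("Each group needs more than 3 observations.")

    # Fisher's r-to-z transformation (inverse hyperbolic tangent).
    z1, z2 = math.atanh(r1), math.atanh(r2)

    # Standard error of the difference between the transformed correlations.
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se

    # Two-tailed p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


# r = .21 is reported in the text; r = .10 and both sample sizes are
# hypothetical values used only for illustration.
z_stat, p_value = compare_independent_correlations(r1=0.21, n1=150, r2=0.10, n2=600)
print(f"z = {z_stat:.2f}, two-tailed p = {p_value:.3f}")
```

With subgroups of the assumed sizes, a difference of this magnitude does not reach conventional significance, which illustrates how a moderation effect can appear in a regression model while pairwise comparisons of correlation coefficients remain nonsignificant.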

Our findings are generally consistent with prior research that has explored the relationship between non-cognitive variables and student outcomes. For example, using the Student Readiness Inventory, Komarraju et al. (2013) found that the non-cognitive variable Academic Discipline incrementally predicted UGPA over HSGPA and standardized test scores. Additionally, a study of an admissions innovation employing essay-based non-cognitive assessments at DePaul University found that non-cognitive variables helped to predict first-year success and retention, particularly for students from lower income and minoritized backgrounds (Sedlacek, 2017). Consistent with our findings, the predictive power of non-cognitive variables in prior studies was small. Taken together, the similarities across such studies suggest that incorporating psychosocial-based assessments remains a promising direction. However, strengthening the reliability of our measure is a necessary first step before it has the potential to effectively promote more holistic, equitable admissions decisions.

Importance of the Findings

Holistic review is an intentional approach for expanding the predictive utility of traditional admissions criteria by considering the non-cognitive characteristics of applicants to make more accurate and equitable decisions about postsecondary educational opportunities (Bastedo et al., 2018; Hossler et al., 2019). However, the literature has demonstrated the need for a clear and consistent understanding of the validity of non-cognitive factors for predicting students’ success during and beyond college. An effective shift to more holistic admissions processes requires new, validated measures of postsecondary educational promise that meaningfully incorporate psychosocial attributes into admissions models. This is of particular importance as test-optional admissions policies proliferate. To this end, we examined the predictive validity of one such measure of students’ motivational-developmental dimensions.

Our measure did not meet what Cohen (1960) deemed an acceptable threshold of reliability to be considered a valid measure. Although our results show that the MDS makes a small contribution to the explanation of the variance in UGPA and 4-year graduation rates, we do not recommend its use for high-stakes decision-making given the propensity for measurement error in the absence of additional steps to improve interrater reliability. Measures, such as the one used in our study, must demonstrate reliability and consistent predictive validity for all groups of students. Assessments used for high-stakes decision making should be designed and implemented with care to avoid perpetuating inequitable admissions outcomes and presenting barriers in the college admissions process.

Nevertheless, we remain encouraged that the assessment of applicants’ psychosocial attributes may be a worthy component of the admissions process. Assessing non-cognitive dimensions may encourage admissions offices, and by extension institutions, to think holistically about their philosophical bases for admission decision-making (Perfetto et al., 1999) and how these philosophies pertain to their institution’s mission and values. Furthermore, research has demonstrated that even in admissions offices committed to holistic review, officers tend to predominantly rely on traditional academic criteria to make admissions decisions (Bowman & Bastedo, 2018). Although administering and evaluating non-cognitive assessments may require more time and effort from both admissions officers and prospective students, this approach is likely worthwhile if it promotes a more truly holistic, reliable, and accurate assessment of students’ potential.

Limitations

Despite the longitudinal nature of our study, we used degree completion outcome variables that are potentially influenced by a variety of factors not accounted for in our analyses. Consequently, we acknowledge that our study may be subject to omitted variable bias. For example, studies have suggested that variations in students’ tuition expenses net of financial aid affect retention and degree completion rates (Goldrick-Rab et al., 2016; Hossler et al., 2009; Nguyen et al., 2019; Welbeck et al., 2014; Xu & Webber, 2018). However, we endeavored to limit this bias by including variables that allowed us to comprehensively consider factors related to students’ admissions and outcomes, including background characteristics, academic variables, and admissions counselor ratings.

Student motivation and developmental characteristics are not fixed attributes; they are the product of self-reflection and accumulated life experiences (Bandura, 1994) and evolve over time (Mayhew et al., 2016; Pascarella & Terenzini, 2005). Scholars have argued that a holistic evaluation of students' previous academic performance and psychosocial attributes, developed over time in different contexts, cannot be captured by a single assessment (Sedlacek, 2004, 2017). However, our study measures students’ motivation and development at a specific time in their educational careers (i.e., during the college admissions process) and not as longitudinal constructs that may be continuously predictive of behaviors positively associated with educational outcomes.

Compared to the gender and race demographics of college students nationally, the relative homogeneity of the students in our study should frame any interpretation of the findings. For example, to feasibly conduct quantitative analyses using all participants’ data, several races had to be grouped into a single category (“Other”). This grouping may obscure important measurement and educational differences between student subgroups. Additionally, our analytic sample consists only of students who (a) applied for test-optional admissions, and (b) subsequently enrolled at the participating institution. This narrow sample further limits the generalizability of our findings.

Transfer students, graduate students, and denied admission applicants were excluded from the sample. Therefore, our findings may not generalize to these student populations, despite the need to validate predictors of success for students of all types (e.g., transfer students, international students, returning students) and at all levels (e.g., graduate, professional). Additionally, we conducted our study at a single institution located in a specific geographic region and of a particular institutional classification (i.e., public, urban, comprehensive research university). Therefore, our findings may not generalize to other institutional types.

Research has identified associations between essay content, SAT scores, and household income (Alvero et al., 2021). The essay readers in our study were trained to specifically score articulations of the motivational-developmental constructs as opposed to other aspects of analytical writing such as grammar, syntax, and mechanics. We believe this approach allowed us to more accurately capture the dimensions of interest rather than students’ background characteristics such as their socioeconomic status or writing ability. However, because our study used human readers with inherent subjectivity, the reliability of the MDS is subject to their level of agreement on the articulations of each dimension measured within the essay questions.

Implications for Practice and Future Research

Enrollment management leaders and other higher education professionals must weigh the practical significance of accounting for a nominal percentage of the variance in educational outcomes (e.g., UGPA and degree completion) against the introduction of additional requirements in the undergraduate admissions process. Because our results demonstrated only slight agreement between readers on the MDS measure, and because the relationship between the MDS and our outcomes of interest differed for Asian students, the MDS should not be used for high-stakes decision making in the absence of other variables with empirically demonstrated reliability and validity. However, we remain encouraged that scores derived from more reliable measures may enrich applicant portfolios undergoing holistic review by providing admissions officers with more comprehensive information about how students may approach and adapt to challenges, insights that are of particular importance at a time when many admissions policies have been disrupted (Bastedo et al., 2022). Still, institutions should be mindful that the use of an essay-based measure in the college admissions process may limit application completion and present workload constraints for admissions officers.

Successfully transitioning to college, especially in times of social and economic uncertainty, requires the possession of coping skills and psychosocial resources that exist apart from a student’s cognitive ability. Therefore, researchers should continue to develop and work to validate measures of non-cognitive psychosocial factors relevant to higher education and related contexts. For example, we believe in the promise of alternative measures of psychosocial factors such as the development of an empirically validated integrated inventory or scale.

While our study found that psychosocial factors made a small contribution to the explanation of UGPA and degree completion, greater explanatory power might be obtained from more reliable measures of applicants’ coping skills and similar variables related to educational success such as perseverance and resiliency. Therefore, future research should examine the predictive value of psychosocial factors concerning additional outcomes that may be both constitutive of and indirectly related to educational success (e.g., mental wellness). Future research should also be conducted to discern the predictive validity of psychosocial factors among diverse student populations in various higher education contexts including different types of institutions and degree levels.

Throughout the development and implementation of our measure, steps were taken to mitigate the effect of bias. For instance, raters responsible for scoring the essays were required to attend several training sessions, throughout which the rubric was calibrated and normed. Furthermore, multiple raters read and scored each applicant essay. While these measures were employed to reduce bias and ensure the reliability of our instrument, continued research on the efficacy of these steps, and on the utility of the personal essay format in general, is warranted. With this in mind, we encourage future research that employs alternative methods of data collection such as situational judgment tests that present prospective students with a realistic scenario they may encounter in college and ask them to indicate how they would respond. Such efforts should be scaled to include multiple study sites to maximize external validity. Finally, future research should examine student outcomes at multiple institutions that have integrated proprietary measures of psychosocial variables into their admissions processes. Such studies could examine whether these measures effectively address the limitations of traditional admissions criteria, reduce predictive bias, and expand postsecondary educational opportunities while allowing the institution to enroll a highly qualified and diverse student body.

Conclusion

Many colleges and universities have adapted their admissions criteria and shifted away from the traditional reliance on standardized test scores as a key predictor of student outcomes. Institutions must also carefully consider which criteria most reliably, accurately, and equitably predict students’ college performance and persistence. Equitable access to postsecondary education and the benefits it confers may be advanced using novel measures that represent not only applicants’ prior academic achievement but also their personalities, backgrounds, and the challenges they have overcome. Understanding applicants’ non-cognitive psychosocial attributes such as those assessed using our instrument may contribute to a more holistic understanding of how students can succeed in postsecondary education.

Data Availability

The dataset analyzed in the current study is not publicly available due to the proprietary nature of the measure examined. However, the dataset is available from the corresponding author on reasonable request.

Code Availability

Not applicable.

References

Allen, J., Robbins, S. B., Casillas, A., & Oh, I. S. (2008). Third-year college retention and transfer: Effects of academic performance, motivation, and social connectedness. Research in Higher Education, 49(7), 647–664. https://doi.org/10.1007/s11162-008-9098-3

Allen, J., Robbins, S. B., & Sawyer, R. (2009). Can measuring psychosocial factors promote college success? Applied Measurement in Education, 23(1), 1–22. https://doi.org/10.1080/08957340903423503

Allensworth, E. M., & Clark, K. (2020). High school GPAs and ACT scores as predictors of college completion: Examining assumptions about consistency across high schools. Educational Researcher, 49(3), 198–211. https://doi.org/10.3102/0013189X20902110

Alvero, A. J., Giebel, S., Gebre-Medhin, B., Antonio, A. L., Stevens, M. L., & Domingue, B. W. (2021). Essay content is strongly related to household income and SAT scores: Evidence from 60,000 undergraduate applications (CEPA working paper No. 21–03). Stanford Center for Education Policy Analysis. http://cepa.stanford.edu/wp21-03

American Educational Research Association, American Psychological Association, National Council on Measurement in Education. (2014). Standards for educational and psychological testing. American Educational Research Association.

Arcidiacono, P., Aucejo, E. M., & Spenner, K. (2012). What happens after enrollment? An analysis of the time path of racial differences in GPA and major choice. IZA Journal of Labor Economics, 1, 1–24. https://doi.org/10.1186/2193-8997-1-5

Astin, A. W. (1992). Minorities in American higher education: Recent trends, current prospects, and recommendations. Jossey-Bass.

Atkinson, R. C., & Geiser, S. (2009). Reflections on a century of college admissions tests. Educational Researcher, 38(9), 665–676. https://doi.org/10.3102/0013189X09351981

Bailey, R. N. (1978). Minority admissions. Heath.

Bandura, A. (1986). The explanatory and predictive scope of self-efficacy theory. Journal of Social and Clinical Psychology, 4(3), 359–373.

Bandura, A. (1994). Self-efficacy. In V. S. Ramachandran (Ed.), Encyclopedia of human behavior (Vol. 4, pp. 71–81). Academic Press.

Bandura, A. (2006). Guide for constructing self-efficacy scales. In F. Pajares & T. Urdan (Eds.), Self-efficacy beliefs of adolescents (Vol. 5, pp. 307–337). Information Age Publishing.

Bastedo, M. N., Bell, D., Howell, J. S., Hsu, J., Hurwitz, M., Perfetto, G., & Welch, M. (2022). Admitting students in context: Field experiments on information dashboards in college admissions. Journal of Higher Education, 93(3), 327–374. https://doi.org/10.1080/00221546.2021.1971488

Bastedo, M. N., Bowman, N. A., Glasener, K. M., & Kelly, J. L. (2018). What are we talking about when we talk about holistic review? Selective college admissions and its effects on low-SES students. The Journal of Higher Education, 89(5), 782–805. https://doi.org/10.1080/00221546.2018.1442633

Baxter-Magolda, M., Creamer, E., & Meszaros, P. (2010). Development and assessment of self-authorship: Exploring the concept across cultures. Stylus Publishing.

Berry, C. M., & Sackett, P. R. (2009). Individual differences in course choice result in underestimation of the validity of college admissions systems. Psychological Science, 20(7), 822–830. https://doi.org/10.1111/j.1467-9280.2009.02368.x

Blau, J. R., Moller, S., & Jones, L. V. (2004). Why test? Talent loss and enrollment loss. Social Science Research, 33(3), 409–434. https://doi.org/10.1016/j.ssresearch.2003.09.002

Bowers, A. J. (2011). What’s in a grade? The multidimensional nature of what teacher-assigned grades assess in high school. Educational Research and Evaluation, 17(3), 141–159. https://doi.org/10.1080/13803611.2011.597112

Bowman, N. A., & Bastedo, M. N. (2018). What role may admissions office diversity and practices play in equitable decisions? Research in Higher Education, 59, 430–447. https://doi.org/10.1007/s11162-017-9468-9

Bridgeman, B., Pollack, J., & Burton, N. (2008). Predicting grades in different types of college courses (College Board Research Report No. 2008–1, ETS RR-08–06). The College Board.

Buckley, J., Letukas, L., & Wildavsky, B. (Eds.). (2018). Measuring success: Testing, grades, and the future of college admissions. Johns Hopkins University Press.

Burton, N., & Ramist, L. (2001). Predicting success in college: SAT studies of classes graduating since 1980 (College Board Research Report No. 2001–2). College Board.

Camara, W. J., & Kimmel, E. W. (2005). Choosing students: Higher education admissions tools for the 21st century. Lawrence Erlbaum Associates Inc.

Chemers, M. M., Hu, L. T., & Garcia, B. F. (2001). Academic self-efficacy and first year college student performance and adjustment. Journal of Educational Psychology, 93(1), 55. https://doi.org/10.1037/0022-0663.93.1.55

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20(1), 37–46. https://doi.org/10.1177/001316446002000104

Cohen, J., & Cohen, P. (1983). Applied multiple regression/correlation analysis for the behavioral sciences. Lawrence Erlbaum Associates Inc.

Compas, B., Connor-Smith, J., Saltzman, H., Thomsen, A., & Wadsworth, M. (2001). Coping with stress during childhood and adolescence: Problems, progress, and potential in theory and research. Psychological Bulletin, 127(1), 87–127. https://doi.org/10.1037/0033-2909.127.1.87

Davidson, W. B., & Beck, H. P. (2006). Survey of academic orientations scores and persistence in college freshmen. Journal of College Student Retention: Research, Theory & Practice, 8(3), 297–305. https://doi.org/10.2190/H18T-6850-77LH-0063

DeWitz, S. J., & Walsh, W. B. (2002). Self-efficacy and college student satisfaction. Journal of Career Assessment, 10(3), 315–326. https://doi.org/10.1177/10672702010003003

Diedenhofen, B., & Musch, J. (2015). cocor: A comprehensive solution for the statistical comparison of correlations. PLoS ONE, 10(4), e0121945. https://doi.org/10.1371/journal.pone.0121945

Digman, J. M. (1990). Personality structure: Emergence of the five-factor model. Annual Review of Psychology, 41, 417–440. https://doi.org/10.1146/annurev.ps.41.020190.002221

Drost, E. A. (2011). Validity and reliability in social science research. Education Research and Perspectives, 38(1), 105–123.

Dweck, C. S., & Leggett, E. L. (1988). A social-cognitive approach to motivation and personality. Psychological Review, 95(2), 256–273. https://doi.org/10.1037/0033-295X.95.2.256

Easterbrook, M. J., Kuppens, T., & Manstead, A. S. (2020). Socioeconomic status and the structure of the self-concept. British Journal of Social Psychology, 59(1), 66–86. https://doi.org/10.1111/bjso.12334

FairTest National Center for Fair and Open Testing. (2023). Test-optional list. https://fairtest.org/test-optional-list

Furuta, J. (2017). Rationalization and student/school personhood in US college admissions: The rise of test-optional policies, 1987 to 2015. Sociology of Education, 90(3), 236–254. https://doi.org/10.1177/0038040717713583

Galla, B. M., Shulman, E. P., Plummer, B. D., Gardner, M., Hutt, S. J., Goyer, J. P., D’Mello, S. K., Finn, A. S., & Duckworth, A. L. (2019). Why high school grades are better predictors of on-time college graduation than are admissions test scores: The roles of self-regulation and cognitive ability. American Educational Research Journal, 56(6), 2077–2115. https://doi.org/10.3102/0002831219843292

Gerardi, S. (2005). Self-concept of ability as a predictor of academic success among urban technical college students. The Social Science Journal, 42(2), 295–300. https://doi.org/10.1016/j.soscij.2005.03.007

Goldberg, L. R. (1993). The structure of phenotypic personality traits. American Psychologist, 48, 26–34. https://doi.org/10.1037/0003-066X.48.1.26

Goldrick-Rab, S., Kelchen, R., Harris, D. N., & Benson, J. (2016). Reducing income inequality in educational attainment: Experimental evidence on the impact of financial aid on college completion. American Journal of Sociology, 121, 1762–1817. https://doi.org/10.1086/685442

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2018). Multivariate data analysis (8th ed.). Prentice Hall.

Hong, Y., Chiu, C., Lin, D., & Wan, W. (1999). Implicit theories, attributions, and coping: A meaning system approach. Journal of Personality and Social Psychology, 77(3), 588–599. https://doi.org/10.1037/0022-3514.77.3.588

Hosmer, D. W., Hosmer, T., Le Cessie, S., & Lemeshow, S. (1997). A comparison of goodness-of-fit test for the logistic regression model. Statistics in Medicine, 16, 965–980. https://doi.org/10.1002/(SICI)1097-0258(19970515)16:9%3c965::AID-SIM509%3e3.0.CO;2-O

Hossler, D., Chung, E., Kwon, J., Lucido, J., Bowman, N., & Bastedo, M. (2019). A study of the use of nonacademic factors in holistic undergraduate admissions reviews. The Journal of Higher Education, 90(6), 833–859. https://doi.org/10.1080/00221546.2019.1574694

Hossler, D., Ziskin, M., Gross, J., Kim, S., & Cekic, O. (2009). Student aid and its role in encouraging persistence. In J. C. Smart (Ed.), Higher education: Handbook of theory and research (pp. 389–425). Springer.

Huang, L., Roche, L. R., Kennedy, E., & Brocato, M. B. (2017). Using an integrated persistence model to predict college graduation. International Journal of Higher Education, 6(3), 40–56.

IBM Corp. (2020). IBM SPSS Statistics for Windows (Version 28.0) [Computer software].

Kaplan, A. (2015). Codebook: Motivational-developmental constructs assessed in test optional essays. Unpublished rubric.

Kaplan, A., Pendergast, L., French, B., & Kanno, Y. (2017). Development of a measure of test-optional applicants’ motivational-developmental attributes. Institute of Educational Sciences Proposal.

Kegan, R. (1994). In over our heads: The mental demands of modern life. Harvard University Press.

Kobrin, J. L., Patterson, B. F., Shaw, E. J., Mattern, K. D., & Barbuti, S. M. (2008). The validity of the SAT for predicting first-year college grade point average (College Board research report 2008–5). The College Board.

Kolluri, S. (2018). Advanced placement: The dual challenge of equal access and effectiveness. Review of Educational Research, 88(5), 671–711. https://doi.org/10.3102/0034654318787268

Komarraju, M., Ramsey, A., & Rinella, V. (2013). Cognitive and non-cognitive predictors of college readiness and performance: Role of academic discipline. Learning and Individual Differences, 24, 103–109. https://doi.org/10.1016/j.lindif.2012.12.007

Krumrei-Mancuso, E. J., Newton, F. B., Kim, E., & Wilcox, D. (2013). Psychosocial factors predicting first-year college student success. Journal of College Student Development, 54(3), 247–266. https://doi.org/10.1353/csd.2013.0034

LaForge, M. C., & Cantrell, S. (2003). Explanatory style and academic performance among college students beginning a major course of study. Psychological Reports, 92(3), 861–865. https://doi.org/10.2466/pr0.2003.92.3.861

Le, H., Casillas, A., Robbins, S., & Langley, R. (2005). Motivational and skills, social, and self-management predictors of college outcomes: Constructing the student readiness inventory. Educational and Psychological Measurement, 65(3), 482–508. https://doi.org/10.1177/0031364404272493

Lenhard, W., & Lenhard, A. (2014). Hypothesis tests for comparing correlations. Psychometrica. https://doi.org/10.13140/RG.2.1.2954.1367

Leonard, D. K., & Jiang, J. (1999). Gender bias and the college predictions of the SATs: A cry of despair. Research in Higher Education, 40(4), 375–407. https://doi.org/10.1023/a:1018759308259

Light, R. J. (1971). Measures of response agreement for qualitative data: Some generalizations and alternatives. Psychological Bulletin, 76(5), 365–377. https://doi.org/10.1037/h0031643

Locke, E. A., & Latham, G. P. (2002). Building a practically useful theory of goal setting and task motivation: A 35-year odyssey. American Psychologist, 57(9), 705. https://doi.org/10.1037/0003-066X.57.9.705

Lounsbury, J. W., Sundstrom, E., Loveland, J. M., & Gibson, L. W. (2003). Intelligence, “Big Five” personality traits, and work drive as predictors of course grade. Personality and Individual Differences, 35(6), 1231–1239. https://doi.org/10.1016/S0191-8869(02)00330-6

Ma, J., Pender, M., & Welch, M. (2019). Education pays 2019: The benefits of higher education for individuals and society. The College Board.

Marsh, H. W., & Martin, A. J. (2011). Academic self-concept and academic achievement: Relations and causal ordering. British Journal of Educational Psychology, 81(1), 59–77. https://doi.org/10.1348/000709910X503501

Martin, N. D., Spenner, K. I., & Mustillo, S. A. (2017). A test of leading explanations for the college racial-ethnic achievement gap: Evidence from a longitudinal case study. Research in Higher Education, 58(6), 617–645. https://doi.org/10.1007/s11162-016-9439-6

Martinez, R., & Sewell, K. W. (2000). Explanatory style in college students: Gender differences and disability status. College Student Journal, 34(1), 72–72.

Mattern, K. D., & Patterson, B. F. (2011a). The relationship between SAT scores and retention (College Board statistical reports). The College Board.

Mattern, K. D., & Patterson, B. F. (2011b). Validity of the SAT for predicting grades (College Board statistical reports). The College Board.

Mayhew, M. J., Rockenbach, A. N., Bowman, N. A., Seifert, T. A. D., Wolniak, G. C., Pascarella, E. T., & Terenzini, P. T. (2016). How college affects students: 21st century evidence that higher education works. Wiley.

McHugh, M. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282.

National Association for College Admission Counseling. (2016). Use of predictive validity studies to inform admission practices. https://www.nacacnet.org/globalassets/documents/publications/research/testvalidity.pdf

Nguyen, N. T., Allen, L. C., & Fraccastoro, K. (2005). Personality predicts academic performance: Exploring the moderating role of gender. Journal of Higher Education Policy and Management, 27(1), 105–117. https://doi.org/10.1080/13600800500046313

Nguyen, T. D., Kramer, J. W., & Evans, B. J. (2019). The effects of grant aid on student persistence and degree attainment: A systematic review and meta-analysis of the causal evidence. Review of Educational Research, 89(6), 831–874. https://doi.org/10.3102/0034654319877156

Noftle, E. E., & Robins, R. W. (2007). Personality predictors of academic outcomes: Big five correlates of GPA and SAT scores. Journal of Personality and Social Psychology, 93(1), 116. https://doi.org/10.1037/0022-3514.93.1.116

O’Donnell, S., Chang, K., & Miller, K. (2013). Relations among autonomy, attribution style, and happiness in college students. College Student Journal, 47(1), 228–234.

Oswald, F., Schmitt, N., Kim, B., Ramsay, L., & Gillespie, M. (2004). Developing a biodata measure and situational judgment inventory as predictors of college student performance. Journal of Applied Psychology, 89, 187–207. https://doi.org/10.1037/0021-9010.89.2.187

Pascarella, E. T., & Terenzini, P. T. (2005). How college affects students: A third decade of research (Vol. 2). Jossey-Bass.

Perfetto, G., Escandón, M., Graff, S., Rigol, G., & Schmidt, A. (1999). Toward a taxonomy of the admissions decision-making process: A public document based on the first and second College Board conferences on admissions models. The College Board.

Preacher, K. J. (2002). Calculation for the test of the difference between two independent correlation coefficients [Computer software]. http://quantpsy.org

Pretz, J. E., & Kaufman, J. C. (2017). Do traditional admissions criteria reflect applicant creativity? The Journal of Creative Behavior, 51(3), 240–251. https://doi.org/10.1002/jocb.120

Robbins, S. B., Allen, J., Casillas, A., Peterson, C. H., & Le, H. (2006). Unraveling the differential effects of motivational and skills, social and self-management measures from traditional predictors of college outcomes. Journal of Educational Psychology, 98, 598–616. https://doi.org/10.1037/0022-0663.98.3.598

Robbins, S. B., Lauver, K., Le, H., Davis, D., Langley, R., & Carlstrom, A. (2004). Do psychosocial and study skill factors predict college outcomes? A meta-analysis. Psychological Bulletin, 130, 261–288. https://doi.org/10.1037/0033-2909.130.2.261

Rosinger, K. O., Sarita Ford, K., & Choi, J. (2021). The role of selective college admissions criteria in interrupting or reproducing racial and economic inequities. The Journal of Higher Education, 92(1), 31–55. https://doi.org/10.1080/00221546.2020.1795504

Rothstein, J. M. (2004). College performance predictions and the SAT. Journal of Econometrics, 121, 297–317. https://doi.org/10.1016/j.jeconom.2003.10.003

Rowe, J. E., & Lockhart, L. K. (2005). Relationship of cognitive attributional style and academic performance among a predominantly Hispanic college student population. Individual Differences Research, 3(2), 136–139.

Sackett, P. R., & Kuncel, N. R. (2018). Eight myths about standardized admissions testing. In J. Buckley, J. Letukas, & B. Wildavsky (Eds.), Measuring success: Testing, grades, and the future of college admissions (pp. 288–307). Johns Hopkins University Press.

Sackett, P. R., Kuncel, N. R., Beatty, A. S., Rigdon, J. L., Shen, W., & Kiger, T. B. (2012). The role of socioeconomic status in SAT-grade relationships and in college admissions decisions. Psychological Science, 23(9), 1000–1007. https://doi.org/10.1177/0956797612438732

Sasaki, M., & Yamasaki, K. (2007). Stress coping and the adjustment process among university freshmen. Counselling Psychology Quarterly, 20(1), 51–67. https://doi.org/10.1080/09515070701219943

Sedlacek, W. E. (2004). Beyond the big test: Noncognitive assessment in higher education. Jossey-Bass.

Sedlacek, W. (2017). Measuring noncognitive variables: Improving admissions, success and retention for underrepresented students. Stylus Publishing.

Silvia, P. J., & Duval, T. S. (2001). Objective self-awareness theory: Recent progress and enduring problems. Personality and Social Psychology Review, 5(3), 230–241. https://doi.org/10.1207/S15327957PSPR0503_4

Small Area Income and Poverty Estimates Program. (2022). U.S. Census Bureau. https://www.census.gov/programs-surveys/saipe.html

Soares, J. A. (2015). SAT wars: The case for test-optional college admissions. Teachers College Press.

Struthers, C. W., Perry, R. P., & Menec, V. H. (2000). An examination of the relationship among academic stress, coping, motivation, and performance in college. Research in Higher Education, 41(5), 581–592. https://doi.org/10.1023/A:1007094931292

Sweitzer, K., Blalock, A. E., & Sharma, D. B. (2018). The effect of going test-optional on diversity and admissions: A propensity score matching analysis. In J. Buckley, J. Letukas, & B. Wildavsky (Eds.), Measuring success: Testing, grades, and the future of college admissions (pp. 288–307). Johns Hopkins University Press.

Syverson, S. (2007). The role of standardized tests in college admissions: Test-optional admissions. New Directions for Student Services, 118, 55–70. https://doi.org/10.1002/ss.241

Tabachnick, B. G., Fidell, L. S., & Ullman, J. B. (2007). Using multivariate statistics (Vol. 5, pp. 481–498). Pearson.

Thomas, L. L., Kuncel, N. R., & Crede, M. (2007). Noncognitive variables in college admissions: The case of the non-cognitive questionnaire. Educational and Psychological Measurement, 67(4), 635–657. https://doi.org/10.1177/0013164406292074

Thorsen, C., & Cliffordson, C. (2012). Teachers’ grade assignment and the predictive validity of criterion-referenced grades. Educational Research and Evaluation, 18(2), 153–172. https://doi.org/10.1080/13803611.2012.659929

Todorova, R. (2018). Institutional expectations and students’ responses to the college application essay. Social Sciences, 7(10), 205. https://doi.org/10.3390/socsci7100205

Usher, E. L., Li, C. R., Butz, A. R., & Rojas, J. P. (2019). Perseverant grit and self-efficacy: Are both essential for children’s academic success? Journal of Educational Psychology, 111(5), 877. https://doi.org/10.1037/edu0000324

Vansteenkiste, M., & Ryan, R. M. (2013). On psychological growth and vulnerability: Basic psychological need satisfaction and need frustration as a unifying principle. Journal of Psychotherapy Integration, 23, 263–280. https://doi.org/10.1037/a0032359

Vuong, M., Brown-Welty, S., & Tracz, S. (2010). The effects of self-efficacy on academic success of first-generation college sophomore students. Journal of College Student Development, 51(1), 50–64. https://doi.org/10.1353/csd.0.0109

Warren, J. (2013). The rhetoric of college application essays: Removing obstacles for low income and minority students. American Secondary Education, 1, 43–56.

Weiner, B. (2010). The development of an attribution-based theory of motivation: A history of ideas. Educational Psychologist, 45(1), 28–36. https://doi.org/10.1080/00461520903433596

Welbeck, R., Diamond, J., Mayer, A., & Richburg-Hayes, L. (2014). Piecing together the college affordability puzzle. MDRC. https://www.mdrc.org/sites/default/files/Piecing_together_the_College_affordability_puzzle.pdf

Westrick, P. A., Le, H., Robbins, S. B., Radunzel, J. M., & Schmidt, F. L. (2015). College performance and retention: A meta-analysis of the predictive validities of ACT® scores, high school grades, and SES. Educational Assessment, 20(1), 23–45. https://doi.org/10.1080/10627197.2015.997614

Wiederkehr, V., Darnon, C., Chazal, S., Guimond, S., & Martinot, D. (2015). From social class to self-efficacy: Internalization of low social status pupils’ school performance. Social Psychology of Education, 18(4), 769–784. https://doi.org/10.1007/s11218-015-9308-8

Willingham, W. W., Pollack, J. M., & Lewis, C. (2002). Grades and test scores: Accounting for observed differences. Journal of Educational Measurement, 39(1), 1–37. https://doi.org/10.1111/j.1745-3984.2002.tb01133.x

Xu, Y. J., & Webber, K. L. (2018). College student retention on a racially diverse campus: A theoretically guided reality check. Journal of College Student Retention: Research, Theory & Practice, 20(1), 2–28. https://doi.org/10.1177/1521025116643325

Yee, P. L., Pierce, G. R., Ptacek, J. T., & Modzelesky, K. L. (2003). Learned helplessness attributional style and examination performance: Enhancement effects are not necessarily moderated by prior failure. Anxiety, Stress, and Coping, 16(4), 359–373. https://doi.org/10.1080/0003379031000140928

Zajacova, A., Lynch, S. M., & Espenshade, T. J. (2005). Self-efficacy, stress, and academic success in college. Research in Higher Education, 46(6), 677–706. https://doi.org/10.1007/s11162-004-4139-z

Zwick, R. (2002). Fair game? The use of standardized admissions tests in higher education. Psychology Press.

Zwick, R. (2017). Who gets in? Harvard University Press.

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethical Approval

This study was deemed exempt by the Temple University Institutional Review Board, as all data were anonymized and provided to the researchers by the Institutional Research department at the participating institution.

Consent to Participate

Not applicable.

Consent for Publication

The publisher has the authors’ permission to publish the relevant contribution.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Assumption Test Results

Prior to conducting our linear and logistic regression analyses, we tested the relevant assumptions. An analysis of standardized residuals showed that the data contained no outliers after removing 24 cases (2.7%) with missing UGPA (Std. Residual Min = −5.361, Std. Residual Max = 2.019) and one outlier (.11%) for both 4-year (Std. Residual Min = −1.81, Std. Residual Max = 2.49) and 5-year graduation (Std. Residual Min = −2.34, Std. Residual Max = 1.45). On examining the graduation outlier, we determined that this student had participated in AP and dual enrollment coursework and graduated in less than 4 years. We confirmed that, despite the removal of these cases, the resulting analytical sample sizes of 862 for UGPA and 867 for graduation provided adequate statistical power given the number of predictor variables included in our analyses (Tabachnick et al., 2007). The assumption of no singularity was met, as no predictor variable was a combination of other predictor variables. The data met the assumption of independent errors (Durbin–Watson value = 1.942 for UGPA). An examination of correlations identified statistically significant correlations between several of the predictor variables (see Table 5). However, collinearity statistics were within acceptable limits (Tolerance = .705–.966, VIF = 1.035–1.417; Hair et al., 2018). Therefore, we deemed the assumption of no multicollinearity to have been met. We determined that the regression model fit our data well based on Hosmer and Lemeshow Test (Hosmer et al., 1997) values for 4-year graduation (p = .213) when Pell Grant receipt is excluded from the analysis, and 5-year graduation (p = .221). However, we retained Pell Grant receipt in our regression analysis for 4-year graduation given the strength of the correlation between Pell Grant receipt and 4-year graduation (p = 0.72).
Histograms of standardized residuals indicated that the data contained approximately normally distributed errors, as did the normal P–P plots of standardized residuals, which showed that all points were on or near the line. Therefore, the assumptions of normality, linearity, and homoscedasticity were satisfied (Hair et al., 2018).
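
To illustrate two of the diagnostics reported above, the following minimal Python sketch computes a Durbin–Watson statistic from a sequence of residuals and converts a tolerance value to its variance inflation factor (VIF = 1 / Tolerance). The function names and the toy residuals are hypothetical and are not drawn from the study's data or software; the sketch shows only the standard formulas, not the procedure the authors ran. Under these formulas, the reported tolerance range (.705–.966) corresponds to the reported VIF range (1.035–1.417) up to rounding.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for a sequence of regression residuals.

    Sum of squared successive differences divided by the sum of squared
    residuals. Values near 2 suggest uncorrelated errors; the statistic
    always lies between 0 and 4.
    """
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


def tolerance_from_r_squared(r_squared):
    """Tolerance of a predictor, given the R^2 obtained by regressing
    that predictor on all of the other predictors."""
    return 1.0 - r_squared


def vif_from_tolerance(tolerance):
    """Variance inflation factor is the reciprocal of tolerance."""
    return 1.0 / tolerance


# Hypothetical residuals, for illustration only (not the study's data).
toy_residuals = [0.5, -0.3, 0.2, -0.4, 0.1, 0.3, -0.2]
dw = durbin_watson(toy_residuals)

# Endpoints of the tolerance range reported in the appendix.
vif_high = vif_from_tolerance(0.705)
vif_low = vif_from_tolerance(0.966)

print(f"Durbin-Watson (toy residuals): {dw:.3f}")
print(f"VIF for tolerance .705: {vif_high:.3f}")
print(f"VIF for tolerance .966: {vif_low:.3f}")
```

In practice, these statistics would be computed from the fitted model's residuals and predictor matrix by a statistical package; the sketch only makes the arithmetic behind the reported values explicit.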

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Paris, J.H., Beckowski, C.P. & Fiorot, S. Predicting Success: An Examination of the Predictive Validity of a Measure of Motivational-Developmental Dimensions in College Admissions. Res High Educ 64, 1191–1216 (2023). https://doi.org/10.1007/s11162-023-09743-w

DOI: https://doi.org/10.1007/s11162-023-09743-w