Abstract

In structural dynamics a structure’s dynamic properties are often determined from its frequency-response functions (FRFs). Commonly, FRFs are determined by measuring a structure’s response while it is subjected to controlled excitation. Impact excitation performed by hand is a popular way to perform this step, as it enables rapid FRF acquisition for each individual excitation location. On the other hand, the precise location of impacts performed by hand is difficult to estimate and relies mainly on the experimentalist’s skills. Furthermore, deviations in the impact’s location and direction affect the FRFs across the entire frequency range. This paper proposes the use of ArUco markers for impact-pose estimation in FRF acquisition campaigns. The approach relies on two dodecahedrons with markers on each face, one mounted on the impact hammer and another at a known location on the structure. An experimental setup with an analog trigger is suggested, recording an image at the exact time of the impact. A camera with a fixed aperture is used to capture the images, from which the impact pose is estimated in the structure’s coordinate system. Finally, a procedure to compensate for the location error is presented, which relies on the linear dependency of the FRFs on the impact offset.


Introduction

In noise and vibration engineering, a structure’s dynamic properties are often evaluated in terms of its frequency response functions (FRFs). A reliable determination of FRFs is commonly carried out using an experimental approach. This is typically performed on a non-operating system by exciting the structure using an impact hammer or electrodynamic shaker. The response of the structure is measured simultaneously, typically with accelerometers, laser vibrometers or optical methods [2, 11, 20]. Obtained FRFs can serve as a prerequisite for various dynamic studies (e.g., modal identification (Footnote 1), dynamic substructuring or transfer-path analysis); therefore, a high level of acquisition precision is necessary for a meaningful analysis.

The real-life measurement process for obtaining FRFs is often hindered by the presence of experimental errors. In general, they can be classified according to their nature into two categories: random errors and systematic/bias errors [29]. Random errors affect the reliability, but not the overall accuracy of the outcome. Often referred to as measurement uncertainty, they characterize the spread of the measured quantity. Typical sources of random errors are sensor/environment noise, rounding errors in analog-to-digital conversion and other uncontrollable factors. Meanwhile, systematic errors are consistent and repeatable, resulting in a systematic shift of the measurement results, affecting their accuracy but not their reliability. An erroneous position/orientation of the applied impact excitation is a common example of measurement bias. Errors of this type can be reduced by carefully planning the experiment in advance, but as the source of the inaccuracies is unknown, they cannot be corrected.

Assume that we measure a system’s FRF using an impulse hammer and a fixed accelerometer on the structure. Looking at the response measurement, significant errors might arise due to erroneous sensor positioning, mass loading, added stiffness and additional damping from the sensor cabling [9]. Carefully designing the experiment in advance helps to minimize the influence of the above-mentioned errors. Because the response measurement from a single impact is subject to random errors, multiple impacts at the same location are often averaged, thus reducing the effect of noise. However, due to the manual nature of exciting the structure, a large degree of uncertainty is introduced by the error in the location and the orientation of each excitation. Accurate impact position is a requirement for several approaches commonly used in structural dynamics, such as dynamic substructuring [10], virtual point transformation [8] or transfer path analysis [22]. When performing impacts by hand, a sufficient level of repeatability is challenging to achieve [18] and is highly dependent on the skill of the experimentalist. Using an automatic modal hammer, the impact repeatability can be significantly improved [21]. However, this does not address the bias errors, as the impact location is again dependent on the experimentalist [29]. The error in the impact’s location is noticeable in the resonance and anti-resonance regions of the FRFs. Close to the resonances it is reflected in different amplitudes, while at anti-resonance frequencies it appears as a shift in the anti-resonance frequency. The differences in the FRFs can be considered linear for small location offsets [18]. This enables compensation of the FRF or the location error, provided that the exact impact location is known, which is rarely possible in practice [8, 22].

In this paper an approach to determine the hammer’s impact location with respect to the tested structure is presented for use in FRF acquisition campaigns. The approach relies on computer vision and fiducial markers. Fiducial markers have found uses in many computer-vision applications that require a pose estimation, such as drones [27], autonomous robots [3, 19], object tracking [31] and facial landmark detection [4]. Among the fiducial markers, the ArUco marker library [17] in particular was found to be effective and robust for the simultaneous detection of multiple markers [26] and has shown promising results in structural dynamics applications [1, 28].

Single marker pose tracking, while reasonably accurate, was found to be subject to ambiguity and inaccurate detections [23]. The pose-estimation accuracy of an object can be improved by employing multiple markers at different angles, as shown by Oščadal et al. [23]. Accurate results using multiple markers can be achieved by mounting the markers on the faces of a dodecahedron object. In [31], this is demonstrated with a mixed reality application of a real-time, six-degrees-of-freedom, stylus-tracking application, achieving a sub-0.4-mm accuracy. The proposed method allows the system to track the position and orientation of the passive stylus as it moves and provide updated information in a timely manner without significant delay. The same conclusions were found in [33] when developing a hand-held, tissue-stiffness measurement device, achieving the same location accuracy of sub-0.4 mm.

This paper proposes an approach using a dodecahedron with ArUco markers attached to an impact hammer to estimate its location and orientation during structure-impact testing. The proposed method does not focus on estimating the impact pose in real time, but on the offline process. Methods that can estimate the modal parameters in real time [5, 24] are used for continuous monitoring and damage detection, but do not provide the impact-position estimation that is vital for the methods in [8, 10, 22]. A dodecahedron shape is proposed as it allows the use of multiple markers, thus improving the pose-estimation results. To estimate the impact pose in a structure’s coordinate system, an additional reference dodecahedron is mounted on the structure itself with its location known. Using a high-resolution industrial camera with an analog trigger, an image is taken of every impact. The markers are then detected using dedicated algorithms, resulting in an estimated impact pose with regard to the tested structure. An experiment was devised to validate the location accuracy of the proposed approach. The practical applicability of the approach was then investigated on a test structure, where excitations using an impact hammer were spread around a target area, as is usually the case when manually performing the impacts. For each impact performed, its pose was estimated when the impact hammer was in contact with the structure. Finally, by assuming a linear relation between the FRF and the location offset, a compensation of FRFs for the location error is proposed, resulting in an improved consistency of the measured FRFs.

The paper is organized as follows. The following section briefly summarizes the basic principles of pose estimation using ArUco markers. “Impact-Pose Detection Using ArUco Markers” introduces the procedure to estimate the hammer pose for impact testing. The procedure is then validated in “Experimental Study”, followed by its application to an FRF measurement. In “Results”, the pose estimation results are presented, followed by the conclusion in the final section.

Theoretical Background

ArUco Markers

ArUco markers are a type of fiducial markers developed by Garrido-Jurado et al. [17]. Each ArUco marker is a black square with an internal binary grid. The grid encodes a unique ID for each marker and determines its orientation. In an image, all square-shaped objects are detected, and using the binary grid the ArUco markers are differentiated from the other shapes. The pixel coordinates of the marker corners are extracted from the image and are further refined to sub-pixel accuracy using the marker edge gradients in the image [12]. Then, the marker pose with respect to the camera can be determined using the P3P solution to the Perspective-n-Point (PnP) problem [13]. This determines the translation and rotation vector from the camera to the centre of the marker. All distances in the image are estimated based on the pre-defined marker size.
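The role of the pre-defined marker size can be illustrated with a minimal sketch, which is not the OpenCV implementation itself. It builds the four corner coordinates of a square marker from its side length and projects them with an ideal pinhole camera; a PnP solver inverts exactly this mapping. The camera-matrix values, the pose and the helper names `marker_corners` and `project` are illustrative assumptions, and lens distortion is ignored.

```python
import numpy as np

def marker_corners(side):
    """3D corners of a square marker in its own frame (z = 0 plane),
    ordered top-left, top-right, bottom-right, bottom-left."""
    h = side / 2.0
    return np.array([[-h,  h, 0.0],
                     [ h,  h, 0.0],
                     [ h, -h, 0.0],
                     [-h, -h, 0.0]])

def project(points, R, t, K):
    """Project 3D points given in the marker frame to pixel coordinates."""
    cam = points @ R.T + t            # marker frame -> camera frame
    uv = cam @ K.T                    # apply the camera matrix
    return uv[:, :2] / uv[:, 2:3]     # perspective division

# Hypothetical camera matrix (focal lengths and principal point in pixels)
K = np.array([[7000.0, 0.0, 2048.0],
              [0.0, 7000.0, 1500.0],
              [0.0, 0.0, 1.0]])

# Assumed pose: marker plane parallel to the sensor and facing the camera,
# 500 mm in front of it (rotation by 180 degrees about the camera x axis)
R = np.diag([1.0, -1.0, -1.0])
t = np.array([0.0, 0.0, 500.0])

pixels = project(marker_corners(20.0), R, t, K)   # 20 mm marker
print(pixels)
```

In this configuration the 20 mm marker spans 280 pixels, which shows how the known physical size fixes the metric scale of the estimated translation.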

To successfully determine the ArUco marker pose, a camera calibration is required to determine the camera matrix and the distortion coefficients [16]. A traditional calibration is made using chessboard grids, as proposed by Zhang et al. [32]. The major drawback of this approach is that the chessboard has to be fully visible and must not be occluded. The chessboard approach was further improved using ChArUco boards. The addition of ArUco markers with known locations on the chessboard allows the use of partially occluded chessboards [15].

Pose Detection

Single ArUco marker detections are subject to ambiguity and jittery detections. To improve the accuracy, the use of multiple markers is suggested. A dodecahedron with ArUco markers on its sides provides multiple visible faces at every angle. As such, it is very suitable for use in spatial tracking, as shown in [31].
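To make the geometry concrete, the following sketch computes the outward face normals of a regular dodecahedron and the distance from its centre to each face (the inradius). The edge length of 23.25 mm is the value reported in the set-up description; the function name `dodecahedron_faces` and the choice of body axes are assumptions made for illustration only.

```python
import numpy as np

def dodecahedron_faces(edge):
    """Return the 12 unit face normals and the centre-to-face distance
    of a regular dodecahedron with the given edge length."""
    phi = (1.0 + np.sqrt(5.0)) / 2.0              # golden ratio
    normals = []
    for a in (-1.0, 1.0):
        for b in (-phi, phi):
            # cyclic permutations of (0, +-1, +-phi)
            normals += [(0.0, a, b), (a, b, 0.0), (b, 0.0, a)]
    normals = np.array(normals)
    normals /= np.linalg.norm(normals, axis=1, keepdims=True)
    inradius = edge / 2.0 * np.sqrt(2.5 + 1.1 * np.sqrt(5.0))
    return normals, inradius

normals, inradius = dodecahedron_faces(23.25)     # edge length in mm
face_centres = inradius * normals                 # relative to the body centre
print(round(inradius, 3))
```

Adjacent face normals enclose an angle of about 63.4°, so a camera viewing one face head-on sees its five neighbours only at an oblique angle, which is one reason why the usable marker count depends on the hammer orientation.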

The ArUco marker’s position and orientation are estimated in the camera’s coordinate system. However, this does not allow for a direct determination of its pose in (the more preferable) structure’s coordinate system, since the location of the camera with respect to the structure is unknown. In order to obtain the marker’s location in the structure’s frame of reference, a second (also known as the reference) dodecahedron is introduced. The latter is placed near or mounted directly on the structure. The pose of the reference in relation to the structure’s coordinate system must be known (for instance, from a CAD model). Using a geometric transformation, it is then possible to determine the location and orientation of the markers on the structure in the structure’s coordinate system.

First, the detected markers of both dodecahedrons need to be transformed to their respective centres. To achieve this, the transformation from each face to the centre of the dodecahedron must be defined from the exact geometry of the dodecahedron. Its rotation matrix \(\textbf{R}\) follows from the rotation vector \(\varvec{r}_\textrm{v}\) through Rodrigues’ formula:

\[ \textbf{R} = \cos \varphi \, \textbf{I} + (1 - \cos \varphi ) \, \varvec{r} \varvec{r}^\textrm{T} + \sin \varphi \begin{bmatrix} 0 & -r_z & r_y \\ r_z & 0 & -r_x \\ -r_y & r_x & 0 \end{bmatrix} \]

where \(\varphi = ||\varvec{r}_\textrm{v} ||\) and \(\varvec{r} = \frac{\varvec{r}_\textrm{v}}{\varphi }\) with \(r_x\), \(r_y\) and \(r_z\) being the components of \(\varvec{r}\). The rotation and translation components can then be composed into a single matrix as:

\[ \textbf{T} = \begin{bmatrix} R_{11} & R_{12} & R_{13} & t_x \\ R_{21} & R_{22} & R_{23} & t_y \\ R_{31} & R_{32} & R_{33} & t_z \\ 0 & 0 & 0 & 1 \end{bmatrix} \]

where \(R_{\text {ij}}\) are the components of \(\textbf{R}\) and \(t_x\), \(t_y\) and \(t_z\) are the translation components. All detected markers are transformed to the dodecahedron centre for both the reference and the hammer dodecahedron:

\[ \textbf{T}_{d,c} = \textbf{T}_{f,c} \, \textbf{T}^* \]

where \(\textbf{T}_{d,c}\) is the transformation matrix of the dodecahedron centre in the camera coordinate system, \(\textbf{T}_{f,c}\) the transformation matrix of the dodecahedron face in the camera coordinate system and \(\textbf{T}^*\) the transformation matrix of the dodecahedron centre in the coordinate system of its face. As mentioned previously, a pose estimation of planar targets is susceptible to ambiguity under certain circumstances [30], which usually results in an unstable z direction. This problem commonly occurs when the ArUco marker is at a very steep or shallow angle with respect to the camera. To eliminate bad detections, all marker transformation matrices are projected onto the image plane, taking into account the known camera matrix and distortion coefficients. The pixel distances between all the projected marker centres are calculated. Inter-marker distances over the set pixel limit are used to eliminate the bad detections from the dataset.
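The transformation of a detected face to the dodecahedron centre and the subsequent rejection of bad detections can be sketched as follows. This is only one possible reading of the procedure: the rejection rule (median pixel distance to the other projected centres), the pixel limit, the camera matrix and all numerical values are assumptions, and lens distortion is ignored.

```python
import numpy as np

def rodrigues(rvec):
    """Rotation vector -> rotation matrix (Rodrigues' formula)."""
    rvec = np.asarray(rvec, dtype=float)
    phi = np.linalg.norm(rvec)
    if phi < 1e-12:
        return np.eye(3)
    r = rvec / phi
    skew = np.array([[0.0, -r[2], r[1]],
                     [r[2], 0.0, -r[0]],
                     [-r[1], r[0], 0.0]])
    return (np.cos(phi) * np.eye(3)
            + (1.0 - np.cos(phi)) * np.outer(r, r)
            + np.sin(phi) * skew)

def homogeneous(R, t):
    """Compose a rotation matrix and a translation into a 4x4 matrix."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def reject_outliers(centres_cam, K, limit_px):
    """Boolean mask of centre estimates whose projected pixel position
    lies within limit_px (median distance) of the other estimates."""
    uv = centres_cam @ K.T
    uv = uv[:, :2] / uv[:, 2:3]
    dist = np.linalg.norm(uv[:, None, :] - uv[None, :, :], axis=2)
    return np.median(dist, axis=1) <= limit_px

# Hypothetical camera matrix and detected face pose (mm)
K = np.array([[7000.0, 0.0, 2048.0],
              [0.0, 7000.0, 1500.0],
              [0.0, 0.0, 1.0]])
T_fc = homogeneous(rodrigues([0.0, 0.0, np.pi / 2]), [10.0, 0.0, 500.0])
# Assumed face-to-centre transform: centre lies one inradius behind the face
T_star = homogeneous(np.eye(3), [0.0, 0.0, -25.889])
T_dc = T_fc @ T_star

# Centre estimates from three faces; the third mimics an ambiguous detection
centres = np.array([T_dc[:3, 3], [10.2, 0.1, 474.0], [25.0, 5.0, 470.0]])
keep = reject_outliers(centres, K, limit_px=20.0)
print(keep)
```

Because every face is mapped to the same physical point, correct detections cluster tightly in the image, whereas a flipped pose projects the centre far from the cluster.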

The result for each dodecahedron is a set of locations and rotations for each visible marker, transformed to its centre. The next step is to average all the poses of all the markers on the dodecahedron. For locations, the simple mean of their coordinates is estimated, while rotations are averaged by converting the rotation vectors to quaternions and using spherical linear interpolation (slerp) [25].
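A possible implementation of this averaging step is sketched below. The text does not specify how slerp is extended to more than two rotations, so the running average used here (each new quaternion is blended in with weight 1/k) is an assumption, as are the function names.

```python
import numpy as np

def rotvec_to_quat(rvec):
    """Rotation vector -> unit quaternion (w, x, y, z)."""
    rvec = np.asarray(rvec, dtype=float)
    angle = np.linalg.norm(rvec)
    if angle < 1e-12:
        return np.array([1.0, 0.0, 0.0, 0.0])
    axis = rvec / angle
    return np.concatenate(([np.cos(angle / 2.0)], np.sin(angle / 2.0) * axis))

def slerp(q0, q1, s):
    """Spherical linear interpolation from q0 (s = 0) to q1 (s = 1)."""
    dot = np.dot(q0, q1)
    if dot < 0.0:                     # take the shorter arc
        q1, dot = -q1, -dot
    if dot > 0.9995:                  # nearly parallel: normalised lerp
        q = q0 + s * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - s) * theta) * q0 + np.sin(s * theta) * q1) / np.sin(theta)

def average_pose(tvecs, rvecs):
    """Mean translation and running slerp average of the rotations."""
    t_mean = np.mean(np.asarray(tvecs, dtype=float), axis=0)
    q_mean = rotvec_to_quat(rvecs[0])
    for k, rvec in enumerate(rvecs[1:], start=2):
        q_mean = slerp(q_mean, rotvec_to_quat(rvec), 1.0 / k)
    return t_mean, q_mean

# Two centre estimates: rotations of 0 and 90 degrees about z
tvecs = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
rvecs = [[0.0, 0.0, 0.0], [0.0, 0.0, np.pi / 2]]
t_mean, q_mean = average_pose(tvecs, rvecs)
print(t_mean, q_mean)
```

For this two-marker example the result is a rotation of 45° about the z axis, as expected from interpolating halfway between the two orientations.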

Dodecahedron Calibration

The manual nature of manufacturing the dodecahedron with ArUco markers leads to deviations in the locations and rotations of the marker centres with respect to their ideal positions on the faces. As proposed in [31], the dodecahedron should be calibrated by minimising the appearance distance between the image \(I_c\) and the object \(O_t\) across all visible marker points \(\textbf{x}_i\):

where \(\textbf{p}_{\text {j}}\) is the marker pose with respect to the dodecahedron and \(\textbf{p}_{\text {k}}\) is the marker pose with respect to the camera. Using this, we determine the precise pose of each marker relative to the dodecahedron.

Impact-Pose Detection Using ArUco Markers

For the impact-pose detection, the impact hammer is equipped with a dodecahedron that has ArUco markers glued to its faces. The identified pose is then transformed into the desired coordinate system with the help of the reference dodecahedron with its exact location known. The procedure is schematically presented in Fig. 1.

The rotation matrices are denoted as \(\textbf{R}\) and the translation vectors as \(\varvec{t}\). The superscript denotes whether the quantity is related to the hammer \((\star )^\textrm{imp}\) or the reference \((\star )^\textrm{ref}\). The hammer and reference dodecahedrons are detected in the camera’s coordinate system; therefore, first the rotation matrix of the reference dodecahedron is transposed and its corresponding translation vector inverted.

The pose of the hammer with respect to the reference is calculated:

And with the known pose of the reference in the desired coordinate system, the pose of the hammer is calculated:

To calculate the location of the hammer tip upon impact, one final transformation from the dodecahedron centre to the tip is performed.
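The chain of transformations described in this section can be sketched in a few lines. The variable names, the tip offset and all numerical values are hypothetical; the sketch assumes that each pose maps object coordinates to the target frame as x_target = R x_object + t.

```python
import numpy as np

def invert_pose(R, t):
    """Inverse of the rigid transform x_cam = R x_obj + t."""
    return R.T, -R.T @ t

def hammer_tip_in_structure(R_imp, t_imp, R_ref, t_ref, R_ref_s, t_ref_s, tip_offset):
    """Hammer orientation and tip position in the structure's frame.

    (R_imp, t_imp), (R_ref, t_ref): hammer / reference pose in the camera frame
    (R_ref_s, t_ref_s): known reference pose in the structure frame
    tip_offset: tip position in the hammer dodecahedron's frame
    """
    R_inv, t_inv = invert_pose(R_ref, t_ref)      # camera -> reference frame
    R_rel = R_inv @ R_imp                          # hammer w.r.t. reference
    t_rel = R_inv @ t_imp + t_inv
    R_s = R_ref_s @ R_rel                          # hammer w.r.t. structure
    t_s = R_ref_s @ t_rel + t_ref_s
    tip = R_s @ tip_offset + t_s                   # centre -> hammer tip
    return R_s, tip

# Hypothetical detections (mm): both bodies rotated 90 degrees about camera z
Rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
R_s, tip = hammer_tip_in_structure(
    R_imp=Rz90, t_imp=np.array([100.0, 50.0, 500.0]),
    R_ref=Rz90, t_ref=np.array([100.0, 0.0, 500.0]),
    R_ref_s=np.eye(3), t_ref_s=np.array([10.0, 20.0, 30.0]),
    tip_offset=np.array([0.0, 0.0, -150.0]))
print(tip)
```

Since only the relative pose of the two dodecahedrons enters the result, the camera position itself does not need to be known.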

Experimental Study

To demonstrate the practical applicability of the proposed impact-location estimation, an experimental case study was devised, which had two stages. First, an experimental validation of the impact-pose determination was performed using a known impact location. Second, an FRF measurement campaign was performed on a laboratory test structure, where FRFs were obtained using multiple excitation repetitions.

Set-Up Calibration

An 11x16 ChArUco board was printed and glued to a glass plate. A total of 47 images of the board in different positions and orientations with respect to the camera were taken. For the image capturing, an industrial Basler acA4112-20um camera with a Basler C10-2514-3M-S f25mm lens was used. The images were captured at a resolution of 4096x3000 pixels. The coverage of the camera’s sensor by the chessboard corners was evaluated (Fig. 2). The camera was calibrated using the OpenCV Python library and the camera matrix and distortion coefficients were obtained [14]. The re-projection error for each calibration image was evaluated and is shown in Fig. 3.

Two dodecahedron objects with a side of 23.25 mm were 3D printed. ArUco markers were glued to their faces (Footnote 2). Altogether, 30 calibration photographs from different angles were taken for both dodecahedrons used later in the experimental study. The location and orientation deviations of the markers from the ideal ones were determined and accounted for during the transformations of the markers to the centre of the dodecahedron.

Validation of the Pose-Estimation Accuracy

To verify the proposed approach, an experimental validation was performed. The validation relies on the tip of the impact hammer being positioned at a precisely known location. To fix the tip at a single point, a special pointed hammer tip was used, along with a shallow blind hole being drilled in the test structure (Fig. 4). The reference dodecahedron was positioned in one of the structure’s holes with its position precisely known from the CAD model. In this manner, an accurate reference pose was defined. The camera aperture was set to the minimum value possible (f/1.4) to achieve the maximum depth of field, ensuring that all the markers on the dodecahedron were in focus. Furthermore, to achieve a focus on both the hammer and the reference dodecahedron, the camera was placed at an approximately equal distance from each of the dodecahedrons. Due to the stationary nature of the experiment, long exposure times could be used. Homogeneous lighting was ensured across the whole setup.

For the validation process, the camera was kept stationary while the hammer was tilted in different directions. The tip of the hammer was always kept at the associated hole. For each hammer pose an image was captured. The hammer was always placed in positions that ensured the most visible ArUco markers from the camera’s perspective. Altogether, 27 photographs were taken, with three examples being presented in Fig. 5.

The pose of the hammer tip in relation to the test structure’s coordinate system was determined for each image using the approach presented in “Impact-Pose Detection Using ArUco Markers”. The identified impact poses are depicted in Fig. 6 using the open-source Python package pyFBS [6].

Since the precise location of the impact hammer is available for this experimental verification, the results were compared to the predetermined location of the hammer tip on the structure. Multiple approaches with the calibration of the dodecahedron-marker location and orientation were analysed (Fig. 7). The results indicate that there is an improvement when compensating for the offsets determined during the calibration. The maximum translation error achieved using this approach was 0.58 mm, compared to 0.94 mm without any calibration. To determine the reliable orientation of the hammer towards the camera, three distinct orientations were used. In the first few images, where the errors of rotation+translation calibration are high, the impact hammer was oriented as shown in pose 1 (Fig. 5a). The markers captured by the camera in this particular orientation were not calibrated as successfully as in orientations where different markers are visible (pose 2 or 3, Fig. 5b and c, respectively), which led to poor results. Pose 2 used in the second batch of images (Fig. 5b) proved to return the best results and was therefore chosen as the reliable orientation in further experimental work. Since the maximum translation error can be considered smaller than the error achieved during a manual excitation, determining the impact location using the proposed approach is viable for practical use.

To analyse the effect of the number of visible ArUco markers on the accuracy of the proposed approach, tests were performed, using from 1 to all 6 visible markers on the hammer dodecahedron (Fig. 8). Meanwhile, the number of visible markers on the reference was kept constant. The results indicate that to achieve maximum accuracy, a larger number of visible markers is required. This indicates that using multiple cameras to capture a single impact would further improve the results.

Experimental Setup

The FRF acquisition was then performed on two beam-like aluminium structures held together with a bolted connection (Fig. 9). The test structure was supported by polyurethane foam blocks, which simulated free-free boundary conditions. One triaxial PCB 356A32 accelerometer was fixed to the structure using cyanoacrylate glue. Again, the reference dodecahedron was mounted at one of the structure’s holes, so its location could be accurately determined. For the excitation, a PCB 086C03 impact hammer with a vinyl tip was used. The hammer was fitted with a dodecahedron on the opposite end.

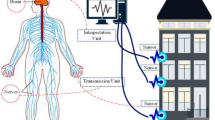

For the image acquisition, the camera from the validation setup was used. An analog trigger was applied to capture the image at the moment the hammer tip made contact with the structure. When the tip was in contact with the metal structure, the trigger circuit was completed (Fig. 10), thus triggering the camera.

Due to the non-conductive nature of the hammer tip used, a thin copper foil was glued on top of it so that an electrical contact was achieved upon exciting the structure. To ensure the maximum possible depth of field, the camera aperture was again set to its minimum value (f/1.4). The camera exposure time was set to 3 ms to avoid excessive motion blur distorting the images. The combination of a small aperture and a short exposure time meant that strong lighting had to be used. To further brighten the images, 2 dB of digital gain was applied.

A total of 100 excitations using the modal hammer were performed by hand. The impacts were spread around the target area due to the random variations in the repeatability of the impact. Upon every impact, an image was taken when the trigger circuit was completed (see, for example, Fig. 11). As shown previously, the number of visible markers captured affects the accuracy of the pose determination. Therefore, all the impacts were performed with the maximum possible number of markers visible.

The use of digital gain during image acquisition increases the image noise. Hence, the images were de-noised using the Non-Local Means de-noising algorithm [7] before being processed.

Next, a check of the structure’s linearity was performed to ensure that the FRF data are in fact independent of the excitation amplitude and that non-linearity can be neglected as a significant source of FRF differences between individual impacts. As the force level varies between individual excitations (Fig. 12a), the ordinary coherence function will be less than unity if the relation between the input and the output is not linear [20]. The ordinary coherence function (Fig. 12b) is close to one in the majority of the frequency region of interest, including resonant regions (indicated by orange vertical lines). This indicates that the FRF amplitude and phase are very repeatable from measurement to measurement, regardless of the force level. The ordinary coherence function is less than unity at the anti-resonance frequencies (indicated by red vertical lines). However, this is not a consequence of a structure’s non-linearity, but rather a consequence of an extremely poor signal-to-noise ratio at these frequencies (Footnote 3). Overall, it can be concluded that the non-linearity of the structure is not a significant source of FRF differences, and can thus be neglected.
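For illustration, the ordinary coherence function can be estimated from the repeated impacts by averaging auto- and cross-spectra, as in the sketch below. The synthetic impulse response, the pulse shape, the force amplitudes and the noise level are assumptions chosen only to show the expected behaviour; they do not represent the measured data.

```python
import numpy as np

def ordinary_coherence(forces, responses):
    """Ordinary coherence from time signals of shape (n_impacts, n_samples)."""
    F = np.fft.rfft(forces, axis=1)
    A = np.fft.rfft(responses, axis=1)
    S_ff = np.mean(np.abs(F) ** 2, axis=0)        # averaged input auto-spectrum
    S_aa = np.mean(np.abs(A) ** 2, axis=0)        # averaged output auto-spectrum
    S_fa = np.mean(np.conj(F) * A, axis=0)        # averaged cross-spectrum
    return np.abs(S_fa) ** 2 / (S_ff * S_aa)

rng = np.random.default_rng(0)
n = 256
k = np.arange(n)
h = np.exp(-0.05 * k) * np.sin(0.3 * k)           # hypothetical impulse response
pulse = np.zeros(n)
pulse[:2] = [1.0, 0.5]                            # short force pulse
amplitudes = np.array([0.5, 1.0, 1.5, 2.0, 3.0])  # varying force levels
forces = amplitudes[:, None] * pulse

# Linear, noise-free responses (circular convolution with h)
responses = np.fft.irfft(np.fft.rfft(forces, axis=1) * np.fft.rfft(h), n=n, axis=1)
coh_linear = ordinary_coherence(forces, responses)

# The same responses contaminated by measurement noise
noisy = responses + 0.5 * rng.standard_normal(responses.shape)
coh_noisy = ordinary_coherence(forces, noisy)
print(coh_linear.min(), coh_noisy.min())
```

For the linear, noise-free system the coherence equals one at every frequency line despite the varying force level, while added noise lowers it, most strongly where the response is weak.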

Results

The locations and orientations of the applied impacts were determined according to “Impact-Pose Detection Using ArUco Markers”. The identified impact poses are displayed in a 3D environment in Fig. 13.

The obtained time series from the accelerometer’s channels and the impact hammer were then used for the structure’s FRF estimation. The calculated FRFs as a simple ratio of the response and the excitation force for each individual impact and one channel (in the z direction) are presented in Fig. 14. Poor impact repeatability leads to inconsistent FRFs, which are especially apparent in the resonance and anti-resonance regions. By zooming in on the frequency range of 470–550 Hz, a shift in the anti-resonance frequency was observed for each impact. By inspecting the proximity of the natural frequency at 730 Hz, differences in the FRF magnitudes were observed for each impact. All of the above-mentioned observations are in accordance with the conclusions drawn in [18].
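The per-impact FRF estimate as a simple spectral ratio can be sketched as follows. The sampling frequency, the synthetic impulse response and the force pulse are assumptions for illustration; windowing and averaging, which are common in practice, are deliberately left out.

```python
import numpy as np

def frf_single_impact(force, response, fs):
    """FRF of one impact as the ratio of the response and force spectra."""
    F = np.fft.rfft(force)
    A = np.fft.rfft(response)
    freqs = np.fft.rfftfreq(len(force), d=1.0 / fs)
    return freqs, A / F

fs = 10000.0                                      # hypothetical sampling rate, Hz
n = 256
k = np.arange(n)
h = np.exp(-0.05 * k) * np.sin(0.3 * k)           # hypothetical impulse response
force = np.zeros(n)
force[:2] = [1.0, 0.5]                            # short force pulse
response = np.fft.irfft(np.fft.rfft(force) * np.fft.rfft(h), n=n)

freqs, frf = frf_single_impact(force, response, fs)
print(freqs[:3], np.abs(frf[:3]))
```

In this noise-free example the ratio recovers the spectrum of the impulse response exactly; with measured signals each impact yields a slightly different FRF, which is the spread examined in Fig. 14.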

Figure 14 presents typical results from the FRF measurement campaign. However, the introduction of ArUco markers and an estimation of the impact pose makes it possible to examine the dependency of the FRFs on the impact location. The real parts for the selected frequencies (close to the resonance and anti-resonance frequencies) in relation to impact location bias (determined x and y offsets) are depicted in Fig. 15.

In the following, we focus on the location of the impact only. The orientation deviations are considered insignificant compared to location errors and are therefore neglected. From an inspection of the results in Fig. 15, it is evident that the effect of location bias leads to linear changes in the FRFs’ real part for small offsets. This is in accordance with the findings presented in [8]. Minor deviations from the linear dependency are attributed to the unobserved sources of errors (e.g., measurement noise, impact orientation). The linear nature of the data enables a simple compensation of the FRFs for the error in the impact location, while neglecting all other sources of errors. First, the functional dependency of the FRFs’ real part on the impact location is deduced for each frequency point by fitting the measured data to the plane equation:

\[ \textrm{Re}\big (\textbf{Y}(f)\big ) = a\,\Delta \varvec{x} + b\,\Delta \varvec{y} + c \tag{10} \]

where \(\textbf{Y}(f)\) consists of the measured FRFs at an individual frequency line for all the repeated impacts and one response location, while \(\Delta \varvec{x}\) and \(\Delta \varvec{y}\) are vectors containing the coordinates of each impact in the structure’s coordinate system. In this manner, the coefficients a, b and c are obtained for each frequency line f. Due to the stationary placement of the sensors, there is no additional uncertainty in the measurement results due to their fluctuating position. Therefore, the proposed method can be used for any number and position of response sensors. A similar procedure can be applied to the imaginary part of the FRFs; however, for the sake of simplicity, only the real parts of the FRFs are examined in the scope of this work. The obtained approximated planes are presented along with the measured data in Fig. 15.
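A least-squares fit of the plane coefficients for all frequency lines at once can be sketched as follows. The array layout (impacts along the rows, frequency lines along the columns), the function name `fit_planes` and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def fit_planes(Y_real, dx, dy):
    """Fit Re(Y) = a*dx + b*dy + c for every frequency line.

    Y_real: (n_impacts, n_freq) real parts of the measured FRFs
    dx, dy: (n_impacts,) impact offsets in the structure's frame
    Returns a, b, c, each of shape (n_freq,).
    """
    dx = np.asarray(dx, dtype=float)
    dy = np.asarray(dy, dtype=float)
    M = np.column_stack([dx, dy, np.ones_like(dx)])
    coeffs, *_ = np.linalg.lstsq(M, Y_real, rcond=None)
    return coeffs[0], coeffs[1], coeffs[2]

# Synthetic check: 100 impacts, 3 frequency lines, exactly planar data
rng = np.random.default_rng(1)
dx, dy = rng.uniform(-5.0, 5.0, size=(2, 100))    # offsets in mm
a_true = np.array([0.010, -0.020, 0.005])
b_true = np.array([0.004, 0.015, -0.008])
c_true = np.array([1.0, -0.5, 0.2])
Y_real = np.outer(dx, a_true) + np.outer(dy, b_true) + c_true

a, b, c = fit_planes(Y_real, dx, dy)
print(a, b, c)
```

Solving all frequency lines in a single call keeps the fit inexpensive even for long spectra, since the design matrix depends only on the impact offsets.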

For an individual frequency point each FRF can then be compensated for location errors on the basis of the established functional dependency (Eq. (10)). Each FRF is translated parallel to the fitted plane to the ideal impact location (\({\Delta } x = 0\), \({\Delta } y = 0\)). The results of this compensation are presented in Fig. 16 for a single frequency point. The compensated data has a noticeably smaller spread around the reference value (the interquartile range has been reduced from 0.029 ms\(^{-2}\)/N for the measured FRFs to 0.009 ms\(^{-2}\)/N for the compensated FRFs), recognized in the origin (\({\Delta } x = 0\) and \({\Delta } y = 0\)) of the fitted plane. The remaining spread is attributed to the other uncontrollable sources of measurement errors that cannot be observed using the proposed approach (e.g. spread of impact orientation, instrumentation noise-floor). Figure 16 also justifies the assumption of treating the impact orientation as a less prominent source of FRF spread than the location offset.
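The compensation step and the interquartile-range comparison can be sketched as below. The slopes `a` and `b` are assumed to come from a preceding plane fit; here they are simply set to the values used to generate the synthetic data, and the noise level is an arbitrary assumption.

```python
import numpy as np

def compensate(Y_real, dx, dy, a, b):
    """Translate each measured value along the fitted plane to dx = dy = 0."""
    return Y_real - np.outer(dx, a) - np.outer(dy, b)

def iqr(values, axis=0):
    """Interquartile range along the given axis."""
    q75, q25 = np.percentile(values, [75, 25], axis=axis)
    return q75 - q25

# Synthetic data: 100 impacts, 2 frequency lines, planar trend plus noise
rng = np.random.default_rng(2)
dx, dy = rng.uniform(-5.0, 5.0, size=(2, 100))    # offsets in mm
a = np.array([0.010, -0.020])                      # assumed fitted slopes
b = np.array([0.004, 0.015])
c = np.array([1.0, -0.5])
noise = 0.002 * rng.standard_normal((100, 2))
Y_real = np.outer(dx, a) + np.outer(dy, b) + c + noise

Y_comp = compensate(Y_real, dx, dy, a, b)
print(iqr(Y_real), iqr(Y_comp))
```

After the compensation only the noise term remains around the plane offset c, which mirrors the reduced spread reported for the measured data.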

As the error is corrected for each frequency point, fully compensated FRFs across the entire frequency range can be obtained. In Fig. 17 the original measured FRFs are overlaid with the compensated ones. A significantly reduced spread of the FRFs’ magnitude is observed. The largest improvements are near the anti-resonance and resonance frequencies, where the effect of the location bias is most apparent.

The current experimental setup used only a single camera and assumes that multiple ArUco markers will be in the field of view of the camera all the time. If more cameras are added to the experimental setup we could have multiple poses, which could be used to either increase the spatial resolution or maintain the accuracy if a part of the dodecahedron from one camera becomes obscured.

Furthermore, by placing the dodecahedron on transducers we could estimate the positions and orientations of the output channels as well. This can reduce the time of positioning the transducers on the CAD model, as well as the number of possible wrong positions or orientations during the experiment.

Conclusion

In this paper the use of ArUco markers for impact-pose estimation in structural dynamics applications is investigated. For improved robustness in spatial tracking, the use of a dodecahedron with markers on its faces is proposed. By equipping the impact hammer with a dodecahedron, a precise impact location and orientation can be determined in the reference coordinate system. The approach is suited to FRF measurement campaigns where impact location determination or sufficient repeatability between successive impacts proves to be challenging.

The applicability of the proposed approach is demonstrated with an experimental case study, where the structure’s FRFs are acquired for one response and one excitation location with multiple repetitions. From the individual impact-pose estimations the quality of the impact location’s repeatability can be assessed. By assuming that the real and imaginary parts of the FRFs depend linearly on the impact offset, a compensation for the location error is proposed. The proposed method does not require a baseline measurement. Knowing the exact location of each impact, we can perform a linear approximation of the real part of the FRFs. Thus, we can estimate the value of the real part of the FRF at any point near the repeated impacts using the approximated plane. It is shown that by using this approach, an improved consistency of the estimated FRFs is obtained. The approach is suitable for applications where the precise impact location is required (e.g., virtual point transformation) or accurate FRFs are of key importance (e.g., frequency based substructuring, transfer-path analysis).

Notes

1. The modal parameters of vibrating structures can also be monitored in real time using a suitable eigen perturbation method. This method takes into account the uncertainties in the measured data by considering a first-order error model [5, 24]. The initial estimate of the modal parameters is continuously updated with new data as they are collected.

2. Markers with a size of 20 mm were determined to still be of practical use, while providing sufficient accuracy. Larger markers improve the accuracy and can be more reliably detected at a greater distance.

3. Note that the lower frequency range (coloured orange) is excluded from this inspection due to the presence of hardly measurable rigid-body modes.

References

Abdelbarr M, Chen YL, Jahanshahi MR, Masri SF, Shen WM, Qidwai UA (2017) 3d dynamic displacement-field measurement for structural health monitoring using inexpensive rgb-d based sensor. Smart materials and structures 26(12):125016

Avitabile P (2017) Modal testing: a practitioner’s guide. John Wiley & Sons

Babinec A, Jurišica L, Hubinský P, Duchoň F (2014) Visual localization of mobile robot using artificial markers. Procedia Engineering 96:1–9, modelling of Mechanical and Mechatronic Systems

Barbero-García I, Pierdicca R, Paolanti M, Felicetti A, Lerma JL (2021) Combining machine learning and close-range photogrammetry for infant’s head 3d measurement: a smartphone-based solution. Measurement 182:109686

Bhowmik B, Tripura T, Hazra B, Pakrashi V (2020) Real time structural modal identification using recursive canonical correlation analysis and application towards online structural damage detection. Journal of Sound and Vibration 468:115101

Bregar T, El Mahmoudi A, Kodrič M, Ocepek D, Trainotti F, Pogačar M, Göldeli M, Čepon G, Boltežar M, Rixen DJ (2022) pyFBS: A Python package for frequency based substructuring. Journal of Open Source Software 7(69):3399

Buades A, Coll B, Morel JM (2011) Non-local means denoising. Image Processing On Line 1:208–212

Čepon G, Ocepek D, Korbar J, Bregar T, Boltežar M (2022) Sensitivity-based characterization of the bias errors in frequency based substructuring. Mechanical Systems and Signal Processing 170:108800

De Klerk D, Visser R (2010) Characterization of measurement errors in experimental frequency based substructuring. ISMA 2010 Including USD2010 pp 1881–1890

De Klerk D, Rixen DJ, Voormeeren S (2008) General framework for dynamic substructuring: history, review and classification of techniques. AIAA journal 46(5):1169–1181

Ewins DJ (2009) Modal testing: theory, practice and application. John Wiley & Sons

Förstner W, Gülch E (1987) A fast operator for detection and precise location of distinct points, corners and centre of circular features. Proc of the Intercommission Conference on Fast Processing of Photogrammetric Data pp 281–305

Gao X, Hou X, Tang J, Cheng H (2003) Complete solution classification for the perspective-three-point problem. IEEE Trans Pattern Anal Mach Intell 25:930–943

Garrido S (2020a) Calibration with ArUco and ChArUco. OpenCV: Open source computer vision. https://docs.opencv.org/4.5.2/da/d13/tutorial_aruco_calibration.html

Garrido S (2020b) Detection of ChArUco corners. OpenCV: Open source computer vision. https://docs.opencv.org/4.5.2/d9/d6d/tutorial_table_of_content_aruco.html

Garrido S, Nicholson S (2021) Detection of ArUco markers. OpenCV: Open source computer vision. https://docs.opencv.org/master/d5/dae/tutorial_aruco_detection.html

Garrido-Jurado S, Muñoz-Salinas R, Madrid-Cuevas FJ, Marín-Jiménez MJ (2014) Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognition 47(6):2280–2292

Klerk DD (2011) How bias errors affect experimental dynamic substructuring. In: Structural Dynamics, Volume 3, Springer, pp 1101–1112

Liu Y, Zhou J, Li Y, Zhang Y, He Y, Wang J (2022) A high-accuracy pose measurement system for robotic automated assembly in large-scale space. Measurement 188:110426

Maia NMM, e Silva JMM (1997) Theoretical and experimental modal analysis. Research Studies Press

Maierhofer J, Mahmoudi AE, Rixen DJ (2020) Development of a low cost automatic modal hammer for applications in substructuring. In: Dynamic Substructures, Volume 4, Springer, pp 77–86

Ocepek D, Čepon G, Boltežar M (2022) Characterization of sensor location variations in admittance-based tpa methods. Journal of Sound and Vibration p 116888

Oščádal P, Heczko D, Vysockỳ A, Mlotek J, Novák P, Virgala I, Sukop M, Bobovskỳ Z (2020) Improved pose estimation of aruco tags using a novel 3d placement strategy. Sensors 20(17):4825

Panda S, Tripura T, Hazra B (2021) First-order error-adapted eigen perturbation for real-time modal identification of vibrating structures. Journal of Vibration and Acoustics 143(5)

Pennec X (1998) Computing the mean of geometric features application to the mean rotation. PhD thesis, INRIA

Romero-Ramirez FJ, Muñoz-Salinas R, Medina-Carnicer R (2018) Speeded up detection of squared fiducial markers. Image and vision Computing 76:38–47

Sani MF, Karimian G (2017) Automatic navigation and landing of an indoor ar. drone quadrotor using aruco marker and inertial sensors. In: 2017 International Conference on Computer and Drone Applications (IConDA), pp 102–107

Tocci T, Capponi L, Rossi G (2021) Aruco marker-based displacement measurement technique: uncertainty analysis. Engineering Research Express 3(3):035032

Trainotti F, Haeussler M, Rixen D (2020) A practical handling of measurement uncertainties in frequency based substructuring. Mechanical Systems and Signal Processing 144:106846

Tseng HY, Wu PC, Yang MH, Chien SY (2016) Direct 3d pose estimation of a planar target. In: 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), IEEE, pp 1–9

Wu PC, Wang R, Kin K, Twigg C, Han S, Yang MH, Chien SY (2017) Dodecapen: accurate 6dof tracking of a passive stylus. In: Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology, pp 365–374

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Transactions on pattern analysis and machine intelligence 22(11):1330–1334

Zodage T, Chaudhury A, Srivatsan R, Zevallos N, Choset H Hand-held stiffness measurement device for tissue analysis

Acknowledgements

The authors acknowledge partial financial support from the core research funding P2-0263 financed by ARRS, the Slovenian Research Agency, and project 101091536-DiCiM (Horizon Europe).

Ethics declarations

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Čepon, G., Ocepek, D., Kodrič, M. et al. Impact-Pose Estimation Using ArUco Markers in Structural Dynamics. Exp Tech 48, 369–380 (2024). https://doi.org/10.1007/s40799-023-00646-0
