Abstract

The Trust Region Subproblem is a fundamental optimization problem that plays a pivotal role in Trust Region Methods. However, the problem, and variants of it, also arise in quite a few other applications. In this article, we present a family of iterative Riemannian optimization algorithms that converge to a global optimum of a variant of the Trust Region Subproblem in which the inequality constraint is replaced with an equality constraint. Our approach uses either a trivial or a non-trivial Riemannian geometry of the search space, and requires only minimal spectral information about the quadratic component of the objective function. We further show how the theory of Riemannian optimization promotes a deeper understanding of the Trust Region Subproblem and its difficulties, e.g., a deep connection between the Trust Region Subproblem and the problem of finding affine eigenvectors, and a new examination of the so-called hard case in light of the condition number of the Riemannian Hessian operator at a global optimum. Finally, we propose to incorporate preconditioning via a careful selection of a variable Riemannian metric, and establish bounds on the asymptotic convergence rate in terms of how well the preconditioner approximates the input matrix.
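To make the "trivial geometry" concrete, the following minimal sketch runs plain Riemannian gradient descent on the unit sphere for the equality-constrained problem, written here as minimizing \(q(x) = x^{T}Ax + 2b^{T}x\) subject to \(\Vert x\Vert = 1\). The scaling of the objective, the function name `sphere_gradient_descent`, the fixed step size, and the iteration count are all assumptions made for illustration. It is not the family of algorithms presented in the article, and on its own it carries no guarantee of reaching a global optimum.

```python
import numpy as np

def sphere_gradient_descent(A, b, x0, step=None, iters=500):
    """Illustrative Riemannian gradient descent for
    q(x) = x^T A x + 2 b^T x on the unit sphere (A symmetric)."""
    x = x0 / np.linalg.norm(x0)
    if step is None:
        # Assumed conservative fixed step from a crude spectral bound.
        step = 1.0 / (4.0 * np.linalg.norm(A, 2) + 2.0 * np.linalg.norm(b) + 1e-12)
    for _ in range(iters):
        egrad = 2.0 * (A @ x + b)        # Euclidean gradient of q
        rgrad = egrad - (x @ egrad) * x  # orthogonal projection onto the tangent space at x
        x = x - step * rgrad             # move along the negative Riemannian gradient
        x = x / np.linalg.norm(x)        # retraction: renormalize back onto the sphere
    return x
```

For \(b = 0\) the problem reduces to a smallest-eigenvalue problem, which gives a quick sanity check: from a generic starting point the iterates approach a unit eigenvector of the smallest eigenvalue of \(A\).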

Notes

Standard in the sense that it uses the most natural choice of retraction and Riemannian metric.

We caution that [22] does not use the term “affine eigenvalues”.

This statement is a simple corollary of Lemma 2.2 in [20].

In spite of the method’s simplicity, we are not aware of any descriptions of this method earlier than Phan et al.’s (relatively) recent work.

References

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Adachi, S., Iwata, S., Nakatsukasa, Y., Takeda, A.: Solving the trust-region subproblem by a generalized eigenvalue problem. SIAM J. Optim. 27(1), 269–291 (2017)

Bakshi, A., Chepurko, N., Jayaram, R.: Testing positive semi-definiteness via random submatrices. In: 61st Annual IEEE Symposium on Foundations of Computer Science (2020)

Beck, A., Vaisbourd, Y.: Globally solving the trust region subproblem using simple first-order methods. SIAM J. Optim. 28(3), 1951–1967 (2018)

Boumal, N.: An Introduction to Optimization on Smooth Manifolds. To appear with Cambridge University Press (2022)

Boumal, N., Voroninski, V., Bandeira, A.S.: Deterministic guarantees for Burer–Monteiro factorizations of smooth semidefinite programs. Commun. Pure Appl. Math. 73(3), 581–608 (2019)

Carmon, Y., Duchi, J.C.: Analysis of Krylov subspace solutions of regularized non-convex quadratic problems. In: Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 31, pp. 10705–10715. Curran Associates Inc., Red Hook (2018)

Chambers, L.G., Fletcher, R.: Practical methods of optimization. Math. Gaz. 85(504), 562 (2001)

Chapelle, O., Sindhwani, V., Keerthi, S.: Branch and bound for semi-supervised support vector machines. In: Proceedings of the 19th International Conference on Neural Information Processing Systems, NIPS’06, pp. 217–224. MIT Press, Cambridge (2006)

Cucuringu, M., Tyagi, H.: Provably robust estimation of modulo 1 samples of a smooth function with applications to phase unwrapping. arXiv e-prints arXiv:1803.03669 (2018)

Edelman, A., Arias, T.A., Smith, S.T.: The geometry of algorithms with orthogonality constraints. SIAM J. Matrix Anal. Appl. 20(2), 303–353 (1998)

Gander, W., Golub, G.H., von Matt, U.: A constrained eigenvalue problem. Linear Algebra Appl. 114–115, 815–839 (1989). (Special Issue Dedicated to Alan J. Hoffman)

Golub, G.H., von Matt, U.: Quadratically constrained least squares and quadratic problems. Numer. Math. 59(1), 561–580 (1991)

Gould, N., Lucidi, S., Roma, M., Toint, P.: Solving the trust-region subproblem using the Lanczos method. SIAM J. Optim. 9(2), 504–525 (1999)

Hager, W.W.: Minimizing a quadratic over a sphere. SIAM J. Optim. 12(1), 188–208 (2001)

Han, I., Malioutov, D., Avron, H., Shin, J.: Approximating spectral sums of large-scale matrices using stochastic Chebyshev approximations. SIAM J. Sci. Comput. 39(4), A1558–A1585 (2017)

Joachims, T.: Transductive learning via spectral graph partitioning. In: Proceedings of the Twentieth International Conference on Machine Learning, ICML’03, pp. 290–297. AAAI Press (2003)

Lucidi, S., Palagi, L., Roma, M.: On some properties of quadratic programs with a convex quadratic constraint. SIAM J. Optim. 8(1), 105–122 (1998)

Luenberger, D.G.: Linear and nonlinear programming. Math. Comput. Simul. 28(1), 78 (1986)

Martínez, J.M.: Local minimizers of quadratic functions on Euclidean balls and spheres. SIAM J. Optim. 4(1), 159–176 (1994)

Mishra, B., Sepulchre, R.: Riemannian preconditioning. SIAM J. Optim. 26(1), 635–660 (2016)

Moré, J.J., Sorensen, D.C.: Computing a trust region step. SIAM J. Sci. Stat. Comput. 4(3), 553–572 (1983)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, New York (2006)

Phan, A.-H., Yamagishi, M., Mandic, D., Cichocki, A.: Quadratic programming over ellipsoids with applications to constrained linear regression and tensor decomposition. Neural Comput. Appl. 32(11), 7097–7120 (2020)

Shustin, B., Avron, H.: Randomized Riemannian preconditioning for orthogonality constrained problems (2020)

Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Practical sketching algorithms for low-rank matrix approximation. SIAM J. Matrix Anal. Appl. 38(4), 1454–1485 (2017)

Vandereycken, B., Vandewalle, S.: A Riemannian optimization approach for computing low-rank solutions of Lyapunov equations. SIAM J. Matrix Anal. Appl. 31(5), 2553–2579 (2010)

This work was supported by Israel Science Foundation Grant 1272/17.

Appendix A: Constructing \(\phi \)

We now show a simple way to construct a smooth \(\phi (\cdot )\) fulfilling both requirements stated in Items 1 and 2 in Sect. 6.

First, we choose some small parameter \(\epsilon > 0\) and set \( d:= \lambda _{\min }(\textbf{M}) - \epsilon \). We construct \(\phi (\alpha )\) to be a smoothed-out overestimate of \( \max (\alpha , -d) \). We first construct an underestimate. Let \(\gamma >1\) be another parameter, and define

Then \( \varphi (\alpha ) \) is a smooth function that approximates \(\max (\alpha , -d) \), but it is an underestimate: \(\varphi (\alpha ) \le \max (\alpha , -d) \).

While the difference between \( \max (\alpha , -d) \) and \(\varphi (\alpha )\) shrinks significantly as \( \gamma \) grows, we want to make sure that our approximation is greater than or equal to \( \max (\alpha , -d) \). To that end, let

where \(\textrm{W}\left[ 0,\cdot \right] \) is the zero branch of the Lambert-\( \textrm{W}\) function. Now, set:

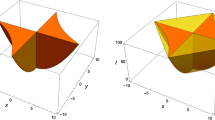

See Fig. 5 for a graphical illustration of \(\phi \). It is possible to show that

so Item 1 holds, and the approximation error (Item 2) is small if \(\epsilon \) is sufficiently small and \(\gamma \) is sufficiently large. The proof of Eq. (A.2) is rather technical and does not convey any additional insight into the BTRS and its solution, so we omit it.
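The construction can be illustrated numerically. The sketch below assumes a specific underestimate, the Boltzmann-weighted average of \(\alpha \) and \(-d\), and shifts it up by its largest possible gap to \( \max (\alpha , -d) \), which for this particular choice equals \(\textrm{W}\left[ 0, 1/e\right] /\gamma \). Both formulas are assumptions chosen to match the description above and should not be read as the definitions used in this appendix; the function names are hypothetical.

```python
import math

def lambert_w0_of_inv_e(iters=50):
    """Zero-branch Lambert W at 1/e: solve w * exp(w) = 1/e by Newton's method."""
    target = math.exp(-1.0)
    w = 0.3  # starting guess near the root
    for _ in range(iters):
        ew = math.exp(w)
        w -= (w * ew - target) / (ew * (1.0 + w))
    return w

def varphi(alpha, d, gamma):
    """Assumed smooth underestimate of max(alpha, -d):
    a Boltzmann-weighted average of alpha and -d with sharpness gamma."""
    c = -d
    z = gamma * (alpha - c)
    weight = 0.5 * (1.0 + math.tanh(0.5 * z))  # numerically stable logistic sigmoid of z
    return c + (alpha - c) * weight

def phi(alpha, d, gamma):
    """Assumed smooth overestimate: varphi shifted up by its worst-case gap W(1/e)/gamma."""
    return varphi(alpha, d, gamma) + lambert_w0_of_inv_e() / gamma

# Example with lambda_min(M) = 1, epsilon = 0.01, gamma = 20 (illustrative values).
lam_min, eps, gamma = 1.0, 0.01, 20.0
d = lam_min - eps
for alpha in (-3.0, -1.0, -0.9, 0.0, 2.0):
    print(alpha, phi(alpha, d, gamma), max(alpha, -d))
```

Under these assumptions \(\phi (\alpha ) - \max (\alpha , -d)\) lies between 0 and \(\textrm{W}\left[ 0, 1/e\right] /\gamma \approx 0.278/\gamma \), so for \(\alpha \ge -\lambda _{\min }(\textbf{M})\) the error \(\phi (\alpha ) - \alpha \) is at most \(\epsilon + 0.279/\gamma \), in line with the requirement that \(\epsilon \) be small and \(\gamma \) be large.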

Fig. 5 Illustration of the approximation’s behavior. Values of the variable \(\alpha \) are shown on the x-axis. Note that \( \phi (-\alpha ) > - \lambda _{\min }(\textbf{M}) \) for all \( \alpha \), while in addition \(\phi (\alpha ) \) well approximates \( \alpha \) for values of \(\alpha \ge -\lambda _{\min }(\textbf{M})\).

Cite this article

Mor, U., Shustin, B. & Avron, H. Solving trust region subproblems using Riemannian optimization. Numer. Math. 154, 1–33 (2023). https://doi.org/10.1007/s00211-023-01360-0
