Abstract

We use hyperbolic wavelet regression for the fast reconstruction of high-dimensional functions having only low dimensional variable interactions. Compactly supported periodic Chui–Wang wavelets are used for the tensorized hyperbolic wavelet basis. In a first step we give a self-contained characterization of tensor product Sobolev–Besov spaces on the d-torus with arbitrary smoothness in terms of the decay of such wavelet coefficients. In the second part we perform and analyze scattered-data approximation using a hyperbolic cross type truncation of the basis expansion for the associated least squares method. The corresponding system matrix is sparse due to the compact support of the wavelets, which leads to a significant acceleration of the matrix vector multiplication. In case of i.i.d. samples we can even bound the approximation error with high probability by loosing only \(\log \)-terms that do not depend on d compared to the best approximation. In addition, if the function has low effective dimension (i.e. only interactions of few variables), we qualitatively determine the variable interactions and omit ANOVA terms with low variance in a second step in order to increase the accuracy. This allows us to suggest an adapted model for the approximation. Numerical results show the efficiency of the proposed method.

Similar content being viewed by others

1 Introduction

We consider the problem of reconstructing a multivariate periodic function f on the d-torus \(\mathbb {T}^d\) from discrete function samples on the set of nodes \(\mathcal {X}=\{{\varvec{x}}_1,\ldots ,{\varvec{x}}_M\}\subset \mathbb {T}^d\). As a function model we use periodic Sobolev–Besov spaces with dominating mixed smoothness as they have proven useful in several multivariate approximation problems, see [45, 18, Chapt. 9] and [42, Chapter III,IV]. We provide fast algorithms which recover an individual function \(f:\mathbb {T}^d\rightarrow \mathbb {C}\) from unstructured samples (scattered data), where the error is measured in \(L_2(\mathbb {T}^d)\) and \(L_\infty (\mathbb {T}^d)\). In the sense of [33, Chapt. 22,23] our recovery operator is a Monte-Carlo method referring to the randomized setting, since the nodes in \(\mathcal {X}\) are drawn individually for each function f and are not supposed to work simultaneously for the whole class of functions like in [27, Sect. 9]. We will extend the idea in [3,4,5] to higher order smoothness and give statements that work with overwhelming probability. In fact, the core of the recovery is a wavelet based least squares algorithm. Throughout the paper we work in a rather general context with a minimal set of assumptions, namely in the context of (semi-)orthogonal compactly supported wavelets being a Riesz basis on a fixed level and having m vanishing moments, see (P1), (P2) and (P3). This allows for considering two main examples, periodic orthogonal Daubechies wavelets as well as semi-orthogonal Chui–Wang wavelets.

In this paper we give a self-contained and rather elementary proof for the characterization of Sobolev and Besov-Nikolskij spaces \(H^s_\textrm{mix}(\mathbb {T}^d)\) and \(\mathbf {\varvec{B}}^s_{2,\infty }(\mathbb {T}^d)\) in terms of such wavelet coefficients. There is an interesting qualitative phenomenon happening if s equals m, the order of vanishing moments, see Theorem 3.9. In case of Chui–Wang wavelets this is reflected by the order of the underlying B-spline.

For a finite dimensional hyperbolic cross type index set I, given as in (3.20) and cardinality |I|, we study the projection operator

As a first result wee state in Corollary 3.12 that for \(s<m\) the \(L_2(\mathbb {T}^d)\)-error is bounded by

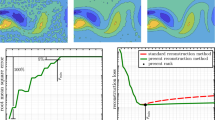

From this bound we infer that the operator \(P_I\) yields the asymptotic optimal error among all operators with rank |I|, see [18, Thm. 4.3.10]. In case \(s=m\), the above bound remains true if we replace the space \(\mathbf {\varvec{B}}^m_{2,\infty }(\mathbb {T}^d)\) by the smaller space \(H^m_{\textrm{mix}}(\mathbb {T}^d)\), see Corollary 3.13. One would actually expect a better rate. However, numerical experiments based on the tools developed in this paper indicate, that there must be an additional \(\log \)-term, see Fig. 8. Additionally, we give in Theorem 3.15 a bound for the \(L_\infty {(\mathbb {T}^d)}\)-error of the projection operator in both cases. Note, that these problems have some history. They belong to the field “hyperbolic wavelet approximation” and have already been investigated by several authors in the literature, e.g. [4, 14, 22, 24, 39], see Sect. 3.3.

In order to investigate the scattered data problem, where we are engaged with the sample set \(\mathcal {X}\) and the corresponding function values, we construct a recovery operator \(S_I^\mathcal {X}\). This operator computes a best least squares fit

to the given data \((f({\varvec{x}}))_{{\varvec{x}}\in \mathcal {X}}\) from the finite dimensional subspace spanned by the wavelets with indices in the hyperbolic cross type set I. We derive the coefficients \(a_j\) by minimizing the error \(\sum _{{\varvec{x}}\in \mathcal {X}}|f({\varvec{x}})-S_I^\mathcal {X}f({\varvec{x}})|^2\) by using an LSQR algorithm, see (3.26). This results in the hyperbolic wavelet regression in Algorithm 1. Assuming that the sample points in \(\mathcal {X}\) are drawn i.i.d. and equally distributed at random with \(|\mathcal {X}| > rsim |I|\,\log |I|\), we show in Corollary 3.22 for \(1/2<s<m\) and \(r>1\) that there is a constant \(C(r,d,s)>0\) such that for fixed \(\Vert f\Vert _{\mathbf {\varvec{B}}^s_{2,\infty }} \le 1\)

For details to the constant C(r, d, s) see Corollary 3.22. The proof is based on the well-known elementary Bernstein inequality for the deviation of a sum of random variables [41, Theorem 6.12]. It turns out that the error of the least squares approximation asymptotically coincides with the behavior of the projection operator \(P_I\) on the wavelet space with index set I. Even in the case \(s=m\) we show in Corollary 3.22 that the error of the least squares approximation inherits the error bound of the projection operator. Such operators as well as the approximation error are also considered in [8, 10, 12, 27]. Note that, in contrast to the expected error, which has been considered in those references, we show a new concentration inequality for the approximation error \(\left\Vert \smash {f-S_I^\mathcal {X}f} \right\Vert _{L_2(\mathbb {T}^d)}\). The often considered case where \(f\in H^2_{\textrm{mix}}(\mathbb {T}^d)\), [3, 19], can be covered in our results with wavelets with vanishing moments of order \(m\ge 2\), where we benefit from piecewise quadratic wavelets what concerns the convergence rate, see Fig. 8.

We use the parameter n to determine the index-set I, for details see Sect. 3.3. In Lemma 3.11 we state the well-known asymptotic bound \(|I| = \mathcal {O}(2^n n^{d-1})\) for hyperbolic cross type wavelet index sets I. This cardinality of the index set |I| in the hyperbolic wavelet regression drastically reduces the complexity compared to a full grid approximation, which requires an index set of order \(2^{d n}\). However, since the dimension d still appears in the exponent of a logarithmic term, we aim to further reduce the index set I, while keeping the same approximation rate. To this end we introduce the analysis of variance (ANOVA) decomposition, see [7, 25, 30, 31, Section 3.1.6], which decomposes the d-variate function into \(2^d\) ANOVA terms, i.e.

Each term corresponding to \({\varvec{u}}\) depends only on variables \(x_i\), where \(i\in {\varvec{u}}\). The number of these variables is called order of the ANOVA term. However, in practical applications with high-dimensional functions, often only the ANOVA terms of low order play a role in order to describe the function well, see [7, 15, 29, 37, 44]. For a rigorous mathematical treatment of this observation we introduce ANOVA inspired Sobolev spaces of dominating mixed derivatives with superposition dimension \(\nu \)

see also [16] and (4.5) for a natural generalization. These function spaces describe functions, which only consist of ANOVA terms of order less than the superposition dimension \(\nu \). Note that, in contrast to [21] we do not restrict our model to ANOVA terms of order \(\nu =1\). We propose the new Algorithm 2, which approximates functions in the space \(H^{s,\nu }_{\textrm{mix}}(\mathbb {T}^d)\) very well, by using the connection between the ANOVA terms and the corresponding wavelet functions. This allows us to reduce the cardinality of the needed index set I significantly to \(|I|=\mathcal {O}(\left( {\begin{array}{c}d\\ \nu \end{array}}\right) \, 2^n n^{{\nu }-1})\). Furthermore, we gain on the approximation error for \(r>1\) to

with a constant \(C(r,\nu ,s)>0\), for details see Sect. 4.1.

Our further strategy, finding the unimportant dimension interactions and then building an adapted model, allows an interpretation of the data. This strategy is based on sensitivity analysis in combination with computing sensitivity indices, see (2.4), analytically for the related wavelets. A similar algorithm for the approximation with Fourier methods is described in [36]. Note that in this case one has to use a full index set in frequency domain, which gives the larger cardinality of the index set \(|I|=\mathcal {O}(\left( {\begin{array}{c}d\\ \nu \end{array}}\right) \, 2^{n\nu })\) for the same approximation error, for details see Remark 4.3.

This paper is organized as follows. In Sect. 2 we recall the well-known ANOVA decomposition of a function on the d-dimensional torus. Section 3 is dedicated to the approximation using wavelets. In Sect. 3.1 we introduce periodic wavelet spaces, especially Chui–Wang wavelets which are semi-orthogonal, piecewise polynomial and compactly supported wavelets. We formulate three fundamental properties of wavelets which represent the only requirements for our theory. In Sect. 3.2 we give a rather simple and elementary proof of the one-sided sharp wavelet characterization in our periodic setting. In Sect. 3.3 we introduce the operator which truncates the wavelet decomposition by orthogonal projection on the wavelet spaces and we determine the number of necessary parameters. Two results from probability theory are summarized in Sect. 3.4. We use these results in Sect. 3.5 to bound the approximation error from scattered data approximation. This results in the concentration inequalities in Corollary 3.22. Algorithm 1 summarizes the hyperbolic wavelet regression, which gives us the approximant \(S_I^\mathcal {X}f\). In Sect. 4 we show the connection between the ANOVA decomposition and the hyperbolic wavelet regression. Therefore, we determine in Theorem 4.1 the ANOVA decomposition of our approximant \(S_I^\mathcal {X}f\), which we use to improve our algorithm to Algorithm 2. Finally, Sect. 5 is dedicated to some numerical examples, where we apply our algorithms to confirm our theory. The relevant facts about Sobolev–Besov spaces of mixed smoothness have been collected in the appendix.

1.1 Notation

In this paper we consider multivariate periodic functions \(f:\mathbb {T}^d\rightarrow \mathbb {C}\), \(d\in \mathbb {N}\), where we identify the torus \(\mathbb {T}\) with \({[-\tfrac{1}{2},\tfrac{1}{2})}\). As usually, we define the function spaces

normed by

The overall aim is to approximate a square integrable function \(f\in L_2(\mathbb {T}^d)\) by a function from a finite dimensional subspace of \(L_2(\mathbb {T}^d)\). We study the scattered-data problem, i.e. we have given some sample points \({\varvec{x}}\in \mathbb {T}^d\), where we denote the set of all sample points by \(\mathcal {X}\), and we have given the function values \({\varvec{y}} = (f({\varvec{x}}))_{{\varvec{x}}\in \mathcal {X}}\in \mathbb {C}^M\). We denote the number of sample points by \(M=|\mathcal {X}|\).

Let us first introduce some notation. In this paper we denote by [d] the set \(\{1,\ldots ,d\}\) and its power set by \(\mathcal {P}([d])\). The d-dimensional input variable of the function f is \({\varvec{x}}\), where we denote the subset-vector by \({\varvec{x}}_{{\varvec{u}}}=(x_i)_{i\in {\varvec{u}}}\) for a subset \({\varvec{u}}\subseteq [d]\). The complement of those subsets is always with respect to [d], i.e., \({\varvec{u}}^c=[d]\backslash {\varvec{u}}\). For an index set \({\varvec{u}}\subseteq [d]\) we define \(|{\varvec{u}}|\) as the number of elements in \({\varvec{u}}\). Since we will often use vector-notation, the relations < and > for vectors are always meant pointwise. As usual, we use the Kronecker delta

The vector-valued version is also a pointwise generalization \(\delta _{{\varvec{j}},{\varvec{\ell }}}=\prod _{i=1}^d\delta _{j_i,\ell _i}\). Additionally, if we write \(a+{\varvec{b}}\), where a is a scalar and \({\varvec{b}}\) is a vector, we mean that we add a to every component of the vector \({\varvec{b}}\). Furthermore, the notation \(X\lesssim Y\) means that \(X\le CY\) for some constant C which does not depend on the relevant parameters. The inner product for functions and vectors is defined by

For \(p\in \{1,2\}\) we introduce the norm \(\left\Vert \smash {{\varvec{x}}} \right\Vert _p=\left( \sum _{i=1}^d |{\varvec{x}}_i|^p\right) ^{1/p}.\) Moreover, we introduce the multi-dimensional Fourier coefficients on the torus by

2 The ANOVA decomposition

The aim of sensitivity analysis is to describe the structure of multivariate periodic functions f and analyze the influence of each variable. A traditional approach is to study the variance, defined in (2.3), of \(f({\varvec{x}}_{{\varvec{u}}})\) for \({\varvec{u}}\subseteq [d]\), to find out which terms contribute how much to the total variance of f. A concept used frequently, see for instance [7, 25, 30], is the following.

Definition 2.1

The ANOVA decomposition (Analysis of variance) of a function \({f:\mathbb {T}^d\rightarrow \mathbb {C}}\) is given by

For \({\varvec{u}}=\varnothing \) the function \(f_\varnothing \) is a constant that is equal to the grand mean

The one-dimensional terms for \(j=1,\ldots ,d\), the so-called main effects, can be estimated by the integral

which only depends on \({\varvec{x}}\) through \(x_j\), all other components \({\varvec{x}}_{j^c}\) have been integrated out. The corresponding two-factor interactions are

In general, we define the following.

Definition 2.2

Let f be in \(L_2(\mathbb {T}^d)\). For a subset \({\varvec{u}}\subseteq [d]\) we define the ANOVA terms by

We do not want to attribute anything to \({\varvec{x}}_{{\varvec{u}}}\) that can be explained by \({\varvec{x}}_{\varvec{v}}\) for strict subsets \({\varvec{v}}\subset {\varvec{u}}\), so we subtract the corresponding \(f_{\varvec{v}}({\varvec{x}}_{\varvec{v}})\). By averaging over all other variables not in \({\varvec{u}}\), we receive functions that depend only on \({\varvec{x}}_{{\varvec{u}}}\). The definition of \(f_{[d]}({\varvec{x}})\) ensures that the functions defined in Definition 2.2 satisfy (2.1). There are many ways to make a decomposition of the form (2.1). Indeed, an arbitrary choice of \(f_{{\varvec{u}}}\) for all \(|{\varvec{u}}| < d\) can be accommodated by taking \(f_{[d]}\) to be f minus all the other terms. The terms in Definition 2.2 are the unique decomposition (2.1), such that they have additionally mean zero.

Lemma 2.3

For all \({\varvec{u}}\ne \varnothing \) the decomposition in Definition 2.2 fulfills

Proof

The result follows by induction over \(|{\varvec{u}}|\) by

\(\square \)

In order to show orthogonality of the ANOVA terms, we use the following lemma.

Lemma 2.4

Let \(f\in L_2(\mathbb {T}^d)\) and \({\varvec{u}}\ne \varnothing \). Then for the ANOVA terms from Definition 2.2 we have

for \(j\in {\varvec{u}}\).

Proof

The proof is by induction on \(|{\varvec{u}}|\). For \({\varvec{u}}=\{j\}\) this follows from Lemma 2.3. Now suppose that \(\int _\mathbb {T}f_{\varvec{v}}({\varvec{x}}_{\varvec{v}})\, \textrm{d}x_j=0\) for \(j\in {\varvec{v}}\) whenever \(1\le |{\varvec{v}}|\le r<d\). Choose \({\varvec{u}}\) with \(|{\varvec{u}}|=r+1\) and pick \(j\in {\varvec{u}}\). To complete the induction, we calculate

\(\square \)

This lemma establishes \(L_2(\mathbb {T}^d)\)-orthogonality of the ANOVA-terms:

Lemma 2.5

Let \(f\in L_2(\mathbb {T}^d)\). Then the ANOVA-terms from Definition 2.2 are orthogonal, i.e. for \({\varvec{u}}\ne {\varvec{v}}\subseteq [d]\)

Proof

Since \({\varvec{u}} \ne {\varvec{v}}\), there either exists \(j \in {\varvec{u}}\) with \(j \notin {\varvec{v}}\), or \(j \in {\varvec{v}}\) with \(j \notin {\varvec{u}}\). Without loss of generality suppose that \(j \in {\varvec{u}}\) and \(j \notin {\varvec{v}}\). We integrate \(x_j\) out of \(f_{\varvec{u}} f_{\varvec{v}}\) as follows:

using Lemma 2.4 for the inner integral. \(\square \)

In order to get a notion of the importance of single terms compared to the entire function, we define the variance of a function by

The idea of the ANOVA decomposition is to analyze which combinations of the input variables \(x_j\) play a role for the approximation of f, i.e. which ANOVA terms are necessary to approximate the function f and which terms can be omitted. The variances of the ANOVA terms indicate their importance, i.e. if an ANOVA-term has high variance, this term contributes much to the variance of f. For that reason we do the following. For subsets \({\varvec{u}}\subseteq [d]\) with \({\varvec{u}}\ne \varnothing \) the global sensitivity indices (gsi) [40] are then defined as

where the variance of the ANOVA term \(f_{{\varvec{u}}}\) is

since the mean of the ANOVA terms is zero. The \(L_2(\mathbb {T}^d)\)-orthogonality of the ANOVA terms implies that the variance of \(f({\varvec{x}})\) for \(L_2(\mathbb {T}^d)\)-functions f can be decomposed as

This implies

The global sensitivity index \(\rho ({\varvec{u}},f)\) represents the proportion of variance of \(f({\varvec{x}})\) explained by the interaction between the variables indexed by \({\varvec{u}}\). The knowledge of the indices is very helpful for understanding the influence of the inputs, but the computation of these relies on the computation of the integrals of Eq. (2.2). Following [17] we want to perform the sensitivity analysis on a function g, which approximates f.

Remark 2.6

Instead of using the conventional Lebesgue measure \(\, \textrm{d}{\varvec{x}}\), we can instead use another measure, which leads to a possible different ANVOVA-decomposition. But \(L_2(\mathbb {T}^d)\)-orthogonality of the ANOVA terms still holds. Using for instance the Dirac measure located at a point \(\varvec{a}\), which is a simple evaluation of f at \(\varvec{a}\), yields to the anchored ANOVA decomposition, see [29].

3 Approximation with wavelets

In this section we will use periodized, translated and dilated wavelets to approximate periodic functions. We will give some theoretical results, which apply on general wavelets that fulfill the properties in Definition 3.3. The main result of this section is Theorem 3.9, which generalizes Theorem 3.7 to the multivariate and dual case. In contrast to Theorem 3.5 this is a sharp characterization of the decay of wavelet coefficients for functions in \(H^s_\textrm{mix}(\mathbb {T}^d)\) or \(\mathbf {\varvec{B}}_{2,\infty }^s(\mathbb {T}^d)\).

3.1 Wavelet spaces

In order to get basis functions on \(\mathbb {T}\), we use 1-periodized functions. We first introduce the B-splines (Fig. 1).

Definition 3.1

For \(m\in \mathbb {N}\) we define the cardinal B-spline \(B_m:\mathbb {R}\rightarrow \mathbb {R}\) of order m as a piecewise polynomial function recursively by

The function \(B_m(x)\) is a piecewise polynomial function of order \(m-1\). Furthermore, the support of \(B_m(x)\) is \((-\tfrac{m}{2},\tfrac{m}{2})\) and they are normalized by \(\int _{-\tfrac{m}{2}}^{\tfrac{m}{2}}B_m(x)\textrm{d}x =1\). We introduce the function spaces \(V_j\) by \(V_j=\mathop {\textrm{span}}\limits \{\phi (2^j\cdot -k)\mid k\in \mathbb {Z}\}\), where the function \(\phi \) is some scaling function, for example one can use here the m-th order cardinal B-spline \(B_m(x)\) as scaling function \(\phi \). Consequently, we have

From the nested sequence of spline subspaces \(V_j\), we build the the orthogonal complementary subspaces \(W_j\), namely

These subspaces are mutually orthogonal. Hence, we write

We are interested in a wavelet function \(\psi \in W_0\) that generates the subspaces \(W_j\) in the sense that

where

Thus, we use a normalization such that \(\left\Vert \smash {\psi _{j,k}} \right\Vert _{L_2(\mathbb {T})}=1\).

Example 3.2

An example for the scaling function is the cardinal B-spline, \(\phi = B_m\) of order m. The corresponding wavelet functions are the Chui–Wang wavelets [9], which are given by

where

In order to approximate periodic functions, we use 1-periodized versions of these wavelets (see Fig. 2),

where we first have to dilate and then periodize. To get a periodic decomposition of the form (3.2), we have to periodize the scaling function \(\phi \in V_0\) by

see [13, Section 9.3], which is the constant function, denoted by \(1_\mathbb {T}\). To simplify notation, we denote the scaling function \(\phi (\cdot -k)\) by \(\psi _{-1,k}\) and \(\psi ^\textrm{per}_{-1,0}=\phi ^\textrm{per}= 1_\mathbb {T}\).

We want to approximate a function \(f\in L_2(\mathbb {T})\) by some function in \(V_j\). If the wavelets do not induce an orthonormal basis, but a Riesz-basis for every index j, we have to consider the dual basis \(\psi _{j,k}^*\), which has the property

The usage of wavelets allow us a decomposition of a non-periodic \(f\in L_2(\mathbb {R})\) in terms of \(\psi _{j,k}\), using (3.2) by

If \(\{\psi _{j,k}(x)\}_k\) is an orthonormal basis for \(W_j\), the dual wavelet and the wavelet coincide, i.e. \(\psi _{j,k}=\psi _{j,k}^*\). The periodized version of (3.2) is

Hence, we can decompose every function \(f\in L_2(\mathbb {T})\) as

since the periodization of \(\psi _{j,k}\) reduces the parameter k to the range \(\{0,\ldots 2^j-1\}\) and the periodization of \(\psi _{-1}\) makes the parameter k in the first term obsolete. The periodization inherits indeed the orthogonality in (3.3), which can be seen by setting \(y = x+\ell '\) and \({\tilde{\ell }}=\ell -\ell '\) in

In order to give theoretical error estimates, we require some properties of the wavelets.

Definition 3.3

We define the following properties of a wavelet \(\psi :\mathbb {R}\rightarrow \mathbb {R}\).

-

The wavelet \(\psi (x)\) has compact support, i.e.,

$$\begin{aligned} \mathop {\textrm{supp}}\limits \psi =[0,S]. \end{aligned}$$(P1) -

The wavelet has vanishing moments of order m, i.e.

$$\begin{aligned} \int _{-\infty }^{\infty } \psi (x)x^\beta \, \textrm{d}x=0,\quad \beta = 0,\ldots ,m-1. \end{aligned}$$(P2) -

The periodized wavelets form a Riesz-Basis for every index j with

$$\begin{aligned} \gamma _m \sum _{k=0}^{2^j-1} |d_{j,k}|^2\le \left\| \sum _{k=0}^{2^j-1}d_{j,k}\psi _{j,k}^\textrm{per}(x)\right\| _{L_2(\mathbb {T})}^2\le \delta _m \sum _{k=0}^{2^j-1} |d_{j,k}|^2. \end{aligned}$$(P3)

For example, the Chui–Wang wavelets of order m in Example 3.2 fulfill all three properties. These wavelets have compact support with \(\mathop {\textrm{supp}}\limits \psi =[0,2m-1]\), they have vanishing moments of order m and the periodic Chui–Wang wavelets represent a Riesz-basis for every index j, [35, Theorem 3.5.] with the constants from there, see Table 1.

To generalize the one-dimensional wavelets to higher dimensions, we use the following tensor-product approach. To this end we define the multi-dimensional wavelets

where \({\varvec{j}}\in \mathbb {Z}^d\) and \({\varvec{k}}=(k_i)_{i=1}^d\in \mathbb {Z}^d\) are multi-indices. Analogously, we define the 1-periodized versions

where \({\varvec{j}}=(j_i)_{i=1}^d,\, j_i\in \{-1,0,2,\ldots \}\) and \({\varvec{k}}=(k_i)_{i=1}^d\) are multi-indices \({\varvec{k}}\in \mathcal {I}_{{\varvec{j}}}\). Hence, we define the sets

In an analogous way we define the multi-variate dual wavelets and their periodization.

3.2 Boundedness of wavelet coefficients for mixed regularity

The following results are essentially known and appear in several papers [14, 24, 39] in various different settings. We decided to give a rather simple and elementary proof of the one-sided sharp wavelet characterization in our periodic setting. We would like to point out that the vanishing moments of order m of these wavelets play a crucial role for the partial characterization which we have in mind. Our proof can be easily extended to \(1<p<\infty \). Note, that the analysis in [24] relies on proper Jackson and Bernstein inequalities.

The relevant function spaces are defined in the appendix. In order to analyze a best-approximation error of the function space \(V_j\), we characterize the \(H^s(\mathbb {T})\)-norm of a function by a sequence-norm of the wavelet-coefficients.

Lemma 3.4

Let \(f\in H^m(\mathbb {T})\) and \(\psi \) a wavelet, which is compactly supported, see (P1), and has vanishing moments of order m, see (P2). Then there exists a constant C, which depends on m, such that

Proof

We define the function

Note that this function is defined using the non-periodic wavelet function \(\psi \), which has compact support on [0, S]. Because of the moment condition (P2) and the fact that \((x-t)^{m-1}\) is a polynomial of degree at most \(m-1\), we have \(\Psi _m(x)\rightarrow 0\) for \(x\rightarrow \pm \infty \). Hence, \(\Psi _m(x)\) has also support [0, S]. Furthermore, m-times differentiation yields

The periodization of \(\Psi _m\) is

Since \(\Psi _m\) has compact support, the summation over \(\ell \) is finite and we can interchange differentiation and summation, which yields \(\frac{\, \textrm{d}^m }{\, \textrm{d}x^m}\Psi _m^\textrm{per}(x)=\psi ^\textrm{per}(x).\) Analogously, we confirm that

Now we calculate the inner products by using variable substitutions

where \(I_{\ell ,j,k}=\mathop {\textrm{supp}}\limits V(2^j\cdot +2^j\ell -k)\). Since \(\Psi _m\) is supported on [0, S], the interval \(I_{\ell ,j,k}\) is \([2^{-j}k-\ell ,2^{-j}(S +k)-\ell ]\cap [-\frac{1}{2},\frac{1}{2})\). We denote the union \(\cup _{\ell \in \mathbb {Z}}I_{\ell ,j,k}\) by \(I_{j,k}\). Note that in this proof we put points that are in multiple \(I_{\ell ,j,k}\), multiple times in \(I_{j,k}\). This is the case if \(S>2^{j}\), hence \(|I_{j,k}|=S\,2^{-j}\), which can be greater than 1.

Summation over k means to unite \(I_{j,k}\) for all \(k\in \mathcal {I}_j\). The intervals \(I_{j,k}\) for fixed j overlap for different k, but at most \(\lceil S\rceil \)-times. Hence for \(I_j=\displaystyle \cup _{k=0}^{2j-1}I_{j,k}\), we have \(\int _{I_j}|g(x)|^2\, \textrm{d}x\le S\int _\mathbb {T}|g(x)|^2\, \textrm{d}x\). All together, this yields

For the scaling function we have

Now the assertion follows from (3.10) and (3.11). \(\square \)

We use this one-dimensional Lemma to give a characterization for multi-dimensional functions. Instead of the function space \(H^m(\mathbb {T})\) we have to use functions from \(H^m_\textrm{mix}(\mathbb {T}^d)\).

Theorem 3.5

Let \(f\in H^m_{\textrm{mix}}(\mathbb {T}^d)\) and \(\psi ^\textrm{per}_{{\varvec{j}},{\varvec{k}}}\) be the periodized, dilated and translated versions of a wavelet \(\psi \), which is compactly supported, see (P1), and has vanishing moments of order m, see (P2). Then there exists a constant C, which depends on m and d, such that

where we define the index norm \(|{\varvec{j}}|_1=\sum _{i,j_i\ge 0} j_i\).

Proof

We use a multi-variate version of the function \(\Psi _m\) of the proof of Lemma 3.4, which we get by tensorizing the one-dimensional functions, \(\Psi _m({\varvec{x}})= \prod _{i=1}^d \Psi _m(x_i)\). This function is supported on \([0,S]^d\). Furthermore, we have

where \(\varvec{1}\) is the d-dimensional vector of ones. We also derive multi-dimensional identities like in (3.7), where we tensorize the functions and differentiate in every dimension m times. Let the multi-index \({\varvec{j}}\) be fixed, with \({\varvec{u}} = \{i\in [d]\mid j_i\ge 0\}\). We apply the partial integration in the dimensions i, where \(j_i\ge 0\), i.e. in the dimensions \({\varvec{u}}\). In these dimensions we use (3.7) with \(j=j_i\) and \(x=x_i\). Therefore we get

where the vector \(\varvec{1}_{({\varvec{u}})}\) is the vector which is 1 at the indices \({\varvec{u}}\) and all other entries are zero. The intervals for integration are given by the support of \(\Psi _m\), i.e.

where expressions over multi-indices are always meant component-wise. That means \((2^{-{\varvec{j}}}{\varvec{k}})_i=2^{-{j_i}}k_i\). We denote the union \(\cup _{\varvec{\ell }\in \mathbb {Z}^d}I_{\varvec{\ell },{\varvec{j}},{\varvec{k}}}\) by \(I_{{\varvec{j}},{\varvec{k}}}\). Note that in this proof we put points that are in multiple \(I_{\varvec{\ell },{\varvec{j}},{\varvec{k}}}\), multiple times in \(I_{{\varvec{j}},{\varvec{k}}}\). This is the case if \(S>2^{j_i}\) for some i, hence \(|I_{{\varvec{j}},{\varvec{k}}}|=S\,2^{-|{\varvec{j}}_{{\varvec{u}}}|_1}\), which can be greater than 1. Like in (3.9) we use Cauchy–Schwarz-inequality and get

where the constant depends on m and \(|{\varvec{u}}|\). Summation over \({\varvec{k}}\in \mathcal {I}_{\varvec{j}}\) means to unite \(I_{{\varvec{j}},{\varvec{k}}}\) for all \({\varvec{k}}\in I_{\varvec{j}}\). The intervals \(I_{{\varvec{j}}_,{\varvec{k}}}\) have length \(2^{-|{\varvec{j}}_{\varvec{u}}|_1}S^{|{\varvec{u}}|}\) and they are centered at the points \(2^{{\varvec{j}}_{\varvec{u}}}{\varvec{k}}_{\varvec{u}}\) for \({\varvec{k}}_{\varvec{u}}\in \mathcal {I}_{{\varvec{j}}_{\varvec{u}}}\). Hence, the intervals overlap at most \(\lceil S\rceil ^{|{\varvec{u}}|}\)-times, i.e. we have

where the constant depends on m and d. This finishes the proof. \(\square \)

In the previous proof the function f had to have the same smoothness as the order m of the vanishing moments property (P2). If we require only slightly less smoothness, we get a much better characterization of functions in \(V_j\), which uses the sum instead of the supremum of the wavelet coefficients if the fractional smoothness parameter s satisfies \(s<m\). Again, we prepare the multi-dimensional result by proving the following one-dimensional result first.

Lemma 3.6

Let \(f\in H^s(\mathbb {T})\), \(0<s<m\) and \(\psi ^\textrm{per}_{j,k}\) an 1-periodized wavelet, which is compactly supported, see (P1), has vanishing moments of order m, see (P2), and forms a Riesz-basis, see (P3). Then there exists a fixed constant C, which depends on m, such that

where \(\delta _m\) is the Riesz constant from (P3) and where we use \(|j|_1=j\) if \(j\ge 0\) and 0 otherwise.

Proof

The first summand for \(j=-1\) is \(|\langle f, 1_\mathbb {T}\rangle |^2=\left\Vert \smash {f} \right\Vert ^2_{L^1(\mathbb {T})}\le C\left\Vert \smash {f} \right\Vert _{H^s(\mathbb {T})}^2\). Now we consider a fixed index \(j\ge 0\). We will use the equivalent norm given in (A3) in the appendix (univariate version). This yields in particular for the block \(f_{q}\)

The decomposition of f in dyadic blocks and triangle inequality yields

Let now \(\ell \in \mathbb {Z}\) be fixed. We distinguish two cases and we begin with \(\ell >0\). Here we have

Note that at this point we need the property that \(\psi _{j,k}\) form a Riesz basis for fixed j, i.e (P3), and every Riesz basis is a frame with the same constants. Using the Riesz-basis property (P3), summation over the weighted wavelet coefficients yields

For the remaining case \(\ell \le 0\) we use Lemma 3.4 and (3.12), i.e we have

where the constant is from Lemma 3.4 and depends on m. This yields

The assertion follows from (3.14) and (3.16). \(\square \)

By a similar and straight-forward direction-wise analysis as in Theorem 3.5 we get the following multivariate version from (3.13) and (3.15),

where we define \({\varvec{\ell }}_-{:}{=}((\ell _1)_-,...,(\ell _d)_-)\) with \(x_{-}=\min \{0,x\}\).

The following result represents a multivariate version of Lemma 3.6.

Theorem 3.7

Let \(f\in L_2(\mathbb {T}^d)\), \(0<s<m\) and \(\psi \) a wavelet, which is compactly supported, see (P1), has vanishing moments of order m, see (P2), and forms a Riesz-basis, see (P3). Then there exists a constant C, which depends on m, s and d, such that

and

where we use \(|{\varvec{j}}|_1=\sum _{i,j_i\ge 0} j_i\).

Proof

The relation in (3.18) can be shown along the lines of Lemma 3.6 using (3.17) instead of (3.13) and (3.15) at the respective place. However, let us additionally give a different proof argument based on an abstract tensor product result. For this end we need the sequence space

Corollary 3.6.(i) for the case \(p=2\) from [39] gives us a result of the multivariate versions of these one-dimensional sequence spaces. It was shown that the multivariate sequence spaces are the tensor products of the one-dimensional sequence spaces. In our case we have to consider the sequence spaces of the wavelet coefficients, where \(a_{{\varvec{j}},{\varvec{k}}}=\langle f,\psi _{ {\varvec{j}}, {\varvec{k}}}^{*,\textrm{per}}\rangle \). Theorem 2.1 also from [39] shows that the spaces \(H^s_\textrm{mix}(\mathbb {T}^d)\) coincide with the tensor products \(\otimes _{i=1}^d H^s(\mathbb {T})\). Our one-dimensional Lemma 3.6 bounds the operator which maps a function from \(H^s(\mathbb {T})\) to \(b_2^s\). Hence, the tensor product operator is also bounded between the tensor-product spaces.

As for (3.19) we again need a direct argument since a counterpart of the mentioned tensor product result is not available. The modification is straightforward and again based on (3.17). \(\square \)

Remark 3.8

Note that the constant in the previous theorem is the d-th power of the constant in Theorem 3.6. We receive the same constant in an elementary proof which uses multi-dimensional ideas of the proof of Lemma 3.6.

The version in Theorem 3.7 is not suitable for our purpose. We want to approximate a function \(f\in L_2(\mathbb {T}^d)\) in terms of multi-dimensional tensor products of dilated and translated versions of the wavelet \(\psi \), given in (3.6), i.e.

which is the multi-dimensional version of (3.4). For that reason we need a characterization with the scalar products \(\langle f, \psi _{{\varvec{j}},{\varvec{k}}}^{\textrm{per}*}\rangle \) instead of \(\langle f, \psi _{{\varvec{j}},{\varvec{k}}}^{\textrm{per}}\rangle \).

Theorem 3.9

With the assumptions like in the previous theorem and letting \(\psi ^{\textrm{per}*}_{{\varvec{j}},{\varvec{k}}}\) denote the dual wavelets corresponding to the wavelets \(\psi ^{\textrm{per}}_{{\varvec{j}},{\varvec{k}}}\). There exists a constant C, which depends on m, s and d, such that

and

where we define the index norm \(|{\varvec{j}}|_1=\sum _{i,j_i\ge 0} j_i\).

Proof

In this proof we use the property (P3), i.e. that \(\{\psi ^\textrm{per}_{{\varvec{j}},{\varvec{k}}}\}_{{\varvec{k}}\in \mathcal {I}_{\varvec{j}}}\) as well as their duals \(\{\psi ^{\textrm{per},*}_{{\varvec{j}},{\varvec{k}}}\}_{{\varvec{k}}\in \mathcal {I}_{\varvec{j}}}\) are a Riesz-basis for every fixed \({\varvec{j}}\). That means

Hence, this theorem follows immediately from Theorem 3.7. \(\square \)

Note that the converse inequality for orthogonal wavelets in case \(0<s<m-\tfrac{1}{2}\) was shown in [39, Prop. 2.8 ii)].

3.3 Hyperbolic wavelet approximation

In the sequel we always deal with multi-dimensional periodic wavelets \(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}\), which are compactly supported, see (P1), have vanishing moments of order m, see (P2), and form a Riesz basis, see (P3). The last subsection motivates to introduce an approximation operator \(P_n\), which truncates the wavelet decomposition, by

in order to approximate a function \(f\in L_2(\mathbb {T}^d)\). To do so, we define the index sets

The operator \(P_n\) is the projection of a function in \(L_2(\mathbb {T}^d)\) onto the space

In Fig. 3 every small square stands for one multi-index \({\varvec{k}}\). The operator \(P_3\) chooses those wavelet functions, for which the corresponding square is colored, i.e. all \({\varvec{k}}\) in the index-set \(\mathcal {I}_{\varvec{j}}\) where \(|{\varvec{j}}|_1\le 3\). Using Theorem 3.9, we estimate the approximation error of this operator by

Corollary 3.10

Let \(f\in H^s_{\textrm{mix}}(\mathbb {T}^d)\). For \(s<m\) we have for the projection operator \(P_n\) defined in (3.20)

Proof

Due to the wavelet decomposition of f, we have

Taking the square root gives the assertion. \(\square \)

Note that this result can be compared with [3, Theorem 3.25] for \(m=2\). But we get a better approximation rate, since we proved the characterization in Theorem 3.9, whereas in [3] only a characterization of type from Theorem 3.5 was proven. Related results also appeared in [14, Theorem 3.2], [24, Proposition 6], [39, Theorem 2.11], but with less transparent requirements on the wavelets.

We also give a relation between the number of necessary parameters (degrees of freedom) and the order of approximation. The content of the Lemma below is essentially known, see [6, Lem. 3.6].

Lemma 3.11

Let \(N{:}{=}\text{ rank } P_n\) be the number of parameters, that we need to describe the space \(V_n^\textrm{per}(\mathbb {T}^d)\), defined in (3.22), which is induced by a wavelet which fulfills (P1), (P2) and (P3). Then

Proof

The number of parameters is

where \({\varvec{u}}\) always denotes the index set \({\varvec{u}} = \{i\in [d]\mid {\varvec{j}}_i\ge 0\}\). We consider each summand seperately. Therefore we consider the case where \({\varvec{j}}\ge \varvec{0}\),

since there are \(\left( {\begin{array}{c}i-1\\ d-1\end{array}}\right) \) partitions of i into non-zero natural numbers. For this sum holds

Summing over all \({\varvec{u}}\in {\mathcal {P}}([d])\) gives us

\(\square \)

Corollary 3.12

Let \(f\in H^s_{\textrm{mix}}(\mathbb {T}^d)\). For \(0<s<m\) we have for the projection operator \(P_n\) defined in (3.20) with \(N{:}{=}\text{ rank } P_n\). Then

Proof

This follows from Corollary 3.10 together with Lemma 3.11. \(\square \)

Note that the previous corollary deals with the case \(s<m\), i.e. the smoothness s is smaller than the order m of vanishing moments of the wavelet. For the case \(s=m\) we can only prove the following worse bound, which is based on the estimate in Theorem 3.5. We do not know whether this bound is optimal or can be improved.

Corollary 3.13

Let \(f\in H^m_\textrm{mix}(\mathbb {T}^d)\) and \(P_n\) being the approximation operator defined in (3.20). Then

Proof

Like in Corollary 3.10 we have

In contrast to Corollary 3.10 we have to sum over the indices \({\varvec{j}}\) instead of taking the supremum. By first considering the cases where \({\varvec{j}}\ge \varvec{0}\), we have by [6, Lemma 3.7], that

Taking the scaling functions into account, which are constant, i.e. \(\psi _{-1,0}=1\) we have

The estimation regarding the number N of parameters follows analogously as in Lemma 3.11. \(\square \)

Remark 3.14

With literally the same argument we obtain an analogous \(L_2\)-bound also for \(f\in \mathbf {\varvec{B}}^s_{2,\infty }(\mathbb {T})\) if \(s<m\). This is a direct consequence of Theorems 3.7, 3.9.

The characterizations of our wavelet spaces also allow a bound on the \(L_{\infty }\)-error.

Theorem 3.15

For \(1/2<s<m\) we have for the projection operator \(P_n\) defined in (3.20)

whereas for \(s=m\) we have

Proof

Using triangle inequality we obtain in case \(1/2<s<m\)

Applying Cauchy–Schwarz-inequality yields

Incorporating \(|\psi _{{\varvec{j}},{\varvec{k}}}({\varvec{x}})|\lesssim 2^{j/2}\) implies

Note, that the last estimate boils down to estimate the sum, which has been already done in Corollary 3.13.

In the remaining case where \(s = m\), we only have the weak characterization in Theorem 3.5. This gives a slightly worse bound for the \(L_\infty \)-error. Like in the previous estimates we have

where we bounded the last sum again by using [6, Lemma 3.7]. The estimation for the space \(\mathbf {\varvec{B}}_{2,\infty }^s(\mathbb {T}^d)\) follows similarly. \(\square \)

Note that, using Lemma 3.11 this Theorem can also be written in terms of the number of degrees of freedom N, which gives for \(s<m\)

3.4 Tools from probability theory

In this subsection we collect some basic tools from probability theory, which we will apply later to our concrete settings. Concentration inequalities describe how much a random variable spreads around the expectation value. One basic result about the spectral norm of sums of complex rank-1-matrices from [43, Theorem 1.1] is the following.

Theorem 3.16

(Matrix Chernoff) Consider a finite sequence \(\varvec{A}_i\in \mathbb {C}^{N\times N}\) of independent, random, self-adjoint, positive definite matrices, where the eigenvalues satisfy \(\mu _{\max }(\varvec{A}_i)\le R\) almost surely. Define

then

We will use this theorem in Theorem 3.19 to prepare error estimates for the recovery of individual functions.

Another basic inequality which we will use later is the Bernstein inequality, see [41, Theorem 6.12].

Theorem 3.17

Let \(\mathbb {P}\) be a probability measure on \(\mathbb {T}^d\), \(B>0\) and \(\sigma >0\) be real numbers and \(M\ge 1\) be an integer. Furthermore, let \(\xi _1,\ldots ,\xi _M:\mathbb {T}^d\rightarrow \mathbb {R}\) be independent random variables satisfying \(\mathbb {E}\,\xi _i=0\), \(\left\Vert \smash {\xi _i} \right\Vert _\infty \le B\) and \(\mathbb {E}\,\xi _i^2\le \sigma ^2\) for all \(i=1,\ldots ,M\). Then we have

3.5 Hyperbolic wavelet regression

So far we have bounded the error between a function and the approximation operator \(P_nf\), defined in (3.20), in Corollary 3.10. Now we want to consider the case where we have random sample points \({\varvec{x}}\in \mathcal {X}\subset \mathbb {T}^d\) with cardinality \(|\mathcal {X}|=M\) together with the function values \({\varvec{y}} = (f({\varvec{x}}))_{{\varvec{x}}\in \mathcal {X}}\). In the sequel we always consider the case where the samples \({\varvec{x}}\in \mathcal {X}\) are drawn i.i.d. at random according to the uniform Lebesgue measure. For that reason we introduce the d-dimensional probability measure \(\, \textrm{d}\mathbb {P}= \otimes _{i=1}^d \, \textrm{d}x_i\). In this scenario we do not have the wavelet coefficients \(\langle f, \psi _{{\varvec{j}},{\varvec{k}}}^{\textrm{per},*}\rangle \) at hand. However, we study least squares solutions of the overdetermined system

where \(\varvec{A}=(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}({\varvec{x}}))_{{\varvec{x}}\in \mathcal {X},{\mathop {{\varvec{k}}\in \mathcal {I}_{\varvec{j}}}\limits ^{{\varvec{j}}\in \mathcal {J}_n}}}\in \mathbb {C}^{M\times N}\) is the hyperbolic wavelet matrix with \(M>N\). At some point we will reduce the number of columns of the hyperbolic wavelet matrix \(\varvec{A}\). For that reason we will always denote the number of parameters, i.e. the number of columns of our wavelet matrix, by N. In order to also minimize the \(L_2(\mathbb {T}^d)\)-error, we minimize the residual \(\left\Vert \smash {\varvec{A}\varvec{a}-{\varvec{y}}} \right\Vert _2\). Multiplying the system (3.25) with \(\varvec{A}^*\) gives

If the hyperbolic wavelet matrix \(\varvec{A}\) has full rank, the unique solution of the least squares problem is

Computing these coefficients \(\varvec{a}\) gives us the wavelet coefficients of an approximation \(S_n^\mathcal {X}f\) to f, i.e

To compute this approximant \(S_n^\mathcal {X}f\) numerically, we have to ensure that the condition number of the matrix \(\varvec{A}^*\varvec{A}\) is bounded away from zero. In order to apply Theorem 3.16 to our purposes, we use for \(i=1,\ldots M\) and \({\varvec{x}}_i\in \mathcal {X}\) the matrices

Hence, we have \(\sum _{i=1}^M \varvec{A}_i=\frac{1}{M} \varvec{A}^*\varvec{A}\). Additionally, these matrices fulfill the conditions in Theorem 3.16. Since we will often consider the mass matrix

we have a closer look to its structure. In fact, the matrix \(\varvec{\Lambda }\) has entries \(\langle \psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per},\psi _{\varvec{i},\varvec{\ell }}^\textrm{per}\rangle \), that are zero for \({\varvec{j}}\ne \varvec{i}\) because of the orthogonality of the one-dimensional wavelets for different scales j and i, see (3.5). We denote the entries of the matrix \(\varvec{\Lambda }\) for \({\varvec{k}}\in \mathcal {I}_{\varvec{j}}\) by

Having a closer look at these entries, we see that there are only at most \(\lceil 2S-1\rceil \) ones of the \(\lambda _{ j,k}\) non-zero for every one-dimensional index j. Additionally, these non-zero entries are the same for every index j. Furthermore, the matrix \(\varvec{\Lambda }\) is symmetric. Since the matrix \(\varvec{\Lambda }\) has the entries \(\langle \psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per},\psi _{\varvec{i},\varvec{\ell }}^\textrm{per}\rangle \), it has a block structure and every block is dedicated to one index \({\varvec{j}}\). Therefore we introduce the partial matrices

where the circulant matrices \(\mathop {\textrm{circ}}\limits {\varvec{y}}\in \mathbb {C}^{r\times r}\) are defined by \((\mathop {\textrm{circ}}\limits {\varvec{y}})_{i,j}=y_{i-j+1 \mod r}\) and \(\otimes \) denotes the Kronecker product of matrices.

Figure 4 shows the structure of \(\varvec{\Lambda }\) for \(d=1\) and the Chui–Wang wavelets of order \(m=2\), which mean, in every column of every block there are at most \(4m-1\) non-zero entries, only \(2m-1\) of them are different. Equal colors in the picture stand for equal matrix entries. In higher dimensions we get one block for every index \({\varvec{j}}\), which is the Kronecker product of the one-dimensional circulant matrices.

Having these only few different entries of \(\varvec{\Lambda }\) in mind, we can bound the lowest eigenvalue of this matrix away from zero.

Lemma 3.18

Let the matrix \(\varvec{\Lambda }\) be defined like in (3.27). Then we can bound the eigenvalues of this matrix by

where the constants \(\gamma _m\) and \(\delta _m\) are the Riesz bounds from (P3).

Proof

As usual, we begin with the one-dimensional case. As mentioned before this lemma, this matrix has only few non-zero entries. In fact, for \(d=1\) we have a block-diagonal matrix with blocks belonging to every j, see also Fig. 4. To be precise, the blocks are circulant matrices

For the case \(j = -1\) this is only 1, which is also the eigenvalue. For \(j\ge 0\) we use [34, Theorem 3.31] and we write a circulant matrix as

where \(\varvec{F}_{2^j}=\left( w_{2^j}^{k\ell }\right) _{k,\ell =0}^{2^j}\) is the Fourier matrix of dimension \(2^j\) with the primitive \(2^j\)-th roots of unity \(w_{2^j}{:}{=}\textrm{e}^{-2\pi \textrm{i}2^{-j}}\). In order to bound the eigenvalues of the matrix \(\varvec{\Lambda }\), we have to determine the infimum and supremum of all eigenvalues of all blocks \(\varvec{\Lambda }_j\). Since the Fourier matrices are orthogonal, we have

and analog for the maximum. To bound this term we calculate the Fourier coefficients (see (1.1)) of the wavelets \(\psi _{j,k}(x)\) using substitution in the integral by

We begin with the case where j is big enough, such that \(2^j>S\), so that \(\varvec{\lambda }_j=\left( \langle \psi _{j,0},\psi _{j,k}\rangle \right) _{k\in \mathcal {I}_j}\). Therefore we get, using Parsevals’ equality,

We denote

Hence,

For the other case, where \(2^j\le S\), we get the same estimates in a similar way. Therefore the eigenvalues of our block of the desired matrix \(\varvec{\Lambda }\) are bounded by

These extreme values coincide with the Riesz constants, which can be seen as follows

where \(\hat{\varvec{d}}_r\) is the r-th component of \(\hat{\varvec{d}}=\varvec{F}_{2^j}\varvec{d}=\varvec{F}_{2^j}(d_{j,k})_{k\in \mathcal {I}_j}.\) Taking into account that \(\left\Vert \smash {\hat{\varvec{d}}} \right\Vert _{2}^2=2^j\left\Vert \smash {\varvec{d}} \right\Vert _2^2\), the one-dimensional assertion follows.

To generalize this to the multi-dimensional case, we have a closer look at the matrix \(\varvec{\Lambda }\) for \(d>1\). Again we have a block diagonal matrix, because of the orthogonality of the wavelets \(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}\) for different scales \({\varvec{j}}\). So according to every \({\varvec{j}}\ge -\varvec{1}\), we have a block in the matrix \(\varvec{\Lambda }\). Because of the tensor product form of our wavelet functions \(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}\), we order the functions \(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}\) in the matrix \(\varvec{A}\) such that the block belonging to \({\varvec{j}}\) is equal to the Kronecker product

Since the eigenvalues of the Kronecker product of a matrix are the products of the eigenvalues of the matrices, we can bound the smallest eigenvalue of every block matrix by \(\gamma _m^d\) and the largest eigenvalues by \(\delta _m^d\). The eigenvalue \(\mu =1\) is explained by the first block for \({\varvec{j}}= -\varvec{1}\), which is basically 1. \(\square \)

Let us again consider the example of the Chui–Wang wavelets from Example 3.2. The function E in the previous proof is in this case the Euler-Frobenius polynomial \(\Psi _{2m}\) from [35]. From there we also get the Riesz-constants, which are summarized in Table 1.

The previous Lemma gives us one constant in Theorem 3.16. For the other constant R let us introduce the spectral function

In order to give an estimation of the complexity of R(n) we denote for every \({\varvec{j}} \ge -\varvec{1}\) the subset of indices \({\varvec{u}}=\{i\in [d]\mid j_i\ge 0\}\). Hence, there holds

where we use (3.23). The supremum of \(\sum _{ k \in \mathbb {Z}} |\psi (x-k)|^2\) is a constant, since the wavelet \(\psi \) is compactly supported on [0, S]. In Table 2 we calculated these constants for the Chui–Wang wavelets of different orders.

Now we are in the position to apply Theorem 3.16 for our setting.

Theorem 3.19

Let \({\varvec{x}}\in \mathcal {X}\) drawn i.i.d. and uniformly at random, the wavelet function \(\psi \) having vanishing moments of order m, \(r>1\) and \(\gamma _m\) the Riesz constant from (P3). Then the matrix \(\frac{1}{M} \varvec{A}^*\varvec{A}\), where \(\varvec{A}\) is the hyperbolic wavelet matrix from (3.25), only has eigenvalues greater than \(\tfrac{ \gamma _m^d}{2}\) with probability at least \(1-M^{-r}\) if

with the m- and d-dependent constant

Especially, we have for the operator norm

Proof

We have that

Hence, we use \(R= \frac{R(n)}{M}\), \(\mu _{\min }=\gamma _m^d\) and \(\delta = \tfrac{1}{2}\) in Theorem 3.16. Using the bound in (3.33) and \(N\le M\), we confirm

Therefore, it follows that \(\mathbb {P}\left( \mu _{\min }\left( \varvec{A}^*\varvec{A}\right) \le \frac{M\,\gamma _m^d}{2} \right) \le \tfrac{1}{M^r}\). Hence, \(\varvec{A}\) has singular values at least \(\sqrt{\frac{ 2\,\gamma _m^d}{M}}\) with high probability. This yields an upper bound for the norm of the Moore-Penrose-inverse \(\left\Vert \smash {\varvec{A}^+} \right\Vert _2=\left\Vert \smash {(\varvec{A}^*\varvec{A})^{-1}\varvec{A}^*} \right\Vert _2\) by using Proposition 3.1 in [27], i.e. (3.35) follows. \(\square \)

It remains to estimate the number of samples M, such that (3.33) is fulfilled. In (3.32) we estimated the complexity of the spectral function R(n), hence we have to require \(N\le \frac{c_{m,d}\, M}{c_{\psi }^d\,(r+1)\,\log M}\), which yields that

where \(c_{m,d}\) is the constant from (3.34). We receive an estimation of the number of samples M in terms of the number of parameters N. In order to recover individual functions, we get a bound of the individual error \(\left\Vert \smash {f-S_n^\mathcal {X}f} \right\Vert _{L_2(\mathbb {T}^d)}\) with high probability.

Theorem 3.20

Let M be the number of samples satisfying (3.36), \(({\varvec{x}}_i)_{i=1}^M\) drawn i.i.d. and uniformly at random, \(r>1\) and \(f \in C(\mathbb {T}^d)\) a continuous function. Then

where we define \(e_2{:}{=}\left\Vert \smash {f-P_nf} \right\Vert _{L_2(\mathbb {T}^d)}\) and \(e_\infty {:}{=}\left\Vert \smash {f-P_nf} \right\Vert _{L_\infty (\mathbb {T}^d)}\). That means, the \(L_2(\mathbb {T}^d)\)-error of our approximation can be bounded with high probability by rates of the \(L_2(\mathbb {T}^d)\)-and the \(L_\infty (\mathbb {T}^d)\)-error of the projection \(P_n\).

Proof

Using the orthogonality of \(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}\) and \(\psi _{\varvec{i},\varvec{\ell }}^\textrm{per}\) for \({\varvec{j}}\ne \varvec{i}\) we have

We apply Theorem 3.19 to bound the operator norm \(\left\Vert \smash {S_n^\mathcal {X}} \right\Vert _2\). For the \(\ell _2\)-norm we give a bound with high probability by using Bernstein inequality. Therefore we introduce the random variables

where \(\eta _i =|f({\varvec{x}}_i)-P_nf({\varvec{x}}_i)|^2\). These random variables are centered, i.e. \(\mathbb {E}(\xi _i)=0\). The variances of these random variables can be bounded by

Furthermore, we have

For the last estimation we used that for positive \(y_1,y_2\) it holds \(|y_1-y_2|\le \max \{y_1,y_2\}\).

Now we are in the position to merge all inequalities in order to apply Bernstein’s inequality from Theorem 3.17. Using \(\tau = r\log M\), this yields

Because of our choice for the random variables \(\xi _i\), we have that

Hence, we add the mean \(e_2^2\) and get

The terms in (3.37) are bounded with high probability. Let us define the events

Due to (3.38) and Theorem 3.19 we know that

which implies that

This gives us the assertion. \(\square \)

In a similar way we give an estimation for the \(L_\infty \)-error.

Theorem 3.21

Let M be the number of samples satisfying (3.36), \(({\varvec{x}}_i)_{i=1}^M\) drawn i.i.d. and uniformly at random, \(r>1\) and \(f \in C(\mathbb {T}^d)\) a continuous function. Then

where we define as in the previous theorem \(e_2{:}{=}\left\Vert \smash {f-P_nf} \right\Vert _{L_2(\mathbb {T}^d)}\) and \(e_\infty {:}{=}\left\Vert \smash {f-P_nf} \right\Vert _{L_\infty (\mathbb {T}^d)}\).

Proof

This proof is similar to the proof of the previous theorem. Triangle inequality gives

We denote the function \(g =P_n f-S_n^\mathcal {X}f = \sum _{{\varvec{j}}\in \mathcal {J}_n}\sum _{{\varvec{k}}\in \mathcal {I}_{{\varvec{j}}} }\langle g, \psi _{{\varvec{j}},{\varvec{k}}}^{\textrm{per},*}\rangle \psi _{{\varvec{j}},{\varvec{k}}}^{\textrm{per}}\), which gives

The condition (3.33) gives a bound for R(n) and the estimation of \(\left\Vert \smash {P_nf -S_n^\mathcal {X}f} \right\Vert _{L_2(\mathbb {T}^d)} \) follows the same lines as the proof of Theorem 3.20. \(\square \)

We use the general Theorem 3.20 to give a bound for the approximation error with high probability for our settings, where \(s = m\) and where \(s<m\), using our estimates for the errors of \(\left\Vert \smash {f-P_nf} \right\Vert \) for both cases.

Corollary 3.22

Let the assumptions be like in Theorem 3.20. Let m be the order of vanishing moments of the wavelets, the number of samples satisfying (3.36), and \(\gamma _m, \delta _m\) the Riesz constants from (P3). In the case where \(1/2<s<m\) we have

and in the case where \(s = m\) we have

Proof

In order to apply the previous theorem, let us collect bounds for the occurring terms. We have for the number of samples \(\frac{\log M}{M} \lesssim 2^{-n} n^{-d+1}\), see (3.36). The \(L_2\)-error is bounded in Corollary 3.10 respectively Corollary 3.13 and the subsequent remark. Theorem 3.15 gives us a bound for the \({L_\infty }\)-error. Hence, we have in the case \(s<m\),

and for \(s=m\)

Theorem 3.20 gives the assertion. \(\square \)

Remark 3.23

Note, that this theorem establishes a bound for the error of the least squares approximation with high probability, which has the same rate like the best approximation with the projection operator \(P_n\) in Corollarys 3.10 and 3.13. The projection operator \(P_n\) is the optimal approximation in the wavelet spaces. Hence, with high probability we also get this optimal rate using the operator \(S_n^\mathcal {X}\). Furthermore, also the \(L_\infty \)-error of \(S_n^\mathcal {X}\) allows such an optimal bound by applying Theorem 3.21. Note, in this case choosing the sampling number M according to (3.33), gives that

All the theoretical considerations in this section result in Algorithm 1, which determines the approximant (3.26) from given samples \(\mathcal {X}\) by solving a least squares algorithm with the hyperbolic wavelet matrix \(\varvec{A}\).

3.5.1 Comparison to other work

Error estimates with piecewise linear wavelet functions, i.e. the case \(m=2\) are considered in [3], where the wavelets are called “prewavelets” and the non-periodic setting is treated. To compare, in [3, p. 117] an approximation rate \(2^{-ns}n^{(d-1)/2}\) was proven for \(s\le m\) in case of \(H^s_{\textrm{mix}}(\mathbb {T}^d)\)-functions. This is due to the sub-optimal analysis of the projection operator. We obtain the same bound for the larger space \(\mathbf {\varvec{B}}^s_{2,\infty }(\mathbb {T}^d)\) additionally with high probability. In the case \(s=m\) our results match the results in [3]. Furthermore, we consider quasi-optimal \(L_\infty (\mathbb {T}^d)\)-error bounds with high probability.

In [12] the authors also studied the approximation error of a least squares operator like \(S_n^\mathcal {X}\). But they used an orthonormal system of basis functions and bounded expectation of the approximation error \(\left\Vert \smash {f-S_n^\mathcal {X}f} \right\Vert _{L_2(\mathbb {T}^d)}\). A recent improvement was done in [11]. In contrast to that, we give in Corollary 3.22 a concentration inequality for the approximation error based on the probabilistic Bernstein inequality. In [2] this idea is extended to a more general setting.

It is also possible to estimate the worst-case error for the whole function class, see [27] and for the \(L_\infty \)-error [20, 38].

4 Computing the ANOVA decomposition

The hyperbolic wavelet regression in Algorithm 1 already reduces the curse of dimensionality because of the hyperbolic structure of our index set. This is reflected in the number of parameters of the wavelet spaces \(N=\mathcal {O}(2^nn^d)\). But we still have the dimension d in the exponent, which grows fast for high dimensions d. Therefore we want to reduce the number of necessary parameters further by taking into account which variable interactions play a role for describing the function.

In this section we calculate the global sensitivity indices \(\rho ({\varvec{u}},S_n^\mathcal {X}f)\) defined in (2.4) for the approximated functions \(S_n^\mathcal {X}f\), which we introduced in the last section. Knowing these indices \(\rho ({\varvec{u}},S_n^\mathcal {X}f)\), we can reduce the number of parameters by omitting the ANOVA terms which do not play a role in describing the variance of a function \(f\in L_2(\mathbb {T}^d)\). Solving the least-squares problem

where the hyperbolic wavelet matrix \(\varvec{A}\in \mathbb {R}^{M\times N}\) has the form \(\varvec{A}=(\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}({\varvec{x}}))_{{\varvec{x}}\in \mathcal {X},{\mathop {{\varvec{k}}\in \mathcal {I}_{\varvec{j}}}\limits ^{{\varvec{j}}\in \mathcal {J}_n}}}\), leads to a coefficient vector \(\varvec{a}=(a_{{\varvec{j}},{\varvec{k}}})_{{\varvec{j}}\in \mathcal {J}_n,{\varvec{k}}\in \mathcal {I}_{\varvec{j}}}\), which describes the approximant by

We calculate the global sensitivity indices \(\rho ({\varvec{u}},g)\) in terms of the coefficient vector \(\varvec{a}\).

Theorem 4.1

Let \(g\in L_2(\mathbb {T}^d)\) be a function in the periodic wavelet space written as

For a function \(f\in H^s_\textrm{mix}(\mathbb {T}^d)\) let g be the projection \(g = P_nf\) defined in (3.20) or the approximation \(g = S_n^\mathcal {X}f\) from (3.26). In these cases the sum for the index \({\varvec{j}}\) reduces to \({\varvec{j}}\in \mathcal {J}_n\). Furthermore, let g be decomposed in ANOVA terms \(g_{{\varvec{u}}}\) as in Definition 2.2. For these terms yields

where we define the notion for up-sampling \(\uparrow :\mathbb {R}^{|{\varvec{u}}|}\rightarrow \mathbb {R}^d\), given by

Then the variances \(\sigma ^2(g_{\varvec{u}})\) of these ANOVA terms for \(\varnothing \ne {\varvec{u}}\subseteq [d]\) are given by

where the matrices \(\varvec{\Lambda }_{{\varvec{j}}}\) are defined in (3.28) and we denote the vectors \(\varvec{a}_{{\varvec{j}}} = \left( a_{{\varvec{j}},{\varvec{k}}}\right) _{{\varvec{k}}\in \mathcal {I}_{{\varvec{j}}}}\).

Proof

We use Definition 2.2 to determine the ANOVA terms. We take into account that the wavelets have the property that

We use induction over \(|{\varvec{u}}|\) and begin with

The induction step reads

For determining the variances of these terms, we use the fact that \(\langle \psi _{j_1,k_1}^\textrm{per},\psi _{j_2,k_2}^\textrm{per}\rangle =0,\text { if } j_1\ne j_2\), see (3.5). Hence,

\(\square \)

This theorem tells us that the description of a function \(g\in L_2(\mathbb {T}^d)\) in terms of wavelets like in (4.1) inherits the ANOVA structure of the function, since we have for every subset \({\varvec{u}} \in \mathcal {P}([d])\),

Note that there are only few different matrix entries in the matrices \(\varvec{\Lambda }_{{\varvec{j}}}\). Hence, these few entries only have to be precomputed and with (4.2) the global sensitivity indices \(\rho ({\varvec{u}},S_n^\mathcal {X}f)\) can be computed in a fast way.

4.1 Truncating the ANOVA decomposition

So far we used in Algorithm 1 all ANOVA terms to approximate a function \(f\in H^s_\textrm{mix}(\mathbb {T}^d)\). The number of ANOVA terms of a function is equal to \(2^d\) and therefore grows exponentially in the dimension d. This reflects the curse of dimensionality in a certain way and poses a problem for the approximation of a function, even while we use a wavelet decomposition, which decreases the number of used parameters in comparison to using a full grid approximation. For that reason, we want to truncate the ANOVA decomposition, i.e., removing certain terms \(f_{{\varvec{u}}}\), and creating certain form of sparsity.

To this end we introduce the notion of effective dimension, see [7].

Definition 4.2

For \(0<\epsilon _s\le 1\) the effective dimension of f, in the superposition sense, is the smallest integer \(\nu \le d\), such that

This means, we can describe a function with low effective dimension in the superposition sense by only using a low dimensional approximant very well. For that reason, we introduce the set \(U_{\nu }\), where we use all ANOVA terms up to the superposition dimension \(\nu \), i.e.

Furthermore, in [36] was shown that functions of dominating mixed smoothness have low effective dimension. There they bounded the truncation error \(\left\Vert \smash {\sum _{{\varvec{u}}\notin U_{\nu }}f_{{\varvec{u}}}} \right\Vert _{L_2(\mathbb {T}^d)},\) which we accept in the following by supposing that a function has only dimension interactions up to order \(\nu \).

To gain from a low effective dimension, we introduce the following ANOVA inspired Sobolev spaces of dominating mixed derivatives with superposition dimension \(\nu \)

For functions in these subspaces of \(H^s_{\textrm{mix}}(\mathbb {T}^d)\) we adapt our algorithm to benefit from the structure of f. The first spaces were already introduced in [16] in terms of Fourier coefficients. But there they used trigonometric polynomials for approximation.

Theorem 4.1 tells us, which coefficients of the wavelets representation of a function in \(L_2(\mathbb {T}^d)\) coincide to which ANOVA terms. We truncate the operator \(P_n\), defined in (3.20) to a set \(\varnothing \in U\subseteq \mathcal P([d])\) by

where we define analog to (3.21) the index-sets

and \(\mathcal {J}_n^{U} = \bigcup _{{\varvec{u}}\in U}\mathcal {J}_n^{\{{\varvec{u}}\}}\). Note that for the whole power set \(U = {\mathcal {P}}([d])\) we obtain the untruncated projection \(P_nf\) from (3.20). In order to truncate the ANOVA decomposition of f, we have to know which terms we can omit. If the function f has low superposition dimension \(\nu \), we use \(U_{\nu }\) as truncation index set, where we have to know \(\nu \) in advance or we have to make a suitable guess. It turns out that in many real world problems the superposition dimension is low, see [7, 15, 29, 37, 44]. Therefore we have to determine in a first step the variances of the ANOVA terms. Then we omit in the second step these ANOVA terms for which \(\rho ({\varvec{u}}, S_n^\mathcal {X}f)< \varepsilon \) for some threshold-parameter \(\varepsilon \).

Algorithm 1 allows us to restrict our approximation to some index-set \(U\subset {\mathcal {P}}([d])\), while using the decomposition in Theorem 4.1. This coincides with deleting columns in the matrix \(\varvec{A}\), which belong to ANOVA terms \(g_{\varvec{u}}\)with \({\varvec{u}}\notin U\). Instead of the matrix \(\varvec{A}\) of the first step we use the reduced matrix

which allows us to increase the maximal level n. The whole algorithm is summarized in Algorithm 2. One remaining question is, how much samples do we need for the approximation if we only use some columns \(\varvec{A}_U\) of the hyperbolic wavelet matrix. Again, we denote by N the number of parameters, i.e. \(N = \text {dim }\mathop {\textrm{span}}\limits \{\psi _{{\varvec{j}},{\varvec{k}}}^\textrm{per}\mid {\varvec{j}}\in \mathcal {J}, {\varvec{k}}\in \mathcal {I}_{\varvec{j}}\}\). If we do require nothing to the index set \(\mathcal {J}\), we have

which follows by the same arguments as in (3.32). This shows that our theory in Sect. 3.5 also applies if we choose other index sets than \(\mathcal {J}_n\) as for the hyperbolic wavelet regression. Especially we can also apply Theorem 3.19. If we use a number of N wavelets for the approximation, we have to use \(M \approx N\log N\) samples. Especially, if we choose an index set \(\mathcal {J}=\mathcal {J}_n^{U_{\nu }}\), this has cardinality \(\left( {\begin{array}{c}d\\ \nu \end{array}}\right) 2^n n^{\nu -1}\). Therefore, we do in a first step the hyperbolic wavelet approximation with the matrix \(\varvec{A}_{U_\nu }\). Then we calculate the global sensitivity indices of the resulting approximant. In the second step we omit ANOVA terms with low variances. This reduction allows us to increase the accuracy, i.e. to increase n. Algorithm 2 summarizes that approach.

The theory in Sect. 3.5 suffers from the truncation to low dimensional terms. All proofs can be done in the same way, but instead of the d-dependence we receive the a \(\nu \)-dependance. The number N of necessary parameters reduces in this case to \(N=\mathcal {O}\left( \left( {\begin{array}{c}d\\ \nu \end{array}}\right) 2^n n^{\nu -1}\right) \). The number of samples has to fulfill

Similarly to Corollary 3.22 we get

where we eliminate the d-dependance. The approximation operator \(S_n^{\mathcal {X},U_\nu }\) is defined in Algorithm 2. Analog estimates can be done for the \(L_\infty \)-error as well as for the spaces \(\mathbf {\varvec{B}}_{2,\infty }^s(\mathbb {T}^d)\).

To conclude this, in Table 3 we summarize the asymptotic behavior of full grid approximation, hyperbolic wavelet approximation as well as approximation of functions with low effective dimensions using ANOVA ideas. For the comparison with the full grid see [3, Section 3.5]. The hyperbolic wavelet regression coincides with Algorithm 1. The truncated hyperbolic regression coincides with steps 2, 3 of Algorithm 2. Finally, the ANOVA-hyperbolic wavelet regression coincides with steps 6, 7 of Algorithm 2. In all cases we end up with the approximation error from Corollary 3.22.

Remark 4.3

To compare our theory with the results in [36], in the Fourier setting a full grid approximation with polynomial degree at most \(2^n\) has the same approximation rate, number of parameters N and number of needed samples M as the full grid approximation in the wavelet case. Choosing hyperbolic cross index sets in frequency domain gives a similar cardinality of the index set as the hyperbolic wavelet regression. But for this case no fast algorithms are available so far. However, in [36] they use full index sets of dimension \(\nu \), which give the worse estimates \(N=\mathcal {O}(\left( {\begin{array}{c}d\\ \nu \end{array}}\right) \,2^{n\nu })\) in comparison to the third line of Table 3.

5 Numerical results

After deriving our theoretical statements in the previous chapters, in this section we now underpin our findings by several numerical results. We use the Chui–Wang wavelets from Example 3.2, which fulfill the properties (P1), (P2) and (P3). We also did numerical tests with Daubechies wavelets, which have Riesz constants \(\gamma _m=\delta _m= 1\). In this case a closed form for accessing \(\psi (x)\) is not available, so we precomputed and stored the function values at dyadic points and interpolated in between. Since this does not improve the numerical results, we restrict our results to Chui–Wang wavelets.

First we give estimates about the computational cost. We begin with a kink function to illustrate our theorems from Sect. 3 by using Algorithm 1. Our second example shows the benefit of using wavelets with vanishing moments of higher order for function with higher regularity. The example in Sect. 5.3 of a high-dimensional function shows that Algorithm 2 is a powerful method. The last example in Sect. 5.5 shows that even functions in a Sobolev space with low regularity benefit from the ANOVA ideas.

5.1 Computational cost

In order to determine the complexity of our algorithms we first calculate the complexity of one matrix multiplication with the hyperbolic wavelet matrix. For fixed \({\varvec{j}}\in \mathcal {J}_n\) there are at most \(\lceil 2m-1\rceil ^d\) non-zero entries in every block of columns in every row. Since \(\sum _{{\varvec{u}}\in U}\sum _{|{\varvec{j}}_{{\varvec{u}}}|\le n} 1=\sum _{{\varvec{u}}}\mathcal {O}(n^{|{\varvec{u}}|}),\) every matrix multiplication with the hyperbolic wavelet matrix \(\varvec{A}_{U}\) has complexity

The cases where we choose \(U={\mathcal {P}}([d])\) in Algorithm 1 and \(U=U_\nu \) in Algorithm 2 are included in this consideration. Note that in contrast to the Fourier setting, see [36], our index set has a lower cardinality N, which gives a better complexity of the algorithm.

The second factor which plays a role is the number of iterations \(r^*\). The whole algorithm has a complexity of

Theorem 3.19 gives us an estimation about the minimal eigenvalues of the matrix \(\varvec{A}^*\varvec{A}\). In order to bound the maximal eigenvalues of the matrix \(\varvec{A}^*\varvec{A}\), we can use the same argumentation as in the proof of Theorem 3.19 together with the bound for the maximal eigenvalues in Theorem 3.16. This gives us for \(r>1\)

if we are in the setting of logarithmic oversampling. Hence, with high probability we can bound the condition number of the matrix \(\varvec{A}^*\varvec{A}\) by

Following [1, Example 13.1] the maximal number of iteration \(r^*\), to achieve an accuracy of \(\varepsilon \), can be bounded by

As an example, if we aim an accuracy of \(\varepsilon = 10^{-4} \) and we choose as parameters the order of vanishing moments \(m =3\) and the dimension \(d =2\), this bound gives us \(r^*\le 85\) with high probability.

The calculation of the global sensitivity indices in step 4 of Algorithm 2 does not play role compared to the LSQR-algorithm, since this can be done in a fast way. There are only few different matrix entries in the matrices \(\varvec{\Lambda }_{{\varvec{j}}}\) in (4.2), so these few entries can be precomputed. Also, these matrices are sparse circulant matrices of size smaller than \(2^{n\nu }\).

5.2 Kink test function

We start with an example of an \(L_2(\mathbb {T}^d)\)-normalized kink function,

which has the Fourier-coefficients

i.e. the Fourier coefficients of this function decay like \(|c_{{\varvec{k}}}(f)|\sim \prod _{i=1}^d\left( 1+|k_i|^2\right) ^{-1}\). Consequently, \(f\in H^s_\textrm{mix}(\mathbb {T}^d)\) with \(s = 1+\tfrac{1}{2} -\varepsilon =\tfrac{3}{2} -\varepsilon \). In addition it follows that \(f \in \mathbf {\varvec{B}}^{3/2}_{2,\infty }(\mathbb {T}^d)\). Hence, we use wavelets with vanishing moments of order \(m>\tfrac{3}{2}\).

The kink function (5.1) for \(d=1\)

We will begin with the one-dimensional function, which is plotted in Fig. 5. For our approximation we use \(n= 9\), i.e we have \(N = 1024\) parameters. To apply our theory, we have to choose logarithmic oversampling. For that reason we sample the function at the i.i.d. samples \(\mathcal {X}\) with \(|\mathcal {X}|=M=20000\). Then we use Algorithm 1 to approximate the coefficients \(a_{j,k}\approx \langle f,\psi _{j,k}^{\textrm{per},*}\rangle \). Figure 6 shows the resulting coefficients.

Our test function is piecewise polynomial of degree 2, only at the two kink points we have regularity \(\tfrac{3}{2}\). Figure 6 shows that the wavelet coefficients detect locally lower regularity. This is due to the compact support of the wavelets.

In Fig. 7a we plotted the sums \(\sum _{|{\varvec{j}}|_1=n}\sum _{{\varvec{k}}\in \mathcal {I}_{{\varvec{j}}}}|a_{{\varvec{j}},{\varvec{k}}}|^2\). In Theorem 3.9 we proved that \(\sum _{{\varvec{k}}\in \mathcal {I}_{{\varvec{j}}}}|\langle f, \psi _{{\varvec{j}},{\varvec{k}}}^{*,\textrm{per}}\rangle |^2\) decay like \(2^{-2ns}\). Lemma 3.11 gives the estimation that there are \(\left( {\begin{array}{c}n+d-1\\ d-1\end{array}}\right) =\mathcal {O}(n^{d-1})\) indices \({\varvec{j}}\), such that \(|{\varvec{j}}|_1=n\). This gives us a proposed decay rate \(2^{-3n}n^{d-1}\). We see this decay even though we approximated the wavelet coefficients \(\langle f,\psi _{{\varvec{j}},{\varvec{k}}}^{*,\textrm{per}}\rangle \) by the solutions \(a_{{\varvec{j}},{\varvec{k}}}\) of the hyperbolic wavelet regression.

Using different parameters n, while always ensuring logarithmic oversampling, we see the decay of the \(L_2(\mathbb {T}^d)\)-error in Fig. 7b. It matches the proposed error bound \(2^{-3/2n}n^{(d-1)/2}\) from Corollary 3.22. To measure the error we use the root mean squared error (RMSE), which is defined by

for some sample points \(\mathcal {X}_{\text {test}}\subset \mathbb {T}^d\), which gives us a good estimator for the \(L_2(\mathbb {T}^d)\)-error \(\left\Vert \smash {f-S_n^\mathcal {X}f} \right\Vert _{L_2(\mathbb {T}^d)}\). Since we always use \(L_2(\mathbb {T}^d)\)-normalized test functions, the RMSE can be interpreted as a relative error. For the RMSE we use random points \(\mathcal {X}_{\text {test}}\subset \mathbb {T}^d\) with \(|\mathcal {X}_{\text {test}}|=10^6\) as test samples.

5.3 A function with higher mixed regularity

We consider the following test function

where we use the B-spline from (3.1). This function is in \(H^{5/2-\varepsilon }_\textrm{mix}(\mathbb {T}^3)\) and in \(\mathbf {\varvec{B}}^{5/2}_{2,\infty }(\mathbb {T}^d)\). We do an approximation using Algorithm 1, where we use Chui–Wang wavelets of order \(m=2\) and \(m=3\).

Decay of the RMSE for the test function (5.2)

While always ensuring logarithmic oversampling, we use \(|\mathcal {X}_{\text {test}}|= 3M\) samples for calculation of the RMSE. The results can be seen in Fig. 8. This confirms our proposed error decay from Corollary 3.22. For \(m=2\) we are in the setting where \(s=m\) and the error decays a bit faster than \(2^{-2n}n\) but slower than \(2^{-2n}\) (recall that \(d=3\)). If we use wavelets of higher regularity, i.e. \(m=3\), we reduce the error as well as the decay rate compared to \(m=2\). We are in the case where \(s<m\) and since the test function is in \(\mathbf {\varvec{B}}_{2,\infty }^{5/2}(\mathbb {T}^d)\), we proved that the error decays like \(2^{-5/2n}n\), which is confirmed by the numerical experiments.

5.4 A high-dimensional function with low effective dimension

The Ishigami function [26] is used as an example for uncertainty and sensitivity analysis methods, because it exhibits strong non-linearity and non-monotonicity. Since we are in the periodic setting, we consider the suited periodized version, add a term which consists of a B-spline term and add additional dimensions, which do not contribute to the function \(f:\mathbb {T}^8\rightarrow \mathbb {R}\) with

where \({\varvec{v}} = \{6,7,8\}\) and g is a tensor product of a three-dimensional B-spline, see (3.1), of order 6, given by \(g({\varvec{x}}_{\varvec{v}})=\prod _{i=6}^8 \left( B_6(2^4 x_i)-\frac{1}{16}\right) \) and the constant c is such that the function is normalized to \(\left\Vert \smash {f} \right\Vert _{L_2(\mathbb {T}^d)}=1\). The ANOVA terms and their variances can be computed analytically. The variances of \(f_{\varvec{u}}\) are non-zero only for the indices \({\varvec{u}}\in \{\{1\},\{2\},\{1,3\},\{6,7,8\}\}\). It follows easily that the effective dimension of f, in the superposition sense, see Definition 4.2, for \(\epsilon _s=1\) is \(\nu = 3\). We use this function to test Algorithm 2 and initially choose \(n=2\), which means that we use \(N = 2269\) parameters. Therefore we randomly draw \(M=10^5\) sample points on \(\mathbb {T}^8\). Figure 9 shows all \(|U_3|=92\) resulting global sensitivity indices from the first step of our algorithm as proposed in Theorem 4.1 in comparison to the analytically calculated global sensitivity indices. Although we have low maximal level n, we can detect the correct ANOVA terms. Numerical experiments showed that even with a maximal level 0 or 1, we can detect the correct ANOVA terms. In the second step we use this information and build an adapted model, i.e. we use \(U = \{\varnothing ,\{1\},\{2\},\{1,3\},\{6,7,8\}\}\) and increase the maximal level to \(n = 6\).

Global sensitivity indices \(\rho ({\varvec{u}})\) of the function (5.3) analytically computed and from Algorithm 2 with \(n=2\)