Abstract

We study the approximation of general multiobjective optimization problems with the help of scalarizations. Existing results state that multiobjective minimization problems can be approximated well by norm-based scalarizations. However, for multiobjective maximization problems, only impossibility results are known so far. Countering this, we show that all multiobjective optimization problems can, in principle, be approximated equally well by scalarizations. In this context, we introduce a transformation theory for scalarizations that establishes the following: Suppose there exists a scalarization that yields an approximation of a certain quality for arbitrary instances of multiobjective optimization problems with a given decomposition specifying which objective functions are to be minimized/maximized. Then, for each other decomposition, our transformation yields another scalarization that yields the same approximation quality for arbitrary instances of problems with this other decomposition. In this sense, the existing results about the approximation via scalarizations for minimization problems carry over to any other objective decomposition—in particular, to maximization problems—when suitably adapting the employed scalarization. We further provide necessary and sufficient conditions on a scalarization such that its optimal solutions achieve a constant approximation quality. We give an upper bound on the best achievable approximation quality that applies to general scalarizations and is tight for the majority of norm-based scalarizations applied in the context of multiobjective optimization. As a consequence, none of these norm-based scalarizations can induce approximation sets for optimization problems with maximization objectives, which unifies and generalizes the existing impossibility results concerning the approximation of maximization problems.


1 Introduction

Multiobjective optimization covers methods and techniques for solving optimization problems with several equally important but conflicting objectives, a field of study which is of growing interest both in theory and real-world applications. In such problems, solutions that optimize all objectives simultaneously usually do not exist. Hence, the notion of optimality needs to be refined: A solution is said to be efficient if any other solution that is better in some objective is necessarily worse in at least one other objective. The image of an efficient solution under the objectives is called a nondominated image. A solution is said to be weakly efficient if no other solution exists that is strictly better in each objective. It is widely accepted that some entity, called the decision maker, chooses a final preferred solution among the set of (weakly) efficient solutions. When no prior preference information is available, a main goal of multiobjective optimization is to compute all nondominated images and, for each nondominated image, at least one corresponding efficient solution.

However, multiobjective optimization problems are typically inherently difficult: they are hard to solve exactly (Ehrgott 2005; Serafini 1987) and, moreover, the cardinality of the set of nondominated images may be exponentially large (or even infinite, e.g., for continuous problems), see e.g. Bökler and Mutzel (2015), Ehrgott and Gandibleux (2000) and the references therein. In general, this impedes the applicability of exact solution methods and strongly motivates the approximation of multiobjective optimization problems, a concept to substantially reduce the number of required solutions while still obtaining a provable solution quality. Here, it is sufficient to find a set of (not necessarily efficient) solutions, called an approximation set, that, for each feasible solution, contains a solution whose image is at least as good in every objective up to some multiplicative factor.

A scalarization is a technique to systematically transform a multiobjective optimization problem into single-objective optimization problems with the help of additional parameters such as weights or reference points. The solutions obtained by solving these single-objective optimization problems are then interpreted in the context of multiobjective optimization (see, e.g., Ehrgott and Wiecek 2005; Jahn 1985; Miettinen and Mäkelä 2002; Wierzbicki 1986 for overviews on scalarizations). As a consequence, scalarization techniques are a key concept in multiobjective optimization: They often yield (weakly) efficient solutions and they are used as subroutines in algorithms for solving or approximating multiobjective optimization problems. Unsurprisingly, there exists a vast amount of research concerning both exact and approximate solution methods that use scalarizations as building blocks, see Bökler and Mutzel (2015), Holzmann and Smith (2018), Klamroth et al. (2015), Wierzbicki (1986) and Bazgan et al. (2022), Daskalakis et al. (2016), Diakonikolas and Yannakakis (2008), Glaßer et al. (2010a), Glaßer et al. (2010b), Halffmann et al. (2017) and the references therein for a small selection.

A widely known scalarization, and probably the simplest example, is the weighted sum scalarization, where single-objective optimization problems are obtained by forming weighted sums of the multiple objective functions while keeping the feasible set unchanged. The weighted sum scalarization is frequently used, among others, in approximation methods for multiobjective optimization problems. In fact, it has been shown that optimal solutions of the weighted sum scalarization can be used to obtain approximation sets for each instance of each multiobjective minimization problem (Bazgan et al. 2022; Glaßer et al. 2010a, b; Halffmann et al. 2017). However, these approximation results crucially rely on the assumption that all objectives are to be minimized. In fact, it is known that, for the weighted sum scalarization as well as for every other scalarization studied so far in the context of approximation, even the union of all sets of optimal solutions of the scalarization obtainable for any choice of its parameters does, in general, not constitute an approximation set in the case of maximization problems (Bazgan et al. 2022; Glaßer et al. 2010a, b; Halffmann et al. 2017; Herzel et al. 2023). Consequently, general approximation methods building on the studied scalarizations cannot exist for multiobjective maximization problems.

This raises several fundamental questions: Are there intrinsic structural differences between minimization and maximization problems with respect to approximation via scalarizations? Is it, in general, substantially harder or even impossible to construct a scalarization for maximization problems that is as powerful as the weighted sum scalarization is for minimization problems? More precisely, does there exist a scalarization such that, in arbitrary instances of arbitrary maximization problems, optimal solutions of the scalarization constitute an approximation set? Beyond that, can also optimization problems in which both minimization and maximization objectives appear be approximated by means of scalarizations? If yes, what structural properties are necessary in order for scalarizations to be useful concerning the approximation of multiobjective optimization problems in general? We answer these questions in this paper and study the power of scalarizations for the approximation of multiobjective optimization problems from a general point of view. We focus on scalarizations built by scalarizing functions that combine the objective functions of the multiobjective problem by means of strongly or strictly monotone and continuous functions. This captures many important and broadly-applied scalarizations such as the weighted sum scalarization, the weighted max-ordering scalarization, and norm-based scalarizations (Ehrgott and Wiecek 2005), but not scalarizations that change the feasible set. However, most important representatives of the latter class such as the budget constraint scalarization, Benson’s method, and the elastic constraint method are capable of finding the whole efficient set and, thus, obviously yield approximation sets with approximation quality equal to one (see Ehrgott 2005; Ehrgott and Wiecek 2005).

We develop a transformation theory for scalarizations with respect to approximation in the following sense: Suppose there exists a scalarization that yields an approximation of a certain quality for arbitrary instances of multiobjective optimization problems with a given decomposition specifying which objective functions are to be minimized/maximized. Then, for each other decomposition, our transformation yields another scalarization that yields the same approximation quality for arbitrary instances of problems with this other decomposition. We also study necessary and sufficient conditions for a scalarization such that optimal solutions can be used to obtain an approximation set, and determine an upper bound on the best achievable approximation quality. The computation of this upper bound simplifies for so-called weighted scalarizations and, in particular, is tight for the majority of norm-based scalarizations applied so far in the context of multiobjective optimization. As a consequence of this tightness, none of the above norm-based scalarizations can induce approximation sets for arbitrary instances of optimization problems containing maximization objective functions. Hence, this result unifies and generalizes all impossibility results concerning the approximation of maximization problems obtained in Bazgan et al. (2022), Glaßer et al. (2010a), Glaßer et al. (2010b), Halffmann et al. (2017), Herzel et al. (2023).

1.1 Related work

General approximation methods seek to work under very weak assumptions and, thus, to be applicable to large classes of multiobjective optimization problems. In contrast, specific approximation methods are tailored to problems with a particular structure. We refer to Herzel et al. (2021b) for an extensive survey on both general and specific approximation methods for multiobjective optimization problems.

Almost all general approximation methods for multiobjective optimization problems build upon the seminal work of Papadimitriou and Yannakakis (2000), who show that, for any \(\varepsilon >0\), a \((1 + \varepsilon )\)-approximation set (i.e., an approximation set with approximation quality \(1 + \varepsilon \) in each objective) of polynomial size is guaranteed to exist in each instance under weak assumptions. Moreover, they prove that a \((1 + \varepsilon )\)-approximation set can be computed in (fully) polynomial time for every \(\varepsilon >0\) if and only if the so-called gap problem, which is an approximate version of the canonical decision problem associated with the multiobjective problem, can be solved in (fully) polynomial time.

Subsequent work focuses on approximation methods that, given an instance and \(\alpha \ge 1\), compute approximation sets whose cardinality is bounded in terms of the cardinality of the smallest possible \(\alpha \)-approximation set while maintaining or only slightly worsening the approximation quality \(\alpha \) (Bazgan et al. 2015; Diakonikolas and Yannakakis 2009, 2008; Koltun and Papadimitriou 2007; Vassilvitskii and Yannakakis 2005). Additionally, the existence result of Papadimitriou and Yannakakis (2000) has recently been improved by Herzel et al. (2021a), who show that, for any \(\varepsilon >0\), an approximation set that is exact in one objective while ensuring an approximation quality of \(1 + \varepsilon \) in all other objectives always exists in each instance under the same assumptions.

As pointed out in Halffmann et al. (2017), the gap problem is not solvable in polynomial time unless \(\textsf {P}=\textsf {NP}\) for problems whose single-objective version is APX-complete and coincides with the weighted sum problem. For such problems, the algorithmic results of Papadimitriou and Yannakakis (2000) and succeeding articles cannot be used. Consequently, other works study how the weighted sum scalarization and other scalarizations can be employed for approximation. Daskalakis et al. (2016), Diakonikolas and Yannakakis (2008) show that, in each instance, a set of solutions such that the convex hull of their images yields an approximation quality can be computed in (fully) polynomial time if and only if there is a (fully) polynomial-time approximation scheme for all single-objective optimization problems obtained via the weighted sum scalarization.

The results of Glaßer et al. (2010a), Glaßer et al. (2010b) imply that, in each instance of each p-objective minimization problem and for any \(\varepsilon >0\), a \(((1 + \varepsilon ) \cdot \delta \cdot p)\)-approximation set can be computed in fully polynomial time provided that the objective functions are positive-valued and polynomially computable and a \(\delta \)-approximation algorithm for the optimization problems induced by the weighted sum scalarization exists. They also give analogous results for more general norm-based scalarizations, where the obtained approximation quality additionally depends on the constants determined by the norm-equivalence between the chosen norm and the 1-norm.

Halffmann et al. (2017) present a method to obtain, in each instance of each biobjective minimization problem and for any \(0 < \varepsilon \le 1\), an approximation set that guarantees an approximation quality of \((\delta \cdot (1 + 2\varepsilon ))\) in one objective function while still obtaining an approximation quality of at least \((\delta \cdot (1 + \frac{1}{\varepsilon }))\) in the other objective function, provided a polynomial-time \(\delta \)-approximation algorithm for the problems induced by the weighted sum scalarization is available. This “trade-off” between the approximation qualities in the individual objectives is studied in more detail by Bazgan et al. (2022), who introduce a multi-factor notion of approximation and present a method that, in each instance of each p-objective minimization problem for which a polynomial-time \(\delta \)-approximation algorithm for the problems induced by the weighted sum scalarization exists, computes a set of solutions such that each feasible solution is component-wise approximated within some (possibly solution-dependent) vector \((\alpha _1, \ldots , \alpha _p)\) of approximation qualities \(\alpha _i \ge 1\) such that \(\sum _{i: \alpha _i > 1} \alpha _i = \delta \cdot p + \varepsilon \).

From another point of view, the weighted sum scalarization can be interpreted as a special case of ordering relations that use cones to model preferences. Vanderpooten et al. (2016) study approximation in the context of general ordering cones and characterize how approximation with respect to some ordering cone carries over to approximation with respect to some larger ordering cone. In a related paper, Herzel et al. (2023) focus on biobjective minimization problems and provide structural results on the approximation quality that is achievable with respect to the classical (Pareto) ordering cone by solutions that are efficient or approximately efficient with respect to larger ordering cones.

Notably, none of the methods and approximation results for minimization problems provided in Bazgan et al. (2022), Glaßer et al. (2010a), Glaßer et al. (2010b), Halffmann et al. (2017), Herzel et al. (2023) can be translated to maximization problems in general: Glaßer et al. (2010a), Glaßer et al. (2010b) and Halffmann et al. (2017) show that similar approximation results are impossible to obtain in polynomial time for maximization problems unless \(\textsf {P} = \textsf {NP}\). Bazgan et al. (2022) provide, for any \(p\ge 2\) and polynomial \(\text {pol}\), an instance I with encoding length \(|I |\) of a p-objective maximization problem such that at least one solution not obtainable as an optimal solution of the weighted sum scalarization is not approximated by solutions that are obtainable in this way within a factor of \(2^{\text {pol}(|I |)}\) in \(p-1\) of the objective functions. Similarly, Herzel et al. (2023) show that, for any set P of efficient solutions with respect to some larger ordering cone and any \(\alpha \ge 1\), an instance of a biobjective maximization problem can be constructed such that the set P is not an \(\alpha \)-approximation set (in the classical sense).

To the best of our knowledge, the only known results tailored to general maximization problems are presented by Bazgan et al. (2013). Here, rather than building on scalarizations, additional severe structural assumptions on the set of feasible solutions are proposed in order to obtain an approximation.

In summary, most of the known approximation methods that build on scalarizations focus on minimization problems. In fact, mainly impossibility results are known concerning the application of such methods for maximization problems and, to the best of our knowledge, a scalarization-based approximation of optimization problems with both minimization and maximization objectives has so far not been considered at all.

1.2 Our contribution

We study the power of optimal solutions of scalarizations with respect to approximation. We focus on scalarizations built by scalarizing functions that combine the objective functions of the multiobjective problem by means of strongly or strictly monotone and continuous functions. In particular, we address the questions outlined above and study why existing approximation results and methods using scalarizations typically work well for minimization problems, but do not yield any approximation quality for maximization problems in general. To this end, we develop a transformation theory for scalarizations with respect to approximation in the following sense: Suppose there exists a scalarization that yields an approximation of a certain quality for arbitrary instances of multiobjective optimization problems with a given decomposition specifying which objective functions are to be minimized/maximized. Then, for each other decomposition, our transformation yields another scalarization that yields the same approximation quality for arbitrary instances of problems with this other decomposition. Hence, our results show that, in principle, the decomposition of the objectives into minimization and maximization objectives does not have an impact on how well multiobjective problems can be approximated via scalarizations. In particular, this shows that, with respect to approximation, equally powerful scalarizations exist for (pure) minimization and (pure) maximization problems and any other possible decomposition of the objectives into minimization and maximization objectives. Consequently, the lack of positive approximation results for maximization problems in the literature is not based on a general impossibility. Rather, it results from the fact that the scalarizations that work well for minimization problems (such as the weighted sum scalarization) have also been used for maximization problems, while our results show that different scalarizations work for the maximization case.

We further provide necessary and sufficient conditions for a scalarization such that optimal solutions of the scalarization can be used to obtain approximation sets for arbitrary instances of multiobjective problems with a certain objective decomposition. We give an upper bound on the best achievable approximation quality solely depending on the level sets of the scalarizing functions contained in the scalarization. We show that the computation of this upper bound simplifies for weighted scalarizations, and provide classes of scalarizations, which include all norm-based scalarizations applied in the context of multiobjective optimization, for which this upper bound is in fact tight. As a consequence of this tightness, none of the above norm-based scalarizations can induce approximation sets for arbitrary instances of optimization problems containing maximization objectives. Hence, this result unifies and generalizes all impossibility results concerning the approximation of maximization problems obtained in Bazgan et al. (2022), Glaßer et al. (2010a), Glaßer et al. (2010b), Halffmann et al. (2017), Herzel et al. (2023).

2 Preliminaries

In this section, we revisit basic concepts from multiobjective optimization and state the assumptions made in this article. For a thorough introduction to the field of multiobjective optimization, we refer to Ehrgott (2005). In the following, if, for a set \(Y \subseteq \mathbb {R}^p\) and some index \(i \in \{1, \ldots ,p\}\), there exists a \(q \in \mathbb {R}^p\) such that \(y_i \ge q_i\) for all \(y \in Y\), we say that Y is bounded from below in i (by q). If there exists a \(q \in \mathbb {R}^p\) such that \(y_i \le q_i\) for all \(y \in Y\), we say that Y is bounded from above in i (by q). Note that a set \(Y \subseteq \mathbb {R}^p\) is bounded (in the classical sense) if and only if Y is bounded from above in all i and bounded from below in all i.

We consider general multiobjective optimization problems with p objectives each of which is to be minimized or maximized: Let \(p \in \mathbb {N} {\setminus } \{0\}\) be, as is usually the case in multiobjective optimization, a fixed constant, and let \( \textsc {MIN}\in 2^{\{1,\ldots ,p\}}\), \(\textsc {MAX}:=\{1,\ldots ,p\} {\setminus } \textsc {MIN}\), and \(\Pi :=( \textsc {MIN},\textsc {MAX})\). Then, we call \(\Pi \) an objective decomposition and we define multiobjective optimization problems as follows:

Definition 2.1

Let \(\Pi = ( \textsc {MIN},\textsc {MAX})\) be an objective decomposition. A p-objective optimization problem of type \(\Pi \) is given by a set of instances. Each instance \(I= (X,f)\) consists of a set X of feasible solutions and a vector \(f = (f_1,\ldots , f_p)\) of objective functions \(f_i:X \rightarrow \mathbb {R}\), \(i=1,\ldots , p\), where the objective functions \(f_i, i \in \textsc {MIN}\), are to be minimized and the objective functions \(f_i, i \in \textsc {MAX}\), are to be maximized. If \( \textsc {MIN}= \{1,\ldots , p\}\) and \(\textsc {MAX}= \emptyset \), the p-objective optimization problem of type \(\Pi \) is called a p-objective minimization problem. If \( \textsc {MIN}= \emptyset \) and \(\textsc {MAX}= \{1,\ldots , p\}\), the p-objective optimization problem of type \(\Pi \) is called a p-objective maximization problem.

Component-wise orders on \(\mathbb {R}^p\), based on a given objective decomposition, induce relations between images of solutions:

Definition 2.2

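Let \(\Pi = ( \textsc {MIN},\textsc {MAX})\) be an objective decomposition. For \(y,y ' \in \mathbb {R}^p\), the weak component-wise order, the component-wise order, and the strict component-wise order (with respect to \(\Pi \)) are defined by

\[
\begin{aligned}
y \leqq_{\Pi} y' \quad &:\Longleftrightarrow \quad y_i \le y'_i \text{ for all } i \in \textsc{MIN} \text{ and } y_i \ge y'_i \text{ for all } i \in \textsc{MAX},\\
y \le_{\Pi} y' \quad &:\Longleftrightarrow \quad y \leqq_{\Pi} y' \text{ and } y \neq y',\\
y <_{\Pi} y' \quad &:\Longleftrightarrow \quad y_i < y'_i \text{ for all } i \in \textsc{MIN} \text{ and } y_i > y'_i \text{ for all } i \in \textsc{MAX},
\end{aligned}
\]

respectively. Furthermore, we write \(\mathbb {R}^p_{>} :=\{y \in \mathbb {R}^p \, \vert \, 0 < y_i, i=1,\ldots ,p\}\).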
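For finite point sets, it can help to view the three orders as predicates. The following Python sketch is only an illustration under our own conventions: points are tuples of positive numbers, objectives are indexed from 0, and the decomposition is passed as a hypothetical set `min_set` of minimization indices (every other index is treated as a maximization objective).

```python
from typing import Sequence, Set

Point = Sequence[float]


def weakly_leq(y: Point, y2: Point, min_set: Set[int]) -> bool:
    """y ≦_Π y2: y is at least as good as y2 in every objective."""
    return all(
        (a <= b) if i in min_set else (a >= b)
        for i, (a, b) in enumerate(zip(y, y2))
    )


def leq(y: Point, y2: Point, min_set: Set[int]) -> bool:
    """y ≤_Π y2: y ≦_Π y2 and y differs from y2."""
    return weakly_leq(y, y2, min_set) and tuple(y) != tuple(y2)


def strictly_less(y: Point, y2: Point, min_set: Set[int]) -> bool:
    """y <_Π y2: y is strictly better than y2 in every objective."""
    return all(
        (a < b) if i in min_set else (a > b)
        for i, (a, b) in enumerate(zip(y, y2))
    )


# Objective 0 is minimized, objective 1 is maximized.
MIXED = {0}
print(weakly_leq((1, 5), (2, 4), MIXED))     # True
print(leq((1, 5), (1, 5), MIXED))            # False: equal points
print(leq((1, 5), (1, 4), MIXED))            # True
print(strictly_less((1, 5), (1, 4), MIXED))  # False: tie in objective 0
```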

Based on these component-wise orders, multiobjective notions of optimality can be defined:

Definition 2.3

Let \(\Pi \) be an objective decomposition. In an instance of a p-objective optimization problem of type \(\Pi \), a solution \(x \in X\) (strictly) dominates another solution \(x' \in X\) if \(f(x) \le _{\Pi } f(x')\) (\(f(x) <_{\Pi } f(x')\)). A solution \(x \in X\) is called (weakly) efficient if there does not exist any solution \(x' \in X\) that (strictly) dominates x. If a solution \(x \in X\) is (weakly) efficient, then the corresponding point \(y = f(x) \in \mathbb {R}^p\) is called (weakly) nondominated. The set \(X_E \subseteq X\) of efficient solutions is called the efficient set. The set \(Y_N= f(X_E) \subseteq \mathbb {R}^p\) of nondominated images is called the nondominated set.
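As a minimal sketch, assuming a finite instance given as a dictionary that maps (hypothetical) solution labels to their images, the efficient and weakly efficient solutions can be filtered by pairwise comparison. The function names are ours, and the quadratic-time scan is chosen for clarity rather than efficiency.

```python
from typing import Dict, Hashable, List, Sequence, Set

Point = Sequence[float]


def weakly_leq(y: Point, y2: Point, min_set: Set[int]) -> bool:
    return all((a <= b) if i in min_set else (a >= b) for i, (a, b) in enumerate(zip(y, y2)))


def strictly_less(y: Point, y2: Point, min_set: Set[int]) -> bool:
    return all((a < b) if i in min_set else (a > b) for i, (a, b) in enumerate(zip(y, y2)))


def efficient(f: Dict[Hashable, Point], min_set: Set[int]) -> List[Hashable]:
    """Solutions x such that no image y2 satisfies y2 ≤_Π f(x)."""
    return [
        x for x, y in f.items()
        if not any(weakly_leq(y2, y, min_set) and tuple(y2) != tuple(y) for y2 in f.values())
    ]


def weakly_efficient(f: Dict[Hashable, Point], min_set: Set[int]) -> List[Hashable]:
    """Solutions x such that no image y2 satisfies y2 <_Π f(x)."""
    return [
        x for x, y in f.items()
        if not any(strictly_less(y2, y, min_set) for y2 in f.values())
    ]


f = {"a": (1, 3), "b": (2, 2), "c": (2, 3), "d": (3, 1), "e": (1, 4)}
BOTH_MIN = {0, 1}  # biobjective minimization
print(efficient(f, BOTH_MIN))         # ['a', 'b', 'd']
print(weakly_efficient(f, BOTH_MIN))  # ['a', 'b', 'c', 'd', 'e']
```

In this instance, c and e are weakly efficient but not efficient: both are dominated by a, yet no solution is strictly better than either of them in both objectives.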

In each instance of a p-objective optimization problem, it is then the goal to return a set \(X^* \subseteq X\) of feasible solutions whose image \(f(X^*)\) under \(f:X\rightarrow \mathbb {R}^p\) is the nondominated set \(Y_N\).

One main issue of a multiobjective optimization problem is that the nondominated set \(Y_N\) may consist of exponentially many images in general (Bökler and Mutzel 2015; Ehrgott and Gandibleux 2000), i.e., such problems are intractable. Approximation is a concept to substantially reduce the number of solutions that must be computed. Instead of requiring at least one corresponding efficient solution for each nondominated image, a solution whose image “almost” (by means of a multiplicative factor) dominates the nondominated image is sufficient. To ensure that approximation is meaningful and well-defined, a typical assumption made in the literature on both the approximation of single-objective and multiobjective optimization problems (see Williamson and Shmoys 2011 and Bazgan et al. 2013, 2015; Diakonikolas and Yannakakis 2009; Papadimitriou and Yannakakis 2000; Vanderpooten et al. 2016, respectively) is also used in this work:

Assumption 2.4

In any instance of each p-objective optimization problem, the set \(Y = f(X)\) of feasible points is a subset of \(\mathbb {R}^{p}_{>}\). That is, the objective functions \(f_i :X \rightarrow \mathbb {R}_>\) map solutions to positive values, only.

Approximation is then formally defined as follows:

Definition 2.5

Let \(\Pi = ( \textsc {MIN},\textsc {MAX})\) be an objective decomposition and let \(\alpha \ge 1\) be a constant. In an instance \(I = (X,f)\) of a p-objective optimization problem of type \(\Pi \), we say that \(x' \in X\) is \(\alpha \)-approximated by \(x \in X\), or x \(\alpha \)-approximates \(x'\), if \(f_i(x) \le \alpha \cdot f_i(x')\) for all \(i \in \textsc {MIN}\) and \(f_i(x) \ge \frac{1}{\alpha } \cdot f_i(x')\) for all \(i \in \textsc {MAX}\). A set \(P_\alpha \subseteq X\) of solutions is called an \(\alpha \)-approximation set if, for any feasible solution \(x' \in X\), there exists a solution \(x \in P_\alpha \) that \(\alpha \)-approximates \(x'\).
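Definition 2.5 also suggests how to measure the quality of a given finite set of solutions. For a pair \(x, x'\), the smallest admissible factor is \(\max\{1, \max_{i \in \textsc{MIN}} f_i(x)/f_i(x'), \max_{i \in \textsc{MAX}} f_i(x')/f_i(x)\}\), and the quality of a set P is the worst case over \(x'\) of the best \(x \in P\). The Python sketch below assumes a finite instance and uses hypothetical names; it is an illustration of the definition, not a method from the cited literature.

```python
from typing import Dict, Hashable, Iterable, Sequence, Set

Point = Sequence[float]


def needed_alpha(y: Point, y2: Point, min_set: Set[int]) -> float:
    """Smallest alpha >= 1 such that image y alpha-approximates image y2."""
    ratios = [a / b if i in min_set else b / a for i, (a, b) in enumerate(zip(y, y2))]
    return max([1.0] + ratios)


def approximates(y: Point, y2: Point, alpha: float, min_set: Set[int]) -> bool:
    """Direct check of Definition 2.5 for the images y = f(x) and y2 = f(x')."""
    return all(
        a <= alpha * b if i in min_set else alpha * a >= b
        for i, (a, b) in enumerate(zip(y, y2))
    )


def quality(P: Iterable[Hashable], f: Dict[Hashable, Point], min_set: Set[int]) -> float:
    """Smallest alpha for which P is an alpha-approximation set of a finite instance."""
    P = list(P)
    return max(min(needed_alpha(f[x], y2, min_set) for x in P) for y2 in f.values())


f = {"a": (1.0, 10.0), "b": (10.0, 1.0), "c": (5.0, 5.0)}
MAX_ONLY = set()  # both objectives are maximized
print(quality(["a", "b"], f, MAX_ONLY))             # 5.0
print(approximates(f["a"], f["c"], 5.0, MAX_ONLY))  # True
print(approximates(f["a"], f["c"], 4.9, MAX_ONLY))  # False
```

Here \(\{a, b\}\) is a 5-approximation set of this biobjective maximization instance, but not a 4.9-approximation set, because c is only 5-approximated by either of them.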

Scalarizations are common approaches to obtain (efficient) solutions (Ehrgott 2005). One large class of scalarizations transforms a multiobjective optimization problem into a single-objective optimization problem with the help of scalarizing functions:

Definition 2.6

Given an objective decomposition \(\Pi \), a function \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) is called

- strongly \(\Pi \)-monotone if \(y \le _{\Pi } y'\) for \(y,y' \in \mathbb {R}^{p}_{>}\) implies \(s(y) < s(y')\), and
- strictly \(\Pi \)-monotone if \(y <_{\Pi } y'\) for \(y,y' \in \mathbb {R}^{p}_{>}\) implies \(s(y) < s(y')\).

Then, a scalarizing function for \(\Pi \) is a function \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) that is continuous and (at least) strictly \(\Pi \)-monotone. The level set of s at some point \(y' \in \mathbb {R}^{p}_{>}\) is denoted by

\[
L(y', s) :=\{ y \in \mathbb {R}^{p}_{>} \, \vert \, s(y) = s(y') \}.
\]

A set S of scalarizing functions is referred to as a scalarization for \(\Pi \).

This definition is motivated by norm-based scalarizations (Ehrgott 2005) and captures several important scalarizations such as the weighted sum scalarization (see Example 2.12). These scalarizations typically subsume only scalarizing functions that follow the same underlying construction idea. Such a construction is motivated, for example, by (polynomial-time) solvability of the obtained single-objective optimization problems. However, we allow scalarizations to contain various different scalarizing functions for the sake of generality.

With the help of scalarizing functions, any instance of a multiobjective optimization problem can be transformed into instances of a single-objective optimization problem, for which solution methods are widely studied.

Definition 2.7

Let \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for an objective decomposition \(\Pi \). In an instance \(I = (X,f)\) of a multiobjective optimization problem of type \(\Pi \), a solution \(x \in X\) is called optimal for s if \(s(f(x)) \le s(f(x'))\) for each \(x' \in X\).
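A minimal sketch of Definition 2.7 for finite instances follows. The function name is hypothetical, and the product \(s(y) = y_1 \cdot y_2\) is chosen only for illustration: on \(\mathbb{R}^2_>\) it is continuous and strongly \(\Pi\)-monotone for \(\Pi = (\{1,2\},\emptyset)\), but it is not a scalarization discussed in this article.

```python
from typing import Callable, Dict, Hashable, List, Sequence


def optimal_for(
    s: Callable[[Sequence[float]], float], f: Dict[Hashable, Sequence[float]]
) -> List[Hashable]:
    """All solutions x of a finite instance with s(f(x)) <= s(f(x')) for every x'."""
    values = {x: s(y) for x, y in f.items()}
    best = min(values.values())
    # Exact comparison; a tolerance may be needed if ties only hold up to rounding.
    return [x for x, v in values.items() if v == best]


f = {"a": (1, 4), "b": (2, 2), "c": (4, 1), "d": (3, 3)}  # pure minimization
product = lambda y: y[0] * y[1]  # illustrative scalarizing function
print(optimal_for(product, f))  # ['a', 'b', 'c']
```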

Note that minimizing the single-objective instances obtained via scalarizing functions (as implicitly assumed in both Definitions 2.6 and 2.7) is without loss of generality. One could alternatively define strong (strict) \(\Pi \)-monotonicity of a function s by requiring that \(y \le _{\Pi } y'\) (\(y <_{\Pi } y'\)) implies \(s(y) > s(y')\), and optimality for s of a solution \(x \in X\) by \(s(f(x)) \ge s(f(x'))\) for all \(x' \in X\). Then, all results in this work remain valid.

In order to guarantee that optimal solutions exist for any scalarizing function, we additionally assume:

Assumption 2.8

In any instance of each p-objective optimization problem, the set \(Y = f(X)\) of feasible points is compact.

Note that Assumption 2.8 is satisfied for a large variety of well-known optimization problems, including multiobjective formulations of (integer/mixed integer) linear programs with compact feasible sets, nonlinear problems with continuous objectives and compact feasible sets, and all combinatorial optimization problems.

Summarizing, we assume that, in any instance of each p-objective optimization problem, the set \(Y = f(X)\) of feasible points is a compact subset of \(\mathbb {R}^{p}_{>}\). This implies that the objective functions \(f_i:X \rightarrow \mathbb {R}_>\) map solutions to positive values only, and that the set of images of feasible solutions is guaranteed to be bounded from below in all i (by the origin). Hence, the set of images is bounded if and only if it is bounded from above in all i.

Before we interpret scalarizing functions and their optimal solutions in the context of multiobjective optimization, we collect some useful properties.

Lemma 2.9

Let \(\Pi = ( \textsc {MIN}, \textsc {MAX})\) be an objective decomposition. Let \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for \(\Pi \). Let \(q, y \in \mathbb {R}^{p}_{>}\). Then, there exists \(\lambda \in \mathbb {R}_>\) such that \(s(q') = s(y)\), where \(q' \in \mathbb {R}^{p}_{>}\) is defined by \(q'_i :=\lambda \cdot q_i\) for all \(i \in \textsc {MIN}\), \(q'_i :=\frac{1}{\lambda } \cdot q_i\) for all \(i \in \textsc {MAX}\).

Proof

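Without loss of generality, let \( \textsc {MIN}= \{1,\ldots , k\}\) and \(\textsc {MAX}= \{k+1,\ldots , p\}\) for some \(k \in \{0, \ldots , p\}\); otherwise, the objectives may be reordered accordingly. Consider the function \(s_q :\mathbb {R}_> \rightarrow \mathbb {R}, s_q(\lambda ) :=s( (\lambda \cdot q_1, \ldots , \lambda \cdot q_k,\frac{1}{\lambda } \cdot q_{k+1},\ldots ,\frac{1}{\lambda } \cdot q_p))\). Then, \(s_q\) is continuous as a composition of continuous functions. Choose

\[
\underline{\lambda} :=\frac{1}{2} \cdot \min_{i=1,\ldots,p} r_i \quad \text{and} \quad \bar{\lambda} :=2 \cdot \max_{i=1,\ldots,p} r_i, \qquad \text{where } r_i :=\frac{y_i}{q_i} \text{ for } i=1,\ldots,k \text{ and } r_i :=\frac{q_i}{y_i} \text{ for } i=k+1,\ldots,p.
\]

Then \(\underline{\lambda}\cdot q_i< y_i < \bar{\lambda}\cdot q_i\) for all \(i =1, \ldots ,k\) and \(\frac{1}{\underline{\lambda}}\cdot q_i> y_i > \frac{1}{\bar{\lambda}}\cdot q_i\) for all \(i =k+1,\ldots , p\), which implies, by the strict \(\Pi \)-monotonicity of s, that

\[
s_q(\underline{\lambda}) < s(y) < s_q(\bar{\lambda}).
\]

Since \(s_q\) is continuous, by the intermediate value theorem, there exists some \(\lambda \in (\underline{\lambda}, \bar{\lambda}) \subseteq \mathbb {R}_>\) such that

\[
s_q(\lambda) = s(y),
\]

that is, \(s(q') = s(y)\) for the point \(q'\) defined in the statement of the lemma.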
\(\square \)
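The argument of Lemma 2.9 can be turned into a small numerical routine. Since \(q(\lambda) <_{\Pi} q(\lambda')\) whenever \(\lambda < \lambda'\), strict \(\Pi\)-monotonicity makes \(s_q\) strictly increasing, so bisection converges to the (unique) \(\lambda\). The Python sketch below starts from the bracket \([\frac{1}{2}\min_i r_i, 2\max_i r_i]\) with \(r_i = y_i/q_i\) for minimization and \(r_i = q_i/y_i\) for maximization objectives, assumes that s can be evaluated exactly, and uses hypothetical names. The test function \(s(y) = y_1 - y_2\) is the weighted sum scalarizing function of Example 2.12 with unit weights for \(\Pi = (\{1\},\{2\})\).

```python
from typing import Callable, Sequence, Set, Tuple


def scaled(q: Sequence[float], lam: float, min_set: Set[int]) -> Tuple[float, ...]:
    """The point q' of Lemma 2.9: lam * q_i on MIN, q_i / lam on MAX."""
    return tuple(lam * qi if i in min_set else qi / lam for i, qi in enumerate(q))


def find_lambda(
    s: Callable[[Sequence[float]], float],
    q: Sequence[float],
    y: Sequence[float],
    min_set: Set[int],
    rel_tol: float = 1e-12,
    max_iter: int = 200,
) -> float:
    """Bisection for lam > 0 with s(scaled(q, lam)) == s(y)."""
    # At lo the scaled point is strictly better than y in every objective,
    # at hi it is strictly worse, so s_q(lo) < s(y) < s_q(hi).
    ratios = [y[i] / q[i] if i in min_set else q[i] / y[i] for i in range(len(q))]
    lo, hi = 0.5 * min(ratios), 2.0 * max(ratios)
    target = s(y)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if s(scaled(q, mid, min_set)) < target:
            lo = mid  # s_q is strictly increasing, so the root lies to the right
        else:
            hi = mid
        if hi - lo <= rel_tol * hi:
            break
    return 0.5 * (lo + hi)


# Index 0 is minimized and index 1 is maximized (0-based indices).
s = lambda y: y[0] - y[1]
lam = find_lambda(s, q=(1.0, 1.0), y=(2.0, 1.0), min_set={0})
print(round(lam, 6))  # 1.618034, the positive root of lam - 1/lam = 1
```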

Lemma 2.10

Let \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for some objective decomposition \(\Pi \). Let \(y, y' \in \mathbb {R}^{p}_{>}\). Then, \(y \leqq _{\Pi } y'\) implies \(s(y) \le s(y')\).

Proof

Again, let \(\Pi = (\{1,\ldots ,k\},\{k+1,\ldots , p\} )\) for some \(k \in \{0,\ldots ,p\}\) without loss of generality. Let \(y \leqq _{\Pi } y'\). For the sake of a contradiction, assume that \(s(y) > s(y')\). Then, by Lemma 2.9 (applied to the points y and \(y'\)), there exists \(\lambda \in \mathbb {R}_>\) such that \(s(q) = s(y')\), where \(q \in \mathbb {R}^{p}_{>}\) is defined by

\[
q_i :=\lambda \cdot y_i \text{ for all } i = 1,\ldots,k, \qquad q_i :=\frac{1}{\lambda} \cdot y_i \text{ for all } i = k+1,\ldots,p.
\]

Note that \(\lambda < 1\): otherwise, either \(q = y\) (if \(\lambda = 1\)) or \(y <_{\Pi } q\) (if \(\lambda > 1\)) and, thus, \(s(y') = s(q) \ge s(y)\) by the strict monotonicity of s, contradicting \(s(y) > s(y')\). Hence, \(q <_{\Pi } y \leqq _{\Pi } y'\), which yields \(q <_{\Pi } y'\) and, therefore, \(s(q) < s(y')\), contradicting \(s(q) = s(y')\). \(\square \)

Concerning scalarizing functions, a natural question is whether optimal solutions for a scalarizing function s are always efficient.

Proposition 2.11

Let \(\Pi \) be an objective decomposition. Let \(I = (X,f)\) be an instance of a p-objective optimization problem of type \(\Pi \) and \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for \(\Pi \). Then any solution \(x \in X\) that is optimal for s is weakly efficient. Moreover, there exists a solution \(x \in X\) that is optimal for s and also efficient. If s is strongly \(\Pi \)-monotone, then any solution \(x \in X\) that is optimal for s is efficient.

Proof

Let \(x \in X\) be an optimal solution for s. Assume that x is not weakly efficient, i.e., there exists \(x' \in X\) such that \(f(x') <_{\Pi } f(x)\). Then the strict \(\Pi \)-monotonicity of s implies that \(s(f(x')) < s(f(x))\) contradicting that x is optimal for s.

Since f(X) is a compact set, the continuous function s attains its minimum on f(X), i.e., there exists a solution \(x \in X\) that is optimal for s. Moreover, it is well-known that the nondominated set \(Y_N\) is externally stable if \(Y = f(X)\) is compact (Ehrgott 2005). Thus, if x is not efficient, there exists an efficient solution \(x'\) dominating x. Lemma 2.10 yields that \(s(f(x')) \le s(f(x))\), which implies that (the efficient solution) \(x'\) is also optimal for s.

If s is strongly \(\Pi \)-monotone, then, for any solution \(x \in X\) that is not efficient, there exists a solution \(x' \in X\) with \(f(x') \le _{\Pi } f(x)\) and, therefore, \(s(f(x')) < s(f(x))\). Hence, any optimal solution for s must be efficient. \(\square \)
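The following minimal Python sketch illustrates Proposition 2.11 on a made-up biobjective minimization instance with three solutions, using the max-ordering function \(s(y) = \max \{y_1, y_2\}\). This s is continuous and strictly monotone, but not strongly monotone, so an optimal solution may be merely weakly efficient; the names and data are hypothetical.

```python
def strictly_dominates(a, b):
    """a is strictly better than b in every (minimization) objective."""
    return all(ai < bi for ai, bi in zip(a, b))

def dominates(a, b):
    """a is at least as good as b in every objective and differs from b."""
    return all(ai <= bi for ai, bi in zip(a, b)) and a != b

def s_max(y):
    """Max-ordering scalarizing function with unit weights (minimize)."""
    return max(y)

# Hypothetical images f(x) of a three-solution instance.
images = {"x1": (2.0, 1.0), "x2": (2.0, 2.0), "x3": (3.0, 0.5)}

best = min(s_max(y) for y in images.values())
optimal = [x for x, y in images.items() if s_max(y) == best]
weakly_efficient = [x for x, y in images.items()
                    if not any(strictly_dominates(z, y) for z in images.values())]
efficient = [x for x, y in images.items()
             if not any(dominates(z, y) for z in images.values())]
```

Here `x2` is optimal for s and weakly efficient but dominated by `x1`, while `x1` is optimal and efficient, as the proposition guarantees.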

Example 2.12

Consider an objective decomposition \(\Pi = ( \textsc {MIN}, \textsc {MAX})\). Then, for any (fixed) weight vector \(w = (w_1, \ldots ,w_p) \in \mathbb {R}^{p}_{>}\), the function \(s_w :\mathbb {R}_>^p \rightarrow \mathbb {R}, s_w(y) :=\sum _{i \in \textsc {MIN}} w_i \cdot y_i - \sum _{i \in \textsc {MAX}} w_i \cdot y_i\) defines a scalarizing function that is strongly \(\Pi \)-monotone. The scalarizing function \(s_w\) is called weighted sum scalarizing function with weights \(w_1, \ldots ,w_p\). The set of all weighted sum scalarizing functions \(\{ s_w(y) = \sum _{i \in \textsc {MIN}} w_i \cdot y_i - \sum _{i \in \textsc {MAX}} w_i \cdot y_i \; \vert \; w \in \mathbb {R}^{p}_{>}\}\) is called weighted sum scalarization. Typically, a feasible solution that is optimal for some weighted sum scalarizing function is called supported (Bazgan et al. 2022; Ehrgott 2005).

Note that, in the literature, the single-objective optimization problem obtained by a weighted sum scalarizing function applied to instances of multiobjective maximization problems typically reads as \(\max _{x \in X} \sum _{i = 1}^p w_i \cdot f_i(x)\). In our notation used in the above example, the single-objective problem reads as \(\min _{x\in X} - \sum _{i = 1}^p w_i \cdot f_i(x)\). Since \(\min _{x\in X} - \sum _{i = 1}^p w_i \cdot f_i(x) = - \max _{x \in X} \sum _{i = 1}^p w_i \cdot f_i(x)\), the optimization problems are indeed equivalent in the sense that the optimal solution sets of both problems coincide. Next, we generalize the concept of supportedness to arbitrary scalarizations:
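A small Python check of this equivalence on hypothetical data: with all objectives maximized, minimizing \(s_w\) from Example 2.12 and maximizing the classical weighted sum select the same solutions. The helper names are illustrative only.

```python
def weighted_sum(w, MIN, MAX):
    """s_w(y) = sum_{i in MIN} w_i*y_i - sum_{i in MAX} w_i*y_i, to be minimized."""
    def s(y):
        return sum(w[i] * y[i] for i in MIN) - sum(w[i] * y[i] for i in MAX)
    return s

def optimal_set(images, key, maximize=False):
    """All solutions whose image attains the best key value."""
    values = {x: key(y) for x, y in images.items()}
    best = max(values.values()) if maximize else min(values.values())
    return {x for x, v in values.items() if v == best}

def classical_weighted_sum(y, w=(1.0, 1.0)):
    """Literature convention for maximization: maximize sum_i w_i*y_i."""
    return sum(wi * yi for wi, yi in zip(w, y))

# Hypothetical biobjective maximization instance (both objectives in MAX).
images = {"a": (3.0, 1.0), "b": (2.0, 2.0), "c": (1.0, 2.5)}
s_w = weighted_sum((1.0, 1.0), MIN=[], MAX=[0, 1])

ours = optimal_set(images, s_w)                                    # min of -sum
theirs = optimal_set(images, classical_weighted_sum, maximize=True)
```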

Definition 2.13

Let S be a scalarization (of finite or infinite cardinality) for an objective decomposition \(\Pi \). In an instance of a multiobjective optimization problem of type \(\Pi \), a solution \(x \in X\) is called S-supported if there exists a scalarizing function \(s \in S\) such that x is optimal for s.

Note that optimal solutions for a scalarizing function are not necessarily unique. Moreover, different optimal solutions can be mapped to different images in \(\mathbb {R}^p\) and, thus, contribute different information to the solution/approximation process. However, given a scalarization S, it is often not necessary to compute all S-supported solutions in order to draw conclusions on the nondominated set. Instead, it is sufficient to compute a set of solutions that contains, for each \(s \in S\), at least one solution that is optimal for s, see, for example, Bökler and Mutzel (2015). Hence, we define:

Definition 2.14

Let S be a scalarization (of finite or infinite cardinality) for an objective decomposition \(\Pi \). In an instance of a multiobjective optimization problem of type \(\Pi \), a set of solutions \(P \subseteq X\) is an optimal solution set for S if, for each scalarizing function \(s \in S\), there is a solution \(x \in P\) that is optimal for s.

Note that the set of S-supported solutions is the largest optimal solution set for S in the sense that it is the union of all optimal solution sets for S.

Example 2.15

Let \(I = (X,f)\) be an instance of a bi-objective minimization problem such that \(Y = \text {conv}(\{q^1,q^2\})\) for some \(q^1,q^2 \in \mathbb {R}^2_>\) with \(q^1_1 < q^2_1\) and \(q^1_2 > q^2_2\). Let S be the weighted sum scalarization (see Example 2.12). Then, X is the set of (S-) supported solutions, and \(\{x^1,x^2\}\) with \(f(x^1) = q^1\) and \(f(x^2) = q^2\) is an optimal solution set for S with minimum cardinality.

3 Transforming scalarizations

In this section, we study the approximation quality that can be achieved for multiobjective optimization problems by means of optimal solutions of scalarizations. Countering the existing impossibility results for maximization problems (see Sect. 1.1), we show that, in principle, scalarizations may serve as building blocks for the approximation of any multiobjective optimization problem: If there exists a scalarization S for an objective decomposition \(\Pi \) such that, in each instance of each multiobjective optimization problem of type \(\Pi \), every optimal solution set for S is an approximation set, then, for any other objective decomposition \(\Pi '\), there exists a scalarization \(S'\) for which the same holds true (with the same approximation quality). To this end, given a set \(\Gamma \subseteq \{1,\ldots ,p\}\), we define a “flip function” \(\sigma ^{\Gamma }:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}^{p}_{>}\) via

\[ \sigma ^{\Gamma }(y)_i :={\left\{ \begin{array}{ll} \frac{1}{y_i}, & \text {if } i \in \Gamma ,\\ y_i, & \text {if } i \notin \Gamma , \end{array}\right. } \qquad i = 1,\ldots ,p. \]
Note that \(\sigma ^{\Gamma }\) is continuous, bijective, and self-inverse, i.e., \(\sigma ^{\Gamma }(\sigma ^{\Gamma }(y)) = y\) for all \(y \in \mathbb {R}^{p}_{>}\).
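A minimal Python sketch of the flip function, assuming \(\sigma ^{\Gamma }\) replaces \(y_i\) by \(1/y_i\) for every \(i \in \Gamma \) and leaves the other components unchanged (the form that produces the reciprocal terms \(1/f_i(x)\) appearing in Examples 3.9 and 3.10). Exact rational arithmetic via `fractions.Fraction` is used so that the self-inverse property can be checked without floating-point rounding; the function name `flip` is hypothetical.

```python
from fractions import Fraction

def flip(y, gamma):
    """Sketch of sigma^Gamma: replace y_i by 1/y_i for every index i in gamma."""
    return tuple(1 / yi if i in gamma else yi for i, yi in enumerate(y))

# Usage with 0-based indices: components 0 and 2 are inverted.
y = (Fraction(3), Fraction(2, 5), Fraction(7, 4))
gamma = {0, 2}
flipped = flip(y, gamma)
restored = flip(flipped, gamma)   # applying sigma^Gamma twice returns y
```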

In the remainder of this section, let an objective decomposition \(\Pi = ( \textsc {MIN},\textsc {MAX})\) be given. Using \(\sigma ^{\Gamma }\), we define a transformed objective decomposition by reversing the direction of optimization of all objective functions \(f_i\), \(i \in \Gamma \). Formally, this is done as follows:

Definition 3.1

For \(\Pi = ( \textsc {MIN},\textsc {MAX})\), the \(\Gamma \)-transformed decomposition \(\Pi ^{\Gamma } = ( \textsc {MIN}^{\Gamma },\textsc {MAX}^{\Gamma })\) (of \(\Pi \)) is defined by \( \textsc {MIN}^{\Gamma } :=( \textsc {MIN}{\setminus } \Gamma ) \cup (\Gamma \cap \textsc {MAX})\) and \(\textsc {MAX}^{\Gamma } :=(\textsc {MAX}{\setminus } \Gamma ) \cup (\Gamma \cap \textsc {MIN})\).

It is known (e.g., from Papadimitriou and Yannakakis 2000) that any p-objective optimization problem of type \(\Pi \) can be transformed with the help of \(\sigma ^{\Gamma }\) to a p-objective optimization problem of type \(\Pi ^{\Gamma }\): for any instance \(I = (X,f)\) of a given p-objective optimization problem of type \(\Pi \), define an instance \(I^{\Gamma } =(X^{\Gamma },f^{\Gamma })\) of some p-objective optimization problem of type \(\Pi ^{\Gamma }\) via \(X^{\Gamma } :=X\) and \(f^{\Gamma }:X \rightarrow \mathbb {R}^{p}_{>}, f^{\Gamma }(x) :=\sigma ^{\Gamma }(f(x))\). The instance \(I^{\Gamma }\) is equivalent to I in the sense that, for any two solutions \(x, x^{\Gamma } \in X\), the solution \(x^{\Gamma }\) is (strictly) dominated by the solution x in I if and only if \(x^{\Gamma }\) is (strictly) dominated by x in \(I^{\Gamma }\). Moreover, it is easy to see that our assumption that the set of feasible points is a compact subset of \(\mathbb {R}^{p}_{>}\) is preserved under this transformation: If f(X) is a compact subset of \(\mathbb {R}^{p}_{>}\), then \(f^{\Gamma }(X)\) is also a compact subset of \(\mathbb {R}^{p}_{>}\). Further, this transformation is compatible with the notion of approximation: For any \(\alpha \ge 1\), a solution \(x' \in X\) is \(\alpha \)-approximated by a solution \(x \in X\) in I if and only if \(x'\) is \(\alpha \)-approximated by x in \(I^{\Gamma }\). This means that a set \(P_\alpha \subseteq X\) of solutions is an \(\alpha \)-approximation set for I if and only if \(P_\alpha \) is an \(\alpha \)-approximation set for \(I^{\Gamma }\). Note that this transformation is self-inverse, i.e., \((I^{\Gamma })^{\Gamma }=I\). Thus, we call \(I^{\Gamma }\) the \(\Gamma \)-transformed instance of I, and the p-objective optimization problem of type \(\Pi ^{\Gamma }\) that consists of all \(\Gamma \)-transformed instances the \(\Gamma \)-transformed optimization problem. 
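The compatibility with \(\alpha \)-approximation can be checked mechanically. The sketch below assumes the reciprocal form of \(\sigma ^{\Gamma }\) and the usual componentwise definition of \(\alpha \)-approximation (\(a_i \le \alpha \cdot b_i\) for minimized and \(a_i \ge b_i/\alpha \) for maximized objectives); the helper names and sample images are hypothetical, and indices are 0-based. For \(\alpha = 1\), the check reduces to weak dominance.

```python
from fractions import Fraction
from itertools import product

def flip(y, gamma):
    """sigma^Gamma (assumed form): invert every component indexed by gamma."""
    return tuple(1 / yi if i in gamma else yi for i, yi in enumerate(y))

def transformed_decomposition(MIN, MAX, gamma):
    """Pi^Gamma as in Definition 3.1: reverse the direction of each i in gamma."""
    return (MIN - gamma) | (gamma & MAX), (MAX - gamma) | (gamma & MIN)

def approximates(a, b, alpha, MIN, MAX):
    """True if image a alpha-approximates image b (assumed definition)."""
    return (all(a[i] <= alpha * b[i] for i in MIN)
            and all(a[i] >= b[i] / alpha for i in MAX))

# Hypothetical instance with Pi = ({0, 1}, {2}) and Gamma = {1, 2}.
MIN, MAX, gamma = {0, 1}, {2}, {1, 2}
MIN_g, MAX_g = transformed_decomposition(MIN, MAX, gamma)
images = [(Fraction(1), Fraction(4), Fraction(2)),
          (Fraction(3, 2), Fraction(3), Fraction(5, 2)),
          (Fraction(2), Fraction(6), Fraction(1))]

# alpha-approximation in I holds exactly when it holds in I^Gamma.
consistent = all(
    approximates(a, b, alpha, MIN, MAX)
    == approximates(flip(a, gamma), flip(b, gamma), alpha, MIN_g, MAX_g)
    for a, b in product(images, repeat=2)
    for alpha in (Fraction(1), Fraction(3, 2), Fraction(2)))
```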
Similarly, we define \(\Gamma \)-transformed scalarizing functions:

Definition 3.2

Let \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for \(\Pi \) and let \(\Gamma \subseteq \{1,\ldots , p\}\). We define the \(\Gamma \)-transformed scalarizing function \(s^{\Gamma }:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) (of s) by

\[ s^{\Gamma }(y) :=s\left( \sigma ^{\Gamma }(y)\right) \quad \text {for all } y \in \mathbb {R}^{p}_{>}. \]
Given a scalarization S for \(\Pi \), we call \(S^{\Gamma } :=\left\{ s^{\Gamma } \, \big \vert \, s \in S\right\} \) the \(\Gamma \)-transformed scalarization (of S).

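Note that the scalarizing function \(\left( s^{\Gamma }\right) ^{\Gamma } \), i.e., the \(\Gamma \)-transformed scalarizing function of the \(\Gamma \)-transformed scalarizing function of s, equals the scalarizing function s: For each \(y \in \mathbb {R}^{p}_{>}\), we have

\[ \left( s^{\Gamma }\right) ^{\Gamma }(y) = s^{\Gamma }\left( \sigma ^{\Gamma }(y)\right) = s\left( \sigma ^{\Gamma }\left( \sigma ^{\Gamma }(y)\right) \right) = s(y). \]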
The next lemma shows that scalarizing functions for \(\Pi \) are indeed mapped to scalarizing functions for \(\Pi ^{\Gamma }\):

Lemma 3.3

Let s be a scalarizing function for \(\Pi \). Then, \(s^{\Gamma }\) is a scalarizing function for \(\Pi ^{\Gamma }\).

Proof

Since s and \(\sigma ^{\Gamma }\) are continuous, \(s^{\Gamma }\) is continuous as well. Let \(y,y' \in \mathbb {R}^{p}_{>}\) such that \(y <_{\Pi ^{\Gamma }} y'\). Then, \(\sigma ^{\Gamma }(y) <_{\Pi } \sigma ^{\Gamma }(y')\) (taking reciprocals reverses strict inequalities between positive numbers) and, since s is strictly \(\Pi \)-monotone, \(s(\sigma ^{\Gamma }(y)) < s(\sigma ^{\Gamma }(y'))\), i.e., \(s^{\Gamma }(y) < s^{\Gamma }(y')\). That is, the function \(s^{\Gamma }\) is strictly \(\Pi ^{\Gamma }\)-monotone. \(\square \)

As discussed in Remark 3.8 below, several meaningful, but not self-inverse, definitions of a \(\Gamma \)-transformed scalarizing function \(s^{\Gamma }\) exist.

The next lemma shows that the \(\Gamma \)-transformed scalarizing function \(s^{\Gamma }\) of a scalarizing function s preserves optimality of a solution x in the sense that x is optimal for s in I if and only if x is optimal for \(s^{\Gamma }\) in \(I^{\Gamma }\).

Lemma 3.4

Let \(I = (X,f)\) be an instance of a p-objective optimization problem of type \(\Pi \) and let \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for \(\Pi \). Then a solution \(x\in X\) is an optimal solution for s in I if and only if x is an optimal solution for \(s^{\Gamma }\) in \(I^{\Gamma }\).

Proof

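Note that

\[ s(f(x'')) = s\left( \sigma ^{\Gamma }\left( \sigma ^{\Gamma }(f(x''))\right) \right) = s^{\Gamma }\left( \sigma ^{\Gamma }(f(x''))\right) = s^{\Gamma }\left( f^{\Gamma }(x'')\right) \]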
for all \(x'' \in X\). This implies, for any \(x,x' \in X\), that \(s(f(x)) \le s(f(x'))\) if and only if \(s^{\Gamma }(\sigma ^{\Gamma }(f(x))) \le s^{\Gamma }(\sigma ^{\Gamma }(f(x')))\) and, hence, a feasible solution x is optimal for s in I if and only if x is optimal for \(s^{\Gamma }\) in \(I^{\Gamma } = (X,f^{\Gamma })\). \(\square \)
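A small Python sketch of Lemma 3.4, assuming, as Remark 3.8 indicates, that Definition 3.2 sets \(s^{\Gamma } = s \circ \sigma ^{\Gamma }\) and that \(\sigma ^{\Gamma }\) inverts the components in \(\Gamma \). The instance and the weighted sum s for \(\Pi = (\{0\},\{1\})\) (0-based indices) are hypothetical.

```python
from fractions import Fraction

def flip(y, gamma):
    """sigma^Gamma (assumed form): invert every component indexed by gamma."""
    return tuple(1 / yi if i in gamma else yi for i, yi in enumerate(y))

def transformed_function(s, gamma):
    """s^Gamma = s o sigma^Gamma (assumed form of Definition 3.2)."""
    return lambda y: s(flip(y, gamma))

def optimal(images, s):
    """Set of solutions that are optimal (minimal) for s."""
    best = min(s(y) for y in images.values())
    return {x for x, y in images.items() if s(y) == best}

def s(y):
    """Weighted sum for Pi = ({0}, {1}) with weights (1, 2)."""
    return y[0] - 2 * y[1]

gamma = {0, 1}
f = {"x1": (Fraction(1), Fraction(2)),
     "x2": (Fraction(2), Fraction(3)),
     "x3": (Fraction(3), Fraction(2))}
f_gamma = {x: flip(y, gamma) for x, y in f.items()}   # instance I^Gamma
s_gamma = transformed_function(s, gamma)              # for Pi^Gamma = ({1}, {0})
```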

Consequently, if every optimal solution set for S is an approximation set in an instance \(I = (X, f)\) of a p-objective optimization problem of type \(\Pi \), then every optimal solution set for \(S^{\Gamma }\) is an approximation set for the instance \(I^{\Gamma }\) of the \(\Gamma \)-transformed p-objective optimization problem with the same approximation quality:

Corollary 3.5

Let S be a scalarization for \(\Pi \), let \(I = (X,f)\) be an instance of a p-objective optimization problem of type \(\Pi \), and let \(\alpha \ge 1\). Then, in I, every optimal solution set for S is an \(\alpha \)-approximation set if and only if, in the instance \(I^{\Gamma }\) of the \(\Gamma \)-transformed optimization problem, every optimal solution set for \(S^{\Gamma }\) is an \(\alpha \)-approximation set.

Proof

Lemma 3.4 implies that a set \(P \subseteq X\) is an optimal solution set for S in I if and only if P is an optimal solution set for \(S^{\Gamma }\) in \(I^{\Gamma }\). Moreover, as discussed above, P is an \(\alpha \)-approximation set for I if and only if P is an \(\alpha \)-approximation set for \(I^{\Gamma }\). \(\square \)

As a consequence of Corollary 3.5, we obtain the following transformation theorem:

Theorem 3.6

(Transformation Theorem for Scalarizations with respect to Approximation) Let \(\alpha \ge 1\). Let S be a scalarization for \(\Pi = ( \textsc {MIN}, \textsc {MAX})\) such that, in each instance of each p-objective optimization problem of type \(\Pi \), every optimal solution set for S is an \(\alpha \)-approximation set. Then, for any other objective decomposition \(\Pi '\), there exists a scalarization \(S'\) such that the same holds true: in each instance of each p-objective optimization problem of type \(\Pi '\), every optimal solution set for \(S'\) is an \(\alpha \)-approximation set.

Proof

Let \(\Pi ' = ( \textsc {MIN}',\textsc {MAX}')\) be an objective decomposition. Set \(\Gamma = ( \textsc {MIN}{\setminus } \textsc {MIN}') \cup (\textsc {MAX}{\setminus } \textsc {MAX}')\). Then, \((\Pi ')^{\Gamma } = \Pi \). Let \(I'\) be an instance of a multiobjective optimization problem of type \(\Pi '\). Then, \((I')^{\Gamma }\) is an instance of the \(\Gamma \)-transformed optimization problem, which is of type \((\Pi ')^{\Gamma } = \Pi \), and by assumption, in \((I')^{\Gamma }\), every optimal solution set for S is an \(\alpha \)-approximation set. But then, in \(\left( (I')^{\Gamma }\right) ^{\Gamma } = I'\), every optimal solution set for \(S^{\Gamma }\) is an \(\alpha \)-approximation set by Corollary 3.5. Hence, \(S' :=S^{\Gamma }\) is the desired scalarization for \(\Pi '\). \(\square \)
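The choice of \(\Gamma \) in this proof can be verified exhaustively for small p. The Python sketch below (hypothetical helper names, 0-based objectives) enumerates all \(2^p\) objective decompositions and checks that \(\Gamma = ( \textsc {MIN}{\setminus } \textsc {MIN}') \cup (\textsc {MAX}{\setminus } \textsc {MAX}')\) maps \(\Pi '\) to \(\Pi \), and \(\Pi \) to \(\Pi '\), under Definition 3.1.

```python
from itertools import combinations

def transformed_decomposition(MIN, MAX, gamma):
    """Pi^Gamma as in Definition 3.1."""
    return (MIN - gamma) | (gamma & MAX), (MAX - gamma) | (gamma & MIN)

def all_decompositions(p):
    """Yield every objective decomposition (MIN, MAX) of {0, ..., p-1}."""
    objectives = frozenset(range(p))
    for r in range(p + 1):
        for mins in combinations(range(p), r):
            MIN = frozenset(mins)
            yield MIN, objectives - MIN

def connecting_gamma(pi, pi_prime):
    """Gamma from the proof of Theorem 3.6: indices whose direction differs."""
    (MIN, MAX), (MIN_p, MAX_p) = pi, pi_prime
    return (MIN - MIN_p) | (MAX - MAX_p)
```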

Example 3.7

Let \(\Pi ^{\min } = (\{1,\ldots ,p\},\emptyset )\) and \(\Pi ^{\max } = (\emptyset ,\{1,\ldots ,p\})\). Recall that the weighted sum scalarizing function for \(\Pi ^{\min }\) with weights \(w_1,\ldots ,w_p > 0\) is \(s_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}_>, s_w(y) = \sum _{i=1}^p w_i \cdot y_i\). Then, \(-s_w\) is the weighted sum scalarizing function for \(\Pi ^{\max }\). Let \(S = \{s_w :\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\, \vert \, w \in \mathbb {R}^{p}_{>}\}\) and \(-S = \{- s_w :\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\, \vert \, w \in \mathbb {R}^{p}_{>}\}\) be the weighted sum scalarizations for \(\Pi ^{\min }\) and \(\Pi ^{\max }\), respectively. It is known that, in each instance of each p-objective minimization problem, every optimal solution set for S is a p-approximation set, but there exist instances of p-objective maximization problems for which the set of \(-S\)-supported solutions does not yield any constant approximation quality (Bazgan et al. 2022; Glaßer et al. 2010a).

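Consider \(\Gamma = \{1,\ldots ,p\}\). Then, \(\Pi ^{\max }\) is the \(\Gamma \)-transformed objective decomposition of \(\Pi ^{\min }\), and vice versa. Thus, the opposite result holds for the corresponding \(\Gamma \)-transformed scalarizations: The \(\Gamma \)-transformed scalarization of S, which is a scalarization for \(\Pi ^{\max }\), is the scalarization

\[ S^{\Gamma } = \left\{ s_w^{\Gamma }:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R},\ s_w^{\Gamma }(y) = \sum _{i=1}^p w_i \cdot \frac{1}{y_i} \;\Big \vert \; w \in \mathbb {R}^{p}_{>}\right\} . \]

The \(\Gamma \)-transformed scalarization of \(-S\), which is a scalarization for \(\Pi ^{\min }\), is the scalarization

\[ (-S)^{\Gamma } = -S^{\Gamma } = \left\{ -s_w^{\Gamma }:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R},\ -s_w^{\Gamma }(y) = -\sum _{i=1}^p w_i \cdot \frac{1}{y_i} \;\Big \vert \; w \in \mathbb {R}^{p}_{>}\right\} . \]

Hence, in each instance of each p-objective maximization problem, every optimal solution set for \(S^{\Gamma }\) is a p-approximation set, but there exist instances of p-objective minimization problems for which the set of \(-S^{\Gamma }\)-supported solutions does not yield any constant approximation quality.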

Remark 3.8

Let S be a scalarization for \(\Pi \) and let \(\Gamma \subseteq \{1,\ldots ,p\}\). In fact, for each \(s \in S\), any continuous strictly increasing function \(g:s(\mathbb {R}_>^p) \rightarrow \mathbb {R}\) could be utilized to define \(s^{\Gamma }\) via \(s^{\Gamma }(y) :=g\left( s\left( \sigma ^{\Gamma }(y)\right) \right) \) while still obtaining the results of Lemma 3.4, Corollary 3.5, and Theorem 3.6. However, defining \(s^{\Gamma }\) as in Definition 3.2 yields the additional property that \((s^{\Gamma })^{\Gamma } = s\) for any scalarizing function s, i.e., applying the transformation twice yields the original scalarizing function.

Example 3.9

Let \(\Pi ^{\min } = (\{1,\ldots ,p\},\emptyset )\). Since the weighted sum scalarizing function \(s_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, s_w(y) = \sum _{i=1}^p w_i \cdot y_i\) for \(\Pi ^{\min }\) is positive-valued, one can alternatively define its \(\Gamma \)-transformation for \(\Gamma = \{1,\ldots ,p\}\) with the help of the continuous, strictly increasing function \(g:\mathbb {R}_> \rightarrow \mathbb {R}, g(t) = - \frac{1}{t}\) (see Remark 3.8). For a p-objective maximization problem, the corresponding optimization problem induced by this transformation reads as

\[ \min _{x \in X}\ -\frac{1}{\sum _{i=1}^p w_i \cdot \frac{1}{f_i(x)}}. \]

Note that this single-objective optimization problem is equivalent to

\[ \max _{x \in X}\ \sum _{j=1}^p w_j \cdot \frac{1}{\sum _{i=1}^p w_i \cdot \frac{1}{f_i(x)}} \]
in the sense that, in each instance, the optimal solution sets coincide. The function \(h_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}_>, h_w(y) = \sum _{j=1}^p w_j \cdot \frac{1}{\sum _{i=1}^p w_i \cdot \frac{1}{y_i}}\) is known as the weighted harmonic mean (Ferger 1931).
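A Python check of Example 3.9 on a made-up maximization instance with \(p = 2\) and unit weights, using exact fractions and assuming \(\sigma ^{\Gamma }\) inverts every component: minimizing \(g(s_w(\sigma ^{\Gamma }(f(x))))\) with \(g(t) = -1/t\) and maximizing the weighted harmonic mean \(h_w\) select the same solution. The function names are hypothetical.

```python
from fractions import Fraction

def weighted_harmonic_mean(w, y):
    """h_w(y) = (sum_j w_j) / (sum_i w_i / y_i)."""
    return sum(w) / sum(wi / yi for wi, yi in zip(w, y))

def transformed_objective(w, y):
    """g(s_w(sigma(y))) with g(t) = -1/t and sigma inverting all components."""
    return -1 / sum(wi / yi for wi, yi in zip(w, y))

w = (Fraction(1), Fraction(1))
images = {"a": (Fraction(2), Fraction(8)),
          "b": (Fraction(4), Fraction(4)),
          "c": (Fraction(3), Fraction(5))}

vals_min = {x: transformed_objective(w, y) for x, y in images.items()}
vals_max = {x: weighted_harmonic_mean(w, y) for x, y in images.items()}
best_min = min(vals_min, key=vals_min.get)   # optimum of the transformed problem
best_max = max(vals_max, key=vals_max.get)   # optimum of the harmonic-mean problem
```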

Example 3.10

In each instance of each p-objective minimization problem, every optimal solution set for the weighted max-ordering scalarization \(S = \{ s_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}_>, s_w(y) = \max _{i=1,\ldots ,p} w_i \cdot y_i \, \vert \, w \in \mathbb {R}^{p}_{>}\}\) contains, for each nondominated image, at least one efficient solution mapped to this image (Ehrgott 2005), i.e., every optimal solution set for S is a 1-approximation set. The transformed scalarizing function of \(s_w \in S\) for maximization (i.e., the \(\{1,\ldots ,p\}\)-transformed scalarizing function) is

\[ s_w^{\{1,\ldots ,p\}}:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}_>,\quad s_w^{\{1,\ldots ,p\}}(y) = s_w\left( \sigma ^{\{1,\ldots ,p\}}(y)\right) = \max _{i=1,\ldots ,p} w_i \cdot \frac{1}{y_i}. \]

Consequently, the transformed scalarization of the weighted max-ordering scalarization for maximization is \(S^{\{1,\ldots ,p\}} = \{s_w^{\{1,\ldots ,p\}}:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\, \vert \, w \in \mathbb {R}^{p}_{>}\}\) and, in each instance of each p-objective maximization problem, every optimal solution set for \(S^{\{1,\ldots ,p\}}\) is a 1-approximation set.

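For a p-objective maximization problem and a scalarizing function \(s^{\{1,\ldots ,p\}}_w \in S^{\{1,\ldots ,p\}}\), one can rewrite the corresponding implied single-objective optimization problem: In each instance, it holds that

\[ \min _{x \in X} \max _{i=1,\ldots ,p} w_i \cdot \frac{1}{f_i(x)} = \min _{x \in X} \frac{1}{\min _{i=1,\ldots ,p} \frac{1}{w_i} \cdot f_i(x)} = \frac{1}{\max _{x \in X} \min _{i=1,\ldots ,p} \frac{1}{w_i} \cdot f_i(x)}. \]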
Hence, the optimal solution set of \(\min _{x \in X} \max _{i=1,\ldots ,p} w_i \cdot \frac{1}{f_i(x)}\) coincides with the optimal solution set of \(\max _{x \in X} \min _{i=1,\ldots ,p} \tilde{w}_i \cdot f_i(x)\), where \(\tilde{w} = (\frac{1}{w_1},\ldots , \frac{1}{w_p}) \in \mathbb {R}^{p}_{>}\). This means that, in each instance of each p-objective maximization problem, instead of solving all single-objective minimization problem instances obtained from scalarizing functions \(s^{\{1,\ldots ,p\}} \in S^{\{1,\ldots ,p\}}\), one can solve the single-objective maximization problem instances obtained from the functions in \(\{ r_{\tilde{w}}:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, r_{\tilde{w}}(y) = \min _{i=1,\ldots ,p} \tilde{w}_i \cdot y_i \, \vert \, \tilde{w} \in \mathbb {R}^{p}_{>}\}\) to obtain a 1-approximation set.
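The rewriting in Example 3.10 can be checked in the same way. The sketch below (hypothetical data and names, exact fractions) verifies the identity \(\max _i w_i/y_i = 1/\min _i \tilde{w}_i y_i\) with \(\tilde{w}_i = 1/w_i\) on each image, and that both formulations select the same solution.

```python
from fractions import Fraction

def transformed_max_ordering(w, y):
    """s_w^{1..p}(y) = max_i w_i / y_i, to be minimized."""
    return max(wi / yi for wi, yi in zip(w, y))

def weighted_max_min(w_tilde, y):
    """r_{w~}(y) = min_i w~_i * y_i, to be maximized."""
    return min(wt * yi for wt, yi in zip(w_tilde, y))

w = (Fraction(1), Fraction(2))
w_tilde = tuple(1 / wi for wi in w)          # w~_i = 1 / w_i
images = {"a": (Fraction(2), Fraction(8)),
          "b": (Fraction(4), Fraction(4)),
          "c": (Fraction(3), Fraction(5))}

best_min = min(images, key=lambda x: transformed_max_ordering(w, images[x]))
best_max = max(images, key=lambda x: weighted_max_min(w_tilde, images[x]))
```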

4 Conditions for general scalarizations

Given an objective decomposition \(\Pi \) and \(\alpha \ge 1\), we study sufficient and necessary conditions for a scalarization S such that, in each instance of each multiobjective optimization problem of type \(\Pi \), the set of S-supported solutions is an \(\alpha \)-approximation set. We also derive upper bounds on the best approximation quality \(\alpha \) that can be achieved by S-supported solutions and that solely depend on the level sets of the scalarizing functions. In the following, we assume without loss of generality that the objective decomposition \(\Pi = ( \textsc {MIN},\textsc {MAX})\) is given such that \( \textsc {MIN}= \{1,\ldots , k\}\) and \(\textsc {MAX}= \{k+1, \ldots , p\}\) holds for some \(k \in \{0, \ldots ,p\}\). Otherwise, the objectives may be reordered accordingly.

The first result in this section states that, for any finite set S of scalarizing functions and any \(\alpha \ge 1\), the set of S-supported solutions, and, thus, any optimal solution set for S, is not an \(\alpha \)-approximation set in general.

Theorem 4.1

Let S be a scalarization of finite cardinality for \(\Pi \). Then, for any \(\alpha \ge 1\), there exists an instance I of a multiobjective optimization problem of type \(\Pi \) such that the set of S-supported solutions is not an \(\alpha \)-approximation set.

Proof

We first show that it suffices to construct an instance of a biobjective optimization problem of each possible type such that the set of S-supported solutions is not an \(\alpha \)-approximation set. To this end, given an objective decomposition \(\Pi \) of \(p>2\) objectives, we consider the objective decomposition \(\bar{\Pi }\) restricted to the objectives 1 and 2 given as \(\bar{\Pi } :=(\emptyset ,\{1,2\})\) if \(k= 0\), \(\bar{\Pi } :=(\{1\},\{2\})\) if \( k = 1\), and \(\bar{\Pi } :=(\{1,2\},\emptyset )\) if \(k\ge 2\). Now, let S be a scalarization of finite cardinality for \(\Pi \). Then, for each \(s \in S\), the function \(\bar{s} :\mathbb {R}^2_> \rightarrow \mathbb {R}\), \(\bar{s}(y_1,y_2) :=s(y_1,y_2,1,\ldots ,1)\) is a scalarizing function for \(\bar{\Pi }\). Applying the construction for \(p = 2\) to the set \(\bar{S} = \{\bar{s} \; \vert \; s\in S\}\) of scalarizing functions for \(\bar{\Pi }\) then yields an instance of a biobjective optimization problem of type \(\bar{\Pi }\) such that the set of \(\bar{S}\)-supported solutions is not an \(\alpha \)-approximation set; and this instance can be transformed into an instance of a p-objective optimization problem of type \(\Pi \) such that the set of S-supported solutions is not an \(\alpha \)-approximation set by setting the additional \(p-2\) objective functions to be equal to 1 for all \(x \in X\).

It remains to show the claim for biobjective optimization problems, i.e., for \(p=2\). To this end, we first consider the case \(\bar{\Pi } = (\{1,2\},\emptyset )\), i.e., the case where both objective functions are to be minimized. Let S be a finite set of scalarizing functions for \(\bar{\Pi }\). In the following, we construct an instance \(I = (X,f)\) of a biobjective minimization problem of type \(\bar{\Pi }\) whose feasible set X consists of \(\vert S |+ 1\) solutions such that

1. no solution \(x \in X\) is \(\alpha \)-approximated by any other solution \(x' \in X{\setminus }\{x\}\), and
2. we have \(s(f(x)) \ne s(f(x'))\) for all \(x,x' \in X\) with \(x \ne x'\) and each \(s \in S\).

We set \(X = \{x^{(0)}, \ldots , x^{(|S |)}\}\) and inductively determine the components of the vectors \(f(x^{(\ell )})\) for \(\ell = 0,\ldots , \vert S |\) as follows: We start by setting \(f_1(x^{(0)}) :=f_2(x^{(0)}) :=1\). Next, let \(f(x^{(0)}), \ldots , f(x^{(\ell -1)})\) be given for some \(\ell \in \{1,\ldots , \vert S |\}\). We construct the vector \(f(x^{(\ell )})\) such that \(x^{(\ell )}\) does not \(\alpha \)-approximate \(x^{(m)}\) and is not \(\alpha \)-approximated by \(x^{(m)}\) for \(m = 0,\ldots , \ell -1\), and such that \(s(f(x^{(\ell )})) \ne s(f(x^{(m)}))\) for \(m = 0,\ldots , \ell -1\) and each \(s \in S\). To this end, we first set

\[ f_1(x^{(\ell )}) :=\frac{f_1(x^{(\ell -1)})}{2\alpha }, \qquad f_2(x^{(\ell )}) :=\alpha \cdot f_2(x^{(\ell -1)}) + \vert S |^2 + 1. \]

If \(s(f(x^{(\ell )})) \ne s(f(x^{(m)}))\) for \(m = 0,\ldots , \ell -1\) and each \(s \in S\), we are done. Otherwise, we do a decreasing step as follows: We strictly decrease the value \(f_1(x^{(\ell )})\) by a factor of \(\frac{1}{2}\) and strictly decrease the value of \(f_2(x^{(\ell )})\) by an additive constant of 1. Note that, by strict monotonicity, this strictly decreases the value \(s(f(x^{(\ell )}))\) for each \(s \in S\). Thus, for each \(m \in \{0, \ldots , \ell -1\}\) and \(s \in S\) where we previously had \(s(f(x^{(\ell )})) = s(f(x^{(m)}))\), we now have \(s(f(x^{(\ell )})) < s(f(x^{(m)}))\). Note that this strict inequality is preserved in subsequent decreasing steps. Since each decreasing step permanently turns at least one of the \(\ell \cdot \vert S |\) pairs \((m,s)\) into a strict inequality, after at most \(\ell \cdot \vert S |\) many decreasing steps, we must have \(s(f(x^{(\ell )})) \ne s(f(x^{(m)}))\) for \(m = 0,\ldots , \ell -1\) and each \(s \in S\), so we can proceed with the construction of \(f(x^{(\ell +1)})\) in iteration \(\ell + 1\).

It remains to prove that the resulting instance satisfies the two claimed Properties 1 and 2. The solution \(x^{(\ell )}\) whose objective values have been constructed in iteration \(\ell \) is not \(\alpha \)-approximated by \(x^{(m)}\) for \(m = 0,\ldots , \ell -1\) in the first objective \(f_1\) since

\[ \alpha \cdot f_1(x^{(\ell )}) \le \alpha \cdot \frac{f_1(x^{(\ell -1)})}{2\alpha } < f_1(x^{(\ell -1)}) \le f_1(x^{(m)}), \]

where the last inequality holds because \(f_1\) strictly decreases from one iteration to the next. Further, the solution \(x^{(\ell )}\) does not \(\alpha \)-approximate \(x^{(m)}\) in the second objective \(f_2\) for \(m = 0,\ldots , \ell -1\): We have performed at most \(\ell \cdot \vert S |\le \vert S |^2\) many decreasing steps, where, in each decreasing step, the value \(f_2(x^{(\ell )})\) has been decreased by 1. Thus,

\[ f_2(x^{(\ell )}) \ge \alpha \cdot f_2(x^{(\ell -1)}) + \vert S |^2 + 1 - \vert S |^2 > \alpha \cdot f_2(x^{(\ell -1)}) \ge \alpha \cdot f_2(x^{(m)}), \]

where the last inequality holds because, by the same bound and \(\alpha \ge 1\), \(f_2\) increases from one iteration to the next.

Hence, the instance \(I = (X,f)\) constructed as above indeed satisfies the two claimed Properties 1 and 2. Property 2 implies that, for each scalarizing function \(s \in S\), exactly one solution is optimal for s. Thus, at most \(\vert S |\) many solutions can be S-supported, and at least one solution \(x \in X\) is not S-supported. However, by Property 1, this solution x is not \(\alpha \)-approximated by any other solution. Thus, I is an instance of a biobjective minimization problem for which the set of S-supported solutions is not an \(\alpha \)-approximation set.

In order to show the claim for the case \(\bar{\Pi } = (\emptyset , \{1,2\})\), i.e., the case where both objective functions are to be maximized, we apply the above construction to the \({\{1,2\}}\)-transformed scalarization \(S^{\{1,2\}}\). This yields an instance I of a biobjective minimization problem where the set of \(S^{{\{1,2\}}}\)-supported solutions is not an \(\alpha \)-approximation set. Thus, by Corollary 3.5, the set of S-supported solutions is not an \(\alpha \)-approximation set in the \({\{1,2\}}\)-transformed instance \(I^{{\{1,2\}}}\), which is an instance of a biobjective maximization problem. The case \(\bar{\Pi } = (\{1\}, \{2\})\) follows analogously with the transformation induced by \(\Gamma = \{2\}\). \(\square \)
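The inductive construction for two minimization objectives can be sketched in Python with exact rational arithmetic, so that ties \(s(f(x^{(\ell )})) = s(f(x^{(m)}))\) are detected exactly. The starting values of each new point are a hypothetical choice made for this sketch: \(f_1\) is divided by \(2\alpha \) and \(f_2\) is set to \(\alpha \cdot f_2(x^{(\ell -1)}) + |S|^2 + 1\), which leaves room for the at most \(|S|^2\) decreasing steps. The sample scalarizing functions are illustrative; all are continuous and strictly monotone for two minimization objectives.

```python
from fractions import Fraction

def build_instance(S, alpha):
    """Return |S| + 1 images (f_1, f_2) built by the inductive scheme.

    Starting values per new point are a hypothetical choice: f_1 / (2*alpha)
    and alpha * f_2 + |S|^2 + 1 relative to the previous point."""
    n = len(S)
    images = [(Fraction(1), Fraction(1))]
    for _ in range(n):
        prev1, prev2 = images[-1]
        y1 = prev1 / (2 * alpha)
        y2 = alpha * prev2 + n * n + 1
        # Decreasing step: halve f_1 and subtract 1 from f_2 while some
        # s in S gives the new point the same value as an earlier point.
        while any(s((y1, y2)) == s(z) for s in S for z in images):
            y1 /= 2
            y2 -= 1
        images.append((y1, y2))
    return images

def approximates(a, b, alpha):
    """a alpha-approximates b when both objectives are minimized."""
    return all(ai <= alpha * bi for ai, bi in zip(a, b))

# Illustrative scalarizing functions for two minimization objectives.
S = [lambda y: 4 * y[0] + y[1], lambda y: max(y), lambda y: min(y)]
alpha = Fraction(3, 2)
X = build_instance(S, alpha)
```

For \(S = \{4y_1 + y_2\}\) and \(\alpha = 1\), the first candidate \((1/2, 3)\) has the same value 5 as \((1, 1)\), so one decreasing step moves it to \((1/4, 2)\).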

Note that the (algorithmically motivated) approximation for instances of p-objective minimization problems in Glaßer et al. (2010a), Glaßer et al. (2010b), Bazgan et al. (2022) is done by an instance-based choice of finitely many scalarizing functions. Nevertheless, to obtain a scalarization that yields approximation sets for arbitrary instances of arbitrary p-objective minimization problems, the cardinality of S must be infinite by Theorem 4.1. However, the inapproximability results for maximization problems presented in Bazgan et al. (2022), Herzel et al. (2023) state that there exists an instance where even the set of all supported solutions (for the weighted sum scalarization) does not constitute an approximation set. Hence, in general, even considering infinitely many scalarizing functions is not sufficient for approximation. Instead, additional conditions for the scalarizing functions are crucial, which we derive next.

We first study with what approximation quality a given feasible solution can be approximated by optimal solutions for a single scalarizing function. Afterwards, we investigate what approximation quality can be achieved by every optimal solution set for a scalarization S, and then derive conditions under which an optimal solution set for S constitutes an approximation for arbitrary instances of p-objective optimization problems of type \(\Pi \).

The first result shows that, given a feasible solution \(x'\), if the component-wise maximum ratio between points in the level set of a scalarizing function at \(f(x')\) can be bounded by some \(\alpha \ge 1\), then \(x'\) is \(\alpha \)-approximated by every optimal solution for the scalarizing function:

Lemma 4.2

In an instance of a p-objective optimization problem of type \(\Pi \), let \(x' \in X\) and \(y' :=f(x')\). Let \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) be a scalarizing function for \(\Pi \) such that the level set \(L(y',s)\) is bounded from above in \(i = 1, \ldots , k\) by some \(q \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i = k+1, \ldots ,p\) by some \(q' \in \mathbb {R}^{p}_{>}\). Then the solution \(x'\) is \(\alpha \)-approximated by every solution \(x \in X\) that is optimal for s, where

\[ \alpha :=\sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i},\ \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i}\right\} . \]
Proof

Note that \(\alpha < \infty \) since \(y'=f(x')\) is fixed and \(L(y',s)\) is bounded from above in \(i =1,\ldots ,k\) by \(q \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i = k+1, \ldots ,p\) by \(q' \in \mathbb {R}^{p}_{>}\). Let x be an optimal solution for s. By Lemma 2.9, there exists \(\lambda \in \mathbb {R}_>\) such that \(y'' \in \mathbb {R}^{p}_{>}\) defined by \(y''_i :=\lambda \cdot f_i(x)\) for \(i = 1, \ldots , k\) and \(y''_i :=\frac{1}{\lambda } \cdot f_i(x)\) for \(i =k+1,\ldots ,p\) satisfies \(s(y'') = s(y')\), i.e., \(y'' \in L(y',s)\). Moreover, \(\lambda < 1\) would imply that \(s(y'') < s(f(x)) \le s(f(x')) = s(y') = s(y'')\) by strict monotonicity of s and optimality of x for s, which is a contradiction. Hence, \(\lambda \ge 1\), so \(f(x) \leqq _{\Pi } y''\), and we obtain

\[ f_i(x) \le y''_i = \frac{y''_i}{y'_i} \cdot y'_i \le \alpha \cdot f_i(x') \]

for \(i =1,\ldots ,k\) and

\[ f_i(x) \ge y''_i = \frac{y''_i}{y'_i} \cdot y'_i \ge \frac{1}{\alpha } \cdot f_i(x') \]

for \(i = k+1, \ldots , p\). \(\square \)
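For the weighted max-ordering function \(s_w(y) = \max _i w_i y_i\) of a pure minimization problem (\(k = p\)), the level set \(L(y', s_w) = \{y > 0 : \max _i w_i y_i = c\}\) with \(c = s_w(y')\) gives the closed form \(\alpha = \max _i c/(w_i y'_i)\), assuming the \(\alpha \) of Lemma 4.2 is the supremum over \(L(y',s)\) of the largest ratio \(y_i/y'_i\), as the proof uses it. The Python sketch below checks the lemma's conclusion on random rational instances; all names are hypothetical.

```python
import random
from fractions import Fraction

def max_ordering(w):
    """Weighted max-ordering s_w(y) = max_i w_i * y_i (all objectives minimized)."""
    return lambda y: max(wi * yi for wi, yi in zip(w, y))

def lemma_alpha(w, y_ref):
    """Supremum of max_i y_i / y'_i over L(y', s_w) = {y > 0 : s_w(y) = c}.

    Each y_i in the level set is at most c / w_i, with c = s_w(y'), and this
    bound is attained, so the supremum is max_i c / (w_i * y'_i)."""
    c = max_ordering(w)(y_ref)
    return max(c / (wi * yi) for wi, yi in zip(w, y_ref))

def random_point(rng, p):
    """Hypothetical random positive rational point in R^p_>."""
    return tuple(Fraction(rng.randint(1, 50), rng.randint(1, 50)) for _ in range(p))
```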

We proceed by investigating with what approximation quality a given feasible solution can be approximated by the set of S-supported solutions of a scalarization S.

Proposition 4.3

In an instance of a p-objective optimization problem of type \(\Pi \), let \(x' \in X\) be given. Let S be a scalarization for \(\Pi \) such that, for some scalarizing function \(\bar{s} \in S\), the level set \(L(y',\bar{s})\) for \(y' :=f(x')\) is bounded from above in \(i=1,\ldots ,k\) by some \(\bar{q} \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(\bar{q}' \in \mathbb {R}^{p}_{>}\). Then, for any \(\varepsilon > 0\), the solution \(x'\) is \((\alpha + \varepsilon )\)-approximated by every optimal solution for some scalarizing function \(s \in S\), where

\[ \alpha :=\inf _{s \in S}\ \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i},\ \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i}\right\} . \]
If the infimum is attained at some \(s\in S\), then \(x'\) is \(\alpha \)-approximated by every optimal solution for s.

Proof

Note that \(\alpha < \infty \) since \(y'=f(x')\) is fixed and \(L(y',\bar{s})\) is bounded from above in \(i=1,\ldots ,k\) by some \(\bar{q} \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(\bar{q}' \in \mathbb {R}^{p}_{>}\). Given \(\varepsilon > 0\), the definition of the infimum yields a scalarizing function \(s \in S\) such that

\[ \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i},\ \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i}\right\} < \alpha + \varepsilon . \]

Then \(L(y',s)\) must be bounded from above in \(i=1,\ldots ,k\) by some \(q \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(q' \in \mathbb {R}^{p}_{>}\). Thus, Lemma 4.2 implies that \(x'\) is \((\alpha + \varepsilon )\)-approximated by any solution \(x \in X\) that is optimal for s, which proves the first claim. If the infimum is attained at \(s\in S\), this means that we even have

\[ \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i},\ \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i}\right\} = \alpha , \]
and the second claim also follows immediately by using Lemma 4.2. \(\square \)

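If the scalarization S admits a common finite upper bound on

\[ \inf _{s \in S}\ \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i},\ \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i}\right\} \]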
for all points \(y' \in \mathbb {R}^{p}_{>}\), then Proposition 4.3 implies that, in each instance of each p-objective optimization problem of type \(\Pi \), every optimal solution set for S yields a constant approximation quality:

Theorem 4.4

Let S be a scalarization for \(\Pi \) and let

If \(\alpha < \infty \), then, in each instance of each p-objective optimization problem of type \(\Pi \), every optimal solution set for S is an \((\alpha + \varepsilon )\)-approximation set for any \(\varepsilon > 0\). If, additionally, the infimum
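\[ \inf _{s \in S} \; \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} \]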
is attained and finite for each \(y' \in \mathbb {R}^{p}_{>}\), then, in each instance of each p-objective optimization problem of type \(\Pi \), every optimal solution set for S is an \(\alpha \)-approximation set.

Proof

Let \(I = (X,f)\) be an instance of a p-objective optimization problem of type \(\Pi \). Let \(x' \in X\) be a feasible solution and set \(y':=f(x')\). Then
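\[ \inf _{s \in S} \; \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} \le \alpha < \infty , \]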
which implies that \(L(y',\bar{s})\) must be bounded from above in \(i=1,\ldots ,k\) by some \(\bar{q} \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(\bar{q}' \in \mathbb {R}^{p}_{>}\) for at least one \(\bar{s}\in S\). Consequently, the first claim follows by Proposition 4.3. The second claim follows similarly by using the second statement in Proposition 4.3. \(\square \)

Given a scalarization S for \(\Pi \) and \(y' \in \mathbb {R}^{p}_{>}\), set
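\[ \alpha (y') :=\inf _{s \in S} \; \sup _{y \in L(y',s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} . \]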
Theorem 4.4 states that, if there exists a common finite upper bound \(\alpha \) with \(\sup _{y' \in \mathbb {R}^{p}_{>}} \alpha (y') \le \alpha < \infty \), then a constant approximation quality (namely \(\alpha +\varepsilon \)) is achieved by every optimal solution set for S in arbitrary instances of arbitrary p-objective optimization problems of type \(\Pi \). The following example, however, shows that the weaker condition \(\alpha (y')<\infty \) for every \(y'\in \mathbb {R}^{p}_{>}\) (which holds if all level sets \(L(y',s)\) are bounded from above in \(i=1,\ldots ,k\) by some \(\bar{q} \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(\bar{q}' \in \mathbb {R}^{p}_{>}\)) is not sufficient to guarantee a constant approximation quality:

Example 4.5

Let \(p = 2\) and \(\Pi ^{\min } = (\{1,2\},\emptyset )\). Consider the scalarization \(S = \{s\}\) for \(\Pi ^{\min }\), where \(s:\mathbb {R}^2_>\rightarrow \mathbb {R}\) is defined by \(s(y) :=\min \{y_1^2 + y_2, y_1+ y_2^2\}\). Then, for every \(\alpha \ge 1\), Theorem 4.1 shows that there exists an instance of a biobjective minimization problem with a solution \(x' \in X\) such that \(x'\) is not \(\alpha \)-approximated by any S-supported solution. Nevertheless, for any \(y' \in \mathbb {R}^2_>\), it can be shown that

Further,
\[ \sup _{y' \in \mathbb {R}^2_>} \alpha (y') = \infty , \]
so there is no \(\alpha < \infty \) with \(\sup _{y' \in \mathbb {R}^{p}_{>}} \alpha (y') \le \alpha \) as required in Theorem 4.4.
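For Example 4.5, the growth of \(\alpha (y')\) can be made explicit along the diagonal \(y'=(t,t)\). By our own short computation (not taken from the text above), the level set \(L(y',s)\) with \(c = s(y') = t^2+t\) consists of the branches \(y_1^2+y_2 = c\) and \(y_1 + y_2^2 = c\), each restricted to where its term is the minimum. For \(t \ge 1\), no coordinate of a point in \(L(y',s)\) reaches c, while the branch \(y_1 = c - y_2^2\) with \(y_2 \rightarrow 0\) approaches \((c,0)\); hence \(\alpha ((t,t)) = c/t = t+1\), which is unbounded in t. The Python sketch below samples both branches to confirm this numerically; `level_set_samples` and `alpha_estimate` are hypothetical names.

```python
import math


def s(y1: float, y2: float) -> float:
    """Scalarizing function of Example 4.5."""
    return min(y1**2 + y2, y1 + y2**2)


def level_set_samples(c: float, n: int = 50_000):
    """Sample {y in R^2_> : s(y) = c} along its two branches."""
    points = []
    root = math.sqrt(c)
    for j in range(1, n):
        # Branch A: y1^2 + y2 = c, kept where this term is the minimum.
        a1 = root * j / n
        a2 = c - a1**2
        if a2 > 0 and a1 + a2**2 >= c:
            points.append((a1, a2))
        # Branch B: y1 + y2^2 = c (mirror image of branch A).
        b2 = root * j / n
        b1 = c - b2**2
        if b1 > 0 and b1**2 + b2 >= c:
            points.append((b1, b2))
    return points


def alpha_estimate(t: float, n: int = 50_000) -> float:
    """Sampled lower estimate of alpha(y') for y' = (t, t); here S = {s}."""
    c = s(t, t)
    return max(max(y1 / t, y2 / t) for y1, y2 in level_set_samples(c, n))


for t in (1.0, 3.0, 10.0):
    print(t, alpha_estimate(t))  # close to t + 1
```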

There exist scalarizations S for which the approximation quality \(\alpha \) given in Theorem 4.4 is tight in the sense that, for any \(\varepsilon > 0\), there is an instance of a multiobjective optimization problem of type \(\Pi \) such that the set of S-supported solutions is not an \((\alpha \cdot (1 - \varepsilon ))\)-approximation set. Examples of such scalarizations, for which \(\alpha \) is additionally easy to calculate, are presented in Sect. 5.2.

Nevertheless, we now show that the approximation quality in Theorem 4.4 is not tight in general. To this end, we provide an example of a scalarization S for minimization for which no individual scalarizing function \(s \in S\) satisfies the requirements of Lemma 4.2. That is, for each point \(y' \in \mathbb {R}^{p}_{>}\) and each \(s \in S\), the level set \(L(y',s)\) is not bounded from above in some \(i \in \{1,\ldots ,p\}\). However, in each instance, every optimal solution set for the whole scalarization S is indeed a 1-approximation set.

Example 4.6

Again, let \(p=2\) and \(\Pi ^{\min } = (\{1,2\},\emptyset )\). For each \(w \in \mathbb {R}^2_>\) and \(\varepsilon \in (0,1)\), define a scalarizing function \(s_{w,\varepsilon }:\mathbb {R}_>^2 \rightarrow \mathbb {R}\) for \(\Pi ^{\min }\) via

Then, the level set \(L(y',s_{w,\varepsilon })=\left\{ y \in \mathbb {R}^2_> \, \vert \, s_{w,\varepsilon }(y) = s_{w,\varepsilon }(y')\right\} \) is unbounded for each \(y' \in \mathbb {R}^2_>\) and, consequently, not bounded from above in at least one of the components \(i=1\) and \(i=2\). Thus, for each \(s_{w,\varepsilon }\) and each \(y' \in \mathbb {R}^2_>\), it holds that
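\[ \sup _{y \in L(y',s_{w,\varepsilon })} \max \left\{ \frac{y_1}{y'_1}, \frac{y_2}{y'_2} \right\} = \infty , \]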
and, therefore, the value \(\alpha \) given in Theorem 4.4 is infinite. However, for \(S = \{s_{w,\varepsilon }: w \in \mathbb {R}^2_> \; \vert \; 0<\varepsilon <1\}\), in any instance \(I = (X,f)\) of any biobjective minimization problem, at least one corresponding efficient solution \(x \in X_E\) for every nondominated image \(y \in Y_N\) must be contained in every optimal solution set for S and, consequently, every optimal solution set is a 1-approximation set: Since f(X) is a compact subset of \(\mathbb {R}^2_>\), it is bounded from above in each i by some \(y \in \mathbb {R}^2_>\), and from below in each i by some \(y' \in \mathbb {R}^2_>\). Choose \(\varepsilon < \frac{y'_1 \cdot y'_2}{y_1 \cdot y_2}\). Then, for each \(w \in \mathbb {R}^2_>\) with \(y'_2 \le w_1 \le y_2\) and \(y'_1 \le w_2 \le y_1\), and each \(x\in X\), we have

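so \(s_{w,\varepsilon }(f(x)) = \max \{w_1 \cdot f_1(x),w_2\cdot f_2(x)\}\). This means that, for such combinations of w and \(\varepsilon \), the scalarizing function \(s_{w,\varepsilon }\) coincides with the weighted max-ordering scalarizing function. It is well known that, for \(y \in Y_N\), any optimal solution x for the weighted max-ordering scalarizing function with weights \(w_1= y_2\) and \(w_2 = y_1\) is a preimage of y, i.e., \(f(x) = y\) and \(x \in X_E\): a preimage of y attains the value \(y_1 \cdot y_2\), so every optimal x satisfies \(\max \{y_2 \cdot f_1(x), y_1 \cdot f_2(x)\} \le y_1 \cdot y_2\), i.e., \(f_1(x) \le y_1\) and \(f_2(x) \le y_2\), and the nondominance of y forces \(f(x) = y\).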
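The final step of Example 4.6 can be checked on a small finite image set. The following Python sketch uses a hypothetical set of integer images (integers keep all comparisons exact), computes the nondominated images for biobjective minimization, and verifies that, for each \(y \in Y_N\), the weighted max-ordering function with \(w_1 = y_2\) and \(w_2 = y_1\) is minimized by y alone. It illustrates only the weighted max-ordering step, not the functions \(s_{w,\varepsilon }\) themselves; all names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def nondominated(points: List[Point]) -> List[Point]:
    """Nondominated images of a finite image set (both objectives minimized)."""
    def dominates(a: Point, b: Point) -> bool:
        return a[0] <= b[0] and a[1] <= b[1] and a != b
    return [y for y in points if not any(dominates(z, y) for z in points)]


def max_ordering_minimizers(points: List[Point], w: Tuple[int, int]) -> List[Point]:
    """All images minimizing the weighted max-ordering function max{w1*y1, w2*y2}."""
    values = [max(w[0] * y[0], w[1] * y[1]) for y in points]
    best = min(values)
    return [y for y, v in zip(points, values) if v == best]


# Hypothetical finite image set f(X), used only for illustration.
Y = [(1, 8), (2, 5), (4, 4), (3, 6), (6, 2), (7, 1), (5, 5)]
for y in nondominated(Y):
    # Weights w1 = y2 and w2 = y1 single out exactly the nondominated image y.
    assert max_ordering_minimizers(Y, (y[1], y[0])) == [y]
```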

5 Weighted scalarizations

In this section, we tailor the results of Sect. 4 to so-called weighted scalarizations, in which the objective functions are weighted by positive scalars before a given scalarizing function s for an objective decomposition \(\Pi \) is applied. By varying the weights, different optimal solutions are potentially obtained. In Sect. 5.1, we show that the computation of the approximation quality \(\alpha \) given in Theorem 4.4 simplifies for weighted scalarizations. Moreover, we see in Sect. 5.2 that \(\alpha \) is easy to calculate and is best possible for all norm-based weighted scalarizations applied in the context of multiobjective optimization.

As in Sect. 4, we assume without loss of generality that the objective decomposition is given as \(\Pi = (\{1,\ldots ,k\},\{k+1,\ldots ,p\})\) for some \(k \in \{0,\ldots , p\}\). Weighted scalarizations for \(\Pi \) are then formally defined as follows:

Definition 5.1

Let \(W \subseteq \mathbb {R}^{p}_{>}\) be a set of possible weights and \(s: \mathbb {R}^{p}_{>}\rightarrow \mathbb {R}\) some scalarizing function for \(\Pi \). Then, the weighted scalarization S induced by W and s is defined via
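\[ S :=\left\{ s_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, \; s_w(y) :=s(w_1 \cdot y_1, \ldots , w_p \cdot y_p) \; \vert \; w \in W \right\} . \tag{3} \]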
As the most prominent example, this class contains the weighted sum scalarization, where \(W = \mathbb {R}^{p}_{>}\) and \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, s(y) :=\sum _{i =1}^k y_i - \sum _{i=k+1}^p y_i\), see Example 2.12.
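Definition 5.1 amounts to composing a fixed scalarizing function with a component-wise rescaling of the objective vector. A minimal Python sketch, using the form \(s_w(y) = s(w_1 \cdot y_1, \ldots , w_p \cdot y_p)\) that also appears in Theorem 5.5; `weighted` and `signed_sum` are hypothetical helper names, and 0-based slicing places the k minimization objectives first, as in the assumed decomposition \(\Pi \):

```python
from typing import Callable, Sequence

Scalarizing = Callable[[Sequence[float]], float]


def weighted(s: Scalarizing, w: Sequence[float]) -> Scalarizing:
    """Return s_w(y) = s(w_1*y_1, ..., w_p*y_p) for a positive weight vector w."""
    if any(wi <= 0 for wi in w):
        raise ValueError("weights must be positive")
    weights = list(w)
    return lambda y: s([wi * yi for wi, yi in zip(weights, y)])


def signed_sum(k: int) -> Scalarizing:
    """Base function of the weighted sum: first k components minus the remaining ones."""
    return lambda y: sum(y[:k]) - sum(y[k:])


# Weighted sum for p = 3 with objectives 1, 2 minimized and objective 3 maximized.
s_w = weighted(signed_sum(2), [1.0, 2.0, 3.0])
print(s_w([1.0, 1.0, 1.0]))  # 1 + 2 - 3 = 0.0
```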

5.1 Simplified computation of the approximation quality

For weighted scalarizations S as in (3), the computation of the approximation quality \(\alpha \) given in Theorem 4.4 simplifies as follows:

Lemma 5.2

Let S be the weighted scalarization induced by \(W = \mathbb {R}^{p}_{>}\) and some scalarizing function s for \(\Pi \). Define \(\alpha \ge 1\) as in (2), i.e.,
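\[ \alpha = \sup _{y' \in \mathbb {R}^{p}_{>}} \; \inf _{s' \in S} \; \sup _{y \in L(y',s')} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} . \]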
Further, define \(\beta \ge 1\) by
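\[ \beta :=\inf _{\bar{y} \in \mathbb {R}^{p}_{>}} \; \sup _{y^* \in L(\bar{y},s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y^*_i}{\bar{y}_i}, \, \max _{i=k+1,\ldots ,p} \frac{\bar{y}_i}{y^*_i} \right\} . \]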
Then, it holds that \(\alpha = \beta \).

Proof

Let \(y' \in \mathbb {R}^{p}_{>}\). For each \(s' \in S\), there exists a vector \(w\in \mathbb {R}^{p}_{>}\) of parameters such that \(s' = s_w\). Vice versa, for each vector \(w\in \mathbb {R}^{p}_{>}\) of parameters, there exists a scalarizing function \(s' \in S\) such that \(s_w = s'\). Consequently,
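\[ \inf _{s' \in S} \; \sup _{y \in L(y',s')} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} = \inf _{w \in \mathbb {R}^{p}_{>}} \; \sup _{y \in L(y',s_w)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} . \]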
Further, it holds that
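\[ \begin{aligned} & \inf _{w \in \mathbb {R}^{p}_{>}} \sup _{y \in L(y',s_w)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} \\ & \quad = \inf _{w \in \mathbb {R}^{p}_{>}} \sup \left\{ \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} \; \Big \vert \; y \in \mathbb {R}^{p}_{>}, \; s(w_1 \cdot y_1, \ldots , w_p \cdot y_p) = s(w_1 \cdot y'_1, \ldots , w_p \cdot y'_p) \right\} \\ & \quad = \inf _{\bar{y} \in \mathbb {R}^{p}_{>}} \sup \left\{ \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} \; \Big \vert \; y \in \mathbb {R}^{p}_{>}, \; s\left( \frac{\bar{y}_1}{y'_1} \cdot y_1, \ldots , \frac{\bar{y}_p}{y'_p} \cdot y_p \right) = s(\bar{y}) \right\} \\ & \quad = \inf _{\bar{y} \in \mathbb {R}^{p}_{>}} \sup \left\{ \max \left\{ \max _{i=1,\ldots ,k} \frac{y^*_i}{\bar{y}_i}, \, \max _{i=k+1,\ldots ,p} \frac{\bar{y}_i}{y^*_i} \right\} \; \Big \vert \; y^* \in \mathbb {R}^{p}_{>}, \; s(y^*) = s(\bar{y}) \right\} = \beta , \end{aligned} \]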
where we substitute \(\bar{y}_i = w_i \cdot y'_i\), \(i = 1, \ldots ,p\), in the second equality and \(y^*_i = \frac{\bar{y}_i}{y'_i} \cdot y_i\), \(i=1,\ldots ,p\), in the third equality. Note that, since \(y'_i>0\) for \(i=1,\ldots ,p\), every point \(\bar{y}\in \mathbb {R}^{p}_{>}\) can actually be obtained via \(\bar{y}_i = w_i \cdot y'_i\) using an appropriate positive weight vector \(w\in \mathbb {R}^{p}_{>}\). Hence, for each \(y' \in \mathbb {R}^{p}_{>}\), the value
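\[ \inf _{s' \in S} \; \sup _{y \in L(y',s')} \max \left\{ \max _{i=1,\ldots ,k} \frac{y_i}{y'_i}, \, \max _{i=k+1,\ldots ,p} \frac{y'_i}{y_i} \right\} \]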
is equal to the constant \(\beta \), and we obtain \(\alpha = \sup _{y' \in \mathbb {R}^{p}_{>}} \beta = \beta \). \(\square \)
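The change of variables in the proof of Lemma 5.2 can be checked numerically for a concrete s. The sketch below assumes, for concreteness, the sum \(s(y) = \sum _{i=1}^p y_i\) for minimization, whose level sets are easy to sample. It draws random points y of \(L(y',s_w)\), maps them to \(y^*_i = \frac{\bar{y}_i}{y'_i} \cdot y_i\) with \(\bar{y}_i = w_i \cdot y'_i\), and confirms that \(y^* \in L(\bar{y},s)\) and \(\frac{y_i}{y'_i} = \frac{y^*_i}{\bar{y}_i}\) up to rounding. The function name `check_substitution` is hypothetical.

```python
import random
from typing import Sequence


def check_substitution(w: Sequence[float], y_ref: Sequence[float],
                       trials: int = 1000, seed: int = 0) -> bool:
    """Check the substitution from the proof of Lemma 5.2 for s(y) = sum(y)."""
    rng = random.Random(seed)
    y_bar = [wi * yi for wi, yi in zip(w, y_ref)]   # ybar_i = w_i * y'_i
    level = sum(y_bar)                              # s_w(y') = s(ybar)
    for _ in range(trials):
        u = [rng.random() + 1e-9 for _ in w]       # random positive direction
        scale = level / sum(wi * ui for wi, ui in zip(w, u))
        y = [scale * ui for ui in u]                # now s_w(y) = level
        y_star = [yb / yr * yi for yb, yr, yi in zip(y_bar, y_ref, y)]
        if abs(sum(y_star) - level) > 1e-9 * level:
            return False                            # y* would not lie in L(ybar, s)
        for yi, yr, ys, yb in zip(y, y_ref, y_star, y_bar):
            if abs(yi / yr - ys / yb) > 1e-9 * (yi / yr):
                return False                        # component-wise ratios differ
    return True
```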

Consequently, if, for some \(\bar{y} \in \mathbb {R}^{p}_{>}\), the level set \(L(\bar{y},s)\) is bounded from above in \(i = 1, \ldots ,k\) by some \(q \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(q' \in \mathbb {R}^{p}_{>}\), Theorem 4.4 and Lemma 5.2 imply that, in each instance, every optimal solution set for S constitutes an approximation set with approximation quality arbitrarily close or even equal to \(\beta \), with \(\beta \) computed as in Lemma 5.2. This is captured in the following theorem:

Theorem 5.3

Let S be the weighted scalarization induced by \(W = \mathbb {R}^{p}_{>}\) and some scalarizing function s for \(\Pi \) such that, additionally, \(L(\bar{y},s)\) is bounded from above in \(i = 1, \ldots ,k\) by some \(q \in \mathbb {R}^{p}_{>}\) and bounded from below in \(i=k+1,\ldots ,p\) by some \(q' \in \mathbb {R}^{p}_{>}\) for some \(\bar{y} \in \mathbb {R}^{p}_{>}\). Define
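\[ \beta :=\inf _{\bar{y} \in \mathbb {R}^{p}_{>}} \; \sup _{y^* \in L(\bar{y},s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y^*_i}{\bar{y}_i}, \, \max _{i=k+1,\ldots ,p} \frac{\bar{y}_i}{y^*_i} \right\} . \]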
Then, in each instance of each p-objective optimization problem of type \(\Pi \), every optimal solution set for S is a \((\beta + \varepsilon )\)-approximation set for any \(\varepsilon > 0\). If the infimum is attained, i.e., if there exists \(\bar{y} \in \mathbb {R}^{p}_{>}\) such that
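\[ \beta = \sup _{y^* \in L(\bar{y},s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y^*_i}{\bar{y}_i}, \, \max _{i=k+1,\ldots ,p} \frac{\bar{y}_i}{y^*_i} \right\} \]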
holds, then, in each instance of each p-objective optimization problem of type \(\Pi \), every optimal solution set for S is a \(\beta \)-approximation set. \(\square \)

Example 5.4

Again, consider the objective decomposition \(\Pi ^{\min } = (\{1,\ldots , p\}, \emptyset )\) and the scalarizing function \(s:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, s(y) = \sum _{i=1}^p y_i\). Then, the weighted scalarization induced by \(W = \mathbb {R}^{p}_{>}\) and s is the weighted sum scalarization \(S = \{ s_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, s_w(y) = \sum _{i=1}^p w_i y_i \; \vert \; w \in \mathbb {R}^{p}_{>}\}\) for minimization. For each \(\bar{y}\in \mathbb {R}^{p}_{>}\), it can be shown (see Lemma A.1 in the appendix) that a tight upper bound on the component-wise worst case ratio of \(\bar{y}\) to any \(y^* \in L(\bar{y},s)\) is
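\[ \sup _{y^* \in L(\bar{y},s)} \max _{i=1,\ldots ,p} \frac{y^*_i}{\bar{y}_i} = \max _{i=1,\ldots ,p} \frac{\sum _{j=1}^p \bar{y}_j}{\bar{y}_i} . \]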
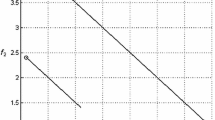

For \(p=2\), this is illustrated in Fig. 1 (left). Since \((1,\ldots ,1) \in \mathbb {R}^{p}_{>}\) with
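\[ \max _{i=1,\ldots ,p} \frac{\sum _{j=1}^p \bar{y}_j}{\bar{y}_i} \ge p = \max _{i=1,\ldots ,p} \frac{\sum _{j=1}^p 1}{1} \quad \text {for all } \bar{y} \in \mathbb {R}^{p}_{>}, \]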
where the proof of the first inequality is given in Theorem 5.5, the approximation quality for the weighted sum scalarization for minimization given in Theorem 5.3 resolves to \(\beta = p\).
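The value \(\beta = p\) in Example 5.4 can also be checked numerically. On \(L(\bar{y},s)\) with \(s(y) = \sum _{i=1}^p y_i\), a single coordinate \(y^*_i\) can approach \(\sum _{j=1}^p \bar{y}_j\) while the others tend to 0, so the worst-case ratio at \(\bar{y}\) equals \(\max _{i} \frac{\sum _{j} \bar{y}_j}{\bar{y}_i}\). The following Python sketch (hypothetical helper names) evaluates this ratio at random points; since a finite sample can only overestimate an infimum, the sampled minimum stays at or above p, and \(\bar{y} = (1,\ldots ,1)\) attains p.

```python
import random
from typing import Sequence


def worst_ratio_sum(y_bar: Sequence[float]) -> float:
    """sup over L(ybar, s), s(y) = sum(y), of max_i y*_i / ybar_i.

    One coordinate of y* can approach sum(ybar) while the others tend to 0,
    so the supremum equals max_i sum(ybar) / ybar_i.
    """
    total = sum(y_bar)
    return max(total / yi for yi in y_bar)


def sampled_beta(p: int, trials: int = 10_000, seed: int = 1) -> float:
    """Minimum of worst_ratio_sum over random points: an upper estimate of beta."""
    rng = random.Random(seed)
    return min(worst_ratio_sum([rng.uniform(0.01, 10.0) for _ in range(p)])
               for _ in range(trials))


print(worst_ratio_sum([1.0, 1.0, 1.0]))  # 3.0, i.e. p for p = 3
```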

In view of Theorem 4.4, observe that, for each \(y' \in \mathbb {R}^{p}_{>}\), the parameter vector \(w' = \left( \frac{\sum _{i=1}^p y'_i}{y'_1}, \ldots , \frac{\sum _{i=1}^p y'_i}{y'_p} \right) \in \mathbb {R}^{p}_{>}\) (and, up to positive scaling, only this vector) satisfies
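\[ \sup _{y \in L(y',s_{w'})} \max _{i=1,\ldots ,p} \frac{y_i}{y'_i} = \max _{i=1,\ldots ,p} \frac{\sum _{j=1}^p w'_j \cdot y'_j}{w'_i \cdot y'_i} = p , \]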
see Fig. 1 (right) for an illustration of the case \(p=2\). Hence, Theorems 4.4 and 5.3 indeed generalize the known approximation results on the weighted sum scalarization for minimization in Glaßer et al. (2010a) and Bazgan et al. (2022). In fact, the known tightness of these results implies that the approximation quality in Theorems 4.4 and 5.3 is tight for the weighted sum scalarization for minimization.
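For a fixed \(y'\), the worst-case ratio over \(L(y',s_w)\) for the weighted sum equals \(\max _i \frac{\sum _j w_j y'_j}{w_i y'_i}\), which equals p exactly when all products \(w_i y'_i\) coincide, i.e., when w is a positive multiple of \(w'\) (our own short derivation). A Python sketch with hypothetical names and a hypothetical point \(y' = (1,2,4)\):

```python
from typing import List, Sequence


def worst_ratio_weighted_sum(w: Sequence[float], y_ref: Sequence[float]) -> float:
    """sup over L(y', s_w), s_w(y) = sum_i w_i y_i, of max_i y_i / y'_i."""
    level = sum(wi * yi for wi, yi in zip(w, y_ref))
    # y_i can approach level / w_i when all other coordinates tend to 0.
    return max(level / (wi * yi) for wi, yi in zip(w, y_ref))


def w_prime(y_ref: Sequence[float]) -> List[float]:
    """Parameter vector w' = (sum(y') / y'_1, ..., sum(y') / y'_p) from Example 5.4."""
    total = sum(y_ref)
    return [total / yi for yi in y_ref]


y_ref = [1.0, 2.0, 4.0]  # hypothetical reference point y'
print(worst_ratio_weighted_sum(w_prime(y_ref), y_ref))  # 3.0 = p
```

Scaling \(w'\) by a positive factor leaves the ratio at p, whereas, e.g., \(w = (1,1,1)\) yields 7 for this \(y'\).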

For the weighted sum scalarization for objective decompositions \(\Pi \) containing maximization objectives, however, it can be shown that
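\[ \sup _{y^* \in L(\bar{y},s)} \max \left\{ \max _{i=1,\ldots ,k} \frac{y^*_i}{\bar{y}_i}, \, \max _{i=k+1,\ldots ,p} \frac{\bar{y}_i}{y^*_i} \right\} = \infty \quad \text {for every } \bar{y} \in \mathbb {R}^{p}_{>}, \text { so } \beta = \infty . \]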
This bound is also tight: for every \(\alpha \ge 1\), an instance of a p-objective optimization problem of type \(\Pi \) exists for which the set of supported solutions is not an \(\alpha \)-approximation set. A proof is given in Sect. 5.2.
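For a concrete instance of the maximization case, take \(p = 2\) and \(\Pi = (\{1\},\{2\})\), so that \(s(y) = y_1 - y_2\). Along the path \(y^*(t) = (c + t, t)\), \(t>0\), with \(c = s(\bar{y})\), all points lie in \(L(\bar{y},s)\) (as long as \(c + t > 0\)), and the ratio \(\bar{y}_2 / y^*_2(t) = \bar{y}_2/t\) grows without bound as \(t \rightarrow 0\). The Python sketch below (hypothetical names, hypothetical reference point \(\bar{y} = (2,1)\)) prints the worst-case ratio along this path; it illustrates a single level set and is not a proof.

```python
from typing import Sequence, Tuple


def ratio(y: Sequence[float], y_ref: Sequence[float], k: int) -> float:
    """max{max_{i<k} y_i / y'_i, max_{i>=k} y'_i / y_i} with 0-based indices."""
    return max([y[i] / y_ref[i] for i in range(k)] +
               [y_ref[i] / y[i] for i in range(k, len(y))])


def level_set_point(y_bar: Tuple[float, float], t: float) -> Tuple[float, float]:
    """Point of L(ybar, s) for s(y) = y_1 - y_2 whose second coordinate is t > 0."""
    c = y_bar[0] - y_bar[1]
    if c + t <= 0:
        raise ValueError("t too small: first coordinate would not be positive")
    return (c + t, t)


y_bar = (2.0, 1.0)  # hypothetical reference point with level c = 1
for t in (1e-1, 1e-3, 1e-6):
    y_star = level_set_point(y_bar, t)
    print(t, ratio(y_star, y_bar, k=1))  # grows like 1 / t
```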

Fig. 1 Let \(s(y) = y_1 + y_2\) be the (unweighted) sum scalarizing function for \(\Pi ^{\min } = (\{1,2\},\emptyset )\). Left: the component-wise worst case ratio of \(\bar{y}\) to any \(y^* \in L(\bar{y},s)\) is bounded by \(\sup \bigg \{ \max \bigg \{\frac{y^*_1}{\bar{y}_1}, \frac{y^*_2}{\bar{y}_2} \bigg \} \; \bigg \vert \; y^* \in L(\bar{y},s) \bigg \} = \max \bigg \{ \frac{\bar{y}_1 + \bar{y}_2}{\bar{y}_1}, \frac{\bar{y}_1 + \bar{y}_2}{\bar{y}_2}\bigg \} \ge 2.\) Right: the component-wise worst case ratio of \(y'\) to any \(y \in L(y',s_{w'})\), where \(w' = \bigg ( \frac{y'_1 + y'_2}{y'_1}, \frac{y'_1 + y'_2}{y'_2} \bigg )\), is bounded by \(\sup \bigg \{ \max \bigg \{\frac{y_1}{y'_1}, \frac{y_2}{y'_2} \bigg \} \,\bigg \vert \, y \in L(y',s_{w'}) \bigg \} = \max \bigg \{ \frac{1}{y'_1} \cdot \bigg (y'_1 + \frac{w'_2}{w'_1} \cdot y'_2 \bigg ),\frac{1}{y'_2} \cdot \bigg (\frac{w'_1}{w'_2} \cdot y'_1 + y'_2\bigg ) \bigg \} =\max \bigg \{ \frac{2 \cdot y'_1}{y'_1}, \frac{2 \cdot y'_2}{y'_2} \bigg \} = 2.\)

5.2 Tightness results for norm-based weighted scalarizations

In the following, we consider scalarizations as in (3) for which the defining scalarizing function s is based on norms. We first consider the case that all objective functions are to be minimized and then investigate the case with at least one maximization objective.

Note that a norm restricted to the positive orthant is not necessarily a scalarizing function for \(\Pi ^{\min } = (\{1,\ldots ,p\},\emptyset )\) (see Footnote 2). Hence, we have to assume that s is strictly \(\Pi ^{\min }\)-monotone. This assumption is satisfied, among others, by all q-norms with \(1 \le q \le \infty \). The next result states that, for each weighted scalarization induced by \(W = \mathbb {R}^{p}_{>}\) and a strictly \(\Pi ^{\min }\)-monotone norm s, the approximation quality given in Theorem 5.3 simplifies to an explicit expression and, moreover, is best possible. Glaßer et al. (2010a) compute the value of \(\alpha \) for the special case of q-norms based on the constants of the norm equivalence to the 1-norm. The next result extends this: for each strictly \(\Pi ^{\min }\)-monotone norm, there is actually a closed-form expression for the approximation quality \(\alpha \):

Theorem 5.5

Let \(s:\mathbb {R}^p \rightarrow \mathbb {R}_\ge \) be a strictly \(\Pi ^{\min }\)-monotone norm, let \(S = \{s_w:\mathbb {R}^{p}_{>}\rightarrow \mathbb {R}, s_w(y) = s(w_1 \cdot y_1, \ldots , w_p \cdot y_p) \; \vert \; w \in \mathbb {R}^{p}_{>}\}\) be the weighted scalarization induced by \(W= \mathbb {R}^{p}_{>}\) and s and denote by \(e^i\) the i-th unit vector in \(\mathbb {R}^p\). Then,

Moreover,

1. in each instance of each p-objective minimization problem, every optimal solution set for S is an \(\alpha \)-approximation set, and

2. for each \(0<\varepsilon <1\), there exists an instance of a p-objective minimization problem where the set of S-supported solutions is not an \(\left( \alpha \cdot (1 - \varepsilon ) \right) \)-approximation set.
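For the special case of q-norms \(s = \Vert \cdot \Vert _q\) with \(1 \le q \le \infty \), the value \(\beta \) of Lemma 5.2 can be evaluated by hand. Every coordinate of \(y^* \in L(\bar{y},s)\) is at most \(\Vert y^*\Vert _q = \Vert \bar{y}\Vert _q\), and this bound is approached near positive multiples of \(e^i\), so the worst-case ratio at \(\bar{y}\) is \(\Vert \bar{y}\Vert _q / \min _i \bar{y}_i\). Since \(\Vert \bar{y}\Vert _q \ge p^{1/q} \cdot \min _i \bar{y}_i\), with equality at \(\bar{y} = (1,\ldots ,1)\), this gives \(\beta = p^{1/q}\) (read as 1 for \(q=\infty \)). This is our own sketch for q-norms, not a restatement of the general closed form; q = 1 recovers \(\beta = p\) from Example 5.4. In Python, with hypothetical helper names:

```python
import math
from typing import Sequence


def qnorm(y: Sequence[float], q: float) -> float:
    """q-norm for 1 <= q <= infinity."""
    if math.isinf(q):
        return max(abs(v) for v in y)
    return sum(abs(v) ** q for v in y) ** (1.0 / q)


def worst_ratio_qnorm(y_bar: Sequence[float], q: float) -> float:
    """sup over L(ybar, s), s = q-norm, of max_i y*_i / ybar_i.

    Every coordinate of y* is at most s(y*) = s(ybar), and this bound is approached
    near multiples of e^i, so the supremum is s(ybar) / min_i ybar_i.
    """
    return qnorm(y_bar, q) / min(y_bar)


for q in (1.0, 2.0, math.inf):
    print(q, worst_ratio_qnorm([1.0] * 4, q))  # p^(1/q) for p = 4
```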

Proof

For each \(\bar{y} \in \mathbb {R}^{p}_{>}\), Lemma A.1 in the appendix implies that