Abstract

In this paper, we consider the recovery of an unknown source in a time-fractional diffusion equation. To overcome the influence of boundary conditions on the source conditions, we construct the approximation from Jacobi polynomials and propose a modified Tikhonov regularization method to deal with the ill-posedness. Error estimates are obtained with a discrepancy principle as the parameter choice rule. Numerical results are also presented to demonstrate the effectiveness of the proposed method.

1 Introduction

In recent years, fractional differential equations have attracted much attention because they play an important role in many fields, such as biochemistry, physics, biology, chemistry, and finance; see [1–6]. Interest in fractional differential equations stems from the fact that fractional-order derivatives and integrals can describe the memory and hereditary properties of various materials and processes [7]. Time-fractional diffusion equations, obtained from the standard diffusion equation by replacing the standard time derivative with a time-fractional derivative, have been studied with respect to their direct problems in different contexts; see [8–16] and the references therein.

In some practical problems, the diffusion coefficients, initial data, or source term must be determined from additional measured data, which leads to inverse problems for fractional diffusion equations. Among them, inverse source problems for time-fractional diffusion equations have been widely studied. Many studies have addressed the uniqueness [17–19], conditional stability [18–20], and numerical computation [18–25] of these problems. Many of the methods are based on the eigenfunction system of a corresponding differential operator [19–24]. This leads to a difficulty: the methods achieve good convergence rates only when the solution satisfies certain boundary conditions. We illustrate this with the inverse source problem considered in this paper.

We consider the following unknown source problem in a time-fractional diffusion equation [20]:

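where \({}_{0}\partial _{t}^{\alpha}u\) is the left-sided Caputo fractional derivative of order α defined by

$$ {}_{0}\partial _{t}^{\alpha}u(x,t)=\frac{1}{\Gamma (1-\alpha )} \int _{0}^{t}\frac{\partial u(x,s)}{\partial s} \frac{ds}{(t-s)^{\alpha}},\quad 0< \alpha < 1, $$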

where \(\Gamma (\cdot )\) is the Gamma function. Our goal is to recover the source term \(f(x)\) from the final data \(u(x,T)=g(x)\). Since the data \(g(x)\) are usually obtained by observation, they inevitably contain errors, and we assume that the noisy data \(g^{\delta}\) satisfy

To obtain the solution of problem (1), we solve the following Sturm–Liouville Problem (SLP)

Its solution is

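If we define the generalized Mittag–Leffler function as

$$ E_{\alpha ,\beta}(z)=\sum_{k=0}^{\infty} \frac{z^{k}}{\Gamma (\alpha k+\beta )},\quad z\in \mathbb{C}, $$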

where \(\alpha >0\), \(\beta \in \mathbb{R}\), then it can be deduced that the solution of (1) has the form [20]:

Hence, we can obtain

Define operator \(K:f\rightarrow g\) as

then a singular system \(\{\sigma _{\ell}, \phi _{\ell}, \varphi _{\ell}\}\) of K can be given as

Hence, the inverse problem (1) can be transformed into solving the following compact operator equation

Based on the above singular system, a stable solution of (7) can be obtained by different regularization schemes; the complete procedure for the truncation method is given in [20]. In this framework, the following source condition is needed to obtain convergence rates for the regularization solution:

where \(E_{1}>0\) is a constant. In other words, the smoothness of the function is characterized by the decay rate of its expansion coefficients with respect to \(X_{\ell}\). However, it is well known that the Fourier-sine coefficients of a function can decay rapidly only if the function satisfies certain boundary conditions. Specifically, if the boundary condition

does not hold, then even if the function is sufficiently smooth, the condition (8) holds only for \(r<1\). The fundamental reason is that the SLP (3) is regular: a smooth function can be approximated by the eigenfunctions of (3) with spectral accuracy if and only if all its even derivatives vanish at the boundary [26].
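The slow decay can be observed numerically. The following is a minimal sketch, not taken from the paper: it assumes the normalized eigenfunctions \(X_{\ell}(x)=\sqrt{2}\sin (\ell \pi x)\) on \([0,1]\), uses the hypothetical test function \(f(x)=e^{x}\) (smooth, but nonzero at both endpoints), and computes the coefficients with a composite Simpson rule. The helper names `simpson` and `sine_coefficient` are illustrative only.

```python
import math

def simpson(func, a, b, n=4000):
    """Composite Simpson rule on [a, b] with n (even) subintervals."""
    h = (b - a) / n
    total = func(a) + func(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * func(a + i * h)
    return total * h / 3

def sine_coefficient(f, l):
    """Coefficient of f with respect to X_l(x) = sqrt(2) * sin(l*pi*x) on [0, 1].

    The normalization sqrt(2) is an assumption; the paper's formula for X_l
    is not reproduced here.
    """
    return simpson(lambda x: f(x) * math.sqrt(2) * math.sin(l * math.pi * x), 0.0, 1.0)

f = math.exp  # smooth test function with f(0) != 0 and f(1) != 0

for l in (1, 2, 4, 8, 16, 32):
    c = sine_coefficient(f, l)
    # l * |c_l| stays bounded away from zero, i.e. c_l decays only like 1/l.
    print(f"l = {l:2d}   c_l = {c: .3e}   l * |c_l| = {l * abs(c):.3f}")
```

For this test function the coefficients are known in closed form, \(c_{\ell}=\sqrt{2}\,\ell \pi (1-e(-1)^{\ell})/(1+\ell ^{2}\pi ^{2})\), so the product \(\ell |c_{\ell}|\) does not tend to zero, no matter how smooth \(f\) is in the interior.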

Hence, in this paper we take a different approach to approximating the source term f. A natural idea is to construct the approximation from Jacobi polynomials, which are eigenfunctions of singular SLPs. Jacobi polynomials have been recommended as basis functions and used to solve several inverse problems [27–30]. In this paper, we use the Jacobi polynomials instead of the eigenfunctions \(\{X_{\ell}\}\). Moreover, we introduce a modified Tikhonov method to overcome the ill-posedness of problem (7). This method has been used to solve a numerical differentiation problem in [30]. A discrepancy principle is used to choose the regularization parameter, and the new method obtains the convergence rate self-adaptively, without any restriction from boundary conditions.
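To illustrate why a polynomial basis removes the boundary restriction, the sketch below computes expansion coefficients in shifted Legendre polynomials \(P_{k}(2x-1)\), which are the Jacobi polynomials with both parameters equal to zero. This is an illustration under stated assumptions, not the paper's construction: the test function \(f(x)=e^{x}\), the Simpson quadrature, and the helper names are all hypothetical choices.

```python
import math

def legendre_values(kmax, t):
    """Return [P_0(t), ..., P_kmax(t)] via the three-term recurrence."""
    vals = [1.0, t]
    for k in range(1, kmax):
        vals.append(((2 * k + 1) * t * vals[k] - k * vals[k - 1]) / (k + 1))
    return vals[: kmax + 1]

def shifted_legendre_coefficients(f, kmax, n=20000):
    """Coefficients c_k = (2k+1) * int_0^1 f(x) P_k(2x-1) dx (composite Simpson, n even)."""
    h = 1.0 / n
    sums = [0.0] * (kmax + 1)
    for i in range(n + 1):
        x = i * h
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        fx = f(x)
        for k, p in enumerate(legendre_values(kmax, 2 * x - 1)):
            sums[k] += weight * fx * p
    return [(2 * k + 1) * s * h / 3 for k, s in enumerate(sums)]

# f(x) = exp(x) is smooth and does not vanish at x = 0 or x = 1.
coeffs = shifted_legendre_coefficients(math.exp, 10)
for k, c in enumerate(coeffs):
    print(f"k = {k:2d}   |c_k| = {abs(c):.3e}")
```

The printed magnitudes fall off faster than any fixed power of \(k\) even though \(f\) satisfies no boundary condition, which is the behavior that the Jacobi-based source conditions are meant to exploit.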

The outline of the paper is as follows: we construct the regularization solution by a modified Tikhonov regularization method with Jacobi polynomials in Sect. 2. In Sect. 3, the detailed theoretical analysis of the method is carried out. Some numerical examples are given in Sect. 4 to confirm the effectiveness of the method. Finally, we end this paper with a brief conclusion in Sect. 5.

2 A modified Tikhonov regularization method based on Jacobi polynomials

The Jacobi polynomial of degree k is defined by [26]

They satisfy the orthogonality relations

where

From [26], the following derivative relations hold

where

Since we consider the problem in the interval \(\Lambda =[0, 1]\), we introduce the functions \({L}_{k}(x)\), \(J_{k}(x)\) by coordinate transformation:

The following related properties can be easily obtained:

where

The orthogonality relations of \({L}_{k}\) and \(J_{k}\) can be given as:

with

The weighted space \(L^{2}_{\omega}(\Lambda )\) is defined as

For a function \(f\in L_{\omega}^{2}(\Lambda )\), we can obtain

with

By the Parseval equality

Let the vector \(\vec{\mathbf{f}}=(\hat{f}_{0},\hat{f}_{1},\ldots ,\hat{f}_{n},\ldots )^{T}\) contain all expansion coefficients of \(f\in L_{\omega}^{2}(\Lambda )\) with respect to \(J_{k}(x)\), and define the operators

where N is a nonnegative integer and e is Euler's number, the base of the natural logarithm.

We introduce the following variable Hilbert scale spaces

where \(\psi :[0,\infty )\rightarrow (0,\infty )\) is a nondecreasing function satisfying \(\lim_{x\rightarrow \infty}\psi (x)=\infty \). In this paper, we will consider the following two cases:

1. Finitely smoothing case:

   $$ \psi (x)=\phi _{r}(x)=\begin{cases} 1,& x< 1, \\ x^{r},& x\geq 1,\end{cases} \quad r>1. $$(16)

2. Infinitely smoothing case:

   $$ \psi (x)=\varphi _{\lambda}(x)=e^{\lambda x},\quad \lambda >0. $$(17)

In both cases, the regularization solution of problem (1) is defined as the minimizer of the following Tikhonov functional:

where ρ is a regularization parameter chosen by the Morozov discrepancy principle, i.e., ρ is the solution of the equation

with a given constant \(C>1\).
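The mechanics of the discrepancy principle can be sketched on a toy problem. The example below is an assumption-laden illustration and does not reproduce the functional (18) or the operator of this paper: it uses a hypothetical diagonal operator with singular values `sigma`, hypothetical penalty weights `w`, and bisection on \(\log \rho \), relying on the fact that the Tikhonov residual increases monotonically with ρ.

```python
import math

def tikhonov_diag(sigma, w, g, rho):
    """Minimizer of sum_k (sigma_k h_k - g_k)^2 + rho * sum_k (w_k h_k)^2."""
    return [s * gk / (s * s + rho * wk * wk) for s, wk, gk in zip(sigma, w, g)]

def residual_norm(sigma, w, g, rho):
    """Euclidean norm of the residual A h_rho - g for the diagonal toy operator."""
    h = tikhonov_diag(sigma, w, g, rho)
    return math.sqrt(sum((s * hk - gk) ** 2 for s, hk, gk in zip(sigma, h, g)))

def discrepancy_rho(sigma, w, g, delta, C=1.01, lo=1e-30, hi=1e30, iters=200):
    """Solve residual_norm(rho) = C * delta by bisection on log(rho)."""
    target = C * delta
    for _ in range(iters):
        mid = math.sqrt(lo * hi)  # geometric midpoint
        if residual_norm(sigma, w, g, mid) < target:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)

# Toy data (all values are illustrative assumptions).
n = 20
sigma = [1.0 / (k * k) for k in range(1, n + 1)]      # decaying singular values
w = [math.exp(k) for k in range(1, n + 1)]            # exponentially growing weights
h_true = [math.exp(-2 * k) for k in range(1, n + 1)]  # rapidly decaying coefficients
delta = 1e-3
noise = [delta / math.sqrt(n) * (-1) ** k for k in range(1, n + 1)]  # norm equals delta
g_delta = [s * h + e for s, h, e in zip(sigma, h_true, noise)]

rho = discrepancy_rho(sigma, w, g_delta, delta)
print("rho =", rho, " residual =", residual_norm(sigma, w, g_delta, rho))
```

Bisection is only one possible root finder; it is used here because it needs nothing beyond the monotonicity of the residual and the assumption that \(C\delta \) is smaller than the norm of the data.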

If we let \({A}=K\mathcal{J}\) and \(h=\mathcal{J}\vec{\mathbf{{h}}}\), then (18) becomes

hence, the minimizer of (18) can be given as

where \(\vec{\mathbf{{h}}}_{\rho}^{\delta}\) is the solution of equation

In this case, the equation (19) converts to

Lemma 1

[20] For \(0<\alpha <1\), \(E_{\alpha ,1}\) is a monotone decreasing function for \(t \geq 0\) and we have

Lemma 2

[31] If we let \(\mathcal{B}=A\mathcal{R}^{-1}\), then by using functional calculus, we have

The function \(d_{\rho}:(0,\|\mathcal{B}\|^{2}]\rightarrow (0,\infty )\) such that

and

3 Convergence rates of the regularization solution

In this section, we derive the error estimates of the method. For any \(f\in W_{2}^{\psi}\), let \(\vec{\mathbf{{f}}}=(\hat{f}_{0},\hat{f}_{1},\ldots ,\hat{f}_{n},\ldots )^{T}\),

and we define \(\vec{\mathbf{{f}}}_{\rho ,N}\) by

It should be noted that the parameter N is used only in the theoretical derivation and does not appear in practical computations. In each of the proofs below, N has to be chosen appropriately. This approach is borrowed from [31].

It is easy to obtain that

Lemma 3

If \(f\in W_{2}^{\psi}\), then we have

Proof

□

Now, we define an operator K̂ as

Then, it is easy to see that

Lemma 4

If \(f\in W_{2}^{\psi}\), then there exists a constant \(c_{1}>0\) such that

Proof

Let \(h_{1}=\mathcal{J}\vec{\mathbf{f}}\), \(h_{2}={\mathcal{J}}(\mathcal{P}_{N}\vec{\mathbf{f}})\) and \(q_{i}=\hat{K}h_{i}\), \(i=1,2\). Then, it can be deduced that \(q_{i}\) are the solutions of the following equations:

Then, from (12), we can obtain

and

By using the Cauchy inequality, we have

Hence, we can obtain

□

Lemma 5

If \(f\in W_{2}^{\psi}\), then we have

Proof

Using the triangle inequality, (2), (23), and Lemma 4, we obtain

Due to the triangle inequality, (30), (2), and Lemmas 2–4

Let \(S_{\rho}=I-d_{\rho}(\mathcal{B}\mathcal{B}^{*})\mathcal{B} \mathcal{B}^{*}\), then we use the representation \(g^{\delta}-A\vec{\mathbf{{h}}}_{\rho}^{\delta}=S_{\rho}g^{ \delta}\) and obtain from the triangle inequality, (2), and Lemmas 2–4

□

3.1 Convergence rates for \(\psi (x)=\phi _{r}(x)\)

Lemma 6

If \(f\in W_{2}^{\psi}\) with \(\psi (x)=\phi _{r}(x)\) \((r>1)\), then there exists a constant \(c_{2}\) such that

Proof

By using the Hölder inequality

From (35)

We can finish the proof by using (37), (38), and (33). □

Lemma 7

For the vector sequences \(\vec{\mathbf{{h}}}^{\delta}=(\hat{h}_{1}^{\delta},\hat{h}^{\delta}_{2}, \ldots ,\hat{h}^{\delta}_{n},\ldots )^{T}\), if

hold with some nonnegative constants \(c_{3}\), \(c_{4}\), \(c_{5}\), then there exists a constant \(M_{1}\) such that

Proof

Using the properties of the exponential function and the power function,

holds for a constant \(\delta _{0}\). Now, we prove the lemma for \(\delta <\delta _{0}\), let

then by using the triangle inequality

For the first term \(I_{1}\),

For the second term \(I_{2}\),

This finishes the proof. □

Theorem 8

Suppose that \(f\in W_{2}^{\psi}\) with \(\psi (x)=\phi _{r}(x)\) (\(r>1\)) and the condition (2) holds. If the regularization solution \(h_{\rho}^{\delta}\) is defined by (21)–(23), then

Proof

From Lemma 5,

Now, we choose N such that

then we can obtain that there exist constants \(C_{1}\), \(C_{2}\), \(C_{3}\) such that

Hence, from Lemma 7, there exists a constant \(M_{2}\) such that

Hence, by using the triangle inequality

Moreover, from (2), (23), and the triangle inequality

It follows from Lemma 6 that the assertion of this theorem is true. □

3.2 Convergence rates for \(\psi (x)=\varphi _{\lambda}(x) (\lambda >0)\)

Lemma 9

If the sequence of functions \(f^{\delta}\) satisfies

where \(c_{6}\), \(c_{7}\) are two fixed nonnegative constants, then we can obtain

Proof

Let

then we have

and

□

Theorem 10

Suppose that \(f\in W_{2}^{\psi}\) with \(\psi (x)=\varphi _{\lambda}(x)\) \((\lambda >0)\) and the condition (2) holds. If the regularization solution \(h_{\rho}^{\delta}\) is defined by (21)–(23), then

Proof

From Lemma 5, for \(0<\lambda <1\),

Now, we choose N such that

then

and

hold with two constants \(c_{4}\) and \(c_{5}\). Hence, it follows from the Hölder inequality that there exists a constant \(M_{3}\) such that

Hence, we can obtain

Secondly, for \(\lambda >1\), noting that \(\vec{\mathbf{{h}}}_{\rho}^{\delta}\) is the minimizer of (18), hence, we can obtain

Hence,

Therefore,

Moreover, by the triangle inequality

It follows from Lemma 9 that the assertion of this theorem is true. □

4 Numerical experiments

In this section, we present several numerical results from our method. Let \(x_{i}=\frac{i}{M}\), \(i=0,1, \ldots , M\). For noisy data, we use

where \(\{\epsilon _{i} \}_{i=1}^{N}\) are generated by the Matlab expression \((2*\operatorname{rand}(N, 1)-1) * \delta _{1}\). Since the exact solution of the fractional diffusion equation is difficult to obtain, we generate the additional data \(g(x)\) by the method in [20].

We obtain \(\vec{\mathbf{{h}}}_{\rho}^{\delta}\) approximately by solving the following equation:

where

with \(a_{l}=\frac{1-E_{\alpha ,1}(-l^{2}\pi ^{2})}{l^{2}\pi ^{2}}\), \(l=0,1, \ldots , N\). Here \(J_{k}(x)\), \(k=0,1, \ldots , M\), is defined in (11), \(\mathbf{g}^{\delta}= (g^{\delta}(x_{0}), g^{\delta}(x_{1}), \ldots , g^{\delta}(x_{M}) )^{T}\), and B is a diagonal matrix with the entries \((1, e^{2}, e^{4}, \ldots , e^{2 M} )^{T}\) on the main diagonal.
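For the value \(\alpha =0.5\) used in most of the experiments, the coefficients \(a_{l}\) can be evaluated without a general Mittag–Leffler routine, because \(E_{1/2,1}(-x)=e^{x^{2}}\operatorname{erfc}(x)\). The sketch below is one possible way to do this and is not the authors' implementation: the helper names are hypothetical, the index starts at \(l=1\) to avoid division by zero, and the cutoff 20 between the direct formula and the asymptotic expansion is an ad hoc choice.

```python
import math

def erfcx(x):
    """Scaled complementary error function exp(x^2) * erfc(x) for x >= 0."""
    if x < 20.0:
        return math.exp(x * x) * math.erfc(x)
    # Asymptotic expansion for large x; avoids overflow of exp(x^2).
    y = 1.0 / (2.0 * x * x)
    series = 1.0 - y + 3.0 * y**2 - 15.0 * y**3 + 105.0 * y**4
    return series / (x * math.sqrt(math.pi))

def a_coefficient(l):
    """a_l = (1 - E_{1/2,1}(-l^2 pi^2)) / (l^2 pi^2) for alpha = 0.5, l >= 1."""
    lam = (l * math.pi) ** 2
    return (1.0 - erfcx(lam)) / lam

for l in (1, 2, 4, 8, 16):
    lam = (l * math.pi) ** 2
    # a_l * lam approaches 1, so a_l behaves like 1 / (l^2 pi^2) for large l.
    print(f"l = {l:2d}   a_l = {a_coefficient(l):.6e}   a_l * l^2 pi^2 = {a_coefficient(l) * lam:.6f}")
```

The output shows that \(a_{l}\) decays like \(l^{-2}\), so small data errors in high-frequency components are amplified by a factor of order \(l^{2}\) when the source is recovered.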

Then, the regularization parameter ρ is chosen by

with \(C=1.01\), where \(\hat{\delta}=\sqrt{M+1} \delta _{1}\).
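The choice \(\hat{\delta}=\sqrt{M+1} \delta _{1}\) can be motivated by a short computation: if every component of the noise vector is bounded by \(\delta _{1}\) in absolute value, its Euclidean norm over the \(M+1\) nodes is at most \(\sqrt{M+1} \delta _{1}\). The sketch below mimics the Matlab noise generation in Python under the assumption of additive noise; the function \(\sin (\pi x)\) is only a hypothetical stand-in for the data \(g\), which in the paper comes from the method in [20].

```python
import math
import random

def add_uniform_noise(values, delta1, rng):
    """Perturb each value by independent noise drawn uniformly from [-delta1, delta1]."""
    return [v + (2.0 * rng.random() - 1.0) * delta1 for v in values]

M = 256
delta1 = 1e-2
x = [i / M for i in range(M + 1)]
g = [math.sin(math.pi * xi) for xi in x]  # hypothetical stand-in for g(x_i)

rng = random.Random(0)  # fixed seed so that the run is reproducible
g_delta = add_uniform_noise(g, delta1, rng)

noise_norm = math.sqrt(sum((a - b) ** 2 for a, b in zip(g_delta, g)))
delta_hat = math.sqrt(M + 1) * delta1
print("||g_delta - g||_2 =", noise_norm, "  delta_hat =", delta_hat)
```

The bound is a worst case: for uniformly distributed noise the observed norm is typically noticeably smaller than \(\hat{\delta}\).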

We investigate four numerical examples. We take \(T=1\) and \(M=N=256\) in all of the examples. The relative error of the numerical solution is measured by

We also give the comparison of the numerical results between our method (M1) and the one in [20] (M2).

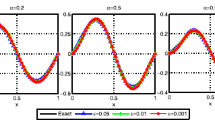

Example 1

Take

then \(f(0)\neq 0\), \(f(1)\neq 0\) and \(f(x)\) is smooth.

Figure 1(a) compares the exact solution with the numerical approximations for \(\delta _{1}=1\text{e-2}\), and Fig. 1(b) shows how \(e_{r}(f)\) varies with \(\delta _{1}\). The relative errors for various α and \(\delta _{1}\) are presented in Table 1. The results of M1 are much better than those of M2 when the boundary condition does not hold. The numerical results differ little for different α; hence, in the following experiments we only give the results for \(\alpha =0.5\).

Numerical results of Example 1 with \(\alpha =0.5\)

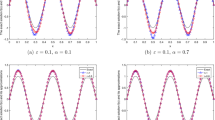

Example 2

[20] We take

then \(f(0)=f(1)=0\) and \(f(x)\) is smooth.

The relative errors are listed in Table 2. Figure 2(a) compares the exact solution with the numerical solutions, and Fig. 2(b) shows how \(e_{r}(f)\) changes with \(\delta _{1}\). The results of M1 are still better than those of M2, and the advantage of M1 becomes more pronounced as \(\delta _{1}\) decreases.

Numerical results of Example 2 with \(\alpha =0.5\)

Next, we consider the case of piecewise-smooth functions. Example 3 does not satisfy the boundary condition but Example 4 does.

Example 3

Take

Example 4

Take

From the results of Figs. 3 and 4 and Tables 3 and 4, we can see that the results of the two examples using M1 are close. However, the results of Example 4 using M2 are obviously better than those of Example 3. The results of M1 in Example 3 are better than those of M2, but the results of M2 in Example 4 are slightly better than those of M1. These results are consistent with theoretical analysis.

Numerical results of Example 3 with \(\alpha =0.5\)

Numerical results of Example 4 with \(\alpha =0.5\)

5 Conclusion

To overcome the dependence of previous methods on boundary conditions, we present a modified Tikhonov method based on Jacobi polynomials to identify an unknown source in a time-fractional diffusion equation. The convergence results of the new method are no longer restricted by boundary conditions, and the method has obvious advantages when the solution has high smoothness.

Availability of data and materials

No datasets were generated or analyzed during the current study.

References

[1] Benson, D.A., Wheatcraft, S.W., Meerschaert, M.M.: Application of a fractional advection-dispersion equation. Water Resour. Res. 36(6), 1403–1412 (2000)

[2] Liu, F., Anh, V.V., Turner, I., Zhuang, P.: Time fractional advection-dispersion equation. J. Appl. Math. Comput. 13(1), 233–245 (2003)

[3] Hall, M.G., Barrick, T.R.: From diffusion-weighted MRI to anomalous diffusion imaging. Magn. Reson. Med. 59(3), 447–455 (2008)

[4] Henry, B., Langlands, T., Wearne, S.: Fractional cable models for spiny neuronal dendrites. Phys. Rev. Lett. 100(12), 128103 (2008)

[5] Santamaria, F., Wils, S., De Schutter, E., Augustine, G.J.: Anomalous diffusion in Purkinje cell dendrites caused by spines. Neuron 52(4), 635–648 (2006)

[6] Scalas, E., Gorenflo, R., Mainardi, F.: Fractional calculus and continuous-time finance. Phys. A, Stat. Mech. Appl. 284(1–4), 376–384 (2000)

[7] Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications. Elsevier, Amsterdam (1998)

[8] Luchko, Y.: Maximum principle and its application for the time-fractional diffusion equations. Fract. Calc. Appl. Anal. 14(1), 110–124 (2011)

[9] Luchko, Y.: Some uniqueness and existence results for the initial-boundary-value problems for the generalized time-fractional diffusion equation. Comput. Math. Appl. 59(5), 1766–1772 (2010)

[10] Agrawal, O.P.: Solution for a fractional diffusion-wave equation defined in a bounded domain. Nonlinear Dyn. 29(1), 145–155 (2002)

[11] Duan, J.-S.: Time- and space-fractional partial differential equations. J. Math. Phys. 46(1), 013504 (2005)

[12] Langlands, T., Henry, B.I.: The accuracy and stability of an implicit solution method for the fractional diffusion equation. J. Comput. Phys. 205(2), 719–736 (2005)

[13] Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225(2), 1533–1552 (2007)

[14] Li, X., Xu, C.: A space-time spectral method for the time fractional diffusion equation. SIAM J. Numer. Anal. 47(3), 2108–2131 (2009)

[15] Deng, W.H.: Finite element method for the space and time fractional Fokker–Planck equation. SIAM J. Numer. Anal. 47(1), 204–226 (2009)

[16] Murio, D.A.: Implicit finite difference approximation for time fractional diffusion equations. Comput. Math. Appl. 56(4), 1138–1145 (2008)

[17] Cheng, J., Nakagawa, J., Yamamoto, M., Yamazaki, T.: Uniqueness in an inverse problem for a one-dimensional fractional diffusion equation. Inverse Probl. 25(11), 115002 (2009)

[18] Dien, N.M., Hai, D.N.D., Viet, T.Q., Trong, D.D.: On Tikhonov’s method and optimal error bound for inverse source problem for a time-fractional diffusion equation. Comput. Math. Appl. 80(1), 61–81 (2020)

[19] Wei, T., Wang, J.: A modified quasi-boundary value method for an inverse source problem of the time-fractional diffusion equation. Appl. Numer. Math. 78, 95–111 (2014)

[20] Zhang, Z., Wei, T.: Identifying an unknown source in time-fractional diffusion equation by a truncation method. Appl. Math. Comput. 219(11), 5972–5983 (2013)

[21] Wang, J.G., Wei, T.: Quasi-reversibility method to identify a space-dependent source for the time-fractional diffusion equation. Appl. Math. Model. 39(20), 6139–6149 (2015)

[22] Yang, F., Liu, X., Li, X.-X., Ma, C.-Y.: Landweber iterative regularization method for identifying the unknown source of the time-fractional diffusion equation. Adv. Differ. Equ. 2017(1), 388 (2017)

[23] Ma, Y.K., Prakash, P., Deiveegan, A.: Generalized Tikhonov methods for an inverse source problem of the time-fractional diffusion equation. Chaos Solitons Fractals 108, 39–48 (2018)

[24] Wen, J., Liu, Z.X., Yue, C.W., Wang, S.J.: Landweber iteration method for simultaneous inversion of the source term and initial data in a time-fractional diffusion equation. J. Appl. Math. Comput. 68, 3219–3250 (2022)

[25] Tuan, N.H., Kirane, M., Hoan, L.V.C., Long, L.D.: Identification and regularization for unknown source for a time-fractional diffusion equation. Comput. Math. Appl. 73(6), 931–950 (2017)

[26] Shen, J., Tang, T., Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Berlin (2011)

[27] Ammari, A., Karoui, A.: Stable inversion of the Abel integral equation of the first kind by means of orthogonal polynomials. Inverse Probl. 26(10), 105005 (2010)

[28] Lu, S., Naumova, V., Pereverzev, S.V.: Legendre polynomials as a recommended basis for numerical differentiation in the presence of stochastic white noise. J. Inverse Ill-Posed Probl. 20, 1–22 (2012)

[29] Mhaskar, H.N., Naumova, V., Pereverzyev, S.V.: Filtered Legendre expansion method for numerical differentiation at the boundary point with application to blood glucose predictions. Appl. Math. Comput. 224, 835–847 (2013)

[30] Zhao, Z., You, L.: A numerical differentiation method based on Legendre expansion with super order Tikhonov regularization. Appl. Math. Comput. 393, 125811 (2021)

[31] Nair, S.T., Pereverzev, S.V., Tautenhahn, U.: Regularization in Hilbert scales under general smoothing conditions. Inverse Probl. 21(21), 1851–1869 (2005)

Acknowledgements

The authors would like to thank the editor and the anonymous referees for their valuable comments and helpful suggestions that improved the quality of our paper.

Funding

The authors received no financial support for this article.

Author information

Contributions

All authors have contributed to all aspects of this manuscript and have reviewed its final draft. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tang, HD., Zhao, ZY., Yu, K. et al. Determining an unknown source in a time-fractional diffusion equation based on Jacobi polynomials expansion with a modified Tiknonov regularization. Adv Cont Discr Mod 2023, 33 (2023). https://doi.org/10.1186/s13662-023-03779-z
