Abstract

Academic misconduct is a threat to the validity and reliability of online examinations, and media reports suggest that misconduct spiked dramatically in higher education during the emergency shift to online exams caused by the COVID-19 pandemic. This study reviewed survey research to determine how common it is for university students to admit cheating in online exams, and how and why they do it. We also assessed whether these self-reports of cheating increased during the COVID-19 pandemic, and evaluated the quality of the research evidence which addressed these questions. 25 samples were identified from 19 studies, including 4672 participants, going back to 2012. Online exam cheating was self-reported by a substantial minority (44.7%) of students in total. Pre-COVID this was 29.9%, but during COVID cheating jumped to 54.7%, although these samples were more heterogeneous. Individual cheating was more common than group cheating, and the most common reason students reported for cheating was simply that there was an opportunity to do so. Remote proctoring appeared to reduce the occurrence of cheating, although data were limited. However, there were a number of methodological features which reduce confidence in the accuracy of all these findings. Most samples were collected using designs which make it likely that online exam cheating is under-reported, for example using convenience sampling, a modest sample size and insufficient information to calculate response rate. No studies considered whether samples were representative of their population. Future approaches to online exams should consider how the basic validity of examinations can be maintained, considering the substantial numbers of students who appear to be willing to admit engaging in misconduct. Future research on academic misconduct would benefit from using large representative samples and guaranteeing participants anonymity.

Introduction

Distance learning came to the fore during the global COVID-19 pandemic. Distance learning, also referred to as e-learning, blended learning or mobile learning (Zarzycka et al., 2021) is defined as learning with the use of technology where there is a physical separation of students from the teachers during the active learning process, instruction and examination (Armstrong-Mensah et al., 2020). This physical separation was key to a sector-wide response to reducing the spread of coronavirus.

COVID prompted a sudden, rapid and near-total adjustment to distance learning (Brown et al., 2022; Pokhrel & Chhetri, 2021). We all, staff and students, had to learn a lot, very quickly, about distance learning. Pandemic-induced ‘lockdown learning’ continued, in some form, for almost 2 years in many countries, prompting predictions that higher education would be permanently changed by the pandemic, with online/distance learning becoming much more common, even the norm (Barber et al., 2021; Dumulescu & Muţiu, 2021). One obvious potential change would be the widespread adoption of online assessment methods. Online exams offer students increased flexibility, for example the opportunity to sit an exam in their own homes. This may also reduce some of the anxiety experienced when attending in-person exams in an exam hall, and potentially reduce the administrative cost to universities.

However, assessment poses many challenges for distance learning. Summative assessments, including exams, are the basis for making decisions about the grading and progress of individual students, while aggregated results can inform educational policy such as curriculum or funding decisions (Shute & Kim, 2014). Thus, it is essential that online summative assessments can be conducted in a way that allows for their basic reliability and validity to be maintained. During the pandemic, universities very rapidly shifted in-person exams to an online format, with limited time to ensure that these methods were secure. There were subsequent media reports that academic misconduct was now ‘endemic’, with universities supposedly ‘turning a blind eye’ towards cheating (e.g. Henry, 2022; Knox, 2021). However, it is unclear whether this media anxiety is reflected in the real-world experience in universities.

Dawson defines e-cheating as ‘cheating that uses or is enabled by technology’ (Dawson, 2020, p. 4). Cheating itself is then defined as the gaining of an unfair advantage (Case and King 2007, in Dawson, 2020, p. 4). Cheating poses an obvious threat to the validity of online examinations, a format which relies heavily on technology. Noorbehbahani and colleagues recently reviewed the research literature on a specific form of e-cheating: online exam cheating in higher education. They found that students use a variety of methods to gain an unfair advantage, including accessing unauthorized materials such as notes and textbooks, using an additional device to go online, collaborating with others, and even outsourcing the exam to be taken by someone else. These findings map onto the work of Dawson (2020), who found a similar taxonomy when considering ‘e-cheating’ more generally. These behaviours can be driven by a variety of motivations, including a fear of failure, peer pressure, a perception that others are cheating, and the ease with which students can cheat (Noorbehbahani et al., 2022). However, it remains unclear how many students are actually engaged in these cheating behaviours. Understanding the scale of cheating is an important pragmatic consideration when determining how, or even if, it could/should be addressed. There is an extensive literature on the incidence of other types of misconduct, but cheating in online exams has received less attention than other forms of misconduct such as plagiarism (Garg & Goel, 2022).

One seemingly obvious response to concerns about cheating in online exams is to use remote proctoring systems wherein students are monitored through webcams and use locked-down browsers. However, the efficacy of these systems is not yet clear, and their use has been controversial, with students feeling that they are ‘under surveillance’, anxious about being unfairly accused of cheating, or worried about technological problems (Marano et al., 2023). A recent court ruling in the USA found that the use of a remote proctoring system to scan a student’s private residence prior to taking an online exam was unconstitutional (Bowman, 2022), although, at the time of writing, this case is ongoing (Witley, 2023). There is already a long history of legal battles between the proctoring companies and their critics (Corbyn, 2022), and it is still unclear whether these systems actually reduce misconduct. Alternatives have been offered in the literature, including guidance for how to prepare online exams in a way that reduces the opportunity for misconduct (Whisenhunt et al., 2022), although it is unclear whether this guidance is effective either.

There is a large body of research literature which examines the prevalence of different types of academic dishonesty and misconduct. Much of this research is in the form of survey-based self-report studies. There are some obvious problems with using self-report as a measure of misconduct; it is a ‘deviant’ or ‘undesirable’ behaviour, and so those invited to participate in survey-based research have a disincentive to respond truthfully, if at all, especially if there is no guarantee of anonymity. There is also some evidence that certain demographic characteristics associated with an increased likelihood of engaging in academic misconduct are also predictive of a decreased likelihood of responding voluntarily to surveys, meaning that misconduct is likely under-reported when a non-representative sampling method is used such as convenience sampling (Newton, 2018).

Some of these issues with quantifying academic misconduct can be partially addressed by the use of rigorous research methodology, for example using representative samples with a high response rate, and clear, unambiguous survey items (Bennett et al., 2011; Halbesleben & Whitman, 2013). Guarantees of anonymity are also essential for respondents to feel confident about answering honestly, especially when the research is being undertaken by the very universities where participants are studying. A previous systematic review of academic misconduct found that self-report studies are often undertaken with small, convenience samples with low response rates (Newton, 2018). Similar findings were reported when reviewing the reliability of research into the prevalence of belief in the Learning Styles neuromyth, suggesting that this is a wider concern within survey-based education research (Newton & Salvi, 2020).

However, self-report remains one of the most common ways that academic misconduct is estimated, perhaps in part because there are few other ways to meaningfully measure it. There is also a basic, intuitive objective validity to the method; asking students whether they have cheated is a simple and direct approach, when compared to other indirect approaches to quantifying misconduct, based on (for example) learner analytics, originality scores or grade discrepancies. There is some evidence that self-report correlates positively with actual behaviour (Gardner et al., 1988), and that data accuracy can be improved by using methods which incentivize truth-telling (Curtis et al., 2022).

Objectives

Here we undertook a systematic search of the literature in order to identify research which studied the prevalence of academic dishonesty in summative online examinations in Higher Education. The research questions were as follows.

1. How common is self-report of cheating in online exams in Higher Education? (This was the primary research question, and studies were only included if they addressed this question).
2. Did cheating in online exams increase during the COVID-19 pandemic?
3. What are the most common forms of cheating?
4. What are student motivations for cheating?
5. Does online proctoring reduce the incidence of self-reported online exam cheating?

Methods

The review was conducted according to the principles of the PRISMA statement for reporting systematic reviews (Moher et al., 2009) updated for 2020 (Page et al., 2021). We adapted this methodology based on previous work systematically reviewing survey-based research in education, misbelief and misconduct (Fanelli, 2009; Newton, 2018; Newton & Salvi, 2020), based on the limited nature of the outcomes reported in these studies (i.e. percentage of students engaging in a specific behaviour).

Search Strategy and Information Sources

Searches were conducted in July and August 2022. Searches were first undertaken using the ERIC education research database (eric.ed.gov) and then with Google Scholar. We used Google Scholar since it covers grey literature (Haddaway et al., 2015), including unpublished Masters and PhD theses (Jamali & Nabavi, 2015) as well as preprints. The Google Scholar search interface is limited, and the search returns can include non-research documents such as citations, university policies and handbooks on academic integrity, and multiple versions of papers (Boeker et al., 2013). It is also not possible to exclude the results of one search from another. Thus it is not possible for us to report accurately the numbers of included papers returned from each term. ‘Daisy chaining’ was also used to identify relevant research from studies that had already been identified using the aforementioned literature searches, and recent reviews on the subject (Butler-Henderson & Crawford, 2020; Chiang et al., 2022; Garg & Goel, 2022; Holden et al., 2021; Noorbehbahani et al., 2022; Surahman & Wang, 2022).

Selection Process

Search results were individually assessed against the inclusion/exclusion criteria, starting with the title, followed by the abstract and then the full text. If a study clearly did not meet the inclusion criteria based on the title then it was excluded. If the author was unsure, then the abstract was reviewed. If there was still uncertainty, then the full text was reviewed. When a study met the inclusion criteria (see below), the specific question used in that study to quantify online exam cheating was then itself also used as a search term. Thus the full list of search terms used is shown in Supplementary Online Material S1.

Eligibility Criteria

The following criteria were used to determine whether to include samples. Many studies included multiple datasets (e.g. samples comprising different groups of students, across different years). The criteria here were applied to individual datasets.

Inclusion Criteria

- Participants were asked whether they had ever cheated in an online exam (self-report).
- Participants were students in Higher Education.
- Reported both total sample size and percent of respondents answering yes to the relevant exam cheating questions, or sufficient data to allow those metrics to be calculated.
- English language publication.
- Published 2013-present, with data collected 2012-present. We wanted to evaluate a 10 year timeframe. In 2013, at the beginning of this time window, the average time needed to publish an academic paper was 12.2 months, ranging from 9 months (chemistry) to 18 months (Business) (Björk & Solomon, 2013). It would therefore be reasonable to conclude that a paper published in 2013 was most likely submitted in 2012. Thus we included papers whose publication date was 2013 onwards, unless the manuscript itself specifically stated that the data were collected prior to 2012.

Exclusion Criteria

- Asking participants whether they would cheat in exams (e.g. Morales-Martinez et al., 2019), or not allowing for a distinction between self-report of intent and actual cheating (e.g. Ghias et al., 2014).
- Phrasing of survey items in a way that does not allow for frequency of online exam cheating to be specifically identified according to the criteria above. Wherever necessary, study authors were contacted to clarify.
- Asking participants ‘how often do others cheat in online exams’.
- Asking participants about helping other students to cheat.
- Schools, community colleges/further education, MOOCs.
- Cheating in formative exams, or no distinction made between formative and summative assessments, e.g. quizzes/exams (e.g. Alvarez, Homer et al., 2022; Costley, 2019).
- Estimates of cheating from learning analytics or other methods which did not include directly asking participants if they had cheated.
- Published in a predatory journal (see below).

Predatory Journal Criteria

Predatory journals and publishers are defined as “entities which prioritize self-interest at the expense of scholarship and are characterised by false or misleading information, deviation from best editorial and publication practices, a lack of transparency, and/or the use of aggressive and indiscriminate solicitation practices.” (Grudniewicz et al., 2019). The inclusion of predatory journals in literature reviews may therefore have a negative impact on the data, findings and conclusions. We followed established guidelines for the identification and exclusion of predatory journals from the findings (Rice et al., 2021):

- Each study which met the inclusion criteria was checked for spelling, punctuation and grammar errors as well as logical inconsistencies.
- Every included journal was checked against open access criteria:
  - If the journal was listed on the Directory of Open Access Journals (DOAJ) database (DOAJ.org) then it was considered to be non-predatory.
  - If the journal was not present in the DOAJ database, we looked for it in the Committee on Publication Ethics (COPE) database (publicationethics.org). If the journal was listed on the COPE database then it was considered to be non-predatory.

Only one paper met these criteria, containing logical inconsistencies and not listed on either DOAJ or COPE. For completeness we also searched an informal list of predatory journals (https://beallslist.net) and the journal was listed there. Thus the study was excluded.

Data Items

All data were extracted by both authors independently. Where the extracted data differed between authors, the discrepancy was resolved through discussion. Data extracted were, where possible, as follows:

- Author/date
- Year of Publication
- Year study was undertaken. If this was a range (e.g. Nov 2016-Apr 2017) then the most recent year was used as the data point (e.g. 2017 in the example). If it was not reported when the study was undertaken, then we recorded the year that the manuscript was submitted. If none of these data were available then the publication year was entered as the year that the study was undertaken.
- Publication type. Peer reviewed journal publication, peer reviewed conference proceedings or dissertation/thesis.
- Population size. The total number of participants in the population, from which the sample is drawn and supposed to represent. For example, if the study is surveying ‘business students at University X’, is it clear how many business students are currently at University X?
- Number Sampled. The number of potential participants, from the population, who were asked to fill in the survey.
- N. The number of survey respondents.
- Cheated in online summative examinations. The number of participants who answered ‘yes’ to having cheated in online exams. Some studies recorded the frequency of cheating on a scale, for example a 1–5 Likert scale from ‘always’ to ‘never’. In these cases, we collapsed all positive reports into a single number of participants who had ever cheated in online exams. Some studies did not ask for a total rate of cheating (i.e. cheating by any/all methods) and so, for analysis purposes the method with the highest rate of cheating was used (see Results).
- Group/individual cheating. Where appropriate, the frequency of cheating via different methods was recorded. These were coded according to the highest level of the framework proposed by Noorbehbahani (Noorbehbahani et al., 2022), i.e. group vs. individual. More fine-grained analysis was not possible due to the number and nature of the included studies.
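
The coding rule for the ‘cheated in online summative examinations’ item can be illustrated with a short sketch. This is only an illustration under stated assumptions: the function names, the scale labels and all counts are hypothetical and are not taken from any included study.

```python
from typing import Dict, Optional


def ever_cheated_count(scale_counts: Dict[str, int], never_label: str = "never") -> int:
    """Collapse a frequency scale into the number of participants who ever cheated.

    Every response other than the 'never' label counts as a positive report.
    """
    return sum(n for label, n in scale_counts.items() if label != never_label)


def sample_cheating_rate(
    n_respondents: int,
    total_cheated: Optional[int],
    cheated_by_method: Dict[str, int],
) -> float:
    """Return the percentage of respondents coded as having cheated.

    If a study reports no overall total, fall back to the single method
    with the highest count, as described in the data item above.
    """
    if total_cheated is None:
        total_cheated = max(cheated_by_method.values())
    return 100.0 * total_cheated / n_respondents


# Hypothetical Likert responses (invented numbers, for illustration only).
likert = {"always": 2, "often": 5, "sometimes": 10, "rarely": 13, "never": 70}
collapsed = ever_cheated_count(likert)

# Hypothetical study that reports per-method counts but no overall total.
fallback_rate = sample_cheating_rate(100, None, {"web search": 40, "shared answers": 15})
```

Using the highest single method as a stand-in for the total can only equal or understate the true total, since a participant may have used a different method from the most common one.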

Study Risk of Bias and Quality metrics

- Response rate. Defined as “the percentage of people who completed the survey after being asked to do so” (Halbesleben & Whitman, 2013).
- Method of sampling. As one of the following; convenience sampling, where all members of the population were able to complete the survey, but data were analysed from those who voluntarily completed it. ‘Unclassifiable’ where it was not possible to determine the sampling method based on the data provided (no other sampling methods were used in the included studies).
- Ethics. Was it reported whether ethical/IRB approval had been obtained? (note that a recording of ‘N’ here does not mean that ethical approval was not obtained, just that it is not reported)
- Anonymity. Were participants assured that they were answering anonymously? Students who are found to have cheated in exams can be given severe penalties, and so a statement of anonymity (not just confidentiality) is important for obtaining meaningful data.
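
As a minimal sketch of the response rate metric defined above, the calculation needs both the number of respondents (N) and the number sampled. The function name and the example figures are hypothetical; returning `None` when the number sampled is unreported is an assumption about how such cases might be coded.

```python
from typing import Optional


def response_rate(n_respondents: int, number_sampled: Optional[int]) -> Optional[float]:
    """Percentage of invited participants who completed the survey.

    Returns None when the number sampled was not reported, because the
    rate cannot then be calculated.
    """
    if number_sampled is None or number_sampled <= 0:
        return None
    return 100.0 * n_respondents / number_sampled


# Hypothetical examples (invented numbers).
reported = response_rate(50, 200)
unreported = response_rate(50, None)
```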

Synthesis Methods

Data are reported as mean ± SEM unless otherwise stated. Datasets were tested for normal distribution using a Kolmogorov-Smirnov test prior to analysis and parametric tests were used if the data were found to be normally distributed. The details of the specific tests used are in the relevant results section.

Results

25 samples were identified from 19 studies, containing a total of 4672 participants. Three studies contained multiple distinct samples from different participants (e.g. data were collected in different years (Case et al., 2019; King & Case, 2014), or were split by two different programmes of study (Burgason et al., 2019), or by whether exams were proctored or not (Owens, 2015)). Thus, these samples were treated as distinct in the analysis since they represent different participants. Multiple studies asked the same groups of participants about different types of cheating, or the conditions under which cheating happens. The analysis of these is explained in the relevant results subsection. A summary of the studies is in Table 1. The detail of each individual question asked to study participants is in supplementary online data S2.

Descriptive Metrics of Studies

Sampling Method

23/25 samples were collected using convenience sampling. The remaining two did not provide sufficient information to determine the method of sampling.

Population Size

Only two studies reported the population size.

Sample Size

The average sample size was 188.7 ± 36.16.

Response Rate

Fifteen of the samples did not report sufficient information to allow a response rate to be calculated. The ten remaining samples returned an average response rate of 55.6% ±10.7, with a range from 12.2 to 100%.

Anonymity

Eighteen of the 25 samples (72%) stated that participant responses were collected anonymously.

Ethics

Seven of the 25 samples (28%) reported that ethical approval was obtained for the study.

How Common is Self-Reported Online Exam Cheating in Higher Education?

44.7% of participants (2088/4672) reported engaging in some form of cheating in online exams. This analysis included those studies where total cheating was not recorded, and so the most commonly reported form of cheating was substituted in. To check the validity of this inclusion, a separate analysis was conducted of only those studies where total cheating was recorded. In this case, 42.5% of students (1574/3707) reported engaging in some form of cheating. An unpaired t-test was used to compare the percentage cheating from each group (total vs. highest frequency), and returned no significant difference (t(23) = 0.5926, P = 0.56).
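
The two pooled percentages above follow directly from the reported counts, as the short sketch below shows. The function name is hypothetical; the counts are those given in the text. Note that these are participant-weighted figures, whereas the t-test compares per-sample percentages.

```python
def pooled_percentage(cheated: int, participants: int) -> float:
    """Participant-weighted percentage: total 'yes' responses over total respondents."""
    if participants <= 0:
        raise ValueError("participants must be positive")
    return 100.0 * cheated / participants


# Counts as reported in the text.
all_samples = pooled_percentage(2088, 4672)   # all 25 samples
total_only = pooled_percentage(1574, 3707)    # only samples reporting total cheating

print(f"All samples: {all_samples:.1f}%")
print(f"Total-cheating samples only: {total_only:.1f}%")
```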

Did the Frequency of Online Exam Cheating Increase During COVID?

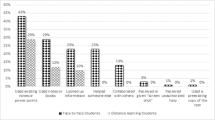

The samples were classified as having been collected pre-COVID, or during COVID (no samples were identified as having been collected ‘post-COVID’). One study (Jenkins et al., 2022) asked the same students about their behaviour before, and during, COVID. For the purposes of this specific analysis, these were included as separate samples, thus there were 26 samples, 17 pre-COVID and 9 during COVID. Pre-COVID, 29.9% (629/2107) of participants reported cheating in online exams. During COVID this figure was 54.7% (1519/2779).

To estimate the variance in these data, and to test whether the difference was statistically significant, the percentages of students who reported cheating for each study were grouped into pre- and during-COVID and the average calculated for each group. The average pre-COVID was 28.03% ± 4.89 (N = 17), whereas during COVID the average was 65.06% ± 9.585 (N = 9). An unpaired t-test was used to compare the groups, and returned a statistically significant difference (t(24) = 3.897, P = 0.0007). The effect size (Hedges g) was 1.61, indicating that the COVID effect was substantial (Fig. 1).
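
The form of this comparison can be sketched from the summary statistics alone. The sketch assumes an equal-variance (Student) unpaired t-test, which is consistent with the reported 24 degrees of freedom, and the common small-sample correction J = 1 − 3/(4df − 1) for Hedges g; the text does not state which formulas or software were used, so both are assumptions, and the function names are hypothetical.

```python
import math


def sd_from_sem(sem: float, n: int) -> float:
    """Recover the standard deviation from a standard error of the mean."""
    return sem * math.sqrt(n)


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation for two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def student_t(mean1: float, sd1: float, n1: int, mean2: float, sd2: float, n2: int) -> float:
    """Equal-variance unpaired t statistic (group 2 minus group 1)."""
    sp = pooled_sd(sd1, n1, sd2, n2)
    return (mean2 - mean1) / (sp * math.sqrt(1 / n1 + 1 / n2))


def hedges_g(mean1: float, sd1: float, n1: int, mean2: float, sd2: float, n2: int) -> float:
    """Cohen's d with the approximate small-sample correction J."""
    d = (mean2 - mean1) / pooled_sd(sd1, n1, sd2, n2)
    df = n1 + n2 - 2
    return d * (1 - 3 / (4 * df - 1))


# Rounded summary values from the text (mean percentage, SEM, number of samples).
pre_mean, pre_sem, pre_n = 28.03, 4.89, 17
dur_mean, dur_sem, dur_n = 65.06, 9.585, 9

pre_sd = sd_from_sem(pre_sem, pre_n)
dur_sd = sd_from_sem(dur_sem, dur_n)

t_value = student_t(pre_mean, pre_sd, pre_n, dur_mean, dur_sd, dur_n)
g_value = hedges_g(pre_mean, pre_sd, pre_n, dur_mean, dur_sd, dur_n)
print(f"t({pre_n + dur_n - 2}) = {t_value:.2f}, Hedges g = {g_value:.2f}")
```

Because the inputs are rounded summaries rather than the per-sample percentages, the output is close to, but not identical with, the reported t and g values.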

To test the reliability of this result, we conducted a split sample test as in other systematic reviews of the prevalence of academic misconduct (Newton, 2018), wherein the data for each group were ordered by size and then every other sample was extracted into a separate group. So, the sample with the lowest frequency of cheating was allocated into Group A, the next smallest into Group B, the next into Group A, and so on. This was conducted separately for the pre-COVID and ‘during COVID’ groups. Each half-group was then subject to an unpaired t-test to determine whether cheating increased during COVID in that group. Each group returned a significant difference (t(10) = 2.889, P = 0.0161 for odd-numbered samples; t(12) = 2.48, P = 0.029 for even-numbered samples). This analysis gives confidence that the observed increase in self-reported online exam cheating during the pandemic is statistically robust, although there may be other variables which contribute to this (see discussion).
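
The alternating allocation described above can be sketched in a few lines. The function name and the example percentages are hypothetical; the sketch shows only the allocation step, which would be applied separately to the pre-COVID and during-COVID samples before the t-tests.

```python
from typing import List, Tuple


def split_alternating(percentages: List[float]) -> Tuple[List[float], List[float]]:
    """Order samples by cheating frequency and deal them alternately into two groups.

    The lowest value goes to Group A, the next to Group B, and so on.
    """
    ordered = sorted(percentages)
    return ordered[0::2], ordered[1::2]


# Invented per-sample percentages, for illustration only.
group_a, group_b = split_alternating([10.0, 50.0, 30.0, 20.0, 40.0])
```

Dealing the ordered values alternately gives the two halves a similar spread of cheating rates.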

Comparison of Group vs. Individual Online Exam Cheating in Higher Education

In order to consider how best to address cheating in online exams, it is important to understand the specific behaviours of students. Many studies asked multiple questions about different types of cheating, and these were coded according to the typology developed by Noorbehbahani which has a high-level code of ‘individual’ and ‘group’ (Noorbehbahani et al., 2022). More fine-grained coding was not possible due to the variance in the types of questions asked of participants (see S2). ‘Individual’ cheating meant that, whatever the type of cheating, it could be achieved without the direct help of another person. This could be looking at notes or textbooks, or searching for materials online. ‘Group’ cheating meant that another person was directly involved, for example by sharing answers, or having them sit the exam on behalf of the participant (contract cheating). Seven studies asked their participants whether they had engaged in different forms of cheating where both formats (Group and Individual) were represented. For each study we ranked all the different forms of cheating by the frequency with which participants reported engaging in it. For all seven of the studies which asked about both Group and Individual cheating, the most frequently reported cheating behaviour was an Individual cheating behaviour. For each study we calculated the difference between the two by subtracting the frequency of the most commonly reported Group cheating behaviour from the frequency of the most commonly reported Individual cheating behaviour. The average difference was 23.32 ± 8.0 percentage points. These two analyses indicate that individual forms of cheating are more common than cheating which involves other people.
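
The per-study difference and its mean ± SEM can be sketched as follows. The function names, the data layout (a list of category and percentage pairs) and all numbers are hypothetical and chosen only to show the calculation.

```python
import math
import statistics
from typing import List, Tuple


def top_difference(behaviours: List[Tuple[str, float]]) -> float:
    """Most common Individual behaviour minus most common Group behaviour.

    Each entry is (category, percentage of participants reporting it),
    where category is 'individual' or 'group'.
    """
    top_individual = max(p for cat, p in behaviours if cat == "individual")
    top_group = max(p for cat, p in behaviours if cat == "group")
    return top_individual - top_group


def mean_sem(values: List[float]) -> Tuple[float, float]:
    """Mean and standard error of the mean (sample SD divided by sqrt(n))."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))


# One invented study with three coded behaviours.
study = [("individual", 40.0), ("group", 15.0), ("individual", 22.0)]
difference = top_difference(study)

# Invented per-study differences, in percentage points.
mean_diff, sem_diff = mean_sem([10.0, 20.0, 30.0])
```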

Effect of Proctoring/Lockdown Browsers

The majority of studies did not make clear whether their online exams were proctored or unproctored, or whether they involved the use of related software such as lockdown browsers. Thus it was difficult to conduct definitive analyses to address the question of whether these systems reduce online exam cheating. Two studies did specifically address this issue, and in both cases there was a substantially lower rate of self-reported cheating where proctoring systems were used. Jenkins et al., in a study conducted during COVID, asked participants whether their instructors used ‘anti cheating software (e.g., Lockdown Browser)’ and, if so, whether they had tried to circumvent it. 16.5% admitted to doing this, compared to the overall rate of cheating of 58.4%. Owens asked about an extensive range of different forms of misconduct, in two groups of students whose online exams were either proctored or unproctored. The total rates of cheating in each group did not appear to be reported. The most common form of cheating was the same in both groups (‘web search during an exam’) and was reported by 39.8% of students in the unproctored group but by only 8.5% in the proctored group (Owens, 2015).

Reasons Given for Online Exam Cheating

Ten of the studies asked students why they cheated in online exams. These reasons were initially coded by both authors according to the typology provided in (Noorbehbahani et al., 2022). Following discussion between the authors, the typology was revised slightly to that shown in Table 1, to better reflect the reasons given in the reviewed studies.

Descriptive statistics (the percentages of students reporting the different reasons as motivations for cheating) are shown in Table 2. Direct comparison between the reasons is not fully valid since different studies asked for different options, and some studies offered multiple options whereas some only identified one. However in the four studies that offered multiple options to students, three of them ranked ‘opportunities to cheat’ as the most common reason (and the fourth study did not have this as an option). Thus students appear to be most likely to cheat in online exams when there is an opportunity to do so.

Discussion

We reviewed data from 19 studies, including 25 samples totaling 4672 participants. We found that a substantial proportion of students, 44.7%, were willing to admit to cheating in online summative exams. This total number masks a finding that cheating in online exams appeared to increase considerably during the COVID-19 pandemic, from 29.9 to 54.7%. These are concerning findings. However, there are a number of methodological considerations which influence the interpretation of these data. These considerations all lead to uncertainty regarding the accuracy of the findings, although a common theme is that, unfortunately, the issues highlighted seem likely to result in an under-reporting of the rate of cheating in online exams.

There are numerous potential sources of error in survey-based research, and these may be amplified where the research is asking participants to report on sensitive or undesirable behaviours. One of these sources of error comes from non-respondents, i.e. how confident can we be that those who did not respond to the survey would have given a similar pattern of responses to those that did (Goyder et al., 2002; Halbesleben & Whitman, 2013; Sax et al., 2003). Two ways to minimize non-respondent error are to increase the sample size as a percentage of the population, and then simply to maximise the percentage of the invited sample who responds to the survey. However only ten of the samples reported sufficient information to even allow the calculation of a response rate, and only two reported the total population size. Thus for the majority of samples reported here, we cannot even begin to estimate the extent of the non-response error. For those that did report sufficient information, the response rate varied considerably, from 12.2% to 100%, with an average of 55.6%. Thus a substantial number of the possible participants did not respond.

Most of the surveys reviewed here were conducted using convenience sampling, i.e. participation was voluntary and there was no attempt to ensure that the sample was representative, or that the non-respondents were followed up in a targeted way to increase the representativeness of the sample. People who voluntarily respond to survey research are, compared to the general population, older, wealthier, more likely to be female and educated (Curtin et al., 2000). In contrast, individuals who engage in academic misconduct are more likely to be male, younger, from a lower socioeconomic background and less academically able (reviewed in Newton, 2018). Thus the features of the survey research here would suggest that the rates of online exam cheating are under-reported.

A second source of error is measurement error – for example, how likely is it that those participants who do respond are telling the truth? Cheating in online exams is clearly a sensitive subject for potential survey participants. Students who are caught cheating in exams can face severe penalties. Measurement error can be substantial when asking participants about sensitive topics, particularly when they have no incentive to respond truthfully. Curtis et al. conducted an elegant study to investigate rates of different types of contract cheating and found that rates were substantially higher when participants were incentivized to tell the truth, compared to traditional self-report (Curtis et al., 2022). Another method to increase truthfulness is to use a Randomised Response Technique, which increases participants’ confidence that their data will be truly anonymous when self-reporting cheating (Mortaz Hejri et al., 2013) and so leads to increased estimates of the prevalence of cheating behaviours when measured via self-report (Kerkvliet, 1994; Scheers & Dayton, 1987). No studies reviewed here reported any incentivization or use of a randomized response technique, and many did not report IRB (ethical) approval or that participants were guaranteed anonymity in their responses. Absence of evidence is not evidence of absence, but it again seems reasonable to conclude that the majority of the measurement error reported here will also lead to an under-reporting of the extent of online exam cheating.

However, there are very many variables associated with likelihood of committing academic misconduct (also reviewed in Newton, 2018). For example, in addition to the aforementioned variables, cheating is also associated with individual differences such as personality traits (Giluk & Postlethwaite, 2015; Williams & Williams, 2012), motivation (Park et al., 2013), age and gender (Newstead et al., 1996) and studying in a second language (Bretag et al., 2019) as well as situational variables such as discipline studied (Newstead et al., 1996). None of the studies reviewed here can account for these individual variables, and this perhaps explains, partly, the wide variance in the studies reported, where the percentage of students willing to admit to cheating in online exams ranges from essentially none, to all students, in different studies. However, almost all of the variables associated with differences in likelihood of committing academic misconduct were themselves determined using convenience sampling. In order to begin to understand the true nature, scale and scope of academic misconduct, there is a clear need for studies using large, representative samples, with appropriate methodology to account for non-respondents, and rigorous analyses which attempt to identify those variables associated with an increased likelihood of cheating.

There are some specific issues which must be considered when determining the accuracy of the data showing an increase in cheating during COVID. In general, the pre-COVID group appears to be a more homogeneous set of samples; for example, 11 of the 16 samples are from students studying business, and 15 of the 16 pre-COVID samples are from the USA. The during-COVID samples are from a much more diverse range of disciplines and countries. However, the increase in self-reported cheating was replicated in the one study which directly asked students about their behaviour before, and during, the pandemic; Jenkins and co-workers found that 28.4% of respondents were cheating pre-COVID, nearly doubling to 58.4% during the pandemic (Jenkins et al., 2022), very closely mirroring the aggregate results.

There are some other variables which may be different between the studies and so affect the overall interpretation of the findings. For example, the specific questions asked of participants, as shown in the supplemental online material (S2), reveal that most studies do not report on the specific type of exam (e.g. multiple choice vs. essay based), or the exam duration, weighting, or educational level. This is likely because the studies survey groups of students, across programmes. Having a more detailed understanding of these factors would also inform strategies to address cheating in online exams.

It is difficult to quantify the potential impact of these issues on the accuracy of the data analysed here, since objective measures of cheating in online exams are difficult to obtain in higher education settings. One way to achieve this is to set up traps for students taking closed-book exams. One study tested this using a 2.5-hour online exam administered for participants to obtain credit from a MOOC. The exam was set up so that participants would “likely not benefit from having access to third-party reference materials during the exam”. Students were instructed not to access any additional materials or to communicate with others during the exam. The authors built a ‘honeypot’ website which hosted all of the exam questions, each with a button ‘click to show answer’. If exam participants went online and clicked that button then the site collected information which allowed the researchers to identify the unique i.d. of the test-taker. This approach was combined with a more traditional analysis of the originality of the free-text portions of the exam. Using these methods, the researchers estimated that ~ 30% of students were cheating (Corrigan-Gibbs et al., 2015b). This study was conducted in 2014–15, and the data align reasonably well with the pre-COVID estimates of cheating found here, giving some confidence that the self-report measures reported here are in the same ballpark as objective measures, albeit from only one study.

The challenges of interpreting data from small convenience samples will also affect the analysis of the other measures made here, namely that students are more likely to commit misconduct on their own, and that they do so because they can. The overall pattern of findings though does align somewhat, suggesting that concerns may be with the accuracy of the numbers rather than a fundamental qualitative problem (i.e. it seems reasonable to conclude that students are more likely to cheat individually, but it is challenging to put a precise number to that finding). For example, the apparent increase in cheating during COVID is associated with a rapid and near-total transition to online exams. Pre-COVID, the use of online exams would have been a choice made by education providers, presumably with some efforts to ensure the security and integrity of that assessment. During COVID lockdown, the scale and speed of the transition to online exams made it much more challenging to put security measures in place, and this would therefore almost certainly have increased the opportunities to cheat.

It was challenging to gather more detail about the specific types of cheating behaviour, due to the considerable heterogeneity between the studies regarding this question. The sector would benefit from future large-scale research using a recognized typology, for example those proposed by Dawson (Dawson, 2020, p. 112) or Noorbehbahani (Noorbehbahani et al., 2022).

Another important recommendation that will help the sector in addressing the problem is for future survey-based research of student dishonesty to make use of the abundant methodological research undertaken to increase the accuracy of such surveys. In particular, future studies should use representative sampling, or analysis methods which account for the challenges posed by unrepresentative samples. Data quality could also be improved by the use of question formats and survey structures which motivate or incentivize truth-telling, for example by the use of methods such as the Randomised Response Technique which increase participant confidence that their responses will be truly anonymous. It would also be helpful to report on key methodological features of survey design, such as pilot testing, scaling, reliability and validity, although these are commonly underreported in survey-based research generally (Bennett et al., 2011).

Thus an aggregate portrayal of the findings here is that students are committing misconduct in significant numbers, and that this has increased considerably during COVID. Students appear to be more likely to cheat on their own, rather than in groups, and are most commonly motivated by the simple fact that they can cheat. Do these findings and the underlying data give us any information that might be helpful in addressing the problem?

One technique deployed by many universities to address multiple forms of online exam cheating is to increase the use of remote proctoring, wherein student behaviour during online exams is monitored, for example, through a webcam, and/or their online activity is monitored or restricted. We were unable to draw definitive conclusions about the effectiveness of remote proctoring or other software such as lockdown browsers to reduce cheating in online exams, since very few studies stated definitively that the exams were, or were not, proctored. The two studies that examined this question did appear to show a substantial reduction in the frequency of cheating when proctoring was used. Confidence in these results is bolstered by the fact that these studies both directly compared unproctored vs. proctored/lockdown browser. Other studies have used proxy measures for cheating, such as time engaged with the exam, and changes in exam scores, and these studies have also found evidence for a reduction in misconduct when proctoring is used (e.g. Dendir & Maxwell, 2020).

The effectiveness (or not) of remote proctoring to reduce academic misconduct seems like an important area for future research. However, there is considerable controversy about the use of remote proctoring, including legal challenges to its use and considerable objections from students, who report a net negative experience, fuelled by concerns about privacy, fairness and technological challenges (Marano et al., 2023), and so it remains an open question whether this is a viable option for widespread general use.

Honour codes are a commonly cited approach to promoting academic integrity, and so (in theory) reducing academic misconduct. However, empirical tests of honour codes show that they do not appear to be effective at reducing cheating in online exams (Corrigan-Gibbs et al., 2015a, b). In these studies the authors likened them to ‘terms and conditions’ for online sites, which are largely disregarded by users in online environments. However in those same studies the authors found that replacing an honour code with a more sternly worded ‘warning’, which specifies the consequences of being caught, was effective at reducing cheating. Thus a warning may be a simple, low-cost intervention to reduce cheating in online exams, whose effectiveness could be studied using appropriately conducted surveys of the type reviewed here.

Another option to reduce cheating in online exams is to use open-book exams. This is often suggested as a way of simultaneously increasing the cognitive level of the exam (i.e. it assesses higher order learning) (e.g. Varble, 2014), and was suggested as a way of reducing the perceived, or potential, increase in academic misconduct during COVID (e.g. Nguyen et al., 2020; Whisenhunt et al., 2022). This approach has an obvious appeal in that it eliminates the possibility of some common forms of misconduct, such as the use of notes or unauthorized web access (Noorbehbahani et al., 2022; Whisenhunt et al., 2022), and can even make this a positive feature, i.e. encouraging the use of additional resources in a way that reflects the fact that, for many future careers, students will have access to unlimited information at their fingertips, and the challenge is to ensure that students have learned what information they need and how to use it. This approach certainly fits with our data, wherein the most frequently reported types of misconduct involved students acting alone, and cheating ‘because they could’. Some form of proctoring or other measure may still be needed in order to reduce the threat of collaborative misconduct. Perhaps most importantly though, it is unclear whether open-book exams truly reduce the opportunity for, and the incidence of, academic misconduct, and if so, how we might advise educators to design their exams, and exam questions, in a way that delivers this as well as the promise of ‘higher order’ learning. These questions are the subject of ongoing research.

In summary then, there appear to be significant levels of misconduct in online examinations in Higher Education. Students appear to be more likely to cheat on their own, motivated by an examination design and delivery which makes it easy for them to do so. Future research in academic integrity would benefit from large, representative samples using clear and unambiguous survey questions and guarantees of anonymity. This will allow us to get a much better picture of the size and nature of the problem, and so design strategies to mitigate the threat that cheating poses to exam validity.

References

Alvarez, H. T., Dayrit, R. S., Dela Cruz, M. C. A., Jocson, C. C., Mendoza, R. T., Reyes, A. V., & Salas, J. N. N. (2022). Academic dishonesty cheating in synchronous and asynchronous classes: A proctored examination intervention. International Research Journal of Science, Technology, Education, and Management, 2(1), 1–1.

Armstrong-Mensah, E., Ramsey-White, K., Yankey, B., & Self-Brown, S. (2020). COVID-19 and Distance Learning: Effects on Georgia State University School of Public Health Students. Frontiers in Public Health, 8. https://doi.org/10.3389/fpubh.2020.576227.

Barber, M., Bird, L., Fleming, J., Titterington-Giles, E., Edwards, E., & Leyland, C. (2021). Gravity assist: Propelling higher education towards a brighter future. Office for Students. https://www.officeforstudents.org.uk/publications/gravity-assist-propelling-higher-education-towards-a-brighter-future/.

Bennett, C., Khangura, S., Brehaut, J. C., Graham, I. D., Moher, D., Potter, B. K., & Grimshaw, J. M. (2011). Reporting guidelines for Survey Research: An analysis of published Guidance and Reporting Practices. PLOS Medicine, 8(8), e1001069. https://doi.org/10.1371/journal.pmed.1001069.

Björk, B. C., & Solomon, D. (2013). The publishing delay in scholarly peer-reviewed journals. Journal of Informetrics, 7(4), 914–923. https://doi.org/10.1016/j.joi.2013.09.001.

Blinova, O. (2022). WHAT COVID TAUGHT US ABOUT ASSESSMENT: STUDENTS’ PERCEPTIONS OF ACADEMIC INTEGRITY IN DISTANCE LEARNING. INTED2022 Proceedings, 6214–6218. https://doi.org/10.21125/inted.2022.1576.

Boeker, M., Vach, W., & Motschall, E. (2013). Google Scholar as replacement for systematic literature searches: Good relative recall and precision are not enough. BMC Medical Research Methodology, 13, 131. https://doi.org/10.1186/1471-2288-13-131.

Bowman, E. (2022, August 26). Scanning students’ rooms during remote tests is unconstitutional, judge rules. NPR. https://www.npr.org/2022/08/25/1119337956/test-proctoring-room-scans-unconstitutional-cleveland-state-university.

Bretag, T., Harper, R., Burton, M., Ellis, C., Newton, P., Rozenberg, P., Saddiqui, S., & van Haeringen, K. (2019). Contract cheating: A survey of australian university students. Studies in Higher Education, 44(11), 1837–1856. https://doi.org/10.1080/03075079.2018.1462788.

Brown, M., Hoon, A., Edwards, M., Shabu, S., Okoronkwo, I., & Newton, P. M. (2022). A pragmatic evaluation of university student experience of remote digital learning during the COVID-19 pandemic, focusing on lessons learned for future practice. EdArXiv. https://doi.org/10.35542/osf.io/62hz5.

Burgason, K. A., Sefiha, O., & Briggs, L. (2019). Cheating is in the Eye of the beholder: An evolving understanding of academic misconduct. Innovative Higher Education, 44(3), 203–218. https://doi.org/10.1007/s10755-019-9457-3.

Butler-Henderson, K., & Crawford, J. (2020). A systematic review of online examinations: A pedagogical innovation for scalable authentication and integrity. Computers & Education, 159, 104024. https://doi.org/10.1016/j.compedu.2020.104024.

Case, C. J., King, D. L., & Case, J. A. (2019). E-Cheating and Undergraduate Business Students: Trends and Role of Gender. Journal of Business and Behavioral Sciences, 31(1). https://www.proquest.com/openview/9fcc44254e8d6d202086fc58818fab5d/1?pq-origsite=gscholar&cbl=2030637.

Chiang, F. K., Zhu, D., & Yu, W. (2022). A systematic review of academic dishonesty in online learning environments. Journal of Computer Assisted Learning, 38(4), 907–928. https://doi.org/10.1111/jcal.12656.

Corbyn, Z. (2022, August 26). ‘I’m afraid’: Critics of anti-cheating technology for students hit by lawsuits. The Guardian. https://www.theguardian.com/us-news/2022/aug/26/anti-cheating-technology-students-tests-proctorio.

Corrigan-Gibbs, H., Gupta, N., Northcutt, C., Cutrell, E., & Thies, W. (2015a). Measuring and maximizing the effectiveness of Honor Codes in Online Courses. Proceedings of the Second (2015) ACM Conference on Learning @ Scale, 223–228. https://doi.org/10.1145/2724660.2728663.

Corrigan-Gibbs, H., Gupta, N., Northcutt, C., Cutrell, E., & Thies, W. (2015b). Deterring cheating in Online environments. ACM Transactions on Computer-Human Interaction, 22(6), 28:1–28:23. https://doi.org/10.1145/2810239.

Costley, J. (2019). Student perceptions of academic dishonesty at a Cyber-University in South Korea. Journal of Academic Ethics, 17(2), 205–217. https://doi.org/10.1007/s10805-018-9318-1.

Curtin, R., Presser, S., & Singer, E. (2000). The Effects of Response Rate Changes on the index of consumer sentiment. Public Opinion Quarterly, 64(4), 413–428. https://doi.org/10.1086/318638.

Curtis, G. J., McNeill, M., Slade, C., Tremayne, K., Harper, R., Rundle, K., & Greenaway, R. (2022). Moving beyond self-reports to estimate the prevalence of commercial contract cheating: An australian study. Studies in Higher Education, 47(9), 1844–1856. https://doi.org/10.1080/03075079.2021.1972093.

Dawson, R. J. (2020). Defending Assessment Security in a Digital World: Preventing E-Cheating and Supporting Academic Integrity in Higher Education (1st ed.). Routledge. https://www.routledge.com/Defending-Assessment-Security-in-a-Digital-World-Preventing-E-Cheating/Dawson/p/book/9780367341527.

Dendir, S., & Maxwell, R. S. (2020). Cheating in online courses: Evidence from online proctoring. Computers in Human Behavior Reports, 2, 100033. https://doi.org/10.1016/j.chbr.2020.100033.

Dumulescu, D., & Muţiu, A. I. (2021). Academic Leadership in the Time of COVID-19—Experiences and Perspectives. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.648344.

Ebaid, I. E. S. (2021). Cheating among Accounting Students in Online Exams during Covid-19 pandemic: Exploratory evidence from Saudi Arabia. Asian Journal of Economics Finance and Management, 9–19.

Elsalem, L., Al-Azzam, N., Jum’ah, A. A., & Obeidat, N. (2021). Remote E-exams during Covid-19 pandemic: A cross-sectional study of students’ preferences and academic dishonesty in faculties of medical sciences. Annals of Medicine and Surgery, 62, 326–333. https://doi.org/10.1016/j.amsu.2021.01.054.

Fanelli, D. (2009). How many scientists fabricate and falsify Research? A systematic review and Meta-analysis of Survey Data. PLOS ONE, 4(5), e5738. https://doi.org/10.1371/journal.pone.0005738.

Gardner, W. M., Roper, J. T., Gonzalez, C. C., & Simpson, R. G. (1988). Analysis of cheating on academic assignments. The Psychological Record, 38(4), 543–555. https://doi.org/10.1007/BF03395046.

Garg, M., & Goel, A. (2022). A systematic literature review on online assessment security: Current challenges and integrity strategies. Computers & Security, 113, 102544. https://doi.org/10.1016/j.cose.2021.102544.

Gaskill, M. (2014). Cheating in Business Online Learning: Exploring Students’ Motivation, Current Practices and Possible Solutions. Theses, Student Research, and Creative Activity: Department of Teaching, Learning and Teacher Education. https://digitalcommons.unl.edu/teachlearnstudent/35.

Ghias, K., Lakho, G. R., Asim, H., Azam, I. S., & Saeed, S. A. (2014). Self-reported attitudes and behaviours of medical students in Pakistan regarding academic misconduct: A cross-sectional study. BMC Medical Ethics, 15(1), 43. https://doi.org/10.1186/1472-6939-15-43.

Giluk, T. L., & Postlethwaite, B. E. (2015). Big five personality and academic dishonesty: A meta-analytic review. Personality and Individual Differences, 72, 59–67. https://doi.org/10.1016/j.paid.2014.08.027.

Goff, D., Johnston, J., & Bouboulis, B. (2020). Maintaining academic Standards and Integrity in Online Business Courses. International Journal of Higher Education, 9(2). https://econpapers.repec.org/article/jfrijhe11/v_3a9_3ay_3a2020_3ai_3a2_3ap_3a248.htm.

Goyder, J., Warriner, K., & Miller, S. (2002). Evaluating Socio-economic status (SES) Bias in Survey Nonresponse. Journal of Official Statistics, 18(1), 1–11.

Grudniewicz, A., Moher, D., Cobey, K. D., Bryson, G. L., Cukier, S., Allen, K., Ardern, C., Balcom, L., Barros, T., Berger, M., Ciro, J. B., Cugusi, L., Donaldson, M. R., Egger, M., Graham, I. D., Hodgkinson, M., Khan, K. M., Mabizela, M., Manca, A., & Lalu, M. M. (2019). Predatory journals: No definition, no defence. Nature, 576(7786), 210–212. https://doi.org/10.1038/d41586-019-03759-y.

Haddaway, N. R., Collins, A. M., Coughlin, D., & Kirk, S. (2015). The role of Google Scholar in evidence reviews and its applicability to Grey Literature Searching. PLOS ONE, 10(9). https://doi.org/10.1371/journal.pone.0138237.

Halbesleben, J. R. B., & Whitman, M. V. (2013). Evaluating Survey Quality in Health Services Research: A decision Framework for assessing Nonresponse Bias. Health Services Research, 48(3), 913–930. https://doi.org/10.1111/1475-6773.12002.

Henry, J. (2022, July 17). Universities “turn blind eye to online exam cheats” as fraud rises. Mail Online. https://www.dailymail.co.uk/news/article-11021269/Universities-turning-blind-eye-online-exam-cheats-studies-rates-fraud-risen.html.

Holden, O. L., Norris, M. E., & Kuhlmeier, V. A. (2021). Academic Integrity in Online Assessment: A Research Review. Frontiers in Education, 6. https://doi.org/10.3389/feduc.2021.639814.

Jamali, H. R., & Nabavi, M. (2015). Open access and sources of full-text articles in Google Scholar in different subject fields. Scientometrics, 105(3), 1635–1651. https://doi.org/10.1007/s11192-015-1642-2.

Janke, S., Rudert, S. C., Petersen, Ä., Fritz, T. M., & Daumiller, M. (2021). Cheating in the wake of COVID-19: How dangerous is ad-hoc online testing for academic integrity? Computers and Education Open, 2, 100055. https://doi.org/10.1016/j.caeo.2021.100055.

Jantos, A. (2021). MOTIVES FOR CHEATING IN SUMMATIVE E-ASSESSMENT IN HIGHER EDUCATION - A QUANTITATIVE ANALYSIS. EDULEARN21 Proceedings, 8766–8776. https://doi.org/10.21125/edulearn.2021.1764.

Jenkins, B. D., Golding, J. M., Le Grand, A. M., Levi, M. M., & Pals, A. M. (2022). When Opportunity knocks: College Students’ cheating amid the COVID-19 pandemic. Teaching of Psychology, 00986283211059067. https://doi.org/10.1177/00986283211059067.

Jones, I. S., Blankenship, D., & Hollier, G. (2013). AM I CHEATING? AN ANALYSIS OF ONLINE STUDENT PERCEPTIONS OF THEIR BEHAVIORS AND ATTITUDES. Proceedings of ASBBS, 59–69. http://asbbs.org/files/ASBBS2013V1/PDF/J/Jones_Blankenship_Hollier(P59-69).pdf.

Kerkvliet, J. (1994). Cheating by Economics students: A comparison of Survey results. The Journal of Economic Education, 25(2), 121–133. https://doi.org/10.1080/00220485.1994.10844821.

King, D. L., & Case, C. J. (2014). E-CHEATING: INCIDENCE AND TRENDS AMONG COLLEGE STUDENTS. Issues in Information Systems, 15(1), 20–27. https://doi.org/10.48009/1_iis_2014_20-27.

Knox, P. (2021). Students “taking it in turns to answer exam questions” during home tests. The Sun. https://www.thesun.co.uk/news/15413811/students-taking-turns-exam-questions-cheating-lockdown/.

Larkin, C., & Mintu-Wimsatt, A. (2015). Comparing cheating behaviors among graduate and undergraduate Online Business Students. Journal of Higher Education Theory and Practice, 15(7), 54–62.

Marano, E., Newton, P. M., Birch, Z., Croombs, M., Gilbert, C., & Draper, M. J. (2023). What is the Student Experience of Remote Proctoring? A Pragmatic Scoping Review. EdArXiv. https://doi.org/10.35542/osf.io/jrgw9.

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., & Group, T. P. (2009). Preferred reporting items for systematic reviews and Meta-analyses: The PRISMA Statement. PLOS Medicine, 6(7), e1000097. https://doi.org/10.1371/journal.pmed.1000097.

Morales-Martinez, G. E., Lopez-Ramirez, E. O., & Mezquita-Hoyos, Y. N. (2019). Cognitive mechanisms underlying the Engineering Students’ Desire to Cheat during Online and Onsite Statistics Exams. Cognitive mechanisms underlying the Engineering Students’ Desire to Cheat during Online and Onsite Statistics Exams, 8(4), 1145–1158.

Mortaz Hejri, S., Zendehdel, K., Asghari, F., Fotouhi, A., & Rashidian, A. (2013). Academic disintegrity among medical students: A randomised response technique study. Medical Education, 47(2), 144–153. https://doi.org/10.1111/medu.12085.

Newstead, S. E., Franklyn-Stokes, A., & Armstead, P. (1996). Individual differences in student cheating. Journal of Educational Psychology, 88, 229–241. https://doi.org/10.1037/0022-0663.88.2.229.

Newton, P. M. (2018). How Common Is Commercial Contract Cheating in Higher Education and Is It Increasing? A Systematic Review. Frontiers in Education, 3. https://doi.org/10.3389/feduc.2018.00067.

Newton, P. M., & Salvi, A. (2020). How Common Is Belief in the Learning Styles Neuromyth, and Does It Matter? A Pragmatic Systematic Review. Frontiers in Education, 5. https://doi.org/10.3389/feduc.2020.602451.

Nguyen, J. G., Keuseman, K. J., & Humston, J. J. (2020). Minimize Online cheating for online assessments during COVID-19 pandemic. Journal of Chemical Education, 97(9), 3429–3435. https://doi.org/10.1021/acs.jchemed.0c00790.

Noorbehbahani, F., Mohammadi, A., & Aminazadeh, M. (2022). A systematic review of research on cheating in online exams from 2010 to 2021. Education and Information Technologies. https://doi.org/10.1007/s10639-022-10927-7.

Owens, H. (2015). Cheating within Online Assessments: A Comparison of Cheating Behaviors in Proctored and Unproctored Environment. Theses and Dissertations. https://scholarsjunction.msstate.edu/td/1049.

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., & Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Bmj, 71. https://doi.org/10.1136/bmj.n71.

Park, E. J., Park, S., & Jang, I. S. (2013). Academic cheating among nursing students. Nurse Education Today, 33(4), 346–352. https://doi.org/10.1016/j.nedt.2012.12.015.

Pokhrel, S., & Chhetri, R. (2021). A Literature Review on Impact of COVID-19 pandemic on teaching and learning. Higher Education for the Future, 8(1), 133–141. https://doi.org/10.1177/2347631120983481.

Rice, D. B., Skidmore, B., & Cobey, K. D. (2021). Dealing with predatory journal articles captured in systematic reviews. Systematic Reviews, 10, 175. https://doi.org/10.1186/s13643-021-01733-2.

Romaniuk, M. W., & Łukasiewicz-Wieleba, J. (2022). Remote and stationary examinations in the opinion of students. International Journal of Electronics and Telecommunications, 68(1), 69.

Sax, L. J., Gilmartin, S. K., & Bryant, A. N. (2003). Assessing response Rates and Nonresponse Bias in web and paper surveys. Research in Higher Education, 44(4), 409–432. https://doi.org/10.1023/A:1024232915870.

Scheers, N. J., & Dayton, C. M. (1987). Improved estimation of academic cheating behavior using the randomized response technique. Research in Higher Education, 26(1), 61–69. https://doi.org/10.1007/BF00991933.

Shute, V. J., & Kim, Y. J. (2014). Formative and Stealth Assessment. In J. M. Spector, M. D. Merrill, J. Elen, & M. J. Bishop (Eds.), Handbook of Research on Educational Communications and Technology (pp. 311–321). Springer. https://doi.org/10.1007/978-1-4614-3185-5_25.

Subotic, D., & Poscic, P. (2014). Academic dishonesty in a partially online environment: A survey. Proceedings of the 15th International Conference on Computer Systems and Technologies, 401–408. https://doi.org/10.1145/2659532.2659601.

Surahman, E., & Wang, T. H. (2022). Academic dishonesty and trustworthy assessment in online learning: A systematic literature review. Journal of Computer Assisted Learning, n/a (n/a). https://doi.org/10.1111/jcal.12708.

Tahsin, M. U., Abeer, I. A., & Ahmed, N. (2022). Note: Cheating and Morality Problems in the Tertiary Education Level: A COVID-19 Perspective in Bangladesh. ACM SIGCAS/SIGCHI Conference on Computing and Sustainable Societies (COMPASS), 589–595. https://doi.org/10.1145/3530190.3534834.

Valizadeh, M. (2022). CHEATING IN ONLINE LEARNING PROGRAMS: LEARNERS’ PERCEPTIONS AND SOLUTIONS. Turkish Online Journal of Distance Education, 23(1), https://doi.org/10.17718/tojde.1050394.

Varble, D. (2014). Reducing Cheating Opportunities in Online Test. Atlantic Marketing Journal, 3(3). https://digitalcommons.kennesaw.edu/amj/vol3/iss3/9.

Whisenhunt, B. L., Cathey, C. L., Hudson, D. L., & Needy, L. M. (2022). Maximizing learning while minimizing cheating: New evidence and advice for online multiple-choice exams. Scholarship of Teaching and Learning in Psychology, 8(2), 140–153. https://doi.org/10.1037/stl0000242.

Williams, M. W. M., & Williams, M. N. (2012). Academic dishonesty, Self-Control, and General Criminality: A prospective and retrospective study of academic dishonesty in a New Zealand University. Ethics & Behavior, 22(2), 89–112. https://doi.org/10.1080/10508422.2011.653291.

Witley, S. (2023). Virtual Exam Case Primes Privacy Fight on College Room Scans (1). https://news.bloomberglaw.com/privacy-and-data-security/virtual-exam-case-primes-privacy-fight-over-college-room-scans?context=search&index=1.

Zarzycka, E., Krasodomska, J., Mazurczak-Mąka, A., & Turek-Radwan, M. (2021). Distance learning during the COVID-19 pandemic: Students’ communication and collaboration and the role of social media. Cogent Arts & Humanities, 8(1), 1953228. https://doi.org/10.1080/23311983.2021.1953228.

Acknowledgements

We would like to acknowledge the efforts of all the researchers whose work was reviewed as part of this study, and their participants who gave up their time to generate the data reviewed here. We are especially grateful to Professor Carl Case at St Bonaventure University, NY, USA for his assistance clarifying the numbers of students who undertook online exams in King and Case (2014) and Case et al. (2019).

Funding

No funds, grants, or other support was received.

Author information

Contributions

PMN designed the study. PMN + KE independently searched for studies and extracted data. PMN analysed data and wrote the results. KE checked analysis. PMN + KE drafted the introduction and methods. PMN wrote the discussion and finalised the manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval and consent to participate

This paper involved secondary analysis of data already in the public domain, and so ethical approval was not necessary.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Newton, P.M., Essex, K. How Common is Cheating in Online Exams and did it Increase During the COVID-19 Pandemic? A Systematic Review. J Acad Ethics (2023). https://doi.org/10.1007/s10805-023-09485-5

DOI: https://doi.org/10.1007/s10805-023-09485-5