Abstract

We establish criteria on the chemotactic sensitivity \(\chi \) for the non-existence of global weak solutions (i.e., blow-up in finite time) to a stochastic Keller–Segel model with spatially inhomogeneous, conservative noise on \(\mathbb {R}^2\). We show that if \(\chi \) is sufficiently large then blow-up occurs with probability 1. In this regime, our criterion agrees with that of a deterministic Keller–Segel model with increased viscosity. However, for \(\chi \) in an intermediate regime, determined by the variance of the initial data and the spatial correlation of the noise, we show that blow-up occurs with positive probability.


1 Introduction

In this work, we present criteria for non-existence of global solutions (that we will frequently refer to as finite time blow-up) to a stochastic partial differential equation (SPDE) model of chemotaxis on \(\mathbb {R}^2\). The model we consider,

is based on the parabolic-elliptic Patlak–Keller–Segel model of chemotaxis (\(\gamma =0\)) with the addition of a stochastic transport term (\(\gamma >0\)), where \(\{W^k\}_{k\ge 1}\) is a family of i.i.d. standard Brownian motions on a filtered probability space \((\Omega ,\mathcal {F},(\mathcal {F}_t)_{t\ge 0},\mathbb {P})\) satisfying the usual assumptions. Here \(\mathcal {P}(\mathbb {R}^2)\) denotes the set of probability measures on \(\mathbb {R}^2\). We give detailed assumptions on the vector fields \(\sigma _k:\mathbb {R}^2\rightarrow \mathbb {R}^2\) below (see (H1)–(H3)); for now we simply stipulate that they are divergence free and that \(\varvec{\sigma }:=\{\sigma _k\}_{k\ge 1}\in \ell ^2(\mathbb {N};L^\infty (\mathbb {R}^2))\).

The noiseless model (\(\gamma =0\)) is a well-known PDE system modeling chemotaxis: the collective movement of a population of cells (represented by its time-space density u) in the presence of an attractive chemical substance (represented by its time-space concentration c). The chemotactic sensitivity is encoded by the parameter \(\chi >0\). The main feature of the model is that solutions may become unbounded in finite time even though the total mass is preserved. Whether this so-called blow-up in finite time occurs depends on the spatial dimension of the problem and on the size of the parameter \(\chi \). In particular, on \(\mathbb {R}^2\) and for initial data of unit mass, blow-up occurs in finite time for \(\chi >8\pi \) and at \(t=\infty \) for \(\chi =8\pi \) (see [2]), while global existence holds for \(\chi <8\pi \); see, for example, the survey [32]. For results in other dimensions, we refer to [4, 20, 30, 32].

Since the scenario described by the noiseless model often takes place within an external environment, it is natural to take additional environmental effects into account. In some cases, this can be done by coupling further equations to the system, such as the Navier–Stokes equations of fluid mechanics [27, 37, 38]. Of particular relevance to our work are the results of [22, 23], where it was shown that transport by sufficiently strong relaxation enhancing flows can have a regularizing effect on the Keller–Segel equation. However, for both modeling and analysis purposes it is also relevant to study the effect of random environments. These may model a rough background, accumulated measurement errors, or emergent noise from micro-scale phenomena that are not explicitly considered.

The noise introduced in (1.1) is related to stochastic models of turbulence [6, 8, 24, 26], and we refer to the monograph [12] for a broader overview of its relevance to SPDE models. Noise satisfying either our assumptions or closely related ones has been applied in a number of related settings: interacting particle systems [7, 11, 15]; regularization, stabilization and enhanced mixing of general parabolic and transport PDE [14, 17, 19]; and, with particular applications to the Keller–Segel and Navier–Stokes equations among others, [13, 16, 18].

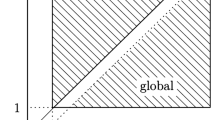

The motivation of the present work is to understand the persistence of blow-up for stochastic chemotactic models driven by conservative noise. Our main result is that if \(\chi >(1+\gamma )8\pi \), then finite time blow-up occurs almost surely, while if \(\chi >(1+ \gamma V[u_0] C_{\varvec{\sigma }})8\pi \), then finite time blow-up occurs with positive probability. Here \(V[u_0]\) denotes one half of the spatial variance of the initial data, and \(C_{\varvec{\sigma }}\) is a type of Lipschitz norm of the vector fields \(\varvec{\sigma }\) that measures the spatial decorrelation of the noise; we refer to (2.2) for a precise definition. Furthermore, blow-up occurs before an explicit deterministic time \(T^*>0\): almost surely under the first condition, and with positive probability under the second (see Theorem 2.8).

Note that when \(\gamma =0\), we recover the usual conditions for blow-up of the deterministic equation, see [32].

Three interesting regimes emerge from our criteria. First, if \(V[u_0]C_{\varvec{\sigma }}>1\), as happens when \(C_{\varvec{\sigma }}\) is large, the second condition implies the first and blow-up occurs almost surely, albeit only for larger and larger \(\chi \) as \(C_{\varvec{\sigma }}\) grows. Second, when \(C_{\varvec{\sigma }}\) is arbitrarily small (it vanishes for spatially homogeneous noise), one again recovers the deterministic criterion. Third, in the intermediate regime \(V[u_0] C_{\varvec{\sigma }}<1\), where the noise decorrelation and the initial variance are at most reciprocally of the same order, we are only able to show blow-up with positive probability. It is an interesting question, which we leave for future work, to obtain more information on the probability of blow-up in this case. See Remark 2.9 for a longer discussion of these points.

The study of blow-up of solutions to SPDEs is a large topic, of which we mention only a few examples. It was shown in [3] that additive noise can eliminate global solutions of stochastic reaction–diffusion equations, while a similar statement was shown in [9, 10] for both additive and multiplicative noise in the case of stochastic nonlinear Schrödinger equations. In addition, non-uniqueness results for stochastic fluid equations have been studied in [21] and [35].

For SPDE models of chemotaxis, the study of blow-up phenomena has only recently begun, and we mention here two recent works, [16] and [28]. In [16], the authors show that, for a particular choice of the vector fields \(\varvec{\sigma }\), a model similar to (1.1) on \(\mathbb {T}^d\) for \(d=2,\,3\) exhibits delayed blow-up with probability at least \(1-\varepsilon \), after choosing \(\gamma \) and \(\varvec{\sigma }\) depending on \(\chi \) and \(\varepsilon \in (0,1)\). In [28], the authors study global well-posedness and blow-up of a conservative model similar to (1.1) with a constant family of vector fields \(\sigma _k(x) = \sigma \) and a single common Brownian motion. Translated into our parameters, they establish global well-posedness of solutions to (1.1) with \(\sigma _k(x)\equiv 1\) for \(\chi <8\pi \), as well as finite time blow-up when \(\chi >(1+\gamma )8\pi \).

The main contribution of this paper is the above blow-up criterion for an SPDE version of the Keller–Segel model with a spatially inhomogeneous noise term. To the best of our knowledge, this is a new result. Interestingly, unlike the deterministic criterion, it relates the chemotactic sensitivity to the initial variance and to the regularity and intensity of the noise term. We also close the gap left in [28]: in the case of constant vector fields, we show that finite time blow-up occurs for \(\chi >8\pi \) (see Remark 2.9). Finally, we show that \(\chi >(1+\gamma )8\pi \) cannot be a sharp blow-up threshold for all sufficiently regular initial data.

Our proof follows the deterministic approach of tracking, a priori, the time evolution of the spatial variance of solutions to (1.1). We derive an SDE satisfied by this quantity, which we analyze both pathwise and probabilistically to obtain criteria for blow-up.
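For orientation, here is the deterministic computation behind this approach. It is a standard sketch for the noiseless case \(\gamma =0\), and it assumes that the model is written in the classical form \(\partial _t u = \Delta u - \chi \nabla \cdot (u\nabla c)\) with \(c=K*u\), \(K(x)=-\frac{1}{2\pi }\ln |x|\), and \(\Vert u_t\Vert _{L^1}=1\). Using \(\nabla K(z) = -\frac{z}{2\pi |z|^2}\) and then symmetrizing in \((x,y)\),

$$\begin{aligned} \frac{\mathop {}\!\textrm{d}}{\mathop {}\!\textrm{d}t}\frac{1}{2}\int _{\mathbb {R}^2}|x|^2u_t(x)\mathop {}\!\textrm{d}x &= \frac{1}{2}\int _{\mathbb {R}^2}\Delta |x|^2\,u_t(x)\mathop {}\!\textrm{d}x + \chi \int _{\mathbb {R}^2}x\cdot \nabla c_t(x)\,u_t(x)\mathop {}\!\textrm{d}x\\ &= 2 - \frac{\chi }{2\pi }\int _{\mathbb {R}^2}\int _{\mathbb {R}^2}\frac{x\cdot (x-y)}{|x-y|^2}\,u_t(x)u_t(y)\mathop {}\!\textrm{d}x\mathop {}\!\textrm{d}y\\ &= 2 - \frac{\chi }{4\pi }\int _{\mathbb {R}^2}\int _{\mathbb {R}^2}u_t(x)u_t(y)\mathop {}\!\textrm{d}x\mathop {}\!\textrm{d}y = 2-\frac{\chi }{4\pi }. \end{aligned}$$

Since the centre of mass is conserved when \(\gamma =0\), this gives \(V[u_t]=V[u_0]+\left( 2-\frac{\chi }{4\pi }\right) t\). For \(\chi >8\pi \), this would become negative after time \(\frac{4\pi V[u_0]}{\chi -8\pi }\), which is impossible for a non-negative solution.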

Notation

-

For \(n\ge 1\) and \(p\in [1,\infty )\) (resp. \(p=\infty \)), we write \(L^p(\mathbb {R}^2;\mathbb {R}^n)\) for the spaces of p-integrable (resp. essentially bounded) \(\mathbb {R}^n\)-valued functions on \(\mathbb {R}^2\).

For \(\alpha \in \mathbb {R}\), we write \(H^\alpha (\mathbb {R}^2;\mathbb {R}^n)\) for the inhomogeneous Sobolev spaces of order \(\alpha \)—a full definition and some useful facts are given in Appendix A.

For \(k\ge 0\) and \(\alpha \in (0,1)\), we write \(C^k(\mathbb {R}^2;\mathbb {R}^n)\) for the space of k times continuously differentiable maps and \(\mathcal {C}^{k,\alpha }(\mathbb {R}^2;\mathbb {R}^n)\) for the space of k times continuously differentiable maps with \(\alpha \)-Hölder continuous \(k^{\text {th}}\) derivatives.

When the context is clear, we omit the target space, simply writing \(L^p(\mathbb {R}^2)\), \(H^\alpha (\mathbb {R}^2)\). We denote the corresponding norms by \(\Vert \,\cdot \,\Vert _{L^p},\, \Vert \,\cdot \,\Vert _{H^\alpha }\), also omitting the domain when this causes no confusion.

-

We write \(\mathcal {P}(\mathbb {R}^2)\) for the space of probability measures on \(\mathbb {R}^2\) and, for \(m\ge 1\), \(\mathcal {P}_m(\mathbb {R}^2)\) for the space of probability measures with finite moment of order m. By an abuse of notation, we write, for example, \(\mathcal {P}(\mathbb {R}^2)\cap L^p(\mathbb {R}^2)\) to indicate the space of probability measures with densities in \(L^p(\mathbb {R}^2)\).

-

For \(\mu \in \mathcal {P}(\mathbb {R}^2)\) and when they are finite, we define the following quantities:

$$\begin{aligned} C[\mu ]&:= \int _{\mathbb {R}^2} x \mathop {}\!\textrm{d}\mu (x),\\ V[\mu ]&:= \frac{1}{2}\int _{\mathbb {R}^2}|x-C[\mu ]|^2 \mathop {}\!\textrm{d}\mu (x) = \frac{1}{2}\int _{\mathbb {R}^2}|x|^2\mathop {}\!\textrm{d}\mu (x)- \frac{1}{2}|C[\mu ]|^2. \end{aligned}$$

Note that \(V[\mu ]\) is one half of the usual variance; we define it in this way for computational convenience.
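As a quick sanity check of these definitions, the following Python sketch evaluates \(C[\mu ]\) and \(V[\mu ]\) for a discrete probability measure \(\mu =\sum _i m_i\delta _{x_i}\). The discrete measure and the NumPy dependency are illustrative choices, not part of the text. The sketch confirms that the two expressions for \(V[\mu ]\) coincide. It also checks the standard pairwise identity \(\int \int |x-y|^2\mathop {}\!\textrm{d}\mu (x)\mathop {}\!\textrm{d}\mu (y)=4V[\mu ]\), which relates the half-variance to pairwise distances.

```python
import numpy as np

def centre_and_half_variance(x, m):
    """Return C[mu] and V[mu] (computed two ways) for mu = sum_i m_i delta_{x_i}.

    x: array of shape (n, 2) holding the atoms x_i.
    m: non-negative weights of shape (n,) summing to one.
    """
    C = m @ x                                             # C[mu] = int x dmu(x)
    V_centred = 0.5 * m @ np.sum((x - C) ** 2, axis=1)    # (1/2) int |x - C|^2 dmu
    V_moment = 0.5 * m @ np.sum(x ** 2, axis=1) - 0.5 * C @ C
    return C, V_centred, V_moment

rng = np.random.default_rng(0)
x = rng.normal(size=(500, 2)) + np.array([1.0, -2.0])    # deliberately non-centred
m = rng.random(500)
m /= m.sum()                                              # probability weights

C, V1, V2 = centre_and_half_variance(x, m)

# Pairwise form: sum_{i,j} m_i m_j |x_i - x_j|^2 should equal 4 V[mu].
diff = x[:, None, :] - x[None, :, :]
pairwise = m @ np.sum(diff ** 2, axis=2) @ m
print(C, V1, V2, pairwise / 4)
```

The centred form is numerically preferable when \(|C[\mu ]|\) is large compared with the spread. The moment form is the one that is convenient to differentiate along solutions.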

-

For \(T>0\), X a Banach space, and \(p\in [1,\infty )\) (resp. \(p=\infty \)), we write \({L^p_TX:=L^p([0,T];X)}\) for the space of p-integrable (resp. essentially bounded) maps \(f:[0,T]\rightarrow X\). Similarly, we write \(C_TX:=C([0,T];X)\) for the space of continuous maps \({f:[0,T]\rightarrow X}\), which we equip with the supremum norm \({\Vert f\Vert _{C_TX}:= \sup _{t\in [0,T]}\Vert f(t)\Vert _X}\). We define the function space \(S_T:= C_TL^2(\mathbb {R}^2)\cap L^2_TH^1(\mathbb {R}^2)\).

-

We write \(\nabla \) for the usual gradient operator on Euclidean space, while for \(k\ge 2\), \(\nabla ^k\) denotes the tensor of k-th order partial derivatives. We denote the divergence operator by \(\nabla \cdot \) and we write \(\Delta := \nabla \cdot \nabla \) for the Laplace operator.

-

If we write \(a\,\lesssim \, b\), we mean that the inequality holds up to a constant which we do not keep track of. Otherwise we write \(a\,\le \, C b\) for some \(C >0\) which is allowed to vary from line to line.

-

Given \(a,\,b\in \mathbb {R}\), we write \(a\wedge b :=\min \{a,b\}\) and \(a\vee b :=\max \{a,b\}\).

Plan of the paper. In Sect. 2, we give the precise assumptions on the noise term and formulate our main result. Then, in Sect. 3, we establish some important properties of weak solutions to (1.1), which are used in Sect. 4, where we prove our main theorem. Appendix A is devoted to a brief recap of the fractional Sobolev spaces on \(\mathbb {R}^2\) along with some useful properties. Appendix B gives a sketch of the proof of the equivalence between (1.1) and a comparable Itô equation. Finally, in Appendix C, for the reader's convenience, we provide a relatively detailed proof of local existence of weak solutions in the sense of Definition 2.4.

2 Main result

Before stating our main results, we reformulate (1.1) into a closed form and state our standing assumptions on the noise.

It is classical that c is uniquely defined only up to a harmonic function. Fixing this choice, we write \(c = K *u\) with \( K(x) = -\frac{1}{2\pi }\ln \left( |x|\right) \), the fundamental solution of \(-\Delta \) on \(\mathbb {R}^2\). Therefore, from now on, for \(t>0\), we work with the expression

Throughout, we fix a complete filtered probability space \((\Omega ,\mathcal {F},(\mathcal {F}_t)_{t\ge 0},\mathbb {P})\) satisfying the usual assumptions and carrying a family of i.i.d. standard Brownian motions \(\{W^k\}_{k\ge 1}\). Furthermore, we consider a family of vector fields \(\varvec{\sigma }:= \{\sigma _k\}_{k\ge 1}\) satisfying the following assumptions.

-

(H1)

For \(k\ge 1\), \(\sigma _k:\mathbb {R}^2 \rightarrow \mathbb {R}^2\) are measurable and such that \( \sum _{k=1}^\infty \Vert \sigma _k\Vert _{L^\infty }^2 <\infty .\)

-

(H2)

For every \(k\ge 1\), \(\sigma _k \in C^2(\mathbb {R}^2;\mathbb {R}^2)\) and \( \nabla \cdot \sigma _k =0.\)

-

(H3)

Defining \(q:\mathbb {R}^2\times \mathbb {R}^2 \rightarrow \mathbb {R}^2 \otimes \mathbb {R}^2\) by

$$\begin{aligned} q^{ij}(x,y)= \sum _{k=1}^\infty \sigma _k^i(x)\sigma _k^j(y),\quad \forall \, i,j =1,2, \, x,y\in \mathbb {R}^2, \end{aligned}$$we assume the following.

-

(a)

The mapping \((x,y)\mapsto q(x,y)=:Q(x-y)\in \mathbb {R}^2 \otimes \mathbb {R}^2\) depends only on the difference \(x-y\).

-

(b)

\(Q(0)=q(x,x)=\text {Id}\) for any \(x \in \mathbb {R}^2\).

-

(c)

We have \(Q \in C^2(\mathbb {R}^2;\mathbb {R}^2\otimes \mathbb {R}^2)\) and \( \sup _{x\in \mathbb {R}^2} |\nabla ^2Q(x)|<\infty .\)


Remark 2.1

For \(\varvec{\sigma }\) satisfying Assumption (H3), it is possible to show that the quantity

is finite. See [7, Rem. 4] for details. Note that due to (H3)-(b) one cannot re-scale \(\varvec{\sigma }\) so as to remove \(\gamma \) from (1.1).

Remark 2.2

It is important to note that one can instead specify the covariance matrix Q first. In fact, due to [25, Thm. 4.2.5] any matrix-valued map \(Q:\mathbb {R}^{2}\times \mathbb {R}^2\rightarrow \mathbb {R}^{2}\otimes \mathbb {R}^2\) satisfying the analogue of (2.2),

can be expressed as a family of vector fields \(\{\sigma _k\}_{k\ge 1}\) satisfying (H1)–(H3).

Examples and analyses of vector fields satisfying these assumptions can be found in [7, 19, Sec. 5] and [11, 15]. For the reader’s convenience, we give an explicit example here in the spirit of Remark 2.2, based on [7, Ex. 5] but adapted to our precise setting.

Example 2.3

Let \(f\in L^1(\mathbb {R}_+)\) be such that \(\int _{\mathbb {R}_+} rf(r) \mathop {}\!\textrm{d}r =\pi ^{-1}\) and \(\int _{\mathbb {R}^2}|u|^ 2f(|u|)\mathop {}\!\textrm{d}u <\infty \). Then, let \(\Pi : \mathbb {R}\rightarrow M_{2\times 2}(\mathbb {R})\) be the \(2\times 2\)-matrix-valued map defined by,

Then, we define the covariance function,

Property (H3) (a) is satisfied by definition, after setting \(q(x,y):= Q(x-y)\). Since

property (H3) (b) is easily checked by moving to polar coordinates, making use of elementary trigonometric identities and the normalization \(\int _{\mathbb {R}_+} r f(r)\mathop {}\!\textrm{d}r = \pi ^{-1}\). Finally, (H3) (c) can be checked by a straightforward computation using smoothness of the trigonometric functions and the moment assumption on f.
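The displays defining \(\Pi \) and Q in Example 2.3 are not reproduced in this version, so the following sketch is a hedged illustration only. It assumes that \(\Pi (\theta )=\text {Id}-e_\theta \otimes e_\theta \) with \(e_\theta =(\cos \theta ,\sin \theta )\), and that \(Q(z)=\int _{\mathbb {R}^2}\cos (z\cdot u)\,\Pi (\arg u)\,f(|u|)\mathop {}\!\textrm{d}u\). It also uses the hypothetical profile \(f(r)=\frac{2}{\pi }e^{-r^2}\), which satisfies both conditions imposed on f. These choices are ours and need not coincide with [7, Ex. 5]. The sketch evaluates Q by quadrature in polar coordinates and checks that \(Q(0)=\text {Id}\), as required by (H3)(b). For this particular f, one can also compute \(\text {tr}\,Q(z)=2e^{-|z|^2/4}\) in closed form, which serves as an accuracy check.

```python
import numpy as np

def f(r):
    # Hypothetical radial profile with int_0^inf r f(r) dr = 1/pi.
    return (2.0 / np.pi) * np.exp(-r ** 2)

def Pi(theta):
    # Assumed form: projection onto the direction orthogonal to e = (cos t, sin t).
    e = np.stack([np.cos(theta), np.sin(theta)])                  # shape (2, n)
    return np.eye(2)[:, :, None] - e[:, None, :] * e[None, :, :]  # shape (2, 2, n)

def Q(z, n_r=4000, n_theta=256, r_max=8.0):
    """Polar quadrature of int cos(z.u) Pi(arg u) f(|u|) du over R^2."""
    dr = r_max / n_r
    r = (np.arange(n_r) + 0.5) * dr                      # midpoint rule in r
    theta = np.arange(n_theta) * (2 * np.pi / n_theta)   # periodic rule in theta
    R, T = np.meshgrid(r, theta, indexing="ij")
    phase = np.cos(z[0] * R * np.cos(T) + z[1] * R * np.sin(T))
    w = R * f(R) * phase * dr * (2 * np.pi / n_theta)    # includes Jacobian r
    return np.einsum("ijt,rt->ij", Pi(theta), w)

Q0 = Q(np.zeros(2))
z = np.array([0.7, -0.3])
Qz = Q(z)
print(np.round(Q0, 6))                        # should be the identity, i.e. (H3)(b)
print(np.trace(Qz), 2 * np.exp(-z @ z / 4))   # closed form for this particular f
```

The normalization \(\int _{\mathbb {R}_+} r f(r)\mathop {}\!\textrm{d}r=\pi ^{-1}\) is exactly what makes \(Q(0)=\text {Id}\). The angular integral of \(\Pi \) equals \(\pi \,\text {Id}\), and the radial integral contributes \(\pi ^{-1}\).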

We now define our notion of weak solutions.

Definition 2.4

Let \(\chi , \gamma >0\). Then, given \(u_0 \in \mathcal {P}(\mathbb {R}^2)\cap L^2(\mathbb {R}^2)\), we say that a weak solution to (1.1) is a pair \((u,\bar{T})\) where

-

\(\bar{T}\) is an \(\{\mathcal {F}_t\}_{t\ge 0}\) stopping time taking values in \(\mathbb {R}_+\cup \{\infty \}\),

-

For \(T< \bar{T}\), u is an \(S_T:=C_TL^2 \cap L^2_T H^1\)-valued random variable such that

$$\begin{aligned} \mathbb {E}\left[ \Vert u\Vert ^2_{L^\infty _TL^1}+\Vert u\Vert ^2_{L^\infty _T L^2} + \Vert u\Vert ^2_{L^2_TH^1}\right] <\infty . \end{aligned}$$

In addition, for any \(t \in [0,T]\), \(\phi \in H^1(\mathbb {R}^2)\), \(\mathbb {P}\)-a.s. the following identities hold,

In Appendix C, we detail a standard argument to show that there exists a deterministic, positive time \(T>0\) such that (u, T) is a weak solution in the above sense. This is due to the particular structure of the noise and we stress that in general the maximal time of existence may be random.

Applying the standard Itô–Stratonovich correction, one obtains the following equivalent Itô formulation; a sketch of the proof is given in Appendix B.

Remark 2.5

Let \((u,\bar{T})\) be a solution, in the sense of Definition 2.4, to (1.1). Then it also holds that \((u,\bar{T})\) is a solution to the following Itô equation: For every \(\phi \in H^1(\mathbb {R}^2)\), \(t\in [0,\bar{T}]\), \(\mathbb {P}\)-a.s.

Remark 2.6

It follows from Definition 2.4 and the standard chain rule, obeyed by the Stratonovich integral, that for u a weak, Stratonovich solution to (1.1) and \(F \in C^3(L^2(\mathbb {R}^2);\mathbb {R})\),

where \(DF[u_s][\varphi ]\) denotes the Gateaux derivative of F at \(u_s\) in the direction \(\varphi \in H^{1}(\mathbb {R}^2)\). An equivalent Itô formula for nonlinear functionals of solutions to (2.4) also holds; see, for example, [31, Sec. 2].

Remark 2.7

Note that under assumption (H1), for any \(T>0\) and any weak solution on [0, T], the stochastic integral is well defined as an element of \(L^2(\Omega \times [0,T];L^2(\mathbb {R}^2))\subset L^2(\Omega \times [0,T];H^{-1}(\mathbb {R}^2))\), since for any \(t\in (0,T]\), we have

We are ready to state our main result.

Theorem 2.8

(Blow-up in finite time) Let \(\chi ,\,\gamma >0\) and let \(u_0\in \mathcal {P}_{2}(\mathbb {R}^2)\cap L^2(\mathbb {R}^2)\) be such that \(\int x u_0(x)\mathop {}\!\textrm{d}x =0\). Assume that \(\varvec{\sigma }=\{\sigma _k\}_{k\ge 1}\) satisfies (H1)–(H3). Let \((u,{\bar{T}})\) be a weak solution to (1.1). Then

-

(i)

Under the condition

$$\begin{aligned} \chi > (1+\gamma )8\pi , \end{aligned}\tag{2.6}$$
we have

$$\begin{aligned} \mathbb {P}({\bar{T}} < T^*_1 )=1, \end{aligned}$$for \(T^*_1:= \frac{4\pi V[u_0]}{\chi -(1+\gamma )8\pi }\).

-

(ii)

Under the condition

$$\begin{aligned} \chi >(1+ \gamma V[u_0] C_{\varvec{\sigma }})8\pi \end{aligned}\tag{2.7}$$
we have

$$\begin{aligned} \mathbb {P}({\bar{T}} < T^*_2 )>0, \end{aligned}$$for \(T^*_2:= \frac{\log (\chi -8\pi ) - \log \left( \chi -V[u_0]8\pi \gamma C_{\varvec{\sigma }} -8\pi \right) }{2\gamma C_{\varvec{\sigma }}}\).

Remark 2.9

-

If \(V[u_0]C_{\varvec{\sigma }}>1\) and \(\chi \) satisfies (2.7), then \(\chi \) also satisfies (2.6), in which case blow-up occurs almost surely before \(T^*_1\). This has relevance to the setting of [16] in which a model similar to (1.1) is considered on \(\mathbb {T}^d\) for \(d=2,\,3\) where formally \(C_{\varvec{\sigma }}\) can be taken arbitrarily large.

-

In the case \(C_{\varvec{\sigma }}=0\), which corresponds to noise that is independent of the spatial variable, criterion (2.7) becomes \(\chi >8\pi \) which is exactly the criterion for blow-up of solutions to the deterministic PDE. Applying Theorem 2.8, one would only recover blow-up with positive probability in this case. However, using the spatial independence of the noise we can instead implement a change of variables, setting \(v(t,x):= u(t,x-\sqrt{2\gamma }\sigma W_t)\). It follows from the Leibniz rule that v solves a deterministic version of the PDE with viscosity equal to one. Hence, it blows up in finite time with probability one for \(\chi >8\pi \). Note that in [28] a similar model was treated, among others, with spatially homogeneous noise and positive probability of blow-up was shown only for \(\chi >(1+\gamma )8\pi \).

-

Observe that the second half of Theorem 2.8 demonstrates that (2.6) cannot be a sharp threshold for almost sure global well-posedness of (1.1) for all initial data (or all families of suitable vector fields \(\{\sigma _k\}_{k\ge 1}\)). Given any \(8\pi<\chi <(1+\gamma )8\pi \), initial data \(u_0\) (resp. family of vector fields \(\{\sigma _k\}_{k\ge 1}\)) one can always choose suitable vector fields (resp. initial data) such that \(\chi >(1+\gamma V[u_0]C_{\varvec{\sigma }})8\pi \) so that there is at least a positive probability that solutions cannot live for all time. However, the results of this paper leave open any quantitative information on this probability.
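The case distinction in this remark can be made concrete with a small Python sketch. It classifies a parameter tuple according to conditions (2.6) and (2.7) and computes the supremum of \(V[u_0]C_{\varvec{\sigma }}\) for which (2.7) holds. The function names are hypothetical helpers for illustration. When \(8\pi<\chi <(1+\gamma )8\pi \), this supremum, \((\chi /(8\pi )-1)/\gamma \), is strictly less than 1. This is consistent with the first bullet: any pair with \(V[u_0]C_{\varvec{\sigma }}>1\) that satisfies (2.7) already falls in the almost sure branch.

```python
import math

def blowup_regime(chi, gamma, V0, C_sigma):
    """Classify parameters according to the two criteria of Theorem 2.8."""
    if chi > (1 + gamma) * 8 * math.pi:                 # condition (2.6)
        return "almost sure"
    if chi > (1 + gamma * V0 * C_sigma) * 8 * math.pi:  # condition (2.7)
        return "positive probability"
    return "not covered"

def max_product(chi, gamma):
    """Supremum of V[u_0] * C_sigma for which (2.7) holds (needs chi > 8 pi)."""
    return (chi / (8 * math.pi) - 1) / gamma

# Intermediate regime 8 pi < chi < (1 + gamma) 8 pi, here chi = 10 pi, gamma = 1.
chi, gamma = 10 * math.pi, 1.0
print(max_product(chi, gamma))                # threshold for V[u_0] * C_sigma
print(blowup_regime(chi, gamma, 1.0, 0.2))    # -> positive probability
print(blowup_regime(chi, gamma, 1.0, 0.3))    # -> not covered
```

For any fixed \(\chi \) in this range, choosing vector fields (or initial data) so that \(V[u_0]C_{\varvec{\sigma }}\) lies below `max_product(chi, gamma)` places the problem in the positive-probability regime, as described in the bullet above.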

Remark 2.10

If we set \(T^*:= T^*_1 \wedge T^*_2\), then it is possible to show that \(T^*\) respects the ordering of \( V[u_0]C_{\varvec{\sigma }}\) and 1. That is,

As mentioned before, in the PDE case blow-up occurs for \(\chi >8\pi \), and weak solutions cannot exist beyond \(T^* = \frac{4\pi V[u_0]}{\chi -8\pi }\). It follows that, in all parameter regions, both the threshold for \(\chi \) and the definition of \(T^*\) in Theorem 2.8 converge to the corresponding deterministic quantities in the limit \(\gamma \rightarrow 0\).
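The limit \(\gamma \rightarrow 0\) can be checked numerically from the formulas for \(T^*_1\) and \(T^*_2\) in Theorem 2.8. The display giving the ordering of \(T^*\) in this remark is not reproduced here, so the sketch below only illustrates the convergence statement. Writing \(T^*_2\) as \(-\log (1-y)/(2\gamma C_{\varvec{\sigma }})\) with \(y=8\pi \gamma V[u_0]C_{\varvec{\sigma }}/(\chi -8\pi )\) is algebraically equivalent to the stated formula. It also shows that \(T^*_2\) equals the deterministic time multiplied by \(-\log (1-y)/y\ge 1\).

```python
import math

def T1(chi, gamma, V0):
    """T_1^* of Theorem 2.8 (i); requires chi > (1 + gamma) 8 pi."""
    return 4 * math.pi * V0 / (chi - (1 + gamma) * 8 * math.pi)

def T2(chi, gamma, V0, C_sigma):
    """T_2^* of Theorem 2.8 (ii); requires chi > (1 + gamma V0 C_sigma) 8 pi.

    log(a) - log(a - b) is evaluated as -log1p(-b / a) to avoid cancellation
    when gamma is small.
    """
    a = chi - 8 * math.pi
    b = 8 * math.pi * gamma * V0 * C_sigma
    return -math.log1p(-b / a) / (2 * gamma * C_sigma)

def T_pde(chi, V0):
    """Deterministic bound 4 pi V0 / (chi - 8 pi) for chi > 8 pi."""
    return 4 * math.pi * V0 / (chi - 8 * math.pi)

chi, V0, C_sigma = 12 * math.pi, 0.5, 0.8
for gamma in (1e-1, 1e-3, 1e-6):
    print(gamma, T1(chi, gamma, V0), T2(chi, gamma, V0, C_sigma), T_pde(chi, V0))
```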

The proof of Theorem 2.8 is completed in Sect. 4 after establishing some preliminary results in Sect. 3. The central point is to analyze an SDE satisfied by \(t\mapsto V[u_t]\).

3 A priori properties of weak solutions

The following lemma demonstrates that the expression \(\nabla c_t:= \nabla K *u_t\) is well-defined Lebesgue almost everywhere.

Lemma 3.1

Let \((u,\bar{T})\) be a weak solution to (1.1) in the sense of Definition 2.4. Then, there exists a \(C>0\) such that for all \(t\in (0,\bar{T}]\),

Proof

First, applying [29, Lem. 2.5] with \(q=3\) gives,

Interpolation between \(L^2(\mathbb {R}^2)\) and \(L^\infty (\mathbb {R}^2)\) gives,

Combined with the embedding \(H^1(\mathbb {R}^2)\hookrightarrow \mathcal {C}^{0,0}(\mathbb {R}^2)\) (see Lemma A.3), the required estimate is obtained. \(\square \)

Remark 3.2

Note that the choice of \(q=3\) in the proof of Lemma 3.1, and hence the resulting exponents, is essentially arbitrary. The only restriction is that a non-zero power of \(\Vert u_t\Vert _{L^p}\) for some \(p \in [1,2)\) must appear on the right-hand side. The choice of \(L^1\) is convenient since we will shortly show that \(\frac{\mathop {}\!\textrm{d}}{\mathop {}\!\textrm{d}t}\Vert u_t\Vert _{L^1}=0\) for all weak solutions.

Remark 3.3

Exploiting symmetries of the kernel K, (2.1) and following [36], we can write the advection term of (2.3) in a different form that will become useful later on. We note that,

Renaming the dummy variables in the double integral and applying Fubini’s theorem, we also have

Combining (3.4) and (3.5) gives

Therefore, in view of (2.1) we may re-write \(\langle u_s\nabla c_s,\nabla \phi \rangle \) as

In order to prove our main result, we will need to manipulate the zeroth, first, and second moments of weak solutions. To do so, we define a family of radial cut-off functions \(\Psi _\varepsilon \), indexed by \(\varepsilon \in (0,1)\), such that for some \(C>0\)

For any family of cut-off functions satisfying (3.7), it is straightforward to show that there exists a \(C>0\) such that

Note also that, since \(\text {supp}(\nabla \Psi _\varepsilon ) = \text {supp}(\Delta \Psi _\varepsilon ) = B_{2\varepsilon ^{-1}}(0){\setminus } B_{\varepsilon ^{-1}}(0)\), we have

We start with sign and mass preservation.

Proposition 3.4

Let \((u,\bar{T})\) be a weak solution to (1.1). If \(u_0\ge 0\), then \(\mathbb {P}\)-a.s.

-

(i)

\(u_t\ge 0\) for all \(t\in [0,\bar{T})\),

-

(ii)

\(\Vert u_t\Vert _{L^1}= \Vert u_0\Vert _{L^1} =1\) for all \(t\in [0,\bar{T})\).

Proof

Let us define

on \(L^2(\mathbb {R}^2)\), where \(u_t^- = u_t \mathbbm {1}_{\{u_t<0\}}\). The computations below can be rigorously justified in three steps. First, define an \(H^1\) approximation of the indicator function. Next, obtain bounds uniform in the approximation parameter using that \(u\in H^1\). Finally, pass to the limit using dominated convergence. For ease of exposition, we work directly with \(S[u_t]\), keeping these considerations in mind; the following calculations should therefore be understood formally.

Applying (2.5) gives

Regarding the stochastic integral term, using that \(\nabla \cdot \sigma _k =0\), \(u_s \big |_{\partial \{u_s<0 \}} =0\) and integrating by parts, we have

Regarding the finite variation integral,

we apply Young’s inequality to the second term to obtain

So choosing \(\varepsilon = \frac{\chi }{4}\), we have

Putting all this together in (3.10) and using that \(\nabla u_s \in L^2(\mathbb {R}^2)\) for almost every \(s\in [0,\bar{T}]\),

So, having in mind Lemma 3.1 and applying Grönwall’s inequality, we almost surely have

where C is the constant from Lemma 3.1. Since \(S[u_0] =0\), it follows that \(\mathbb {P}\)-a.s. \(S[u_t]=0\) for all \(t\in [0,\bar{T})\) which shows the first claim.

To show the second claim, for \(\varepsilon \in (0,1)\), we define \(M_\varepsilon [u_t]:= \int _{\mathbb {R}^2} \Psi _\varepsilon (x)u_t(x)\mathop {}\!\textrm{d}x\), where the cut-off functions \(\Psi _\varepsilon \) are given in (3.7). Using the weak form of the equation and integrating by parts where necessary we see that

Applying the Cauchy–Schwarz inequality, using that the Itô integral vanishes in expectation, and in view of (3.9), we find that there exists a \(C>0\) such that

Applying Fatou’s lemma,

Hence, \(\int _{\mathbb {R}^2} u_t(x)\mathop {}\!\textrm{d}x<\infty \) \(\mathbb {P}\)-a.s. for every \(t\in [0,\bar{T})\). We may now apply dominated convergence to each term in (3.11). In particular, stochastic dominated convergence is used for the last term on the right-hand side. Thus, to obtain almost sure convergence, all limits should be taken along a suitable subsequence. Finally, noting that \(\Delta \Psi _\varepsilon \) and \(\nabla \Psi _\varepsilon \) converge to zero pointwise almost everywhere, we conclude

In combination with the first statement of the proposition, this proves the second claim. \(\square \)
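The family of cut-offs in (3.7) is not written out in this version. A standard construction, assumed here purely for illustration, is \(\Psi _\varepsilon (x)=\psi (\varepsilon |x|)\), with \(\psi \) smooth, \(\psi \equiv 1\) on [0, 1] and \(\psi \equiv 0\) on \([2,\infty )\). With this choice, \(\nabla \Psi _\varepsilon \) and \(\Delta \Psi _\varepsilon \) vanish outside the annulus \(B_{2\varepsilon ^{-1}}(0){\setminus } B_{\varepsilon ^{-1}}(0)\). The sketch uses finite differences to estimate \(\sup |\nabla \Psi _\varepsilon |/\varepsilon \), \(\sup |\Delta \Psi _\varepsilon |/\varepsilon ^2\) and \(\sup |\Delta (|x|^2\Psi _\varepsilon )|\). These scaled quantities do not depend on \(\varepsilon \), which is the kind of uniform control used when letting \(\varepsilon \rightarrow 0\) here and in Proposition 3.6.

```python
import numpy as np

def h(t):
    """Smooth function equal to exp(-1/t) for t > 0 and to 0 for t <= 0."""
    return np.where(t > 0, np.exp(-1.0 / np.maximum(t, 1e-300)), 0.0)

def psi(s):
    """Smooth profile: psi = 1 on [0, 1], psi = 0 on [2, inf), values in [0, 1]."""
    return h(2.0 - s) / (h(2.0 - s) + h(s - 1.0))

def cutoff_constants(eps, n=200_001):
    """Finite-difference estimates for the hypothetical cut-off Psi_eps(x) = psi(eps |x|).

    Returns sup|grad Psi_eps| / eps, sup|Lap Psi_eps| / eps^2 and
    sup|Lap(|x|^2 Psi_eps)|, all computed along the radial variable r = |x|.
    """
    r = np.linspace(1e-3, 3.0 / eps, n)          # radial grid covering the annulus
    P = psi(eps * r)
    dP = np.gradient(P, r)                        # radial derivative Psi'(r)
    d2P = np.gradient(dP, r)
    lap = d2P + dP / r                            # radial Laplacian in two dimensions
    lap_r2 = r ** 2 * lap + 4 * r * dP + 4 * P    # Laplacian of |x|^2 Psi_eps
    return np.array([np.abs(dP).max() / eps,
                     np.abs(lap).max() / eps ** 2,
                     np.abs(lap_r2).max()])

for eps in (0.5, 0.1, 0.01):
    print(eps, cutoff_constants(eps))
```

The formula for \(\Delta (|x|^2\Psi _\varepsilon )\) uses \(\Delta (fg)=f\Delta g+2\nabla f\cdot \nabla g+g\Delta f\) with \(f=|x|^2\), so that \(\Delta f=4\) in two dimensions.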

The following corollary to Proposition 3.4 will be crucial to obtaining our central contradiction in the proof of Theorem 2.8.

Corollary 3.5

Let \((u,\bar{T})\) be a weak solution to (1.1). Then for any \(f:\mathbb {R}^2\rightarrow \mathbb {R}\) such that \(f>0\) Lebesgue almost everywhere and any \(t\in [0,\bar{T})\),

Proof

We first show that the support of any weak solution has positive Lebesgue measure. Fix a realization \(\omega \) in the full-measure set on which the conclusions of Proposition 3.4 hold, choose any \(t\in [0,\bar{T})\), and assume, for a contradiction, that \(u_t(\omega )\) is supported on a set of zero measure. However, since \(\Vert u_t(\omega )\Vert _{L^1}=1\), we find that for any \(p> 1\),

which is a contradiction. Since f is assumed to be strictly positive Lebesgue almost everywhere, the conclusion follows. \(\square \)

In the following proposition, we derive the evolution for the center of mass and the variance of a weak solution to (1.1).

Proposition 3.6

Let us assume that \(u_0\in L^2(\mathbb {R}^2)\cap \mathcal {P}(\mathbb {R}^2)\) is such that \(V[u_0] <\infty \). Then for any weak solution to (1.1) in the sense of Definition 2.4, \(\mathbb {P}\)-a.s. for any \(t\in [0,\bar{T})\),

Proof

Without loss of generality, we may assume that \(C[u_0] = \int _{\mathbb {R}^2}x u_0(x)\mathop {}\!\textrm{d}x =0\). Indeed, given a non-centred initial condition \(\tilde{u}_0\) with \(C[\tilde{u}_0]=c\ne 0\) one may redefine \({C[u_t]:= \int _{\mathbb {R}^2}(x-c)u_t(x)\mathop {}\!\textrm{d}x}\) whose evolution along weak solutions to (1.1) will again, using the argument given below, satisfy the identity (3.12). The rest of our analysis therefore holds without further change.

Let \(p\in \{1,2\}\), with the convention that \(x^1:=x\) and \(x^2:= |x|^2\). Since \(x^p\Psi _{\varepsilon }(x)\) is an \(H^1(\mathbb {R}^2)\) function, we may apply (2.4) along with Remark 3.3, and integrate by parts where necessary, to obtain

From (3.8), it follows that uniformly across \(x\in \mathbb {R}^2\) and \(\varepsilon \in (0,1)\), \(\Delta (x^p\Psi _\varepsilon (x))\) is bounded and \(\nabla (x^p\Psi _\varepsilon (x))\) is Lipschitz continuous. Hence, using that \(\Vert u_t\Vert _{L^1}=1\) for all \(t\in [0,\bar{T}]\) there exists a \(C>0\) such that, for all \(\varepsilon \in (0,1)\),

Note that we may directly apply Lebesgue’s dominated convergence to the initial data term, since \(|x^p\Psi _\varepsilon (x)u_0(x)|\,\le \, |x^pu_0(x)|\) where the latter is assumed to be integrable. Now, let us for the moment take only \(p=2\). Applying Fatou’s lemma,

Hence, \(\int _{\mathbb {R}^2} |x|^2 u_t(x)\mathop {}\!\textrm{d}x<\infty \) \(\mathbb {P}\)-a.s. From Proposition 3.4, \(u_t\) is a probability measure on \(\mathbb {R}^2\), so we have the bound

It follows that for \(p\in \{1,2\}\), \(\int _{\mathbb {R}^2} x^p u_t(x)\mathop {}\!\textrm{d}x<\infty \) \(\mathbb {P}\)-a.s. Since by definition we also have,

as in the proof of Proposition 3.4, we may apply dominated convergence in each integral of (3.14). Using that for \(p\in \{1,2\}\) and Lebesgue almost every \(x,\,y\in \mathbb {R}^2\)

we directly find the claimed identities for \(C[u_t]\) and \(\frac{1}{2}\int _{\mathbb {R}^2}|x|^2u_t(x)\mathop {}\!\textrm{d}x\). To conclude it only remains to note that

\(\square \)
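Remark 3.3 rests on the antisymmetry \(\nabla K(-z)=-\nabla K(z)\), where \(\nabla K(z)=-\frac{z}{2\pi |z|^2}\) for \(K(x)=-\frac{1}{2\pi }\ln |x|\). For \(\nabla \phi (x)=x\), i.e. \(\phi (x)=\frac{1}{2}|x|^2\), the symmetrized integrand \((x-y)\cdot (\nabla \phi (x)-\nabla \phi (y))/|x-y|^2\) is identically 1. This is consistent with the constant \(\frac{\chi }{4\pi }\) that appears in the proof of Theorem 2.8. The Python sketch below checks the kernel identity for a discrete probability measure, a stand-in chosen for illustration. In the discrete setting, the diagonal \(i=j\) must be excluded, and the constant becomes \(-(1-\sum _i m_i^2)/(4\pi )\). For a density, the diagonal is a null set and one obtains \(-\frac{1}{4\pi }\).

```python
import numpy as np

def grad_K(z):
    # grad K(z) = -z / (2 pi |z|^2) for K(x) = -(1/(2 pi)) ln|x|, valid for z != 0.
    return -z / (2 * np.pi * np.sum(z ** 2, axis=-1, keepdims=True))

rng = np.random.default_rng(1)
n = 300
x = rng.normal(size=(n, 2))
m = rng.random(n)
m /= m.sum()                                 # discrete probability measure

diff = x[:, None, :] - x[None, :, :]         # x_i - x_j
off = ~np.eye(n, dtype=bool)                 # exclude the singular diagonal i = j
gK = np.zeros_like(diff)
gK[off] = grad_K(diff[off])

grad_phi = x                                  # phi(x) = |x|^2 / 2
mm = m[:, None] * m[None, :]
# Direct form: sum_{i != j} m_i m_j grad K(x_i - x_j) . grad phi(x_i)
direct = np.sum(mm * np.einsum("ijk,ik->ij", gK, grad_phi))
# Symmetrized form: (1/2) sum_{i != j} m_i m_j grad K(x_i - x_j) . (grad phi(x_i) - grad phi(x_j))
sym = 0.5 * np.sum(mm * np.einsum("ijk,ijk->ij", gK,
                                  grad_phi[:, None, :] - grad_phi[None, :, :]))
expected = -(1 - np.sum(m ** 2)) / (4 * np.pi)
print(direct, sym, expected)
```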

4 Proof of Theorem 2.8

We will prove both statements by demonstrating that in each case the a priori properties of any weak solution proved in Proposition 3.4 will be violated at some finite time, either almost surely or with positive probability. Furthermore, we will make use of the identities shown in Proposition 3.6. Notice that our proofs of both of these propositions rely heavily on assumptions (H1)–(H3).

To prove (i), suppose, for a contradiction, that for some \(u_0 \in \mathcal {P}_2(\mathbb {R}^2)\cap L^2(\mathbb {R}^2)\) and some associated weak solution \((u,\bar{T})\) we have \(\mathbb {P}(\bar{T}=\infty )>0\), and choose any \(\omega \in \{\bar{T}=\infty \}\). We may in addition assume that \(\omega \) belongs to the full-measure set on which the solution lies in \(C_TL^2(\mathbb {R}^2)\cap L^2_TH^1(\mathbb {R}^2)\) for any \(T>0\) and on which the Itô integral is well defined. Applying (3.13) of Proposition 3.6, for any \(0<t<\infty \) and the above \(\omega \), we have that

Since the stochastic integral is by definition a local martingale, by the Dambis–Dubins–Schwarz theorem [34, Ch. V. Thm. 1.6] there exists a random time change \(t\mapsto q(t)\), with q(t) being the quadratic variation of the local martingale at time t, and a real-valued Brownian motion B such that for all t in the range of q,

Either the range of q is \([0,\infty )\) or q is bounded. If \(\omega \) is such that the first case holds, then there exists a \(T>0\) such that \(\sqrt{2\gamma }B_{q(T)(\omega )}(\omega )=V[u_0]\). At this time, since (2.6) implies \(\left( 2(\gamma +1)-\frac{\chi }{4\pi }\right) T-\frac{1}{2}|C[u_T]|^2(\omega )<0\) for any \(T>0\), we have

The latter is in contradiction with Corollary 3.5. Alternatively, if \(\omega \) is such that \(t\mapsto q(t)(\omega )\) is bounded, then there exists a \(B_\infty (\omega )\in \mathbb {R}\) such that \(\lim _{t\nearrow \infty }B_{q(t)(\omega )}(\omega ) = B_\infty (\omega )\), see cite[Ch. 5, Prop. 1.8]. In which case, for all \(t>0\),

Since the final term vanishes for large \(t>0\) and using again the fact that \(2(1+\gamma ) -\frac{\chi }{4\pi }<0\) there exists a \(T>0\) sufficiently large such that once more

Again this contradicts Corollary 3.5 and as such our initial assumption that \(\mathbb {P}(\bar{T}=\infty )>0\) must be false. Hence, \(\mathbb {P}(\bar{T}<\infty )=1\).

Finally, taking the expectation on both sides of (4.1) we see that for

one has

Since \(V[u_t]\) is non-negative, we must in addition have \(\mathbb {P}(\bar{T}< T^*_1) =1\).
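For orientation, the expectation step can be written out under two assumptions. First, we assume (4.1) takes the form suggested by the argument above, namely \(V[u_t]=V[u_0]+\left( 2(1+\gamma )-\frac{\chi }{4\pi }\right) t-\sqrt{2\gamma }B_{q(t)}-\frac{1}{2}|C[u_t]|^2\); this reading is ours. Second, we assume the stochastic integral has zero mean. Then

$$\begin{aligned} \mathbb {E}\big [V[u_t]\big ] \,\le \, V[u_0]+\Big (2(1+\gamma )-\frac{\chi }{4\pi }\Big )t = V[u_0]-\frac{\chi -(1+\gamma )8\pi }{4\pi }\,t. \end{aligned}$$

Under (2.6), the right-hand side vanishes exactly at \(t=\frac{4\pi V[u_0]}{\chi -(1+\gamma )8\pi }=T^*_1\) and is negative afterwards.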

To prove (ii), suppose instead, for a contradiction, that for some initial data satisfying the assumptions of Theorem 2.8 and (2.7), the associated weak solution satisfies \(\mathbb {P}(\bar{T}=\infty )=1\). Taking expectations on both sides of (3.13), we have

Using (3.12) and Itô’s isometry, we see that

where we exchanged summation and expectation using dominated convergence and recalling that \(u_s\in \mathcal {P}(\mathbb {R}^2)\) \(\mathbb {P}\)-a.s. for all \(s\in [0,T]\) and applying (H1).

The key step is now to bound \(\sum _{k=1}^\infty \sigma _k(x)\cdot \sigma _k(y)\) from below, recalling that \(u_t\ge 0\) \(\mathbb {P}\)-a.s. for all \(t\in [0,T]\). From Remark 2.1,

As a direct consequence, and in view of (H3)-b),

Rearranging the above inequality gives

Plugging (4.4) into (4.3) and using the fact that \(u_t\in \mathcal {P}(\mathbb {R}^2)\) \(\mathbb {P}\)-a.s. for all \(t\in [0,T]\), we find

The integrand in the second term can easily be rewritten as

Thus, we establish the lower bound

Therefore, inserting (4.5) into (4.2), we find

Applying Grönwall's lemma,

Evaluating the integrals,

which implies that if \(\chi >8\pi \left( 1+\gamma C_{\varvec{\sigma }} V[u_0]\right) \) and \(t\ge T^*_2\), for

then

This is again in contradiction with Corollary 3.5 which shows \(\mathbb {P}\)-a.s. positivity of \(V[u_t]\) for u a weak solution to (1.1). Hence, our initial assumption must have been false and so for any weak solution the probability of global existence must be strictly less than 1. Furthermore, using again the fact that \(V[u_t]\) is almost surely non-negative, we must in fact have \(\mathbb {P}(\bar{T}< T^*_2)>0\). \(\square \)
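The two conditions on \(\chi \) used in this proof can be compared directly. The helper below is a sketch that encodes only the threshold \(8\pi (1+\gamma )\) from part (i) and the threshold \(8\pi (1+\gamma C_{\varvec{\sigma }}V[u_0])\) from part (ii). The function name and return labels are hypothetical. All remaining hypotheses, namely (H1)–(H3) and the conditions on the initial data, are assumed rather than checked.

```python
import math


def blow_up_regime(chi, gamma, c_sigma, v0):
    """Compare chi with the two thresholds appearing in the proof (other hypotheses assumed).

    c_sigma stands for C_sigma and v0 for V[u_0]; the returned labels are illustrative.
    """
    threshold_sure = 8.0 * math.pi * (1.0 + gamma)                      # part (i)
    threshold_positive = 8.0 * math.pi * (1.0 + gamma * c_sigma * v0)   # part (ii)
    if chi > threshold_sure:
        return "blow-up with probability 1"
    if chi > threshold_positive:
        return "blow-up with positive probability"
    return "not covered by these criteria"
```

For \(\gamma >0\), the intermediate regime \(8\pi (1+\gamma C_{\varvec{\sigma }}V[u_0])<\chi \le 8\pi (1+\gamma )\) is non-empty exactly when \(C_{\varvec{\sigma }}V[u_0]<1\).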

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

H. Bahouri, J.-Y. Chemin, and R. Danchin. Fourier Analysis and Nonlinear Partial Differential Equations. Springer, 2011.

A. Blanchet, J. A. Carrillo, and N. Masmoudi. Infinite time aggregation for the critical Patlak–Keller–Segel model in \({\mathbb{R}}^{2}\). Comm. Pure Appl. Math., 61(10):1449–1481, 2008. https://doi.org/10.1002/cpa.20225.

J. F. Bonder and P. Groisman. Time-space white noise eliminates global solutions in reaction-diffusion equations. Physica D: Nonlinear Phenomena, 238(2):209–215, 2009. ISSN 0167-2789. https://doi.org/10.1016/j.physd.2008.09.005.

M. Bossy and D. Talay. Convergence rate for the approximation of the limit law of weakly interacting particles: application to the Burgers equation. Ann. Appl. Probab., 6(3):818–861, 1996. ISSN 1050-5164. https://doi.org/10.1214/aoap/1034968229.

H. Brezis. Functional analysis, Sobolev spaces and partial differential equations. Universitext. Springer, New York, 2011. ISBN 978-0-387-70913-0.

R. Chetrite, J.-Y. Delannoy, and K. Gawȩdzki. Kraichnan flow in a square: an example of integrable chaos. J. Stat. Phys., 126(6):1165–1200, 2007. ISSN 0022-4715. https://doi.org/10.1007/s10955-006-9225-5.

M. Coghi and F. Flandoli. Propagation of chaos for interacting particles subject to environmental noise. The Annals of Applied Probability, 26(3):1407–1442, 2016. https://doi.org/10.1214/15-aap1120.

R. W. R. Darling. Isotropic stochastic flows: a survey. In Diffusion processes and related problems in analysis, Vol. II (Charlotte, NC, 1990), volume 27 of Progr. Probab., pages 75–94. Birkhäuser Boston, Boston, MA, 1992.

A. de Bouard and A. Debussche. On the effect of a noise on the solutions of the focusing supercritical nonlinear Schrödinger equation. Probab. Theory Related Fields, 123(1):76–96, 2002. ISSN 0178-8051. https://doi.org/10.1007/s004400100183.

A. de Bouard and A. Debussche. Blow-up for the stochastic nonlinear Schrödinger equation with multiplicative noise. Ann. Probab., 33(3):1078–1110, 2005. ISSN 0091-1798. https://doi.org/10.1214/009117904000000964.

F. Delarue, F. Flandoli, and D. Vincenzi. Noise prevents collapse of Vlasov–Poisson point charges. Communications on Pure and Applied Mathematics, 67(10):1700–1736, 2013. https://doi.org/10.1002/cpa.21476.

F. Flandoli. Random perturbation of PDEs and fluid dynamic models. Springer, 2011.

F. Flandoli and D. Luo. High mode transport noise improves vorticity blow-up control in 3D Navier-Stokes equations. Probability Theory and Related Fields, 180(1):309–363, 2021. ISSN 1432-2064. https://doi.org/10.1007/s00440-021-01037-5.

F. Flandoli, M. Gubinelli, and E. Priola. Well-posedness of the transport equation by stochastic perturbation. Invent. Math., 180(1):1–53, 2010. ISSN 0020-9910. https://doi.org/10.1007/s00222-009-0224-4.

F. Flandoli, M. Gubinelli, and E. Priola. Full well-posedness of point vortex dynamics corresponding to stochastic 2D Euler equations. Stochastic Processes and their Applications, 121(7):1445–1463, 2011. https://doi.org/10.1016/j.spa.2011.03.004.

F. Flandoli, L. Galeati, and D. Luo. Delayed blow-up by transport noise. Comm. Partial Differential Equations, 0(46):1–39, 2021a. https://doi.org/10.1080/03605302.2021.1893748.

F. Flandoli, L. Galeati, and D. Luo. Quantitative convergence rates for scaling limit of SPDEs with transport noise. arXiv:2104.01740, 2021b.

L. Galeati. On the convergence of stochastic transport equations to a deterministic parabolic one. Stochastics and Partial Differential Equations: Analysis and Computations, 8(4):833–868, 2020. ISSN 2194-041X. https://doi.org/10.1007/s40072-019-00162-6.

B. Gess and I. Yaroslavtsev. Stabilization by transport noise and enhanced dissipation in the Kraichnan model. arXiv:2104.03949, 2021.

T. Hillen and A. Potapov. The one-dimensional chemotaxis model: global existence and asymptotic profile. Mathematical Methods in the Applied Sciences, 27(15):1783–1801, 2004. https://doi.org/10.1002/mma.569.

M. Hofmanová, R. Zhu, and X. Zhu. Global-in-time probabilistically strong and Markov solutions to stochastic 3D Navier–Stokes equations: existence and nonuniqueness. The Annals of Probability, 51(2):524–579, 2023. https://doi.org/10.1214/22-AOP1607.

G. Iyer, X. Xu, and A. Zlatoš. Convection-induced singularity suppression in the Keller–Segel and other non-linear PDEs. Transactions of the American Mathematical Society, page 1, 2020. https://doi.org/10.1090/tran/8195.

A. Kiselev and X. Xu. Suppression of chemotactic explosion by mixing. Archive for Rational Mechanics and Analysis, 222(2):1077–1112, 2016. https://doi.org/10.1007/s00205-016-1017-8.

R. H. Kraichnan. Small-scale structure of a scalar field convected by turbulence. Physics of Fluids, 11(5):945–953, May 1968. https://doi.org/10.1063/1.1692063.

H. Kunita. Stochastic flows and stochastic differential equations, volume 24 of Cambridge Studies in Advanced Mathematics. Cambridge University Press, Cambridge, 1997. ISBN 0-521-35050-6; 0-521-59925-3. Reprint of the 1990 original.

Y. Le Jan. On isotropic Brownian motions. Z. Wahrsch. Verw. Gebiete, 70(4):609–620, 1985. ISSN 0044-3719. https://doi.org/10.1007/BF00531870.

A. Lorz. A coupled Keller–Segel–Stokes model: global existence for small initial data and blow-up delay. Communications in Mathematical Sciences, 10(2):555–574, 2012. https://doi.org/10.4310/cms.2012.v10.n2.a7.

O. Misiats, O. Stanzhytskyi, and I. Topaloglu. On global existence and blowup of solutions of Stochastic Keller–Segel type equation. Nonlinear Differential Equations and Applications NoDEA, 29(1):3, 2021. ISSN 1420-9004. https://doi.org/10.1007/s00030-021-00735-2.

T. Nagai. Global existence and decay estimates of solutions to a parabolic-elliptic system of drift-diffusion type in \({\mathbb{R}}^2\). Differential Integral Equations, 24(1-2):29–68, 2011. ISSN 0893-4983.

K. Osaki and A. Yagi. Finite dimensional attractor for one-dimensional Keller–Segel equations. Funkcial. Ekvac., 44(3):441–469, 2001. ISSN 0532-8721. URL http://www.math.kobe-u.ac.jp/~fe/xml/mr1893940.xml.

É. Pardoux. Stochastic partial differential equations—an introduction. SpringerBriefs in Mathematics. Springer, Cham, 2021. ISBN 978-3-030-89002-5; 978-3-030-89003-2. https://doi.org/10.1007/978-3-030-89003-2.

B. Perthame. PDE models for chemotactic movements: parabolic, hyperbolic and kinetic. Appl. Math., 49(6):539–564, 2004. ISSN 0862-7940. https://doi.org/10.1007/s10492-004-6431-9.

C. Prévôt and M. Röckner. A concise course on stochastic partial differential equations. Springer, 2007.

D. Revuz and M. Yor. Continuous Martingales and Brownian Motion. Springer, 2008.

M. Romito. Uniqueness and blow-up for a stochastic viscous dyadic model. Probab. Theory Related Fields, 158(3-4):895–924, 2014. ISSN 0178-8051. https://doi.org/10.1007/s00440-013-0499-7.

T. Senba and T. Suzuki. Weak solutions to a parabolic-elliptic system of chemotaxis. Journal of Functional Analysis, 191:17–51, 2002.

M. Winkler. Boundedness in a two-dimensional Keller–Segel–Navier–Stokes system involving a rapidly diffusing repulsive signal. Zeitschrift für angewandte Mathematik und Physik, 71(1), 2019a. https://doi.org/10.1007/s00033-019-1232-x.

M. Winkler. A three-dimensional Keller–Segel–Navier–Stokes system with logistic source: global weak solutions and asymptotic stabilization. Journal of Functional Analysis, 276(5):1339–1401, 2019b. https://doi.org/10.1016/j.jfa.2018.12.009.

Acknowledgements

The authors warmly thank J. Norris, A. de Bouard, and L. Galeati for helpful discussions and B. Hambly for useful comments on the manuscript. The authors would like to express their gratitude to the French Centre National de Recherche Scientifique (CNRS) for the grant (PEPS JCJC) that supported this project. M.T. was partly supported by Fondation Mathématique Jacques Hadamard. Work on this paper was undertaken during A.M.’s tenure as INI-Simons Post Doctoral Research Fellow hosted by the Isaac Newton Institute for Mathematical Sciences (INI) participating in programme Frontiers in Kinetic Theory, and by the Department of Pure Mathematics and Mathematical Statistics (DPMMS) at the University of Cambridge. This author would like to thank INI and DPMMS for support and hospitality during this fellowship, which was supported by Simons Foundation (award ID 316017) and by Engineering and Physical Sciences Research Council (EPSRC) Grant Number EP/R014604/1.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors have no competing financial or non-financial interests that relate to this work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

A Sobolev spaces on \(\mathbb {R}^d\)

We include some useful definitions and lemmas concerning inhomogeneous Sobolev spaces on \(\mathbb {R}^2\).

Definition A.1

Let \(\alpha \in \mathbb {R}\). The non-homogeneous Sobolev space \(H^\alpha (\mathbb {R}^2)\) consists of the tempered distributions \(u\in \mathcal {S}'(\mathbb {R}^2)\) such that \(\hat{u}\in L^1_{\text {loc}}(\mathbb {R}^2)\) and

where \(\mathcal {F}^{-1}\) denotes the inverse Fourier transform.

We recall that \(H^\alpha (\mathbb {R}^2)\) is a Hilbert space for all \(\alpha \in \mathbb {R}\) with inner products,

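When \(\alpha =1\), we have \(\langle u,v\rangle _{H^1}= \langle u,v\rangle _{L^2}+\langle \nabla u,\nabla v\rangle _{L^2}\). It follows from the definition that for \(\alpha _0<\alpha _1\),

\(\Vert u\Vert _{H^{\alpha _0}}\,\le \, \Vert u\Vert _{H^{\alpha _1}}, \quad \text {so that } H^{\alpha _1}(\mathbb {R}^2)\hookrightarrow H^{\alpha _0}(\mathbb {R}^2).\)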

We also recall the following interpolation and embedding results.

Lemma A.2

([1, Prop. 1.32 & Prop. 1.52]) For \(\alpha _0 \,\le \, \alpha \,\le \, \alpha _1\), it holds that \(H^{\alpha _0}\cap H^{\alpha _1}\hookrightarrow H^\alpha \). In particular, for all \(\theta \in [0,1]\) and \(\alpha = (1-\theta )\alpha _0 + \theta \alpha _1\), one has

\(\Vert u\Vert _{H^{\alpha }}\,\le \, \Vert u\Vert _{H^{\alpha _0}}^{1-\theta }\Vert u\Vert _{H^{\alpha _1}}^{\theta }.\)

Lemma A.3

([1, Thm. 1.66]) For \(\alpha \in \mathbb {R}\), the space \(H^\alpha (\mathbb {R}^2)\) embeds continuously into

- The Lebesgue space \(L^p(\mathbb {R}^2)\), if \(0<\alpha <1\) and \(2\,\le \, p\,\le \, {\frac{2}{1-\alpha }}\);
- The Hölder space \(\mathcal {C}^{k,\rho }(\mathbb {R}^2)\), if \(\alpha \ge {1+k+\rho }\) for some \(k\in \mathbb {N}\) and \(\rho \in (0,1)\).
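Both lemmas are easy to probe numerically. The sketch below uses hypothetical helper names that do not come from the source. It first checks the interpolation inequality \(\Vert u\Vert _{H^{\alpha }}\le \Vert u\Vert _{H^{\alpha _0}}^{1-\theta }\Vert u\Vert _{H^{\alpha _1}}^{\theta }\) of Lemma A.2 on a discrete spectrum, replacing the integral that defines the \(H^\alpha \) norm by a finite sum of \((1+|\xi |^2)^\alpha |\hat{u}(\xi )|^2\) over randomly sampled frequencies. This substitution is harmless: the inequality is Hölder's inequality on the Fourier side, and a common quadrature weight would scale both sides equally. The sketch then encodes the first bullet of Lemma A.3, where in two dimensions the critical exponent \(2d/(d-2\alpha )\) equals \(2/(1-\alpha )\).

```python
import random


def sobolev_norm(coeffs, freqs, s):
    """Discrete stand-in for ||u||_{H^s}: sqrt(sum (1 + |xi|^2)^s |u_hat(xi)|^2)."""
    return sum((1.0 + xi1 * xi1 + xi2 * xi2) ** s * abs(c) ** 2
               for c, (xi1, xi2) in zip(coeffs, freqs)) ** 0.5


def interpolation_sides(alpha0, alpha1, theta, coeffs, freqs):
    """Return (||u||_{H^alpha}, ||u||_{H^alpha0}^(1-theta) * ||u||_{H^alpha1}^theta)."""
    alpha = (1.0 - theta) * alpha0 + theta * alpha1
    lhs = sobolev_norm(coeffs, freqs, alpha)
    rhs = (sobolev_norm(coeffs, freqs, alpha0) ** (1.0 - theta)
           * sobolev_norm(coeffs, freqs, alpha1) ** theta)
    return lhs, rhs


def random_spectrum(n=500, radius=20.0, seed=1):
    """Hypothetical test data: random complex coefficients at random 2D frequencies."""
    rng = random.Random(seed)
    freqs = [(rng.uniform(-radius, radius), rng.uniform(-radius, radius)) for _ in range(n)]
    coeffs = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
    return coeffs, freqs


def lebesgue_exponents(alpha):
    """Range [2, 2/(1 - alpha)] of p with H^alpha(R^2) -> L^p(R^2), for 0 < alpha < 1."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("the L^p embedding requires 0 < alpha < 1")
    return 2.0, 2.0 / (1.0 - alpha)
```

With \(\alpha _0=0\), \(\alpha _1=1\) and \(\theta =\frac{1}{2}\), the first check gives \(\Vert u\Vert _{H^{1/2}}\le \Vert u\Vert _{L^2}^{1/2}\Vert u\Vert _{H^1}^{1/2}\). Moreover, `lebesgue_exponents(0.5)` returns `(2.0, 4.0)`, so \(p=3\) is admissible. Facts of this kind are combined in the proof of Lemma C.2.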

B Stratonovich to Itô correction

We briefly detail the necessary calculations to justify Remark 2.5. We refer to [7, Sec. 2.2], [13, Sec. 2], and [18, Sec. 2.3] for similar arguments. All equalities below should be interpreted in the weak sense.

Lemma B.1

Let \((u,\bar{T})\) be a Stratonovich solution to (1.1), in the sense of Definition 2.4, with \(\varvec{\sigma }\) satisfying Assumptions (H1)–(H3). Then u also solves the Itô SPDE,

Proof

Repeating the caveat that all equalities should be understood after testing against suitable test functions, for all \(k\ge 1\) and making use of (H1) to ensure the stochastic integrals are well defined,

where the process \(s \mapsto [ u,W^k]_s\) denotes the quadratic covariation between u and \(W^k\). Using (1.1), we find

so that we have

Summing over \(k\ge 1\) and applying the Leibniz rule, we see that

where \(Q^{ij}(0)= \sum _{k=1}^\infty \sigma _k^i(x) \sigma _k^j(x)\) for any \(x\in \mathbb {R}^2\) and \(\nabla \sigma _k \cdot \sigma _k\) is the vector field with components,

Applying the Leibniz rule once more, for \(j=1,\ldots , d\), we see that

By Assumptions (H2) and (H3), we have that \(\nabla \cdot \sigma _k =0\) and \(q^{ij}(x,x)=Q^{ij}(0)=\delta _{ij}\) from which it follows that \(\sum _{k=1}^\infty (\nabla \sigma _k \cdot \sigma _k)^i=0\) for all \(i=1,\ldots ,d\) and that

which completes the proof. \(\square \)
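The cancellations in the proof of Lemma B.1 have a simple particle counterpart. Consider the hypothetical family \(\sigma _1=(\cos x_2,0)\), \(\sigma _2=(\sin x_2,0)\), \(\sigma _3=(0,\cos x_1)\), \(\sigma _4=(0,\sin x_1)\). These fields are not taken from the source and are not checked against every part of (H1)–(H3). Each of them is divergence free, \(\sum _k(\nabla \sigma _k\cdot \sigma _k)^i=0\), and \(\sum _k\sigma _k^i\sigma _k^j=\delta _{ij}\). The Stratonovich SDE \(\textrm{d}X_t=\sum _k\sigma _k(X_t)\circ \textrm{d}W^k_t\) therefore has no Itô drift correction. By Lévy's characterization, X is a standard planar Brownian motion, so \(\mathbb {E}|X_t-X_0|^2=2t\). The sketch below checks this with a stochastic Heun scheme, which approximates Stratonovich (not Itô) solutions.

```python
import math
import random

# Hypothetical divergence-free fields sigma_k(x1, x2); not taken from the source.
FIELDS = (
    lambda x1, x2: (math.cos(x2), 0.0),
    lambda x1, x2: (math.sin(x2), 0.0),
    lambda x1, x2: (0.0, math.cos(x1)),
    lambda x1, x2: (0.0, math.sin(x1)),
)


def heun_path(horizon, n_steps, rng):
    """Stochastic Heun scheme for dX = sum_k sigma_k(X) o dW^k, started at the origin."""
    dt = horizon / n_steps
    x1 = x2 = 0.0
    for _ in range(n_steps):
        dws = [rng.gauss(0.0, math.sqrt(dt)) for _ in FIELDS]
        start = [f(x1, x2) for f in FIELDS]
        # Predictor: an Euler step using this step's Brownian increments.
        p1 = x1 + sum(v[0] * dw for v, dw in zip(start, dws))
        p2 = x2 + sum(v[1] * dw for v, dw in zip(start, dws))
        pred = [f(p1, p2) for f in FIELDS]
        # Corrector: average the fields at both points (trapezoidal, Stratonovich rule).
        x1 += sum(0.5 * (a[0] + b[0]) * dw for a, b, dw in zip(start, pred, dws))
        x2 += sum(0.5 * (a[1] + b[1]) * dw for a, b, dw in zip(start, pred, dws))
    return x1, x2


def mean_square_displacement(horizon=1.0, n_paths=1500, n_steps=50, seed=0):
    """Monte Carlo estimate of E|X_T - X_0|^2, expected to be close to 2 * horizon."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        x1, x2 = heun_path(horizon, n_steps, rng)
        total += x1 * x1 + x2 * x2
    return total / n_paths
```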

C Local existence

In this section, we sketch a proof of local existence of weak solutions to (1.1). The method of proof is well known and can be found in a general form in [33]. In the case of (1.1), a similar proof of local existence was given in [16, Prop. 3.6]. For the reader's convenience, we supply here a lighter version adapted to our particular setting.

Theorem C.1

Let \(u_0 \in L^2(\mathbb {R}^2)\). Then there exists a pair \((u,\bar{T})\), with \(\bar{T}\) deterministic, which is a weak solution to (1.1) in the sense of Definition 2.4. Furthermore, \(\mathbb {P}\)-a.s. \(u\in C([0,\bar{T}];L^2(\mathbb {R}^2))\).

We begin with a local a priori bound on solutions to (1.1).

Lemma C.2

Let \(u_0 \in \mathcal {P}(\mathbb {R}^2)\,\cap \, L^2(\mathbb {R}^2)\). Then there exists a \(\bar{T}=\bar{T}(\Vert u_0\Vert _{L^2})>0\) and a \(C>0\), such that for any weak solution u to (1.1) on \([0,\bar{T}]\),

Furthermore, it holds that

Remark C.3

Since the constant on the right-hand side of (C.1) is non-random, it follows immediately that \(\Vert u\Vert _{L^\infty _{\bar{T}}L^2} + \Vert u\Vert _{L^2_{\bar{T}}H^1} \in L^p(\Omega ;\mathbb {R})\) for any \(p\ge 1\).

Proof of Lemma C.2

The identity (C.2) is given by Proposition 3.4, so it only remains to prove (C.1).

By assumption, \(u_t \in H^1(\mathbb {R}^2)\) for all \(t\in [0,\bar{T}]\) and it satisfies (2.3). In particular, the Stratonovich integral is well-defined for \(\mathbb {P}\)-a.e. \(\omega \in \Omega \). Applying (2.5) to the functional \(F[u_t]:= \Vert u_t\Vert ^2_{L^2}\), we have the identity,

For the nonlinear term, integrating by parts and using the equation satisfied by c,

Then using the Sobolev embedding \(H^{1/2}(\mathbb {R}^2)\hookrightarrow L^3(\mathbb {R}^2)\), real interpolation as given by Lemma A.2 and Young’s inequality, for any \(\varepsilon >0\),

Regarding the stochastic integral, since each \(\sigma _k\) is divergence free, it follows that,

So, choosing \(\varepsilon = \chi \), we find that \(\mathbb {P}\)-a.s.,

That is, \(t\mapsto \Vert u_t\Vert _{L^2}\) satisfies the nonlinear, locally Lipschitz differential inequality,

By standard ODE theory, and recalling that \(u_0\) is non-random, there exist a strictly positive, though possibly finite, time \(\bar{T}(\Vert u_0\Vert _{L^2})\) and a deterministic constant \(C>0\) such that,

Coming back to (C.4) to obtain a bound on \(\int _0^t \Vert \nabla u_s\Vert ^2_{L^2} \mathop {}\!\textrm{d}s\) for \(t\,\le \, \bar{T}\) completes the proof of (C.1). \(\square \)
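The appeal to standard ODE theory can be made concrete on a model problem. The differential inequality itself is not displayed above, so the sketch below assumes a hypothetical power-type comparison equation \(y'=Cy^p\) with \(p>1\); the actual exponents in the proof may differ. Its solution \(y(t)=\big (y_0^{1-p}-(p-1)Ct\big )^{-1/(p-1)}\) exists up to \(T^*=y_0^{1-p}/((p-1)C)\). This is a deterministic time that depends only on \(y_0\), which here plays the role of a function of \(\Vert u_0\Vert _{L^2}\). Any \(\bar{T}<T^*\) then yields a deterministic bound on \([0,\bar{T}]\).

```python
def existence_time(y0, c, p):
    """Blow-up time T* = y0**(1 - p) / ((p - 1) * c) of y' = c * y**p, y(0) = y0 > 0, p > 1."""
    return y0 ** (1.0 - p) / ((p - 1.0) * c)


def comparison_solution(y0, c, p, t):
    """Closed-form solution of y' = c * y**p, valid for 0 <= t < T*."""
    base = y0 ** (1.0 - p) - (p - 1.0) * c * t
    if base <= 0.0:
        raise ValueError("t lies beyond the existence time T*")
    return base ** (-1.0 / (p - 1.0))


def rk4_solution(y0, c, p, t, n_steps=1000):
    """Classical fourth-order Runge-Kutta cross-check of the closed form."""
    h = t / n_steps
    y = y0
    for _ in range(n_steps):
        k1 = c * y ** p
        k2 = c * (y + 0.5 * h * k1) ** p
        k3 = c * (y + 0.5 * h * k2) ** p
        k4 = c * (y + h * k3) ** p
        y += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return y
```

A smaller initial value gives a longer guaranteed interval, consistent with the dependence \(\bar{T}=\bar{T}(\Vert u_0\Vert _{L^2})\) in Lemma C.2.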

Definition C.4

We say that a mapping \(A:H^{1}(\mathbb {R}^2)\rightarrow H^{-1}(\mathbb {R}^2)\) is locally coercive, locally weakly monotone and hemi-continuous if the following hold:

Locally coercive: there exists an \(\alpha >0\) such that, for any \(R>0\), there exists a \(\lambda >0\) for which, for all \(u \in H^1(\mathbb {R}^2)\) with \(\Vert u\Vert _{H^1}\,\le \, R\), it holds that

Locally weakly monotone: for any \(R>0\) there exists a \(\lambda >0\) such that for all \(u,\,w\in H^{1}(\mathbb {R}^2)\) with \(\Vert u\Vert _{H^1}\vee \Vert w\Vert _{H^1}\,\le \, R\)

Hemi-continuous: for any \(u,\,w,\,v \in H^1(\mathbb {R}^2)\) the mapping,

is continuous.

Lemma C.5

The operator \(A:\mathcal {P}(\mathbb {R}^2)\cap H^{1}(\mathbb {R}^2) \rightarrow H^{-1}(\mathbb {R}^2)\) given by the mapping,

is locally coercive, locally weakly monotone and hemi-continuous.

Proof

Local Coercivity: Approximating u by smooth, compactly supported functions, it follows that,

By Hölder’s inequality, Young’s inequality and Lemma 3.1, for any \(\varepsilon >0\)

Hence, under the assumption that \(\Vert u\Vert _{H^1}\,\le \, R\) and choosing \(\varepsilon >0\) sufficiently small, there exist \(\alpha ,\,\lambda (R)>0\) such that

Local Weak Monotonicity: Let us introduce the notation \(-\Delta c_u = u\), so that we have

Applying Cauchy–Schwarz, followed by the triangle inequality, Young’s product inequality and Hölder’s inequality, gives

Making use of Lemma 3.1 and the assumptions that \(\Vert u\Vert _{L^1}\vee \Vert w\Vert _{L^1}=1\) and \(\Vert u\Vert _{H^1}\vee \Vert w\Vert _{H^1}\le R\), we find the estimates

and

Hence, again using the assumption \(\Vert u\Vert _{L^2}\,\le \, \Vert u\Vert _{H^1}\,\le \,R\), we find

which proves the claim.

Hemi-continuity: Letting \(u,\,v,\,w \in H^1(\mathbb {R}^2)\) and \(\theta \in \mathbb {R}\), we have

The first term directly converges to 0 as \(\theta \rightarrow 0\). For the second term, after applying Hölder’s inequality we see that we are required to control

which again directly converges to 0 as \(\theta \rightarrow 0\). \(\square \)

Lemma C.6

For \(\varvec{\sigma }:=\{\sigma _k\}_{k\ge 1}\) satisfying (H1), with each \(\sigma _k\) divergence free, the mapping,

is linear and strongly continuous.

Proof

Linearity is clear. Let \(u,\,w \in H^{1}(\mathbb {R}^2)\). Using the divergence-free property of the \(\sigma _k\),

\(\square \)
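The identity behind Lemma C.6, and behind the vanishing stochastic term in the proof of Lemma C.2, is \(\int (\sigma _k\cdot \nabla u)\,u\,\textrm{d}x=-\frac{1}{2}\int (\nabla \cdot \sigma _k)\,u^2\,\textrm{d}x=0\). The sketch below checks a discrete analogue on a periodic grid with central differences. It assumes fields of the hypothetical form \(\sigma =(a(x_2),b(x_1))\), for which the discrete pairing vanishes exactly up to rounding. For general divergence-free fields, the discrete sum vanishes only up to discretization error. A compressible field is included for contrast.

```python
import math
import random


def transport_pairing(u, h, field):
    """h^2 * sum_ij u_ij (sigma . grad_h u)_ij on a periodic grid with central differences."""
    n = len(u)
    total = 0.0
    for i in range(n):
        for j in range(n):
            s1, s2 = field(i * h, j * h)
            du_dx1 = (u[(i + 1) % n][j] - u[(i - 1) % n][j]) / (2.0 * h)
            du_dx2 = (u[i][(j + 1) % n] - u[i][(j - 1) % n]) / (2.0 * h)
            total += u[i][j] * (s1 * du_dx1 + s2 * du_dx2) * h * h
    return total


def random_grid(n=32, seed=2):
    """Hypothetical test function: i.i.d. Gaussian values on an n x n periodic grid."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]


def div_free(x1, x2):
    """sigma = (a(x2), b(x1)): divergence free, and the discrete pairing cancels exactly."""
    return math.cos(x2), math.sin(x1)


def compressible(x1, x2):
    """sigma = (sin x1, 0) has divergence cos x1, so the pairing need not vanish."""
    return math.sin(x1), 0.0
```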

Proof of Theorem C.1

The strategy of proof is first to define a finite-dimensional approximation to (1.1) by Galerkin projection: we project the solution and the nonlinear term onto a finite-dimensional subspace of \(L^2(\mathbb {R}^2)\). Using Lemma C.5 and the linearity of the noise term, it follows that this finite-dimensional system has a global solution. By the same arguments as in the proof of Lemma C.2, there is a non-trivial interval \([0,\bar{T}]\) on which we have uniform control of this solution. By Banach–Alaoglu, we can extract a convergent subsequence whose limit, u, will be our putative solution to (1.1). By linearity, the noise term converges, so it remains to show that A converges along this subsequence to A(u) and that u is a solution in the sense of Definition 2.4.
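The Galerkin step only uses the fact that the projection onto a finite-dimensional subspace is orthogonal, hence idempotent and non-expansive in \(L^2\). The sketch below illustrates this in a hypothetical discrete setting. A periodic grid of n points with the unitary discrete Fourier basis stands in for the basis \(\{e_k\}\), whose choice is left unspecified in the text, and the projection keeps the modes with \(|k|\le N\).

```python
import cmath
import math
import random


def dft(values):
    """Unitary discrete Fourier transform (normalized by 1/sqrt(n))."""
    n = len(values)
    return [sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(values)) / math.sqrt(n)
            for k in range(n)]


def idft(coeffs):
    """Inverse of the unitary transform above."""
    n = len(coeffs)
    return [sum(c * cmath.exp(2j * math.pi * k * m / n) for k, c in enumerate(coeffs)) / math.sqrt(n)
            for m in range(n)]


def project(values, n_modes):
    """Orthogonal projection onto the Fourier modes with |k| <= n_modes (k read modulo n)."""
    n = len(values)
    coeffs = dft(values)
    kept = [c if min(k, n - k) <= n_modes else 0.0 for k, c in enumerate(coeffs)]
    return idft(kept)


def l2_norm(values):
    return math.sqrt(sum(abs(v) ** 2 for v in values))


def random_signal(n=64, seed=3):
    """Hypothetical test data: i.i.d. Gaussian samples."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]
```

Because the transform is unitary, discarding coefficients can only decrease the \(L^2\) norm, which gives the bound \(\Vert \Pi _N f\Vert _{L^2}\le \Vert f\Vert _{L^2}\) used for the projected initial data.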

For \(N\ge 1\), let \(H_N \subset L^2(\mathbb {R}^2)\) denote the finite-dimensional subspace spanned by the basis vectors \(\{e_k\}_{|k|\,\le \, N}\) and let \(\Pi _N: L^2(\mathbb {R}^2)\rightarrow H_N\) be the orthogonal projection, so that \(\Vert \Pi _N f\Vert _{L^2}\,\le \, \Vert f\Vert _{L^2}\). Then we consider the finite-dimensional system of Stratonovich SDEs,

It follows from [33, Thm. 3.1.1] and Lemma C.5 that a unique global solution exists for all \(N\ge 1\). Furthermore, for each \(N\ge 1\), \(u^N\) is a smooth solution to a truncated version of (1.1) with smooth initial data and is such that, for all \(t>0\), \(\Vert u^N_{t}\Vert _{L^1} = \Vert u^N_{0}\Vert _{L^1}=1\). It is readily shown that

Hence, using the same arguments as in the proof of Lemma C.2, there exists a \(\bar{T}\in (0,\infty )\), depending only on \(\Vert u_0\Vert _{L^2}\) (recall that \(\Vert u^N_0\Vert _{L^2}\,\le \, \Vert u_0\Vert _{L^2}\)), such that

We can therefore apply the Banach–Alaoglu theorem [5, Thm. 3.16 & Thm. 3.17] to see that there exist subsequences \(\{u^k\}_{k\ge 1},\, \{A(u^k)\}_{k\ge 1}\), a \(u \in L^2(\Omega \times [0,\bar{T}];H^1(\mathbb {R}^2))\) and a \(\xi \in L^2(\Omega \times [0,\bar{T}];H^{-1}(\mathbb {R}^2))\) such that

It follows from the first of these convergences and Lemma C.6 that the stochastic integrals converge, so it remains to show that \(\xi = A(u)\). From the local monotonicity of A, for any \(t\in (0,\bar{T}]\), \(v\in L^2(\Omega \times [0,\bar{T}];H^1(\mathbb {R}^2))\) and \(N\ge 1\),

Using the identity,

which can be proved directly using the chain rule for Stratonovich integrals and the arguments of Lemma C.2, it is straightforward to show the inequality,

It follows, applying (C.9) in the final inequality, that for any \(v\in L^2(\Omega \times [0,\bar{T}]; H^1(\mathbb {R}^2))\),

Now, choosing \(v = u -\theta w\) for some \(\theta >0\) and \(w\in L^2(\Omega \times [0,\bar{T}];H^1(\mathbb {R}^2))\) gives that

So applying (C.7) and taking \(\theta \rightarrow 0\), we finally find that,

for all \(w\in L^2(\Omega \times [0,\bar{T}];H^1(\mathbb {R}^2))\), from which it follows that \(\xi =A(u) \in L^2(\Omega \times [0,\bar{T}];H^{-1}(\mathbb {R}^2))\).

It follows that \(u \in L^2(\Omega ;L^\infty ([0,\bar{T}];L^2(\mathbb {R}^2))) \cap L^2(\Omega \times [0,\bar{T}];H^1(\mathbb {R}^2))\) and satisfies (2.3). We now show that, in fact, \(u \in L^2(\Omega ;C([0,\bar{T}];L^2(\mathbb {R}^2)))\). To see this, we recall that, since \(L^2(\mathbb {R}^2)\) is a Hilbert space, if \(u_{t_k} \rightharpoonup u_t\) in \(L^2(\mathbb {R}^2)\) and \(\Vert u_{t_k}\Vert _{L^2}\rightarrow \Vert u_t\Vert _{L^2}\), one has

\(\Vert u_{t_k}-u_t\Vert ^2_{L^2} = \Vert u_{t_k}\Vert ^2_{L^2}-2\langle u_{t_k},u_t\rangle _{L^2}+\Vert u_t\Vert ^2_{L^2}\longrightarrow 0.\)

From (C.3), it follows that given a sequence \(t_k\rightarrow t\), \(\Vert u_{t_k}\Vert _{L^2}\rightarrow \Vert u_t\Vert _{L^2}\). So it suffices to show that \(u_{t_k}\rightharpoonup u_t\in L^2(\mathbb {R}^2)\). Let \(h\in L^2(\mathbb {R}^2)\) be arbitrary, \(\{h_n\}_{n\ge 1}\subset H^1(\mathbb {R}^2)\) be a sequence converging to h strongly in \(L^2(\mathbb {R}^2)\) and \(\varepsilon >0\), \(n_\varepsilon \ge 1\) be large enough such that,

Therefore, we have

By definition of a weak solution, \(u_{t_k} \rightarrow u_t\) strongly in \(H^{-1}(\mathbb {R}^2)\), and so we conclude

Since \(\varepsilon >0\) was arbitrary, we conclude that \(u_{t_k}\rightharpoonup u_t\) weakly in \(L^2(\mathbb {R}^2)\). Combined with the convergence of the norms, this gives \(u_{t_k}\rightarrow u_t\) strongly in \(L^2(\mathbb {R}^2)\). Furthermore, inspecting the proof, we see that the modulus of continuity is deterministic and hence \(u\in L^p(\Omega ;C([0,\bar{T}];L^2(\mathbb {R}^2)))\) for any \(p\ge 1\). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mayorcas, A., Tomašević, M. Blow-up for a stochastic model of chemotaxis driven by conservative noise on \(\mathbb {R}^2\). J. Evol. Equ. 23, 57 (2023). https://doi.org/10.1007/s00028-023-00900-3

DOI: https://doi.org/10.1007/s00028-023-00900-3