Abstract

The effects of climate change, population growth, and future hydrologic uncertainties necessitate increased water conservation, new water resources, and a shift towards sustainable urban water supply portfolios. Diversifying water portfolios with non-traditional water sources can play a key role. Rooftop harvested rainwater (RHRW), atmospheric and condensate harvesting, stormwater, recycled wastewater and greywater, and desalinated seawater and brackish water are all currently utilized and rapidly emerging non-traditional water sources. This review explores the status and trends around these non-traditional water sources, and reviews approaches and models for prioritizing, predicting, and quantifying metrics of concern. The analysis presented here suggests that understanding the challenges of location specific scenarios, socioeconomic knowledge gaps, water supply technologies, and/or water management structure is the crucial first step in establishing a model or framework approach to provide a strategy for improvement going forward. The findings of this study also suggest that clear policy guidance and onsite maintenance is necessary for variable water quality concerns of non-traditional sources like harvested rainwater and greywater. In addition, use of stormwater or reuse of wastewater raises public health concerns due to unknown risks and pathogen levels, thus rapid monitoring technologies and transparent reporting systems can facilitate their adoption. Finally, cost structure of desalination varies significantly around the world, largely due to regulatory requirements and local policies. Further reduction of its capital cost and energy consumption is identified as a hurdle for implementation. Overall, models and process analyses highlight the strength of comparative assessments across scenarios and water supply options.

Introduction

As population growth continues around the world, so does the need for potable water sources and infrastructure that can ensure its availability. Climate change, which includes extreme weather events and natural disasters, further exacerbates water stress due to its impacts to water quantity, quality, and local shortages1,2. Future climatic and hydrologic uncertainties that continue to widen the gap between water resource supply and demand have motivated water management decision-making towards increased conservation, technological advancements around water treatment, and a shift towards diversifying urban water portfolios with non-traditional, decentralized, or more “sustainable” sources3. The water supply and treatment paradigm must handle and prepare for current and future impacts of climate, populations, and disease.

To date, urban areas and developed countries around the world most commonly depend on centralized drinking water systems that draw from traditional surface and groundwater sources. These systems provide clean water for consumers and abide by standardized environmental waste disposal requirements. Therefore, improvements to the system with regard to population growth and climate change are more difficult and tend to focus on infrastructure retrofitting for increased flows and to support larger populations. It is questionable whether this remains economically and environmentally feasible in the years to come, especially in water-stressed areas4. Conversely, onsite and decentralized water systems remain the standard in many rural regions around the world for water collection, storage, treatment, and use. For example, rooftop harvested rainwater (RHRW), cisterns, and water recycling are well-established practices in rural areas and developing countries globally. However, these non-traditional water supplies vary in quality, health risks, and maintenance, and may be more impacted by natural disasters and disease agents than centralized infrastructure. Quantity, quality, and accessibility of water resources and treatment are complex challenges, and a better understanding across non-traditional water resources and treatment designs is needed to ensure widespread availability and safety of the water supplies.

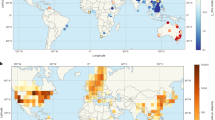

Non-traditional water sources come with unique implementation challenges and benefits of use. Future water supply security requires these challenges and benefits to be understood and researched for sustainable water management (Fig. 1). Sustainable water management is the use of water in a way that provides adequate quality and quantity and addresses unique social and ecological needs while ensuring that these needs and standards will also be met in the future. Specific challenges depend on regional and socioeconomic factors, such as cost, land use, and differing perspectives on water governance and technology adoption. Water governance has been defined as the range of political, social, economic, and administrative systems in place to develop, manage, and deliver water resources at different levels of society5. The water industry in the U.S. is highly fragmented, with nearly 150,000 entities registered with the EPA Safe Drinking Water Information System (SDWIS) as drinking water providers6. Therefore, the decision to adopt new water sources or invest in technology is region specific and dependent on local governance and conditions. As environmental and climatic factors exacerbate water issues, the effect is compounded in areas and communities that are at higher risk, such as semi-arid regions or areas of varying urbanization that lack the infrastructure to provide reliable, clean water. Therefore, there is no “one-size-fits-all” technology or approach to sustainable water management. Planning and design beyond the current systematic approaches and policies are necessary to begin to account for climate change, population growth, and water quantity and quality concerns across all groups and locations.

Analytical tools and models are useful in planning and design by allowing researchers to pinpoint and highlight critical areas. Many existing and emerging quantitative models, frameworks, and approaches can be used to identify the challenges of a specific non-traditional water source and to compare a process against established benchmarks or measurements for water resource decision-making. Drawing such comparisons can guide policymakers and researchers towards better implementation strategies and interventions, and can highlight uncertainties as areas for future research and data collection.

Identification of technological or socioeconomic knowledge gaps for non-traditional water sources provides the motivation for this review, and is the first step to ensuring a more adequate and equitable water future. This paper explores the status and trends around non-traditional water sources, identifies challenges, and reviews approaches for prioritizing and quantifying priority metrics. Current and emerging non-traditional water sources are tabulated and described. In parallel, examples of the most demonstrated models for each non-traditional water source are explored, identifying areas of priority application. Finally, a summary of key areas of future research is provided. The analysis suggests that understanding the challenges of scenarios and water technologies is the crucial first step in establishing a framework or model to provide a strategy for improvement. The multifaceted nature of decision-making for water management makes it important to compare, contrast, and weigh options for a sustainable water future. Therefore, this review is unique in that it defines and analyzes both a list of the major non-traditional water sources available and the methods for enumerating quantity and quality metrics to compare across sources. These approaches provide a toolbox for decision-makers, stakeholders, and researchers to better understand trends and applications for more diverse water supply portfolios.

Non-traditional water sources

Non-traditional, or alternative, water sources are defined as sustainable methods of providing water from sources besides fresh surface water or groundwater that reduce or offset the demand for freshwater7. Non-traditional water could mean onsite treatment and storage or larger-scale recycling or treatment to supplement existing water supplies. A list of the non-traditional water sources focused on in this paper and their definitions is provided in Table 1.

A notable difference between existing water supplies and non-traditional sources is the source-to-tap cost (aka, pipe-parity8). The cost of non-traditional water sources can range from 1.5 to 4 times higher than traditional water supplies (Fig. 2). Desalinated seawater has the highest cost with an upper end at U.S. $3.3 per m3. While seawater desalination can provide a virtually limitless supply of freshwater, its cost and energy requirements pose a significant hurdle for many stakeholders and municipalities. In addition, seawater desalination is only a feasible option for coastal regions, thus it is not readily accessible to landlocked countries experiencing water shortages (e.g., Jordan9, Mongolia10, and Nepal11). Water quality, health risk, treatment technology, and energy status of these water sources are the major knowledge gaps that require comparative quantification through models and simulations for better understanding of their origins, impacts, and management strategies for moving forward with water resource decisions. A summary of various water quantity and quality challenges is included in the Supplementary Information.

Assessments and modeling frameworks for non-traditional water sources

The sections below review examples of modeling frameworks that are potentially useful for non-traditional water source adoption decisions, followed by case studies of their application in various sources of non-traditional water in sections “Rainwater”, “Municipal wastewater”, “Desalinated water”, and “Condensate capture and atmospheric water harvesting”. These examples are by no means inclusive but simply emphasize the role of the assessment; there are many other models and assessment tools beyond the examples provided in this review.

Techno-economic assessment (TEA)

Techno-economic assessment (or analysis), commonly known as TEA, integrates a process model with a cost model to estimate the capital and operating costs of a given process. Beginning with a process flow diagram, a process is outlined by treatment unit. Previously established cost curves are useful in estimating the requirements and costs for units based on sizing, such as the volumetric flowrates needed for water treatment technologies, or the chemical addition needed based on the flow. Spreadsheets or process simulators, such as the Python-based Water Technoeconomic Assessment Pipe Parity Platform (WaterTAP3), are most commonly used for TEA12. Once the process model is successfully implemented, including all sizing and cost requirements, the final total capital cost, operating costs, and energy requirements can be summarized and compared between processes or locations13. Documentation and GitHub access for WaterTAP3 are available online at https://www.nawihub.org/water-tap3/.
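To illustrate how a TEA can condense capital and operating costs into a single figure that is comparable across processes, the sketch below annualizes capital cost with a capital recovery factor and divides the total annual cost by the annual water production. This is a generic levelized-cost calculation and is not the WaterTAP3 interface; the function names, discount rate, plant life, and cost inputs are assumptions chosen for illustration only.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of the capital cost charged each year over the plant life."""
    if years <= 0:
        raise ValueError("years must be positive")
    if rate == 0:
        return 1.0 / years  # no discounting: straight-line recovery
    growth = (1.0 + rate) ** years
    return rate * growth / (growth - 1.0)


def levelized_cost_of_water(
    capex: float,
    annual_opex: float,
    annual_volume_m3: float,
    rate: float,
    years: int,
) -> float:
    """Annualized capital cost plus annual operating cost, per m3 produced."""
    if annual_volume_m3 <= 0:
        raise ValueError("annual_volume_m3 must be positive")
    annualized_capex = capex * capital_recovery_factor(rate, years)
    return (annualized_capex + annual_opex) / annual_volume_m3


if __name__ == "__main__":
    # Hypothetical inputs, not taken from any study cited in this review.
    cost = levelized_cost_of_water(
        capex=5_000_000, annual_opex=400_000,
        annual_volume_m3=1_000_000, rate=0.05, years=30,
    )
    print(f"Levelized cost: {cost:.2f} per m3")
```

Expressing the result per cubic metre makes it directly comparable with the source-to-tap costs discussed for each non-traditional source.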

Cost-benefit analysis (CBA)

Simply, CBA is a systematic approach to weighing the benefits of a process or policy, such as benefits to the environment, against its costs. Unlike TEA, CBA can include benefits that are intangible or nonmonetary. In recent years, externalities or environmental benefits have been given a “shadow price” to assign a monetary value to aspects that have no market value. A simple net profit equation can then be used to calculate the difference between the priced benefits and the costs, such as \({NP}=\sum {B}_{{\rm{i}}}-\sum {C}_{{\rm{i}}}\), where NP is the net profit, B_i is the benefit value of item i, and C_i is the cost of item i, as outlined by Molinos-Senante et al. (2010) for wastewater treatment14. As with TEA, a series of costs and values can be established for various processes, treatments, or pollutants, and a CBA can be conducted and compared across different layouts or facilities. CBA is a useful tool in the decision-making process, particularly in adopting non-traditional water sources. The Harvard Business School outlines the steps and necessary approaches for CBA online at https://online.hbs.edu/blog/post/cost-benefit-analysis.
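The net profit equation above can be sketched in a few lines of Python. The item names and monetary values below are hypothetical placeholders (including the shadow-priced environmental benefit) and are not drawn from Molinos-Senante et al.; the sketch only shows how priced benefits and costs are summed and differenced.

```python
from typing import Mapping


def net_profit(benefits: Mapping[str, float], costs: Mapping[str, float]) -> float:
    """NP = sum of benefit values B_i minus sum of cost values C_i."""
    return sum(benefits.values()) - sum(costs.values())


if __name__ == "__main__":
    # Hypothetical annual values in a common currency unit.
    benefits = {
        "water_sales": 120_000.0,
        "avoided_nutrient_discharge_shadow_price": 45_000.0,
    }
    costs = {
        "energy": 60_000.0,
        "chemicals": 20_000.0,
        "maintenance": 30_000.0,
    }
    print(f"Net profit: {net_profit(benefits, costs):.0f}")
```

A positive result indicates that the priced benefits outweigh the costs for the scenario considered; repeating the calculation for alternative layouts allows a side-by-side comparison.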

Life cycle analysis (LCA) and life cycle cost (LCC)

LCA and LCC analysis are two additional tools for quantifying the costs and impacts of a system. LCA is a combination of the “inputs, outputs, and potential environmental impacts of a product system throughout its life cycle,” also referred to as its impacts from “cradle to grave.” Hellweg and Milà i Canals15 outlined LCA in four steps: (1) defining the goal and scope including the system boundaries such as resource extraction to end-of-life disposal of materials; (2) inventory analysis to compile all inputs, resources, and emissions; (3) impact assessment, or categorizing and converting impacts/emissions to a common unit such as CO2eq; and (4) interpretation of results, such as finding that a proposed water treatment technology has higher environmental impacts than the current system in place15. Therefore, LCA is useful for assessing carbon footprint and emissions associated with non-traditional water sources. LCC extends the framework of LCA, which assesses the total impact of a system, to the costs associated with a system. LCC accounts for “all costs of acquiring, owning, and disposing of a building or building system.”16 One area of uncertainty and challenge in utilizing TEA, LCC, CBA, and LCA is a lack of a strict framework or methodology, resulting in models and approaches that are specific to individual case studies. There are many LCA tools and software available online, such as the open source Open LCA: https://www.openlca.org/.

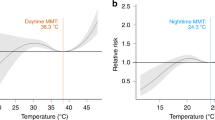

Quantitative microbial risk assessment (QMRA)

One of the most useful quantitative tools and frameworks for estimating health risk is the quantitative microbial risk assessment (QMRA). QMRA is a framework outlined by the National Academy of Sciences and often utilized by the U.S. EPA for evaluating microbial health risks of drinking water and water supply systems17. The framework is comprised of five main components: hazard identification, exposure assessment, dose–response assessment, risk characterization, and risk management. First, a particular pathogen or toxin is identified as the hazard of concern for a modeled scenario of interest. Next, a specific exposure pathway and scenario is defined and modeled, such as drinking untreated water or eating produce which has been irrigated with recycled wastewater. This exposure assessment uses a quantitative model and/or behavioral data to estimate a dose of the pathogen one may be exposed to during a given scenario event. Then, a best-established dose-response model is utilized to calculate a probability of a response (such as illness or death) due to the range of possible exposed doses. Clinical dose-response data is useful in this endeavor and fitted to a model. Finally, the total risk of response is estimated, quantifying a daily, annual, or otherwise risk based on all inputs to provide the best course of action (risk management) going forward. QMRA is often utilized by the US EPA to establish or improve regulatory measures and monitoring practices. The central online resource to find target pathogens and respective dose-response data for QMRA is the QMRA wiki site https://qmrawiki.org/.
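The dose-response and risk characterization steps can be sketched as follows, assuming the commonly used exponential dose-response model, P = 1 − exp(−k·d), and assuming independent exposure events when converting a per-event risk to an annual risk. The parameter k and the dose used in the example are hypothetical placeholders rather than values for any real pathogen; fitted parameters should be taken from sources such as the QMRA wiki.

```python
import math


def exponential_dose_response(dose: float, k: float) -> float:
    """Probability of response for a given dose under the exponential model."""
    if dose < 0 or k < 0:
        raise ValueError("dose and k must be non-negative")
    return 1.0 - math.exp(-k * dose)


def annual_risk(per_event_risk: float, events_per_year: int) -> float:
    """Risk of at least one response in a year, assuming independent events."""
    if not 0.0 <= per_event_risk <= 1.0:
        raise ValueError("per_event_risk must be a probability")
    return 1.0 - (1.0 - per_event_risk) ** events_per_year


if __name__ == "__main__":
    # Hypothetical inputs: k and the per-event dose are illustrative only.
    p_event = exponential_dose_response(dose=0.5, k=0.01)
    p_year = annual_risk(p_event, events_per_year=365)
    print(f"Per-event risk: {p_event:.2e}, annual risk: {p_year:.2e}")
```

In practice the dose is usually treated as a distribution rather than a single value, and the calculation is repeated in a Monte Carlo simulation to obtain a range of risk estimates.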

Rainwater

Rooftop-harvested rainwater

Rooftop-harvested rainwater (RHRW) is defined as rainwater collected from the runoff of building rooftops and stored in engineered structures such as a rain tank or an underground cistern18,19. In comparison with rainwater that falls on the ground that can collect pollutants from the road, RHRW has relatively fewer contaminants and may serve as a good source of supplemental water for existing supplies18,20,21. In regions where rainfalls are plentiful, RHRW is a well-established water supply system with mandatory installation in countries such as Spain and Belgium22. The U.S. Virgin Islands has legal precedents in place, with building codes stating that buildings must consist of a “self-sustaining water supply system” such as a well or rainwater collection area and cistern (V.I. Code tit. 29, § 308). In addition, drier countries such as Australia have utilized RHRW in part due to increased environmental awareness and mandatory water restrictions in urban areas23. South Africa has utilized RHRW for generations, and tens of thousands of households use rainwater as their main water source24. Most RHRW is used for domestic purposes by individual households or apartment buildings, namely showering, toilet flushing, clothes washing, and outdoor watering (Fig. 3). One of the main benefits of installation of RHRW systems is the reduced dependence on centralized water supplies. A secondary benefit of RHRW systems is to reduce the peak of the hydrograph during major storm events, thus, reducing stormwater runoff and surface water contamination24.

The water quality of RHRW varies with system design, level of treatment, and local factors such as climatic conditions and regulation. Several studies have linked RHRW use with disease outbreaks and health risks for both drinking and household use25,26,27. A suite of pathogens has been identified in RHRW, with origins of dry deposition, wet deposition, and wildlife19. Rainwater may contain E. coli, Legionella spp., Salmonella spp., Mycobacterium avium, and Giardia, according to limited investigations on water quality25,28,29. Formation of natural biofilm and regrowth of bacteria in the rain tank are also major water quality concerns of RHRW30,31.

RHRW has the potential to act as a potable source of water, however further levels of treatment are generally required to ensure that the supply meets potable quality standards, as determined by a review of recent developments in RHRW technology and management practices32. For example, a study by Fuentes-Galván et al.33 in Guanajuato, Mexico, concluded that further treatment was required before consumption. Keithley et al.34 found activated carbon filtration followed by chlorination produced high quality potable water. Therefore, on-site testing and maintenance of RHRW collection and storage systems is suggested before recommending strategies for safe non-potable and potable water use or consumption.

Water quality and quantity estimation of RHRW

Quantitative models are useful for RHRW design and implementation. The design criteria are a balance of the quantity of RHRW with water demand. A balance of these two variables results in optimal storage and design recommendations for an RHRW system. Two possible approaches for this objective are based on empirical observations35,36 or stochastic rainfall analysis37,38. Water demand and use dynamics are highly variable and more difficult to accurately capture and model. Socioeconomic factors have a large impact on water use, even in similar areas or regions, as the demand and use can vary at the household level. Studies have been conducted to model water use and associated RHRW design based on empirical data and system configurations39,40. However, predicting water demand at the household level requires further research, especially on the effects of various socioeconomic factors on water use and perceptions surrounding water quality and system maintenance. Mathematical models have also been implemented in water quantity analysis for the purposes of analyzing design and operational costs and optimal configurations40,41. Morales-Pinzón et al.41 compared the deployment of three economic and environmental models, Plugrisost, AquaCycle, and RainCycle, for analysis of RHRW systems, and found that the urban scale being modeled (such as residential scale or neighborhood scale) is a critical factor. While RHRW is a relatively simple technological system, its implementation and quantity challenges rely on understanding of local water use and rainfall dynamics, socioeconomic factors, and a balance between supply and demand for optimal system design41. Accounting for the cost, footprint, and carbon emissions of constructing such systems has also been modeled and estimated.
Hofman-Caris et al.42 modeled six scenarios of rainwater collection with various treatment methods specifically for potable use in the Netherlands and found impacts of 0.002–0.004 kg CO2eq m−3, as compared with around 1.16 kg CO2eq m−3 for a centralized, traditional water supply. Non-potable systems that do not implement treatment methods such as reverse osmosis or UV disinfection would inherently have even smaller carbon footprints to operate, which is the case for many countries.
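The balance between harvested quantity and demand can be illustrated with a simple daily tank water balance. The sketch below assumes a yield-after-spillage rule (inflow is added first, overflow is spilled, then demand is drawn) and a constant daily demand; it is a generic behavioral model and does not reproduce Plugrisost, AquaCycle, or RainCycle. The roof area, runoff coefficient, tank capacity, and rainfall series are hypothetical.

```python
from typing import Sequence


def simulate_tank_reliability(
    daily_rain_mm: Sequence[float],
    roof_area_m2: float,
    runoff_coeff: float,
    capacity_m3: float,
    daily_demand_m3: float,
) -> float:
    """Return the fraction of total demand met by the tank (0 to 1)."""
    storage = 0.0   # tank starts empty
    supplied = 0.0
    for rain_mm in daily_rain_mm:
        # Convert rainfall depth (mm) over the roof to a volume (m3).
        inflow = rain_mm / 1000.0 * roof_area_m2 * runoff_coeff
        # Add inflow; anything above capacity is spilled.
        storage = min(capacity_m3, storage + inflow)
        # Draw as much of the daily demand as the tank can provide.
        draw = min(daily_demand_m3, storage)
        storage -= draw
        supplied += draw
    total_demand = daily_demand_m3 * len(daily_rain_mm)
    return supplied / total_demand if total_demand > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical one-week rainfall record in mm per day.
    rain = [12.0, 0.0, 0.0, 3.5, 0.0, 0.0, 20.0]
    reliability = simulate_tank_reliability(
        rain, roof_area_m2=100.0, runoff_coeff=0.8,
        capacity_m3=2.0, daily_demand_m3=0.3,
    )
    print(f"Volumetric reliability: {reliability:.2f}")
```

Running the simulation over a range of tank capacities with a long rainfall record gives a reliability curve from which a storage size can be selected for a target level of demand coverage.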

Surveys and case studies that accommodate water analysis and modelling efforts can play a key role in identifying the impacts of location-specific and socioeconomic factors. Collection of information and data regarding income, water use, perception of water quality, and attitude towards treatment technologies is suggested and has proven successful. Such efforts were performed in Pakistan for RHRW, finding that the residents, especially the women, believed they could benefit from RHRW systems to improve their lives, but supported government subsidization as the income levels were generally low43. Surveys in the U.S. Virgin Islands after disastrous hurricanes in 2017 revealed that access to clean water was more limited for lower income groups. Higher income groups used bottled water mostly to replace RHRW during this time of crisis, and there was a disparity in the local perception of water safety that is divided by income group. However, all groups felt that the government should have intervened further and provided better access to clean water during this time44. These analyses, one in a water-stressed country and one in a tropical region, both demonstrated the benefits and acceptance of RHRW, but highlight the impacts of socioeconomics and local perception on their water use and access. Since RHRW is a non-traditional water source used at the single household level, socioeconomics and public acceptance are critical factors regarding its use and implementation.

In terms of water quality, QMRA is a useful tool for understanding health risk of RHRW. One example would be quantifying the annual risk of infection by either Legionella or Mycobacterium avium complex while showering using RHRW44,45. Based on the available data and probabilistic results, risk management strategies can be recommended to reduce or mitigate future health risk. Many QMRA studies have been conducted on RHRW as a water supply for consumption26, gardening46, showering47, and toilet flushing and faucet use48. QMRA was also carried out for environmental exposures such as at a water park setting49. As such, the forefront of models for quantifying quality and health risk for RHRW are based on potable consumption and non-potable exposure through aerosolization50. Quantifying risk thresholds is the first critical step in recommending proper disinfection, maintenance strategies and water quality criteria for RHRW. Opportunistic pathogens in premise plumbing have been identified as a critical future research area. Pathogen levels are often difficult to predict and measure, especially when RHRW storage and treatment is variable and seldom monitored. Impacts due to seasonality, presence of animals, and extreme weather events can all impact pathogen level, and bacterial growth and regrowth even with treatment interventions44,51.

Harvested stormwater

Stormwater, or surface runoff collected from the ground and in storm drains, can become a non-traditional source of water with multiple benefits: (1) mitigating impacts to receiving surface water quality due to pollutants carried in stormwater runoff; (2) reducing risk of flooding in urban areas; and (3) increasing non-potable water supply when collected and managed appropriately52. Therefore, stormwater harvesting has gained traction from an integrated urban water management perspective in recent years. Without duplicating previous reviews on the benefits of stormwater harvesting for surface water quality protection and flood mitigation52,53,54, this review will focus on models used to determine the suitability of harvested stormwater as a non-traditional water supply.

Stormwater harvesting (SWH) is similar to RHRW; the distinguishing factor is that RHRW is rainfall collected only from rooftops, whereas stormwater is collected from drains, gutters, waterways, or engineered permeable infrastructure. SWH systems can comprise various methods for collection and conveyance, such as traditional drains and gutter systems or green infrastructure. A diagram illustrating integrated SWH in an urban setting is shown in Fig. 4. Green infrastructure includes constructed systems, defined as “the range of measures that use plant or soil systems, permeable pavement or other permeable surfaces or substrates for stormwater harvest and reuse, or landscaping to store, infiltrate, or evapotranspirate stormwater and reduce flows to sewer systems or to surface waters,” by the Water Infrastructure Improvement Act (H.R. 7279). Notably, common types of green infrastructure for stormwater harvesting are bioswales, biofilters, and permeable pavements.

The quality and quantity of the stormwater runoff is critical in designing infrastructure and in determining management strategies for stormwater non-potable reuse. Treatment is critical for reuse, and the type of treatment depends on the reuse application. Stormwater that is collected in urban settings can contain a variety of contaminants, with many origins such as rainfall, irrigation and agricultural runoff, and car washes. The potential pollution sources for stormwater runoff could then be from vehicle oil and fuel55, organic matter56, pesticides and fertilizers57, heavy metals58, and pathogens59. While SWH is useful in diverting these streams to prevent surface water contamination, water quality becomes the main concern when targeting stormwater for reuse and environmental applications. Some methods of stormwater capture such as biofiltration provide treatment of the stormwater but quantitative modeling should be done on a case-by-case basis to evaluate treatment needs and to determine the best method for removing pollutants and pathogens from the stormwater.

Quantity and quality estimation of harvested stormwater

Modeling for harvested stormwater can be conducted for simulating stormwater and hydrological movement for a watershed, or for predicting pollutants and quality metrics. Much like modeling for RHRW, the quantity modeling is highly dependent on rainfall patterns. However, when not using rain tanks, the subsequent runoff of stormwater must be modeled hydrologically. Many models for urban stormwater have been established in the field of hydrology, including the Storm Water Management Model (SWMM) (https://www.epa.gov/water-research/storm-water-management-model-swmm), and HEC-HMS (https://www.hec.usace.army.mil/software/hec-hms/), and are reviewed by Zoppou (2001)60. Few approaches focused on modeling the capture and storage of the stormwater for use as a non-traditional water source. For example, MUSIC (Model for Urban Stormwater Improvement Conceptualization) developed by Fletcher et al.61 was used to model various scenarios of implementing SWH in urban settings. Their results showed that urbanization and pervious land coverage impact stormwater flow and runoff quality, and implementation of SWH and reuse regimes can address these impacts.

In addition to its benefits as an alternative water resource, stormwater capture often utilizes “green infrastructure.” As urban sustainability becomes more focused on carbon emissions and footprint, green infrastructure can provide carbon offsets in the form of carbon sequestration. An LCA by Kavehei et al.62 compared across the literature the carbon sequestration potential of various stormwater infrastructures. They found that rain gardens had the smallest net carbon footprint (carbon footprint minus carbon sequestration) at −12.6 kg CO2eq m−2 followed by bioretention basins, stormwater ponds, and vegetated swales, at 28.7, 108.9, 10.5 kg CO2eq m−2 over a 30-year life, respectively. However, they did not account for the net footprint per volume of water treated or captured in this study.

Benefits of SWH for surface water quality improvements were quantified recently by Zhang et al.63 using a sensitivity analysis. Results showed clear benefits in pollution reduction. A simulated runoff model using a stormwater tank with real-time control for capture and storage on a college campus64 highlighted the benefits of SWH for water supply and flood risk reduction based on rainfall event prediction, precipitation, and tank size simulation.

As with RHRW, SWH quality for reuse has been approached based on modeling human health risk from exposure. Ma et al.65 analyzed SWH pollutants based on hazard indices for drinking and swimming to create a hierarchy of hazard control for stormwater management. Murphy et al.66 followed QMRA methodology to establish risk benchmarks for various stormwater harvesting scenarios and consumer uses and found that current (as of 2017) guidelines were inadequate for mitigating risk of Campylobacter. Risk-based QMRA was used by Schoen et al.67 to find targets for reduction of various pathogens in water sources, including stormwater for domestic use. These targets provide clear recommendations and standards for microbial risk and act as guidelines for disinfection and treatment of harvested stormwater for non-potable reuse.

Water quality, health risk, and quantity modeling of SWH is regional and scenario specific and is difficult to model for general cases. QMRA and risk-based modeling are critical for establishing treatment methods, guidelines, and regulations for SWH non-potable reuse. Hydrologic modeling and rainfall simulation play an important role in designing catchment and storage size requirements for SWH, and scenario models have shown that SWH systems are effective in reducing flood risk and improving surface water quality when using systems such as biofilters and bioswales to mimic the natural treatments.

Municipal wastewater

Reclaimed municipal wastewater

Reclaimed, or recycled, municipal wastewater (or sewage) is becoming an increasingly popular source of both non-potable and potable water. Partially treated wastewater from a sewage treatment facility that is normally discharged into the ocean, lakes, or rivers can be further treated for non-potable uses. Reclaimed non-potable reuse water is most often piped separately and used for irrigation and agriculture and other municipal purposes68. Non-potable reuse is especially valuable in arid and semi-arid regions where rainfall is less common, and recycled water for irrigation and agriculture can reduce the demands on the conventional water supply. Water production for non-potable reuse only requires additional disinfection processes beyond traditional wastewater treatment for surface discharge. Potable reuse of wastewater requires additional advanced treatments to meet potable drinking water standards. The finished water is often used to recharge groundwater aquifers or supplement surface drinking water reservoirs, which is referred to as indirect potable reuse. Direct potable reuse of advanced treated wastewater is less common, but is under consideration in highly water-stressed regions (e.g., California). So far, most of the wastewater reclamation plants in the U.S. are large, centralized municipal wastewater treatment utilities. The production of water for potable purposes often employs processes of biological treatment, microfiltration, ultrafiltration, reverse osmosis, UV disinfection, and advanced oxidation69. Therefore, it is much more costly in comparison with non-potable water reuse. Large-scale wastewater reuse is still emerging, often hindered by complex social and economic factors and management practices70. The most noteworthy successful implementations of wastewater reuse worldwide are in the United States71, Israel72, Singapore73, Australia74, and Namibia75.

One example of successful municipal wastewater reuse is the Groundwater Replenishment System (GWRS) in Southern California. The purified reclaimed wastewater is infiltrated into local groundwater aquifers, which increases water storage and improves public perception of the treated water by blending it with natural groundwater before it is withdrawn for drinking water treatment76. The GWRS reduces the need for water imported to the local area, which was shown to cost more than water produced at the GWRS. In this case, community involvement and transparency about the treatment process and costs, together with demonstration of the good quality of the produced water, led to a successful addition to the local water portfolio. The GWRS is nevertheless costly, as it requires expensive treatment processes, piping, pumping, and regulated pathogen log-removal. As defined in the State of California under Title 22, full advanced treatment is the treatment of wastewater using a reverse osmosis (RO) and an oxidation treatment process. The high cost of water purification therefore limits the broader implementation of wastewater as a non-traditional source of water supply in lower-income regions outside California. Moreover, the application of reclaimed wastewater for domestic use can come with negative perception, often referred to as the “yuck factor.” Duong and Saphores76 explored this qualitative obstacle and found that it is one of the main reasons why purified wastewater is often not directly used to supplement drinking water supplies. Addressing this factor requires public outreach efforts to build public acceptance.

Wastewater treatment plants have been identified as hotspots for the enrichment of antibiotic resistance and the transmission of antibiotic resistant bacteria (ARB) and antibiotic resistance genes (ARG) into the environment77. Reuse of treated wastewater to flush toilets and to irrigate parks, golf courses, and agricultural land may expose humans directly to ARB and ARG78. There is concern that reclaimed wastewater could facilitate the spread of ARB and ARG and pose a threat to human health. Several studies have begun to look into ARB in reclaimed water and distribution systems79,80, but further exploration is needed to establish better monitoring and a better understanding of the magnitude of the ARB and ARG challenge in water reuse applications81,82.

At the individual building level, sewage can also be recycled and used for toilet flushing. This design concept for “green buildings” has been around for decades, for example in Japanese cities83. Large office buildings, skyscrapers, or apartment complexes with an onsite wastewater treatment system can treat and recycle wastewater, and separately pipe and distribute it back through the building. Given the treatment technology required and the nature of municipal wastewater, the main challenges in deciding to adopt this non-traditional water source are the cost of implementing treatment and public perception of the water quality and health risks associated with wastewater. Therefore, a combination of quantitative modeling and public outreach is critical to increasing the capacity and utilization of reclaimed wastewater as a water source.

Quantity and quality estimation for reclaimed wastewater

Many of the challenges associated with reclaimed wastewater involve the impacts of residual wastewater constituents on human health either by direct exposure or indirectly through consuming food products irrigated by recycled water, or contamination of groundwater supply. Thus, quantitative modeling of reclaimed wastewater has tended to focus on economic analysis, risk assessment, and fate and transport models.

Human exposure to wastewater that is reclaimed and reused for irrigation of agriculture can be through direct (inhalation or ingestion near an irrigation source) or indirect exposure (consuming irrigated produce). QMRA has been applied to wastewater reuse for inhalation risk, due to pathogens such as Legionella, which has been shown to experience regrowth in distribution networks and in biofilms84. For consumption, Shahriar et al.85 modeled the fate of various pharmaceuticals in reclaimed wastewater irrigated crops based on biodegradation of the organic compounds in the soil, uptake by crops, and bio-transfer from the plant (alfalfa) to cattle. Based on this fate, a risk assessment was conducted to quantify the human exposure via consumption of the cattle. There have been other similar models including direct consumption of reclaimed wastewater irrigated lettuce86, of irrigated rice paddy87, and of kale, coriander, and spinach88. Various combinations of transport models and Monte Carlo methods of risk assessment were deployed for the studies, which is in line with the assessments conducted for other water supplies.
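As an illustration of how a Monte Carlo QMRA of the kind described above is commonly structured, the following minimal Python sketch samples an exposure dose, applies a dose-response function, and aggregates per-event risk into an annual risk. It is not the model of any cited study. The exponential dose-response form is one widely used option, and every numerical input (the lognormal concentration parameters, the ingested volume range, the dose-response parameter `k`, and the number of exposure events per year) is a hypothetical placeholder chosen only to make the sketch run.

```python
import math
import random


def exponential_dose_response(dose, k):
    """Probability of infection for a given dose: P = 1 - exp(-k * dose)."""
    return 1.0 - math.exp(-k * dose)


def simulate_annual_risk(n_trials=10_000, events_per_year=50, k=0.05, seed=0):
    """Monte Carlo estimate of annual infection risk (all inputs hypothetical)."""
    rng = random.Random(seed)
    annual_risks = []
    for _ in range(n_trials):
        # Hypothetical pathogen concentration in the reused water (organisms per litre).
        concentration = rng.lognormvariate(0.0, 1.0)
        # Hypothetical volume ingested or inhaled per exposure event (litres).
        volume = rng.uniform(1e-4, 1e-3)
        dose = concentration * volume
        p_event = exponential_dose_response(dose, k)
        # Independent exposure events combine as 1 - (1 - p)^n.
        annual_risks.append(1.0 - (1.0 - p_event) ** events_per_year)
    annual_risks.sort()
    return {
        "median": annual_risks[n_trials // 2],
        "p95": annual_risks[int(0.95 * n_trials)],
    }


if __name__ == "__main__":
    print(simulate_annual_risk())
```

Because the dose is the product of concentration and exposure volume, the output distribution in a sketch like this is driven largely by the assumed pathogen concentration, which is why source water quality data are a critical input to such assessments.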

The tradeoffs of implementing wastewater reuse are most often quantified through cost-benefit analysis (CBA). Regional cost, reuse standards, and climatic factors play important roles in determining the outcomes of such operations and are necessary data inputs for CBA modeling. For example, semi-arid regions with less rainfall may benefit differently from reclaiming wastewater for irrigation than an area where rainfall is plentiful year-round. Specific case studies of CBA for wastewater reuse are in Italy89, Beijing90, Spain91, and the semi-arid regions of the Mediterranean92. These models are process-based and data-driven, as opposed to the more probabilistic methods of risk assessment in this area.

Greywater reuse

Greywater, a sub-portion of municipal wastewater, is defined and characterized differently around the world. Generally, it is defined as wastewater from all non-toilet plumbing fixtures in the home, including kitchen, bath, and laundry wastewater93. In some cases, dishwasher, kitchen sink, and laundry wastewater are excluded from greywater classification because wastewater from these sources generally has a higher pollutant load than greywater from bathing and hand washing94. In comparison with municipal sewage discussed in the previous section, greywater collection requires dual plumbing to separate the wastewater streams, which are generally installed at the household and single-building scale. Blackwater, which includes but is not limited to toilet water, is generally piped by sewer lines to centralized municipal wastewater treatment plants, while greywater is harvested and treated on-site for reuse95. Greywater classification, treatment requirements and standards, and separation from blackwater are highly dependent on local policy and laws.

Greywater recycling and reuse represents a significant opportunity for water savings for a domestic residence and follows the same basic principle and paradigm as reclaimed wastewater. Unlike the large-scale municipal wastewater reuse approach, greywater reuse is decentralized and more like RHRW in design and implementation. The decentralized and on-site approach to greywater reuse has been referred to as a “closed-loop concept.”94 The most common reuse purposes of greywater are replacing potable water for irrigation and toilet flushing in the household. Widespread greywater reuse towards toilet flushing in urban households and multi-story buildings can achieve a reduction of up to 10–25% of urban water demand96.

Greywater treatment technologies vary in performance and complexity and may include direct reuse such as diversion for toilet flushing, or treatment by physical, chemical, or biological processes for short term storage. Filtration and disinfection are commonly employed on-site treatments. For filtration, sand or membrane filters are often used, and disinfection is achieved using chlorine tablets or ultraviolet (UV) light. Biological treatment such as anaerobic sludge blankets97, sequencing batch reactors98, and membrane bioreactors99 are also implemented in some cases. Greywater can be diverted and drained to outdoor irrigation systems after filtration, and some systems divert the greywater to a constructed wetland for additional treatment before disinfection100.

When used for irrigation, some larger size pathogens (e.g., helminths) are of less concern since they are easily filtered out through soil infiltration. However, bacteria and viruses are more problematic. For example, E. coli, Salmonella, Shigella, Legionella, and enteric viruses have been found in greywater sources and irrigated soils101, and Legionella can be spread by aerosolization through spray irrigation45. Further research on greywater pathogen monitoring and health risks is recommended for advancing and improving its utilization and implementation as a water supply. Greywater as a water supply can significantly alleviate household water demands on traditional sources and provide a sustainable water management option.

Quantity and quality estimation for greywater reuse

The quality concerns with reusing greywater are similar to those for other alternative water supplies, so modeling efforts follow the same principles. For health concerns related to irrigation, priority is given to quantifying the health risks of consuming fresh produce that has been irrigated with greywater41 and of human exposure to greywater that becomes airborne from irrigation sprinklers67,102,103 or toilet flushing104. While these models have many exposure parameters, such as physical transport and exposure distance and time, the most sensitive parameter is most often the number of pathogens consumed or inhaled, and therefore the number of pathogens in the water source. The quality of the recycled greywater and the type and thoroughness of treatment are thus critical in minimizing risk.

The primary metrics for quantitative analyses are cost, energy requirements, and the water supply-and-demand relationship. Studies using LCA and LCC models have quantified the requirements and tradeoffs of greywater reuse against demand for basic water use activities in households105, airports106, and schools104. Results demonstrate the benefits of utilizing greywater production to alleviate some domestic water demands to provide both water and financial savings.

Economic analysis is valuable for quantifying the cost of investment in a greywater reuse system. Cost is an important metric for stakeholders and investors particularly in the urban sector, such as for multi-story residential buildings where the systems cover many units and residents and would therefore be more expensive due to larger flows and distribution needs. Friedler & Hadari96 performed a CBA on such a scenario with estimations for capital investment and operation and maintenance costs, as well as annual savings (or benefit) of reusing greywater to reduce water demand. Their model found that a rotating biological contactor proved to be economically feasible for a building of 28 or more stories, versus a membrane system requiring 37 stories. This type of economic analysis is typical for estimating costs and potential savings of water supply systems and depends on capacity, energy requirements, treatment train, and process specifications such as chemical additions, and local subsidies, incentives, and interest rates. While costs and benefits differ by location and system, one of the takeaways by Rodríguez et al.107 was that socioeconomic factors, feelings of improved quality of life, and a better understanding of societal roles should be considered when quantifying impacts and decision-making around sustainability, water savings, and ecological systems.
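The break-even building size reported in such a CBA can be understood through a simple net present value (NPV) calculation. The Python sketch below is a minimal illustration under stated assumptions and is not the model of Friedler & Hadari: it assumes a capital cost with a fixed part and a per-unit part, a constant net annual saving per unit (benefit minus operation and maintenance), and a constant discount rate. The function names and all parameter values are hypothetical.

```python
def net_present_value(capital_cost, annual_benefit, annual_om_cost, discount_rate, years):
    """NPV of a reuse system: discounted net annual savings minus up-front capital."""
    npv = -capital_cost
    for year in range(1, years + 1):
        npv += (annual_benefit - annual_om_cost) / (1.0 + discount_rate) ** year
    return npv


def smallest_feasible_size(fixed_capital, capital_per_unit, net_saving_per_unit,
                           discount_rate, years, max_units=1000):
    """Smallest number of served units (e.g., flats) at which NPV is non-negative.

    Returns None if no size up to max_units breaks even.
    """
    for units in range(1, max_units + 1):
        capital = fixed_capital + capital_per_unit * units
        npv = net_present_value(capital, net_saving_per_unit * units, 0.0,
                                discount_rate, years)
        if npv >= 0.0:
            return units
    return None


if __name__ == "__main__":
    # Hypothetical inputs: a costlier treatment train needs a larger building to pay off.
    print(smallest_feasible_size(10_000, 100, 50, 0.0, 10))
    print(smallest_feasible_size(20_000, 100, 50, 0.0, 10))
```

The fixed part of the capital cost is what produces a minimum feasible size: it is spread over more units as the building grows, so a more expensive treatment system breaks even only at a larger scale.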

Desalinated water

Desalinated water is brackish water or seawater from which the dissolved minerals, salts, and other contaminants are removed by purification processes. Brackish water is water with more salt than freshwater, but less than seawater. These waters are found where saltwater and freshwater mix, such as estuaries or in some groundwater aquifers. Typical salinity for seawater is around 35,000 ppm but can range between 10,000 and 50,000 ppm. Brackish water salinity covers a range of around 1000 ppm to 30,000 ppm, but is typically 1000 to 10,000 ppm. Reverse osmosis (RO) desalination has replaced traditional thermal-based technologies to dominate the world desalination market due to a significant reduction in energy demand108. Desalination is typically designed to produce purified potable water for drinking water supplies. The treatment infrastructure and energy cost for desalination are still higher than that of other non-traditional water resources109,110.

The use of seawater and brackish water as non-traditional sources of potable water has become an increasingly attractive and viable long-term solution for water scarcity, particularly in semi-arid and coastal regions. Over the past 30 years, significant advances have been made, including a twofold reduction in energy requirements for seawater RO (SWRO)8. The state-of-the-art for SWRO plant installation includes three major engineering processes: pretreatment, reverse osmosis, and post-treatment. A typical SWRO treatment train is illustrated in Fig. 5. Seawater desalination in general recovers ~50% of inflow as freshwater, discharging the other 50% with twice the salinity of seawater as reject brine. As brackish water salinity is much lower than seawater, the recovery is higher, up to 75–85%111.

Fig. 5. The diagram illustrates nearshore intake, pretreatment by filtration, the high-pressure reverse osmosis system, permeate post-treatment, and storage before distribution. The seawater is shown in dark blue and the desalinated water in light blue. The energy recovery from concentrated brine and brine discharge through ocean outfall are shown in gray. The arrows indicate the direction of water flow.
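The relation between recovery and brine salinity quoted above follows from a steady-state salt mass balance. The short derivation below is a sketch under the simplifying assumption that the permeate carries negligible salt; the symbols are introduced here for illustration: Q_f, Q_p, and Q_b are the feed, permeate, and brine flows, C_f, C_p, and C_b are the corresponding salinities, and R is the fractional recovery.

```latex
\begin{align}
Q_f C_f &= Q_p C_p + Q_b C_b, \qquad Q_p = R\,Q_f, \qquad Q_b = (1 - R)\,Q_f \\
C_b &= \frac{C_f - R\,C_p}{1 - R} \approx \frac{C_f}{1 - R} \quad (C_p \approx 0) \\
R = 0.5 &: \quad C_b \approx 2\,C_f \\
R = 0.75\text{--}0.85 &: \quad C_b \approx 4\,C_f \ \text{to}\ 6.7\,C_f
\end{align}
```

For seawater at about 35,000 ppm and 50% recovery, this gives a brine of roughly 70,000 ppm. At the higher recoveries typical of brackish water desalination, the brine is concentrated four- to nearly seven-fold relative to the feed, although from a much lower starting salinity.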

In addition to the energy concern of desalination, brine management is also a critical component of the desalination process. Brine is usually discharged back into the ocean as it is the least expensive option, but this raises concerns about impacts on marine life due to salinity, toxic substances, and temperature112. In addition to salinity and temperature differences, brine can also include chemicals from antiscalants, coagulants, and even heavy metals from corrosion113. Inland desalination facilities have the added challenge of brine management without the option of ocean discharge. Common methods of inland brine management in the United States include evaporation ponds, zero liquid discharge systems involving evaporators, crystallizers, and spray dryers, and deep well injection114. Some methods and approaches are beginning to utilize recovery of salts to offset the high total costs of desalination, in which brine disposal could account for 5–33%115.

Since seawater and brackish water are less contaminated than other alternative water sources, acute illnesses such as microbial infections, as well as CEC, are less of a concern. Ultrafiltration membranes and RO membranes with pore sizes down to 0.0001 μm have been shown to significantly remove pathogens, and it is suspected that even viruses are significantly reduced due to adsorption onto particles116. In 2011, the World Health Organization issued a report on the health aspects of desalinated water; its major points were a recommendation for virus inactivation and disinfection after primary (RO membrane) treatment, and a note on the challenge of maintaining microbial water quality during storage and distribution. Neither of these is unique to desalination; both are general challenges for treating and delivering potable drinking water in a traditional centralized manner117.

Water quality and quantity estimation for desalinated water

To address the concern of cost and energy requirements in desalination technologies, modeling efforts have focused on TEA with the aim of minimizing costs and comparing across designs. In addition, TEA can assess the use of renewable energy sources (e.g., wind power) or co-location of desalination with power plants, for reducing cost. The latter reduces the overall cost, since the warmer source seawater collected from power plant discharge usually requires less energy for membrane separation than using ambient temperature seawater8.

The most prevalent modeling software for cost and energy of desalination is the International Atomic Energy Agency’s Desalination Economic Evaluation Program (IAEA DEEP). The DEEP model can be utilized for different configurations and power supplies for desalination processes and has been updated regularly since its creation118. Another method of understanding cost and energy requirements for different desalination technologies used a cost database approach based on collating and correlating data from over 300 desalination plants worldwide119. As introduced in the "Techno-economic assessment (TEA)" section, WaterTAP3, created by the National Alliance for Water Innovation (NAWI), can be used with user-created processes, treatment train configurations, and water quality parameters (e.g., salinity or boron concentration) to assess the techno-economics of different options12. In addition, simulation models have been used to optimize specific treatment processes. Oh et al.120 simulated RO membrane performance based on solution-diffusion and fouling mechanisms to model permeate flux and recovery. Models such as these have been valuable in improving desalination performance over the last few decades, including enhancing boron rejection by membrane processes. A discussion on boron and other low-rejection ions in membrane desalination is presented in the Supplementary Information.

Applications of TEA have offered significant insights into desalination implementation. For example, Quon et al.121 conducted a baseline cost and energy analysis on several SWRO desalination plants and found that economy of scale plays a significant role in SWRO, with a levelized cost of around U.S. $1–1.35 m⁻³. Actual costs are highly variable, made apparent by the $1.61 m⁻³ cost of SWRO in Carlsbad, CA, USA versus the $0.53 m⁻³ cost of SWRO in Ashkelon, Israel, despite the two facilities being nearly identical in design.
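A levelized cost of water of the kind quoted above is commonly computed by annualizing the capital cost with a capital recovery factor and dividing total annual cost by annual production. The Python sketch below illustrates that general calculation only. It is not the method of Quon et al. or of any TEA tool named here, and all inputs in the usage example are hypothetical, chosen to show how financing terms alone can separate two plants with identical design and operating cost.

```python
def capital_recovery_factor(rate, years):
    """Fraction of capital repaid each year for a loan at `rate` over `years`."""
    if rate == 0:
        return 1.0 / years
    growth = (1.0 + rate) ** years
    return rate * growth / (growth - 1.0)


def levelized_cost_of_water(capex, annual_opex, annual_volume_m3, rate, years):
    """Levelized cost in currency units per cubic metre of product water."""
    annualized_capex = capex * capital_recovery_factor(rate, years)
    return (annualized_capex + annual_opex) / annual_volume_m3


if __name__ == "__main__":
    # Hypothetical plants: same design, capacity, and operating cost,
    # but different capital cost and financing rate.
    volume = 50_000_000  # m3 of product water per year (hypothetical)
    plant_a = levelized_cost_of_water(500e6, 30e6, volume, rate=0.08, years=30)
    plant_b = levelized_cost_of_water(250e6, 30e6, volume, rate=0.04, years=30)
    print(round(plant_a, 2), round(plant_b, 2))
```

In this form, local factors such as permitting, financing, and subsidies enter through the capital cost and the discount rate, while energy and consumables enter through the annual operating cost.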

The recognition of the impact of local factors to the cost and adoption of water technologies and supplies is of great significance for desalination. Economic analyses often lack the ability to properly capture externalities and local factors related to construction, permitting, financing, market regulations, and government subsidies, which have been identified as challenges of note in California122. The risks associated with these areas and the economic feasibility of weighing them against the predicted costs of the facility (modeled through TEA, for example) are lacking based on the current state of knowledge and demonstrations. For example, the study by Quon et al.121 suggested that future cost savings are most dependent on local factors and consistent plant operation; large RO seawater desalination plants with state-of-the-art technology have similar energy costs while total capital and operational costs vary. A similar conclusion was drawn by a TEA on thermal desalination by Zheng & Hatzell123, who stated that “we cannot ignore many other factors that can affect the siting selection, such as local government subsidies, transportation fee of facilities, local land prices.”

In addition, the sociopolitical challenges of desalinating waters have been reviewed and explored124. For example, studies have highlighted the disparities and vulnerabilities of border areas regarding water rights, namely at the Mexico-USA border125 and between Israel and Jordan126. On one hand, increased water security shared between countries and the collaborative process is achievable126 while on the other it may increase tensions125. Such factors that ultimately affect the timelines and amenability of desalination are difficult to include from a modelling and design perspective and require further qualitative study and location-specific dives into how they inevitably impact the costs and benefits of including desalination in water portfolios.

Condensate capture and atmospheric water harvesting

Condensate capture and atmospheric water harvesting (AWH) are additional methods to provide non-traditional water. Captured condensate is condensate water collected, generally from air conditioning cooling coils, rather than drained to sewer lines as is traditionally done. Much like RHRW and SWH, it therefore relies on diverting and storing a previously wasted source of freshwater, making it a generally untapped water source, particularly in hot, humid regions. Captured condensate can be used for non-potable uses such as toilet flushing, irrigation, and cooling tower make-up water127. AWH is the use of a device to extract water vapor directly from the air by condensation technology, adsorption-based technology, or cloud seeding and fog collection128,129. Condensation technology for AWH requires a power source for cooling in order to condense water vapor from the air into liquid. Adsorption technology can be designed to utilize day and night cycles, ambient temperatures, and solar heat for capturing and condensing vapor. Therefore, it is less energy intensive, but the yield of water harvested is less than with condensation technology128. Cloud seeding is a form of weather modification to induce and collect rain, but it works only where water-abundant clouds have gathered, so it is difficult to perform in a routine and predictable manner. Fog collection is simply the capture of droplets on mesh-like material perpendicular to fog and wind. It has demonstrated a water production ability of up to 3–7 kg day⁻¹ m⁻² but is best utilized at high elevations where fog and wind are regular130,131.

Water quality and quantity estimation for captured condensate and AWH

Both sources of water are promising for alleviating water stresses on traditional sources, but research on their quantitative modeling and design is sparser than for the other sources outlined earlier. Currently, modeling has focused on estimating the theoretical yield (water quantity) of condensate based on thermodynamic principles and climate conditions132,133. Regions around the world identified as having high potential for implementing condensate collection are the Arabian Peninsula, Sub-Saharan Africa, South Asia, and the Southeastern United States132,134. Hassan and Bakry133 found that for 1 ton of refrigerant, the condensate recovery for a year of operation and typical weather conditions was highest in Singapore with 35.33 m³, followed by 30.69 m³ in Kuala Lumpur, Malaysia. Captured condensate as an onsite water supply offsets conventional water demand, similar to onsite greywater reuse, thus reducing the overall demand and footprint associated with potable water treatment. Khan135 estimated a reduction of 0.54 kg CO2eq per kWh used for pumping of the conventional water supply associated with the implementation of captured condensate in residential buildings in Dubai, UAE. By comparison, atmospheric water harvesting was estimated to have a reduction of 0.3–0.35 kg CO2eq per kWh based on the average footprints of traditional water sources in the United States and Middle East136. For water quality modeling, Loveless et al.137 conducted water quality testing on captured condensate systems throughout Saudi Arabia and found high quality, with all samples under the U.S. EPA recommended quality values. Based on their climate model and water quality findings, the authors suggested that industrial application of captured condensate could lead to cost savings and reduced impact on operations which already require highly pure water, and that simple post-treatment methods could make the collected water drinkable.
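To make the thermodynamic basis of such yield estimates concrete, the following Python sketch computes a theoretical condensate rate from the drop in humidity ratio as air passes over a cooling coil. It is a simplified illustration, not the model of the cited studies. It assumes sea-level pressure, uses a Magnus-type approximation for saturation vapor pressure, and assumes the air leaves the coil saturated at the coil temperature. The function names and the example operating conditions are hypothetical.

```python
import math

ATM_PRESSURE_PA = 101_325.0  # assumed sea-level pressure


def saturation_vapor_pressure_pa(temp_c):
    """Magnus-type approximation of saturation vapor pressure over water (Pa)."""
    return 610.94 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def humidity_ratio(temp_c, relative_humidity, pressure_pa=ATM_PRESSURE_PA):
    """Mass of water vapor per mass of dry air (kg/kg)."""
    vapor_pressure = relative_humidity * saturation_vapor_pressure_pa(temp_c)
    return 0.622 * vapor_pressure / (pressure_pa - vapor_pressure)


def condensate_rate_kg_per_h(air_temp_c, relative_humidity, coil_temp_c,
                             dry_air_flow_kg_per_h):
    """Theoretical condensate rate, assuming air exits saturated at coil temperature."""
    w_in = humidity_ratio(air_temp_c, relative_humidity)
    w_out = humidity_ratio(coil_temp_c, 1.0)
    # No condensate forms if the incoming air is drier than saturated coil air.
    return dry_air_flow_kg_per_h * max(0.0, w_in - w_out)


if __name__ == "__main__":
    # Hypothetical hot, humid condition versus a hot, dry one.
    print(condensate_rate_kg_per_h(30.0, 0.8, 12.0, 1000.0))
    print(condensate_rate_kg_per_h(30.0, 0.1, 12.0, 1000.0))
```

The contrast between the two example conditions reflects why the highest condensate potential is reported for hot, humid climates: at low relative humidity the incoming air holds less moisture than saturated air at the coil temperature, so the theoretical yield falls to zero.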

Summary of key areas for future research

In this paper, several non-traditional water sources were described and compared, with a focus on the methods and approaches in place for estimating their respective quantity and quality metrics for implementation and water management. Computer modeling and analytical tools serve to pinpoint and predict metrics regarding capacity, cost, energy, microbial quality, and health risk. As each non-traditional water source varies in water quality, operation, size, and treatment level, there are still key areas that require further research to improve their use and management. This applies at all levels of society and water management, from the household scale of water use to the planning of government policy and regulatory measures. Below are the key areas identified in this study for each of the water sources explored.

- Rooftop harvested rainwater: RHRW has highly variable water quality and microbial contamination concerns; therefore, a clear and more uniform policy on onsite maintenance and upkeep is needed in areas where RHRW is implemented or required.

- Stormwater harvesting: Due to the nature of stormwater and the variable effects of weather on its abundance and water quality, there are health concerns with utilizing it for reuse purposes. Further research is recommended on the impacts of weather on stormwater quality and on the health risks of human exposure and consumption when using it as a non-potable water source.

- Reclaimed municipal wastewater: In general, reclaimed municipal wastewater is a more reliable source in terms of quantity than RHRW and stormwater for the local community. It is mostly used for non-potable purposes, but recent advancements have demonstrated that direct potable reuse is possible. However, it is not readily accepted and there is a lack of policy for its implementation and regulation. Therefore, more research on potable reuse technologies in terms of cost and treatment capabilities is necessary, particularly in comparing RO with alternative treatment technologies. The health concerns with non-potable reuse due to aerosolization and the uncertainty around viral pathogens must be explored and compared to further develop an understanding of pathogen removal in wastewater reuse. In addition, the occurrence of ARB in wastewater treatment plants has created concerns about their spread, prevalence, and subsequent effects on human health through non-potable exposure. A lack of sufficient ARB and ARG prevalence data in reclaimed wastewater calls for future efforts to better characterize their concentrations, information on antibiotics, and a reassessment of treatment criteria and regulation for possible associated health risks. These health concerns apply to the variable water quality and pathogen levels in raw wastewater and in treated wastewater effluent that is used as a non-traditional and non-potable water source.

- Greywater reuse: The possible health risks and concerns of onsite greywater recycling, such as for toilet flushing and irrigation, continue to pose a hurdle for its more widespread implementation. Uniform treatment requirements and regulatory policies are recommended to facilitate broader implementation.

- Desalination: High costs and concerns about how to properly manage brine waste hinder its development and acceptance in the United States. More comparative research is recommended on the origins of cost discrepancies across desalination facilities around the world, including local costs and legal requirements. The effects of offshore brine discharge must continue to be studied, as well as other methods of brine waste processing and handling for inland desalination facilities.

- Condensate capture and AWH: These methods can be used to reduce the dependence on traditional, centralized water sources, but a better understanding of the quality requirements is suggested. The benefits are region-specific due to the pivotal impacts of temperature and weather, which should be well understood before any design and implementation. The design cost of post-treatment for potable water use is a key requirement and must be considered.

Data availability

All data are available upon request.

Code availability

This manuscript does not contain any custom code.

References

Schwabe, K., Nemati, M., Landry, C. & Zimmerman, G. Water markets in the Western United States: trends and opportunities. Water 12, 1–15 (2020).

van Aalst, M. K. The impacts of climate change on the risk of natural disasters. Disasters 30, 5–18 (2006).

Brown, R. R., Keath, N. & Wong, T. H. Urban water management in cities: historical, current and future regimes. Water Sci. Technol. 59, 847–855 (2009).

Arora, M., Malano, H., Davidson, B., Nelson, R. & George, B. Interactions between centralized and decentralized water systems in urban context: a review. WIREs Water 2, 623–634 (2015).

Rogers, P., Hall, A. W., van de Meene, S. J., Brown, R. R. & Farrelly, M. A. Effective Water Governance Global Water Partnership Technical Committee (TEC). Global Environ. Change https://doi.org/10.1016/j.gloenvcha.2011.04.003%5Cnwww.gwpforum.org (2003).

Environmental Protection Agency. Safe Drinking Water Information System (SDWIS) Federal Reporting Services. EPA. https://www.epa.gov/ground-water-and-drinking-water/safe-drinking-water-information-system-sdwis-federal-reporting. (2021).

Giammar, D. et al. National Alliance for Water Innovation (NAWI) municipal sector technology roadmap 2021. https://doi.org/10.2172/1782448 (2021).

NAWI. NAWI: Research and Projects. National Alliance for Water Innovation (NAWI). https://www.nawihub.org/research/#:~:text=Pipe%20parity%20means%20solutions%20and,viable%20for%20end%2Duse%20applications. (2020).

Schyns, J. F., Hamaideh, A., Hoekstra, A. Y., Mekonnen, M. M. & Schyns, M. Mitigating the risk of extreme water scarcity and dependency: The case of Jordan. Water 7, 5705–5730 (2015).

Liu, J. et al. Water scarcity assessments in the past, present, and future. Earth’s Future 5, 545–559 (2017).

Pandey, C. L. Managing urban water security: challenges and prospects in Nepal. Environ. Dev. Sustain. 23, 241–257 (2021).

Miara, A. et al. WaterTAP3 (The Water Technoeconomic Assessment Pipe-Parity Platform) [Computer software]. https://www.osti.gov//servlets/purl/1807472. https://doi.org/10.11578/dc.20210709.1 (2021).

Burk, C. Techno-economic modeling for new technology development. Chem. Eng. Prog. 114, 43–52 (2018).

Molinos-Senante, M., Hernández-Sancho, F. & Sala-Garrido, R. Economic feasibility study for wastewater treatment: a cost–benefit analysis. Sci. Tot. Environ. 408, 4396–4402 (2010).

Hellweg, S. & Milà i Canals, L. Emerging approaches, challenges and opportunities in life cycle assessment. Science 344, 1109–1113 (2014).

Fuller, S. Life-cycle cost analysis (LCCA). National Institute of Building Sciences, An Authoritative Source of Innovative Solutions for the Built Environment, 1090. https://archexamacademy.com/download/Building%20Design%20Construction%20Systems/Life-Cycle%20Cost%20Analysis.pdf (2010).

N. R. C. Risk Assessment in the Federal Government: Managing the Process. (National Research Council, Washington (DC), 1983).

Boers, T. M. & Ben-Asher, J. A review of rainwater harvesting. Agric. Water Manag. 5, 145–158 (1982).

Campisano, A. et al. Urban rainwater harvesting systems: research, implementation and future perspectives. Water Res. 115, 195–209 (2017).

Gurung, T. R. & Sharma, A. Communal rainwater tank systems design and economies of scale. J. Clean. Prod. 67, 26–36 (2014).

Imteaz, M. A., Ahsan, A., Naser, J. & Rahman, A. Reliability analysis of rainwater tanks in Melbourne using daily water balance model. Resour. Conserv. Recycl. 56, 80–86 (2011).

Domènech, L. & Saurí, D. A comparative appraisal of the use of rainwater harvesting in single and multi-family buildings of the Metropolitan Area of Barcelona (Spain): social experience, drinking water savings and economic costs. J. Clean. Prod. 19, 598–608 (2011).

Rahman, A., Keane, J. & Imteaz, M. A. Rainwater harvesting in Greater Sydney: water savings, reliability and economic benefits. Resour. Conserv. Recycl. 61, 16–21 (2012).

Mwenge Kahinda, J. & Taigbenu, A. E. Rainwater harvesting in South Africa: challenges and opportunities. Phys. Chem. Earth 36, 968–976 (2011).

Ahmed, W., Gardner, T. & Toze, S. Microbiological quality of roof-harvested rainwater and health risks: a review. J. Environ. Qual. 40, 13–21 (2011).

Dean, J. & Hunter, P. R. Risk of gastrointestinal illness associated with the consumption of rainwater: a systematic review. Environ. Sci. Technol. 46, 2501–2507 (2012).

Simmons, G. et al. A Legionnaires’ disease outbreak: a water blaster and roof-collected rainwater systems. Water Res. 42, 1449–1458 (2008).

Dobrowsky, P. H., de Kwaadsteniet, M., Cloete, T. E. & Khan, W. Distribution of indigenous bacterial pathogens and potential pathogens associated with roof-harvested rainwater. Appl. Environ. Microbiol. 80, 2307–2316 (2014).

Kaushik, R. & Balasubramanian, R. Assessment of bacterial pathogens in fresh rainwater and airborne particulate matter using real-time PCR. Atmos. Environ. 46, 131–139 (2012).

Hamilton, K. et al. A global review of the microbiological quality and potential health risks associated with roof-harvested rainwater tanks. npj Clean Water 2, 7 (2019).

Zhang, X., Xia, S., Ye, Y. & Wang, H. Opportunistic pathogens exhibit distinct growth dynamics in rainwater and tap water storage systems. Water Res. 204, 117581 (2021).

Alim, M. A. et al. Suitability of roof harvested rainwater for potential potable water production: a scoping review. J. Clean. Prod. 248, 119226 (2020).

Fuentes-Galván, M. L., Medel, J. O. & Arias Hernández, L. A. Roof rainwater harvesting in central Mexico: uses, benefits, and factors of adoption. Water 10, 116 (2018).

Keithley, S. E., Fakhreddine, S., Kinney, K. A. & Kirisits, M. J. Effect of treatment on the quality of harvested rainwater for residential systems. J. Am. Water Works Assoc. 110, 1–11 (2018).

Ghisi, E. Parameters influencing the sizing of rainwater tanks for use in houses. Water Resour. Manag. 24, 2381–2403 (2010).

Yeasmin, S. & Rahman, K. F. Potential of Rainwater Harvesting in Dhaka City: An Empirical Study. 3rd International Conference on Water & Flood Management 7, https://www.researchgate.net/publication/257934572_Potential_of_Rainwater_harvesting_in_Dhaka_city (2013).

Basinger, M., Montalto, F. & Lall, U. A rainwater harvesting system reliability model based on nonparametric stochastic rainfall generator. J. Hydrol. 392, 105–118 (2010).

Cowden, J. R., Watkins, D. W. & Mihelcic, J. R. Stochastic rainfall modeling in West Africa: Parsimonious approaches for domestic rainwater harvesting assessment. J. Hydrol. 361, 64–77 (2008).

Leonard, D. & Gato-Trinidad, S. Effect of rainwater harvesting on residential water use: empirical case study. J. Water Resour. Plan. Manag. – ASCE 147, 05021003 (2021).

Melville-Shreeve, P., Ward, S. & Butler, D. Rainwater harvesting typologies for UK houses: a multi criteria analysis of system configurations. Water https://doi.org/10.3390/w8040129 (2016).

Morales-Pinzón, T., Rieradevall, J., Gasol, C. M. & Gabarrell, X. Modelling for economic cost and environmental analysis of rainwater harvesting systems. J. Clean. Prod. 87, 613–626 (2015).

Hofman-Caris, R. et al. Rainwater harvesting for drinking water production: a sustainable and cost-effective solution in the Netherlands? Water 11, 511 (2019).

Abbas, S., Mahmood, M. J. & Yaseen, M. Assessing the potential for rooftop rainwater harvesting and its physio and socioeconomic impacts, Rawal watershed, Islamabad, Pakistan. Environ. Dev. Sustain. 23, 17942–17963 (2021).

Quon, H., Allaire, M. & Jiang, S. C. Assessing the risk of Legionella infection through showering with untreated rain cistern water in a tropical environment. Water 13, 889 (2021).

Hamilton, K. A., Ahmed, W., Toze, S. & Haas, C. N. Human health risks for Legionella and Mycobacterium avium complex (MAC) from potable and non-potable uses of roof-harvested rainwater. Water Res. 119, 288–303 (2017).

Lim, K. Y. & Jiang, S. C. Reevaluation of health risk benchmark for sustainable water practice through risk analysis of rooftop-harvested rainwater. Water Res. 47, 7273–7286 (2013).

Schoen, M. E. & Ashbolt, N. J. An in-premise model for Legionella exposure during showering events. Water Res. 45, 5826–5836 (2011).

Hamilton, K. A. et al. Risk-based critical concentrations of Legionella pneumophila for indoor residential water uses. Environ. Sci. Technol. 53, 4528–4541 (2019).

de Man, H. et al. Health risk assessment for splash parks that use rainwater as source water. Water Res. 54, 254–261 (2014).

Bollin, G. E., Plouffe, J. F., Para, M. F. & Hackman, B. Aerosols containing Legionella pneumophila generated by shower heads and hot-water faucets. Appl. Environ. Microbiol. 50, 1128–1131 (1985).

Ahmed, W., Hamilton, K. A., Toze, S. & Haas, C. N. Seasonal abundance of fecal indicators and opportunistic pathogens in roof-harvested rainwater tanks. Open Health Data https://doi.org/10.5334/ohd.29 (2018).

Philp, M. et al. Review of Stormwater Harvesting Practices. Urban Water Security Research Alliance Technical Report No. 9. Urban Water 9, 1–131 (2008).

Ahmed, W., Hamilton, K., Toze, S., Cook, S. & Page, D. A review on microbial contaminants in stormwater runoff and outfalls: potential health risks and mitigation strategies. Sci. Tot. Environ. 692, 1304–1321 (2019).

Akram, F., Rasul, M. G., Khan, M. M. K. & Amir, M. S. I. I. A review on stormwater harvesting and reuse. World Acad. Eng. Technol. 8, 178–187 (2014).

Hong, E., Seagren, E. A. & Davis, A. P. Sustainable oil and grease removal from synthetic stormwater runoff using bench-scale bioretention studies. Water Environ. Res. 78, 141–155 (2006).

McElmurry, S. P., Long, D. T. & Voice, T. C. Stormwater dissolved organic matter: Influence of land cover and environmental factors. Environ. Sci. Technol. 48, 45–53 (2014).

Chen, C., Guo, W. & Ngo, H. H. Pesticides in stormwater runoff—A mini review. Front. Environ. Sci. Eng. 13, 1–12 (2019).

Brown, J. N. & Peake, B. M. Sources of heavy metals and polycyclic aromatic hydrocarbons in urban stormwater runoff. Sci. Tot. Environ. 359, 145–155 (2006).

Sidhu, J. P. S., Hodgers, L., Ahmed, W., Chong, M. N. & Toze, S. Prevalence of human pathogens and indicators in stormwater runoff in Brisbane, Australia. Water Res. 46, 6652–6660 (2012).

Zoppou, C. Review of urban storm water models. Environ. Model. Softw. 16, 195–231 (2001).

Fletcher, T. D., Mitchell, V. G., Deletic, A., Ladson, T. R. & Séven, A. Is stormwater harvesting beneficial to urban waterway environmental flows? Water Sci. Technol. 55, 265–272 (2007).

Kavehei, E., Jenkins, G. A., Adame, M. F. & Lemckert, C. Carbon sequestration potential for mitigating the carbon footprint of green stormwater infrastructure. Renew. Sustain. Energy Rev. 94, 1179–1191 (2018).

Zhang, K., Bach, P. M., Mathios, J., Dotto, C. B. S. & Deletic, A. Quantifying the benefits of stormwater harvesting for pollution mitigation. Water Res. 171, 115395 (2020).

Parker, E. A., Grant, S. B., Sahin, A., Vrugt, J. A. & Brand, M. W. Can smart stormwater systems outsmart the weather? Stormwater capture with real-time control in Southern California. ACS ES&T Water. https://doi.org/10.1021/acsestwater.1c00173 (2021).

Ma, Y. et al. Creating a hierarchy of hazard control for urban stormwater management. Environ. Pollut. 255, 113217 (2019).

Murphy, H. M., Meng, Z., Henry, R., Deletic, A. & McCarthy, D. T. Current stormwater harvesting guidelines are inadequate for mitigating risk from Campylobacter during nonpotable reuse activities. Environ. Sci. Technol. 51, 12498–12507 (2017).

Schoen, M. E., Ashbolt, N. J., Jahne, M. A. & Garland, J. Risk-based enteric pathogen reduction targets for non-potable and direct potable use of roof runoff, stormwater, and greywater. Microb. Risk Anal. 5, 32–43 (2017).

Meneses, M., Pasqualino, J. C. & Castells, F. Environmental assessment of urban wastewater reuse: treatment alternatives and applications. Chemosphere 81, 266–272 (2010).

Tang, C. Y. et al. Potable water reuse through advanced membrane technology. Environ. Sci. Technol. 52, 10215–10223 (2018).

Mizyed, N. R. Challenges to treated wastewater reuse in arid and semi-arid areas. Environ. Sci. Policy 25, 186–195 (2013).

Rice, J., Wutich, A. & Westerhoff, P. Assessment of de facto wastewater reuse across the US: trends between 1980 and 2008. Environ. Sci. Technol. 47, 11099–11105 (2013).

Friedler, E. Water reuse—an integral part of water resources management: Israel as a case study. Water Policy 3, 29–39 (2001).

Tortajada, C. Water management in Singapore. Int. J. Water Resour. Dev. 22, 227–240 (2006).

Gude, V. G. Desalination and water reuse to address global water scarcity. Rev. Environ. Sci. Bio/Technol. 16, 591–609 (2017).

Lahnsteiner, J. & Lempert, G. Water management in Windhoek, Namibia. Water Sci. Technol. 55, 441–448 (2007).

Duong, K. & Saphores, J. M. Obstacles to wastewater reuse: an overview. WIREs Water 2, 199–214 (2015).

Michael, I. et al. Urban wastewater treatment plants as hotspots for the release of antibiotics in the environment: a review. Water Res. 47, 957–995 (2013).

Chen, Z., Ngo, H. H. & Guo, W. A critical review on the end uses of recycled water. Crit. Rev. Environ. Sci. Technol. 43, 1446–1516 (2013).

Piña, B. et al. On the contribution of reclaimed wastewater irrigation to the potential exposure of humans to antibiotics, antibiotic resistant bacteria and antibiotic resistance genes–NEREUS COST Action ES1403 position paper. J. Environ. Chem. Eng. 8, 102131 (2020).

Fahrenfeld, N., Ma, Y., O’Brien, M. & Pruden, A. Reclaimed water as a reservoir of antibiotic resistance genes: distribution system and irrigation implications. Front. Microbiol. 4, 130 (2013).

Garner, E. et al. Towards risk assessment for antibiotic resistant pathogens in recycled water: a systematic review and summary of research needs. Environ. Microbiol. 23, 7355–7372 (2021).

Pepper, I. L., Brooks, J. P. & Gerba, C. P. Antibiotic resistant bacteria in municipal wastes: is there reason for concern? Environ. Sci. Technol. 52, 3949–3959 (2018).

Ogoshi, M., Suzuki, Y. & Asano, T. Water reuse in Japan. Water Sci. Technol. 43, 17–23 (2001).

Caicedo, C. et al. Legionella occurrence in municipal and industrial wastewater treatment plants and risks of reclaimed wastewater reuse: Review. Water Res. 149, 21–34 (2019).

Shahriar, A. et al. Modeling the fate and human health impacts of pharmaceuticals and personal care products in reclaimed wastewater irrigation for agriculture. Environ. Pollut. 276, 116532 (2021).

van Ginneken, M. & Oron, G. Risk assessment of consuming agricultural products irrigated with reclaimed wastewater: an exposure model. Water Resour. Res. 36, 2691–2699 (2000).

An, Y. J., Yoon, C. G., Jung, K. W. & Ham, J. H. Estimating the microbial risk of E. coli in reclaimed wastewater irrigation on paddy field. Environ. Monit. Assess. 129, 53–60 (2007).

Njuguna, S. M. et al. Health risk assessment by consumption of vegetables irrigated with reclaimed waste water: a case study in Thika (Kenya). J. Environ. Manag. 231, 576–581 (2019).

Verlicchi, P. et al. A project of reuse of reclaimed wastewater in the Po Valley, Italy: polishing sequence and cost benefit analysis. J. Hydrol. 432, 127–136 (2012).

Fan, Y., Chen, W., Jiao, W. & Chang, A. C. Cost-benefit analysis of reclaimed wastewater reuses in Beijing. Desalination Water Treat. 53, 1224–1233 (2015).

Molinos-Senante, M., Hernández-Sancho, F. & Sala-Garrido, R. Cost-benefit analysis of water-reuse projects for environmental purposes: a case study for Spanish wastewater treatment plants. J. Environ. Manag. 92, 3091–3097 (2011).

Gonzalez-Serrano, E., Rodriguez-Mirasol, J., Cordero, T., Koussis, A. D. & Rodriguez, J. J. Cost of reclaimed municipal wastewater for applications in seasonally stressed semi-arid regions. J. Water Supply Res. Technol. AQUA 54, 355–369 (2005).

Ghaitidak, D. M. & Yadav, K. D. Characteristics and treatment of greywater-a review. Environ. Sci. Pollut. Res. 20, 2795–2809 (2013).

Al-Jayyousi, O. R. Greywater reuse: Towards sustainable water management. Desalination 156, 181–192 (2003).

Friedler, E. Quality of individual domestic greywater streams and its implication for on-site treatment and reuse possibilities. Environ. Technol. 25, 997–1008 (2004).

Friedler, E. & Hadari, M. Economic feasibility of on-site greywater reuse in multi-storey buildings. Desalination 190, 221–234 (2006).

Elmitwalli, T. A. & Otterpohl, R. Anaerobic biodegradability and treatment of grey water in upflow anaerobic sludge blanket (UASB) reactor. Water Res. 41, 1379–1387 (2007).

Hernández Leal, L., Temmink, H., Zeeman, G. & Buisman, C. J. N. Comparison of three systems for biological greywater treatment. Water 2, 155–169 (2010).

Merz, C., Scheumann, R., el Hamouri, B. & Kraume, M. Membrane bioreactor technology for the treatment of greywater from a sports and leisure club. Desalination 215, 37–43 (2007).

Allen, L., Christian-Smith, J. & Palaniappan, M. Overview of greywater reuse: the potential of greywater systems to aid sustainable water management. Pac. Inst. 654, 19–21 (2010).

Finley, S., Barrington, S. & Lyew, D. Reuse of domestic greywater for the irrigation of food crops. Water, Air, Soil Pollut. 199, 235–245 (2009).

Blanky, M., Sharaby, Y., Rodríguez-Martínez, S., Halpern, M. & Friedler, E. Greywater reuse - Assessment of the health risk induced by Legionella pneumophila. Water Res. 125, 410–417 (2017).

Shi, K. W., Wang, C. W. & Jiang, S. C. Quantitative microbial risk assessment of greywater on-site reuse. Sci. Tot. Environ. 635, 1507–1519 (2018).

Alsulaili, A. D. & Hamoda, M. F. Quantification and characterization of greywater from schools. Water Sci. Technol. 72, 1973–1980 (2015).

Antonopoulou, G., Kirkou, A. & Stasinakis, A. S. Quantitative and qualitative greywater characterization in Greek households and investigation of their treatment using physicochemical methods. Sci. Tot. Environ. 454, 426–432 (2013).

do Couto, E. D. A., Calijuri, M. L., Assemany, P. P., Santiago, A. D. F. & Carvalho, I. D. C. Greywater production in airports: qualitative and quantitative assessment. Resour. Conserv. Recycl. 77, 44–51 (2013).

Rodríguez, C. et al. Cost–benefit evaluation of decentralized greywater reuse systems in rural public schools in Chile. Water 12, 1–14 (2020).

Voutchkov, N. Energy use for membrane seawater desalination – current status and trends. Desalination 431, 2–14 (2018).