Abstract

We consider a general type of non-Markovian impulse control problems under adverse non-linear expectation or, more specifically, the zero-sum game problem where the adversary player decides the probability measure. We show that the upper and lower value functions satisfy a dynamic programming principle (DPP). We first prove the DPP for a truncated version of the upper value function in a straightforward manner. A uniform convergence argument then enables us to show the DPP for the general setting. Following this, we use an approximation based on a combination of truncation and discretization to show that the upper and lower value functions coincide, thus establishing that the game has a value and that the DPP holds for the lower value function as well. Finally, we show that the DPP admits a unique solution and give conditions under which a saddle point for the game exists. As an example, we consider a stochastic differential game (SDG) of impulse versus classical control of path-dependent stochastic differential equations (SDEs).


1 Introduction

We solve a robust impulse control problem where the aim is to find an impulse control, \(u^*=(\tau _i^*,\beta _i^*)_{i=1}^{\infty }\), that is optimal in the sense that it satisfies

where \(\mathcal {E}^u[\cdot ]:=\sup _{\mathbb {P}\in \mathcal {P}(u)}\mathbb {E}^\mathbb {P}[\cdot ]\) is a non-linear expectation and the terminal reward \(\varphi \) is a random function that maps impulse controls \(u=(\tau _j,\beta _j)_{j=1}^\infty \) (\(N:=\max \{j:\tau _j< T\}\)) to values of the real line and is measurable with respect to \(\mathcal {F}\otimes \mathcal {B}({\bar{\mathcal {D}}})\), where \(\mathcal {B}({\bar{\mathcal {D}}})\) is the Borel \(\sigma \)-field of the space \({\bar{\mathcal {D}}}\) where the impulse control u takes values and \(\mathcal {F}\) is the Borel \(\sigma \)-field on the space of continuous trajectories starting at 0. The intervention cost c is defined similarly to \(\varphi \) but is in addition assumed to be progressively measurable in the last intervention-time and bounded from below by a positive constant.

In fact, we take this formulation one step further by showing that, under mild conditions, the zero-sum game where we play an impulse control while the adversary player (nature) chooses a probability measure has a value when allowing our adversary to play strategies, i.e. that

where \(\mathcal {P}^S\) is the set of non-anticipative strategies mapping impulse controls to probability measures, and derive additional conditions under which a saddle point, \((u^*,\mathbb {P}^{*,S})\in \mathcal {U}\times \mathcal {P}^S\), for the game exists.

Our approach relies on the tower property of non-linear expectations discovered in [22] and applied in [23] to solve an optimal stopping problem under adverse non-linear expectation. In this regard, we need to assume that \(\varphi \) and c are uniformly bounded and uniformly continuous under a suitable metric.

To indicate the applicability of the results we consider the special case when (1.1) corresponds to a weak formulation of the stochastic differential game (SDG) of impulse versus classical control

where \(\mathcal {A}\) is a set of classical controls with càdlàg paths and \(X^{u,\alpha }\) solves an impulsively-continuously controlled path-dependent stochastic differential equation (SDE) that implements u in feedback form. To assure sufficient regularity in this setting, we impose an additional \(L^2\)-Lipschitz condition on the coefficients of the SDE. In particular, we will see that this SDG corresponds to setting

and

where for each \(u\in \mathcal {U}\), \(\mathcal {I}^u\) is a map from the space of continuous paths to the space of càdlàg paths that adds the impulses in u to the, otherwise, continuous trajectory.

The main contributions of the present work are threefold. First, we show that the game (1.2) has a solution when the sets \((\mathcal {P}(u):u\in \mathcal {U})\) satisfy standard conditions translated to our setting and the functions \(\varphi \) and c are bounded and uniformly continuous. Second, we extract a saddle point under additional weak-compactness assumptions on the family \((\mathcal {P}(u):u\in \mathcal {U})\). Finally, we give a set of conditions under which the cost/reward pair defined by (1.4)–(1.5) satisfies the assumptions in the first part of the paper, enabling us to show that the path-dependent SDG of classical versus impulse control in (1.3) has a value.

Related Literature The optimal stopping problem under adverse non-linear expectation was considered by Nutz and Zhang [23] and by Bayraktar and Yao [5], where the latter allows for a slightly more general setting not having to assume a uniform bound on the rewards. Nevertheless, as explained above, [23] is based on the tower property of non-linear expectations developed in [22] and is, therefore, more closely related to the present work.

Non-Markovian impulse control under standard (linear) expectation was first considered by Hamadène et al. in [9], where it was assumed that the impulses do not affect the dynamics of the underlying process. This approach was extended to incorporate delivery lag in [17] and, more recently, also to an infinite horizon setting in [10]. A different approach to non-Markovian impulse control was initiated in [25] and then further developed in [18], where interconnected Snell envelopes indexed by controls were used to find solutions to problems with impulsively controlled path-dependent SDEs. We mention also the general formulation of impulse controls in [26], which can be seen as a linear expectation version of the present work, and the work on impulse control of path-dependent SDEs under g-expectation (see [24]) and related systems of backward SDEs (BSDEs) in [28]. Although the latter work considers a path-dependent SDG of impulse versus classical control, the classical control only enters the drift term. Effectively this corresponds to the situation when the set \(\mathcal {P}(u)\) in our framework is dominated by a single probability measure and the extension to non-dominated sets would have to go through the incorporation of second-order BSDEs (2BSDEs) (see [8, 32]).

The idea of having one player implement a strategy in the zero-sum game setting was first proposed by Elliott and Kalton [14] to counter the unrealistic idea that one of the players has to give up their control to the opponent in games of control versus control. The approach was combined with the theory of viscosity solutions to find a representation of the upper and lower value functions in deterministic differential games as solutions to Hamilton-Jacobi-Bellman-Isaacs (HJBI) equations by Evans and Souganidis [15]. Using a discrete time approximation technique, this was later translated to the stochastic setting by Fleming and Souganidis [16]. Notably, while the framework of [14], which has been the prevailing formulation in the literature since its introduction, assumes that the first player to act always implements a strategy, our formulation only allows the adversary player to implement a strategy when she acts first, while our impulse control is always open loop. The reason that our approach is still successful lies in the weak formulation of the game, effectively turning the impulse control into a feedback control when we turn to the SDG in the latter part of the paper. Moreover, our approach avoids the asymmetric information structure that results from implementing the game formulation in [14], which in turn enables us to show the existence of a saddle point.

Related to the SDG of impulse versus classical control that we consider is the work of Azimzadeh [1], where the intervention costs are deterministic, and that of Bayraktar et al. [2], which considers a robust impulse control problem when the impulse control is of switching type. Both of these are restricted to the Markovian setting. The latter implements the switching control in feedback form while the classical control is open loop. In this sense, it is probably the closest work to the present one that can be found in the literature. However, the approach used in [2] is based on the Stochastic Perron Method of Bayraktar and Sîrbu [3, 4] (see also [31] for another application to SDGs), which does not easily translate to a path-dependent setting. The setup in [1] was later extended in [27] to allow for stochastic intervention costs and g-nonlinear expectation. However, this extension also falls within the Markovian framework and uses standard BSDEs to define the cost/reward.

Previous works on non-Markovian SDGs in the standard framework of classical versus classical control can be found in Pham and Zhang [29] and Possamaï et al. [30], where the latter uses a weak formulation of feedback control versus feedback control and shows that the game has a value under the Isaacs condition and uniqueness of solutions to the corresponding path-dependent HJBI-equation [12, 13]. We remark that an interesting further development of the present work would be to consider the corresponding path-dependent quasi-variational inequalities, reminiscent of the relation between Markovian impulse control problems and classical quasi-variational inequalities [6].

Outline The following section provides some preliminary definitions, reviews certain prior results (in particular, the solution to the optimal stopping problem under non-linear expectation in [23]), and gives an immediate extension of the tower property in [22] to our setting. Then, in Sect. 3 we show that a dynamic programming principle holds for the upper value function. In Sect. 4, we use an approximation routine applied to both value functions to conclude that the game has a value, i.e. that (1.2) holds. In Sect. 5 it is shown that our DPP admits a unique solution and conditions are given under which an optimal pair can be extracted from the value function. Finally, in Sect. 6 we relate the result to path-dependent SDGs of impulse versus classical control.

2 Preliminaries

2.1 Notation

Throughout, we shall use the following notation, where we set a fixed horizon \(T\in (0,\infty )\):

-

We fix a positive integer d, define the sample space \(\Omega :=\{\omega \in C(\mathbb {R}_+\rightarrow \mathbb {R}^d):\omega _0=0\}\) and set \(\Lambda :=[0,T]\times \Omega \). For \(t\in [0,T]\) we introduce the pseudo-norm \(\Vert \omega \Vert _t:=\sup _{s\in [0,t]}|\omega _s|\) and extend the corresponding distance to \(\Lambda \) by defining

$$\begin{aligned} {\textbf{d}}[(t,\omega ),(t',\omega ')]:=|t'-t|+\Vert \omega '_{\cdot \wedge t'}-\omega _{\cdot \wedge t}\Vert _T. \end{aligned}$$ (2.1)

-

For \((t,\omega )\in \Lambda \), we let \(\Omega ^{t,\omega }:=\{\omega '\in \Omega :\omega '|_{[0,t]}=\omega |_{[0,t]}\}\).

-

The set of all probability measures on \(\Omega \) equipped with the topology of weak convergence, i.e. the weak topology induced by the bounded continuous functions on \(\Omega \), is denoted \(\mathfrak {P}(\Omega )\).

-

We let B denote the canonical process, i.e. \(B_t(\omega ):=\omega _t\) and denote by \(\mathbb {P}_0\) the probability measure under which B is a Brownian motion and let \(\mathbb {E}\) be the expectation with respect to \(\mathbb {P}_0\).

-

\(\mathbb {F}:=\{\mathcal {F}_t\}_{0\le t\le T}\) is the natural (raw) filtration generated by B and \(\mathbb {F}^*:=\{\mathcal {F}^*_t\}_{0\le t\le T}\), where \(\mathcal {F}^*_t\) is the universal completion of \(\mathcal {F}_t\).

-

We let \(\mathcal {T}\) be the set of all \(\mathbb {F}\)-stopping times and for each \(\eta \in \mathcal {T}\) we let \(\mathcal {T}_\eta \) be the set of all \(\tau \in \mathcal {T}\) such that \(\tau (\omega )\ge \eta (\omega )\) for all \(\omega \in \Omega \). For fixed \(t\in [0,T]\), we let \(\mathcal {T}^t\) be all \(\tau \in \mathcal {T}_t\) such that \(\omega \mapsto \tau (\omega )\) is independent of \(\omega |_{[0,t]}\).

-

For \(\kappa \ge 0\), we let \(D^\kappa :=\{(t_j,b_j)_{j=1}^{\kappa }: 0\le t_1\le \cdots \le t_\kappa \le T,\, b_j\in U\}\), where U is a compact subset of \(\mathbb {R}^d\), and set \(\mathcal {D}:=\cup _{\kappa \ge 0}D^\kappa \) and \({\bar{\mathcal {D}}}:=\mathcal {D}\cup \{(t_j,b_j)_{j= 1}^\infty : 0\le t_1\le \cdots \le T,\, b_j\in U\}\). Moreover, for \(0\le t\le s\le T\), we let \(\mathcal {D}_{[t,s]}\) (resp. \({\bar{\mathcal {D}}}_{[t,s]}\)) be the subset of \((t_j,b_j)_{j=1}^\kappa \in \mathcal {D}\) (resp. \({\bar{\mathcal {D}}}\)) with \(t_j\in [t,s]\) for \(j=1,\ldots ,\kappa \).

-

We let \(\mathcal {U}\) be the set of all \(u=(\tau _j,\beta _j)_{j=1}^N\), where \((\tau _j)_{j=1}^\infty \) is a non-decreasing sequence of \(\mathbb {F}\)-stopping times, \(\beta _j\) is an \(\mathcal {F}_{\tau _j}\)-measurable r.v. taking values in U and \(N:=\max \{j:\tau _j< T\}\). Moreover, for \(k\ge 0\) we let \(\mathcal {U}^k:=\{u\in \mathcal {U}:\,N\le k\}\). We interchangeably refer to elements \(u\in \mathcal {U}\) (resp. \(u\in \mathcal {U}^k\)) as \(u=(\tau _j,\beta _j)_{j=1}^N\) and \(u=(\tau _j,\beta _j)_{j=1}^\infty \) (resp. \(u=(\tau _j,\beta _j)_{j=1}^k\)).

-

For \(t\in [0,T]\), we let \(\mathcal {U}_t\) (resp. \(\mathcal {U}^k_t\)) be the subset of \(\mathcal {U}\) (resp. \(\mathcal {U}^k\)) with all controls for which \(\tau _1\ge t\) and denote by \(\mathcal {U}^{t}\) the subset of \(\mathcal {U}_t\) with all controls u such that \(\omega \mapsto u(\omega )\) is independent of \(\omega |_{[0,t]}\).

-

For \(u\in \mathcal {U}\cup (\cup _{j=1}^\infty \mathcal {U}^j)\) and \(k\ge 0\), we let \([u]_k:=(\tau _j,\beta _j)_{j=1}^{N\wedge k}\). Furthermore, for \(t\ge 0\) we let \(N(t):=\max \{j\ge 0:\tau _j\le t\}\) and define \(u_t:=[u]_{N(t)}\) and \(u^t:=(\tau _j,\beta _j)_{j= N(t)+1}^N\).

-

We denote by \(\emptyset \in \mathcal {U}\) the control with no interventions, i.e. \(N=0\) implying that \(\tau _1=T\).

-

For each \(\kappa \ge 0\), we introduce the pseudo-distance \({\textbf{d}}_\kappa \) on \(\Lambda \times D^\kappa \) as

$$\begin{aligned}&{\textbf{d}}_\kappa [(t,\omega ,{\textbf {v}}),(t',\omega ',{\textbf {v}}')]:=|t'-t|+\Vert \omega '_{\cdot \wedge t'}-\omega _{\cdot \wedge t}\Vert _T\\&\quad +\sum _{j=1}^\kappa \Vert \omega '_{\cdot \wedge t'_j\wedge t'}-\omega _{\cdot \wedge t_j\wedge t}\Vert _T+|{\textbf {v}}'-{\textbf {v}}|. \end{aligned}$$

We stretch the definition of uniform continuity to maps on \(\Lambda \times \mathcal {D}\) as follows:

Definition 2.1

We say that a map \(\mathcal {Y}:\Lambda \times \mathcal {D}\rightarrow \mathbb {R}\) is uniformly continuous if for each \(\kappa \ge 0\), the map \(\mathcal {Y}:\Lambda \times D^\kappa \rightarrow \mathbb {R}\) is uniformly continuous under the distance \({\textbf{d}}_\kappa \).
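To make the distance in (2.1) concrete, the following Python sketch evaluates it for paths sampled on a finite time grid. The function names `stop_path` and `dist`, the array representation of a path, and the convention that \(t\) and \(t'\) lie on the grid are assumptions made for illustration only; they are not part of the framework above.

```python
import numpy as np

def stop_path(path, times, t):
    """Return the sampled path frozen at time t, i.e. s -> path(min(s, t))."""
    # Index of the last grid point that does not exceed t.
    idx = np.searchsorted(times, t, side="right") - 1
    stopped = path.copy()
    stopped[idx + 1:] = path[idx]  # hold the value at time t afterwards
    return stopped

def dist(t, path, t2, path2, times):
    """Grid approximation of d[(t, w), (t2, w2)] from (2.1)."""
    diff = stop_path(path2, times, t2) - stop_path(path, times, t)
    # Supremum over the grid of the Euclidean norm of the difference.
    sup_norm = np.max(np.linalg.norm(diff.reshape(len(times), -1), axis=1))
    return abs(t2 - t) + sup_norm

times = np.linspace(0.0, 1.0, 5)      # grid 0, 0.25, 0.5, 0.75, 1
w = times.copy()                      # path w_s = s
w_alt = np.where(times <= 0.5, times, 5.0)  # agrees with w on [0, 0.5]
print(dist(0.5, w, 0.5, w_alt, times))  # the two stopped paths coincide
print(dist(0.5, w, 1.0, w, times))      # same path, different times
```

In this sketch two paths that agree on \([0,t]\) are at distance zero when both are evaluated at the same time \(t\), so \({\textbf{d}}\) only sees the part of a trajectory that has been revealed up to the given time.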

We define the concatenation of \({\textbf {v}}=(t_j,b_j)_{j=1}^\kappa \in D^\kappa \) and \({\textbf {v}}'=(t'_j,b'_j)_{j\ge 1}\in {\bar{\mathcal {D}}}\) as

For technical reasons we extend the composition to pairs \(({\textbf {v}},{\textbf {v}}')\) when \({\textbf {v}}\) has infinite length by letting \({\textbf {v}}\circ {\textbf {v}}'={\textbf {v}}\) in this case. The extension allows us to decompose any control \(u\in \mathcal {U}\) as \(u=u_\tau \circ u^\tau \).
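The bookkeeping behind the decomposition \(u=u_\tau \circ u^\tau \) can be sketched for deterministic impulse sequences in \(\mathcal {D}\). In the Python fragment below, the names `truncate`, `count_up_to`, `before`, `after` and `concat` are hypothetical, impulse sizes are scalars for simplicity, and `concat` is assumed to be plain appending of the second sequence to the first; the definition used in the paper may in addition adjust the intervention times of the second sequence.

```python
from typing import List, Tuple

Impulse = Tuple[float, float]  # (intervention time t_j, impulse size b_j)

def truncate(v: List[Impulse], k: int) -> List[Impulse]:
    """[v]_k: keep at most the first k interventions."""
    return v[:k]

def count_up_to(v: List[Impulse], t: float) -> int:
    """N(t): number of interventions with time <= t (times are non-decreasing)."""
    return sum(1 for (tj, _) in v if tj <= t)

def before(v: List[Impulse], t: float) -> List[Impulse]:
    """v_t := [v]_{N(t)}: interventions made up to and including time t."""
    return truncate(v, count_up_to(v, t))

def after(v: List[Impulse], t: float) -> List[Impulse]:
    """v^t: interventions made strictly after time t."""
    return v[count_up_to(v, t):]

def concat(v: List[Impulse], w: List[Impulse]) -> List[Impulse]:
    """Assumed concatenation: append w to v without modifying either."""
    return v + w

v = [(0.1, 1.0), (0.4, -2.0), (0.4, 0.5), (0.9, 3.0)]
print(before(v, 0.4))  # the three interventions at times <= 0.4
print(after(v, 0.4))   # the remaining intervention at time 0.9
print(concat(before(v, 0.4), after(v, 0.4)) == v)
```

Under the appending assumption the decomposition \({\textbf {v}}={\textbf {v}}_t\circ {\textbf {v}}^t\) holds for every \(t\), mirroring the identity \(u=u_\tau \circ u^\tau \) for controls.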

For \(\tau \in \mathcal {T}\) and \(\omega ,\omega '\in \Omega \) we introduce the composition on \(\Omega \) as

and for \(f:\Omega \rightarrow \mathbb {R}\) we set \(f^{\tau ,\omega }(\omega '):=f(\omega \otimes _\tau \omega ')\).

The results we present rely on the notion of regular conditional probability: Any \(\mathbb {P}\in \mathfrak {P}(\Omega )\) has a regular conditional probability distribution \((\mathbb {P}^\omega _\tau )_{\omega \in \Omega }\) given \(\mathcal {F}_\tau \) satisfying

for all \(\omega \in \Omega \) (see e.g. page 34 in [33]). We define the probability measure \(\mathbb {P}^{\tau ,\omega }\in \mathfrak {P}(\Omega )\) as

for all \(A\in \mathcal {F}\), where \(\omega \otimes _\tau A:=\{\omega \otimes _\tau \omega ':\omega '\in A\}\).

Definition 2.2

We let \(\{\mathcal {P}(t,\omega ,{\textbf {v}}\circ u):(t,\omega ,{\textbf {v}},u)\in \cup _{t\in [0,T]}\{t\}\times \Omega \times {\bar{\mathcal {D}}}_{[0,t]} \times \mathcal {U}_t\}\) be a family of subsets of \(\mathfrak {P}(\Omega )\) such that

Moreover, we let \(\mathcal {P}(\tau ,\omega ,u):=\mathcal {P}(\tau (\omega ),\omega ,u_{\tau (\omega )}(\omega )\circ u^{\tau (\omega )})\) and set \(\mathcal {P}(u):=\mathcal {P}(0,\omega ,u)\).

We recall that a subset of a Polish space is analytic if it is the image of a Borel subset of another Polish space under a Borel map and that an \({\bar{\mathbb {R}}}\)-valued function f is upper semi-analytic if the set \(\{f> c\}\) is analytic for each \(c\in \mathbb {R}\).

For \(\mathbb {P}\in \mathfrak {P}(\Omega )\) we let \(\mathbb {E}^\mathbb {P}_t[f](\omega ):=\mathbb {E}^\mathbb {P}[f^{t,\omega }]\) (here \(\mathbb {E}^\mathbb {P}\) is expectation with respect to \(\mathbb {P}\)) and define the non-linear expectation

for all \((\tau ,\omega ,u)\in \mathcal {T}\times \Omega \times \mathcal {U}\) and all upper semi-analytic functions \(f:\Omega \rightarrow {\bar{\mathbb {R}}}\). It was shown in [22] that, under Assumption 2.5 below, \(\mathcal {E}^u_\tau [f]\) is \(\mathcal {F}_\tau ^*\)-measurable and upper semi-analytic.

The idea is that the adversary player, given a trajectory \(\omega |_{[0,t]}\) and a sequence of impulses \({\textbf {v}}\in \mathcal {D}_{[0,t]}\), chooses a probability measure on \(\Omega ^{t,\omega }\) to maximize (1.1). In this regard we introduce the set of non-anticipative maps from controls to probability measures:

Definition 2.3

We denote by \(\mathcal {P}^S\) the set of non-anticipative maps \(\mathbb {P}^S:\mathcal {U}\rightarrow \mathfrak {P}(\Omega )\) mapping \(u\in \mathcal {U}\) to \(\mathbb {P}=\mathbb {P}^S(u)\in \mathcal {P}(u)\). By non-anticipativity, we mean that if \(u_{\tau -}={\tilde{u}}_{\tau -}\) for some \(\tau \in \mathcal {T}\) and \(u,{\tilde{u}}\in \mathcal {U}\), then \(\mathbb {P}^S(u)[A]=\mathbb {P}^S({\tilde{u}})[A]\) for all \(A\in \mathcal {F}_\tau \).

Moreover, for \((t,\omega )\in \Lambda \) we let \(\mathcal {P}^S(t,\omega )\) denote the set of all non-anticipative maps \(\mathbb {P}^S:\mathcal {U}\rightarrow \mathfrak {P}(\Omega )\) such that \(\mathbb {P}^S(u)\in \mathcal {P}(t,\omega ,u)\). Often, we suppress dependence on \(\omega \) and write \(\mathcal {P}^S_t\) for \(\mathcal {P}^S(t,\omega )\).

2.2 Assumptions

Assumption 2.4

For each \(u\in \mathcal {U}\) we assume that \(\mathcal {P}(u)\) is non-empty. Moreover, \(\mathbb {P}\in \mathcal {P}(u)\) if for each \(k\ge 0\), there is a \(\mathbb {P}'\in \mathcal {P}([u]_k)\) with \(\mathbb {P}'=\mathbb {P}\) on \(\mathcal {F}_{\tau _k}\).

Assumption 2.5

For \((t,\omega )\in \Lambda \), \({\textbf {v}}\in {\bar{\mathcal {D}}}_{[0,t]}\) and \(u\in \mathcal {U}_t\), we assume that \(\mathcal {P}(t,\omega ,{\textbf {v}}\circ u)\) satisfies for any stopping time \(\tau \in \mathcal {T}_t\) and \(\mathbb {P}\in \mathcal {P}(t,\omega ,{\textbf {v}}\circ u)\) (with \(\theta :=\tau ^{t,\omega }-t\)):

-

(a)

The graph \(\{(\mathbb {P}',\omega '):\omega '\in \Omega ,\,\mathbb {P}'\in \mathcal {P}(\tau ,\omega ',{\textbf {v}}\circ u)\}\subset \mathfrak {P}(\Omega )\times \Omega \) is analytic.

-

(b)

We have \(\mathbb {P}^{\theta ,\omega '}\in \mathcal {P}(\tau ,\omega \otimes _{t}\omega ',{\textbf {v}}\circ u)\) for \(\mathbb {P}\)-a.e. \(\omega '\).

-

(c)

If \(\nu :\Omega \rightarrow \mathfrak {P}(\Omega )\) is an \(\mathcal {F}_{\theta }\)-measurable kernel and \(\nu (\omega ')\in \mathcal {P}(\tau ,\omega \otimes _{t}\omega ',{\textbf {v}}\circ u)\) for \(\mathbb {P}\)-a.e. \(\omega '\), then the measure defined by

$$\begin{aligned} \mathbb {P}'[A]:=\int \!\!\!\int (\mathbbm {1}_A)^{\theta ,{\tilde{\omega }}}(\omega ')\nu (d\omega ';{\tilde{\omega }})\mathbb {P}[d{\tilde{\omega }}] \end{aligned}$$ (2.2)

for any \(A\in \mathcal {F}\), belongs to \(\mathcal {P}(t,\omega ,{\textbf {v}}\circ u)\).

-

(d)

Let \({\tilde{u}}\in \mathcal {U}_t\) be such that \(u_{\tau -}={\tilde{u}}_{\tau -}\), then there is a \(\mathbb {P}'\in \mathcal {P}(t,\omega ,{\textbf {v}}\circ {\tilde{u}})\) such that \(\mathbb {P}'=\mathbb {P}\) on \(\mathcal {F}_{\theta }\).

In the above assumption, conditions (a)–(c) are standard (see e.g. [20]) and essentially mean that dynamic programming holds. The last condition implies that the non-anticipativity postulated in Definition 2.3 is achievable.

Assumption 2.6

The functions \(\varphi :\Omega \times \mathcal {D}\rightarrow \mathbb {R}\) and \(c:\Omega \times \mathcal {D}\rightarrow [\delta ,\infty )\) (with \(\delta >0\)) are uniformly bounded, i.e. there is a constant \(C_0>0\) such that

for all \((\omega ,{\textbf {v}})\in \Omega \times {\bar{\mathcal {D}}}\). For each \(\kappa \ge 0\), there is a modulus of continuity function \(\rho _{\varphi ,\kappa }\) such that

for all \(\omega ,\omega '\in \Omega \) and \({\textbf {v}},{\textbf {v}}'\in D^\kappa \) with \(t_j\le t'_j\) for \(j=1,\ldots ,\kappa \). Moreover, c(u) is \(\mathcal {F}_{\tau _N}\)-measurable and for each \(\kappa \ge 0\) there is a modulus of continuity function \(\rho _{c,\kappa }\) such that

where \(t_\kappa \) and \(t'_\kappa \) are the times of the last interventions in \({\textbf {v}}\) and \({\textbf {v}}'\), respectively. Finally, for any \({\textbf {v}}\in \mathcal {D}\) and \(b\in U\) we have

for all \(\omega \in \Omega \).

It is worth noting that due to the lower bound on the intervention costs and the uniform boundedness of the terminal cost, it is never optimal to perform an infinite number of interventions.

Remark 2.7

In view of (2.3) it is never optimal to intervene on the system at time T. In light of this and to simplify notation later on we will assume that \(\varphi \) is such that \(\varphi (\omega ,{\textbf {v}}\circ (T,b))=\varphi (\omega ,{\textbf {v}})\) for all \(\omega \in \Omega \), \({\textbf {v}}\in \mathcal {D}\) and \(b\in U\).

The following assumption is stated in a form that is most suitable for the proofs.

Assumption 2.8

For each \(i\ge 0\), there is a modulus of continuity \(\rho _{\mathcal {E},i}\) such that for all \(\kappa ,k\ge 0\), \({\textbf {v}}',{\textbf {v}}\in D^\kappa \), \(t\in [0,T]\) and \(\omega , \omega '\in \Omega \) and \(u\in \mathcal {U}^k_t\), there is a \(u_\omega \in \mathcal {U}^k_{t}\) such that

and \((\omega ,{\tilde{\omega }})\mapsto u_\omega ({\tilde{\omega }})\) is \(\mathcal {F}_t\otimes \mathcal {F}\)-measurable.

Assumption 2.8 serves the purpose of ensuring that if, at any given time \(t\in [0,T]\), the state variable \(\omega _{\cdot \wedge t}\) and interventions made \({\textbf {v}}\) are in close proximity to another state variable \(\omega '_{\cdot \wedge t}\) and corresponding interventions \({\textbf {v}}'\), respectively, then the resulting future minimal costs will also be similar. While this property is generally evident in most standard control problems where the coefficients are sufficiently regular, our framework requires that Assumption 2.8 is explicitly imposed.

To better understand the practical implications of Assumption 2.8, we refer readers to Sect. 6, where we discuss a specific example of an SDG with an underlying impulsively controlled process that is the solution to a path-dependent SDE. We prove that in this framework, Assumption 2.8 is satisfied, which underscores its practical significance.

Assumption 2.9

For each modulus of continuity \(\rho \) there is a modulus of continuity \(\rho '\) such that for any \(\tau \in \mathcal {T}\), we have \(\sup _{\mathbb {P}\in \mathcal {P}(t,\omega ,u)}\mathbb {E}^\mathbb {P}(\rho (\varepsilon +\sup _{s\in [\tau ,\tau +\varepsilon ]}|B_s|))\le \rho '(\varepsilon )\) for all \((t,\omega ,u)\in \Lambda \times \mathcal {U}\).

Remark 2.10

In order to prioritize clarity of the central concepts and main ideas, we have intentionally avoided striving for the greatest possible generality. This includes imposing a uniform bound on the coefficients, which allows us to directly use the results on optimal stopping from [23]. However, as noted in the introduction, uniform boundedness is replaced by a linear growth assumption in [5], and future research could explore adopting a similar approach to generalize our work to coefficients \(\varphi \) and c that have linear growth in \(\omega \). Another possible generalization is to allow for negative jumps in \(\varphi \) and c, thus replacing the uniform continuity assumptions with one-sided versions.

2.3 The Tower Property for Our Family of Nonlinear Expectations

The following trivial extension of Theorem 2.3 in [22] follows immediately from the above assumptions:

Proposition 2.11

For each \(k\ge 0\) and \(\tau ,\eta \in \mathcal {T}\), with \(\tau \le \eta \), and all \({\textbf {v}}\in \mathcal {D}\), \(u:=(\tau _j,\beta _j)_{j=1}^k\in \mathcal {U}^k_\tau \) and \({\tilde{u}}\in \mathcal {U}_\eta \) we have

for each upper semi-analytic function \(f:\Omega \rightarrow {\bar{\mathbb {R}}}\).

Proof

We first note that since \(\mathcal {E}^{{\textbf {v}}\circ u\circ {\tilde{u}}}\) satisfies Assumption 2.1 in [22], Theorem 2.3 of the same article gives that

Moreover, since \(({\textbf {v}}\circ u \circ {\tilde{u}})_{\eta -}=({\textbf {v}}\circ u)_{\eta -}\), Assumption 2.5(d) gives that for each \(\mathbb {P}\in \mathcal {P}(t,\omega ,{\textbf {v}}\circ u \circ {\tilde{u}})\), there is a \(\mathbb {P}'\in \mathcal {P}(t,\omega ,{\textbf {v}}\circ u)\) such that \(\mathbb {P}=\mathbb {P}'\) on \(\mathcal {F}_\eta \) (and thus on \(\mathcal {F}^*_\eta \)), and vice versa. Finally, as \(\mathcal {E}^{{\textbf {v}}\circ u\circ {\tilde{u}}}_\eta \big [f\big ]\) is \(\mathcal {F}^*_\eta \)-measurable the result follows. \(\square \)

2.4 Optimal Stopping Under Non-linear Expectation

We recall the following result on optimal stopping under non-linear expectation \(\mathcal {P},\,\mathcal {E}\) (satisfying the assumptions on, say, \(\mathcal {P}(\emptyset ),\,\mathcal {E}^{\emptyset }\) above).

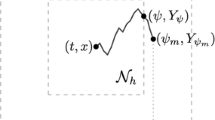

Theorem 2.12

(Nutz and Zhang [23]) Assume that the process \((X_t)_{0\le t\le T}\) has càdlàg paths, is progressively measurable and uniformly bounded, and satisfies

for some modulus of continuity function \(\rho _X\) and all \((t,\omega ),(t',\omega ')\in \Lambda \) with \( t\le t'\). Then, \(Y_t:=\inf _{\tau \in \mathcal {T}_t}\mathcal {E}_t[X_\tau ]\) satisfies:

-

(i)

Let \(\tau ^*:=\inf \{s\ge t:Y_s=X_s\}\), then \(\tau ^*\in \mathcal {T}_t\) and \(Y_t=\mathcal {E}_t[X_{\tau ^*}]\). Moreover, \(Y_{\cdot \wedge \tau ^*}\) is a \(\mathbb {P}\)-supermartingale for any \(\mathbb {P}\in \mathcal {P}\).

-

(ii)

The game has a value: \(\inf _{\tau \in \mathcal {T}}\mathcal {E}[X_\tau ]=\sup _{\mathbb {P}\in \mathcal {P}}\inf _{\tau \in \mathcal {T}}\mathbb {E}^\mathbb {P}[X_\tau ]\).

-

(iii)

If \(\mathcal {P}(t,\omega )\) is weakly compact for each \((t,\omega )\in \Lambda \), then there is a \(\mathbb {P}^*\in \mathcal {P}\) such that \(Y_0=\mathbb {E}^{\mathbb {P}^*}[X_{\tau ^*}]=\inf _{\tau \in \mathcal {T}} \mathbb {E}^{\mathbb {P}^*}[X_{\tau }]\).
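As a rough discrete-time analogue of Theorem 2.12, the sketch below computes \(\inf _\tau \sup _{\mathbb {P}}\mathbb {E}^\mathbb {P}[X_\tau ]\) on a recombining binomial tree through the backward recursion \(Y_t=\min \{X_t,\max _p \mathbb {E}_p[Y_{t+1}]\}\), where the adversary picks the up-probability p at each node from a finite set. The tree, the function name `robust_stopping_value`, the Markovian payoff and the node-wise choice of probabilities are all assumptions made for illustration; the theorem itself concerns continuous time and path-dependent rewards.

```python
from typing import Callable, Sequence

def robust_stopping_value(payoff: Callable[[int, int], float],
                          n_steps: int,
                          probs: Sequence[float]) -> float:
    """Value at the root of min over stopping rules, max over up-probabilities.

    payoff(t, k) is the cost of stopping at step t after k up-moves.
    probs is the finite set of up-probabilities available to the adversary.
    """
    # Terminal layer: stopping is forced at the horizon.
    Y = [payoff(n_steps, k) for k in range(n_steps + 1)]
    for t in range(n_steps - 1, -1, -1):
        new_Y = []
        for k in range(t + 1):
            # Adversary maximizes the expected continuation value.
            cont = max(p * Y[k + 1] + (1.0 - p) * Y[k] for p in probs)
            # Stopper minimizes between stopping now and continuing.
            new_Y.append(min(payoff(t, k), cont))
        Y = new_Y
    return Y[0]

# Cost equals minus the number of up-moves, so the stopper prefers to wait
# while the adversary makes up-moves as unlikely as the set allows.
value = robust_stopping_value(lambda t, k: -float(k), 2, [0.25, 0.75])
print(value)
```

In this toy example the stopper continues until the horizon and the adversary always selects the smallest up-probability, so the value is minus the expected number of up-moves under p = 0.25 over two steps. The first node at which the stopping cost equals the computed value plays the role of \(\tau ^*\) in part (i).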

3 A Dynamic Programming Principle

We define the upper value function as

for all \(\omega \in \Omega \). Moreover, we define the lower value function as

for all \(\omega \in \Omega \).

Remark 3.1

As noted in the introduction, it may appear as though the setup is somewhat asymmetric as the optimization problem for the lower value function contains a strategy, whereas the corresponding problem for the upper value function does not. However, as the impulse controls in \(\mathcal {U}\) are \(\mathbb {F}\)-adapted and the opponent controls the probability measure (effectively deciding the likelihoods for different trajectories), this can be seen as the impulse player implementing a type of non-anticipative strategy as well. This becomes more evident when turning to the application in Sect. 6.

In this section we will concentrate on the upper value function and the main result is the following dynamic programming principle:

Theorem 3.2

The map Y is bounded, uniformly continuous and satisfies the recursion

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\).

Remark 3.3

As a consequence, the map \((t,\omega ,{\textbf {v}})\mapsto Y^{{\textbf {v}}}_t(\omega )\) is Borel measurable.

The proof of Theorem 3.2 is given through a sequence of lemmata where the main obstacle that we need to overcome is to show uniform continuity of the map \((t,\omega ,{\textbf {v}})\mapsto Y^{{\textbf {v}}}_t(\omega )\). This will be obtained through a uniform convergence argument and we introduce the truncated upper value function defined as

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and \(k\ge 0\). Note that in the definition of \(Y^{{\textbf {v}},k}\), the impulse controller is restricted to using a maximum of k impulses. The following approximation result is central:

Lemma 3.4

There is a \(C>0\) such that

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and each \(k\ge 0\).

Proof

We first note that

Now, as

for any \(u\in \mathcal {U}_t\), this implies that in the infimum of (3.3) we can restrict our attention to impulse controls for which

for each \(k\ge 0\). Clearly, any impulse control not satisfying (3.5) is dominated by \(u=\emptyset \). For any \(u\in \mathcal {U}_t\) satisfying (3.5) we have,

To arrive at the last inequality above, we have used that since \(([u]_k)_{\tau _{k+1}-}=u_{\tau _{k+1}-}\), Assumption 2.5(d) gives that

from which the inequality is immediate by (3.5). Now, as \(\mathbbm {1}_{[\tau _{k+1} = T]}\varphi ({\textbf {v}}\circ u) +\sum _{j=1}^{N\wedge k} c({\textbf {v}}\circ [u]_{j})\) is \(\mathcal {F}_{\tau _{k+1}}\)-measurable we can again use Assumption 2.5(d) to find that

Consequently,

Since u was an arbitrary impulse control satisfying (3.5) and \([u]_k\in \mathcal {U}^k_t\), the result follows. \(\square \)

Lemma 3.5

For each \(k,\kappa \ge 0\) and \(t\in [0,T]\), the map \((\omega ,{\textbf {v}})\mapsto Y^{{\textbf {v}},k}_t(\omega ):\Omega \times D^\kappa \rightarrow \mathbb {R}\) is uniformly continuous with a modulus of continuity that is independent of t.

Proof

We have

with \(u_\omega \) as in Assumption 2.8. This immediately gives that

by the same assumption. \(\square \)

As is customary, the dynamic programming principle for \(Y^{\cdot ,k}\) will be proved by leveraging regularity and we introduce the following partition of the set \(\Lambda \times U\):

Definition 3.6

For \(\varepsilon >0\):

-

We let \(n\ge 0\) be the smallest integer such that \(2^{-n}T\le \varepsilon \), set \(n_t^\varepsilon :=2^{n}+1\) and introduce the discrete set \(\mathbb {T}^\varepsilon :=\{t^\varepsilon _j:t^\varepsilon _j=(j-1)2^{-n}T,j=1,\ldots ,n^\varepsilon _t\}\). Moreover, we define \({\bar{t}}^\varepsilon _{i}:=t^\varepsilon _{i}\) for \(i=1,\ldots ,n_t^\varepsilon -1\) and \({\bar{t}}^\varepsilon _{n_t^\varepsilon }:=T+1\).

-

We then let \((E^\varepsilon _{i,j})_{1\le i\le n^\varepsilon _t}^{j\ge 1}\) be such that \((E^\varepsilon _{i,j})_{j\ge 1}\) forms a partition of \(\Omega \) with \(E^\varepsilon _{i,j}\in \mathcal {F}_{t^\varepsilon _i}\) and \(\Vert \omega -\omega '\Vert _{t^\varepsilon _i}\le \varepsilon \) for all \(\omega ,\omega '\in E^\varepsilon _{i,j}\). We let \((\omega ^\varepsilon _{i,j})_{1\le i\le n^\varepsilon _t}^{j\ge 1}\) be a sequence with \(\omega ^\varepsilon _{i,j}\in E^\varepsilon _{i,j}\).

-

Finally, we let \((U^\varepsilon _{l})_{l=1}^{n^\varepsilon _U}\) be a Borel-partition of U such that the diameter of \(U^\varepsilon _l\) does not exceed \(\varepsilon \) for \(l=1,\ldots ,n^\varepsilon _U\) and let \((b^\varepsilon _l)_{l=1}^{n^\varepsilon _U}\) be a sequence with \(b^\varepsilon _l\in U^\varepsilon _l\) and denote by \({\bar{U}}^\varepsilon :=\{b^\varepsilon _1,\ldots ,b^\varepsilon _{n^\varepsilon _U}\}\) the corresponding discretization of U.

The reason for introducing \(\bar{t}^\varepsilon _i\) is to enable the use of the half-open interval \([t^\varepsilon _{n_t^\varepsilon -1}, \bar{t}^\varepsilon _{n_t^\varepsilon })\) to capture the last interval in the discretization, \([t^\varepsilon _{n_t^\varepsilon -1},T]\). Furthermore, the above partition allows us to discretize the set of impulse controls in the following way:
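As an illustration only, the time grid of Definition 3.6 can be sketched in a few lines of Python. The function name `dyadic_time_grid` and its return layout are assumptions made for this sketch; only the formulas for \(n\), \(t^\varepsilon _j\) and \({\bar{t}}^\varepsilon _i\) are taken from the definition.

```python
def dyadic_time_grid(T, eps):
    """Sketch of the time discretization in Definition 3.6 (hypothetical helper).

    Returns (n, grid, grid_bar) where
      n        is the smallest integer >= 0 with 2**(-n) * T <= eps,
      grid     lists t_j = (j - 1) * 2**(-n) * T for j = 1, ..., 2**n + 1,
      grid_bar equals grid except that its last entry is replaced by T + 1.
    """
    if T <= 0 or eps <= 0:
        raise ValueError("T and eps must be strictly positive")
    n = 0
    while T * 2.0 ** (-n) > eps:
        n += 1
    mesh = T * 2.0 ** (-n)
    grid = [j * mesh for j in range(2 ** n + 1)]
    # The last bar-point is pushed beyond T so that the half-open interval
    # [t_{n_t - 1}, bar t_{n_t}) also contains the terminal time T.
    grid_bar = grid[:-1] + [T + 1]
    return n, grid, grid_bar
```

For \(T=1\) and \(\varepsilon =0.3\) this gives \(n=2\) and five grid points with mesh \(1/4\).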

Definition 3.7

For \(\varepsilon >0\):

- We let \({\bar{\mathcal {U}}}^{k,\varepsilon }_t:=\{u\in \mathcal {U}^k_t: (\tau _j(\omega ),\beta _j(\omega ))\in \mathbb {T}^\varepsilon \times {\bar{U}}^\varepsilon \text { for all }\omega \in \Omega \text { and }j=1,\ldots ,k\}\).

- We introduce the projection from \(\mathcal {U}^{k}_t\rightarrow {\bar{\mathcal {U}}}^{k,\varepsilon }_t\), defined as

$$\begin{aligned} \Xi ^\varepsilon (u):=\Big (\sum _{i=2}^{n^\varepsilon _t}t^\varepsilon _i\mathbbm {1}_{[t^\varepsilon _{i-1},{\bar{t}}^\varepsilon _i)}(\tau _j), \sum _{l=1}^{n^\varepsilon _U}b^\varepsilon _l\mathbbm {1}_{U^\varepsilon _l}(\beta _j)\Big )_{j=1}^k \end{aligned}$$

for each \(u=(\tau _j,\beta _j)_{j=1}^k\in \mathcal {U}^{k}_t\).
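The projection \(\Xi ^\varepsilon \) moves each intervention time forward to the next grid point and replaces each impulse by the representative of its partition cell. A minimal Python sketch for a single realization follows, assuming a one-dimensional \(U\) whose partition is given as half-open intervals; the names `project_time`, `project_impulse`, `project_control` and the `cells` layout are hypothetical and not part of the paper.

```python
import math

def project_time(tau, T, n):
    """Map tau in [t_{i-1}, t_i) to t_i on the grid with mesh 2**(-n) * T.

    The terminal time tau = T is mapped to T itself. Floating-point division
    is used, so grid points are only resolved exactly when the mesh is
    exactly representable (e.g. T = 1).
    """
    if not 0 <= tau <= T:
        raise ValueError("tau must lie in [0, T]")
    mesh = T * 2.0 ** (-n)
    return min((math.floor(tau / mesh) + 1) * mesh, T)

def project_impulse(beta, cells):
    """Replace beta by the representative of the cell containing it.

    `cells` is a list of (lo, hi, representative) triples describing
    half-open intervals [lo, hi) that partition a one-dimensional U.
    """
    for lo, hi, representative in cells:
        if lo <= beta < hi:
            return representative
    raise ValueError("beta is not covered by the partition")

def project_control(u, T, n, cells):
    """Apply both projections to a finite list of (tau_j, beta_j) pairs."""
    return [(project_time(tau, T, n), project_impulse(beta, cells))
            for tau, beta in u]
```

Since interventions are moved forward in time and never backward, the projected control uses no information that was unavailable to the original one, which is in line with the projection mapping \(\mathcal {U}^{k}_t\) into \({\bar{\mathcal {U}}}^{k,\varepsilon }_t\).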

In the next lemma we show that for any \(\varepsilon >0\), the impulse size can be chosen \(\varepsilon \)-optimally.

Lemma 3.8

Let \(g:\Lambda \times \mathcal {D}\rightarrow \mathbb {R}\) be bounded and uniformly continuous. Then, for each \(k\ge 0\), \(\varepsilon >0\), \(u\in \mathcal {U}^k\) and \(\tau \in \mathcal {T}_{\tau _k}\), there is a \(\mathcal {F}_{\tau }\)-measurable random variable \(\beta \) with values in U, such that

for all \((t,\omega ,v)\in \Lambda \times \mathcal {U}\).

Proof

Step 1. We first prove the result for \(u={\textbf {v}}\in D^k\). For arbitrary \(\varepsilon _1>0\) there is, by properties of the supremum, a double sequence \((b_{i,j})_{1\le i\le n^{\varepsilon _1}_t}^{j\ge 1}\) with \(b_{i,j}\in U\) such that

for \(i=1,\ldots ,n^{\varepsilon _1}_t\) and all \(j\ge 1\). Consequently, letting \(\beta ^{\textbf {v}}(\omega '):=\sum _{i=1}^{n^{\varepsilon _1}_t-1}\sum _{j\ge 1}b_{i,j}\mathbbm {1}_{[t^{\varepsilon _1}_i,{\bar{t}}^{\varepsilon _1}_{i+1})}(\tau (\omega '))\mathbbm {1}_{E^{\varepsilon _1}_{i,j}}(\omega ')\) we find that

where \(\rho _g\) is the modulus of continuity of g and the result, for deterministic u, follows from Assumption 2.9.

Step 2. We turn to the general setting with \(u\in \mathcal {U}^k\) and let \({\hat{u}}:=\Xi ^{\varepsilon _1}(u)\) for some \(\varepsilon _1>0\). By definition, it follows that

for all \((s,\omega ',b)\in \Lambda \times U\). Let \(\beta :=\beta ^{{\hat{u}}}\). Then \(\beta \) is \(\mathcal {F}_\tau \)-measurable and we find that

Finally, by choosing a concave modulus of continuity that dominates \(\rho _g\) the result follows from using Assumption 2.9, taking nonlinear expectations on both sides and choosing \(\varepsilon _1\) sufficiently small. \(\square \)

Lemma 3.9

For each \(k\ge 0\), the map \((t,\omega ,{\textbf {v}})\mapsto Y^{{\textbf {v}},k}_t(\omega )\) is bounded and uniformly continuous. Moreover, the family \((Y^{\cdot ,k})_{k\ge 0}\) satisfies the recursion

for each \(k\ge 1\) and \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\).

Proof

We note that \(|Y^{{\textbf {v}},k}_t|\le C_0\) and so boundedness clearly holds. The remaining assertions will follow by induction in k and we note by a simple argument that \(Y^{\cdot ,0}\) is uniformly continuous and bounded. In fact, for \(0\le t\le s\le T\) we have by the tower property that

that together with Lemma 3.5 yields the desired continuity.

We thus assume that for each \(\kappa \ge 0\), the map \(Y^{\cdot ,k-1}:\Lambda \times D^\kappa \rightarrow \mathbb {R}\) is uniformly continuous with modulus of continuity \(\rho _{\kappa ,k-1}\) and prove that then (3.6) holds and that this, in turn, implies uniform continuity of \(Y^{\cdot ,k}\). Let us consider the right-hand side of (3.6) that we denote \({\hat{Y}}^{{\textbf {v}},k}_t(\omega )\). By our induction assumption and Theorem 2.12.i) we have,

where \(\tau _1^*=\inf \big \{s\ge t: {\hat{Y}}^{{\textbf {v}},k}_s(\omega )=\inf _{b\in U}\{Y^{{\textbf {v}}\circ (s,b),k-1}_{s}+c({\textbf {v}}\circ (s,b))\}\big \}\wedge T\).

Step 1. We show that \({\hat{Y}}\ge Y\). For this we fix \(\kappa \ge 0\) and \({\textbf {v}}\in D^\kappa \) and note that for each \(\varepsilon >0\), our induction assumption together with Lemma 3.8 implies the existence of a \(\mathcal {F}_{\tau _1^*}\)-measurable \({\bar{\beta }}^\varepsilon _1\) with values in U such that

where \({\textbf {v}}_{i,l}:={\textbf {v}}\circ (t^\varepsilon _{i},b^\varepsilon _l)\) and \(\rho '\) is a modulus of continuity function. On the other hand, for each (i, j), there is a \( u^\varepsilon _{i,j,l}\in \mathcal {U}_{t^\varepsilon _i}^{k-1}\) such that

Moreover, Assumption 2.8 implies the existence of a \(\mathcal {F}_{t^\varepsilon _i}\times \mathcal {F}\)-measurable map \((\omega ,\omega ')\mapsto u^{i,j,l}_{\omega }(\omega ')\) with \(u^{i,j,l}_{\omega }\in \mathcal {U}^{k-1}_{t^\varepsilon _i}\) for all \(\omega \in \Omega \), such that

for all \(\omega \in E^\varepsilon _{i,j}\). By once again using uniform continuity of \(Y^{\cdot ,k-1}\) we have that

for all \(\omega \in E^\varepsilon _{i,j}\), and we conclude that

whenever \(\omega \in E^\varepsilon _{i,j}\). Letting \({\tilde{u}}:=\sum _{i= 2}^{n^\varepsilon _t}\mathbbm {1}_{[t^\varepsilon _{i-1},t^\varepsilon _i)}(\tau ^\varepsilon _1)\sum _{j\ge 1}\mathbbm {1}_{E^\varepsilon _{i,j}}(\omega )\sum _{l=1}^{n^\varepsilon _U}\mathbbm {1}_{U^\varepsilon _{l}}(\beta _1^\varepsilon )u^{i,j,l}_{\omega }\), we find that

for all \(\omega \in E^\varepsilon _{i,j}\). We can combine the above impulse controls into \(u^\varepsilon :=\Xi ^\varepsilon (\tau _1^*,{\bar{\beta }}^\varepsilon _1)\circ {\tilde{u}}\in \mathcal {U}^k_t\). The tower property now gives that

for some modulus of continuity \(\rho \) and it follows that \({\hat{Y}}^{{\textbf {v}},k}_ t(\omega )\ge Y^{{\textbf {v}},k}_ t(\omega )\) since \(\varepsilon >0\) was arbitrary.

Step 2. We now show that \({\hat{Y}}\le Y\). Pick \({\hat{u}}=({\hat{\tau }}_j,{\hat{\beta }}_j)_{j=1}^k\in \mathcal {U}^k_t\) and note that

Moreover, for each \(\omega \in \Omega \) we have

We conclude by the tower property that

and it follows that \({\hat{Y}}^{{\textbf {v}},k}_ t(\omega )\le Y^{{\textbf {v}},k}_ t(\omega )\) since this time \({\hat{u}}\in \mathcal {U}^k_t\) was arbitrary.

Step 3. It remains to show that \(Y^{\cdot ,k}\) is uniformly continuous. As uniform continuity in \((\omega ,{\textbf {v}})\) follows from Lemma 3.5 we only need to consider the time variable and find a modulus of continuity that is independent of \({\textbf {v}}\). Let \(0\le t\le s\le T\) and note that by the preceding steps and (4.4) of [23]

By arguing as in (3.7) we have

for some \(\rho \) independent of \({\textbf {v}}\). Concerning the second term we have, since

that

for some modulus of continuity \(\rho '\) and we conclude that \((t,\omega )\mapsto Y^{{\textbf {v}},k}_t(\omega )\) is uniformly continuous with a modulus of continuity that does not depend on \({\textbf {v}}\). Uniform continuity of the map \((t,\omega ,{\textbf {v}})\mapsto Y^{{\textbf {v}},k}_t(\omega )\) then follows by Lemma 3.5. This completes the induction-step. \(\square \)

Proof of Theorem 3.2

The sequence \((Y^{{\textbf {v}},k}_{t}(\omega ))_{k\ge 0}\) is bounded, uniformly in \((t,\omega ,{\textbf {v}})\), and since \(\mathcal {U}^k\subset \mathcal {U}^{k+1}\) it follows by Lemma 3.4 that \(Y^{{\textbf {v}},k}_{t}(\omega )\searrow Y^{{\textbf {v}}}_{t}(\omega )\). Moreover, again by Lemma 3.4 the convergence is uniform and Lemma 3.9 gives that \(Y^{{\textbf {v}}}_{t}(\omega )\) is bounded and uniformly continuous. Concerning the dynamic programming principle (3.3), we have by (3.6) and uniform convergence that

On the other hand, monotonicity implies that

for each \(k\ge 0\) and the result follows by letting \(k\rightarrow \infty \). \(\square \)
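The monotone convergence \(Y^{\cdot ,k}\searrow Y\) used in this proof can be observed numerically in a toy model. The sketch below is a strong simplification and not the setting of the paper: it is Markovian, time is discrete, the state is an integer random walk, the ambiguity set is a finite set of up-probabilities chosen by the adversary at each step, and an impulse shifts the state at a constant cost. All names (`make_truncated_value`, `probs`, `impulses`, `phi`) are assumptions made for the illustration.

```python
from functools import lru_cache

def make_truncated_value(n_steps, probs, impulses, cost, phi):
    """Build value(k, t, x) for a toy impulse control game.

    The controller (minimizer) may use at most k impulses from time t on,
    while the adversary (maximizer) picks the up-probability from `probs`
    at every step. `phi` is the bounded terminal reward and `cost` is the
    strictly positive intervention cost.
    """
    if cost <= 0:
        raise ValueError("intervention cost must be strictly positive")

    @lru_cache(maxsize=None)
    def value(k, t, x):
        if t == n_steps:
            return phi(x)
        # Worst-case one-step expectation when no impulse is applied at t.
        cont = max(p * value(k, t + 1, x + 1) + (1 - p) * value(k, t + 1, x - 1)
                   for p in probs)
        if k == 0:
            return cont
        # Best immediate impulse: pay the cost and continue with k - 1 impulses.
        intervene = min(value(k - 1, t, x + b) + cost for b in impulses)
        return min(cont, intervene)

    return value

value = make_truncated_value(
    n_steps=6,
    probs=(0.3, 0.7),
    impulses=(-2, -1, 1, 2),
    cost=0.4,
    phi=lambda x: min(abs(x), 3.0),
)
# Truncated values at time 0 and state 3 for k = 0, ..., 4 allowed impulses.
values = [value(k, 0, 3) for k in range(5)]
```

Because every control with at most \(k\) impulses is also admissible with \(k+1\) impulses, the list `values` is non-increasing in \(k\), mirroring the inclusion \(\mathcal {U}^k\subset \mathcal {U}^{k+1}\).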

4 Value of the Game

We show that the game has a value by proving that \(Z^{{\textbf {v}}}_t(\omega )=Y^{{\textbf {v}}}_t(\omega )\) for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\). As a bonus, this immediately gives that the lower value function Z satisfies the DPP in (3.3).

The approach is once again to arrive at the result through a sequence of lemmata. Similarly to the above, we define the truncated lower value function as

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and have the following:

Lemma 4.1

There is a \(C>0\) such that

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and each \(k\ge 0\).

Proof

We have

Given \(\mathbb {P}^S\in \mathcal {P}^S(t,\omega )\) and \(\varepsilon >0\), let \(u^\varepsilon =(\tau ^\varepsilon _j,\beta ^\varepsilon _j)_{j\ge 1}\in \mathcal {U}_t\) be such that

where \(N^\varepsilon :=\max \{j\ge 0:\tau ^\varepsilon _j<T\}\). Then, since \(\varphi \) is bounded and \(c\ge \delta >0\),

Moreover,

Now, since \(\mathbb {P}^S\) is a non-anticipative map,

Put together, we find that

As \(\varepsilon >0\) was arbitrary and C does not depend on either \(\mathbb {P}^S\) or \(\varepsilon \), the result follows by first letting \(\varepsilon \rightarrow 0\) and then taking the supremum over all \(\mathbb {P}^S\in \mathcal {P}^S(t,\omega )\). \(\square \)

Lemma 4.2

For each \(k,\kappa \ge 0\) and \(t\in [0,T]\), the map \((\omega ,{\textbf {v}})\mapsto Z^{{\textbf {v}},k}_{t}(\omega ):\Omega \times D^\kappa \rightarrow \mathbb {R}\) is uniformly continuous with a modulus of continuity that does not depend on t.

Proof

We once again have

with \(u_\omega \) as in Assumption 2.8. This gives that

by again using the same assumption. \(\square \)

For \(\varepsilon >0\), we introduce the discretization of \(Z^{\cdot ,k}\) as

where we recall that \({\bar{\mathcal {U}}}^{k,\varepsilon }\) is the discretization of the control set from Definition 3.7.

Lemma 4.3

For each \(k,\kappa \ge 0\), there is a modulus of continuity \(\rho _{{\bar{Z}},\kappa ,k}\), such that

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times D^\kappa \).

Proof

For \(u\in \mathcal {U}^k\), we have \(\Xi ^\varepsilon (u)\in {\bar{\mathcal {U}}}^{k,\varepsilon }\) and thus get that

for some modulus of continuity \(\rho \). \(\square \)

Lemma 4.4

For each \(\varepsilon > 0\), the family \(({\bar{Z}}^{\cdot ,k,\varepsilon })_{k\ge 0}\) satisfies the recursion

for all \(k\ge 1\). Moreover,

for all \(k\ge 0\), \(i=1,\ldots ,n^\varepsilon _t\) and \((\omega ,{\textbf {v}})\in \Omega \times \mathcal {D}\).

Proof

The result will follow by a double induction and we note that the terminal condition holds trivially since (2.3) implies that

for all \((\omega ,{\textbf {v}})\in \Omega \times \mathcal {D}\) and \(k\ge 0\). We let \((R^{\cdot ,k})_{k\ge 0}\) be such that \(R^{\cdot ,0}\equiv {\bar{Z}}^{\cdot ,0,\varepsilon }\) and \((R^{\cdot ,k})_{k\ge 1}\) is the unique solution to the recursion (4.4) and assume that for some \(k'\ge 1\) and \(i'\in \{1,\ldots ,n_t^\varepsilon -1\}\) the statement holds for all \(i\in \{1,\ldots ,n_t^\varepsilon -1\}\) when \(k=1,\ldots ,k'-1\) and for \(i=i'+1,\ldots ,n^\varepsilon _t\) when \(k=k'\).

Step 1. We first show that \({\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}\) satisfies the recursion step in (4.4). By definition we have,

By the tower property we have

establishing that \({\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}\ge R^{{\textbf {v}},k}_{t^\varepsilon _{i'}}\). To arrive at the opposite inequality we introduce the impulse control \(u^*:=(\tau _j^*,\beta ^*_j)_{j=1}^{N^*}\), where

for \(j=1,\ldots ,k\), with \(\tau ^*_0:=t_{i'}\), \(N^*:=\max \{j\ge 0: \tau _j^*<T\}\wedge k\) and \(\beta ^*_j\) is a measurable selection of

Then, \(u^*\in {\bar{\mathcal {U}}}^{k,\varepsilon }_{t_{i'}}\) and it easily follows by repeated use of (4.4) and the tower property that

and we conclude that \({\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}\) satisfies the recursion step in (4.4).

Step 2. For the sake of completeness, we also prove rigorously that \({\bar{Z}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _i}\le {\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _i}\). We have

By the tower property for \(\mathbb {E}^\mathbb {P}\) and the induction hypothesis we have

and we conclude that

In particular, our induction assumption then implies that \({\bar{Z}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}(\omega )\le {\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}(\omega )\).

Step 3. Finally, we show that \({\bar{Z}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}\ge {\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}\). We do this by showing that for any \(\varepsilon '>0\), there is a strategy \(\mathbb {P}^{S,\varepsilon '}\in \mathcal {P}^S({t^\varepsilon _{i'}},\omega )\) such that

for each \(u\in {\bar{\mathcal {U}}}^{k,\varepsilon }_{t^\varepsilon _{i'}}\) and \(\omega \in \Omega \). First note that for any \(u\in {\bar{\mathcal {U}}}^{k,\varepsilon }_{t^\varepsilon _{i'}}\) and \(i\in \{i'+1,\ldots ,n^\varepsilon _t\}\), the map

is Borel-measurable and thus upper semi-analytic (recall that \(N(t):=\max \{j\ge 0:\tau _j\le t\}\) and that for any \(u:=(\tau _j,\beta _j)_{j=1}^N\in \mathcal {U}\) and \(t\in [0,T]\) we have \(u_t:=(\tau _j,\beta _j)_{j=1}^{N(t)}\)). Given \(u\in {\bar{\mathcal {U}}}^{k,\varepsilon }_{t^\varepsilon _{i'}}\) we can then, by repeatedly arguing as in the proof of Lemma 4.11 in [23], find a sequence of measures \((\mathbb {P}^u_{i})_{i=i'}^{n^\varepsilon _t-1}\) with \(\mathbb {P}^u_{i}\in \mathcal {P}(t^\varepsilon _{i'},\omega ,{\textbf {v}}\circ u_{t^\varepsilon _{i'}})\) such that \(\mathbb {P}^u_{i}=\mathbb {P}^u_{i-1}\) on \(\mathcal {F}_{t^\varepsilon _{i-1}-t^\varepsilon _{i'}}\) for \(i=i'+1,\ldots ,n^\varepsilon _{t}-1\) and

This implies the existence of a strategy \(\mathbb {P}^{S,\varepsilon '}\in \mathcal {P}^S({t^\varepsilon _{i'}},\omega )\) by setting \(\mathbb {P}^{S,\varepsilon '}({\textbf {v}}\circ u):=\mathbb {P}^u_{n^{\varepsilon }_t}\) such that for any \(u\in {\bar{\mathcal {U}}}^k_{t^\varepsilon _{i'}}\), we have

By repeating this process \(n^\varepsilon _t-i'\) times followed by using (4.6) and the tower property for \(\mathbb {E}^{\mathbb {P}^{S,\varepsilon '}({\textbf {v}}\circ u)}\) we find that

and since \(u\in {\bar{\mathcal {U}}}^k_{t^\varepsilon _{i'}}\) was arbitrary, we conclude that \({\bar{Y}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}(\omega )\le {\bar{Z}}^{{\textbf {v}},k,\varepsilon }_{t^\varepsilon _{i'}}(\omega )+\varepsilon '\) from which the assertion follows as \(\varepsilon '>0\) was arbitrary. This concludes the induction step. \(\square \)

Corollary 4.5

We can extend the definition of \({\bar{Y}}\) to \(\Lambda \times \mathcal {D}\) by letting

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and have that \({\bar{Y}}^{\cdot ,k,\varepsilon }\equiv {\bar{Z}}^{\cdot ,k,\varepsilon }\) for all \(k\ge 0\).

Proof

Repeating the above proof we find that \({\bar{Y}}^{\cdot ,k,\varepsilon }\) and \({\bar{Z}}^{\cdot ,k,\varepsilon }\) both satisfy the recursion

for all \(t\in (t^\varepsilon _i,t^\varepsilon _{i+1})\). \(\square \)

Theorem 4.6

The game has a value, i.e. \(Y\equiv Z\).

Proof

Applying an argument identical to the one in the proof of Lemma 4.3 gives that \(\Vert {\bar{Y}}^{{\textbf {v}},k,\varepsilon }-Y^{{\textbf {v}},k}\Vert _T\rightarrow 0\) as \(\varepsilon \rightarrow 0\). By uniqueness of limits we find that \(Y^{\cdot ,k}=Z^{\cdot ,k}\) and taking the limit as k tends to infinity, the result follows by Lemma 3.4 and Lemma 4.1. \(\square \)

Corollary 4.7

The map Z is bounded, uniformly continuous and satisfies the recursion

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\).

Proof

Since \(Z\equiv Y\) the statement in Theorem 3.2 applies to Z as well. \(\square \)

5 A Verification Theorem

In the previous two sections we have shown that the dynamic programming principle is a necessary condition for a map to be the value function of (1.1): if \(\mathcal {Y}\) is an upper or lower value function then it satisfies (3.3). In this section we turn to sufficiency and its implications, starting with the following uniqueness result:

Proposition 5.1

Suppose that there is a progressively measurable map \(\mathcal {Y}:\Lambda \times \mathcal {D}\rightarrow \mathbb {R}\) that is uniformly continuous and bounded, such that

for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\). Then \(\mathcal {Y}\equiv Y\).

Proof

For any \({\hat{u}}=({\hat{\tau }}_j,{\hat{\beta }}_j)_{j=1}^k\in \mathcal {U}^k_t\) we have

But similarly,

Repeating this argument k times and using the tower property and Remark 2.7 we find, since \({\hat{u}}\in \mathcal {U}^k_t\) was arbitrary, that

where the second inequality follows by restricting the minimization to all \(u\in \mathcal {U}^k\) for which \(\tau _k=T\). Letting \(k\rightarrow \infty \), it follows by Lemma 3.4 that \(\mathcal {Y}^{\textbf {v}}_t(\omega )\le Y^{\textbf {v}}_t(\omega )\) for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\).

On the other hand, from Theorem 2.12 and Lemma 3.8 there is for each \(\varepsilon >0\) a pair \((\tau ^\varepsilon _1,\beta ^\varepsilon _1)\in \mathcal {U}^1_t\) such that

Moreover, Step 1 in the proof of Lemma 3.9 implies the existence of a \((\tau ^\varepsilon _2,\beta ^\varepsilon _2)\in \mathcal {U}^1_{\tau ^\varepsilon _1}\) such that

Repeating this argument indefinitely gives us an infinite sequence \(u^\varepsilon =(\tau _j^\varepsilon ,\beta _j^\varepsilon )_{j= 1}^\infty \in \mathcal {U}_t\) such that

for each \(k\ge 1\) and we find by applying the tower property that

Taking the limit as \(k\rightarrow \infty \) on the right hand side thus gives

Now, since \(\mathcal {Y}\) and \(\varphi \) are uniformly bounded and \(c\ge \delta >0\), arguing as in the proof of Lemma 3.4 gives that \(\mathcal {E}^{{\textbf {v}}\circ u^\varepsilon }\big [\mathbbm {1}_{[\tau ^\varepsilon _k<T]}\big ]\le C/k\). In particular, \(\mathbb {P}\big [\{\tau ^\varepsilon _k<T,\,\forall k\ge 0\}\big ]= 0\) for any \(\mathbb {P}\in \mathcal {P}(t,\omega ,{\textbf {v}}\circ u^\varepsilon )\) and we can apply Fatou’s lemma in the above inequality to conclude that

Since \(\varepsilon >0\) was arbitrary, it follows that \(\mathcal {Y}\equiv Y\). \(\square \)

Having proved that the value function is the unique solution to the dynamic programming equation (3.3) we show that, under additional measurability and compactness assumptions, Y can be used to extract an optimal control/strategy pair.

Theorem 5.2

Assume that \(u^*=(\tau _j^*,\beta _j^*)_{j=1}^{\infty }\) is such that:

- the sequence \((\tau ^*_j)_{j=1}^{\infty }\) is given by

$$\begin{aligned} \tau ^*_j:=\inf \Big \{&s \ge \tau ^*_{j-1}:\,Y_s^{[u^*]_{j-1}}=\inf _{b\in U} \big \{Y^{[u^*]_{j-1}\circ (s,b)}_s+c([u^*]_{j-1}\circ (s,b))\big \}\Big \}\wedge T, \end{aligned}$$ (5.2)

using the convention that \(\inf \emptyset =\infty \), with \(\tau ^*_0=0\);

- the sequence \((\beta _j^*)_{j=1}^{\infty }\) is such that \(\beta ^*_j\) is \(\mathcal {F}_{\tau _j^*}\)-measurable and satisfies

$$\begin{aligned} \beta ^*_j\in \mathop {\arg \min }_{b\in U}\big \{Y^{[u^*]_{j-1}\circ (\tau ^*_j,b)}_{\tau ^*_j}+c([u^*]_{j-1}\circ (\tau ^*_j,b))\big \}. \end{aligned}$$ (5.3)

Then, \(u^*\in \mathcal {U}\) is an optimal impulse control for (1.1) in the sense that

Moreover, if \(\mathcal {P}(t,\omega ,{\textbf {v}})\) is weakly compact for each \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and \(\mathcal {P}^{\mathbb {P}}_{\tau }(u):=\{\mathbb {P}'\in \mathcal {P}(u):\mathbb {P}'=\mathbb {P}\text { on }\mathcal {F}_{\tau }\}\) is weakly compact for all \(u\in \mathcal {U}\), \(\mathbb {P}\in \mathcal {P}(u)\) and \(\tau \in \mathcal {T}\), then there is an optimal response \(\mathbb {P}^{*,S}\in \mathcal {P}^S\) for which

Proof of (5.4)

Repeated use of the dynamic programming principle for Y gives that

Taking the limit as \(k\rightarrow \infty \) and repeating the argument in the second part of the proof of Proposition 5.1, (5.4) follows. \(\square \)
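The construction of \(u^*\) in (5.2) and (5.3) is a recipe: wait until the value meets the barrier, then apply a minimizing impulse, and repeat. The following Python sketch applies this recipe along one fixed path on a discrete time grid. It assumes that the value is available as a callable `Y(v, s)` of the impulse history `v` and the time index `s`, and that the impulse set is finite; the function `extract_impulses`, the cap `max_interventions` and the tolerance `tol` are hypothetical devices for the illustration.

```python
def extract_impulses(Y, cost, impulses, n_steps, max_interventions=100, tol=1e-12):
    """Follow the stopping rule (5.2) and the selection (5.3) along one path.

    Y(v, s)  : value at time index s given the impulse history v, a tuple of
               (time index, impulse) pairs (assumed to be given).
    cost(v)  : intervention cost of the last impulse in the history v.
    impulses : finite collection of admissible impulse sizes.
    Returns the history of impulses applied strictly before n_steps.
    """
    v = ()
    for s in range(n_steps):
        while len(v) < max_interventions:
            # Barrier in (5.2): value right after one more impulse, plus its cost.
            candidates = [(Y(v + ((s, b),), s) + cost(v + ((s, b),)), b)
                          for b in impulses]
            barrier, best_b = min(candidates)
            if Y(v, s) < barrier - tol:
                break  # the value is strictly below the barrier: wait
            # Selection (5.3): apply an impulse attaining the minimum.
            v = v + ((s, best_b),)
    return v

def toy_state(v, x0=3):
    """Deterministic toy state: the initial point shifted by all impulses."""
    return x0 + sum(b for _, b in v)

# Hand-made stand-in for the value: with unit impulses of cost 1/2 and
# terminal reward |x|, steering the state to 0 costs |x| / 2.
toy_Y = lambda v, s: 0.5 * abs(toy_state(v))
toy_cost = lambda v: 0.5
u_star = extract_impulses(toy_Y, toy_cost, impulses=(-1, 1), n_steps=4)
```

In this toy example the rule performs three unit impulses at the initial time, which moves the state from 3 to 0, and never intervenes afterwards.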

To prove the second statement we need two lemmas, the first of which (loosely speaking) shows that for any measure/control pair \((\mathbb {P},u)\) with \(u\in \mathcal {U}^k\) and \(\mathbb {P}\in \mathcal {P}(u)\), we can extend \(\mathbb {P}\) optimally from \(\tau _k\) until the time that \((Y^u_t)_{\tau _k\le t\le T}\) hits the corresponding barrier. This extension then acts as a minimizer up until the first hitting time in (3.3). The main obstacle we face is that \(Y^u\) is not necessarily continuous in \(\omega \), disqualifying direct use of Lemma 4.5 in [11] as in, for example, Lemma 4.13 of [23].

Lemma 5.3

For \(k\ge 1\) and \(u\in \mathcal {U}^k\) we let

and assume that for some \(\mathbb {P}\in \mathcal {P}(u)\) the set \(\mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\) is weakly compact. Then, there is a \(\mathbb {P}^\diamond \in \mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\) such that

Proof

To simplify notation we let

and consider the following sequence of stopping times \(\eta _l:=\inf \{s\ge \eta _{l-1}: S^{u}_s-Y^{u}_s\le 1/l\}\wedge T\) for \(l\ge 1\) with \(\eta _0:=\tau _{k}\). Then, by Proposition 7.50.b) of [7] and a standard approximation result there is for each \(l\ge 0\) a \(\mathcal {F}_{\tau _k}\)-measurable kernel \(\nu ^l:\Omega \rightarrow \mathfrak {P}(\Omega )\) such that \(\nu ^l(\cdot )\in \mathcal {P}(\tau _k,\cdot ,u)\) and

for \(\mathbb {P}\)-a.e. \(\omega \in \Omega \). We then define the measure \(\mathbb {P}_l\in \mathcal {P}(u)\) as

for each \(A\in \mathcal {F}\). Since \(\mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\) is weakly compact we may assume, by possibly passing to a subsequence, that \(\mathbb {P}_l\rightarrow \mathbb {P}^\diamond \) weakly for some \(\mathbb {P}^\diamond \in \mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\). We need to show that \(\mathbb {P}^\diamond \) satisfies (5.6). We do this in two steps:

Step 1. We first find approximations of u and \(\eta _l\) that allow us to take the limit as \(l\rightarrow \infty \) on the sequence \(\mathbb {P}_l\) and use weak convergence. Let \((c_m)_{m\ge 1}\) be a sequence of positive numbers. We note that we can (by approximating stopping times from the right and U by a finite set) find a discrete approximation

where \(M_m\le C/c_m^{k(d+1)}\) (recall that \(d\ge 1\) is the dimension of U) and \({\textbf {v}}_i\in D^k\), such that \(A^m_i\in \mathcal {F}_{\tau _k}\) and \(|{\hat{u}}-u|\le c_m\) for all \(\omega \in \Omega \).

Then, with \(\eta ^i_l:=\inf \{t\ge \eta ^i_{l-1}: S^{{\textbf {v}}_i}_t-Y^{{\textbf {v}}_i}_t\le 1/l\}\wedge T\), we can, since \(S^{{\textbf {v}}_i}\) and \(Y^{{\textbf {v}}_i}\) are both uniformly continuous, repeat the argument in Step 1 in the proof of Theorem 3.3 in [11] to find that there are continuous [0, T]-valued random variables \((\theta ^i_l)_{l\ge 1}\) and \(\mathcal {F}_T\)-measurable sets \(\Omega ^i_l\subset \Omega \) such that

for \(i=1,\ldots ,M_l\). For \(l\ge 1\), we define \({\hat{\eta }}_l:=\inf \{t\ge {\hat{\eta }}_{l-1}:S^{{\hat{u}}}_t-Y^{{\hat{u}}}_t\le 1/l\}\wedge T\) with \({\hat{\eta }}_0:=\tau _k\) and note that \({\hat{\eta }}_l=\sum _{i=1}^{M_l} \mathbbm {1}_{A^l_i}(\omega ) \eta ^i_l\). Then \(\Omega ^{-}_l:=\{\omega \in \Omega :{\hat{\eta }}_l\le \eta _{l-1}\}\) satisfies

Hence, as \(S^{{\hat{u}}}_{{\hat{\eta }}_l}-Y^{{\hat{u}}}_{{\hat{\eta }}_l}=1/l\) on \([{\hat{\eta }}_l<T]\) by continuity, we have that

However, there is a modulus of continuity function \(\rho '\) such that

and thus also

so that

Similarly, for \(\Omega ^{+}_l:=\{\omega \in \Omega :{\hat{\eta }}_l\ge \eta _{l+1}\}\) we have

We can thus choose \(c_l\) such that \(8l^2\rho '(c_l)\le 2^{-l}\) for all \(l\ge 1\) and letting \({\hat{\theta }}_l:=\sum _{i=1}^{M_l} \mathbbm {1}_{A^l_i}(\omega ) \theta ^i_l\) and \({\hat{\Omega }}_l:=\big (\cap _{i=1}^{M_l}\Omega ^i_l\big )\cap (\Omega ^{-}_l)^c\cap (\Omega ^{+}_l)^c\) we find that

Hence, since \(\eta _{l}(\omega )\rightarrow \tau ^\diamond (\omega )\) for all \(\omega \in \Omega \), we get that \(({\hat{\theta }}_l)_{l\ge 1}\) is a sequence of random variables such that \({\hat{\theta }}_l\) is continuous on \(A^l_i\) for \(i=1,\ldots ,M_l\) and by the Borel-Cantelli lemma, \({\hat{\theta }}_l\rightarrow \tau ^\diamond \), \(\mathbb {P}'\)-a.s. for all \(\mathbb {P}'\in \mathcal {P}(u)\).

Next, given \((c'_l)_{l\ge 1}\) there are \(\mathbb {P}\)-continuity sets \(((D^l_i)_{i=1}^{M_l})_{l\ge 1}\) (i.e. \(\mathbb {P}[\partial D^l_i]=0\)) such that \(D^l_i\in \mathcal {F}_{\tau _{k}}\) and \(\mathbb {P}[A^l_i\Delta D^l_i]\le c'_l\). Then, for each \(\mathbb {P}'\in \mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\), the sets in the double sequence \(((D^l_i)_{i=1}^{M_l})_{l\ge 1}\) are also \(\mathbb {P}'\)-continuity sets and \(\mathbb {P}'[A^l_i\Delta D^l_i]=\mathbb {P}[A^l_i\Delta D^l_i]\le c'_l\). Moreover, \(D^l_0:=(D^l_{1}\cup \cdots \cup D^l_{M_l})^c\in \mathcal {F}_{\tau _k}\) is also a \(\mathbb {P}'\)-continuity set for each \(\mathbb {P}'\in \mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\) and we can define

With \(c'_l:=c_l^{k(d+1)}2^{-l}\) we thus find that \(\theta '_l\rightarrow \tau ^\diamond \), \(\mathbb {P}'\)-a.s. for each \(\mathbb {P}'\in \mathcal {P}^{\mathbb {P}}_{\tau _k}(u)\). Moreover, \(\theta '_l\) (and therefore also \(Y^{u'_l}_{\theta '_l}\)) is continuous on \(D^l_i\) for \(i=0,\ldots ,M_l\).

Step 2. Using the approximation constructed in Step 1, we now show that (5.6) holds. Since for any \(t\in [\tau _k,T]\), we have

where \(\Omega ^\Delta _l:=\cup _{i=1}^{M_l}A^l_i\Delta D^l_i\) and \(\tau '_{l,k}\) is the time of the \(k^\text {th}\) intervention in \(u'_l\), with \(\tau '_k:=T\) on \(D^l_0\), we find that there is a \(C>0\) such that for all \(l\ge 2\), we have

where we have used the fact that, as \(\Omega ^\Delta _l\in \mathcal {F}_{\tau _k}\), we have

On the other hand, by Theorem 2.12.i), \(Y^{u}_{\cdot \wedge \tau ^\diamond }\) is a \(\mathbb {P}'\)-supermartingale for each \(\mathbb {P}'\in \mathcal {P}(u)\) and we have that \(\mathbb {E}^{\mathbb {P}_m}\big [Y^{u}_{\eta _l}\big ]\ge \mathbb {E}^{\mathbb {P}_m}\big [Y^{u}_{\eta _m}\big ]\) whenever \(l\le m\) implying that

where, to reach the last inequality, we have used (5.7) and the definition of \(\eta _m\) to conclude that

and the right hand side of the last inequality tends to \(\mathbb {E}^{\mathbb {P}}\big [Y^u_{\tau _k}\big ]\) as \(m\rightarrow \infty \). Hence,

where we again use (5.8) and (5.9). Now, since \(\eta _l\) is not continuous we cannot immediately proceed to take the limit. However, as

where \(\Omega '_l:={\hat{\Omega }}_l\setminus \Omega ^\Delta _l\), setting

we find that \(\psi _l\) is continuous on \(D^l_i\) for \(i=0,\ldots ,M_l\) and thus

by dominated convergence under \(\mathbb {P}^\diamond \). As

we thus find that

from which the assertion follows since \(Y^{u}_{\cdot \wedge \tau ^\diamond }\) is a \(\mathbb {P}^\diamond \)-supermartingale. \(\square \)

Since stopping before \(\tau ^\diamond \) in the above lemma is never optimal, this means that the \(\mathbb {P}^\diamond \) in Lemma 5.3 is optimal until \(\tau ^\diamond \). It remains to decide a continuation after \(\tau ^\diamond \) such that, at time \(\tau ^\diamond \), stopping is optimal.

Lemma 5.4

Let \(\mathcal {P}(t,\omega ,{\textbf {v}})\) be weakly compact for all \((t,\omega ,{\textbf {v}})\in \Lambda \times \mathcal {D}\) and let \(u\in \mathcal {U}^k\). There is a \(\mathcal {F}^*_{\tau _k}\)-measurable kernel \(\nu :\Omega \rightarrow \mathfrak {P}(\Omega )\) such that \(\nu (\omega )\in \mathcal {P}(\tau _k,\omega ,u)\) and

for all \(\omega \in \Omega \).

Proof

Although the statement in the lemma differs from that of Lemma 4.16 in [23], the proof is almost identical and we give the main steps for the sake of completeness. We simplify notation by letting

for \({\textbf {v}}\in D^k\). Then by Lemma 4.15 of [23], the map \(\mathbb {P}\mapsto V({\textbf {v}},\omega ,\mathbb {P})\) is upper semi-continuous for every \((\omega ,{\textbf {v}})\in \Omega \times \mathcal {D}\). To use a measurable selection theorem we need to ascertain that the map \((\omega ,\mathbb {P})\mapsto V(u(\omega ),\omega ,\mathbb {P})\) is Borel. Since \(u\in \mathcal {U}^k\), we have that \(\omega \mapsto u(\omega )\) is Borel and this will be obtained by showing that \(({\textbf {v}},\omega )\mapsto V({\textbf {v}},\omega ,\mathbb {P})\) is Borel for any \(\mathbb {P}\in \mathfrak {P}(\Omega )\). However, for any \(\tau \in \mathcal {T}\) we have that

is Borel, by Fubini’s theorem. Hence, taking the infimum over a countable set of stopping times that is dense outside of a \(\mathbb {P}\)-null set the assertion follows. \(\square \)

Proof of (5.5)

Step 1. We first construct a candidate for an optimal strategy. Note that by Theorem 2.12, there is a \(\mathbb {P}^*_0\in \mathcal {P}(\emptyset )\) such that

We fix \(u\in \mathcal {U}\) and define \((\mathbb {P}^u_k)_{k\ge 1}\) iteratively where we start by setting \(\mathbb {P}^u_0:=\mathbb {P}^*_0\). For \(k=1,\ldots \), we construct \(\mathbb {P}^{u}_{k}\) from \(\mathbb {P}^u_{k-1}\) by first letting \(\mathbb {P}^{u,\diamond }_k \in \mathcal {P}^{\mathbb {P}^u_{k-1}}_{\tau _{k-1}}([u]_{k-1})\) be as in the statement of Lemma 5.3 with \(\mathbb {P}=\mathbb {P}^u_{k-1}\) and \(u\leftarrow [u]_k\), then there is by Lemma 5.4 a \(\mathcal {F}^*_{\tau ^\diamond }\)-measurable kernel \(\nu ^{u,k}:\Omega \rightarrow \mathfrak {P}(\Omega )\) such that \(\nu ^{u,k}(\omega )\in \mathcal {P}(\tau _k,\omega ,[u]_k)\) and

for all \(\omega \in \Omega \) and \(k\ge 1\). We can thus define the measure

for all \(A\in \mathcal {F}\), where \({\hat{\nu }}^{u,k}\) is an \(\mathcal {F}_{\tau _k}\)-measurable kernel such that (5.12) holds \(\mathbb {P}_k^{u,\diamond }\)-a.s. Combining Lemma 5.3 and Lemma 5.4 we realize that our above construction extends (5.11) yielding that for each \(k\ge 0\), we have

where \(\tau ^\diamond :=\inf \big \{s \ge \tau _{k}:\,Y_s^{[u]_k}=\inf _{b\in U} \{Y^{[u]_k\circ (s,b)}_s+c([u]_k\circ (s,b))\}\big \}\wedge T\) and \(\mathbb {P}^u_{-1}=\mathbb {P}_0\). Our candidate strategy is then \(\mathbb {P}^{*,S}(u):=\mathbb {P}^u\), where \(\mathbb {P}^u\) is such that (a subsequence of) \((\mathbb {P}^u_k)_{k\ge 0}\) converges weakly to \(\mathbb {P}^u\). Since \(\mathcal {P}^{\mathbb {P}^u_k}_{\tau _k}([u]_k)\) is weakly compact, we note that \(\mathbb {P}^u=\mathbb {P}^u_k\) on \(\mathcal {F}_{\tau _k}\) for each \(k\ge 0\) and as \(\mathbb {P}^u_k\in \mathcal {P}([u]_k)\) it follows by Assumption 2.4 that \(\mathbb {P}^u\in \mathcal {P}(u)\). Furthermore, it is clear by the above construction that the strategy \(\mathbb {P}^{*,S}\) is non-anticipative, and we conclude that \(\mathbb {P}^{*,S}\in \mathcal {P}^S\).

Step 2. Next, we show that if a subsequence of \((\mathbb {P}^{u^*}_k)_{k\ge 0}\) converges weakly to some \(\mathbb {P}^*\), then \(\mathbb {P}^*\) is an optimal response to the optimal impulse control \(u^*\). We thus assume that u in the previous step equals \(u^*\). By (5.11) and the fact that \(Y^{(T,\beta ^*_1)}_{T}=\varphi (\emptyset )\) (see Remark 2.7) we get that

However, (5.13) gives that

Repeating this procedure \(k\ge 1\) times we find that

In fact, as \(\mathbb {P}^*_k=\mathbb {P}^*_l\) on \(\mathcal {F}_{\tau _{k+1}\wedge \tau _{l+1}}\) this holds when replacing the measure \(\mathbb {P}^*_k\) with \(\mathbb {P}^*_l\) whenever \(l\ge k\) and we have that

By weak compactness of \(\mathcal {P}^{\mathbb {P}^*_l}_{\tau ^*_l}(u^*)\) for each \(l\ge 0\), by possibly passing to a subsequence, \(\mathbb {P}^*_l\) converges weakly to some \(\mathbb {P}^*\in \mathcal {P}(u^*)\) such that \(\mathbb {P}^*=\mathbb {P}^*_l\) on \(\mathcal {F}_{\tau ^*_l}\) for each \(l\ge 0\) and we find that

by dominated convergence under \(\mathbb {P}^*\).

Step 3. It remains to show that \(u^*\) is an optimal impulse control under the strategy \(\mathbb {P}^{*,S}\). For arbitrary \(u\in \mathcal {U}\) we have

by again using that \(Y^{(T,\beta _1)}_{T}=\varphi (\emptyset )\). Now, (5.13) gives that

Repeating k times we find that

Arguing as in Step 2 gives that there is a subsequence under which \(\mathbb {P}^u_k\) converges weakly to some \(\mathbb {P}^u\in \mathcal {P}(u)\) such that \(\mathbb {P}^u=\mathbb {P}^u_l\) on \(\mathcal {F}_{\tau _l}\) for each \(l\ge 0\) and then

Now either u makes infinitely many interventions on some set of positive probability under \(\mathbb {P}^u\), in which case the right-hand side equals \(+\infty \), or we can use dominated and monotone convergence under \(\mathbb {P}^u\) to find that

Combined, this proves (5.5). \(\square \)

Remark 5.5

Theorem 5.2 presumes the existence of an \(\mathcal {F}_{\tau _j}\)-measurable minimizer \(\beta ^*\). We note that such a minimizer always exists if U is a finite set. The canonical example under which the compactness assumption holds is when the uncertainty/ambiguity stems from the set of all Itô processes \(\int _0^t b_sds+\int _0^t\sigma _sdB_s\) with \((b,\sigma \sigma ^\top )\in A\) for some compact, convex set \(A\subset \mathbb {R}^d\times \mathbb {S}_+\) (here \(\mathbb {S}_+\) denotes the set of positive semi-definite symmetric \(d\times d\)-matrices).

6 Application to Path-Dependent Zero-Sum Stochastic Differential Games

In the present section we extend the literature on stochastic differential games by considering the application of the above developments to zero-sum games of impulse versus classical control in a path-dependent setting. For simplicity we only consider the driftless setting as this is sufficient to capture the main features when applying the above results to controlled path-dependent SDEs.

Throughout this section, we only consider impulse controls for which \(\tau _j(\omega )\rightarrow T\) as \(j\rightarrow \infty \) for all \(\omega \in \Omega \). We will make frequent use of the following decomposition of càdlàg paths:

Definition 6.1

For \(x\in \mathbb {D}\) (the set of \(\mathbb {R}^d\)-valued càdlàg paths) we let \(\mathcal {J}(x)=(\eta _j,\Delta x_j)_{j=1}^{n(T)}\) where \(\eta _j\) is the time of the \(j^\text {th}\) jump of x, \(\Delta x_j:=x_{\eta _j}-x_{\eta _j-}\) the corresponding jump (with \(x_{0-}:=0\)) and for each \(t\in [0,T]\), n(t) is the number of jumps of x in [0, t]. Moreover, we let \(\mathcal {C}(x)\) be the path without jumps, i.e. \((\mathcal {C}(x))_s=x_s-\sum _{i=1}^{n(s)}\Delta x_i\).
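As an illustration of Definition 6.1, the following minimal sketch computes \(\mathcal {J}(x)\) and \(\mathcal {C}(x)\) for a scalar path sampled on a finite grid. The grid representation (arrays `ts`, `x_left` and `x` holding the times, the left limits \(x_{t-}\) and the values \(x_t\)) and the function names are assumptions made for this example only; they are not part of the paper's framework.

```python
def jumps(ts, x_left, x, tol=1e-12):
    """J(x): list of (eta_j, Delta x_j) with Delta x_j = x_{eta_j} - x_{eta_j-}.

    x_left[0] plays the role of x_{0-} := 0 from Definition 6.1.
    """
    return [(t, xr - xl) for t, xl, xr in zip(ts, x_left, x) if abs(xr - xl) > tol]


def continuous_part(ts, x, jump_list):
    """(C(x))_s = x_s minus the sum of all jumps that occurred in [0, s]."""
    out = []
    for t, xs in zip(ts, x):
        removed = sum(dx for eta, dx in jump_list if eta <= t)
        out.append(xs - removed)
    return out


# Hypothetical sampled path: linear drift 0.5 * t plus jumps at t = 0.25 and t = 0.75.
ts = [0.0, 0.25, 0.5, 0.75, 1.0]
x_left = [0.0, 0.125, 1.25, 1.375, 1.0]   # left limits x_{t-}
x = [0.0, 1.125, 1.25, 0.875, 1.0]        # right-continuous values x_t

J = jumps(ts, x_left, x)
C = continuous_part(ts, x, J)
```

Here `J` lists the two jumps (of sizes 1.0 and -0.5) and `C` recovers the linear part of the path, in line with the role of \(\mathcal {C}\) as the map that strips the jumps from x.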

6.1 Problem Formulation

We let \(\mathcal {A}\) be the set of all progressively measurable càdlàg processes \(\alpha :=(\alpha _s)_{0\le s\le T}\), taking values in a bounded Borel-measurable subset A of \(\mathbb {R}^d\) and let \(\mathcal {A}^S:\mathcal {U}\rightarrow \mathcal {A}\) be the corresponding set of non-anticipative strategies. We then consider the problem of showing that

where J is the cost functional (recall that \(\mathbb {E}\) is expectation with respect to \(\mathbb {P}_0\), the probability measure under which B is a Brownian motion)

We assume that \(X^{u,\alpha }:=\limsup _{j\rightarrow \infty }X^{[u]_{j},\alpha }\), where \(\limsup \) is taken componentwise, and \(X^{[u]_{j}}\) is defined recursively as the \(\mathbb {P}_0\)-a.s. unique solution to

where \(\sigma :[0,T]\times \mathbb {D}\times A\rightarrow \mathbb {S}_{++}\) (here \(\mathbb {S}_{++}\) denotes the set of positive definite symmetric \(d\times d\)-matrices) and \(\Gamma :[0,T]\times \mathbb {D}\times U\rightarrow \mathbb {R}^d\) satisfy the conditions in Assumption 6.3 below. Examining (6.2) and (6.3), we note that the control u is implemented as a feedback control. In this framework, the intervention times are generally referred to as stopping rules rather than stopping times, following [19], and the corresponding u is referred to as a strategy (as in for example [31]) rather than a control as it depends on the history of \(\alpha \) through the state process \(X^{u,\alpha }\).

We will make frequent use of the following operator that acts as an inverse to \(\mathcal {C}\):

Definition 6.2

For each \(u\in \mathcal {U}\), we introduce the impulse operator \(\mathcal {I}^u:\Omega \rightarrow \mathbb {D}\) defined as \(\mathcal {I}^u(\omega ):=\lim \sup _{k\rightarrow \infty }\mathcal {I}^{[u]_k}(\omega )\), where

for each \(u\in \mathcal {U}\).

We then let \({\bar{X}}^{u,\alpha }\) be the \(\mathbb {P}_0\)-a.s. unique solution to the path-dependent SDE

for all \((u,\alpha )\in \mathcal {U}\times \mathcal {A}\). The problem posed in (6.1) can now be related to that of (1.3) by noting that

where \(\mathbb {P}^{u,\alpha }:=\mathbb {P}_0\circ \big ({\bar{X}}^{u,\alpha }\big )^{-1}\). In the remainder of this section we first give suitable assumptions on the coefficients of the problem and then show that, under these assumptions, the problem stated here falls into the framework handled in the first part of the paper.

6.2 Assumptions

Throughout, we make the following assumptions on the coefficients in the above problem formulation:

Assumption 6.3

For any \(t,t'\in [0,T]\), \(b,b'\in U\), \(\xi \in \mathbb {R}^d\), \(x,x'\in \mathbb {D}\) and \(a\in A\) we have:

i) The function \(\Gamma :[0,T]\times \mathbb {D}\times U\rightarrow \mathbb {R}^d\) is such that \((t,\omega )\mapsto \Gamma (t,X,b)\) is progressively measurable whenever X is progressively measurable. Moreover, there is a \(C>0\) such that

$$\begin{aligned} |\Gamma (t,x,b)-\Gamma (t',x',b')|&\le C({{\textbf {d}}}_{n(t)}[(t,\mathcal {C}(x),\mathcal {J}(x_{\cdot \wedge t})),(t',\mathcal {C}(x'),\mathcal {J}(x'_{\cdot \wedge t'}))]\\&\quad +|b'-b|), \end{aligned}$$whenever \(n(t)=n'(t')\) (that is, the number of jumps of x during [0, t] and the number of jumps of \(x'\) during \([0,t']\) agree).

ii) The coefficient \(\sigma :[0,T]\times \mathbb {D}\times A\rightarrow \mathbb {S}_{++}\) is such that \((t,\omega )\mapsto \sigma (t,X,\alpha )\) is progressively measurable (resp. càdlàg) whenever X and \(\alpha \) are progressively measurable (resp. càdlàg) and satisfies the growth condition

$$\begin{aligned} |\sigma (t,x,a)|&\le C \end{aligned}$$and the functional, resp. \(L^2\), Lipschitz continuity

$$\begin{aligned} |\sigma (t,x',a)-\sigma (t,x,a)|&\le C\sup _{s\le t}|x'_s-x_s|, \\ \int _0^t |\sigma (s,x',\alpha _s)-\sigma (s,x,\alpha _s)|^2ds&\le C\int _{0}^t|x'_s-x_s|^2ds, \end{aligned}$$for all \(\alpha \in \mathcal {A}\). Moreover, for each \((t,x)\in [0,T]\times \mathbb {D}\), the map \(a\mapsto \sigma (t,x,a)\) has a measurable inverse, i.e. there is a \(\sigma ^\text {inv}:[0,T]\times \mathbb {D}\times \mathbb {S}_{++}\rightarrow A\) that satisfies the same measurability properties as \(\sigma \), for which

$$\begin{aligned} \sigma ^\text {inv}(t,x,\sigma (t,x,a))=a \end{aligned}$$for all \((t,x,a)\in [0,T]\times \mathbb {D}\times A\).

iii) The running cost \(\phi :[0,T]\times \mathbb {R}^d\rightarrow \mathbb {R}\) is Borel-measurable, uniformly bounded and uniformly continuous in the second variable uniformly in the first variable.

iv) The terminal cost \(\psi :\mathbb {R}^d\rightarrow \mathbb {R}\) is uniformly bounded and uniformly continuous.

v) The intervention cost \(\ell :[0,T]\times \mathbb {R}^d\times U\rightarrow \mathbb {R}_+\) is uniformly bounded, uniformly continuous and satisfies

$$\begin{aligned} \ell (t,\xi ,b)\ge \delta >0. \end{aligned}$$
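To make the invertibility requirement on \(\sigma \) in Assumption 6.3 concrete, the sketch below gives one hypothetical coefficient that admits an explicit inverse in the control variable. The choice \(\sigma (t,x,a)=g(x_t)\,\text {diag}(e^{a_1},\ldots ,e^{a_d})\) with \(g(\xi )=1+1/(1+|\xi |)\) is an assumption made for illustration and does not appear in the paper; for simplicity it depends on the path only through its current value \(x_t\), in which case both Lipschitz conditions reduce to a pointwise Lipschitz estimate in \(x_t\), and boundedness follows from A being bounded.

```python
import math


def g(x_t):
    """Hypothetical scalar factor g(x_t) = 1 + 1 / (1 + |x_t|), with values in (1, 2]."""
    norm = math.sqrt(sum(c * c for c in x_t))
    return 1.0 + 1.0 / (1.0 + norm)


def sigma(t, x_t, a):
    """Illustrative sigma(t, x, a): diagonal, symmetric and positive definite."""
    scale = g(x_t)
    d = len(a)
    return [[scale * math.exp(a[i]) if i == j else 0.0 for j in range(d)]
            for i in range(d)]


def sigma_inv(t, x_t, S):
    """Inverse in the control variable: recovers a from S = sigma(t, x, a)."""
    scale = g(x_t)
    return [math.log(S[i][i] / scale) for i in range(len(S))]


a = [0.3, -0.7]       # a point of the bounded control set A (assumed)
x_t = [1.0, 2.0]      # current value of the path (assumed)
S = sigma(0.5, x_t, a)
a_rec = sigma_inv(0.5, x_t, S)
```

Up to floating-point error, `a_rec` agrees with `a`, which is the identity \(\sigma ^\text {inv}(t,x,\sigma (t,x,a))=a\) for this particular choice.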

6.3 Value of the Game

We now provide a solution to the problem stated in Sect. 6.1 by showing that, under the above assumptions, it is a special case of the general impulse control problem under non-linear expectation treated in Sects. 2–5.

For \((t,\omega )\in \Lambda \), we extend the definition of \({\bar{X}}^{u,\alpha }\) by letting \({\bar{X}}^{u,\alpha ,t,\omega }\) be the \(\mathbb {P}_0\)-a.s. unique solution to the path-dependent SDE

We then let \(\mathcal {P}(t,\omega ,u):=\cup _{\alpha \in \mathcal {A}}\{\mathbb {P}_0\circ ({\bar{X}}^{u,\alpha ,t,\omega })^{-1}\}\) and get, in particular, that \(\mathcal {P}(u):=\cup _{\alpha \in \mathcal {A}}\{\mathbb {P}_0\circ ({\bar{X}}^{u,\alpha })^{-1}\}\). In this setting it is straightforward to show that \(\mathcal {P}(t,\omega ,u)\) corresponds to a random G-expectation [21] and thus satisfies properties 1-3 of Assumption 2.5 by the results of [20]. Moreover, by non-anticipativity and continuity of \({\bar{X}}^{u,\alpha }\), the fourth property also follows and we conclude that Assumption 2.5 holds. Note also that Assumption 2.9 holds since \(\sigma \) is bounded.

It remains to show that Assumptions 2.6 and 2.8 hold. For this we introduce for each \(u\in \mathcal {U}^{t}\), the controlled process \({\hat{X}}^{t,\omega ,u,\alpha }:=\limsup _{j\rightarrow \infty }{\hat{X}}^{t,\omega ,[u]_{j},\alpha }\), where

has u implemented as an open loop strategy. Moreover, we define the control set \(\mathcal {A}_t\) as the set of all \((\alpha _s)_{t\le s\le T}\) with \(\alpha \in \mathcal {A}\) and \((\alpha _s(\omega ))_{t\le s\le T}\) independent of \(\omega |_{[0,t)}\) and for each \({\textbf {v}}=(t_j,b_j)_{1\le j\le k},{\textbf {v}}'=(t'_j,b'_j)_{1\le j\le k}\in D^k\), we introduce the set \(\Upsilon ^{{\textbf {v}},{\textbf {v}}'}:=\cup _{j=1}^k[t_j\wedge t'_j,t_j\vee t'_j)\). The core result we need is the following stability estimate:

Proposition 6.4

Under Assumption 6.3 there is for each \(k,\kappa \ge 0\) a modulus of continuity \(\rho _{X,\kappa +k}\) such that

for all \((t,\omega ,\omega ',{\textbf {v}},{\textbf {v}}') \in [0,T]\times \Omega ^2\times (D^\kappa )^2\) and \((u,\alpha )\in \mathcal {U}^{k}_t\times \mathcal {A}_t\).

Proof

To simplify notation we let \(v:={\textbf {v}}\circ u=(\eta _j,\gamma _j)_{j=1}^{k+\kappa }\) and \(v':={\textbf {v}}'\circ u=(\eta '_j,\gamma '_j)_{j=1}^{k+\kappa }\) and set \(X^l:={\hat{X}}^{t,\omega ,[v]_l,\alpha }\) and \({\tilde{X}}^l:={\hat{X}}^{t,\omega ',[v']_l,\alpha }\) for \(l=0,\ldots ,\kappa +k\). Moreover, we let \(\delta X^l:=X^l-{\tilde{X}}^l\) and set \(\delta X:=\delta X^{\kappa +k}\). For \(s\in [{\bar{\eta }}_l,T]\) with \({\bar{\eta }}_l:=\eta _l\vee \eta '_l\) we have

Now, as \(X^l=X^j\) on \([0,\eta _{j+1})\) for \(j\le l\), we have

and induction gives that

Combined, we find that

For \(l=0\) we see that

Squaring and taking expectations on both sides and using the Burkholder-Davis-Gundy inequality then gives

where we have used the \(L^2\)-Lipschitz condition and the uniform bound on \(\sigma \) to arrive at the last inequality. Grönwall’s lemma now gives that

We proceed by induction and assume that there is a C such that

But then, repeating the above argument and using (6.8), gives that

Since \(|v-v'|\le k|{\textbf {v}}-{\textbf {v}}'|\) and \(\Upsilon ^{v,v'}=\Upsilon ^{{\textbf {v}},{\textbf {v}}'}\) we find by induction that

and the statement of the proposition follows by Jensen’s inequality. \(\square \)
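For reference, the Grönwall step used in the proof is of the following standard integral form. The symbols f, a and K below are generic placeholders introduced for this note and are not taken from the paper: if \(f:[t,T]\rightarrow [0,\infty )\) is bounded and measurable and \(a,K\ge 0\) are constants, then

$$\begin{aligned} f(s)\le a+K\int _t^s f(r)dr\quad \text {for all } s\in [t,T]\qquad \Longrightarrow \qquad f(s)\le a\,e^{K(s-t)}\quad \text {for all } s\in [t,T]. \end{aligned}$$

In particular, a bound on the initial discrepancy a carries over to the whole interval at the cost of the factor \(e^{K(T-t)}\), which is consistent with the observation that the constants in the induction may grow with the number of interventions.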

Remark 6.5

Note that the constant \(C>0\) in the proof of Proposition 6.4 generally depends on \(k+\kappa \) and may tend to infinity as either k or \(\kappa \) goes to infinity. This is the main reason that we needed to go through the process of truncating the maximal number of interventions to obtain the dynamic programming principle in Sect. 3 and to prove that the game has a value in Sect. 4.

To translate our problem to the general framework we define

and

Lemma 6.6

The maps \(\varphi \) and c defined in (6.9), resp. (6.10) satisfy Assumption 2.6.

Proof

Uniform boundedness of \(\varphi \) (resp. c) is immediate from the boundedness of \(\phi \) and \(\psi \) and (6.9) (resp. \(\ell \) and (6.10)).

Letting \(t=T\) in Proposition 6.4 we find that, with \({\textbf {v}},{\textbf {v}}'\in D^k\), we have

Since \(\phi \) is bounded and the Lebesgue measure of the set \(\Upsilon ^{{\textbf {v}},{\textbf {v}}'}\) is bounded by \(|{\textbf {v}}-{\textbf {v}}'|\), we conclude that

where \(\rho _\phi \) and \(\rho _\psi \) are bounded moduli of continuity for \(\phi \) and \(\psi \), respectively. Moreover, as

we find that

and we conclude that c satisfies Assumption 2.6 with \(\rho _{c,k}(\cdot )=\rho _\ell (\rho _{X,k}(\cdot ))\), where \(\rho _\ell \) is a bounded modulus of continuity for \(\ell \). \(\square \)
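The bound on the Lebesgue measure of \(\Upsilon ^{{\textbf {v}},{\textbf {v}}'}\) used in the proof above can be checked numerically. The sketch below computes the measure of \(\cup _{j=1}^k[t_j\wedge t'_j,t_j\vee t'_j)\) by merging intervals and compares it with \(\sum _j|t_j-t'_j|\). The function name and the sample intervention times are hypothetical, and the comparison assumes that \(|{\textbf {v}}-{\textbf {v}}'|\) dominates the sum of the time differences.

```python
def upsilon_measure(times, times_prime):
    """Lebesgue measure of the union of [t_j ^ t'_j, t_j v t'_j) over j."""
    intervals = sorted((min(t, tp), max(t, tp)) for t, tp in zip(times, times_prime))
    total = 0.0
    cur_lo, cur_hi = None, None
    for lo, hi in intervals:
        if cur_hi is None or lo > cur_hi:
            # Disjoint from the current block: close it and start a new one.
            if cur_hi is not None:
                total += cur_hi - cur_lo
            cur_lo, cur_hi = lo, hi
        else:
            # Overlapping or touching: extend the current block.
            cur_hi = max(cur_hi, hi)
    if cur_hi is not None:
        total += cur_hi - cur_lo
    return total


# Hypothetical intervention times of v and v' (the impulse sizes b_j play no role here).
times = [0.25, 0.5, 0.75]
times_prime = [0.5, 0.375, 0.75]

measure = upsilon_measure(times, times_prime)
bound = sum(abs(t - tp) for t, tp in zip(times, times_prime))
```

Since overlapping intervals are only counted once in the union, `measure` never exceeds `bound`; in this example the two intervals \([0.25,0.5)\) and \([0.375,0.5)\) overlap, so the inequality is strict.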

Finally, we have the following result:

Lemma 6.7

Assumption 2.8 holds in this setting.

Proof

We fix \(k\ge 0\), \(u\in \mathcal {U}^{t,k}\) and \(\omega '\in \Omega \) and note that \(x\rightarrow \mathcal {I}^u(x)\) maps progressively measurable (resp. càdlàg) processes to progressively measurable (resp. càdlàg) processes and then so does the composition \(\sigma ^u:=\sigma (\cdot ,\mathcal {I}^u(\cdot ),\cdot )\). We can thus repeat the argument in the appendix to [23] to show that there is an \(\mathcal {F}_t\otimes \mathcal {F}\)-measurable map \((\omega ,{\tilde{\omega }})\mapsto u_\omega ({\tilde{\omega }})\) such that \(u_\omega \in \mathcal {U}^{t,k}\) for all \(\omega \in \Omega \) and

Now, since the set of open loop controls is at least as rich as the set of feedback controls, we have for \({\textbf {v}},{\textbf {v}}'\in D^\kappa \), that