Abstract

We introduce and analyze a method of learning-informed parameter identification for partial differential equations (PDEs) in an all-at-once framework. The underlying PDE model is formulated in a rather general setting with three unknowns: physical parameter, state and nonlinearity. Inspired by advances in machine learning, we approximate the nonlinearity via a neural network, whose parameters are learned from measurement data. The latter is assumed to be given as noisy observations of the unknown state, and both the state and the physical parameters are identified simultaneously with the parameters of the neural network. Moreover, diverging from the classical approach, the proposed all-at-once setting avoids constructing the parameter-to-state map by explicitly handling the state as an additional variable. The practical feasibility of the proposed method is confirmed with experiments using two different algorithmic settings: a function-space algorithm based on analytic adjoints, and a purely discretized setting using standard machine learning algorithms.


1 Introduction

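We study the problem of determining an unknown nonlinearity f from data in a parameter-dependent dynamical system

$$\begin{aligned} \dot{u}&= F(\lambda ,u) + f(\alpha ,u) \quad \text {in } (0,T)\times \Omega , \\ u(0)&= u_0 \quad \text {in } \Omega . \end{aligned}$$(1)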

Here, the state u is a function on a finite time interval (0, T) and a bounded Lipschitz domain \(\Omega \), and \(\dot{u}\) denotes the first order time derivative. In (1), both F, f are nonlinear Nemytskii operators in \(\lambda , \alpha , u\); these Nemytskii operators are induced by nonlinear, time-dependent functions \( [F(\lambda ,u)](t): = F(t,\lambda ,u(t))\) and \( [f (\alpha ,u)](t,x): = f (\alpha ,u(t,x)), \) where we consistently abuse notation in this manner throughout the paper; see also Lemmas 2 and 4. We assume that F was specified beforehand from an underlying physical model, that the terms \(\lambda \), \(u_0\) are physical parameters (with \(\lambda =\lambda (x)\) depending only on space), and that \(\alpha \) is a finite dimensional parameter arising in the nonlinearity. Furthermore, the model (1) is equipped with Dirichlet or Neumann boundary conditions.

Some examples of partial differential equations (PDEs) of the form (1) are diffusion models \(\dot{u}=\Delta u + f(\alpha ,u)\) with a nonlinear reaction term \(f(\alpha ,u)\) as follows [30]:

- \(f(\alpha ,u)= -\alpha u(1 - u)\): Fisher equation in heat and mass transfer, combustion theory.
- \(f(\alpha ,u)= -\alpha u(1 - u)(\alpha - u), 0<\alpha <1\): Fitzhugh–Nagumo equation in population genetics.
- \(f(\alpha ,u)=-u/(1+\alpha _1 u+\alpha _2 u^2)\), \(\alpha = (\alpha _1,\alpha _2)\), \( \alpha _1>0, \alpha _1^2<4\alpha _2 \): Enzyme kinetics.
- \(f(\alpha ,u) = f(u) =-u|u|^p\), \( p\ge 1 \): Irreversible isothermal reaction, temperature in radiating bodies.
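For orientation, the four reaction terms listed above can be written as plain functions. This is only an illustrative sketch: the function names are hypothetical and NumPy is our choice for the illustration, not part of the cited reference.

```python
import numpy as np

def fisher(alpha, u):
    """Fisher reaction term f(alpha, u) = -alpha * u * (1 - u)."""
    return -alpha * u * (1.0 - u)

def fitzhugh_nagumo(alpha, u):
    """Fitzhugh-Nagumo term f(alpha, u) = -alpha * u * (1 - u) * (alpha - u), 0 < alpha < 1."""
    return -alpha * u * (1.0 - u) * (alpha - u)

def enzyme_kinetics(alpha, u):
    """Enzyme kinetics term f(alpha, u) = -u / (1 + a1 * u + a2 * u^2).

    The condition a1^2 < 4 * a2 makes the quadratic denominator strictly positive.
    """
    a1, a2 = alpha
    return -u / (1.0 + a1 * u + a2 * u**2)

def isothermal_reaction(u, p=1.0):
    """Parameter-free term f(u) = -u * |u|^p with p >= 1."""
    return -u * np.abs(u) ** p

# Evaluate one of the terms on a few sample state values.
u_samples = np.linspace(0.0, 1.0, 5)
fisher_values = fisher(2.0, u_samples)
```

All four functions act pointwise on the state values, which is the structure that the Nemytskii operators discussed above formalize.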

The underlying assumption of this work is that in some cases, the nonlinearity f is unknown due to simplifications or inaccuracies in the modeling process or due to undiscovered physical laws. In such situations, our goal is to learn f from data. In order to realize this in practice, we need to use a parametric representation. For this, we choose neural networks, which have become widely used in computer science and applied mathematics due to their excellent representation properties, see for instance [19] for the classical universal approximation theorem, [27] for recent results indicating superior approximation properties of neural networks with particular activations (potentially at the cost of stability) and [3, 9] for general, recent overviews on the topic. Learning the nonlinearity f thus reduces to identifying parameters \(\theta \) of a neural network \(\mathcal {N}_\theta \) such that \(\mathcal {N}_\theta \approx f\), which turns the problem of learning a nonlinearity into a parameter identification problem of a particular form.

For the majority of this paper, the nonlinearity f will therefore not appear directly; instead, f will consistently be replaced by its neural network representation \(\mathcal {N}_\theta \), and our focus will be on showing the properties of \(\mathcal {N}_\theta \), rather than those of f.

A main point in our approach, which is motivated by feasibility in applications, is that learning the nonlinearity must be achieved only via indirect, noisy measurements \(y^\delta \approx Mu\) of the state, with M a linear measurement operator. More precisely, we assume that K different measurements

$$\begin{aligned} y^k \approx Mu^k, \quad k=1,\ldots ,K, \end{aligned}$$(2)

of different states \(u^k\) are available, where the different states correspond to solutions of the system (1) with different, unknown parameters \((\lambda ^k,\alpha ^k,u_0^k)\), but the same, unknown nonlinearity f, which is assumed to be part of the ground truth model. The simplest form of M is a full observation over time and space of the states, i.e. \(M=\text {Id}\), as in e.g. (theoretical) population genetics. In other contexts, M could be discrete observations of u at time instances, i.e. \(Mu={(u(t_i,\cdot ))_{i=1}^{n_T}}, t_i\in (0,T)\), as in material science [31] or systems biology [4] (see also Corollary 32), or a Fourier transform as in MRI acquisition [2], etc. In most cases, M is linear, as is assumed here.
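To make the two simplest measurement operators concrete, the following sketch applies them to a discretized state stored as an array with one row per time step. The array layout and the function names are assumptions made for this illustration only.

```python
import numpy as np

def measure_full(u):
    """M = Id: observe the full space-time state."""
    return u.copy()

def measure_snapshots(u, time_indices):
    """Mu = (u(t_i, .))_i: keep only the rows belonging to selected time steps."""
    return u[np.asarray(time_indices), :]

# Hypothetical discretized state: nt time steps (rows), nx grid points (columns).
nt, nx = 6, 4
u = np.arange(nt * nx, dtype=float).reshape(nt, nx)

snapshot_indices = [1, 3, 5]
y_full = measure_full(u)
y_snap = measure_snapshots(u, snapshot_indices)
```

Both operators are linear in u, in line with the standing assumption on M.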

Our approach to address this problem is to use an all-at-once formulation that avoids constructing the parameter-to-state map (see for instance [21]). That is, we aim to identify all unknowns by solving a minimization problem of the form

where we refer to Sect. 2 for details on the function spaces involved. Here, \(\mathcal {G}\) is a forward operator that incorporates the PDE model, the initial conditions and the measurement operator via

and \(\mathcal {R}_1\), \(\mathcal {R}_2\) are suitable regularization functionals.

Once a particular parameter \(\hat{\theta }\) such that \(\mathcal {N}_{\hat{\theta }}\) accurately approximates f in (1) is learned, one can use the learning-informed model in other parameter identification problems by solving

for a new measured datum \(y \approx Mu\).

Existing research towards learning PDEs and all-at-once identification. Exploring governing PDEs from data is an active topic in many areas of science and engineering. With advances in computational power and mathematical tools, there have been numerous recent studies on data-driven discovery of hidden physical laws. One novel technique is to construct a rich dictionary of possible functions, such as polynomials, derivatives etc., and to then use sparse regression to determine candidates that most accurately represent the data [5, 33, 35]. This sparse identification approach yields a completely explicit form of the differential equation, but requires an abundant library of basic functions specified beforehand. In this work, we take the viewpoint that PDEs are constructed from principal physical laws. As it preserves the underlying equation and learns only some unknown components of the models, e.g. f in (1), our suggested approach is capable of refining approximate models by staying more faithful to the underlying physics.

Besides the machine learning part, the model itself may contain unknown physical parameters belonging to some function space. This means that if the nonlinearity f is successfully learned, one can insert it into the model. One thus has a learning-informed PDE, and can then proceed via a classical parameter identification. The latter problem was studied in [11] for stationary PDEs, where f is learned from training pairs (u, f(u)). This paper emphasizes analysis of the error propagating from the neural network-based approximation of f to the parameter-to-state map and the reconstructed parameter.

In reality, one does not have direct access to the true state u, but only partial or coarse observations of u under some noise contamination. This factor affects the creation of training data pairs (u, f(u)) with \(f(u)=\dot{u}-F(u)\) for the process of learning f, e.g. in [11]. Indeed, with a coarse measurement of u, for instance \(u\in L^2((0,T)\times \Omega )\), one cannot evaluate \(\dot{u}\), nor terms such as \(\Delta u\) that may appear in F(u). Moreover, with discrete observations, e.g. a snapshot \(y={(u(t_i,\cdot ))_{i=1}^{n_T}}, t_i\in (0,T)\), one is unable to compute \(\dot{u}\) for the training data.
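The difficulty of forming \(\dot{u}\) from noisy state data can be illustrated numerically. The sketch below is a toy example under our own assumptions (a sampled sine as the state, an alternating-sign perturbation of amplitude delta, and a forward difference quotient); it is not part of the method proposed here.

```python
import numpy as np

def forward_difference(values, h):
    """Forward difference quotient approximating the time derivative."""
    return (values[1:] - values[:-1]) / h

h = 1e-3                                  # time step
t = np.arange(0.0, 1.0 + h / 2, h)        # time grid on [0, 1]
u = np.sin(t)                             # hypothetical exact state at one spatial point

delta = 1e-4                              # noise level
noise = delta * (-1.0) ** np.arange(t.size)   # deterministic alternating perturbation
u_noisy = u + noise

# The state error stays at delta, while the difference quotient amplifies it to 2 * delta / h.
data_error = np.max(np.abs(u_noisy - u))
deriv_error = np.max(np.abs(forward_difference(u_noisy, h) - forward_difference(u, h)))
```

With these values a perturbation of size delta in the state produces an error of size 2·delta/h in the difference quotient, so refining the time grid makes the derivative estimate worse rather than better.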

For this reason, we propose an all-at-once approach to identify the nonlinearity f, state u and physical parameter simultaneously. In comparison to [11], our approach bypasses the training process for f, and accounts for discrete data measurements. The all-at-once formulation avoids constructing the parameter-to-state map, which is nonlinear and often involves restrictive conditions [16, 20, 21, 23, 28]. Additionally, we here consider time-dependent PDE models.

For discovering nonlinearities in evolutionary PDEs, the work in [7] suggests an optimal control problem for nonlinearities expressed in terms of neural networks. Note that the unknown state still needs to be determined through a control-to-state map, i.e. via the classical reduced approach, as opposed to the new all-at-once approach.

While [7, 11] are the recent publications that are most related to our work, we also mention the very recent preprint [12] on an extension of [11] that appeared independently and after the original submission of our work. Furthermore, there is a wealth of literature on the topic of deep learning emerging in the last decade; for an authoritative review on machine learning in the context of inverse problems, we refer to [1]. For the regularization analysis, we follow the well known theory put forth in [13, 22, 26, 37]. It is worthwhile to note that since this work, to the knowledge of the authors, is the first attempt at applying an all-at-once approach to learning-informed PDEs, our focus will be on this novel concept itself, rather than on obtaining minimal regularity assumptions on the involved functions, in particular on the activation functions. In subsequent work, we might further improve upon this by considering, e.g., existing techniques from a classical optimal control setting with non-smooth equations [6] or techniques to deal with non-smoothness in the context of training neural networks [8].

Contributions. Besides introducing the general setting of identifying nonlinearities in PDEs via indirect, parameter-dependent measurements, the main contributions of our work are as follows: Exploiting an all-at-once setting of handling both the state and the parameters explicitly as unknowns, we provide well-posedness results for the resulting learning- and learning-informed parameter identification problems. This is achieved for rather general, nonlinear PDEs and under local Lipschitz assumptions on the activation function of the involved neural network. Further, for the learning-informed parameter identification setting, we ensure the tangential cone condition on the neural-network part of our model. Together with suitable PDEs, this yields local uniqueness results as well as local convergence results of iterative solution methods for the parameter identification problem. We also provide a concrete application of our framework for parabolic problems, where we motivate our function-space setting by a unique existence result on the learning-informed PDE. Finally, we consider a case study in a Hilbert space setting, where we compute function-space derivatives of our objective functional to implement the Landweber method as the solution algorithm. Using this algorithm, and also a parallel setting based on the ADAM algorithm [25], we provide numerical results that confirm feasibility of our approach in practice. Code is made available at https://github.com/hollerm/pde_learning.

Organization of the paper. Section 2 introduces learning-informed parameter identification and the abstract setting. Section 3 examines existence, stability and solution methods for the minimization problem. Section 4 focuses on the learning-informed PDE, and analyzes some problem settings. Finally, in Sect. 5 we present a complete case study, from setup to numerical results.

2 Problem Setting

2.1 Notation and Basic Assertions

Throughout this work, \(\Omega \subset \mathbb {R}^d\) will always be a bounded Lipschitz domain, where additional smoothness will be required and specified as necessary. We use standard notation for spaces of continuous, integrable and Sobolev functions with values in Banach spaces, see for instance [10, 32], in particular [32, Sect. 7.1] for Sobolev-Bochner spaces and associated concepts such as time-derivatives of Banach-space valued functions. For an exponent \(p \in [1,\infty ]\), we denote by \(p^*\) the conjugate exponent given as \(p^* = p/(p-1)\) if \(p \in (1,\infty )\), \(p^* = \infty \) if \(p=1\) and \(p^* = 1\) if \(p=\infty \). For \(l \in \mathbb {N}\), we denote by

$$\begin{aligned} W^{l,p}(\Omega )\hookrightarrow L^q(\Omega ) \end{aligned}$$

the continuous embedding of \(W^{l,p}(\Omega )\) to \(L^q(\Omega )\), which exists for \(q\preceq \frac{dp}{d-lp}\), where the notation \(\preceq \) means that if \(lp<d\), then \(q\le \frac{dp}{d-lp}\), if \(lp=d\), then \(q<\infty \), and if \(lp> d\), then \( q=\infty \). An example of such an embedding, which will be used frequently in Sect. 4, is \(H^1(\Omega )\hookrightarrow L^6(\Omega )\) for \(d=3\). We further denote by \(C_{W^{l,p}\rightarrow L^q}\) the operator norm of the corresponding continuous embedding operator.
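The exponent bookkeeping above is easy to get wrong, so a small helper can serve as a sanity check. The function names below are hypothetical; the second function returns the largest admissible exponent together with a flag indicating whether that exponent itself is admissible.

```python
import math

def conjugate_exponent(p):
    """Conjugate exponent p* of p in [1, infinity]."""
    if p == 1:
        return math.inf
    if p == math.inf:
        return 1.0
    return p / (p - 1)

def max_embedding_exponent(d, l, p):
    """Largest q for the embedding of W^{l,p} into L^q on a bounded Lipschitz domain in R^d.

    Returns (q_max, attained): if attained is False, every finite q is
    admissible but q_max = infinity itself is not (the borderline case l * p == d).
    """
    if l * p < d:
        return d * p / (d - l * p), True
    if l * p == d:
        return math.inf, False
    return math.inf, True

# H^1 = W^{1,2} in dimension d = 3 embeds into L^6.
q_max, attained = max_embedding_exponent(d=3, l=1, p=2)
```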

We also use  to denote the compact embedding (see [32, Theorem 1.21])


The notation C indicates generic positive constants. Given any Banach spaces X, Y, we denote by \(\Vert \cdot \Vert _{{X\rightarrow Y}}\) the operator norm \(\Vert \cdot \Vert _{{\mathcal {L}(X,Y)}}\), and by \(\langle \cdot ,\cdot \rangle _{{X,X^*}}\) the pairing between dual spaces X, \(X^*\). We write \(\mathcal {C}_\text {locLip}({X,Y})\) for the space of locally Lipschitz continuous functions between X and Y. Furthermore, \(A\cdot B\) denotes the Frobenius inner product between generic matrices A, B, while AB stands for matrix multiplication, and \(A^T\) stands for the transpose of A. The notation \(\mathcal {B}^X_\rho (x^\dagger )\) means a ball of center \(x^\dagger \), radius \(\rho >0\) in X. For functions mapping between Banach spaces, by the term weak continuity we will always refer to weak-weak continuity, i.e., continuity w.r.t. weak convergence in both the domain and the image space.

2.2 The Dynamical System

For the general setting considered in this work, we use the following set of definitions and assumptions. A concrete application where these abstract assumptions are satisfied can be found in Sect. 4 below.

Assumption 1

- The space X (parameter space) is a reflexive Banach space. The spaces V (state space) and W (image space under the model operator), Y (observation space) and \(\tilde{V} \) are separable, reflexive Banach spaces. In view of initial conditions, we further require \(U_0 \) (initial data space) to be a reflexive Banach space, and H to be a separable, reflexive Banach space.

- We assume the following embeddings:

(6)

Further, \(\tilde{V}\) will always be such that either \(L^{\hat{p}}(\Omega )\hookrightarrow \tilde{V}\) or \(\tilde{V} \hookrightarrow L^{\hat{p}}(\Omega )\).

- The function

$$\begin{aligned} F:(0,T)\times X \times V\rightarrow W \end{aligned}$$

is such that for any fixed parameter \(\lambda \in X\), \(F(\cdot ,\lambda ,\cdot ): (0,T) \times V \rightarrow W\) meets the Carathéodory conditions, i.e., \(F(\cdot ,\lambda ,v)\) is measurable with respect to t for all \(v\in V\) and \(F(t,\lambda ,\cdot ) \) is continuous with respect to v for almost every \(t\in (0,T)\). Moreover, for almost all \(t\in (0,T)\) and all \(\lambda \in X\), \(v\in V\), the growth condition

$$\begin{aligned} \Vert F(t,\lambda ,v)\Vert _W\le \mathcal {B}(\Vert \lambda \Vert _X,\Vert v\Vert _H)(\gamma (t)+\Vert v\Vert _V) \end{aligned}$$(7)

is satisfied for some \(\mathcal {B}:\mathbb {R}^2\rightarrow \mathbb {R}\) such that \(b \mapsto \mathcal {B}(a,b)\) is increasing for each \(a \in \mathbb {R}\), and \(\gamma \in L^2(0,T)\).

- We define the overall state space and image space including time dependence as

$$\begin{aligned} \mathcal {V}=L^2(0,T;V)\cap H^1(0,T;{\widetilde{V}}), \quad \mathcal {W}=L^2(0,T;W), \end{aligned}$$(8)

respectively, with the norms \(\Vert u\Vert _\mathcal {V}:=\sqrt{\int _0^T \Vert u(t)\Vert _V^2+\Vert \dot{u}(t)\Vert _{\widetilde{V}}^2\,\textrm{d}t}\) and \(\Vert u\Vert _\mathcal {W}:=\sqrt{\int _0^T \Vert u(t)\Vert _W^2 \,\textrm{d}t}\).

- We define the overall observation space including time as

$$\begin{aligned} \mathcal {Y}=L^2(0,T;Y), \end{aligned}$$

with the norm \(\Vert y\Vert _\mathcal {Y}:=\sqrt{\int _0^T \Vert y(t)\Vert _Y^2 \,\textrm{d}t}\) and the corresponding measurement operator

$$\begin{aligned} M \in \mathcal {L}(\mathcal {V},\mathcal {Y}). \end{aligned}$$(9)

- We further assume the following embeddings for the state space:

$$\begin{aligned} \mathcal {V}\hookrightarrow L^\infty ((0,T)\times \Omega ), \quad \mathcal {V}\hookrightarrow C(0,T;H). \end{aligned}$$

The embeddings in (6) are natural in the context of PDEs. The state space V usually has sufficient smoothness such that its image under certain spatial differential operators belongs to W. For the motivation of \(\mathcal {V}\hookrightarrow C(0,T;H)\), the abstract setting in [32, Lemma 7.3] (see Appendix A) is an example. Note that due to \(\mathcal {V}\hookrightarrow C(0,T;H)\), clearly \(U_0=H\) is a feasible choice for the initial space; for the sake of generality, only \(U_0\hookrightarrow H\) is assumed in (6).

Under Assumption 1, the function F induces a Nemytskii operator on the overall spaces.

Lemma 2

Let Assumption 1 hold. Then the function \(F:(0,T)\times X \times V\rightarrow W \) induces a well-defined Nemytskii operator \(F: X \times \mathcal {V}\rightarrow \mathcal {W}\) given as

$$\begin{aligned} {[}F(\lambda ,u)](t) := F(t,\lambda ,u(t)). \end{aligned}$$

Proof

Under the Carathéodory assumption, \(t \mapsto F(t,\lambda ,u(t))\) is Bochner measurable for every \(\lambda \in X\) and \(u \in \mathcal {V}\). For such \(\lambda ,u\), we further estimate

by \(b \mapsto \mathcal {B}(\Vert \lambda \Vert ,b)\) being increasing and by the embedding \( \mathcal {V}\hookrightarrow C(0,T;H)\). This allows us to conclude that \(t \mapsto F(t,\lambda ,u(t))\) is Bochner integrable (see [10, Theorem II.2.2]) and that the Nemytskii operator \(F: X \times \mathcal {V}\rightarrow \mathcal {W}\) is well-defined. \(\square \)
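For the reader's convenience, one way to carry out the norm estimate behind Lemma 2 is sketched here. We assume in addition that \(\mathcal {B}\) and \(\gamma \) are nonnegative, which is not stated explicitly in Assumption 1, and we write \(C_H\) for the norm of the embedding \(\mathcal {V}\hookrightarrow C(0,T;H)\), a symbol introduced only for this sketch. Using the growth condition (7), the monotonicity of \(b \mapsto \mathcal {B}(a,b)\), the bound \(\Vert u(t)\Vert _H\le C_H\Vert u\Vert _\mathcal {V}\) and the elementary inequality \((a+b)^2\le 2a^2+2b^2\), one obtains

$$\begin{aligned} \Vert F(\lambda ,u)\Vert _\mathcal {W}^2&= \int _0^T \Vert F(t,\lambda ,u(t))\Vert _W^2\,\textrm{d}t \\&\le \int _0^T \mathcal {B}(\Vert \lambda \Vert _X,\Vert u(t)\Vert _H)^2\,(\gamma (t)+\Vert u(t)\Vert _V)^2\,\textrm{d}t \\&\le 2\,\mathcal {B}(\Vert \lambda \Vert _X,C_H\Vert u\Vert _\mathcal {V})^2\left( \Vert \gamma \Vert _{L^2(0,T)}^2+\Vert u\Vert _{L^2(0,T;V)}^2\right) <\infty . \end{aligned}$$

This is a sketch under the stated extra assumptions and need not coincide line by line with the estimate used in the proof.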

Note that we use the same notation for the function \(F:(0,T) \times X \times V \rightarrow W\) and the corresponding Nemytskii operators.

2.3 Basics of Neural Networks

As outlined in the introduction, the unknown nonlinearity f will be represented by a neural network. In this work, we use a rather standard, feed-forward form of neural networks defined as follows.

Definition 3

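A neural network \( \mathcal {N} _\theta \) of depth \(L \in \mathbb {N}\) with architecture \((n_i)_{i=0}^{L}\) is a function \( \mathcal {N} _\theta :\mathbb {R}^{n_0 } \rightarrow \mathbb {R}^{n_L }\) of the form

$$\begin{aligned} \mathcal {N}_\theta (z) = L_{\theta _L}\circ \cdots \circ L_{\theta _1}(z), \end{aligned}$$

where \(L_{\theta _l}:\mathbb {R}^{n_{l-1} } \rightarrow \mathbb {R}^{n_{l}} \), for \(z \in \mathbb {R}^{n_{l-1} }\), is given as

$$\begin{aligned} L_{\theta _l}(z) := \sigma (\omega ^l z+\beta ^l). \end{aligned}$$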

Here, \(\omega ^l \in \mathcal {L}( \mathbb {R}^{n_{l-1}},\mathbb {R}^{n_{l}})\), \(\beta ^l \in \mathbb {R}^{n_{l}}\), \(\theta _l = (\omega ^l,\beta ^l)\) summarizes all the parameters of the l-th layer and \(\sigma \) is a pointwise nonlinearity that is fixed. Given a depth \(L \in \mathbb {N}\) and architecture \((n_i)_{i=0}^{L}\), we also use \(\Theta \) to denote the finite dimensional vector space containing all possible parameters \(\theta _1,\ldots ,\theta _L\) of neural networks with this architecture.

In this work, neural networks will be used to approximate the nonlinearity \(f:\mathbb {R}^{m+1} \rightarrow \mathbb {R}\). Consequently, we always deal with neural networks \(\mathcal {N}_\theta :\mathbb {R}^{m+1} \rightarrow \mathbb {R}\), i.e., \(n_0=m+1\) and \(n_L = 1\).
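A minimal NumPy sketch of such a network with input dimension m + 1 and scalar output is given below. It assumes that every layer, including the last, has the form z ↦ σ(ωz + β), and it uses tanh as the activation purely for illustration; the function names and the random initialization are hypothetical and not taken from the accompanying code.

```python
import numpy as np

def init_parameters(architecture, seed=0):
    """Draw random (omega^l, beta^l) pairs for an architecture (n_0, ..., n_L)."""
    rng = np.random.default_rng(seed)
    return [
        (rng.standard_normal((n_out, n_in)), rng.standard_normal(n_out))
        for n_in, n_out in zip(architecture[:-1], architecture[1:])
    ]

def network(theta, z, sigma=np.tanh):
    """Apply the layers z -> sigma(omega z + beta) one after another."""
    for omega, beta in theta:
        z = sigma(omega @ z + beta)
    return z

def nonlinearity(theta, alpha, u, sigma=np.tanh):
    """Evaluate N_theta(alpha, u) for alpha in R^m and a scalar state value u."""
    z = np.concatenate([np.atleast_1d(alpha), [u]])
    return float(network(theta, z, sigma)[0])

# m = 2 parameters plus one state value: n_0 = 3, two hidden layers, n_L = 1.
theta = init_parameters((3, 8, 8, 1))
value = nonlinearity(theta, alpha=[0.5, 1.0], u=0.2)
```

The list `theta` plays the role of an element of the finite dimensional parameter space \(\Theta \) for the fixed architecture.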

As such, rather than showing that f induces a well-defined Nemytskii operator, we instead show that \(\mathcal {N}_\theta \) does so. A sufficient condition for this to be true is the continuity of the activation function \(\sigma \), as the following Lemma shows.

Lemma 4

Assume that \(\sigma \in \mathcal {C}(\mathbb {R},\mathbb {R})\). Then, with the setting of Assumption 1, \( \mathcal {N} _\theta :\mathbb {R}^{m} \times \mathbb {R}\rightarrow \mathbb {R}\) as in Definition 3 induces a well-defined Nemytskii operator \( \mathcal {N} _\theta : \mathbb {R}^m \times \mathcal {V}\rightarrow L^2(0,T;L^{{{\hat{p}}}}(\Omega )) \) via

$$\begin{aligned} {[} \mathcal {N} _\theta (\alpha ,u)](t,x) := \mathcal {N} _\theta (\alpha ,u(t,x)), \end{aligned}$$

regarding \(u \in \mathcal {V}\) as \(u \in L^\infty ((0,T)\times \Omega )\) by the embedding \( \mathcal {V}\hookrightarrow L^\infty ((0,T)\times \Omega )\). Further, using the embedding \(L^2(0,T;L^{{{\hat{p}}}}(\Omega )) \hookrightarrow \mathcal {W}\), \( \mathcal {N} _\theta \) induces a well-defined Nemytskii operator \( \mathcal {N} _\theta :\mathbb {R}^m \times \mathcal {V}\rightarrow \mathcal {W}\).

Proof

We first fix \(\alpha \in \mathbb {R}^m\). By continuity of \(\sigma \), \( \mathcal {N} _\theta \) is also continuous and, for \(u \in L^\infty ((0,T) \times \Omega )\), \(\sup _{t,x} | \mathcal {N} _\theta (\alpha ,u(t,x))| < \infty \); thus, \( \mathcal {N} _\theta (\alpha ,u(t,\cdot )) \in L^{\hat{p}}(\Omega )\) for almost every \(t \in (0,T)\). It then follows by standard measurability arguments that the mapping \(t \mapsto \int _\Omega \mathcal {N} _\theta (\alpha ,u(t,x))w^*(x)\,\textrm{d}x\) is measurable for every \(w^* \in L^{{\hat{p}}^*}(\Omega )\). Using separability and the Pettis theorem [10, Theorem II.1.2], it follows that \(t \mapsto \mathcal {N} _\theta (\alpha ,u(t,\cdot )) \in L^{\hat{p}}(\Omega )\) is Bochner measurable. This, together with \(\sup _{t,x} | \mathcal {N} _\theta (\alpha ,u(t,x))| < \infty \) as before, implies that the Nemytskii operator \( \mathcal {N} _\theta :\mathbb {R}^m \times \mathcal {V}\rightarrow L^2(0,T;L^{\hat{p}}(\Omega )) \) is well defined. The remaining assertions follow immediately from \(L^{\hat{p}}(\Omega ) \hookrightarrow W\). \(\square \)

We again use the same notation for \( \mathcal {N} _\theta :\mathbb {R}^{m} \times \mathbb {R}\rightarrow \mathbb {R}\) and the corresponding Nemytskii operator.

2.4 The Learning Problem

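As the nonlinearity f is represented by a neural network \(\mathcal {N}_\theta :\mathbb {R}^{m+1} \rightarrow \mathbb {R}\), we rewrite the PDE model (1) in the form

$$\begin{aligned} \dot{u}&= F(\lambda ,u) + \mathcal {N}_\theta (\alpha ,u) \quad \text {in } (0,T)\times \Omega , \\ u(0)&= u_0 \quad \text {in } \Omega , \end{aligned}$$(11)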

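and introduce the forward operator \(\mathcal {G}\), which incorporates the observation operator M, as

$$\begin{aligned}&\mathcal {G}: X\times \mathbb {R}^m\times U_0\times \mathcal {V}\times \Theta \rightarrow \mathcal {W}\times H\times \mathcal {Y}, \\&\mathcal {G}(\lambda ,\alpha ,u_0,u,\theta ) = \left( \dot{u}-F(\lambda ,u)-\mathcal {N}_\theta (\alpha ,u),\ u(0)-u_0,\ Mu\right) . \end{aligned}$$(12)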

Here, \(U_0\) and H are the spaces related to the initial condition and the trace operator, that is, one has unknown initial data \(u_0\in U_0\) and trace operator \((\cdot )_{t=0}: \mathcal {V}\ni u\mapsto u(0)\in H\). With \(U_0\hookrightarrow H\) as assumed in (6), one has \(u(0)-u_0\in H\).
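To illustrate how the three components (PDE residual, initial-condition mismatch and observation) fit together in the all-at-once viewpoint, the following sketch evaluates a discrete analogue for a one-dimensional toy problem. Everything specific here is an assumption made for the illustration: the choice F(λ, u) = λ u_xx with a simple zero-flux boundary treatment, the forward difference in time, the callable `net` standing in for the network, and all function names.

```python
import numpy as np

def laplacian_1d(u, dx):
    """Second difference in space; edge padding gives a simple zero-flux boundary."""
    padded = np.pad(u, ((0, 0), (1, 1)), mode="edge")
    return (padded[:, 2:] - 2.0 * u + padded[:, :-2]) / dx**2

def forward_operator(lam, alpha, u0, u, net, measure, dt, dx):
    """Discrete analogue of (u' - F(lam, u) - N(alpha, u), u(0) - u0, M u).

    u has shape (nt, nx): one row per time step, one column per grid point.
    """
    u_dot = (u[1:] - u[:-1]) / dt                                  # forward difference in time
    model = lam * laplacian_1d(u[:-1], dx) + net(alpha, u[:-1])    # F + N on the same time levels
    return u_dot - model, u[0] - u0, measure(u)

# Toy setup: the state is handled as a free variable, here filled by explicit Euler.
nx, nt, dx, dt = 16, 50, 1.0 / 15, 1e-4
lam = np.full(nx, 0.5)                        # space-dependent physical parameter
alpha = 2.0
net = lambda a, v: -a * v * (1.0 - v)         # stand-in for N_theta (Fisher-type term)
u0 = np.linspace(0.1, 0.9, nx)

u = np.empty((nt, nx))
u[0] = u0
for n in range(nt - 1):
    rhs = lam * laplacian_1d(u[n:n + 1], dx)[0] + net(alpha, u[n])
    u[n + 1] = u[n] + dt * rhs

take_snapshots = lambda v: v[::10]            # M: every tenth time step
residual, init_gap, y = forward_operator(lam, alpha, u0, u, net, take_snapshots, dt, dx)
```

For a state that satisfies the discrete model, the first two components vanish up to rounding, while for an arbitrary candidate state they measure how far the PDE and the initial condition are from being fulfilled. No parameter-to-state map is evaluated inside `forward_operator`.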

The minimization problem for the learning process is then given by

where \(\mathcal {R}_1:X \times \mathbb {R}^m \times U_0 \times \mathcal {V}\rightarrow [0,\infty ]\) and \(\mathcal {R}_2:\Theta \rightarrow [0,\infty ]\) are suitable regularization functionals.

Assume now that the particular parameter \(\hat{\theta }\) has been learned. As in (4), one can now solve other parameter identification problems, given a new measured datum \(y\approx Mu\), by solving

3 Learning-Informed Parameter Identification

3.1 Well-Posedness of Minimization Problems

We start our analysis by studying existence theory for the optimization problems (13) and (14), where the unknown nonlinearity is replaced by a neural network approximation. To this aim, we first establish weak closedness of the forward operator. In what follows, the architecture of the network \( \mathcal {N} \) is considered fixed.

Lemma 5

Let Assumption 1 hold. Then, if \(\sigma \in \mathcal {C}_\text {locLip}(\mathbb {R},\mathbb {R})\), \( \mathcal {N} :\mathbb {R}^m \times \mathcal {V}\times \Theta \rightarrow \mathcal {W}\) is weakly continuous. Further, if either

or

and \((-F)\) is pseudomonotone in the sense that for almost all \(t\in (0,T),\)

then F is weakly closed. Moreover, if \( \mathcal {N} \) is weakly continuous and F is weakly closed, then \(\mathcal {G}\) as in (12) is weakly closed.

Proof

We first consider weak closedness of \(\mathcal {G}\). To this aim, recall that \(\mathcal {G}\) is given as

First note that \( M\in \mathcal {L}(\mathcal {V},\mathcal {Y})\) by (9). Weak closedness of \(((\cdot )_{t=0},\text {Id}):\mathcal {V}\times U_0 \rightarrow H\) follows from weak continuity of \(\text {Id}:U_0 \rightarrow H\) as \(U_0 \hookrightarrow H\), and from weak-weak continuity of \((\cdot )_{t=0}:\mathcal {V}\rightarrow H\) which follows from \(\Vert u(0)\Vert _H \le \sup _{t\in [0,T]}\Vert u(t)\Vert _H \le C\Vert u\Vert _\mathcal {V}\) for \(C>0\) and \(\mathcal {V}\hookrightarrow C(0,T;H)\). Weak continuity of \(\frac{d}{dt}:\mathcal {V}\rightarrow \mathcal {W}\) results from the choice of norms in the respective spaces. Thus, weak closedness of \(\mathcal {G}\) follows when F is weakly closed and \( \mathcal {N} \) is weakly continuous.

Weak continuity of \( \mathcal {N} \). First, we observe that \( \mathcal {N} : \mathbb {R}^m \times \mathbb {R}\times \Theta \rightarrow \mathbb {R}, (\alpha ,y,\theta ) \mapsto \mathcal {N} _\theta (\alpha ,y)\) is in \(\mathcal {C}_\text {locLip}(\mathbb {R}^m \times \mathbb {R}\times \Theta ,\mathbb {R})\), since the activation function \(\sigma \) is locally Lipschitz continuous. For a sequence \((\alpha _n,u_n,\theta _n)_n\) converging weakly to \((\alpha ,u,\theta )\) in \(\mathbb {R}^m \times \mathcal {V}\times \Theta \), we observe that by the embedding \(\mathcal {V}\hookrightarrow L^\infty ((0,T)\times \Omega )\), \(\sup _{t,x} \Vert (\alpha _n,u_n(t,x),\theta _n)\Vert < M\) for some \(M>0\).

Now the embeddings  imply in particular that  (in case \(\widetilde{V}\hookrightarrow L^{\hat{p}}(\Omega )\), this follows from  together with [32, Lemma 7.7] (see Appendix A), in the other case that \(L^{\hat{p}}(\Omega )\hookrightarrow \widetilde{V}\), this follows directly from [32, Lemma 7.7]). Based on this, we deduce \(u_n\rightarrow u\) in \(L^2(0,T;L^{\hat{p}}(\Omega ))\). Then

as \(W^*\hookrightarrow L^{\frac{{\hat{p}}}{{\hat{p}}-1}}(\Omega )\), \(u_n\overset{n\rightarrow \infty }{\rightarrow }u\) in \(L^2(0,T;L^{\hat{p}}(\Omega ))\), and \( \mathcal {N} (\alpha ,u_n(t,\cdot ),\theta _n) \in L^{\hat{p}}(\Omega )\), as argued in the proof of Lemma 4. Above, L(M) denotes the Lipschitz constant of \((\alpha ,y,\theta ) \mapsto \mathcal {N} _\theta (\alpha ,y)\) in the ball with radius M and \({\hat{p}}/({\hat{p}}-1) = \infty \) in case \({\hat{p}}=1\). This shows that here, we even obtain weak-strong continuity of \(\mathcal {N}_\theta \), which is stronger than weak-weak continuity, as required.

Weak closedness of F. To show weak closedness of the Nemytskii operator \(F:X \times \mathcal {V}\rightarrow \mathcal {W}\), we consider two cases. We first consider the case that \(F(t,\cdot )\) is weakly continuous. To this aim, take \((\lambda _n,u_n)_n\) to be a sequence weakly converging to \((\lambda ,u)\) in \(X \times \mathcal {V}\). As \(\mathcal {V}\hookrightarrow C(0,T;H)\), we have \(u_n\overset{C(0,T;H)}{\rightharpoonup }u\) as \(n \rightarrow \infty \). Now, we show \(u_n(t)\overset{H}{\rightharpoonup }u(t)\) for all \(t\in (0,T)\) via the fact that the point-wise evaluation function \((\cdot )(t):\mathcal {V}\rightarrow H\) for any \( t\in [0,T]\) is linear and bounded, thus weak-weak continuous. Indeed, its linearity is clear and boundedness follows from

From this, we obtain \(u_n(t)\overset{H}{\rightharpoonup }u(t)\), thus having \( (u_n(t),\lambda _n)\overset{H\times X}{\rightharpoonup }(u(t),\lambda )\) for all \(t \in (0,T).\) Using the growth condition (7), we now estimate

where \(C(\Vert \lambda \Vert _X,\Vert u\Vert _\mathcal {V})>0\) can be obtained independently from n due to \(\mathcal {V}\hookrightarrow C(0,T;H)\), \(\mathcal {B}\) being increasing, and boundedness of \(((u_n,\lambda _n))_n \) in \(\mathcal {V}\times X\). Since F is assumed to be weakly continuous on \(H\times X\), when \(n\rightarrow \infty \) we have \(\epsilon _n(t)\rightarrow 0\) pointwise in t. Hence, applying Lebesgue’s Dominated Convergence Theorem yields convergence of the time integral to 0, thus weak convergence of \(F(\lambda _n,u_n)\) to \(F(\lambda ,u)\) in \(\mathcal {W}\) as claimed. Accordingly, if the condition (15) holds, we obtain weak-weak continuity of F.

Now we consider the second case, i.e. (16)-(17), for weak closedness of F. Assume that  as in (16), \( H\hookrightarrow W^*\) and that \(-F\) is pseudomonotone as in (17). Given \((u_n,\lambda _n) \overset{\mathcal {V}\times X}{\rightharpoonup }\ (u,\lambda )\), \(F({\lambda _n,u_n})\overset{\mathcal {W}}{\rightharpoonup }\ g\) and  , it follows that  [32, Lemma 7.7] (see Appendix A) and that \(u_{n}\rightarrow u \) strongly in \(L^2(0,T;H)\). By the embedding \(H \hookrightarrow W^*\), it holds also \(u_{n} \rightarrow u\) in \(\mathcal {W}^*\). With \(\xi _{n}{(t)}:= |\langle F(t,\lambda _{n},u_{n}(t)),u_{n}(t)-u(t)\rangle _{W,W^*}|\), we obtain

By moving to a subsequence indexed by \((n_k)_k\), we thus have \(\xi _{n_k}(t)\rightarrow 0 \) as \(k\rightarrow \infty \) for almost every \(t\in (0,T)\). As \(\underset{k\rightarrow \infty }{\liminf }\ \, \xi _{n_k}(t)= 0\), pseudomonotonicity (as in (17)) implies that for any \(v\in \mathcal {W}^*\),

Further, from the Fatou–Lebesgue theorem, we get

where the last estimate follows from (20) and from weak convergence of \(F(\lambda _n,u_n)\) to g in \(\mathcal {W}\). As this estimate is valid for any \(v\in \mathcal {W}^*\), we conclude that F is weakly closed on \(X\times \mathcal {V}\), that is,

\(\square \)

Existence of a solution to (13) and (14) now follows from a standard application of the direct method [13, 37], using weak-closedness of \(\mathcal {G}\) and weak lower semi-continuity of the involved quantities.

Proposition 6

(Existence) Let the assumptions of Lemma 5 hold, and assume that \(\mathcal {R}_1, \mathcal {R}_2 \) are nonnegative, weakly lower semi-continuous and such that the sublevel sets of \((\lambda ,\alpha ,u_0,u,\theta ) \mapsto \mathcal {R}_1(\lambda ,\alpha ,u_0,u) + \mathcal {R}_2(\theta )\) are weakly precompact. Then the minimization problems (13) and (14) admit a solution.

Remark 7

(Stability) We note that under the assumptions of Proposition 6, stability for the minimization problems (13) and (14) also follows with standard arguments, see for instance [17, Theorem 3.2]. Here, stability means that for any sequence of data \((y_n)_n\) converging to some y, any corresponding sequence of solutions admits a weakly convergent subsequence, and any limit of such a weakly convergent subsequence is a solution of the original problem with data y.

Next we deal with the minimization problem (13) in the limit case where the given data converges to a noise-free ground truth, and the PDE should be fulfilled exactly. Our result in this context is a direct extension of classical results as provided for instance in [17], but since variants of this result will also be of interest, we provide a short proof.

Proposition 8

(Limit case) Under the assumptions of Proposition 6 and with parameters \(\beta ^e,\beta ^M>0\), consider the parametrized learning problem

and assume that, for \(((y^\dagger )^k)_k \in \mathcal {Y}^K\), there exists \((\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k)_k \in X \times \mathbb {R}^m \times U_0\times \mathcal {V}\) and \(\hat{\theta }\in \Theta \) such that \( e(\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k,\hat{\theta }) = 0 \) , \( M{\hat{u}}^k = (y^\dagger )^k\), \(\mathcal {R}_1(\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k)< \infty \) for all k and \( \mathcal {R}_2(\hat{\theta })< \infty \).

Then, for any sequence \((y_n)_n = (y_n^1,\ldots ,y_n^K)_n \) in \(\mathcal {Y}^K\) with \(\sum _{k=1}^K\Vert y^k_n - {(y^\dagger )^k} \Vert ^2_{\mathcal {Y}}:= \delta _n^2 \rightarrow 0\) and parameters \(\beta _n^e,\beta _n^M\) such that

as \(n \rightarrow \infty \), any sequence of solutions \(((\lambda _n^k,\alpha _n^k,(u_0^k)_n,u_n^k)_k,\theta _n)_n\) of (21) with parameters \(\beta _n^e,\beta _n^M\) and data \(y_n\) admits a weakly convergent subsequence, and any limit of such a subsequence is a solution to

If, further, the solution to (22) is unique, then the entire sequence \(((\lambda _n^k,\alpha _n^k,(u_0^k)_n,u_n^k)_k,\theta _n)_n\) weakly converges to the solution of (22).

Proof

With \((\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k)_k \) and \(\hat{\theta }\) arbitrary such that \(e(\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k,\hat{\theta }) = 0 \) and \( M{\hat{u}}^k = (y^\dagger )^k\), and \(((\lambda _n^k,\alpha _n^k,(u_0^k)_n,u_n^k)_k,\theta _n)_n\) any sequence of solutions to (21) with parameters \(\beta ^e_n,\beta ^M_n\), by optimality it holds that

By weak precompactness of the sublevel sets of \(\mathcal {R}_1\) and \(\mathcal {R}_2\) and convergence of \(\beta _n^M\delta _n^2\) to zero it thus follows that \(((\lambda _n^k,\alpha _n^k,(u_0^k)_n,u_n^k)_k,\theta _n)_n\) admits a weakly convergent subsequence in \((X \times \mathbb {R}^m \times U_0\times \mathcal {V})^K\times \Theta \).

Now let \(((\lambda ^k,\alpha ^k,u_0^k,u^k)_k,\theta )\) be the limit of such a weakly convergent subsequence, which we again denote by \(((\lambda _n^k,\alpha _n^k,(u_0^k)_n,u_n^k)_k,\theta _n)_n\). Closedness of \(\mathcal {G}\) together with lower semi-continuity of the norm \(\Vert \cdot \Vert _{\mathcal {W}\times H}\) and the estimate (23) (possibly moving to another non-relabeled subsequence) then yields that both

and

This shows that \(e(\lambda ^k,\alpha ^k,u_0^k,u^k,\theta ) = 0 \) and \( Mu^k = (y^\dagger )^k\) for all k. Again using the estimate (23), now together with weak lower semi-continuity of \(\mathcal {R}_1,\mathcal {R}_2\), we further obtain that

Since \((\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k)_k \) and \(\hat{\theta }\) were arbitrary solutions of \(e(\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k,\hat{\theta }) = 0 \) and \( M{\hat{u}}^k = (y^\dagger )^k\), it follows that \(((\lambda ^k,\alpha ^k,u_0^k,u^k)_k,\theta )\) solves (22) as claimed.

At last, in case the solution to (22) is unique, weak convergence of the entire sequence follows by a standard argument, using that any subsequence contains another subsequence that weakly converges to the same limit. \(\square \)

Remark 9

(Different limit cases) The above result considers the limit case of both fulfilling the PDE exactly and matching noise-free ground truth measurements. Variants can be easily obtained as follows: In case only the PDE should be fulfilled exactly, one can consider \(\beta ^M\) fixed and only \(\beta ^e\) converging to infinity (at an arbitrary rate), such that the resulting limit solution will be a solution of the reduced setting. Likewise, one can consider the case that \(\beta ^e\) is fixed and \(\beta ^M\) converges to infinity appropriately in dependence of the noise level \(\delta \), in which case the limit solution solves the all-at-once setting with the hard constraint \(Mu^k=(y^\dagger )^k\), see [18] for some general results in that direction. The corresponding assumption of existence of \(((\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k)_k,\hat{\theta })\) such that \(e(\hat{\lambda }^k,\hat{\alpha }^k,{\hat{u}}_0^k,{\hat{u}}^k,\hat{\theta }) = 0 \) and \( M{\hat{u}}^k = (y^\dagger )^k\) can be weakened in both cases accordingly.

Further, note that the convergence result as well as its variants can also be deduced for the learning-informed parameter identification problem (14) in exactly the same way.

Remark 10

(Uniqueness of minimum-norm solution.) A sufficient condition for uniqueness of a minimum-norm solution, and thus for convergence of the entire sequence of minimizers as stated in Proposition 8, is the tangential cone condition and existence of a solution \((\hat{\lambda }^k,\hat{u}_0^k,\hat{u}^k)\) to the PDE such that \(M\hat{u}^k = (y^\dagger )^k\), see [22, Proposition 2.1]. In Sect. 3.3 below, we discuss this condition in more detail and provide a result which, together with Remark 19, ensures this condition to hold for some particular choices of F and \( \mathcal {N} _\theta \). Regarding solvability of the PDE, we refer to Proposition 24 below, where a particular application is considered.

3.2 Differentiability of the Forward Operator

Solution methods for nonlinear optimization problems, like gradient descent or Newton-type methods, require uniform boundedness of the derivative of \(\mathcal {G}\). Differentiability of \(\mathcal {G}\) is a question of differentiability of F and \( \mathcal {N} \), which is discussed in the following. Note that there, and henceforth, we denote by \(H'(a): A \rightarrow B\) the Gâteaux derivative of a function \(H:A \rightarrow B\) and define Gâteaux differentiability in the sense of [37, Sect. 2.6], i.e., require \(H'(a)\) to be a bounded linear operator. The basis for differentiability of the forward operator is the following lemma, which is a direct extension of [37, Lemma 4.12].

Lemma 11

Let A, B, S be Banach spaces such that \(A \hookrightarrow S\). For \(\Sigma \subset \mathbb {R}^N\) open and bounded, and \(r \in [1,\infty )\), let \(\mathcal {A}\), \(\mathcal {B}\) be Banach spaces such that \(\mathcal {A}\hookrightarrow L^r(\Sigma ,A)\) and \(\mathcal {A}\hookrightarrow L^\infty (\Sigma ,S)\), and \(L^r(\Sigma ,B) \hookrightarrow \mathcal {B}\). Further, let \(H:\Sigma \times A \rightarrow B\) be a function such that \(H(z,\cdot )\) is Gâteaux differentiable for every \(z \in \Sigma \) with derivative \(H'(z,\cdot )\), and such that H is locally Lipschitz continuous in the sense that, for any \(M>0\) there exists \(L(M)>0\) such that for every \(a,\xi \in A\) with \(\max \{\Vert a\Vert _S,\Vert \xi \Vert _S\} \le M\)

Then, if the Nemytskii operators \(H:\mathcal {A}\rightarrow \mathcal {B}\) given as \(H(a)(z) = H(z,a(z))\) and \(H':\mathcal {A}\rightarrow \mathcal {L}(\mathcal {A},\mathcal {B})\) given as \(H'(a)(\xi )(z) = H'(z,a(z))(\xi (z))\) are well defined, \(H:\mathcal {A}\rightarrow \mathcal {B}\) is also Gâteaux differentiable with \(H'(a) \in \mathcal {L}(\mathcal {A},\mathcal {B})\) given as \(H'(a)(\xi )(z) = H'(z,a(z))(\xi (z))\). Further, \(H'\) is locally bounded in the sense that, for any bounded set \(\tilde{\mathcal {A}} \subset \mathcal {A}\), \( \sup _{a \in \tilde{\mathcal {A}}} \Vert H'(a)\Vert < \infty . \)

Proof

Fix \(M>0\) and \(z \in \Sigma \). Local Lipschitz continuity implies for any \(\tilde{a},\xi \in A\) with \(\Vert \tilde{a}\Vert _S+1\le M\),

Next, define \(h:[0,1] \rightarrow B\) as \(h(s) = H(z,a + \epsilon s \xi )\), for \(a \in A\) and \(\epsilon \in (0,1)\) such that \(\Vert a\Vert _S+2 \le M\), \(\epsilon \Vert \xi \Vert _S \le 1\). We note that h is differentiable and Lipschitz continuous (hence absolutely continuous), such that by the fundamental theorem of calculus for Bochner spaces, see [15, Theorem 2.2.17], \(h(1) - h(0) = \int _0^1\,h'(s) \,\textrm{d}s.\) This yields

Now by \(\mathcal {A}\hookrightarrow L^\infty (\Sigma ,S)\), for \(a,\xi \in \mathcal {A}\), we can apply the above with \(M:= \sup _{z \in \Sigma }\Vert a(z)\Vert _S+2\) and \(\epsilon \) sufficiently small such that \(\epsilon \sup _{z \in \Sigma }\Vert \xi (z)\Vert _S \le 1\) and obtain

Using Lebesgue’s Dominated Convergence Theorem, we deduce \(\lim _{\epsilon \rightarrow 0} r_H(\epsilon )=0\), which, by \(L^r(\Sigma ,B) \hookrightarrow \mathcal {B}\), shows Gâteaux differentiability.

Local boundedness as claimed follows directly from choosing \(M:= \sup _{a \in \tilde{\mathcal {A}}} \sup _{z \in \Sigma }\Vert a(z)\Vert _S+1\), and integrating the rth power of (26) over \(\Sigma \). \(\square \)
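The statement of Lemma 11 can be illustrated numerically in a discretized toy setting. The following minimal sketch is not part of the analysis: it assumes the scalar function \(h=\tanh \) on a uniform grid of \((0,1)\), with hypothetical names throughout, and compares the difference quotient of the superposition operator with the pointwise formula \(H'(a)(\xi )(z) = H'(z,a(z))(\xi (z))\).

```python
import math

def nemytskii(h, a):
    """Apply the pointwise function h to every sample of the grid function a."""
    return [h(v) for v in a]

def pointwise_derivative(dh, a, xi):
    """Candidate Gateaux derivative: (H'(a) xi)(z) = h'(a(z)) * xi(z)."""
    return [dh(v) * w for v, w in zip(a, xi)]

def l2_norm(f, dz):
    """Discrete L^2 norm on a uniform grid with cell width dz."""
    return math.sqrt(sum(v * v for v in f) * dz)

def remainder(h, dh, eps, a, xi, dz):
    """L^2 norm of (H(a + eps*xi) - H(a)) / eps - H'(a) xi on the grid."""
    shifted = nemytskii(h, [v + eps * w for v, w in zip(a, xi)])
    base = nemytskii(h, a)
    deriv = pointwise_derivative(dh, a, xi)
    quotient = [(s - b) / eps - d for s, b, d in zip(shifted, base, deriv)]
    return l2_norm(quotient, dz)

# Illustrative choices (assumptions): h = tanh, a and xi smooth grid functions.
h = math.tanh
dh = lambda v: 1.0 - math.tanh(v) ** 2

n = 200
dz = 1.0 / n
grid = [(i + 0.5) * dz for i in range(n)]
a = [math.sin(2 * math.pi * z) for z in grid]
xi = [math.cos(3 * z) for z in grid]

# Remainders for eps = 1e-1, ..., 1e-4; they should shrink as eps decreases.
remainders = [remainder(h, dh, 10.0 ** (-k), a, xi, dz) for k in range(1, 5)]
```

In this toy case the remainder decays roughly linearly in \(\epsilon \), which is consistent with, but of course much weaker than, the convergence \(r_H(\epsilon )\rightarrow 0\) shown in the proof.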

Proposition 12

(Differentiability) Let Assumption 1 hold and let \(\sigma \in \mathcal {C}^1(\mathbb {R},\mathbb {R})\). Assume that for every \(t \in (0,T)\), the mapping \(F(t,\cdot ,\cdot ):X \times V \rightarrow W\) is jointly Gâteaux differentiable with respect to the second and third arguments, with \((t,\lambda ,u,\xi ,v) \mapsto F'(t,\lambda ,u)(\xi ,v)\) satisfying the Carathéodory conditions.

In addition, assume that F satisfies the following local Lipschitz continuity condition: For all \( M\ge 0\) there exists \(L(M)>0\), such that for all \(v_i \in V\) and \(\lambda _i \in X\), \(i=1,2\), with \(\max \{\Vert v_i\Vert _H, \Vert \lambda _i\Vert _X\} \le M\) and for almost every \(t \in (0,T)\),

Then \(\mathcal {G}:X \times \mathbb {R}^m \times U_0 \times \mathcal {V}\times \Theta \rightarrow \mathcal {W}\times H \times \mathcal {Y}\) is Gâteaux differentiable with

Furthermore, \(\mathcal {G}'(\cdot )\) is locally bounded in the sense specified in Lemma 11.

Proof

First note that it suffices to show corresponding differentiability and local boundedness assertions for the different components of \(\mathcal {G}\) given as \(u \mapsto \dot{u}\), F, \( \mathcal {N} \), \((u,u_0) \mapsto u(0) - u_0\) and M. For all except F and \( \mathcal {N} \), the corresponding assertions are immediate, hence we focus on the latter two.

Regarding F, this is an immediate consequence of Lemma 11 with \(A = X \times V\), \(B = W\), \(S = X \times H\), \(\Sigma = (0,T)\), \(r = 2\), \(\mathcal {A}= X \times \mathcal {V}\) with \(\Vert (\lambda ,v)\Vert _\mathcal {A}= \Vert \lambda \Vert _X + \Vert v\Vert _\mathcal {V}\), \(\mathcal {B}= \mathcal {W}\) and \(H(t,(\lambda ,v)) = F(t,\lambda ,v)\).

For \( \mathcal {N} \), this is again an immediate consequence of Lemma 11 with \(A =S= \mathbb {R}^m \times \mathbb {R}\times \Theta \), \(B = \mathbb {R}\), \(\Sigma = (0,T) \times \Omega \), \(r=\max \{2,{\hat{p}}\}\), \(\mathcal {A}= \mathbb {R}^m \times \mathcal {V}\times \Theta \) with \(\Vert (\alpha ,v,\theta )\Vert _\mathcal {A}= |\alpha | + \Vert v\Vert _\mathcal {V}+ |\theta |\), \(\mathcal {B}= \mathcal {W}\) and \(H((t,x),(\alpha ,v,\theta )) = \mathcal {N} _{\theta }(\alpha ,v(t,x))\). \(\square \)

Remark 13

For stronger image spaces \(W\nsupseteq L^q(\Omega ), \forall q\in [1,\infty )\), differentiability of F remains valid if (27) holds, while differentiability of \( \mathcal {N} \) requires a smoother activation function, e.g., the one suggested in Remark 29 below.

3.3 Lipschitz Continuity and the Tangential Cone Condition

In this section, we focus on showing a rather strong Lipschitz-type result for the neural network. This property allows us to apply (finite-dimensional) gradient-based algorithms to learn the neural networks, where the Lipschitz constant and its derivatives are used to determine the step size. Moreover, by this Lipschitz continuity, the tangential cone condition on (14) can be verified. This condition, together with solvability of the learning-informed PDE, answers the important question of uniqueness of a minimizer to the limit case of (14), as mentioned in Remark 10.

For ease of notation, we assume in this lemma that the outer layer of the neural network has activation \(\sigma \), as in the lower layers. Adapting the proof for \(\sigma =\text {Id}\) in the last layer is straightforward.

Lemma 14

(Lipschitz properties of neural networks) Consider an L-layer neural network \( \mathcal {N} : \mathbb {R}^{m+1}\times \Theta \ni (z,\theta ) \mapsto \mathcal {N} _\theta ((z_1,\ldots ,z_m),z_{m+1})\in \mathbb {R}\), \(L\in \mathbb {N}\) (z taking the role of \((\alpha ,u(t,x))\) in Lemma 4). Denote by \( \mathcal {N} ^{i}_{\theta ^{i}}\) the i lowest layers of the neural network, depending only on z and on the i lowest-index pairs of parameters \(\theta ^{i}\), while \( \mathcal {N} ^0_{\theta ^0}(z):=z\in \mathbb {R}^{m+1}\).

Fix any subset \(\mathcal {B}\subseteq \mathbb {R}^{m+1}\times \Theta \). For each \(1\le i\le L\), define \(\mathcal {B}_i:=\{\omega ^i \mathcal {N} ^{i-1}_{\theta ^{i-1}}(z)+\beta ^i \mid (z,\theta )\in \mathcal {B}\}\), that is, the image of the i-th layer before applying the activation function. Assume that the activation function \(\sigma \in \mathcal {C}^1(\mathbb {R},\mathbb {R})\) associated to \(\mathcal {N}\) for all \(1\le i\le L\) satisfies the Lipschitz inequalities

for all x, \(\tilde{x}\in {\mathcal {B}_i}\) and some positive constants \(C_\sigma \), \(C'_\sigma \), and that \(s_i:=\sup _{x\in {\mathcal {B}_i}}\left| \sigma '(x)\right| < \infty \).

Fix now a layer l, \(1\le l\le L\), as well as \((\tilde{z},\theta )\), \((z,\bar{\theta })\), \((z,\hat{\theta })\in \mathcal {B}\), where \(\bar{\theta }\) differs from \(\theta \) only in that its l-th weight is replaced by some \(\tilde{\omega ^l}\) and \(\hat{\theta }\) differs from \(\theta \) only in that its l-th bias is replaced by some \(\tilde{\beta ^l}\); explicitly,

Then \( \mathcal {N} \) satisfies the Lipschitz estimates

while its derivatives with respect to z, \(\omega ^l\) and \(\beta ^l\), respectively, satisfy the Lipschitz estimates

where one defines \(C^z_{L+1}:=C^{\omega ^l}_{L+1}:=C^{\beta ^l}_{L+1}:=0\) and, by backward recursion for \(1\le i\le L\),

Proof

See Appendix B. \(\square \)

Remark 15

If \(\sigma '\) is locally Lipschitz continuous on \(\mathbb {R}\), the existence of \(C_\sigma \), \(C'_\sigma \) and the \(s_i\) is clear whenever \(\mathcal {B}\) is a bounded set. Thus, it is a direct consequence of Lemma 14 (or follows simply by the properties of the functions \( \mathcal {N} \) is composed of) that the mapping \((z,\theta ) \mapsto \mathcal {N} (z,\theta )\) restricted to any bounded set is bounded, Lipschitz continuous and has Lipschitz continuous derivative. This is relevant for gradient-based optimization algorithms to solve the learning problem (13), where Lipschitz continuity of the derivative of the objective function is a key ingredient for (local) convergence, see for instance [36] for a result in Hilbert spaces. In particular, Lipschitz continuity of \(\theta \mapsto \mathcal {N} (z,\theta )\) for z fixed is useful for the learning problem (13), where the exact \((\lambda ,u)\) is known. In this case, one simply learns the finite-dimensional hyperparameter \(\theta \), thus standard convergence results on gradient-based methods in finite dimensional vector spaces apply, see, e.g., [34, Sect. 5.3].
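To illustrate the Lipschitz continuity in z on a toy example, the following minimal sketch compares sampled difference quotients of a small \(\tanh \) network with a crude a priori bound. Note that this bound, the product over all layers of \(s\,\Vert \omega ^i\Vert _F\) with \(s\ge \sup |\sigma '|\), is a standard estimate and not the layerwise recursion of Lemma 14; the architecture, the random parameters and all names are assumptions made for illustration only.

```python
import math
import random

def layer(W, b, x, act):
    """One layer: act(W x + b), with W given as a list of rows."""
    return [act(sum(w * v for w, v in zip(row, x)) + bi) for row, bi in zip(W, b)]

def network(params, z):
    """Feed z through all layers; tanh is used in every layer, including the last."""
    x = z
    for W, b in params:
        x = layer(W, b, x, math.tanh)
    return x

def frobenius(W):
    """Frobenius norm, an upper bound for the spectral norm of W."""
    return math.sqrt(sum(w * w for row in W for w in row))

def lipschitz_bound(params, s=1.0):
    """Product over layers of s * ||W||_F, with s >= sup |sigma'| (s = 1 for tanh)."""
    bound = 1.0
    for W, _ in params:
        bound *= s * frobenius(W)
    return bound

random.seed(0)
sizes = [3, 4, 4, 1]  # hypothetical: input dimension m + 1 = 3, scalar output
params = [
    ([[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)],
     [random.uniform(-1, 1) for _ in range(n_out)])
    for n_in, n_out in zip(sizes, sizes[1:])
]
bound = lipschitz_bound(params)

# Largest sampled difference quotient; it can never exceed the bound.
worst_ratio = 0.0
for _ in range(1000):
    z1 = [random.uniform(-2, 2) for _ in range(3)]
    z2 = [random.uniform(-2, 2) for _ in range(3)]
    diff = abs(network(params, z1)[0] - network(params, z2)[0])
    worst_ratio = max(worst_ratio, diff / math.dist(z1, z2))
```

The sampled quotient is typically far below the product bound, which reflects that such global bounds are pessimistic; the set-dependent constants \(s_i\) in Lemma 14 are designed to be sharper on a bounded set \(\mathcal {B}\).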

Based on these Lipschitz estimates, we can study the tangential cone condition for the problem (14), given a learned \(\mathcal {N}_\theta \). For this, we assume that \(\mathcal {N}_\theta (\alpha ,u)=\mathcal {N}_\theta (u)\).

Condition 16

(Tangential cone condition [22, Expression (2.4)]) We say that the tangential cone condition for a mapping \(G:\mathcal {D}(G)(\subseteq X)\rightarrow Y\) holds in a ball \(\mathcal {B}^X_\rho (x^\dagger )\), if there exists \(c_{tc}<1\) such that

Here, \(G'(x)h\) denotes the directional derivative [24].

Analyzed in the all-at-once setting (14), the tangential cone condition reads as

for all \((\lambda ,u_0,u), (\tilde{\lambda },{\tilde{u}}_0,{\tilde{u}})\in B_\rho ^{X \times U_0\times \mathcal {V}}(\lambda ^\dagger ,u_0^\dagger ,u^\dagger )\), where \(F'\) and \( \mathcal {N} ' \) are the Gâteaux derivatives.

The tangential cone condition strongly depends on the PDE model F and the architectures of \( \mathcal {N} \). By triangle inequality, a sufficient condition for (33) to hold is that the tangential cone condition holds for F and for \( \mathcal {N} \) separately. The tangential cone condition in combination with solvability of equation \(G(x)=0\) ensures uniqueness of a minimum-norm solution [22, Proposition 2.1] (see Appendix A). Solvability of the operator equation \(G(x)=0\), according to the all-at-once formulation, is the question of solvability of the learning-informed PDE and exact measurements, i.e. \(\delta =0\). For solvability of the learning-informed PDE, we refer to Proposition 24 in Sect. 4. In the following, we focus on the tangential cone condition for the neural networks by studying Condition 16 for \(G:=\mathcal {N}_\theta .\)

Lemma 17

(Tangential cone condition for neural networks) The tangential cone condition in Condition 16 for \(G = \mathcal {N} _\theta {: \mathcal {V}\rightarrow \mathcal {W}}\) with fixed parameter \(\theta \) holds in any ball \( \mathcal {B} ^\mathcal {V}_\rho (u^\dagger ) \) if \(M=\text {Id},\) \( Y\hookrightarrow L^{\hat{p}}(\Omega )\) with \({\hat{p}}>0\) as in (6), \(\sigma \in \mathcal {C}^1(\mathbb {R},\mathbb {R})\) and \(\rho \), depending on the Lipschitz constant in Lemma 14, is sufficiently small.

Proof

Since \(\mathcal {V}\hookrightarrow L^\infty ((0,T)\times \Omega )\) for \(u, {\tilde{u}} \in \mathcal {B}_\rho ^\mathcal {V}(u^\dagger )\), we have for almost all \((t,x)\in (0,T)\times \Omega \) that \(u(t,x), \tilde{u}(t,x)\in \mathcal {B}\) for some \(\mathcal {B}\) bounded. Thus, we can use Lemma 14 with such a \(\mathcal {B}\), and in particular the estimate (62) for \(z=u(t,x)\), to obtain

where \(C^z_1|\omega _1|\) is the Lipschitz constant of \( \mathcal {N} '_u\) derived in Lemma 14, and \(c_{tc}<1\) if

We note that having full observation, i.e. \(M=\text {Id}\), is crucial for establishing the tangential cone condition, as it allows us to link the estimate from \(\Vert u- {\tilde{u}}\Vert _\mathcal {Y}\) to \(\Vert M(u- {\tilde{u}})\Vert _\mathcal {Y}\), yielding the last quantity on the right hand side of (33). The necessity of full observation has also been mentioned in [24]. \(\square \)
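The role of full observation can be made visible in a scalar toy computation. The sketch below is an illustration under stated assumptions, not the estimate of the proof: it uses a hypothetical one-neuron \(\tanh \) network, takes the tangential cone condition in its standard form \(\Vert G(x)-G(\tilde{x})-G'(x)(x-\tilde{x})\Vert \le c_{tc}\Vert G(x)-G(\tilde{x})\Vert \), and mimics the all-at-once structure with \(M=\text {Id}\) by the stacked map \(u\mapsto (\mathcal {N}_\theta (u),u)\), whose linearization error stems from the network component only.

```python
import math

W_SCALAR, B_SCALAR = 1.5, 0.2  # hypothetical weight and bias of a one-neuron network

def net(u):
    """Toy network u -> tanh(w * u + b)."""
    return math.tanh(W_SCALAR * u + B_SCALAR)

def dnet(u):
    """Derivative of the toy network with respect to u."""
    return W_SCALAR * (1.0 - math.tanh(W_SCALAR * u + B_SCALAR) ** 2)

def cone_ratio(u, u_tilde):
    """Linearization error of the network divided by the norm of the
    difference of the stacked map u -> (net(u), u) (full observation)."""
    error = abs(net(u) - net(u_tilde) - dnet(u) * (u - u_tilde))
    rhs = math.hypot(net(u) - net(u_tilde), u - u_tilde)
    return error / rhs

def worst_ratio(center, rho, n=41):
    """Largest ratio over all pairs of distinct grid points in the ball."""
    pts = [center - rho + 2 * rho * i / (n - 1) for i in range(n)]
    return max(cone_ratio(u, v) for u in pts for v in pts if u != v)

# Radii 1.0, 0.1, 0.01 around the (assumed) center u = 0.3.
ratios = {rho: worst_ratio(0.3, rho) for rho in (1.0, 0.1, 0.01)}
```

Since the observation component contributes \(|u-\tilde{u}|\) to the denominator, the ratio is bounded by a multiple of \(\rho \) and drops below one for small radii, in line with the smallness requirement on \(\rho \) in Lemma 17.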

Now using [22, Proposition 2.1] together with Lemma 17, a uniqueness result follows.

Proposition 18

(Uniqueness of minimizer for the limit case of (14)) With \(\nu \ge 1\), consider the regularizer \(\mathcal {R}_1=\Vert \cdot \Vert ^\nu _{X\times \mathbb {R}^m \times U_0\times \mathcal {V}}\), and assume that the conditions in Lemma 17 are satisfied. Moreover, suppose that the tangential cone condition for F holds in \(\mathcal {B}_\rho ^{X\times U_0\times \mathcal {V}}(\lambda ^\dagger ,u_0^\dagger ,u^\dagger )\) and the equation \(\mathcal {G}(\lambda ,u_0,u,\hat{\theta }) = 0\) with \(\mathcal {G}\) in (12) and \(\hat{\theta }\) fixed is solvable in \(\mathcal {B}_\rho ^{X\times U_0\times \mathcal {V}}(\lambda ^\dagger ,u_0^\dagger ,u^\dagger )\). Then the limit case of the parameter identification problem (14) admits a unique minimizer in the ball \(\mathcal {B}_\rho ^{X\times U_0\times \mathcal {V}}(\lambda ^\dagger ,u_0^\dagger ,u^\dagger )\).

Remark 19

We refer to Sect. 4 below for solvability of the learning-informed PDE in an application, and to [24] for concrete choices of F and of function space settings such that the tangential cone condition can be verified.

Note that, while the tangential cone condition for the limit case of the parameter identification problem (14) can be confirmed as above, the same question for the learning problem (13) remains open.

4 Application

In this section, as special case of the dynamical system (1), we examine a class of general parabolic problems given as

where \(\Omega \subset \mathbb {R}^d\) is a bounded \(C^2\)-class domain, with \( d\in \{1,2,3 \}\) being relevant in practice. The nonlinearity f, which can be replaced by a neural network later, is assumed to be given as the Nemytskii operator \(f:\mathbb {R}^m\times \mathcal {V}\rightarrow \mathcal {W}\) [32, Sect. 1.3] of a pointwise function \(f:\mathbb {R}^m\times \mathbb {R}\rightarrow \mathbb {R}\), making use of the notation \( [f(\alpha ,u)](t,x) = f(\alpha ,u(t,x))\). We initially work with the following parameter spaces

where \(Pa >d\), and, for existence of a solution, we will require the constraints

Thus, the overall parameter space X is given as \(X=(X_\varphi ,X_c,X_a)\).

4.1 Unique Existence Results for (34)

Our next goal is to study unique existence of (34). The main purpose of this is to inspire a relevant choice of function space setting for the all-at-once setting of (13) and (14), even though unique existence is not required there. Also, a unique existence result is of interest for studying the reduced setting, where well-definedness of the parameter-to-state map is needed.

We will proceed in two steps: In the first step, we prove that (34) admits a unique solution

with \(W^{1,p,q}(0,T;V_1,V_2):=\{u\in L^p(0,T;V_1):\dot{u}\in L^q(0,T;V_2)\}\). Then, in the second step, we lift the regularity of u to the somewhat stronger space

to achieve boundedness in time and space of the solution, which will later serve our purpose of working with a neural network acting pointwise. It is worth noting that the study of unique existence is first carried out for classes of general nonlinearities f satisfying some specific assumptions, such as pseudomonotonicity and growth conditions, see Lemmas 21 and 23 below. The nonlinearity f as a neural network will then be considered in Proposition 24 and Remark 25.

Before investigating (34), we summarize the unique existence theory as provided in [32, Theorems 8.18, 8.31] for the autonomous case.

Theorem 20

Let \({\hat{V}}\) be a Banach space, \({\hat{H}}\) be a Hilbert space and assume that for \({\hat{F}}:{\hat{V}}\rightarrow {\hat{V}}^*\), \(u_0 \in {\hat{H}}\) and \(\varphi \in {\hat{V}}^*\), with the Gelfand triple \({\hat{V}}\subseteq {\hat{H}}\cong {\hat{H}}^* \subseteq {\hat{V}}^*\), the following holds:

S1. \({\hat{F}}\) is pseudomonotone.

S2. \({\hat{F}}\) is semi-coercive, i.e.,

$$\begin{aligned} \forall v \in {\hat{V}}: \langle {\hat{F}}(v),v\rangle _{{\hat{V}}^*,{\hat{V}}} \ge c_0|v|^2_{\hat{V}}-c_1|v|_{\hat{V}}-c_2\Vert v\Vert _{{\hat{H}}}^2 \end{aligned}$$

for some \(c_0 > 0\) and some seminorm \(|.|_{{\hat{V}}}>0\) satisfying \(\forall v \in {\hat{V}}: \Vert v\Vert _{{\hat{V}}} \le c_{|.|}(|v|_{{\hat{V}}} + \Vert v\Vert _{{\hat{H}}})\).

S3. \({\hat{F}}\), \(u_0\) and \(\varphi \) satisfy the regularity condition \({\hat{F}}(u_0)-\varphi \in {\hat{H}}\), \(u_0 \in {\hat{V}}\) and

$$\begin{aligned} \langle {\hat{F}}(u)-{\hat{F}}(v), u-v\rangle _{{\hat{V}}^*,{\hat{V}}}\ge C_0|u-v|^2_{{\hat{V}}} -C_2\Vert u-v\Vert _{{\hat{H}}}^2 \end{aligned}$$

for all \(u,v \in {\hat{V}}\) with some \(C_0>0.\)

Then the abstract Cauchy problem

has a unique solution \(u \in W^{1,\infty ,\infty }(0,T;{\hat{H}},{\hat{H}})\cap W^{1,\infty ,2}(0,T;{\hat{V}},{\hat{V}})\).

By verifying the conditions in Theorem 20, we now obtain unique existence as follows.

Lemma 21

(Unique existence) Let the nonlinearity \(f(\alpha ,\cdot ):H_0^1(\Omega ) \rightarrow H_0^1(\Omega )^*\) be given as the Nemytskii mapping of a measurable function \(f(\alpha ,\cdot ):\mathbb {R}\rightarrow \mathbb {R}\) that satisfies

Then, equation (34) with parameter \(\varphi \), c, a and \(u_0\) such that (35), (36) hold, admits a unique solution

Proof

We verify the conditions in Theorem 20 for \({\hat{H}}= L^2(\Omega )\), \({\hat{V}}= H_0^1(\Omega )\) with \(\Vert u\Vert _{{\hat{V}}}=\Vert \nabla u\Vert _{{\hat{H}}}\) and \({\hat{F}}(u):= -F(u) - f(\alpha ,u)\), where \(F:{\hat{V}}\rightarrow {\hat{V}}^*\) is given as

First, note that due to measurability and the growth constraint, the Nemytskii mapping \(f(\alpha ,\cdot ):{\hat{V}}\rightarrow {\hat{V}}^*\), where we set \(f(\alpha ,u)(w):= \int _\Omega f(\alpha ,u(x))w(x) \,\textrm{d}x\) for \(w \in {\hat{V}}\), is indeed well-defined since,

Since \(0<\underline{a}\le a\) almost everywhere on \(\Omega \) and \(c\in L^2(\Omega )\), the estimate

yields

with \(c_0:=\underline{a}/4\), \(c_2:=(C_{H^1\rightarrow L^6})^6\Vert c\Vert _{L^2(\Omega )}^4/4\underline{a}^3\). Together with monotonicity of \({-f(\alpha ,\cdot )}\) and \(f(\alpha ,0)=0\), one has \(\langle f(\alpha ,u),u\rangle _{{\hat{V}}^*,{\hat{V}}}=\langle f(\alpha ,u)-f(\alpha ,0), u-0\rangle _{{\hat{V}}^*,{\hat{V}}}\ge 0\). This implies semicoercivity as in S2 with \(c_0\), \(c_2\) as above and \(c_1 = 0\). Also, the second estimate in the regularity condition S3 now follows directly with

where again, we employ monotonicity of \(f(\alpha ,\cdot )\).

In order to verify pseudomonotonicity S1, we first notice that \({\hat{F}}:{\hat{V}}\rightarrow {\hat{V}}^*\) is bounded, i.e., it maps bounded sets to bounded sets, and continuous, where the latter follows from continuity of F, which is immediate, and continuity of f, which holds by assumption. Using this, one can apply [14, Lemma 6.7] to conclude pseudomonotonicity if the following statement is true:

The latter follows since, by the compact embedding \({\hat{V}}\hookrightarrow {\hat{H}}\), one gets for \(u_n \overset{{\hat{V}}}{\rightharpoonup }\ u\) that \(u_n\overset{{\hat{H}}}{\rightarrow }u\) and

which implies \(u_n \overset{{\hat{V}}}{\rightarrow }u\) as \(n\rightarrow \infty .\) With this, Theorem 20 implies unique existence of a solution

\(\square \)

Note that, by embedding, \(u\in W^{1,\infty ,\infty }(0,T;{\hat{H}},{\hat{H}})\cap W^{1,\infty ,2}(0,T;{\hat{V}},{\hat{V}})\) implies that \(u\in L^\infty (0,T;{\hat{V}})\cap H^1(0,T;{\hat{V}})\). In a second step, we now aim to find suitable assumptions on the parameter spaces \(X_\varphi \), \(X_c\), \(X_a\) and \(U_0\) such that regularity of the solution u of (34) as obtained in the previous lemma is lifted to \(u\in L^\infty ((0,T)\times \Omega )\).

Remark 22

There are at least two ways to achieve this: One is to enhance space regularity of u from \(H^1(\Omega ) \) to \(W^{k,p}(\Omega )\) with \( kp>d\) such that \(W^{k,p} (\Omega ) \hookrightarrow C(\overline{\Omega })\) and we can ensure \(u \in L^\infty ( (0,T), C(\overline{\Omega })) \hookrightarrow L^\infty ((0,T)\times \Omega )\). The other possible approach is to ensure a \( W^{2,q}(\Omega )\)-space regularity with q sufficiently large such that \( u \in L^2((0,T),W^{2,q}(\Omega ))\cap H^1(0,T;W^{2,q}(\Omega ))\hookrightarrow C(0,T;L^\infty (\Omega ))\).

While the first approach might yield a weaker condition on kp, it imposes a non-reflexive state space. The second approach, on the other hand, fits better into our setting of reflexive spaces, thus we proceed with it.

Now our goal is to determine an exponent q such that, if \(u\in L^2(0,T;W^{2,q}(\Omega ))\cap H^1(0,T;H^1(\Omega ))\), it follows that \(u \in C(0,T;W^{1,2p}(\Omega ))\) with \(p>d/2\) such that \(W^{1,2p}(\Omega )\hookrightarrow L^\infty (\Omega )\) and ultimately \(u \in L^\infty ((0,T)\times \Omega )\). To this aim, first note that for \(u\in L^2(0,T;W^{2,q}(\Omega )\cap H^1_0(\Omega ))\,\cap \, H^1(0,T;H^1(\Omega ))\), by Friedrichs’s inequality, it follows that \(u \in C(0,T;L^{2p}(\Omega ))\) if

To ensure the latter, we use that \(({\nabla u})^p \in L^2(0,T;W^{1,q/p}(\Omega ))\cap H^1(0,T;L^{2/p}(\Omega ))\) and that

provided that \(dp>q\ge \frac{dp}{d+1}\) and \(\frac{p}{2}\le 1-\frac{p}{q}+\frac{1}{d}\). Indeed, in this case it follows that

such that the embedding into \(C(0,T;L^2(\Omega ))\) follows from [32, Lemma 7.3] (see Appendix A). Since \(2pd/(2d+2-dp)\ge dp/(d+1)\), it follows that we can ensure for \( p>d/2\) that \( u \in L^\infty ((0,T)\times \Omega )\) if

This is fulfilled for \(p=d/2+\epsilon \) with \(\epsilon >0\) if \(dp > q\ge (2d^2+4\epsilon d)/(4+4d-d^2-2\epsilon d)\) and, more concretely, in case \(d=2\) for \(p=1+\epsilon \), \(q=(2+2\epsilon )/(2-\epsilon )\) and \(\epsilon \in (0,1)\), and in case \(d=3\) for \(p=3/2+\epsilon \), \(q = (18+12\epsilon )/(7-6\epsilon )\) and \(\epsilon \in (0,1/2)\).
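The arithmetic behind these concrete exponents can be checked in exact rational arithmetic. The following short sketch only re-evaluates the expressions stated above; the function names and the sample value \(\epsilon =1/4\) are assumptions made for illustration.

```python
from fractions import Fraction

def q_lower_bound(d, p):
    """Lower bound 2pd / (2d + 2 - dp) on q, as stated in the text."""
    return 2 * p * d / (2 * d + 2 - d * p)

def q_lower_bound_eps(d, eps):
    """The same bound after substituting p = d/2 + eps."""
    return (2 * d**2 + 4 * eps * d) / (4 + 4 * d - d**2 - 2 * eps * d)

def admissible(d, eps):
    """Check dp > q for the smallest admissible q, with p = d/2 + eps."""
    p = Fraction(d, 2) + eps
    return d * p > q_lower_bound(d, p)

eps = Fraction(1, 4)             # sample value, chosen for illustration
q2 = q_lower_bound_eps(2, eps)   # case d = 2, compare with (2 + 2*eps) / (2 - eps)
q3 = q_lower_bound_eps(3, eps)   # case d = 3, compare with (18 + 12*eps) / (7 - 6*eps)
```

The check also makes the ranges of \(\epsilon \) transparent: with \(q\) chosen as the lower bound, the requirement \(dp>q\) reduces to \(\epsilon <1\) for \(d=2\) and to \(\epsilon <1/2\) for \(d=3\).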

Let us focus on the latter case of \(d=3\) and derive suitable assumptions on \(X_\varphi \), \(X_c\), \(X_a, U_0\) and f such that the solution u to (34) fulfills

where the embedding holds by our choice of q and p.

Lemma 23

(Lifted regularity) In addition to the assumptions of Lemma 21, assume that \(d=3\) and that, for positive numbers \(p,\epsilon ,q,\bar{q}\) and Pa with

it holds that

Then, the unique solution of (34) fulfills

Proof

From (34) we get

and by \(\overline{q}\preceq \frac{3q}{3-q}\) (such that \(W^{1,q}(\Omega )\hookrightarrow L^{\overline{q}}(\Omega )\)), we estimate the components of the right hand side of (41), using parameters \(\delta ,\delta _1>0\) (which will be small later on). Since \(q\le 6\),

By \(q \le 6\) and \(c\in L^q(\Omega )\), using density, we can choose \(c_\infty \in L^\infty (\Omega )\) such that \(\Vert c-c_\infty \Vert _{L^q(\Omega )} \le \delta \) and obtain

Now, by the assumption \(|f(\alpha ,v)| \le C_\alpha (1 + |v|^{B}) \) with \(B < 6/q+1\) (note that this also means \(B \le 5\)), and by possibly increasing B, we can assume that \(6/q< { B < 6/q+1}\) and select \(\beta := B-6/q \in (0,1)\), such that \(q(B-\beta ) = 6\). Applying Young’s inequality with arbitrary positive factor \(\delta _1>0\), we have

Using \( a\in W^{1,Pa}(\Omega )\) with \(Pa\ge \frac{q\bar{q}}{\overline{q}-q}\) and \(\frac{q\bar{q}}{2\overline{q}-q} \le 2\), again using density, we can choose \(a_\infty \in W^{1,\infty }(\Omega )\) such that \(\Vert \nabla a-\nabla a_\infty \Vert _{L^{\frac{q\bar{q}}{\overline{q}-q}}(\Omega )}<\delta \) and obtain

Using that also \(\varphi \in L^q(\Omega )\), taking the spatial \(L^q\)-norm in (41), estimating by the triangle inequality, raising everything to the second power, we arrive at

with

For sufficiently small \(\delta ,\delta _1\), this leads to

The facts that \(\nabla u\in L^2(0,T;L^2(\Omega ))\) and \(\Delta u\in L^2(0,T;L^q(\Omega ))\), \(q\ge 2\), as above imply \(\nabla u\in L^2(0,T;H^1(\Omega ))\), and thus \(\nabla u\in L^2(0,T;L^q(\Omega ))\) for \(q\le 6\). This and (46) ensure that \(u\in L^2(0,T;W^{2,q}(\Omega ))\). By Lemma 21, \(u\in W^{1,\infty ,\infty }(0,T;{\hat{H}},{\hat{H}})\cap W^{1,\infty ,2}(0,T;{\hat{V}},{\hat{V}})\); thus, by embedding, \(u \in H^1(0,T;H^1(\Omega ))\). Consequently,

This, together with the argumentation after Remark 22, completes the proof. \(\square \)

The obtained unique existence result is now summarized in the following proposition.

Proposition 24

(i) The nonlinear parabolic PDE (34) with \(d=3\) admits the unique solution

if the following conditions are fulfilled:

(ii) Moreover, the claim in (i) still holds in case \(f(\alpha ,\cdot )\) is replaced by a neural network \( \mathcal {N} _\theta (\alpha ,\cdot )\) with \(\sigma \in \mathcal {C}_\text {Lip}(\mathbb {R},\mathbb {R})\).

Proof

(i) Lemma 21 ensures that (34) admits a unique solution

such that in particular \(u\in L^\infty (0,T;H^1(\Omega ))\cap H^1(0,T;H^1(\Omega ))\). Lemma 23 ensures that the embeddings as in (47) hold true, again by our choice of p, q.

(ii) Now consider the case that \(f(\alpha ,\cdot )\) is replaced by \( \mathcal {N} _\theta (\alpha ,\cdot )\) for some known \(\alpha , \theta \). With \(L_{\theta ,\alpha }\) the Lipschitz constant of \( \mathcal {N} _\theta (\alpha ,\cdot ):\mathbb {R}\rightarrow \mathbb {R}\), we first observe that, for \(v \in \mathbb {R}\),

such that the growth condition \(| \mathcal {N} _\theta (\alpha ,v)|< C_\alpha (1+|v|^{B})\) with \(B < 6/q + 1\), and in particular the growth condition of Lemma 23, holds. This shows in particular that the induced Nemytskii mapping \( \mathcal {N} _\theta (\alpha ,\cdot ):H^1(\Omega ) \rightarrow H^1(\Omega )^*\) is well-defined. Further, we can observe that, again for \(u,v \in H^1(\Omega )\)

Using these estimates, it is clear that the conditions S2 and S3 in Theorem 20 can be shown similarly as in Step 1 without requiring \(\mathcal {N}_\theta (\alpha ,0)=0\) or monotonicity of \( \mathcal {N} _\theta (\alpha ,\cdot )\). This completes the proof. \(\square \)

Remark 25

For neural networks, some examples fulfilling the conditions in Proposition 24, i.e. Lipschitz continuous activation functions, are the ReLU function \(\sigma (x)=\max \{0,x\}\), the tansig function \(\sigma (x)=\tanh (x)\), the sigmoid (or soft step) function \(\sigma (x)=\frac{1}{1+e^{-x}}\), the softsign function \(\sigma (x)=\frac{x}{1+|x|}\) or the softplus function \(\sigma (x)=\ln (1+e^x)\).
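As a quick numerical sanity check of Remark 25, the following sketch estimates the largest difference quotient of each listed activation on a grid. The grid bounds, the grid size and the helper name `empirical_lipschitz` are assumptions made for illustration only; the computed values are lower bounds on the true Lipschitz constants and do not replace the analytic argument.

```python
import math

# Activation functions listed in Remark 25.
ACTIVATIONS = {
    "relu": lambda x: max(0.0, x),
    "tanh": math.tanh,
    "sigmoid": lambda x: 1.0 / (1.0 + math.exp(-x)),
    "softsign": lambda x: x / (1.0 + abs(x)),
    "softplus": lambda x: math.log1p(math.exp(x)),
}


def empirical_lipschitz(sigma, lo=-10.0, hi=10.0, n=4001):
    """Largest difference quotient of sigma between neighbouring grid points.

    This is only a lower bound on the Lipschitz constant, computed on a
    hypothetical grid [lo, hi]; it is a sanity check, not a proof.
    """
    step = (hi - lo) / (n - 1)
    xs = [lo + i * step for i in range(n)]
    return max(abs(sigma(b) - sigma(a)) / (b - a) for a, b in zip(xs, xs[1:]))


lipschitz_estimates = {name: empirical_lipschitz(f) for name, f in ACTIVATIONS.items()}
for name, value in lipschitz_estimates.items():
    print(f"{name:9s} {value:.4f}")
```

By the mean value theorem, each difference quotient is bounded by the supremum of the derivative, so all estimates should stay at or below 1, with the sigmoid close to 1/4.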

4.2 Well-Posedness for the All-at-Once Setting

With the result attained in Proposition 24, we are ready to determine the function spaces for the minimization problems (13), (14) in the all-at-once setting and explore further properties discussed in Sect. 3.

Remark 26

For minimization in the reduced setting, we usually invoke monotonicity in order to handle high nonlinearity (cf. Proposition 24). The minimization problems in the all-at-once setting, however, do not require this condition, thus allowing for more general classes of functions, e.g. by including in F another known nonlinearity \(\phi \) as in the following proposition.

Proposition 27

For \(d=3\) and \(\epsilon >0\) sufficiently small, define the spaces

and Y such that \(V \hookrightarrow Y\), resulting in the following state-, image- and observation spaces

Further, define the corresponding parameter spaces \( U_0=H^2(\Omega )\), \(X = X_\varphi \times X_c \times X_a\), where

and let \(M \in \mathcal {L}(\mathcal {V},\mathcal {Y})\) be the observation operator.

Consider the minimization problems (13) and (14), with \({F: (0,T) \times X \times V} \rightarrow W\) given as

where \(\phi :V \rightarrow W\) is an additional known nonlinearity in F (cf. Remark 26); \(\phi \) is the induced Nemytskii mapping of a function \(\phi \in \mathcal {C}_\text {locLip}(\mathbb {R},\mathbb {R})\). The associated PDE is given as

with the activation functions \(\sigma \) of \(\mathcal {N}_\theta (\alpha ,u)\) satisfying \( \sigma \in \mathcal {C}_\text {locLip}(\mathbb {R},\mathbb {R})\), and with \(\mathcal {R}_1, \mathcal {R}_2 \) nonnegative, weakly lower semi-continuous and such that the sublevel sets of \((\lambda ,\alpha ,u_0,u,\theta ) \mapsto \mathcal {R}_1(\lambda ,\alpha ,u_0,u) + \mathcal {R}_2(\theta )\) are weakly precompact. Then, each of (13) and (14) admits a minimizer.

Proof

Our aim is to examine the assumptions of Lemma 5, which lead to the result in Proposition 6. First, we verify Assumption 1. The embeddings

are an immediate consequence of our choice of p and q and standard Sobolev embeddings. The embeddings

follow from the discussion in Step 2 above, see also Proposition 24.

Note that well-definedness of the Nemytskii mappings as well as the growth condition (7) are consequences of the following arguments on weak continuity. We focus on weak continuity of \(F:\mathcal {V}\times (X_c,X_a,X_\varphi )\rightarrow \mathcal {W}, F(\lambda ,u):=\nabla \cdot (a\nabla u) - cu+\varphi + \phi (u)\) via weak continuity of the operator inducing it, as presented in Lemma 5. First, for the cu part, we see that \((c,u)\mapsto cu\) is weakly continuous on \((X_c,H)\). Indeed, for \(c_n\rightharpoonup c\) in \(X_c\) and \(u_n\rightharpoonup u\) in \(H\), thus \(u_n\rightarrow u\) in \(L^\infty (\Omega )\), one has for any \(w^*\in W^*= L^{q^*}(\Omega )\),

due to \(uw^*\in L^{q^*}(\Omega )\), \( \Vert c_n w^*\Vert _{L^1(\Omega )}\le C<\infty \) for all n and \(u_n\rightarrow u\) in \(L^\infty (\Omega )\).

For the \(\nabla \cdot (a\nabla u)\) part, \(H=W^{1,2p}(\Omega )\) is not strong enough to enable weak continuity of \((a,u)\mapsto \nabla \cdot (a\nabla u)\) on \((X_a,H)\); we therefore directly evaluate weak continuity of the Nemytskii operator. So, let \((a_n,u_n) \rightharpoonup (a,u)\) in \(X_a\times \mathcal {V}\); taking \(w^*\in L^2(0,T;L^{q^*}(\Omega ))\), we have

due to the following: we have \(\nabla u w^*\in L^2(0,T;L^{Pa^*}(\Omega )), \nabla a_n\rightharpoonup \nabla a\) in \(L^{Pa}(\Omega )\) in the first estimate, and \(u_n\rightarrow u\) in \(L^2(0,T;W^{1,18}(\Omega )), \Vert \nabla a_n w^*\Vert _{L^2(0,T;L^{18/17}(\Omega ))}\le C<\infty \) for all n in the second estimate. In the third estimate, one has \(a_n\rightarrow a\) in \(L^\infty (\Omega )\) and

Finally, in the last estimate it is clear that \(aw^*\in L^2(0,T;L^{q^*}(\Omega ))\) and \(u_n \rightharpoonup u\) in \(L^2(0,T;W^{2,q}(\Omega ))\), implying \(\Delta u_n \rightharpoonup \Delta u\) in \(L^2(0,T;L^{q}(\Omega ))\).

For the term \(\phi \), by the convergence \(u_n\rightarrow u\) in \(L^\infty (\Omega )\) noted above and local Lipschitz continuity of \(\phi \), we attain weak-strong continuity of \(\phi \) on H.

Finally, the fact that the activation function \(\sigma \) satisfies \(\sigma \in \mathcal {C}_\text {locLip}(\mathbb {R},\mathbb {R})\) completes the verification that the result of Proposition 6 holds. \(\square \)

For the following results, we set \(\phi =0\).

Lemma 28

(Differentiability) In accordance with Proposition 12 and the frameworks in Proposition 27, setting \(\phi =0\), the model operator \(F:X\times \mathcal {V}\rightarrow \mathcal {W}\) is Gâteaux differentiable, as is the neural network \(\mathcal {N}_\theta :\mathbb {R}^m\times \mathcal {V}\rightarrow \mathcal {W}\) with \(\sigma \in \mathcal {C}^1(\mathbb {R},\mathbb {R})\).

Proof

With the setting in Proposition 27, we verify local Lipschitz continuity of \(F(\lambda ,u)=\nabla \cdot (a\nabla u)-cu+\varphi \) with \(\lambda = (\varphi ,c,a)\). To this aim, we estimate

with \(\overline{q}\preceq \frac{3q}{3-q}\). Also, Gâteaux differentiability of \(F:X\times V\rightarrow W\) as well as Carathéodory assumptions are clear from this estimate and bilinearity of F with respect to \(\lambda , u\). Differentiability of \(\mathcal {N}_\theta \) with \(\sigma \in \mathcal {C}^1(\mathbb {R},\mathbb {R})\) has been shown in Proposition 12, the last paragraph of its proof. \(\square \)
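The differentiability statement for \(\mathcal {N}_\theta \) can be illustrated numerically. The sketch below compares difference quotients of a small network, applied pointwise to sampled state values, with its analytic directional derivative. The one-hidden-layer architecture, the random weights `W1, b1, W2, b2`, the tanh activation and the sample functions are all assumptions chosen for illustration; the check is pointwise on a grid and says nothing about the function space topology used in Lemma 28.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical one-hidden-layer network acting pointwise on state values.
W1, b1 = rng.normal(size=(8, 1)), rng.normal(size=(8, 1))
W2, b2 = rng.normal(size=(1, 8)), rng.normal(size=(1, 1))


def network(u):
    """Apply the network pointwise to a 1D array of state values u."""
    hidden = np.tanh(W1 @ u[None, :] + b1)   # shape (8, n)
    return (W2 @ hidden + b2)[0]             # shape (n,)


def network_derivative(u, h):
    """Directional derivative of the pointwise map at u in direction h."""
    pre = W1 @ u[None, :] + b1
    dsigma = 1.0 - np.tanh(pre) ** 2         # tanh'(x) = 1 - tanh(x)^2
    return (W2 @ (dsigma * W1))[0] * h


x = np.linspace(0.0, 1.0, 50)
u = np.sin(np.pi * x)          # sample state
h = np.cos(3 * np.pi * x)      # sample direction

# Difference quotients should approach the analytic derivative as eps -> 0.
errors = []
for eps in (1e-2, 1e-3, 1e-4):
    quotient = (network(u + eps * h) - network(u)) / eps
    errors.append(np.max(np.abs(quotient - network_derivative(u, h))))
print(errors)
```

For a continuously differentiable activation, the error is expected to shrink roughly in proportion to `eps`.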

When the image space \(\mathcal {W}\) is stronger, that is, \(W\nsupseteq L^q(\Omega ), \forall q\in [1,\infty )\) as discussed in Remark 13, we require smoother activation functions than what was employed in Lemma 28 in order to ensure differentiability of \(\mathcal {N}_\theta \).

Remark 29

(Strong image space \(\mathcal {W}\) and smoother neural network) Consider the case where the unknown parameter is \(\varphi \), parameters a, c are known, and the neural network \(\mathcal {N}_\theta \) has smoother activation

The minimization problems introduced in Proposition 27 have minimizers that belong to the Hilbert spaces

and

Proof

For fixed \(\theta , \alpha \), let us denote \(\mathcal {N}_\theta (\alpha ,\cdot )=: \mathcal {N} _\theta \). It is clear that this setting fulfills all the embeddings in Assumption 1. Weak-strong continuity of \( \mathcal {N} _\theta \) is derived from

since with \(\mathcal {V}\hookrightarrow C(0,T;H^2(\Omega ))\) and \(\sigma \in \mathcal {C}^1_\text {locLip}(\mathbb {R},\mathbb {R})\), one has

implying \(A+B \rightarrow 0\), with Lipschitz constants \(L', L''\). This shows continuity of \( \mathcal {N} \) in u; continuity of \( \mathcal {N} \) in \((\alpha ,\theta )\) can be shown similarly. For F, when c, a are known and fixed, it is just a linear operator on u. Weak continuity of F can hence be explained through its boundedness, which can be confirmed in the same fashion as A, B above. \(\square \)

To conclude this section, we consider a Hilbert space setting that will be relevant for our subsequent applications.

Remark 30

(Hilbert space framework for application) Another possible Hilbert space framework where the all-at-once setting is applicable is

where Y is a Hilbert space, and

Verification of weak continuity and the growth condition for F can be carried out similarly as in Proposition 27; moreover, weak continuity of \((X_a\times H)\ni (a,u)\mapsto \nabla \cdot (a\nabla u)\in W\) can be confirmed like the part \((c,u)\mapsto cu\), without the need to evaluate the Nemytskii operator directly. This is the setting in which we will study the application (34) in detail.

5 Case Studies in Hilbert Space Framework

5.1 Setup for Case Studies

In this section, for the sake of simplicity of implementation, we carry out case studies for some minimization examples in a Hilbert space framework, where we drop the unknown \(\alpha \) and use the regularizers \(\mathcal {R}_1=\Vert \cdot \Vert ^2_{X\times U_0\times \mathcal {V}}\), \(\mathcal {R}_2=\Vert \cdot \Vert ^2_\Theta \).

Proposition 31

Consider the minimization problem (13) (or (14)) associated with the learning informed PDE

for \({\sigma \in }\, \mathcal {C}^1(\mathbb {R},\mathbb {R})\), \(M=\text {Id}\) in the Hilbert spaces

The following statements are true:

(i) The minimization problem admits minimizers.

(ii) The corresponding model operator \(\mathcal {G}\) is Gâteaux differentiable with locally bounded \(\mathcal {G}'\).

(iii) The adjoint of the derivative operator is given by

$$\begin{aligned}&\mathcal {G}'(\lambda ,u,\theta )^*: \mathcal {W}\times H\times \mathcal {Y}\rightarrow X\times \mathcal {V}\times \Theta \\&\mathcal {G}'(\lambda ,u,\theta )^*= \begin{pmatrix} -F'_\lambda (\lambda ,u)^* & 0 & 0\\ \left( \frac{d}{dt}-F'_u(\lambda ,u)- \mathcal {N} _u'(u,\theta )\right) ^* & (\cdot )_{t=0}^* & M^*\\ - \mathcal {N} _\theta '(u,\theta )^* & 0 & 0 \end{pmatrix} =:(g_{i,j})_{i,j=1}^3 \end{aligned}$$

with

$$\begin{aligned}&F'_\lambda (\lambda ,u)^*:\mathcal {W}\rightarrow X, \qquad F'_u(\lambda ,u)^*: \mathcal {W}\rightarrow \mathcal {V}, \qquad (\cdot )_{t=0}^*:H\rightarrow \mathcal {V},\\&\mathcal {N} _\theta '(u,\theta )^*: \mathcal {W}\rightarrow \Theta , \qquad \mathcal {N} _u'(u,\theta )^*:\mathcal {W}\rightarrow \mathcal {V}, \qquad M^*: \mathcal {Y}\rightarrow \mathcal {V}. \end{aligned}$$

By defining \((-\Delta )^{-1}(-\Delta +\text {Id})^{-1}: L^2(\Omega )\ni k^z\mapsto {\widetilde{z}}\in H^2(\Omega )\cap H^1_0(\Omega )\) such that \({\widetilde{z}}\) solves

we can write explicitly

\(g_{3,1}\): one has the recursive procedure

with \(a_l,a'_l\) detailed in the proof.

Proof

Assertion (i) follows from Remark 30. Using Proposition 12, Assertion (ii) can be shown similarly as in Lemma 28. The proof for Assertion (iii) is presented in Appendix B. \(\square \)
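To make the three-component structure behind the adjoint in (iii) concrete, the following sketch evaluates a discretized all-at-once operator consisting of a PDE residual, the initial trace and the observations. Everything specific here is an assumption for illustration: a one-dimensional domain, the choice \(F(\lambda ,u)=\Delta u+\varphi \), forward differences in time, central differences in space, and the function name `all_at_once_operator`. The point of the sketch is only that evaluating the operator requires differentiation of a given state, not the solution of a PDE.

```python
import numpy as np


def all_at_once_operator(u, phi, nonlinearity, dt, dx, obs_idx):
    """Evaluate (PDE residual, initial trace, observations) for a state u.

    u has shape (n_t, n_x) and includes the boundary nodes. The residual
    u_t - u_xx - phi - N(u) is formed at interior nodes with a forward
    difference in time and a central difference in space.
    """
    u_t = (u[1:, 1:-1] - u[:-1, 1:-1]) / dt
    u_xx = (u[:-1, 2:] - 2.0 * u[:-1, 1:-1] + u[:-1, :-2]) / dx**2
    residual = u_t - u_xx - phi[1:-1] - nonlinearity(u[:-1, 1:-1])
    return residual, u[0], u[obs_idx]


# Sanity check with an exact heat equation solution (phi = 0, N = 0).
x = np.linspace(0.0, 1.0, 101)
t = np.linspace(0.0, 0.01, 101)
u_exact = np.exp(-np.pi**2 * t)[:, None] * np.sin(np.pi * x)[None, :]

residual, initial, observations = all_at_once_operator(
    u_exact,
    phi=np.zeros_like(x),
    nonlinearity=lambda v: np.zeros_like(v),  # placeholder for a network
    dt=t[1] - t[0],
    dx=x[1] - x[0],
    obs_idx=[0, 50, 100],                     # hypothetical observation times
)
print(np.max(np.abs(residual)))
```

For the exact solution, the residual is nonzero only because of the discretization error; replacing the placeholder nonlinearity by a parametrized network gives the quantity that a minimization over state and network parameters would penalize.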

Corollary 32

(Discrete measurements) In case of discrete measurements \(M_i:\mathcal {V}\rightarrow Y, M_i(u)=u(t_i), t_i\in (0,T)\), where the pointwise time evaluation is well-defined as \(\mathcal {V}\hookrightarrow C(0,T;H^2(\Omega ))\), the adjoint \(g_{2,3}\) is modified as follows. For \(h\in Y\),

provided that \(u^h=\) const in \([t_i,T]\) in order to form the integral of the full time line (0, T) in the last line. Above, \(h,\tilde{h}\) are respectively in place of \(k^z\) and \({\widetilde{z}}\) in (50); besides, \(u^h\) solves

Thus we arrive at

This shows a numerical advantage of processing discrete observations in a Kaczmarz scheme, for instance in deterministic or stochastic optimization. To be specific, for each data point in the forward propagation, thanks to the all-at-once approach, no nonlinear model needs to be solved; in the backward propagation, for the same reason and by (57), one needs to compute the corresponding adjoint only for small time intervals.
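The idea of cycling through individual observations can be sketched on a toy problem. The code below runs a Landweber-Kaczmarz iteration on a small linear system split into blocks, each block playing the role of one measurement \(M_i\). The linear setting, the random blocks, the step size rule and the function name `landweber_kaczmarz` are assumptions for illustration; this is not the algorithm used in the experiments of this paper.

```python
import numpy as np


def landweber_kaczmarz(blocks, data, x0, step, sweeps):
    """Cycle through the sub-problems A_i x = y_i, one gradient step each."""
    x = x0.copy()
    for _ in range(sweeps):
        for A_i, y_i in zip(blocks, data):
            # Only the adjoint of the current block is needed in this step.
            x = x - step * A_i.T @ (A_i @ x - y_i)
    return x


rng = np.random.default_rng(1)
x_true = rng.normal(size=5)
blocks = [rng.normal(size=(3, 5)) for _ in range(4)]   # hypothetical operators
data = [A @ x_true for A in blocks]                    # noise-free data

# Step size bounded by the inverse of the largest squared operator norm.
step = 1.0 / max(np.linalg.norm(A, 2) ** 2 for A in blocks)
x_rec = landweber_kaczmarz(blocks, data, np.zeros(5), step, sweeps=2000)
print(np.linalg.norm(x_rec - x_true))
```

Each inner update touches a single block only, which mirrors the observation above that each data point requires its own adjoint but no solve of the full model.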

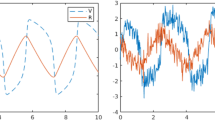

5.2 Numerical Results

This section is dedicated to a range of numerical experiments carried out in two parallel settings: by way of analytic adjoints in Sect. 5.2.1, and with PyTorch in Sect. 5.2.2. While, in our experiments, we evaluate and compare the proposed method for different settings, such as varying the number of time measurements or noise, we highlight that the main purpose of these experiments is to show numerical feasibility of the proposed approach in principle, rather than providing highly optimized results. In particular, a tailored optimization of, e.g., regularization parameters and initialization strategies involved in our method might still be able to improve results significantly.

For both settings (analytic adjoints and PyTorch), we use the following learning-informed PDE as special case of the one considered in Proposition 31:

We deal with time-discrete measurements as in Corollary 32, i.e., we use a time-discrete measurement operator \(M:\mathcal {V}\rightarrow L^2(\Omega )^{n_T}\), with \(n_T \in \mathbb {N}\), given as \(M(u)_{t_i} = u(t_i)\) for \(t_0 = 0\) and \(t_i \in (0,T)\) with \(i=1,\ldots ,n_T-1\). We further let a noisy measurement of the initial state \(u_0\) be given at timepoint \(t=0\). Further, we consider two situations:

1. The source \(\varphi \) in (58) is fixed; we estimate the state u and the nonlinearity \(\mathcal {N}_\theta \) only, yielding a model operator \(\mathcal {G}_\varphi :H^1(0,T;H^2(\Omega )\cap H^1_0(\Omega )) \times \Theta \rightarrow L^2(0,T;L^2(\Omega )) \times L^2(0,T;L^2(\Omega ))\) given as

$$\begin{aligned} \mathcal {G}_\varphi (u,\theta ) = \begin{pmatrix} \dot{u}-\Delta u - \varphi - \mathcal {N}_\theta (u) \\ Mu \end{pmatrix}. \end{aligned}$$