Abstract

This paper complements the study of the wave equation with discontinuous coefficients initiated in (Discacciati et al. in J. Differ. Equ. 319 (2022) 131–185) in the case of time-dependent coefficients. Here we assume that the equation coefficients depend on space only and we formulate Levi conditions on the lower order terms to guarantee the existence of a very weak solution as defined in (Garetto and Ruzhansky in Arch. Ration. Mech. Anal. 217 (2015) 113–154). As a toy model we study the wave equation in conservative form with discontinuous velocity and we provide a qualitative analysis of the corresponding very weak solution via numerical methods.


1 Introduction

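In this paper we study the well-posedness of the Cauchy problem for the inhomogeneous wave equation with space-dependent coefficients. In detail, we are concerned with

$$ \begin{aligned} \partial _{t}^{2}u-a(x)\partial ^{2}_{x} u+ b_{1}(x)\partial _{x} u+ b_{2}(x)\partial _{t} u + b_{3}(x) u&=f(t,x), \quad t\in [0,T],\ x\in \mathbb{R}, \\ u(0,x)&=g_{0}(x), \\ \partial _{t} u(0,x)&=g_{1}(x), \end{aligned} \tag{1} $$

where \(a(x)\ge 0\) and for the sake of simplicity we work in space dimension 1. The well-posedness of (1) is well understood when the coefficients are regular, namely smooth. Indeed, the equation above can be re-written in the variational form

$$ \partial _{t}^{2}u-\partial _{x}(a(x)\partial _{x} u)+ (a'(x)+b_{1}(x))\partial _{x} u+ b_{2}(x)\partial _{t} u + b_{3}(x) u=f(t,x). $$
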
This kind of Cauchy problem has been studied by Oleinik in [16]. Assuming that the coefficients are smooth and bounded, with bounded derivatives of any order, she proved that the Cauchy problem is \(C^{\infty}\) well-posed provided that the following Oleinik’s condition is satisfied:

Note that \(a'\) is bounded by a multiple of \(\sqrt{a}\) as a direct consequence of Glaeser’s inequality: if \(a\in C^{2}(\mathbb{R})\), \(a(x)\ge 0\) for all \(x\in \mathbb{R}\) and \(\Vert a'' \Vert _{L^{\infty}}\le M_{1}\), then

$$ |a'(x)|^{2}\le 2M_{1} a(x), $$

for all \(x\in \mathbb{R}\).
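As a quick sanity check of Glaeser’s inequality, the following Python sketch evaluates \(|a'(x)|^{2}-2M_{1}a(x)\) on a grid. The test function \(a(x)=\sin ^{2}x\) (for which \(a''(x)=2\cos 2x\), so \(M_{1}=2\)) and the helper name `glaeser_gap` are our own illustrative choices and do not come from the references; a grid check is only numerical evidence, not a proof.

```python
import math

def glaeser_gap(a, da, m1, xs):
    """Largest value of |a'(x)|^2 - 2*M1*a(x) over the sample points xs.

    Glaeser's inequality predicts a non-positive value (up to rounding).
    """
    return max(da(x) ** 2 - 2.0 * m1 * a(x) for x in xs)

# Hypothetical example: a(x) = sin(x)^2 >= 0, a'(x) = sin(2x), |a''| <= 2.
a = lambda x: math.sin(x) ** 2
da = lambda x: math.sin(2.0 * x)
M1 = 2.0

xs = [-10.0 + 0.001 * j for j in range(20001)]
gap = glaeser_gap(a, da, M1, xs)
print("Glaeser holds on the grid:", gap <= 1e-12)

# With a constant smaller than sup|a''| the inequality is violated somewhere.
print("fails for M1 = 0.1:", glaeser_gap(a, da, 0.1, xs) > 0.0)
```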

Therefore, \(C^{\infty}\) well-posedness is obtained by simply imposing on the lower order term \(b_{1}\) a Levi condition of the type

$$ b_{1}^{2}(x)\le M_{2}\, a(x), \tag{2} $$

for some \(M_{2}>0\) independent of \(x\). It is of physical interest to understand the well-posedness of this Cauchy problem when the coefficients are less than continuous. This kind of investigation has been initiated in [13] for second order hyperbolic equations with \(t\)-dependent coefficients and recently extended to inhomogeneous equations in [3]. Here we want to work with space-dependent coefficients with minimal assumptions of regularity, namely distributions with compact support. We are motivated by the toy model

where \(g_{0},\, g_{1} \in C_{c}^{\infty}(\mathbb{R})\) and \(H\) is the Heaviside function (\(H(x)=0\) if \(x<0\), \(H(x)=1\) if \(x\ge 0\)) or, more generally, \(H\) is replaced by a positive distribution with compact support. Note that the well-posedness of the Cauchy problem for hyperbolic equations has been widely investigated when the equation coefficients are at least continuous, see [4–7, 11, 12] and references therein. However, in the presence of discontinuities distributional solutions might fail to exist due to the well-known Schwartz impossibility result [17].

For this reason, as in [9, 13] we look for solutions of the Cauchy problem (1) in the very weak sense. In other words we replace the equation under consideration with a family of regularised equations obtained via convolution with a net of mollifiers. We will then obtain a net \((u_{\varepsilon })_{\varepsilon }\) that we will analyse in terms of qualitative and limiting behaviour as \(\varepsilon \to 0\). The paper is organised as follows.

In Sect. 2 we revisit Oleinik’s result in the case of smooth coefficients. We show how her condition on the lower order term \(b_{1}\) can be obtained via transformation into a first order system and energy estimates. Note that the energy is provided by the hyperbolic symmetriser associated to the wave operator. This system approach turns out to be easily adaptable to the case of singular coefficients and in general to the framework of very weak solutions. In Sect. 3 we pass to consider discontinuous coefficients. After a short introduction to the notion of very weak solution, we formulate Levi conditions on the lower order terms which allow us to prove that our Cauchy problem admits a very weak solution of Sobolev type. Some toy models are analysed in Sect. 4 where we prove that every very weak solution to (3) recovers, in the limit as \(\varepsilon \to 0\), the piecewise distributional solution defined in [8]. More singular toy models, defined via the Dirac delta and homogeneous distributions, are also presented in Sect. 4 and the corresponding very weak solutions investigated numerically.

2 A Revisited Approach to Oleinik’s Result

This section is devoted to the Cauchy problem

$$ \begin{aligned} \partial _{t}^{2}u-a(x)\partial ^{2}_{x} u+ b_{1}(x)\partial _{x} u+ b_{2}(x)\partial _{t} u + b_{3}(x) u&=f(t,x), \quad t\in [0,T],\ x\in \mathbb{R}, \\ u(0,x)&=g_{0}(x), \\ \partial _{t} u(0,x)&=g_{1}(x), \end{aligned} \tag{4} $$

where the coefficients \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\), i.e., they are smooth and bounded with bounded derivatives of any order, and \(a \geq 0\). We also assume that all the functions involved in the system are real-valued. The \(C^{\infty}\) well-posedness of (4) is known thanks to [16]. Here, we give an alternative proof of this result based on the reduction to a first order system. Note that in the sequel by \(H^{k}\) well-posedness we mean that, for suitable initial data and right-hand side, the solution of the Cauchy problem exists in \(C^{2}([0,T], H^{k}(\mathbb{R}))\) and is unique. Because of the presence of multiplicities we will need to take initial data and right-hand side more regular (higher Sobolev order) and we will therefore have some loss of derivatives. We refer the reader to [16] and [18] for more details. Here we will work out the estimates which will be specifically needed in the second part of the paper to deal with singular coefficients and very weak solutions.

2.1 System in U

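In detail, by using the transformation,

$$ U=(U^{0},U_{1},U_{2})^{T}=(u,\partial _{x} u,\partial _{t} u)^{T}, $$

our Cauchy problem can be rewritten as

$$ \begin{aligned} \partial _{t} U&=A \partial _{x} U+BU+F, \\ U(0,x)&=(g_{0},g'_{0},g_{1})^{T}, \end{aligned} $$

where

$$ A= \begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & 1 \\ 0 & a & 0 \end{pmatrix}, \qquad B= \begin{pmatrix} 0 & 0 & 1 \\ 0 & 0 & 0 \\ -b_{3} & -b_{1} & -b_{2} \end{pmatrix}, \qquad F= \begin{pmatrix} 0 \\ 0 \\ f \end{pmatrix}. $$

The matrix \(A\) has a block diagonal shape with a \(1\times 1\) block equal to 0 and a \(2\times 2\) block in Sylvester form and has the symmetriser

$$ Q= \begin{pmatrix} 1 & 0 & 0 \\ 0 & a & 0 \\ 0 & 0 & 1 \end{pmatrix}, $$

i.e., \(QA=A^{\ast }Q=A^{t}Q\). The symmetriser \(Q\) defines the energy

$$ E(t)=(QU,U)_{L^{2}}=\Vert U^{0}\Vert ^{2}_{L^{2}}+(aU_{1},U_{1})_{L^{2}}+ \Vert U_{2}\Vert ^{2}_{L^{2}}. $$

Since \(a\ge 0\), we have that the bound from below

$$ \Vert U^{0}\Vert ^{2}_{L^{2}}+\Vert U_{2}\Vert ^{2}_{L^{2}}\le E(t) $$
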
holds, for all \(t\in [0,T]\). Assume that the initial data \(g_{0}, g_{1}\) are compactly supported and that \(f\) is compactly supported with respect to \(x\). By the finite speed of propagation, it follows that the solution \(U\) is compactly supported with respect to \(x\) as well. Hence, by integration by parts we obtain the following energy estimate:

Since

from Glaeser’s inequality (\(|a'(x)|^{2}\le 2M_{1} a(x)\)) it immediately follows that

Furthermore we have,

Hence, using the Levi condition (2), \(b_{1}^{2}(x)\le M_{2} a(x)\), we have

Finally,

Combining (5), (6) and (7), we obtain the estimate

where

Using the bound from below for the energy and Grönwall’s lemma we obtain the following estimate for \(U^{0}\) and \(U_{2}\):

In addition,

for all \(t\in [0,T]\). Note that the constant \(C_{2}\) depends linearly on \(\Vert a\Vert _{\infty}\) and exponentially on \(T\), \(M_{2}\), \(\Vert a''\Vert _{\infty}\), \(\Vert b_{2}\Vert _{\infty}\) and \(\Vert b_{3}\Vert _{\infty}\). Indeed, setting \(M_{1}=\Vert a''\Vert _{\infty}\),

Concluding,

2.2 \(L^{2}\)-Estimates for \(U_{1}\)

We now want to obtain a similar estimate for \(U_{1}\). To attain this, we transform once more the system by taking a derivative with respect to \(x\). Let \(V=(V^{0},V_{1},V_{2})^{T}=(\partial _{x}U^{0},\partial _{x}U_{1}, \partial _{x}U_{2})^{T}\). By getting an estimate for \(V_{2}\) we also automatically get an estimate for \(U_{1}\) since \(V_{2}=\partial _{t} U_{1}\). Indeed, we can do so by applying the fundamental theorem of calculus and making use of the initial conditions. Hence, if \(U\) solves

then \(V\) solves

The system in \(V\) still has \(A\) as a principal part matrix and additional lower order terms. It follows that we can still use the symmetriser \(Q\) to define the energy

for which we obtain the bound from below \(\Vert V^{0}\Vert ^{2}_{L^{2}}+\Vert V_{2}\Vert ^{2}_{L^{2}} \le E(t)\). Therefore,

By direct computations

Hence,

Now,

and using (9),

Therefore,

Now we note that \(V_{2}=\partial _{x} U_{2}=\partial _{x} \partial _{t} u=\partial _{t} \partial _{x} u=\partial _{t} U_{1}\). By the fundamental theorem of calculus we have

By Minkowski’s integral inequality

and therefore by applying Hölder’s inequality on the integral in \(ds\) we get

Hence, estimate (11) becomes

For the reader’s convenience we now recall a Grönwall-type lemma (Lemma 6.2 in [18]) that will be applied to the inequality (13) in order to estimate the energy.

Lemma 2.1

Let \(\varphi \in C^{1}([0,T])\) and \(\psi \in C([0,T])\) be two positive functions such that

for some constants \(B_{1},B_{2}>0\). Then, there exists a constant \(B>0\) depending exponentially on \(B_{1}, B_{2}\) and \(T\) such that

for all \(t\in [0,T]\).

Hence, combining the bound from below for the energy \(E(t)\) with Lemma 2.1, we obtain that there exists a constant \(C_{3}>0\) depending exponentially on \(\Vert b_{2} \Vert _{\infty}\), \(\Vert b_{3} \Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(M_{2}\) and \(T\) such that

for all \(t\in [0,T]\), with \(C_{2}\) as in (8). Noting that \(V^{0}=U_{1}\), we have that

2.2.1 System in W

Analogously, if we want to estimate the \(L^{2}\)-norm of \(V_{1}\) we need to repeat the same procedure, i.e., to differentiate the system in \(V\) with respect to \(x\) and introduce \(W=(W^{0},W_{1},W_{2})^{T}=(\partial _{x}V^{0},\partial _{x}V_{1}, \partial _{x}V_{2})^{T}\). We have that if \(V\) solves

then \(W\) solves

Again, by using the energy \(E(t)=(QW, W)_{L^{2}}=\Vert W^{0}\Vert ^{2}_{L^{2}}+(aW_{1},W_{1})_{L^{2}}+ \Vert W_{2}\Vert ^{2}_{L^{2}}\) and following similar steps as in (10), we have

By direct computations we get

Hence,

Now,

Therefore,

From estimates (9), (13), and (14), we get

where \(C_{4}>0\) depends linearly on \(T\), \(\Vert a \Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b'_{2} \Vert ^{2}_{\infty}\), \(\Vert b'_{3} \Vert ^{2}_{\infty}\) and exponentially on \(T\), \(\Vert b_{2} \Vert _{\infty}\), \(\Vert b_{3} \Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(M_{2}\), \(\Vert a''\Vert _{\infty}\). It remains to estimate \(3\Vert V_{1}\Vert _{L^{2}}^{2}\). Since \(\partial _{t} V_{1}=W_{2}\) we can write

Combining (16) with (17), we rewrite (15) as

By Lemma 2.1, we conclude that there exists a constant \(C_{5}>0\), depending exponentially on \(T\), \(\Vert a''\Vert _{\infty}\), \(M_{2}\), \(\Vert b_{2} \Vert _{\infty}\), \(\Vert b_{3} \Vert _{\infty}\), \(\Vert a''\Vert _{\infty}^{2}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b'_{2} \Vert ^{2}_{\infty}\), \(\Vert b'_{3} \Vert ^{2}_{\infty}\), \(\Vert b''_{1}\Vert _{\infty}^{2}\), \(\Vert b''_{2}\Vert _{\infty}^{2}\) and \(\Vert b''_{3}\Vert _{\infty}^{2}\), such that

Note that the constant \(C'_{5}\) depends linearly on \(T\), \(\Vert a \Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b'_{2} \Vert ^{2}_{\infty}\), \(\Vert b'_{3} \Vert ^{2}_{\infty}\) and exponentially on \(T\), \(\Vert a''\Vert _{\infty}\), \(M_{2}\), \(\Vert b_{2} \Vert _{\infty}\), \(\Vert b_{3} \Vert _{\infty}\), \(\Vert a''\Vert _{\infty}^{2}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b'_{2} \Vert ^{2}_{\infty}\), \(\Vert b'_{3} \Vert ^{2}_{\infty}\), \(\Vert b''_{1}\Vert _{\infty}^{2}\), \(\Vert b''_{2}\Vert _{\infty}^{2}\) and \(\Vert b''_{3}\Vert _{\infty}^{2}\). Noting that \(W^{0}= V_{1}\), we have that

2.3 Sobolev Estimates

Bringing everything together, we have proven that if \(U\) is a solution of the Cauchy problem

then \(\Vert U_{1}(t)\Vert ^{2}_{L^{2}}\) is bounded by

and

It follows that

where \(A_{0}\) depends linearly on \(T\), \(\Vert a\Vert _{\infty}\), \(\Vert b'_{1}\Vert ^{2}_{\infty}\), \(\Vert b'_{2}\Vert ^{2}_{\infty}\), \(\Vert b'_{3}\Vert ^{2}_{\infty}\) and exponentially on \(T\), \(M_{2}\), \(\Vert a''\Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b_{2}\Vert _{\infty}\), \(\Vert b_{3}\Vert _{\infty}\).

Passing now to the system in \(V\) we have proven that

and therefore there exists a constant \(A_{1}>0\), depending linearly on \(T\), \(\Vert a\Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b'_{2} \Vert ^{2}_{\infty}\), \(\Vert b'_{3} \Vert ^{2}_{\infty}\) and exponentially on \(T\), \(M_{2}\), \(\Vert a''\Vert _{\infty}\), \(\Vert a''\Vert _{\infty}^{2}\), \(\Vert b_{2} \Vert _{\infty}\), \(\Vert b_{3} \Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b'_{2} \Vert ^{2}_{\infty}\), \(\Vert b'_{3} \Vert ^{2}_{\infty}\), \(\Vert b''_{1}\Vert _{\infty}^{2}\), \(\Vert b''_{2}\Vert _{\infty}^{2}\), \(\Vert b''_{3}\Vert _{\infty}^{2}\), such that

This immediately gives the estimates

for all \(t\in [0,T]\) and \(k=-1,0,1\), where \(A_{k}\) depends linearly on \(T^{\frac{(k+1)(2-k)}{2}}\), \(\Vert a\Vert _{\infty}\), \(\Vert b'_{1} \Vert ^{(k+1)(2-k)}_{\infty}\), \(\Vert b'_{2} \Vert ^{(k+1)(2-k)}_{\infty}\), \(\Vert b'_{3} \Vert ^{(k+1)(2-k)}_{\infty}\) and exponentially on \(T\), \(M_{2}\), \(\Vert a''\Vert _{\infty}\), \(\Vert a''\Vert _{\infty}^{k(k+1)}\), \(\Vert b'_{1} \Vert ^{2}_{\infty}\), \(\Vert b''_{1}\Vert _{\infty}^{k(k+1)}\), \(\Vert b_{2} \Vert _{\infty}\), \(\Vert b'_{2} \Vert ^{k(k+1)}_{\infty}\), \(\Vert b''_{2}\Vert _{\infty}^{k(k+1)}\), \(\Vert b_{3} \Vert _{\infty}\), \(\Vert b'_{3} \Vert ^{k(k+1)}_{\infty}\), \(\Vert b''_{3}\Vert _{\infty}^{k(k+1)}\).

2.4 Conclusion

We have obtained \(H^{k}\)-Sobolev estimates for the Cauchy problem (4) for \(k=0,1,2\), provided that \(a\ge 0\) and \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\). We can obtain existence of the solution via a standard perturbation argument on the strictly hyperbolic case (see [18] and the proof of Theorem 2.3) and from the above estimates we can obtain uniqueness. This argument can be iterated to obtain Sobolev estimates for every order \(k\). The iteration will involve higher-order derivatives of the coefficients. More precisely, if we want to estimate the \(H^{k}\)-norm of \(U(t)\) then we will need to differentiate the coefficients up to order \(k+1\). We therefore have the following proposition.

Proposition 2.2

Assume that \(a\ge 0\) and \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\). Then, for all \(k\in {\mathbb{N}}_{0}\) there exists a constant \(C_{k}\) depending on \(T\), \(M_{2}\) and the \(L^{\infty}\)-norms of the derivatives of the coefficients up to order \(k+1\) such that

for all \(t\in [0,T]\).

2.5 Existence and Uniqueness Result

We now prove that the Cauchy problem (4)

is well-posed in every Sobolev space and hence in \(C^{\infty}(\mathbb{R})\). We will use the estimate (19) which we will re-write in terms of \(u\).

Theorem 2.3

Let \(a\ge 0\) and \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\). Assume that \(g_{0},g_{1} \in C_{c}^{\infty} (\mathbb{R})\) and \(f\in C([0,T], C_{c}^{\infty}(\mathbb{R}))\). Then, the Cauchy problem (4) is well-posed in every Sobolev space \(H^{k}\), with \(k\in {\mathbb{N}}_{0}\), and for all \(k\in {\mathbb{N}}_{0}\) there exists a constant \(C_{k}>0\) depending on \(T\), \(M_{2}\) and the \(L^{\infty}\)-norms of the derivatives of the coefficients up to order \(k+1\) such that

for all \(t\in [0,T]\).

Proof

(i) Existence. Assume that \(f\in {C}([0,T], {C}_{c}^{\infty} (\mathbb{R}))\) and let

$$ P(u)=\partial _{t}^{2}u-a(x)\partial ^{2}_{x} u+ b_{1}(x)\partial _{x} u+ b_{2}(x)\partial _{t} u + b_{3}(x) u. $$

The strictly hyperbolic Cauchy problem

$$ \begin{aligned} P_{\delta}(u)&=\partial _{t}^{2}u-(a(x)+\delta ) \partial ^{2}_{x} u+ b_{1}(x)\partial _{x} u+ b_{2}(x)\partial _{t} u + b_{3}(x) u=f, \\ u(0,x)&=g_{0}(x)\in{C}_{c}^{\infty}(\mathbb{R}), \\ \partial _{t} u(0,x)&=g_{1}(x)\in{C}_{c}^{\infty}( \mathbb{R}), \end{aligned} $$

has a unique solution \(u_{\delta}\) for each \(\delta \), and the net \((u_{\delta})_{\delta}\) is defined via \((U_{\delta})_{\delta}\), the corresponding net of vectors. Since we can choose the constant

$$ A=A(T, M_{2}, \Vert a+\delta \Vert _{\infty}, \Vert a''\Vert _{\infty}, \Vert b_{2}\Vert _{\infty}, \Vert b_{3}\Vert _{\infty}, \Vert b'_{1} \Vert _{\infty}, \Vert b'_{2}\Vert _{\infty }, \Vert b'_{3}\Vert _{ \infty})>0 $$

independent of \(\delta \in (0,1)\), and the same conclusion holds for the constants \(C_{k}\) in (19) for all \(k\), by the Ascoli-Arzelà Theorem one gets the compactness of the net

$$ U_{\delta}=(U^{0}_{\delta}, U_{1,\delta},U_{2,\delta})\in C([0,T], H^{k}( \mathbb{R}))^{3} $$

for all \(k\), when \(g_{0},g_{1} \in{C}_{c}^{\infty} (\mathbb{R})\). Note that the needed estimates on \(\partial _{t} U\) can be obtained directly from the equation. Therefore there exists a convergent subsequence in \((C([0,T],H^{k}(\mathbb{R})))^{3}\) with limit \(U\in (C([0,T],H^{k}(\mathbb{R})))^{3}\) that solves the system

$$ \begin{aligned} \partial _{t} U&=A \partial _{x} U+BU+F, \\ U(0,x)&=(g_{0},g'_{0},g_{1})^{T}, \end{aligned} $$

in the sense of distributions.

(ii) Uniqueness. The uniqueness of the solution \(u\) follows immediately from the estimate (20).

□
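The two structural facts used in this section, namely that \(Q\) symmetrises \(A\) and that the perturbation \(a+\delta \) makes the problem strictly hyperbolic, can be checked pointwise with a few lines of Python. This is only a sketch under our own assumptions: the matrices are written for \(U=(u,\partial _{x} u,\partial _{t} u)^{T}\) with \(Q=\operatorname{diag}(1,a,1)\), as suggested by the energy \((QU,U)_{L^{2}}\), and all function names are hypothetical.

```python
import math

def principal_matrix(a):
    """A at a point x: a 1x1 zero block and the 2x2 Sylvester block [[0, 1], [a, 0]]."""
    return [[0.0, 0.0, 0.0],
            [0.0, 0.0, 1.0],
            [0.0, a, 0.0]]

def symmetriser(a):
    """Q = diag(1, a, 1), read off from the energy (QU, U)."""
    return [[1.0, 0.0, 0.0],
            [0.0, a, 0.0],
            [0.0, 0.0, 1.0]]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(X):
    return [[X[j][i] for j in range(3)] for i in range(3)]

def is_symmetrised(a):
    """True when QA is symmetric, i.e. QA = A^T Q (Q itself being symmetric)."""
    QA = matmul(symmetriser(a), principal_matrix(a))
    return QA == transpose(QA)

def characteristic_roots(a, delta=0.0):
    """Eigenvalues of the Sylvester block [[0, 1], [a + delta, 0]]."""
    r = math.sqrt(a + delta)
    return (-r, r)

print(is_symmetrised(0.0), is_symmetrised(2.5))
print(characteristic_roots(0.0))        # roots coincide where a vanishes
print(characteristic_roots(0.0, 0.01))  # roots are separated once delta > 0
```

Where \(a\) vanishes the two characteristic roots coincide (weak hyperbolicity), while any \(\delta >0\) separates them; this is the pointwise content of the perturbation argument in part (i) of the proof.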

As a straightforward consequence we get the following result of \(C^{\infty}\) well-posedness which is consistent with Oleinik’s result [16]. As we have bounded coefficients, we obtain global well-posedness instead of local well-posedness.

Corollary 2.4

Let \(a\ge 0\), \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\) and let \(f\in C([0,T], C^{\infty}_{c}(\mathbb{R}))\). Then the Cauchy problem (4) is \(C^{\infty}(\mathbb{R})\) well-posed, i.e., given \(g_{0},g_{1}\in{C}^{\infty}_{c}(\mathbb{R})\) there exists a unique solution \(u\in C^{2}([0,T], {C}^{\infty}(\mathbb{R}))\) of

Moreover, the estimate (20) holds for every \(k\in {\mathbb{N}}_{0}\).

Note that one can remove the assumption of compact support on \(f\) and the initial data, by the finite speed of propagation.

3 The Inhomogeneous Wave Equation with Space-Dependent Singular Coefficients

When dealing with singular coefficients, one can encounter equations that may not have a meaningful classical distributional solution. This is due to the well-known problem that in general it is not possible to multiply two arbitrary distributions. To handle this, the notion of a very weak solution has been introduced in [13]. In [13], the authors were looking for solutions modelled on Gevrey spaces. However, in this paper we will instead prove Sobolev well-posedness. This motivates the introduction of very weak solutions of Sobolev type.

3.1 Very Weak Solutions of Sobolev Type

In the sequel, let \(\varphi \) be a mollifier (\(\varphi \in C^{\infty}_{c}( \mathbb{R}^{n})\), \(\varphi \ge 0\) with \(\int \varphi =1\)) and let \(\omega (\varepsilon )\) be a positive net converging to 0 as \(\varepsilon \to 0\). Let \(\varphi _{\omega (\varepsilon )}(x)=\omega (\varepsilon )^{-n} \varphi (x/\omega (\varepsilon ))\). The notation \(K\Subset \mathbb{R}^{n}\) means that \(K\) is a compact subset of \(\mathbb{R}^{n}\). We are now ready to introduce the concepts of \(H^{k}\)-moderate and \(H^{k}\)-negligible nets.

Definition 3.1

(i) A net \((v_{\varepsilon })_{\varepsilon }\in H^{k}(\mathbb{R}^{n})^{(0,1]}\) is \(H^{k}\)-moderate if there exist \(N\in {\mathbb{N}}_{0}\) and \(c>0\) such that

$$ \| v_{\varepsilon }(x)\|_{H^{k}(\mathbb{R}^{n})} \le c \varepsilon ^{-N}, \tag{21} $$

uniformly in \(\varepsilon \in (0,1]\).

(ii) A net \((v_{\varepsilon })_{\varepsilon }\in H^{k}(\mathbb{R}^{n})^{(0,1]}\) is \(H^{k}\)-negligible if for all \(q\in {\mathbb{N}}_{0}\) there exists \(c>0\) such that

$$ \| v_{\varepsilon }(x)\|_{H^{k}(\mathbb{R}^{n})} \le c \varepsilon ^{q}, \tag{22} $$

uniformly in \(\varepsilon \in (0,1]\).

Since we will only be considering nets of \(H^{k}\) functions, we can simply use the expressions moderate net and negligible net and drop the \(H^{k}\)-suffix. Note that Biagioni and Oberguggenberger introduced in [2] spaces of generalised functions generated by nets in \(H^{\infty}(\mathbb{R}^{n})^{(0,1]}\). As a consequence, their notion of moderateness and negligibility involves derivatives of any order.
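To make the two notions concrete, the following Python sketch tests the bounds (21) and (22) on a finite grid of values of \(\varepsilon \). The three sample nets are our own hypothetical examples: \(\varepsilon ^{-1/2}\) (the \(L^{2}\)-norm of \(\varepsilon ^{-1}\varphi (x/\varepsilon )\) in dimension 1, assuming \(\Vert \varphi \Vert _{L^{2}}=1\)), \(e^{1/\varepsilon }\) and \(e^{-1/\varepsilon }\). A grid test can only give numerical evidence, since the definitions quantify over all \(\varepsilon \in (0,1]\).

```python
import math

def is_moderate(norm, n_exp, c, eps_grid):
    """Check norm(eps) <= c * eps**(-n_exp) for every eps in the grid."""
    return all(norm(eps) <= c * eps ** (-n_exp) for eps in eps_grid)

def is_negligible(norm, consts, eps_grid):
    """Check norm(eps) <= c_q * eps**q on the grid for each pair (q, c_q) in consts."""
    return all(norm(eps) <= c_q * eps ** q
               for q, c_q in consts.items() for eps in eps_grid)

# Grid in (0, 1]; kept away from 0 so that exp(1/eps) does not overflow.
eps_grid = [k / 100.0 for k in range(1, 101)]

sqrt_blow_up = lambda eps: eps ** (-0.5)        # moderate with N = 1, c = 1
exp_blow_up = lambda eps: math.exp(1.0 / eps)   # grows faster than any eps**(-N)
exp_decay = lambda eps: math.exp(-1.0 / eps)    # negligible

# For exp(-1/eps) one can take c_q = (q/e)**q, the maximum of eps**(-q) * exp(-1/eps);
# the factor (1 + 1e-9) only absorbs rounding at the point of equality eps = 1/q.
consts = {q: (q / math.e) ** q * (1.0 + 1e-9) for q in range(1, 6)}

print(is_moderate(sqrt_blow_up, 1, 1.0, eps_grid))
print(is_moderate(exp_blow_up, 5, 1.0, eps_grid))
print(is_negligible(exp_decay, consts, eps_grid))
```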

Analogously, by considering \(C^{\infty}([0,T]; H^{k}(\mathbb{R}^{n}))^{(0,1]}\) instead of \(H^{k}(\mathbb{R}^{n})^{(0,1]}\), we formulate the following definition.

Definition 3.2

(i) A net \((v_{\varepsilon })_{\varepsilon }\in C^{\infty}([0,T]; H^{k}( \mathbb{R}^{n}))^{(0,1]}\) is moderate if for all \(l\in {\mathbb{N}}_{0}\) there exist \(N\in {\mathbb{N}}_{0}\) and \(c>0\) such that

$$ \|\partial _{t}^{l} v_{\varepsilon }(t,\cdot )\|_{H^{k}( \mathbb{R}^{n})} \le c\varepsilon ^{-N}, \tag{23} $$

uniformly in \(t\in [0,T]\) and \(\varepsilon \in (0,1]\).

(ii) A net \((v_{\varepsilon })_{\varepsilon }\in C^{\infty}([0,T]; H^{k}( \mathbb{R}^{n}))^{(0,1]}\) is negligible if for all \(l\in {\mathbb{N}}_{0}\) and \(q\in {\mathbb{N}}_{0}\) there exists \(c>0\) such that

$$ \|\partial _{t}^{l} v_{\varepsilon }(t,\cdot )\|_{H^{k}( \mathbb{R}^{n})} \le c\varepsilon ^{q}, \tag{24} $$

uniformly in \(t\in [0,T]\) and \(\varepsilon \in (0,1]\).

In order to introduce the notion of a very weak solution for the Cauchy problem (1),

where \(a\ge 0\) and in general all coefficients, initial data and right-hand side are compactly supported distributions, we need some preliminary work on how to regularise our equation. We will provide estimates in terms of \(L^{\infty}\)- as well as \(L^{2}\)-norm and we will focus on coefficients and initial data in \(L^{\infty}(\mathbb{R}^{n})\), \({\mathcal {E}}'(\mathbb{R}^{n})\) and \(B^{\infty}(\mathbb{R}^{n})\).

Proposition 3.3

Let \(\varphi \) be a mollifier and \(\omega (\varepsilon )\) a positive function converging to 0 as \(\varepsilon \to 0\).

(i) If \(v \in L^{\infty}(\mathbb{R}^{n})\), then \(\forall \varepsilon \in (0,1)\), \(v \ast \varphi _{\omega (\varepsilon )}\in B^{\infty}( \mathbb{R}^{n})\) and

$$ \forall \alpha \in {\mathbb{N}}_{0}^{n}, \,\Vert \partial ^{\alpha}(v \ast \varphi _{\omega (\varepsilon )}) \Vert _{\infty }\le \omega ( \varepsilon )^{-|\alpha |} \Vert v\Vert _{\infty }\Vert \partial ^{ \alpha}\varphi \Vert _{L^{1}}. $$

(ii) If \(v \in {\mathcal {E}}'(\mathbb{R}^{n})\), then \(\forall \varepsilon \in (0,1)\), \(v \ast \varphi _{\omega (\varepsilon )}\in B^{\infty}( \mathbb{R}^{n})\) and

$$ \forall \alpha \in {\mathbb{N}}_{0}^{n}, \,\Vert \partial ^{\alpha}(v \ast \varphi _{\omega (\varepsilon )}) \Vert _{\infty }\le \omega ( \varepsilon )^{-|\alpha |-m} \sum _{|\beta |\le m } \Vert g_{\beta} \Vert _{\infty }\Vert \partial ^{\alpha + \beta}\varphi \Vert _{L^{1}}, $$

where \(m \in {\mathbb{N}}_{0}\) and \(g_{\beta }\in C_{c}(\mathbb{R}^{n})\) come from the structure of \(v\).

(iii) If \(v \in B^{\infty}(\mathbb{R}^{n})\), then \(\forall \varepsilon \in (0,1)\), \(v \ast \varphi _{\omega (\varepsilon )}\in B^{\infty}( \mathbb{R}^{n})\) and

$$ \forall \alpha \in {\mathbb{N}}_{0}^{n}, \,\Vert \partial ^{\alpha}(v \ast \varphi _{\omega (\varepsilon )}) \Vert _{\infty }\le \Vert \partial ^{\alpha}v \Vert _{\infty }. $$

Proof

(i) By the properties of convolution,

$$\begin{aligned} \Vert \partial ^{\alpha}(v \ast \varphi _{\omega (\varepsilon )}) \Vert _{\infty }&= \Vert v \ast (\partial ^{\alpha}\varphi _{\omega ( \varepsilon )} )\Vert _{\infty}= \omega (\varepsilon )^{-|\alpha |-n} \Vert v \ast (\partial ^{\alpha}\varphi )\left ( \frac{\cdot}{\omega (\varepsilon )}\right ) \Vert _{\infty } \\ &= \omega (\varepsilon )^{-|\alpha |-n}\Vert \int _{ \mathbb{R}^{n}} v(\cdot -\xi ) (\partial ^{\alpha} \varphi )\left (\frac{\xi}{\omega (\varepsilon )}\right )d\xi \Vert _{ \infty } \\ &= \omega (\varepsilon )^{-|\alpha |}\Vert \int _{ \mathbb{R}^{n}} v(\cdot -\omega (\varepsilon )z) ( \partial ^{\alpha}\varphi )\left (z\right )dz \Vert _{\infty } \\ &\le \omega (\varepsilon )^{-|\alpha |} \Vert v\Vert _{\infty }\Vert \partial ^{\alpha}\varphi \Vert _{L^{1}}. \end{aligned}$$

(ii) By the structure theorem of compactly supported distributions, there exists \(m \in {\mathbb{N}}_{0}\) and \(g_{\beta }\in C_{c}(\mathbb{R}^{n})\) such that

$$\begin{aligned} \Vert \partial ^{\alpha}(v \ast \varphi _{\omega (\varepsilon )}) \Vert _{\infty }&= \Vert ( \sum _{|\beta |\le m } \partial ^{\beta} g_{ \beta} ) \ast (\partial ^{\alpha}\varphi _{\omega (\varepsilon )} ) \Vert _{\infty}= \Vert \sum _{|\beta |\le m }( \partial ^{\beta} g_{ \beta} \ast \partial ^{\alpha}\varphi _{\omega (\varepsilon )} ) \Vert _{\infty} \\ &= \Vert \sum _{|\beta |\le m }( g_{\beta} \ast \partial ^{\alpha + \beta}\varphi _{\omega (\varepsilon )} ) \Vert _{\infty }\le \sum _{| \beta |\le m } \Vert g_{\beta} \ast \partial ^{\alpha + \beta} \varphi _{\omega (\varepsilon )} \Vert _{\infty } \\ &\le \omega (\varepsilon )^{-|\alpha |-m-n} \sum _{|\beta |\le m } \Vert g_{\beta} \ast (\partial ^{\alpha + \beta}\varphi )\left ( \frac{\cdot}{\omega (\varepsilon )}\right ) \Vert _{\infty } \\ &\le \omega (\varepsilon )^{-|\alpha |-m} \sum _{|\beta |\le m } \Vert g_{\beta} \Vert _{\infty }\Vert \partial ^{\alpha + \beta} \varphi \Vert _{L^{1}}. \end{aligned}$$

(iii) Putting the derivatives on \(v\), we get

$$\begin{aligned} \Vert \partial ^{\alpha}(v \ast \varphi _{\omega (\varepsilon )}) \Vert _{\infty }&= \Vert (\partial ^{\alpha}v) \ast \varphi _{\omega ( \varepsilon )} \Vert _{\infty }\le \Vert \partial ^{\alpha}v \Vert _{ \infty }\Vert \varphi _{\omega (\varepsilon )} \Vert _{L^{1}} \\ &=\Vert \partial ^{\alpha}v \Vert _{\infty }. \end{aligned}$$

□
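Estimate (i) of Proposition 3.3 can be illustrated numerically for \(n=1\), \(v=H\) the Heaviside function and \(|\alpha |=1\). In this case \((H\ast \varphi _{\omega })'=\varphi _{\omega }\), whose supremum is \(\omega ^{-1}\varphi (0)\) for an even, unimodal mollifier. The Python sketch below compares this with the bound \(\omega ^{-1}\Vert H\Vert _{\infty}\Vert \varphi '\Vert _{L^{1}}\). The specific bump function, the midpoint quadrature and all names are our own assumptions.

```python
import math

N_CELLS = 4000                      # midpoint cells on the support [-1, 1]
H_STEP = 2.0 / N_CELLS
NODES = [-1.0 + (j + 0.5) * H_STEP for j in range(N_CELLS)]

def bump(x):
    """Unnormalised bump exp(-1/(1 - x^2)), supported in [-1, 1]."""
    return math.exp(-1.0 / (1.0 - x * x)) if abs(x) < 1.0 else 0.0

# Numerical normalisation so that the mollifier has (approximately) integral 1.
Z = H_STEP * sum(bump(x) for x in NODES)

def phi(x):
    return bump(x) / Z

def dphi(x):
    """phi'(x), obtained from the chain rule applied to exp(-1/(1 - x^2))."""
    if abs(x) >= 1.0:
        return 0.0
    return phi(x) * (-2.0 * x) / (1.0 - x * x) ** 2

# Midpoint approximation of the L^1 norm of phi'.
L1_DPHI = H_STEP * sum(abs(dphi(x)) for x in NODES)

def sup_derivative(omega):
    """sup_x |(H * phi_omega)'(x)| = sup_x phi_omega(x) = phi(0) / omega."""
    return phi(0.0) / omega

def bound(omega):
    """Right-hand side of Proposition 3.3 (i) with |alpha| = 1 and ||H||_inf = 1."""
    return L1_DPHI / omega

for omega in (1.0, 0.1, 0.01):
    print(omega, sup_derivative(omega) <= bound(omega))
```

For this particular mollifier \(\Vert \varphi '\Vert _{L^{1}}=2\varphi (0)\), so the bound overestimates the true supremum by a factor of about 2 while capturing the correct blow-up rate \(\omega ^{-1}\).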

By employing Theorem 2.7 in [2] we get the following result of \(L^{2}\)-moderateness, where \(\varphi \) and \(\omega (\varepsilon )\) are defined as above.

Proposition 3.4

Let \(w \in \mathcal{E}'(\mathbb{R}^{n})\). Then, there exists \(m \in {\mathbb{N}}_{0}\) and for \(\beta \in {\mathbb{N}}_{0}^{n}\) there exists \(C>0\) such that,

where \(m\) depends on the structure of \(w\).

Proof

By the structure theorem of compactly supported distributions, there exists \(m \in {\mathbb{N}}_{0}\) and \(w_{\alpha }\in C_{c}(\mathbb{R}^{n})\) such that \(w=\sum _{|\alpha |\le m } \partial ^{\alpha} w_{\alpha}\). For \(\beta \in {\mathbb{N}}_{0}^{n}\), by Young’s inequality we get

□

Note that if \(f\in C([0,T], \mathcal{E}'(\mathbb{R}^{n}))\) then the net

fulfils moderate estimates with respect to \(x\) which are uniform with respect to \(t\in [0,T]\). We now have all the needed background to go back to the Cauchy problem (1) and formulate the appropriate notion of very weak solution. We work under the assumptions that all the coefficients are distributions with compact support, with \(a\) a positive distribution, \(f\in C([0,T], {\mathcal {E}}'(\mathbb{R}))\) and \(g_{0},g_{1}\in {\mathcal {E}}'(\mathbb{R})\). As a first step, by convolution with a mollifier as in Proposition 3.3, we regularise the Cauchy problem (1) and get

where \(t\in [0,T]\), \(x\in \mathbb{R}\), \(a_{\varepsilon }=a\ast \varphi _{\omega (\varepsilon )}\), \(b_{1,\varepsilon }=b_{1}\ast \varphi _{\omega (\varepsilon )}\), \(b_{2,\varepsilon }=b_{2}\ast \varphi _{\omega (\varepsilon )}\), \(b_{3,\varepsilon }=b_{3}\ast \varphi _{\omega (\varepsilon )}\), \(f_{\varepsilon }(t,x)= f(t,\cdot )\ast \varphi _{\omega ( \varepsilon )}(x)\), \(g_{0,\varepsilon }=g_{0}\ast \varphi _{\omega (\varepsilon )}\) and \(g_{1,\varepsilon }=g_{1}\ast \varphi _{\omega (\varepsilon )}\). We also assume that the regularised nets are real-valued (easily obtained with our choice of mollifiers).

We also impose the Levi condition

This will guarantee that the regularised Cauchy problem above has a net of smooth solutions \((u_{\varepsilon })_{\varepsilon }\).

Definition 3.5

The net of solutions \((u_{\varepsilon })_{\varepsilon }\) is a very weak solution of Sobolev order \(k\) of the Cauchy problem (1) if there exist \(N \in {\mathbb{N}}_{0}\) and \(c>0\) such that

for all \(t\in [0,T]\) and \(\varepsilon \in (0,1]\).

In other words, the Cauchy problem (1) has a very weak solution of order \(k\) if there exist moderate regularisations of coefficients, right-hand side and initial data such that the corresponding net of solutions \((u_{\varepsilon })_{\varepsilon }\) is Sobolev moderate of order \(k\). This definition is in line with the one introduced in [13] but moderateness is measured in terms of Sobolev norms rather than \(C^{\infty}\)-seminorms.

Remark 3.6

Uniqueness of very weak solutions is formulated, as for algebras of generalised functions, in terms of negligible nets. Namely, we say that the Cauchy problem (1) is very weakly well-posed if a very weak solution exists and it is unique modulo negligible nets. We will discuss this in more detail later in the paper.

3.2 Existence of a Very Weak Solution

In this subsection we want to understand which regularisations entail the existence of a very weak solution.

By using the transformation,

we can rewrite the above Cauchy problem as

where

The symmetriser of the matrix \(A\) is

and the energy is defined as follows:

Note that since both \(a\) and \(\varphi _{\varepsilon }\) are non-negative we have that \(a_{\varepsilon }\ge 0\) and therefore the bound from below

holds. By arguing as in Sect. 2 we arrive at

Estimating the right-hand side of (27) requires Glaeser’s inequality. However, since we are working with nets, we have that

where

Hence,

Making use of the Levi condition (26),

arguing as in Sect. 2 we obtain

Lastly,

Therefore from (28), (29) and (30), we obtain

where

Applying Grönwall’s lemma and using the bound from below for the energy, we obtain the following estimate

where the constant \(C_{\varepsilon }\) depends linearly on \(\Vert a_{\varepsilon }\Vert _{\infty}\) and exponentially on \(T\), \(M_{1,\varepsilon }\), \(M_{2,\varepsilon }\), \(\Vert b_{2,\varepsilon }\Vert _{\infty}\) and \(\Vert b_{3,\varepsilon }\Vert _{\infty}\). In particular,

By looking at the estimate above it is natural to formulate three different cases for the coefficients.

Case 1: the coefficients \(a, b_{1}, b_{2}, b_{3}\in L^{\infty}(\mathbb{R})\) and \(a(x)\ge 0\) for all \(x\in \mathbb{R}\).

Case 2: the coefficients \(a, b_{1}, b_{2}, b_{3}\in {\mathcal {E}}'( \mathbb{R})\) and \(a\ge 0\).

Case 3: the coefficients \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\) and \(a(x)\ge 0\) for all \(x\in \mathbb{R}\).

The analysis of these cases will require the following parametrised version of Glaeser’s inequality that, for the sake of completeness, we formulate in \(\mathbb{R}^{n}\). We recall that in \(\mathbb{R}^{n}\), Glaeser’s inequality is formulated as follows: if \(a\in C^{2}(\mathbb{R}^{n})\), \(a(x)\ge 0\) for all \(x\in \mathbb{R}^{n}\) and

$$ \sum _{i=1}^{n} \Vert \partial ^{2}_{x_{i}} a\Vert _{\infty}\le M_{1}, $$

for some constant \(M_{1}>0\), then

$$ |\partial _{x_{i}}a(x)|^{2}\le 2M_{1} a(x), $$

for all \(i=1,\dots ,n\) and \(x\in \mathbb{R}^{n}\).

Hence, we immediately obtain its parametrised version for \(a_{\varepsilon }=a\ast \varphi _{\omega (\varepsilon )}\), where \(\varphi \) is a mollifier (\(\varphi \in C^{\infty}_{c}( \mathbb{R}^{n})\), \(\varphi \ge 0\) with \(\int \varphi =1\)) and \(\omega (\varepsilon )\) is a positive net converging to 0 as \(\varepsilon \to 0\).

Proposition 3.7

(i) If \(a \in L^{\infty}(\mathbb{R}^{n})\) and \(a\ge 0\) then

$$ |\partial _{x_{i}}a_{\varepsilon }(x)|^{2}\le 2M_{1,\varepsilon } a_{ \varepsilon }(x), $$

for all \(i=1,\dots ,n\), \(x\in \mathbb{R}^{n}\) and \(\forall \varepsilon \in (0,1)\), where

$$ M_{1,\varepsilon }=\omega (\varepsilon )^{-2} \Vert a\Vert _{\infty } \sum _{i=1}^{n} \Vert \partial ^{2}_{x_{i}}\varphi \Vert _{L^{1}}. $$

(ii) If \(a \in {\mathcal {E}}'(\mathbb{R}^{n})\) and \(a\ge 0\) then

$$ |\partial _{x_{i}}a_{\varepsilon }(x)|^{2}\le 2M_{1,\varepsilon } a_{ \varepsilon }(x), $$

for all \(i=1,\dots ,n\), \(x\in \mathbb{R}^{n}\) and \(\forall \varepsilon \in (0,1)\), where

$$ M_{1,\varepsilon }=\omega (\varepsilon )^{-2-m} \sum _{|\beta |\le m } \Vert g_{\beta} \Vert _{\infty }\sum _{|\alpha | = 2} \Vert \partial ^{ \alpha + \beta}\varphi \Vert _{L^{1}} $$

and \(m \in {\mathbb{N}}_{0}\) and \(g_{\beta }\in C_{c}(\mathbb{R}^{n})\) come from the structure of \(a\).

(iii) If \(a \in B^{\infty}(\mathbb{R}^{n})\) and \(a\ge 0\) then

$$ |\partial _{x_{i}}a_{\varepsilon }(x)|^{2}\le 2M_{1} a_{\varepsilon }(x), $$

for all \(i=1,\dots ,n\), \(x\in \mathbb{R}^{n}\) and \(\forall \varepsilon \in (0,1)\), where \(M_{1}= \sum _{i=1}^{n} \Vert \partial ^{2}_{x_{i}} a\Vert _{\infty}\).
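Part (i) of Proposition 3.7 can be tested numerically in the model case \(n=1\), \(a=H\) (the Heaviside function). Then \(a_{\varepsilon }(\omega x)=\int _{-\infty}^{x}\varphi \), \(a'_{\varepsilon }(\omega x)=\omega ^{-1}\varphi (x)\) and \(M_{1,\varepsilon }=\omega ^{-2}\Vert \varphi ''\Vert _{L^{1}}\). The following Python sketch is written under our own assumptions: the bump mollifier, the midpoint quadrature, the second-difference approximation of \(\Vert \varphi ''\Vert _{L^{1}}\) and all names are hypothetical choices.

```python
import math

N_CELLS = 4000                      # midpoint cells on the support [-1, 1]
H_STEP = 2.0 / N_CELLS
NODES = [-1.0 + (j + 0.5) * H_STEP for j in range(N_CELLS)]

def bump(x):
    """Unnormalised bump exp(-1/(1 - x^2)), supported in [-1, 1]."""
    return math.exp(-1.0 / (1.0 - x * x)) if abs(x) < 1.0 else 0.0

Z = H_STEP * sum(bump(x) for x in NODES)
PHI = [bump(x) / Z for x in NODES]          # mollifier values at the nodes

# CDF[j] approximates int_{-1}^{x_j} phi, i.e. a_eps(omega * x_j) for a = H.
CDF = []
running = 0.0
for p in PHI:
    CDF.append(running + 0.5 * H_STEP * p)
    running += H_STEP * p

# ||phi''||_{L^1} via second differences; phi is extended by zero outside [-1, 1].
padded = [0.0] + PHI + [0.0]
L1_D2PHI = sum(abs(padded[j + 1] - 2.0 * padded[j] + padded[j - 1])
               for j in range(1, N_CELLS + 1)) / H_STEP

def m1_eps(omega, sup_a=1.0):
    """M_{1,eps} = omega^{-2} ||a||_inf ||phi''||_{L^1} in dimension 1."""
    return omega ** (-2) * sup_a * L1_D2PHI

def glaeser_holds(omega):
    """Check |a_eps'|^2 <= 2 M_{1,eps} a_eps at the points omega * x_j."""
    m1 = m1_eps(omega)
    return all((p / omega) ** 2 <= 2.0 * m1 * c for p, c in zip(PHI, CDF))

for omega in (1.0, 0.1, 0.01):
    print(omega, m1_eps(omega), glaeser_holds(omega))
```

The constant \(M_{1,\varepsilon }\) grows like \(\omega (\varepsilon )^{-2}\); this growth is what later forces a slowly decaying scale \(\omega (\varepsilon )\).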

In the rest of this section we will analyse the three different cases above and prove the existence of a very weak solution. In the sequel we say that \(\omega (\varepsilon )^{-1}\) is a scale of logarithmic type if \(\omega (\varepsilon )^{-1}\prec \ln (\varepsilon ^{-1})\). In the different cases below we will precisely state which kind of net is required to get the desired moderateness estimates for the solution \((u_{\varepsilon })_{\varepsilon }\).

3.3 Case 1

We assume that our coefficients \(a, b_{1}, b_{2}, b_{3}\in L^{\infty}(\mathbb{R})\) and \(a(x)\ge 0\) for all \(x\in \mathbb{R}\). From Proposition 3.7 (i), \(M_{1,\varepsilon }=\omega (\varepsilon )^{-2} \Vert a\Vert _{\infty } \Vert \partial ^{2}\varphi \Vert _{L^{1}}\) and \(\Vert a_{\varepsilon }\Vert _{\infty}\le \Vert a\Vert _{\infty}\), \(\Vert b_{2,\varepsilon }\Vert _{\infty}\le \Vert b_{2}\Vert _{\infty}\), \(\Vert b_{3,\varepsilon }\Vert _{\infty}\le \Vert b_{3}\Vert _{\infty}\) from Proposition 3.3 (i). The above calculations in combination with (32), (31) and Proposition 3.4 show that we have proven the following theorem.

Theorem 3.8

Let us consider the Cauchy problem

where \(t\in [0,T]\), \(x\in \mathbb{R}\). Assume the following set of hypotheses (Case 1):

(i) the coefficients \(a, b_{1}, b_{2}, b_{3}\in L^{\infty}(\mathbb{R})\) and \(a(x)\ge 0\) for all \(x\in \mathbb{R}\),

(ii) \(f\in C([0,T],\mathcal{E}'(\mathbb{R}))\),

(iii) \(g_{0},g_{1}\in \mathcal{E}'(\mathbb{R})\).

If we regularise

where \(\omega (\varepsilon )^{-2}\) is a scale of logarithmic type and the following Levi condition

is fulfilled for \(\varepsilon \in (0,1]\), then the Cauchy problem has a very weak solution of Sobolev order 0.
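The role of the logarithmic scale can be illustrated by the following elementary estimate. It is only a sketch under two assumptions that are not part of the statement above: that the constant in the energy estimate grows at most like \(\exp (CT\omega (\varepsilon )^{-2})\) for some constant \(C>0\), and that \(\prec \) is read as "bounded by a constant multiple of" for small \(\varepsilon \), with constant \(c>0\). Under these assumptions,

$$ \omega (\varepsilon )^{-2}\le c\ln (\varepsilon ^{-1}) \quad \Longrightarrow \quad \exp \bigl(CT\omega (\varepsilon )^{-2}\bigr)\le \exp \bigl(cCT\ln (\varepsilon ^{-1})\bigr)=\varepsilon ^{-cCT}, $$

so an exponential dependence on \(\omega (\varepsilon )^{-2}\) turns into a polynomial dependence on \(\varepsilon ^{-1}\), which is the kind of growth allowed by moderateness.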

3.4 Case 2

We assume that our coefficients \(a, b_{1}, b_{2}, b_{3}\in {\mathcal {E}}'( \mathbb{R})\) and \(a\ge 0\). From Proposition 3.7 (ii), \(M_{1,\varepsilon }=\omega (\varepsilon )^{-2-m_{a}} \sum _{\beta \le m_{a} } \Vert g_{a,\beta} \Vert _{\infty }\Vert \partial ^{\beta +2} \varphi \Vert _{L^{1}}\), where \(m_{a} \in {\mathbb{N}}_{0}\) and \(g_{a,\beta} \in C_{c}(\mathbb{R})\) come from the structure of \(a\). Analogously, from Proposition 3.3 (ii), \(\Vert a_{\varepsilon }\Vert _{\infty}\le \omega (\varepsilon )^{-m_{a}} \sum _{\beta \le m_{a} } \Vert g_{a,\beta} \Vert _{\infty }\Vert \partial ^{ \beta}\varphi \Vert _{L^{1}}\), \(\Vert b_{2,\varepsilon }\Vert _{\infty}\le \omega (\varepsilon )^{-m_{b_{2}}} \sum _{\beta \le m_{b_{2}} } \Vert g_{b_{2},\beta} \Vert _{\infty } \Vert \partial ^{ \beta}\varphi \Vert _{L^{1}}\), \(\Vert b_{3,\varepsilon }\Vert _{\infty}\le \omega (\varepsilon )^{-m_{b_{3}}} \sum _{\beta \le m_{b_{3}} } \Vert g_{b_{3},\beta} \Vert _{\infty } \Vert \partial ^{ \beta}\varphi \Vert _{L^{1}}\). The above calculations in combination with (32), (31) and Proposition 3.4 show that we have proven the following theorem.

Theorem 3.9

Let us consider the Cauchy problem

where \(t\in [0,T]\), \(x\in \mathbb{R}\). Assume the following set of hypotheses (Case 2):

(i) the coefficients \(a, b_{1}, b_{2}, b_{3}\in {\mathcal {E}}'( \mathbb{R})\) and \(a\ge 0\),

(ii) \(f\in C([0,T],\mathcal{E}'(\mathbb{R}))\),

(iii) \(g_{0},g_{1}\in \mathcal{E}'(\mathbb{R})\).

If we regularise

where \(\omega (\varepsilon )^{-h}\) is a scale of logarithmic type, \(h=\max \{2+m_{a}, m_{b_{2}}, m_{b_{3}}\}\), and the following Levi condition

is fulfilled for \(\varepsilon \in (0,1]\), then the Cauchy problem has a very weak solution of Sobolev order 0.

3.5 Case 3

We assume that our coefficients \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\) and \(a(x)\ge 0\) for all \(x\in \mathbb{R}\). This corresponds to the classical case in Sect. 2.1. In the next proposition we prove that the classical Levi condition on \(b_{1}\) can be transferred to the regularised coefficient \(b_{1,\varepsilon }\).

Proposition 3.10

Let

where \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\) and \(a \geq 0\). Suppose the Levi condition

holds for some \(M_{2}>0\). Then the Levi condition also holds for \(b_{1,\varepsilon }\), i.e.,

for some constant \(\tilde{M_{2}}\), independent of \(\varepsilon \).

Proof

We begin by writing

where in the last step we used Hölder’s inequality. The right-hand side of (35) can be estimated by

for some constant \(C>0\) independent of \(\varepsilon \). □
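The transfer of the Levi condition to the regularised coefficients can also be observed numerically. The sketch below is an illustration only: the coefficients \(a(x)=x^{2}/(1+x^{2})\) and \(b_{1}(x)=x/(1+x^{2})\), which satisfy \(b_{1}^{2}\le a\) (so \(M_{2}=1\)), the bump mollifier and the helper names `bump` and `levi_gap` are assumptions chosen for the example. For a non-negative kernel of mass 1, the Cauchy–Schwarz (Hölder) inequality gives \((b_{1}\ast \varphi )^{2}\le b_{1}^{2}\ast \varphi \le M_{2}\, a\ast \varphi \), and the code checks this on a grid.

```python
import numpy as np

def bump(y):
    """Unnormalised bump exp(-1/(1-y^2)) on (-1, 1), zero elsewhere (assumed mollifier)."""
    out = np.zeros_like(y, dtype=float)
    inside = np.abs(y) < 1.0
    out[inside] = np.exp(-1.0 / (1.0 - y[inside] ** 2))
    return out

def levi_gap(omega, dx=0.005, half_width=5.0, m2=1.0):
    """Return max over the grid of b_{1,eps}^2 - M_2 a_eps (<= 0 means Levi holds)."""
    x = np.arange(-half_width, half_width + dx / 2, dx)
    a = x ** 2 / (1.0 + x ** 2)          # hypothetical coefficient, a >= 0
    b1 = x / (1.0 + x ** 2)              # hypothetical lower order term, b1^2 <= a
    y = np.arange(-omega, omega + dx / 2, dx)
    weights = bump(y / omega)
    weights /= weights.sum()             # discrete mollifier: non-negative, total mass 1
    a_eps = np.convolve(a, weights, mode="same")
    b1_eps = np.convolve(b1, weights, mode="same")
    return np.max(b1_eps ** 2 - m2 * a_eps)

for omega in (0.5, 0.2, 0.05):
    print(omega, levi_gap(omega))
```

In this example the constant does not deteriorate with \(\omega \), in agreement with the \(\varepsilon \)-independence stated in the proposition.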

From Proposition 3.7 (iii), \(M_{1}= \Vert \partial ^{2} a\Vert _{\infty}\) and \(\Vert a_{\varepsilon }\Vert _{\infty}\le \Vert a\Vert _{\infty}\), \(\Vert b_{2,\varepsilon }\Vert _{\infty}\le \Vert b_{2}\Vert _{\infty}\), \(\Vert b_{3,\varepsilon }\Vert _{\infty}\le \Vert b_{3}\Vert _{\infty}\) from Proposition 3.3 (iii). The above calculations in combination with (32), (31), Proposition 3.4 and Proposition 3.10 show that we have proven the following theorem.

Theorem 3.11

Let us consider the Cauchy problem

where \(t\in [0,T]\), \(x\in \mathbb{R}\). Assume the following set of hypotheses (Case 3):

(i) the coefficients \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\) and \(a(x)\ge 0\) for all \(x\in \mathbb{R}\),

(ii) \(f\in C([0,T],C_{c}^{\infty}(\mathbb{R}))\),

(iii) \(g_{0},g_{1}\in C_{c}^{\infty}(\mathbb{R})\),

and that the Levi condition \(b_{1}(x)^{2}\le M_{2}a(x)\) holds uniformly in \(x\). If we regularise

then the Cauchy problem has a very weak solution of Sobolev order 0.

Theorem 3.11 proves the existence of a very weak solution when the equation coefficients are regular. Now, we want to prove that in this case every very weak solution converges to the classical solution as \(\varepsilon \to 0\). This result holds independently of the choice of regularisation, i.e., mollifier and scale.

Theorem 3.12

Let

where \(a, b_{1}, b_{2}, b_{3}\in B^{\infty}(\mathbb{R})\) and \(a \geq 0\). We also assume that all the functions involved in the system are real-valued. Let \(g_{0}\) and \(g_{1}\) belong to \(C_{c}^{\infty}(\mathbb{R})\) and \(f \in C([0,T];C_{c}^{\infty}(\mathbb{R}))\). Suppose the Levi condition

holds. Then, every very weak solution \((u_{\varepsilon })_{\varepsilon }\) converges in \(C([0,T], L^{2}(\mathbb{R}))\) as \(\varepsilon \to 0\) to the unique classical solution of the Cauchy problem (37).

Proof

Let \(\widetilde{u}\) be the classical solution. By definition we have

By the finite speed of propagation of hyperbolic equations, \(\widetilde{u}\) is compactly supported with respect to \(x\). As before, we regularise (37) and by Proposition 3.10, the Levi condition also holds for the regularised coefficients with a constant independent of \(\varepsilon \). Hence, there exists a very weak solution \((u_{\varepsilon })_{\varepsilon }\) of \(u\) such that

for regularisation of the coefficients via convolution with a mollifier as done throughout the paper. We can therefore rewrite (38) as

where

and \(n_{\varepsilon }\in C([0,T], L^{2}(\mathbb{R}))\). Here we have used the fact that all the terms defining \(n_{\varepsilon }\) have \(L^{2}\)-norm which can be estimated by \(\varepsilon \) uniformly with respect to \(t\in [0,T]\). Hence, \(\Vert n_{\varepsilon }\Vert _{L^{2}}=O(\varepsilon )\) and \(n_{\varepsilon }\) converges to 0 in \(C([0,T], L^{2}(\mathbb{R}))\) as \(\varepsilon \to 0\). From (40) and (39) we obtain that \(\widetilde{u}-u_{\varepsilon }\) solves the equation

which fulfils the initial conditions

Following the energy estimates of Sect. 2.1 and arguing as in the proof of Theorem 3.11, after reduction to a system, we obtain an estimate of \(\Vert \widetilde{u}- u_{\varepsilon }\Vert _{L^{2}}^{2}\) as in (31), in terms of \((\widetilde{u}-u_{\varepsilon })(0,x)\), \((\partial _{t} \widetilde{u}- \partial _{t} u_{\varepsilon })(0,x)\) and the right-hand side \(n_{\varepsilon }(t,x)\). In particular, since \(g_{0}-g_{0,\varepsilon }\) and \(g_{1}-g_{1,\varepsilon }\) tend to 0 in the \(H^{1}\) and \(L^{2}\) norm, respectively, as \(\varepsilon \) tends to 0, and since the constant \(C_{\varepsilon }\) in (31) is independent of \(\varepsilon \) in this case, we immediately get

Since \(n_{\varepsilon }\to 0\) in \(C([0,T], L^{2}(\mathbb{R}))\) as well, we conclude that \(u_{\varepsilon }\to \widetilde{u}\) in \(C([0,T], L^{2}(\mathbb{R}))\). Note that our argument is independent of the choice of the regularisation of the coefficients and the right-hand side. □

To conclude, we have proven the existence of very weak solutions of Sobolev order 0, since in the next sections we will mainly work with \(L^{2}\)-norms. However, very weak solutions of higher Sobolev order can also be obtained with a suitable choice of the nets involved in the regularisation process, by following the techniques developed in Sect. 2. In analogy to Sect. 7 in [3], it is immediate to see from the estimate (31) that perturbing the equation’s coefficients, right-hand side and initial data with negligible nets leads to a negligible change in the solution, where by negligible we mean a negligible net of Sobolev order 0. So, we can conclude that our Cauchy problem is very weakly well-posed.

4 Toy Models and Numerical Experiments

The final section of this paper is devoted to a wave equation toy model with discontinuous space-dependent coefficient. This complements the study initiated in [3] where the equation’s coefficient was depending on time only. In detail, we consider the Cauchy problem

where \(g_{0},\, g_{1} \in C_{c}^{\infty}(\mathbb{R})\) and \(H\) is the Heaviside function with jump at \(x=0\). Classically, we can solve this Cauchy problem piecewise to obtain the piecewise distributional solution \(\bar{u}\): we solve first for \(x<0\), then for \(x>0\), and finally combine the two solutions under the transmission conditions that \(\bar{u}\), \(\partial _{t} \bar{u}\), \(H\partial _{x} \bar{u}\) are continuous across \(x=0\), as in [10]. Problems of this type have been investigated in [8] for a jump function between two positive constants. Here, we work with the Heaviside function, which is equal to zero before the jump and identically 1 after it.

In the next subsection we describe how the classical piecewise distributional solution \(\bar{u}\) of the Cauchy problem (41) is defined.

4.1 Classical Piecewise Distributional Solution

In the region defined by \(x<0\), our equation becomes

$$ \partial _{t}^{2}u(t,x)=0. $$

Integrating once in time and using the initial data, we get \(\partial _{t}u=g_{1}(x)\). Integrating once more, we get \(u(t,x)=tg_{1}(x)+g_{0}(x)\). From this we obtain that \(\partial _{t}u(t,x)=g_{1}(x)\) and \(\partial _{x}u(t,x)=tg_{1}'(x)+g_{0}'(x)\). We first do a transformation into a system

Note that from the equation we get that \(\partial _{t} v =\partial _{t} w=0 \) and hence we have that \(v(t,x)=w(t,x)=g_{1}(x)\) for \(x<0\).

In the region \(x>0\), where \(H=1\), the equation becomes

$$ \partial _{t}^{2}u(t,x)-\partial _{x}^{2}u(t,x)=0. $$

We first do a transformation into a system

From this we can recover that

Note that the system satisfies

We therefore have that \(v(t,x)=v_{0}(x-t)\) for \(x \ge t \) and \(w(t,x)=w_{0}(x+t)\) for all \(x\ge 0\), that is, both for \(0 \le x < t \) and for \(x \ge t \).

We now compare the values of \(v\) and \(w\) on both sides of \(x=0\). We denote by \(v_{-},w_{-}\) and \(v_{+},w_{+}\) the values of \(v,w\) for \(x<0\) and \(x>0\), respectively. Using the conditions that the values of \(u_{t}\) and \(u_{x}\) should not jump across \(x=0\), we get that

along \(x=0\). Substituting the value of \(w_{+}\) and \(v_{-}\) we get

Rearranging with respect to \(v_{+}\), we have

This gives us the condition

This condition on the initial data can also be found in [1, Sect. 2]. Concluding we get that,

and

Using that \(\partial _{t} u =\frac{v+w}{2}\), we get that

Note that (43) is continuous on the lines \(x=0\) and \(x=t\).

Integrating and using the initial conditions, we conclude that the solution of (41) for \(t\in [0,T] \) is

Note that (44) is continuous on the lines \(x=0\) and \(x=t\) and hence \(u \in C^{1}([0,T]\times \mathbb{R})\). Furthermore we have that \(\bar{u}(0,x)=g_{0}(x)\) and \(\partial _{t}\bar{u}(0,x)=g_{1}(x)\).

The Cauchy problem (41) can also be studied via the very weak solutions approach. In the sequel, we prove that every very weak solution obtained after regularisation will recover the distributional solution \(\bar{u}\) in the limit as \(\varepsilon \to 0\).

4.2 Consistency of the Very Weak Solution Approach with the Classical One

Let

where \(H_{\varepsilon }=H\ast \varphi _{\omega (\varepsilon )}\) with \(\varphi _{\omega (\varepsilon )}(x)=\omega (\varepsilon )^{-1} \varphi (x/\omega (\varepsilon ))\) and \(\varphi \) is a mollifier (\(\varphi \in C^{\infty}_{c}(\mathbb{R}^{n})\), \(\varphi \ge 0\) with \(\int \varphi =1\)). Assume that \(\omega (\varepsilon )\) is a positive scale with \(\omega (\varepsilon )\to 0\) as \(\varepsilon \to 0\) and that the initial data belong to \(C^{\infty}_{c}(\mathbb{R})\).

Theorem 4.1

Every very weak solution of Cauchy problem (41) converges to the piecewise distributional solution \(\bar{u}\), as \(\varepsilon \to 0\).

Proof

Let \((u_{\varepsilon })_{\varepsilon }\) be a very weak solution of the Cauchy problem (41), i.e. a net of solutions of the Cauchy problem (45) which fulfills moderate \(L^{2}\)-estimates. Since the initial data are compactly supported, from the finite speed of propagation, it is not restrictive to assume that \((u_{\varepsilon })\) vanishes for \(x\) outside some compact set K, independently of \(t \in [0,T]\) and \(\varepsilon \in (0,1]\). By construction we have that \(u_{\varepsilon }\in C^{\infty}([0,T], C_{c}^{\infty}( \mathbb{R}))\). The rest of the proof is an easy adaptation of Proposition 6.8 in [10] and for the sake of simplicity it will not be repeated here. □

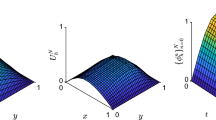

4.3 Numerical Model

We conclude the paper by investigating the previous toy model numerically. We also provide numerical investigations of more singular toy models, obtained by replacing the Heaviside coefficient with a Dirac delta or a homogeneous distribution. Our analysis aims at a better understanding of the corresponding very weak solutions as \(\varepsilon \to 0\).

4.3.1 Heaviside

We solve the Cauchy problem (41) numerically using the Lax–Friedrichs method [15] after transforming it to an equivalent first-order system. We consider \(t \in [0,2]\), \(x \in [-4,4]\), and compactly supported initial conditions

satisfying condition (42). For the space and time discretisation, we fix the discretisation steps \(\Delta x=\Delta t= 0.0005\). For different values of \(\varepsilon \), we compute the numerical solutions \(u_{\varepsilon }\) and we compare them with the piecewise distributional solution \(\bar{u}\) obtained in Sect. 4.1. In particular, we compute the norm \(\|u_{\varepsilon }- \bar{u} \|_{L^{2}([-4,4])}\) at \(t=2\) and show that it tends to 0 as \(\varepsilon \to 0\), in agreement with Theorem 4.1.
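The following is a minimal sketch of a Lax–Friedrichs scheme for the regularised problem, not a reproduction of the code used for the experiments. It assumes one possible first-order reduction, \(p=\partial _{t}u\), \(q=H_{\varepsilon }\partial _{x}u\), so that \(\partial _{t}p=\partial _{x}q\) and \(\partial _{t}q=H_{\varepsilon }\partial _{x}p\), which may differ from the system used in the paper. The initial data, the bump mollifier, the coarse grid, the periodic boundary treatment and all function names are hypothetical choices made for the example.

```python
import numpy as np

def bump(y):
    """Unnormalised bump exp(-1/(1-y^2)) on (-1, 1), zero elsewhere (assumed mollifier)."""
    out = np.zeros_like(y, dtype=float)
    inside = np.abs(y) < 1.0
    out[inside] = np.exp(-1.0 / (1.0 - y[inside] ** 2))
    return out

def mollified_heaviside(x, eps):
    """H_eps = H * phi_eps, evaluated as the normalised cumulative integral of the bump."""
    y = np.linspace(-1.0, 1.0, 4001)
    cdf = np.cumsum(bump(y))
    cdf /= cdf[-1]                       # total mass 1
    return np.interp(x / eps, y, cdf, left=0.0, right=1.0)

def lax_friedrichs(g0, g1, eps, T=0.5, L=4.0, dx=0.01):
    """Approximate u_eps(T, x) on [-L, L] for u_tt - (H_eps u_x)_x = 0."""
    x = np.arange(-L, L + dx / 2, dx)
    dt = dx                              # CFL number 1, since sqrt(H_eps) <= 1
    H = mollified_heaviside(x, eps)
    u = g0(x)
    p = g1(x)                            # p = u_t
    q = H * np.gradient(u, dx)           # q = H_eps u_x
    lam = dt / (2.0 * dx)
    for _ in range(int(round(T / dt))):
        pl, pr = np.roll(p, 1), np.roll(p, -1)   # left and right neighbours (periodic)
        ql, qr = np.roll(q, 1), np.roll(q, -1)
        u = u + dt * p                   # recover u from p with a forward Euler step
        p, q = (0.5 * (pl + pr) + lam * (qr - ql),
                0.5 * (ql + qr) + lam * H * (pr - pl))
    return x, u

# Hypothetical data: a bump supported in [1, 2] and zero initial velocity.
g0 = lambda x: bump((x - 1.5) / 0.5)
g1 = lambda x: np.zeros_like(x)
x, u = lax_friedrichs(g0, g1, eps=0.1)
print(u.min(), u.max())
```

With \(\Delta t=\Delta x\) the numerical domain of dependence grows by one cell per step, so in this example the computed solution stays exactly zero away from the region reached by the initial bump.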

We consider the mollifier \(\varphi _{\varepsilon }(x)=\frac{1}{\varepsilon }\,\varphi ( \frac{x}{\varepsilon })\) with

Note that we do not expect that a change in the mollifier will impact our results as we have shown similarly in [3].

Figure 1 shows the exact solution \(\bar{u}\) and the solutions \(u_{\varepsilon }\) at \(t=2\) for \(\varepsilon =0.1\), 0.05, 0.01, 0.005, 0.001, 0.0005 (left), a close-up around \(x=0\) (right) and the computed \(L^{2}\) error norm for the various values of \(\varepsilon \) (bottom). We can see that the solutions \(u_{\varepsilon }\) better approach the exact solution \(\bar{u}\) as \(\varepsilon \) is reduced and that the error norm decreases for \(\varepsilon \to 0\) as expected.
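A discrete \(L^{2}\) norm of the kind reported above can be computed from grid values as in the following sketch. The trapezoidal quadrature and the function name `l2_norm` are assumptions for illustration; the quadrature actually used for the figures is not specified in the text.

```python
import numpy as np

def l2_norm(values, dx):
    """Trapezoidal approximation of the L^2 norm of a grid function with spacing dx."""
    sq = np.asarray(values, dtype=float) ** 2
    integral = dx * (sq.sum() - 0.5 * (sq[0] + sq[-1]))
    return np.sqrt(integral)

# Usage: the constant function 1 on [-4, 4] has L^2 norm sqrt(8).
x = np.linspace(-4.0, 4.0, 801)
dx = x[1] - x[0]
print(l2_norm(np.ones_like(x), dx))
```

For an error norm such as \(\|u_{\varepsilon }- \bar{u} \|_{L^{2}([-4,4])}\), the same function would be applied to the difference of the two grid functions.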

4.3.2 Delta

We now focus on the Cauchy problem (41) where we replace the Heaviside function \(H_{\varepsilon }\) by a regularised delta distribution \(\delta _{\varepsilon }\). We consider the same setting and discretisation as in the previous test, we compute the numerical solutions \(u_{\varepsilon }\) and we calculate the norm \(\|u_{\varepsilon }\|_{L^{2}([-4,4])}\) at \(t=0.05\) and show that it grows to infinity as \(\varepsilon \to 0\). As a result, \(u_{\varepsilon }\) cannot have a limit in \(L^{2}([-4,4])\) since if it did, \(\|u_{\varepsilon }\|_{L^{2}([-4,4])}\) would have to converge.

In Fig. 2 we depict the computed \(L^{2}\) norm at \(t=0.05\) for \(\varepsilon =0.1\), 0.05, 0.01, 0.005, 0.001, 0.0005. As expected, this seems to confirm the blow-up of the \(L^{2}\) norm in the case of coefficients as singular as delta.

4.3.3 Homogeneous Distributions

We finally study numerically the Cauchy problem (41) where the coefficient is replaced by the regularisation of the homogeneous distribution \(\chi ^{\alpha}_{+}\), as defined in [14]

Following [14], we recall that when \(\alpha = 0 \), \(\chi ^{\alpha}_{+}\) corresponds to the Heaviside function and when \(\alpha = -1 \), \(\chi ^{\alpha}_{+}\) corresponds to the delta distribution. As before, we consider the same setting and discretisation. For different values of \(\alpha \) between 0 and −1, we compute the numerical solutions \(u_{\varepsilon }\), we calculate the norm \(\|u_{\varepsilon }\|_{L^{2}([-4,4])}\) at \(t=0.05\) and we compare its growth with that observed in the previous cases. In Fig. 3 we depict the computed \(L^{2}\) norm at \(t=0.05\) for \(\varepsilon =0.1\), 0.05, 0.01, 0.005, 0.001, 0.0005 and for \(\alpha =0\), −0.1, −0.25, −0.5, −0.75, −0.9, −1. As expected, this seems to confirm a faster blow-up of the \(L^{2}\) norm as \(\alpha \to -1\) (\(\alpha =-1\) corresponds to the case of delta). Note that for the case \(\alpha =-1\), we have used the information from the prior analysis in the previous section.

Notes

There exists a constant \(D>0\) such that \((a'(x)+b_{1}(x))^{2} \le D a(x)\) for all \(x\in \mathbb{R}\).

References

[1] Brown, D.L.: A note on the numerical solution of the wave equation with piecewise smooth coefficients. Math. Comput. 42(166), 369–391 (1984)

[2] Biagioni, H., Oberguggenberger, M.: Generalized solutions to the Korteweg–de Vries and the regularized long-wave equations. SIAM J. Math. Anal. 23(4), 923–940 (1992)

[3] Discacciati, M., Garetto, C., Loizou, C.: Inhomogeneous wave equation with \(t\)-dependent singular coefficients. J. Differ. Equ. 319, 131–185 (2022)

[4] Colombini, F., Kinoshita, T.: On the Gevrey well posedness of the Cauchy problem for weakly hyperbolic equations of higher order. J. Differ. Equ. 186, 394–419 (2002)

[5] Colombini, F., Kinoshita, T.: On the Gevrey wellposedness of the Cauchy problem for weakly hyperbolic equations of 4th order. Hokkaido Math. J. 31, 39–60 (2002)

[6] Colombini, F., Spagnolo, S.: An example of a weakly hyperbolic Cauchy problem not well posed in \(C^{\infty}\). Acta Math. 148, 243–253 (1982)

[7] Colombini, F., De Giorgi, E., Spagnolo, S.: Sur les équations hyperboliques avec des coefficients qui ne dépendent que du temps. Ann. Sc. Norm. Super. Pisa, Cl. Sci. 6, 511–559 (1979)

[8] Deguchi, H., Oberguggenberger, M.: Propagation of singularities for generalized solutions to wave equations with discontinuous coefficients. SIAM J. Math. Anal. 48, 397–442 (2016)

[9] Garetto, C.: On the wave equation with multiplicities and space-dependent irregular coefficients. Trans. Am. Math. Soc. 374, 3131–3176 (2021)

[10] Garetto, C., Oberguggenberger, M.: Symmetrisers and generalised solutions for strictly hyperbolic systems with singular coefficients. Math. Nachr. 288(2–3), 185–205 (2015)

[11] Garetto, C., Ruzhansky, M.: On the well-posedness of weakly hyperbolic equations with time-dependent coefficients. J. Differ. Equ. 253(5), 1317–1340 (2012)

[12] Garetto, C., Ruzhansky, M.: Weakly hyperbolic equations with non-analytic coefficients and lower order terms. Math. Ann. 357(2), 401–440 (2013)

[13] Garetto, C., Ruzhansky, M.: Hyperbolic second order equations with non-regular time dependent coefficients. Arch. Ration. Mech. Anal. 217(1), 113–154 (2015)

[14] Hörmander, L.: The Analysis of Linear Partial Differential Operators I: Distribution Theory and Fourier Analysis. Springer, Berlin (2015)

[15] LeVeque, R.J.: Numerical Methods for Conservation Laws. Birkhäuser, Basel (1992)

[16] Oleinik, O.A.: On the Cauchy problem for weakly hyperbolic equations. Commun. Pure Appl. Math. 23, 569–586 (1970)

[17] Schwartz, L.: Sur l’impossibilité de la multiplication des distributions. C. R. Acad. Sci. Paris 239, 847–848 (1954)

[18] Spagnolo, S., Taglialatela, G.: Homogeneous hyperbolic equations with coefficients depending on one space variable. J. Hyperbolic Differ. Equ. 4(3), 533–553 (2007)

Acknowledgements

The authors are grateful to the referee for the useful comments that have improved the final version of the paper.

Funding

The second author was supported by the EPSRC grant EP/V005529/2.


Ethics declarations

Competing Interests

The authors declare no competing interests.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Discacciati, M., Garetto, C. & Loizou, C. On the Wave Equation with Space Dependent Coefficients: Singularities and Lower Order Terms. Acta Appl Math 187, 10 (2023). https://doi.org/10.1007/s10440-023-00601-6
