Abstract

In the study of parameterized streaming complexity on graph problems, the main goal is to design streaming algorithms for parameterized problems that use \(\mathcal {O}(f(k) \log ^{\mathcal {O}(1)} n)\) space, where f is an arbitrary computable function depending only on the parameter k. However, in the past few years very few positive results have been established. Most of the graph problems that do admit streaming algorithms of this kind require only localized checking, like Vertex Cover or Maximum Matching parameterized by the size k of the solution we are seeking. Chitnis et al. (SODA’16) have shown that many important parameterized problems that form the backbone of traditional parameterized complexity require \(\Omega (n)\) bits of storage for any streaming algorithm, e.g. Feedback Vertex Set, Even Cycle Transversal, Odd Cycle Transversal, Triangle Deletion, or the more general \(\mathcal{F}\)-Subgraph Deletion, when parameterized by the solution size k. Our contribution lies in overcoming these obstacles to efficient parameterized streaming algorithms for graph deletion problems by exploiting the power of parameterization. We use the vertex cover size K as the parameter for the parameterized graph deletion problems we consider. We consider the four most well-studied streaming models: the Ea (edge arrival), Dea (dynamic edge arrival), Va (vertex arrival) and Al (adjacency list) models. Surprisingly, parameterizing by the vertex cover size K in the different models leads to a classification into positive and negative results for problems like \(\mathcal{F}\)-Subgraph Deletion and \(\mathcal{F}\)-Minor Deletion.


1 Introduction

In streaming algorithms, a graph is presented as a sequence of edges. In the simplest model, the stream consists only of edge arrivals, each of which adds an edge to the graph seen so far; more generally, the stream may be a dynamic mixture of arrivals and departures of edges. In either case, the primary objective is to quickly answer some basic questions about the current state of the graph, such as finding a (maximal) matching over the current graph edges, or finding a (minimum) vertex cover, while storing only a small amount of information. In the most restrictive model, we only allow \(\mathcal {O}( \log ^{\mathcal {O}(1)} n)\) bits of space for storage. However, using standard techniques from communication complexity one can show that most problems do not admit such algorithms. Thus one relaxes this notion and defines the so-called semi-streaming model, which allows \(\mathcal {O}(n \log ^{\mathcal {O}(1)} n)\) bits of space. This model has been extremely successful for graph streaming algorithms, and a plethora of non-trivial algorithms have been designed in this model [1, 19, 20]. There is a vast literature on graph streaming, and we refer to the survey by McGregor [25] for more details.

The theme of this paper is parameterized streaming algorithms. Before turning to parameterized streaming, let us introduce a few basic definitions in parameterized complexity. The goal of parameterized complexity is to find ways of solving NP-hard problems more efficiently than brute force: the aim is to restrict the combinatorial explosion to a parameter that is hopefully much smaller than the input size. Formally, a parameterization of a problem is the assignment of an integer k to each input instance. A parameterized problem is said to be fixed-parameter tractable (FPT) if there is an algorithm that solves the problem in time \(f(k)\cdot |I|^{\mathcal {O}(1)}\), where \(\left| I \right| \) is the size of the input and f is an arbitrary computable function depending only on the parameter k. There is a long list of NP-hard graph problems that are FPT under various parameterizations: finding a vertex cover of size k, finding a cycle of length k, finding a maximum independent set in a graph of treewidth at most k, etc. For more details, the reader is referred to the monograph [10]. Given the definition of FPT, it is natural to seek streaming algorithms for the corresponding parameterized problems that use \(\mathcal {O}(f(k) \log ^{\mathcal {O}(1)} n)\) bits of space, where f is an arbitrary computable function depending only on the parameter k.

There are several ways to formalize the parameterized streaming question, and certain natural models have been considered in the literature. The basic case is when the input of a given problem consists of a sequence of edge arrivals only, for which one seeks a parameterized streaming algorithm (PSA). It is more challenging when the input stream is dynamic and contains both insertions and deletions of edges. In this case one seeks a dynamic parameterized streaming algorithm (DPSA). To understand the challenges in designing a DPSA, consider the problem of maintaining a maximal matching over a dynamic stream. If one or more edges of a maximal matching (which may be unique) get deleted, then one may need to do a substantial amount of work, and also to store enough information, to move to a new maximal matching in the modified graph. If we are promised that at every timestamp there is a solution of cost k, then we seek a promised dynamic parameterized streaming algorithm (PDPSA). These notions were formalized in [6, 7], where several results for Vertex Cover and Maximum Matching were presented. Unfortunately, this relaxation to \(\mathcal {O}(f(k) \log ^{\mathcal {O}(1)} n)\) bits of space does not buy us many new results. Most of the problems for which parameterized streaming algorithms are known are “local problems”, while problems that require some global checking, such as Feedback Vertex Set, Even Cycle Transversal and Odd Cycle Transversal, remain elusive. In fact, using reductions from communication complexity, one can show that when the edges of the graph arrive in an arbitrary order, all of the above problems require \(\Omega (n)\) space even if we allow a constant number of passes over the data stream [7].

The starting point of this paper is the above-mentioned \(\Omega (n)\) lower bounds for basic graph problems. We ask the most natural question: how do we deconstruct these intractability results? Looking deeper, we realize that, to the best of our knowledge, most works on parameterized streaming algorithms use only one parameter, namely the size of the solution we are seeking. This is indeed the most well-studied parameter, but there is no reason to use only the solution size as a parameter. That said, researchers have also looked at structural parameters in parameterized streaming; we review these works in Section 1.3. In parameterized complexity, when faced with such obstacles, one either studies a problem with respect to parameters larger than the solution size or considers structural parameters.

1.1 Parameters, Models, and Problems

What parameters to use? In parameterized complexity, after solution size and treewidth, arguably the most notable structural parameter is the vertex cover size K [10, 15]. For all the vertex deletion problems that we consider in this paper, a vertex cover is also a solution. Thus, for all these problems, the vertex cover size K is always at least the solution size k. We carry out a thorough study of vertex deletion problems from the viewpoint of parameterized streaming in all known models. In doing so, this paper carries forward the use of structural parameters in parameterized streaming algorithms, as done earlier in [7, 14].

Streaming models. The models that we consider are: (1) Edge Arrival (Ea) model; (2) Dynamic Edge Arrival (Dea) model; (3) Vertex Arrival (Va) model (In each step, a vertex \(v \in V(G)\) is revealed along with all the edges between v and already revealed neighbors of v.); and (4) Adjacency List (Al) model (In a step, a vertex \(v \in V(G)\) is revealed along with all edges incident on v). The formal definitions are in Section 2.

What problems to study? We study the streaming complexity of parameterized versions of \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion. These are among the most well-studied problems in parameterized complexity [5, 12, 13, 15, 17, 18, 21, 23, 24, 29, 31] and have driven the development of the field. The parameters we consider in this paper are (i) the solution size k and (ii) the size K of the vertex cover of the input graph G. In \(\mathcal {F}\)-Subgraph deletion (respectively, \(\mathcal {F}\)-Minor deletion), the objective is to decide whether there exists \(X \subseteq V(G)\) of size at most k such that \(G \setminus X\) contains no graph in \(\mathcal {F}\) as a subgraph (respectively, as a minor). \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion are interesting for the following reasons. Feedback Vertex Set (FVS), Even Cycle Transversal (ECT), Odd Cycle Transversal (OCT) and Triangle Deletion (TD) are special cases of \(\mathcal {F}\)-Subgraph deletion when \(\mathcal {F}=\{C_3,C_4,C_5,\ldots \}\), \(\mathcal {F}=\{C_4,C_6,\ldots \}\), \(\mathcal {F}=\{C_3,C_5,\ldots \}\) and \(\mathcal {F}=\{C_3\}\), respectively. FVS is also a special case of \(\mathcal {F}\)-Minor deletion when \(\mathcal {F}=\{C_3\}\).
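To make the deletion problems concrete, the following Python sketch (our illustration, not from this paper) solves FVS, i.e. \(\mathcal {F}\)-Subgraph deletion with \(\mathcal {F}=\{C_3,C_4,C_5,\ldots \}\), by exhaustive search on tiny graphs. It relies only on the fact that \(G \setminus X\) contains no cycle exactly when it is a forest, which is checked with a union-find structure. The function names `is_forest` and `min_fvs` are hypothetical, the graph is assumed to be simple, and the search takes exponential time; it is an executable restatement of the definition, not a streaming algorithm.

```python
from itertools import combinations


def is_forest(vertices, edges):
    """Return True iff the simple graph (vertices, edges) contains no cycle."""
    parent = {v: v for v in vertices}

    def find(v):
        # follow parent pointers to the root, halving the path on the way
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for a, b in edges:
        ra, rb = find(a), find(b)
        if ra == rb:  # a and b are already connected, so (a, b) closes a cycle
            return False
        parent[ra] = rb
    return True


def min_fvs(vertices, edges):
    """Smallest X such that G - X has no cycle (exhaustive search, tiny graphs only)."""
    for size in range(len(vertices) + 1):
        for X in combinations(sorted(vertices), size):
            removed = set(X)
            rest = [v for v in vertices if v not in removed]
            kept = [(a, b) for a, b in edges if a not in removed and b not in removed]
            if is_forest(rest, kept):
                return removed
```

For example, the complete graph on four vertices needs two deleted vertices, while a 5-cycle needs only one.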

1.2 Our Results

Let a graph G and a non-negative integer k be the inputs to the graph problems we consider. Notice that for \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion, \(K \ge k\). We obtain a range of streaming algorithms as well as lower bounds on streaming complexity for the problems we consider. Informally, for a streaming model \(\mathcal {M}\) and a parameterized problem \(\Pi \), if there is a p-pass randomized streaming algorithm for \(\Pi \) that uses \(\mathcal {O}(\ell )\) space, then we say that \(\Pi \) is \((\mathcal {M},\ell ,p)\)-streamable. Similarly, if there is no p-pass algorithm using \(o(\ell )\) bits of storage, then \(\Pi \) is said to be \((\mathcal {M},\ell ,p)\)-hard. For formal definitions, please refer to Section 2. When we omit p, we are considering one pass over the input stream. The highlights of our results concern the \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion problems.

Theorem 1.1

Consider \(\mathcal {F}\)-Subgraph deletion in the Al model. Parameterized by solution size k, \(\mathcal {F}\)-Subgraph deletion is \(({\textsc {Al}},n \log n)\)-hard. However, when parameterized by vertex cover K, \(\mathcal {F}\)-Subgraph deletion is \(({\textsc {Al}}, \Delta (\mathcal {F})^2 \cdot K^{\Delta (\mathcal {F})+1} )\)-streamable. Here \(\Delta (\mathcal {F})\) is the maximum degree of any graph in \(\mathcal {F}\).

The above theorem is in contrast to the results shown in [7]. First, we would like to point out that, to the best of our knowledge, these are the first hardness results in the Al model. The results in [7] showed that \(\mathcal {F}\)-Subgraph deletion is \(({\textsc {Ea}},\Omega (n))\)-hard. A hardness result in the Al model implies one in the Ea model (see Section 2). Thus, our result (see Theorem 5.1) implies a stronger lower bound for \(\mathcal {F}\)-Subgraph deletion, in particular in the Ea model. On the positive side, we show that \(\mathcal {F}\)-Subgraph deletion parameterized by the vertex cover size K is \(\left( {\textsc {Al}},\Delta (\mathcal {F})^2 \cdot K^{\Delta (\mathcal {F})+1}\right) \)-streamable (see Theorem 4.1).

Our hardness results are obtained by reductions from well-known problems in communication complexity, namely \(\text{ Index}_n\), \(\text{ Disj}_n\) and \(\text{ Perm}_n\) (see Section 5.1 for details). On the algorithmic side, one of the main technical contributions of this paper is the introduction of the Common Neighbor problem, which plays a crucial role in the design of our streaming algorithms. We show that \(\mathcal {F}\)-Subgraph deletion and many of the other problems we consider, like \(\mathcal {F}\)-Minor deletion, parameterized by vertex cover size K, share a unifying structure that can be handled via Common Neighbor when the graph is streamed in the Al model. In the Common Neighbor problem, we are given two parameters d and \(\ell \), called the degree parameter and the common neighbor parameter, respectively. The objective is to obtain from the input graph G a subgraph H that contains the set V(M) of vertices of a maximal matching M (computed during the stream), all edges of G between vertices in V(M), and some further vertices and edges, such that every \(S\subseteq V(M)\) with \(\left| S \right| \le d\) has enough common neighbors in H. Using structural properties of such a subgraph, called a common neighbor subgraph, we show that it is enough to solve \(\mathcal {F}\)-Subgraph deletion on the common neighbor subgraph. Similar algorithmic and lower bound results can be obtained for \(\mathcal {F}\)-Minor deletion. The following theorem can be proven using Theorem 4.4 in Section 4 and Theorem 5.1 in Section 5.

Theorem 1.2

Consider \(\mathcal {F}\)-Minor deletion in the Al model. Parameterized by solution size k, \(\mathcal {F}\)-Minor deletion is \(({\textsc {Al}},n \log n)\)-hard. However, when parameterized by vertex cover K, \(\mathcal {F}\)-Minor deletion is \(({\textsc {Al}}, \Delta (\mathcal {F})^2 \cdot K^{\Delta (\mathcal {F})+1} )\)-streamable. Here \(\Delta (\mathcal {F})\) is the maximum degree of any graph in \(\mathcal {F}\).

Though we have stated the main algorithmic and lower bound results in the above theorems, we also obtain several other algorithmic and lower bound results in the different streaming models. The full list of results is summarized in Table 1. To appreciate the full strength of our contribution, we refer the reader to Section 2 for the relations between the different streaming models and the notions of hardness and streamability.

1.3 Related Work

Problems in class P have been extensively studied in streaming complexity [25] in the last decade. Recently, there has been a lot of interest in studying streaming complexity of NP-hard problems like Hitting Set, Set Cover, Max Cut and Max CSP [1, 19, 20]. Structural parameters have been considered to study Matching in streaming [3, 7, 11, 14, 26, 27]. Fafianie and Kratsch [16] were the first to study parameterized streaming complexity of NP-hard problems like d-Hitting Set and Edge Dominating Set in graphs. Chitnis et al. [6,7,8] developed a sampling technique to design efficient parameterized streaming algorithms for promised variants of Vertex Cover, d-Hitting Set problem, b-Matching etc. They also proved lower bounds for problems like \(\mathcal {G}\)-Free Deletion, \(\mathcal {G}\)-Editing, Cluster Vertex Deletion etc. [7].

1.4 Organisation of the Paper

Section 2 contains preliminary definitions and notation. The algorithm for Common Neighbor is given in Section 3, and the algorithms for \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion are presented in Section 4. The lower bound proofs are given in Section 5, and we conclude the paper in Section 6. Appendix A contains the formal definitions of all the problems: \(\mathcal {F}\)-Subgraph deletion, \(\mathcal {F}\)-Minor deletion, FVS, ECT, OCT, TD, and Common Neighbor.

2 Preliminaries, Models and Relationship between Models

In this section we state formally the models of streaming algorithms we use in this paper, relationship between them and some preliminary notations that we make use of.

2.1 Streaming Models

A promising approach to problems on large graphs is the study of streaming algorithms, where a compact sketch of the subgraph whose edges have been streamed (revealed) so far is stored and computations are done on this sketch. Algorithms that can access the sequence of edges of the input graph p times, in the same order, are called p-pass streaming algorithms. For simplicity, we refer to 1-pass streaming algorithms as streaming algorithms. The space used by a (p-pass) streaming algorithm is called the streaming complexity of the algorithm. The algorithmic model for streaming graphs is determined by the way the graph is revealed. Streaming algorithms for graph problems are usually studied in the following models [9, 25, 28]. In the following discussion, V(G) and E(G) denote the vertex set and edge set, respectively, of the graph G, which has n vertices.

(i) Edge Arrival (Ea) model: The stream consists of the edges of G in an arbitrary order.

(ii) Dynamic Edge Arrival (Dea) model: Each element of the input stream is a pair \((e,\text{ state})\), where \(e \in E(G)\) and \(\text{ state } \in \{\text{ insert, } \text{ delete }\}\) describes whether e is being inserted into or deleted from the current graph.

(iii) Vertex Arrival (Va) model: The vertices of V(G) are exposed in an arbitrary order. After a vertex v is exposed, all the edges between v and the already exposed neighbors of v are revealed. These edges are revealed one by one in an arbitrary order.

(iv) Adjacency List (Al) model: The vertices of V(G) are exposed in an arbitrary order. When a vertex v is exposed, all the edges incident to v are revealed one by one in an arbitrary order. Note that in this model each edge is exposed twice, once for the exposure of each endpoint.
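The following Python sketch (our illustration; the function names are hypothetical) produces the streams that the four models above would deliver for a small undirected graph stored as adjacency sets. It fixes a given vertex order and, within each exposure, increasing neighbor order, in place of the arbitrary orders the models allow. It makes visible that a Va stream reveals each edge once, when its later endpoint is exposed, whereas an Al stream reveals each edge twice; for Dea, `replay_dea` simply applies insertions and deletions to recover the current graph.

```python
def ea_stream(edges):
    """Ea: every edge appears exactly once, here in the given order."""
    return [tuple(e) for e in edges]


def va_stream(order, adj):
    """Va: exposing v reveals the edges from v to neighbors exposed earlier."""
    exposed, stream = set(), []
    for v in order:
        stream.extend((v, w) for w in sorted(adj[v]) if w in exposed)
        exposed.add(v)
    return stream


def al_stream(order, adj):
    """Al: exposing v reveals every edge incident to v, so each edge appears twice."""
    return [(v, w) for v in order for w in sorted(adj[v])]


def replay_dea(stream):
    """Dea: apply (edge, state) pairs in order and return the current edge set."""
    current = set()
    for (a, b), state in stream:
        if state == "insert":
            current.add(frozenset((a, b)))
        elif state == "delete":
            current.discard(frozenset((a, b)))
    return current
```

For the triangle `{1: {2, 3}, 2: {1, 3}, 3: {1, 2}}` with order `[1, 2, 3]`, `va_stream` yields three edges and `al_stream` yields six.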

2.2 Streamability and Hardness

Let \(\Pi \) be a parameterized graph problem that takes as input a graph on n vertices and a parameter k. Let \(f:\mathbb {N}\times \mathbb {N} \rightarrow \mathbb {R}\) be a computable function. For a model \(\mathcal {M}\in \{{{\textsc {Dea}}, {\textsc {Ea}}, {\textsc {Va}}, {\textsc {Al}}}\}\), whenever we say that an algorithm \(\mathcal {A}\) solves \(\Pi \) with space (complexity) f(n, k) in model \(\mathcal {M}\), we mean that \(\mathcal {A}\) is a randomized algorithm that, for any input instance of \(\Pi \) in model \(\mathcal {M}\), gives the correct output with probability at least 2/3 and has streaming complexity f(n, k).

Definition 2.1

A parameterized graph problem \(\Pi \), that takes an n-vertex graph and a parameter k as input, is \(\Omega (f)\) p-pass hard in the Edge Arrival model, or in short \(\Pi \) is \(({\textsc {Ea}},f,p)\)-hard, if there does not exist any p-pass streaming algorithm of space complexity \(o(f(n,k))\) bits that can solve \(\Pi \) in the Edge Arrival model.

Analogously, \(({\textsc {Dea}},f,p)\)-hard, \(({\textsc {Va}},f,p)\)-hard and \(({\textsc {Al}},f,p)\)-hard are defined.

Definition 2.2

A parameterized graph problem \(\Pi \), that takes an n-vertex graph and a parameter k as input, is \(\mathcal {O}(f)\) p-pass streamable in the Edge Arrival model, or in short \(\Pi \) is \(({\textsc {Ea}},f,p)\)-streamable, if there exists a p-pass streaming algorithm of space complexity \(\mathcal {O}(f(n,k))\) words that can solve \(\Pi \) in the Edge Arrival model.

\(({\textsc {Dea}},f,p)\)-streamable, \(({\textsc {Va}},f,p)\)-streamable and \(({\textsc {Al}},f,p)\)-streamable are defined analogously. For simplicity, we refer to \((\mathcal {M},f,1)\)-hard and \((\mathcal {M},f,1)\)-streamable as \((\mathcal {M},f)\)-hard and \((\mathcal {M},f)\)-streamable, respectively, where \(\mathcal {M}\in \{{\textsc {Dea}}, {\textsc {Ea}}, {\textsc {Va}}, {\textsc {Al}} \}\).

Definition 2.3

Let \(\mathcal {M}_1,\mathcal {M}_2\in \{{{\textsc {Dea}},{\textsc {Ea}},{\textsc {Va}},{\textsc {Al}}}\}\) be two streaming models, \(f:\mathbb {N}\times \mathbb {N}\rightarrow \mathbb {R}\) be a computable function, and \(p \in \mathbb {N}\).

(i) If for any parameterized graph problem \(\Pi \), \((\mathcal {M}_1,f,p)\)-hardness of \(\Pi \) implies \((\mathcal {M}_2,f,p)\)-hardness of \(\Pi \), then we say \(\mathcal {M}_1 \le _h \mathcal {M}_2\).

(ii) If for any parameterized graph problem \(\Pi \), \((\mathcal {M}_1,f,p)\)-streamability of \(\Pi \) implies \((\mathcal {M}_2,f,p)\)-streamability of \(\Pi \), then we say \(\mathcal {M}_1 \le _s \mathcal {M}_2\).

Now, from Definitions 2.1, 2.2 and 2.3, we have the following Observation.

Observation 2.4

\({{\textsc {Al}}} \le _h {{\textsc {Ea}}} \le _h {{\textsc {Dea}}}\); \( {{\textsc {Va}}} \le _h {{\textsc {Ea}}} \le _h {{\textsc {Dea}}}\); \({{\textsc {Dea}}} \le _s {{\textsc {Ea}}} \le _s {{\textsc {Va}}} \); \({{\textsc {Dea}}} \le _s {{\textsc {Ea}}} \le _s {{\textsc {Al}}}\).

This observation has the following implication. If we prove a lower (upper) bound result for some problem \(\Pi \) in model \(\mathcal {M}\), then it also holds in any model \(\mathcal {M}'\) such that \(\mathcal {M}\le _h \mathcal {M}'\) (\(\mathcal {M}\le _s \mathcal {M}'\)). For example, if we prove a lower bound result in the Al or Va model, it also holds in the Ea and Dea models; if we prove an upper bound result in the Dea model, it also holds in the Ea, Va and Al models. In general, there is no direct connection between Al and Va, in which the vertices are exposed in an arbitrary order. However, we can say the following when the vertices arrive in a fixed (known) order.

Observation 2.5

Let \({{\textsc {Al}}}'\) (\({{\textsc {Va}}}'\)) be the restricted version of \({{\textsc {Al}}}\) (\({{\textsc {Va}}}\)), where the vertices are exposed in a fixed (known) order. Then \({{\textsc {Al}}}' \le _h {{\textsc {Va}}}'\) and \({{\textsc {Va}}}' \le _s {{\textsc {Al}}}'\).
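One way to see why \({{\textsc {Va}}}' \le _s {{\textsc {Al}}}'\) is that, when the exposure order is known, an \({{\textsc {Al}}}'\) stream can be filtered on the fly into the \({{\textsc {Va}}}'\) stream for the same order: an edge (v, w) revealed at the exposure of v is kept exactly when w was exposed before v. The Python sketch below is our illustration; `va_from_al` and `pos` are hypothetical names, and we assume the known order is available through a position function `pos` (by default the identity, for vertices exposed in increasing label order), so that the filter needs no memory beyond the current edge. Any \({{\textsc {Va}}}'\) algorithm could then be run on its output.

```python
def va_from_al(al_stream, pos=lambda v: v):
    """Filter an Al' stream into the Va' stream for the same known vertex order.

    Each element (v, w) of al_stream is an edge revealed while v is exposed.
    pos(v) is the (assumed known) position of v in the exposure order.
    """
    for v, w in al_stream:
        # Va' reveals (v, w) at the exposure of v iff w was exposed earlier
        if pos(w) < pos(v):
            yield (v, w)
```

For instance, with the known order c, a, b, the Al' stream `(c,a), (a,c), (a,b), (b,a)` is filtered to `(a,c), (b,a)`, which is exactly the Va' stream for that order.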

The following remark explains what the relations between the models discussed in this section imply for the results listed in Table 1.

Remark 1

In Table 1, the lower bound results in Va and Al hold even if we know the order in which vertices are exposed, and the upper bound results hold even if the vertices arrive in an arbitrary order. In general, a lower bound in the Al model for some problem \(\Pi \) does not imply a lower bound in the Va model for \(\Pi \). However, our lower bound proofs in the Al model hold even if we know the order in which vertices are exposed. So, by Observation 2.5, the lower bounds for FVS, ECT and OCT in the Al model imply the corresponding lower bounds in the Va model. By Observations 2.4 and 2.5, it therefore suffices to prove a subset of the algorithmic and lower bound results listed in Table 1.

2.3 General Notation

The set \(\{1,\ldots ,n\}\) is denoted as [n]. Without loss of generality, we assume that the number n of vertices in the graph is a power of 2. Given an integer \(i \in [n]\) and \(r \in [\log _2 n]\), \( \text{ bit }(i,r)\) denotes the \(r \text{-th }\) bit in the bit expansion of i. The union of two graphs \(G_1\) and \(G_2\) with \(V(G_1)=V(G_2)\) is \(G_1\cup G_2\), where \(V(G_1\cup G_2)=V(G_1)=V(G_2)\) and \(E(G_1 \cup G_2)=E(G_1)\cup E(G_2)\). For \(X \subseteq V(G)\), \(G \setminus X\) is the subgraph of G induced by \(V(G) \setminus X\). The degree of a vertex \(u \in V(G)\) is denoted by \(\text{ deg}_G(u)\). The maximum and average degrees of the vertices in G are denoted as \(\Delta (G)\) and \(\Delta _{av}(G)\), respectively. For a family of graphs \(\mathcal {F}\), \(\Delta (\mathcal {F})=\max \limits _{F \in \mathcal {F}} \Delta (F)\). A graph F is a subgraph of a graph G if \(V(F)\subseteq V(G)\) and \(E(F)\subseteq E(G)\), where every edge in E(F) has both endpoints in V(F). A graph F is said to be a minor of a graph G if F can be obtained from G by deleting edges and vertices and by contracting edges. The neighborhood of a vertex \(v \in V(G)\) is denoted by \(N_G(v)\). For \(S \subseteq V(G)\), \(\mathbf{{N_G^{\cap }(S)}}\) denotes the set of vertices in \(V(G) \setminus S\) that are neighbors of every vertex in S. A vertex \(v \in \mathbf{{N_G^{\cap }(S)}}\) is said to be a common neighbor of S in G. The size of a minimum vertex cover of G is denoted by \(\text{ VC }(G)\). A cycle on the sequence of vertices \(v_1,\ldots ,v_n\) is denoted as \(\mathcal {C}(v_1,\ldots ,v_n)\). For a matching M in G, the set of vertices covered by M is denoted by V(M). \(C_t\) denotes a cycle of length t, and \(P_t\) denotes a path on t vertices.

3 Common Neighbor Problem (useful subroutine in our algorithms)

In Section 4, we show that \(\mathcal {F}\)-Subgraph deletion parameterized by the vertex cover size K of the input graph is \(({\textsc {Al}}, \Delta (\mathcal {F})^2 \cdot K^{\Delta (\mathcal {F})+1})\)-streamable. This implies that FVS, ECT, OCT and TD parameterized by vertex cover size K are \(({\textsc {Al}},K^3)\)-streamable, since \(\Delta (\mathcal {F}) = 2\) for each of these cycle families. This complements Theorems 5.1 and 5.2, which show that these problems parameterized by vertex cover size K are \(({\textsc {Va}},n/p,p)\)-hard. Note that by Observation 2.4, these problems parameterized by vertex cover size K are also \((\mathcal {M},n/p,p)\)-hard for \(\mathcal {M}\in \{{{\textsc {Ea}},{\textsc {Dea}}}\}\). Finally, we design an algorithm for \(\mathcal {F}\)-Minor deletion that is inspired by the algorithm for \(\mathcal {F}\)-Subgraph deletion.

3.1 Common Neighbor Problem

For a graph G and parameters \(d, \ell \in \mathbb {N}\), a graph H is called a common neighbor subgraph for G if

(i) \(V(H) \subseteq V(G)\) and \(E(H) \subseteq E(G)\), and H has no isolated vertex;

(ii) E(H) contains the edges of a maximal matching M of G together with all edges of G whose endpoints both lie in V(M), and for every subset \(S \subseteq V(M)\) with \(\left| S \right| \le d\):

- \(N^{\cap }_H(S) \setminus V(M) = N_ G^{\cap }(S) \setminus V(M)\) if \(\left| N_ G^{\cap }(S) \setminus V(M) \right| < \ell \);

- \(\left| {N^{\cap }_H(S)} \setminus V(M) \right| {\ge } \ell \) if \(\left| N_ G^{\cap }(S) \setminus V(M) \right| \ge \ell \).

Informally, a common neighbor subgraph H of G is obtained by augmenting the vertex and edge sets of a maximal matching M of G: for each subset S of at most d vertices in V(M), H contains edges to sufficiently many common neighbors of S in G. The parameters \(d \le K\) and \(\ell \) are referred to as the degree parameter and the common neighbor parameter, respectively.
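The definition can be checked mechanically. The Python sketch below (our illustration; all names are hypothetical) tests whether an edge set H is a common neighbor subgraph of a simple graph G, given as adjacency sets, for a given matching M and parameters d and \(\ell \). It checks that H is a subgraph of G, that M is maximal and all edges of G inside V(M) are kept, and then enumerates every \(S \subseteq V(M)\) with \(1 \le \left| S \right| \le d\), comparing the common neighbors of S outside V(M) in G and in H. Because H is given as an edge set, it has no isolated vertex by construction; M is assumed to be a matching of G. The enumeration is exponential in d and is meant only to make the conditions concrete.

```python
from itertools import combinations


def common_neighbors(adj, S, excluded):
    """Vertices not in `excluded` that are adjacent to every vertex of S."""
    S = list(S)
    result = set(adj.get(S[0], set()))
    for s in S[1:]:
        result &= adj.get(s, set())
    return result - set(excluded)


def is_common_neighbor_subgraph(G_adj, H_edges, M, d, ell):
    """Check conditions (i) and (ii) for H (a set of frozenset edges)."""
    VM = {v for e in M for v in e}
    H_adj = {}
    for e in H_edges:
        a, b = tuple(e)
        if b not in G_adj.get(a, set()):  # H must be a subgraph of G
            return False
        H_adj.setdefault(a, set()).add(b)
        H_adj.setdefault(b, set()).add(a)
    for a in G_adj:
        for b in G_adj[a]:
            if a not in VM and b not in VM:  # edge untouched by M: M not maximal
                return False
            if a in VM and b in VM and frozenset((a, b)) not in H_edges:
                return False  # every edge of G inside V(M) must be in H
    for size in range(1, d + 1):
        for S in combinations(sorted(VM), size):
            in_G = common_neighbors(G_adj, S, VM)
            in_H = common_neighbors(H_adj, S, VM)
            if len(in_G) < ell and in_H != in_G:
                return False
            if len(in_G) >= ell and len(in_H) < ell:
                return False
    return True
```

For example, for G with edges 12, 13, 14, 15, 16, 23, 24, 25 and \(M=\{(1,2)\}\), the subgraph with edges 12, 13, 23, 14, 24 passes for \(d=\ell =2\), but dropping edge 24 fails, since vertex 2 then keeps only one stored common neighbor.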

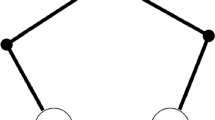

Figure 1. Suppose the vertices are exposed in increasing order of their indices, and when \(v_i\) is exposed, edge \(\{v_i,v_j\}\) arrives before \(\{v_i,v_{j'}\}\) if and only if \(j < j'\). (a) A graph G, where \(M=\{(v_1,v_2),(v_3,v_4)\}\) is the maximal matching found by the streaming algorithm (Algorithm 1). (b) The corresponding common neighbor subgraph H with degree parameter \(d=2\) and common neighbor parameter \(\ell =2\).

The Common Neighbor problem is formally defined as follows. It takes as input a graph G with \(\text{ VC }(G) \le K\), a degree parameter \(d \le K\) and a common neighbor parameter \(\ell \), and outputs a common neighbor subgraph of G. Our streaming algorithm for Common Neighbor parameterized by vertex cover size K is given in Algorithm 1.

Observe that G is a common neighbor subgraph of itself for any \(d ,\ell \) with \(1 \le d,\ell <n\). However, when the vertex cover size of the input graph is at most K, our algorithm (Algorithm 1) always generates a common neighbor subgraph with \(\mathcal {O}(K^2+ K^{d}\cdot d \ell )\) edges. Note that a graph G can have multiple common neighbor subgraphs with the given parameters d and \(\ell \). However, if the stream order is fixed, then the common neighbor subgraph generated by our algorithm is fixed. Figure 1 shows an illustration of a common neighbor subgraph.

Lemma 3.1

Common Neighbor, with a common neighbor parameter \(\ell \) and parameterized by vertex cover size K, is \(({\textsc {Al}},K^2+ K^d\cdot d \ell )\)-streamable.

Proof

We start by initializing the matching M to \(\emptyset \) and greedily construct a matching M of G that is maximal under inclusion; see Algorithm 1. As \(\text{ VC }(G) \le K\), we have \(\left| M \right| \le K\). Recall that we are considering the Al model here. Let \(M_u\) and \(M'_u\) be the current matchings just before and just after the exposure of the vertex u (including the processing of the edges incident to u), respectively. Note that, by construction, these partial matchings \(M_u\) and \(M'_u\) are maximal matchings in the subgraph revealed so far. The following claim will be useful for the proof.

Claim 3.2

Let \(u \in \mathbf{{N_ G^{\cap }(S)}} \setminus V(M)\) for some \(S \subseteq V(M)\). Then \(S \subseteq V(M_u)\), that is, u is exposed after all the vertices in S have been added to V(M).

Proof

Observe that if there exists \(x \in S\) such that \(x \notin V(M_u)\), then after u is exposed, there exists \(y \in N_G(u)\) such that (u, y) is present in \(M_u'\). This implies \(u \in V(M_u') \subseteq V(M)\), which is a contradiction to \(u \in \mathbf{{N_ G^{\cap }(S)}} \setminus V(M)\).\(\square \)

Now, we describe what our algorithm does when a vertex u is exposed. The complete pseudocode of our algorithm for Common Neighbor is given in Algorithm 1. When a vertex u is exposed in the stream, we try to extend the matching \(M_u\) with an edge incident to u. We also store all edges of the form (u, x) with \(x \in V(M_u)\) in a temporary memory T. As \(\left| M_u \right| \le K\), T holds \(\mathcal {O}(K)\) edges. There are two possibilities.

- If \(u \in V(M_u')\), that is, either \(u \in V(M_u)\) or the matching \(M_u\) is extended by an edge incident to u, then we add all the edges stored in T (together with the new matching edge, if any) to E(H).

- Otherwise, for each \(S \subseteq V(M_u)\) such that \(\left| S \right| \le d\) and \(S \subseteq N_G(u)\), we check whether fewer than \( \ell \) common neighbors of S have already been stored in H. If so, we add all edges of the form (u, z) with \(z \in S\) to E(H); otherwise, we do nothing for S.

In both cases, we then reset T to \(\emptyset \).
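The processing of each exposure can be sketched in Python as follows. This is our reconstruction from the description above, not the authors' Algorithm 1, and the names are hypothetical. For readability it recounts the stored common neighbors of each S by scanning the unmatched vertices already kept in H, rather than maintaining space-efficient counters, and it evaluates all sets S against the counts from before u is processed. It relies on the fact that a vertex left unmatched after its own exposure has all its neighbors in V(M) and is never matched later.

```python
from itertools import combinations


def common_neighbor_subgraph(al_stream, d, ell):
    """One-pass sketch of the Common Neighbor routine in the Al model.

    al_stream: (u, neighbors_of_u) pairs in exposure order.
    d: degree parameter; ell: common neighbor parameter.
    Returns (H, mate): the edges of H as frozensets and the matching as a dict.
    """
    mate = {}  # greedy maximal matching M, stored as vertex -> partner
    H = set()  # edge set of the common neighbor subgraph H
    kept = {}  # unmatched vertex w kept in H -> its H-neighbors (all in V(M))

    for u, nbrs in al_stream:
        T = []  # temporary memory: neighbors of u already in V(M_u)
        for y in nbrs:
            if y in mate:
                T.append(y)
            elif u not in mate:
                # both endpoints unmatched: extend M with (u, y)
                mate[u], mate[y] = y, u
                H.add(frozenset((u, y)))
        if u in mate:
            # u is in V(M'_u): keep all edges from u into V(M_u)
            H.update(frozenset((u, x)) for x in T)
        else:
            # u stays unmatched, so every neighbor of u is already in V(M)
            chosen = set()
            for size in range(1, d + 1):
                for combo in combinations(sorted(T), size):
                    S = set(combo)
                    stored = sum(1 for nb in kept.values() if S <= nb)
                    if stored < ell:  # S still lacks common neighbors in H
                        chosen |= S
            if chosen:
                kept[u] = chosen
                H.update(frozenset((u, z)) for z in chosen)
        # T is discarded here, i.e. reset to the empty set
    return H, mate
```

On the graph with edges 12, 13, 14, 15, 16, 23, 24, 25 streamed in the order 1, ..., 6 with \(d=\ell =2\), the sketch matches 1 with 2, keeps 3 and 4 as common neighbors of \(\{1,2\}\), and discards 5 and 6, because every relevant S already has \(\ell = 2\) stored common neighbors when they arrive.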

Now we argue about the space complexity of the algorithm. The edges of H are of two types: edges between two vertices of V(M) are of the first type, and edges with one endpoint in V(M) and the other in \(V(H)\setminus V(M)\) are of the second type. Since \(\left| M \right| \le K\), we have \(\left| V(M) \right| \le 2K\), so there are \(\mathcal {O}(K^2)\) edges of the first type. To bound the number of edges of the second type, note that each such edge e (along with at most \(d-1\) other edges) is added in Line 18 of Algorithm 1 only when there exists some \(S_e \subseteq V(M)\) with \(\left| S_e \right| \le d\) such that \(S_e\) has fewer than \(\ell \) common neighbors in \(N^\cap _H(S_e) \setminus V(M)\). If we charge edge e to \(S_e\), then the number of edges charged to \(S_e\) is at most \(d \cdot \ell \). So, the total number of edges of the second type is \(\mathcal {O}(K^d \cdot d \ell )\). Putting things together, the space complexity of Algorithm 1 is \(\mathcal {O}(K^2 + K^d \cdot d \ell )\).\(\square \)
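For orientation, instantiating the bound of Lemma 3.1 with \(d = \Delta (\mathcal {F})\) and \(\ell = (d+2)K\), the parameters used in Lemma 3.3, recovers the space bound stated in Theorem 1.1. The following routine calculation is our addition and assumes \(d \ge 1\) and \(K \ge 1\):

\[
\begin{aligned}
\mathcal{O}\bigl(K^2 + K^{d}\cdot d\,\ell\bigr)
&= \mathcal{O}\bigl(K^2 + K^{d}\cdot d\,(d+2)\,K\bigr)
 = \mathcal{O}\bigl(K^2 + d(d+2)\,K^{d+1}\bigr)\\
&= \mathcal{O}\bigl(d^2\,K^{d+1}\bigr)
 = \mathcal{O}\bigl(\Delta(\mathcal{F})^2 \cdot K^{\Delta(\mathcal{F})+1}\bigr),
\end{aligned}
\]

since \(K^2 \le K^{d+1}\) and \(d(d+2) \le 3d^2\) for \(d, K \ge 1\). For FVS, ECT, OCT and TD we have \(\Delta (\mathcal {F}) = 2\), which gives \(\mathcal {O}(K^3)\).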

We call the algorithm described in the proof of Lemma 3.1 and given in Algorithm 1 \(\mathcal {A}_{cn}\). Lemma 3.3 explains why the common neighbor subgraph of G obtained by \(\mathcal {A}_{cn}\) is important for the design and analysis of streaming algorithms for \(\mathcal {F}\)-Subgraph deletion. Lemma 3.3 is the central lemma of the paper, and it suggests that common neighbor subgraphs may be of independent interest in the design of other parameterized (streaming) algorithms.

Lemma 3.3

Let G be a graph with \(\text{ VC }(G) \le K\) and let F be a connected graph with \(\Delta (F) \le d \le K\). Let H be the common neighbor subgraph of G with degree parameter d and common neighbor parameter \((d+2) K\), obtained by running the algorithm \(\mathcal {A}_{cn}\). Then for any subset \(X \subseteq V(H)\) with \(\vert X \vert \le K\), \(G\setminus X\) contains a subgraph isomorphic to F if and only if \(H\setminus X\) contains a subgraph isomorphic to F.

Proof

Let M be the maximal matching of G that is contained in the common neighbor subgraph H obtained by algorithm \(\mathcal {A}_{cn}\). First, observe that since \(\text{ VC }(G) \le K\), any subgraph of G isomorphic to F has size at most dK. Now consider a subset \(X \subseteq V(H)\) such that \(\vert X \vert \le K\). Suppose that \(H \setminus X\) contains a subgraph \(F'\) isomorphic to F. Since H is a subgraph of G, \(F'\) is also a subgraph of \(G \setminus X\), so \(G \setminus X\) contains a copy of F and we are done.

Conversely, suppose F is a subgraph of \(G \setminus X\) that is not a subgraph of \(H \setminus X\). We show that there is a subgraph \(F'\) of \(H \setminus X\) that is isomorphic to F. Let \(e_1,e_2,\ldots ,e_s\) be an arbitrary ordering of the edges in \((E(G) \setminus E(H)) \cap E(F)\); note that \(s \le \left| E(F) \right| \). We describe an iterative subroutine that converts F into \(F'\) in s steps, that is, through a sequence of isomorphic subgraphs \(F_0,F_1,\ldots ,F_s\) of \(G \setminus X\) such that \(F_0=F\) and \(F_s = F'\).

The routine maintains the following invariant. Just before step \(i \in [s]\), we have a subgraph \(F_{i-1}\) that is isomorphic to F and such that \((E(G) \setminus E(H)) \cap E(F_{i-1}) \subseteq \{e_{i},e_{i+1},\ldots ,e_s\}\). In step i, we convert \(F_{i-1}\) into a subgraph \(F_i\) isomorphic to \(F_{i-1}\). Just after step i, \(F_{i}\) is isomorphic to F and \((E(G) \setminus E(H)) \cap E(F_i) \subseteq \{e_{i+1},e_{i+2},\ldots ,e_s\}\). In particular, at the end, \(F_s = F'\) is a subgraph of both G and H.

Now consider the instance just before step i. We show how to obtain \(F_{i}\) from \(F_{i-1}\). Let \(e_{i}=\{u,v\}\). Note that \(e_i \notin E(H)\). By the maximality of M in G, \(\vert \{u,v\} \cap V(M) \vert \ge 1\). From the construction of the common neighbor subgraph H, if both u and v are in V(M), then \(e_i \in E(H)\). So, exactly one of u and v is in V(M). Without loss of generality, let \(u \in V(M)\). Observe that v is a common neighbor of \(N_{G}(v)\) in G. By the maximality of M, each vertex in \(N_G(v)\) is in V(M). Now, as \(\{u,v\} \notin E(H)\), v is not a common neighbor of \(N_F(v) \subseteq N_G(v)\) in H. Since \(\left| N_F(v) \right| \le d\), the construction of the common neighbor subgraph then guarantees that H contains at least \((d+2) K\) common neighbors of all the vertices in \(N_F(v)\). Of these common neighbors, at most \((d+1)K\) can be vertices in \(X \cup V(F_{i-1})\). Thus, there is a vertex \(v' \notin X \cup V(F_{i-1})\) that is a common neighbor of \(N_F(v)\) in H. Let \(F_{i}\) be the subgraph obtained by deleting vertex v from \(F_{i-1}\) and then adding vertex \(v'\) along with the edges between \(v'\) and \(N_F(v)\). Observe that \(F_{i}\) is isomorphic to \(F_{i-1}\). Moreover, \((E(G) \setminus E(H)) \cap E(F_{i}) \subseteq \{e_{i+1},e_{i+2},\ldots ,e_s\}\). Repeating this step for \(i=1,\ldots ,s\) yields a subgraph \(F' = F_s\) of \(H\setminus X\) that is isomorphic to the subgraph F of \(G \setminus X\).\(\square \)
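The pigeonhole step of the exchange argument can be mirrored in a few lines. The sketch below is a hypothetical helper, not code from the paper: given the adjacency sets of H, the set \(N_F(v)\), the deleted set X, the vertices of the current copy \(F_{i-1}\) and V(M), it returns a common neighbor \(v'\) of \(N_F(v)\) in H lying outside \(X \cup V(F_{i-1}) \cup V(M)\). In the toy test, d = 2 and K = 2, so H has \((d+2)K = 8\) such common neighbors while at most \((d+1)K = 6\) of them are blocked, and a replacement exists.

```python
def replacement_vertex(h_adj, nbrs_in_f, X, copy_vertices, vm):
    """Hypothetical helper for one exchange step in the proof of Lemma 3.3.

    h_adj: adjacency sets of H; nbrs_in_f: N_F(v), a non-empty subset of V(M);
    X: deleted vertices; copy_vertices: V(F_{i-1}); vm: V(M).
    Returns a common neighbor v' of nbrs_in_f in H outside V(M), X and the
    current copy, or None if every such common neighbor is blocked.
    """
    common = set.intersection(*(set(h_adj[x]) for x in nbrs_in_f)) - set(vm)
    free = sorted(common - set(X) - set(copy_vertices))
    return free[0] if free else None
```

Replacing v by the returned \(v'\) and reconnecting \(v'\) to \(N_F(v)\) is exactly the construction of \(F_i\) from \(F_{i-1}\).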

4 Streamability Results for \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion problems

Our results on common neighbor subgraphs lead to the following streamability results for \(\mathcal {F}\)-Subgraph deletion and \(\mathcal {F}\)-Minor deletion.

4.1 Algorithm for \(\mathcal {F}\)-Subgraph deletion

We first discuss the result on \(\mathcal {F}\)-Subgraph deletion, which is stated in the following theorem.

Theorem 4.1

\(\mathcal {F}\)-Subgraph deletion parameterized by vertex cover size K is \(({\textsc {Al}},d^2 \cdot K^{d+1})\)-streamable, where \(d=\Delta (\mathcal {F}) \le K\).

Proof

Let (G, k, K) be an input for \(\mathcal {F}\)-Subgraph deletion, where G is the input graph, \(k \le K\) is the size of the solution of \(\mathcal {F}\)-Subgraph deletion, and the parameter K is at least \(\text{ VC }(G)\).

Now, we describe the streaming algorithm for \(\mathcal {F}\)-Subgraph deletion. First, we run the Common Neighbor streaming algorithm described in Lemma 3.1 (and given in Algorithm 1) with degree parameter d and common neighbor parameter \((d +2) K\), and let the common neighbor subgraph obtained be H. We run a traditional FPT algorithm for \(\mathcal {F}\)-Subgraph deletion [10] on H and output YES if and only if the output on H is YES.

Let us argue the correctness of this algorithm. By Lemma 3.3, for any subset \(X \subseteq V(H)\) with \(\vert X \vert \le K\), \(G\setminus X\) contains some \(F \in \mathcal {F}\) as a subgraph if and only if \(H\setminus X\) contains a subgraph \(F'\) isomorphic to F. In particular, let X be a vertex subset of G of size k. As mentioned before, \(k \le K\). Thus, by Lemma 3.3, X is a solution of \(\mathcal {F}\)-Subgraph deletion in H if and only if X is a solution of \(\mathcal {F}\)-Subgraph deletion in G. This completes the proof of correctness of the streaming algorithm for \(\mathcal {F}\)-Subgraph deletion.

The streaming complexity of our algorithm for \(\mathcal {F}\)-Subgraph deletion is the same as that of algorithm \(\mathcal {A}_{cn}\) from Lemma 3.1 with degree parameter \(d=\Delta (\mathcal {F})\) and common neighbor parameter \(\ell = (d+2)K\). Substituting \(\ell = (d+2)K\) into the bound \(\mathcal {O}(K^2 + K^d \cdot d \ell )\) gives \(\mathcal {O}(K^2 + d(d+2) K^{d+1}) = \mathcal {O}(d^2 \cdot K^{d+1})\), since \(d \ge 1\). Therefore, the streaming complexity of \(\mathcal {F}\)-Subgraph deletion is \(\mathcal {O}(d^2 \cdot K^{d+1})\).\(\square \)

Corollary 4.2

FVS, ECT, OCT and TD parameterized by vertex cover size K are \(({\textsc {Al}},K^3)\)-streamable via deterministic algorithms.
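As a quick check of the bound in Corollary 4.2 (our own arithmetic): each of these problems asks to delete all copies of cycles, either as subgraphs (TD: triangles; ECT and OCT: even and odd cycles) or as minors (FVS: 3-cycles, as noted at the start of Section 5), and every cycle has maximum degree 2. Substituting d = 2 into the bounds of Theorems 4.1 and 4.4 gives

$$ d=\Delta (\mathcal {F})=2 \quad \Longrightarrow \quad \mathcal {O}(d^2 \cdot K^{d+1})=\mathcal {O}(4 \cdot K^{3})=\mathcal {O}(K^3). $$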

4.2 Algorithm for \(\mathcal {F}\)-Minor deletion

Finally, we describe a streaming algorithm for \(\mathcal {F}\)-Minor deletion that works similarly to the one for \(\mathcal {F}\)-Subgraph deletion, using the following proposition; the result is stated in Theorem 4.4.

Proposition 4.3

([15]) Let G be a graph with F as a minor and \(\text{ VC }(G) \le K\). Then there exists a subgraph \(G^*\) of G that has F as a minor such that \(\Delta (G^*) \le \Delta (F)\) and \(\left| V(G^*) \right| \le \left| V(F) \right| +K(\Delta (F)+1) .\)

Theorem 4.4

\(\mathcal {F}\)-Minor deletion parameterized by vertex cover size K is \(({\textsc {Al}},d^2 \cdot K^{d+1})\)-streamable, where \(d=\Delta (\mathcal {F}) \le K\).

Proof

Let (G, k, K) be an input for \(\mathcal {F}\)-Minor deletion, where G is the input graph, k is the size of the solution of \(\mathcal {F}\)-Minor deletion we are looking for, and the parameter K is such that \(\text{ VC }(G) \le K\). Note that \(k \le K\).

Now, we describe the streaming algorithm for \(\mathcal {F}\)-Minor deletion. First, we run the Common Neighbor streaming algorithm described in Lemma 3.1 with degree parameter d and common neighbor parameter \((d +2) K\), and let H be the resulting common neighbor subgraph. We then run a traditional FPT algorithm for \(\mathcal {F}\)-Minor deletion [10] on H and output YES if and only if the output on H is YES.

Let us argue the correctness of this algorithm; that is, we prove the following for any \(F \in \mathcal {F}\) and any \(X \subseteq V(G)\) of size at most K: \(G \setminus X\) contains F as a minor if and only if \(H \setminus X \) contains a graph \(F'\) isomorphic to F as a minor. For the if part, suppose \(H \setminus X\) contains \(F'\) as a minor. Then, since H is a subgraph of G, \(G \setminus X \) also contains \(F'\) as a minor. For the only if part, let \(G \setminus X\) contain F as a minor. By Proposition 4.3, \(G \setminus X\) contains a subgraph \(G^{*}\) such that \(G^*\) contains F as a minor and \(\Delta (G^*) \le \Delta (F) \le d\). Now, Lemma 3.3 implies that \(H \setminus X\) also contains a subgraph \(\hat{G^*}\) that is isomorphic to \(G^*\). Hence, \(H \setminus X\) contains a minor \(F'\) that is isomorphic to F.

The streaming complexity of our algorithm for \(\mathcal {F}\)-Minor deletion is the same as that of algorithm \(\mathcal {A}_{cn}\) from Lemma 3.1 with degree parameter \(d=\Delta (\mathcal {F})\) and common neighbor parameter \((d+2)K\). Therefore, as in the proof of Theorem 4.1, the streaming complexity of \(\mathcal {F}\)-Minor deletion is \(\mathcal {O}({d^2} \cdot K^{d+1})\).\(\square \)

5 Lower Bounds

Before stating the lower bound results summarized in Table 1, we note that a lower bound for Feedback Vertex Set is also a lower bound for \(\mathcal {F}\)-Subgraph deletion (deletion of cycles as subgraphs) and \(\mathcal {F}\)-Minor deletion (deletion of 3-cycles as minors). It therefore suffices to prove the following theorems; the remaining lower bound results follow from Observations 2.4 and 2.5.

Theorem 5.1

Feedback Vertex Set, Even Cycle Transversal and Odd Cycle Transversal are

(I) \(({\textsc {Al}}, n \log n)\)-hard when parameterized by solution size k, even if \(\Delta _{av}(G)=\mathcal {O}(1)\);

(II) \(({\textsc {Al}}, n/p,p)\)-hard when parameterized by solution size k, even if \(\Delta (G)=\mathcal {O}(1)\); and

(III) \(({\textsc {Va}}, n/p,p)\)-hard when parameterized by vertex cover size K, even if \(\Delta _{av}(G)=\mathcal {O}(1)\).

Theorem 5.2

TD is

(I) \(({\textsc {Va}}, n \log n)\)-hard when parameterized by solution size k, even if \(\Delta _{av}(G)=\mathcal {O}(1)\);

(II) \(({\textsc {Va}}, n/p,p)\)-hard when parameterized by solution size k, even if \(\Delta (G)=\mathcal {O}(1)\); and

(III) \(({\textsc {Va}}, n/p,p)\)-hard when parameterized by vertex cover size K, even if \(\Delta _{av}(G)=\mathcal {O}(1)\).

Remark 2

(i) The proofs of part (I) of Theorems 5.1 and 5.2 use the lower bound constructions given in [30]. To the best of our knowledge, these are the first hardness results in the Al model.

(ii) The proofs of parts (II) and (III) of Theorems 5.1 and 5.2 use the lower bound constructions given in [7].

In Section 5.1 we will briefly describe the different results from communication complexity we will be using in this section. In Section 5.2 we will give the details of the proofs of Theorems 5.1 and 5.2.

5.1 Communication Complexity Results

Lower bounds in communication complexity are a standard tool for proving lower bounds on the streaming complexity of problems. In Yao’s two-party communication model, Alice and Bob each get an input, and the objective is to compute a function of their inputs with the minimum number of bits of communication. In one-way communication, only Alice is allowed to send messages and Bob produces the final output, whereas in two-way communication both Alice and Bob can send messages.

Definition 5.3

The one-way (two-way) communication complexity of a problem \(\Pi \) is the minimum number of bits that must be sent by Alice to Bob (exchanged between Alice and Bob) to solve \(\Pi \) on any input with success probability at least 2/3.

The following are fundamental problems in communication complexity; we use them to prove lower bounds on the streaming complexity of the problems considered in this paper.

(i) \(\text{ Index}_n\): Alice gets as input \(\textbf{x} \in \{0,1\}^n\) and Bob has an index \(j \in [n]\). Bob wants to determine whether \(\textbf{x}_j=1\). Formally, \(\text{ Index}_n(\textbf{x}, j) =1\) if \(\textbf{x}_j=1\), and 0 otherwise.

(ii) \(\text{ Disj}_n\): Alice and Bob get inputs \(\textbf{x}, \textbf{y} \in \{0,1\}^n\), respectively. The objective is to decide whether there exists an \(i \in [n]\) such that \(x_i=y_i=1\). Formally, \(\text{ Disj}_n(\textbf{x}, \textbf{y})=0\) if there exists an \(i \in [n]\) such that \(x_i=y_i=1\), and 1 otherwise.

(iii) \(\text{ Perm}_n\) [30]: Alice gets a permutation \(\pi : [n]\rightarrow [n]\) and Bob gets an index \(j \in [n \log n]\). The objective of Bob is to decide the value of \(\text{ Perm}_n(\pi , j)\), defined as the j-th bit in the string of 0’s and 1’s obtained by concatenating the bit expansions of \(\pi (1), \ldots , \pi (n)\). In other words, let \(\Phi :[n \log n] \rightarrow [n] \times [\log n]\) be the bijective function defined as

$$ \Phi (j)=\left( \left\lceil \frac{j}{\log n} \right\rceil , j + \log n -\left\lceil \frac{j}{\log n} \right\rceil \times \log n \right) . $$

For a permutation \(\pi : [n] \rightarrow [n]\), Bob needs to determine the value of the \(\gamma \)-th bit of \(\pi \left( \lceil \frac{j}{\log n} \rceil \right) \), where \(\gamma = j + \log n -\lceil \frac{j}{\log n} \rceil \times \log n\).
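To make the indexing in \(\text{ Perm}_n\) concrete, the following Python sketch implements \(\Phi \) and \(\text{ Perm}_n\) for n a power of 2, using the convention stated in the illustration of Theorem 5.1 (I) that the bit expansion of n consists of \(\log _2 n\) zeros. The function names are hypothetical. It reproduces the n = 4 example used in the figures, where \(\pi (1)=3, \pi (2)=4, \pi (3)=2, \pi (4)=1\) gives the string 11001001.

```python
def bit_expansion(i, n):
    """log2(n)-bit expansion of i in [n] (n a power of 2); n itself maps to all zeros."""
    b = n.bit_length() - 1
    return format(i % n, f"0{b}b")


def phi(j, n):
    """Phi(j) = (ceil(j / log n), j + log n - ceil(j / log n) * log n), 1-indexed."""
    b = n.bit_length() - 1
    psi = -(-j // b)  # ceiling division
    return psi, j + b - psi * b


def concatenated_bits(pi, n):
    """Bit expansions of pi(1), ..., pi(n) concatenated into one string."""
    return "".join(bit_expansion(pi[i], n) for i in range(1, n + 1))


def perm(pi, j, n):
    """Perm_n(pi, j): the gamma-th bit of pi(psi), where (psi, gamma) = Phi(j)."""
    psi, gamma = phi(j, n)
    return int(bit_expansion(pi[psi], n)[gamma - 1])
```

For every j, `perm(pi, j, n)` agrees with the j-th character of `concatenated_bits(pi, n)`, which is exactly the equivalence between the two descriptions of \(\text{ Perm}_n\) given above.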

Proposition 5.4

(i) The one-way communication complexity of \(\text{ Index}_n\) is \(\Omega (n)\).

(ii) The two-way communication complexity of \(\text{ Disj}_n\) is \(\Omega (n)\).

(iii) The one-way communication complexity of \(\text{ Perm}_n\) is \(\Omega (n \log n)\).

A note on reductions from \(\text{ Index}_n\), \(\text{ Disj}_n\) and \(\text{ Perm}_n\): a reduction from a problem \(\Pi _1\) in one-way or two-way communication complexity to a problem \(\Pi _2\) in streaming algorithms typically works as follows. The two players Alice and Bob devise a communication protocol for \(\Pi _1\) that uses a streaming algorithm for \(\Pi _2\) as a subroutine. In a round of communication, a player feeds inputs into the input stream of the streaming algorithm, obtains the compact sketch (the memory state) produced by the streaming algorithm, and communicates this sketch to the other player. Consequently, a lower bound on the communication complexity of \(\Pi _1\) also gives a lower bound on the streaming complexity of \(\Pi _2\).

The following Proposition summarizes a few important consequences of reductions from problems in communication complexity to problems for streaming algorithms:

Proposition 5.5

(i) If we can show a reduction from \(\text{ Index}_n\) to a problem \(\Pi \) in model \(\mathcal {M}\) such that the reduction uses a 1-pass streaming algorithm of \(\Pi \) as a subroutine, then \(\Pi \) is \((\mathcal {M},n)\)-hard.

(ii) If we can show a reduction from \(\text{ Disj}_n\) to a problem \(\Pi \) in model \(\mathcal {M}\) such that the reduction uses a 1-pass streaming algorithm of \(\Pi \) as a subroutine, then \(\Pi \) is \((\mathcal {M},n/p,p)\)-hard, for any \(p \in \mathbb {N}\) [2, 4, 6].

(iii) If we can show a reduction from \(\text{ Perm}_n\) to a problem \(\Pi \) in model \(\mathcal {M}\) such that the reduction uses a 1-pass streaming algorithm of \(\Pi \) as a subroutine, then \(\Pi \) is \((\mathcal {M},n \log n)\)-hard.

5.2 Proofs of Theorems 5.1 and 5.2

Proof of Theorem 5.1

The proofs for all three problems are similar. We first consider Feedback Vertex Set and begin by showing the hardness results for FVS with solution size \(k=0\).

Proof of Theorem 5.1 (I)

We give a reduction from \(\text{ Perm}_n\) to FVS in the Al model when the solution size parameter \(k=0\). The idea is to build a graph G with \(\Delta _{av}(G) =\mathcal {O}(1)\) and construct edges according to the input of \(\text{ Perm}_n\), such that the output of \(\text{ Perm}_n\) is 0 if and only if G is cycle-free.

Let \(\mathcal {A}\) be a one-pass streaming algorithm that solves FVS in the Al model using \(o(n \log n)\) space. Let G be a graph with \(4n+2\) vertices \(u_1,\ldots ,u_n, v_1,\ldots ,v_n, u'_1, \dots , u'_n, v'_1,\dots , v'_n, w, w'\). Let \(\pi \) be the input of Alice for \(\text{ Perm}_n\). See Fig. 2 for an illustration.

Alice’s input to \(\mathcal {A}\): Alice inputs the graph G first by exposing the vertices \(u_1,\ldots ,u_n, v_1,\ldots ,\) \(v_n\), sequentially. (i) While exposing the vertex \(u_i\), Alice gives as input to \(\mathcal {A}\) the edges \((u_i,u'_i),(u_i, v_{\pi (i)})\); (ii) while exposing the vertex \(v_i\), Alice gives the edges \((v_i,v'_i),(v_i,\) \( u_{\pi ^{-1}(i)})\) to the input stream of \(\mathcal {A}\).

After the exposure of \(u_1,\ldots ,u_n, v_1,\ldots ,\) \(v_n\) as per the Al model, Alice sends the current memory state of \(\mathcal {A}\), i.e the sketch generated by \(\mathcal {A}\), to Bob. Let \(j \in [n \log n]\) be the input of Bob and let \((\psi ,\gamma ) =\Phi (j)\).

Bob’s input to \(\mathcal {A}\): Bob exposes the vertices \(u'_1, \dots , u'_n, v'_1, \ldots , v'_n, w, w'\), sequentially. Below, \(\text{ bit }(i,\gamma )\) denotes the \(\gamma \)-th bit of the bit expansion of i. (i) While exposing a vertex \(u'_i\) where \(i \ne \psi \), Bob gives the edge \((u'_i,u_i)\) to the input stream of \(\mathcal {A}\); (ii) while exposing \(u'_\psi \), Bob gives the edges \((u'_\psi ,u_\psi )\) and \((u'_\psi ,w')\); (iii) while exposing a vertex \(v'_i\), Bob gives the edge \((v'_i,v_i)\), and the edge \((v'_i,w)\) if and only if \( \text{ bit }(i,\gamma )=1\); (iv) while exposing w, Bob gives the edge \((w,w')\), and the edge \((w,v'_i)\) if and only if \( \text{ bit }(i,\gamma )=1\); (v) while exposing \(w'\), Bob gives the edges \((w',w)\) and \((w',u'_{\psi })\).

Observe that \(\Delta _{av}(G)=\mathcal {O}(1)\). Now we show that the output of FVS is NO if and only if \(\text{ Perm}_n(\pi , j)=1\). Recall that \(k=0\).

From the construction, observe that \((w,w'), (w',u'_\psi ), (u'_\psi ,u_\psi ), (u_\psi , v_{\pi (\psi )}), \)\( (v_{\pi (\psi )}, v'_{\pi (\psi )}) \in E(G)\). When \(\text{ Perm}_n\) \((\pi , j) =1\), the edge \((v'_{\pi (\psi )},w)\) is present in G. So, G contains the cycle \(\mathcal {C}(w,w',u'_{\psi },\) \(u_\psi ,v_{\pi (\psi )},v'_{\pi (\psi )} )\), that is, the output of FVS is NO.

On the other hand, if the output of FVS is NO, then there is a cycle in G. From the construction, the cycle is \(\mathcal {C}(w,w',u'_{\psi },u_\psi ,v_{\pi (\psi )},v'_{\pi (\psi )})\). As \((v'_{\pi (\psi )},w)\) is an edge, the \(\gamma \)-th bit of \(\pi (\psi )\) is 1, that is \(\text{ Perm}_n\) \((\pi , j) =1\). Now by Propositions 5.4 and 5.5(iii), we obtain that Feedback Vertex Set is \(({\textsc {Al}}, n \log n)\)-hard even if \(\Delta _{av}(G)=\mathcal {O}(1)\) and when \(k=0\).\(\square \)
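A brute-force sanity check of this reduction (not part of the proof): the sketch below builds the adjacency-list stream exactly as Alice and Bob feed it to \(\mathcal {A}\), then tests acyclicity offline with union-find instead of running a streaming algorithm. The vertex encoding (tuples such as `("u'", i)`) and all function names are our own; `listed_twice` checks the Al-model property that every edge appears once from each endpoint.

```python
def bit(i, gamma, n):
    """gamma-th bit (1-indexed) of the log2(n)-bit expansion of i; n maps to all zeros."""
    b = n.bit_length() - 1
    return int(format(i % n, f"0{b}b")[gamma - 1])


def fvs_perm_stream(pi, j, n):
    """Al-model stream of the Perm_n -> FVS reduction (hypothetical encoding)."""
    b = n.bit_length() - 1
    psi = -(-j // b)           # ceil(j / log n)
    gamma = j + b - psi * b    # second coordinate of Phi(j)
    inv = {v: k for k, v in pi.items()}
    s = []
    # Alice exposes u_1..u_n, then v_1..v_n, each with its full adjacency list.
    for i in range(1, n + 1):
        s += [(("u", i), ("u'", i)), (("u", i), ("v", pi[i]))]
    for i in range(1, n + 1):
        s += [(("v", i), ("v'", i)), (("v", i), ("u", inv[i]))]
    # Bob exposes u'_1..u'_n, v'_1..v'_n, w, w'.
    for i in range(1, n + 1):
        s.append((("u'", i), ("u", i)))
        if i == psi:
            s.append((("u'", i), ("w'",)))
    for i in range(1, n + 1):
        s.append((("v'", i), ("v", i)))
        if bit(i, gamma, n):
            s.append((("v'", i), ("w",)))
    s.append((("w",), ("w'",)))
    s += [(("w",), ("v'", i)) for i in range(1, n + 1) if bit(i, gamma, n)]
    s += [(("w'",), ("w",)), (("w'",), ("u'", psi))]
    return s


def has_cycle(stream):
    """Union-find cycle test on the underlying simple undirected graph."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for e in {frozenset(e) for e in stream}:
        a, c = tuple(e)
        ra, rc = find(a), find(c)
        if ra == rc:
            return True
        parent[ra] = rc
    return False


def listed_twice(stream):
    """Al-model check: every edge appears exactly once from each endpoint."""
    counts = {}
    for e in stream:
        counts[frozenset(e)] = counts.get(frozenset(e), 0) + 1
    return all(c == 2 for c in counts.values())
```

With the permutation from the illustration, G contains a cycle exactly for those j whose bit in 11001001 is 1.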

Illustration of Proof of Theorem 5.1 (I). Consider \(n=4\). Let \(\pi :[4]\rightarrow [4]\) such that \(\pi (1)=3, \pi (2)=4, \pi (3)=2\) and \(\pi (4)=1\). So the concatenated bit string is 11001001. In (a), \(j=5\), \(\Phi (j)=(\psi ,\gamma )=(3,1)\), \(\text{ Perm}_n(\pi , j)=1\), and G contains a cycle. In (b), \(j=4\), \(\Phi (j)=(\psi ,\gamma )=(2,2)\), \(\text{ Perm}_n(\pi , j)=0\), and G does not contain a cycle.

Recall that we take n as a power of 2. For \(1 \le i \le n-1\), the bit expansion of i is the usual binary representation of i using \(\log _2 n\) bits; the bit expansion of n is \(\log _2 n\) consecutive zeros. For example, take \(n=32\). The binary representation of 32 is 100000; we ignore the leading 1 and say that the bit expansion of 32 is 00000.

Illustration of Proof of Theorem 5.1 (II). Consider \(n=4\). In (a), \(\textbf{x}=1001\) and \(\textbf{y}=0100\), that is, \(\text{ Disj}_n(\textbf{x}, \textbf{y})=1\), and G does not contain a cycle. In (b), \(\textbf{x}=1100\) and \(\textbf{y}=0110\), that is, \(\text{ Disj}_n(\textbf{x}, \textbf{y})=0\), and G contains a cycle.

Proof of Theorem 5.1 (II)

We give a reduction from \(\text{ Disj}_n\) to FVS in the Al model when the solution size parameter \(k=0\). The idea is to build a graph G with \(\Delta (G) =\mathcal {O}(1)\) and construct edges according to the input of \(\text{ Disj}_n\), such that the output of \(\text{ Disj}_n\) is 1 if and only if G is cycle-free.

Let \(\mathcal {A}\) be a one-pass streaming algorithm that solves FVS in the Al model on graphs with \(\Delta (G)=\mathcal {O}(1)\), using o(n) space. Let G be a graph with 4n vertices \(u_{11},u_{12},u_{13},u_{14}, \ldots , u_{n1}, u_{n2},u_{n3},u_{n4}\). Let \(\textbf{x,y}\) be the inputs of Alice and Bob for \(\text{ Disj}_n\), respectively. See Fig. 3 for an illustration.

Alice’s input to \(\mathcal {A}\): Alice inputs the graph G by exposing the vertices \(u_{11},u_{12},u_{21},u_{22} \) \( \ldots , u_{n1},\) \(u_{n2}\), sequentially. (i) While exposing \(u_{i1}\), Alice gives as input to \(\mathcal {A}\) the edge \((u_{i1},u_{i3})\). Also, Alice gives the edge \((u_{i1},u_{i2})\) as input to \(\mathcal {A}\) if and only if \({x_i=1}\); (ii) while exposing \(u_{i2}\), Alice gives the edge \((u_{i2},u_{i4})\) as input to \(\mathcal {A}\). Also, Alice gives the edge \((u_{i2},u_{i1})\) as input to \(\mathcal {A}\) if and only if \( x_i=1\).

After the exposure of \(u_{11},u_{12},u_{21},u_{22}, \ldots , u_{n1}, u_{n2}\) as per the Al model, Alice sends the current memory state of \(\mathcal {A}\), i.e., the sketch generated by \(\mathcal {A}\), to Bob.

Bob’s input to \(\mathcal {A}\): Bob exposes the vertices \(u_{13},u_{14},u_{23},\) \(u_{24} \ldots ,\) \( u_{n3},u_{n4}\) sequentially. (i) While exposing \(u_{i3}\), Bob gives the edge \((u_{i3},u_{i1})\) as input to \(\mathcal {A}\), and gives the edge \((u_{i3},u_{i4})\) if and only if \({ y_i}=1\); (ii) while exposing \(u_{i4}\), Bob gives the edge \((u_{i4},u_{i2})\) as input to \(\mathcal {A}\), and gives the edge \((u_{i4},u_{i3})\) if and only if \( y_i=1\).

Observe that \(\Delta (G)\le 4\). Recall that \(k=0\). Now we show that the output of FVS is NO if and only if \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})} =0\).

From the construction, \((u_{i1},u_{i3}), (u_{i2},u_{i4}) \in E(G)\), for each \(i \in [n]\). If \(\text{ Disj}_n(\textbf{x}, \textbf{y}) =0\), there exists \(i \in [n]\) such that \(x_i=y_i=1\). This implies that the edges \((u_{i1},u_{i2})\) and \((u_{i3},u_{i4})\) are present in G. So, the cycle \(\mathcal {C}(u_{i1},u_{i2},u_{i4},u_{i3})\) is present in G, that is, the output of FVS is NO.

Conversely, if the output of FVS is NO, there exists a cycle in G. From the construction, the cycle must be \(\mathcal {C}(u_{i1},u_{i2},u_{i4},u_{i3})\) for some \(i \in [n]\). As the edges \((u_{i1},u_{i2})\) and \((u_{i3},u_{i4})\) are present in G, \(x_i=y_i=1\), that is, \(\text{ Disj}_n(\textbf{x}, \textbf{y}) =0\).

Now by Propositions 5.4 and 5.5(ii), we obtain that Feedback Vertex Set is \(({\textsc {Al}}, n/p,p)\)-hard even if \(\Delta (G)=\mathcal {O}(1)\) and when \(k=0\). \(\square \)
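An exhaustive check of this reduction for n = 4 (a sanity check, not part of the proof): the sketch builds the adjacency-list stream of Alice and Bob, encodes \(u_{ia}\) as the pair `(i, a)` (our own convention), and compares a union-find cycle test against \(\text{ Disj}_n\) on all 256 input pairs. Function names are hypothetical. It also confirms that every vertex has degree at most 2, within the bound \(\Delta (G) \le 4\) stated above.

```python
from itertools import product


def fvs_disj_stream(x, y):
    """Al-model stream of the Disj_n -> FVS reduction; u_{ia} is encoded as (i, a)."""
    s = []
    for i in range(len(x)):  # Alice exposes u_{i1}, then u_{i2}
        s.append(((i, 1), (i, 3)))
        if x[i]:
            s.append(((i, 1), (i, 2)))
        s.append(((i, 2), (i, 4)))
        if x[i]:
            s.append(((i, 2), (i, 1)))
    for i in range(len(y)):  # Bob exposes u_{i3}, then u_{i4}
        s.append(((i, 3), (i, 1)))
        if y[i]:
            s.append(((i, 3), (i, 4)))
        s.append(((i, 4), (i, 2)))
        if y[i]:
            s.append(((i, 4), (i, 3)))
    return s


def has_cycle(stream):
    """Union-find cycle test on the underlying simple undirected graph."""
    parent = {}

    def find(v):
        parent.setdefault(v, v)
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for e in {frozenset(e) for e in stream}:
        a, c = tuple(e)
        ra, rc = find(a), find(c)
        if ra == rc:
            return True
        parent[ra] = rc
    return False


def disj(x, y):
    """Disj_n(x, y) = 0 iff some i has x_i = y_i = 1."""
    return 0 if any(a and b for a, b in zip(x, y)) else 1


def max_degree(stream):
    """Maximum degree of the underlying simple graph."""
    deg = {}
    for e in {frozenset(e) for e in stream}:
        for v in e:
            deg[v] = deg.get(v, 0) + 1
    return max(deg.values())
```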

Proof of Theorem 5.1 (III)

We give a reduction from \(\text{ Disj}_n\) to FVS in the Va model when the solution size parameter \(k=0\). The idea is to build a graph G with vertex cover size bounded by K and \(\Delta _{av}(G)=\mathcal {O}(1)\), and construct edges according to the input of \(\text{ Disj}_n\), such that the output of \(\text{ Disj}_n\) is 1 if and only if G is cycle-free.

Illustration of Proof of Theorem 5.1 (III). Consider \(n=4\). In (a), \(\textbf{x}=1000\) and \(\textbf{y}=0101\), that is, \(\text{ Disj}_n\) \((\textbf{x}, \textbf{y})=1\), and G does not contain a cycle. In (b), \(\textbf{x}=0011\) and \(\textbf{y}=1010\), that is, \(\text{ Disj}_n\) \((\textbf{x}, \textbf{y})=0\), and G contains a cycle

Let \(\mathcal {A}\) be a one-pass streaming algorithm that solves FVS in the Va model on graphs with \(\text{ VC }(G) \le K\) and \(\Delta _{av}(G) =\mathcal {O}(1)\), using o(n) space. Let G be a graph with \(n+3\) vertices \(u_a,v_1,\ldots ,v_n,u_b,w\). Let \(\textbf{x,y}\) be the inputs of Alice and Bob for \(\text{ Disj}_n\), respectively. See Fig. 4 for an illustration.

Alice’s input to \(\mathcal {A}\): Alice inputs the graph G first by exposing the vertices \(u_a, v_1,\ldots ,v_n\), sequentially. (i) While exposing \(u_a\), Alice does not give any edge; (ii) while exposing \(v_i\), Alice gives the edge \((v_i,u_a)\), as input to \(\mathcal {A}\), if and only if \(x_i=1\).

After the exposure of \(u_a, v_1,\ldots ,v_n\) as per Va model, Alice sends the current memory state of \(\mathcal {A}\), i.e., the sketch generated by \(\mathcal {A}\), to Bob.

Bob’s input to \(\mathcal {A}\): Bob first exposes \(u_b\) and then exposes w. (i) While exposing \(u_b\), Bob gives the edge \((u_b,v_i)\) if and only if \(y_i=1\); (ii) while exposing w, Bob gives the edges \((w,u_a)\) and \((w,u_b)\), as inputs to \(\mathcal {A}\).

From the construction, observe that \(\text{ VC }(G) \le 2 \le K\) and \(\Delta _{av}(G) = \mathcal {O}(1)\). Recall that \(k=0\).

Now we show that the output of FVS is NO if and only if \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})} =0\).

From the construction, \((u_{a},w), (u_{b},w) \in E(G)\). If \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=0\), there exists \(i \in [n]\) such that \(x_i=y_i=1\). This implies the edges \((u_{a},v_{i})\) and \((u_{b},v_{i})\) are present in G. So, the cycle \(\mathcal {C}(u_{a},v_{i},u_{b},w)\) is present in G, that is, the output of FVS is NO.

Conversely, if the output of FVS is NO, there exists a cycle in G. From the construction, the cycle must be \(\mathcal {C}(u_{a},\) \(v_{i},u_{b},w)\) for some \(i \in [n]\). As the edges \((u_{a},v_{i})\) and \((u_{b},v_{i})\) are present in G, \(x_i=y_i=1\), that is, \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=0\).

Now by Propositions 5.4 and 5.5(ii), we obtain that Feedback Vertex Set parameterized by vertex cover size K is \(({\textsc {Va}}, n/p,p)\)-hard even if \(\Delta _{av}(G)=\mathcal {O}(1)\), and when \(k=0\).\(\square \)
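The same kind of exhaustive check works for this vertex-arrival construction (again a sanity check, not part of the proof). In the Va model each edge is listed once, when its later endpoint is exposed. The sketch encodes \(u_a, u_b, w\) as the strings `"ua"`, `"ub"`, `"w"` and \(v_i\) as `("v", i)`; these encodings and the function names are hypothetical. Besides the cycle/\(\text{ Disj}_n\) correspondence, it confirms that \(\{u_a, u_b\}\) is a vertex cover, so \(\text{ VC }(G) \le 2\).

```python
from itertools import product


def fvs_va_stream(x, y):
    """Va-model stream of the Disj_n -> FVS reduction with VC(G) <= 2."""
    n = len(x)
    s = [(("v", i), "ua") for i in range(n) if x[i]]   # Alice: v_i with edge to u_a
    s += [("ub", ("v", i)) for i in range(n) if y[i]]  # Bob: u_b with edges to v_i
    s += [("w", "ua"), ("w", "ub")]                    # Bob: w with edges to u_a, u_b
    return s


def has_cycle(stream):
    """Union-find cycle test on the underlying simple undirected graph."""
    parent = {}

    def find(v):
        parent.setdefault(v, v)
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for e in {frozenset(e) for e in stream}:
        a, c = tuple(e)
        ra, rc = find(a), find(c)
        if ra == rc:
            return True
        parent[ra] = rc
    return False


def disj(x, y):
    """Disj_n(x, y) = 0 iff some i has x_i = y_i = 1."""
    return 0 if any(a and b for a, b in zip(x, y)) else 1


def is_vertex_cover(stream, cover):
    """True if every edge of the stream has an endpoint in cover."""
    return all(a in cover or c in cover for a, c in stream)
```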

In each of the above three cases, we can make the reduction work for any k by adding k vertex-disjoint cycles of length 4, i.e., \(C_4\)’s, to G. In Theorem 5.1 (III), the vertex cover size must remain bounded. In the given reduction for Theorem 5.1 (III), the constructed graph has a vertex cover of size at most 2. After adding k vertex-disjoint \(C_4\)’s, the constructed graph in the modified reduction has a vertex cover of size at most \(2k+2\), which is still a parameter independent of the input instance size.

This completes the proof of Theorem 5.1 with respect to FVS.

If the graph constructed in the reduction, in any of the above three cases for Feedback Vertex Set, contains a cycle, then that cycle has even length. Otherwise, the graph is cycle-free. Hence, the proof of this theorem with respect to ECT is the same as the proof for FVS.

Similarly, a slight modification can be made to the constructed graph in all three of the above cases, such that a cycle in the graph, if one exists, has odd length. Thereby, the proof of this theorem with respect to OCT is also very similar to the proof for FVS.\(\square \)

Proof of Theorem 5.2

We first show the hardness results of TD for \(k=0\) in all three cases.

Illustration of Proof of Theorem 5.2 (I). Consider \(n=4\). Let \(\pi :[4]\rightarrow [4]\) such that \(\pi (1)=3, \pi (2)=4, \pi (3)=2\), and \(\pi (4)=1\). So the concatenated bit string is 11001001. In (a), \(j=5\), \(\Phi (j)=(\psi ,\gamma )=(3,1)\), \(\text{ Perm}_n\) \((\pi , j)=1\) and G contains a triangle. In (b), \(j=4\), \(\Phi (j)=(\psi ,\gamma )=(2,2)\), \(\text{ Perm}_n\) \((\pi , j)=0\), and G does not contain any triangle

Proof of Theorem 5.2 (I)

We give a reduction from \(\text{ Perm}_n\) to TD when the solution size parameter \(k=0\). Let \(\mathcal {A}\) be a one-pass streaming algorithm that solves TD in the Va model on graphs with \(\Delta _{av}(G)=\mathcal {O}(1)\), using \(o(n \log n)\) space.

Let G be a graph with \(2n+1\) vertices \(u_1,\ldots ,u_n, v_1,\ldots ,v_n, w\). Let \(\pi \) be the input of Alice for \(\text{ Perm}_n\). See Fig. 5 for an illustration.

Alice’s input to \(\mathcal {A}\): Alice inputs the graph G by exposing the vertices \(u_1,\ldots ,u_n, v_1, \ldots , v_n\), sequentially. (i) While exposing the vertex \(u_i\), Alice does not give any edge; (ii) while exposing the vertex \(v_i\), Alice gives the edge \((v_i,u_{\pi ^{-1}(i)})\) as input to the stream of \(\mathcal {A}\).

After the exposure of \(u_1,\ldots ,u_n, v_1,\ldots ,\) \(v_n\) as per the Va model, Alice sends the current memory state of \(\mathcal {A}\), i.e. the sketch generated by \(\mathcal {A}\), to Bob. Let \(j \in [n \log n]\) be the input of Bob and let \((\psi ,\gamma ) =\Phi (j)\).

Bob’s input to \(\mathcal {A}\): Bob exposes only the vertex w. Bob gives the edge \((w,u_\psi )\), and the edge \((w,v_i)\) if and only if \( \text{ bit }(i,\gamma )=1\), as input to the stream of \(\mathcal {A}\).

From the construction, note that \(\Delta _{av}(G)=\mathcal {O}(1)\). Recall that \(k=0\). Now we show that the output of TD is NO if and only if \(\text{ Perm}_n(\pi , j)=1\).

From the construction, the edges \((u_\psi ,v_{\pi (\psi )})\) and \((w,u_\psi )\) are present in G. If \(\text{ Perm}_n(\pi , j)=1\), then \((v_{\pi (\psi )},w) \in E(G)\). So, there exists a triangle in G, that is, the output of TD is NO.

On the other hand, if the output of TD is NO, then there exists a triangle in G. From the construction, the triangle is formed with the vertices \(u_\psi ,v_{\pi (\psi )}\) and w. As \((v_{\pi (\psi )},w) \in E(G)\), the \(\gamma \)-th bit of \(\pi (\psi )\) is 1, that is, \(\text{ Perm}_n\) \((\pi , j)=1\).

Now by Propositions 5.4 and 5.5(iii), we obtain that TD is \(({\textsc {Va}}, n\log n)\)-hard even if \(\Delta _{av}(G)=\mathcal {O}(1)\), and when \(k=0\). \(\square \)
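A brute-force check of this reduction on the n = 4 example (a sanity check only): the sketch builds the vertex-arrival stream and tests for a triangle by looking for an edge whose endpoints share a neighbor. Alice's edges are generated as \((v_i, u_{\pi ^{-1}(i)})\), so that the edge set is exactly \(\{(u_i, v_{\pi (i)})\}\) as used in the correctness argument. The vertex encoding and function names are hypothetical.

```python
def td_perm_stream(pi, j, n):
    """Va-model stream of the Perm_n -> TD reduction (hypothetical encoding)."""
    b = n.bit_length() - 1          # log2 n, n a power of 2
    psi = -(-j // b)                # ceil(j / log n)
    gamma = j + b - psi * b         # second coordinate of Phi(j)
    inv = {v: k for k, v in pi.items()}

    def bit(i):
        # gamma-th bit of the log2(n)-bit expansion of i; n maps to all zeros
        return int(format(i % n, f"0{b}b")[gamma - 1])

    # Alice: u_1..u_n carry no edges; v_i arrives with its edge to u_{pi^{-1}(i)}.
    s = [(("v", i), ("u", inv[i])) for i in range(1, n + 1)]
    # Bob: w arrives with u_psi and every v_i whose gamma-th bit is 1.
    s.append((("w",), ("u", psi)))
    s += [(("w",), ("v", i)) for i in range(1, n + 1) if bit(i)]
    return s


def has_triangle(stream):
    """Brute-force triangle test: some edge {a, c} has a common neighbor."""
    adj = {}
    for a, c in stream:
        adj.setdefault(a, set()).add(c)
        adj.setdefault(c, set()).add(a)
    return any(adj[a] & adj[c] for a in adj for c in adj[a])
```

With \(\pi (1)=3, \pi (2)=4, \pi (3)=2, \pi (4)=1\), a triangle appears exactly for those j whose bit in 11001001 is 1, matching panels (a) and (b) of the illustration.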

Illustration of Proof of Theorem 5.2 (II). Consider \(n=4\). In (a), \(\textbf{x}=1001\) and \(\textbf{y}=0100\), that is, \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=1\), and G does not contain any triangle. In (b), \(\textbf{x}=0110\) and \(\textbf{y}=1010\), that is, \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=0\), and G contains a triangle

Proof of Theorem 5.2 (II)

We give a reduction from \(\text{ Disj}_n\) to TD when the solution size parameter \(k=0\). Let \(\mathcal {A}\) be a one-pass streaming algorithm that solves TD in the Va model on graphs with \(\Delta (G) =\mathcal {O}(1)\), using o(n) space. Let G be a graph with 3n vertices \(u_{11},u_{12},u_{13}, \ldots , u_{n1},u_{n2},u_{n3}\). Let \(\textbf{x,y}\) be the inputs of Alice and Bob for \(\text{ Disj}_n\). See Fig. 6 for an illustration.

Alice’s input to \(\mathcal {A}\): Alice inputs the graph G first by exposing the vertices \(u_{11}\), \(u_{12}\), \(u_{21}\), \(u_{22}\), ..., \(u_{n1}\), \(u_{n2}\), sequentially. (i) While exposing \(u_{i1}\), Alice does not give any edge; (ii) while exposing \(u_{i2}\), Alice gives the edge \((u_{i2},u_{i1})\), if and only if \(x_i=1\), as inputs to \(\mathcal {A}\).

After the exposure of \(u_{11},u_{12},u_{21},u_{22}, \ldots , u_{n1}, u_{n2}\) as per the Va model, Alice sends the current memory state of \(\mathcal {A}\), i.e., the sketch generated by \(\mathcal {A}\), to Bob.

Bob’s input to \(\mathcal {A}\): Bob exposes the vertices \(u_{13}, \ldots , u_{n3}\), sequentially. While exposing \(u_{i3}\), Bob gives the edges \((u_{i3},u_{i1})\) and \((u_{i3},u_{i2})\) as two inputs to \(\mathcal {A}\) if and only if \( y_i=1\).

From the construction, note that \(\Delta (G) \le 2\). Recall that \(k=0\). Now we show that the output of TD is NO if and only if \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})} =0\).

If \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=0\), there exists \(i \in [n]\) such that \(x_i=y_i=1\). From the construction, the edges \((u_{i2},u_{i1})\), \((u_{i3},u_{i1})\) and \((u_{i3},u_{i2})\) are present in G. So, there exists a triangle in G, that is, the output of TD is NO.

Conversely, if the output of TD is NO, there exists a triangle in G. From the construction, the triangle is \((u_{i1},u_{i2},u_{i3})\) for some \(i \in [n]\). As the edge \((u_{i2},u_{i1}) \in E(G)\), \(x_i=1\); and as the edges \((u_{i3},u_{i1})\) and \((u_{i3},u_{i2})\) are in G, \(y_i=1\). So, \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})} =0\).

Now by Propositions 5.4 and 5.5(ii), we obtain that TD is \(({\textsc {Va}}, n/p,p)\)-hard even if \(\Delta (G)=\mathcal {O}(1)\), and when \(k=0\). \(\square \)
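An exhaustive check of this reduction for n = 4 (a sanity check, not part of the proof): the sketch builds the vertex-arrival stream, encoding \(u_{ia}\) as the pair `(i, a)` (our own convention), and compares a brute-force triangle test against \(\text{ Disj}_n\) on all 256 input pairs. Function names are hypothetical.

```python
from itertools import product


def td_disj_stream(x, y):
    """Va-model stream of the Disj_n -> TD reduction; u_{ia} is encoded as (i, a)."""
    # Alice: u_{i2} arrives with its edge to u_{i1} iff x_i = 1.
    s = [((i, 2), (i, 1)) for i in range(len(x)) if x[i]]
    # Bob: u_{i3} arrives with edges to u_{i1} and u_{i2} iff y_i = 1.
    for i in range(len(y)):
        if y[i]:
            s += [((i, 3), (i, 1)), ((i, 3), (i, 2))]
    return s


def has_triangle(stream):
    """Brute-force triangle test: some edge {a, c} has a common neighbor."""
    adj = {}
    for a, c in stream:
        adj.setdefault(a, set()).add(c)
        adj.setdefault(c, set()).add(a)
    return any(adj[a] & adj[c] for a in adj for c in adj[a])


def disj(x, y):
    """Disj_n(x, y) = 0 iff some i has x_i = y_i = 1."""
    return 0 if any(a and b for a, b in zip(x, y)) else 1
```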

Illustration of Proof of Theorem 5.2 (III). Consider \(n=4\). In (a), \(\textbf{x}=1000\) and \(\textbf{y}=0101\), that is, \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=1\), and G does not contain any triangle. In (b), \(\textbf{x}=0011\) and \(\textbf{y}=1010\), that is, \(\text{ Disj}_n\) \({(\textbf{x}, \textbf{y})}=0\), and G contains a triangle

Proof of Theorem 5.2 (III). We give a reduction from \(\text{Disj}_n\) to TD parameterized by vertex cover size K. Let \(\mathcal {A}\) be a one-pass streaming algorithm that solves TD parameterized by K in the Va model on graphs with \(\Delta _{av}(G)=\mathcal {O}(1)\), using o(n) space. Let G be a graph with \(n+2\) vertices \(u_a,v_1,\ldots ,v_n,u_b\). Let \(\textbf{x}, \textbf{y}\) be the inputs of Alice and Bob for \(\text{Disj}_n\). See Fig. 7 for an illustration.

Alice’s input to \(\mathcal {A}\): Alice inputs the graph G first by exposing the vertices \(u_a, v_1,\ldots ,v_n\) sequentially. (i) While exposing \(u_a\), Alice does not give any edge; (ii) while exposing \(v_i\), Alice gives the edge \((v_i,u_a)\) as input to \(\mathcal {A}\) if and only if \(x_i=1\).

After exposing \(u_a, v_1,\ldots ,v_n\) as per the Va model, Alice sends the current memory state of \(\mathcal {A}\), i.e., the sketch generated by \(\mathcal {A}\), to Bob.

Bob’s input to \(\mathcal {A}\): Bob exposes only \(u_b\). While exposing \(u_b\), Bob gives the edge \((u_b,u_a)\) unconditionally and, for each \(i \in [n]\), the edge \((u_b,v_i)\) as input to \(\mathcal {A}\) if and only if \(y_i=1\).
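As with the previous reduction, a short sketch makes the vertex-arrival order explicit. It again assumes that an arriving vertex reveals its edges to earlier vertices; the function names and string labels such as "u_a" and "v3" are hypothetical. The helper cover_and_average_degree checks the two structural properties claimed in the next paragraph: \(\{u_a,u_b\}\) covers every edge, and the average degree stays bounded.

```python
def va_stream_small_vc(x, y):
    """Vertex-arrival stream for the reduction in the proof of Theorem 5.2 (III).

    Each entry is (vertex, edges revealed when the vertex arrives); a revealed
    edge is written (arriving vertex, earlier vertex).
    """
    n = len(x)
    stream = [("u_a", [])]  # Alice: u_a arrives with no edge
    for i in range(1, n + 1):  # Alice: v_i, with edge (v_i, u_a) iff x_i = 1
        stream.append((f"v{i}", [(f"v{i}", "u_a")] if x[i - 1] == 1 else []))
    # Alice would now send the algorithm's memory state (the sketch) to Bob.
    bob_edges = [("u_b", "u_a")]  # given unconditionally
    bob_edges += [("u_b", f"v{i}") for i in range(1, n + 1) if y[i - 1] == 1]
    stream.append(("u_b", bob_edges))  # Bob: u_b is the last vertex to arrive
    return stream


def is_valid_va(stream):
    """Va rule: every revealed edge joins the arriving vertex to an earlier one."""
    seen = set()
    for v, revealed in stream:
        if any(a != v or b not in seen for a, b in revealed):
            return False
        seen.add(v)
    return True


def cover_and_average_degree(stream):
    """Return (does {u_a, u_b} cover every edge?, average degree 2|E|/|V|)."""
    vertices = [v for v, _ in stream]
    edges = [e for _, revealed in stream for e in revealed]
    covered = all("u_a" in e or "u_b" in e for e in edges)
    return covered, 2 * len(edges) / len(vertices)
```

On instance (b) of the figure (\(\textbf{x}=0011\), \(\textbf{y}=1010\)), the stream has 5 edges on 6 vertices, so the helper reports that \(\{u_a,u_b\}\) is a cover and that the average degree is 10/6.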

From the construction, observe that \(\text{VC}(G)\le 2 \le K\), since \(\{u_a,u_b\}\) covers every edge, and that \(\Delta _{av}(G) =\mathcal {O}(1)\). Recall that \(k=0\).
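Both bounds follow from a direct count, spelled out below. We read \(\Delta _{av}(G)\) as the average degree \(2|E(G)|/|V(G)|\); this reading of the notation is our assumption.

```latex
\begin{align*}
  \text{every edge has an endpoint in } \{u_a,u_b\}
    &\;\Longrightarrow\; \text{VC}(G) \le 2, \\
  |E(G)| \le \underbrace{n}_{(v_i,u_a)} + \underbrace{1}_{(u_b,u_a)} + \underbrace{n}_{(u_b,v_i)} = 2n+1
    &\;\Longrightarrow\; \Delta_{av}(G) = \frac{2|E(G)|}{n+2} \le \frac{4n+2}{n+2} < 4.
\end{align*}
```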

Now we show that the output of TD is NO if and only if \(\text{Disj}_n(\textbf{x}, \textbf{y})=0\).

Observe that \((u_a,u_b) \in E(G)\). If \(\text{Disj}_n(\textbf{x}, \textbf{y})=0\), there exists an \(i \in [n]\) such that \(x_i=y_i=1\).

From the construction, the edges \((v_i,u_a)\) and \((u_b,v_i)\) are present in G. So, G contains the triangle with vertices \(u_a\), \(u_b\) and \(v_i\), i.e., the output of TD is NO.

On the other hand, if the output of TD is NO, there exists a triangle in G. Since \(\{v_1,\ldots ,v_n\}\) is an independent set, the triangle contains at most one vertex \(v_i\), so it is formed by the vertices \(u_a\), \(u_b\) and \(v_i\) for some \(i \in [n]\). As \((v_i,u_a)\in E(G)\), we have \(x_i=1\), and as \((u_b,v_i) \in E(G)\), we have \(y_i=1\). So, \(\text{Disj}_n(\textbf{x}, \textbf{y})=0\).
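The equivalence, and the structural fact that every triangle uses both \(u_a\) and \(u_b\), can be checked exhaustively for small n. The sketch below uses hypothetical helper names and lists triangles by brute force over vertex triples, so it is meant only for tiny instances such as the two panels of the figure.

```python
from itertools import combinations


def small_vc_edges(x, y):
    """Edge set of G in the reduction for Theorem 5.2 (III)."""
    edges = {frozenset({"u_a", "u_b"})}  # Bob's unconditional edge
    for i, (xi, yi) in enumerate(zip(x, y), start=1):
        if xi == 1:
            edges.add(frozenset({f"v{i}", "u_a"}))
        if yi == 1:
            edges.add(frozenset({"u_b", f"v{i}"}))
    return edges


def triangles(edges):
    """All vertex triples (in sorted order) that are pairwise adjacent."""
    vertices = sorted({v for e in edges for v in e})
    return [
        t for t in combinations(vertices, 3)
        if all(frozenset(p) in edges for p in combinations(t, 2))
    ]


def disj(x, y):
    """Disj_n(x, y) = 1 iff no index i has x_i = y_i = 1."""
    return 0 if any(a == 1 and b == 1 for a, b in zip(x, y)) else 1
```

For panel (a), triangles(small_vc_edges([1, 0, 0, 0], [0, 1, 0, 1])) is empty; for panel (b), triangles(small_vc_edges([0, 0, 1, 1], [1, 0, 1, 0])) returns [('u_a', 'u_b', 'v3')], the triangle witnessing \(x_3=y_3=1\).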

Now, by Propositions 5.4 and 5.5(ii), TD parameterized by vertex cover size K is \(({\textsc {Va}}, n/p,p)\)-hard even if \(\Delta _{av}(G)=\mathcal {O}(1)\) and \(k=0\). \(\square \)

In each of the above cases, we can make the reductions work for any k by adding k vertex-disjoint triangles to G, each also vertex-disjoint from G. For TD, these triangles force k deletions, so the modified instance has a solution of size at most k if and only if G is triangle-free. In Theorem 5.2 (III), the vertex cover size must additionally remain bounded. In the given reduction for Theorem 5.2 (III), the vertex cover of the constructed graph is at most 2. Since each added triangle increases the vertex cover by exactly 2, the vertex cover of the constructed graph in the modified reduction is at most \(2k+2\), and is therefore still a parameter independent of the input instance size.
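The padding step can be checked on small instances. The sketch below adds k fresh, pairwise vertex-disjoint triangles to the graph of the Theorem 5.2 (III) reduction, as in the first sentence of the paragraph above, and then verifies by brute force that the vertex cover stays at most \(2k+2\) and that TD with budget k answers YES exactly when \(\text{Disj}_n(\textbf{x}, \textbf{y})=1\). All helper names and the vertex labels t{j}_{r} are hypothetical; exact vertex cover and triangle hitting set are computed by exhaustive search, so this is only for tiny graphs.

```python
from itertools import combinations


def small_vc_edges(x, y):
    """Edge set of G in the reduction for Theorem 5.2 (III)."""
    edges = {frozenset({"u_a", "u_b"})}
    for i, (xi, yi) in enumerate(zip(x, y), start=1):
        if xi == 1:
            edges.add(frozenset({f"v{i}", "u_a"}))
        if yi == 1:
            edges.add(frozenset({"u_b", f"v{i}"}))
    return edges


def pad_with_triangles(edges, k):
    """Add k fresh, pairwise vertex-disjoint triangles (hypothetical labels)."""
    padded = set(edges)
    for j in range(k):
        a, b, c = f"t{j}_0", f"t{j}_1", f"t{j}_2"
        padded |= {frozenset({a, b}), frozenset({b, c}), frozenset({a, c})}
    return padded


def vertices_of(edges):
    return list({v for e in edges for v in e})


def min_vertex_cover(edges):
    """Exact minimum vertex cover size by exhaustive search."""
    vs = vertices_of(edges)
    for size in range(len(vs) + 1):
        for s in combinations(vs, size):
            chosen = set(s)
            if all(e & chosen for e in edges):
                return size


def td_yes(edges, k):
    """TD decision: can deleting at most k vertices leave no triangle?"""
    vs = vertices_of(edges)
    tris = [
        set(t) for t in combinations(vs, 3)
        if all(frozenset(p) in edges for p in combinations(t, 2))
    ]
    # Deleting S destroys a triangle iff S intersects it.
    return any(
        all(t & set(s) for t in tris)
        for size in range(k + 1)
        for s in combinations(vs, size)
    )
```

For instance, with \(\textbf{x}=\textbf{y}=100\) the unpadded graph contains the triangle on \(u_a, u_b, v_1\), so after padding with k triangles td_yes(g, k) is False for every k, while any disjoint pair of inputs gives True.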

This completes the proof of Theorem 5.2. \(\square \)

6 Conclusion

In this paper, we initiate the study of parameterized streaming complexity with structural parameters for graph deletion problems. Our study also compares the parameterized streaming complexity of several graph deletion problems across different streaming models. A natural direction for future work is to understand why such a classification arises for seemingly similar graph deletion problems, and to conduct a systematic study of other graph deletion problems as well.

Notes

It is standard in streaming that lower bound results are in bits, and the upper bound results are in words.


References

Assadi, S., Khanna, S., Li, Y.: Tight Bounds for Single-pass Streaming Complexity of the Set Cover Problem. In Proceedings of the 48th Annual ACM SIGACT Symposium on Theory of Computing, STOC, pp 698–711 (2016)

Agarwal, D., McGregor, A., Phillips, J.M., Venkatasubramanian, S., Zhu, Z.: Spatial Scan Statistics Approximations and Performance Study. In Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 24–33 (2006)

Bury, M., Grigorescu, E., McGregor, A., Monemizadeh, M., Schwiegelshohn, C., Vorotnikova, S., Zhou, S.: Structural Results on Matching Estimation with Applications to Streaming. Algorithmica 81(1), 367–392 (2019)

Bishnu, A., Ghosh, A., Mishra, G., Sen, S.: On the streaming complexity of fundamental geometric problems. CoRR, abs/1803.06875 (2018)

Cai, L.: Fixed-Parameter Tractability of Graph Modification Problems for Hereditary Properties. Inf. Process. Lett. 58(4), 171–176 (1996)

Chitnis, R.H., Cormode, G., Esfandiari, H., Hajiaghayi, M., Monemizadeh, M.: Brief Announcement: New Streaming Algorithms for Parameterized Maximal Matching & Beyond. In Proceedings of the 27th ACM Symposium on Parallelism in Algorithms and Architectures, SPAA, pp 56–58 (2015)

Chitnis, R., Cormode, G., Esfandiari, H., Hajiaghayi, M., McGregor, A., Monemizadeh, M., Vorotnikova, S.: Kernelization via Sampling with Applications to Finding Matchings and Related Problems in Dynamic Graph Streams. In Proceedings of the 27th Annual ACM-SIAM Symposium on Discrete Algorithms, SODA, pp 1326–1344 (2016)

Chitnis, R.H., Cormode, G., Hajiaghayi, M.T., Monemizadeh, M.: Parameterized Streaming: Maximal Matching and Vertex Cover. In Proceedings of the Twenty-Sixth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA, pp 1234–1251 (2015)

Cormode, G., Dark, J., Konrad, C.: Independent Sets in Vertex-Arrival Streams. In Proceedings of the 46th International Colloquium on Automata, Languages, and Programming, ICALP, pp 45:1–45:14 (2019)

Cygan, M., Fomin, F.V., Kowalik, L., Lokshtanov, D., Marx, D., Pilipczuk, M., Pilipczuk, M., Saurabh, S.: Parameterized Algorithms, 1st edn. Springer Publishing Company, Incorporated (2015)

Cormode, G., Jowhari, H., Monemizadeh, M., Muthukrishnan, S.: The Sparse Awakens: Streaming Algorithms for Matching Size Estimation in Sparse Graphs. In Proceedings of the 25th Annual European Symposium on Algorithms, ESA, volume 87, pp 29:1–29:15 (2017)

Cao, Y., Marx, D.: Interval Deletion Is Fixed-Parameter Tractable. ACM Trans. Algorithms 11(3), 21:1–21:35 (2015)

Cao, Y., Marx, D.: Chordal Editing is Fixed-Parameter Tractable. Algorithmica 75(1), 118–137 (2016)

Esfandiari, H., Hajiaghayi, M., Liaghat, V., Monemizadeh, M., Onak, K.: Streaming Algorithms for Estimating the Matching Size in Planar Graphs and Beyond. ACM Trans. Algorithms 14(4), 1–23 (2018)

Fomin, F.V., Jansen, B.M.P., Pilipczuk, M.: Preprocessing subgraph and minor problems: When does a small vertex cover help? J. Comput. Syst. Sci. 80(2), 468–495 (2014)

Fafianie, S., Kratsch, S.: Streaming kernelization. In Proceedings of the 39th International Symposium on Math. Found. Comput. Sci., MFCS, pp 275–286 (2014)

Fomin, F.V., Lokshtanov, D., Misra, N., Philip, G., Saurabh, S.: Hitting Forbidden Minors: Approximation and Kernelization. SIAM J. Discret. Math. 30(1), 383–410 (2016)

Fomin, F.V., Lokshtanov, D., Misra, N., Saurabh, S.: Planar F-Deletion: Approximation, Kernelization and Optimal FPT Algorithms. In Proceedings of the 53rd Annual IEEE Symposium on Foundations of Computer Science, FOCS, pp 470–479 (2012)

Guruswami, V., Velingker, A., Velusamy, S.: Streaming Complexity of Approximating Max 2CSP and Max Acyclic Subgraph. In Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques, APPROX/RANDOM, pp 8:1–8:19 (2017)

Kapralov, M., Khanna, S., Sudan, M., Velingker, A.: \(1+\Omega (1)\) approximation to MAX-CUT Requires Linear Space. In Proceedings of the Twenty-Eighth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA, pp 1703–1722 (2017)

Kim, E.J., Langer, A., Paul, C., Reidl, F., Rossmanith, P., Sau, I., Sikdar, S.: Linear Kernels and Single-Exponential Algorithms Via Protrusion Decompositions. ACM Trans. Algorithms 12(2), 21:1–21:41 (2016)

Kushilevitz, E., Nisan, N.: Communication Complexity. Cambridge University Press, New York, NY, USA (1997)

Kociumaka, T., Pilipczuk, M.: Faster deterministic Feedback Vertex Set. Inf. Process. Lett. 114(10), 556–560 (2014)

Marx, D.: Chordal Deletion is Fixed-Parameter Tractable. Algorithmica 57(4), 747–768 (2010)

McGregor, A.: Graph Stream Algorithms: A Survey. SIGMOD Record 43(1), 9–20 (2014)

McGregor, A., Vorotnikova, S.: Planar Matching in Streams Revisited. In APPROX/RANDOM, Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik (2016)

McGregor, A., Vorotnikova, S.: A Simple, Space-Efficient, Streaming Algorithm for Matchings in Low Arboricity Graphs. In SOSA (2018)

McGregor, A., Vorotnikova, S., Vu, H.T.: Better Algorithms for Counting Triangles in Data Streams. In Proceedings of the 35th ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems, PODS, pp 401–411 (2016)

Reed, B.A., Smith, K., Vetta, A.: Finding odd cycle transversals. Oper. Res. Lett. 32(4), 299–301 (2004)

Sun, X., Woodruff, D.P.: Tight Bounds for Graph Problems in Insertion Streams. In Proceedings of the 18th International Workshop on Approximation Algorithms for Combinatorial Optimization Problems, APPROX, pp 435–448 (2015)

Thomassé, S.: A \(4k^{2}\) Kernel for Feedback Vertex Set. ACM Trans. Algorithms 6(2), 32:1–32:8 (2010)


Additional information


An extended abstract of this paper, titled “Fixed-Parameter Tractability of Graph Deletion Problems over Data Streams”, appeared in COCOON 2020.


Appendix

A Problem Definitions

In this section, we define the following problems formally.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bishnu, A., Ghosh, A., Kolay, S. et al. Small Vertex Cover Helps in Fixed-Parameter Tractability of Graph Deletion Problems over Data Streams. Theory Comput Syst 67, 1241–1267 (2023). https://doi.org/10.1007/s00224-023-10136-w


DOI: https://doi.org/10.1007/s00224-023-10136-w