Abstract

A dynamic iteration scheme for linear differential-algebraic port-Hamiltonian systems based on Lions–Mercier-type operator splitting methods is developed. The dynamic iteration is monotone in the sense that the error is decreasing and no stability conditions are required. The developed iteration scheme is even new for linear port-Hamiltonian systems governed by ODEs. The obtained algorithm is applied to a multibody system and an electrical network.

Similar content being viewed by others

1 Introduction

Dynamic iteration schemes for differential-algebraic equations (DAEs) have been widely used and discussed for multiphysics problems, which allow for an easy exploitation of the different properties of the subystems [1,2,3,4,5,6]. In general, we consider an initial value problem for a (semi-explicit index-1) DAE of the form

where \(\partial g(t,v,w)/\partial w\) is regular in a neighborhood of the solution \((v(t), w(t))^\top \), \(t\in [0, T]\). A general dynamic iteration scheme is given by an initial guess \((v^{(0)}(t), w^{(0)}(t))^\top \) (\(t\in [0,T]\), i.e., an initial waveform) and the solution \((v^{(k)}(t), w^{(k)}(t))^\top \) of the DAE for integer \(k\ge 1\)

with arbitrary splitting functions F, G fulfilling the compatibility conditions

Hence, a dynamic iteration defines as a mapping from the \((k-1)\)-st iterate to the k-th iterate:

Usually the integration interval [0, T] is partitioned into sub intervals, so-called time windows, to speed up convergence or even allow for convergence. One drawback of such schemes for DAEs is given by the fact that stability conditions for a guaranteed convergence must hold in a twofold manner:

-

(a)

Convergence within one time window has to be present [1, 7], and

-

(b)

Stable error propagation from window to window is needed [1, 2].

If one of these conditions is not met choosing arbitrary small time windows will not help out (in general).

In the case of initial value problems of ordinary differential equations

convergence of dynamic iteration schemes (analogously defined) is given for arbitrary long time windows [0, T]. However, the convergence might not be monotone, and the error might increase drastically in the beginning, causing an overflow in computer implementations, see chapter 3 for an example.

As an alternative, operator splitting is based not on splitting the ODE into subsystems componentwise, but on splitting the right-hand side of an ODE, which are solved one after the other, see [8] for an overview. Operator splitting cannot be easily generalized to DAEs, the splitting of the algebraic part poses convergence problems [9]. One way out is proposed in [10] for linear DAEs: there, the idea is to split the DAE into the underlying ODE and function evaluation of the algebraic components in terms of the differential components, which becomes computationally expensive for nonlinear systems, and destroys the structure of the respective DAE.

In this work, we show that an alternative iterative approach for linear DAEs can avoid the flaws of both dynamic iteration and operator splitting schemes described above if the DAE (1) is composed of coupled port-Hamiltonian (pH) systems. This type of systems is motivated by physics, provides an energy balance and gives a simple framework for coupled systems, see [11, 12] for an introduction to pH systems. Port-Hamiltonian DAEs are in particular investigated in [13,14,15,16,17]. Now, the alternative iterative approach is based on an iteration scheme from Lions and Mercier [18]. The convergence will be monotone, no stability conditions appear and the DAE structure does not have to be changed.

The paper is organized as follows: in the subsequent chapter 2, the framework of linear coupled port-Hamiltonian DAEs is set. Both, the perspective of an overall coupled system and the perspective of a system composed of coupled port-Hamiltonian differential-algebraic subsystems is given. Chapter 3 illustrates the limits of classical dynamic iteration schemes such as the Jacobi iteration, which motivates the derivation and analysis of operator splitting based dynamic iteration schemes in the following. Chapter 4 recapitulates some basic facts on maximal monotone operators, which are used in chapter 5 to derive the monotone convergence results of operator splitting based dynamic iteration schemes. An estimate for the convergence rate can be given in the case of systems governed by ODEs, or DAEs with strong enough dissipation. Numerical results are discussed in chapter 6. The paper finishes with some concluding remarks and an outlook.

2 The setting

We consider linear time-invariant initial value problems of DAE, which have the following structure:

Definition 1

For a final time \(T>0\), the descriptor variable \(x(t) \in {\mathbb {R}}^n\) and \(t\in [0,T]\), we consider

where E, J, R and Q are real \(n\times n\)-matrices, B is a \(n\times m\)-matrix, \(E x_0 \in {\mathbb {R}}^n\) is a given initial value, u is a given input and y is referred to as output. (The matrix E may have no full rank.) \(\square \)

Throughout this article, we are working with weak solutions.

Definition 2

(Solution) Let \(x_0\in {\mathbb {R}}^n\) and \(u\in L^2([0,T], {\mathbb {R}}^m)\). A function \(x\in L^2([0,T],{\mathbb {R}}^n)\) is called (weak) solution of (1) if \(Ex \in H^1([0,T],{\mathbb {R}}^n)\), \(Ex(0)=Ex_0\) and (1) is satisfied for \(t\in [0,T]\) almost everywhere. \(\square \)

Proposition 3

Assume that a differential-algebraic system (1) is given with \(E,J,R, Q\in {\mathbb {R}}^{n\times n}\), \(B\in {\mathbb {R}}^{n\times m}\) satisfying \(E^\top Q=Q^\top E\ge 0\), \(R=R^\top \ge 0\) and \(J^\top =-J\). Furthermore, let \(u\in L^2([0,T],{\mathbb {R}}^m)\), \(x_0\in {\mathbb {R}}^n\), and let \(x\in L^2([0,T],{\mathbb {R}}^n)\) be a solution of (1). Then the following statements hold:

-

(a)

The function \(Ex\mapsto x^{\top }Q^{\top }Ex\) (mapping \({{\,\textrm{im}\,}}E\rightarrow {\mathbb {R}}\)) is well-defined.

-

(b)

The function \(t\mapsto x(t)^\top Q^\top Ex(t)\) is weakly differentiable with

$$\begin{aligned} \tfrac{\textrm{d}}{\textrm{d} t}\big (x^\top Q^\top Ex\big ) = 2x^\top Q^\top \big (\tfrac{\textrm{d}}{\textrm{d} t}Ex) \,\in L^1([0,T],{\mathbb {R}}). \end{aligned}$$ -

(c)

The following dissipation inequality holds for all \(t\in [0,T]\):

$$\begin{aligned}{} & {} \tfrac{1}{2}\big (x(t)^\top Q^\top Ex(t)\big )-\tfrac{1}{2}\big (x_0^\top Q^\top Ex_0\big ) \\{} & {} \quad =-\int _0^tx(\tau )^\top Q^\top RQ x(\tau )d\tau +\int _0^tu(\tau )^\top y(\tau )d\tau \le \int _0^tu(\tau )^\top y(\tau )d\tau . \end{aligned}$$

Proof

-

(a)

Assume that \(x_1,x_2\in {\mathbb {R}}^{n}\) with \(Ex_1=Ex_2\). We have \(E^\top Q=Q^\top E\), and thus, for the Moore-Penrose inverse \(E^+\) of E, we have

$$\begin{aligned}E^\top Q=E^\top (E^+)^\top E^\top Q=E^\top (E^+)^\top Q^\top E.\end{aligned}$$Consequently, for \(X=(E^+)^\top Q^\top \in {\mathbb {R}}^{n\times n}\), we have \(Q^\top E=E^\top XE\), and thus

$$\begin{aligned}x_1^\top Q^\top Ex_1=(Ex_1)^\top X(Ex_1)=(Ex_2)^\top X(Ex_2)=x_2^\top Q^\top Ex_2.\end{aligned}$$Furthermore, it is easy to see that

$$\begin{aligned} E^\top X E= E^\top X^\top E. \end{aligned}$$(3) -

(b)

By using that \(Ex \in H^1([0,T],{\mathbb {R}}^n)\), the product rule for weak derivatives [19, Thm. 4.25] implies that, for X as in (a),

$$\begin{aligned}x^\top Q^\top Ex=(Ex)^\top X (Ex)\in W^{1,1}([0,T],{\mathbb {R}})\end{aligned}$$with

$$\begin{aligned} \tfrac{\textrm{d}}{\textrm{d} t}(x^\top Q^\top Ex)&= \tfrac{\textrm{d}}{\textrm{d} t}\left( (Ex)^\top X (Ex) \right) = 2 x^\top E^\top X \tfrac{\textrm{d}}{\textrm{d} t}\left( Ex\right) = 2 x^\top \tfrac{\textrm{d}}{\textrm{d} t}\left( E^\top X Ex\right) \\&= 2 x^\top \tfrac{\textrm{d}}{\textrm{d} t}\left( Q^\top Ex\right) = 2 x^\top Q^\top \tfrac{\textrm{d}}{\textrm{d} t}\left( Ex\right) . \end{aligned}$$In the latter calculation we also used (3).

-

(c)

The previous statement yields

$$\begin{aligned} \tfrac{\textrm{d}}{\textrm{d} t}\tfrac{1}{2}(x^\top Q^\top Ex)&=x^\top Q^\top \tfrac{\textrm{d}}{\textrm{d} t}(Ex) = x^\top Q^\top ((J-R)Qx+Bu) \\&= -(Qx)^\top R (Qx) + x^\top Q Bu \le (B^\top Qx)^\top u = y^\top u. \end{aligned}$$Now an integration on [0, t] leads to the dissipation inequality.

\(\square \)

Thus, we investigate the following class of port-Hamiltonian DAE:

Definition 4

(pH DAE) The system (1) with the assumptions of Proposition 3 is here referred as port-Hamiltonian DAE (pH DAE). \(\square \)

2.1 Perspective as overall coupled system

We further assume that (1) is composed of several port-Hamiltonian systems which are coupled in an energy-preserving way. The assumptions are collected in the following.

Assumption 5

(Overall coupled pH DAE) We consider the setting (1).

-

(a)

The matrices in (1) are, for some \(s,n_1,\ldots ,n_s\in {\mathbb {N}}\), structured as

$$\begin{aligned} E= & {} \begin{bmatrix}E_1&{}&{}\\ {} &{}\ddots \\ {} &{}&{}E_s\end{bmatrix}\!, \;\; R= \begin{bmatrix}R_1&{}&{}\\ {} &{}\ddots \\ {} &{}&{}R_s\end{bmatrix}\!, \;\; Q=\begin{bmatrix}Q_1&{}&{}\\ {} &{}\ddots \\ {} &{}&{}Q_s\end{bmatrix}\!, \end{aligned}$$(4)$$\begin{aligned} J= & {} \begin{bmatrix} J_1&{}J_{12}&{}\cdots &{}J_{1s}\\ -J_{12}^\top &{}\ddots &{}\ddots &{}\vdots \\ \vdots &{}\ddots &{}\ddots &{}J_{s-1,s}\\ -J_{1s}^\top &{}\cdots &{}-J_{s-1,s}^\top &{}J_s \end{bmatrix}\!,\;\; B=\begin{bmatrix}B_1\\ \vdots \\ \vdots \\ B_s\end{bmatrix}, \end{aligned}$$(5)with \(n_1+n_2+\cdots + n_s =n\), where

-

(i)

for \(i=1,\ldots c,\, s\), \(E_i,Q_i,J_i,R_i \in {\mathbb {R}}^{n_i\times n_i}\) with \(R_i=R_i^\top \ge 0\), \(J_i^\top =-J_i\), \(E_i^\top Q_i=Q_i^\top E_i\ge 0\), \(Q_i\) invertible and \(B_i \in \mathbb R^{n_i\times m}\);

-

(ii)

\(J_{ij}\in {\mathbb {R}}^{n_i\times n_j}\) for \(i,j\in \{1,\ldots ,s\}\) with \(i<j\).

-

(i)

-

(b)

\(\textrm{rk}\begin{bmatrix} E&R&J \end{bmatrix}=n\).

-

(c)

\(T>0\), \(x_0\in {\mathbb {R}}^n\) and \(u\in L^2([0,T];{\mathbb {R}}^m)\). Furthermore, for matrices \(Z\in {\mathbb {R}}^{n\times r}\), \(Z_1\in {\mathbb {R}}^{r\times r_1}\), with full column rank and

$$\begin{aligned} {{\,\textrm{im}\,}}Z=&\,\ker E,\\ {{\,\textrm{im}\,}}Z_1=&\,\ker RQZ\cap \ker Z^\top Q^\top JQZ, \end{aligned}$$the input u and the initial value \(Ex_0\) fulfill

$$\begin{aligned} Z_1^\top Z^\top Q^\top Bu\in&\,H^1([0,T];{\mathbb {R}}^{r_1}) \quad \text { with } Z_1^\top Z^\top Q^\top (JQx_0+Bu(0))=0. \end{aligned}$$\(\square \)

Remark 6

Notice that with Assumption 5, we have for (1):

-

(a)

\(Q^\top E=E^\top Q\ge 0,\quad R=R^\top \ge 0,\quad \textrm{and} \quad Q\) is invertible. Hence, the overall coupled pH DAE of Assumption 5 is a pH DAE (in the sense of Definition 4).

-

(b)

As \(Q^\top E\ge 0\), we have that \(EQ^{-1}=Q^{-\top }(Q^TE)Q^{-1}\) is positive semi-definite as well, and therefore possesses a matrix square root \((EQ^{-1})^{1/2}\).

-

(c)

The ith subsystem for the variable \(x_i\) reads:

$$\begin{aligned} E_i x_i = (J_i-R_i)Q_i x_i + B_i u \; - \sum _{j<i} J_{ji}^\top Q_j x_j + \sum _{i<j} J_{ij} Q_j x_j. \end{aligned}$$The coupling is facilitated by the off-diagonal terms within the matrix J. \(\square \)

Remark 7

(Coupled linear pH DAEs) A pH DAE of type (1) with coupling structure (4) and (5) is naturally given in the case of s coupled linear pH DAEs as follows: consider s subsystems of pH DAEs

\(i=1,\ldots ,s.\) with \({{\tilde{J}}}_i=-{{\tilde{J}}}_i^\top \), \(R_i=R_i^\top \ge 0\), and \(Q_i^\top E_i=E_i^\top Q_i \ge 0\). The input \(u_i\), the output \(y_i\) and \(B_i\) are split into

according to external and coupling quantities. The subsystems are coupled via external inputs and outputs by

These s systems can be condensed to one large pH DAE [3]

with \(J={{\tilde{J}}} - {{\hat{B}}} {{\hat{C}}} {{\hat{B}}}^{\top }\) and the condensed quantities

Equation (6) defines now a pH DAE of type (1) with structure given by (4) and (5): the matrices \({{\tilde{J}}}\) and R are block-diagonal, and the Schur complement type matrix \(-{{\hat{B}}} {{\hat{C}}} {{\hat{B}}}^{\top }\) has only off-block diagonal entries. Note that the coupling has been shifted from the port matrices \(B_i\) to the off-block diagonal part of J. \(\square \)

Remark 8

As has been shown in Remark 7, all coupled linear subsystems of pH DAEs can be transformed into one pH DAE without port-coupling, i.e., the coupling of the different subsystems can always be given through J. Therefore the structure given in assumption 5 comprises the general case of a set of arbitrary linear pH DAEs which are coupled by a linear coupling condition for the inputs and outputs of the single systems.

Now we collect some properties of systems (1) satisfying Assumption 5. To this end, we note that a matrix pencil \(sE-A\in {\mathbb {R}}[s]^{n\times n}\) is called regular if \(\det (sE-A)\) is not the zero polynomial. Furthermore, for a regular pencil \(sE-A\), the index \(\nu \in {\mathbb {N}}_0\) of the DAE \(\tfrac{\textrm{d}}{\textrm{d} t}E x(t)=Ax(t)+f(t)\) is the smallest number for which the mapping \(\lambda \mapsto \lambda ^{1-\nu }(\nu E-A)^{-1}\) is bounded outside a compact subset of \({\mathbb {C}}\). Note that the index equals to the number of differentiations of the DAE needed to obtain an ordinary differential equation [20, Chap. 2].

Proposition 9

Any system (1) fulfilling Assumption 5 has the following properties.

-

(a)

The pencil \(sE-(J-R)Q\in {\mathbb {R}}[s]^{n\times n}\) is regular.

-

(b)

There exists a unique solution x of (1).

-

(c)

The index of the DAE (1) is at most two.

Proof

-

(a)

For \(\lambda \in {\mathbb {C}}\) with \({{\,\textrm{Re}\,}}\lambda >0\), we show that \(C:=\lambda E-(J-R)Q\) has a trivial kernel. Let \(z\in {\mathbb {C}}^{n}\) with \(Cz=0\). Then

$$\begin{aligned} 0={{\,\textrm{Re}\,}}(z^* Q^\top C z)={{\,\textrm{Re}\,}}(\lambda z^* Q^\top Ez)-{{\,\textrm{Re}\,}}(z^* Q^\top JQx)+{{\,\textrm{Re}\,}}(z^* Q^\top RQz). \end{aligned}$$Invoking that \(J=-J^\top \), \(Q^\top E=E^\top Q\ge 0\) and \(R=R^\top \ge 0\), we obtain

$$\begin{aligned} \begin{aligned} {{\,\textrm{Re}\,}}(\lambda z^* Q^\top Ez)=&\,{{\,\textrm{Re}\,}}(\lambda )z^* Q^\top Ez\ge 0,\\ {{\,\textrm{Re}\,}}(z^* Q^\top JQz)=&\,{{\,\textrm{Re}\,}}((Qz)^* J(Qz))=0,\\ {{\,\textrm{Re}\,}}(z^* Q^\top RQz)=&\,{{\,\textrm{Re}\,}}((Qz)^* R(Qz))=(Qz)^* R(Qx)\ge 0, \end{aligned}\end{aligned}$$which leads to \(z^* Q^\top Ez=0\) and \((Qz)^* R(Qz)=0\). The positive semi-definiteness of \(Q^\top E\) and R implies that \(E^\top Qz=Q^\top Ez=0\) and \(RQz=0\). Using \((\lambda E-(J-R)Q)z=0\), this yields \(JQz=0\). Hence, by \(R=R^\top \) and \(J=-J^\top \),

$$\begin{aligned} Qz\in \ker \left[ \begin{matrix}E&R&J\end{matrix}\right] ^\top . \end{aligned}$$Assumption 5 (b) now gives \(Qz=0\). Since Q is invertible, we are led to \(z=0\).

-

(b)

By using that the pencil \(sE-(J-R)Q\) is regular, uniqueness of solutions of the initial value problem (1) follows from the considerations in [21, Sec. 1.1]. It remains to prove that a solution of (1) exists. Let \(Z\in {\mathbb {R}}^{n\times r}\), \(Z_1\in {\mathbb {R}}^{r\times r_1}\) be as in Assumption 5 (c). Furthermore, let \(Z'\in {\mathbb {R}}^{n\times (n-r)}\), \(Z_1'\in {\mathbb {R}}^{r\times (r-r_1)}\) be matrices with \({{\,\textrm{im}\,}}Z'=({{\,\textrm{im}\,}}Z)^\bot \) and \({{\,\textrm{im}\,}}Z_1'=({{\,\textrm{im}\,}}Z_1)^\bot \). Moreover, we introduce matrices \(Z_2\in {\mathbb {R}}^{(n-r)\times r_1}\), \(Z_2'\in {\mathbb {R}}^{(n-r)\times (n-r-r_1)}\) with full column rank and

$$\begin{aligned}{{\,\textrm{im}\,}}Z_2=\ker Z_1^\top Z^\top Q^\top (J-R)Z',\;\; {{\,\textrm{im}\,}}Z_2'=({{\,\textrm{im}\,}}Z_2)^\bot .\end{aligned}$$The construction of \(Z,Z',Z_1,Z_1',Z_2,Z_2'\) yields that the columns of the matrix

$$\begin{aligned} V:=\big [Z'Z_2', Z'Z_2,ZZ_1',ZZ_1\big ] \end{aligned}$$(7)are linearly independent, and thus, \(V,V^\top Q^\top \in {\mathbb {R}}^{n\times n}\) are invertible matrices. Then we obtain

$$\begin{aligned} V^\top Q^\top (sE-(J-R)Q)V= \begin{bmatrix} sE_{11}-A_{11}&{}sE_{12}-A_{12}&{}-A_{13}&{}-A_{14}\\ sE_{12}^\top -A_{21}&{}sE_{22}-A_{22}&{}-A_{23}&{}0\\ -A_{31}&{}-A_{32}&{}-A_{33}&{}0\\ A_{14}^\top &{}0&{}0&{}0 \end{bmatrix}, \end{aligned}$$(8)where

$$\begin{aligned} E_{11}=&\,(Z_2')^\top (Z')^\top Q^\top E Z'Z_2',&E_{12}=&\,(Z_2')^\top (Z')^\top Q^\top E Z'Z_2,\\E_{22}=&\,Z_2^\top (Z')^\top Q^\top E Z'Z_2,&A_{11}=&\,(Z_2')^\top (Z')^\top Q^\top (J-R)Q Z'Z_2',\\ A_{12}=&\,(Z_2')^\top (Z')^\top Q^\top (J-R)Q Z'Z_2,&A_{21}=&\,Z_2^\top (Z')^\top Q^\top (J-R)Q Z'Z_2',\\ A_{13}=&\,(Z_2')^\top (Z')^\top Q^\top (J-R)Q ZZ_1',&A_{31}=&\,(Z_1)'^\top Z^\top Q^\top (J-R)Q Z'Z_2',\\ A_{22}=&\,Z_2^\top (Z')^\top Q^\top (J-R)Q Z'Z_2,&A_{33}=&\,(Z_1)'^\top Z^\top Q^\top (J-R)Q ZZ_1',\\ A_{14}=&\,(Z_2')^\top (Z')^\top Q^\top JQ ZZ_1,\\ B_{1}=&\,(Z_2')^\top (Z')^\top Q^\top B,&B_{2}=&\,Z_2^\top (Z')^\top Q^\top B,\\ B_{3}=&\,(Z_1')^\top Z^\top Q^\top B,&B_{4}=&\,Z_1^\top Z^\top Q^\top B. \end{aligned}$$The construction of the matrices \(Z,Z',Z_1,Z_1',Z_2,Z_2'\) yields that

-

\(\left[ {\begin{matrix}E_{11}&{}E_{12}\\ E_{12}^\top &{}E_{22}\end{matrix}}\right] \) is positive definite. In particular, \(E_{22}\) is invertible,

-

\(A_{33}\) is invertible, and

-

\(A_{14}\) has full row rank.

The already proved regularity of \(sE-(J-R)Q\), which implies regularity of the pencil \(V^\top Q^\top (sE-(J-R)Q)QV\), which yields that \(A_{14}\) has moreover full column rank. As a consequence, \(A_{14}\) is square (i.e., \(2r_1=n-r\)) and invertible. Set \(z_0=(z_{10}^\top ,z_{20}^\top ,z_{30}^\top ,z_{40}^\top )\), \(z_{10}\in {\mathbb {R}}^{n-r-r_1}\), \(z_{20}\in {\mathbb {R}}^{r_1}\), \(z_{30}\in {\mathbb {R}}^{r-r_1}\), \(z_{40}\in {\mathbb {R}}^{r_1}\). By Assumption 5 (c), we have \(B_4u\in H^1([0,T];{\mathbb {R}}^{r_1})\) and

$$\begin{aligned} 0= & {} Z_1^\top Z^\top Q^\top (JQx_0+Bu(0)) =Z_1^\top Z^\top Q^\top JQx_0+B_4u(0)\\= & {} Z_1^\top Z^\top Q^\top JQ(Z'Z_2'z_{10}+Z'Z_2z_{20}+ZZ_1'z_{30}+ZZ_1z_{40})+B_4u(0)\\= & {} Z_1^\top Z^\top Q^\top JQZ'Z_2'z_{10}+B_4u(0)=-Z_1^\top Z^\top Q^\top J^\top QZ'Z_2'z_{10}+B_4u(0)\\= & {} -A_{14}^\top z_{10}+B_4u(0). \end{aligned}$$Consequently, \(z_1=(A_{14}^\top )^{-1}B_4u\) fulfills \(z_1\in H^1([0,T];{\mathbb {R}}^r)\), \(-A_{14}^\top z_1+B_4u(t)=0\) and \(z_1(0)=z_{10}\). Moreover, let \(z_2\in H^1([0,T];{\mathbb {R}}^{r_1})\) be the solution of the following ODE with initial value \(z_2(0)=z_{20}\)

$$\begin{aligned}{} & {} \tfrac{\textrm{d}}{\textrm{d} t}z_2(t)=E_{22}^{-1}(A_{22}-A_{23}A_{33}^{-1}A_{32})z_2(t)\\{} & {} \quad +E_{22}^{-1}\big (-\tfrac{\textrm{d}}{\textrm{d} t}E_{12}^\top z_1(t)+A_{21}z_1(t)+B_2u(t)-A_{23}A_{33}^{-1}z_1(t)-A_{23}A_{33}^{-1}B_3u(t)\big ), \end{aligned}$$and we successively define \(z_3\in L^2([0,T];{\mathbb {R}}^{r-r_1})\), \(z_4\in L^2([0,T];{\mathbb {R}}^{r_1})\) by

$$\begin{aligned} z_3(t)=&\,-A_{33}^{-1}A_{23}z_2(t)-A_{33}^{-1}A_{13}z_1(t),\\ z_4(t)=&\,-A_{14}^{-1}\big (\tfrac{\textrm{d}}{\textrm{d} t}E_{11}z_1(t)+\tfrac{\textrm{d}}{\textrm{d} t}E_{12}z_2(t)-A_{11}z_1(t)-A_{12}z_1(t)-A_{13}z_3(t)\big ). \end{aligned}$$Altogether, we have that \(z:=(z_1^\top ,z_2^\top ,z_3^\top ,z_4^\top )^\top \) is a solution of the differential-algebraic equation

$$\begin{aligned} \begin{aligned} \tfrac{\textrm{d}}{\textrm{d} t}\begin{bmatrix} E_{11}&{}E_{12}&{}0&{}0\\ E_{12}^\top &{}E_{22}&{}0&{}0\\ 0&{}0&{}0&{}0\\ 0&{}0&{}0&{}0 \end{bmatrix} \begin{pmatrix} z_{1}(t)\\ z_{2}(t)\\ z_3(t)\\ z_4(t) \end{pmatrix}=&\begin{bmatrix} A_{11}&{}A_{12}&{}A_{13}&{}A_{14}\\ A_{21}&{}A_{22}&{}A_{23}&{}0\\ A_{31}&{}A_{32}&{}A_{33}&{}0\\ -A_{14}^\top &{}0&{}0&{}0 \end{bmatrix} \begin{pmatrix} z_{1}(t)\\ z_{2}(t)\\ z_3(t)\\ z_4(t) \end{pmatrix}+ \begin{bmatrix} B_{1}\\ B_{2}\\ B_{3}\\ B_{4} \end{bmatrix}u(t),\\ \begin{bmatrix} E_{11}&{}E_{12}&{}0&{}0\\ E_{12}^\top &{}E_{22}&{}0&{}0\\ 0&{}0&{}0&{}0\\ 0&{}0&{}0&{}0 \end{bmatrix} \begin{pmatrix} z_{1}(0)\\ z_{2}(0)\\ z_3(0)\\ z_4(0) \end{pmatrix}=&\begin{bmatrix} E_{11}&{}E_{12}&{}0&{}0\\ E_{12}^\top &{}E_{22}&{}0&{}0\\ 0&{}0&{}0&{}0\\ 0&{}0&{}0&{}0 \end{bmatrix} \begin{pmatrix} z_{10}\\ z_{20}\\ z_{30}\\ z_{40} \end{pmatrix}. \end{aligned} \end{aligned}$$(9)Now setting

$$\begin{aligned}x(t):=(Q^\top )^{-1}(V^\top )^{-1}z(t),\end{aligned}$$ -

-

(c)

The result has been shown in [22, Thm. 4.3] and [16, Thm. 6.6]. Notice, this can be also seen from the proof of (b). \(\square \)

\(\square \)

Remark 10

Assume that the DAE (1) fulfills Assumption 5 (a) & (b). By using \(V\in {\mathbb {R}}^{n\times n}\) as defined as in (7), we can transform (1) to an equivalent DAE (9). Consequently, the existence of a solution of (1) implies \(z_1\in H^1([0,T];{\mathbb {R}}^{r_1})\) with, and thus \(B_4u=(A_{14}^\top )^{-1}\in H^1([0,T];{\mathbb {R}}^{r_1})\). Invoking that \(B_4=Z_1^\top Z^\top Q^\top B\), we obtain that \(Z_1^\top Z^\top Q^\top Bu\in H^1([0,T];{\mathbb {R}}^{r_1})\) with \(Z_1^\top Z^\top Q^\top (JQx_0+Bu(0))=0\) has to be fulfilled. Consequently, under Assumption 5 (a) & (b), the conditions in Assumption 5 (c) are also necessary for the existence of a solution of the DAE (1). \(\square \)

2.2 Perspective as coupled pH-subsystems

We take the perspective of the paper [23] for the system (1) and its partitioning given in Rem. 6. To this end, we need to introduce the internal inputs \(u_{ij}\), i.e., data stemming from the jth system being input for the ith system, and outputs \(y_{ij}\) (i.e., data from the i system to be transferred to the jth system). This reads for the ith subsystems (\(i=1,\ldots ,s\)):

with matrices \(E_i,Q_i,J_i,R_i \in {\mathbb {R}}^{n_i\times n_i}\), and \(B_i\in {\mathbb {R}}^{n_i\times m}\) fulfilling the properties of Assumption 5 (a), and \(B_{ij}\in {\mathbb {R}}^{n_i\times m_{ij}}\) for \(i=1,\ldots ,s\), \(j=1,\ldots ,s\), \(i\ne j\) with \(m_{ij}=m_{ji}\). The dissipation inequality for this subsystem reads

The overall input u is composed of the inputs of the subsystems. By forming a suitable expansion of the input spaces of the subsystems, it is no loss of generality to assume that \(u=u_1=\cdots =u_s\). The overall output is consequently given by the sum

Coupling is done such that \(x\mapsto x^\top Q^\top Ex\) with E and Q as in (4) is a storage function for the overall system [24]. This is for instance achieved by

or equivalently

since

The overall system is given by (1) with E, Q and R as in (4), and J structured as in (5) with

Remark 11

This coupling has the drawback that all variables enter the definition of the coupling. And we have as many outputs of a subsystem as the other subsystem has variables times the number of other subsystems. This will be treated differently in practice. An ’essential’ coupling will be used (minimizing the number of coupling variables).

Before we investigate the Lions/Mercier based algorithm, we revisit the Jacobi dynamic iteration and discuss some limitations.

3 Failure of Jacobi dynamic iteration

To motivate the development of monotone operator splitting based dynamic iteration schemes in chapter 5, we remind that classical approaches such as the Jacobi or Gauss-Seidel iteration scheme may not always be feasible for a computer implementation. To this end, we address system (1) in the following ODE setting: assigning \(E=Q=I\), the initial value problem (1) reads

For this system, we recall that the dynamic iteration is based on a splitting \(J-R=M-N\) and an iteration count \(k\in {\mathbb {N}}_0\). Now, let be an initial guess at \(k=0\) be given \(x^{(0)} \in C^{0}([0,T], {\mathbb {R}}^n)\) with \(x^{(0)}(0)=x_0\) (e.g., \(x^{(0)}(t)=x_0\)) for all \(t\in [0,T]\), the dynamic iteration scheme reads:

Remark 12

Note that setting \(M=J_d-R\) and \(N=-J_o\) in the case of the pHS-ODE system (10) defines a block-Jacobi dynamic iteration scheme. Here \(J_d\) is a block diagonal matrix with diagonal elements \(J_i\), \(i=1,\ldots ,s\), and \(J_o:=J-J_d\). \(\square \)

If we restrict to \(u \in C^{0}([0,T],{\mathbb {R}}^m)\), we obtain from (11) \(x^{(k+1)} \in C^{0}([0,T],{\mathbb {R}}^n)\). Let \(x \in C^{0}([0,T],{\mathbb {R}}^n)\) denote the analytic solution of (10). Then, the recursion error is given by \(\epsilon ^{(k)}:=x^{(k)}-x\). Following Burrage [25], we can derive an exact error recursion for the dynamic iteration (11). Doing this, for the system (10) with

and setting \(M=-R, N=-J_o=-J\), we get the error recursion on \(t \in [0,\, T]\):

Now, just for demonstration purposes and simplicity, let us assume that initial error has the format:

and \(D>0\). Using the maximum norm \(\Vert f\Vert _T:=\max _{t \in [0,T]} \Vert f(t)\Vert _2\) for \(f\in C^{0}([0,T],{\mathbb {R}}^n)\), the error reads for all \(k\ge \tau T -1\) (maximal error at T):

Example 13

We analyse a \(2\times 2\) version of (10) with the restriction (12), which reads:

and employing \(\tau , \nu >0\). The analytic solution and error evolution are given by:

The error amplification factor \(\nu T/(k+2)\) is bounded by one for all \(k>\nu T -2\). For all \(k < \nu T -2\) the error is increasing. Hence, the convergence is monotone (for all k) only if \(\nu T -2 \le 0\).

We remark that splitting the overall time window [0, T] in several windows is always a solution to obtain (good) convergence.

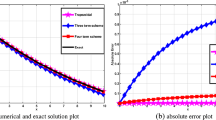

To illustrate the below up, we present two configurations: an overflow in single precision for \(T=10\) in Fig. 1 (left) and a initial blow up and then decrease for \(T=0.5\) in Fig. 1 (right). Of course, decreasing the window size further will result in a monotone convergence. \(\square \)

Jacobi iteration for system (13) with \(\nu =15\), \(\tau =0.01\), \(D=1\). Left: \(T=10\). The maximum error would be obtained at iteration \(k=148\): \(\sqrt{2} \cdot 150^{148}/148!\) and error decrease from \(k=149\) on. Overflow in single precision at \(k=40\).Right: \(T=0.5\). Maximum error for \(k=5\) (\(\nu T -2=5.5\)), then decrease. After \(k=29\), we achieve the solution (in single precision)

4 Recap on maximal monotone operators

For \(\omega \ge 0\), we introduce the weighted \(L^2\)-space

equipped with the norm \(\Vert \cdot \Vert _{2,\omega }\) defined as

Clearly, as \(T\in (0,\infty )\), we have \(L^2_\omega ([0,T],\mathbb R^n)=L^2([0,T],{\mathbb {R}}^n)\) with equivalent norms. Furthermore, if \(\omega =0\), then the norms in \(L^2_\omega ([0,T],{\mathbb {R}}^n)\) and \(L^2([0,T],{\mathbb {R}}^n)\) coincide.

Definition 14

(Contraction) Let X be a Banach space space with norm \(\Vert \cdot \Vert \). A (possibly nonlinear) operator \(A:D(A)\subset X\rightarrow X\) is called contractive, if

and strictly contractive, if there exists some \(L<1\), such that

\(\square \)

Definition 15

(Maximally monotone operator) Let X be a real Hilbert space with inner product \(\langle \cdot , \cdot \rangle \). A set \(Y\subset X\times X\) is called monotone, if

Furthermore, \(Y\subset X\times X\) is called maximally monotone, if it is monotone and not a proper subset of a monotone subset of \(X\times X\).

A (possibly nonlinear) operator \(A:D(A)\subset X\rightarrow X\) is called (maximally) monotone, if the graph of A, i.e., \(\{(x,Ax):\, x\in D(A)\}\), is (maximally) monotone. \(\square \)

Remark 16

Let \(A:D(A)\subset X\rightarrow X\) be a monotone operator. It follows from the definition of monotonicity that \(I+\lambda A\) is injective for all \(\lambda >0\).

Moreover, by [26, Theorem 2.2], the following three statements are equivalent:

-

(i)

A is maximally monotone,

-

(ii)

\(I+\lambda A\) is surjective for some \(\lambda >0\),

-

(iii)

\(I+\lambda A\) is surjective for all \(\lambda >0\).

Consequently, if A is maximally monotone, then \(I+\lambda A\) is bijective for all \(\lambda >0\). The Cauchy-Schwarz inequality together with the given monotonicity yields that

whence \(( I+\lambda A)^{-1}:X\rightarrow X\) is contractive.

Furthermore, \((I-\lambda A)(I+\lambda A)^{-1}\) is contractive. This follows with \({{\tilde{x}}}:= (I+\lambda A)^{-1}x\) and \({{\tilde{y}}}:= (I+\lambda A)^{-1}y\) from

\(\square \)

5 Dynamic iteration scheme

Now we develop a dynamic iteration scheme for the DAE (1) fulfilling Assumption 5. To facilitate the decoupling, we split J as follows

such that \(J_d = - J_d^\top \) and \(J_o=-J_o^\top \) (compare with Rem. 6 (c)).

Let \(\alpha \in [0,1]\), \(\mu ,\omega \in {\mathbb {R}}\) with \(0\le \mu \le \omega \). We introduce the affine-linear operator M by:

with domain

and the linear bounded multiplication operator N by

Remark 17

Notice that x is a solution of the DAE (1) if, and only if,

holds. \(\square \)

In the following, we use \(\alpha \in [0,1]\) in order to have both the contribution \((1- \alpha )R\) to N and the contribution \(\alpha R\) to M positive semi-definite.

Lemma 18

Let \(\omega ,\mu \in {\mathbb {R}}\) with \(0\le \mu \le \omega \), and let \(\alpha \in [0,1]\). Assume that the DAE (1) fulfills Assumption 5, and let the operator M be defined as in (15). Then \(MQ^{-1}\) is maximally monotone on \(L^2_\omega ([0,T],{\mathbb {R}}^n)\). More precisely, \(MQ^{-1}\) satisfies

for \(v, w\in Q D(M)\), and \(I+\lambda MQ^{-1}\) is surjective for every \(\lambda >0\).

Proof

First of all, we have for \(v, w\in Q D(M)\)

which shows that \(MQ^{-1}\) is monotone (thereby we also use integration by parts).

To prove that \(MQ^{-1}\) is maximally monotone, it suffices, by Remark 16, to prove that \(I+\lambda MQ^{-1}\) is surjective for some \(\lambda >0\). Assume that \(y\in L^2_\omega ([0,T],{\mathbb {R}}^n)\). Consider the matrices \({\tilde{E}}=EQ^{-1}\), \({\tilde{R}}=\alpha R +\frac{1}{\lambda }I\) and \({\tilde{Q}}=I\). Then the DAE

clearly fulfills Assumption 5 (a) &(b). Moreover, by positive definiteness of \({\tilde{R}}\), we obtain that any matrix \(Z\in {\mathbb {R}}^{n\times r}\) with full column rank and \({{\,\textrm{im}\,}}Z=\ker E\) fulfills \(\ker {\tilde{R}}QZ=\{0\}\). This means that Assumption 5 (c) is trivially fulfilled by (18), and Proposition 9 implies that (18) has a unique solution \({\tilde{v}}\in L^2_\omega ([0,T],{\mathbb {R}}^n)\) with \({\tilde{E}}{\tilde{v}}\in H^1([0,T],{\mathbb {R}}^n)\). Now consider \({v}\in L^2_\omega ([0,T],{\mathbb {R}}^n)\) with \(v(t)=e^{\mu t}{\tilde{v}}(t)\). Then \({\tilde{E}}v(0)={\tilde{E}}{\tilde{v}}(0)={\tilde{E}} Qx_0\), and the product rule for weak derivatives [19, Thm. 4.25] yields \({\tilde{E}}{v}\in H^1([0,T],\mathbb R^n)\) with

and thus

Since this means that \((I+\lambda MQ^{-1})v=y\), the result is shown. \(\square \)

Lemma 19

Let \(\lambda >0\), \(\mu \ge 0\), \(\alpha \in [0,1]\), and let \(R,E,Q\in {\mathbb {R}}^{n\times n}\) with the properties as in Assumption 5. Then, for \(K:=((1-\alpha )R+\mu EQ^{-1}-J_o)\)

Moreover, if

then \((1-\alpha )R+\mu EQ^{-1} = K+J_o\in {\mathbb {R}}^{n\times n}\) is invertible, and

where \(q\ge 0\) with

Proof

By using that \(K+K^\top \ge 0\), we see that \(I+\lambda K\) is an invertible matrix. Let \(x\in {\mathbb {R}}^n\) and \(y:=(I+\lambda K)^{-1}x\). Then

shows (19). To prove the remaining result, assume that (20) is fulfilled. Then it can be concluded from Assumption 5 (a) (i) that \((1-\alpha )R+\mu EQ^{-1}\) is positive definite. Furthermore, by

we have

Plugging this into (19), we obtain for all \(x\in {\mathbb {R}}^n\)

which completes the proof. \(\square \)

Remark 20

Condition (20) is equivalent to at least one of the following three statements being fulfilled:

-

(i)

\(\textrm{rk}\begin{bmatrix} E&R \end{bmatrix}=n\), \(\mu >0\) and \(\alpha <1\), or

-

(ii)

\(\textrm{rk}E=n\), \(\mu >0\), and \(\alpha \le 1\), or

-

(iii)

\(\textrm{rk}R=n\), \(\mu \ge 0\) and \(\alpha <1\). \(\square \)

As a direct conclusion of Lemma 19, we can draw the following result.

Lemma 21

Let \(\lambda ,\mu \ge 0\), \(\alpha \in [0,1]\), let \(R,E,Q\in {\mathbb {R}}^{n\times n}\) with the properties as in Assumption 5, and let the operator N be defined as in (16). The multiplication operator \(NQ^{-1}\) on \(L^2_\omega ([0,T],{\mathbb {R}}^n)\) is linear, bounded and satisfies

i.e., \(NQ^{-1}\) is maximally monotone. Furthermore, the multiplication operator \(x\mapsto (I-\lambda NQ^{-1})(I+\lambda NQ^{-1})^{-1}x\) is a contraction on \(L^2_\omega ([0,T],{\mathbb {R}}^n)\) for \(\lambda >0\) satisfying

Moreover, if \({{\,\textrm{im}\,}}E+{{\,\textrm{im}\,}}R={\mathbb {R}}^n\) and \(\alpha <1\), then

for \(q<1\) as in (22).

Proof

The first statement follows directly and the remaining statements are implied by Lemma 19. \(\square \)

Our dynamic iteration scheme is built on Rem. 17. Following Lions and Mercier [18], we study the algorithms

with \(x^0\in D(M)\) arbitrary.

Remark 22

Note that equation (17) is equivalent to

or equivalently,

\(\square \)

Remark 23

The iteration (23) is equivalent to

Theorem 24

Let \(\lambda >0\), \(\mu \ge 0\), and let \(\alpha \in [0,1]\). Assume that the DAE (1) fulfills Assumption 5, and let the operators M, N be defined as in (15) and (16). Let \(\lambda >0\) and \(x^0\in D(M)\). Let the sequence \((x^k)_k\) be defined by (23), and let x be the unique solution of (1). Then the following statements are fulfilled:

-

(a)

\((E x^k)_k\) converges to Ex in \(L^2([0,T],{\mathbb {R}}^n)\),

-

(b)

\((E x^k)_k\) converges uniformly to Ex on [0, T],

-

(c)

If \(\alpha >0\), then \((RQ x^k)_k\) converges to RQx in \(L^2([0,T],{\mathbb {R}}^n)\),

-

(d)

For the sequence \((z^k)_k\) given by

$$\begin{aligned} \forall \,k\in {\mathbb {N}}:\quad z^{k}:= (I+\lambda MQ^{-1}) Qx^{k}, \end{aligned}$$and the function \(z:=(I+\lambda MQ^{-1})Q x\), the real sequence \((\Vert z^{k}-z\Vert _{2,\omega })_k\) is monotonically decreasing, and

$$\begin{aligned} \forall \,k\in {\mathbb {N}}:\quad \Vert x^{k}- x\Vert _{2,\omega } \le \Vert Q^{-1}\Vert \Vert z^{k}-z\Vert _{2,\omega }. \end{aligned}$$(25)

Proof

Let \(\omega \in {\mathbb {R}}\) with \(\mu <\omega \). We have

and

We set

Then using Lemmas 21 and 18 we calculate

Thus the sequence \((\Vert \Delta z^{k}\Vert _{2,\omega })_k\) monotonically decreasing, and therefore it converges. Inequality (25) follows from Remark 16, which completes the proof of (d). Furthermore, the latter inequality gives

Combining (29) with the fact that the norms \(\Vert \cdot \Vert _{\omega ,2}\) and \(\Vert \cdot \Vert _{2}\) are equivalent, we are led to (c). In addition, (28) together with \(E =(EQ^{-1})^{1/2} (EQ^{-1})^{1/2} Q\)—and using that \(\Vert \Delta z^k\Vert _{2,\omega }\) is a convergent sequence—gives \((\Vert E \Delta x^k\Vert _{2,\omega }^2 )_k\) converges to zero, and the equivalence between the norms \(\Vert \cdot \Vert _{\omega ,2}\) and \(\Vert \cdot \Vert _{2}\) now implies (a).

It remains to prove (b). We repeat the calculation (27) but with \(t \in (0,T]\) instead of T in the last step and obtain

which shows that \( Ex^k(t)\rightarrow E x(t)\) uniformly in \(t\in [0,T]\). \(\square \)

Remark 25

Though the sequence \((\Vert \Delta x^k\Vert _{2,\omega })_k\) is usually not monotone decreasing, it is bounded by the monotone decreasing sequence \((\Vert Q^{-1}\Vert \Vert z^{k}-z\Vert _{2,\omega })_k\) due to (25). \(\square \)

Theorem 26

Let \(\lambda >0\), \(\mu \ge 0\), and let \(\alpha \in [0,1]\). Assume that the DAE (1) fulfills Assumption 5, and let the operators M, N be defined as in (15) and (16). Let \(x^0\in D(M)\). Let the sequence \((x^k)_k\) be defined by (23), and let x be the unique solution of (1). Then the following statements are fulfilled:

-

(a)

If (20) holds, then the sequence \((z^k)\) as defined in Theorem 24 (d) converges to \(z:=(I+\lambda MQ^{-1})Q x\) in \(L^2([0,T],{\mathbb {R}}^n)\). Further, \((Ex^k)_k\) converges to Ex in \(H^1([0,T],{\mathbb {R}}^n)\).

-

(b)

If (20) or

$$\begin{aligned} \textrm{rk}\begin{bmatrix} E&\alpha R \end{bmatrix}=n, \end{aligned}$$(30)is fulfilled, then \((x^k)_k\) converges to x in \(L^2([0,T],\mathbb R^n)\),

Proof

We start with the proof of (a): By (23) and Remark 22, we have

and we define \(\Delta z^{k}:= z-z^{k}\). Assume that (20) holds. By invoking Remark 16, Lemma 18 and Lemma 21, we obtain, for \(q<1\) as in Lemma 19,

This implies that \(z^k\) converges in \(L^2([0,T],{\mathbb {R}}^n)\) to z.

Next we prove that \((Ex^k)_k\) converges to Ex in \(H^1([0,T],{\mathbb {R}}^n)\). We already know from Theorem 24 (a) that \((E x^k)_k\) converges to Ex in \(L^2([0,T],{\mathbb {R}}^n)\). Hence, it suffices to prove that \((\tfrac{\textrm{d}}{\textrm{d} t}E x^k)_k\) converges to \(\tfrac{\textrm{d}}{\textrm{d} t}Ex\) in \(L^2([0,T],{\mathbb {R}}^n)\). By invoking that \((z^k)\) converges to z in \(L^2([0,T],\mathbb R^n)\), (25) yields that \((x^k)\) converges to x in \(L^2([0,T],{\mathbb {R}}^n)\). Now using the definition of x, z and M, we have

which gives

Since \((x^k)\) converges to x in \(L^2([0,T],{\mathbb {R}}^n)\), and further, \((z^k)\) converges to z in \(L^2([0,T],{\mathbb {R}}^n)\), the above equation implies that \(\tfrac{\textrm{d}}{\textrm{d} t}(E{x}^k)\) converges in \(L^2([0,T],{\mathbb {R}}^n)\) to \(\tfrac{\textrm{d}}{\textrm{d} t}(E{x})\). Altogether, this means that \((Ex^k)\) converges to Ex in \(H^1([0,T],{\mathbb {R}}^n)\).

Next we prove (b). The case where (20) is fulfilled, the result follows by a combination of (a) with (25).

Assume that (30) holds: Then, by invoking Assumption 5 (a) (i), the matrix \(Q^{\top }E+\alpha Q^{\top }RQ\) is positive definite, and thus invertible. On the other hand, \((((Q^{\top }E+Q^{\top }RQ) x^k)_k\) converges to \((Q^{\top }E+\alpha Q^{\top }RQ)x\) in \(L^2([0,T],\mathbb R^n)\) by Theorem 24. Consequently, \((x^k)_k\) converges to x in \(L^2([0,T],{\mathbb {R}}^n)\). \(\square \)

Based on the results of the previous section, we present a respective dynamic iteration algorithm. We recall that we choose \(\omega >0\) and we define

with \(x^0 \in D(M)\) arbitrary, and

Using (26) we obtain

Furthermore, relation \(z^k=(Q+\lambda M)x^k\) corresponds to the DAE

with initial conditions \(E_ix_i^n(0)=E_i x_{0,i}\) (\(i=1,\ldots ,s\)). Given \(z_i^k\), we have s decoupled subsystems.

Remark 27

-

(a)

If \(\textrm{rk}\begin{bmatrix} E&R \end{bmatrix}<n\), then it is not possible to formulate any convergence results for \((x^k)\), in general. As an example, consider a system (1) with

$$\begin{aligned}E=R=0_{2\times 2},\;Q=I_2,\;J=\left[ {\begin{matrix}0&{}1\\ -1&{}0\end{matrix}}\right] ,\;u=0\in L^2([0,T];{\mathbb {R}}^2),\end{aligned}$$which belongs to the class specified in Assumption 5 with \(s=2\) and \(n_1=n_2=1\). The unique solution of (1) is clearly given by \(x=0\) (the specification of the initial value \(x_0\) is obsolete by \(E=0\)). The above choice of E, R and Q leads to M in (15) being the zero operator, whereas N as in (16) corresponds to the multiplication with the skew-Hermitian matrix \(-J\). Consequently, for \(\lambda >0\), the iteration (24) now reads

$$\begin{aligned} x^{k+1}:=U x^{k}, \quad \text {where} \quad U=(I+\lambda J)(I-\lambda J)^{-1}. \end{aligned}$$By invoking Lemma 19, we see that U is a unitary matrix with \(U\ne I_2\). Therefore, \(\Vert x^{k+1}(t)\Vert =\Vert x^{k}(t)\Vert \) for all \(k\in {\mathbb {N}}\), \(t\in [0,T]\), and thus \(\Vert x^{k+1}\Vert _{2}=\Vert x^{k}\Vert _{2}\). It can be concluded that the sequence \((x^k)\) does not converge in \(L^2([0,T],{\mathbb {R}}^2)\) to \(x=0\). A closer look to the iteration yields that \((x^k)\) does not even have a subsequence which weakly converges in \(L^2([0,T],{\mathbb {R}}^2)\) to \(x=0\).

-

(b)

Under Assumption 5, (20) is equivalent to E and R fulfilling \(\textrm{rk}E=n\), or \(\textrm{rk}\begin{bmatrix} E&R \end{bmatrix}=n\) and \(\alpha >0\).

-

(c)

In the case of ordinary differential equations, i.e., \(E=I\), then Theorem 26 simplifies to the following:

-

(i)

If \(\mu >0\), or \(\textrm{rk}R=n\) and \(\alpha <1\), then \((z^k)\) converges to z in \(L^2([0,T],{\mathbb {R}}^n)\), and \((x^k)_k\) converges to x in \(H^1([0,T],{\mathbb {R}}^n)\).

-

(ii)

\((x^k)_k\) converges to x in \(L^2([0,T],{\mathbb {R}}^n)\). \(\square \)

-

(i)

The above discussion results in a Lions–Mercier-type dynamic iteration for pH systems, which is summarized in Algorithm 1. Notice, we have different formulas for the iteration in \(x_k\); the algorithm is based on the decoupled formulation of subsystems A.

6 Numerical results

We analyse the convergence rates for the new type of monotonic dynamic iterations (Lions–Mercier-type iteration). Then, we show numerical convergence results for the promising monotone convergence algorithms developed here.

6.1 Convergence rate discussion

For \(0<\alpha <1\) and \(K=((1-\alpha )R+\mu EQ^{-1}-J_o)\) the convergence rate is given by the contraction factor (22)

The optimal \(\lambda ^*\) will have the smallest error reduction factor. We find:

Remark 28

(Decoupled setting) In the decoupled case, \(J_o=0\), hence, the optimal error reduction reads:

which is only small for nearly equilibrated matrices K. This is in contrast to (e.g.) Jacobi dynamic iteration procedures, where we have immediate convergence. \(\square \)

Considering ODEs with \(E=I\) and setting \(\alpha =1\), we have \(K=\mu Q^{-1} -J_o\).

Remark 29

(ODE case)

-

(a)

Transformation to \(Q=I\): using the Cholesky factorization \(Q=V^\top V \), we multiply (1) with V, which yields

$$\begin{aligned} V \tfrac{\textrm{d}}{\textrm{d} t}x&= V (J-R) (V^\top V) x + V B u(t) \qquad \Leftrightarrow \quad \tfrac{\textrm{d}}{\textrm{d} t}{\tilde{x}} = ({\tilde{J}} - {\tilde{R}}) {\tilde{x}} + {\tilde{B}} u(t) \end{aligned}$$with \({\tilde{x}}=V x\), \({{\tilde{J}}}=V J V^\top , {{\tilde{R}}}= V R V^\top \) and \({{\tilde{B}}}= V B\) provides a remedy. Correspondingly, \(y= B^\top Q x\) is transformed into \({{\tilde{y}}} = {{{\tilde{B}}}}^\top {{\tilde{x}}}\).

-

(b)

Decoupled setting (\(J_o=0\)): The reduction factor is \(q^*= 1-\tfrac{1}{\text {cond}{(Q)}}\). Again, this is only small for scaling matrices Q with small condition number. The above transformation to \(Q=I\) yields \(q^{*}=0\). An alternative is a shift with a matrix-valued \(\lambda \) in Algorithm 1.

-

(c)

Coupled setting: for \(Q=I\) and the Euclidean norm this gives

$$\begin{aligned} \lambda ^*(\mu )&= \tfrac{1}{\sqrt{\mu ^2+\lambda _{\max }(J_o^\top J_o)}} \quad \text {and} \quad q^*\bigl (\mu ,\lambda ^*(\mu )\bigr )^2 =1-\tfrac{1}{\sqrt{1+{\lambda _{\max }(J_o^\top J_o)}/{\mu ^2}}}. \end{aligned}$$(31)

6.2 Results for monotone Lions–Mercier-type algorithm

6.2.1 Example with Jacobi failure

For system (13) holds \(Q=I\) and \(\lambda _{\max }(J_o^\top J_o)=\nu ^2\). This gives the optimal contraction constant via (31): \((q^*)^2 = {1-\tfrac{1}{\sqrt{1+ {\nu ^2}/{\mu ^2}}}}\). We see the following:

-

(a)

We have monotone convergence for the Lions–Mercier-type algorithm, Algorithm 1.

-

(b)

In the limit of an undamped error bound \(\omega \rightarrow 0^+\), the optimal convergence rate goes to one (for \(\nu \ne 0\)). This indicates an extremely slow convergence.

-

(c)

One can get arbitrarily small convergence rates \(q^*\) for \(\mu \rightarrow \infty \). However, this corresponds to more and more damped error norms, which do not allow to estimate the numerically important undamped error. \(\square \)

6.2.2 Simple \(2\times 2\) system with scaling

We introduce into the simple \(2\times 2\) example (13) a scaling matrix Q:

where \(\tau , \nu >0\) and \(q_1 \ge q_2 >0\). In the decoupled case, \(\nu =0\), the optimal reduction factor is given by (see Rem. 29(b)):

Figure 2 gives numerical results for the Lions–Mercier-type algorithm, showing that Q not being a multiple of I destroys the convergence in one step, although the system is fully decoupled.

Lions–Mercier-type iteration with (32): number of iterations (x) versus scaled error in coupling term v/z for various settings. First column provide the decoupled case (\(\nu =0\)), and the right column the coupled cases (\(\nu =1.5\)). Always \(\tau =-0.5\) and \(\omega =2.1\)

6.2.3 Two masses and three springs example with damping

Now, we consider two masses \(m_1,\, m_2 >0\), which are connected via massless springs \(K_1,\, K_2, K>0\) to walls with damping \(r_1, r_2>0\), see Fig. 3. To set up the system, we have positions \(q_1,\, q_2\) and momenta \(p_1,\, p_2\) for the masses \(m_1,\, m_2\), respectively. Then, the system can be modeled by the following Hamiltonian equation of motion:

Notice, this is not yet given in our pH format (1) for the overall systems. Before we treat the overall system, we set up a description as coupled pH-subsystem as in Sec. 2.2, let \(q=q_2\) denote the coupling variables, which we need for the first subsystem. Then, the two coupled pH systems read:

with quantities for the first subsystem: \(x_1 =[p_1,\,q_1,\, q_1-q]^\top \), \( z_1 = Q_1 x_1\),

and for the second subsystem we have: \(x_2 =[p_2,\, q_2]^\top \), \(z_2= Q_2 x_2\)

and the coupling interface reads:

Also the overall coupled system can be written in our pH-structure given in (1), with \(F={{\,\textrm{diag}\,}}(F_1,F_2)\) for \(F \in \{J,R,Q,B\}\), cf. Rem. 7:

Numerical results for the Lions–Mercier-type Algorithm 1 are given in Fig. 4. These results show that the estimate \(q^\star \approx 0.975\) for the convergence rate (with an optimal choice leading to \(q^\star \approx 0.944\)) is quite pessimistic in this case (for \(\mu =2\) – blue line with marker \(\times \)).

Visualisation of the numerical error reduction for Lions–Mercier-type iteration of system (33) versus iteration count. Left in x, center in z, right in z with scaled norm. Parameters of the benchmark: \(K_i=2\), \(m_1=m_2=2\), \(K=4\), \(r_1=0.5\), \(r_2=0.75\), and zero initial values apart from \(p_{2,0}=0.1\)). And iteration parameters: \(\omega =2.2\), \(\alpha =0.5\), \(\lambda =1.5\) as well as \(\mu =2\) (blue line with marker \(\times \)), \(\mu =0.1\) (red line with bullet marker) (color figure online)

Remark 30

The index of linear pH DAEs does not exceed two, see Proposition 9(c). This might be confusing, especially since multibody systems are known to have index three, if holonomic position contraints are involved [6]. However, note that in the port-Hamiltonian formulation of multibody systems, the state is composed of forces and velocities; position constraints are here hidden in velocity contraints via differentiation. The latter causes an index drop.

Including coupling and output quantities as variables, i.e., \( {{\tilde{x}}}_i = \begin{bmatrix} x_i,\, u_i,\,y_i \end{bmatrix}^\top \) yields a DAE, which has no damping (R) in the coupling or output equations. Thus it does not fit into our framework. \(\Box \)

Finally, we like to discuss a DAE example. To have a DAE multibody system, a simple choice are holonomic constraints. However, such a constraint comes without dissipation. Hence \(\text {rk}(E,R)\) is not full. Therefore, we change the field of applications.

6.3 Circuit example (DAE)

The electric circuit in Fig. 5 can be modelled as follows: for \(x^\top =\big [u_1, \, u_2,\, u_3,\, \jmath _1, u_4,\, u_5\big ]\) and \(E=\text {diag}(0, C_1, 0, L_1, 0, C_2)\) \(B^\top = \begin{bmatrix} 1,\, 0,\, 0,\, 0,\, 0,\, 0\end{bmatrix}\)

This example fulfills the rank condition \(\text {rk}(E,R) = n=6\) and has block-diagonal R.

Numerical results illustrating the Lions–Mercier-type Algorithm 1 are given in Fig. 6.

Results for the circuit (Fig. 5): We depict the convergence in \(x_3\) (first subsystem, first column) and in \(x_5\) (second subsystem, second column) for various iterations; approximation in red with \(\circ \) marker, reference in blue with \(\times \) marker. (Lions–Mercier parameters: \(\lambda =1.2\), \(\mu =1\), \(\omega =2.2\), \(\alpha =0.2\).) (color figure online)

The respective error reduction is illustrated in Fig. 7. These results show that the estimate \(q \approx 0.9998\) for the convergence rate (with an optimal choice leading to \(q^\star \approx 0.9980\)) is quite pessimistic in this case, too.

Error reduction for simple circuit (Fig. 5). We plot the achieved precision versus the iteration count for x (left panel), z (middle panel), scaled z (right panel)

Remark 31

(Rank condition for circuits)

We discuss circuit equation modelled by modified nodal analysis.

-

i)

Let a circuit include one voltage source. Then the model has a pure algebraic equation, which has no dissipation. Thus the rank condition is not satisfied.

-

ii)

Electric circuits with current sources, capacitors, inductors and resistors can be made to fulfill the rank condition by adding sufficiently many resistors.

-

iii

) The coupling—without auxiliary variables—can be facilitated via inductors.

\(\square \)

Error decay for MSD-chain with parameters given in Fig. 8. Left panel: error in the x-variable; right panel: error in the z variable (scaled)

Results for the coupled MSD-chain (see Fig. 8). The reference solution (blue with \(\times \) marker) is compared against the iterates (red with \(\circ \) marker) for \(l=10,20,30,40\) iterations (in the rows). Left panels: variable \(p_{1,2}\); right panels: variable \(q_{2,5}\) (color figure online)

6.4 Coupled MSD-Chain

A benchmark in port-Hamiltonian systemsFootnote 1 is the mass-spring-damper chain (MSD-chain), see [27]. We consider now two coupled MSD-chains, which consist of 25 mass-spring-damper elements each. The chains are coupled via a spring with stiffness \(K_{co}\), see Fig. 8. The mathematical model as pH system is given as follows. For both chains, we have

for variable \(x_i^\top = (q_{i,1},p_{i,1},\dotsc ,q_{i,25},p_{i,25})\) for positions \(q_{i,j}\) and velocities \(p_{i,j}\) (\(i=1,2\), \(j=1,\dotsc 25\)) with

and \(R_i = \text {diag} \bigl (0, r_{i,1}, 0, r_{i,2}, \ldots , 0, r_{i,25} \bigr )\) for \(i=1,2\). For the coupling, we introduce the additional scalar variable q or \(q_{1,1}-q\) (as in Sect. 6.2.3) and have the additional equation

which we put to subsystem (\(i=1\)) as:

with the enlarged matrices

using \(e_1^{\top } = (1,0,\ldots 0)\) and the coupling \(u_1 = y_2,\; u_2=-y_1\). Notice the overall unknowns \(\left( {\tilde{x}}_{1}^{\top }(t),\, x_2^{\top }(t) \right) \in {\mathbb {R}}^{101}\). As initial value at \(t=0\), we use zero, apart from \({\tilde{x}}_{1,5}=q_{1,3}(0)=0.1\), we simulate for \(t\in [0,2]\). Moreover, for the Lions–Mercier algorithm, we use the parameters: \(\lambda =3.5,\; \alpha =0.5,\; \mu =2,\; \omega =2.2\). As reference solution, we use a standard time integration (Runge–Kutta of order five) with very small steps. We find that the error decays as expected. To illustrate the behavior, we show in Fig. 9 the overall error (\(L^2\)-norm over all components summed over a discrete time grid).

To give further inside, we compare the reference solution (blue with \(\times \) marker) to the iterates (red with \(\circ \) marker) of the our Lions–Mercier-algorithm for various iteration counts in Fig. 10. This shows, how the algorithm converges. In the left column, we depict the variable \({\tilde{x}}_{1,4} =p_{1,2}\) (of the first subsystem) and in the second column, we depict the variable \({\tilde{x}}_{2,9}=q_{2,5}\).

7 Conclusions

For a general pH setting, we have shown existence and uniqueness of weak solutions. Furthermore, the perspective of coupled systems and coupling variables are treated. Then, it is demonstrated that Jacobi-type of dynamic iteration may fail in practice due to finite precision. To circumvent this, a Mercier-Lions-type dynamic iteration scheme is developed, which guarantees monotone convergence in a related variable. The proof is based on properties of the Cayley transform. Numerical results demonstrate the monotone convergence. As a drawback, we observe that the currently achieved optimal convergence rates still need some improvements in order to pave the way for a broader application.

References

Arnold, M., Günther, M.: Preconditioned dynamic iteration for coupled differential-algebraic systems. BIT Numer. Math. 41, 1–25 (2001)

Alì, G., Bartel, A., Günther, M., Romano, V., Schöps, S.: Simulation of coupled PDAEs: Dynamic iteration and multirate simulation. In: Günther, M. (ed.) Coupled Multiscale Simulation and Optimization in Nanoelectronics, pp. 103–156. Springer, Berlin (2015). https://doi.org/10.1007/978-3-662-46672-8_3

Bartel, A., Günther, M.: PDAEs in refined electrical network modeling. SIAM Rev. 60(1), 56–91 (2018). https://doi.org/10.1137/17M1113643

Bartel, A., Brunk, M., Schöps, S.: On the convergence rate of dynamic iteration for coupled problems with multiple subsystems. J. Comput. Appl. Math. 262, 14–24 (2014). https://doi.org/10.1016/j.cam.2013.07.031

Bartel, A., Brunk, M., Günther, M., Schöps, S.: Dynamic iteration for coupled problems of electric circuits and distributed devices. SIAM J. Sci. Comput. 35(2), 315–335 (2013). https://doi.org/10.1137/120867111

Arnold, M.: Modular time integration of coupled problems in system dynamics. In: Günther, M., Schilders, W. (eds.) Novel Mathematics Inspired by Industrial Challenges, pp. 57–72. Springer, Cham (2022). https://doi.org/10.1007/978-3-030-96173-2_3

Jackiewicz, Z., Kwapisz, M.: Convergence of waveform relaxation methods for differential-algebraic systems. SIAM J. Numer. Anal. 33(6), 2303–2317 (1996). https://doi.org/10.1137/S0036142992233098

MacNamara, S., Strang, G.: In: Glowinski, R., Osher, S.J., Yin, W. (eds.) Operator Splitting, pp. 95–114. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-41589-5_3

Diab, M., Tischendorf, C.: Splitting methods for linear circuit DAEs of index 1 in port-Hamiltonian form. In: van Beurden, M., Budko, N., Schilders, W. (eds.) Scientific Computing in Electrical Engineering, pp. 211–219. Springer, Cham (2021)

Diab, M., Tischendorf, C.: Splitting methods for linear coupled field-circuit DAEs. Submitted for publication (2022)

van der Schaft, A.J.: Port-Hamiltonian systems: Network modeling and control of nonlinear physical systems. In: Schlacher, K., Irschnik, H. (eds.) Advanced Dynamics and Control of Structures and Machines. CISM courses and lectures, vol. 444, pp. 127–167. Springer, Vienna (2004). https://doi.org/10.1007/978-3-7091-2774-2_9

Jeltsema, D., van der Schaft, A.J.: Port-Hamiltonian systems theory: an introductory overview. Found. Trends Syst. Control 1(2–3), 173–387 (2014). https://doi.org/10.1561/2600000002

van der Schaft, A.J.: Port-Hamiltonian differential-algebraic systems. In: Ilchmann, A., Reis, T. (eds.) Surveys in Differential-Algebraic Equations I. Differential-Algebraic Equations Forum, pp. 173–226. Springer, Berlin (2013). https://doi.org/10.1007/978-3-642-34928-7_5

Maschke, B., van der Schaft, A.J.: Generalized port-Hamiltonian DAE systems. Syst. Control Lett. 121, 31–37 (2018). https://doi.org/10.1016/j.sysconle.2018.09.008

Beattie, C., Mehrmann, V., Xu, H., Zwart, H.: Linear port-hamiltonian descriptor systems. Math. Control Signals Syst. 30(4), 17 (2018). https://doi.org/10.1007/s00498-018-0223-3

Gernandt, H., Haller, F.E., Reis, T.: A linear relation approach to port-Hamiltonian differential-algebraic equations. SIAM J. Matrix Anal. Appl. 42(2), 1011–1044 (2020). https://doi.org/10.1137/20M1371166

Maschke, B., van der Schaft, A.J.: Dirac and Lagrange algebraic constraints in nonlinear port-Hamiltonian systems. Vietnam J. Math. 48, 929–939 (2020). https://doi.org/10.1007/s10013-020-00419-x

Lions, P.L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979). Accessed 12 May 2022

Alt, H.W.: Linear Functional Analysis. Universitext. Springer, London (2016)

Kunkel, P., Mehrmann, V.: Differential-Algebraic Equations. Analysis and Numerical Solution. EMS Publishing House, Zürich, Switzerland (2006). https://doi.org/10.4171/017

Lamour, R., März, R., Tischendorf, C.: Differential Algebraic Equations: A Projector Based Analysis. Differential-Algebraic Equations Forum, vol. 1. Springer, Heidelberg (2013)

Mehl, C., Mehrmann, V., Wojtylak, M.: Linear algebra properties of dissipative Hamiltonian descriptor systems. SIAM J. Matrix Anal. Appl. 39(3), 1489–1519 (2018). https://doi.org/10.1137/18M1164275

Günther, M., Bartel, A., Jacob, B., Reis, T.: Dynamic iteration schemes and port-Hamiltonian formulation in coupled DAE circuit simulation. Int. J. Circuit Theory Appl. 49, 430–452 (2021). https://doi.org/10.1002/cta.2870

Cervera, J., van der Schaft, A.J., Baños, A.: Interconnection of port-Hamiltonian systems and composition of Dirac structures. Automatica 43(2), 212–225 (2007)

Burrage, K.: Parallel and Sequential Methods for Ordinary Differential Equations. Clarendon Press, Oxford (1995)

Barbu, V.: Nonlinear Differential Equations of Monotone Types in Banach Spaces. Springer Monographs in Mathematics. Springer, New York (2010). https://doi.org/10.1007/978-1-4419-5542-5

Gugercin, S., Polyuga, R.V., Beattie, C., van der Schaft, A.: Structure-preserving tangential interpolation for model reduction of port-Hamiltonian systems. Autom. J. IFAC 48(9), 1963–1974 (2012)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bartel, A., Günther, M., Jacob, B. et al. Operator splitting based dynamic iteration for linear differential-algebraic port-Hamiltonian systems. Numer. Math. 155, 1–34 (2023). https://doi.org/10.1007/s00211-023-01369-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-023-01369-5