Abstract

This work is concerned with linear matrix equations that arise from the space-time discretization of time-dependent linear partial differential equations (PDEs). Such matrix equations have been considered, for example, in the context of parallel-in-time integration leading to a class of algorithms called ParaDiag. We develop and analyze two novel approaches for the numerical solution of such equations. Our first approach is based on the observation that the modification of these equations performed by ParaDiag in order to solve them in parallel has low rank. Building upon previous work on low-rank updates of matrix equations, this allows us to make use of tensorized Krylov subspace methods to account for the modification. Our second approach is based on interpolating the solution of the matrix equation from the solutions of several modifications. Both approaches avoid the use of iterative refinement needed by ParaDiag and related space-time approaches in order to attain good accuracy. In turn, our new algorithms have the potential to outperform, sometimes significantly, existing methods. This potential is demonstrated for several different types of PDEs.

Similar content being viewed by others

1 Introduction

This paper is concerned with the efficient numerical solution of matrix equations that arise from implicit time integration of large systems of ordinary differential equations (ODEs). More specifically, we treat generalized Sylvester equations of the form

where \(F\in {\mathbb {R}}^{n\times n_t}\) and the columns of the unknown matrix \(X\in {\mathbb {R}}^{n\times n_t}\) contain the approximate solution of the ODE at \(n_t\) time steps with constant step size. The matrices \(A,M\in {\mathbb {R}}^{n\times n}\) are large and sparse, as they arise, for example, from the finite difference/element spatial discretization of a time-dependent partial differential equation (PDE). The matrices \(B_1,B_2\in {\mathbb {R}}^{n_t\times n_t}\) are banded Toeplitz matrices and contain the coefficients of the time integrator. We assume throughout this work that M and \(B_2\) are invertible.

Vectorizing (1) and using the Kronecker product \(\otimes \), we obtain the equivalent \(nn_t\times nn_t\) linear system

where \({\textbf{x}} = \textrm{vec}(X)\) and \({\textbf{f}} = \textrm{vec}(F)\). While the theoretical properties of generalized Sylvester equations are well understood and various numerical solution strategies have been developed [6, 46], the structure of the matrix coefficients in (1) comes with particular challenges that are addressed in this work.

Illustrative example. To illustrate (1) and motivate our developments, we consider the ODE

with \({\textbf{u}}(t)\in {\mathbb {R}}^n\). When such an ODE arises from the finite element discretization of a linear time-dependent PDE then A and M correspond to the stiffness matrix and the mass matrix, respectively, and M is symmetric positive definite. When using a finite difference discretization, we have \(M = I_n\). The implicit Euler method with step size \(\Delta t = T / n_t\) applied to (3) requires the successive solution of the linear systems

where \({\textbf{x}}_k \approx {\textbf{u}}(k\cdot \Delta t)\). Stacking these equations yields an \(nn_t\times nn_t\) linear system of the form

The system matrix can be rewritten in the form \(B_2\otimes A +B_1\otimes M\) from (2) by setting

In turn, the linear system can be rephrased as a matrix equation of the form (1).

Taking a closer look at (2), we identify the factors that have a role in determining such a peculiar structure. The Kronecker structure of the coefficient matrix is due to the time-independence of the coefficients of the linear ODE (3). The matrices \(B_1,B_2\) are Toeplitz because of the use of a constant step size. Indeed, this property is maintained when replacing implicit Euler by implicit multistep methods [27] such as BDF (backward differentiation formula). When using Runge–Kutta integrators [27], we still get banded Toeplitz matrices \(B_1,B_2\) but – as we will see in Sect. 4 – we have to embed A, M into larger matrices in order to arrive at a matrix equation of the form (1).

Existing work. Classical time integration approaches for ODEs proceed by solving the discretized equations, like (3), sequentially first for \(k =1\), then for \(k = 2\), and so on. This limits the potential for parallelizing the implementation of the integrator. The Parareal algorithm [22, 33] avoids this limitation by combining, iteratively, coarse (cheap) time steps that are performed sequentially with fine (expensive) time steps that are performed in parallel. There have been many developments and modifications of Parareal during the last two decades; we refer to [15, 21] for overviews and a historical perspective. Our work relates to the so called ParaDiag algorithms [20], which proceed somewhat differently from Parareal by explicitly exploiting the structure of the matrix Eq. (1). In passing, we mention that linear ODEs with constant coefficients, like the model problem (3), can be turned into an independent set of linear systems by combining an integral transform, like the Laplace transform, with quadrature for the numerical evaluation of the inverse transform; see [15, Sec. 5.5] and the references therein.

The basic idea of ParaDiag is simple: If \(B_2^{-1} B_1\) can be diagonalized by a similarity transformation then (1) decouples into \(n_t\) linear systems, which can be solved in parallel. The pitfall of this approach is that the most straightforward choice of time steps, constant time steps, leads to non-diagonalizable matrices; indeed, the matrix \(B_2^{-1} B_1\) in (5) is one big Jordan block. When choosing a geometrically increasing sequence of time steps, such as \(\Delta t_j= \Delta t_1 \tau ^{j-1}\) for some \(\tau >1\) then \(B_2^{-1}B_1\) has mutually distinct eigenvalues and thus becomes diagonalizable [35]. However, even when using a second order method in time, e.g., the Crank-Nicolson method, the condition number of the transformation matrix grows rapidly as \(n_t\) increases, which severely limits the number of time steps [18, 19].

A second class of ParaDiag algorithms allows for uniform time steps and perturbs \(B_1, B_2\) to enforce diagonalizability. More specifically, the perturbed system matrix takes the form

where \(C_1^{(\alpha )},C_2^{(\alpha )}\) are Strang-type [9, 38, 48] \(\alpha \)-circulant matricesFootnote 1 constructed from the Toeplitz matrices \(B_1,B_2\). This matrix can be used within a stationary iteration \(P_{\alpha }{\textbf{x}}^{(j)}=(P_{\alpha }-B_2\otimes A - B_1\otimes M){\textbf{x}}^{(j-1)}+{\textbf{f}}\) [34] or as a preconditioner for GMRES [10, 37] applied to (2). In the solution of linear systems with \(P_{\alpha }\) one takes advantage of the fact that the matrices \(C_1^{(\alpha )},C_2^{(\alpha )}\) are simultaneously diagonalized by a scaled Fourier transform:

where \(\Omega \in {\mathbb {C}}^{n_t\times n_t}\) is the discrete Fourier transform matrix and \(D_{\alpha }=\textrm{diag}(1, \alpha ^{\frac{1}{n_t}},\dots , \alpha ^{\frac{n_t-1}{n_t}})\). In turn, \( P_{\alpha }\) is block diagonalized:

Using the fast Fourier transform (FFT), this allows us to compute the solution of a linear system, with \(P_{\alpha }\) by combining FFT with the (embarrassingly parallel) solution of \(n_t\) linear systems with the matrices \((\lambda _{1,j}M +\lambda _{2,j}A\), \(j = 1,\ldots ,n_t\). This procedure, rephrased for the matrix equation corresponding to \(P_{\alpha }{\textbf{x}}={\textbf{f}}\), is summarized in Algorithm 1. Note that, even though the original ODE (3) features real matrices, Algorithm 1 requires the solution of complex linear systems, which is more expensive (often by a factor 2–4) than solving real linear systems. This disadvantage of having to pass to complex arithmetic is shared by all diagonalization based methods discussed in this work.

The use of the preconditioner \(P_\alpha \) can dramatically accelerate the convergence of an iterative solver for (2). This has been observed for parabolic problems in [37] when using \(P_{\alpha }\) with \(\alpha =\pm 1\) as a preconditioner in GMRES. In [20], wave propagation problems (integrated in time with a leap-frog finite difference scheme) have been successfully treated by choosing a relatively small value for \(0<\alpha <1\). Note, however, that \(\alpha \) cannot be chosen arbitrarily small because otherwise the condition number of the matrix \(D_\alpha \) (given by \(\alpha ^{(1-n_t)/n_t} \approx 1/\alpha \)) explodes and, in turn, roundoff error impedes convergence. In practice, this means that the choice of \(\alpha \) needs to strike a compromise and there is no choice of \(\alpha \) that yields sudden convergence. In turn, several iterations are needed to attain good accuracy, which adds significant computational overhead and communication cost because every iteration calls Algorithm 1 once.

New contributions. In this paper, we propose two novel strategies that avoid the overhead incurred by iterative procedures for solving (2).

Our first method, presented in Sect. 2, exploits the trivial observation that \(C_1^{(\alpha )} - B_1\) and \(C_2^{(\alpha )} - B_2\) have very low rank. Thus, the Sylvester equation addressed by Algorithm 1 can be viewed as a low-rank modification of the original Eq. (1), which allows us to apply the low-rank update from [30] for linear matrix equations. This update corrects the output of Algorithm 1 by solving a Sylvester equation with the same coefficients as (1) but with a different right-hand side that has low rank. The main novelty of Sect. 2 is in the analysis of the low-rank structure of the solution to this equation and the development of efficient algorithms.

Our second method, presented in Sect. 3, takes an interpolation point of view: Whenever we solve the linear system \(P_{\alpha }{\textbf{x}}={\textbf{f}}\) we are actually evaluating a vector-valued function \({\textbf{x}}(\alpha )\), depending on the complex parameter \(\alpha \), such that \({\textbf{x}}(0)\) corresponds to the solution of (2). More precisely, this function can be efficiently and accurately evaluated on a scaled unit circle. We suggest to construct an approximation for \({\textbf{x}}(0)\) via a linear combination of \({\textbf{x}}(\alpha )\) evaluated at all dth order roots of a complex number for some small value of d. This strategy is highly parallelizable as it requires to solve d completely independent linear systems with coefficient matrices of the form \(P_{\alpha }\). Moreover, it applies also to the cases where the matrices \(\delta B_j^{(\alpha )}\) are not low-rank, for example, when a time fractional differential operator is involved.

Section 4 extends our framework to implicit Runge–Kutta time integrators.

Section 5 is dedicated to a range of numerical experiments that highlight the advantages of our method for a broad range of situations.

2 Low-rank update approach

For a lower triangular banded Toeplitz matrix B, Strang’s \(\alpha \)-circulant preconditioner [38] is obtained from reflecting the lower band to the top right corner and multiplying it by \(\alpha \):

In particular, this implies that the rank of this modification is bounded by the width of the lower bandwidth. Applied to the time stepping matrices \(B_1\), \(B_2\), we obtain that

for certain low-rank matrices \(\delta B_1^{(\alpha )},\delta B_2^{(\alpha )}\). For instance, for the implicit Euler method (see (5)) we have \(\delta B_1^{(\alpha )}=-\frac{\alpha }{\Delta t}{\textbf{e}}_1 {\textbf{e}}_{n_t}^T\), where \({\textbf{e}}_j\) denoting the jth unit vector of length \(n_t\), and \(\delta B_2^{(\alpha )}=0\). Inspired by [30] we write the solution of (1) as \(X=X_0+\delta X\), where

After Eq. (7) is solved via Algorithm 1, the correction Eq. (8) becomes a generalized Sylvester equation in \(\delta X\) that has the same coefficients as (1) but a different right-hand side of rank at most \(\hbox {rank}(\delta B_1^{(\alpha )})+\hbox {rank}(\delta B_2^{(\alpha )})\). This remarkable property enables us to make use of low-rank solvers for linear matrix equations (see [46] for an overview) in order to approximate \(\delta X\). Note that, the choice of \(\alpha \ne 0\) has no influence on this strategy; for this reason we always choose \(\alpha =1\) when using the low-rank update approach. The resulting procedure is summarized in Algorithm 2.

Remark 1

It is not uncommon that the right-hand side matrix F of (1) has (numerically) low rank because, for example, the inhomogeneity \({\textbf{f}}\) of the ODE (3) is constant or varies smoothly with respect to t. In such a situation, a low-rank solver can be applied directly to (1) and the following approach has been suggested by Palitta [39]: A block Krylov subspace method is used for reducing the spatial dimension combined with an application of the Shermann–Morrison–Woodbury formula to the resulting reduced equation. Our low-rank approach has the advantage that it is agnostic to properties of F, at the expense of having to solve (7). In principle, Palitta’s method can be applied to solve the correction Eq. (8) but we found it more efficient for large \(n_t\) to use a two-sided approach that reduces the temporal dimension as well.

2.1 Solution of correction equation

In the following, we discuss the application of low-rank solvers to the correction Eq. (8). In view of the (assumed) invertibility of M and \(B_2\) we replace (8) by the following Sylvester matrix equation:

where

and \(\hbox {rank}(C)\le k:=\hbox {rank}(\delta B_1^{(1)})+\hbox {rank}(\delta B_2^{(1)})\ll \min \{n,n_t\}\). For given factorizations \(\delta B_1^{(1)}=U_1V_1^*\) and \(\delta B_2^{(1)}=U_2V_2^*\), a low-rank factorization \(C=UV^*\), with \(U\in {\mathbb {R}}^{n\times k}\) and \(V\in {\mathbb {R}}^{n_t\times k}\), is obtained by setting

Alternatively, when M is symmetric positive definite and its Cholesky factorization \(M=LL^T\) is available, a possible symmetry of A is preserved by considering

with  ,

,  ,

,  , and

, and  . In analogy to (10) an explicit rank-k factorization of

. In analogy to (10) an explicit rank-k factorization of  can be derived. In the rest of this section we focus on analyzing and solving (9) but we remark that our findings extend to (11) with minor modifications.

can be derived. In the rest of this section we focus on analyzing and solving (9) but we remark that our findings extend to (11) with minor modifications.

Various numerical methods have been developed to treat large-scale Sylvester equations with low-rank right-hand side, including the Alternating Direction Implicit method (ADI) [14, 40] and the Rational Krylov Subspace Method (RKSM) [5, 26, 42]. These methods compute approximate solutions of the form \(\widetilde{\delta X}= WYZ^*\), where W and Z are bases of (block) rational Krylov subspaces generated with the matrices \({\widetilde{A}},{\widetilde{B}}\) and starting block vectors U and V, respectively. More precisely, given two families \(\mathbf \xi =\{\xi _1,\dots ,\xi _{\ell }\}\), \(\mathbf \psi =\{\psi _1,\dots ,\psi _{\ell }\}\subset {\mathbb {C}}\) of so-called shift parameters, W and Z are chosen as bases of the following subspaces:

When shifts are repeated, the matrix power is increased. For example, when a single shift \(\xi _1\) is repeated \(\ell \) times, one uses \(\textrm{span}\big ((\xi _1I-{\widetilde{A}})^{-1}U,\dots , (\xi _{1}I-{\widetilde{A}})^{-\ell }U\big )\). We refer to the relevant literature [5, 13, 46] for a complete description of the computation of the factors W, Y, Z in these methods.

Let us comment on some implementation aspects of RKSM, which will be the method of choice in our implementation of Algorithm 2, line 4. In RKSM, the middle factor Y in the approximation \(\widetilde{\delta X}= WYZ^*\) is obtained by solving a compressed matrix equation, which does not contribute significantly to the overall computational time as long as the dimensions of the Krylov subspaces remain modest. Computing W and Z requires to solve shifted linear systems with the matrices \({\widetilde{A}},{\widetilde{B}}\); these operations can be performed without forming \(M^{-1}A\) and \(B_2^{-1}B_1\) explicitly, by means of the relations

In particular, we can still leverage properties like sparsity in A and M, or the bandedness of \(B_1,B_2\), when solving shifted linear systems with \({\widetilde{A}},{\widetilde{B}}\). Our implementation of RKSM relies on the Matlab toolbox rk-toolbox [7] for generating the factors W, Z. Finally and importantly, the selection of the shift parameters \(\mathbf \xi ,\mathbf \psi \) strongly affects the convergence of RKSM and we discuss suitable choices in the following section.

2.2 Low-rank approximability of the correction equation

In this section, we analyze the numerical low-rank structure of the solution \(\delta X\) to the correction Eq. (9), which also yields suitable choices of shift parameters in RKSM discussed above.

By the Eckart-Young-Mirsky theorem, \(\delta X\) admits good low-rank approximations if its singular values \(\sigma _j(\delta X)\) decay rapidly. Several works, including [1, 2, 41, 43], have established singular value decay bounds for solutions of Sylvester matrix equations. In particular, the framework presented in [4] applies to (9) and shows that [4, P. 323]

where \({\mathcal {R}}_{j,j}\) denotes the set of rational functions having numerator and denominator of degree at most j. Choosing a good candidate for the rational function r is difficult, especially when \({\widetilde{A}}\) and/or \({\widetilde{B}}\) are non-normal. To circumvent this difficulty, we loosen the bound (13) by considering the numerical range \({\mathcal {W}}({\widetilde{A}}) = \{ x^* A x:\Vert x\Vert _2 = 1\} \subset {\mathbb {C}}\). A result by Crouzeix and Palencia [11] states that

where the constant \(1+\sqrt{2}\) can be omitted when A is normal and the set \(E\supseteq {\mathcal {W}}({\widetilde{A}})\) is chosen to feature a simple geometry, for which the eventual rational approximation problem admits explicit solutions. Similarly, one has

for any set \(F \subset {\mathbb {C}}\) with \(F\supseteq {\mathcal {W}}(-{\widetilde{B}})\). Inserting these inequalities into (13), we arrive at

where the exponent a satisfies \(a = 0\) if both \({\widetilde{A}},{\widetilde{B}}\) are normal, \(a = 1\) if one of the two matrices is normal, and \(a = 2\) otherwise.

The quantity \(Z_j(E,F)\) and the related optimization problem are known in the literature as the Zolotarev number and Zolotarev problem for the sets E and F [4, 52]. Studying the Zolotarev problem is not only important from a theoretical but also from a computational point of view because identifying an extremal rational function \(r_j^*(z)\) for \(Z_j(E,F)\) allows for the fast solution of (9). Indeed, running j steps of ADI or RKSM with shift parameters given by the zeros and poles of \(r_j^*(z)\) ensures a convergence rate not slower than the decay rate of \(Z_j(E,F)\) [3, 32, 50]. A major complication of this construction is the need for choosing the sets E, F. On the one hand, it is desirable to choose E, F such that they enclose the numerical ranges of \({\widetilde{A}},{\widetilde{B}}\) as tightly as possible. On the other hand, explicit solutions for \(Z_j(E,F)\) are known only for a few selected geometries of E and F, including: two disjoint real intervals [52], the two connected components of the complement of an annulus [25], two disjoint discs [47]. Therefore, one usually encloses the numerical range by a disc or an interval (in the case of Hermitian matrices). In Sects. 2.2.1 and 2.2.2 below, we discuss two geometries in more detail: the case of two discs and the case of a disc and an interval. These enclosures will be used for generating the shift parameters in Algorithm 2 for most of our numerical examples.

2.2.1 Solution of the Zolotarev problem for two disjoint discs

Letting \({\mathbb {B}}(x,\rho )\) denote the closed disc with center \(x\in {\mathbb {C}}\) and radius \(\rho >0\), we consider the Zolotarev problem for

Starke [47] addressed the case \(x_E, x_F \in {\mathbb {R}}\) by explicitly constructing a rational function \(r_1^*(z)=\frac{z-q^*}{z-p^*}\) with \(q^*\in {\hspace{0.0pt}E}^{\textrm{o}} \), \(p^*\in {\hspace{0.0pt}F}^{\textrm{o}} \), and \(|r_1^{*}(z)|\) being constant on \(\partial E\) and on \(\partial F\). In view of the (generalized) near-circularity criterion [47, Theorem 3.1], this implies the optimality of the rational function \([r_1^*(z)]^j\) for \(Z_j(E,F)\), \(j\ge 1\).

The general case of two disjoint discs (with \(x_E,x_F \in {\mathbb {C}}\) not necessarily on the real line) can be derived from Starke’s result by relying on the invariance property of Zolotarev problems under Moebius transformations; see also [44, Example VIII.4.2]. For completeness and convenience of the reader, we provide the complete transformation that maps E, F to the complement of an annulus. More precisely, a Moebius map \(\Phi \) is constructed such that \(\Phi (F)\) is a closed disc centered at the origin and \(\Phi (E)\) is the complement of a larger open disc centered at the origin. We compose \(\Phi \) from three simpler Moebius maps \(\Phi _j\), \(j=1,2,3\), as follows (see also Fig. 1):

-

\(\Phi _1(z)= \frac{z-x_E}{\rho _E}\) maps E to the unit disc while F remains a disjoint disc,

-

\(\Phi _2(z)= z^{-1}\) maps \(\Phi _1(E)\) to the exterior of the unit disc and \(\Phi _1(F)\) to a disc enclosed by the unit circle,

-

\(\Phi _3(z)=\frac{z-\alpha }{z-\beta }\) maps \(\Phi _2(\Phi _1(F))\) to the exterior of a disc with center 0 and \(\Phi _2(\Phi _1(E))\) to a disc centered at 0 and enclosed by \(\partial \Phi _3(\Phi _2(\Phi _1(F)))\).

The parameters \(\alpha , \beta \in {\mathbb {C}}\) are chosen as the so-called common inverse points for the circles \(\partial \Phi _2(\Phi _1(E))=\partial {\mathbb {B}}(0,1)\) and \(\partial \Phi _2(\Phi _1(F))\) [28, Section 4.2]. Setting \( \Phi _2(\Phi _1(F))=:{\mathbb {B}}({\widetilde{x}}_F, \widetilde{\rho }_F)\) the values of \(\alpha ,\beta \) from satisfying the two equations

Clearly, one solution is given by

Observe that, \(\Phi _1(F)\) is a disc with center \(\Phi _1(x_F)\ne 0\) and radius \(\rho _F/\rho _E\). In particular, \(\Phi _1(F)\) attains its maximum and minimum modulus at the elements

Now, \(\Phi _2(z)=z^{-1}\) maps \(x_1\) and \(x_2\) into the minimum and maximum modulus elements of \({\mathbb {B}}({\widetilde{x}}_F, \widetilde{\rho }_F)\), respectively. This implies that \({\widetilde{x}}_F,\widetilde{\rho }_F\) take the following values:

Together with (14), this allows us to compute \(\alpha ,\beta \) explicitly and evaluate \(\Phi \). The complete expressions for \(\Phi \) and \(\Phi ^{-1}\) read as follows:

By the near-circularity criterion [47, Theorem 3.1], an extremal rational function for the complement of an annulus centered at 0 is \(z^{j}\), having the only pole \(\infty \) and the only zero at 0. Thus, an optimal rational function for the original problem is given by \(r_j^*(z)=\left( \frac{z-\Phi ^{-1}(0)}{z-\Phi ^{-1}(\infty )}\right) ^{j}\). The pole \(p^*\) and zero \(q^*\) are given by

Since \(\Phi \) maps the boundaries of E and F to the boundaries of the annulus, it follows that the Zolotarev numbers are given by

2.2.2 Quasi-optimal solution of the Zolotarev problem for a disc and an interval

Let us now consider the situation of a disc and a disjoint interval:

Similarly to the previous section, we build a Moebius transformation that recasts \(Z_j(E,F)\) as a Zolotarev problem for which a (quasi-optimal) solution is known. More specifically, we let \(\Theta =\Theta _2\circ \Theta _1\) where (see also Fig. 2):

-

\(\Theta _1(z)=\Phi _1(z)\) maps E to the unit disc while F remains a real disjoint interval,

-

\(\Theta _2(z)=\frac{z+1}{z-1}\) maps \(\Theta _1(E)\) to the closed left half of the complex plane and \(\Theta _1(F)\) to an interval \([{\tilde{a}}, {\tilde{b}}]\) of positive real numbers.

A straightforward calculation yields explicit expressions for \(\Theta \) and its inverse:

By the maximum modulus principle, it suffices to consider the boundary of \(\Theta (E)\) and thus the transformed Zolotarev problem takes the form

Let \({\tilde{r}}_j\) denote the extremal rational function for the Zolotarev problem \(Z_j( [-{\tilde{b}}, -{\tilde{a}}], [{\tilde{a}}, {\tilde{b}}])\), that is, the imaginary axis is replaced by \([-{\tilde{b}}, -{\tilde{a}}]\). This function has been extensively studied in the literature; see [4, Sec. 3.1] and the references therein. In particular, it holds that

where the poles \(\tilde{\psi }_{j,i} \in [{\tilde{a}},{\tilde{b}}]\), \(i=1,\dots , j\), can be expressed in closed form via elliptic integrals. Note that \({\tilde{r}}_j(-z) = 1/{\tilde{r}}_j(z)\) and the maximum absolute value of \({\tilde{r}}_j\) is 1, which is assumed throughout the imaginary axis. By [4, Corollary 3.2], it holds that \(Z_j( [-{\tilde{b}}, -{\tilde{a}}], [{\tilde{a}}, {\tilde{b}}]) \le 4 \eta ^{-2j}\) with \(\eta =\exp \left( \dfrac{\pi ^2}{2\log (4{\tilde{b}}/{\tilde{a}})}\right) \). Inserting \({\tilde{r}}_j\) into (17) gives the following upper bound for \(Z_j({\textbf{i}}{\mathbb {R}}, [{\tilde{a}}, {\tilde{b}}])\):

It has been shown in [12, 31] that \({\tilde{r}}_j\) satisfies the necessary optimality conditions for (17) and that it is within a factor 2 of the optimal solution: \(\delta _j \le 2 Z_j({\textbf{i}}{\mathbb {R}}, [{\tilde{a}}, {\tilde{b}}])\). To the best of our knowledge, it is still an open question whether \({\tilde{r}}_j\) is, in fact, an extremal rational function for (17). In summary, we have

with a quasi-optimal solution for \(Z_j(E,F)\) given by

The poles of the rational function (19) are not nested, that is, the poles of \({\widehat{r}}_j(z)\) are different from the ones of \({\widehat{r}}_{j+1}(z)\). This is a disadvantage when using the poles of \({\widehat{r}}_j(z)\) in an iterative method (such as ADI or RKSM) where usually the number of steps (the parameter j) is not known a priori. Choosing j adaptively would require to restart the method from scratch whenever j is changed. In such a situation it is preferable to use a sequence of rational functions with nested poles. The method of equidistributed sequences (EDS) [12, Section 4] allows the generation of a nested sequence of poles \(\xi _i\), \(i=1,2,\dots \), such that the rational functions \(\prod _{i=1}^j\tfrac{z+\xi _i}{z-\xi _i}\) have asymptotically optimal convergence rates for \(Z_j([-{\tilde{b}}, -{\tilde{a}}],[{\tilde{a}},{\tilde{b}}])\) as j increases, see also [36, Section 3.5]. In analogy with (20), the nested asymptotically optimal zeros and poles for \(Z_j(E,F)\) are given by \(\Theta ^{-1}(-\xi _i)\) and \(\Theta ^{-1}(\xi _i)\), respectively. In the following example and more generally in Sect. 5, we demonstrate that the shift parameters computed by means of EDS yield convergence rates very close to the ones obtained with the optimal solution of \(Z_j([-{\tilde{b}}, -{\tilde{a}}],[{\tilde{a}},{\tilde{b}}])\).

Example 1

Let us consider the following 1D heat equation from [16, Section 7.1]:

with \(w=0.05\) and \(h=100\). Discretizing in space with second order central differences and in time with the implicit Euler method on a uniform \(n\times n_t\) grid yields a matrix equation of the form \(AX+XB_1^T=F\) with \(A \in {\mathbb {R}}^{n\times n}\) and \(B_1 \in {\mathbb {R}}^{n_t\times n_t}\) are the matrices of centered and backward differences with respect to the x and t variables. For this example we set \(n=n_t=1000\) and rescale the equation by multiplying both sides with \(\Delta t\). We focus on solving the correction equation

where \(X_0\) satisfies \(AX_0+X_0(C_1^{(1)})^T= F\). For this purpose, we use RKSM based on one of the rational functions constructed above. The poles and zeros of the rational function determine the shifts in the rational Krylov subspaces for A and \(B_1\), respectively, see (12). We consider the following choices:

- 2DISCS:

-

single shifts \(p^*\) and \(q^*\) resulting from the Zolotarev problems with 2 discs, see (15);

- ZOL-DI:

-

optimal shifts resulting from the Zolotarev problem with a disc and an interval, see (20);

- EDS:

-

nested, asymptotically optimal shifts resulting from the Zolotarev problem with a disc and an interval; the computation of equidistributed shifts is described in [36, Section 3.5].

- EK:

-

alternating shifts 0 and \(\infty \), corresponding to the extended Krylov method [45].

The numerical ranges of the matrices \(A,B_1\), which determine the sets E, F, are known analytically: \({\mathcal {W}}(B_1)=\{z:\ |z+1|\le \cos (\pi /(n_t+1))\}\) and \({\mathcal {W}}(A)=[\lambda _{\min },\lambda _{\max }]\) with \(\lambda _{\min }=(2 - 2 \cos (\pi / (n+1)))\frac{(n+1)^2}{n_t}\) and \(\lambda _{\max }=(2 - 2 \cos (n \pi / (n+1)))\frac{(n+1)^2}{n_t}\). We can directly use these sets for generating the shift parameters in EDS and ZOL-DI, while 2DISCS uses the (suboptimal) inclusion \({\mathcal {W}}(A)\subset \{z:\ |z-(\lambda _{\max }+\lambda _{\min })/2|\le (\lambda _{\max }-\lambda _{\min })/2\}\).

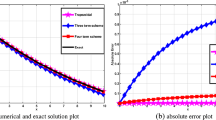

Letting \(\widetilde{\delta X}\) denote the approximate solution produced by one of the choices above, Fig. 3 reports the relative error history \(\Vert \widetilde{\delta X}-\delta X\Vert _2/\Vert \delta X\Vert _2\), where \(\delta X\) is a benchmark solution computed via the lyap command of Matlab, as the dimension of the rational Krylov subspaces increases. Finally, we report the relative error associated with the truncated singular values decomposition of \(\delta X\) to show the best error attainable. The results are shown in Fig. 3 together with the decay bounds (16) (Decay 2DISCS) and (19) (Decay ZOL-DI). The observed convergence of the residuals clearly highlights the advantage of choosing shifts via the Zolotarev problem with a disc and an interval, either optimally (ZOL-DI) or asymptotically optimally (EDS).

2.3 Cost analysis of Algorithm 2

In order to compare with our second approach in the next section, we briefly discuss the cost of Algorithm 2 as well as the potential for carrying out (embarrassingly) parallel computations. Let \(\mathcal {C}_{\textrm{sys}}\) denote the maximum cost of solving a lineaear system involving a linear combinationr system with a linear combination of the matrices A and M, for a constant number of right-hand sides, respectively; note that, \({\mathcal {C}}_{\textrm{sys}}\) is also an upper bound for the cost of solving with A or M, individually. The cost of a matrix–vector operation with the banded matrices \(B_1,B_2\) is \({\mathcal {O}}(n_t)\), which is negligible. We may assume \(k = {\mathcal {O}}(1)\), which holds for all time discretization schemes discussed in this work. We assume that RKSM converges after \(\ell \) iterations to fixed accuracy and that the shift parameters are all different, as it happens, for instance, in EDS. If A is symmetric positive definite and its condition number grows polynomially with n (as, e.g., for finite difference or finite element discretizations of elliptic operators) then the bound (19) predicts \(\ell = {\mathcal {O}}(\log n)\). RKSM needs to solve \({\mathcal {O}}( \ell )\) linear systems at cost \(\mathcal {C}_{\textrm{sys}}\) each for generating the left factor W of the approximate solution \(\delta X \approx WYZ^*\). The (re)orthogonalization of the bases W, Z accounts for another \({\mathcal {O}}((n+n_t)\ell ^2 )\) operations. As we expect \(\ell \) to be small, the cost for computing Y as the solution of the compressed equation is negligible. When \(M\ne I_n\), computing the low-rank factorization at line 3 requires an additional constant number of linear systems solves. By adding the cost of Algorithm 1 called in line 2 of Algorithm 2 we obtain the overall complexity

In terms of parallelization, note that the linear systems solves of Algorithm 1 are embarrassingly parallel on up to \(n_t\) cores. In this situation, the cost reduces to \( {\mathcal {O}}(\ell \mathcal {C}_{\textrm{sys}}+ (n+n_t)\ell ^2+nn_t\log (n_t)) \) for each of the \(n_t\) cores. A further reduction can be obtained by making use of parallel implementations of RKSM [8] and FFT.

3 An evaluation interpolation approach

As pointed out in the introduction, existing ParaDiag algorithms replace (1) with

for fixed \(\alpha \), where \(C_1^{(\alpha )},C_2^{(\alpha )}\) are \(\alpha \)-cyclic matrices. Solving Eq. (23) can be carried out efficiently with Algorithm 1 and serves as a preconditioner in a Krylov method or a stationary iteration.

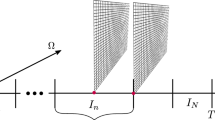

In this section we propose a new method that approximates the solution of (1) by combining solutions of the matrix Eq. (23) for different values of \(\alpha \). Given \(\rho >0\), we let \({\mathcal {X}}_{\rho }\) denote the matrix-valued function that maps \(z\in {\mathbb {C}}\) to the solution \(X = {\mathcal {X}}_{\rho }(z)\) of (23) for \(\alpha = \rho z\). In particular \({\mathcal {X}}_{\rho }(0)\) is the desired solution of (1). In the following, we assume that \({\mathcal {X}}_\rho (z)\) is analytic in the disc \(\{z:\ |z|<\widehat{\rho }\}\) for some \(\widehat{\rho }>1\); see Example 2 below for an illustration of this assumption.

Our approach proceeds by interpolating \({\mathcal {X}}_\rho (z)\) at the dth roots of unity for some integer d, \(\omega _j=\exp (2\pi {\textbf{i}} j/d)\) for \(j=0,\dots ,d-1\), with the interpolation data \({\mathcal {X}}_\rho (\omega _j)\) computed by Algorithm 1. It is well known (see, e.g., [49]) that the coefficients of the interpolating polynomial \(\widetilde{{\mathcal {X}}}^{(d)}(z)=\sum _{j=0}^{d-1}{\widetilde{X}}_j^{(d)} z^j\) can be obtained from the interpolation data by applying the inverse Fourier transform. In particular, we obtain

The described procedure is summarized in Algorithm 3. The analysis of the approximation error for such a trigonometric interpolation has a well established theory. The following result, retrieved by applying Theorem 12.1 from [49] to each entry, shows that \({\widetilde{X}}_0^{(d)}\) converges exponentially fast to the solution of (1) as d increases with the convergence rate depending on the radius of analyticity \(\widehat{\rho }\).

Theorem 1

Let \({\mathcal {X}}_\rho (z)\) be analytic on \(\{z:\ |z|<\widehat{\rho }\}\) with \(\widehat{\rho }>1\) and choose \(R\in (1,\widehat{\rho })\). Then the following holds for the entries of the approximation \({\widetilde{X}}_0^{(d)}\) returned by Algorithm 3:

We remark that it is possible to implement an adaptive doubling strategy for the choice of the parameter d. Whenever the accuracy of \({\widetilde{X}}_0^{(d)}\) is unsatisfactory, we can consider the computation of \({\widetilde{X}}_0^{(2d)}\). Since a dth root of the unity is also a 2dth root of the unity, this has the advantage that half of the evaluations of \({\mathcal {X}}_\rho (z)\) that we need in Algorithm 3 have already been computed at the previous iteration. As stopping criterion, one can verify that the norm of the difference between two consecutive approximations is smaller than a certain threshold. However, in all numerical experiments considered in this work we observe that the choices \(d=2,3\) already provide sufficient accuracy and there is little need for an adaptive choice for d.

Example 2

Let us consider the matrix equation \(AX+XB_1^T=F\) arising from the 1D heat equation of Example 1 with \(n=n_t\). Then \(C_1^{(\rho z)} = B_1 - \rho z {\textbf{e}}_1 {\textbf{e}}_{n}^T\) with the matrix \(B_1\) from (5) multiplied with \(\Delta t\). The eigendecomposition of \(C_1^{(\rho z)}\) is given by

where \(\zeta =\exp (2{\textbf{i}} \pi /n))\) and \(\Omega \) is the discrete Fourier transform. In particular, the eigenvalues of \(C_1^{(\rho z)}\) are contained in a disc with center 1 and radius \(|\rho z|^{1/n}\). Since A is symmetric positive definite, the spectra of A and \(-C_1^{(\rho z)}\) stay separated as long as \(|z| < \rho ^{-\frac{1}{n}} + \lambda _{\min }(A)\). This implies that \(X_\rho (z)\) is well-defined and, as a rational function, also analytic on the open disc with radius \(\rho ^{-\frac{1}{n}} + \lambda _{\min }(A)\) and hence the assumptions of Theorem 1 can be satisfied as long as \(\rho \le 1\). Assuming \(|\rho z|\le 1\), the 2-norm condition number of the eigenvector matrix for \(C_1^{(\rho z)}\) is bounded by \(1/|\rho z|\) and, in turn, \(\Vert {\mathcal {X}}_\rho (z)\Vert _F \le |\rho z \lambda _{\min } |^{-1} \Vert F \Vert _F\). Choosing \(R = 1/\rho \) in Theorem 1, we thus obtain

Hence, we expect to obtain very fast convergence with decreasing \(\rho \) and/or increasing d. Note that \(\rho \) cannot be chosen too small because otherwise roundoff error, due to the ill-conditioned eigenvector matrix of \(X(\rho \omega _j)\), starts interfering with the interpolation error. Both statements are confirmed by numerical experiments. Table 1 shows relative residual \(\textrm{Res}:=\Vert A{\widetilde{X}}_0+ {\widetilde{X}}_0B_1^T-F\Vert _F/ \Vert F\Vert _F\) for the matrix \({\widetilde{X}}_0\) computed by Algorithm 3 obtained with different values of \(n,\rho ,d\). The results indicate that excellent accuracy can already achieved for \(d=2\) when choosing \(\rho \) properly.

3.1 Cost analysis of Algorithm 3

In this section we maintain the assumptions and notation of Sect. 2.3. In particular, \(\mathcal {C}_{\textrm{sys}}\) denotes the cost of solving linear systems with a linear combination of the matrices A and M. The asymptotic complexity of Algorithm 3 is dominated by the d calls to Algorithm 1, that is,

As the calls to Algorithm 1 are embarrassingly parallel, the cost of Algorithm 3 reduces to

for each core if \(dn_t\) cores are available. Compared with the discussion in Sect. 2.3, this indicates that Algorithm 1 is better suited than Algorithm 2 in a massively parallel environment.

4 All-at-once Runge–Kutta formulation

In this section, we explain how the approaches presented in this work extend when the time discretization is performed by implicit Runge–Kutta methods. For this purpose, let us consider the differential problem (3) and assume, without loss of generality, that \(M=I\). The discretization by an Runge–Kutta method with s stages yields

where the coefficients \(b_i\) and \(c_i\) are the nodes and the weights of the Butcher tableau. By considering the Runge–Kutta matrix \(G=(g_{ih})\in {\mathbb {R}}^{s\times s}\) and setting \(K^{(j)}:=[{\textbf{k}}^{(j)}_1|\dots | {\textbf{k}}^{(j)}_s]\in {\mathbb {R}}^{n\times s}\), we can rewrite the second equation as

where \({\textbf{e}}\in {\mathbb {R}}^s\) is the vector of all ones, \({\textbf{e}}_i\in {\mathbb {R}}^s\) is the ith unit vector, and \(F^{(j)}:=[f(t_j+c_1\Delta t)|\dots |f(t_j+c_s\Delta t)]\in {\mathbb {R}}^{n\times s}\). Vectorizing (24) leads to

Therefore, we get an all-at-once linear system \({\mathcal {A}}{\textbf{x}}={\textbf{f}}\) of the form

where \({\textbf{b}}:=\Delta t[b_1,\dots ,b_s]\) is a row vector. Note that we can rewrite the \(2\times 2\) block matrix on the main diagonal as

Finally, by letting  , we can write the coefficient matrix of the linear system (25) as \({\mathcal {A}} =I\otimes {\widehat{A}} + B_1\otimes {\widehat{M}}\) where

, we can write the coefficient matrix of the linear system (25) as \({\mathcal {A}} =I\otimes {\widehat{A}} + B_1\otimes {\widehat{M}}\) where

This implies that (25) is equivalent to a generalized Sylvester equation. In view of ParaDiag techniques, it is natural to consider the matrix \({\mathcal {A}}^{(\alpha )}\), obtained by replacing \(B_1\) in (26) with an \(\alpha \)-cyclic matrix \(C_1^{(\alpha )}\). This leads to the following three extensions of the ParaDiag framework to Runge–Kutta methods.

Preconditioned Krylov method. Employ \({\mathcal {A}}^{(\alpha )}\) as a preconditioner for GMRES.

Evaluation interpolation approach. Employ a solver for linear systems with matrices \({\mathcal {A}}^{(\rho \omega _j)}\) as building block for Algorithm 3.

Low-rank updates. Solve the linear system \({\mathcal {A}}^{(1)}{\textbf{x}}={\textbf{f}}\) and compute the solution of the corresponding update equation, that has right-hand side of rank 1, with RKSM.

In the next sections we describe the details of the solver for linear systems with the matrix \({\mathcal {A}}^{(\alpha )}\) and of the update equation that has to be addressed with RKSM.

4.1 Solving linear systems with \({\mathcal {A}}^{(\alpha )}\)

After the diagonalization of the \(\alpha \)-cyclic matrix \(C_1^{(\alpha )}\), solving linear systems with the matrix \({\mathcal {A}}^{(\alpha )}\) requires to solve — possibly in parallel — \(n_t\) linear systems with a coefficient matrix of the form

Each of this linear systems corresponds to solving a (generalized) Sylvester equation of the form

with \(N_i\in {\mathbb {C}}^{(s+1)\times (s+1)}\), \(i=1,2\). Because s, the number of Runge–Kutta stages, is usually small we can solve (27) efficiently by computing generalized Schur decomposition [24] of \((N_1,N_2)\) via the QZ algorithm [29]. This yields unitary matrices \(Q,Z\in {\mathbb {C}}^{(s+1)\times (s+1)}\) such that

where \(T_1,T_2\in {\mathbb {C}}^{(s+1)\times (s+1)}\) are upper triangular. The correspondingly transformed Eq. (27) takes the form

which can be solved by forward substitution by rewriting the Eq. in block-wise form:

Assuming that \(T_1^{(11)}, T_2^{(11)}\) are scalar quantities we can first retrieve \(Y_1\in {\mathbb {C}}^n\) by solving a linear system with \((A+T_2^{(11}) I)\) and continuing to compute \(Y_2\in {\mathbb {C}}^{n\times s}\) via recursion. The solution X of (27) is obtained as \(YQ^*\).

4.2 Solving the update equation

In the case of Runge–Kutta methods, the low-rank update approach from Sect. 2 requires us to solve the generalized matrix equation

where \(X_0\in {\mathbb {C}}^{n(s+1)\times n_t}\) is the (reshaped) solution of the linear system \({\mathcal {A}}^{(1)}{\textbf{x}}={\textbf{f}}\) described in the previous section. Equation (28) has a right-hand side of rank 1 but it is not immediate to recast it as a Sylvester equation of the form (9) because the coefficient \({\widehat{M}}\) is not invertible. Given a shift parameter \(\sigma \in {\mathbb {C}}\), we add and subtract the quantity \(\sigma {\widehat{A}}\delta XB_1^T\) to (28), obtaining

Hence, if \(\sigma \) is chosen such that both \({\widehat{M}}+\sigma {\widehat{A}}\) and \(I-\sigma B_1^T\) are invertible, we can write an equation of the form \({\widetilde{A}} \delta X+\delta X{\widetilde{B}}^T = UV^*\) equivalent to (28) by setting:

This suggests to use the matrices \({\widetilde{A}},{\widetilde{B}}\) and the vectors \({\textbf{u}}, {\textbf{v}}\) to generate Krylov subspaces for the Eq. (28). Once again, there is no need to form explicitly \({\widetilde{A}},{\widetilde{B}}\) for performing matrix–vector products and solving shifted linear systems; for these operations we rely on the relations:

that only need to manipulate the matrices \({\widehat{A}}, {\widehat{M}}, B_1,I-\sigma B_1\), which are sparse when A is sparse.

In order to study the low-rank property of the solution to (28) and to provide suitable a priori choices for the shift parameters in RKSM, one would need to study the spectral properties of \({\widetilde{A}},{\widetilde{B}}\). The matrix \({\widetilde{B}}\) is Toeplitz triangular and we can explicitly compute its entries:

In particular, it has the only eigenvalue \((1-\sigma )^{-1}\) with multiplicity \(n_t\). However, the matrix \({\widetilde{A}}\) appears hard to analyze in general, even assuming strong properties such as A symmetric positive definite. For this reason, we postpone the analysis of (28) to future work and we propose the use of the extended Krylov method (see Example 2) or adaptive choices of the shift parameters [13] when solving the update equation with RKSM.

5 Numerical results

In this section, we compare the performances of Algorithm 2 and Algorithm 3 with those of the preconditioned GMRES method considered in [20, 37], on different examples. More specifically, we consider the following list of methods:

-

PGMRES: GMRES preconditioned by the solver of the matrix equation with a \(\alpha \)-circulant coefficient. If not stated otherwise, \(\alpha =1\) and the threshold for stopping GMRES is set to \(10^{-8}\),

-

Ev-Int: Algorithm 3. If not stated otherwise, we choose \(d=2\) and \(\rho =5\cdot 10^{-4}\).

-

2DISCS, ZOL-DI(4), EDS, EK: Algorithm 2 with different choices of poles; see Example 1 for details. Note that, the method ZOL-DI is not competitive, in terms of computational time, as it requires to recompute the bases of the Krylov subspaces from scratch at every iteration. For this reason we consider its variant ZOL-DI(4), that consists in cyclically repeating the 4 shifts corresponding to the Zolotarev problem with rational functions of degree (4, 4). We have chosen 4 shifts because preliminary experiments indicated that this choice offers good performance across a broader range of examples.

RKSM called at line 4 of Algorithm 2 is stopped when the relative residual of the update Eq. (9) is below the threshold \(10^{-8}\). The methods EK, 2DISCS, and ZOL-DI(4) rely on precomputed factorizations of the, possibly shifted, matrices \({\widetilde{A}}\) and \({\widetilde{B}}\) when generating the bases of the rational Krylov subspaces. If the matrices A and M in (1) are sparse, a sparse direct solver is exploited whenever the solution of a linear system involving a linear combination of A and M is required; e.g., at line 4 of Algorithm 3.

For each method we report the computational time required and the relative error \(\textrm{Res}:= \Vert A{\widehat{X}}B_2^T+M{\widehat{X}}B_1^T-F\Vert _F/\Vert F\Vert _F\) in order to assess the accuracy of the correspondent approximate solution \({\widehat{X}}\). The best timings of each case study are highlighted in bold and reported in Tables 2, 3, 4, 5, 6, 7, 8. For the various implementations of Algorithm 2 we also report the percentage of the CPU time that is spent for solving (9), labeled with \(\mathrm {T_{sylv}}\), the dimension (Dim) of the rational Krylov subspace generated and the rank (rk) of the approximate solution to (9), after recompression.

Apart from Sect. 5.7, all experiments have been performed on a laptop with a dual-core Intel Core i7-7500U 2.70 GHz CPU, 256KB of level 2 cache, and 16 GB of RAM. The algorithms are implemented in MATLAB and tested under MATLAB2020a, with MKL BLAS version 2019.0.3 utilizing both cores.

5.1 1D heat equation

We start by testing all the algorithms detailed at the beginning of this section on the matrix equation \(AX+XB_1^T=F\) from Example 1 for \(n=1089, 4225\) and \(n_t=2^8,\dots , 2^{11}\).

The results are reported in Table 2. As expected ZOL-DI(4) and EDS generate significantly lower dimensional Krylov subspaces with respect to those generated by EK and 2DISCS, while achieving comparable accuracies. Looking at the computational times, we see that, for all methods, we have an almost linear dependence on the size n and on the number of time steps \(n_t\). The methods with the most favorable timings are Ev-Int and ZOL-DI(4). The former is preferable when \(\mathrm {T_{sylv}}>50 \%\), which happens for the smaller instances of the equation. For all four implementations of Algorithm 2, the fraction of the time spent for solving the low-rank Sylvester equation decreases as the size of the problem increases. Since the advantage of using ZOL-DI(4) is inversely proportional to \(\mathrm {T_{sylv}}\) we get the best scenario for ZOL-DI(4) when \(n=4225\) and \(n_t=2048\) where we get about a \(2\times \) speedup over Ev-Int and a \(5\times \) speedup over PGMRES.

5.2 Convection diffusion

This example is concerned with the convection diffusion equation from [37, Section 6.2], which models the temperature distribution in a cavity having an external wall maintained at a constant (hot) temperature and a wind that determines a recirculating flow. The problem reads as follows:

where \(\epsilon = 0.005\), \(\chi _{\{x=1\}}\) is the indicator function of the set \(\{x=1\}\), and \({\textbf{w}} = (2y(1-x^2), -2x(1-y^2))\). The discretization is done via Q1 finite elements over the domain S and backward Euler time-stepping, together with streamline-upwind Petrov–Galerkin (SUPG) stabilization. The matrix equation corresponding to the all-at-once linear system takes the form \(AX+MXB_1^T=F\), where both A and M are sparse. To estimate the numerical range of \(M^{-1}A\), we compute the largest and smallest real parts, \(r_{\max },r_{\min }\), of its eigenvalues, by means of the Matlab command eigs. We remark that, \(r_{\max },r_{\min }>0\) and we denote \(c:=(r_{\max }+r_{\min })/2\). Then, 2DISCS employs \(\{z:\ |z-c|\le (r_{\max }-r_{\min })/2 \}\) as estimate for \({\mathcal {W}}(M^{-1}A)\) while ZOL-DI(4) and EDS makes use of the real interval \([r_{\min },r_{\max }]\). The performances of the numerical methods are reported in Table 3. Although we used the slightly higher tolerance \(10^{-6}\) to stop PGMRES, the latter needs 17 iterations to converge and this makes the other approaches significantly faster. Among the methods based on low-rank updates, ZOL-DI(4) and EDS are those that generate the Krylov subspaces of smallest dimensions, while maintaining a good accuracy. This feature makes EDS faster than EK although the latter has a cheaper iteration cost. Similar comments to the ones made in the previous example apply to the comparison between \(\texttt {ZOL-DI(4)}\) and \(\texttt {Ev-Int.}\)

5.3 Fractional space diffusion

We consider the fractional diffusion example from [51, Section 5]:

where \(\frac{\partial ^{\gamma _j}u^\pm }{\partial x}\) indicates the Riemann-Liouville right- and left- looking derivatives; the diffusion coefficients are set as \(a_1 = 1, a_2 = 0.5, b_1 = 0.2, b_2 = 1\), the differential orders are \(\gamma _1=1.75\) and \(\gamma _2=1.5\), and the source term is \(f(x,y,t)= 10\sin (3xyt)\). For space discretization, the second-order weighted and shifted Grünwald difference formula is employed; uniform backward Euler time stepping is applied. The all-at-once linear system matrix takes the form \(I\otimes A+B_1\otimes I\) where the matrix A has the structure

with \(T_1,T_2\) non sparse Toeplitz matrices; see [51] for a detailed description. In particular, matrix–vector operations with the matrix A are efficiently performed by relying on FFT and matrix equation techniques. Moreover, the minimum and maximum real part of the eigenvalues of A are easily obtained once estimates of the same quantities for the matrices \(T_1,T_2\) are computed. When running \(\texttt {2DISCS}, \texttt {ZOL-DI(4)}, \texttt {EDS}\), we approximate \({\mathcal {W}}(A)\) as we did in the previous example.

The results reported in Table 4 show that the methods based on low-rank updates struggle on this example because they have to generate somewhat large subspaces in order to achieve the desired accuracy. This is caused by the fact that the solution of the update Eq. (8) has a slightly higher rank with respect to the previous examples, and the approximations of \({\mathcal {W}}(A)\) are not tight, see Fig. 4. Nevertheless, ZOL-DI(4) achieves the best timings for \(n=4225\). Ev-Int maintains the usual behavior and turns out to be the preferable method for \(n=1089\).

5.4 Fractional time diffusion

We now consider an example where Algorithm 2 is not applicable due to the non locality of the time differential operator. We modify Example 2 by replacing the first-order time derivative with the Caputo time derivative of order \(\gamma \in (0,1)\):

and we keep the same parameters w, h of the integer derivative case. Discretizing the Caputo time derivative with the unshifted Grünwald-Letnikov formula, we get a matrix equation \(AX+XB_1^T=F\) where \(B_1\) is Toeplitz lower triangular and takes the form:

In particular, the usual way to construct a circulant matrix from \(B_1\) requires to apply a matrix \(\delta B_1^{(\alpha )}\) of rank \(n_t-1\). For this reason we restrict to PGMRES and Ev-Int for solving the all-at-once problem. The results reported in Table 5 refer to the case \(\gamma = 0.3\) and show that Ev-Int significantly outperform PGMRES, despite the low number of iterations needed by the latter to converge.

5.5 Wave equation

We consider the linear wave equation

with \(S =(0, 1)\), \(T=1\), \(u_0(x,y)=u_1(x,y)=\sin (\pi x)\sin (\pi y)\). As described in [20, Section 3], by discretizing with finite differences in space and with the implicit leap-frog scheme in time we get the all-at-once linear system \((B_1\otimes I+B_2\otimes A){\textbf{x}}={\textbf{f}}\) where \(A= \textrm{trid}(-1, 2, -1)\cdot (n+1)^2\) and

We note that, for this example, all methods based on low-rank updates generate quite small Krylov subspaces, and their dimensions do not grow as the parameters n and \(n_t\) vary. As a consequence, EK is the one that achieves the cheapest consumption of computational time and we only report its performances. We remark that, in order to get comparable accuracy we have considered \(d=3\) roots of the unit when running Ev-Int. The preconditioner employed in PGMRES uses the parameter \(\alpha =0.1\) as suggested in [20] and the tolerance for stopping the GMRES iteration has been set to \(10^{-7}\). The results are shown in Table 6. Comparing \(\texttt {PGMRES},\texttt {Ev-Int}\), and \(\texttt {EK}\), leads to similar conclusions as in the example of the 1D heat equation.

5.6 Runge–Kutta example

We present a numerical test on the all-at-once Runge–Kutta formulation introduced in Sect. 4. Let us start from the finite difference semidiscretization of the following differential problem:

We consider solving the all-at-once formulation (25) corresponding to the following four-stage (\(s=4\)), 3rd order, Diagonally Implicit Runge-Kutta method (DIRK):

More specifically, we solve (25) by applying the methods PGMRES, Ev-Int and EK adapted as described in Sect. 4. For this experiment, the parameter \(\rho \) used by Ev-Int is set to the value 0.05. As shift parameter for the update Eq. (28) solved by EK, it has been chosen \(\sigma = -2\). The performances of the 3 methods are reported in Table 7; as observed in previous tests, the approaches based on low-rank update and evaluation interpolation outperforms PGMRES for both speed and accuracy. As the number of time steps increases, the cost of the update equation becomes relatively cheap, making EK faster than Ev-Int. The relative residuals obtained with EK and Ev-Int are comparable.

Problem (29) admits the explicit solution \(U(t)=\exp (tA){\textbf{x}}_0\) so that we can compare approximate solutions with respect to a benchmark solution computed by means of the expm Matlab function. Figure 5 reports the relative error \(\Vert \widehat{{\textbf{x}}}_t-\exp (tA){\textbf{x}}_0\Vert _2/\Vert \exp (tA){\textbf{x}}_0\Vert _2\) of the approximants \(\widehat{{\textbf{x}}_t}\) returned by EK, for \(n=500\) and different time step sizes; the plot is in agreement with the order 3 of the Runge–Kutta scheme.

5.7 Preliminary experiments on parallelism

We conclude by testing the parallel scaling features of the proposed algorithms in a multi-core computational environment. The experiment in this section has been run on a server with two Intel(R) Xeon(R) E5-2650v4 CPU with 12 cores and 24 threads each, running at 2.20 GHz, using MATLAB R2022b with the Intel(R) Math Kernel Library Version 11.3.1. The test has been run using the SLURM scheduler, allocating 12 cores and 250 GB of RAM. Our implementation exploits parallelism for the solution of the block diagonal linear system generated by fast_diag_solve (lines 4-6 of Algorithm 1). This parallel routine is used as a preconditioner in PGMRES, it is called at line 2 of Algorithm 2, and it is called at line 4 of Ev-Int. Finally, we also parallelize the for loop in Ev-Int (lines 2-5 of Algorithm 3).

As case study, we consider the fractional diffusion example of Sect. 5.3 with \(n=4225\) and \(n_t=8192\), and we measure the execution time of the procedures PGMRES, Ev-Int, and ZOL-DI, as the number of cores p ranges in the set \(\{1,2,4,8,12\}\). The tolerance to stop GMRES has been set to \(10^{-6}\) in order to get comparable residual norms with the other two methods. To evaluate the efficiency of the implementation, we also compute the strong scaling efficiency (SE) with p cores defined as

The results reported in Table 8 clearly show that Ev-Int is the method that gains the most from parallelism, maintaining more than 70% of strong scaling efficiency, up to 12 cores. Also PGMRES leverages the use of multiple cores to the extent of becoming faster than ZOL-DI, when \(p=12\). On the contrary, our current implementation of ZOL-DI does not exploit more than one core for solving the update equation and, consequently, parallel efficiency drops as soon as the cost of (8) becomes dominant. An adaptation of the parallel implementation of RKSM [8] is nontrival and beyond the scope of this work, but it potentially addresses the scaling issue of ZOL-DI.

6 Conclusions

We have proposed a tensorized Krylov subspace method and an interpolation method for replacing the iterative refinement phase that is required by ParaDiag algorithms with uniform time steps. In the case of multistep methods, a theoretical analysis of the approximation property of the Krylov subspace method has been provided. We have shown several numerical tests demonstrating that our approaches can significantly outperform block-circulant preconditioned GMRES iterations for solving linear initial value problems. Let us emphasize that the focus of this work was on theoretical and algorithmic aspects; we have not carried out an extensive study of the parallel implementation of our algorithms, which will be subject to future work. From the analysis in Sects. 2.3 and 3.1 we expect that the interpolation method (Algorithm 3) will perform significantly better in a massively parallel environment.

We remark that Algorithm 2 and Algorithm 3 can directly be put in place instead of the existing ParaDiag algorithms for solving certain non-linear ODEs of the form \(M\dot{U}+f(U)=0\) [17] and for computing the so-called Coarse Grid Correction of the Parareal algorithm [22, 33].

In Sect. 4, we have discussed how to extend the methodology to the case of implicit Runge–Kutta time integration, but some questions remain for further work. In particular, a careful analysis of the low-rank approximability of Eq. (28) and of the choice of the shift parameter \(\sigma \), would be desirable.

References

Antoulas, A.C., Sorensen, D.C., Zhou, Y.: On the decay rate of Hankel singular values and related issues. Syst. Control Lett. 46(5), 323–342 (2002)

Baker, J., Embree, M., Sabino, J.: Fast singular value decay for Lyapunov solutions with nonnormal coefficients. SIAM J. Matrix Anal. Appl. 36(2), 656–668 (2015)

Beckermann, B.: An error analysis for rational Galerkin projection applied to the Sylvester equation. SIAM J. Numer. Anal. 49(6), 2430–2450 (2011)

Beckermann, B., Townsend, A.: Bounds on the singular values of matrices with displacement structure. SIAM Rev. 61(2), 319–344 (2019)

Benner, P., Li, R.-C., Truhar, N.: On the ADI method for Sylvester equations. J. Comput. Appl. Math. 233(4), 1035–1045 (2009)

Benner, P., Saak, J.: Numerical solution of large and sparse continuous time algebraic matrix Riccati and Lyapunov equations: a state of the art survey. GAMM-Mitt. 36(1), 32–52 (2013)

Berljafa, M., Güttel, S.: Generalized rational Krylov decompositions with an application to rational approximation. SIAM J. Matrix Anal. Appl. 36(2), 894–916 (2015)

Berljafa, M., Güttel, S.: Parallelization of the rational Arnoldi algorithm. SIAM J. Sci. Comput. 39(5), S197–S221 (2017)

Bertaccini, D.: The spectrum of circulant-like preconditioners for some general linear multistep formulas for linear boundary value problems. SIAM J. Numer. Anal. 40(5), 1798–1822 (2002)

Bertaccini, D., Ng, M.K.: Block \(\{\omega \}\)-circulant preconditioners for the systems of differential equations. Calcolo 40(2), 71–90 (2003)

Crouzeix, M., Palencia, C.: The numerical range is a \((1+\sqrt{2})\)-spectral set. SIAM J. Matrix Anal. Appl. 38(2), 649–655 (2017)

Druskin, V., Knizhnerman, L., Zaslavsky, M.: Solution of large scale evolutionary problems using rational Krylov subspaces with optimized shifts. SIAM J. Sci. Comput. 31(5), 3760–3780 (2009)

Druskin, V., Simoncini, V.: Adaptive rational Krylov subspaces for large-scale dynamical systems. Syst. Control Lett. 60(8), 546–560 (2011)

Ellner, N.S., Wachspress, E.L.: New ADI model problem applications. In: Proceedings of 1986 ACM Fall Joint Computer Conference, pp. 528–534, (1986)

Gander, M.J.: 50 years of time parallel time integration. In: Multiple Shooting and Time Domain Decomposition Methods, vol. 9 of Contrib. Math. Comput. Sci., pp. 69–113. (Cham: Springer, 2015)

Gander, M.J., Güttel, S.: PARAEXP: a parallel integrator for linear initial-value problems. SIAM J. Sci. Comput. 35(2), C123–C142 (2013)

Gander, M.J., Halpern, L.: Time parallelization for nonlinear problems based on diagonalization. In: Domain Decomposition Methods in Science and Engineering XXIII, vol. 116 of Lect. Notes Comput. Sci. Eng., pp. 163–170. (Cham: Springer, 2017)

Gander, M.J., Halpern, L., Rannou, J., Ryan, J.: A direct time parallel solver by diagonalization for the wave equation. SIAM J. Sci. Comput. 41(1), A220–A245 (2019)

Gander, M.J., Halpern, L., Ryan, J., Tran, T.T.B.: A direct solver for time parallelization. In: Domain Decomposition Methods in Science and Engineering XXII, vol. 104 of Lect. Notes Comput. Sci. Eng., pp. 491–499. (Cham: Springer, 2016)

Gander, M.J., Liu, J., Wu, S.-L., Yue, X, Zhou, T.: ParaDiag parallel-in-time algorithms based on the diagonalization technique. arXiv preprint arXiv:2005.09158, (2020)

Gander, M.J., Lunet, T., Ruprecht, D., Speck, R.: A unified analysis framework for iterative parallel-in-time algorithms. arXiv preprint arXiv:2203.16069, (2022)

Gander, M.J., Vandewalle, S.: Analysis of the parareal time-parallel time-integration method. SIAM J. Sci. Comput. 29(2), 556–578 (2007)

Gander, M.J., Wu, S.-L.: Convergence analysis of a periodic-like waveform relaxation method for initial-value problems via the diagonalization technique. Numer. Math. 143(2), 489–527 (2019)

Golub, G.H., Van Loan, C.F.: Johns Hopkins Studies in the Mathematical Sciences, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Gončar, A.A.: The problems of E. I. Zolotarev which are connected with rational functions. Mat. Sb. 78(120), 640–654 (1969)

Grimme, E.J.: Krylov Projection Methods for Model Reduction. University of Illinois at Urbana-Champaign, Champaign (1997)

Hairer, E., Wanner, G.: Solving ordinary differential equations. II, vol. 14 of Springer Series in Computational Mathematics. (Berlin: Springer-Verlag 2010)

Henrici, P.: Applied And Computational Complex Analysis. Vol. 1. Wiley Classics Library. John Wiley & Sons, Inc., New York, 1988. Power series—integration—conformal mapping—location of zeros, Reprint of the (1974) original, A Wiley-Interscience Publication

Kågström, B., Kressner, D.: Multishift variants of the QZ algorithm with aggressive early deflation. SIAM J. Matrix Anal. Appl. 29(1), 199–227 (2006)

Kressner, D., Massei, S., Robol, L.: Low-rank updates and a divide-and-conquer method for linear matrix equations. SIAM J. Sci. Comput. 41(2), A848–A876 (2019)

Le Bailly, B., Thiran, J.P.: Optimal rational functions for the generalized Zolotarev problem in the complex plane. SIAM J. Numer. Anal. 38(5), 1409–1424 (2000)

Lebedev, V.: On a Zolotarev problem in the method of alternating directions. USSR Comput. Math. Math. Phys. 17(2), 58–76 (1977)

Lions, J.-L., Maday, Y., Turinici, G.: Résolution d’EDP par un schéma en temps pararéel. C. R. Acad. Sci. Paris Sér I Math. 332(7), 661–668 (2001)

Liu, J., Wu, S.-L.: A fast block \(\alpha \)-circulant preconditoner for all-at-once systems from wave equations. SIAM J. Matrix Anal. Appl. 41(4), 1912–1943 (2020)

Maday, Y., Rønquist, E.M.: Parallelization in time through tensor-product space-time solvers. C. R. Math. Acad. Sci. Paris 346(1–2), 113–118 (2008)

Massei, S., Robol, L.: Rational Krylov for Stieltjes matrix functions: convergence and pole selection. BIT 61(1), 237–273 (2021)

McDonald, E., Pestana, J., Wathen, A.: Preconditioning and iterative solution of all-at-once systems for evolutionary partial differential equations. SIAM J. Sci. Comput. 40(2), A1012–A1033 (2018)

Noschese, S., Reichel, L.: Generalized circulant Strang-type preconditioners. Numer. Linear Algebra Appl. 19(1), 3–17 (2012)

Palitta, D.: Matrix equation techniques for certain evolutionary partial differential equations. J. Sci. Comput. 87(3), 36 (2021)

Peaceman, D.W., Rachford, H.H., Jr.: The numerical solution of parabolic and elliptic differential equations. J. Soc. Indust. Appl. Math. 3, 28–41 (1955)

Penzl, T.: Eigenvalue decay bounds for solutions of Lyapunov equations: the symmetric case. Syst. Control Lett. 40(2), 139–144 (2000)

Ruhe, A.: Rational Krylov sequence methods for eigenvalue computation. Linear Algebra Appl. 58, 391–405 (1984)

Sabino, J.: Solution of large-scale Lyapunov equations via the block modified Smith method. ProQuest LLC, Ann Arbor, MI, (2007). Thesis (Ph.D.)–Rice University

Saff, E. B., Totik, V.: Logarithmic potentials with external fields, volume 316 of Grundlehren der mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences]. (Berlin: Springer-Verlag, 1997). Appendix B by Thomas Bloom

Simoncini, V.: A new iterative method for solving large-scale Lyapunov matrix equations. SIAM J. Sci. Comput. 29(3), 1268–1288 (2007)

Simoncini, V.: Computational methods for linear matrix equations. SIAM Rev. 58(3), 377–441 (2016)

Starke, G.: Near-circularity for the rational Zolotarev problem in the complex plane. J. Approx. Theory 70(1), 115–130 (1992)

Strang, G.: A proposal for Toeplitz matrix calculations. Stud. Appl. Math. 74, 171–176 (1986)

Trefethen, L.N., Weideman, J.A.C.: The exponentially convergent trapezoidal rule. SIAM Rev. 56(3), 385–458 (2014)

Wachspress, E.: The ADI Model Problem. Springer, New York (2013)

Wu, S.-L.: Toward parallel coarse grid correction for the parareal algorithm. SIAM J. Sci. Comput. 40(3), A1446–A1472 (2018)

Zolotarev, E.: Application of elliptic functions to questions of functions deviating least and most from zero. Zap. Imp. Akad. Nauk. St. Petersburg 30(5), 1–59 (1877)

Acknowledgements

The authors thank Bernd Beckermann for inspiring discussions on the Zolotarev problems in Sect. 2.2.

Funding

Open access funding provided by Università di Pisa within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The work of the S. Massei was supported by the INdAM-GNCS project “Metodi basati su matrici e tensori strutturati per problemi di algebra lineare di grandi dimensioni”. The work of the J. Zhu has been supported by National Natural Science Foundation of China (Grant Nos. 11471150, 12161030) and China Scholarship Council (Contract No. 202006180060).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kressner, D., Massei, S. & Zhu, J. Improved ParaDiag via low-rank updates and interpolation. Numer. Math. 155, 175–209 (2023). https://doi.org/10.1007/s00211-023-01372-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-023-01372-w