Abstract

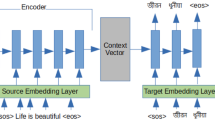

Attention-based neural machine translation (attentional NMT), which jointly aligns and translates, has become popular in recent years. Such models need a large, accurate bilingual dataset from the source to the target language to boost translation performance. Many such datasets are publicly available for well-developed languages. However, no such dataset is currently available for the English-Afaan Oromo pair. To address this gap, we manually prepared a new 25K English-Afaan Oromo dataset, and language experts evaluated the prepared corpus for translation accuracy. We also used the publicly available English-French and English-German datasets to compare translation performance across the three pairs. Further, we propose a deep attentional NMT model, which we train on each pair. Experimental results over the three language pairs demonstrate that the proposed system and our new dataset yield a significant gain. The English-Afaan Oromo model improved by 1.19 BLEU points over previous English-Afaan Oromo machine translation (MT) models. The results also indicate that the model could perform comparably to the other, more developed language pairs if supplied with a larger dataset. Our new 25K dataset also sets a baseline for future research on English-Afaan Oromo machine translation.
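
The BLEU gain reported above is easier to interpret with the metric's definition in view. The following is a minimal sketch of single-reference, sentence-level BLEU without smoothing, given only as an illustration: the abstract does not say which BLEU implementation or settings the authors used, and reported scores are normally computed at corpus level, where n-gram counts are pooled over all sentences. The function names `ngrams` and `sentence_bleu` and the example tokens are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Single-reference sentence BLEU on a 0-100 scale, without smoothing."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        matched = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0  # any zero precision makes the geometric mean zero
        log_precisions.append(math.log(matched / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(candidate) >= len(reference):
        bp = 1.0
    else:
        bp = math.exp(1 - len(reference) / len(candidate))
    # Geometric mean of the n-gram precisions, scaled by the penalty.
    return 100.0 * bp * math.exp(sum(log_precisions) / max_n)


# Hypothetical tokenized sentences, used only to exercise the function.
reference = "the model translates the sentence well".split()
print(sentence_bleu(reference, reference))      # identical output: 100.0
print(sentence_bleu(reference[:4], reference))  # shorter output is penalized
```

On this 0-100 scale, a difference of 1.19 points corresponds to a modest shift in the penalized geometric mean of the 1- to 4-gram precisions.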

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sector.

Ethics declarations

The authors declare that they have no conflicts of interest.

About this article

Cite this article

Ebisa A. Gemechu, Kanagachidambaresan, G.R. English-Afaan Oromo Machine Translation Using Deep Attention Neural Network. Opt. Mem. Neural Networks 32, 159–168 (2023). https://doi.org/10.3103/S1060992X23030049

DOI: https://doi.org/10.3103/S1060992X23030049