Abstract

We consider the problem of approximating the von Neumann entropy of a large, sparse, symmetric positive semidefinite matrix A, defined as \({{\,\textrm{tr}\,}}(f(A))\) where \(f(x)=-x\log x\). After establishing some useful properties of this matrix function, we consider the use of both polynomial and rational Krylov subspace algorithms within two types of approximations methods, namely, randomized trace estimators and probing techniques based on graph colorings. We develop error bounds and heuristics which are employed in the implementation of the algorithms. Numerical experiments on density matrices of different types of networks illustrate the performance of the methods.

Similar content being viewed by others

1 Introduction

The von Neumann entropy [56] of a symmetric positive semidefinite matrix A with \({{{\,\textrm{tr}\,}}(A) = 1}\) is defined as \(S(A) = {{\,\textrm{tr}\,}}(-A \log A)\), where \(\log A\) is the matrix logarithm. With the usual convention that \(0 \cdot \log 0 = 0\), the von Neumann entropy is given by

where \(\lambda _1, \ldots , \lambda _n\) are the eigenvalues of the \(n\times n\) matrix A. The von Neumann entropy plays an important role in several fields including quantum statistical mechanics [42], quantum information theory [5], and network science [15]. For example, computing the von Neumann entropy is necessary in order to determine the ground state of many-electron systems at finite temperature [1]. The von Neumann entropy of graphs is also an important tool in the structural characterization and comparison of complex networks [22, 36].

If the size of A is large, the computation of S(A) by means of explicit diagonalization can be too expensive, so it becomes necessary to resort to cheaper methods that compute approximations of the entropy. In recent years, a few papers have appeared devoted to this problem; see, e.g., [17, 31, 44, 59]. These papers investigate different approaches based on quadratic (Taylor) approximants, the global Lanczos algorithm, Gaussian quadrature and Chebyshev expansion. In general, the problem of computing the von Neumann entropy of a large matrix is difficult because the underlying matrix function is not analytic in a neighbourhood of the spectrum when the matrix is singular; difficulties can also be expected when A has eigenvalues close to zero, which is usually the case. In particular, polynomial approximation methods may converge slowly in such cases.

In this paper we propose to approximate the von Neumann entropy using either the probing approach developed in [29] or a stochastic trace estimator [39, 47, 49]. The main contributions of this work are outlined in the following.

In Sect. 2.1 we obtain an integral expression for the entropy function \(f(x) = -x \log x\) that relates it with a Cauchy-Stieltjes function [58, Chapter VIII], and we use it to derive error bounds for the polynomial approximation of f, which in turn lead to a priori bounds for the approximation of S(A) with probing methods. In order to also have a practical stopping criterion alongside the theoretical bounds, in Sect. 5.1 we propose some heuristics to estimate the error of probing methods, and in Sect. 6.2 we demonstrate their reliability with numerical experiments. In Sect. 3.1 we also use properties of symmetric M-matrices to show that, in the case of the graph entropy, the approximation obtained with a probing method is a lower bound for the exact entropy.

Both probing methods and stochastic trace estimators require the computation of a large number of quadratic forms with f(A), which can be efficiently approximated using Krylov subspace methods [30, 35]. In Sect. 4.2 we propose to combine polynomial Krylov iterations with rational Krylov iterations that use asymptotically optimal poles for Cauchy-Stieltjes functions [45]. In Sect. 4.3 we obtain new a posteriori error bounds and estimates for this task, building on the ones presented in [34], and we discuss methods to compute them efficiently. For the case of the graph entropy, we make use of a desingularization technique introduced in [11] that exploits properties of the graph Laplacian to compute quadratic forms more efficiently.

The resulting algorithm can be seen as a black box method that only requires an input tolerance \(\epsilon \) and computes an approximation of S(A) with relative accuracy \(\epsilon \). While this accuracy is not guaranteed when the rigorous bounds are replaced by heuristics, we found the algorithm to be quite reliable in practice.

Our implementation of the probing algorithm is compared to a state-of-the-art randomized trace estimator developed in [49] with several numerical experiments in Sect. 6, in which we approximate the graph entropy of various complex networks.

The rest of the paper is organized as follows. In Sect. 2 we recall some properties of the von Neumann entropy and we obtain bounds for the polynomial approximation of \(f(x) = -x \log x\), which we use in the subsequent sections. In Sect. 3 we give an overview of probing methods and stochastic trace estimators and we derive bounds for the convergence of probing methods. In Sect. 4 we describe the computation of quadratic forms using Krylov subpsace methods and we derive a posteriori error bounds and estimates. In Sect. 5 we summarize the overall algorithm and we discuss heuristics and stopping criteria, and in Sect. 6 we test the performance of the methods on density matrices of several complex networks. Finally, Sect. 7 contains some concluding remarks.

2 Properties of the von Neumann entropy

A density matrix \(\rho \) is a self-adjoint, positive semi-definite linear operator with unit trace acting on a complex Hilbert space. In quantum mechanics, density operators describe states of quantum mechanical systems. Here we consider only finite dimensional Hilbert spaces, so \(\rho \) is an \(n\times n\) Hermitian matrix. For simplicity, here we focus on real symmetric matrices; all the results are easily extended to the complex case.

Recall that the von Neumann entropy of a system described by the density matrix \(\rho \) [56] is given by

where \(\sigma (\rho )\) denotes the spectrum of \(\rho \), under the convention that \(0\cdot \log (0)=0\). Here \(\log (x)\) is the natural logarithm. Note that in the literature the entropy is sometimes defined using \(\log _2(x)\) instead, but since \(\log _2(x)=\log (x)/\log (2)\) the two definitions are equivalent up to a scaling factor. We also mention that in the original definition the entropy includes the factor \(\kappa _B\) (the Boltzmann constant), which we omit.

A straightforward way to compute \(S(\rho )\) is by diagonalization. However, this approach is unfeasible when the dimension is very large. Here we propose some approaches to compute approximations to the von Neumann entropy based on the trace estimation of matrix functions.

Note that the formula (2.1) is well defined even without the hypothesis of unit trace. Moreover, if \(\rho \) is given in the form \(\rho =\gamma A\) with \(\gamma >0\), we have the relation

In quantum mechanics, the von Neumann entropy of a density matrix gives a measure of how much a density matrix is distant from being a pure state, that is a rank-1 matrix with only one nonzero eigenvalue equal to one [57]. For any density matrix of size n, we have \(0\le S(\rho )\le \log (n)\). Moreover, \(S(\rho )=0\) if and only if \(\rho \) is a pure state and \(S(\rho )=\log (n)\) if and only if \(\rho =\frac{1}{n}I\) [56, 57].

The von Neumann entropy also has applications in network theory. Recall that a graph \(\mathcal {G}\) is given by a set of nodes \(\mathcal {V}=\{ v_1,\dots ,v_n \}\) and a set of edges \(\mathcal {E}\subset \mathcal {V}\times \mathcal {V}\). The graph is called undirected if \((v_i,v_j)\in \mathcal {E}\) if and only if \((v_j,v_i)\in \mathcal {E}\) for all \(i\ne j\). A walk on the graph of length \(\ell \) is a sequence of \(\ell +1\) nodes where two consecutive nodes are linked by an edge, for a total of \(\ell \) edges. We say that \(\mathcal {G}\) is connected if there exists a directed path from node i to node j for any pair of nodes (i, j). The adjacency matrix of a graph is a matrix \(A\in \mathbb {R}^{n\times n}\) such that \([A]_{ij}=1\) if \((v_i,v_j)\in \mathcal {E}\), and 0 otherwise. The degree of a node \(\deg (v_i)\) is the number of nodes that are linked with \(v_i\) by an edge. It can be expressed in the form \(\deg (v_i)=[A\varvec{1}]_i\), where \(\varvec{1}\) is the vector of all ones. Finally, the graph Laplacian is given by

Note that \(\mathcal {L}\) is a singular positive semidefinite matrix and the eigenvalue 0 is simple if and only if \(\mathcal {G}\) is connected, in which case the associated one-dimensional eigenspace is spanned by \(\varvec{1}\). Given the density matrix \(\rho = \mathcal {L}/{{\,\textrm{tr}\,}}(\mathcal {L})\), the von Neumann entropy of \(\mathcal {G}\) is defined as \(S(\rho )\) [15, 17].

It should be mentioned that, strictly speaking, the graph entropy defined in this manner is not a “true” entropy, since it does not satisfy the sub-additivity requirement. In other words, the graph entropy could decrease when an edge is added to the graph, see [23]. For this reason, different notions of graph entropy have been proposed in the literature; see, e.g., [32, 33]. These entropies still have the form of a von Neumann entropy, \(S = -{{\,\textrm{tr}\,}}(\rho \log \rho )\), but with a different definition of the density matrix \(\rho \) which, however, is still expressed as a function of a matrix (Hamiltonian) associated to the graph. Therefore, the techniques developed in this paper are still applicable, at least in principle.

2.1 Polynomial approximation

Denote the set of all polynomials with degree at most k with \(\Pi _k\). For a continuous function \(f:[a,b] \rightarrow \mathbb {R}\), define the error of the best uniform polynomial approximation in \(\Pi _k\) as

If A is a symmetric (or Hermitian) matrix with \(\sigma (A)\subset [a,b]\), estimating or bounding this quantity is crucial in the study of polynomial approximations of the matrix function f(A) [4] and for establishing decay bounds for the entries of f(A) [8, 24, 27].

An important case is the inverse function \(f(x)=1/x\) for \(x\in [a,b]\), \(0<a<b\), for which we have

where \(\kappa =b/a\); see, e.g., [46].

In general, an inequality of the form

for some fixed constants \(C>0\) and \(0<q<1\), is equivalent to the fact that f is analytic over an ellipse containing [a, b]; see [46, Theorem 73] for more details.

The function \(f(x)=x\log (x)\) is not analytic on any neighborhood of 0, so we cannot expect to find a geometric decay as in (2.6) if we consider [0, b], \(b>0\), as the interval of definition. However, since f is continuous, by the Weierstrass Approximation Theorem \(E_k(f,[0,b])\) must go to 0 as \(k\rightarrow \infty \). A more precise estimate is the following [59], derived by computing the coefficients of the Chebyshev expansion of f(x):

This shows that the decay rate of the error is algebraic in k. Our approach is based on an integral representation and leads to sharper bounds.

Recall that a Cauchy-Stieltjes function [10, 45] has the form

where \(\mu \) is a (possibly signed) real measure supported on \([0,+\infty )\) and the integral is absolutely convergent. An example is given by the function \(\log (1 + z)/z\) that has the expression

It is easy to check that the above identity also holds for \(z \in (-1, 0)\). With the change of variable \(x=1+z\) and some simple rearrangements, we get the following integral expression for the entropy:

With the additional change of variable \(s = t+1\), we can rewrite (2.9) in the form

Note that the above identities also hold for \(x = 0\) and \(x = 1\), because of the factor \(x(1-x)\) in front of the integral. This shows that although the entropy function \(-x\log (x)\) is not itself a Cauchy-Stieltjes function, we can recognize a factor with the form (2.8) in its integral representation. This observation will be important later for the selection of poles in a rational Krylov method; see Sect. 4.2.

Integral representations have proved useful for many problems related to polynomial approximations and matrix functions; see e.g. [9, 10, 27]. The following result shows that we can derive explicit bounds for \(E_k(f,[a,b])\).

Lemma 2.1

Let f(x) be defined for \(x\in [a,b]\) by

where \(g_t(x)\) is continuous for \((t,x)\in (0, \infty ) \times [a, b]\) and the integral (2.11) is absolutely convergent. Then

for all \(k\ge 0\).

Proof

For all \(t \in (0, \infty )\) and for any degree \(k \ge 0\), since \(g_t(x)\) is continuous over the compact interval [a, b] there exists a unique polynomial \(p_k^{(t)}(x)\in \Pi _k\) such that

For all \(x\in [a,b]\) we can define

This is well defined: for all \(x \in [a, b]\), the mapping \(t\mapsto p^{(t)}_k(x)\) is continuous for all \(t\in (0,\infty )\) in view of [46, Theorem 24], since \(g_t(x)\) is uniformly continuous for (t, x) in the compact sets contained in \((0,\infty )\times [a,b]\). Moreover, the integral is finite since

We want to show that \(p_k(x)\) is also a polynomial. Consider the expression

which defines the coefficients \(a_i(t)\) as functions of t. Formally, we have

where \({\bar{a}}_i:=\int _0^\infty a_i(t)\,{ \text {d}}t\). To conclude, we need to show that this expression is well defined, that is, the functions \(a_i(t)\) are integrable in t. Consider \(k+1\) distinct points \(x_0,\dots ,x_k\) in the interval [a, b] and let \(\varvec{a}(t)=[a_0(t),\dots ,a_k(t)]^T\), \(\varvec{p}(t)=[p_k^{(t)}(x_0),\dots ,p_k^{(t)}(x_k)]^T\). We have that \(V \varvec{a}(t)=\varvec{p}(t)\), where

is a Vandermonde matrix. Since V is nonsingular, we have that \(\varvec{a}(t)=V^{-1}\varvec{p}(t)\). Then, if we let \(V^{-1}=(c_{ij})_{i,j=0}^k\), we obtain the expression

where \(c_{ij}\) is independent of t for all i, j. This shows that, for all i, \(a_i(t)\) is continuous for \(t \in (0,\infty )\), and we have

hence \({\bar{a}}_i\) is well defined for \(i=0,\dots ,k\) and \(p_k(x)\) is a polynomial of degree at most k. Finally, we have

This concludes the proof. \(\square \)

The following result gives a new bound for \(E_k(x\log x,[a,b])\).

Theorem 2.2

Let \(0\le a< b\) and \(\gamma =a/b\). We have

for all \(k\ge 2\).

Proof

Let \(f(x)=x\log (x)\). Notice that \(f(x)=bf(b^{-1}x)+\log (b)x\), so

since \(k\ge 2\) and we can ignore terms of degree 1 for the polynomial approximation. For \(x\in [\gamma ,1]\) we can use the representation (2.10). Let \(g_t(x):=\frac{x(1-x)}{(1+t)(x+t)}\) be the integrand in (2.10) for all \(t>0\). Note that \(g_t(x)\) is a continuous fuction in the variables \((t, x) \in (0, \infty ) \times [a, b]\), so we can apply Lemma 2.1. Then we can write \(g_t(x)\) as

and since \(k\ge 2\) and \(1-\frac{x}{1+t}\) has degree 1, we get

Hence, by using (2.5) and Lemma 2.1 we get

where \(\kappa (t)=(1+t)/(\gamma +t)\). In order to bound the integral, consider the identities

We have

By checking the derivative, it can be shown that

is an antiderivative of \(\left( \sqrt{1+t}-\sqrt{\gamma +t}\right) ^{2k}\). Since \( \lim _{t\rightarrow \infty }F(t)=0\), we deduce that

This, combined with (2.16), concludes the proof. \(\square \)

Remark 2.3

The result of Theorem 2.2 is an improvement of (2.7). When \(a>0\), the right-hand side in (2.13) is the product of an algebraic and a geometric factor, so the decay is asymptotically faster. When \(a=0\), we have \(\gamma =0\) and (2.13) becomes

which is better than (2.7) for all \(k\ge 2\). The new bound (2.13) is compared with (2.7) in Fig. 1.

3 Trace estimation

Recall that a function f(A) of a symmetric matrix \(A\in \mathbb {R}^{n\times n}\) can be defined via a spectral decomposition \(A=U\Lambda U^T\), where U is orthogonal and \(\Lambda ={{\,\textrm{diag}\,}}(\lambda _1,\dots ,\lambda _n)\) is the diagonal matrix containing the eigenvalues. Then

provided that f is defined on the eigenvalues of A. A matrix function can be computed via diagonalization, using polynomial or rational approximants, or with algorithms tailored to specific matrix functions, such as scaling and squaring for the matrix exponential. We direct the reader to [38] for more details on these methods. In this section we present algorithms to estimate the trace of f(A) that do not require the explicit computation of the diagonal entries of f(A). It is necessary to resort to this class of algorithms whenever the size of A is too large to make the computation of f(A) (or its diagonal) feasible. In the sections that follow, we use the notation A to denote general matrices and \(\rho \) to denote density matrices, since most of our results are applicable in general for symmetric positive semidefinite matrices and not only to density matrices.

3.1 Probing methods

Let us summarize the approach described in [29] to compute the trace of a matrix function f(A), where \(A\in \mathbb {R}^{n\times n}\) is a sparse matrix. Recall that the graph \(\mathcal {G}(A)\) associated with A has nodes \(\mathcal {V}=\{1,\dots ,n\}\) and edges \(\mathcal {E}=\{ (i,j):[A]_{ij}\ne 0, i\ne j \}\), and the geodesic distance \({{\,\textrm{d}\,}}(i,j)\) between i and j is the shortest length of a walk that starts with i and ends with j, and is \(\infty \) if no such walk exists or 0 if \(i=j\).

Let \(V_1,\dots ,V_{s}\) be a coloring (i.e. a partitioning) of the set \(\{ 1,\dots ,n \}\). We write \({{\,\textrm{col}\,}}(i)={{\,\textrm{col}\,}}(j)\) if and only if i and j belong to the same set. We have a distance-d coloring if \({{\,\textrm{col}\,}}(i)\ne {{\,\textrm{col}\,}}(j)\) whenever \({{\,\textrm{d}\,}}(i,j)\le d\), where \({{\,\textrm{d}\,}}(i,j)\) is the geodesic distance between i and j in the graph \(\mathcal {G}(A)\) associated with A. The associated probing vectors are

where \(\varvec{e}_i\) is the i-th vector of the canonical basis. The induced approximation of the trace is

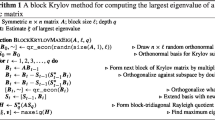

The problem of finding the minimum number s of colors, or sets, in the partition needed to get a distance-d coloring for a fixed d is NP-complete for general graphs [41]. It is important to keep s as small as possible since, in view of (3.2), it is the number of quadratic forms needed to compute \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\). A greedy and efficient algorithm to get a quasi optimal distance-d coloring is given by Algorithm 1 [53, Algorithm 4.2].

One can obtain different colorings depending on the order of the nodes; sorting the nodes by descending degree usually leads to a good performance [41, 53]. The number of colors obtained with this algorithm is at most \({{\,\mathrm{\Delta }\,}}^d+1\), where \({{\,\mathrm{\Delta }\,}}\) is the maximum degree of a node in the graph induced by A, and the cost is at most \({\mathcal {O}}(n {{\,\mathrm{\Delta }\,}}^d)\) if the d-neighbors \(W_i\) of each node i are computed with a traversal of the graph. Alternatively, the greedy coloring can be obtained by computing \(A^d\), which can be done using at most \(2 \lfloor \log _2 d \rfloor \) matrix-matrix multiplications [38, Section 4.1]. In our Matlab implementation of the probing method, we construct the greedy distance-d coloring by computing \(A^d\), since we found it to be faster than the graph-based approach; this is likely due to the more efficient Matlab implementation of matrix-matrix operations.

If A is \(\beta \)-banded, i.e. if \([A]_{ij}=0\) for \(|{i-j} |>\beta \), a simple distance-d coloring is given by

This coloring is optimal with \(s=d\beta +1\) if all the entries within the band are nonzero. Note that to diagonalize a symmetric banded matrix one must first reduce it to a tridiagonal form, and this costs \(O(\beta n^2)\) [54]. On the other hand, if we assume that quadratic forms with f(A) can be approximated with a fixed number of Krylov iterations, the computation of each quadratic form costs \(O(\beta n)\), and the cost for \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) is approximatively \(O(d\beta ^2 n)\) and this can be much less than the cost for the diagonalization provided that the requested accuracy is not too high. For a general sparse matrix A, as an alternative to Algorithm 1 one can use the reverse Cuthill-McKee algorithm [20] to reorder the nodes and reduce the bandwidth, and then use (3.3) to find a distance-d coloring [29]. Depending on the structure of the graph induced by A this can yield good results, but in many cases better colorings are obtained by using Algorithm 1; see Example 3.4.

Regarding the accuracy of the approximation of \({{\,\textrm{tr}\,}}(f(A))\) by \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) we have the following result [29, Theorem 4.4].

Theorem 3.1

([29]) Let \(A\in \mathbb {R}^{n\times n}\) be symmetric with spectrum \(\sigma (A)\subset [a,b]\). Let f(x) be defined over [a, b] and let \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) be the approximation (3.2) of \({{\,\textrm{tr}\,}}(f(A))\) induced by a distance-d coloring. Then

If \(f(x)=-x\log (x)\), so that \({{\,\textrm{tr}\,}}(f(A))=S(A)\), we have the following result.

Corollary 3.2

Let \(A\in \mathbb {R}^{n\times n}\) be symmetric with \(\sigma (A)\subset [a,b]\), \(0\le a<b\), and put \(\gamma =a/b\). Then

for all \(d\ge 2\). In particular, if \(a=0\), we have

for all \(d\ge 2\).

Proof

The inequality (3.5) follows from Theorem 2.2 and Theorem 3.1. The inequality (3.6) follows from (3.5) with \(\gamma =a/b=0\). \(\square \)

Remark 3.3

The bound (3.5) can be pessimistic in practice, especially for large values of d. We will see this in Example 3.4 and in Sect. 6.2. A priori bounds based on polynomial approximations often fail to catch the exact convergence behavior in many problems related to matrix functions since a minimization problem over the spectrum of a matrix is relaxed to the whole spectral interval. This occurs in the convergence of polynomial Krylov methods [43, Section 5.6] and in the decay bounds on the entries of matrix functions [9, 28]. Also, the coloring we get via Algorithm 1 can return far more colors than needed for a distance-d coloring, and this can benefit the convergence in a way that is not predicted by the bound. We will see in Sect. 5.1 a more practical heuristic to predict the error with higher accuracy.

Example 3.4

Let us see how the bound and the convergence of the probing method perform in practice. We use the density matrix \(\rho =\mathcal {L}/{{\,\textrm{tr}\,}}(\mathcal {L})\), where \(\mathcal {L}\) is the Laplacian of the graph minnesota from the SuiteSparse Matrix Collection [21]. More precisely, we consider the biggest connected component whose graph Laplacian has size \(n=2640\) and 9244 nonzero entries.

For several values of d, we compute the approximation \({{\,\mathrm{\mathcal {T}}\,}}_d(-\rho \log \rho )\) associated with two different distance-d colorings: the first is obtained by the greedy coloring (Algorithm 1) after sorting the nodes by descending degree, while for the second we use the reverse Cuthill-McKee algorithm to get a 67-banded matrix and then (3.3).

In Fig. 2 we compare \({{\,\mathrm{\mathcal {T}}\,}}_d(-\rho \log (\rho ))\) with the value of \(S(\rho )\) obtained by diagonalizing \(\rho \), considered as exact. The left plot shows the error in terms of the number of colors (i.e. the number of probing vectors) associated with the distance-d colorings. In the right plot the errors are shown in terms of d together with bound (3.6), where \(b=\lambda _{\max }(\rho )\).

For a fixed value of d, the coloring based on the bandwidth provides a smaller error than the greedy one, but it also uses a larger number of colors. Indeed, we can see from the left plot that with the same computational effort the greedy algorithm obtains a smaller error. On the right we can observe that bound (3.6) is close to the error given by the greedy coloring for small values of d, while it fails to catch the convergence behavior for large values of d.

Absolute errors of the probing approximation of \(S(\rho )\) where the distance-d coloring is obtained either by the greedy procedure (Algorithm 1) or with the reverse Cuthill-McKee algorithm and the coloring (3.3) for banded matrices. On the left the abscissa represents the number of colors used for the coloring. On the right the errors are compared with bound (3.6) in terms of d

For the graph entropy, i.e. when \(\rho =\mathcal {L}/{{\,\textrm{tr}\,}}(\mathcal {L})\) and \(\mathcal {L}\) is a graph Laplacian of a graph \(\mathcal {G}\), we can show that \(-{{\,\mathrm{\mathcal {T}}\,}}_d(\rho \log \rho )\) is a lower bound for \(S(\rho )\). In the proof we use the fact that \(\rho \) is a symmetric M-matrix [14, Chapter 6], i.e. it can be written in the form \(\rho =\theta I-B\) where \(\theta >0\) and B is a nonnegative matrix such that \(\lambda \le \theta \) for all \(\lambda \in \sigma (B)\).

Lemma 3.5

Let \(A=\theta I-B\) be a symmetric M-matrix. Let \({{\,\textrm{d}\,}}(i,j)\) be the geodesic distance in the graph associated with A. Then \([-A\log (A)]_{ij}\le 0\) whenever \({{\,\textrm{d}\,}}(i,j)\ge 2\). If \(\theta <\exp (-1)\), then \([-A\log (A)]_{ij}\le 0\) for \(i\ne j\) and \(-A\log A\) is an M-matrix.

Proof

Suppose that \(\theta >\Vert B\Vert _2=\lambda _{\max }(B)\), so A is nonsingular. Then \(f(x)=-x\log x\) is analytic for \(|{x-\theta } |<\Vert B\Vert _2\) and it can be represented by the power series

Since \(\sigma (A)\subset (\theta -\Vert B\Vert _2,\theta +\Vert B\Vert _2)\), the series expansion holds for f(A):

If \({{\,\textrm{d}\,}}(i,j)\ge 2\) we have \([B]_{ij}=[-A]_{ij}=0\), and \(B^k\) is nonnegative for all \(k \ge 0\), so \([f(A)]_{ij}\le 0\). If \(\theta < \exp (-1)\), then \((1+\log \theta )B\) is nonpositive so \([f(A)]_{ij}\le 0\) for \(i\ne j\), and it is an M-matrix since it is positive semidefinite [14, Theorem 4.6].

If A is singular, consider the nonsingular M-matrix \(A+\epsilon I\) for \(\epsilon >0\). Then the results hold for \(f(A+\epsilon I)\) (we impose \(\theta +\epsilon <\exp (-1)\) if \(\theta <\exp (-1)\)) and notice that \(f(A)=\lim _{\epsilon \rightarrow 0}f(A+\epsilon I)\). This concludes the proof, since the limit of nonsingular M-matrices is an M-matrix. \(\square \)

Proposition 3.6

Let \(A\in \mathbb {R}^{n\times n}\) be a symmetric M-matrix, and let \({{\,\mathrm{\mathcal {T}}\,}}_d(-A\log (A))\) be the approximation (3.2) of S(A) induced by a distance-d coloring of \(\mathcal {G}(A)\) with \(d\ge 1\). Then \({{\,\mathrm{\mathcal {T}}\,}}_d(-A\log (A))\le S(A)\).

Proof

If \(V_1, \dots , V_s\) is the graph partitioning associated with a distance-d coloring, the error of the approximation can be written as

see [29] for more details. By definition of a distance-d coloring, for all \(i,j\in V_\ell \), \(i\ne j\), we have \({{\,\textrm{d}\,}}(i,j)\ge d+1 \ge 2\). Then, in view of Lemma 3.5, the right-hand side of (3.7) is nonnegative. \(\square \)

Remark 3.7

In this work we only consider the entropy of sparse density matrices. However, an important case is given by \(\rho = g(H)\), where H is the Hamiltonian of a certain quantum system and g is a function defined on the spectrum of H. Notable examples are the Gibbs state [19, 57] and the Fermi-Dirac state [1]. Despite \(\rho \) being a dense matrix in general, a sparse structure of H implies that \(\rho \) exhibits decay properties and can be well approximated by a sparse matrix [6, 7]. Moreover, since \(S(\rho )=-g(H)\log (g(H))\) one can apply the techniques described in this section to the composition \(-g(x)\log (g(x))\). Although the investigation of this problem is outside the scope of this work, it represents an interesting direction for future research. These considerations also hold for the randomized techniques discussed below.

3.2 Stochastic trace estimation

Let us consider the problem of computing \({{\,\textrm{tr}\,}}(B)\), where \(B \in \mathbb {R}^{n \times n}\) is a matrix that is not explicitly available but can be accessed via matrix–vector products \(B \varvec{x}\) and quadratic forms \(\varvec{x}^T B \varvec{x}\), for \(\varvec{x} \in \mathbb {R}^n\). The case of \(B = f(A)\) can be seen as an instance of this problem, since f(A) is expensive to compute, but \(f(A) \varvec{x}\) and \(\varvec{x}^T f(A) \varvec{x}\) can be efficiently approximated using Krylov methods, as we recall in Sect. 4.

Stochastic trace estimators compute approximations of \({{\,\textrm{tr}\,}}(B)\) by making use of the fact that, for any matrix B and any random vector \(\varvec{x}\) such that \(\mathbb {E}[\varvec{x} \varvec{x}^T] = I\), we have \(\mathbb {E}[\varvec{x}^T B \varvec{x}] = {{\,\textrm{tr}\,}}(B)\), where \(\mathbb {E}\) denotes the expected value. Hutchinson’s trace estimator [39] is a simple stochastic estimator that generates N vectors \(\varvec{x}_1, \dots , \varvec{x}_N\) with i.i.d. random \({\mathcal {N}}(0, 1)\) entries and approximates \({{\,\textrm{tr}\,}}(B)\) with

An algorithm that improves the convergence properties of Hutchinson’s estimator has been proposed in [47] with the name of Hutch++. It samples \(\Omega \in \mathbb {R}^{n\times N_r}\) with random i.i.d. \({\mathcal {N}}(0,1)\) entries, then computes \(B\Omega \) and an orthonormal basis \(Q\in \mathbb {R}^{n\times N_r}\) of \({{\,\textrm{range}\,}}(B\Omega )\). Then the estimator is given by

This estimator computes the trace of a rank \(N_r\) approximation of B and estimates the trace of the remaining part using the Hutchinson estimator with \(N_H\) samples, so its total cost is \(N_r\) matrix–vector products and \(N_r+N_H\) quadratic forms with B. It has been proven in [47, Theorem 4.1] that the complexity of Hutch++ is optimal up to logarithmic factors amongst algorithms that access a positive semidefinite matrix B via matrix–vector products.

The implementation of Hutch++ proposed in [18] allows to prescribe a target accuracy \(\epsilon >0\) and a failure probability \(\delta \in (0,1)\) in input and then choose adaptively the parameters \(N_r\) and \(N_H\) in order to get the tail bound

with as little computational effort as possible. This implementation goes under the name of adaptive Hutch++ and will be our stochastic estimator of choice for the von Neumann entropy in view of its flexibility and optimal convergence properties. Note that most of our theory for Krylov methods in Sect. 4 can be used in combination with any other stochastic estimator based on matrix–vector products and quadratic forms with \(B=f(A)\). For instance, we mention [52] where a low rank approximation of A constructed using powers of A is used to get a good approximation in case of fast decaying eigenvalues or large spectral gaps, and the paper [16] in which a Krylov subspace projection is integrated with the low rank approximation. Furthermore, our theory in Sect. 4 also applies to the techniques presented in the recent preprint [25] which improves on standard Hutch++ but does not allow an adaptive choice of the parameters as in adaptive Hutch++.

4 Computation of quadratic forms with Krylov methods

As shown in Sect. 3, the approximate computation of \({{\,\textrm{tr}\,}}(f(A))\) with probing methods or stochastic trace estimators can be reduced to the computation of several quadratic forms with f(A), i.e. expressions of the form \(\varvec{b}^T f(A) \varvec{b}\). In this section, we briefly describe how they can be efficiently computed using polynomial and rational Krylov methods.

A polynomial Krylov subspace associated to A and \(\varvec{b}\) is given by

More generally, given a sequence of poles \(\{ \xi _j \}_{j \ge 1} \subset (\mathbb {C}\cup \{\infty \}) {\setminus } {\sigma (A)} \cup \{0\} \), we can define a rational Krylov subspace as follows,

where \(q_{m-1}(z) = \displaystyle \prod \nolimits _{j = 1}^{m-1}(1 - z/\xi _j)\). If all poles are equal to \(\infty \), we have \(q_{m-1}(z) \equiv 1\) and \(\mathcal {Q}_m(A, \varvec{b})\) coincides with the polynomial Krylov subspace \(\mathcal {P}_m(A, \varvec{b})\), so \(\mathcal {P}_m(A, \varvec{b})\) can be considered as a special case of \(\mathcal {Q}_m(A, \varvec{b})\). Note that this definition of \(q_{m-1}\) does not allow us to have poles \(\xi _j = 0\); this can be fixed by changing the definition of \(q_{m-1}\) but it is not required in our case, since we are only going to use real negative poles and poles at \(\infty \).

Let us denote by \(V_m = [\varvec{v}_1 \, \dots \, \varvec{v}_m ]\) a matrix whose orthonormal columns span the Krylov subspace \(\mathcal {Q}_m(A, \varvec{b})\), and by \(A_m = V_m^T A V_m\) the projection of A onto the subspace. We can then project the problem on \(\mathcal {Q}_m(A, \varvec{b})\) and approximate \(\psi = \varvec{b}^T f(A) \varvec{b}\) in the following way,

If the basis \(V_m\) is constructed incrementally using the rational Arnoldi algorithm [51], we have \(\varvec{v}_1 = \varvec{b} / \Vert {\varvec{b}} \Vert _2\) and therefore

Note that the approximation \(\psi _m\) is closely related to the rational Krylov approximation of \(f(A) \varvec{b}\), which is given by

and is known to converge with a rate determined by the quality of rational approximations of f [35, Corollary 3.4]. We refer to [34, 35] for an extensive discussion on rational Krylov methods for the computation of matrix functions. The approximation (4.2) can be also interpreted in terms of rational Gauss quadrature rules, see for instance [2, 50].

Remark 4.1

The standard Arnoldi algorithm is inherently sequential since the computation of the new vector of the Krylov basis \(\varvec{v}_{m+1}\) requires the previous computation of \(\varvec{v}_m\). It is possible to parallelize it by solving several linear systems simultaneously and expanding the Krylov basis with blocks of vectors, with one of the strategies presented in [13], at the cost of lower numerical stability. Since in this work we are expected to compute several quadratic forms \(\varvec{b}^T f(A) \varvec{b}\), we can easily achieve parallelization by assigning the quadratic forms to different processors and thus we can neglect parallelism inside the computation of a single quadratic form.

Remark 4.2

We mention that for symmetric A it is possible to construct the Krylov basis \(V_m\) using a method based on short recurrences such as rational Lanczos [48]. This has the advantage of reducing the orthogonalization costs, which can become significant if m is large, and also avoids the need to store the matrix \(V_m\) when approximating the quadratic form \(\varvec{b}^T f(A) \varvec{b}\), see (4.2). However, the implementation in finite arithmetic of short recurrence methods can suffer from loss of orthogonality, which in turn can lead to a slower convergence. In order to avoid this potential problem, we use the rational Arnoldi method with full orthogonalization. Since we expect to attain convergence in a small number of iterations, the orthogonalization costs remain modest compared to the cost of operations with A.

4.1 Convergence

By [35, Corollary 3.4], the accuracy of the approximation (4.3) for \(f(A) \varvec{b}\) is related to the quality of rational approximations to the function f of the form \(r(z) = q_{m-1}(z)^{-1} p_{m-1}(z)\), where \(p_{m-1} \in \Pi _{m-1}\) and \(q_{m-1}\) is determined by the poles of the rational Krylov subspace (4.1).

In the case of quadratic forms we can prove a faster convergence rate using the fact that \(\psi _m = \psi \) for rational functions of degree up to \((2m-1, 2m-2)\).

Lemma 4.3

Assume that A is symmetric. Let \(p_{2m-1} \in \Pi _{2m-1}\) and define the rational function \(r(z) = q_{m-1}(z)^{-2} p_{2m-1}(z)\). Then we have

Proof

It is sufficient to prove this fact for \(p_{2m-1}(z) = z^k\), for \(k = 0, \dots , 2\,m-1\). Assuming for the moment that \(k = 2j + 1\) is odd, we have

Now using [35, Lemma 3.1], we obtain

The case of \(p_{m-1}(z) = z^k\) with k even can be proved in the same way, by writing

\(\square \)

Lemma 4.3 leads to the following convergence result for the approximation of quadratic forms, with the same proof as [35, Corollary 3.4].

Proposition 4.4

Let A be symmetric with spectrum contained in \([\lambda _\text {min}, \lambda _\text {max}]\), \(\psi = \varvec{b}^T f(A) \varvec{b}\) and denote by \(\psi _m\) the approximation (4.2). We have

By comparing Proposition 4.4 with [35, Corollary 3.4], we can expect the convergence for quadratic forms to be roughly twice as fast as the one for matrix–vector products with f(A).

4.2 Poles for the rational Krylov subspace

Recall that the function \(f(z) = x \log x\) has the integral expression (2.10), which corresponds to a Cauchy-Stieltjes function multiplied by the polynomial \(x(1-x)\). This implies that we can expect that a pole sequence that yields fast convergence for Cauchy-Stieltjes functions will be also effective in our case, especially if we add two poles at \(\infty \) to account for the degree-two polynomial.

The authors of [45] consider the case of a positive definite matrix A with spectrum in [a, b] and a Cauchy-Stieltjes function f, and relate the error for the computation of \(f(A) \varvec{b}\) with a rational Krylov method to the third Zolotarev problem in approximation theory. The solution to this problem is known explicitly and it can be used to find poles on \((-\infty , 0)\) that provide in some sense an optimal convergence rate for the rational Krylov method [45, Corollary 4]. However, the optimal Zolotarev poles are not nested, so they cannot be used to expand the Krylov subspace incrementally, and they are practical only if one knows in advance how many iterations to perform, for instance by relying on an a priori error bound. This drawback can be overcome by constructing a nested sequence of poles that is equidistributed according to the limit measure identified by the optimal poles, which can be done with the method of equidistributed sequences (EDS) described in [45, Section 3.5]. These poles have the same asymptotic convergence rate as the optimal Zolotarev poles and are usually better for practical purposes. To be computed, they require the knowledge of [a, b] or a positive interval \(\Sigma \) such that \([a, b] \subset \Sigma \).

As an alternative, one can also use poles obtained from Leja-Bagby points [3, 35]. These points can be computed with an easily implemented greedy algorithm and they have an asymptotic convergence rate that is close to the optimal one. See [35, Section 4] and the references therein for additional information.

Remark 4.5

For the function \(f(x) = x \log x\), the first few iterations of a polynomial Krylov method have a fast convergence rate that is close to the convergence rate of rational Krylov methods, even if it becomes asymptotically much slower for ill conditioned matrices. The faster initial convergence can be explained by the algebraic factor in the bound (2.13) for polynomial approximations of \(x \log x\). Since polynomial Krylov iterations are cheaper than rational Krylov iterations, this suggests the use of a mixed polynomial-rational method, that starts with a few polynomial Krylov steps and then switches to a rational Krylov method with, e.g., EDS poles to achieve a higher accuracy. These methods are compared numerically in Example 4.10, where we also test the performance of the a posteriori error bound that we prove in Sect. 4.3.

Remark 4.6

Note that in the context of the graph entropy the matrix A is a graph Laplacian, which is a singular matrix. Therefore in principle it is not possible to use the poles described in this section, since here we assume that A is positive definite. However, we can use one of the desingularization strategies described in [11] to remove the 0 eigenvalue of the graph Laplacian, obtaining a matrix with spectrum contained in \([\lambda _2, \lambda _n]\), where \(\lambda _2\) is the second smallest eigenvalue of A and \(\lambda _n\) is the largest one. In our implementation we use the approach that is called implicit desingularization in [11], which consists in replacing the initial vector \(\varvec{b}\) for the Krylov subspace with \(\varvec{c} = \varvec{b} - \frac{\mathbbm {1}^T \varvec{b}}{n} \mathbbm {1}\), where \(\mathbbm {1}\) is the vector of all ones. Since \(\varvec{c}\) is orthogonal to the eigenvector \(\mathbbm {1}\) associated to the eigenvalue 0, it can be shown that the convergence of a Krylov subspace method with starting vector \(\varvec{c}\) is the same as for a matrix with spectrum in \([\lambda _2, \lambda _n]\). An approximation of \(f(A) \varvec{b}\) can be then cheaply recovered from \(f(A) \varvec{c}\) using the fact that \(f(A) \mathbbm {1}= f(0) \mathbbm {1}\), and similarly for \(\varvec{b}^T f(A) \varvec{b}\). See [11] for more details.

4.3 A posteriori error bound

In this section we prove an a posteriori bound for the error in the computation of the quadratic form \(\varvec{b}^T f(A) \varvec{b}\) with a rational Krylov method. This bound is a variant of the one described in [34, Section 6.6.2] for \(f(A) \varvec{b}\), modified in order to account for the faster convergence rate in the case of quadratic forms.

We recall that after m iterations the rational Arnoldi algorithm yields the rational Arnoldi decomposition [12, Definition 2.3]

where \({{\,\textrm{span}\,}}V_{m+1} = \mathcal {Q}_{m+1}(A, \varvec{b})\) and \(\underline{K_m}\), \(\underline{H_m}\) are \((m+1) \times m\) upper Hessenberg matrices with full rank. Let us consider the situation when \(\xi _m = \infty \): in this case the last row of \(\underline{K_m}\) is zero, and the decomposition simplifies to

where \(H_m\) and \(K_m\) denote the \(m \times m\) leading principal blocks of \(\underline{H_m}\) and \(\underline{K_m}\), respectively, and \(\varvec{h}_{m+1}^T = h_{m+1, m} \varvec{e}_m^T\) denotes the last row of \(\underline{H_m}\). Note that \(K_m\) is nonsingular since \(\underline{K_m}\) has full rank, so we can rewrite the decomposition as

Remark 4.7

To derive the bound, we assume that \(\xi _m = \infty \) because it simplifies the rational Arnoldi decomposition and hence the expression of the bound. Such an assumption is not restrictive, since the value of \(\xi _m\) does not have any impact on \(V_m\) and \(A_m\), but only on \(\varvec{v}_{m+1}\) and the last column of \(\underline{H_m}\) and \(\underline{K_m}\). As we shall see later, we can use a technique described in [34, Section 6.1] to compute the bound for all m, without having to set the corresponding poles \(\xi _m = \infty \). Note that if \(\xi _m \ne \infty \), then (4.5) does not hold, and in particular \(V_m^T A V_m \ne H_m K_m^{-1}\).

By using the Cauchy integral formula for f, we can obtain the following expression for the error [34, Section 6.2.2]:

where \(\Gamma \) is a contour contained in the region of analyticity of f that encloses the spectrum of A, and

which can be seen as a residual vector of the shifted linear system \((z I - A) \varvec{x} = \varvec{b}\). It turns out that [34, Section 6.2.2]

Observe that we have

where we exploited the fact that \(\varvec{x}_m(z) \in \mathcal {Q}_m(A, \varvec{b}) \perp \varvec{r}_m(z)\).

By using this property in conjunction with (4.6), we can write the error for the quadratic form \(\varvec{b}^T f(A) \varvec{b}\) as

We can now follow the same steps used in [34, Section 6.2.2] to bound the integral in (4.7). Assume that \(A_m\) has the spectral decomposition \(A_m = U_m D_m U_m^T\), with \(U_m\) orthogonal and \(D_m = {{\,\textrm{diag}\,}}(\theta _1, \dots , \theta _m)\), and define the vectors

so that we have

and

where we defined \(\gamma _j = \displaystyle \sum _{\ell \,:\, \ell \ne j} \alpha _\ell \beta _\ell \dfrac{1}{\theta _j - \theta _\ell }\). By plugging this expression into (4.7) we get

where for the last equality we used the residue theorem [37, Theorem 4.7a].

Let us define

where the expression for \(z = \theta _j\) is obtained by taking the limit for \(z \rightarrow \theta _j\) in the definition for \(z \ne \theta _j\). The above computations immediately lead to the following a posteriori bound for the quadratic form error.

Theorem 4.8

Let A be a symmetric matrix with spectrum \(\sigma (A) \subset [\lambda _\text {min}, \lambda _\text {max}]\). Using the same notation as above, we have

Remark 4.9

We are mainly interested in the upper bound in (4.9) to have a reliable stopping criterion for the rational Krylov method, although the lower bound can also be of interest. We also mention that other bounds and error estimates can be obtained, such as the other ones described in [34, Section 6.6], but we found that the one derived in this section worked well enough for our purposes. Under certain assumptions, it is also possible to obtain upper and lower bounds for the quadratic form \(\varvec{b}^T f(A) \varvec{b}\) using pairs of rational Gauss quadrature rules, such as Gauss and Gauss-Radau quadrature rules. We refer to [2] for more details.

Example 4.10

In this example we test the accuracy of the lower and upper bounds given in (4.9) for polynomial and rational Krylov methods. We consider the computation of \(\varvec{b}^T f(A) \varvec{b}\), for a \(2000 \times 2000\) matrix A with eigenvalues given by the Chebyshev points for the interval \(\Sigma = [10^{-3}, 10^3]\), a random vector \(\varvec{b}\) and \(f(x) = x \log (x)\). The upper and lower bounds are computed numerically by evaluating \(g_m\) on a discretization of the interval \([\lambda _\text {min}, \lambda _\text {max}]\). In addition to the lower and upper bounds, we also consider a heuristic estimate of the error given by the geometric mean of the upper and lower bound in (4.9), i.e.

The results are shown in Fig. 3. On the left plot we show the convergence for the polynomial Krylov method and for a rational Krylov method with poles from an EDS for Cauchy-Stieltjes functions, and on the right plot a mixed polynomial-rational method that uses 10 poles at \(\infty \) (which correspond to polynomial Krylov steps) followed by 10 EDS poles (see Remark 4.5). The upper and lower bounds match the shape of the convergence curve quite well, although they are less accurate when polynomial iterations are used. Rather surprisingly, the geometric mean of the bounds gives a very accurate estimate for the error, even in the case when the bounds themselves are less accurate.

Remark 4.11

We do not have a rigorous explanation for the accuracy of the estimate based on the geometric mean of the bounds in (4.9), but from further experiments it seems to be very accurate also for other functions. Unfortunately, if the spectral interval \(\Sigma \) is known only approximately, the bounds become less tight and the geometric mean estimate usually ends up underestimating the actual error by one or two orders of magnitude.

Accuracy of error bounds and estimates for the relative error in the computation of \(\varvec{b}^T f(A) \varvec{b}\) with Krylov methods, where \(\varvec{b}\) is a random vector, \(f(x) = x \log (x)\) and A is a \(2000 \times 2000\) matrix with eigenvalues that are Chebyshev points in the interval \([10^{-3}, 10^3]\). Left: polynomial Krylov and rational Krylov with EDS poles for Cauchy-Stieltjes function. Right: 10 poles at \(\infty \) and 10 EDS poles for Cauchy-Stieltjes functions

4.3.1 Computation of the bound

Recall that the a posteriori bounds in (4.9) hold after the m-th iteration only if \(\xi _m = \infty \). In a practical scenario, i.e. when using the bounds as a stopping criterion for a rational Krylov method, it is desirable to evaluate the bounds after each iteration, without being forced to set the corresponding pole to \(\infty \). As anticipated in Remark 4.7, we provide here two approaches to evaluate the bounds in (4.9) even when \(\xi _m \ne \infty \).

One way to avoid setting poles to \(\infty \), proposed in [34, Section 6.1], is to use an auxiliary basis vector \(\varvec{v}_\infty \), which is initialized as \(\varvec{v}_\infty ^{(1)} = A \varvec{v}_1\) at the beginning of the rational Arnoldi algorithm, and maintained orthonormal to the basis vectors \(\{\varvec{v}_1, \dots , \varvec{v}_j\}\) at each iteration j, at the cost of only one additional orthogonalization per iteration. The basis \([V_j \; \varvec{v}_\infty ^{(j)}]\) is an orthonormal basis of the rational Krylov subspace \(\mathcal {Q}_{j+1}(A, \varvec{b})\) with poles \(\{ \xi _1, \dots , \xi _{j-1}, \infty \}\), and it is associated to the auxiliary Arnoldi decomposition

where \({\widetilde{K}}_j\varvec{e}_j = \varvec{e}_1\) and the last column of \(\underline{{\widetilde{H}}_j}\) contains the orthogonalization coefficients for \(\varvec{v}_\infty ^{(j)}\). This decomposition can be used to compute the bound (4.9) since the last row of \(\underline{{\widetilde{K}}_j}\) is zero by construction.

Remark 4.12

We point out that if \(\xi _j = \infty \) for some j, then the approach described above will not work from iteration \(j+1\) onward, since \(A \varvec{v}_1 \in \mathcal {Q}_{j+1}(A, \varvec{b})\) and therefore \(\varvec{v}_\infty ^{(j+1)} = \varvec{0}\) after orthogonalization. This is easily fixed by switching to a different auxiliary vector at iteration \(j+1\), such as \(\varvec{v}_\infty = A \varvec{v}_{j+1}\), or by setting directly at the start \(\varvec{v}_\infty = A^{\ell + 1} \varvec{v}_1\), where \(\ell \) is the number of poles at \(\infty \) used to construct the rational Krylov subspace.

In our experience, the technique described above can be sometimes subject to instability due to a large condition number of the matrix \({\widetilde{K}}_j\). We therefore also propose another approach, which is inspired by the methods for moving the poles of a rational Krylov subspace presented in [12, Section 4]. The idea is to add a pole at \(\infty \) at the beginning of the pole sequence, and reorder the poles at each iteration in order to always have the last pole equal to \(\infty \).

First of all, we recall how to swap poles in a rational Arnoldi decomposition. This procedure is a special case of the algorithm described in [12], but we still describe it in some detail for completeness. Recall that the poles of a rational Krylov subspace are the ratios of the entries below the main diagonals of \(\underline{H_j}\) and \(\underline{K_j}\) [12, Definition 2.3], i.e. \(\xi _j = h_{j+1, j}/k_{j+1, j}\). In other words, the poles \(\{\xi _1, \dots , \xi _j\}\) are the eigenvalues of the upper triangular pencil \(({\widehat{H}}_j, {\widehat{K}}_j)\), where we denote by \({\widehat{H}}_j\) the bottom \(j \times j\) block of \(\underline{H_j}\), and similarly for \({\widehat{K}}_j\). So we can obtain a transformation that swaps two adjacent poles in the same way as orthogonal transformations that reorder eigenvalues in a generalized Schur form [40]. Let \(U_j\) and \(W_j\) be \(j \times j\) orthogonal matrices such that the pencil \(U_j^T ({\widehat{H}}_j, {\widehat{K}}_j) W_j\) is still in upper triangular form and has the last two eigenvalues in reversed order; the matrices \(U_j\) and \(W_j\) only involve \(2 \times 2\) rotations, and they can be computed and applied cheaply as described in [40]. Defining \({\widehat{U}}_j = {{\,\textrm{blkdiag}\,}}(1, U_j) \in \mathbb {R}^{(j+1) \times (j+1)}\), we have the new rational Arnoldi decomposition

where

This decomposition has the same poles as \(A V_{j+1} \underline{K_j} = V_{j+1} \underline{H_j}\), with the difference that the last two poles \(\xi _{j-1}\) and \(\xi _j\) are now swapped. In particular, if \(\xi _{j-1} = \infty \), the last pole of the new decomposition is now \(\infty \), and hence the last row of \(\underline{{\widetilde{K}}_j}\) is equal to zero.

Given the pole sequence \(\{ \xi _1, \xi _2, \dots \}\), let us consider the rational Krylov subspace associated to the modified pole sequence \(\{ \infty , \xi _1, \xi _2, \dots \}\). Clearly, after the first iteration both pole sequences identify the same subspace \(\mathcal {Q}_1(A, \varvec{b})\), but the last (and first) pole of the modified sequence is \(\infty \), so the last row of \(\underline{K_1}\) is zero and we can use the decomposition \(A V_1 K_1 = V_2 \underline{H_1}\) to compute the bound (4.9). After the second iteration, if \(\xi _1 \ne \infty \), we can swap the poles \(\xi _1\) and \(\infty \) with the procedure outlined above to obtain the decomposition \(A V_2 K_2 = V_3 \underline{H_2}\) associated to the poles \(\{ \xi _1, \infty \}\), where again the last row of \(\underline{K_2}\) is equal to zero (for simplicity we still use the notation \(K_j\) instead of \({\widetilde{K}}_j\), and similarly for \(V_j\) and \(H_j\)). If \(\xi _1 = \infty \), there is no need to swap poles and we can proceed to the next iteration.

By repeating the same steps at each iteration, we can ensure that after j iterations we have a decomposition \(A V_j K_j = V_{j+1} \underline{H_j}\), associated to the poles \(\{ \xi _1, \dots , \xi _{j-1}, \infty \}\) in this order, so that the last row of \(\underline{K_j}\) is equal to zero and it can be used to compute the bound (4.9).

Remark 4.13

Note that \(V_j\) is a basis of \(\mathcal {Q}_j(A, \varvec{b})\), which is the same subspace that we would have obtained if we had run the rational Arnoldi algorithm with poles \(\{\xi _1, \dots , \xi _{j-1}\}\); so the method described in this section actually computes the approximation \(\psi _j\) and the bound associated to the poles \(\{\xi _1, \dots , \xi _{j-1}\}\), and not to the modified pole sequence \(\{ \infty , \xi _1, \dots , \xi _{j-2} \}\). The initial pole at \(\infty \) is only added to enable the computation of the bound and it is never used in the actual approximation.

5 Implementation aspects

In this section we outline the algorithm used to compute the entropy obtained by connecting the different components presented in the previous sections, and we briefly comment on some of the decisions that have to be taken in an implementation, especially concerning stopping criteria. Given a symmetric positive semidefinite matrix A with \({{\,\textrm{tr}\,}}(A) = 1\) and a target relative accuracy \(\epsilon \), the algorithm should output an estimate trest of \({{\,\textrm{tr}\,}}(f(A))\), where \(f(x) = - x \log x\), such that

using either the probing approach of Sect. 3.1 or a stochastic trace estimator from Sect. 3.2. Observe that the entropy of an \(n \times n\) density matrix is always bounded form above by \(\log n\), but it may be in principle very small, so we prefer to aim for a certain relative accuracy rather than an absolute accuracy. Quadratic forms and matrix–vector products with f(A) are computed using Krylov methods, specifically using a certain number of poles at \(\infty \) followed by the EDS poles described in Sect. 4.2.

Remark 5.1

In the following, we are going to use \({{\hat{\epsilon }}}\) to denote an absolute error, to distinguish it from the target relative accuracy \(\epsilon \). Note that we can easily transform absolute inequalities for the error into relative inequalities if we know in advance an estimate or a lower bound for \({{\,\textrm{tr}\,}}(f(A))\). Recall that if A is an M-matrix, \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) is actually a lower bound for \({{\,\textrm{tr}\,}}(f(A))\) (Proposition 3.6). In the general case, any rough approximation of the entropy can be used for this purpose, since the important point is determining the order of magnitude of \({{\,\textrm{tr}\,}}(f(A))\).

Remark 5.2

The error in the approximation of S(A) can be divided into the error in the approximation of the trace using a probing method or a stochastic estimator, and the error in the approximation of the quadratic forms with f(A) using a Krylov subspace method. For simplicity, in the following we impose that the relative error associated to each of these two components is smaller than \(\epsilon /2\).

5.1 Probing method implementation

We begin by observing that it is not possible to cheaply estimate the error of a probing method a posteriori, since error estimates are usually based on approximations with different values of the distance d, which in general lead to completely different colorings that would require computing all quadratic forms from scratch.

For this reason, it is best to find a value of d that ensures a relative accuracy \(\epsilon \) a priori when using a distance-d coloring. This can be done using one of the bounds in Corollary 3.2, but it can often lead to unnecessary additional work, since the bounds usually overestimate the error by a couple of orders of magnitude; see Fig. 2. Therefore we also provide a heuristic criterion for choosing d that does not have the same theoretical guarantee as the bounds, but appears to work quite well in practice. In view of Corollary 3.2, we can expect the absolute error to behave as

for \(k = 2\) and some parameters \(C > 0\) and \(q \in (0,1)\). However, we found that sometimes the actual error behavior is better described with a different value of k, such as \(k = 3\), so we do not impose that \(k = 2\). To estimate the values of the parameters, we compute \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) for \(d = 1, 2, 3\) and use the estimate

Assuming that (5.1) holds exactly and fixing the value of k, we can determine C and q by solving the equations

We can check when the resulting estimate is below \({{\hat{\epsilon }}}\) to heuristically determine d, i.e., we select d as

in order to have the approximate absolute error inequality

We found that the best results are obtained for \(k = 2\) and \(k = 3\), so in our implementation we use the maximum of the two corresponding estimates. Variants of this estimate include using other values of d to estimate the parameters instead of \(d = 1, 2, 3\), and using four different values in order to also estimate the parameter k. However, they usually give results that are similar or sometimes worse than the estimate presented above, so they are often not worth the additional effort required to compute them. In particular, using four values of d raises the risk of misjudging the value of q, causing the estimate to be inaccurate for large values of d. The error estimate (5.1) is compared to the actual error and the theoretical bound (3.5) for two different graphs in Fig. 4. The figure also includes a simple error estimate based on consecutive differences, which requires the computation of \({{\,\mathrm{\mathcal {T}}\,}}_{d+1}(f(A))\) to estimate the error for \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\).

Absolute error of the probing method with greedy coloring (Algorithm 1) compared with the theoretical bound (3.5) using \(a = \lambda _2\), the heuristic error estimate (5.1) and the simple error estimate \(|{{{\,\textrm{tr}\,}}(f(A) - {{\,\mathrm{\mathcal {T}}\,}}_d(f(A))} | \approx |{{{\,\mathrm{\mathcal {T}}\,}}_{d+1}(f(A)) - {{\,\mathrm{\mathcal {T}}\,}}_d(f(A))} |\). Left: Laplacian of the largest connected component of the graph minnesota, with 2640 nodes. Right: Laplacian of the largest connected component of the graph eris1176, with 1174 nodes

Remark 5.3

The heuristic criterion for selecting d requires the computation of \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) for \(d = 1, 2, 3\), so it is more expensive to use than the theoretical bound (3.5). However, this cost is usually small compared to the cost of computing \({{\,\mathrm{\mathcal {T}}\,}}_d(f(A))\) for the selected value of d, especially if the requested accuracy is small. Note also that the heuristic criterion always computes \({{\,\mathrm{\mathcal {T}}\,}}_3(f(A))\), so it does more work than necessary when \(d \le 2\) would be sufficient. Nevertheless, in such a situation the theoretical bound (3.5) may suggest to use an even higher value of d (see Fig. 4).

After choosing d such that

using either the a priori bound (3.5) or the estimate (5.1), a distance-d coloring can be computed with one of the coloring algorithms described in Sect. 3.1, depending on the properties of the graph. The greedy coloring [53, Algorithm 4.2] is usually a good choice for general graphs.

Let us now determine the accuracy required in the computation of the quadratic forms. Assume that we have

where \(\{\varvec{v}_\ell \}_{\ell = 1}^s\) are the probing vectors used in the distance-d coloring. Let \({\hat{\psi }}_\ell \) denote the approximation of \(\varvec{v}_\ell ^T f(A) \varvec{v}_\ell \) obtained with a Krylov method. Recall that \(\Vert {\varvec{v}_\ell } \Vert _2 = |{V_\ell } |^{1/2}\), where \(V_\ell \) denotes the set of the partition associated to the \(\ell \)-th color. If we impose the conditions

where we normalized the accuracy requested for each quadratic form depending on \(\Vert {\varvec{v}_\ell } \Vert _2\), we obtain the desired absolute accuracy on the probing approximation

If we are aiming for a relative accuracy \(\epsilon \), we should select \({{\hat{\epsilon }}} = \frac{1}{2}\,\epsilon {{\,\textrm{tr}\,}}(f(A))\) in (5.2) and (5.4). In practice, \({{\,\textrm{tr}\,}}(f(A))\) will be replaced by a rough approximation (see Remark 5.1).

The overall probing algorithm is summarized in Algorithm 2.

5.2 Adaptive Hutch++ implementation

We use the Matlab code of [49, Algorithm 3] provided by the authors, modified to use Krylov methods for the computations with f(A). This algorithm requires an absolute tolerance \({{\hat{\epsilon }}}\) and a failure probability \(\delta \), and outputs an approximation \({{\,\textrm{tr}\,}}_\text {adap}(f(A))\) such that

To obtain an approximation within a relative accuracy \(\epsilon \), we can use \({{\hat{\epsilon }}} \approx \epsilon {{\,\textrm{tr}\,}}(f(A))\), using a rough approximation of \({{\,\textrm{tr}\,}}(f(A))\). Similarly to the probing method, in order to have a final relative error bounded by \(\epsilon \), in our implementation we use a tolerance \({{\hat{\epsilon }}} \approx \frac{1}{2} \epsilon {{\,\textrm{tr}\,}}(f(A))\) for adaptive Hutch++, and we set the accuracy for the computation of matrix–vector products and quadratic forms in order to ensure that the total error due to the Krylov approximations remains below \(\frac{1}{2} \epsilon {{\,\textrm{tr}\,}}(f(A))\). We omit the technical details to simplify the presentation.

5.3 Krylov method implementation

Quadratic forms with f(A) are approximated using a Krylov method with some poles at \(\infty \) followed by the EDS poles of Sect. 4.2, using as a stopping criterion either the a posteriori upper bound (4.9) or the estimate shown in Example 4.10.

The number of poles at \(\infty \) is chosen in an adaptive way, switching to finite poles when the error reduction in the last few iterations of the polynomial Krylov method is “small”. Specifically, we decide to switch to EDS poles after the k-th iteration if on average the last \(\ell \ge 1\) iterations did not reduce the error bound or estimate err_est by at least a factor \(c \in (0, 1)\), i.e. if

In our implementation we use \(\ell = 3\) and \(c = 0.75\), usually leading to at most 10 polynomial Krylov iterations.

Since EDS poles are contained in \((-\infty , 0)\), each rational Krylov iteration involves the solution of a symmetric positive definite linear system, which can be computed either with a direct method using a sparse Cholesky factorization, or iteratively with the conjugate gradient method using a suitable preconditioner. Note that the same EDS poles can be used for all quadratic forms, so the number of different matrices that appear in the linear systems is usually small and independent of the total number of quadratic forms. Although this depends on the accuracy requested for the entropy, the number of EDS poles used is almost always bounded by 10, and often much smaller than that: see the numerical experiments in Sect. 6 for some examples. This is a great advantage for direct methods, especially when the Cholesky factor remains sparse, since we can compute and store a Cholesky factorization for each pole and then reuse it for all quadratic forms. If the fill-in in the Cholesky factor is moderate, the cost of a rational iteration can become comparable to the cost of a polynomial one, leading to large savings when computing many quadratic forms. Of course, for large matrices with a general sparsity structure the computation of even a single Cholesky factor may be unfeasible, so the only option is to use a preconditioned iterative method. In such a situation, it is still possible to benefit from the small number of different matrices that appear in linear systems by storing and reusing preconditioners, but the gain is less evident compared to direct methods.

The matrix–vector products with f(A) in the Hutch++ algorithm are approximated with the same Krylov subspace method, with the difference that we use the a posteriori upper and lower bounds from [34, eq. (6.15)]. A geometric mean estimate similar to the one used in Example 4.10 can be also used in this context. For the computation of the a posteriori bounds we use the pole swapping technique with an auxiliary pole at \(\infty \) described in Sect. 4.3.1.

6 Numerical experiments

The experiments were done in Matlab R2021b on a laptop with operating system Ubuntu 20.04, using a single core of an Intel i5-10300 H CPU running at 2.5 GHz, with 32 GB of RAM. Since we are using Matlab, the execution times may not reflect the performance of a high performance implementation, but they are still a useful indicator when comparing different methods.

6.1 Test matrices

We consider a number of symmetric test matrices from the SuiteSparse Matrix Collection [21]. All matrices are treated as binary matrices, i.e. all edge weights are set to one. For each matrix, we extract the graph Laplacian associated to the largest connected component and we normalize it so that it has unit trace. We report some information on the resulting matrices in Table 1. For the four smallest matrices, the eigenvalues were computed via diagonalization, while for the larger matrices the eigenvalues \(\lambda _2\) and \(\lambda _n\) were approximated using eigs. The cost of solving a linear system with a direct method is highly dependent on the fill-in in the Cholesky factorization; the column labelled fill-in in Table 1 contains the ratios \({\texttt {nnz}}(R)/\texttt {nnz}(\rho )\), where \(\rho \) is the test matrix and R is the Cholesky factor of any shifted matrix \(\rho + \alpha I\), for \(\alpha > 0\) (nnz(M) denotes the number of nonzeros of a matrix M). All matrices have been ordered using the approximate minimum degree reordering option available in Matlab before factorization.

6.2 Probing bound vs. estimate

In this experiment, we fix a relative error tolerance \(\epsilon = 10^{-3}\) and we compare the choice of d given by the theoretical bound (3.5) with the one provided by the heuristic estimate (5.1). We report in Table 2 the error, the execution time, the value of d and the number of colors used in the two cases. When the theoretical bound is used, the selected value of d is significantly higher compared to the one chosen by the heuristic estimate, but in both cases the overall error remains below the tolerance \(\epsilon \). Moreover, for certain graphs using the theoretical bound leads to greedy colorings with a number of colors equal to the number of nodes in the graph, completely negating the advantage of using a probing method. Observe that the errors obtained with the larger value of d are not much smaller than the ones for the smaller value of d, because in both cases the quadratic forms are computed with target relative accuracy \(\epsilon \), so the probing error for the larger value of d is dominated by the error in the quadratic forms. In the case of the heuristic estimate, the execution time includes the time required to run the probing method for \(d = 1, 2, 3\) in order to evaluate (5.1). The stopping criterion for the Krylov subspace method uses the upper bound (4.9). The execution time can be further reduced by using the estimate (4.10) for the Krylov subspace method, as the following experiment shows. The diagonalization time for the matrices used in this experiment can be found in the last column of Table 4. Note that for smaller matrices, diagonalization is often the fastest method, but the advantage of approximating the entropy with a probing method is already evident for matrices of size \(n \approx 10000\).

6.3 Krylov bound vs. estimate

We fix an error tolerance \(\epsilon = 10^{-5}\) and compare the performance of the geometric mean error estimate (4.10) with the theoretical upper bound (4.9) for the Krylov subspace method. The value of d for the probing method is selected using the heuristic estimate (5.1). The entropy error, execution time, and total number of polynomial and rational Krylov iterations are reported in Table 3. We can see that using the estimate instead of the theoretical bound moderately reduces the computational effort, while still attaining the requested accuracy \(\epsilon \) on the entropy. In particular, observe that the number of rational Krylov iterations is significantly higher when using the upper bound (4.9). In this experiment, all linear systems are solved with direct methods and Cholesky factorizations are stored and reused.

6.4 Adaptive Hutch++

Here we test the performance and the accuracy of the adaptive implementation of Hutch++ [49, Algorithm 3]. A relative accuracy \(\epsilon \) is achieved by setting the absolute tolerance to \(\epsilon S(\rho )\), where \(S(\rho )\) is computed via diagonalization and considered as exact. The computational effort of Hutch++ is determined by the parameters \(N_r\) and \(N_H\) described in Sect. 3.2. In particular, the number of matrix–vector products is equal to \(N_r\) and the number of quadratic forms is equal to \(N_r+N_H\). We used \(\epsilon =10^{-2}, 10^{-3}\) as target tolerances and \(\delta =10^{-2}\) as failure probability. Matrix–vector products and quadratic forms are computed using the Krylov subspace method with the geometric mean estimate as a stopping criterion (4.10). In Table 4 we compare the results for the two tolerances, obtained as an average of 100 runs of the algorithm, including both the average and worst relative error. In the majority of cases, the worst error is below the input tolerance \(\epsilon \). We see that for \(\epsilon = 10^{-2}\) the computation with Hutch++ is very fast for all test matrices; on the other hand, the cost becomes significantly higher for \(\epsilon = 10^{-3}\), showing that the stochastic estimator quickly becomes inefficient as the required accuracy increases. Observe that for \(\epsilon = 10^{-2}\) adaptive Hutch++ uses only 3 matvecs for all tests problems, which is the minimum amount that can be used by the implementation in [49]. This means that the internal criteria of the algorithm have determined that spending more matvecs in the low rank approximation is not beneficial, and hence the convergence of the method is roughly the same as for Hutchinson’s estimator. A similar behavior can be observed in Table 7, and can be linked to the fact that for these test matrices \(\rho \), the matrix function \(-\rho \log \rho \) does not exhibit eigenvalue decay and hence cannot be well-approximated by low rank matrices. On the other hand, for problems where low rank approximation is more effective, stochastic estimators that exploit it such as Hutch++ can have much faster convergence.

6.5 Larger matrices

In this section we test the probing method and the adaptive Hutch++ algorithm on larger matrices, for which it would be extremely expensive to compute the exact entropy. In light of the results shown in Tables 2 and 3, we select the value of d for the probing method using the heuristic estimate (5.1) and we use the geometric mean estimate (4.10) for the Krylov subspace method. The results are reported in Tables 5 and 6 for the probing method, and in Table 7 for Hutch++. Figure 5 contains a more detailed breakdown of the execution time for the probing method used on the matrices of Table 5. We separate the time in preprocessing, where we evaluate the heuristic (5.1) to select d, and the main run of the algorithm with the chosen value of d. The time for the main run is further divided in coloring, and polynomial and rational Krylov iterations. The time for the Cholesky factorizations refers to the whole process, since the factors are computed and stored when a certain pole for a rational Krylov iteration is encountered for the first time.

In Table 5, we consider matrices with a “large-world” sparsity structure, such as road networks, and we use a relative tolerance of \(\epsilon = 10^{-4}\). For these matrices, for which the diameter and the average path length are relatively large, it is possible to compute distance-d colorings with a relatively small number of colors, and therefore probing methods converge quickly. Moreover, Cholesky factorizations can be computed cheaply and have a small fill-in, so it is possible to rapidly solve linear systems using a direct method. On the other hand, in Table 6 we consider matrices with a “small-world” sparsity structure, more typical of social networks and scientific collaboration networks. These matrices require a much larger number of colors to construct distance-d colorings, even for small values of d. The cost of probing methods is thus significantly higher on this kind of problem. In Table 6, only polynomial Krylov iterations are used due to the low relative tolerance \(\epsilon = 10^{-2}\), so it is never necessary to solve linear systems. Recall that these matrices also have a high fill-in in the Cholesky factorizations (see Table 1), so the conjugate gradient method with a suitable preconditioner is likely to be much more efficient than a direct method for solving a linear system.

In Table 7 we show the results for the adaptive Hutch++ algorithm, using relative tolerance \(\epsilon = 10^{-2}\). The results are obtained as an average of 100 runs of the algorithm. We can observe that the stochastic trace estimator works well for both large-world and small-world graphs, in contrast to the probing method.

Breakdown of the execution time of the probing method for the test matrices in Table 5

6.6 Algorithm scaling

To investigate how the complexity of the algorithms scales with the matrix size, we compare the scaling of the probing method and the stochastic trace estimator on two different test problems with increasing dimension. The first one is the graph Laplacian of a 2D regular square grid, and the second one is the graph Laplacian of a Barabasi-Albert random graph, generated using the pref function of the CONTEST Matlab package [55]. For the probing method on the 2D grid, we use the optimal distance-d coloring with \(\big \lceil \frac{1}{2} (d+1)^2 \big \rceil \) colors described in [26]. For both test problems, we use a relative tolerance \(\epsilon = 10^{-4}\) for the probing method, and a relative tolerance \(\epsilon = 10^{-2}\) and failure probability \(\delta = 10^{-2}\) for adaptive Hutch++, averaging over 100 runs. The results are summarized in Fig. 6, for graphs with a number of nodes from \(n = 2^{10}\) to \(n = 2^{20}\). As expected, the probing method is much more efficient in the case of the 2D grid, since the number of colors used in the distance-d colorings remains constant as n increases. On the other hand, for the Barabasi-Albert random graph, which has a small-world structure, the number of colors used in a distance-d coloring increases with the number of nodes, and hence the scaling for the probing method is significantly worse. The adaptive Hutch++ algorithm also has a better performance for the 2D grid, but the scaling in the problem size is good for both graph categories, since the number of vectors used in the trace approximation does not increase with the matrix dimension. However, the stochastic approach is only viable with a loose tolerance \(\epsilon \) for this kind of problem since low rank approximation is not effective, as discussed in Sect. 6.4. The initial decrease in the execution time for Hutch++ as n increases is caused by the fact that the adaptive algorithm uses a larger number of vectors for the graphs with fewer nodes.

7 Conclusions

In this paper we have investigated two approaches for approximating the von Neumann entropy of a large, sparse, symmetric positive semidefinite matrix. The first method is a state-of-the-art randomized approach, while the second one is based on the idea of probing. Both methods require the computation of many quadratic forms involving the matrix function f(A) with \(f(x) = - x \log x\), an expensive task given the lack of smoothness of f(x) at \(x=0\). We have examined the use of both polynomial and rational Krylov subspace methods, and combinations of the two. Pole selection and several implementation aspects, such as heuristics and stopping criteria, have been investigated. Numerical experiments in which the entropy is computed for a variety of networks have been used to test the various approximation methods. Not surprisingly, the performance of the methods is affected by the structure of the underlying network, especially for the method based on the probing idea. Our main conclusion is that the probing approach is better suited than the randomized one for graphs with a large-world structure, since they admit distance-d colorings with a relatively small number of colors. Conversely, for complex networks with a small-world structure, the number of colors required for distance-d colorings is larger, so the probing approach becomes more expensive. For this type of graphs, the randomized method is more competitive than the one based on probing, since it is less affected by the structure of the graph; however, for matrices in which low rank approximation cannot be exploited such as the graph Laplacians that we consider, randomized trace estimators are best suited for computing approximations with a relatively low accuracy, since their cost quickly grows as the requested accuracy is increased.

References

Aarons, J., Skylaris, C.K.: Electronic annealing Fermi operator expansion for DFT calculations on metallic systems. J. Chem. Phys 148(7), 074107 (2018)

Alahmadi, J., Pranić, M., Reichel, L.: Rational Gauss quadrature rules for the approximation of matrix functionals involving Stieltjes functions. Numer. Math. 151(2), 443–473 (2022)

Bagby, T.: On interpolation by rational functions. Duke Math. J. 36, 95–104 (1969)