Abstract

We consider INAR(1) processes modulated by an unobserved strongly dependent \(0-1\) process. The observed process exhibits zero inflation and long memory. A simple method is proposed for estimating the INAR-parameters without modelling the unobserved modulating process. Asymptotic results for the estimators are derived, and a zero-inflation test is introduced. Asymptotic rejection regions and asymptotic power under long-memory alternatives are derived. A small simulation study illustrates the asymptotic results.

1 Introduction

Time series that consist of counts often exhibit zero inflation (see e.g. Young et al. 2022 and references therein). For instance, instrumental detection limits can lead to an increased number of zeroes. Ecological data sets also tend to contain a large proportion of zeroes that are classified by ecologists as "false zero counts" (Martin et al. 2005). Examples can also be found in other areas such as medicine, psychology, traffic or insurance (Young et al. 2022). In this paper we consider zero inflation generated by a long-memory process. This is in contrast to the statistical literature, where zero-inflation processes are usually assumed to be iid or at most weakly dependent. The issue of zeroes occurring in long batches has been discussed in the recent applied literature. In many applications, zero inflation is related to missing values due to faulty measurement devices, instrumental detection limits or biological reasons. Also, the occurrence of batches of zeroes may be caused by other unobserved time series (see e.g. Che et al. 2018). A natural approach to handling such data is to use models that exhibit long-range dependence with respect to zero inflation. Note that, in a related paper, Möller et al. (2018) discuss models that tend to produce relatively long runs of zeroes (e.g. ZT-Bar(1) processes). However, the autocorrelation function of their models decays asymptotically at an exponential rate and is thus summable. In contrast, the models considered here have long memory in the sense that autocorrelations decay hyperbolically and are not summable. Note also that a long-memory INAR model, a so-called fractional INARFIMA, is suggested in Quoreshi (2014). However, the INARFIMA model, as specified by Quoreshi, is not well defined, because it relies on a fractional thinning operator that yields infinity when applied to non-negative sequences.

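More specifically, we consider INAR(1) processes modulated in a multiplicative way by an unobserved strongly dependent \(0-1\) process. Thus, the observed process is assumed to be of the form

\[ Y_{j}=W(j)X_{j}\quad (j\in {\mathbb {N}}), \tag{1} \]

where the stationary 0–1-process W(j) (\(j\in {\mathbb {N}}\)) is independent of the INAR(1) process \(X_{j}\) (\(j\in {\mathbb {N}}\)), and the autocovariances \(\gamma _{W}(k)=cov(W(j),W(j+k))\) are such that

\[ \gamma _{W}(k)\sim c_{\gamma ,W}|k|^{2d-1}\quad (|k|\rightarrow \infty ) \tag{2} \]

for some constants \(0<c_{\gamma ,W}<\infty \), \(d\in (0,\frac{1}{2})\). Note that Eq. (2) implies that the spectral density

\[ f_{W}(\lambda )=\frac{1}{2\pi }\sum _{k=-\infty }^{\infty }\gamma _{W}(k)e^{-ik\lambda }\quad (\lambda \in [-\pi ,\pi ]) \]

has a pole at the origin, of the form

\[ f_{W}(\lambda )\sim c_{f,W}|\lambda |^{-2d}\quad (\lambda \rightarrow 0), \]
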
where \(c_{f,W}=c_{\gamma ,W}\pi ^{-1}\Gamma (2d)\sin (\pi /2-\pi d)\) (Zygmund 1968; Beran et al. 2013, Theorem 1.3). We will use the notation

More specifically, we will assume \(X_{j}\) to be a Poisson INAR(1) process (McKenzie 1985; Al-Osh and Alzaid 1987) defined by

\[ X_{j}=\alpha \circ X_{j-1}+\varepsilon _{j}, \]

where \(\alpha \in (0,1)\), and \(\varepsilon _{j}\) are iid Poisson variables with intensity \(\lambda \). The thinning operator "\(\circ \)" (Steutel and Van Harn 1979; Gauthier and Latour 1994) means that, conditionally on \(X_{j-1}\), \(\alpha \circ X_{j-1}\) is a binomial random variable generated by \(X_{j-1}\) Bernoulli trials with success probability \(\alpha \). The unknown parameters are \(\alpha \) and \(\lambda \). Alternatively, one may also use the parameterization \(\alpha \) and \(\mu _{X}=E(X_{j})=\lambda /(1-\alpha )\).
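
The thinning mechanism can be made concrete with a short simulation. The Python sketch below uses only the standard library; the names `poisson`, `thin` and `simulate_inar1` are hypothetical helpers introduced for illustration, not code from the paper. It draws \(\alpha \circ X_{j-1}\) literally as \(X_{j-1}\) Bernoulli(\(\alpha \)) trials and starts the chain from the stationary Poisson(\(\mu _{X}\)) marginal, \(\mu _{X}=\lambda /(1-\alpha )\), which is preserved by the recursion because \(\alpha \circ \)Poisson(\(\mu _{X}\)) is Poisson(\(\alpha \mu _{X}\)).

```python
import math
import random


def poisson(rng: random.Random, lam: float) -> int:
    """Poisson(lam) draw via Knuth's multiplication method (adequate for small lam)."""
    threshold, k, prod = math.exp(-lam), 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def thin(rng: random.Random, alpha: float, x: int) -> int:
    """Binomial thinning alpha o x: successes in x independent Bernoulli(alpha) trials."""
    return sum(1 for _ in range(x) if rng.random() < alpha)


def simulate_inar1(n: int, alpha: float, lam: float, seed: int = 0) -> list[int]:
    """Simulate X_1, ..., X_n of X_j = alpha o X_{j-1} + eps_j with eps_j ~ Poisson(lam).

    X_0 is drawn from the stationary Poisson(lam / (1 - alpha)) marginal, so the
    simulated path is stationary from the start (no burn-in needed).
    """
    rng = random.Random(seed)
    x = poisson(rng, lam / (1.0 - alpha))
    path = []
    for _ in range(n):
        x = thin(rng, alpha, x) + poisson(rng, lam)
        path.append(x)
    return path
```

For instance, `simulate_inar1(100_000, alpha=0.5, lam=0.5)` should give a sample mean and a sample variance both close to \(\mu _{X}=1\) (the marginal is Poisson) and a lag-one autocorrelation close to \(\alpha =0.5\). Knuth's method is fine for the small intensities used here; for large intensities another Poisson sampler would be preferable.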

Two questions regarding model Eq. (1) are addressed in this paper: a) estimation of the INAR(1) parameters \(\alpha \) and \(\mu _{X}\) without modelling the unobservable process W; b) testing for zero-inflation as specified by model Eq. (1).

Since the introduction of INAR models by McKenzie (1985) and Al-Osh and Alzaid (1987), the literature on integer-valued time series based on binomial thinning (Steutel and Van Harn 1979) has grown steadily. Some references are Du and Li (1991); Gauthier and Latour (1994); Da Silva and Oliveira (2004); Freeland and McCabe (2004, 2005); Gourieroux and Jasiak (2004); Jung et al. (2005); Puig and Valero (2007); Drost et al. (2008); Park and Kim (2012); Pedeli and Karlis (2013); Schweer and Weiss (2014); Pedeli et al. (2015) and Jentsch and Weiss (2019). Excellent overviews of integer-valued processes, with further references, can be found for instance in Weiss (2018) and Davis et al. (2021). Testing for zero-inflation in INAR(1) models is discussed in a recent paper by Weiss et al. (2019). Also see Pavlopoulos and Karlis (2008); Barreto-Souza (2015); Weiss (2013), and Bourguignon and Weiss (2017) for over- and underdispersed INAR processes. References to long-memory processes can be found for instance in Beran (1994); Giraitis et al. (2012); Beran et al. (2013) and Pipiras and Taqqu (2017).

The paper is organized as follows. Basic results are established in Sect. 2. Question a) is addressed in Sect. 3. In Sect. 4, a test is developed for the null hypothesis of a non-inflated INAR(1) process against the alternative of a zero-inflated process as defined in Eq. (1). Asymptotic rejection regions are derived, as well as an asymptotic lower and upper bound for the power of the test. A small simulation study in Sect. 5 illustrates the results. Final remarks in Sect. 6 conclude the paper. Proofs are given in the appendix.

2 Basic results

2.1 Expected value and autocovariance function

Let \(Y_{j}\) be generated by model Eq. (1) where \(X_{j}\) is a Poisson INAR(1) process. We will use the notation \({\textbf{1}}\{A\}\) for the indicator function of a set (or event) A. Moreover, we define

Also, for \(k<0\), we set \(\gamma _{X}(k)=\gamma _{X}(-k)\), \(\gamma _{W} (k)=\gamma _{W}(-k)\), \(\gamma _{Y}(k)=\gamma _{Y}(-k)\), and, for \(\lambda \in [-\pi ,\pi ]\),

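Due to mutual independence and stationarity of the two processes \(X_{j}\) and W(j) we have

\[ \mu _{Y}=E(Y_{j})=\mu _{W}\mu _{X} \]

and

\[ \gamma _{Y}(k)=\gamma _{W}(k)\gamma _{X}(k)+\mu _{X}^{2}\gamma _{W}(k)+\mu _{W}^{2}\gamma _{X}(k). \]

Noting that

\[ \gamma _{X}(k)=\mu _{X}\alpha ^{|k|}, \]

where \(\mu _{X}=\lambda /(1-\alpha )\), assumption Eq. (2) implies

\[ \gamma _{Y}(k)\sim \mu _{X}^{2}c_{\gamma ,W}|k|^{2d-1}\quad (|k|\rightarrow \infty ) \]

and

\[ f_{Y}(\lambda )\sim \mu _{X}^{2}c_{f,W}|\lambda |^{-2d}\quad (\lambda \rightarrow 0). \]
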
Thus, although the original process \(X_{j}\) has exponentially decaying autocovariances, the observed process \(Y_{j}\) inherits long memory from the modulating process W(j).
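
The pole constants can be evaluated numerically. The Python sketch below (function names are illustrative, not from the paper) implements the relation \(c_{f,W}=c_{\gamma ,W}\pi ^{-1}\Gamma (2d)\sin (\pi /2-\pi d)\) quoted in the introduction. It also returns the pole constant of \(f_{Y}\), based on the reasoning that in \(\gamma _{Y}(k)=\gamma _{W}(k)\gamma _{X}(k)+\mu _{X}^{2}\gamma _{W}(k)+\mu _{W}^{2}\gamma _{X}(k)\) the term \(\mu _{X}^{2}\gamma _{W}(k)\) dominates because \(\gamma _{X}\) decays exponentially, so that \(f_{Y}(\lambda )\sim \mu _{X}^{2}c_{f,W}|\lambda |^{-2d}\) near the origin.

```python
import math


def spectral_constant(c_gamma: float, d: float) -> float:
    """c_f = c_gamma * Gamma(2d) * sin(pi/2 - pi*d) / pi for gamma(k) ~ c_gamma |k|^(2d-1)."""
    if not 0.0 < d < 0.5:
        raise ValueError("d must lie in (0, 1/2)")
    return c_gamma * math.gamma(2.0 * d) * math.sin(math.pi / 2.0 - math.pi * d) / math.pi


def observed_spectral_constant(c_gamma_w: float, d: float, mu_x: float) -> float:
    """Pole constant of f_Y: f_Y(lam) ~ mu_X^2 * c_{f,W} * |lam|^(-2d) as lam -> 0."""
    return mu_x ** 2 * spectral_constant(c_gamma_w, d)
```

As a check, for \(d=1/4\) the conversion factor is \(\Gamma (1/2)\sin (\pi /4)/\pi =1/\sqrt{2\pi }\approx 0.3989\).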

Example 1

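Let \(Z_{j}\) (\(j\in {\mathbb {Z}}\)) be a stationary Gaussian process with zero mean, variance one and spectral density function \(f_{Z}\) such that

\[ f_{Z}(\lambda )\sim c_{f,Z}|\lambda |^{-2d}\quad (\lambda \rightarrow 0) \]

for some \(0<c_{f,Z}<\infty \), \(d\in (0,\frac{1}{2})\). Also, let \(\kappa \ne 0\), and denote by \(\Phi \) and \(\phi \) the standard normal distribution and density function, respectively. Then the process

\[ W(j)={\textbf{1}}\{Z_{j}\le \kappa \}\quad (j\in {\mathbb {Z}}) \]

is stationary with expected value \(\mu _{W}=\Phi (\kappa )\) and spectral density

\[ f_{W}(\lambda )\sim c_{f,W}|\lambda |^{-2d}\quad (\lambda \rightarrow 0), \]
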
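where \(c_{f,W}=c_{f,Z}\phi ^{2}(\kappa )\), since \(\gamma _{W}(k)\sim \phi ^{2}(\kappa )\gamma _{Z}(k)\) as \(k\rightarrow \infty \) (the first Hermite coefficient of the threshold function has absolute value \(\phi (\kappa )\)).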
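
Example 1 can be simulated once a Gaussian long-memory series is available. The Python sketch below makes a substitution that is not part of the paper: it generates \(Z_{j}\) from a truncated moving-average representation of an ARFIMA(0, d, 0) process, whose spectral density behaves like a constant times \(|\lambda |^{-2d}\) near the origin, and rescales it to unit variance. Truncation at `k_max` lags makes this an approximation. It then applies the threshold \(W(j)={\textbf{1}}\{Z_{j}\le \kappa \}\), an assumed form consistent with \(\mu _{W}=\Phi (\kappa )\). All function names are hypothetical.

```python
import math
import random


def arfima_weights(d: float, k_max: int) -> list[float]:
    """MA weights of (1 - B)^(-d): psi_0 = 1 and psi_k = psi_{k-1} * (k - 1 + d) / k."""
    psi = [1.0]
    for k in range(1, k_max + 1):
        psi.append(psi[-1] * (k - 1 + d) / k)
    return psi


def gaussian_long_memory(n: int, d: float, k_max: int = 1000, seed: int = 0) -> list[float]:
    """Approximate Z_1..Z_n by a truncated ARFIMA(0, d, 0) sum, rescaled to variance one."""
    rng = random.Random(seed)
    psi = arfima_weights(d, k_max)
    scale = math.sqrt(sum(w * w for w in psi))          # std. dev. of the truncated sum
    eps = [rng.gauss(0.0, 1.0) for _ in range(n + k_max)]
    return [
        sum(psi[k] * eps[t - k] for k in range(k_max + 1)) / scale
        for t in range(k_max, n + k_max)
    ]


def threshold_process(z: list[float], kappa: float) -> list[int]:
    """W(j) = 1{Z_j <= kappa}, so that P(W(j) = 1) = Phi(kappa)."""
    return [1 if v <= kappa else 0 for v in z]
```

For example, `threshold_process(gaussian_long_memory(2000, d=0.3), kappa=0.5)` yields a 0-1 series with \(P(W(j)=1)=\Phi (0.5)\approx 0.69\). The cost is of order `n * k_max`, so for long series an FFT-based generator would be the more practical choice.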

Example 2

Another example of a modulating process with long memory can be obtained from a renewal reward process defined by

where \(\tau _{j}\) (\(j=1,2,...\)) is a renewal process with intensity \(\nu \), and \(\xi _{j}\in \{0,1\}\) are iid random variables with \(p_{\xi }=P(\xi _{j} =1)\in (0,1)\), independent of the process \(\tau _{j}\). Moreover, the interarrival times \(T_{j}=\tau _{j}-\tau _{j-1}\) are assumed to have a finite expected value \(E(T)=\mu \) and a marginal distribution function \(F_{T}\) such that

for some constants \(0<c_{F,T}<\infty \), \(1<\alpha <2\). Finally, to achieve stationarity, the distribution function of \(\tau _{0}\) is assumed to be given by \(F_{\tau _{0}}(x)=\mu ^{-1}\int _{0}^{x}(1-F_{T}(u))du\). Then \({\tilde{W}}\) is a continuous time stationary process with expected value \(\mu _{W}=p_{\xi }\) and autocovariance function

where

Setting \(W(j)={\tilde{W}}(j)\) (\(j=1,2,...\)), we obtain a discrete time stationary zero–one process with long memory as defined by Eq. (4) (see e.g. Beran et al. 2013, and references therein).
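
A rough simulation of Example 2 is sketched below in Python. Assumptions not taken from the paper: the interarrival times are Pareto with \(P(T>x)=x^{-a}\) for \(x\ge 1\), so that \(1-F_{T}(x)\) has exactly the required power form with \(c_{F,T}=1\); the tail index is called `a` in the code to avoid a clash with the INAR parameter; and instead of drawing \(\tau _{0}\) from the equilibrium distribution, stationarity is approximated by discarding an initial stretch of length `burn_in`. Since \({\tilde{W}}(t)\) and \({\tilde{W}}(t+u)\) carry the same reward if no renewal occurs in between and independent rewards otherwise, \(\gamma _{{\tilde{W}}}(u)=p_{\xi }(1-p_{\xi })\mu ^{-1}\int _{u}^{\infty }(1-F_{T}(x))dx\sim p_{\xi }(1-p_{\xi })c_{F,T}u^{1-\alpha }/(\mu (\alpha -1))\), which corresponds to \(d=(2-\alpha )/2\in (0,\frac{1}{2})\). Function names are hypothetical.

```python
import random


def pareto(rng: random.Random, a: float) -> float:
    """Inverse-transform draw with P(T > x) = x^(-a) for x >= 1 (finite mean for a > 1)."""
    return (1.0 - rng.random()) ** (-1.0 / a)


def memory_parameter(a: float) -> float:
    """Long-memory parameter d = (2 - a) / 2 implied by gamma(u) ~ const * u^(1 - a)."""
    if not 1.0 < a < 2.0:
        raise ValueError("tail index a must lie in (1, 2)")
    return (2.0 - a) / 2.0


def renewal_reward_01(n: int, a: float, p_xi: float,
                      burn_in: float = 1e4, seed: int = 0) -> list[int]:
    """Return W(j), j = 1..n, of a 0-1 renewal reward process observed at integer times.

    Each renewal interval carries an iid reward xi with P(xi = 1) = p_xi. Observation
    starts at time burn_in to approximate stationarity (an assumption of this sketch).
    """
    rng = random.Random(seed)
    reward = 1 if rng.random() < p_xi else 0     # reward of the current interval
    next_renewal = pareto(rng, a)                # end of the current interval
    w = []
    for j in range(1, n + 1):
        time = burn_in + j
        while next_renewal <= time:              # advance to the interval containing `time`
            reward = 1 if rng.random() < p_xi else 0
            next_renewal += pareto(rng, a)
        w.append(reward)
    return w
```

With `a = 1.8` or `a = 1.2` the sketch corresponds to \(d=0.1\) or \(d=0.4\), respectively; heavier tails (smaller `a`) produce longer constant stretches of W.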

2.2 Sample mean and sample autocovariances

Standard methods for estimating the parameters of a Poisson INAR(1) process include moment and conditional least squares estimation. For both methods, estimators are functions of the sample mean and the lag-one sample autocorrelation. It is therefore of interest to study the effect of modulation on these statistics.

Consider first the sample mean \({\bar{y}}_{n}=n^{-1}\sum _{j=1}^{n}Y_{j}\) as an estimator of \(\mu _{X}\). With respect to the expected value we have \(E(\bar{y}_{n})=\mu _{W}\mu _{X}\). Thus, \({\bar{y}}_{n}\) is biased, unless \(P(W(j)=1)=1\). The asymptotic distribution of \({\bar{y}}_{n}\) follows from the asymptotic distribution of \({\bar{w}}_{n}=n^{-1}\sum _{j=1}^{n}W(j)\), as stated in the next theorem. We will use the notation

Also, "\(\rightarrow _{d}\)" and "\(\rightarrow _{p}\)" will denote convergence in distribution and in probability respectively.

Theorem 1

Let the process W be such that

where \(Z_{W}\) is a standard normal random variable. Then, under the assumptions given above,

Remark 1

In Theorem 1, the assumption that \(X_{t}\) is an INAR(1) process is not needed. More generally, the result holds whenever the modulating process W has the properties given above, the modulated process \(Y_{t}\) is defined by Eq. (1), and \(X_{t}\) is a weakly stationary process with expected value \(\mu _{X}\) and summable autocovariances \(\gamma _{X}(k)\).

An analogous result can be obtained for sample autocovariances. In particular,

converges in probability to

Thus, the asymptotic bias of the unadjusted sample autocovariance is equal to
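
The bias can be made explicit for a Poisson INAR(1) process. Independence of W and X gives \(E(Y_{j}Y_{j+k})=E(W(j)W(j+k))E(X_{j}X_{j+k})\), hence \(\gamma _{Y}(k)=\gamma _{W}(k)\gamma _{X}(k)+\mu _{X}^{2}\gamma _{W}(k)+\mu _{W}^{2}\gamma _{X}(k)\), and with \(\gamma _{X}(k)=\mu _{X}\alpha ^{|k|}\) the bias of the unadjusted sample autocovariance, used as an estimate of \(\gamma _{X}(k)\), is \(\gamma _{Y}(k)-\gamma _{X}(k)\). The Python sketch below evaluates this; the value of \(\gamma _{W}(k)\) has to be supplied by the user, and the function names are illustrative.

```python
def gamma_x(k: int, alpha: float, mu_x: float) -> float:
    """Autocovariance of a Poisson INAR(1) process: gamma_X(k) = mu_X * alpha^|k|."""
    return mu_x * alpha ** abs(k)


def gamma_y(k: int, alpha: float, mu_x: float, mu_w: float, gamma_w_k: float) -> float:
    """Autocovariance of Y_j = W(j) X_j when W is independent of X."""
    gx = gamma_x(k, alpha, mu_x)
    return gamma_w_k * gx + mu_x ** 2 * gamma_w_k + mu_w ** 2 * gx


def autocovariance_bias(k: int, alpha: float, mu_x: float,
                        mu_w: float, gamma_w_k: float) -> float:
    """Asymptotic bias gamma_Y(k) - gamma_X(k) of the unadjusted sample autocovariance."""
    return gamma_y(k, alpha, mu_x, mu_w, gamma_w_k) - gamma_x(k, alpha, mu_x)
```

For example, with \(\mu _{W}=0.5\) (so \(\gamma _{W}(0)=0.25\)) and \(\mu _{X}=1\), the lag-zero bias is \(-0.25\): \(Var(Y_{j})=0.75\) whereas \(Var(X_{j})=1\). For large k the bias behaves like \(\mu _{X}^{2}\gamma _{W}(k)\), so it is not summable.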

3 Conditional estimators

The modulating process W is usually not observable. Therefore the question arises whether consistent estimation of the INAR(1) parameters characterizing \(X_{j}\) is possible, without having to model the unobserved process W. A simple approach is to make use of the fact that \(P(Y_{j}=X_{j}|Y_{j}>0)=1\). For instance, we may consider conditional moment estimators.

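More specifically, for a Poisson INAR(1) process with intensity \(\lambda \), we have \(\mu _{X}=E(X)=\lambda /(1-\alpha )\) and

\[ P(X_{j}=0)=e^{-\mu _{X}}. \]

Moreover,

\[ E(X_{j}\mid X_{j}>0)=\frac{E(X_{j})}{P(X_{j}>0)}=\frac{\mu _{X}}{1-e^{-\mu _{X}}}=:g(\mu _{X}), \]

and hence, by independence of W and X,

\[ E(Y_{j}\mid Y_{j}>0)=E(X_{j}\mid X_{j}>0)=g(\mu _{X}). \]

Therefore,

\[ R_{n}=\frac{\sum _{j=1}^{n}Y_{j}}{\sum _{j=1}^{n}{\textbf{1}}\{Y_{j}>0\}} \]

provides a consistent nonparametric estimator of \(E(X_{j}|X_{j}>0)\). This motivates the definition of a conditional moment estimator of \(\mu _{X}\) as the solution \({\hat{\mu }}_{X}\) of

\[ g({\hat{\mu }}_{X})=R_{n}. \tag{11} \]
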
It is straightforward to prove that Eq. (11) provides a consistent estimator:

Theorem 2

Let \({\hat{\mu }}_{X}\) be defined by Eq. (11). Then

\[ {\hat{\mu }}_{X}\rightarrow _{p}\mu _{X}. \]

Remark 2

In Theorem 2, the assumption that \(X_{t}\) is an INAR(1) process is not needed. Suppose that \(X_{t}\) is a nonnegative integer-valued weakly stationary process with a summable autocovariance function \(\gamma _{X}\). Then, after calculating \(E(X_{t}|X_{t}>0)\) for this process, the function g and the nonparametric estimator \(R_{n}\) can be adapted accordingly to obtain a consistent estimator \({\hat{\mu }}_{X}\) via the analogue of Eq. (11).

While consistency of \({\hat{\mu }}_{X}\) is not influenced by the modulating process, this is not true for the asymptotic distribution of \({\hat{\mu }}_{X}\). This is discussed in Theorem 4 (Sect. 4).
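
The estimating equation for \({\hat{\mu }}_{X}\) is easy to solve numerically. The Python sketch below assumes that \(R_{n}\) is the average of the strictly positive observations (which uses \(P(Y_{j}=X_{j}|Y_{j}>0)=1\)) and that \(g(\mu )=\mu /(1-e^{-\mu })\), the conditional mean \(E(X|X>0)\) of a Poisson(\(\mu \)) variable. Because \(g(\mu )=\mu +\mu /(e^{\mu }-1)\) satisfies \(\mu \le g(\mu )\le \mu +1\), the root lies in \([R_{n}-1,R_{n}]\), and plain bisection suffices. Function names are hypothetical.

```python
import math


def g(mu: float) -> float:
    """E(X | X > 0) for X ~ Poisson(mu), i.e. mu / (1 - exp(-mu)), for mu > 0."""
    return mu / -math.expm1(-mu)


def r_n(y: list[int]) -> float:
    """Average of the strictly positive observations (estimates E(X_j | X_j > 0))."""
    positives = [v for v in y if v > 0]
    if not positives:
        raise ValueError("the series contains no positive counts")
    return sum(positives) / len(positives)


def conditional_mu_hat(y: list[int]) -> float:
    """Solve g(mu) = R_n by bisection on the bracket [R_n - 1, R_n]."""
    r = r_n(y)
    if r <= 1.0:
        return 0.0                     # g(mu) > 1 for all mu > 0: boundary solution
    lo, hi = r - 1.0, r                # g(lo) <= r <= g(hi) since mu <= g(mu) <= mu + 1
    for _ in range(100):               # 100 halvings exhaust double precision
        mid = 0.5 * (lo + hi)
        if g(mid) < r:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For the toy series `[0, 0, 2, 1, 0, 3]`, \(R_{n}=2\) and the solution of \(g(\mu )=2\) is roughly 1.59. The zeroes do not enter \(R_{n}\) at all, which is exactly why the estimator is insensitive to the modulating process.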

The usual moment estimator of the INAR(1)-parameter \(\alpha \) is the lag-one sample autocorrelation. However, as we saw above, for the modulated process \(Y_{j}\) sample autocovariances are asymptotically biased estimators of \(\gamma _{X}\). We may therefore consider an estimator based on the conditional autocovariance \(cov(Y_{j},Y_{j+1}|Y_{j},Y_{j+1}>0)\). By arguments analogous to those above, it is easy to see that

However, in terms of the INAR(1) parameters \(\alpha \) and \(\mu _{X}\), the right hand side of Eq. (12) is a rather complicated nonlinear function. As it turns out, a much simpler expression can be obtained for the noncentered conditional moment \(E(X_{j}X_{j+1}|X_{j},X_{j+1}>0)\). Specifically, for a Poisson INAR(1) process the following formulas hold:

Lemma 1

Let \(X_{j}\) be a Poisson INAR(1) process with parameters \(\mu _{X}\) and \(\alpha \). Then

and

A consistent nonparametric estimator of \(E(X_{j}X_{j+1}|X_{j},X_{j+1}>0)\) is given by

Setting

we define \({\hat{\alpha }}\) by

where \({\hat{\mu }}_{X}\) is obtained from (11). Equation (13) provides a consistent estimator:

Theorem 3

Let \({\hat{\alpha }}\) be defined by Eq. (13). Then

\[ {\hat{\alpha }}\rightarrow _{p}\alpha . \]
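
For illustration, the nonparametric ingredient of Eq. (13) can be computed as below. Because its defining display is not reproduced here, the sketch assumes the natural ratio estimator: the average of \(Y_{j}Y_{j+1}\) over lag-one pairs in which both counts are positive (for such pairs \(Y_{j}=X_{j}\) and \(Y_{j+1}=X_{j+1}\) almost surely). The second function is our own derivation of the population counterpart for a Poisson INAR(1) process, not a copy of Lemma 1: it uses \(E(X_{j}X_{j+1})=\mu _{X}^{2}+\alpha \mu _{X}\) and \(P(X_{j}=X_{j+1}=0)=e^{-\mu _{X}}e^{-\lambda }=e^{-(2-\alpha )\mu _{X}}\). Names are hypothetical.

```python
import math


def conditional_cross_moment(y: list[int]) -> float:
    """Average of Y_j * Y_{j+1} over lag-one pairs in which both counts are positive."""
    products = [a * b for a, b in zip(y, y[1:]) if a > 0 and b > 0]
    if not products:
        raise ValueError("no lag-one pair with both counts positive")
    return sum(products) / len(products)


def poisson_inar1_cross_moment(alpha: float, mu_x: float) -> float:
    """E(X_j X_{j+1} | X_j > 0, X_{j+1} > 0) for a Poisson INAR(1) process."""
    numerator = mu_x ** 2 + alpha * mu_x          # E(X_j X_{j+1}); pairs with a zero add nothing
    p_both_positive = 1.0 - 2.0 * math.exp(-mu_x) + math.exp(-(2.0 - alpha) * mu_x)
    return numerator / p_both_positive
```

As a sanity check, for \(\alpha =0\) the second function reduces to \((\mu _{X}/(1-e^{-\mu _{X}}))^{2}\), as it should for independent observations.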

4 Testing for zero inflation

4.1 Definition of the test statistic

In this section, we consider the question of how to test for zero-inflation due to modulation as specified by Eqs. (1), (5), (2) and (3). As before, we assume that the modulating process W is not observable. Using the notation

we may formulate the null hypothesis \(H_{0}\) and the alternative \(H_{1}\) as

For some general results on zero-inflation tests for INAR processes, see e.g. the recent paper by Weiss et al. (2019). Note that in our situation, we are testing explicitly for zero-inflation in the marginal distribution. We may therefore use a very simple statistic that compares the empirical relative frequency of zeroes with the frequency modelled by an INAR(1) process. Since \(P(Y_{j}=X_{j}|Y_{j}>0)=1\), we suggest using the consistent conditional estimator of \(\mu _{X}\) defined in Eq. (11). Thus, let \({\hat{\mu }}_{X}\) be defined by Eq. (11), and let

and

We define the test statistic

Then, under \(H_{0}\), \(T_{n}\) converges to zero in probability, whereas under the alternative \(H_{1}\), it converges to a positive constant. Asymptotic rejection regions are derived in the following subsection.
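
Because the displays defining \(T_{n}\) are not reproduced here, the following Python sketch assumes the simplest form consistent with the text: \(T_{n}\) is the empirical relative frequency of zeroes minus \(\exp (-{\hat{\mu }}_{X})\), the zero probability of a Poisson(\({\hat{\mu }}_{X}\)) marginal. Under this assumption, and writing \(\mu _{W}=P(W(j)=1)\), the probability limit is \(P(Y_{j}=0)-e^{-\mu _{X}}=(1-\mu _{W})+\mu _{W}e^{-\mu _{X}}-e^{-\mu _{X}}=(1-\mu _{W})(1-e^{-\mu _{X}})\), which is zero under \(H_{0}\) and positive whenever \(\mu _{W}<1\). Function names are hypothetical.

```python
import math


def zero_inflation_statistic(y: list[int], mu_x_hat: float) -> float:
    """Assumed form of T_n: share of zeroes minus the Poisson zero probability exp(-mu_x_hat)."""
    p0_empirical = sum(1 for v in y if v == 0) / len(y)
    p0_model = math.exp(-mu_x_hat)
    return p0_empirical - p0_model


def limit_under_alternative(mu_w: float, mu_x: float) -> float:
    """Probability limit of that statistic when P(W(j) = 1) = mu_w."""
    return (1.0 - mu_w) * (1.0 - math.exp(-mu_x))
```

In practice `mu_x_hat` would be the conditional estimate obtained from Eq. (11), which stays consistent under the alternative, so that only the empirical zero frequency picks up the inflation.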

4.2 Asymptotic rejection regions

Before deriving the asymptotic distribution of \(T_{n}\), we need to consider the joint asymptotic distribution of \({\hat{\mu }}_{X}\) and \({\tilde{p}}_{0,X}\). The following notation is adopted from Weiss et al. (2019):

and

Also, denote by

the odds ratio based on \(p_{0,X}\).

Theorem 4

Let

and suppose that \(H_{0}\) holds. Then

where \(\zeta =(\zeta _{1},\zeta _{2})\) is a zero mean bivariate normal random variable with covariance matrix \(\Sigma _{\zeta }=(c_{ij})_{i,j=1,2}\). The entries in \(\Sigma _{\zeta }\) are defined by

The asymptotic distribution of \(T_{n}\) under \(H_{0}\) is given by the following theorem:

Theorem 5

Under \(H_{0}\),

where \(Z_{T}\) is a standard normal variable,

and \(\sigma _{ij}\) are given by Eqs. (14), (16), (15).

Based on Theorem 5, asymptotic rejection regions at a level of significance \(\alpha \in (0,1)\) can be defined by

where \(z_{1-\alpha }\) denotes the \((1-\alpha )-\)quantile of a standard normal variable.
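
A minimal sketch of the resulting decision rule, assuming that Theorem 5 takes the form \(\sqrt{n}T_{n}/\sigma _{T}\rightarrow _{d}Z_{T}\) and that large values of \(T_{n}\) indicate zero inflation. The asymptotic standard deviation \(\sigma _{T}\) (in practice a plug-in estimate based on Theorem 5) is passed in by the caller; the argument is called `level` to avoid confusion with the INAR parameter \(\alpha \). The normal quantile comes from Python's `statistics.NormalDist`.

```python
import math
from statistics import NormalDist


def reject_h0(t_n: float, sigma_t: float, n: int, level: float = 0.05) -> bool:
    """One-sided asymptotic test: reject H0 if sqrt(n) * T_n / sigma_T > z_{1 - level}."""
    z = NormalDist().inv_cdf(1.0 - level)
    return math.sqrt(n) * t_n / sigma_t > z
```

With `level=0.05` the critical value is \(z_{0.95}\approx 1.645\); for example, \(T_{n}=0.2\), \(\sigma _{T}=1\) and \(n=100\) give a standardized value of 2 and hence a rejection.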

4.3 Asymptotic power

We consider the asymptotic distribution of \(T_{n}\) under the alternative as specified by Eqs. (1), (2), (4) and (5) with \(0<p_{0,W}<1\). Note first that

converges in probability to

On the other hand, \({\hat{p}}_{0,X}\) converges in probability to \(p_{0,X}\). Therefore, under \(H_{1}\),

and

A more detailed result is given by the following theorem. We will use the notation

and

Theorem 6

Suppose that \(H_{1}\) holds, as specified by Eqs. (1), (2), (4) and (5) with \(0<p_{0,W}<1\). Moreover, assume that

where \(Z_{1,W}\) is a standard normal random variable. Then, for n large enough,

Remark 3

Note that the rate at which the two bounds in Eq. (22) tend to zero, as \(n\rightarrow \infty \), is slower for larger values of d. Thus, the power of the test becomes weaker with increasing long memory in the modulating process W. Also note that, with increasing d, the bounds become less sharp.

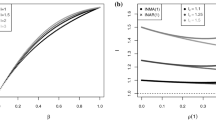

5 Simulations

We consider the model \(Y_{j}=W(j)X_{j}\) where \(W(j)={\tilde{W}}(j)\) and \({\tilde{W}}\) is the renewal reward process defined in Eq. (9). The following INAR(1) parameters are used: a) \(\mu _{X}=0.5\) with \(\lambda =0.4\) and \(\alpha =0.2\); b) \(\mu _{X}=1\) with \(\lambda =0.4\) and \(\alpha =0.6\). For W we consider \(\mu _{W}=p_{0,W}=\)0.5, 0.9, 1, and \(d=\)0.1, 0.4. In the simulations of the zero-inflation test we also add a case of extreme long memory, with \(d=0.49\). For each parameter combination and sample sizes \(n=\)100, 200, 400, 800 and 1000, ten thousand simulations were carried out. Simulated means and variances of the conditional moment estimates \({\hat{\mu }}_{X}\), as defined in Eq. (11), and \({\hat{\alpha }}_{cond}\), as defined in Eq. (13), are shown in Tables 1 (\(\mu _{W}=1\)), 2 and 3 (\(\mu _{W}=\)0.5, 0.9). For comparison, the unconditional estimates \({\bar{y}}\) and \({\hat{\alpha }}_{uncond}\) are also given. Under \(H_{0}\), we observe an undisturbed INAR(1) process. As expected, the conditional estimates have a larger variance than the unconditional ones (Table 1). Under \(H_{1}\), we have \(\mu _{W}<1\), so that \({\bar{y}}\) underestimates \(\mu _{X}\). Due to zero inflation, the absolute value of the bias increases as \(\mu _{W}\) decreases. In contrast, no relevant finite sample bias is observed for the conditional estimator \({\hat{\mu }}_{X}\). For the unconditional estimator of \(\alpha \), the effect of zero-inflation is much less clear. A noticeable bias of the unconditional estimator can only be observed for \(p_{0,W}=0.5\) and \(d=0.1\). A possible reason for this rather minor effect is that the potential bias under zero-inflation may be compensated by a modified lag-one autocorrelation of the observed process, due to dependence in W.
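
The size of the zero inflation in this design follows directly from the parameters: \(\mu _{X}=\lambda /(1-\alpha )\), \(P(X_{j}=0)=e^{-\mu _{X}}\) for the Poisson marginal, and \(P(Y_{j}=0)=(1-\mu _{W})+\mu _{W}e^{-\mu _{X}}\), since \(Y_{j}=0\) whenever \(W(j)=0\) or \(X_{j}=0\). The short Python computation below (the function name is illustrative) tabulates these quantities for the two INAR(1) settings and the three values of \(\mu _{W}\).

```python
import math


def design_zero_probabilities(lam: float, alpha: float, mu_w: float) -> tuple[float, float, float]:
    """Return (mu_X, P(X_j = 0), P(Y_j = 0)) for Y_j = W(j) X_j with P(W(j) = 1) = mu_w."""
    mu_x = lam / (1.0 - alpha)                  # stationary mean of the Poisson INAR(1)
    p0_x = math.exp(-mu_x)                      # zero probability of the Poisson(mu_X) marginal
    p0_y = (1.0 - mu_w) + mu_w * p0_x           # zero if W(j) = 0 or X_j = 0
    return mu_x, p0_x, p0_y


for lam, alpha in [(0.4, 0.2), (0.4, 0.6)]:
    for mu_w in (0.5, 0.9, 1.0):
        mu_x, p0_x, p0_y = design_zero_probabilities(lam, alpha, mu_w)
        print(f"lambda={lam}, alpha={alpha}, mu_W={mu_w}: "
              f"mu_X={mu_x:.2f}, P(X=0)={p0_x:.3f}, P(Y=0)={p0_y:.3f}")
```

For setting a) (\(\mu _{X}=0.5\)) the zero probability rises from about 0.607 under \(H_{0}\) to about 0.803 for \(\mu _{W}=0.5\); for setting b) (\(\mu _{X}=1\)) it rises from about 0.368 to about 0.684.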

Table 4 shows results for the zero-inflation test based on Eq. (20). Under \(H_{0}\), the simulated levels are reasonably close to the nominal value of 0.05. As expected, the test has higher power for smaller values of \(p_{0,W}\). The effect of long memory is less obvious for mild zero-inflation (\(p_{0,W}=0.9\)). For \(p_{0,W}=0.5\), the simulation results show a clearer picture, with a tendency towards slightly lower power when d is large.

Finally, Table 5 shows results for the case where \(X_{j}\) is generated by an INAR(2) process (Alzaid and Al-Osh 1990), but is misspecified as an INAR(1) model. Specifically, we simulate \(X_{j}=\alpha _{1}\circ X_{j-1} + \alpha _{2}\circ X_{j-2} + \varepsilon _{j}\) where \((\alpha _{1},\alpha _{2})=(0.2,0.2) \) and (0.6, 0.2) respectively, and \(\varepsilon _{j}\) are iid Poisson variables with \(\lambda =0.4\). For \((\alpha _{1},\alpha _{2})=(0.6,0.2)\), the simulated rejection frequencies under \(H_{0}\) are very close to the nominal level of 0.05. For \((\alpha _{1},\alpha _{2})=(0.2,0.2)\), rejection frequencies tend to be slightly too high. A possible explanation is that for \(\alpha _{1}=0.6\) the relative influence of \(X_{j-1}\) on \(X_{j}\) is much stronger than for \(\alpha _{1}=0.2\). In this sense, the INAR(2) process is closer to an INAR(1) process for larger values of the ratio \(\alpha _{1}/\alpha _{2}\), and the misspecification is less noticeable. On the other hand, under \(H_{1}\), rejection probabilities tend to be higher for \(\alpha _{1}=0.2\). This may be explained, at least partially, by the fact that for this parameter setting the actual level of the test exceeds the nominal level of 0.05. Generally speaking, one may conjecture that the test is fairly robust to mild deviations from an INAR(1) model. If serious deviations from the ideal model are expected, a modification of the test may be preferable (see Remarks 1 and 2). An interesting task for future research is to develop adaptive tests for zero-inflation that are applicable without the need to specify which member of a certain family of "ideal models" (e.g. the family of all INAR(p) models with \(p=1,2,...\)) generates the unmodulated process \(X_{j}\).
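
The misspecification experiment can be reproduced in outline with the Python sketch below (helper names are hypothetical). It assumes conditionally independent binomial thinnings \(\alpha _{1}\circ X_{j-1}\) and \(\alpha _{2}\circ X_{j-2}\), the convention of Du and Li (1991); this choice, the zero starting values and the burn-in length are assumptions of the sketch. By linearity of expectation the stationary mean is \(\lambda /(1-\alpha _{1}-\alpha _{2})\) whatever the joint structure of the thinnings, i.e. 2/3 for (0.2, 0.2) and 2 for (0.6, 0.2).

```python
import math
import random


def poisson(rng: random.Random, lam: float) -> int:
    """Poisson(lam) draw via Knuth's multiplication method (adequate for small lam)."""
    threshold, k, prod = math.exp(-lam), 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def thin(rng: random.Random, p: float, x: int) -> int:
    """Binomial thinning p o x as x independent Bernoulli(p) trials."""
    return sum(1 for _ in range(x) if rng.random() < p)


def simulate_inar2(n: int, alpha1: float, alpha2: float, lam: float,
                   burn_in: int = 1000, seed: int = 0) -> list[int]:
    """X_j = alpha1 o X_{j-1} + alpha2 o X_{j-2} + eps_j with independent thinnings."""
    rng = random.Random(seed)
    x1, x2 = 0, 0                      # X_{j-1} and X_{j-2}, started at zero
    path = []
    for j in range(burn_in + n):
        x = thin(rng, alpha1, x1) + thin(rng, alpha2, x2) + poisson(rng, lam)
        x1, x2 = x, x1
        if j >= burn_in:               # discard the transient from the zero start
            path.append(x)
    return path
```

Feeding such a path, multiplied by a modulating 0-1 series, into the INAR(1)-based conditional estimator and zero-inflation test illustrates the kind of misspecification studied in Table 5.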

6 Final remarks

In this paper, we introduced a zero-inflated INAR(1) model where zero-inflation is caused by a long-memory process. Parameter estimation and testing for zero-inflation were considered. Various extensions of this model are of interest. For instance, modulation may occur at the level of the innovations of the INAR(1) process. The structure of such modulated INAR(1) processes is much more complex and will be considered elsewhere. Another obvious extension is to consider model (1) with \(X_{j}\) replaced by a general INAR(p) process. It is expected that the methods considered here can be extended to this case using arguments analogous to those for \(p=1\).

Finally, note that instead of using the test statistic discussed in Sect. 4, one may consider the lengths of zero runs (for related results see e.g. Jazi et al. 2012; Qi et al. 2019). An advantage of the test considered here is its simplicity. In particular, under the alternative, the distribution of runs can be quite complicated. On the other hand, the estimator of \(\mu _{X}\) used here omits parts of the information that may be useful for detecting deviations from the null hypothesis. The development of more efficient tests, for instance based on runs, is an interesting task for future research.

References

Al-Osh MA, Alzaid AA (1987) First-order integer-valued autoregressive (INAR(1)) process. J Time Ser Anal 8(3):261–275

Alzaid AA, Al-Osh MA (1990) An integer-valued pth-order autoregressive structure (INAR(p)) process. J Appl Probab 27(2):314–324

Barreto-Souza W (2015) Zero-modified geometric INAR(1) process for modelling count time series with deflation or inflation of zeros. J Time Ser Anal 36(6):839–852

Beran J (1994) Statistics for long-memory processes. Chapman and Hall, CRC Press, New York

Beran J, Feng Y, Ghosh S, Kulik R (2013) Long-memory processes: probabilistic properties and statistical methods. Springer, New York

Bourguignon M, Weiss CH (2017) An INAR(1) process for modeling count time series with equidispersion, underdispersion and overdispersion. Test 26(4):847–868

Che Z, Purushotham S, Cho K, Sontag D, Liu Y (2018) Recurrent neural networks for multivariate time series with missing values. Sci Rep 8:6085. https://doi.org/10.1038/s41598-018-24271-9

Da Silva EM, Oliveira VL (2004) Difference equations for the higher order moments and cumulants of the INAR(1) model. J Time Ser Anal 25(3):317–333

Davis R, Fokianos K, Holan S, Joe H, Livsey J, Lund R, Pipiras V, Ravishanker N (2021) Count time series: a methodological review. J Am Stat Assoc 116:1–50

Drost FC, Van Den Akker R, Werker BJM (2008) Local asymptotic normality and efficient estimation for INAR(p) models. J Time Ser Anal 29(5):783–801

Du JG, Li Y (1991) The integer-valued autoregressive (INAR(p)) model. J Time Ser Anal 12(2):129–142

Freeland RK, McCabe BPM (2004) Forecasting discrete valued low count time series. Int J Forecast 20(3):427–434

Freeland RK, McCabe BPM (2005) Asymptotic properties of CLS estimators in the Poisson AR(1) model. Stat Probab Lett 73(2):147–153

Gauthier G, Latour A (1994) Convergence forte des estimateurs des paramètres d’un processus GENAR(p). Ann Sci Math Québec 18(1):49–71

Giraitis L, Koul HL, Surgailis D (2012) Large sample inference for long memory processes. Imperial College Press, London

Gordon RD (1941) Values of Mills’ ratio of area to bounding ordinate of the normal probability integral for large values of the argument. Ann Math Stat 12:364–366

Gourieroux C, Jasiak J (2004) Heterogeneous INAR(1) model with application to car insurance. Insur Math Econ 34(2):177–192

Jazi MA, Jones G, Lai CD (2012) First-order integer valued AR processes with zero inflated Poisson innovations. J Time Ser Anal 33:954–963

Jentsch C, Weiss CH (2019) Bootstrapping INAR models. Bernoulli 25(3):2359–2408

Jung RC, Ronning G, Tremayne AR (2005) Estimation in conditional first order autoregression with discrete support. Stat Papers 46:195–224

Martin TG, Wintle BA, Rhodes JR, Kuhnert PM, Field SA, Low-Choy SJ, Tyre AJ, Possingham HP (2005) Zero tolerance ecology: improving ecological inference by modelling the source of zero observations. Ecol Lett 8:1235–1246

McKenzie E (1985) Some simple models for discrete variate time series. Water Resour Bull 21(4):645–650

Möller TA, Weiß CH, Kim HY, Sirchenko A (2018) Modeling zero inflation in count data time series with bounded support. Methodol Comput Appl Probab 20:589–609

Park Y, Kim HY (2012) Diagnostic checks for integer-valued autoregressive models using expected residuals. Stat Papers 53(4):951–970

Pavlopoulos H, Karlis D (2008) INAR(1) modeling of overdispersed count series with an environmental application. Environmetrics 19(4):369–393

Pedeli X, Karlis D (2013) On composite likelihood estimation of a multivariate INAR(1) model. J Time Ser Anal 34(2):206–220

Pedeli X, Davison AC, Fokianos K (2015) Likelihood estimation for the INAR(p) model by saddlepoint approximation. J Am Stat Assoc 110(511):1229–1238

Pipiras V, Taqqu MS (2017) Long-range dependence and self-similarity. Cambridge University Press, Cambridge

Puig P, Valero J (2007) Characterization of count data distributions involving additivity and binomial subsampling. Bernoulli 13(2):544–555

Qi X, Li Q, Zhu F (2019) Modeling time series of count with excess zeroes and ones based on INAR(1) model with zero-and-one inflated poisson innovations. J Comput Appl Math 346:572–590

Quoreshi A (2014) A long-memory integer-valued time series model, INARFIMA, for financial application. Quantit Financ 14(12):2225–2235

Schweer S, Weiss CH (2014) Compound Poisson INAR(1) processes: stochastic properties and testing for overdispersion. Comput Stat Data Anal 77:267–284

Steutel FW, Van Harn K (1979) Discrete analogues of self-decomposability and stability. Ann Probab 7(5):893–899

Weiss CH (2013) Integer-valued autoregressive models for counts showing underdispersion. J Appl Stat 40(9):1931–1948

Weiss CH (2018) An introduction to discrete-valued time series. Wiley, Hoboken

Weiss CH, Homburg A, Puig P (2019) Testing for zero inflation and overdispersion in INAR(1) models. Stat Papers 60:823–848

Young DS, Roemmele ES, Yeh P (2022) Zero-inflated modeling part I: traditional zero-inflated count regression models, their applications, and computational tools. WIREs Comput Stat 14:e1541. https://doi.org/10.1002/wics.1541

Zygmund A (1968) Trigonometric series, vol 1. Cambridge University Press, Cambridge

Acknowledgements

We would like to thank the referees for insightful comments that helped to improve the presentation of the results.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Appendix—Proofs, Tables

1.1 Proofs

Proof of Theorem 1

First of all, \(E({\bar{y}}_{n})=\mu _{W}\mu _{X}=p_{0,W}\mu _{X}\), because the two processes X and W are independent of each other. Moreover,

Now,

and

for a suitable constant \(0<c<\infty \). Therefore

Furthermore,

(see e.g. Beran et al. 2013, Corollary 1.2). Since \(d>0\), \(n^{-1}S_{n,1}\) is asymptotically negligible and the result follows. \(\square \)

Proof of Theorem 2

The function g is monotonically increasing, with \(\lim _{\mu \rightarrow 0}g\left( \mu \right) =1\) and \(\lim _{\mu \rightarrow \infty }g\left( \mu \right) =\infty \). Since \(R_{n}\ge 1\), Eq. (11) has a unique solution. Moreover, W is a stationary \(0-1-\)process, and

Chebyshev's inequality then implies

and

so that

\(\square \)

Proof of Lemma 1

First of all,

Then

\(\square \)

Proof of Theorem 3

First note that

so that

Then,

and

implies

Now, for any fixed \(\mu _{X}>0\), \(h(\alpha ,\mu _{X})\) is monotonically increasing in \(\alpha \) with

Hence, together with Theorem 2, we may conclude that, for n large enough, (13) has a unique solution and \({\hat{\alpha }}\rightarrow _{p}\alpha \). \(\square \)

Proof of Theorem 4

Recall that

and

where

and

Under \(H_{0}\), we have

so that

Since \({\hat{\mu }}_{X}\) is consistent, we may use a Taylor expansion

to obtain

Moreover, under \(H_{0}\), we also have \(p_{0,Y}=p_{0,X}\) and

Now

The second term can be written as

The first term can be simplified to

Thus,

Applying Theorem 3.1.1 in Weiss et al. (2019), this can be simplified to

where \(Z=(Z_{1},Z_{2})\) is a zero mean bivariate normal random variable with covariance matrix \(\Sigma =(\sigma _{ij})_{i,j=1,2}\), as defined by Eqs. (14), (16), (15). Note furthermore that \(p_{0,X}=\exp (-\mu _{X})\),

so that

and

Thus, we obtain

Moreover, by definition,

Therefore

where \(\zeta \) has the covariance matrix \(\Sigma _{\zeta }\) defined by Eqs. (17), (18) and (19). \(\square \)

Proof of Theorem 5

Note first that

Theorem 4 implies

and

where \(\zeta \) is defined in Theorem 4. Then Eqs. (17), (18) and (19) lead to

\(\square \)

Proof of Theorem 6

First note that

where

and

with

Also note that

By assumption,

where

(see e.g. Beran et al. 2013, Theorem 1.3). Moreover,

and hence

This implies

Also,

and

so that

We then obtain

with

and

Thus, overall

Moreover, under the given assumptions, \(n^{\frac{1}{2}-d}(S_{1,n},S_{2,n})\) are asymptotically jointly normal, so that

where \(Z_{3}\sim N(0,1)\). Hence, we have

For

this yields

Inequality (22) then follows from

(Gordon 1941) by plugging in

\(\square \)
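
The bound by Gordon (1941) used in the last step of this proof is the classical Mills-ratio inequality: for \(x>0\), \(\frac{x}{1+x^{2}}\phi (x)\le 1-\Phi (x)\le \frac{\phi (x)}{x}\). The short Python check below evaluates both sides, using `math.erfc` for an accurate upper tail; it is a numerical illustration only, not part of the proof, and the function names are illustrative. The ratio of the upper to the lower bound is \(1+x^{-2}\), so the bounds are tight only when the standardized argument is large.

```python
import math


def normal_pdf(x: float) -> float:
    """Standard normal density phi(x)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_upper_tail(x: float) -> float:
    """1 - Phi(x), computed via erfc to avoid cancellation for large x."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def gordon_bounds(x: float) -> tuple[float, float]:
    """Lower and upper Gordon (1941) bounds on 1 - Phi(x), valid for x > 0."""
    if x <= 0.0:
        raise ValueError("x must be positive")
    phi = normal_pdf(x)
    return x / (1.0 + x * x) * phi, phi / x


for x in (0.5, 1.0, 2.0, 4.0):
    lower, upper = gordon_bounds(x)
    print(f"x = {x}: {lower:.4e} <= {normal_upper_tail(x):.4e} <= {upper:.4e}")
```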

1.2 Tables

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Beran, J., Droullier, F. On strongly dependent zero-inflated INAR(1) processes. Stat Papers (2023). https://doi.org/10.1007/s00362-023-01496-z
