Abstract

Recent expansions in prison school offerings and the re-introduction of the Second Chance Pell Grant have heightened the need for a better understanding of the effectiveness of prison education programs on policy-relevant outcomes. We estimate the effects of various forms of prison education on recidivism, post-release employment, and post-release wages. Using a sample of 152 estimates drawn from 79 papers, we conducted a meta-analysis to estimate the effect of four forms of prison education (adult basic education, secondary, vocational, and college). We find that prison education decreases recidivism and increases post-release employment and wages. The largest effects are experienced by prisoners participating in vocational or college education programs. We also calculate the economic returns on educational investment for prisons and prisoners. We find that each form of education yields large, positive returns due primarily to the high costs of incarceration and, therefore, high benefits to crime avoidance. The returns vary across education types, with vocational education having the highest return per dollar spent ($3.05) and college having the highest positive impact per student participating ($16,908).

Introduction

Following an explosive growth in U.S. incarceration rates starting in the 1980s, more than six in every 1,000 people in the U.S. population are behind bars, the highest rate in the world,Footnote 1 despite many other countries having higher violent crime rates. A 2003 estimate by the Prison Policy Initiative projected that six percent of Americans would be imprisoned at some point in their lifetime, including almost one-third of all African Americans.Footnote 2 The decision to incarcerate relatively more people comes with direct and indirect costs. One estimate (Wagner & Rabuy, 2017) places the cost to house prisoners at $80.7 billion, while the costs of policing, courts, health care, and various other expenses bring the total cost to $182 billion.

The indirect costs are potentially even more extensive. Incarceration decreases rates of employment (Apel & Sweeten, 2010) and education for both the incarcerated (Hjalmarsson, 2008) and their dependents (Shlafer et al., 2017). Because lower education levels have been found to have a causal effect on arrest and incarceration (Lochner & Moretti, 2004), the decision to incarcerate a parent increases the likelihood that the child ends up in prison. Levels of social engagement (Chattoraj, 1985) and civic participation (Lee et al., 2014) are negatively affected by incarceration. Just as the direct incarceration costs increased with the prison population, so did these indirect costs. These costs can be attenuated through effective policies and programs implemented within jails and prisons. If programs in prisons can provide inmates with skills that improve their post-release outcomes, they can reduce the indirect costs of incarceration and, through reduced recidivism, its future costs.

For decades, the notion that incarcerated people could see their paths changed was a minority opinion. The collapse in the mid-1970s of the goal of rehabilitation gave way to the “nothing works” mindset (Martinson, 1974), which led to bi-partisan support for increasingly punitive prison sentences and a reduction in rehabilitation programs. The 1980s and 1990s were defined by consequent escalations in punishments, including the Sentencing Reform Act of 1984, the Anti-Drug Abuse Act of 1986, and the expansion of three-strikes laws in the 1990s. Recently, policy and public opinion have changed course: Criminal justice reforms have been passed, including the First Step Act, which eased mandatory minimum sentence rules for judges, and the re-authorization of the Juvenile Justice and Delinquency Prevention Act (both in 2018). Nonetheless, incarceration rates remain high; the Sentencing Project (2019, p. 1) has described the 40-year incarceration trend as a “Massive Buildup and Modest Decline.” The incarceration rate has fallen slightly from its early 2000s peak, but it is still four times the 1970 rate. The share of Americans who feel that the criminal justice system is not tough enough has dropped by 50% since 1992, while the share who believe that the system is too tough has increased tenfold (Brenan, 2020). Several recent national polls – including ACLU’s Campaign for Smart Justice and Justice Action Network – show that a large majority of Americans believe rehabilitation should play a more prominent role in American criminal justice (Benenson Strategy Group, 2017).

One place where this reform is playing out is in prison classrooms. One of the victims of the 1990s “tough on crime” bills was educational offerings to prisoners, especially college programs. The 1994 Crime Bill eliminated the eligibility of Pell Grants for prisoners, and the rate of college participation in prison dropped from 19% in 1991 to 10% in 2004 (Sawyer, 2019). While college offerings have increased in the past decade (DiZerega & Eddy, 2022), educational opportunities beyond high school are still below their pre-1980s levels. Even programs that are offered spend a fraction of what is invested in students outside of prison. For example, Texas spends about $12,000 annually per student in the state’s public school system but only about $1,000 per incarcerated student.

The optimal education and job training policy for the incarcerated population hinges on the programs’ effectiveness. If programs are minimally effective at achieving the main goals of rehabilitation (reduction in recidivism, higher wages, or employment rates), then education programs are likely not worth the expense. However, expanding prison programs would likely be an effective public policy if the returns on investment for prison education are large.

Background and Literature Review

This study belongs to a large body of research on the effect of education and rehabilitation of the incarcerated. Much of the research has affected, or at the very least predicted, changes in policy. As noted by Smith et al. (2009), the long-held notion of American prisons as ideal homes for “resocializing” programs was called into question through Martinson’s (1974) review of the existing literature and the subsequent book covering the same data (Lipton et al., 1975). This research described the overall effectiveness of 231 correctional education programs operating between 1945 and 1967. Despite numerous positive effects described in their analysis (such as all studies for supervision of young parolees leading to decreased recidivism), the conclusion reached by the authors was that “nothing works” (Martinson, 1974).

The current study builds on several previous meta-analyses on prison education. Given the policy importance of research on prison education, the topic has been frequently studied. However, given the significant selection issues in who chooses to participate in prison education, previous meta-analyses have had to rely heavily on non-scientific comparisons or simple differences in means in their studies. Wilson et al. (2000) performed the first major meta-analysis on prison education programs a quarter-century after the work of Lipton et al. (1975). By evaluating the performance of 33 studies, their results showed that participation in an academic correctional program was associated with an 11 percent reduction in recidivism and an increased likelihood of post-release employment. They point to the need for more research that better provides unbiased estimates of causal effect, stating, “future evaluative research in this area could be strengthened through… designs that control for self-selection bias beyond basic demographic differences” (Wilson et al., 2000, pg. 347). Similarly, Cecil et al. (2000) and Bouffard et al. (2000) find educational programs associated with better post-release outcomes while emphasizing the need for a larger pool of high-quality studies.Footnote 3

Chappell (2003) aggregated 15 studies on postsecondary prison education, finding a significant reduction in recidivism, but called the small number of studies that qualified for inclusion a “disappointment” and articulated some of the issues that prevented papers from being included, such as failure to report basic statistical results (Chappell, 2003, pg. 52). Aos et al. (2006) also performed a meta-analysis on 17 educational programs as part of a larger cost–benefit analysis of a collection of prison programs.

The most often-cited meta-analysis on prison education is by Davis et al. (2013). The authors performed a meta-analysis including 58 papers measuring the impact of corrections programs on recidivism, employment, and academic outcomes. Again, they support the findings of previous meta-analyses that participation in prison education decreases recidivism and increases employment. Moreover, as previous studies have concluded, the authors emphasize the need for higher-quality papers, stating in the foreword of their paper, “This need for more high-quality studies that would reinforce the findings is one of the key areas the study recommends for continuing attention” (Davis et al., 2013, iii). A follow-up by many of the same authors (Bozick et al., 2018) provides similar findings. To date, these two papers represent the best we know about the effect of prison education.

Prison education programs differ significantly across types. This means that any meta-analysis that simultaneously measures the effect of ABE, secondary education, vocational, and college programs needs an especially large sample of papers to obtain separate estimates for each type. While both Davis et al. (2013) and Bozick et al. (2018) compared the relative effectiveness of different forms of prison education, the small number of papers available meant they needed to use all papers in their sample to estimate the relative effects. However, because many papers in the samples are likely plagued by selection bias, the resulting aggregate measures will be too. Across the 21 studies they analyzed, Bozick et al. (2018) found that education increases the odds of post-release employment by 12%. However, when they restrict their sample to only Level 4 or higher papers (of which none are Level 5), their estimate of the positive impact of education actually increases, but the resulting increased standard errors render the estimates statistically insignificant. This imprecision results from only 3 of the 21 studies qualifying as Level 4 on the SMS scale. Thus, the authors conclude that the subsample provides indeterminate results.

Fogarty and Giles (2018) highlight problems with several of the papers used in both Davis et al. (2013) and Bozick et al. (2018), such as the inclusion of papers that do not evaluate prison education and the use of incorrect results. Notably, the only two randomized trials in the meta-analyses (Lattimore et al., 1990) are in fact analyses of the same program. However, neither Davis et al.’s (2013) nor Bozick et al.’s (2018) broader findings are dramatically affected by correcting these mistakes.

An additional issue obscures the findings of both Davis et al. (2013) and Bozick et al. (2018) in broader discussions about prison education. In both cases, the authors use odds ratios to measure treatment effect (as do we). Odds ratios differ from probabilities, so a 43% decrease in the odds of recidivism is not the same as a 43% decrease in the likelihood of recidivism. Davis et al. (2013) found that the odds of recidivism for education participants are 0.57 times those of non-participants. The authors carefully point out that this equates to a 13-percentage-point decrease, which in turn equates to a roughly 25% decrease. Unfortunately, Bozick et al. (2018, p. 302) do not perform this same conversion in their analysis, where participants have an odds ratio of 0.68 relative to non-participants. The authors state that “inmates participating in correctional education programs are 32% less likely to recidivate when compared with inmates who did not participate in correctional education programs.” Instead, their results are consistent with a recidivism reduction of about 19%.

This confusion about odds ratios represents a common struggle with policy-relevant research: the difficulty of providing easily digestible information while conducting the rigorous statistical analysis necessary to calculate results correctly. This is one of the primary reasons we emphasize the cost–benefit analysis of our findings, which provides a relatively simple metric to evaluate the average effectiveness of money spent on each type of prison education program.

Goals & Research Question

This study focuses on the efficacy of prisoner education, including adult basic education (ABE), secondary education, higher education, and job training. What effect do these programs provide in improving outcomes for prisoners and, by extension, the public? To answer this, we conducted a meta-analysis on a large set of papers that estimated prison education’s effect. Given the decentralized nature of prison education systems, numerous studies have estimated the impact of various programs, often finding different results. A more precise causal effect estimate can be identified by pooling these effects together. For this analysis, we included papers that measured one of the following outcomes: recidivism, post-release employment, or post-release wages. We can estimate the return on investment (ROI) for prison education by including several relevant outcome variables in a single meta-analysis.

Prison education presents numerous problems for meta-analyses. The existence of different types of programs means that many more papers are needed to determine the effects of each type, making disaggregation into smaller subsets difficult. Additionally, most studies on prison education are observational, so differences in outcomes between those who receive education and those who do not are confounded with the unobserved effects of whatever drove students to get an education in the first place. Meta-analyses in medical research can solve this by focusing on randomized controlled trials, which address selection bias. In social science research, such trials are rare. Instead, reliance on quasi-experimental methods is necessary, which requires researchers engaged in observational research to compare the treatment group to a control group that is otherwise similar aside from differences in educational choices. By building the largest sample of papers of any meta-analysis of prison education, we can compare different types of education using only high-quality papers, which was not possible in previous meta-analyses. This is a crucial step given the variation in how educational programs are implemented.

Data, Sample, and Outcomes

Our sample includes papers published between 1980 and 2023 that studied the impact of prison education programs in United States prisons. We included papers that conducted primary research on the effect of education and training programs delivered in prison (rather than the effect of having already obtained such training before entering prison). More details on the method used to find papers are provided in Appendix 2.

This paper aims to estimate the return on investment for prison education, leading us to prioritize economically quantifiable outcomes. The outcomes of interest for this analysis are recidivism (typically defined in the included papers as a return to prison), employment, and wages. While these are not the only relevant outcomes, they are most likely to be measured by researchers and have the most direct economic benefit.

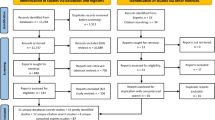

The initial search identified 750 papers for potential inclusion in the study. Of these, most (671) were eliminated for not presenting the results of an original study or for falling outside the scope of the analysis, such as studies estimating the effect of pre-incarceration education. We identified 79 papers that estimated the effect of education on one or more of our outcomes and obtained 152 separate estimates of the causal effect of prison education. Most of the novel papers in our sample have been published in the past five years, after the most recent large-scale meta-analysis. However, we did identify several studies that were overlooked by previous analyses, especially less publicized studies conducted directly by various states’ Departments of Corrections.

Measures of Study Quality

Determining the quality of the studies used in the meta-analysis is critical, as empirical results can be affected by which studies are selected and the weights attached to each set of results. The Maryland Scientific Methods Scale (SMS; Farrington et al., 2003) has become a commonly used method of measuring study quality in the criminology field, as its grading system is tailored specifically to measuring program effects on criminal activity. The SMS provides a framework for grading studies from the lowest level of methodological rigor (Level 1) to the highest (Level 5). Level 1 studies, which lack a comparison group for measuring outcome differences, are omitted from our sample. Level 2 studies typically provide simple measures of the difference in the average outcomes between two groups (such as those who participated in education and those who did not). Levels 3–5 have a minimum interpretable design (Cook & Campbell, 1979), typically achieved with a quasi-random assignment (Levels 3–4) or random assignment (Level 5). We include all studies that earned a 2 or higher in our initial sample of papers but exclude studies scoring a 2 from our main estimates of causal effect for each education category.

Table 1 shows the breakdown of papers by outcome and SMS score. A complete list of papers identifying treatments, outcomes, and SMS scores is in Appendix 1. The recidivism sample includes 71 papers covering 102 separate estimates of causal effect, while our sample for employment consists of 31 papers with 37 separate estimates.Footnote 4 Of the 102 recidivism estimates, 26 are graded as a 4 or 5 on the SMS scale. Eleven of the 37 employment estimates are graded as a 4 or 5. Forty-one papers with 71 separate estimates earn scores of 3 or more for our recidivism outcome, while 20 papers with 23 estimates achieve this in our employment sample. This large sample is critical to our analysis, as it allows us to compare the effects of different educational programs using only papers that scored high on the SMS scale.

The sample of papers estimating the effect of education on wages is smaller, with ten papers providing 13 separate estimates of the causal effect. Despite the small number of papers estimating the effect on wages, they earn higher SMS scores. This is because papers that report standard errors are both likely to earn higher SMS scores (as they are more likely to use higher-quality methods) and more likely to be included in the wage sample.

Approach

We use a random-effects analysis, specifically the DerSimonian-Laird method (DerSimonian & Laird, 1986). The primary alternative estimation strategy is fixed effects, which imposes an implicit assumption that the “true” causal effect of the intervention is common across studies. This assumption is almost certainly violated in the setting studied here, as the estimates come from programs that differ in their methods of implementation and in the prison populations they serve.
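The pooling step can be illustrated with a minimal sketch of the DerSimonian-Laird estimator. This is not the authors' code: the function name and the example inputs are hypothetical, and the effects are assumed to be on a scale where normal approximations are reasonable (for example, log odds ratios).

```python
import math

def dersimonian_laird(effects, std_errors):
    """Pool study-level effects with DerSimonian-Laird random-effects weights.

    effects: per-study estimates (assumed here to be log odds ratios).
    std_errors: their standard errors.
    Returns (pooled effect, its standard error, between-study variance tau^2).
    """
    k = len(effects)
    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / se ** 2 for se in std_errors]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q measures dispersion of the studies around the fixed estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Method-of-moments estimate of between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Random-effects weights add tau^2 to each study's sampling variance.
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se_pooled = math.sqrt(1.0 / sum(w_star))
    return pooled, se_pooled, tau2
```

When the estimated between-study variance is zero, the weights collapse to the fixed-effects weights; as it grows, small and large studies are weighted more evenly.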

Whenever possible, we used estimates of the impact of program participation instead of program completion. This has two benefits. First, the endogeneity of the decision to finish a program is likely to be strong; students who are driven enough to finish a program will likely differ from dropouts, so comparing these two groups will exacerbate omitted variable bias. Program participation is more likely to be affected by things outside prisoners’ control, such as their eligibility (e.g., based on location and reading level), and therefore is less likely to be plagued by estimation bias. Second, from a policy perspective, participation is more relevant than completion, especially since program completers are already included in the population of program participants. When we evaluate a program’s overall costs and benefits, we inherently do so against a counterfactual of no program. People who participate in the program and drop out may receive benefits anyway, and providing the education certainly costs money, so the most effective evaluation is to measure program participants (completers and non-completers) against non-participants.

In cases where the outcome variable is binary, we convert the outcome into an odds ratio, thereby normalizing the outcome across different studies. It is important to note that odds ratios are not the same as changes in absolute probabilities.Footnote 5 For example, if the odds ratio of recidivism for those who receive education relative to those who do not is 0.8, this does not mean that education will lead to a 20% drop in recidivism. It means that the odds of recidivism (defined as the probability of returning to prison divided by the probability of not returning) decrease by 20%.

When the outcome is binary, we can include studies that presented differences in means even if they do not report a standard error for their estimates, although standard errors are ordinarily necessary for inclusion in a meta-analysis. One characteristic of binary data is that the standard deviation of a distribution can be determined using only its mean, allowing us to back out the standard errors ourselves.Footnote 6 For our sample of studies estimating the effect of education on wages, we could only use papers that reported the standard errors or standard deviations, as we cannot back out these numbers using a simple difference in means.
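As an illustration of how standard errors can be backed out for binary outcomes, the sketch below rebuilds the 2×2 table from each group's outcome rate and sample size and applies the usual large-sample formula for the standard error of a log odds ratio. The exact procedure the authors use is not shown in the text, so this is an assumption; the function name and inputs are hypothetical.

```python
import math

def log_odds_ratio_from_rates(p_treat, n_treat, p_ctrl, n_ctrl):
    """Log odds ratio and its standard error from group rates and sizes.

    Assumes only the outcome rate (e.g. share recidivating) and the sample
    size are reported for each group; cell counts are reconstructed from them.
    """
    a = p_treat * n_treat          # treated, outcome occurred
    b = (1.0 - p_treat) * n_treat  # treated, outcome did not occur
    c = p_ctrl * n_ctrl            # control, outcome occurred
    d = (1.0 - p_ctrl) * n_ctrl    # control, outcome did not occur
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive")
    log_or = math.log((a * d) / (b * c))
    # Large-sample standard error of the log odds ratio.
    se = math.sqrt(1.0 / a + 1.0 / b + 1.0 / c + 1.0 / d)
    return log_or, se
```

No comparable shortcut exists for a continuous outcome such as wages, because its variance is not determined by its mean.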

When multiple recidivism horizons were measured (1 year, 2 years, 3 years), we used 3 years, as this is the most common outcome measured in the literature. Papers were not uniform in their definition of recidivism. Some papers defined recidivism by re-arrest, others by parole violations, and others by returns to prison. When papers reported multiple outcomes for recidivism, we used return to prison as the outcome variable, as this is the most common outcome in our sample.

In several studies (Anderson et al., 1991; Adams et al., 1994), the authors reported the effects of participating in multiple forms of education (such as academic + vocational). Previous meta-analyses (Bozick et al., 2018; Davis et al., 2013) included these estimates alongside the estimates of the effects of being in either academic or vocational programs. However, since this measures the effects of two doses of treatment instead of one, we incorporated these groups into a single regression, including dummy variables for both forms of education. This allows us to disentangle the separate effects.

When papers did not report standard errors, but statistical significance was reported (such as the 1 or 5-percent significance level), standard errors corresponding to that exact level of significance were selected. This is a conservative method since we will be underestimating the statistical significance of any findings. By imputing standard errors that were no smaller than the true standard errors, we avoided giving a study higher weight than is appropriate while also being able to use its findings.
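A minimal sketch of this imputation, assuming a two-sided test based on the normal distribution (the text does not specify the reference distribution, and the function name is hypothetical): the largest standard error consistent with significance at level alpha is the absolute estimate divided by the critical value.

```python
from statistics import NormalDist

def impute_standard_error(estimate, alpha):
    """Largest standard error consistent with two-sided significance at alpha.

    Assumes a normal reference distribution. A study reported as significant
    at the 5% level has |estimate| / SE of at least about 1.96, so its true
    SE is no larger than |estimate| / 1.96.
    """
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return abs(estimate) / z_crit
```

Because the imputed value is an upper bound on the true standard error, the study receives no more inverse-variance weight than it would with its actual standard error.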

Findings

Recidivism

Panel A of Table 2 presents the meta-analysis results across various subsamples of papers based on quality ratings. The odds of recidivism are the probability that someone returns to prison divided by the probability that they do not. Using a 2021 Bureau of Justice Statistics (Durose & Antenangeli, 2021) estimate of 46% for the 5-year recidivism rate, the baseline odds of recidivism are \(\frac{0.46}{1-0.46}=0.852.\)

As seen in the 3rd row of Panel A in Table 2, the odds ratio for the treatment group is 0.760. This means that receiving prison education leads to a 24% decrease in the odds of recidivism. To convert this into a probability, we multiply the odds ratio by the baseline odds of recidivism, \(0.852 \times 0.76 = 0.6475\). Odds of 0.6475 correspond to a probability of \(\frac{0.6475}{1+0.6475}=39.3\%\). This means that education decreases the probability of recidivism by 6.7 percentage points or 14.6% against a baseline of 46%. These results suggest that participation in a prison education program significantly decreases the likelihood of returning to prison.
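The conversion from an odds ratio to a change in probability can be reproduced in a few lines. The sketch below uses the 46% baseline rate and the 0.76 odds ratio quoted above; the function and variable names are illustrative only.

```python
def probability_after_treatment(baseline_prob, odds_ratio):
    """Apply an odds ratio to a baseline probability and return a probability."""
    baseline_odds = baseline_prob / (1.0 - baseline_prob)
    treated_odds = baseline_odds * odds_ratio
    return treated_odds / (1.0 + treated_odds)

baseline = 0.46                                   # baseline recidivism rate
treated = probability_after_treatment(baseline, 0.76)
point_drop = baseline - treated                   # percentage-point reduction
relative_drop = point_drop / baseline             # relative reduction

print(f"treated probability: {treated:.3f}")      # about 0.393
print(f"percentage-point drop: {point_drop:.3f}") # about 0.067
print(f"relative drop: {relative_drop:.3f}")      # about 0.146
```

The relative drop in probability is smaller than the 24% drop in odds, and the gap between the two widens as the baseline rate rises.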

However, this figure is an overstatement of actual causal effects, as it includes many papers that do not estimate effects using experimental or quasi-experimental methods. Therefore, we estimated the effect using only papers obtaining a 3, 4, or 5 on the Maryland SMS scale. Restricting the sample in this way is crucial for two reasons. First, these papers are more likely to avoid omitted variable bias and obtain unbiased causal effect estimates. However, an additional reason that is often overlooked in the literature is that high-quality papers are more likely to have larger standard errors because the things that make them less likely to provide biased estimates (inclusion of covariates, careful sample selection, robust or clustered standard errors) are the exact things that are likely to increase a study’s standard errors.

Using the sub-sample of high-quality papers, we find that participation in a program decreases the odds ratio of recidivism by 18.8%, which is smaller but still statistically and economically significant. If we further restrict our sample to only studies receiving a 4 or 5, we estimate education to decrease the odds of recidivism by 16.5%. This drift towards zero as we exclude papers unlikely to provide unbiased estimates suggests that the true causal effect is more likely to be 16–19 percent than 24–25 percent.

We find a drift in estimates of causal effect over time. While we find a 24% reduction in the odds of recidivism over our entire sample, this number drops to 12.4% when restricted to studies published since 2010. Some of this effect is because later papers are more likely to have more rigorous methods and, therefore, score higher on the SMS scale. As we have already seen, this leads to smaller causal effect estimates. However, the change in the composition of papers cannot fully explain this trend. Using just our sub-sample of studies earning SMS scores of 3 or higher, we estimate the causal effect since 2010 to be an 11% reduction in recidivism, compared to 18.8% for the entire sample. This difference has policy implications. While an 18.8% reduction in the odds ratio for recidivism is a good retrospective estimate of the effects on people who have already been released, the 11% reduction better reflects the effects of the current prison educational systems.

We also find variations in effects across educational programs. The programs evaluated in the papers studied here fall into one of the following categories: ABE, Secondary Education/GED, Vocational Education/Training, and Postsecondary Education. We estimate the effect of each kind of education program on our outcomes of interest. Given the large size of our sample, we can perform sub-group analysis while only relying on high-scoring papers (3, 4, or 5 SMS scores), supporting the claim that we can measure true estimates of a causal effect.

Panel B of Table 2 shows the differential effects of education type on reducing recidivism. We observe significant variation in the effect of education on recidivism. ABE and secondary education (high school, GED) appear to have similar effects, each leading to an 11–12 percent decrease in recidivism. Some of this similarity is due to the two groups being pooled in many estimates. The pool of papers for ABE and secondary education has 14 shared estimates, as numerous papers estimate the effect of “academic” programs, which could be either ABE or secondary programs.

Vocational education is somewhat more effective than ABE or secondary education, leading to a 15.6% decrease in the odds ratio for recidivism, but postsecondary education is where we observe the largest difference. College programs are especially effective tools for decreasing recidivism. We find that participation in a college program decreases the odds of recidivism by 41.5%. This number is so much larger than the other forms of education that point estimates for each of the other education categories fall outside the 95% confidence interval for college education. This means we can reject the null hypothesis that any other form of education is as effective as college education in reducing recidivism.

Unsurprisingly, college programs are the costliest form of intervention studied here. While other forms of education cost between $1,000 and $2,000 per year per student, college programs cost around $10,000. Bard College’s program, studied in one of the papers in this sample, costs $9,000 for each participant/year, while Pitzer College has a similar cost of $10,000. College programs also take longer to complete, meaning that not only is each participant/year more costly than other programs, but each student also participates in them for more years, further increasing the gap in the cost.

Employment

Table 3 presents the employment results. Using our entire sample of papers, we find that education increases the odds of employment by about 13.5%, which aligns with previous studies. Given baseline estimates of the percent of released prisoners employed, this equates to an increase of about 3.1 percentage points. Unlike our recidivism findings, the estimated effects of education on employment do not depend strongly on the SMS scores of the papers used to build our sample. While we observe a decrease in our estimates when we use papers scoring 3, 4, or 5 on the Maryland scale, when we further restrict our sample to papers scoring only 4 or 5, we obtain an estimate of a 12.5% increase in the odds of employment, which is similar to that of our overall sample.

While we found the effect of education on recidivism to have dropped by roughly half since 2010, our estimates of the effect of education on employment using papers published since 2010 (a 12.4% increase) are almost the same as the estimates for the larger sample. Using our subset of papers with SMS scores of 3 or higher, the effect since 2010 (7.9%) is also similar to the 8.9% increase from the entire sample. Unlike our recidivism findings, we do not see a drift of causal effect in recent years.

Panel B of Table 3 shows the effects of different types of education on employment. The pattern is similar to our recidivism findings. ABE and secondary education have the smallest effects. The effects are less than 3%, and both are statistically insignificant. This means that a significant uptick in observable employment is not driving the decreased recidivism observed earlier for each of these forms of education. Vocational education has the clearest positive impact on employment, increasing the odds of employment by 11.8%. This further supports our recidivism findings that vocational education boosts post-release outcomes more than ABE or secondary education. We estimate that college programs increase the odds of employment by an even larger amount (20.7%). However, the college estimate is derived from only a single study.

Earnings

Finally, we turn to estimates of the effect of education on earnings. Unfortunately, our sample is much smaller due primarily to the dearth of reported standard errors in most studies. Even if studies report causal effect estimates, findings cannot be included in a meta-analysis without standard errors. Table 4 shows the sample of 13 estimates using quarterly earnings. We normalized the numbers to quarterly earnings for each estimate and inflated them to 2020 values. We find that education increases quarterly earnings by $141.

Most of the papers in the sample estimate the effect on wages only for those employed (meaning that they drop unemployed people from the sample). Given the small number of studies, this estimate is only significant at the 10% level. While this result may seem like a by-product of the finding that education increases employment, it is not. People induced into the workforce by any intervention (including education) are likely to be relatively low earners, meaning that it is possible to see an increase in employment and a decrease in wages. The fact that we see a modest wage increase means that the wage effects amplify the employment effect instead of eating away at it. While we cannot reject, at the 5% level, the null hypothesis that education has no effect on wages, we can reject a null hypothesis that increased employment is associated with lower wages. Even a small wage reduction ($15) is outside the 95% confidence interval.

Sensitivity analysis is especially important because of the number of judgments involved in meta-analyses (choice of studies to include, grading, empirical framework). We performed a series of robustness checks to determine how much these decisions affected our final findings. One primary concern for meta-analyses is publication bias. Publication bias can lead to erroneous conclusions of significant effect, even in analyses exclusively using high-quality research. If studies that find no effect are less likely to be published, a meta-analysis could conclude a significant effect even if interventions have no true effect.

We test for the existence of publication bias using a “funnel plot” and its corresponding tests (Egger et al., 1997). If publication bias affects the distribution of the empirical findings, we expect to see an asymmetric distribution since studies on one side of the distribution would be truncated. This would mean that studies that either found null effects or counter-intuitive ones – education increasing recidivism or decreasing employment and wages – would be less likely to be written or published, resulting in a gap in the distribution. Previous meta-analyses (Bozick et al., 2018; Davis et al., 2013) have found evidence of publication bias. Our results (see Figs. 1, 2 and 3) show some evidence of publication bias for the recidivism sample (Fig. 1). There does appear to be a gap in the distribution on the right side of the graph, suggesting that papers finding especially large decreases in recidivism were more likely to be written and published than those finding null effects or increases in recidivism. However, the level of bias is not large enough to explain our main results for two reasons. First, the papers outside the left of the funnel are, on average, older and more likely to score lower on the SMS scale. Second, if we restrict the sample to recent (post-2010) and high-quality (3 or higher SMS score) papers, we find no evidence of publication bias, yet we still find strong evidence that education decreases recidivism. We find little evidence of publication bias for our employment sample.
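To make the funnel-plot asymmetry test concrete, the following is a minimal sketch of the regression proposed by Egger et al. (1997): each study's effect divided by its standard error is regressed on its precision (1 / standard error), and an intercept far from zero signals asymmetry. The function name and the input numbers are hypothetical illustrations, not the data or code used in this study; effects are assumed to be log odds ratios.

```python
import math

def egger_regression(effects, std_errors):
    """Regress effect/SE on 1/SE; return intercept, slope, and the intercept's SE."""
    y = [e / s for e, s in zip(effects, std_errors)]  # standardized effects
    x = [1.0 / s for s in std_errors]                 # precisions
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance with n - 2 degrees of freedom
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in residuals) / (n - 2)
    se_intercept = math.sqrt(sigma2 * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, slope, se_intercept

# Hypothetical log odds ratios: every study finds the same effect (symmetric funnel)
symmetric = egger_regression([-0.2, -0.2, -0.2, -0.2], [0.05, 0.1, 0.2, 0.4])

# Hypothetical log odds ratios: less precise studies report larger effects (asymmetric funnel)
asymmetric = egger_regression([-0.1, -0.2, -0.4, -0.8], [0.05, 0.1, 0.2, 0.4])
```

In the symmetric case the intercept is essentially zero and the slope recovers the common effect; in the asymmetric case the intercept moves away from zero. In practice the intercept would be compared with its standard error using a t-test.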

Estimating Returns on Investment

Given that prison education programs have a corresponding cost, a finding of positive effects is insufficient to justify such programs’ existence or their expansion. It is vital to understand how these benefits compare to the costs of implementing the programs in the first place. Using a variety of estimates of costs (which we draw from various sources) and benefits (from the analysis presented here), we calculate the return on investment of education programs.

Obtaining an estimate of the cost of educating a prisoner is difficult. While many studies provide cost estimates, they almost always provide estimates of the cost of educating a student for one year, not the total cost of educating the average student. In their cost–benefit calculations, Davis et al. (2013) used estimates based on the average cost per student using a DOC annual education budget. If a student participates in programs for two or more years, the annual average cost per student will underestimate the cost of education. To determine the economic returns to educating a student, we therefore also need to know how long a prisoner was taking classes.

As Aos et al. (2006) pointed out, to properly weigh the costs and benefits of different forms of prison education, we need to calculate the costs of different interventions independently. This is especially important given our findings. While college programs are the most effective, they are likely the most expensive. Many college students are completing two- or four-year degrees. Given that the average ABE length of study is only 0.4 years (Cho & Tyler, 2013), the costs of these programs should not be expected to be comparable.

Our estimates for the cost of education are presented in Table 5. We use a variety of papers that have provided estimates of the total costs of educating students instead of the annual costs. Because programs differ in their cost of implementation, we provide separate estimates for ABE, secondary, vocational, and college education. ABE and secondary education are often pooled together as “academic” education, so the distinction between the two is not always clear when costs are reported. They were identical in each instance where ABE and secondary education costs were reported independently.

The cost numbers show that the costs for non-college education programs are similar. Vocational education costs slightly more, but the difference is small. It would cost the same amount to provide academic education to 50 students as it would to provide vocational education to 47 students. College degree programs appear to be significantly more costly to implement. The average college program costs about $10,467 for each participant, almost five times as much as the per-participant cost for vocational education.

We estimate the benefits for each group separately, given the effects found in Tables 3 and 4. To quantify the benefits, we multiply the effects of education by the estimated marginal financial benefit using our estimates of causal effect derived from the sample of papers earning a 3, 4, or 5 SMS score. Since sample size limitations prevent us from estimating the wage effects separately for each education category, we assume that each participant experiences a boost equal to that of the sample-wide average, a $141 quarterly earnings increase.

Effects on Recidivism

Using our baseline recidivism odds of 0.852, the 11% reduction in the odds of recidivism caused by ABE (as seen in Table 2) would lead to a 2.9 percentage point reduction in recidivism. Those decreases for secondary, vocational, and college students are 3.3, 4.17, and 12.74 percentage points, respectively. Reduced recidivism may also lead to a reduction of costs to society. For example, according to a 2015 Vera Institute study, the average cost of housing someone in a state prison was $33,274 (Mai & Subramanian, 2017). When inflated to 2022 dollars, that becomes $40,028. Given that most of the studies in our sample are for state or local prisons, this estimate is likely a good one for the average annual cost savings from a reduction in incarceration.
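The conversion from a change in odds to a change in percentage points can be sketched as follows. This sketch treats the 0.852 baseline as the baseline odds of recidivism (an interpretation on our part that reproduces the 2.9-point figure) and treats the 11% reduction as an odds ratio of 0.89; the function names are hypothetical.

```python
def odds_to_probability(odds):
    """Convert odds p / (1 - p) back to the probability p."""
    return odds / (1.0 + odds)

def percentage_point_change(baseline_odds, odds_ratio):
    """Change in probability, in percentage points, after scaling the odds."""
    p_before = odds_to_probability(baseline_odds)
    p_after = odds_to_probability(baseline_odds * odds_ratio)
    return 100.0 * (p_after - p_before)

# ABE: an 11% reduction in the odds of recidivism (odds ratio 0.89)
abe_change = percentage_point_change(0.852, 0.89)
print(round(abe_change, 1))  # about -2.9 percentage points
```

Because the mapping from odds to probability is nonlinear, the same odds ratio implies a different percentage point change at a different baseline.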

According to a recent Bureau of Justice Statistics report (Kaeble, 2021), the average prison stay is 2.7 years. Assuming this also reflects the average length of stay for the recidivists, the cost savings for every person deterred from recidivism due to education is $107,075 (2.7*$40,028). Therefore, taking the 2.9 percentage point decrease we found for ABE, this would result in an average decrease in prison costs of $3,105 ($107,075*0.029). Using the 3.3 percentage point decrease for secondary education, 4.17 for vocational, and 12.74 for college, we find that education leads to prison-cost decreases of $3,533, $4,465, and $13,641, respectively.
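The per-participant prison-cost savings follow from multiplying the $107,075 figure by each percentage point reduction. A minimal sketch, using the figures quoted in the text (with 4.17 points for vocational education, the value that reproduces the reported $4,465); the variable and function names are hypothetical:

```python
# Cost avoided per person deterred from returning to prison, as quoted in the text
COST_PER_AVOIDED_RETURN = 107_075  # dollars

# Percentage point reductions in recidivism by program type, from the text
PP_REDUCTION = {"ABE": 2.9, "secondary": 3.3, "vocational": 4.17, "college": 12.74}

def prison_cost_savings(pp_reduction):
    """Expected prison-cost savings per participant, in dollars."""
    return COST_PER_AVOIDED_RETURN * pp_reduction / 100

savings = {name: round(prison_cost_savings(pp)) for name, pp in PP_REDUCTION.items()}
print(savings)
```

The sketch takes the $107,075 figure as given rather than recomputing it from the rounded inputs of 2.7 years and $40,028.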

By focusing only on the costs of crime as they relate to prison costs, we deliberately ignore the broader public costs of crime, including the costs to victims, police or court costs, and the costs to criminals’ families. Our decision not to include a calculation of these costs is not due to any belief that they are small but instead due to the tremendous complexity of their calculation. Cost-of-crime estimates vary widely, depending on the underlying assumptions or estimation strategies researchers choose. There is also significant variation in estimates of the number of crimes committed per criminal, affecting any estimate of the costs associated with reincarceration.

Effects on Employment & Wages

For employment, we must first consider that only those not incarcerated can be employed. According to the U.S. Department of Justice, the 3-year reincarceration rate (the most common horizon used in the employment sample) is 38.6% (Durose & Antenangeli, 2021). Using an estimate by the Prison Policy Initiative (Couloute & Kopf, 2018) of a 73% employment rate for released prisoners, we calculate that 44.8% of people released from prison will be employed, which we get by multiplying the 73% employment rate by the 61.4% of released prisoners who do not recidivate.

Using the baseline of 44.8%, we estimate that the 2.7% increase in the odds of employment caused by participation in ABE courses leads to a 0.66 percentage point increase in post-release employment. This means that for every 100 students participating in prison ABE programs, about 0.66 additional people will be employed, against the counterfactual of no education program. We estimate employment increases of 0.54, 2.48, and 4.68 percentage points for secondary, vocational, and college education, respectively.
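The baseline employment rate and the ABE employment gain can be reproduced with a short sketch. It assumes the 2.7% increase is applied to the odds of employment at the baseline rate; the function name is hypothetical.

```python
def employment_pp_gain(baseline_rate, odds_increase_pct):
    """Percentage point gain in employment from a percent increase in the odds."""
    baseline_odds = baseline_rate / (1.0 - baseline_rate)
    new_odds = baseline_odds * (1.0 + odds_increase_pct / 100.0)
    new_rate = new_odds / (1.0 + new_odds)
    return 100.0 * (new_rate - baseline_rate)

# 73% employment among the 61.4% of released prisoners who are not reincarcerated
baseline = 0.73 * (1.0 - 0.386)
abe_gain = employment_pp_gain(baseline, 2.7)

print(round(100 * baseline, 1))  # baseline employment rate, in percent
print(round(abe_gain, 2))        # ABE gain, in percentage points
```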

To calculate the benefit of increased employment, we use a baseline measure of quarterly wages of $5,600 from Department of Justice data on prisoners released from federal prisons (Carson et al., 2021). Therefore, the 0.66 percentage point increase in employment due to ABE participation leads to a $36.96 quarterly increase in economic gains per student educated in prison. For secondary, vocational, and college education, we estimate that increased employment leads to earnings gains of $30.24, $138.88, and $262.08, respectively. Note that these gains are independent of the wage increases, which represent increases in income due to increases in hourly wages or hours worked, not due to an increase in employment. The wage effect is potentially much broader since it may affect both people who found work because of a prison program and those who would have found work otherwise but experience increased wages due to the program.

The quarterly economic benefits of education via wages are $140.58 for each employed released prisoner. Starting with a baseline 44.8% employment rate, we calculate the post-release employment rate for those receiving ABE, secondary, vocational, or college education to be 45.46%, 45.34%, 47.28%, and 49.48%, respectively. We then multiply these rates by the $140.58 wage benefit to determine the quarterly wage increase for the average education participant for each of these four types of education: $63.91, $63.74, $66.47, and $69.56. By adding these to the benefits of increased employment, we calculate the quarterly total earnings increase for each of these four types of education: $100.87, $93.98, $205.35, and $331.64 for ABE, secondary, vocational, and college education, respectively.
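The two components of the quarterly earnings gain can be combined in a few lines. This is a minimal sketch using only the figures quoted in the text; the constant and function names are hypothetical.

```python
BASELINE_EMPLOYMENT = 0.448      # share of released prisoners employed
BASELINE_QUARTERLY_WAGE = 5600   # dollars per quarter for an employed person
WAGE_EFFECT = 140.58             # quarterly wage gain per employed participant, dollars

# Percentage point gains in employment by program type, from the text
EMPLOYMENT_GAIN_PP = {"ABE": 0.66, "secondary": 0.54, "vocational": 2.48, "college": 4.68}

def quarterly_gain(pp_gain):
    """Return (employment component, wage component, total) in dollars per participant."""
    gain_share = pp_gain / 100
    # Additional people employed, each earning the baseline quarterly wage
    employment_component = gain_share * BASELINE_QUARTERLY_WAGE
    # Wage boost applies to everyone employed after the program
    wage_component = (BASELINE_EMPLOYMENT + gain_share) * WAGE_EFFECT
    return employment_component, wage_component, employment_component + wage_component

totals = {name: round(quarterly_gain(pp)[2], 2) for name, pp in EMPLOYMENT_GAIN_PP.items()}
print(totals)
```

The employment component scales with the number of additional workers, while the wage component scales with the whole employed share, which is why the wage component differs only slightly across program types.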

However, it is important to calculate how long these benefits last. To fully determine the value of education via employment, we must additionally estimate the current value of the increased income stream realized in the future. The estimated effects of education on wages and employment depend on how we discount future values and the decay of positive economic effects. Tyler and Kling (2006) find that education’s positive wage and employment benefits faded mainly by the third year following release. Using this figure, while also considering standard present discounting of future revenue streams, we assume a quarterly decay rate of 9%. This means that the $100.87 economic benefit via ABE becomes $90.78 in the second quarter following release, $81.70 in the third quarter, and so on. By the end of the 3rd year, this decay would mean the economic gain would be about $28.49. Using this framework, we calculate the present value of education via wages and employment over 20 years. The benefit of education via wages and employment for ABE, secondary, vocational, and college education is $993.57, $925.69, $2,022.73, and $3,266.60, respectively.
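The declining benefit stream can be illustrated with a short sketch. The worked figures in the text ($100.87, then $90.78, then $81.70) correspond to each quarter retaining 0.90 of the previous quarter's value once decay and discounting are combined, so the sketch uses that retention factor as an assumption. It is an illustration of the mechanics only and is not expected to reproduce the reported 20-year present values exactly; the function name is hypothetical.

```python
def decayed_stream(initial_benefit, retention=0.90, quarters=13):
    """Quarterly benefit values, starting with the first quarter after release."""
    return [initial_benefit * retention ** q for q in range(quarters)]

# ABE: $100.87 total quarterly earnings gain in the first quarter
abe_stream = decayed_stream(100.87)

print(round(abe_stream[1], 2))   # second quarter
print(round(abe_stream[2], 2))   # third quarter
print(round(abe_stream[12], 2))  # after twelve quarterly steps (three years)
```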

ROI Calculations by Program Type

Table 6 shows the ROI estimates for each of our types of education. While each of the four types of education yields positive returns, there is significant variation in the benefits relative to the money spent on education on a per-participant basis. ABE yields an estimated 106.27% return on educational investment. This means that each dollar spent on education creates benefits of $2.06 in terms of cost savings associated with incarceration or increased future earnings for the prisoner. Secondary education yields a similar ROI of 124.39%. The economic return to vocational education is the highest, at 205.13%. While the ROI for college (61.15%) is low compared to all other forms of education, this is due to the high costs, not the low benefits of education. The average investment in a college participant will yield $16,908 in benefits, or $6,441 net of costs, which is by far the highest per-student return.
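The relationship between ROI, benefits per dollar, and net benefit can be sketched as follows. The function names are hypothetical, and only figures quoted in the text are used; the per-participant costs for the other program types are in Table 5 and are not reproduced here.

```python
def roi(total_benefit, cost):
    """Return on investment as a fraction: net benefit divided by cost."""
    return (total_benefit - cost) / cost

def benefit_per_dollar(roi_percent):
    """Total benefit generated per dollar spent, given ROI in percent."""
    return 1.0 + roi_percent / 100.0

# College: $16,908 in benefits against a $10,467 per-participant cost
college_net = 16_908 - 10_467
college_roi = roi(16_908, 10_467)

print(college_net)                           # net benefit per participant, dollars
print(round(benefit_per_dollar(106.27), 2))  # ABE: benefit per dollar spent
print(round(benefit_per_dollar(205.13), 2))  # vocational: benefit per dollar spent
```

Computed from these rounded inputs, the college ROI comes out at roughly 61.5%, close to the 61.15% reported in the text; the small gap may reflect rounding in the published figures.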

The primary economic benefit of prison education is the reduction in recidivism. Given the relatively low employment rates and wages of released prisoners, the marginal impacts of education on employment measures are relatively small compared to the high costs of crime and incarceration. This means that even the most myopic view on the benefits of education (one that looks only at prison costs, ignoring the positive effects on prisoners’ future wages and the social benefits of avoiding future crimes) would still find a significant positive return to most forms of prison education systems. For all groups of programs studied here, the cost savings via reduced recidivism means that governments can reduce their incarceration-related costs by investing in prisoner education.

Results also indicate that prison education can be described as a positive externality for taxpayers and society. For each type of education program, the societal benefit via decreased recidivism is at least double that of the increased employment benefits to prisoners. Given the high societal benefit of prison education, public funding of prison education would be justified purely through cost savings.

Of course, there are numerous benefits that we are not quantifying here, such as a reduction in the costs associated with crime as well as the positive societal benefits identified with education as a whole. Studies have even found that prison education reduces instances of prison misconduct (Courtney, 2019). While there are likely additional benefits to prisoners of education that we cannot measure here – such as increased family cohesion and the benefit of avoiding prison for the prisoner – it is unlikely these benefits exceed those external benefits realized by society.

Conclusion

While many studies have estimated the effect of prison education, comparisons of education participants to non-participants are plagued by selection bias. Many of the previous meta-analyses of prison education (Wilson et al., 2000; Cecil et al., 2000; Bouffard et al., 2000; Chappell, 2003; Aos et al., 2006; Davis et al., 2013; Bozick et al., 2018) have stressed the need for continued high-quality research to improve our understanding of how education affects post-release outcomes. While there continues to be a need for high-quality research on the subject, the recent increase in papers using quasi-experimental methods creates a need for a new meta-analysis. This paper fills that gap.

This study focuses on the efficacy of prisoner education, including ABE, secondary education, higher education, and job training, seeking to understand what effects these programs provide in improving outcomes for prisoners and, by extension, the public. Using the largest and most comprehensive set of papers used in a meta-analysis to date, we estimate the effect of prison education on various outcomes and calculate an economic return to spending on prison education. We find that prison education leads to significant decreases in recidivism, increases in employment, and modest increases in wages. However, the impacts vary significantly across different types of education.

The breadth of our analysis provides numerous insights into public policy regarding prison education beyond those baseline results. First, though we find education to be effective for each of the post-release outcomes studied, our point estimates are significantly smaller than those of previous meta-analyses. This is due both to the increase in high-quality studies, which are more likely to find smaller effects, and to the fact that more recent studies find education less effective than older studies do. Because the benefits of prison education have been dropping in recent years, our estimates of the average effect should be seen as retrospective. Our main estimates are likely unrealistic expectations for future programs. However, prison education will provide significant economic and cost-saving benefits even with a more modest estimate of the causal effect.

Second, public money will be particularly well-spent on vocational education, given its high rate of return. Each dollar spent on vocational education reduces future incarceration costs by almost $2.17. Additionally, vocational education can be taken alongside academic education, as participation in one does not preclude participation in another helpful program.

Third, the financial benefits of these programs rely on prisons being able to reduce costs as rates of incarceration decrease. If smaller prison populations caused by education result in half-empty prisons with high fixed costs, it will result in higher per-prisoner costs, eating away at the economic benefits of decreased recidivism. The cost savings we estimate can only be fully realized if prisons respond to smaller populations by decreasing operating costs.

There are several limitations to our analysis. Despite the large number of papers, the dearth of papers using randomization means that the results could still be plagued by selection bias. Given the feasibility of using randomization in this setting, future research would be well-served to rely more heavily on randomized experiments. Also, our cost–benefit analysis does not wholly calculate the entire universe of costs and benefits. We do not include victimization costs or any external costs of increased crime (court or enforcement costs, negative externalities, etc.); therefore, our calculated benefits are smaller than a measure of the total societal benefits.

The costs of programs exhibit large variations. Therefore, our measure of average program costs may not reflect the true costs many programs face. Prison education programs feature high fixed costs and low marginal costs. As a result, the costs of programs appear to vary significantly with participation rates. The Windham School District, which serves most Texas prisoners, has a cost per participant that is a fraction of the estimates for other programs. We should expect the efficacy of education to decrease with program expansion, too. Students who are currently enrolled in education are likely to be those who stand to benefit the most. As programming expands, the marginal benefit is likely to decrease as education services are provided to students for whom the benefit is lower than average.

These limitations provide opportunities for future research and continued discussion. First, as education programs expand, evaluative goals should be prioritized, and randomized control trials should be conducted whenever possible. Second, in-depth analyses of the costs and benefits of prison programs are virtually non-existent. To our knowledge, only Aos et al. (2001) have used detailed administrative data to evaluate the economic effectiveness of various prison programs. Much more research is needed in this space for policymakers to make well-informed decisions.

Notes

Cecil et al. (2000) state that “More methodologically rigorous research is needed in this area before definitive conclusions can be drawn,” while according to Bouffard et al. (2000), “more research that is well designed is needed before any definitive conclusions can be drawn about this type of intervention.”

The total number of studies included in the meta-analysis exceeds the number of papers included, as papers that study more than one outcome (such as recidivism and employment) will be used separately.

We use the log odds ratio for our statistical analysis, and then convert these results to odds ratios, which have an intuitive interpretation.

We recreate the data and run a logit regression. Whenever papers included cross-tabulated tables, we included any possible variables. For example, Clark (1991) included recidivism data for each year. We included year dummy variables in the recreated data and as covariates in the logit regression.

References

*Studies marked with an asterisk were evaluated for this study

Adams, K., Bennett, K. J., Flanagan, T. J., Marquart, J. W., Cuvelier, S. J., Fritsch, E., Gerber, J., Longmire, D. R., & Burton, V. S. J. (1994). A large-scale multidimensional test of the effect of prison education programs on offenders’ behavior. Prison Journal, 74(4), 433–449. https://doi.org/10.1177/0032855594074004004

*Allen, R. (2006). An economic analysis of prison education programs and recidivism. Emory University, Department of Economics.

*Anderson, D. B. (1982). The relationship between correctional education and parole success. Journal of Offender Counseling Services Rehabilitation, 5(3–4), 13–26. https://doi.org/10.1300/J264v05n03_03

*Anderson, D. B., Schumacker, R. E., & Anderson, S. L. (1991). Releasee characteristics and parole success. Journal of Offender Rehabilitation, 17(1–2). https://doi.org/10.1300/J076v17n01_10

*Andrews, D. A., Zinger, I., Hoge, R. D., & Bonta, J. (1990). Does correctional treatment work? A clinically relevant and psychologically informed meta‐analysis. Criminology, 28(3), 369–404. https://doi.org/10.1111/j.1745-9125.1990.tb01330.x

Aos, S., Miller, M. G., & Drake, E. (2006). Evidence-based adult corrections programs: What works and what does not. Olympia: Washington State Institute for Public Policy. Retrieved July 15, 2022, from https://www.researchgate.net/publication/237270608_Evidence-Based_Adult_Corrections_Programs_What_Works_and_What_Does_Not

*Aos, S., Phipps, P., Barnoski, R., & Lieb, R. (2001). The comparative costs and benefits of programs to reduce crime, version 4.0. Washington State Institute for Public Policy. https://eric.ed.gov/?id=ED453340

Apel, R., & Sweeten, G. (2010). The impact of incarceration on employment during the transition to adulthood. Social Problems, 57(3), 448–479. https://doi.org/10.1525/sp.2010.57.3.448

*Armstrong, G., & Atkin-Plunk, C. (2014). Evaluation of the Windham School District Correctional Education Programs – SY2010. Sam Houston State University.

*Armstrong, G., Giever, D., & Lee, D. (2012). Evaluation of the Windham school district correctional education programs. Sam Houston State University. Retrieved September 16, 2023, from https://www.semanticscholar.org/paper/Evaluation-of-the-Windham-School-District-Education-Armstrong-Giever/9060f6530d9b7f5a4a7ed87ba77213d11da67b61

*Batiuk, M. E., Lahm, K. F., McKeever, M., Wilcox, N., & Wilcox, P. (2005). Disentangling the effects of correctional education: Are current policies misguided? An event history analysis. Criminal Justice, 5(1), 55–74. https://doi.org/10.1177/1466802505050979

Benenson Strategy Group. (2017). Smart justice campaign polling on American’s attitudes on criminal justice. aclu.org. Retrieved 4/10/2023, from https://www.aclu.org/report/smart-justice-campaign-polling-americans-attitudes-criminal-justice

*Blackburn, F. S. (1981). The relationship between recidivism and participation in a community college program for incarcerated offenders. Journal of Correctional Education (1974-), 32(3), 23–25. Retrieved July 15, 2022, from http://www.jstor.org/stable/44944747

*Blackhawk Technical College. (1996). RECAP (Rock County education and criminal addictions program) program manual, prepared to be of assistance in program replication. Blackhawk Technical College. Retrieved September 16, 2023, from https://eric.ed.gov/?q=Blackhawk&id=ED398353

Bouffard, J., MacKenzie, D. L., & Hickman, L. (2000). Effectiveness of Vocational Education and Employment Programs for Adult Offenders: A Methodology-Based Analysis of the Literature. Journal of Offender Rehabilitation, 31(2), 1–41. https://doi.org/10.1300/J076v31n01_01

Bozick, R., Steele, J., Davis, L., & Turner, S. (2018). Does providing inmates with education improve post-release outcomes? A meta-analysis of correctional education programs in the United States. Journal of Experimental Criminology, 14(3), 389–428. https://doi.org/10.1007/s11292-018-9334-6

Brenan, M. (2020). Fewer Americans call for tougher criminal justice system. GALLUP. Retrieved 4/9/2023, from https://news.gallup.com/poll/324164/fewer-americans-call-tougher-criminal-justice-system.aspx

*Brewster, D. R., & Sharp, S. F. (2002). Educational programs and recidivism in Oklahoma: Another look. The Prison Journal, 82(3), 314–334. https://doi.org/10.1177/003288550208200302

Brody, L. (2019, October 19). Three [Incarcerated Students] beat Harvard in a debate. Here’s what happened next. Bard Prison Initiative. https://bpi.bard.edu/debate-what-happened-next/

*Bueche, J. K., Jr. (2014). Adult offender recidivism rates: how effective is pre-release and vocational education programming and what demographic factors contribute to an offenders return to prison (Order No. 29121812). Available from ProQuest Dissertations & Theses Global. (2674876790). Retrieved July 15, 2022, from https://www.proquest.com/dissertations-theses/adult-offender-recidivism-rates-how-effective-is/docview/2674876790/se-2

Carson, E. A., Sandler, D. H., Bhaskar, R., Fernandez, L. E., & Porter, S. R. (2021). Employment of persons released from federal prison in 2010. U.S. Department of Justice, Office of Justice Programs, Bureau of Justice Statistics. Retrieved September 16, 2023, from https://bjs.ojp.gov/library/publications/employment-persons-released-federal-prison-2010

Cecil, D. K., Drapkin, D. A., MacKenzie, D. L., & Hickman, L. J. (2000). The effectiveness of adult basic education and life-skills programs in reducing recidivism: A review and assessment of the research. Journal of Correctional Education, 51(2), 207–226. Retrieved July 15, 2022, from https://www.jstor.org/stable/pdf/41971937.pdf

Chappell, C. A. (2003). Postsecondary correctional education and recidivism: A meta-analysis of research conducted 1990–1999 (Order No. 3093357). Available from ProQuest Dissertations & Theses Global. (305330315). https://www.proquest.com/dissertations-theses/post-secondary-correctional-education-recidivism/docview/305330315/se-2

Chattoraj, B. N. (1985). The social, psychological and economic consequences of imprisonment. Social Defense, 20(79), 19–24.

*Cho, R., & Tyler, J. (2008). Prison-based adult basic education (ABE) and post-release labor market outcomes. Paper presented at the Reentry Roundtable on Education, John Jay College of Criminal Justice, New York, April 1. Retrieved July 15, 2022, from https://api.semanticscholar.org/CorpusID:41776473

Cho, R. M., & Tyler, J. H. (2013). Does prison-based adult basic education improve post-release outcomes for male prisoners in Florida? Crime and Delinquency, 59(7), 915–1005. https://doi.org/10.1177/0011128710389588

Clark, D. D. (1991). Analysis of return rates of the inmate college program participants. United States: State of New York, Department of Correctional Services. Retrieved September 16, 2023, from https://www.ojp.gov/ncjrs/virtual-library/abstracts/analysis-return-rates-inmate-college-program-participants

*Coffey, B. B. (1983). The effectiveness of vocational education in Kentucky’s correctional institutions as measured by employment status and recidivism (Order No. 8401354). Available from ProQuest Dissertations & Theses Global. (303167016). Retrieved July 15, 2022, from https://www.proquest.com/dissertations-theses/effectiveness-vocational-education-kentuckys/docview/303167016/se-2

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design and analysis issues for field research. Chicago: Rand McNally, 164, 23–29.

Couloute, L., & Kopf, D. (2018). Out of prison & out of work: Unemployment among formerly incarcerated people. Prison Policy Initiative. Retrieved April 11, 2023, from https://www.prisonpolicy.org/reports/outofwork.html

Courtney, J. A. (2019). The relationship between prison education programs and misconduct. Journal of Correctional Education (1974-), 70(3), 43–59. Retrieved July 15, 2022, from https://www.jstor.org/stable/26864369

*Cronin, J. (2011). The path to successful reentry: The relationship between correctional education, employment and recidivism. University of Missouri, Institute of Public Policy. Retrieved September 16, 2023, from http://www.antoniocasella.eu/nume/Cronin_2011.pdf

*Darolia, R., Mueser, P., & Cronin, J. (2021). Labor market returns to a prison GED. Economics of Education Review, 82, 1–27, 102093. https://doi.org/10.1016/j.econedurev.2021.102093

Davis, L. M., Bozick, R., Steele, J. L., Saunders, J., & Miles, J. N. V. (2013). Evaluating the effectiveness of correctional education: A meta-analysis of programs that provide education to incarcerated adults. RAND Corporation. Retrieved September 16, 2023, from https://www.ojp.gov/ncjrs/virtual-library/abstracts/evaluating-effectiveness-correctional-education-meta-analysis

*Davis, S., & Chown, B. (1986). Recidivism among offenders incarcerated by the Oklahoma Department of Corrections who received vocational-technical training: A survival data analysis of offenders released January 1982 through July 1986. Retrieved July 15, 2022, from https://www.ojp.gov/ncjrs/virtual-library/abstracts/recidivism-among-offenders-incarcerated-oklahoma-department

Department of Corrections Services (2010). Follow-up study of offenders who earned high school equivalency diplomas (GEDs) while incarcerated in DOCS. New York Department of Corrections Services.

*Denney, M. G. T., & Tynes, R. (2021). The effects of college in prison and policy implications. Justice Quarterly, 38(7), 1542–1566. https://doi.org/10.1080/07418825.2021.2005122

DerSimonian, R., & Laird, N. (1986). Meta-analysis in clinical trials. Controlled Clinical Trials, 7(3), 177–188. https://doi.org/10.1016/0197-2456(86)90046-2

*Dickman, C. (1987). Academic program participation and prisoner outcomes. Lansing, MI: Facilities Research and Evaluation Unit, Michigan Department of Corrections Program Bureau.

DiZerega, M., & Eddy, H. (2022). How state higher ed leaders are expanding college in prison. Vera. Retrieved April 10, 2023, from https://www.vera.org/news/how-state-higher-ed-leaders-are-expanding-college-in-prison

*Downes, E. A., Monaco, K. R., & Schreiber, S. O. (1989). Evaluating the effects of vocational education on inmates: A research model and preliminary results. Yearbook of Correctional Education, 249–262.

Durose, M. R., & Antenangeli, L. (2021). Recidivism of prisoners released in 34 states in 2012: A 5-year follow-up period (2012–2017). U.S. Department of Justice, Office of Justice Programs, Bureau of Justice Statistics. Retrieved September 16, 2023, from https://www.ojp.gov/library/publications/recidivism-prisoners-released-34-states-2012-5-year-follow-period-2012-2017

*Duwe, G., & Clark, V. (2014). The effects of prison-based educational programming on recidivism and employment. The Prison Journal, 94(4), 454–478. https://doi.org/10.1177/0032885514548009

Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. British Medical Journal, 315(7109), 629–634. https://doi.org/10.1136/bmj.315.7109.629

Farrington, D. P., Gottfredson, D. C., Sherman, L. W., & Welsh, B. C. (2003). The Maryland scientific methods scale. In Evidence-based crime prevention (pp. 13–21). Routledge. https://doi.org/10.4324/9780203166697

*Fine, M., Torre, M. E., Boudin, K., Bowen, I., Clark, J., Hylton, D., Martinez, M., Roberts, R. A., Smart, P., & Upegui, D. (2001). Changing minds: The impact of college in a maximum-security prison. Effects on women in prison, the prison environment, reincarceration rates and post-release outcomes. The Graduate Center of the City University of New York & Women in Prison at the Bedford Hills Correctional Facility. Retrieved September 16, 2023, from https://www.ojp.gov/ncjrs/virtual-library/abstracts/changing-minds-impact-college-maximum-security-prison-effects-women

Fogarty, J., & Giles, M. (2018). Recidivism and education revisited: Evidence for the USA. Working Paper 1806. https://doi.org/10.22004/ag.econ.275642

Fowles, R., & Christensen, M. (1995). A statistical analysis of Project Horizon: The Utah corrections education recidivism reduction plan. Salt Lake City, UT: University of Utah. Retrieved September 16, 2023, from https://content.csbs.utah.edu/~fowles/horizon.pdf

*Gaither, C. C. (1980). An evaluation of the Texas Department of Corrections’ Junior College Program. Master’s thesis, Northeast Louisiana State University, Monroe, Louisiana. Accessed 15 July 2022.

*Gordon, H. R. D., & Weldon, B. (2003). The Impact of Career and Technical Education Programs on Adult Offenders: Learning Behind Bars. Journal of Correctional Education, 54(4), 200–209. Retrieved July 15, 2022, from http://www.jstor.org/stable/23292175

*Harer, M. D. (1995). Prison education program participation and recidivism: A test of the normalization hypothesis. Washington, DC: Federal Bureau of Prisons, Office of Research and Evaluation, 182.

*Hill, L., Scaggs, S. J. A., & Bales, W. D. (2017). Assessing the statewide impact of the Specter Vocational Program on reentry outcomes: A propensity score matching analysis. Journal of Offender Rehabilitation, 56(1), 61–86. https://doi.org/10.1080/10509674.2016.1257535

Hjalmarsson, R. (2008). Criminal justice involvement and high school completion. Journal of Urban Economics, 63(2), 613–630. https://doi.org/10.1016/j.jue.2007.04.003

*Holloway, J., & Moke, P. (1986). Postsecondary correctional education: An evaluation of parolee performance. Wilmington College. Retrieved September 16, 2023, from https://files.eric.ed.gov/fulltext/ED269578.pdf

*Hopkins, A. J. (1988). Offender recidivism report. Maryland Department of Public Safety and Correctional Services. Retrieved September 16, 2023, from https://www.ojp.gov/ncjrs/virtual-library/abstracts/offender-recidivism-report

*Hsieh, M. L., Chen, K. J., Choi, P. S., & Hamilton, Z. K. (2022). Treatment combinations: The joint effects of multiple evidence-based interventions on recidivism reduction. Criminal Justice and Behavior, 49(6), 911–929. https://doi.org/10.1177/00938548211052584

Hudson Link for Higher Education in Prison. (n.d.). History. Retrieved July 15, 2022, from https://hudsonlink.org/about/history

*Hull, K. A., Forrester, S., Brown, J., Jobe, D., & McCullen, C. (2000). Analysis of recidivism rates for participants of the academic/vocational/transition education programs offered by the Virginia Department of Correctional Education. Journal of Correctional Education, 51(2), 256–261. Retrieved July 15, 2022, from http://www.jstor.org/stable/41971944

*Ismailova, Z. (2007). Prison education program participation and recidivism. (Master’s thesis, Duquesne University). Retrieved July 15, 2022, from https://dsc.duq.edu/etd/685

*Jensen, E. L., Williams, C. J., & Kane, S. L. (2020). Do in-prison correctional programs affect post-release employment and earnings? International Journal of Offender Therapy and Comparative Criminology, 64(6–7), 674–690. https://doi.org/10.1177/0306624X19883972

*Johnson, C. M. (1984). The effects of prison labor programs on post-release employment and recidivism (critical theory, vocational education, work release) (Order No. 8424609). Available from ProQuest Dissertations & Theses Global. (303293727). Retrieved July 15, 2022, from https://www.proquest.com/dissertations-theses/effects-prison-labor-programs-on-post-release/docview/303293727/se-2

Kaeble, D. (2021). Time served in state prison, 2018. Bureau of Justice Statistics. Retrieved April 11, 2023, from https://bjs.ojp.gov/library/publications/time-served-state-prison-2018

*Kelso, C. E. (1996). A study of the recidivism of Garrett Heyns Education Center graduates released between 1985 and 1991. Journal from the Northwest Center for the Study of Correctional Education, 1(1), 45–51.

*Kim, R. H., & Clark, D. (2013). The effect of prison-based college education programs on recidivism: Propensity score matching approach. Journal of Criminal Justice, 41(3), 196–204. https://doi.org/10.1016/j.jcrimjus.2013.03.001

*Kim, R. (2010). Follow-up study of offenders who earned high school equivalency diplomas (GEDs) while incarcerated in DOCS. State of New York, Department of Correctional Services.

*La Roi, S. G. (2022). Correctional education: A pathway to reducing recidivism in Wisconsin? (166). Lawrence University Honors Projects. Retrieved July 15, 2022, from https://lux.lawrence.edu/luhp/166

*Lanaghan, P. (1998). The impact of receiving a general equivalency diploma while incarcerated on the rate of recidivism. Master’s thesis, Franciscan University of Steubenville.

*Langenbach, M., North, M. Y., Aagaard, L., & Chown, W. (1990). Televised instruction in Oklahoma prisons: A study of recidivism and disciplinary actions. Journal of Correctional Education, 41(2), 87–94. Retrieved July 15, 2022, from http://www.jstor.org/stable/41971591

Lattimore, P. K., Witte, A. D., & Baker, J. R. (1990). Experimental assessment of the effect of vocational training on youthful property offenders. Evaluation Review, 14(2), 115–133. https://doi.org/10.1177/0193841X9001400201

Lee, H., Porter, L. C., & Comfort, M. (2014). Consequences of family member incarceration: Impacts on civic participation and perceptions of the legitimacy and fairness of government. The Annals of the American Academy of Political and Social Science, 651(1), 44–73. https://doi.org/10.1177/0002716213502920

*Lichtenberger, E. J. (2007). The impact of vocational programs on post-release outcomes for full completers from the fiscal year 1999, 2000, 2001, and 2002 release cohorts. Center for Assessment, Evaluation, and Educational Programming, Virginia Tech.

*Lichtenberger, E. J. (2011). Measuring the effects of the level of participation in prison-based career and technical education programs on recidivism. Working paper.

*Lichtenberger, E. J., O’Reilly, P. A., Miyazaki, Y., & Kamulladeen, R. M. (2009). Direct and indirect impacts of career and technical education on post-release outcomes. Center for Assessment, Evaluation, and Educational Programming, Virginia Tech.

*Lipsey, M. W., & Wilson, D. B. (1993). The efficacy of psychological, educational, and behavioral treatment: Confirmation from meta-analysis. American Psychologist, 48(12), 1181–1209. https://doi.org/10.1037/0003-066X.48.12.1181

Lipton, D., Martinson, R., & Wilks, J. (1975). The effectiveness of correctional treatment: A survey of treatment evaluation studies. Greenwood.

*Lochner, L., & Moretti, E. (2004). The effect of education on crime: Evidence from prison inmates, arrests, and self-reports. American Economic Review, 94(1), 155–189. https://doi.org/10.1257/000282804322970751

*Lockwood, D. (1991). Prison higher education and recidivism: A program evaluation. Yearbook of Correctional Education, 1991, 187–201.

*Long, J. S., Sullivan, C., Wooldredge, J., Pompoco, A., & Lugo, M. (2019). Matching needs to services: Prison treatment program allocations. Criminal Justice and Behavior, 46(5), 674–696. https://doi.org/10.1177/0093854818807952

*Lopez, A. K. (2020). The impact of career and technical education program outcomes in the Windham school district on offender post-release employment status (Ed.D.) (Order No. 28180633). Available from ProQuest Dissertations & Theses Global. (2488256598). Retrieved April 9, 2023, from https://www.proquest.com/dissertations-theses/impact-career-technical-education-program/docview/2488256598/se-2

Mai, C., & Subramanian, R. (2017). The price of prisons: Examining state spending trends, 2010–2015. Vera Institute of Justice. Retrieved from https://www.vera.org/publications/price-of-prisons-2015-state-spending-trends

*Markley, H., Flynn, K., & Bercaw-Dooen, S. (1983). Offender skills training and employment success: An evaluation of outcomes. Corrective and Social Psychiatry and Journal of Behavior Technology Methods and Therapy, 29(1), 1–11.

*Martinez, A. I., & Eisenberg, M. (2000a). Impact of educational achievement of inmates in the Windham School District on post-release employment. Criminal Justice Policy Council.

*Martinez, A. I., & Eisenberg, M. (2000b). Impact of educational achievement of inmates in the Windham School District on post-release employment. Criminal Justice Policy Council.

Martinson, R. (1974). What works? Questions and answers about prison reform. The Public Interest, 35, 22–54.

*McNeeley, S. (2023). The effects of vocational education on recidivism and employment among individuals released before and during the COVID‐19 pandemic. International Journal of Offender Therapy and Comparative Criminology, 67(15), 1547–1564. https://doi.org/10.1177/0306624X2311598

*Nally, J., Lockwood, S., Knutson, K., & Ho, T. (2012). An evaluation of the effect of correctional education programs on post-release recidivism and employment: An empirical study in Indiana. Journal of Correctional Education, 63(1), 69–89. Retrieved July 15, 2022, from https://www.jstor.org/stable/26507622

*New York State Dept of Correctional Services: Division of Program Planning, Research & Evaluation. (1992). Overview of department follow-up research on return rates of participants in major programs 1992. New York State Department of Correctional Services. Retrieved July 15, 2022, from https://www.ojp.gov/ncjrs/virtual-library/abstracts/overview-department-follow-research-return-rates-participants-major

*Nuttall, J., Hollmen, L., & Staley, E. M. (2003). The effect of earning a GED on recidivism rates. Journal of Correctional Education, 54(3), 90–94. https://www.jstor.org/stable/41971144

*O’Neil, M. (1990). Correctional higher education: Reduced recidivism? Journal of Correctional Education, 41(1), 28–31. https://www.jstor.org/stable/41970810

*Perry, C. (2015). Vocational and educational programs: Impacts on recidivism. Harvard Dataverse, V1. https://doi.org/10.7910/DVN/28791

*Piehl, A. M. (1994). Learning while doing time. Malcolm Wiener Center for Social Policy, John F. Kennedy School of Government, Harvard University.

*Pompoco, A., Wooldredge, J., Lugo, M., Sullivan, C., & Latessa, E. J. (2017). Reducing inmate misconduct and prison returns with facility education programs. Criminology & Public Policy, 16(2), 515–547. https://doi.org/10.1111/1745-9133.12290

*Roessger, K. M., Liang, X., Weese, J., & Parker, D. (2021). Examining moderating effects on the relationship between correctional education and post-release outcomes. Journal of Correctional Education, 72(1), 13–42. https://www.jstor.org/stable/27042234

*Ryan, T. P., & Desuta, J. F. (2000). A comparison of recidivism rates for operation outward reach (OOR) participants and control groups of non-participants for the years 1990 through 1994. Journal of Correctional Education, 51(4), 316–319. Retrieved July 15, 2022, from http://www.jstor.org/stable/41971949

*Sabol, W. J. (2007). Local labor market conditions and post-prison employment: Evidence from Ohio. In S. Bushway, M. A. Stoll, & D. A. Weiman (Eds.), Barriers to reentry? The labor market for released prisoners in post-industrial America (pp. 257–303). New York: Russell Sage Foundation.

Sawyer, W. (2019). Since you asked: How did the 1994 crime bill affect prison college programs. Prison Policy Initiative, August. Retrieved July 15, 2022, from https://www.prisonpolicy.org/blog/2019/08/22/college-in-prison/

*Saylor, W. G., & Gaes, G. G. (1997). PREP: Training inmates through industrial work participation, and vocational and apprenticeship instruction. Corrections Management Quarterly, 1(2), 32–43.

Sentencing Project (2019). U.S. prison population trends: Massive buildup and modest decline. The Sentencing Project. Retrieved September 16, 2023, from https://www.sentencingproject.org/app/uploads/2022/08/U.S.-Prison-Population-Trends.pdf

*Schumacker, R. E., Anderson, D. B., & Anderson, S. L. (1990). Vocational and academic indicators of parole success. Journal of Correctional Education, 41(1), 8–13. Retrieved July 15, 2022, from https://www.jstor.org/stable/41970805

*Shlafer, R. J., Reedy, T., & Davis, L. (2017). School‐based outcomes among youth with incarcerated parents: Differences by school setting. Journal of School Health, 87(9), 687–695. https://doi.org/10.1111/josh.12539

*Smith, L. G., & Steurer, S. (2005). Pennsylvania Department of Corrections education outcome study. Correctional Education Association.

*Smith, M. J., Parham, B., Mitchell, J., Blajeski, S., Harrington, M., Ross, B., Johnson, J., Brydon, D. M., Johnson, J. E., Cuddeback, G. S., Smith, J. D., Bell, M. D., Mcgeorge, R., Kaminski, K., Suganuma, A., & Kubiak, S. (2023). Virtual reality job interview training for adults receiving prison-based employment services: A randomized controlled feasibility and initial effectiveness trial. Criminal Justice and Behavior, 50(2), 272–293. https://doi.org/10.1177/00938548221081447

Smith, P., Gendreau, P., & Swartz, K. (2009). Validating the principles of effective intervention: A systematic review of the contributions of meta-analysis in the field of corrections. Victims & Offenders, 4(2), 148–169. https://doi.org/10.1080/15564880802612581

*Steurer, S. J., & Smith, L. G. (2003). Education reduces crime: Three-state recidivism study. Executive Summary. Retrieved July 15, 2022, from https://eric.ed.gov/?id=ED478452

Tennessee Higher Education Initiative. (n.d.). About. Retrieved July 15, 2022, from https://www.thei.org/about/about