Abstract

Stated preference (SP) surveys typically ask respondents to make a choice under a hypothetical situation. However, the choice context is often unrealistic, leading to errors and biases in the response. To overcome this, revealed preference (RP) data has been used to create a more realistic choice context for generating SP questions. For instance, in stated adaptation (SA) surveys, users are asked to answer SP questions based on a specific RP context they actually experienced. One challenge in SA surveys is that it is difficult for the respondents to precisely recall the RP context, especially when there is a longer time gap between RP behavior and SP response (response lag). However, no empirical studies have been conducted to test how elicited preferences vary in response to changes in the response lag. This study empirically examines the impact of response lag on SP responses using real-time SA survey data collected from Kumamoto and Hiroshima, Japan. To accomplish this, we developed a survey tool that enables respondents to answer SP questions in real time, i.e., immediately after their RP behavior. The empirical results confirmed that systematic bias increases with an increase in response lag. Additionally, the results showed that the greater the response lag, the more respondents tended to focus on the SP attributes rather than the RP attributes. These findings indicate that the timing of responses is an important survey design parameter when conducting an SA survey.

Introduction

The stated preference (SP) survey can be a useful tool as it helps to capture preferences towards new services or goods that are yet to be introduced. It also allows for measuring travel demand and policy effects without conducting social experiments, which are usually expensive and thus difficult to conduct frequently. However, SP survey data is known to suffer from various biases, including affirmation bias, unconstrained bias, justification bias, and policy response bias. These biases arise essentially because the choice results do not affect the actual life of the respondents (Ben-Akiva and Morikawa 1990; Caussade et al. 2004; Arentze et al. 2003; Bann 2002). We often call these biases caused by SP surveys hypothetical biases. Conventional SP surveys ask respondents to answer questions under hypothetical scenarios. Thus, when the respondents answer the survey, they may not be able to consider various contextual factors, i.e., the factors which represent the reality of the travel scenario. This may cause differences between their actual behavior and their stated preferences.

To alleviate these biases in SP data, several methods have been proposed, many of which incorporate information on the actual behavior of respondents, i.e., revealed preference (RP) information (Rose et al. 2008; Train and Wilson 2008; Sadakane et al. 2010; Danaf et al. 2019; Feneri et al. 2021; Safira and Chikaraishi 2022). One such method is the stated adaptation (SA) survey (Danaf et al. 2019; Feneri et al. 2021; Safira and Chikaraishi 2022), where the respondents are asked to answer SP questions within their real decision context (i.e., RP context). The advantage of this approach is that various contextual factors, such as scheduling constraints experienced by the respondents, are taken into account, thereby reducing hypothetical biases (Feneri et al. 2021). With the advancement of web- and smartphone-based surveys, it has become easier to incorporate RP information while asking SP questions. However, this approach is valid only when the respondents can accurately recall the RP context.

One of the major factors influencing the accuracy of memory recall is the time elapsed after engaging in RP behavior. As the time-gap duration between the RP behavior and SP response (referred to as "response lag" in this study) increases, respondents may find it difficult to accurately recall the RP context. While there have been numerous studies examining the impact of incorporating RP data on SP responses, as reviewed in the following section, to the best of the authors’ knowledge, no study has explored how the response lag influences SP answers.

In this study, we quantitatively analyze the influence of response lag on preference elicitation results using a real-time SA survey in Japan. To accomplish this, we developed a survey tool that can reflect the RP context and allow respondents to answer SP questions in real time (Puspitasari et al. 2021). The real-time SA survey asked respondents to state their behavioral adaptations to a hypothetically introduced congestion pricing scheme, where the alternatives include: (1) keep the present trip under a road pricing scheme; (2) cancel the trip; (3) change the time of day; (4) change the destination; (5) change the travel mode; and (6) change the route. It is worth noting that the 1st and 6th options may not significantly impact their activity-travel schedule after the trip, while the other options could affect travel decisions for the remainder of the day. For example, “cancel trip” and “change the destination” options could affect the destination of the next trip due to the change in the origin; “change the travel mode” from car to public transit may make it difficult to reach some of the destinations where public transit services are not available; and “change the time of the day” could potentially force them to cancel some of the trips they had planned to do after the current one. In summary, some of the behavioral adaptations would require travelers to make considerable changes to their schedule, i.e., have a higher cost of schedule adjustment, while others would not. It can be expected that the respondents can recall such schedule adjustment costs when answering the SP question immediately after making the trip, while they may not be able to do so when answering the SP question at a later time. Thanks to the diffusion of smartphones, now we can easily ask the respondents to answer the SP question immediately after the trip using a push notification function (the details are explained in section “Data collection method”).

To investigate these impacts of response lag, this study formulates the following three hypotheses (Fig. 1):

- H1. The greater the response lag, the greater the systematic bias in the choice result.

- H2. The greater the response lag, the more difficult it becomes for the respondent to recall the memory of the RP context when answering SP questions, i.e., the response lag acts as a moderator variable that reduces the impacts of the RP attributes on the choice result.

- H3. The greater the response lag, the more hypothetical (and the less real) the choice context becomes, resulting in a more dominant influence of the SP attributes on the choice result, i.e., the response lag acts as a moderator variable that increases the impacts of the SP attributes on the choice result.

For the first hypothesis, we test the possibility of systematic bias in SP responses attributed to the response lag. Reasons for systematic biases could be (1) social desirability bias, (2) not being context aware, and/or (3) lack of real penalties/gains from the choice. Although we could not identify the reasons, we test for the null hypothesis where the added response lag variables do not affect the choice.

Regarding the second and third hypotheses, we test whether the relative impact of the RP attributes on the choice weakens, and that of the SP attributes strengthens, as the response lag increases, i.e., we evaluate the response lag as a moderator variable. Essentially, we investigate whether longer response lags lead respondents to rely predominantly on SP attributes while disregarding the effects of the RP context.

The remainder of this paper is structured as follows. Section “Literature review” provides a literature review, while section “Data collection method” describes the data utilized in the study. The results of the basic analysis are presented in section “The results of descriptive analysis”. Section “Modeling framework” describes the model used in the estimation. Section “Estimation results” presents the estimation results using data from the real-time SA survey, and section “Conclusions” concludes the paper by discussing key findings, contributions, and directions for future research.

Literature review

How to improve the reliability of SP surveys

There is a rich body of literature which demonstrates the usefulness of SP surveys for obtaining users’ preferences in hypothetical situations (Ben-Akiva and Morikawa 1990; Rose and Bliemer 2009; Hensher 1994). However, when respondents answer in the SP survey, various biases such as affirmation bias may occur due to the experimental nature of the method, so it is challenging to obtain accurate users’ preferences.

To deal with the problems associated with SP surveys, a number of methods have been proposed, such as sending users a message about the possibility of bias in the survey before they answer (“cheap talk”; Fifer et al. 2014) or requesting them to attach a confidence level (e.g., 0–10 points; Fifer et al. 2014) to their choices. Methods that combine SP and RP surveys have also been widely used to reduce the above-mentioned biases. In the following sections, we review the methods that combine SP and RP surveys.

Bias adjustment method for SP survey using RP information

The combined RP-SP model was proposed to exploit the advantages and offset the disadvantages of both RP and SP data, making it possible to control for biases potentially contained in SP data (Ben-Akiva and Morikawa 1990). Additionally, an RP-SP simultaneous model with correlated error terms has been proposed (Morikawa 1994). However, these models do not directly consider how RP contextual factors affect the SP choice, and thus the RP-SP model can be seen as a compromise method that is useful when RP and SP data have already been independently collected.

SP survey design method combined with RP survey

In order to make the choice task more realistic, a number of SP survey designs that utilize RP information have been proposed. One such method is the pivoting SP survey method (Rose et al. 2008), which creates SP attribute levels based on the user’s information obtained in the RP survey. It adjusts the attribute levels by increasing or decreasing them based on a reference obtained from the RP survey. However, in the pivoting SP survey, RP information is only utilized to make the levels of SP attributes realistic, and the survey is not designed to encourage respondents to consider the RP context while answering SP questions. For instance, in a real-life context, some people may decide to pay the congestion charge if they have to attend an important meeting and cannot afford to be delayed. Such RP contextual information would be very important to estimate how many people would change their behavior after the introduction of congestion charge, but the pivoting SP survey cannot take this aspect into account. It has been reported that ignoring these contextual factors can lead to bias, for example, overestimating the preference for new travel modes (Huynh et al. 2017a, b).

Another method of combining RP information with SP surveys is the SA survey (Danaf et al. 2019; Feneri et al. 2021; Safira and Chikaraishi 2022). In an SA survey, questions are designed to reflect the user’s actual context and experience, allowing for SP responses that consider the RP context. The SP-off-RP method (Train and Wilson 2008) also aims to obtain SP responses considering the RP context, similar to the SA survey. However, in SA surveys, respondents may find it difficult to precisely recall the RP context as the time elapsed since their RP behavior increases, i.e., as the response lag increases. As the response lag increases, the SA survey may not be able to gather SP responses that adequately account for the influence of the RP context. Therefore, we have developed a survey tool that can reflect the RP context in real time, while measuring the response lag (Puspitasari et al. 2021). The real-time SA survey allows respondents to answer SA questions in real time, utilizing RP information. This approach facilitates easier recall of the RP context and is expected to yield relatively reliable SP answers. However, to the best of our knowledge, there are no existing studies on real-time SA surveys in the transportation field except ours. In summary, the effectiveness of real-time responses has not yet been confirmed, and this study attempts to address this research gap.

Studies on the effect of response time on choice decisions

Several studies have examined the impact of response time on choices in SP surveys. For instance, research on paper-based surveys has shown that when respondents were provided with self-reported reflection time, their preference for new goods decreased (Cook et al. 2012). Meanwhile, in web-based SP surveys, it was observed that an increase in response time increased the preference for new goods (Börger 2016). Moreover, in SP surveys, a longer response time was observed to decrease the variance of error terms and the variance of randomly distributed taste parameters in a mixed logit model (Haaijer et al. 2000; Rose and Black 2006). However, another study found contrary evidence and observed that response time increased error variance (Bech et al. 2011).

It is important to note that the response time examined in the aforementioned studies differs from the response lag addressed in this study. Specifically, two distinct mechanisms may influence the choice results based on the timing gap between RP behavior and SP response: (1) memory effects (also called “response lag” effects in this study), where a larger timing gap makes it more challenging for the respondents to accurately recall the RP context, and (2) time-to-think effects, where more time spent contemplating the choice task may lead to more precise SP answers. While the latter effects cannot be disregarded for complex choice tasks (such as selecting different environmental policy options affecting individual and collective welfare), we assume that the former effects dominate in the context of behavioral adaptations to a hypothetical congestion pricing scheme. This is because the survey primarily focuses on individual preferences and, in principle, does not directly involve others’ preferences. Additionally, the time-to-think effects are expected to manifest within a relatively short time gap (e.g., whether the respondent takes an additional 5 min to think), while the memory effects are expected to arise with longer time gaps (e.g., whether the respondent answers SP questions today or the following day). This study mainly focuses on relatively longer time gaps, which allows us to interpret the influence of the time gap as a memory, or response lag, effect.

Data collection method

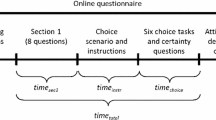

A real-time SA survey was conducted in Kumamoto and Hiroshima metropolitan areas during January and February 2020. The survey included 150 people selected from both cities who regularly pass through or visit the city center by car. Each respondent was requested to install a mobile phone application on their device, which recorded their RP behavioral histories, including travel times, for all trips made over the subsequent two weeks. The respondents were asked to fill in an RP survey before each trip to record their travel mode and purpose. During each trip, the respondent received a push notification to answer the SA question immediately, if he or she met the following three conditions: (a) using a car, (b) passing through the congestion charging area, departed from the area, or arrived at the area (Fig. 2), and (c) traveling during a specific time of day (from 6:00 to 19:00). This push notification allows respondents to answer the question in real-time. The SP survey recorded two distinct time points: the moment the participant pressed the button upon concluding the trip and the time when the SP response was successfully submitted. In this study, “response lag” is defined as the time interval between these two recorded instances.
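The notification rule and the definition of response lag described above can be sketched in a few lines of Python. This is an illustrative sketch, not the survey tool itself: the `Trip` class, its field names, the use of the departure time for condition (c), and the inclusive treatment of the 6:00 and 19:00 boundaries are all assumptions made here.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass
class Trip:
    """Hypothetical record of one trip (field names are assumptions)."""
    mode: str                     # travel mode reported in the RP survey
    touches_charging_area: bool   # passes through, departs from, or arrives at the area
    departure: datetime           # assumed timestamp used for the time-of-day check


def is_eligible(trip: Trip) -> bool:
    """Return True when all three push-notification conditions (a)-(c) hold."""
    in_window = time(6, 0) <= trip.departure.time() <= time(19, 0)
    return trip.mode == "car" and trip.touches_charging_area and in_window


def response_lag_minutes(trip_end_pressed: datetime, sp_submitted: datetime) -> float:
    """Response lag: minutes between the end-of-trip button press and SP submission."""
    return (sp_submitted - trip_end_pressed).total_seconds() / 60.0


# Usage with made-up timestamps.
trip = Trip(mode="car", touches_charging_area=True,
            departure=datetime(2020, 1, 20, 8, 30))
lag = response_lag_minutes(datetime(2020, 1, 20, 9, 0),
                           datetime(2020, 1, 20, 9, 45))
```

Measuring the lag from the recorded button press rather than from the notification time matches the definition given above, under which the lag starts at the end of the trip.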

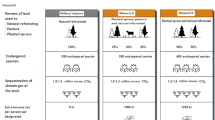

In the SP survey, participants were presented with a hypothetical scenario involving the payment of a congestion charge to enter and move around the city center. They were then asked to answer questions on behavioral changes. The SP survey displayed various combinations of attributes to the respondents on their smartphone screens, as shown in Fig. 3. The survey included four SP attributes: (1) travel time reduction, (2) basic pricing level, (3) pricing level during off-peak hours, and (4) start time of off-peak hours. Based on the above-mentioned attributes, the travel time reduction and the congestion charge were calculated using formulas in Table 1. Two different plans were utilized to compare user preferences for different pricing schemes. In plan 1, the congestion charge was set randomly, while in plan 2, the congestion charge was calculated based on travel time in the congestion charging area (see Table 1). The respondents were divided into two user groups, and both the congestion pricing schemes were implemented for each group during different time periods of the survey, as shown in Fig. 4. The survey included six alternative options for behavioral change: (1) keep the present trip under congestion pricing scheme, (2) cancel the trip, (3) change the time of day, (4) change the destination, (5) change the travel mode, and (6) change the route.

Figure 3 shows an example of an SP choice scenario presented to the user in Hiroshima (translated from Japanese to English in the figure) after completing their trip. The question is presented to them via a push notification once their trip is completed. In this particular example, the congestion charge was set at 500 JPY, travel time reduction would be 6 min (30 min of total travel time), and they made the trip between 09:00–16:00. The respondents were then asked to choose from the six alternatives mentioned above.

After data cleaning, we included a total of 1846 SA responses in the subsequent analysis. With 150 respondents in the study, each participant answered SA questions an average of 12.3 times, ranging from a minimum of one response to a maximum of 46 responses. The missing rate for SA questions stands at 1.96%, which is quite low, and this can be attributed to the incentives provided. Note that in some cases, respondents answered SA questions for trips on the way to the office and on the way back from the office. In such instances, we expect these two behavioral adaptations to be consistent. For example, if a respondent chose to use a car on the way to the office, they should ideally not choose a different travel mode on the way back. While checking this inconsistency could serve as a useful quality check for the data, we were unable to implement it due to differences in attribute levels presented to respondents between these two choice contexts. This made it challenging to assess inconsistency simply by comparing the choice results. In the future, to ensure the consistency of SA answers, attribute levels could be set for each trip chain rather than for each individual trip.

The results of descriptive analysis

Table 2 presents the distribution of SP responses, which can be categorized into unchanged and changed options. In one-third of SP scenarios, respondents chose “unchanged”, indicating their intention to continue the current trip and pay the congestion charge. Meanwhile, in the remaining two-thirds of SP scenarios, respondents chose “changed”, which included five different behavioral changes. These results indicate that the congestion pricing scheme has a non-negligible impact on travel behavior. Among the travelers who opted to change their behavior, a relatively small number of people chose “cancel the trip” (2nd alternative) and “change the destination” (4th alternative). This could be attributed to the challenges involved in cancelling or altering plans for commuting and business trips, as they would require significant adjustments to schedules. Conversely, respondents who chose “change the route” (6th alternative) formed the largest group, which implies that travelers tend to keep the original departure time, destination, and travel mode, but change their route to avoid the congestion charge.

Figure 5 shows the relationship between SP choices and trip purpose. Respondents with duty purpose (business trips) showed the highest percentage (42.4%) for the 1st alternative, i.e., “no behavior change and pay the congestion charge”. Meanwhile, respondents traveling for commute purpose had the lowest percentage of selecting the 1st alternative (30.8%). This suggests that business travelers find it more difficult to change their travel behavior patterns (routes and travel modes) and prefer to pay the congestion charge instead. On the other hand, commuters avoid the charge by changing their travel behavior more often than travelers with other purposes.

Figure 6 shows the relationship between SP choices and the levels of congestion charge. It was observed that as the congestion charge increased, the percentage of users choosing to pay the charge and continue with their current behavior (i.e., “no behavior change and pay the congestion charge”) decreased. Additionally, when the congestion charge level was less than 100 JPY, the percentage of people who chose to pay the charge was the highest (50.6%).

Figure 7 shows the relationship between SP choices and response lag. Note that we categorized the value of response lag for creating the figures (while a continuous value of response lag was used for model estimation). It was observed that a longer response lag corresponded to a higher percentage of respondents choosing the 1st alternative (“no behavior change and pay the congestion charge”). This suggests that a longer response lag may diminish the impact of the congestion charge, indicating the presence of potential systematic bias in stated preferences.

Modeling framework

To test the three hypotheses mentioned in section “Introduction”, we utilize the data from the SA survey to model behavioral changes resulting from the introduction of a congestion pricing scheme. Although the survey presented six adaptation options to the respondents, for the purpose of modeling the behavioral change, we merged them into three alternatives based on the cost of scheduling adjustment: (1) “no behavioral change”, which is used as a base alternative, (2) “change the route”, and (3) “other behavior change”, which includes options 2–5 (cancel the trip, change the time of day, change the destination, and change the travel mode). The 3rd alternative entails a higher schedule adjustment cost, while the 2nd alternative (change the route) incurs a relatively lower schedule adjustment cost. Because the real-time SA survey covers multiple trips made by the same respondent, it yields multiple SP answers per respondent, which are likely to be correlated. To account for this correlation, we employ a panel mixed logit model (MXL model) and compare its estimation results with those of a multinomial logit model (MNL model). Note that, instead of merging the alternatives into three groups as mentioned above, we could also remove the 2nd and 4th survey options (cancel the trip and change the destination) from the analysis. These options were chosen by fewer than 3% of the respondents. However, we decided against this approach because removing those options from the choice set could potentially lead to an underestimation of the number of respondents who selected options involving a higher cost of scheduling adjustment.
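The merging of the six survey options into three modeled alternatives can be illustrated as a simple lookup. The label strings and function names below are hypothetical and chosen only for readability; the grouping itself follows the description above.

```python
from collections import Counter

# Survey option number -> merged alternative used in the models.
# Label strings are hypothetical names introduced for this sketch.
MERGED = {
    1: "no_change",     # keep the present trip and pay the charge (base alternative)
    2: "other_change",  # cancel the trip
    3: "other_change",  # change the time of day
    4: "other_change",  # change the destination
    5: "other_change",  # change the travel mode
    6: "route_change",  # change the route
}


def merge_choices(choices):
    """Map a sequence of survey option numbers (1-6) to merged alternatives."""
    return [MERGED[c] for c in choices]


def shares(choices):
    """Share of each merged alternative among the given responses."""
    merged = merge_choices(choices)
    counts = Counter(merged)
    return {alt: counts[alt] / len(merged)
            for alt in ("no_change", "route_change", "other_change")}


# Usage with made-up responses.
example = merge_choices([1, 6, 3, 2])
example_shares = shares([1, 6, 3, 2])
```

Keeping the rarely chosen options 2 and 4 inside the merged group, rather than dropping them, retains every response that involves a high schedule adjustment cost.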

The MNL model with response lag effects

In the formulation of the MNL model with response lag effects, the random utility function is defined as:

where \({V}_{ikj}\) represents the deterministic part of the utility function for individual \(i\)’s \(k\)-th choice scenario (for \(k\)-th trip) of alternative \(j\), and \({\varepsilon }_{ikj}\) is an error term following the standard Gumbel distribution. It is worth noting that in the SA survey, the error term (unobserved factor) would contain unobserved RP contextual information. Furthermore, the effect of such RP elements is expected to decrease as the response lag increases due to the memory gap. To account for this, we formulate the systematic utility function \({V}_{ikj}\) as follows:

where \({x}_{ikj}^{SP}\) and \({x}_{ikj}^{RP}\) are vectors of explanatory variables obtained from the SP and RP surveys, respectively. \({L}_{ik}\) is the response lag in minutes for individual \(i\) during the choice context \(k\), and \({\beta }_{j}^{SP}\) and \({\beta }_{j}^{RP}\) are vectors of coefficient parameters for the SP and RP variables, respectively. \({X}_{i}^{At}\) is a vector of explanatory variables representing individual attributes, while \({\beta }_{j}^{At}\) is a vector of coefficient parameters for \({X}_{i}^{At}\). \({\beta }_{j}^{const}\) is a constant term for each alternative, and \({\gamma }_{j}\) is the parameter coefficient for the response lag. In this research, we introduce the scale parameters \({\theta }_{ik}^{SP}\) and \({\theta }_{ik}^{RP}\) to quantify the influence of the response lag on the effects of RP and SP attributes. The scale parameters \({\theta }_{ik}^{SP}\) and \({\theta }_{ik}^{RP}\) are expressed as follows:

where \({\alpha }^{SP}\) and \({\alpha }^{RP}\) are unknown parameters to be estimated that capture the effect of the response lag on the scale parameters \({\theta }_{ik}^{SP}\) and \({\theta }_{ik}^{RP}\). Overall, by directly including the response lag as an explanatory variable and introducing scale parameters for SP and RP attributes as functions of the response lag, we aim to confirm the presence of systematic bias due to the response lag (hypothesis 1) and quantify the influence of the response lag on the effects of RP and SP attributes on the choice results (hypotheses 2 and 3).
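One specification consistent with the symbol definitions above can be written as follows. It is a sketch rather than a statement of the exact functional form: the symbol \(U_{ikj}\) for total utility, the linear entry of \(L_{ik}\), and the exponential form of the scale parameters are assumptions made here for illustration.

\[
U_{ikj} = V_{ikj} + \varepsilon_{ikj}
\]

\[
V_{ikj} = \beta_{j}^{const} + \gamma_{j} L_{ik} + \theta_{ik}^{SP}\, {\beta_{j}^{SP}}^{\prime} x_{ikj}^{SP} + \theta_{ik}^{RP}\, {\beta_{j}^{RP}}^{\prime} x_{ikj}^{RP} + {\beta_{j}^{At}}^{\prime} X_{i}^{At}
\]

\[
\theta_{ik}^{SP} = \exp\left(\alpha^{SP} L_{ik}\right), \qquad \theta_{ik}^{RP} = \exp\left(\alpha^{RP} L_{ik}\right)
\]

Under this assumed form, both scale parameters equal 1 when \(L_{ik} = 0\). A negative \(\gamma_{j}\) shifts choices towards the base alternative as the lag grows (hypothesis 1), a negative \(\alpha^{RP}\) shrinks the effect of the RP attributes (hypothesis 2), and a positive \(\alpha^{SP}\) amplifies the effect of the SP attributes (hypothesis 3).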

MXL model

In this research, we incorporate the unobserved inter-individual heterogeneity using an MXL model. The MXL model accounts for this heterogeneity through an additional error term \({\eta }_{ij}\). The random utility function is defined as:

where \({\eta }_{ij}\) is an error term that varies across alternatives and individuals, following a normal distribution with a mean of \(0\) and standard deviation of \({\sigma }_{j}\). The MXL model uses the same idiosyncratic error term \({\varepsilon }_{ikj}\) and equations for the systematic utility \({V}_{ikj}\) as the MNL model.
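To make the difference between the two models concrete, the sketch below computes MNL choice probabilities and a simulated panel MXL likelihood for one respondent. It is a minimal illustration under stated assumptions, not the estimation code used in this study: the exponential scale functions, the use of a single scalar SP and RP attribute, and every parameter value are hypothetical.

```python
import math
import random


def utilities(x_sp, x_rp, lag, p):
    """Systematic utilities [base, alt 2, alt 3]; the base utility is normalised to 0."""
    theta_sp = math.exp(p["alpha_sp"] * lag)  # assumed exponential scale for SP attributes
    theta_rp = math.exp(p["alpha_rp"] * lag)  # assumed exponential scale for RP attributes
    v = [0.0]
    for j in range(2):
        v.append(p["const"][j] + p["gamma"][j] * lag
                 + theta_sp * p["beta_sp"][j] * x_sp
                 + theta_rp * p["beta_rp"][j] * x_rp)
    return v


def mnl_probs(v):
    """Logit probabilities (softmax, shifted by the maximum for numerical stability)."""
    m = max(v)
    e = [math.exp(u - m) for u in v]
    s = sum(e)
    return [a / s for a in e]


def mxl_panel_likelihood(obs, p, sigma, draws=500, seed=0):
    """Simulated likelihood of one respondent's sequence of choices.

    obs: list of (x_sp, x_rp, lag, chosen_index). The term eta_j is drawn once
    per simulation draw and shared across all of the respondent's choices,
    which is what induces correlation within the panel.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(draws):
        eta = [0.0] + [rng.gauss(0.0, s) for s in sigma]
        like = 1.0
        for x_sp, x_rp, lag, chosen in obs:
            v = [a + b for a, b in zip(utilities(x_sp, x_rp, lag, p), eta)]
            like *= mnl_probs(v)[chosen]
        total += like
    return total / draws


# Hypothetical parameter values (lag in minutes); not estimates from this study.
params = {"const": [0.5, 0.2], "gamma": [-0.0002, -0.0003],
          "beta_sp": [0.002, 0.003], "beta_rp": [0.4, 0.6],
          "alpha_sp": 0.00005, "alpha_rp": -0.0002}
obs = [(500.0, 1.0, 5.0, 0), (300.0, 0.0, 1440.0, 1)]
likelihood = mxl_panel_likelihood(obs, params, sigma=[1.0, 1.0])
```

Setting both standard deviations in `sigma` to zero removes the individual-specific term, so the simulated likelihood reduces to the product of the MNL probabilities.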

Estimation results

The explanatory variables representing RP attributes (\({x}_{ikj}^{RP}\)) and individual attributes (\({x}_{i}^{At}\)) are shown in Table 3. Since the primary focus of this study was to examine the influence of response lag on SP choices and its moderation on the effects of RP and SP variables, only two dummy variables representing individual attributes were included in the models, specifically to test the impact of age and income (one dummy variable for each attribute). Meanwhile, SP attributes (\({x}_{ikj}^{SP}\)) consist of the congestion charge (JPY) and time saving (min) variables, as explained in section “Data collection method”.

The estimation results are shown in Table 4 for the MNL model and Table 5 for the MXL model. Comparing the two models, the MXL model achieves a higher final log-likelihood and a higher adjusted \({\rho }^{2}\) value, indicating a better fit. This also suggests that unobserved heterogeneity among individuals is likely to be present.

The estimation results reveal two major findings. Firstly, as shown in Tables 4 and 5, the parameter representing the response lag (\({\gamma }_{j}\)) is significant with a negative sign for alternatives 2–5 (cancel the trip, change the time of day, change the destination, and change the travel mode), as well as alternative 6 (change the route) in both the MNL and MXL models. This implies that the respondents are more likely to choose alternative 1 (no behavioral change) as the response lag increases. This tendency suggests that the response lag introduces systematic bias in the SP data and diminishes the impact of the congestion pricing scheme on the respondents’ choices. Secondly, the results of both models show that the estimated parameters \({\alpha }^{SP}\) and \({\alpha }^{RP}\) are positive and negative, respectively. This indicates that when the response lag is large, the SP attributes have a larger impact on the respondents’ choices, while the RP attributes have a smaller impact. \({\alpha }^{RP}\) is statistically significant in the MNL model, whereas \({\alpha }^{SP}\) was not statistically significant. These estimation results are therefore consistent with the three hypotheses presented in section “Introduction” regarding the impact of response lag, although the evidence for the third hypothesis rests on the sign of \({\alpha }^{SP}\) only.

To visualize the effect of the response lag on the contribution of SP attributes, RP attributes, individual attributes, and the error term towards the total variance of utility differences, we employed the variance decomposition (Chikaraishi et al. 2010) and represented it graphically. The variance decomposition is a useful tool for separating the contributions of different sets of explanatory variables to the total variance of the utility difference between an alternative and the base alternative. In this study, we used this method to analyze how the contribution of each set of attributes changes with the response lag. Figure 8 illustrates the results for MNL, where the vertical axis shows the proportion of variance attributed to SP attributes, RP attributes, individual attributes (At), and the error term, with the sum set to 100. The results for MXL, which also include the error term for individual heterogeneity (\({\eta }_{ij}\)), are presented in Fig. 9. It is worth noting that the error term (white noise) may include unobserved RP contextual factors, and thus we anticipate that its contribution will decrease as the response lag increases. Our formulation can capture such changes in the relative contribution of the error term. Specifically, we can test whether, as the response lag increases, respondents tend to pay less attention to contextual factors that are mainly captured by the error term, resulting in a lower contribution of unobserved factors (see Appendix A). The horizontal axis of both figures represents the response lag, indicating the time elapsed between presenting the SP survey questions to the users and their actual response (1 min, 1 h, 3 h, 1 day, 3 days, and 10 days).
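The idea behind these figures can be sketched numerically. The code below is a simplified illustration and not the exact procedure of Chikaraishi et al. (2010): it assumes exponential scale functions of the response lag, ignores covariances between the components, takes \({\pi }^{2}/3\) as the variance of the difference between two standard Gumbel error terms, and uses made-up component variances and parameter values. The term \({\gamma }_{j}{L}_{ik}\) is constant for a given lag and therefore does not contribute to the variance.

```python
import math

# Lag values on the horizontal axis of the figures, in minutes.
LAGS_MIN = {"1 min": 1, "1 hour": 60, "3 hours": 180,
            "1 day": 1440, "3 days": 4320, "10 days": 14400}


def variance_shares(lag, var_sp, var_rp, var_at, alpha_sp, alpha_rp,
                    var_err=math.pi ** 2 / 3):
    """Percentage of utility-difference variance per component at a given lag.

    var_sp, var_rp, var_at: variances of the unscaled SP, RP, and individual
    attribute components (hypothetical inputs). Scaling a component by
    exp(alpha * lag) multiplies its variance by exp(2 * alpha * lag).
    """
    comp = {
        "SP": math.exp(2 * alpha_sp * lag) * var_sp,
        "RP": math.exp(2 * alpha_rp * lag) * var_rp,
        "At": var_at,
        "error": var_err,
    }
    total = sum(comp.values())
    return {name: 100 * value / total for name, value in comp.items()}


# Hypothetical inputs; these are not estimates from this study.
for label, lag in LAGS_MIN.items():
    s = variance_shares(lag, var_sp=2.0, var_rp=0.5, var_at=0.3,
                        alpha_sp=5e-5, alpha_rp=-2e-4)
    print(label, {name: round(value, 1) for name, value in s.items()})
```

With a positive \({\alpha }^{SP}\) and a negative \({\alpha }^{RP}\), the SP share rises and the RP share falls as the lag grows, which is the qualitative pattern the decomposition is meant to reveal.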

With regard to the proportions of variance explained by different variables, both MNL and MXL models showed that RP attributes accounted for a relatively small proportion compared to SP attributes. As the response lag increased, for all alternatives the proportion of SP attributes increased, while the proportions of RP attributes, individual attributes, and error terms decreased. We also observed that, with “pay the fee and perform the same action as the current one” as the base alternative, the contribution of SP attributes to the variance was larger for alternatives 2–5 (cancel the trip, change the time of day, change the destination, and change the travel mode) than for alternative 6 (change the route). These findings suggest that respondents tend to consider the RP context more when responding to SP questions with shorter response lags. Additionally, the contribution of the unobserved variables, which might include other unobserved RP contextual factors, also tends to decrease as the response lag increases. These findings highlight the importance of real-time responses in encouraging respondents to consider the RP context effectively. Finally, it was observed that the effect of the error term representing unobserved inter-individual heterogeneity (\({\eta }_{ij}\)) is larger than the effect of the idiosyncratic error term representing unobserved inter-trip heterogeneity (\({\varepsilon }_{ikj}\)).

Note that the RP attribute may act as a covariate that influences both the response lag and the choice result, which can bias the estimation results (Hoshino 2009). To address this issue and ensure the robustness of our findings, we conducted an additional estimation that accounts for the impact of RP contextual covariates using propensity scores. The method and estimation results can be found in Appendix B. Overall, the results suggest that although there are some differences in the estimated parameters, the effect of response lag on the choice outcome and the signs of the parameters \({\alpha }^{SP}\) and \({\alpha }^{RP}\) are consistent with the results obtained without considering the propensity score.

In summary, our findings highlight that when the response lag is large, the SP attributes have a larger impact on the respondents’ choices, while the RP attributes have a smaller impact, as shown in Tables 4 and 5. Likewise, Figs. 8 and 9 show that as the response lag increased, the contribution of SP attributes increased for all alternatives, while the contribution of RP attributes decreased, thus providing support for the hypotheses presented in the Introduction.

Conclusions

In this research, we evaluated the impact of response lag on choice behavior using data from real-time SA surveys conducted in Kumamoto and Hiroshima metropolitan areas in January and February 2020. The findings of this research support the hypotheses formulated in section “Introduction”. The key findings can be summarized as follows:

1. The discrete choice models show a significant negative impact of response lag on the choice of other alternatives (including cancel the trip, change the time of day, change the destination, and change the travel mode) and of route change, relative to paying the congestion charge. These findings support the first hypothesis, which suggests that a longer response lag leads to a larger systematic bias in the choice results.

2. The analysis of variance decomposition provided insights into the changes in the contribution of different attributes with varying response lag. The results support the second hypothesis, indicating that as the response lag increases, the impact of RP attributes on the choice result tends to decrease.

3. Finally, it was observed that as the response lag increases, the impact of SP attributes on the choice result tends to increase, supporting the third hypothesis.

Based on the results of the study, it can be argued that a longer response lag in SA surveys leads respondents to place more emphasis on the SP attributes than on the RP context. This introduces biases in their responses and in the subsequent policy implications. For example, the results show that even under a high congestion charge, respondents often chose not to change their behavior when the response lag was large. Such findings may encourage policy-makers to implement pricing schemes, but this study confirms that the systematic bias in responses increases with an increase in response lag. Typically, the decision-making process in congestion pricing schemes is likely to take place well before the start of the trip. This is especially true for regular daily commute trips, as users can prepare for a behavior change more carefully while taking the congestion charge into consideration. However, in certain scenarios the users’ decision-making processes may be similar to that of the SP survey in this study, and real-time responses may be effective. These scenarios include non-daily or non-regular trips, situations in which travelers do not have prior information about the congestion charge, dynamic application of the congestion charge, and the implementation of dynamic incentive systems such as incenTrip (https://incentrip.org).

This study has some limitations and a number of remaining tasks. Firstly, we measured systematic bias through the estimated parameter for the response lag variable. Ideally, systematic bias should be measured by comparing actual with predicted choices. However, as that was not possible in this study, we formulated a hypothesis based on estimated model parameters. Secondly, future studies should try to estimate the model considering trip chaining, since respondents may answer the SP questions collectively (i.e., after the completion of all trips in a trip chain) and the response lag may be large if the trip chain is complex. Another interesting future research agenda is to handle the memory effects and time-to-think effects discussed in section “Literature review” simultaneously. To do that, we must observe three different timings: (1) the time the push notification is sent, (2) the time a respondent starts answering the question, and (3) the time a respondent finishes answering the question. Unfortunately, only (1) and (3) were observed in this study. In the future, it would be worth designing a survey in which all three timings are observed.

The results of this research, which demonstrate the influence of response lag on SP responses, hold valuable implications for the development of transportation survey applications and the formulation of reliable transportation policies. Survey applications can be designed to encourage and incentivize lower response lags, prompting users to consider their real contexts when responding to SP questions. This approach would help mitigate the biases associated with SP surveys. To the authors’ knowledge, there are presently no commercial applications capable of integrating real-time SP questions into smartphone-based travel diary surveys. Developing such an application would be crucial for obtaining more reliable SP data.

References

Arentze, T., Borgers, A., Timmermans, H., DelMistro, R.: Transport stated choice responses: effects of task complexity, presentation format and literacy. Transp. Res. Part E Logist. Transp. Rev. 39(3), 229–244 (2003)

Bann, C.: An overview of valuation techniques: advantages and limitations. Asean Biodiversity 2(2), 8–16 (2002)

Bech, M., Kjaer, T., Lauridsen, J.: Does the number of choice sets matter? Results from a web survey applying a discrete choice experiment. Health Econ. 20, 273–286 (2011)

Ben-Akiva, M., Morikawa, T.: Estimation of switching models from revealed preferences and stated intentions. Transp. Res. Part A 24(6), 485–495 (1990)

Börger, T.: Are fast responses more random? Testing the effect of response time on scale in an online choice experiment. Environ. Resour. Econ. 65, 389–413 (2016)

Caussade, S., Ortúzar, J., Rizzi, I.L., Hensher, D.: Assessing the influence of design dimensions on stated choice experiment estimates. Accid. Anal. Prev. 36(4), 513–524 (2004)

Chikaraishi, M., Fujiwara, A., Zhang, J., Axhausen, K.W.: Identifying variations and covariations in discrete choice models. Transportation 38(6), 993–1016 (2010)

Cook, J., Jeuland, M., Maskery, B., Whittington, D.: Giving stated preference respondents “time to think”: results from four countries. Environ. Resour. Econ. 51, 473–496 (2012)

Danaf, M., Atasoy, B., Azevedo, D.C.L., Ding-Mastera, J., Abou-Zeid, M., Cox, M., Zhao, F., Ben-Akiva, M.: Context-aware stated preferences with smartphone-based travel surveys. J. Choice Model. 31, 35–50 (2019)

Feneri, A.M., Rasouli, S., Timmermans, H.J.: Issues in the design and application of stated adaptation surveys to examine behavioral change: the example of Mobility-As-a-Service. In: Mladenović, M.N., Toivonen, T., Willberg, E., Geurs, K.T. (eds.) Transport in Human Scale Cities, pp. 96–108. Edward Elgar Publishing (2021)

Fifer, S., Rose, J., Greaves, S.: Hypothetical bias in stated choice experiments: is it a problem? And if so, how do we deal with it? Transp. Res. Part A Policy Pract. 61, 164–177 (2014)

Haaijer, R., Kamakura, W., Wedel, M.: Response latencies in the analysis of conjoint choice experiments. J. Mark. Res. 37, 376–382 (2000)

Hensher, D.: Stated preference analysis of travel choices: the state of practice. Transportation 21 (1994)

Hoshino, T.: Statistical science of survey observation data: causal inference, selection bias, and data fusion (in Japanese). Iwanami Shoten (2009)

Huynh, N.A., Chikaraishi, M., Fujiwara, A., Seya, H., Zhang, J.: Influences of tour complexity and trip flexibility on stated commuting mode: a case of mass rapid transit in Ho Chi Minh City. Asian Transp. Stud. 4(3), 536–549 (2017a)

Huynh, N.A., Chikaraishi, M., Fujiwara, A., Seya, H., Zhang, J.: Influences of pick-up/drop-off trips for children at school on parents’ commuting mode choice in Ho Chi Minh City: a stated preference approach. J. Eastern Asia Soc. Transp. Stud. 12, 652–671 (2017b)

Imai, K., van Dyk, D.A.: Causal inference with general treatment regimes. J. Am. Stat. Assoc. 99(467), 854–886 (2004)

incenTrip https://incentrip.org

Morikawa, T.: Correcting state dependence and serial correlation in the RP/SP combined estimation method. Transportation 21, 153–165 (1994)

Puspitasari, S., Varghese, V., Chikaraishi, M., Maruyama, T.: Exploring the effects of congestion pricing on travel behavior responses using real-time, context-aware, stated-preference data. J. Eastern Asia Soc. Transp. Stud. 14, 199–214 (2021)

Rose, J., Black, I.: Means matter, but variance matter too: decomposing response latency influences on variance heterogeneity in stated preference experiments. Mark. Lett. 17, 295–310 (2006)

Rose, J.M., Bliemer, M.C.J.: Constructing efficient stated choice experimental designs. Transp. Rev. 29, 587–617 (2009)

Rose, J., Bliemer, J.M., Hensher, A.D., Collins, A.: Designing efficient stated choice experiments in the presence of reference alternatives. Transp. Res. Part B Methodol. 42(4), 395–406 (2008)

Sadakane, K., Kobayashi, Y., Yamashita, I., Kusakabe, T., Asakura, Y.: Evaluation of introducing new travel mode by using probe-person-based stated-preference survey (in Japanese). In: Civil Engineering Planning Research and Proceedings (CD-ROM), vol. 42, no. 55 (2010)

Safira, M., Chikaraishi, M.: The impact of online food delivery service on eating-out behavior: a case of multi-service transport platforms (MSTPs) in Indonesia. Transportation (2022). https://doi.org/10.1007/s11116-022-10307-7

Train, K., Wilson, W.W.: Estimation on stated-preference experiments constructed from revealed-preference choices. Transp. Res. Part B Methodol. 42(3), 191–203 (2008)

Acknowledgements

The authors would like to acknowledge that a part of this research was conducted under the research project “Short-term travel demand prediction and comprehensive transport demand management”, supported by the Committee on Advanced Road Technology under the authority of the Ministry of Land, Infrastructure, Transport, and Tourism of Japan.

Author information

Contributions

The authors confirm contribution to the paper as follows: study conception and design: K. Fujiwara, V. Varghese, M. Chikaraishi, T. Maruyama; analysis and interpretation of results: K. Fujiwara, V. Varghese, M. Chikaraishi, T. Maruyama; draft manuscript preparation: K. Fujiwara, V. Varghese, M. Chikaraishi, T. Maruyama, A. Fujiwara. All authors reviewed the results and approved the final version of the manuscript. The authors do not have any conflicts of interest to declare.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: An alternative formulation for scale parameters

The first two components in Eq. (2) can be rewritten as follows:

where \({\theta }_{ik}^{\prime}={\theta }_{ik}^{SP}=\mathrm{exp}\left({\alpha }^{SP}\mathrm{ln}\left({L}_{ik}\right)\right)\), and \({\theta }_{ik}^{{\prime}{\prime}}=\frac{{\theta }_{ik}^{RP}}{{\theta }_{ik}^{SP}}=\mathrm{exp}\left(\left({\alpha }^{RP}-{\alpha }^{SP}\right)\mathrm{ln}\left({L}_{ik}\right)\right)\). This indicates that the original model, with (1) a scale parameter for RP and (2) a scale parameter for SP, can be translated into a model with (1) an overall scale parameter for both SP and RP variables and (2) a parameter that determines the relative importance of RP with respect to SP. The overall scale parameter determines the relative contribution of unobserved components. It can be expected that, as the response lag increases, respondents tend to pay less attention to contextual factors that are mainly captured by the error term, resulting in a lower contribution of unobserved factors.
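To make the rewriting concrete, the following sketch assumes that the first two components of Eq. (2) are the SP and RP parts of the systematic utility, each multiplied by its own scale parameter. The symbols \({V}_{ijk}^{SP}\) and \({V}_{ijk}^{RP}\) are hypothetical placeholders for those parts, introduced here only for illustration, and \({\theta }_{ik}^{RP}=\mathrm{exp}\left({\alpha }^{RP}\mathrm{ln}\left({L}_{ik}\right)\right)\) follows from the two definitions above. Under these assumptions, factoring out the SP scale gives:

\[
{\theta }_{ik}^{SP}{V}_{ijk}^{SP}+{\theta }_{ik}^{RP}{V}_{ijk}^{RP}
={\theta }_{ik}^{SP}\left({V}_{ijk}^{SP}+\frac{{\theta }_{ik}^{RP}}{{\theta }_{ik}^{SP}}{V}_{ijk}^{RP}\right)
={\theta }_{ik}^{\prime}\left({V}_{ijk}^{SP}+{\theta }_{ik}^{{\prime}{\prime}}{V}_{ijk}^{RP}\right)
\]

Since \(\mathrm{exp}\left(\alpha \,\mathrm{ln}\left(L\right)\right)={L}^{\alpha }\), the two parameters can also be read as \({\theta }_{ik}^{\prime}={L}_{ik}^{{\alpha }^{SP}}\) and \({\theta }_{ik}^{{\prime}{\prime}}={L}_{ik}^{{\alpha }^{RP}-{\alpha }^{SP}}\). When \({\alpha }^{RP}<{\alpha }^{SP}\), the relative importance of RP with respect to SP therefore shrinks as the response lag grows.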

Appendix B: Testing the robustness of the results using propensity scores

When estimating the model in section “Modeling framework”, it was assumed that RP attributes only influence the choice result. In reality, however, RP attributes may also affect the response lag, leading to differences in response lag depending on trip-related contextual factors. For instance, commuters may find it more challenging to respond in the morning than in the evening. To address this, the RP attribute is represented as a covariate X that affects both the response lag and the choice result, the response lag is treated as a treatment variable Z, and the choice result is treated as an outcome variable Y. Using propensity scores, the study empirically examines the influence of the RP attribute on both the response lag and the choice result.

Since the treatment variable Z (response lag) is a continuous variable in this study, the propensity score method for continuous treatments proposed by Imai and van Dyk (2004) is applied. A propensity score function is characterized by \(\theta\). Firstly, the estimated value \(\widehat{\theta }\left({\varvec{X}}\right)={{\varvec{X}}}^{T}{\varvec{\zeta}}\) is calculated by fitting a linear regression model of the treatment variable Z on the covariate X, where \({\varvec{\zeta}}\) is an unknown parameter. This estimated value serves as the propensity score. Subsequently, the data are stratified according to the estimated propensity scores. The weight for a particular response lag \(k\) in stratum \(l\), denoted as \({W}_{kl}\), is calculated by dividing the number of individuals (\({n}_{kl}\)) with that response lag in the stratum by the total number of individuals (\({N}_{l}\)) in the stratum, i.e., \({W}_{kl}={n}_{kl}/{N}_{l}\) (Eq. (B1)).

The estimation process incorporates a weighted likelihood function to account for the propensity score weights. The weight for individual \(i\) at response lag \(k\) in stratum \(l\), denoted as \({w}_{kli}\), is the reciprocal of \({W}_{kl}\) divided by the expected value of \({W}_{kl}\). This weight is used to weight the contribution of each individual to the likelihood function.

In the weighted likelihood function, \({P}_{i}(j)\) is the probability of individual \(i\) choosing alternative \(j\), and \({d}_{j}\) is a dummy variable that indicates whether individual \(i\) chose alternative \(j\). The results of the model estimation using the above equations are shown in Table 6, and the results of variance decomposition are shown in Fig. 10. The results show that \({\alpha }^{RP}\) is negative and statistically significant, indicating a similar trend to the results obtained without considering the propensity score. Similarly, the variance decomposition results are similar to those of the models that do not consider the propensity score. These results confirm that the three hypotheses are supported in this model, as they were in the case without propensity score consideration.
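The weighting steps described in this appendix can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the stratum of each observation is taken as given (the propensity score regression is omitted), the response lag is treated as a category label, the expected value of \({W}_{kl}\) is approximated by its sample mean over observations, and \({w}_{kli}\) is read literally as \((1/{W}_{kl})\) divided by that mean. The weighted log-likelihood form \(\sum_{i}{w}_{kli}\,\mathrm{ln}\,{P}_{i}(j)\) for the chosen alternative is likewise an assumed standard form. All function names and the toy data are hypothetical.

```python
import math
from collections import Counter


def stratum_weights(lags, strata):
    """W_kl = n_kl / N_l: share of observations in stratum l with lag category k."""
    n_kl = Counter(zip(lags, strata))
    n_l = Counter(strata)
    return {(k, l): n / n_l[l] for (k, l), n in n_kl.items()}


def individual_weights(lags, strata):
    """w_kli = (1 / W_kl) / E[W_kl], with E[W_kl] taken as the sample mean (assumption)."""
    big_w = stratum_weights(lags, strata)
    per_obs = [big_w[(k, l)] for k, l in zip(lags, strata)]
    expected_w = sum(per_obs) / len(per_obs)
    return [(1.0 / w) / expected_w for w in per_obs]


def weighted_log_likelihood(weights, chosen_probs):
    """Sum of w_i * ln P_i(chosen alternative) over observations (assumed form)."""
    return sum(w * math.log(p) for w, p in zip(weights, chosen_probs))


# Toy data: six observations, two strata, two lag categories (all hypothetical).
toy_lags = ["short", "short", "long", "short", "long", "long"]
toy_strata = [0, 0, 0, 1, 1, 1]
toy_probs = [0.6, 0.5, 0.7, 0.4, 0.8, 0.5]  # model probabilities of the chosen alternatives

toy_weights = individual_weights(toy_lags, toy_strata)
print(toy_weights)
print(weighted_log_likelihood(toy_weights, toy_probs))
```

Observations whose lag category is rare within their stratum receive larger weights, which is how the weighting balances the distribution of response lags across strata with different RP contexts.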

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fujiwara, K., Varghese, V., Chikaraishi, M. et al. Does response lag affect travelers’ stated preference? Evidence from a real-time stated adaptation survey. Transportation (2023). https://doi.org/10.1007/s11116-023-10435-8

DOI: https://doi.org/10.1007/s11116-023-10435-8