Abstract

The StochAstic Recursive grAdient algoritHm (SARAH) is a variance-reduced variant of stochastic gradient descent that periodically requires a full gradient of the objective function. In this paper, we remove the need for full gradient computations. This is achieved by using a random reshuffling strategy and aggregating the stochastic gradients obtained in each epoch. The aggregated stochastic gradients serve as an estimate of the full gradient in the SARAH algorithm. We provide a theoretical analysis of the proposed approach and conclude the paper with numerical experiments that demonstrate its efficiency.
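A minimal sketch of the idea, in plain Python so it runs without dependencies. Several details are assumptions made for illustration and are not a transcription of the paper's Algorithm 1: the least-squares components \(f_i(w) = \frac{1}{2}(a_i^\top w - b_i)^2\), the in-epoch recursion \(v^i_s = v^{i-1}_s + \frac{1}{n}(\nabla f_{\pi^i_s}(w^i_s) - \nabla f_{\pi^i_s}(w^0_s))\), the \(n+1\) steps per epoch, and a single full gradient used only to initialize \(v_0\). The part that mirrors the text is the key point: every later epoch starts from the average of the stochastic gradients collected in the previous epoch, \(v_s = \frac{1}{n}\sum_{i=1}^n \nabla f_{\pi^i_{s-1}}(w^i_{s-1})\), instead of a fresh full gradient. The names `grad_fi` and `shuffled_sarah` are hypothetical.

```python
import random


def grad_fi(w, a, b):
    """Gradient of the illustrative component f_i(w) = 0.5 * (a.w - b)^2."""
    r = sum(ak * wk for ak, wk in zip(a, w)) - b
    return [r * ak for ak in a]


def shuffled_sarah(data, w0, eta, epochs, seed=0):
    """Sketch of Shuffled-SARAH under the assumptions stated above.

    data: list of (a_i, b_i) pairs; P(w) = (1/n) * sum_i f_i(w).
    Returns the final iterate.
    """
    rng = random.Random(seed)
    n, d = len(data), len(w0)
    w = list(w0)
    # Assumption: v_0 is one full gradient; no later epoch computes one.
    v = [0.0] * d
    for a, b in data:
        v = [vk + gk / n for vk, gk in zip(v, grad_fi(w, a, b))]
    for _ in range(epochs):
        perm = list(range(n))
        rng.shuffle(perm)                      # random reshuffling of [n]
        w_ref = list(w)                        # w_s^0 = w_s
        w = [wk - eta * vk for wk, vk in zip(w, v)]   # w_s^1 = w_s^0 - eta * v_s
        agg = [0.0] * d                        # (1/n) * sum_i grad f_{pi^i}(w_s^i)
        for i in perm:
            a, b = data[i]
            g_new = grad_fi(w, a, b)           # component gradient at w_s^i
            g_ref = grad_fi(w_ref, a, b)       # same component at w_s^0
            # assumed recursive (SARAH-style) estimator update
            v = [vk + (gn - gr) / n for vk, gn, gr in zip(v, g_new, g_ref)]
            agg = [sk + gn / n for sk, gn in zip(agg, g_new)]
            w = [wk - eta * vk for wk, vk in zip(w, v)]   # w_s^{i+1}
        # The aggregated stochastic gradients replace the full gradient.
        v = agg
    return w
```

For a consistent system such as `data = [([1.0, 0.0], 1.0), ([0.0, 1.0], 2.0), ([1.0, 1.0], 3.0)]` (minimizer \((1, 2)\)), calling `shuffled_sarah(data, [0.0, 0.0], eta=0.005, epochs=4000)` should return a point very close to \((1, 2)\), even though no full gradient is evaluated after initialization.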

References

Ahn, K., Yun, C., Sra, S.: SGD with shuffling: optimal rates without component convexity and large epoch requirements. Adv. Neural Inf. Process. Syst. 33, 17526–17535 (2020)

Allen-Zhu, Z.: Katyusha: the first direct acceleration of stochastic gradient methods. In: Proceedings of the 49th Annual ACM SIGACT Symposium on Theory of Computing, pp. 1200–1205 (2017)

Allen-Zhu, Z., Yuan, Y.: Improved SVRG for non-strongly-convex or sum-of-non-convex objectives. In: International Conference on Machine Learning, pp. 1080–1089. PMLR (2016)

Bengio, Y.: Practical recommendations for gradient-based training of deep architectures. In: Neural Networks: Tricks of the Trade, 2nd ed., pp. 437–478. Springer (2012)

Bottou, L.: Curiously fast convergence of some stochastic gradient descent algorithms. In: Proceedings of the Symposium on Learning and Data Science, Paris, vol. 8, pp. 2624–2633. Citeseer (2009)

Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. SIAM Rev. 60(2), 223–311 (2018)

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. TIST 2(3), 1–27 (2011)

Cohen, M., Diakonikolas, J., Orecchia, L.: On acceleration with noise-corrupted gradients. In: International Conference on Machine Learning, pp. 1019–1028. PMLR (2018)

Cutkosky, A., Orabona, F.: Momentum-based variance reduction in non-convex SGD. arXiv preprint arXiv:1905.10018 (2019)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. In: Advances in Neural Information Processing Systems, pp. 1646–1654 (2014)

Fang, C., Li, C.J., Lin, Z., Zhang, T.: SPIDER: near-optimal non-convex optimization via stochastic path integrated differential estimator. arXiv preprint arXiv:1807.01695 (2018)

Ghadimi, S., Lan, G., Zhang, H.: Mini-batch stochastic approximation methods for nonconvex stochastic composite optimization. Math. Program. 155(1–2), 267–305 (2016)

Gurbuzbalaban, M., Ozdaglar, A., Parrilo, P.A.: On the convergence rate of incremental aggregated gradient algorithms. SIAM J. Optim. 27(2), 1035–1048 (2017)

Hendrikx, H., Xiao, L., Bubeck, S., Bach, F., Massoulie, L.: Statistically preconditioned accelerated gradient method for distributed optimization. In: International Conference on Machine Learning, pp. 4203–4227. PMLR (2020)

Hu, W., Li, C.J., Lian, X., Liu, J., Yuan, H.: Efficient smooth non-convex stochastic compositional optimization via stochastic recursive gradient descent (2019)

Huang, X., Yuan, K., Mao, X., Yin, W.: An improved analysis and rates for variance reduction under without-replacement sampling orders. In: Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W. (eds.) Advances in Neural Information Processing Systems, vol. 34, pp. 3232–3243. Curran Associates Inc, Red Hook (2021)

Jain, P., Nagaraj, D., Netrapalli, P.: SGD without replacement: sharper rates for general smooth convex functions. arXiv preprint arXiv:1903.01463 (2019)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. Adv. Neural Inf. Process. Syst. 26, 315–323 (2013)

Khaled, A., Richtárik, P.: Better theory for SGD in the nonconvex world. arXiv preprint arXiv:2002.03329 (2020)

Koloskova, A., Doikov, N., Stich, S.U., Jaggi, M.: Shuffle SGD is always better than SGD: improved analysis of SGD with arbitrary data orders. arXiv preprint arXiv:2305.19259 (2023)

Li, B., Ma, M., Giannakis, G.B.: On the convergence of SARAH and beyond. In: International Conference on Artificial Intelligence and Statistics, pp. 223–233. PMLR (2020)

Li, Z., Bao, H., Zhang, X., Richtárik, P.: PAGE: a simple and optimal probabilistic gradient estimator for nonconvex optimization. In: International Conference on Machine Learning, pp. 6286–6295. PMLR (2021)

Li, Z., Richtárik, P.: ZeroSARAH: efficient nonconvex finite-sum optimization with zero full gradient computation. arXiv preprint arXiv:2103.01447 (2021)

Liu, D., Nguyen, L.M., Tran-Dinh, Q.: An optimal hybrid variance-reduced algorithm for stochastic composite nonconvex optimization. arXiv preprint arXiv:2008.09055 (2020)

Mairal, J.: Incremental majorization-minimization optimization with application to large-scale machine learning. SIAM J. Optim. 25(2), 829–855 (2015)

Malinovsky, G., Sailanbayev, A., Richtárik, P.: Random reshuffling with variance reduction: new analysis and better rates. arXiv preprint arXiv:2104.09342 (2021)

Mishchenko, K., Khaled Ragab Bayoumi, A., Richtárik, P.: Random reshuffling: simple analysis with vast improvements. Adv. Neural Inf. Process. Syst. 33 (2020)

Mokhtari, A., Gurbuzbalaban, M., Ribeiro, A.: Surpassing gradient descent provably: a cyclic incremental method with linear convergence rate. SIAM J. Optim. 28(2), 1420–1447 (2018)

Moulines, E., Bach, F.: Non-asymptotic analysis of stochastic approximation algorithms for machine learning. In: Shawe-Taylor, J., Zemel, R., Bartlett, P., Pereira, F., Weinberger, K. (eds.) Advances in Neural Information Processing Systems, vol. 24. Curran Associates Inc, Red Hook (2011)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course, vol. 87. Springer, New York (2003)

Nguyen, L.M., Liu, J., Scheinberg, K., Takáč, M.: SARAH: a novel method for machine learning problems using stochastic recursive gradient. In: International Conference on Machine Learning, pp. 2613–2621. PMLR (2017)

Nguyen, L.M., Liu, J., Scheinberg, K., Takáč, M.: Stochastic recursive gradient algorithm for nonconvex optimization. arXiv preprint arXiv:1705.07261 (2017)

Nguyen, L.M., Nguyen, P.H., Richtárik, P., Scheinberg, K., Takáč, M., van Dijk, M.: New convergence aspects of stochastic gradient algorithms. J. Mach. Learn. Res. 20, 176–1 (2019)

Nguyen, L.M., Scheinberg, K., Takáč, M.: Inexact SARAH algorithm for stochastic optimization. Optim. Methods Softw. 36(1), 237–258 (2021)

Nguyen, L.M., Tran-Dinh, Q., Phan, D.T., Nguyen, P.H., Van Dijk, M.: A unified convergence analysis for shuffling-type gradient methods. J. Mach. Learn. Res. 22(1), 9397–9440 (2021)

Park, Y., Ryu, E.K.: Linear convergence of cyclic SAGA. Optim. Lett. 14(6), 1583–1598 (2020)

Polyak, B.T.: Introduction to Optimization

Qian, X., Qu, Z., Richtárik, P.: SAGA with arbitrary sampling. In: International Conference on Machine Learning, pp. 5190–5199. PMLR (2019)

Recht, B., Ré, C.: Parallel stochastic gradient algorithms for large-scale matrix completion. Math. Program. Comput. 5(2), 201–226 (2013)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 400–407 (1951)

Schmidt, M., Le Roux, N., Bach, F.: Minimizing finite sums with the stochastic average gradient. Math. Program. 162(1–2), 83–112 (2017)

Shalev-Shwartz, S., Ben-David, S.: Understanding Machine Learning: From Theory to Algorithms. Cambridge University Press, Cambridge (2014)

Shalev-Shwartz, S., Singer, Y., Srebro, N., Cotter, A.: Pegasos: primal estimated sub-gradient solver for SVM. Math. Program. 127(1), 3–30 (2011)

Stich, S.U.: Unified optimal analysis of the (stochastic) gradient method. arXiv preprint arXiv:1907.04232 (2019)

Sun, R.Y.: Optimization for deep learning: an overview. J. Oper. Res. Soc. China 8(2), 249–294 (2020)

Sun, T., Sun, Y., Li, D., Liao, Q.: General proximal incremental aggregated gradient algorithms: Better and novel results under general scheme. Adv. Neural Inf. Process. Syst. 32 (2019)

Takáč, M., Bijral, A., Richtárik, P., Srebro, N.: Mini-batch primal and dual methods for SVMs. In: International Conference on Machine Learning, pp. 1022–1030. PMLR (2013)

Tropp, J.A.: User-friendly tail bounds for sums of random matrices. Found. Comput. Math. 12, 389–434 (2012)

Vanli, N.D., Gurbuzbalaban, M., Ozdaglar, A.: A stronger convergence result on the proximal incremental aggregated gradient method. arXiv preprint arXiv:1611.08022 (2016)

Vaswani, S., Bach, F., Schmidt, M.: Fast and faster convergence of SGD for over-parameterized models and an accelerated perceptron. In: The 22nd International Conference on Artificial Intelligence and Statistics, pp. 1195–1204. PMLR (2019)

Yang, Z., Chen, Z., Wang, C.: Accelerating mini-batch SARAH by step size rules. Inf. Sci. 558, 157–173 (2021)

Ying, B., Yuan, K., Sayed, A.H.: Variance-reduced stochastic learning under random reshuffling. IEEE Trans. Signal Process. 68, 1390–1408 (2020)

Ying, B., Yuan, K., Vlaski, S., Sayed, A.H.: Stochastic learning under random reshuffling with constant step-sizes. IEEE Trans. Signal Process. 67(2), 474–489 (2018)

Acknowledgements

The work of A. Beznosikov was supported by a grant for research centers in the field of artificial intelligence, provided by the Analytical Center for the Government of the Russian Federation in accordance with the subsidy agreement (agreement identifier 000000D730321P5Q0002) and the agreement with the Moscow Institute of Physics and Technology No. 70-2021-00138, dated November 1, 2021. This work was partially conducted while A. Beznosikov was a visiting research assistant at Mohamed bin Zayed University of Artificial Intelligence (MBZUAI).

Appendices

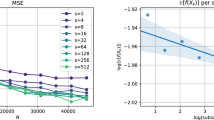

Appendix 1: Additional experimental results

Appendix 2: RR-SARAH

This algorithm (Algorithm 2) is a modification of the original SARAH that uses random reshuffling. Unlike Algorithm 1, it computes the full gradient \(\nabla P\) at the start of every epoch.
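The sketch below runs one RR-SARAH epoch in plain Python and checks an identity that explains why aggregated stochastic gradients are a natural stand-in for the full gradient. It assumes the same illustrative recursion as elsewhere, \(v^i_s = v^{i-1}_s + \frac{1}{n}(\nabla f_{\pi^i_s}(w^i_s) - \nabla f_{\pi^i_s}(w^0_s))\) started from \(v^0_s = \nabla P(w_s)\), and least-squares components \(f_i(w) = \frac{1}{2}(a_i^\top w - b_i)^2\); both are assumptions, and `grad_fi` and `rr_sarah_epoch` are hypothetical names.

```python
import random


def grad_fi(w, a, b):
    """Gradient of the illustrative component f_i(w) = 0.5 * (a.w - b)^2."""
    r = sum(ak * wk for ak, wk in zip(a, w)) - b
    return [r * ak for ak in a]


def rr_sarah_epoch(data, w, eta, rng):
    """One RR-SARAH epoch (sketch). Returns (w_next, v_last, agg)."""
    n, d = len(data), len(w)
    w_ref = list(w)                            # w_s^0 = w_s
    # RR-SARAH starts the epoch from the full gradient v_s^0 = grad P(w_s).
    v = [0.0] * d
    for a, b in data:
        v = [vk + gk / n for vk, gk in zip(v, grad_fi(w_ref, a, b))]
    perm = list(range(n))
    rng.shuffle(perm)                          # fresh permutation pi_s
    w = [wk - eta * vk for wk, vk in zip(w_ref, v)]
    agg = [0.0] * d                            # (1/n) * sum_i grad f_{pi^i}(w_s^i)
    for i in perm:
        a, b = data[i]
        g_new, g_ref = grad_fi(w, a, b), grad_fi(w_ref, a, b)
        v = [vk + (gn - gr) / n for vk, gn, gr in zip(v, g_new, g_ref)]
        agg = [sk + gn / n for sk, gn in zip(agg, g_new)]
        w = [wk - eta * vk for wk, vk in zip(w, v)]
    return w, v, agg
```

Under the assumed recursion, `v_last` and `agg` agree up to floating-point rounding: because \(\pi_s\) visits every component exactly once, the terms \(\nabla f_{\pi^i_s}(w^0_s)\) sum to \(n\nabla P(w_s)\) and cancel the starting full gradient, leaving \(v^n_s = \frac{1}{n}\sum_{i=1}^n \nabla f_{\pi^i_s}(w^i_s)\). That average is exactly the quantity Shuffled-SARAH can reuse at the next epoch without evaluating \(\nabla P\).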

Theorem 2

Suppose that Assumption 1 holds. Consider RR-SARAH (Algorithm 2) with the choice of \(\eta\) such that

Then, we have

Corollary 2

Fix \(\varepsilon\) and run RR-SARAH with \(\eta\) from (6). Then an \(\varepsilon\)-approximate solution (in the sense \(P(w) - P^* \le \varepsilon\)) is obtained after

Appendix 3: Missing proofs for Sect. 3 and “Appendix 2”

Before starting the proofs, we note that \(\delta\)-similarity from Assumption 1 gives \((\delta /2)\)-smoothness of the function \((f_i - P)\) for any \(i \in [n]\). Since \(f_i - f_j = (f_i - P) - (f_j - P)\), this implies \(\delta\)-smoothness of the function \((f_i - f_j)\) for any \(i,j \in [n]\):
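Reading "smooth" in the usual sense of a Lipschitz-continuous gradient, the passage from \((\delta/2)\)-smoothness of \(f_i - P\) to \(\delta\)-smoothness of \(f_i - f_j\) is one application of the triangle inequality. For any \(x, y\):

```latex
\begin{align*}
\|\nabla (f_i - f_j)(x) - \nabla (f_i - f_j)(y)\|
  &= \bigl\|[\nabla (f_i - P)(x) - \nabla (f_i - P)(y)] - [\nabla (f_j - P)(x) - \nabla (f_j - P)(y)]\bigr\| \\
  &\le \|\nabla (f_i - P)(x) - \nabla (f_i - P)(y)\| + \|\nabla (f_j - P)(x) - \nabla (f_j - P)(y)\| \\
  &\le \tfrac{\delta}{2}\|x - y\| + \tfrac{\delta}{2}\|x - y\| = \delta\,\|x - y\| .
\end{align*}
```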

Next, for simplicity, we introduce additional notation. For Algorithm 1 at iteration (epoch) \(s \ne 0\), the update rule can be written as

This notation is used throughout the proofs below. For Algorithm 2, the same notation applies with \(v_s = v^0_s = \nabla P(w_s)\).

Lemma 1

Under Assumption 1, for Algorithms 1 and 2 with \(\eta\) from (5) the following holds

Proof

Using L-smoothness of function P (Assumption 1 (i)), we have
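The L-smoothness step rests on the standard quadratic upper bound \(P(y) \le P(x) + \langle \nabla P(x), y - x\rangle + \frac{L}{2}\Vert y - x\Vert ^2\). As an illustration only, assume (hypothetically, since the display is not reproduced here) that one epoch is written as \(w_{s+1} = w_s - \gamma d_s\) with \(\gamma = \eta (n+1)\) and \(d_s = \frac{1}{n+1}\sum _{i=0}^{n} v^i_s\). Combining the bound with the identity \(-\langle a, d\rangle = \frac{1}{2}(\Vert a - d\Vert ^2 - \Vert a\Vert ^2 - \Vert d\Vert ^2)\) gives the expansion below; its \(\Vert d_s\Vert ^2\) term is nonpositive exactly when \(\gamma \le 1/L\), that is, \(\eta \le \frac{1}{(n+1)L}\).

```latex
\begin{align*}
P(w_{s+1}) &\le P(w_s) + \langle \nabla P(w_s),\, w_{s+1} - w_s\rangle + \tfrac{L}{2}\|w_{s+1} - w_s\|^2 \\
&= P(w_s) - \gamma\langle \nabla P(w_s),\, d_s\rangle + \tfrac{L\gamma^2}{2}\|d_s\|^2 \\
&= P(w_s) - \tfrac{\gamma}{2}\|\nabla P(w_s)\|^2 - \tfrac{\gamma}{2}(1 - L\gamma)\|d_s\|^2
   + \tfrac{\gamma}{2}\|\nabla P(w_s) - d_s\|^2 .
\end{align*}
```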

Since \(\eta \le \frac{1}{8nL} \le \frac{1}{(n+1)L}\) (the second inequality holds because \(8n \ge n+1\) for every \(n \ge 1\)), we get

This completes the proof. \(\square\)

Lemma 2

Under Assumption 1, for Algorithms 1 and 2 the following holds

Proof

We first prove that, for any \(k = n, \ldots , 0\), the following holds:

We proceed by induction. For \(k = n\), we have \(\sum _{i=n}^{n} v^i_s = v^n_s\). Suppose (11) holds for k; we prove it for \(k-1\):

Here we additionally used (9). This completes the proof of (11). In particular, (11) with \(k = 0\) gives

Using \(\Vert a + b\Vert ^2 \le 2\Vert a\Vert ^2 + 2\Vert b\Vert ^2\), we get

Again using mathematical induction, we prove for \(k = n, \ldots , 1\) the following estimate:

For \(k = n\), the statement holds trivially. Suppose (13) holds for k; we prove it for \(k-1\):

Using \(\Vert a + b\Vert ^2 \le (1 + c)\Vert a\Vert ^2 + (1+ 1/c)\Vert b\Vert ^2\) with \(c = k\), we have
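The weighted inequality holds for every \(c > 0\) and follows from expanding the square and bounding the cross term; \(c = 1\) recovers \(\Vert a + b\Vert ^2 \le 2\Vert a\Vert ^2 + 2\Vert b\Vert ^2\), and \(c = k\) gives the factors \(1 + k\) and \(\frac{k+1}{k}\):

```latex
\begin{align*}
\|a + b\|^2 &= \|a\|^2 + 2\langle a, b\rangle + \|b\|^2, \\
2\langle a, b\rangle &\le 2\|a\|\,\|b\| \le c\|a\|^2 + \tfrac{1}{c}\|b\|^2
  \quad\text{since } \bigl(\sqrt{c}\,\|a\| - \|b\|/\sqrt{c}\bigr)^2 \ge 0, \\
\text{hence}\quad \|a + b\|^2 &\le (1 + c)\|a\|^2 + \bigl(1 + \tfrac{1}{c}\bigr)\|b\|^2 .
\end{align*}
```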

Using \(\Vert a + b\Vert ^2 \le 2\Vert a\Vert ^2 + 2\Vert b\Vert ^2\) and Assumption 1 (\(\delta\)-similarity (7) and L-smoothness), we obtain

This completes the proof of (13). In particular, (13) with \(k = 1\) gives

With (8) and L-smoothness of function \(f_{\pi ^{1}_s}\) (Assumption 1 (i)), we have

Substituting (14) into (12) completes the proof. \(\square\)

Lemma 3

Under Assumption 1, for Algorithms 1 and 2 with \(\eta\) from (5) the following holds for \(i \in [n]\)

Proof

Convexity and L-smoothness of \(f_{\pi ^{i}_{s}}\) (Assumption 1 (i)) give (see also Theorem 2.1.5 in [30])

Since \(\eta \le \frac{1}{8nL} \le \frac{1}{2\,L}\), this completes the proof. \(\square\)
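Theorem 2.1.5 of [30] lists several equivalent characterizations of convex functions with an L-Lipschitz gradient. One of them, co-coercivity of the gradient, yields the standard non-expansiveness estimate below for any \(0 < \eta \le 2/L\), a range that contains \(\eta \le \frac{1}{2L}\). It illustrates the kind of estimate this step relies on rather than reproducing the paper's exact inequality:

```latex
\begin{align*}
&\tfrac{1}{L}\|\nabla f(x) - \nabla f(y)\|^2 \le \langle \nabla f(x) - \nabla f(y),\, x - y\rangle
  \qquad \text{(co-coercivity)}, \\
&\|x - y - \eta(\nabla f(x) - \nabla f(y))\|^2
  = \|x - y\|^2 - 2\eta\langle \nabla f(x) - \nabla f(y),\, x - y\rangle + \eta^2\|\nabla f(x) - \nabla f(y)\|^2 \\
&\qquad \le \|x - y\|^2 - \eta\Bigl(\tfrac{2}{L} - \eta\Bigr)\|\nabla f(x) - \nabla f(y)\|^2
  \le \|x - y\|^2 .
\end{align*}
```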

Proof of Theorem 2

For RR-SARAH, \(v_s = \nabla P(w_s)\); hence, by Lemma 2, we get

Combining this with Lemma 1, we obtain

Next, we bound \(\sum \nolimits _{i=1}^n \Vert w^{i}_s - w_s\Vert ^2\). By Lemma 3 and the update rule for \(w^i_s\) ((8) and (10)), we get

Hence,

With \(\eta \le \min \left\{ \frac{1}{8n L}; \frac{1}{8n^{2} \delta } \right\}\), we get

The strong convexity of P completes the proof:

\(\square\)
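The proofs close by appealing to strong convexity of P. A standard way to use it is the bound below, where \(w^*\) is the minimizer of P and \(P^* = P(w^*)\): minimizing the strong-convexity lower bound over y shows that a descent term \(-c\Vert \nabla P(w_s)\Vert ^2\) can be replaced by \(-2\mu c\,(P(w_s) - P^*)\). This is the textbook argument, given as an illustration of the step:

```latex
\begin{align*}
P^* = P(w^*) &\ge P(w) + \langle \nabla P(w),\, w^* - w\rangle + \tfrac{\mu}{2}\|w^* - w\|^2 \\
 &\ge \min_{y}\Bigl\{P(w) + \langle \nabla P(w),\, y - w\rangle + \tfrac{\mu}{2}\|y - w\|^2\Bigr\}
  = P(w) - \tfrac{1}{2\mu}\|\nabla P(w)\|^2, \\
\text{hence}\quad \|\nabla P(w)\|^2 &\ge 2\mu\bigl(P(w) - P^*\bigr).
\end{align*}
```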

Proof of Theorem 1

For Shuffled-SARAH, \(v_s = \frac{1}{n} \sum _{i=1}^{n} \nabla f_{\pi ^{i}_{s-1}} (w^{i}_{s-1})\), and therefore
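A standard bound, shown for illustration and not necessarily in the paper's exact form, explains why the term \(\sum _{i=1}^n \Vert w^{i}_{s-1} - w_s\Vert ^2\) has to be controlled. Here \(v_s\) is the average of the gradients \(\nabla f_{\pi ^{i}_{s-1}}(w^{i}_{s-1})\), and since \(\pi _{s-1}\) is a permutation of [n], \(\nabla P(w_s) = \frac{1}{n}\sum _{i=1}^n \nabla f_{\pi ^{i}_{s-1}}(w_s)\). Jensen's inequality and the L-smoothness of each \(f_i\) (Assumption 1 (i)) then give

```latex
\begin{align*}
\|v_s - \nabla P(w_s)\|^2
 &= \Bigl\|\frac{1}{n}\sum_{i=1}^{n}\bigl(\nabla f_{\pi^{i}_{s-1}}(w^{i}_{s-1}) - \nabla f_{\pi^{i}_{s-1}}(w_s)\bigr)\Bigr\|^2 \\
 &\le \frac{1}{n}\sum_{i=1}^{n}\bigl\|\nabla f_{\pi^{i}_{s-1}}(w^{i}_{s-1}) - \nabla f_{\pi^{i}_{s-1}}(w_s)\bigr\|^2
  \le \frac{L^2}{n}\sum_{i=1}^{n}\bigl\|w^{i}_{s-1} - w_s\bigr\|^2 .
\end{align*}
```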

The sum \(\sum \nolimits _{i=1}^n \Vert w^{i}_s - w_s\Vert ^2\) can be bounded in the same way as in the proof of Theorem 2. It remains to bound \(\sum _{i=1}^n \left\| w^{i}_{s-1} - w_s\right\| ^2\). Using Lemma 3 and the update rule for \(w^i_s\) ((8) and (10)), we get

Combining Lemma 1 with (15), (16), and (17), we obtain

The last step follows in the same way as (17). A small rearrangement gives

With the choice of \(\eta \le \min \left\{ \frac{1}{8n L}; \frac{1}{8n^{2} \delta } \right\}\), we have

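Again using \(\eta \le \frac{1}{8nL}\), we have \(32\,L^2 \eta ^2 n^2 \le \frac{1}{2}\); moreover, since \(n + 1 \le 2n\) and \(\mu \le L\), we have \(\frac{\eta (n+1) \mu }{2} \le \frac{\mu }{8L} \le \frac{1}{8}\). Hence \(32\,L^2 \eta ^2 n^2 \le \left( 1 - \frac{\eta (n+1) \mu }{2}\right)\), and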
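A quick numerical sanity check of the inequality \(32\,L^2 \eta ^2 n^2 \le 1 - \frac{\eta (n+1) \mu }{2}\), evaluated at the largest step allowed by \(\eta \le \min \{\frac{1}{8nL}, \frac{1}{8n^2\delta }\}\) and with \(\mu \le L\) (which holds because P is both \(\mu\)-strongly convex and L-smooth). The helpers `step_size` and `check_claim` are hypothetical names used only for this check.

```python
def step_size(n, L, delta):
    """Largest eta with eta <= min{1/(8 n L), 1/(8 n^2 delta)}."""
    return min(1.0 / (8 * n * L), 1.0 / (8 * n**2 * delta))


def check_claim(n, L, mu, delta):
    """Check 32 L^2 eta^2 n^2 <= 1 - eta (n + 1) mu / 2 at eta = step_size(...)."""
    eta = step_size(n, L, delta)
    lhs = 32 * L**2 * eta**2 * n**2   # <= 32 / 64 = 1/2 because eta <= 1/(8 n L)
    rhs = 1 - eta * (n + 1) * mu / 2  # >= 7/8 because n + 1 <= 2n and mu <= L
    return lhs <= rhs


# Sweep a grid of problem constants, always with mu <= L.
ok = all(
    check_claim(n, L, ratio * L, delta)
    for n in (1, 2, 10, 1000)
    for L in (0.5, 1.0, 100.0)
    for ratio in (1e-6, 0.5, 1.0)
    for delta in (1e-3, 1.0, 50.0)
)
print(ok)  # True
```

The grid is arbitrary; the bounds in the comments show the inequality holds with room to spare for every admissible choice, since the left-hand side never exceeds 1/2 and the right-hand side never drops below 7/8.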

The strong convexity of P completes the proof. \(\square\)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Beznosikov, A., Takáč, M. Random-reshuffled SARAH does not need full gradient computations. Optim Lett 18, 727–749 (2024). https://doi.org/10.1007/s11590-023-02081-x
