Abstract

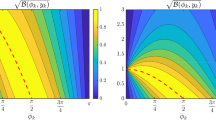

Although Anderson acceleration AA(m) is widely used to speed up nonlinear solvers, most authors use and study only its stationary version. The behavior and full potential of non-stationary Anderson acceleration methods remain open questions. Motivated by the hybrid linear solver GMRESR (GMRES Recursive), we recently proposed a set of non-stationary Anderson acceleration algorithms with dynamic window sizes, AA(m,AA(n)), for solving both linear and nonlinear problems. Our proposed algorithms show significant gains, but these gains are not well understood. In the present work, we first consider AA(m,AA(1)) for accelerating linear fixed-point iterations and derive the polynomial residual update formulas for non-stationary AA(m,AA(1)). We find that, like stationary AA(m), AA(m,AA(1)) with general initial guesses is a multi-Krylov method and possesses a memory effect. However, at the k-th iteration AA(m,AA(1)) has residual polynomials of higher degree and a stronger memory effect than AA(m), which might explain the better performance of AA(m,AA(1)) compared to AA(m) observed in our numerical experiments. We further study the influence of the initial guess on the asymptotic convergence factor of AA(1,AA(1)). We show a scaling invariance property of the initial guess \(x_0\) for the AA(1,AA(1)) method in the linear case. We then study the root-linear asymptotic convergence factor under scaling of the initial guess and explicitly indicate its dependence on the initial guess. Lastly, we numerically examine the influence of the initial guess on the asymptotic convergence factor of AA(m) and AA(m,AA(n)) for both linear and nonlinear problems.
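For readers unfamiliar with the stationary baseline, the following is a minimal Python sketch of standard AA(m) without damping, written in the difference form of Walker and Ni, applied to a linear fixed-point iteration \(x = Mx + b\). It does not implement the composite AA(m,AA(n)) method of this article. The test matrix, the function names `anderson` and `rho_estimate`, the stopping tolerance, and the use of residual norms as a proxy for the error when estimating a root-linear convergence factor are all assumptions made for illustration.

```python
import numpy as np


def anderson(g, x0, m, iters=300, tol=1e-10):
    """Stationary AA(m), no damping, for the fixed-point problem x = g(x).

    m = 0 reduces to the plain fixed-point iteration x_{k+1} = g(x_k).
    Returns the last iterate and the residual norms ||g(x_k) - x_k||.
    """
    x = np.asarray(x0, dtype=float)
    X, R = [], []  # sliding windows of iterates and residuals
    norms = []
    for _ in range(iters):
        r = g(x) - x
        norms.append(np.linalg.norm(r))
        if norms[-1] < tol:
            break
        # Keep at most m + 1 recent iterates and residuals.
        X = (X + [x])[-(m + 1):]
        R = (R + [r])[-(m + 1):]
        if len(R) == 1:
            x = x + r  # no history yet: plain fixed-point step
        else:
            # Columns are consecutive differences inside the window.
            dX = np.column_stack([X[i + 1] - X[i] for i in range(len(X) - 1)])
            dR = np.column_stack([R[i + 1] - R[i] for i in range(len(R) - 1)])
            # gamma minimizes ||r - dR @ gamma||_2.
            gamma, *_ = np.linalg.lstsq(dR, r, rcond=None)
            x = x + r - (dX + dR) @ gamma
    return x, np.array(norms)


def rho_estimate(norms):
    """Crude root-linear factor estimate (||r_k|| / ||r_0||)^(1/k)."""
    k = len(norms) - 1
    return (norms[-1] / norms[0]) ** (1.0 / k)


# Hypothetical test problem: diagonal M with spectral radius 0.9.
n = 20
M = np.diag(np.linspace(0.1, 0.9, n))
b = np.ones(n)
x_star = np.linalg.solve(np.eye(n) - M, b)


def g(x):
    return M @ x + b


x_plain, norms_plain = anderson(g, np.zeros(n), m=0)
x_aa, norms_aa = anderson(g, np.zeros(n), m=3)
rho_plain = rho_estimate(norms_plain)
rho_aa = rho_estimate(norms_aa)
print(len(norms_plain), rho_plain)
print(len(norms_aa), rho_aa)
```

Changing the initial guess passed to `anderson` and comparing the resulting `rho_estimate` values gives a rough numerical probe of the initial-guess dependence discussed above, although a finite-iteration estimate is only an approximation of an asymptotic factor.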

Data Availability

Data will be available on request.

Funding

This work was partially supported by the National Natural Science Foundation of China [grant number 12001287] and by the Startup Foundation for Introducing Talent of Nanjing University of Information Science and Technology [grant number 2019r106].

Additional information

Communicated by: Peter Benner

About this article

Cite this article

Chen, K., Vuik, C. Asymptotic convergence analysis and influence of initial guesses on composite Anderson acceleration. Adv Comput Math 49, 94 (2023). https://doi.org/10.1007/s10444-023-10095-3

DOI: https://doi.org/10.1007/s10444-023-10095-3