Sem@K: Is my knowledge graph embedding model semantic-aware?

Abstract

Using knowledge graph embedding models (KGEMs) is a popular approach for predicting links in knowledge graphs (KGs). Traditionally, the performance of KGEMs for link prediction is assessed using rank-based metrics, which evaluate their ability to give high scores to ground-truth entities. However, the literature claims that the KGEM evaluation procedure would benefit from adding supplementary dimensions to assess. That is why, in this paper, we extend our previously introduced metric Sem@K that measures the capability of models to predict valid entities w.r.t. domain and range constraints. In particular, we consider a broad range of KGs and take their respective characteristics into account to propose different versions of Sem@K. We also perform an extensive study to qualify the abilities of KGEMs as measured by our metric. Our experiments show that Sem@K provides a new perspective on KGEM quality. Its joint analysis with rank-based metrics offers different conclusions on the predictive power of models. Regarding Sem@K, some KGEMs are inherently better than others, but this semantic superiority is not indicative of their performance w.r.t. rank-based metrics. In this work, we generalize conclusions about the relative performance of KGEMs w.r.t. rank-based and semantic-oriented metrics at the level of families of models. The joint analysis of the aforementioned metrics gives more insight into the peculiarities of each model. This work paves the way for a more comprehensive evaluation of KGEM adequacy for specific downstream tasks.

1.Introduction

A knowledge graph (KG) is commonly seen as a directed multi-relational graph in which two nodes can be linked through potentially several semantic relationships. More formally, a knowledge graph

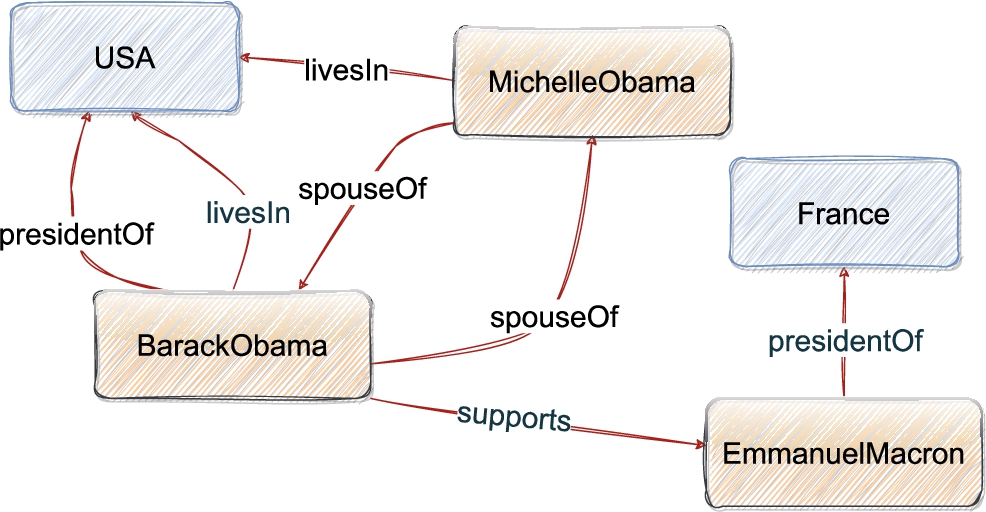

Fig. 1.

Excerpt of a KG containing some influential political figures and relations holding between them.

KGs are inherently incomplete, incorrect, or overlapping and thus major refinement tasks include entity matching, question answering, and link prediction [48,72]. The latter is the focus of this paper. Link prediction (LP) aims at completing KGs by leveraging existing facts to infer missing ones. In the LP task, one is provided with a set of incomplete triples, where the missing head (resp. tail) needs to be predicted. This amounts to holding a set of triples

The performance of KGEM for LP is evaluated using rank-based metrics such as Hits@K, Mean Rank (MR), and Mean Reciprocal Rank (MRR) that assess whether ground-truth entities are indeed given higher scores [54,72]. However, various works recently raised some caveats about such metrics [5,19,64]. For instance, they are not well-suited for drawing comparisons across datasets [5]. More importantly, they only provide a partial picture of KGEM performance [5]. Indeed, LP can lead to nonsensical triples, such as (BarackObama,isFatherOf,USA), being predicted as highly plausible facts, although they violate constraints on the domain and range of relations [23,73]. KGEMs with such issues may nevertheless reach a satisfying performance in terms of rank-based metrics.

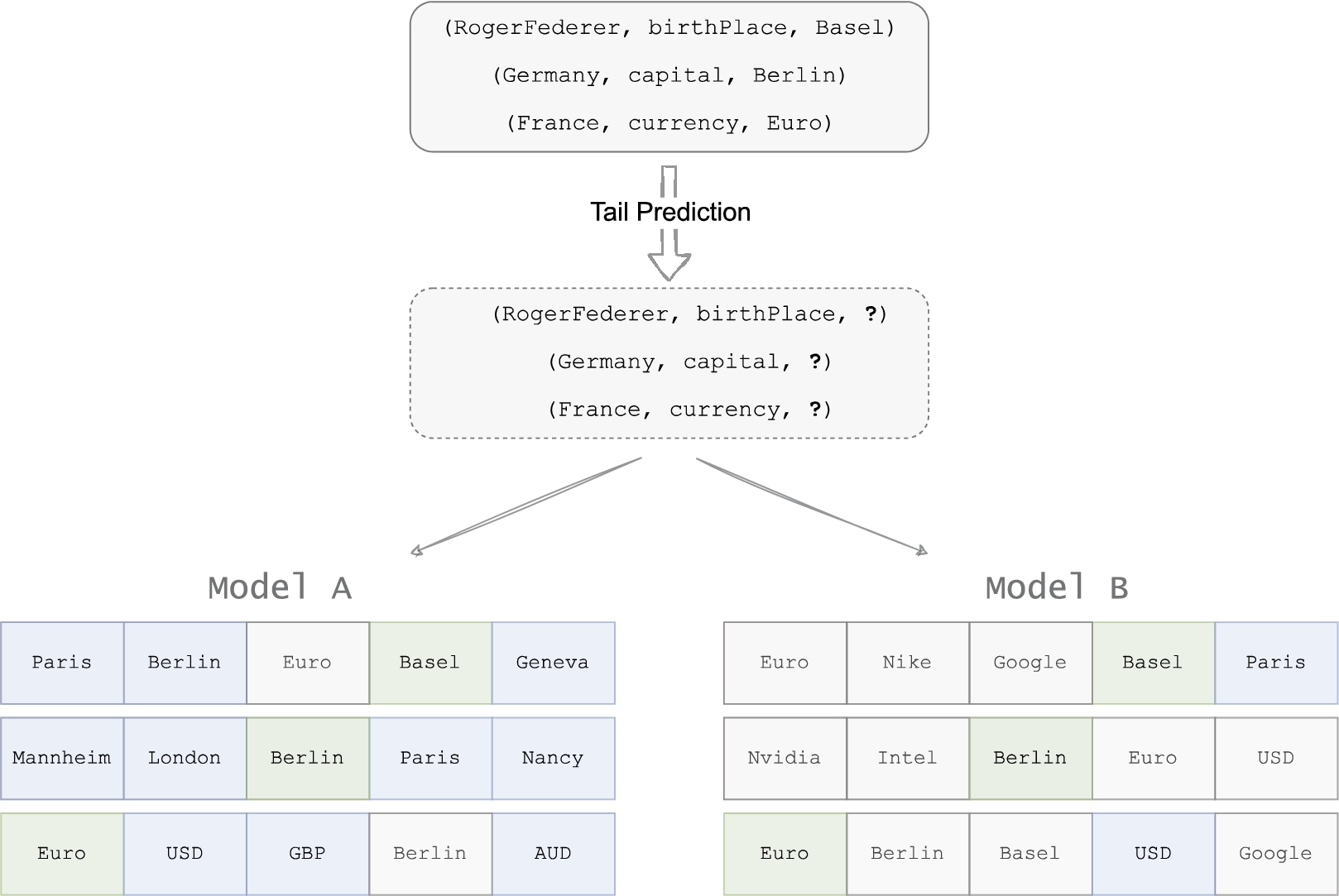

Few works propose to go beyond the mere traditional quantitative performance of KGEMs and address their ability to capture the semantics of the original KG, e.g., domain and range constraints, hierarchy of classes [22,40,49]. According to Berrendorf et al. [5], this would give a more complete picture of the performance of a KGEM. This is why we advocate for additional qualitative and semantic-oriented metrics to supplement traditional rank-based metrics and propose Sem@K to address this need. The relevance of using semantic-oriented metrics – more specifically Sem@K – is clearly visible in Fig. 2: Sem@K provides a supplementary dimension to the evaluation procedure and allows to confidently choose between two models. They are equally good in terms of rank-based metrics but Model A predicts entities that are semantically valid w.r.t. the range constraint.

More specifically, in this work, our goal is to assess the ability of popular KGEMs to capture the semantic profile of relations in a LP task, i.e., whether KGEMs predict entities that respects domain and range of relations. Henceforth, we refer to this aspect as the semantic awareness of KGEMs. To do so, we build on Sem@K, a semantic-oriented metric that we previously introduced [20,21] In [20], Sem@K was specifically defined for the recommendation task which was seen as predicting tails for a unique target relation. Sem@K was then extended in [21] to the more generic LP task, where not only tails but also heads are corrupted and all relations are considered. In the present work, we deepen the study of the semantic awareness of KGEMs by proposing different versions of Sem@K that take into account the different characteristics of KGs (e.g., hierarchy of types). Moreover, the semantic awareness of a wider range of KGEMs is analyzed – especially convolutional models. Likewise, a broader array of KGs is used, in order to benchmark the semantic capabilities of KGEMs on mainstream LP datasets. Thus, the following research questions are addressed:

– RQ1: how semantic-aware agnostic KGEMs are?

– RQ2: how the evaluation of KGEM semantic awareness should adapt to the typology of KGs?

– RQ3: does the evaluation of KGEM semantic awareness offer different conclusions on the relative superiority of some KGEMs compared to rank-based metrics?

– to evaluate KGEM semantic awareness on any kind of KG, we extend a previously defined semantic-oriented metric and tailor it to support a broader range of KGs.

– we perform an extensive study of the semantic awareness of state-of-the-art KGEMs on mainstream KGs. We show that most of the observed trends apply at the level of families of models.

– we perform a dynamic study of the evolution of KGEM semantic awareness vs. their performance in terms of rank-based metrics along training epochs. We show that a trade-off may exist for most KGEMs.

– our study supports the view that agnostic KGEMs are quickly able to infer the semantics of KG entities and relations.

The remainder of the paper is structured as follows. Related work is presented in Section 2. Section 3 outlines the main motivations for assessing the semantic awareness of KGEMs. Section 4 subsequently presents Sem@K, the semantic-oriented metric that fulfills this purpose. Sem@K comes in different flavors based on the typology of the datasets at hand and the intended use cases. In Section 5, we detail the datasets and KGEMs used in this work, before presenting the experimental findings in Section 6. A thorough discussion is provided in Section 7. Lastly, Section 8 outlines future research directions.

2.Related work

2.1.Link prediction using knowledge graph embedding models

Several LP approaches have been proposed to complete KGs. Symbolic approaches relying on rule-based [16,17,31,38,47] or path-based reasoning [12,32,79] are somewhat popular but are not considered in the present work. Instead, KGEMs are the focus of this paper.

In particular, this work is concerned with assessing the semantic awareness of agnostic KGEMs. The semantic awareness of such models is defined as their ability to score higher entities whose types belong to the domain and range of relations. Agnostic KGEMs are defined as KGEMs that rely solely on the structure of the KG to learn entity and relation representations. In this respect, these models differ according to several criteria, such as the nature of the embedding space or the type of scoring function [25,54,70,72]. In this work, KGEMs are considered with respect to three main families of models that are traditionally distinguished in the literature.

Geometric models are additive models that consider relations as geometric operations in the latent embedding space. A head entity h is spatially transformed using an operation τ that depends on the relation embedding r. Then the distance between the resulting vector and the tail entity t is used as a measure for assessing the plausibility of a fact

Semantic matching models are named this way as they usually use a similarity-based function to define their own scoring function. Semantic matching models are also referred to as matrix factorization models or tensor decomposition models, in the sense that a KG can be seen as a 3D adjacency matrix, in other words a three-way tensor. This tensor can be decomposed into a set of low-dimensional vectors that actually represent the entity and relation embeddings. Semantic matching models are subdivided into bilinear and non-bilinear models. Bilinear models share the characteristic of capturing interactions between two entity vectors using multiplicative terms. RESCAL [45] is the earliest introduced bilinear model for LP. It models entities as vectors and relations as matrices. The components

Neural network-based models rely on neural networks to perform LP. In neural networks, the parameters (e.g. weights and biases) are organized into different layers, with usually non-linear activation functions between each of these layers. The first introduced neural-network based model for LP is Neural Tensor Network (NTN) [59]. It can be seen as a combination of multi-layer perceptrons (MLPs) and bilinear models [25]. NTN defines a distinct neural network for each relation. This choice of parameterization makes NTN similar to RESCAL in the sense that both models achieve great expressiveness at the expense of computational concerns. The most recent neural network-based models rely on more sophisticated layers to perform a broader set of operations. Convolutional models are by far the most representative family of such models. They use convolutional layers to learn deep and expressive features from the input data, which pass through such layers to undergo convolution with low-dimensional filters ω. The resulting feature maps subsequently go through dense layers to obtain a final plausibility score. Compared with fully connected neural networks, convolution-based models are able to capture complex relationships with fewer parameters by learning non-linear features. While ConvE [13] reshapes head entity and relation embeddings before concatenating them into a unique input matrix to pass through convolutional layers, ConvKB [42] does not perform any reshaping and also puts the tail embedding into the concatenated input matrix. Other models were later proposed, such as ConvR [27] and InteractE [67] that both process triples independently. Another branch of convolutional models also considers the local neighborhood around each central entity. These are based on Graph Neural Networks (GNNs). The most representative GNN-based model for LP is R-GCN [57]. The key idea is to accumulate messages from the local neighborhood of the central node over multi-hop relations. By doing so, R-GCN is better able to model a long range of relational dependencies. However, R-GCN does not outperform baselines for the LP task [15]. Subsequent models have claimed superiority over R-GCN: SACN [58] introduces a weighted GCN to adjust the amount of aggregated information from the local neighborhood and KBGAT [41] relies on attention mechanism to generate more accurate embeddings. More recently, CompGCN [68] and DisenKGAT [75] showcased impressive performance with regard to the LP task.

2.2.Combining embeddings and semantics

The possibility of using additional semantic information has been extensively studied in recent works [10,23,30,37,46,71,78]. In general, the semantic information stems directly from an ontology, originally defined by Gruber as an “explicit specification of a conceptualization” [18]. Ontologies formally describe a specific application domain of interest (e.g. education, pharmacology, etc.) in which several classes (or concepts) and relations are identified and formally specified. Ontologies support KG construction by providing a schema that specifies the nature of entities, the semantic profile of relations, and other constraints that give the KG a semantic coherence.

A significant part of the literature incorporates such semantic information to constrain the negative sampling procedure and generate meaningful negative triples [23,30]. For instance, type-constrained negative sampling (TCNS) [30] replaces the head or the tail of a triple with a random entity belonging to the same type (rdf:type) as the ground-truth entity. Jain et al. [23] go a step further and use ontological reasoning to iteratively improve KGEM performance by retraining the model on inconsistent predictions.

Semantic information can also be embedded in the model itself. In fact, some KGEMs leverage ontological information such as entity types and hierarchy.

Embedding models project entities and relations of a KG into a vector space. Thus, the semantics of the original KG may not be fully preserved [23,49]. As stated by Paulheim [49], because embeddings are not meant to preserve the semantics of the KG, they are not interpretable and this can severely hinder explainability in domains such as recommender systems. Consequently, Paulheim [49] advocates for semantic embeddings. Similarly, Jain et al. [22] perform a thorough evaluation of popular KGEMs to better assess whether embeddings can express similarities between entities of the same type. A key finding is that because of overlapping relations among entities of different types, fine-grained semantics cannot be properly reflected by embeddings For instance, the task of finding semantically similar entities does not always provide satisfying results when working with entity embeddings [22].

2.3.Evaluating KGEM performance for link prediction

KGEM performance is evaluated in two stages. During the validation phase, KGEM performance is evaluated on the validation set

In both cases, the rank of the ground-truth entity from the test (resp. validation) set is used to compute aggregated rank-based metrics based on the top-K scored entities. The rank of the ground-truth entity can be determined in two different ways that depend on how observed facts – i.e. facts that already exist in the KG – are considered. In the raw setting, observed facts outranking the ground-truth are not filtered out, while this is the case in the filtered setting. For instance, assuming head prediction is performed on the given ground-truth triple (BarackObama, livesIn, USA), a KGEM may assign a lower score to this triple than to the following triple: (MichelleObama, livesIn, USA). The latter triple actually represents an observed fact. In the raw setting, this triple would not be filtered out from top-K scored triples. This can cause the evaluation procedure to not properly assess the KGEM performance. This is why in practice, the filtered setting is commonly preferred. In the present work, the filtered setting is also used.

KGEM performance is almost exclusively assessed using the following rank-based metrics: Hits@K, Mean Rank (MR), and Mean Reciprocal Rank (MRR) [19]. Disagreements exist as to how and when these metrics can be used and compared properly. In the following, we recall their definitions and discuss their limits.

Hits@K (Eq. (1)) accounts for the proportion of ground-truth triples appearing in the first K top-scored triples:

Mean Rank (MR) (Eq. (2)) corresponds to the arithmetic mean over ranks of ground-truth triples:

Mean Reciprocal Rank (MRR) (Eq. (3)) corresponds to the arithmetic mean over reciprocals of ranks of ground-truth triples:

As mentioned in Section 1, these metrics present some caveats. LP is often used to complete knowledge graphs, where the Open World Assumption (OWA) prevails. KGs are incomplete and, due to the OWA, an unobserved triple used as a negative one can still be positive. It follows that traditional evaluation methods based on rank-based metrics may systematically underestimate the true performance of a KGEM [73].

In addition, the aforementioned rank-based metrics have intrinsic and theoretical flaws, as pointed out in several works [5,19,64]. For example, Hits@K does not take into account triples whose rank is larger than K. As such, a model scoring the ground-truth in position

Recent works recommend using adjusted version of the aforementioned metrics. The Adjusted Mean Rank (AMR) proposed in [1] compares the mean rank against the expected mean rank under a model with random scores. In [5], Berrendorf et al. transform the AMR to define the Adjusted Mean Rank Index (AMRI) bounded in the

In Section 2.2, several approaches incorporating the semantics of entities and relations into the embeddings were mentioned. However, in such cases, the use of semantic information such as entity types and the hierarchy of classes is intended to improve KGEM performance in terms of the aforementioned rank-based metrics only. The underlying semantics of KGs is considered as an additional source of information during training but the ability of KGEMs to generate predictions in accordance with these semantic constraints is never directly addressed. This encourages further assessment of the semantic capabilities of KGEMs, as firstly suggested in [21]. In our work, we directly address this issue by assessing to what extent KGEMs are able to give high scores to triples whose head (resp. tail) belongs to the domain (resp. range) of the relation. When such information is not available – i.e. the KG does not rely on a schema containing rdfs:domain and rdfs:range properties – extensional constraints can still be used to evaluate the semantic capabilities of KGEMs, as detailed in Section 4.

3.Motivations and problem formulation

3.1.Motivating example

To motivate the use of Sem@K to evaluate KGEM quality, this section builds upon a minimalist example which is representative of the issue encountered while benchmarking the performance of several KGEM on the same dataset. As depicted in Fig. 2, two KGEMs that have been trained on the whole training set are tested on a batch of test triples. These KGEMs are referred to as Model A and Model B. Without loss of generality, it is assumed that the test set only comprises the three triples shown in Fig. 2. For the sake of clarity, only the tail prediction pass and the top-5 ranked candidate entities are depicted in Fig. 2. It should be noted that the performance of both models are strictly equal in terms of MR, MRR and Hits@K with

Fig. 2.

Motivating example. Tail prediction is performed for the three test triples contained in the upper insert. Model A and Model B output scores for each possible entity and only the top-five ranked tail candidates are depicted here. Model A and Model B have the same Hits@1, Hits@3 and Hits@5 values. But Model A has better semantic capabilities. Green, blue and white cells respectively denote the ground-truth entity, entities other than the ground-truth and semantically valid, and entities other than the ground-truth and semantically invalid.

3.2.Problem formulation

The traditional evaluation of KGEMs solely based on rank-based metrics can be flawed for several reasons. First, KGEMs benchmarked on the same test sets can exhibit very similar results. Using only rank-based metrics, the final choice only depends on the best achieved MRR and/or Hits@K. This raises the questions whether the chosen model is actually the best one, or whether its slight superiority over other KGEMs can be due to other factors such as better hyperparameter tuning or better modeling of a relational pattern highly present in the test set. Moreover, using only rank-based metrics does not provide the full picture of KGEM quality for the downstream LP task, as some dimensions of KGEMs are left unassessed (see Section 2). In this work, contrary to the mainstream approach consisting in comparing KGEM performance exclusively in terms of rank-based metrics, the trained KGEMs are also evaluated in terms of Sem@K which measures the ability of KGEMs to predict semantically valid triples with respect to the domain and range of relations.

4.Measuring KGEMs semantic awareness with Sem@K

The standard LP evaluation protocol consists in reporting aggregated results, considering the rank-based metrics presented in Section 2.3. As mentioned above, these metrics only provide a partial picture of KGEM performance [5]. To give a more comprehensive assessment of KGEMs, we aim at assessing their semantic awareness using our proposed metric called Sem@K [20,21]. In [20], Sem@K was specifically defined for the recommendation task which was seen as predicting tails for a unique target relation. Sem@K was then extended in [21] to the more generic LP task, where not only tails but also heads are corrupted and all relations are considered. In this work, this original formalization for LP is presented in Section 4.1, and enriched to take into account schemaless KGs (Section 4.3), or KGs with a class hierarchy (Section 4.4). As a consequence, Sem@K comes in 3 different versions (respectively denoted Sem@K[base], Sem@K[ext], and Sem@K[wup]) so as to adapt to KG typology. These distinct versions and their adequacy regarding the KG at hand are summarized in Table 1 and further detailed below. In the following, when no suffix is provided, it is assumed that we are concerned with Sem@K in general, regardless of the actual version.

Table 1

Typology of KGs and their respective adequacy for the presented Sem@K versions

| KG type | Sem@K[ext] | Sem@K[base] | Sem@K[wup] |

| Schemaless | × | ||

| Schema-defined, w/o class hierarchy | × | × | |

| Schema-defined, w/ class hierarchy | × | × | × |

4.1.Definition of Sem@K[base]

This version of Sem@K (Eq. (4)) accounts for the proportion of triples that are semantically valid in the first K top-scored triples:

4.2.A note on Sem@K, untyped entities, and the Open World Assumption

Traditional KGEM evaluation can be performed in all situations, regardless of whether entities are typed or whether the KG comes with a proper schema. When measuring the semantic capability of KGEMs – e.g. with Sem@K – some concerns arise. For instance, a fair question to ask is the following: how should untyped entities be considered? Indeed, in some KGs, some entities are left untyped. For example, in DBpedia the entity dbr:1._FC_Union_Berlin_players does not belong to any other class than owl:Thing. In some other cases as in DB15K and DB100K [11], entities have incomplete typing.

Under the OWA, an untyped entity should not count in the calculation of Sem@K since it is not possible to determine whether this entity has no types or no known types. Although this seems to be a fair option, it raises a major issue: it makes possible to score different sets of entities with rank-based metrics and Sem@K, which is not desirable. If there are M untyped entities in an ordered list of ranked entities, Hits@K, MR and MRR are calculated regardless of this fact, i.e. still taking into account the M untyped entities. However, Sem@K cannot be calculated for these M untyped entities. As such, MR, MRR and Hits@K would be calculated on the original entity set, whereas Sem@K would be computed on a different set of entities. This issue would be even more acute in the case of Sem@1 when the first ranked entity is untyped: Hits@1 and Sem@1 would be calculated on two different entities, which is not acceptable. Consequently, one strategy consists in removing untyped entities from the evaluation protocol, both regarding rank-based and semantic-based metrics. By doing so, consistency is ensured in the ranked list of entities across rank-based and semantic metrics evaluation.

4.3.Sem@K[ext] for schemaless KGs

Not all KGs come with a proper schema, e.g. relations do not appear in any rdfs:domain or rdfs:range clauses. In that particular situation, it can still be desirable to assess the semantic awareness of KGEMs. One approach is to maintain a list of all entities that have been observed as heads (resp. tails) of each relation r:

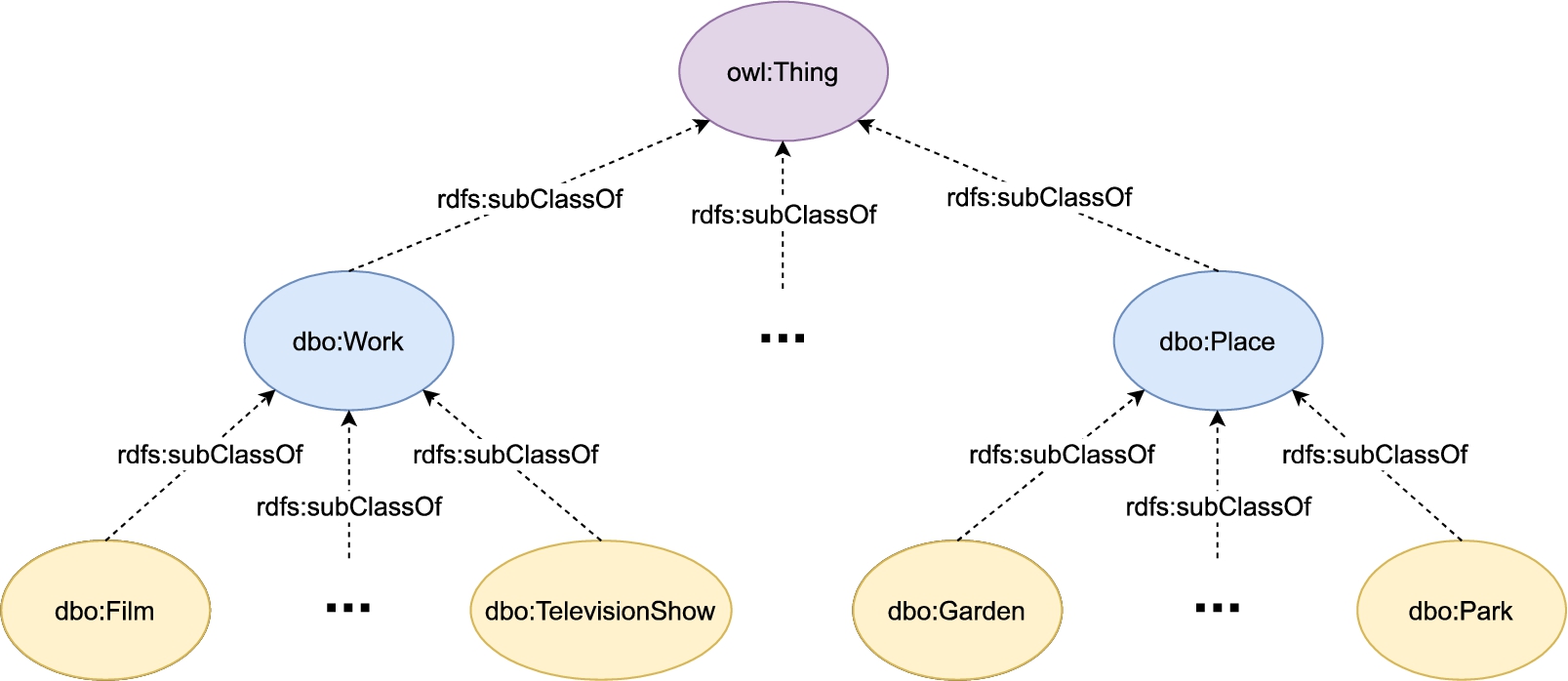

4.4.Sem@K[wup]: A hierarchy-aware version of Sem@K

Sem@K as previously defined equally penalizes all entities that are not of the expected type. However, KGs may be equipped with a class hierarchy that, in turn, can support a more fine-grained penalty for entities depending on the distance or similarity between their type and the expected domain (resp. range) in this hierarchy. To illustrate, consider Fig. 3 that depicts a subset of the DBpedia ontology dbo class hierarchy. Using the hierarchy-free version of Sem@K for the test triple (dbr:The_Social_Network, dbo:director, dbr:David_Fincher), predicting dbr:Friends or dbr:Central_Park as head would be penalized the same way in the compatibility function. However, it is clear that an entity of class dbo:TelevisionShow is semantically closer to dbo:Film – the domain of the relation dbo:director – than an entity of class dbo:Park, and thus should be less penalized.

To leverage such a semantic relatedness between concepts in Sem@K, the compatibility function can be adapted accordingly:

Several similarity measures σ have been proposed in the literature [33,34,52,53,76]. In this work, the Wu-Palmer similarity [76] (Eq. (7)) is used:

Considering the example in Fig. 3, using the Wu-Palmer score in the calculation of Sem@K, a head prediction of dbr:Friends and dbr:Central_Park for the ground-truth triple (dbr:The_Social_Network, dbo:director, dbr:David_Fincher) are now differently penalized. The instance dbr:Friends is of type dbo:TelevisionShow, so we have:

Fig. 3.

Excerpt from the DBpedia class hierarchy.

5.Experimental setting

5.1.Datasets

In order to draw reliable and general conclusions, a broad range of KGs are used in our experiments. They have been chosen due to their mainstream adoption in recent research works around KGEMs for LP and the fact that they have different characteristics (e.g. entities, relations, classes, presence of a class hierarchy). In this section, the schema-defined and schemaless KGs used in the experiments are detailed. Note that in our experiments, all the schema-defined KGs come with a class hierarchy inherited from either Freebase [6], DBpedia [2], YAGO [60], or schema.org. To meet requirements for evaluating KGEMs w.r.t. Sem@K (i.e. classes instantiated by entities, domain and range for relations), when necessary, subsets of schema-defined KGs are used, as explained in Section 5.1.1. Among the 4 schema-defined KGs presented hereafter, FB15K237-ET, DB93K and YAGO3-37K are derived from already existing KGs, while YAGO4-19K was specifically created and is made available on Zenodo11 and GitHub.22 The other KGs are made available on GitHub.33

5.1.1.Schema-defined KGs

The statistics of the datasets FB15K237-ET, DB93K, YAGO3-37K and YAGO4-19K used in our experiments are provided in Table 2. As discussed in Section 4.2, to create an experimental evaluation setting as unbiased and flawless as possible, the schema-defined KGs used in the experiments are filtered to keep typed entities only. This way, Sem@K is calculated under the CWA.

FB15K237-ET derives from FB15K [7] – a dataset extracted from the cross-domain Freebase KG [6]. In these experiments, we do not use FB15K, as it has been noted that this dataset suffers from test leakage, i.e., a large number of test triples can be obtained by simply inverting the position of the head and tail in the train triples [65]. The later introduced FB15K237 dataset [65] is a subset of the original FB15K without these inverse relations. To the best of our knowledge, there is no schema-defined version of FB15K237. However, a schema-defined version of FB15K is provided in [78]. Consequently, we based ourselves on this version of FB15K and mapped the extracted entity types, relation domains and ranges to the entities and relations found in FB15K237. The resulting schema-defined version of FB15K237 is named FB15K237-ET and includes only typed entities. Besides, validation and test sets contain triples whose relation have a well-defined domain (resp. range), as well as more than 10 possible candidates as head (resp. tail). This ensures Sem@K is not unduly penalized and can be calculated on the same set of entities as Hits@K and MRR, at least until

DB93K is a subset of DB100K, which was first introduced in [14]. A slightly modified version of DB100K has been proposed in [11]. Contrary to the initial version of DB100K, the latter version is schema-defined: entities are properly typed and most relations have a domain and/or a range. This second version is considered in the following experiments. However, some inconsistencies were found in the dataset. Some DBpedia entities only instantiate Wikidata44 or schema.org55 classes, while instantiation of classes from the DBpedia ontology actually exist. Moreover, some entities are only partially typed. It must also be noted that domains and ranges of relations have been extracted from DBpedia more than two years ago. DBpedia is a communautary and open-source project: DBpedia classification system relies on human curation, which sometimes implies a lack of coverage for some resources and updates for others. Consequently we associated all relations of DB100K to their most up-to-date domains and ranges.66 Similarly for entities, we associated all entities to their most up-to-date classes. Finally, we removed all untyped entities as well as validation and test relations having less than 10 observed entities, so as not to unfairly penalize Sem@K results in the validation and test phases.

YAGO3-37K derives from the schema-defined YAGO39K dataset [37] extracted from the cross-domain YAGO3 KG [60]. Compared to the original YAGO39K, in our experiments only typed entities are kept. In addition, relations having less than 10 observed heads or tails in the training set are discarded from the validation and test splits, for the same reason that keeping them would not reflect the actual Sem@K values. It should be noted that in the YAGO3 ontology, most relations have very generic domains and ranges which are very close to the root of the ontology hierarchy. To produce a more challenging evaluation setting of the models’ semantic awareness, a subset of hard relations was identified and only validation and test triples whose relation belongs to this subset are kept. The resulting dataset is named YAGO3-37K.

We built YAGO4-19K with several other subsets of the YAGO4 knowledge graph [63]. Similarly to other datasets, we focused on relations with a defined domain and range, and more than 10 triples to constitute the validation and test sets. We purposedly favored difficult relations to feature in the validation and test sets. To enrich the training set, additional relations were added based on a manual selection. Selected relations in validation and test sets as well as additional relations in the training set can be found on the GitHub repository of the dataset. It should be noted that the class hierarchy associated with entities in YAGO 4 is schema.org.

Table 2

Statistics of the schema-defined, hierarchical KGs used in the experiments

| Dataset | ||||||

| FB15K237-ET | 14,541 | 237 | 532 | 271,575 | 15,337 | 17,928 |

| DB93K | 92,574 | 277 | 311 | 237,062 | 18,059 | 36,424 |

| YAGO3-37K | 37,335 | 33 | 132 | 351,599 | 4,220 | 4,016 |

| YAGO4-19K | 18,960 | 74 | 1,232 | 27,447 | 485 | 463 |

5.1.2.Extensional KGs

Another range of datasets used in these experiments do not come with an ontological schema. In particular, this means relations do not have a clearly-defined domain or range. Although Codex-S and Codex-M are based on the Wikidata schema which does possess property constraints linking subject types to value type constraints, we limit ourselves to KGs that represent this information with rdfs:domain and rdfs:range predicates. Consequently, in this work, we only report Sem@K[ext] results for Codex-S and Codex-M. The statistics of the datasets Codex-S, Codex-M and WN18RR used in our experiments are provided in Table 3.

Table 3

Statistics of the schemaless KGs used in the experiments

| Dataset | |||||

| Codex-S | 2,034 | 42 | 32,888 | 1,827 | 1,828 |

| Codex-M | 17,050 | 51 | 185,584 | 10,310 | 10,311 |

| WN18RR | 40,943 | 11 | 86,834 | 3,034 | 3,134 |

WN18RR [13] originates from WN18, which is a subset of the WordNet KG [39]. As for FB15K, Toutanova et al. [65] reported a huge test leakage in the original WN18 dataset. More specifically, 94% of the train triples have inverse relations that are linked to test triples. Dettmers et al. [13] remove all inverse relations to propose WN18RR. In WN18RR, entities are nouns, verbs, and adjectives. Relations such as hypernym and derivationally related from hold between observed entities. Such relations are not linked to any rdfs:domain or rdfs:range predicates. Besides, relations such as derivationally related from can contain nouns, verbs or adjectives as both head or tail. It follows that it is not possible to infer any expected entity type for relations – in this case the word qualifier. This is why WN18RR is used in the extensional setting.

Codex-S [56] and Codex-M [56] are datasets extracted from Wikidata and Wikipedia. They cover a wider scope and purposely contain harder facts than most KGs [56]. Consequently, these datasets prove to be more challenging for the link prediction task. Compared to WN18RR, Codex-S and Codex-M contain entity types, relation descriptions and Wikipedia page extracts. Nonetheless, Wikidata does not contain rdfs:domain or rdfs:range predicates. The property constraints present in Wikidata are harder to manipulate than the rdfs:domain and rdfs:range clauses found in DBpedia. As such, in our experiments Codex-S and Codex-M are used in the extensional setting. Codex-S and Codex-M initially come with already generated hard negatives. In our experiments, we do not directly use these provided negative triples. Instead, we use the same Uniform Random Negative Sampling schema as for other datasets.

5.2.Baseline models

In this work, the semantic awareness of the most popular semantically agnostic KGEMs is analyzed. More specifically, the translational models TransE [7] and TransH [74], the semantic matching models DistMult [81], ComplEx [66] and SimplE [29], and the convolutional models ConvE [13], ConvKB [42], R-GCN [57] and CompGCN [68] are considered. Note that in the analysis of the results in Section 6, a distinction will be made between pure convolutional KGEMs (ConvE, ConvKB) and GNNs (R-GCN, CompGCN). Although the latter have convolutional layers, they are able to capture long-range interactions between entities due to their ability to consider k-hop neighborhoods. The characteristics of the models used in our experiments are mentioned hereinafter and summarized in Table 4.

Table 4

Summary of the KGEMs used in the experiments

| Model family | Model | Scoring function | Loss function |

| Geometric | TransE | Pairwise Hinge | |

| TransH | Pairwise Hinge | ||

| Semantic Matching | DistMult | Pairwise Hinge | |

| ComplEx | Pointwise Logistic | ||

| SimplE | Pointwise Logistic | ||

| Convolutional | ConvE | Binary Cross-Entropy | |

| ConvKB | Pointwise Logistic | ||

| R-GCN | DistMult decoder | Binary Cross-Entropy | |

| CompGCN | ConvE decoder | Binary Cross-Entropy |

TransE [7] is the earliest translational model. It learns representations of entities and relations such that for a triple

TransH [74] is an extension of TransE. It allows entities to have distinct representations when involved in different relations. Specifically,

DistMult [81] is a semantic matching model. It is characterized as such because it uses a similarity-based scoring function and matches the latent semantics of entities and relations by leveraging their vector space representations. More specifically, DistMult is a bilinear diagonal model that uses a trilinear dot product as its scoring function:

ComplEx [66] is also a semantic matching model. It extends DistMult by using complex-valued vectors to represent entities and relations:

SimplE [29] models each fact in both a direct and an inverse form. To do so, an entity e is simultaneously represented by two vectors

ConvE [13] first reshapes entity and relation embeddings and then concatenates them into a 2D matrix [h; r]. To model the interactions between entities and relations, ConvE subsequently uses 2D convolution over embeddings and layers of nonlinear features. The output is ultimately scored against the tail embedding t using the dot product. More precisely, the following scoring function is used:

ConvKB [42] also represents entities and relations as same-sized vectors. However, ConvKB does not reshape the embeddings of entities and relations. Plus, ConvKB also considers the tail embedding in the concatenation operation, thus obtaining the 2D matrix [h; r; t] after concatenation. Convolution by a set ω of T filters of shape

R-GCN [57] extends the idea of applying graph convolutional networks (GCNs) to multi-relational data. R-GCN operates on local graph neighborhoods and applies a convolution operation to the neighbors of each entity. By aggregating the messages coming from all the neighbors of an entity, the embedding of the latter is updated in accordance. Each entity thus has a hidden representation which directly depends on the hidden representations of its neighbors. This process of accumulating messages (i.e. the hidden representations of neighboring entities) and aggregating them so as to update the hidden representation of the central node is performed for each layer of the R-GCN model. More formally, the hidden representation of the entity i in the layer

CompGCN [68] improves over R-GCN by not only learning entity representations but also relation representations. Concretely, CompGCN performs a composition operation

5.3.Implementation details and hyperparameters

For the sake of comparisons, MRR, Hits@K and Sem@K all need to rely on the same code implementation. More specifically, for R-GCN77 and CompGCN,88 existing implementations were reused and Sem@K values were calculated on the trained models. Other KGEMs were implemented in PyTorch. To avoid time-consuming hyperparameter tuning, we took inspiration from the hyperparameters provided by LibKGE99 for Codex-S, Codex-M, WN18RR and FB15K237. However, LibKGE does not benchmark all the datasets and models used in our experiments. For such models, we stick to the hyperparameters provided by the original authors, when available. For the models with no reported best hyperparameters, as well as for the remaining datasets used in the experiments – i.e. DB93K, YAGO3-37K and YAGO4-19K – different combinations of hyperparameters were manually tried. We first trained our KGEMs for

6.Results

In the following, we perform an extensive analysis of the results obtained using the aforedescribed KGEMs and KGs. For the sake of clarity, the complete range of tables and plots are placed in Appendix B and C, respectively. When necessary to support our claim, some of them are duplicated in the body text.

6.1.Semantic awareness of KGEMs

This section draws on the Sem@K values (see Tables 8 and 9) achieved at the best epoch in terms of MRR to provide an analysis of the semantic awareness of state-of-the-art KGEMs. In other words, for such models we only consider a snapshot of their best epochs in terms of rank-based metrics.

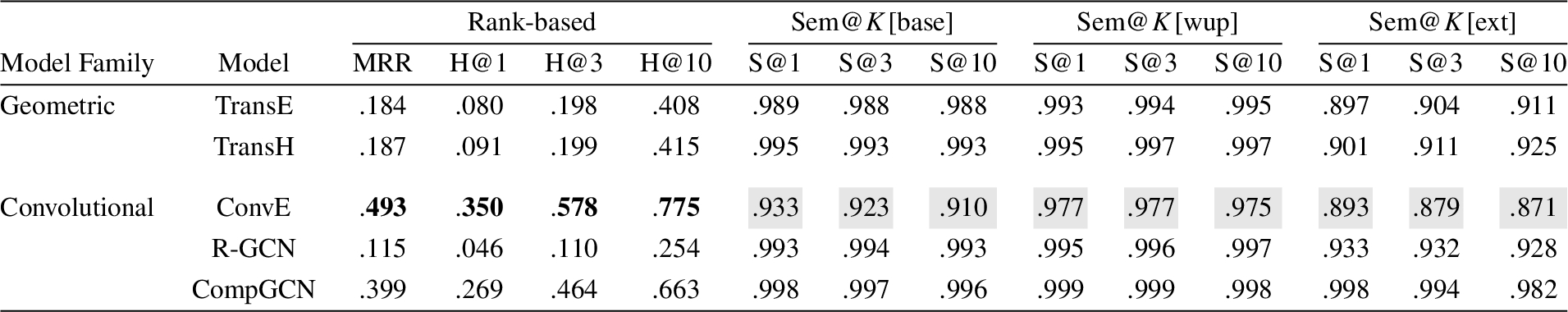

A major finding is that models performing well with respect to rank-based metrics are not necessarily the most competitive when it comes to their semantic capabilities. On YAGO3-37K (see Table 5) for instance, ConvE showcases impressive MRR and Hits@K values. However, it is far from being the best KGEM in terms of Sem@K, as it is outperformed by CompGCN, R-GCN and all translational models.

Table 5

Results on YAGO3-37K. Bold fonts and gray cells denote the best achieved results and the worst achieved results among the models reported in the table, respectively. Full results are available in Appendix B, Table 8

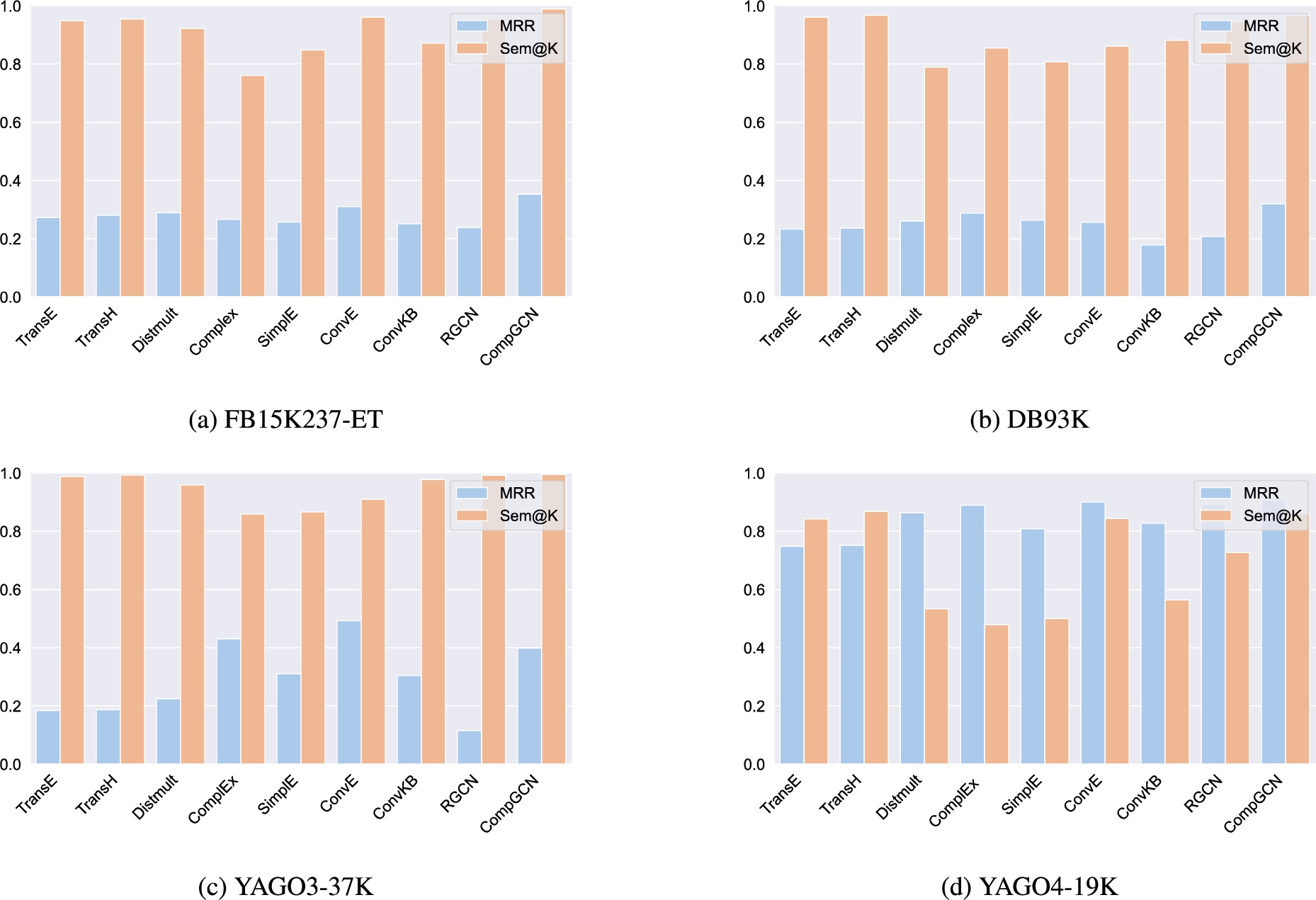

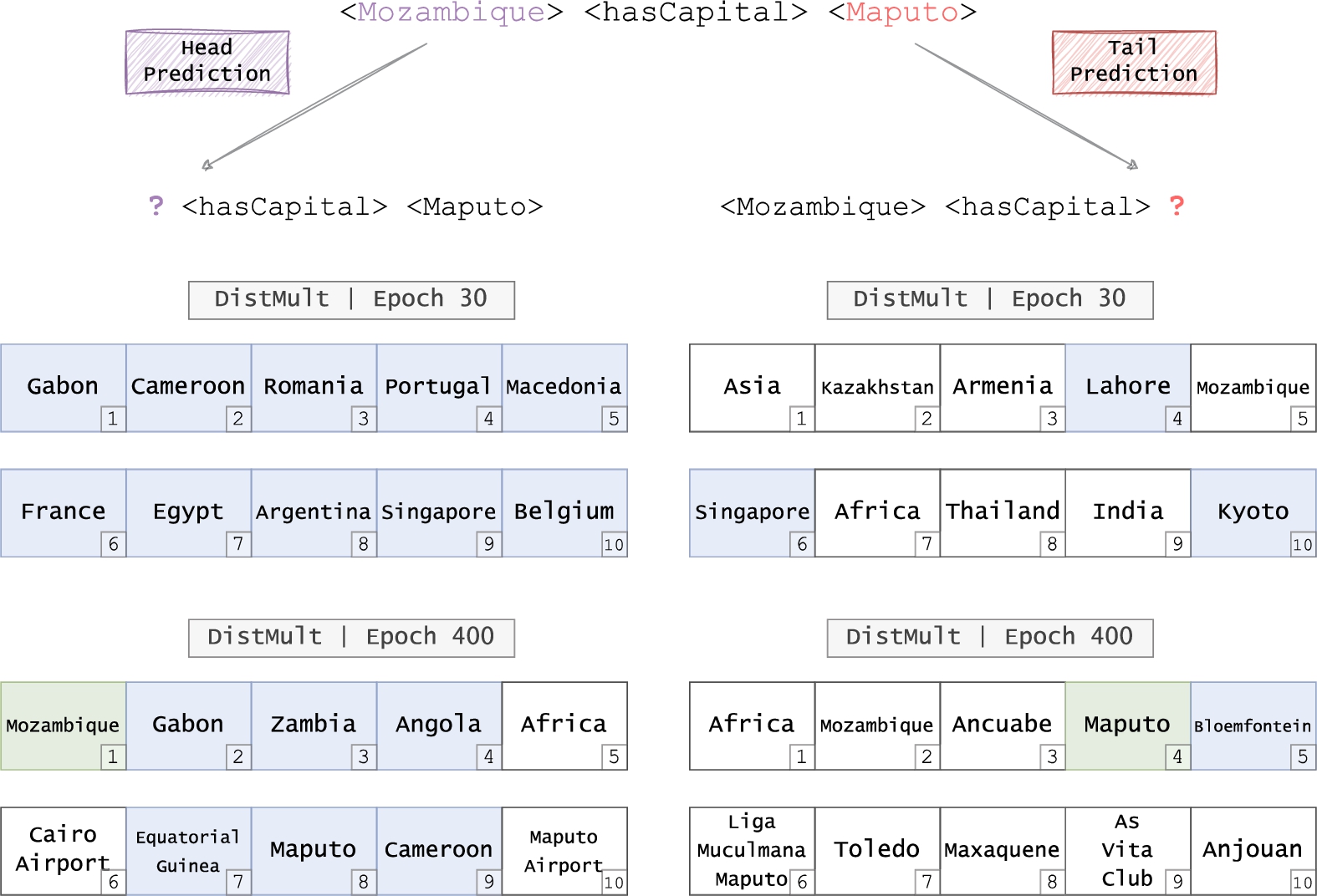

From a coarse-grained viewpoint, conclusions about the relative superiority of models with the distinct consideration of rank-based metrics and semantic awareness can be generalized at the level of models families. For example, semantic matching models (DistMult, ComplEx, SimplE) globally achieve better MRR and Hits@K values while their semantic capabilities are in most cases lower than the ones of translational models (TransE, TransH) – see Table 8 and Table 9 for detailed results w.r.t. rank-based and semantic-oriented metrics. A condensed view of the comparison between MRR and Sem@10 is also reported in Fig. 4 and Fig. 5. The respective hierarchies of such models for the benchmarked schema-defined and schemaless KGs are depicted in Fig. 6 and Fig. 7, respectively. It is clearly visible that KGEMs are grouped by family. In particular, GNNs and translational models showcase very promising semantic capabilities. GNNs are almost always the best regarding Sem@K[ext] values – not only for schemaless KGs (Fig. 7), but also for schema-defined KGs (see Table 8 for full results, and Fig. 4 for a quick glimpse). This means GNNs are more capable of predicting entities that have been observed as head (resp. tail) of a given relation. Translational models are very competitive in terms of Sem@K[base]. In Fig. 6, we clearly see that they consistently rank among the best performing models regarding Sem@K[base]. Interestingly, the semantic matching models DistMult, ComplEx and SimplE perform relatively poorly. This observation holds regardless of the nature of the KG, as they systematically rank among the worst performing models for schema-defined (Fig. 6) and schemaless (Fig. 7) KGs.

Fig. 4.

MRR and Sem@10 results achieved at the best epoch in terms of MRR for each model and on each schema-defined dataset.

Fig. 5.

MRR and Sem@10 results achieved at the best epoch in terms of MRR for each model and on each schemaless dataset.

Fig. 6.

Sem@K[base] comparisons between KGEMs on the 4 benchmarked schema-defined KGs. Colors indicate the family of models: blue, purple, green, and yellow cells denote GNNs, translational, convolutional, and semantic matching models, respectively. Regarding Sem@K, the relative hierarchy of models is consistent across KGs and we can clearly see that KGEMs are grouped by families of models.

![Sem@K[base] comparisons between KGEMs on the 4 benchmarked schema-defined KGs. Colors indicate the family of models: blue, purple, green, and yellow cells denote GNNs, translational, convolutional, and semantic matching models, respectively. Regarding Sem@K, the relative hierarchy of models is consistent across KGs and we can clearly see that KGEMs are grouped by families of models.](https://content.iospress.com:443/media/sw/2023/14-6/sw-14-6-sw233508/sw-14-sw233508-g007.jpg)

Fig. 7.

Sem@K[ext] comparisons between KGEMs on the 3 benchmarked schemaless KGs. Colors indicate the family of models: blue, purple, green, and yellow cells denote GNNs, translational, convolutional, and semantic matching models, respectively. Regarding Sem@K, the relative hierarchy of models is consistent across KGs and we can clearly see that KGEMs are grouped by families of models.

![Sem@K[ext] comparisons between KGEMs on the 3 benchmarked schemaless KGs. Colors indicate the family of models: blue, purple, green, and yellow cells denote GNNs, translational, convolutional, and semantic matching models, respectively. Regarding Sem@K, the relative hierarchy of models is consistent across KGs and we can clearly see that KGEMs are grouped by families of models.](https://content.iospress.com:443/media/sw/2023/14-6/sw-14-6-sw233508/sw-14-sw233508-g008.jpg)

Therefore, it seems that translational models are better able at recovering the semantics of entities and relations to properly predict entities that are in the domain (resp. range) of a given relation, while semantic matching models might sometimes be better at predicting entities already observed in the domain (resp. range) of a given relation (e.g. DistMult reaches very high Sem@K[ext] values on Codex-S, as evidenced in Fig. 7a). In cases when translational and semantic matching models provide similar results in terms of rank-based metrics, the nature of the dataset at hand – whether it is schema-defined or schemaless – might thus strongly influence the final choice of a KGEM.

Interestingly, CompGCN which is by far the most recent and sophisticated model used in our experiments, outperforms all the other models in terms of semantic awareness as, with very limited exceptions, it is the best in terms of Sem@K[base], Sem@K[ext] and Sem@K[wup]. In addition, it should be noted that R-GCN provides satisfying results as well. Except in a very few cases (e.g. outperformed by ComplEx on Codex-S and WN18RR in terms of Sem@1), R-GCN showcases better semantic awareness than semantic matching models. Most of the time, R-GCN also provides comparable or even higher semantic capabilities than translational models. In particular, Sem@K values achieved with R-GCN are similar to the ones achieved with TransE and TransH (e.g. on FB15K237-ET and YAGO3-37K, see Tables 8a and 8c) while the latter models are actually outperformed by R-GCN in terms of Sem@K[ext]. It appears clearly on Codex-M and WN18RR (Tables 9b and 9c), although the conclusion holds for all datasets.

Our experimental results suggest that the structure of GNNs seems to be able to encode the latent semantics of the entities and relations of the graph. This ability may be attributed to the fact that, contrary to translational models which only model the local neighborhood of each triple, GNNs update entity embeddings (and relation embeddings in the case of CompGCN) based on the information found in the extended, h-hop neighborhood of the central node. While translational and semantic matching models treat each triple independently, GNNs model interactions between entities on a large range of semantic relations. It is likely that this extended neighborhood comprises signals or patterns that help the model infer the classes of entities, thus providing very promising semantic capabilities in all experimental conditions.

6.2.Dynamic appraisal of KGEM semantic awareness

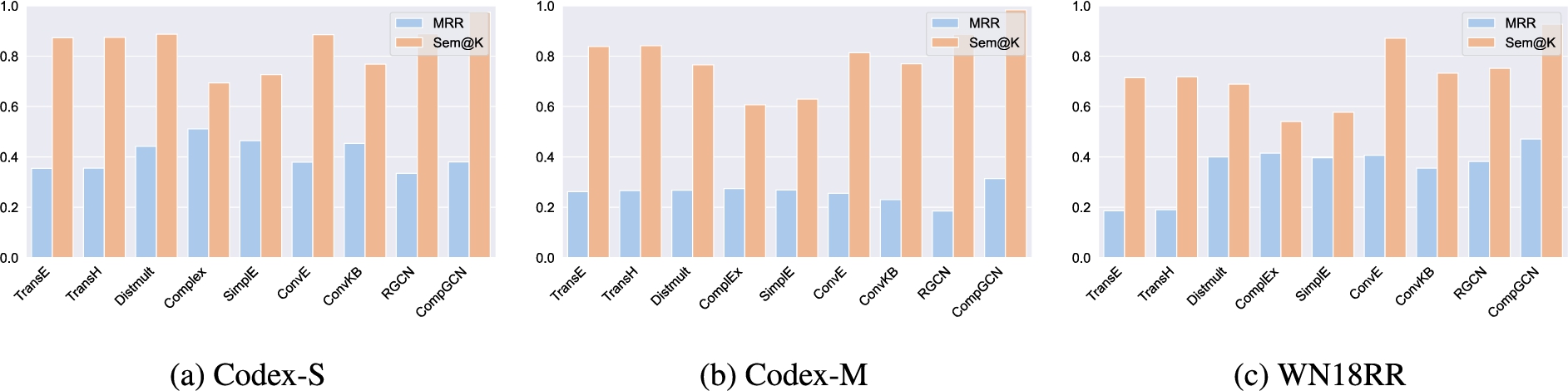

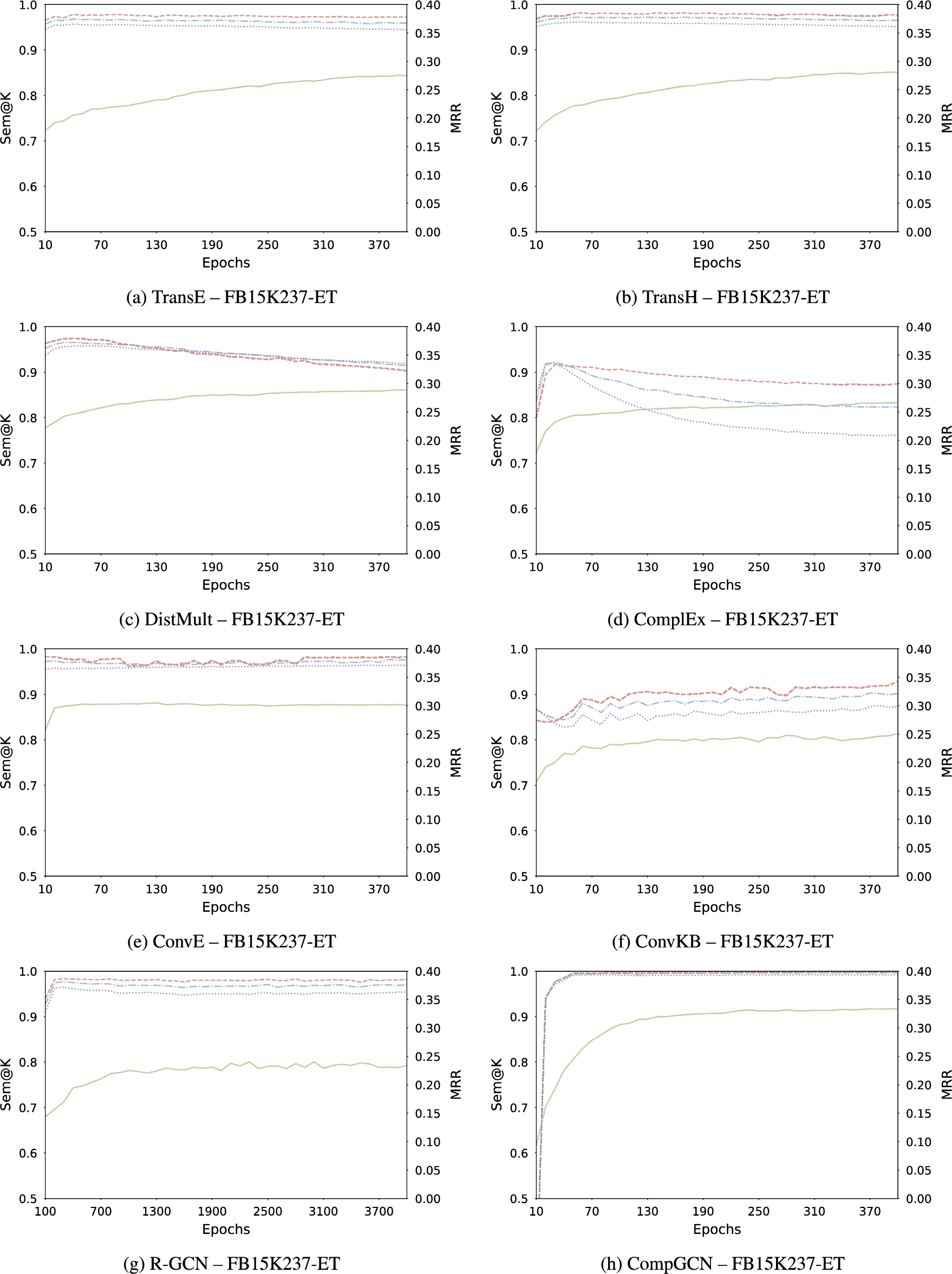

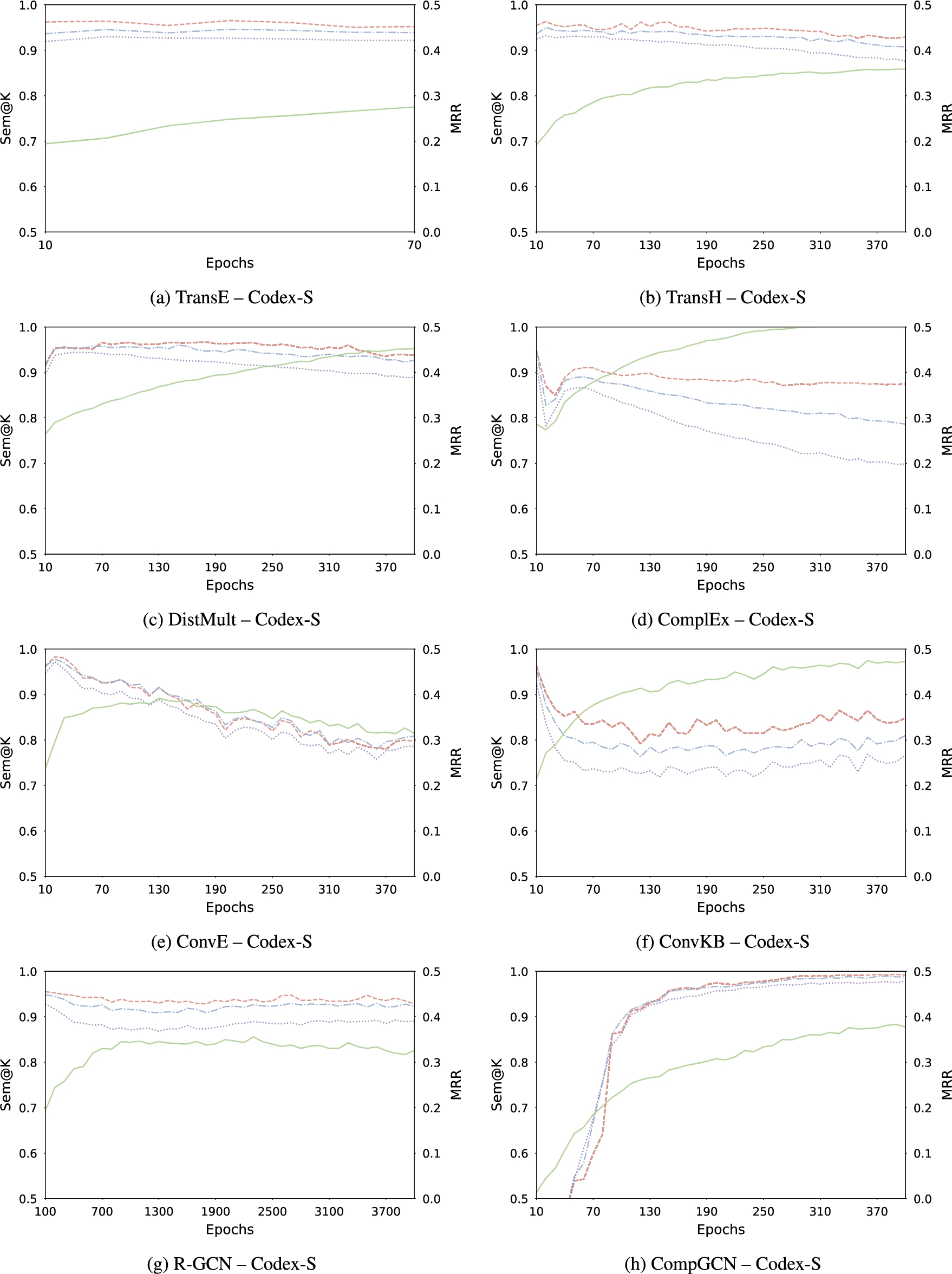

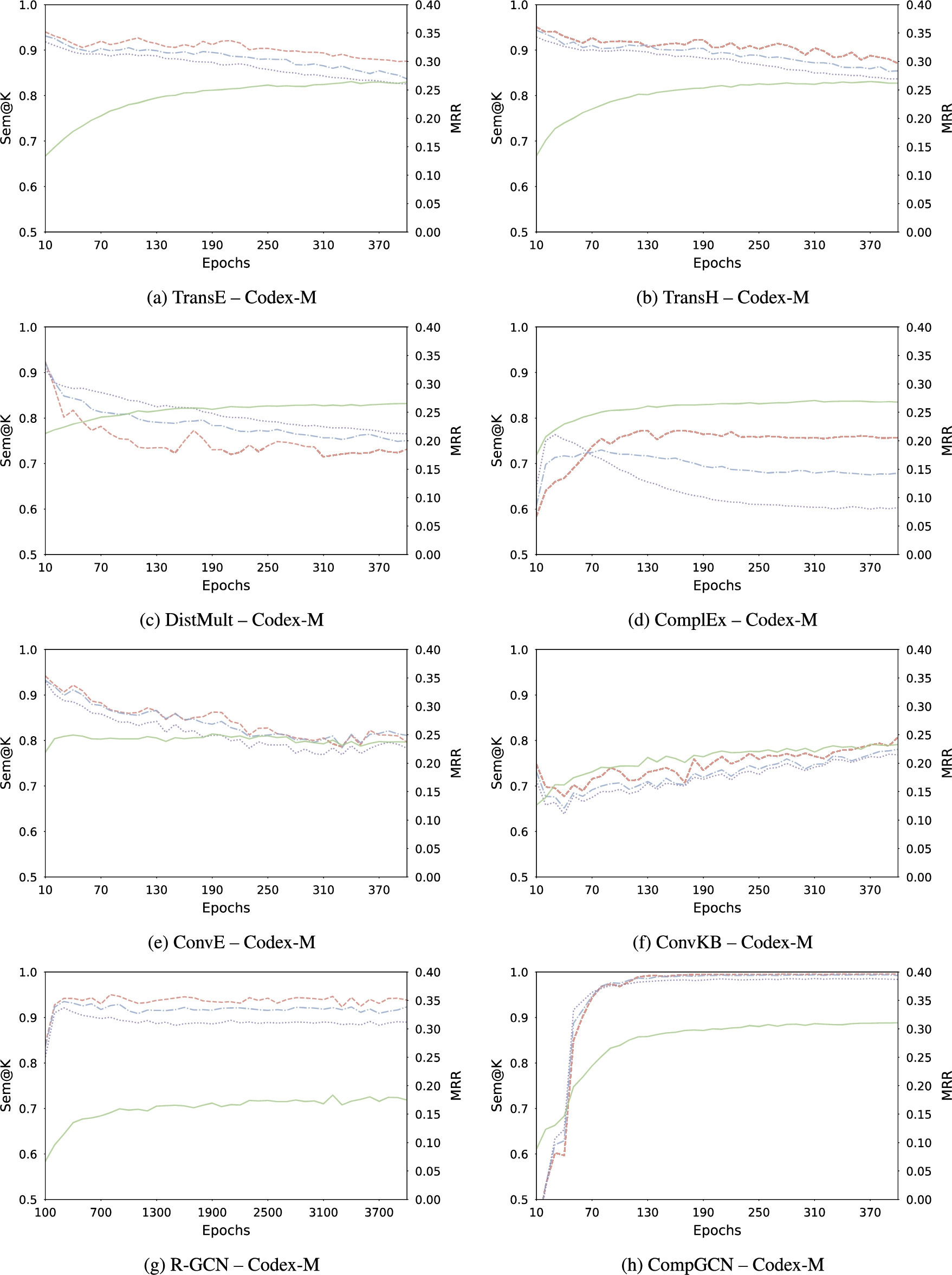

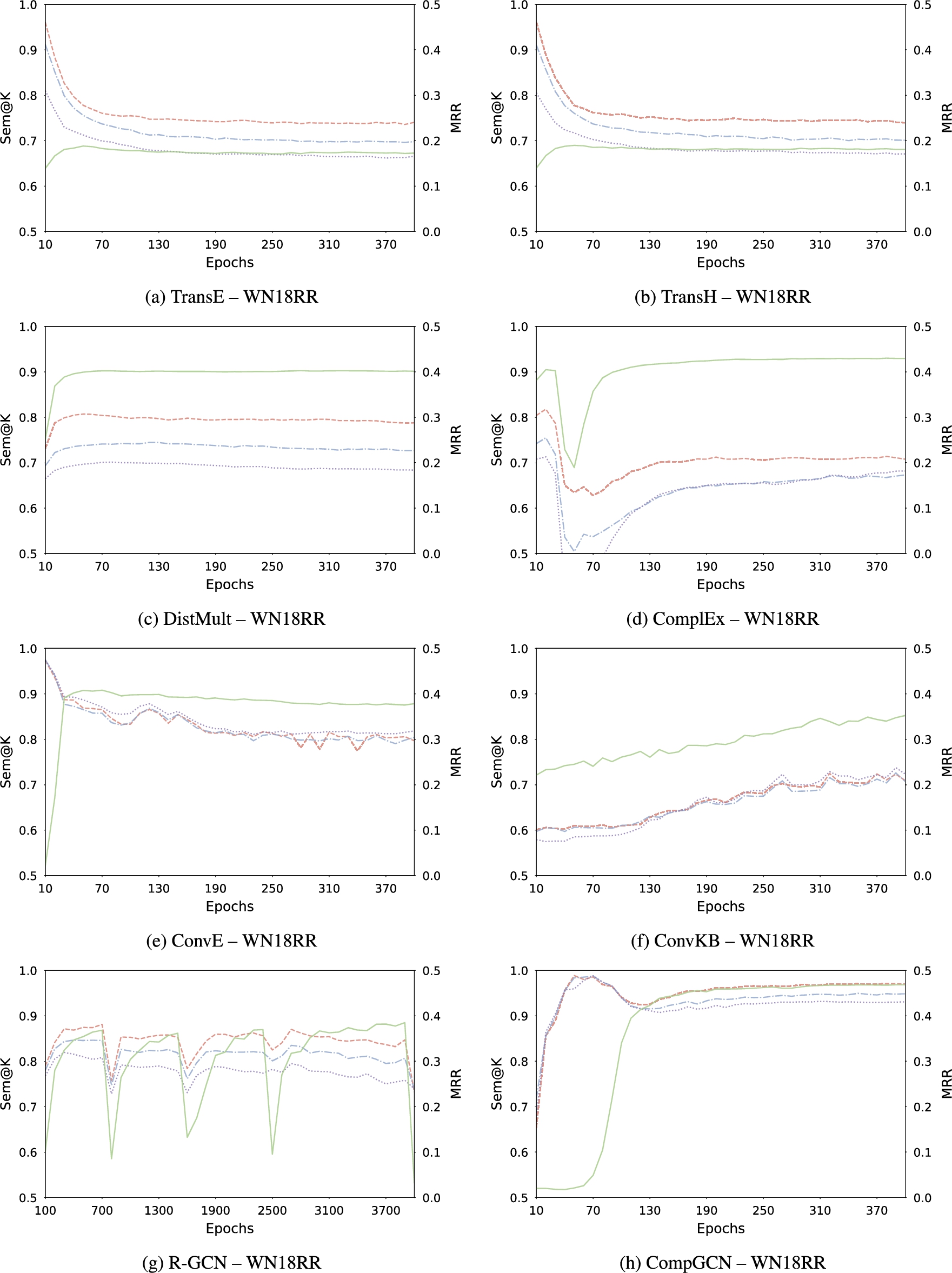

Fig. 8.

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

For certain models, rank-based metrics performance and semantic capabilities improve jointly. For others, the enhancement of their performance in terms of rank-based metrics comes at the expense of their semantic awareness. Interestingly, trends emerge relatively to families of models. First, we observe that a trade-off exists for semantic matching models. Results are particularly striking on FB15K237-ET (see Fig. 8), where it is obvious that after reaching the best Sem@K values after a few epochs, Sem@K values of DistMult and ComplEx quickly drop while MRR continues rising. Conversely, translational models are more robust to Sem@K degradation throughout the epochs. Even though the best achieved Sem@K values are also reported in the very first epochs, once these values are reached they remain stable for the remaining epochs of training. This might be due to the geometric nature of such KGEMs, which will organize the representation space so as to

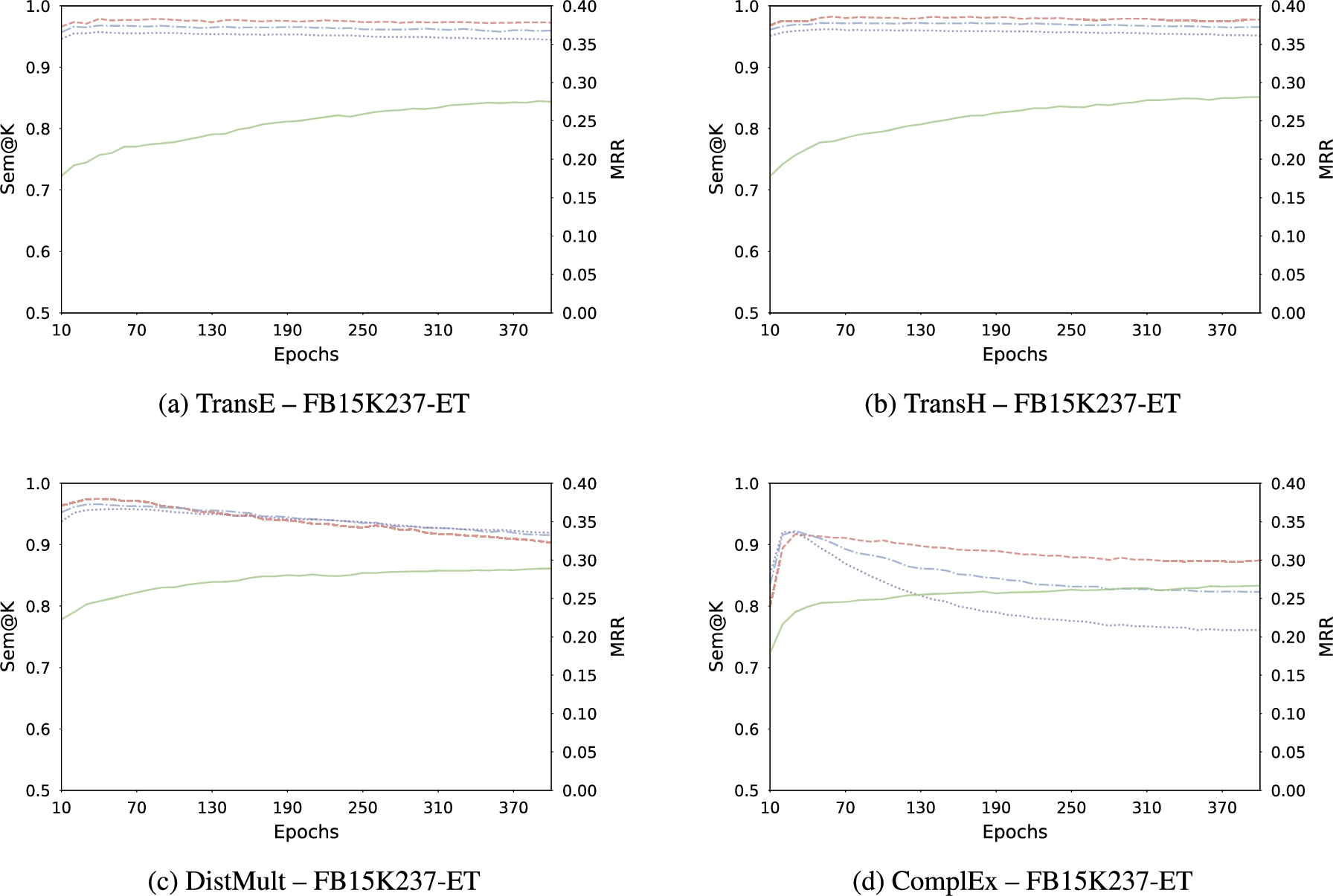

Plots of the joint evolution of MRR and Sem@K values show that most of the KGEMs reaches their best Sem@K values after a few number of epochs. This means that predictions get semantically valid in the early stages of training. As previously mentioned, Sem@K then usually start to decrease, as it has been noted for semantic matching models in particular. To this respect, an excerpt of the head and tail predictions of DistMult on YAGO3-37K is depicted in Fig. 9. Even though the ground-truth entity does not show up in neither the head nor the tail top-K list, we clearly see that after only 30 epochs of training, predictions made by DistMult are more meaningful than after 400 epochs of training. This relative trade-off between making semantically valid predictions and predictions that comprise the ground-truth entity higher in the top-K list calls for finding a compromise in terms of training. The LP task is usually addressed in terms of rank-based metrics only, hence the choice of performing more and more training epochs so as to find the optimal KGEM in terms of MRR and Hits@K. However, as discussed in the present work, adding training steps may improve KGEM performance at the expense of its semantic awareness. In many cases, rank-based metrics values only slightly increase, whereas Sem@K values drastically drop. For instance, comparing MRR vs. Sem@K evolution of DistMult on FB15K237-ET (Fig. 10c), we clearly see that after a moderate number of epochs, any additional epoch of training only provides a very slight improvement in terms of MRR, while it is very detrimental to Sem@K values. Depending on the use case, such a decline in the semantic capabilities of the model is not desirable, and a compromise is to be found between training more to increase KGEM predictive performance and stopping training early enough so as not to deteriorate its semantic awareness too much.

Fig. 9.

Top-ten ranked entities for head and tail predictions at epochs 30 and 400 for a sample triple from YAGO3-37K. Green, blue and white cells respectively denote the ground-truth entity, entities other than the ground-truth and semantically valid, and entities other than the ground-truth and semantically invalid. In this case, semantic validity is based on the domain and range of the relation <hasCapital>.

6.3.On the use of Sem@K for different kinds of KGs

As reported in Table 1, KGs based upon a schema and a class hierarchy are candidates for the computation of all the versions of Sem@K. For the schema-defined KGs used in our experiments, we choose to report values regarding all these metrics so as to enable multi-view comparisons across models. From Table 8 it can be clearly seen that the relative superiority of models is consistent throughout the different Sem@K definitions. From a higher perspective, this means that even for schema-defined KGs with a hierarchy class, Sem@K[ext] is already a good proxy. This may be a good option to only rely on the Sem@K[ext] whenever the computation of Sem@K[base] is too expensive, due to the entity type checking part. This is even more true for Sem@K[wup], which requires an additional step of semantic relatedness computation.

7.Discussion

Three major research questions have been formulated in Section 1. Based on the analysis presented in Section 6, we discuss each research question individually. We ultimately discuss the potential for further considerations of semantics into KGEMs.

7.1.RQ1: How semantic-aware agnostic KGEMs are?

From a coarse-grained viewpoint, we noted that KGEMs trained in an agnostic way prove capable of giving higher scores to semantically valid triples. However, disparities exist between models. Interestingly, these disparities seem to derive from the family of such models. Globally, translational models and GNNs – represented by R-GCN and CompGCN in this work – provide promising results. It appears that the two aforementioned families of KGEMs are better able than semantic matching models (DistMult, ComplEx, SimplE) at recovering the semantics of entities and relations to give higher score to semantically valid triples. In fact, semantic matching models are almost systematically the worst performing models in terms of semantic awareness. From a dynamic standpoint, it is worth noting the high semantic capabilities of KGEMs reached during the first epochs of training. In most cases, this is even during the first epochs that the optimal semantic awareness is attained.

7.2.RQ2: How KGEM semantic awareness’ evaluation should adapt to the typology of KGs?

Drawing on the initial version of Sem@K as presented in [21] – referred to herein as Sem@K[base] – an issue is quickly encountered when it comes to schemaless KGs, which do not contain any rdfs:domain (resp. rdfs:range) clause to indicate the class that candidate heads (resp. tails) should belong to. Our work introduces Sem@K[ext] – a new version of Sem@K that overcomes the aforementioned limitation. In addition, even with schema-defined KGs, Sem@K[base] is not necessarily sufficient in itself. This metric can be further enriched whenever a KG comes with a class hierarchy. In Section 4.4, we integrate class hierarchy into Sem@K by means of a similarity measure between concepts. We subsequently provide an example using the Wu-Palmer similarity score. The resulting Sem@K[wup] is used in the experiments in Section 6 and provide a finer-grained measure of KGEMs semantic awareness.

7.3.RQ3: Does the evaluation of KGEM semantic awareness offers different conclusions on the relative superiority of some KGEMs?

A major finding is that models performing well with respect to rank-based metrics are not necessarily the most competitive regarding their semantic capabilities. We previously noted that translational models globally showcase better Sem@K values compared to semantic matching models. Considering MRR and Hits@K, the opposite conclusion is often drawn. Hence, the performance of KGEMs in terms of rank-based metrics is not indicative of their semantic capabilities. The only exception that might exist is for GNNs that perform well both in terms of rank-based metrics and semantic-oriented measures.

The answers provided to the research questions also lead to consider new matters. As evidenced in Table 8 and Table 9, some KGs are more challenging with regard to Sem@K results. Due to its tailored extraction strategy that purposedly favored difficult relations to feature in the validation and test sets, YAGO4-19K is the schema-defined KG with the lowest achieved Sem@K. This observation raises a deeper question: what characteristics of a KG make it inherently challenging for KGEMs to recover the semantics of entities and relations? An extensive study of the influence of KGs characteristics on the semantic capabilities of KGEMs would require to benchmark them on a broad set of KGs with varying dimensions, so as to determine those that are the most prevalent. Such characteristics can be the total number of relations, the average number of instances per class, or a combination of different factors. We leave this experimental study for future work.

Recall that this work is motivated by the possibility of going beyond a mere assessment of KGEM performance regarding rank-based metrics. We showed that these metrics only evaluate one aspect of such models, somehow providing a partial view on the quality of KGEMs. Our proposal for further assessing KGEM semantic capabilities aims at diving deeper into their predictive expressiveness and measuring to what extent their predictions are semantically valid. However, this second evaluation component does not shed full light on the respective KGEM peculiarities. Other evaluation components may be added, such as the storage and computational requirements of KGEMs [51,69] and the environmental impact of their training and testing procedure [50]. Furthermore, the explainability of KGEMs is another dimension that deserves great attention [55,83].

7.4.Towards further considerations of semantics in knowledge graph embeddings models

The Sem@K metric presented in this work allows for a more comprehensive evaluation of KGEMs. Based on domains and ranges of relations, Sem@K assesses to what extent the predictions of a model are semantically valid. The present work constitutes one of several stepping stones toward the further consideration of ontological and semantic information in KGEM design and evaluation.

It should be noted that due to the only consideration of domains and ranges, Sem@K cannot indicate whether predictions are logically consistent with other constraints posed by the ontology. This is in contrast with Inc@K presented in [23] that takes a broader set of ontological axioms into account. However, Inc@K and Sem@K intrinsically assess distinct dimensions of predictions. While the former is concerned with the logical consistency of predictions, the latter focuses on whether these predictions are semantically valid. For instance, an ontology can specify that City is the range of livesIn, that Seattle is a City but not a Capital, and that entities of type President should be linked to a Capital through the relation livesIn. Hence, it would still be meaningful and semantically correct to predict that (BarackObama,livesIn,Seattle). However, this prediction is not logically consistent w.r.t. ontology specifications. Inc@K and SemK thus consider triples at different levels: while a given triple can be meaningful and semantically correct on its own, its combination with other triples may not be semantically valid or consistent with the ontology. In future work, we will consider more expressive ontologies and see how the broad collection of axioms that constitute them can be incorporated into Sem@K.

KGEMs evaluated in this work are all agnostic to ontological information in their design. However, some models that consider or ensure specific ontological or logical properties exist. For example, HAKE [84] is constructed with the purpose of preserving hierarchies, Logic Tensor Networks [3] are designed to ensure logical reasoning, and the training of TransOWL and TransROWL [11] is enriched with additional triples deduced from, e.g., inverse predicates or equivalent classes. Because of the integration of semantic information in their design or training, one could wonder if they present improved semantic awareness compared to agnostic models. Additionally, KGEMs can also be used to predict triples that represent class instantiations. A possible extension of the present work thus consists in studying whether predicted links and class instantiations are consistent and lead to increased Sem@K values. This would further qualify and highlight the semantic awareness and the consistency of predictions of KGEMs. We leave these questions for future work.

8.Conclusion

In this work, we consider the link prediction task and extend our previously introduced Sem@K metric to measure the ability of KGEMs to assign higher scores to triples that are semantically valid. In particular, to adapt to different types of KGs (e.g., schemaless, class hierarchy), we introduce Sem@K[base], Sem@K[ext], or Sem@K[wup]. Compared with the traditional evaluation approach that solely relies on rank-based metrics, we show that the evaluation procedure is enhanced with the addition of semantic-oriented metrics that bring an additional perspective on KGEM quality. Our experiments with different types of KGs highlight that there is no clear correlation between the performance of KGEMs in terms of traditional rank-based metrics versus their performance regarding semantic-oriented ones. In some cases, however, a trade-off does exist. Consequently, this calls for monitoring KGEM training under more scrutiny. Our experiments also point out that most of the conclusions that have been drawn actually hold at the level of families of models.

In future work, we will conduct experiments considering a broader array of KGEM families (e.g., KGEMs that include semantics) and propose evaluation metrics that consider additional and more expressive ontological constraints.

Notes

6 SPARQL queries were fired against DBpedia as of November 9, 2022.

Acknowledgements

This work is supported by the AILES PIA3 project (see https://www.projetailes.com/). Experiments presented in this paper were carried out using the Grid’5000 testbed, supported by a scientific interest group hosted by Inria and including CNRS, RENATER and several Universities as well as other organizations (see https://www.grid5000.fr).

Appendices

Appendix A.

Appendix A.Hyperparameters

For datasets with no reported optimal hyperparameters, grid-search based on curated hyperpamaters were performed. The full hyperparameter space is provided in Table 6. Chosen hyperparameters for each pair of KGEM and dataset are provided in Table 7.

Table 6

Hyperparameter search space

| Hyperparameters | Range |

| Batch Size | |

| Embedding Dimension | |

| Regularizer Type | |

| Regularizer Weight | |

| Learning Rate |

Table 7

Chosen hyperparameters for schema-defined and schemaless KGs used in the experiments.

| Model | Hyperparameters | FB15K237-ET | DB93K | YAGO3-37K | YAGO4-19K | Codex-S | Codex-M | WN18RR |

| TransE | 512 | 256 | 256 | 512 | 128 | 128 | 512 | |

| d | 100 | 200 | 150 | 100 | 100 | 100 | 100 | |

| λ | ||||||||

| TransH | 512 | 256 | 256 | 512 | 128 | 128 | 512 | |

| d | 100 | 200 | 150 | 100 | 100 | 100 | 100 | |

| λ | ||||||||

| DistMult | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | |

| d | 100 | 200 | 150 | 100 | 100 | 100 | 100 | |

| λ | 0 | 0 | 0 | 0 | ||||

| ComplEx | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | |

| d | 100 | 200 | 150 | 100 | 100 | 100 | 100 | |

| λ | ||||||||

| SimplE | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | |

| d | 100 | 200 | 150 | 100 | 100 | 100 | 100 | |

| λ | 0 | 0 | ||||||

| ConvE | 512 | 256 | 256 | 512 | 512 | 512 | 512 | |

| d | 200 | 200 | 200 | 200 | 200 | 200 | 200 | |

| λ | ||||||||

| ConvKB | 512 | 256 | 256 | 512 | 512 | 512 | 512 | |

| d | 100 | 100 | 100 | 100 | 100 | 100 | 100 | |

| λ | ||||||||

| R-GCN | d | 500 | 500 | 500 | 500 | 500 | 500 | 500 |

| λ | ||||||||

| CompGCN | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | 1024 | |

| d | 200 | 200 | 200 | 200 | 200 | 200 | 200 | |

| λ | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Appendix B.

Appendix B.Results achieved with the best reported hyperparameters

Results achieved with the best reported hyperparameters are presented in Tables 8 and 9.

Table 8

Rank-based and semantic-based results achieved at the best epoch in terms of MRR on the schema-defined knowledge graphs. Bold fonts indicate which model performs best with respect to a given metric

| Rank-based | Sem@K[base] | Sem@K[wup] | Sem@K[ext] | |||||||||||

| Model Family | Model | MRR | H@1 | H@3 | H@10 | S@1 | S@3 | S@10 | S@1 | S@3 | S@10 | S@1 | S@3 | S@10 |

| (a) FB15K237-ET | ||||||||||||||

| Geometric | TransE | .273 | .182 | .302 | .453 | .978 | .964 | .949 | .983 | .972 | .961 | .888 | .870 | .845 |

| TransH | .281 | .187 | .306 | .458 | .981 | .968 | .955 | .986 | .976 | .966 | .896 | .873 | .855 | |

| Semantic Matching | DistMult | .289 | .206 | .313 | .456 | .907 | .919 | .922 | .919 | .930 | .934 | .842 | .853 | .849 |

| ComplEx | .267 | .185 | .292 | .431 | .874 | .823 | .761 | .897 | .856 | .804 | .822 | .770 | .7005 | |

| SimplE | .257 | .180 | .275 | .416 | .895 | .878 | .848 | .911 | .896 | .869 | .839 | .817 | .776 | |

| Convolutional | ConvE | .310 | .211 | .337 | .490 | .972 | .970 | .961 | .977 | .973 | .968 | .883 | .880 | .870 |

| ConvKB | .251 | .159 | .261 | .411 | .918 | .897 | .872 | .935 | .918 | .899 | .842 | .812 | .781 | |

| R-GCN | .238 | .153 | .257 | .412 | .980 | .970 | .953 | .985 | .976 | .961 | .902 | .889 | .861 | |

| CompGCN | ||||||||||||||

| (b) DB93K | ||||||||||||||

| Geometric | TransE | .233 | .145 | .275 | .397 | .985 | .975 | .961 | .991 | .987 | .979 | .767 | .730 | .689 |

| TransH | .236 | .147 | .278 | .399 | .769 | .739 | .694 | |||||||

| Semantic Matching | DistMult | .261 | .202 | .287 | .369 | .865 | .831 | .790 | .890 | .865 | .833 | .716 | .670 | .617 |

| ComplEx | .287 | .213 | .325 | .417 | .941 | .917 | .877 | .955 | .937 | .907 | .815 | .767 | .698 | |

| SimplE | .252 | .202 | .274 | .339 | .896 | .871 | .837 | .915 | .895 | .867 | .774 | .719 | .659 | |

| Convolutional | ConvE | .256 | .183 | .289 | .392 | .871 | .862 | .862 | .882 | .874 | .874 | .764 | .750 | .734 |

| ConvKB | .178 | .121 | .199 | .283 | .883 | .895 | .881 | .902 | .895 | .881 | .742 | .702 | .659 | |

| R-GCN | .208 | .144 | .233 | .319 | .969 | .959 | .945 | .979 | .972 | .961 | .819 | .785 | .740 | |

| CompGCN | .976 | .967 | .991 | .982 | .975 | |||||||||

| (c) YAGO3-37K | ||||||||||||||

| Geometric | TransE | .184 | .080 | .198 | .408 | .989 | .988 | .988 | .993 | .994 | .995 | .897 | .904 | .911 |

| TransH | .187 | .091 | .199 | .415 | .995 | .993 | .993 | .995 | .997 | .997 | .901 | .911 | .925 | |

| Semantic Matching | DistMult | .225 | .112 | .251 | .465 | .885 | .940 | .959 | .921 | .962 | .980 | .795 | .851 | .886 |

| ComplEx | .430 | .250 | .551 | .780 | .740 | .820 | .859 | .911 | .935 | .950 | .662 | .735 | .794 | |

| SimplE | .311 | .081 | .468 | .749 | .377 | .733 | .866 | .786 | .901 | .944 | .310 | .640 | .777 | |

| Convolutional | ConvE | .933 | .923 | .910 | .977 | .977 | .975 | .893 | .879 | .871 | ||||

| ConvKB | .305 | .162 | .352 | .631 | .979 | .979 | .978 | .990 | .990 | .990 | .899 | .896 | .904 | |

| R-GCN | .115 | .046 | .110 | .254 | .993 | .994 | .993 | .995 | .996 | .997 | .933 | .932 | .928 | |

| CompGCN | .399 | .269 | .464 | .663 | ||||||||||

| (d) YAGO4-19K | ||||||||||||||

| Geometric | TransE | .749 | .651 | .833 | .901 | .975 | .890 | .843 | .980 | .914 | .870 | .844 | .647 | .547 |

| TransH | .752 | .656 | .829 | .898 | .979 | .848 | .656 | .564 | ||||||

| Semantic Matching | DistMult | .863 | .839 | .881 | .898 | .931 | .687 | .534 | .933 | .686 | .551 | .901 | .556 | .376 |

| ComplEx | .890 | .881 | .897 | .903 | .923 | .627 | .479 | .923 | .635 | .496 | .909 | .524 | .349 | |

| SimplE | .808 | .757 | .848 | .883 | .891 | .646 | .500 | .894 | .654 | .516 | .840 | .530 | .367 | |

| Convolutional | ConvE | .901 | .891 | .913 | .977 | .858 | .844 | .977 | .869 | .854 | .948 | .731 | .709 | |

| ConvKB | .828 | .772 | .883 | .908 | .865 | .614 | .564 | .870 | .639 | .590 | .793 | .460 | .361 | |

| R-GCN | .893 | .885 | .896 | .903 | .969 | .832 | .727 | .970 | .841 | .741 | .930 | .670 | .522 | |

| CompGCN | .906 | .862 | .981 | .912 | .870 | |||||||||

Table 9

Rank-based and semantic-based results achieved at the best epoch in terms of MRR on the schemaless knowledge graphs. Bold fonts indicate which model performs best with respect to a given metric

| Rank-based | Sem@K[ext] | |||||||

| Model Family | Model | MRR | H@1 | H@3 | H@10 | S@1 | S@3 | S@10 |

| (a) Codex-S | ||||||||

| Geometric | TransE | .354 | .223 | .409 | .620 | .927 | .900 | .873 |

| TransH | .355 | .225 | .410 | .621 | .928 | .901 | .875 | |

| Semantic Matching | DistMult | .442 | .336 | .487 | .661 | .935 | .919 | .887 |

| ComplEx | .872 | .786 | .694 | |||||

| SimplE | .464 | .368 | .514 | .641 | .876 | .789 | .727 | |

| Convolutional | ConvE | .379 | .259 | .439 | .610 | .912 | .913 | .886 |

| ConvKB | .453 | .361 | .493 | .632 | .858 | .806 | .769 | |

| R-GCN | .335 | .225 | .381 | .556 | .936 | .921 | .889 | |

| CompGCN | .380 | .268 | .427 | .597 | ||||

| (b) Codex-M | ||||||||

| Geometric | TransE | .262 | .188 | .284 | .405 | .889 | .855 | .839 |

| TransH | .266 | .190 | .291 | .415 | .894 | .862 | .842 | |

| Semantic Matching | DistMult | .268 | .199 | .291 | .402 | .726 | .749 | .765 |

| ComplEx | .274 | .213 | .298 | .386 | .768 | .687 | .608 | |

| SimplE | .269 | .212 | .289 | .378 | .799 | .713 | .629 | |

| Convolutional | ConvE | .255 | .184 | .280 | .395 | .863 | .839 | .814 |

| ConvKB | .230 | .156 | .250 | .376 | .797 | .771 | .770 | |

| R-GCN | .185 | .110 | .201 | .340 | .944 | .920 | .887 | |

| CompGCN | ||||||||

| (c) WN18RR | ||||||||

| Geometric | TransE | .186 | .032 | .303 | .455 | .770 | .756 | .715 |

| TransH | .191 | .034 | .306 | .458 | .773 | .760 | .717 | |

| Semantic Matching | DistMult | .399 | .372 | .413 | .444 | .795 | .732 | .688 |

| ComplEx | .430 | .400 | .446 | .487 | .715 | .669 | .673 | |

| SimplE | .397 | .375 | .406 | .438 | .690 | .598 | .673 | |

| Convolutional | ConvE | .406 | .375 | .422 | .459 | .865 | .854 | .871 |

| ConvKB | .356 | .302 | .391 | .444 | .712 | .713 | .732 | |

| R-GCN | .382 | .345 | .402 | .441 | .836 | .797 | .752 | |

| CompGCN | ||||||||

Appendix C.

Appendix C.Evolution of MRR and Sem@K values with respect to the number of epochs

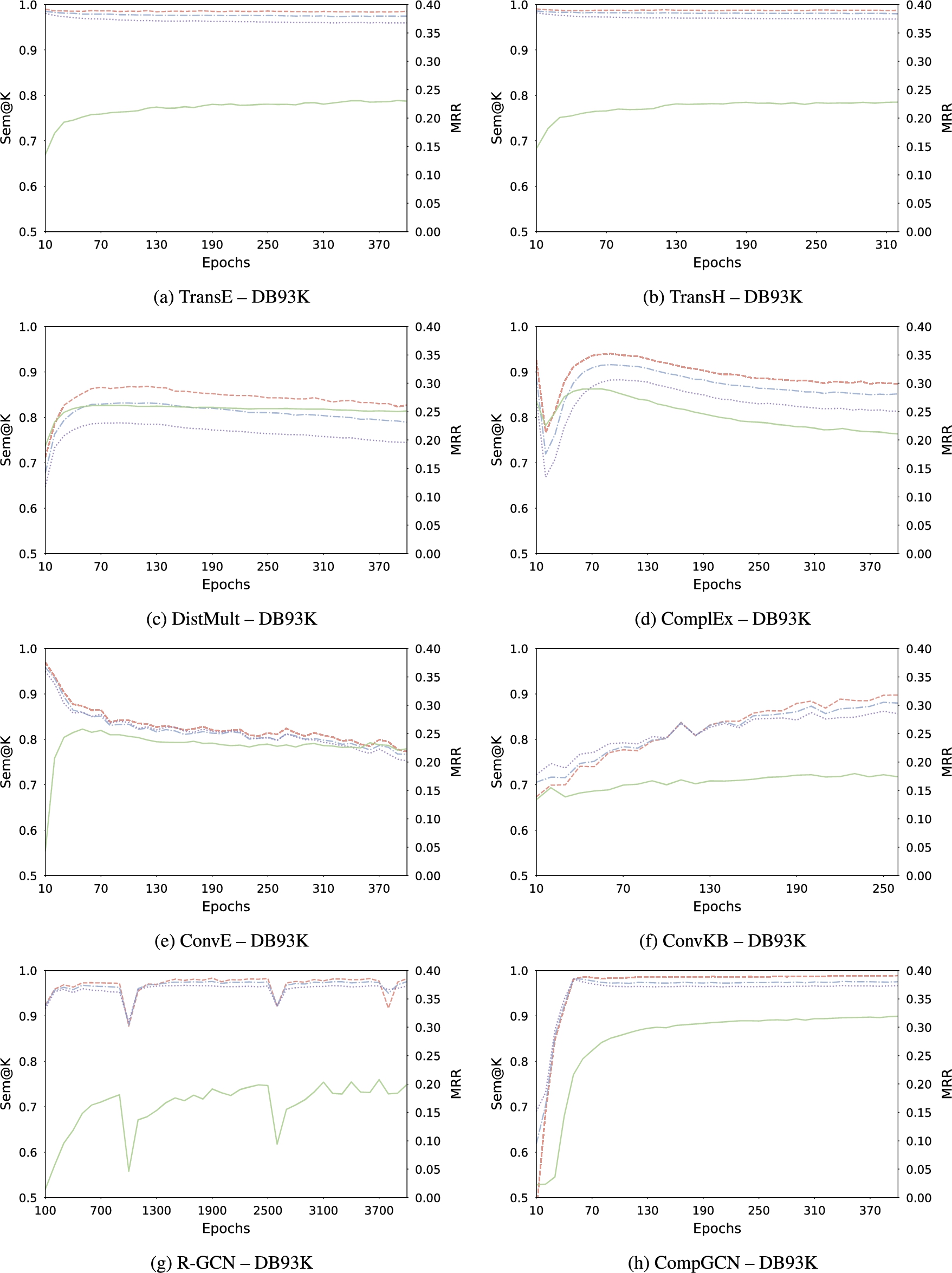

Fig. 10.

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

Fig. 11.

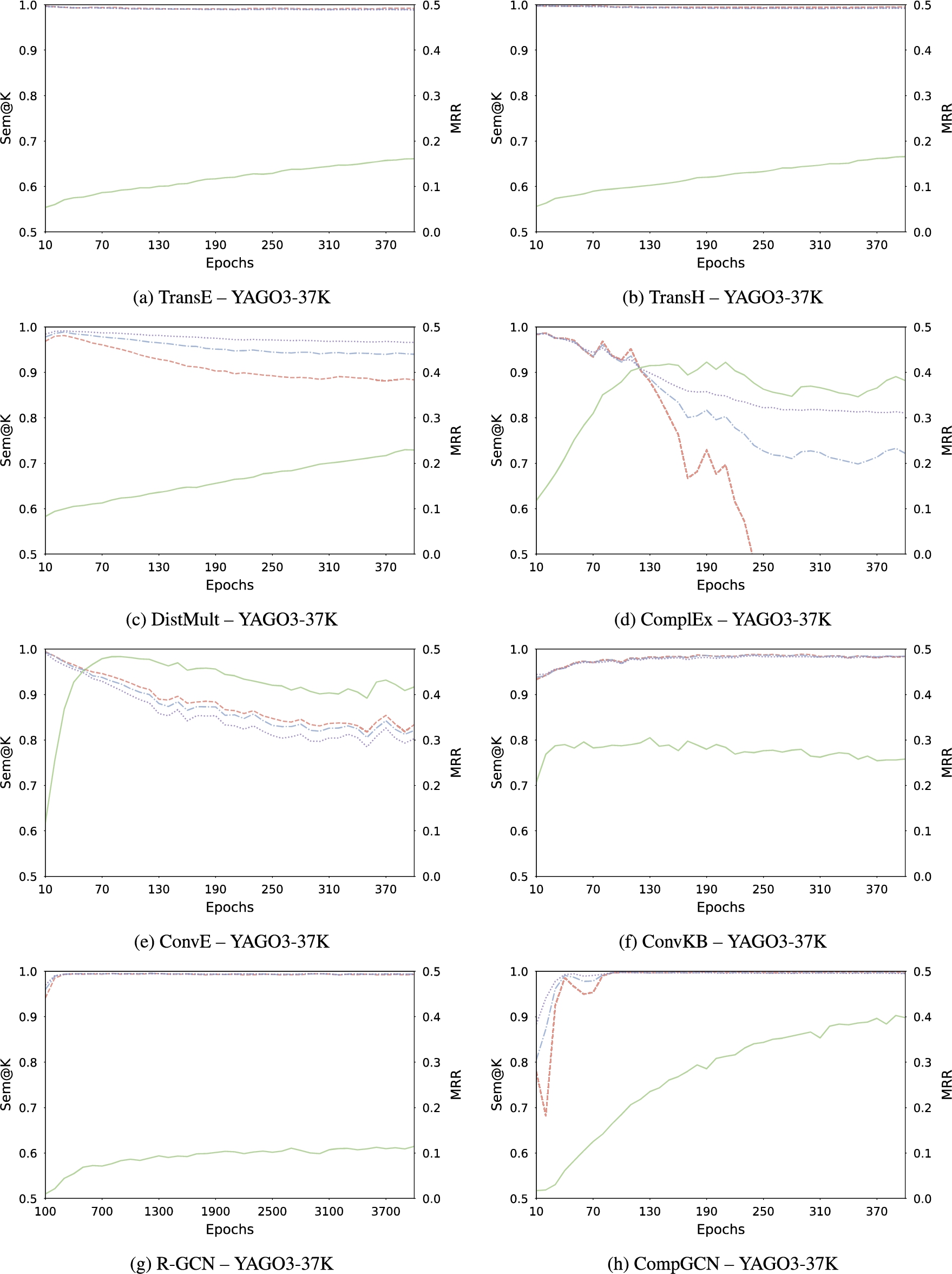

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

Fig. 12.

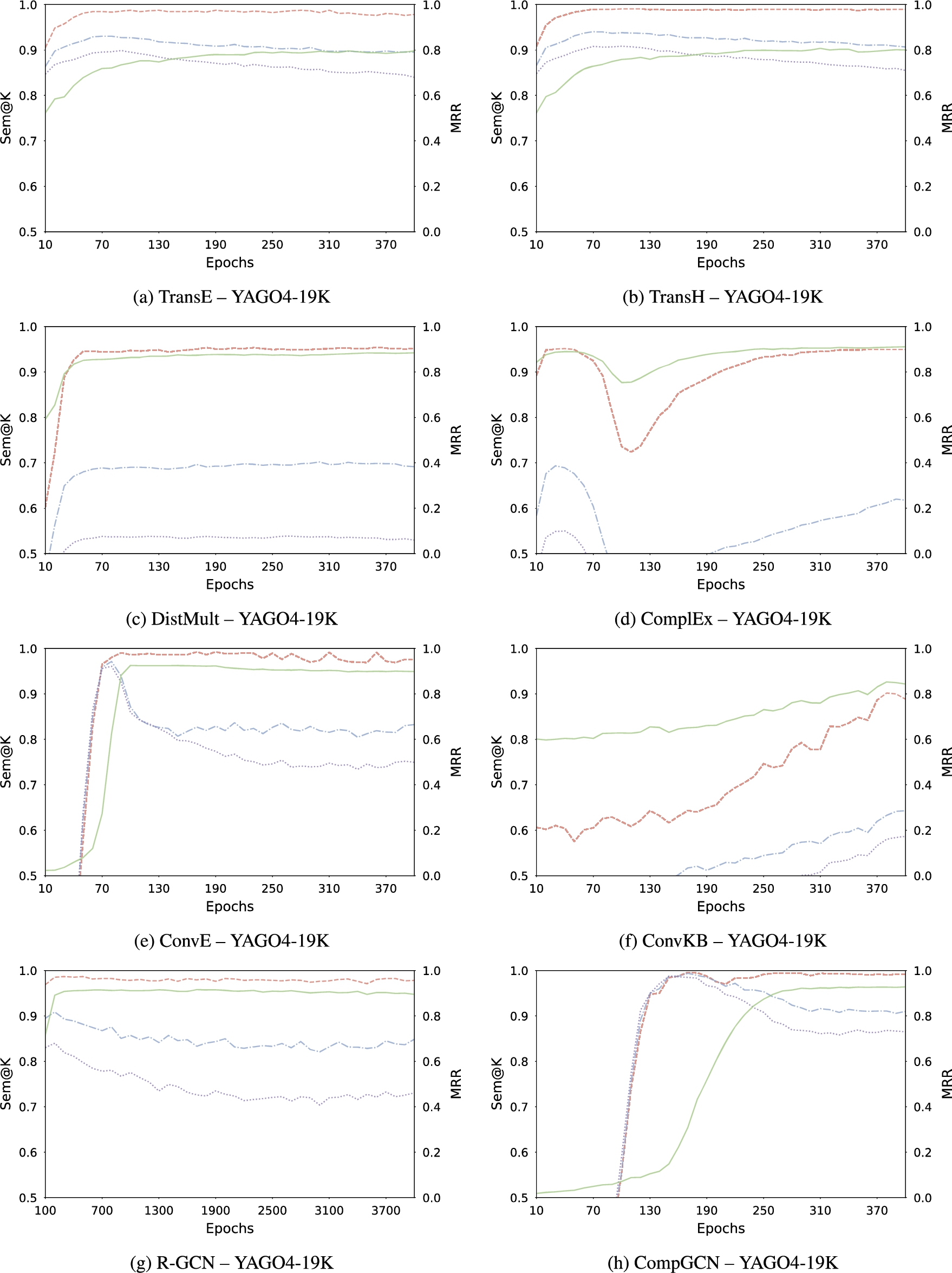

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

Fig. 13.

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

Fig. 14.

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

Fig. 15.

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

The evolution of MRR and Sem@K values with respect to the number of epochs is presented in Fig. 10, 11, 12, 13, 14, 15, and 16. For equity and clarity sakes, we choose to present 2 KGEMs for each family of model (translational models, semantic matching models, CNNs, and GNNs). Regarding semantic matching models, DistMult and ComplEx are chosen, as the evolution of their MRR and Sem@K values is less erratic than SimplE. The evolution of MRR and Sem@K values for SimplE are made available on the GitHub repository of the datasets.1010

Fig. 16.

Evolution of MRR (green —), Sem@1 (red - -), Sem@3 (blue - · -), and Sem@10 (purple

References

[1] | M. Ali, M. Berrendorf, C.T. Hoyt, L. Vermue, M. Galkin, S. Sharifzadeh, A. Fischer, V. Tresp and J. Lehmann, Bringing light into the dark: A large-scale evaluation of knowledge graph embedding models under a unified framework, IEEE Trans. Pattern Anal. Mach. Intell. 44: (12) ((2022) ), 8825–8845. doi:10.1109/TPAMI.2021.3124805. |

[2] | S. Auer, C. Bizer, G. Kobilarov, J. Lehmann, R. Cyganiak and Z.G. Ives, DBpedia: A nucleus for a web of open data, in: The Semantic Web, 6th International Semantic Web Conf., 2nd Asian Semantic Web Conf., ISWC + ASWC, Lecture Notes in Computer Science, Vol. 4825: , Springer, (2007) , pp. 722–735. |

[3] | S. Badreddine, A.S. d’Avila Garcez, L. Serafini and M. Spranger, Logic tensor networks, Artificial Intelligence 303: ((2022) ), 103649. doi:10.1016/j.artint.2021.103649. |

[4] | I. Balazevic, C. Allen and T.M. Hospedales, TuckER: Tensor factorization for knowledge graph completion, in: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, November 3–7, 2019, Association for Computational Linguistics, (2019) , pp. 5184–5193. doi:10.18653/v1/D19-1522. |

[5] | M. Berrendorf, E. Faerman, L. Vermue and V. Tresp, On the ambiguity of rank-based evaluation of entity alignment or link prediction methods, (2020) , arXiv preprint arXiv:2002.06914. |

[6] | K.D. Bollacker, C. Evans, P.K. Paritosh, T. Sturge and J. Taylor, Freebase: A collaboratively created graph database for structuring human knowledge, in: Proc. of the ACM SIGMOD International Conf. on Management of Data, ACM, (2008) , pp. 1247–1250. |

[7] | A. Bordes, N. Usunier, A. García-Durán, J. Weston and O. Yakhnenko, Translating embeddings for modeling multi-relational data, in: Conf. on Neural Information Processing Systems (NeurIPS), (2013) , pp. 2787–2795. |

[8] | Z. Cao, Q. Xu, Z. Yang, X. Cao and Q. Huang, Dual quaternion knowledge graph embeddings, in: Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, the Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, February 2–9, 2021, AAAI Press, (2021) , pp. 6894–6902, https://ojs.aaai.org/index.php/AAAI/article/view/16850. |

[9] | G. Chowdhury, M. Srilakshmi, M. Chain and S. Sarkar, Neural factorization for offer recommendation using knowledge graph embeddings, in: Proc. of the SIGIR Workshop on eCommerce, Vol. 2410: , (2019) . |

[10] | Z. Cui, P. Kapanipathi, K. Talamadupula, T. Gao and Q. Ji, Type-augmented relation prediction in knowledge graphs, in: Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, the Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, February 2–9, 2021, AAAI Press, (2021) , pp. 7151–7159, https://ojs.aaai.org/index.php/AAAI/article/view/16879. |

[11] | C. d’Amato, N.F. Quatraro and N. Fanizzi, Injecting background knowledge into embedding models for predictive tasks on knowledge graphs, in: The Semantic Web – 18th International Conference, ESWC 2021, Virtual Event, June 6–10, 2021, Proceedings, Lecture Notes in Computer Science, Vol. 12731: , Springer, (2021) , pp. 441–457. doi:10.1007/978-3-030-77385-4_26. |

[12] | R. Das, S. Dhuliawala, M. Zaheer, L. Vilnis, I. Durugkar, A. Krishnamurthy, A. Smola and A. McCallum, Go for a walk and arrive at the answer: Reasoning over paths in knowledge bases using reinforcement learning, in: 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30–May 3, 2018, Conference Track Proceedings, OpenReview.net, (2018) , https://openreview.net/forum?id=Syg-YfWCW. |

[13] | T. Dettmers, P. Minervini, P. Stenetorp and S. Riedel, Convolutional 2D knowledge graph embeddings, in: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th Innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), New Orleans, Louisiana, USA, February 2–7, 2018, AAAI Press, (2018) , pp. 1811–1818, https://www.aaai.org/ocs/index.php/AAAI/AAAI18/paper/view/17366. |

[14] | B. Ding, Q. Wang, B. Wang and L. Guo, Improving knowledge graph embedding using simple constraints, in: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, ACL 2018, Melbourne, Australia, July 15–20, 2018, Volume 1: Long Papers, Association for Computational Linguistics, (2018) , pp. 110–121. doi:10.18653/v1/P18-1011. |

[15] | I. Ferrari, G. Frisoni, P. Italiani, G. Moro and C. Sartori, Comprehensive analysis of knowledge graph embedding techniques benchmarked on link prediction, Electronics 11: (23) ((2022) ). |

[16] | L. Galárraga, C. Teflioudi, K. Hose and F.M. Suchanek, Fast rule mining in ontological knowledge bases with AMIE+, VLDB J. 24: (6) ((2015) ), 707–730. doi:10.1007/s00778-015-0394-1. |

[17] | L.A. Galárraga, C. Teflioudi, K. Hose and F.M. Suchanek, AMIE: Association rule mining under incomplete evidence in ontological knowledge bases, in: 22nd International World Wide Web Conference, WWW ’13, Rio de Janeiro, Brazil, May 13–17, 2013, International World Wide Web Conferences Steering Committee / ACM, (2013) , pp. 413–422. doi:10.1145/2488388.2488425. |

[18] | T.R. Gruber, A translation approach to portable ontology specifications, Knowl. Acquis. 5: (2) ((1993) ), 199–220. doi:10.1006/knac.1993.1008. |

[19] | C.T. Hoyt, M. Berrendorf, M. Gaklin, V. Tresp and B.M. Gyori, A unified framework for rank-based evaluation metrics for link prediction in knowledge graphs, (2022) , arXiv preprint arXiv:2203.07544. |

[20] | N. Hubert, P. Monnin, A. Brun and D. Monticolo, New strategies for learning knowledge graph embeddings: The recommendation case, in: EKAW – 23rd International Conf. on Knowledge Engineering and Knowledge Management, Springer, (2022) , pp. 66–80. doi:10.1007/978-3-031-17105-5_5. |

[21] | N. Hubert, P. Monnin, A. Brun and D. Monticolo, Knowledge graph embeddings for link prediction: Beware of semantics! in: DL4KG@ISWC 2022: Workshop on Deep Learning for Knowledge Graphs, Held as Part of ISWC 2022: The 21st International Semantic Web Conference, Virtual, China, (2022) . |

[22] | N. Jain, J. Kalo, W. Balke and R. Krestel, Do embeddings actually capture knowledge graph semantics? in: The Semantic Web – 18th International Conf., ESWC, LNCS, Vol. 12731: , Springer, (2021) , pp. 143–159. |

[23] | N. Jain, T. Tran, M.H. Gad-Elrab and D. Stepanova, Improving knowledge graph embeddings with ontological reasoning, in: The Semantic Web – International Semantic Web Conf. ISWC, Vol. 12922: , (2021) , pp. 410–426. |

[24] | G. Ji, S. He, L. Xu, K. Liu and J. Zhao, Knowledge graph embedding via dynamic mapping matrix, in: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing of the Asian Federation of Natural Language Processing, ACL 2015, July 26–31, 2015, Beijing, China, Volume 1: Long Papers, The Association for Computer Linguistics, (2015) , pp. 687–696. doi:10.3115/v1/p15-1067. |

[25] | S. Ji, S. Pan, E. Cambria, P. Marttinen and P.S. Yu, A survey on knowledge graphs: Representation, acquisition, and applications, IEEE Trans. Neural Networks Learn. Syst. 33: (2) ((2022) ), 494–514. doi:10.1109/TNNLS.2021.3070843. |

[26] | Y. Jia, Y. Wang, H. Lin, X. Jin and X. Cheng, Locally adaptive translation for knowledge graph embedding, in: Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, Arizona, USA, February 12–17, 2016, AAAI Press, (2016) , pp. 992–998, http://www.aaai.org/ocs/index.php/AAAI/AAAI16/paper/view/12018. |

[27] | X. Jiang, Q. Wang and B. Wang, Adaptive convolution for multi-relational learning, in: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, June 2–7, 2019, Volume 1 (Long and Short Papers), Association for Computational Linguistics, (2019) , pp. 978–987. doi:10.18653/v1/n19-1103. |

[28] | R. Kadlec, O. Bajgar and J. Kleindienst, Knowledge base completion: Baselines strike back, in: Proc. of the 2nd Workshop on Representation Learning for NLP, Rep4NLP@ACL, (2017) , pp. 69–74. doi:10.18653/v1/W17-2609. |