A conceptual model for ontology quality assessment

Abstract

With the continuous advancement of methods, tools, and techniques in ontology development, ontologies have emerged in various fields such as machine learning, robotics, biomedical informatics, agricultural informatics, crowdsourcing, database management, and the Internet of Things. Nevertheless, the nonexistence of a universally agreed methodology for specifying and evaluating the quality of an ontology hinders the success of ontology-based systems in such fields as the quality of each component is required for the overall quality of a system and in turn impacts the usability in use. Moreover, a number of anomalies in definitions of ontology quality concepts are visible, and in addition to that, the ontology quality assessment is limited only to a certain set of characteristics in practice even though some other significant characteristics have to be considered for the specified use-case. Thus, in this research, a comprehensive analysis was performed to uncover the existing contributions specifically on ontology quality models, characteristics, and the associated measures of these characteristics. Consequently, the characteristics identified through this review were classified with the associated aspects of the ontology evaluation space. Furthermore, the formalized definitions for each quality characteristic are provided through this study from the ontological perspective based on the accepted theories and standards. Additionally, a thorough analysis of the extent to which the existing works have covered the quality evaluation aspects is presented and the areas further to be investigated are outlined.

1.Introduction

An ontology is a formal representation of the concepts in a particular domain of knowledge and the relationships between those concepts [61]. More specifically, an ontology has been defined as “a formal, explicit specification of a shared conceptualization” in [123] by merging two prominent definitions, that of Gruber [61], “ontology is an explicit specification of a conceptualization”, and that of Borst [21], “an ontology is a formal specification of a shared conceptualization”. The conceptualization denotes an abstract and simplified view of the world that consists of the concepts expected in the world being represented and the relationships among them [121]. Thus, an ontology has also been defined as a representational vocabulary [61,103]. A formal specification is machine-interpretable, that is, a machine can understand and process the specified conceptualization. This enables an ontology to be used for several purposes, such as supporting meaningful communication among its agents/users, facilitating interoperability, knowledge sharing, and reuse among subsystems, and separating domain knowledge from operational knowledge [103]. These can be viewed as capabilities of ontologies [103,138] and, consequently, ontologies are increasingly incorporated into information systems.

However, the above capabilities would not become a reality unless a good-quality ontology is produced at the end of the development. This is because a single trivial internal quality issue can cause multiple quality issues in the end product, which may result in a high cost of debugging and fixing the issues and, in turn, a loss of consumers’ trust. For instance, it has been revealed that an ontology-based system in the medical domain caused a 55% loss of information due to one missing explicit definition in the ontology [56,57,60,89,151]. Thus, it is necessary to sufficiently evaluate both the quality of the content of the ontology during development and the ontology as a whole after development [56]. These have been viewed as two aspects of ontology evaluation in [101,105,154], namely intrinsic and extrinsic, which are discussed further in Section 3. Moreover, it has been revealed that the availability of a widely accepted methodology, model, or approach for the quality evaluation of ontologies would be beneficial for producing a quality ontology [105]. However, no such methodology, model, or approach has yet been established for ontology quality evaluation, unlike in related fields such as system and software engineering. For instance, the availability of widely agreed quality standards in software engineering such as ISO/IEC 9126 [78], ISO/IEC 9241-11 [76], ISO/IEC 25012 [75], ISO/IEC 25010 [77], and IEEE software quality standards [74] provides immense support in developing good-quality software.

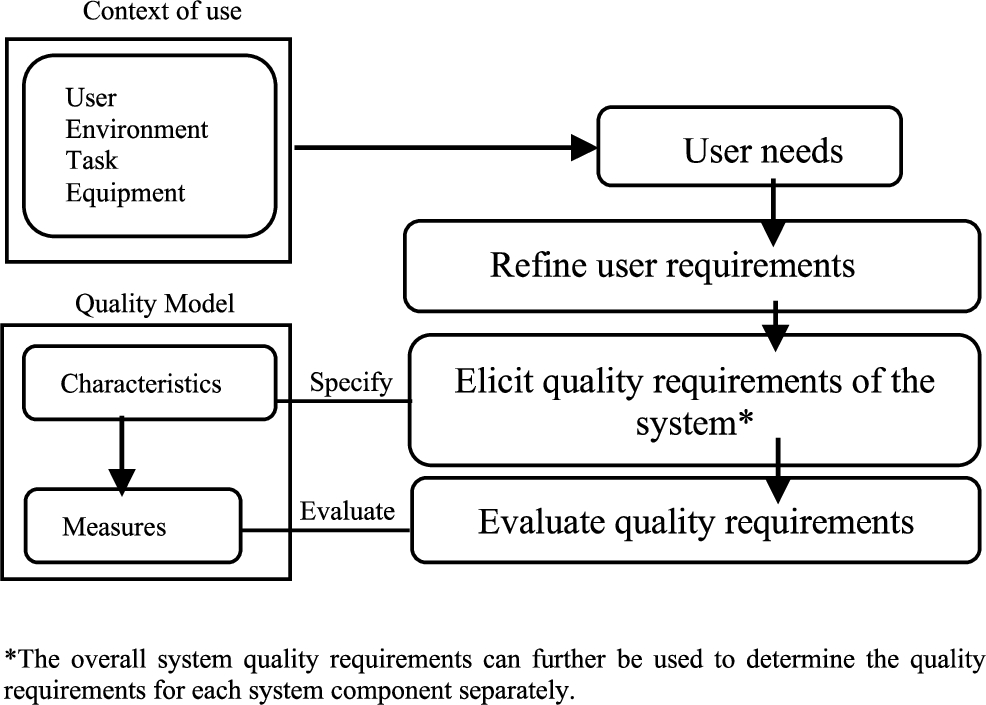

Fig. 1.

The abstraction process of specifying quality requirements.

Since this article is on the ontology quality aspect, first we examine quality from the general perspective. Quality is referred to as the fitness of a product or service or information for the needs of the intended users in a specified context [31]. Basically, the quality of a system indicates how far the system caters to user needs with respect to a particular context. The context (i.e., the context of use) defines the actual conditions under which a product (i.e., software/Information Systems (IS)) is used by intended users in performing a task (see Fig. 1). The context not only considers the technical environment of the system but also the user type (i.e., attitude, literacy, experience), the physical and social environment, the available equipment and tasks to be performed [31,127].

Quality characteristics are a set of properties that can be used to assess whether the intended user needs of a system or product are met. In software and system engineering, there is a set of standards [76–78], namely ISO/IEC 2501n, ISO/IEC 2502n and ISO/IEC 2503n [77], which describe how the quality characteristics to be evaluated are identified with respect to the user needs of a system. According to the standards, the quality requirements, which can also be viewed as a set of quality characteristics, should be elicited from user needs at the requirement specification phase [17,141] (see Fig. 1). To this end, a quality model, which consists of a proper set of quality characteristics and associated measures, plays a significant role. The quality model provides a framework for determining characteristics in relation to user needs [75,77,127]. The identified quality characteristics can be evaluated at the intrinsic and extrinsic levels of a product using their respective measures. This evaluation helps to ensure that the required quality is being achieved across product development. Figure 1 shows the abstraction process of deriving the quality requirements and how a quality model supports that process. The significance of a quality model is further elaborated in Section 3 with an example.

If we apply the process in Fig. 1 to the ontology context, it is possible to define the user as a person who interacts with an ontology-based IS. The context of use comprises the user type (as stated above), the environments (social, physical, technical) in which the users may be involved, and the task to be performed through the ontology. User needs are the set of requirements that are expected to be fulfilled by the ontology-based IS. Additionally, the quality requirements are a set of overall quality requirements for the ontology-based IS elicited from the user needs in a particular context of use. From these overall quality requirements, it is possible to derive the quality requirements related to the ontology in a system. Thereafter, the ontology quality requirements can be specified using quality characteristics with the support of an ontology quality model. This concept is discussed in further detail in Section 3 (see Fig. 5).

It can be understood that quality models play a significant role in determining the quality characteristics of a system. From the ontological point of view, several quality models have been proposed for ontology quality assessment. However, none of them is widely accepted in practice. This could be because some of the models, such as the semiotic metric suite [24], the quality model of Gangemi et al. [48,49], and OQuaRE [40], are generic. Thus, additional effort is required to customize them and to add absent characteristics that are significant to the specified context. Moreover, some of the quality models, such as OntoQualitas [114] and the quality model of Zhu et al. [110], are specific to a domain (i.e., domain-dependent). Therefore, these models are not guaranteed to work well for domains other than their specified domain. Consequently, researchers and practitioners face the following difficulties.

(i) Difficulty in determining the required set of quality characteristics and, in turn, the relevant quality measures to achieve the specified quality requirements [105].

(ii) Difficulty in differentiating the quality characteristics and quality measures as their definitions in the literature are vague and the terminologies have been used interchangeably [147]. This also has a negative impact on (i) above.

(iii) Inadequate knowledge in assessing the complete set of quality characteristics applicable to an ontology, since ontology quality assessment in practice has been limited to a certain set of characteristics, disregarding many other characteristics that have been theoretically proposed and may be applicable [32].

Thus, there is a clear need for a thorough analysis of the domain of ontology quality models and problems. Accordingly, a systematic review was performed to streamline the findings on the existing quality problems and thereby produce a conceptual quality model that is useful for researchers and practitioners to understand the characteristics, attributes, and measures to be considered in different aspects of ontology quality assessment. Moreover, in ontology development, an ontology can be developed entirely from scratch, reused from existing ontologies, or built through a combination of both. However, ontology quality assessment is performed against the same set of requirements (i.e., characteristics) specified in a particular context regardless of whether the developed ontology is built from scratch or reused [102]. Thus, the conceptual model proposed in this study is helpful for assessing the quality of ontologies irrespective of how they are developed (i.e., from scratch and/or reused). Furthermore, when discussing the conceptual quality model, we focus on web ontologies which are built upon one of the standard web ontology languages such as RDF(S) [136] and different variants of OWL [135]. However, some quality characteristics such as compliance, consistency, completeness and accuracy can also be associated with other ontology languages. Moreover, we do not distinguish between TBox (i.e., ontology structure) and ABox (i.e., knowledge base) when discussing the quality characteristics. The term ontology is used to refer to both TBox and ABox.

The rest of the article is structured as follows; Section 2 presents the survey methods that we have followed. Section 3 provides preliminaries and conceptualization of ontology quality assessment to understand the concepts that have been described in the rest of the sections. The overview of the existing ontology quality models is presented in Section 4. In Section 5, the quality characteristics, associated quality measures, and definitions for each quality characteristic are described. Section 6 provides a discussion of the findings of the survey. Finally, Section 7 discusses the important gaps related to ontology quality models/approaches and Section 8 concludes the survey with highlights of future works.

2.Survey methodology

Firstly, a traditional theoretical review was performed by retrieving the related ontology quality surveys and literature reviews, to explore ontology quality evaluation and then to identify the possible quality models, including significant ontology quality characteristics and measures, and the relations among the characteristics. A few attempts have been made to present such contributions [51,93,108,112,140]. However, we do not consider them comprehensive surveys, as they have not clearly defined the research gaps and questions that they addressed or the survey methodology that they followed. Furthermore, these surveys have provided only a general overview, and their discussions were limited to a set of characteristics proposed in [48,57,58,62,142]. In addition, a few comprehensive surveys on ontology evaluation have been identified (see Table 1), in which the main focus is on ontology evaluation in its broader aspects, not specifically on ontology quality problems.

Table 1

The existing survey studies in ontology evaluation

| Article | Databases | Scope | Results |

| Vrandecic, 2010 [142] | Not specified | Ontologies specified in Web Ontology languages | Presented the overview of domain- and task-independent evaluation of an ontology by emphasizing the quality assessment related to the aspects: vocabulary, syntax, structure, semantics, representation, and context. |

| Gurk et al, 2017 [95] | Three databases: ScienceDirect, IEEE Xplore Digital Library, ACM Digital Library (the selected articles have not been mentioned) | Ontologies specified in Web Ontology languages | Performed a survey to find the ontology quality metrics for a set of characteristics that are defined in the ISO/IEC 25012 Data Quality Standard (this work is limited only to the inherent quality and inherent-system quality). |

| Degbelo, 2017 [32] | Semantic Web Journal, Journal of Web Semantics (2003-2017) | Ontologies specified in Web Ontology languages | Performed the review on quality criteria and strategies which have been used in the design and implementation stages of ontology development. Presented the gaps between theory and practice of ontology evaluation. |

| McDaniel and Storey, 2019 [96] | Web of Science journals, approximately 170 articles | Ontologies specified in Web Ontology languages | Performed the review on the evaluation of domain ontology. Classified and discussed the existing ontology evaluation research studies under the five classes: Domain/Task fit, Error-checking, Libraries, Metrics, and Modularization. |

We conducted the systematic review by following the methodologies described in [23,83] with the objective of addressing the issues (i), (ii), and (iii) stated in Section 1. Thus, the review includes a summarization of ontology quality problems and models, together with the recognition of characteristics and measures. Ultimately, the aim is to present a conceptual model that provides a basis for researchers to select the quality characteristics based on the quality requirements. To achieve these objectives, the following research questions were derived:

– What ontology quality models are proposed for ontology quality assessment?

– What quality problems are discussed in the previous approaches with respect to ontology quality?

– What are the ontology quality characteristics and measures assessed in the previous approaches?

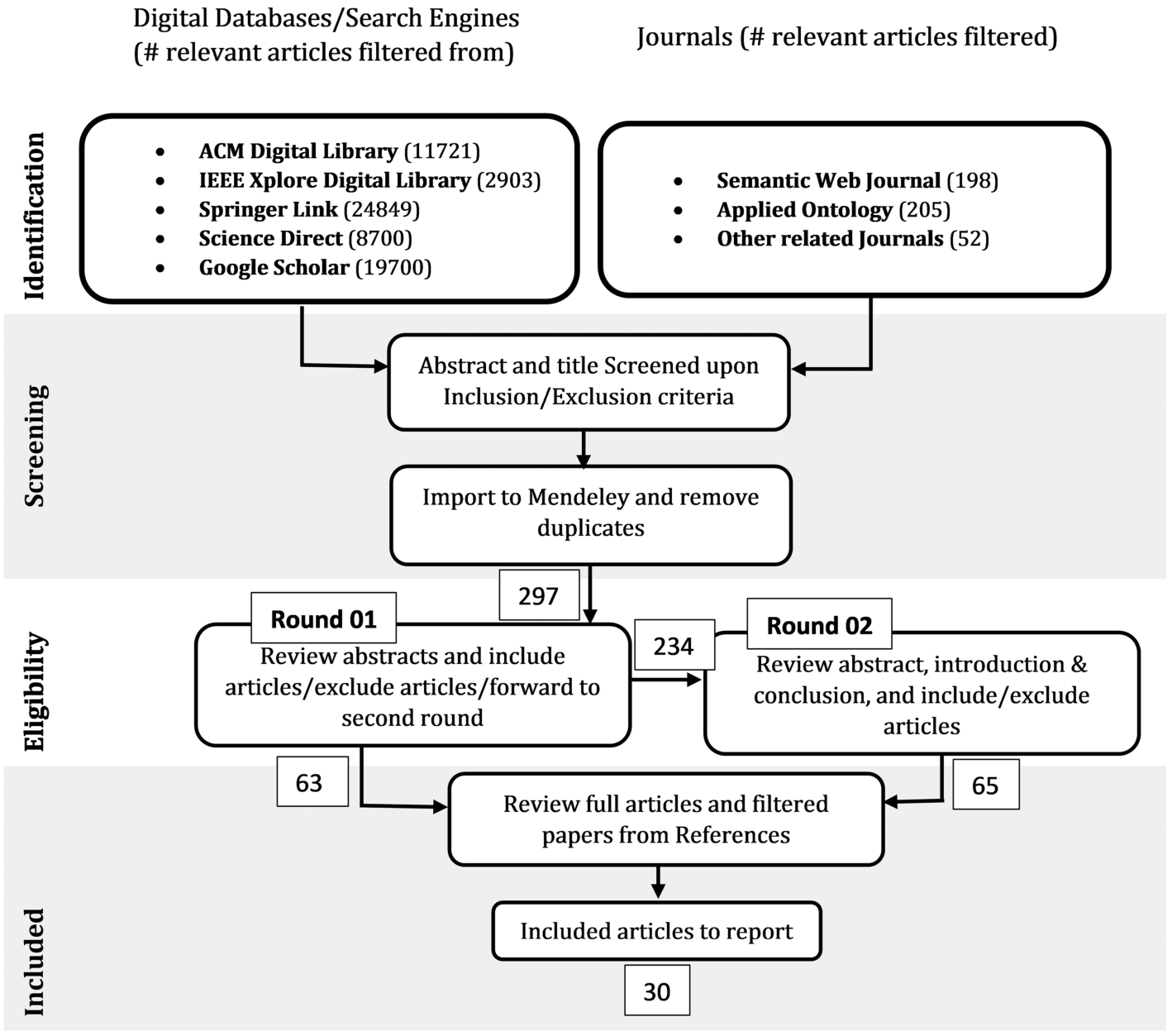

Then, the search terms were identified based on the research questions such as quality, ontology, ontology quality, assessment, evaluation, approach, criteria, measures, metrics, attributes, characteristics, methods, and methodology, and trial searches were executed using various combinations of the terms. Thereafter, the following combination was used to retrieve the relevant papers from the selected digital databases, journals, and search engines (see Fig. 2).

– [Ontology AND [Quality OR Evaluation OR Assessment]]

– [[Ontology AND Quality] AND [Criteria OR Measures OR Metric OR Characteristics OR Attributes]]

– [[Ontology AND Quality] AND [Models OR Approach OR Methodology]]

These search combinations were performed according to the instructions given in each digital database under the advanced search.

Fig. 2.

Main steps of the review process and number of articles retrieved.

The following inclusion and exclusion criteria were defined to reduce the likelihood of publication bias, to select recently discussed studies, and to keep the sample size small enough to make the review manageable. Accordingly, the defined inclusion and exclusion criteria were used to select the candidate studies at the screening and eligibility phases (see Fig. 2):

– Inclusion Criteria: Studies that are in English, were published during the period 2010–2021, and were peer-reviewed. Studies that discuss ontology quality assessment, whether empirical or theoretical.

– Exclusion Criteria: Studies published as a short paper (fewer than 6 pages), tutorial, poster, or report. Studies that do not present a rigorous approach to achieving the defined quality assessment objectives. Studies that are not directly relevant to the research questions.
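The criteria above can be read as a simple screening predicate. The following is a minimal sketch under stated assumptions: the `Study` record, its field names, and the `kind` labels are hypothetical and not part of the review protocol, and the judgement-based criteria (topic, rigour, relevance) are modelled as flags set by a human reviewer.

```python
from dataclasses import dataclass

# Hypothetical record for a candidate study; all field names are assumptions.
@dataclass
class Study:
    title: str
    language: str
    year: int
    peer_reviewed: bool
    pages: int
    kind: str                # e.g. "journal", "conference", "tutorial", "poster", "report"
    discusses_quality: bool  # reviewer judgement: addresses ontology quality assessment
    rigorous: bool           # reviewer judgement: presents a rigorous approach
    relevant: bool           # reviewer judgement: relevant to the research questions

EXCLUDED_KINDS = {"tutorial", "poster", "report"}

def is_included(s: Study) -> bool:
    """Return True if the study meets every inclusion criterion and no exclusion criterion."""
    included = (
        s.language == "English"
        and 2010 <= s.year <= 2021
        and s.peer_reviewed
        and s.discusses_quality
    )
    excluded = (
        s.pages < 6                  # short paper
        or s.kind in EXCLUDED_KINDS
        or not s.rigorous
        or not s.relevant
    )
    return included and not excluded
```

A predicate of this kind only automates the mechanical criteria (language, year, length, publication type); the remaining flags still depend on reading the abstract or full text.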

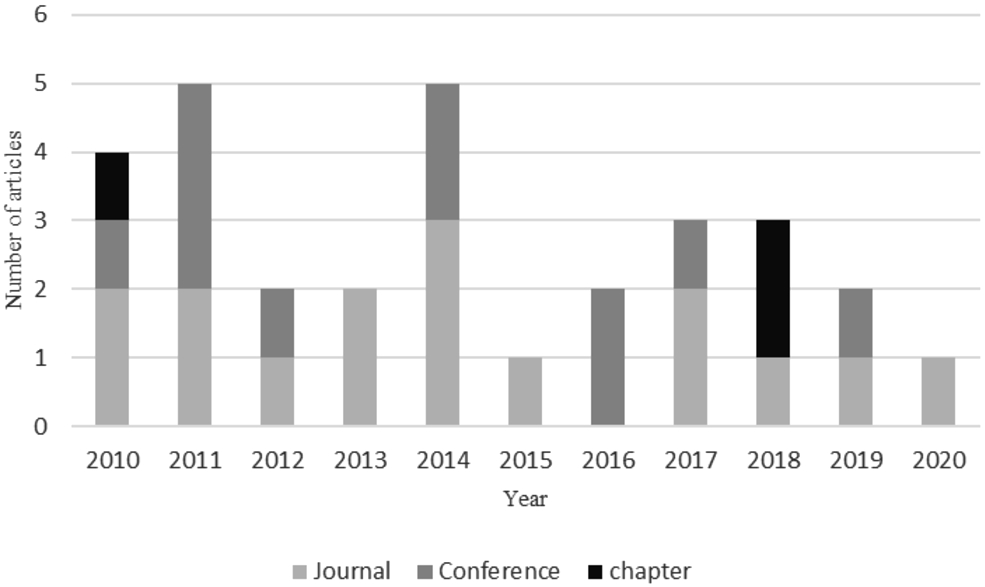

During the screening phase, the candidate studies were retrieved by performing a keyword search on titles and abstracts. Then, the duplicate studies were removed using a reference management tool (i.e., Mendeley). Thereafter, the abstracts were reviewed to filter the relevant studies. In some cases, the abstract was vague and the real contribution was difficult to understand. In such cases, the papers were forwarded to a second round for further review of the introduction and conclusion, as suggested in [23]. Altogether, 128 papers were forwarded for the full-paper review. During this stage, forward and backward citations were searched and the appropriate studies were included. Some papers were found to have two versions, a journal version and a conference version by the same authors. In such a situation, the journal paper was selected. Finally, thirty (30) papers, listed in the Appendix, were selected for the reporting phase and have been considered as the core papers in the survey. In addition, the milestone papers that were captured through the backward citation search (i.e., published before 2010) have also been taken into account in the discussions (see the Appendix).

3.Preliminaries and conceptualization

In the context of quality, a number of ad hoc definitions and inconsistent terminologies appear in the literature, leading to misapplication and misinterpretation of terms [13]. Therefore, in this section, the concepts and terms that are relevant to our discussion are briefly described to avoid miscommunication and to maintain consistency. Additionally, the conceptualization of ontology quality assessment is provided based on the existing theories, and Table 2 presents a list of terms used in this article.

Table 2

Related terms and definitions

| Term | Definition |

| Quality model | Describes a set of characteristics and the relationships between them, also provides the basis for specifying quality requirements and evaluating the quality of an entity [75,77] |

| Context of use | Users, tasks, equipment (hardware, software and materials), and the physical and social environments in which a product is used [75,77] |

| Quality in use | The degree to which a product or system can be used by specific users to meet their needs to achieve specific goals with effectiveness, efficiency, freedom from risk and satisfaction in specific contexts of use [75,77] |

| Semiotics | The field of study of signs and their representations [107] |

| Design patterns | Reusable modeling solutions to recurrent ontology design problems [47] |

| Anti-pattern | Domain-related ontological misrepresentations [71] |

| Pitfalls | Potential errors, modeling flaws, and missing good practices in ontology development [111] |

| Lawfulness | Correctness of syntactic rules of the ontology profile [5,24] |

| Language Richness | The amount of ontology-related syntax features used [24] |

| Interpretability | Meaningfulness of terms [24] |

| Clarity | Comprehensibility of the term label [5,24] |

| Cohesion | The degree to which the elements of a module belong together [96] |

| Coupling | The degree of relatedness between ontology modules [104] |

| Tangledness | The multi-hierarchical nodes of a graph (i.e., a class with several parent classes) [50] |

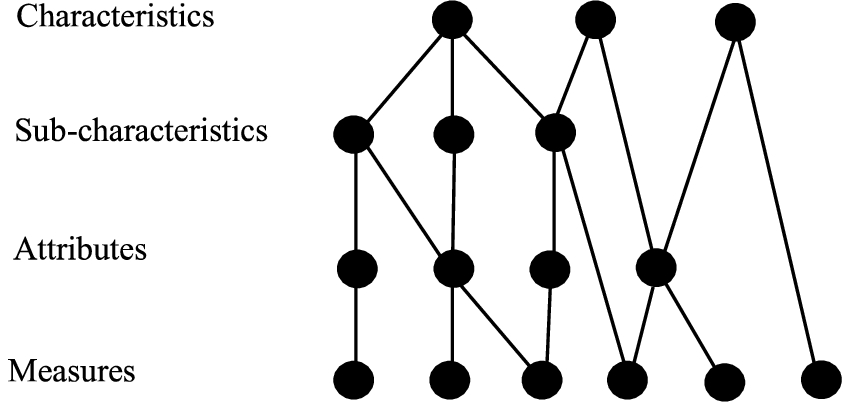

Terminologies related to quality models. Quality models provide the basis for specifying quality requirements and evaluating the quality of an entity (i.e., software, tools, ontology, part of the software) [75,77]. In software engineering, quality models are of two kinds, relational (i.e., non-hierarchical) and hierarchical [55]. The relational model presents the correlation among the quality characteristics; no model of this type has been proposed for ontologies in the existing works. Only hierarchical models can be found, which usually have four levels (see Fig. 3). The top level (i.e., the first level) consists of a set of characteristics that are further decomposed into sub-characteristics at the next level (i.e., the second level). The third level consists of the attributes associated with the characteristics/sub-characteristics. These attributes have measures, which lie at the bottom level (i.e., the fourth level) and can be used to assess the entity either quantitatively or qualitatively. Thus:

Characteristics (i.e., criteria): describe a set of attributes. An attribute is a measurable physical or abstract property of an entity [84]. In the ontology quality context, an entity can be a set of concepts, properties, or an ontology [80].

Measure (i.e., metric): describes an attribute formally and assesses it either quantitatively or qualitatively [11].

Fig. 3.

Association between characteristics, attributes, and measures.

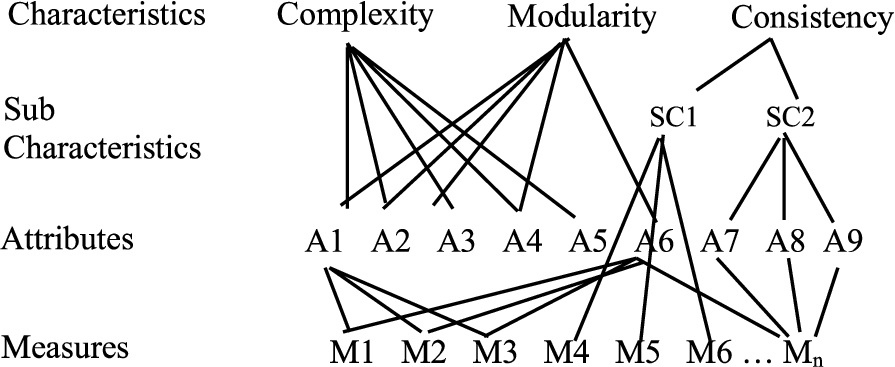

There is no strict requirement that each characteristic have sub-characteristics, or that characteristics/sub-characteristics have attributes. Thereby, a characteristic can be related directly to measures without a set of attributes (see Fig. 3). Moreover, the same attributes/measures can be used to assess many characteristics. This is further illustrated in Fig. 4 with selected ontology characteristics. For instance, complexity, which is one of the ontology characteristics, describes the properties of the ontology structure (i.e., taxonomy, non-taxonomy) [48,49,116,142]. It is associated with several attributes such as size (

Fig. 4.

An example for a simple quality model.

Moreover, the measures of the attributes: size, depth, breadth, and fan-outness have also been used to assess the cohesion (

If we consider the consistency characteristic of an ontology, it can be further derived into two sub-characteristics namely, internal consistency (SC1) and external consistency (SC2) [58,153]. Internal consistency contains direct measures such as circularity errors (

Moreover, sub-characteristics can also be further decomposed into another set of sub-characteristics, also called primitive characteristics [19], as is the case in OQuaRE [40]. Additionally, it can be observed that some of the quality models include another, higher level called dimensions or factors on top of the characteristics level. This level has been proposed to classify the characteristics/attributes that are related to similar aspects of the ontology. For instance, the quality model of Gangemi et al. [48,49] contains three dimensions, namely structural, functional, and usability-related. Similarly, the quality model of Zhu et al. [153] classifies the characteristics under the content, presentation, and usage dimensions. However, no clear definition of a dimension is given in the literature; thus, the term dimension has been used interchangeably with the term characteristic. For instance, in [146,152], the term dimension is used to denote characteristics. In our study, we use the terms in the order dimensions, characteristics, sub-characteristics, attributes, and measures to describe the structure of a quality model whenever necessary.
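The hierarchy described in this subsection can be sketched as nested records. This is an illustrative data structure only, not a model proposed in the surveyed works: the class names `Measure`, `Attribute`, and `Characteristic` and the method `all_measures` are assumptions introduced here, and the dimension level is omitted. The example values (complexity, cohesion, consistency, depth, breadth, circularity errors) are taken from the text.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Measure:
    name: str
    qualitative: bool = False  # measures may be quantitative or qualitative

@dataclass
class Attribute:
    name: str
    measures: List[Measure] = field(default_factory=list)

@dataclass
class Characteristic:
    name: str
    sub_characteristics: List["Characteristic"] = field(default_factory=list)
    attributes: List[Attribute] = field(default_factory=list)
    # Direct measures: a characteristic may skip the attribute level entirely.
    measures: List[Measure] = field(default_factory=list)

    def all_measures(self) -> List[Measure]:
        """Collect direct measures, then attribute measures, then those of sub-characteristics."""
        found = list(self.measures)
        for attribute in self.attributes:
            found.extend(attribute.measures)
        for sub in self.sub_characteristics:
            found.extend(sub.all_measures())
        return found

# Attributes with their measures; the same objects are shared by two characteristics.
depth = Attribute("depth", [Measure("maximum depth"), Measure("average depth")])
breadth = Attribute("breadth", [Measure("maximum breadth")])

complexity = Characteristic("complexity", attributes=[depth, breadth])
cohesion = Characteristic("cohesion", attributes=[depth, breadth])

# A characteristic with sub-characteristics, one of which has a direct measure.
consistency = Characteristic(
    "consistency",
    sub_characteristics=[
        Characteristic("internal consistency", measures=[Measure("circularity errors")]),
        Characteristic("external consistency"),
    ],
)
```

Sharing the `depth` and `breadth` objects between `complexity` and `cohesion` mirrors the observation that the same attributes and measures can serve several characteristics.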

Ontology layers (i.e., levels). Researchers have discussed ontology quality layer-wise, or level-wise, by regarding an ontology as a multi-layered vocabulary [22,57,108,112,142]. Initially, three layers to be focused on were proposed in [57], namely content, syntactic & lexicon, and architecture. Later, this was expanded by including the hierarchical and context layers [22,142]. The syntactic layer considers the properties related to the language that is used to represent the ontology formally [22,57]. The hierarchy or taxonomy layer focuses on the properties related to the taxonomic structure (i.e., is-a relationship) of ontologies [22]. A number of measures have been defined for the taxonomy layer [42,48,50,54,116]. These measures are often useful for observing the extent to which the concepts in an ontology are spread out in relation to the root concept of that ontology [42,116] and for tracking the evolution of ontologies easily [142]. The authors in [42,116] have shown that taxonomic measures such as maximum depth/breadth, average depth and depth/breadth variance are good predictors of ontology reliability. The architectural layer is known as the structural or design layer in [22,108]. It considers whether an ontology is modeled based on pre-defined design principles and criteria [22,57]. The lexicon layer, also called the vocabulary or data layer [22,142], takes into account the vocabulary that is used to describe concepts, properties, instances, and facts. The non-hierarchical relationships and semantic elements are considered under the semantic layer. To evaluate the vocabulary, architecture and semantic layers, some understanding of domain knowledge is required. The context layer concerns the application scope that the ontology is built for. Mainly, it determines whether the ontology satisfies the application requirements as a component of an information system or as a part of a collection of ontologies [22]. We have performed a separate analysis of the ontology evaluation layers and their relationship with the evaluation approaches (i.e., methods), namely task-based, human-based, data-driven, and golden-based; more detail can be found in [147].
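To make the taxonomy-layer measures concrete, the sketch below computes maximum depth, average depth, maximum breadth, and the tangled classes (classes with several parents, as defined in Table 2) on a toy is-a hierarchy. It rests on assumptions that the cited works may define differently: depth is taken as the longest is-a path to a root, average depth is averaged over all classes, and breadth is the number of classes at a given depth. The class names and the function `taxonomy_measures` are hypothetical.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List

def taxonomy_measures(parents: Dict[str, List[str]]) -> dict:
    """Compute simple taxonomy measures for an acyclic is-a hierarchy.

    `parents` maps each class to its direct superclasses; roots map to [].
    """
    memo: Dict[str, int] = {}

    def depth(cls: str) -> int:
        # Assumption: depth is the longest is-a path from the class up to a root.
        if cls not in memo:
            supers = parents[cls]
            memo[cls] = 0 if not supers else 1 + max(depth(p) for p in supers)
        return memo[cls]

    depths = {cls: depth(cls) for cls in parents}
    breadth_per_level = Counter(depths.values())
    return {
        "max_depth": max(depths.values()),
        "average_depth": mean(depths.values()),
        "max_breadth": max(breadth_per_level.values()),
        "tangled_classes": sorted(c for c, ps in parents.items() if len(ps) > 1),
    }

# Toy hierarchy with hypothetical class names.
toy = {
    "Thing": [],
    "Agent": ["Thing"],
    "Device": ["Thing"],
    "Person": ["Agent"],
    "Robot": ["Agent", "Device"],  # two parents, hence tangled
    "Student": ["Person"],
}
result = taxonomy_measures(toy)
```

For this toy hierarchy the maximum depth is 3, the average depth is 1.5, the maximum breadth is 2, and `Robot` is the only tangled class.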

Ontology development methodologies and design patterns. Ontology methodologies, design patterns, and principles provide guidelines for knowledge modeling and representation, paving the way for accurate knowledge capture and definition. This enables ontology developers to achieve the quality characteristics emphasized in this study, such as compliance, consistency, completeness, and conciseness. For this reason, it is necessary to explore the development methodologies, design patterns, and principles. However, discussing them in detail is out of the scope of this paper.

Several methodologies have been introduced for ontology development, such as Cyc [92], Uschold and King’s method [139], TOVE [64], METHONTOLOGY [59], SENSUS [128], On-To-Knowledge [120] and DILIGENT [110]. Among them, only Uschold and King’s method, TOVE and On-To-Knowledge discuss ontology evaluation as a phase to be performed after the development of the entire ontology. METHONTOLOGY performs the evaluation at every phase of ontology development. However, none of these methodologies describes the ontology evaluation methods in detail [27,69]. A more detailed comparison of these methodologies can be found in [27,29,87]. In addition, agile ontology development methodologies have been introduced by adopting agile principles and practices from software engineering. These include methodologies such as SAMOD [109], XD [18,30], AMOD [1] and the CD-OAM framework [129]. Of these, only SAMOD and XD discuss the evaluation (i.e., model test, data test, unit test) of ontologies, and only briefly. The significance of XD (i.e., eXtreme Design with Content Ontology Design Patterns) is its use of content ontology design patterns to construct ontologies, which inherently helps to prevent common modeling mistakes. This, in turn, directs ontologists towards constructing a quality ontology and particularly assists inexperienced ontologists [18].

Ontology Design Patterns (ODPs) are reusable modeling solutions to recurrent ontology design problems [47]. Several groups have proposed sets of design patterns. For instance, the authors in [6] have proposed seventeen ODPs in relation to the biological knowledge domain. They have classified ODPs into three groups, namely (i) extension ODPs, which provide solutions to bypass the limitations of OWL such as n-ary relations, (ii) good practice ODPs, which support the construction of robust and cleaner designs, and (iii) domain modeling ODPs, which provide solutions for concrete modeling problems in biology [6]. Moreover, the Semantic Web Best Practices and Deployment Working Group has introduced ODPs which include n-ary relations, classes as property values, value partitions/sets, and simple part-whole relations. In addition, the group ontologydesignpatterns.org suggests more than two hundred patterns and principles, which have been categorized into six families, namely content ODPs, structural ODPs, correspondence ODPs, reasoning ODPs, presentation ODPs and lexico-syntactic ODPs [9]. The patterns and principles to be reused can be determined at the ontology design phase with respect to the requirements of the ontology development, and their reuse makes ontology modeling faster [71,102]. To this end, the authors in [18,71] have shown through experiments that pattern-based ontology development helps to avoid common modeling mistakes. This in turn improves the quality and usability of ontologies.

Furthermore, OntoClean has proposed a set of principles (i.e., rules) that helps to identify problematic modeling choices associated with taxonomy relationships [65]. Mainly, the rules of OntoClean have been constructed upon a set of metaproperties related to taxonomy namely; identity, unity, dependence, essence and rigidity. By embedding these principles, an ontology-driven conceptual modeling language has been proposed namely OntoUML [16,71,106]. Moreover, OntoUML provides a set of design patterns that accelerate ontology modeling. In addition to that, it describes a set of anti-patterns to be avoided in modeling ontologies.

Ontology evaluation space. An ontology-based information system usually consists of many components. The quality of each component of the system influences the overall quality and, in turn, the usability of the system, which is often critical for business success [17,35]. As explained in Section 1, the quality requirements which are to be achieved through a system or each system component should be traced from user needs, also known as business requirements (see Fig. 1). The specification of quality requirements can be viewed as a connected flow starting from user needs and ending at the internal quality of a system. For instance, quality requirements can be elicited from user needs and they can also be defined as a set of quality requirements to be achieved by a system when it is in use. This is also defined as quality in use (see Fig. 5) [17,75]. Then, this set of quality requirements can be used to determine the external quality requirements of each component of a system and, accordingly, the internal quality requirements. These quality requirements (i.e., internal/external) can also be expressed as a set of characteristics with associated measures. For that, a quality model can be used, which provides a framework for determining characteristics/sub-characteristics in relation to the quality requirements. Then, these characteristics/sub-characteristics can be evaluated using the relevant measures to check whether the desired quality at each stage is achieved (see Fig. 5).

For an ontology-based system, ontology quality requirements also need to be distinctly identified from the overall quality requirements of the system [141]. Based on that, the external and internal quality requirements to be achieved from the ontology can be derived. The external and internal quality have been discussed as extrinsic and intrinsic evaluation aspects of ontologies in [101,105]. Figure 5 presents the flow of specifying the ontology quality requirements with the associated evaluation aspects when an ontology is a component of a system [148]. It should be noted that the quality requirements related to the other software and hardware components of the ontology-based system have not been presented in Fig. 5.

The extrinsic evaluation considers quality under two aspects, namely domain extrinsic and application extrinsic. The ontology quality requirements elicited from the user needs can be separated under these aspects [125,141]. For instance, quality requirements associated with domain knowledge are considered under the domain extrinsic aspect. On the other hand, ontology quality requirements that are specifically needed by the system in which the ontology is deployed are considered under the application extrinsic aspect [101,105]. Moreover, the application extrinsic requirements are independent of the domain knowledge. In performing a quality evaluation under these aspects, an ontology is treated as a component of a system. Traditionally, this evaluation is called “black-box” or “task-based” testing [70,101], as the quality assessment is performed without peering into the ontology structure and content. Furthermore, this extrinsic evaluation has been defined as ontology validation in [121]. Accordingly, the ontology is assessed to confirm whether the right ontology was built [121,142]. This is mainly performed with the involvement of users and domain experts (see Fig. 5). Moreover, this has also been considered as the context-layer evaluation in [22,142].

The intrinsic evaluation focuses on the ontology content and structural quality to be achieved in relation to the extrinsic quality requirements. At this point, the ontology is considered as an isolated component separated from the system. At this stage, ontology quality can be evaluated under two aspects: domain intrinsic and structural intrinsic [101]. This evaluation is mainly performed by ontology developers. In the structural intrinsic aspect, the focus is on the syntactic and structural requirements (i.e., syntactic, structural, architectural layers) of the specified conceptualization, such as language compliance, conceptual complexity, and logical consistency. In the domain intrinsic aspect, ontology quality is evaluated with reference to the domain knowledge that is required for the intended needs of users [101]. Thus, ontology developers need the assistance of domain experts to evaluate this aspect. Moreover, the semantic, vocabulary and architectural layers of ontologies are evaluated under this aspect. Furthermore, the quality evaluation performed under the structural and domain intrinsic aspects is similar to the paradigm of “white-box” testing in software engineering [70]. Thus, from the intrinsic aspects, verification is carried out to check whether the ontology is built in the right way [121,142].

The quality requirements identified under each aspect of the ontology evaluation space can be associated with the corresponding set of characteristics together with measures. Then, those characteristics can be measured in order to ensure whether the defined quality requirements are being achieved through ontology development. In the following section, we illustrate how the quality characteristics can be derived in relation to an ontology requirement using a use case in agriculture.

Use case We considered an ontology-based decision support system that provides pest and disease management knowledge for farmers. For simplicity, we assumed that ontology quality requirements have already been elicited from user needs and as such consider that the requirement specified below is the key quality requirement of the system.

– The ontology should provide correct pest and disease knowledge for user queries requested through the system.

From the extrinsic aspect of the ontology, the mentioned quality requirement is associated with domain knowledge in agriculture. Thus, it is a domain extrinsic quality requirement, and it concerns the accuracy of the knowledge to be provided through the ontology. At this stage, the accuracy of the knowledge can be observed in terms of the accuracy of the answers given for the competency questions [150]. Moreover, accuracy should be evaluated with the assistance of domain experts and users. With respect to the identified extrinsic quality requirement, it is necessary to define which set of characteristics should be met from the intrinsic aspect. For that, we need to utilize techniques such as ROMEO [150] and GQM [11] that support deriving the intrinsic quality requirements from the higher-level (i.e., extrinsic) quality requirements. By adopting the ROMEO methodology [150], questions are formulated to derive the intrinsic quality requirements. For instance, to provide accurate pest and disease knowledge for farmers, domain knowledge should be correctly modeled in the ontology. For that, all required axioms with regard to pest and disease management should be correctly defined in the ontology. Accordingly, the following questions are derived:

– Q1: Does the ontology capture the axioms correctly to represent knowledge in pest and disease management?

– Q2: Is the ontology free from internal contradiction?

Based on the derived questions in relation to the extrinsic quality requirement, it is necessary to identify the associated characteristics and the relevant measures to be evaluated. In this situation, ontology quality models play a key role, as they support identifying the corresponding characteristic/sub-characteristic in relation to the derived questions. However, there is no such well-formed quality model for ontologies, as highlighted in Section 1. Thus, we determined the characteristics based on the literature survey. For instance, Q1 concerns external consistency, as it considers whether the defined axioms are consistent with the domain knowledge. Q2 concerns internal consistency, as it considers whether there are any internal contradictions within the ontology definitions. After identifying the characteristics, the relevant measures to be evaluated through ontology development should be identified. This process would be easier if a well-formed quality model were available. For the use case that we have considered, external consistency can be evaluated using the measure of precision, as explained in [150], which is the ratio between the correctly defined axioms (Oi) and the total axioms defined in the ontology (Oa). The correctness/consistency of axioms (Oi) can be determined against a frame of reference (Fa) such as a valid corpus or the standard ontology [150]. Precision values range from zero (0) to one (1). A precision of zero (0) implies that the ontology has not correctly captured any of the axioms defined in the frame of reference. A precision of 0.6 implies that the ontology has correctly captured 60% of the axioms with respect to the frame of reference.

If the value of precision is one (1), then the ontology has correctly captured all axioms with respect to the considered frame of reference. In that case, it can be stated that the ontology has correctly represented the relevant knowledge of the domain.
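The precision measure described above can be illustrated with a minimal sketch. It assumes that axioms can be compared as hashable items (here, hypothetical subject-relation-object triples) and that an axiom counts as correctly defined when it also appears in the frame of reference. The function name `precision` and the sample axioms are illustrative assumptions and are not taken from [150].

```python
def precision(ontology_axioms, reference_axioms):
    """Ratio of correctly defined axioms (Oi) to all axioms in the ontology (Oa).

    An axiom is treated as correct when it also occurs in the frame of
    reference (Fa). This matching rule is an assumption made for illustration.
    """
    all_axioms = set(ontology_axioms)            # Oa
    if not all_axioms:
        raise ValueError("the ontology contains no axioms")
    correct = all_axioms & set(reference_axioms)  # Oi
    return len(correct) / len(all_axioms)


# Hypothetical axioms for the pest and disease use case.
frame_of_reference = {
    ("Aphid", "subClassOf", "Pest"),
    ("LeafBlight", "subClassOf", "Disease"),
    ("Aphid", "affects", "Cabbage"),
    ("Neem", "controls", "Aphid"),
}
ontology = {
    ("Aphid", "subClassOf", "Pest"),
    ("LeafBlight", "subClassOf", "Disease"),
    ("Aphid", "affects", "Cabbage"),
    ("Aphid", "subClassOf", "Disease"),    # not supported by the reference
    ("Neem", "controls", "LeafBlight"),    # not supported by the reference
}

score = precision(ontology, frame_of_reference)
```

In this example three of the five ontology axioms are found in the frame of reference, so the score corresponds to the 60% case discussed above. In practice, matching axioms against a corpus or a standard ontology would require more than exact equality.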

Moreover, internal consistency can be assessed by observing the number of logical inconsistencies with the support of a reasoner. In addition, measures such as the number of circularity errors, the number of subclass partitions with common classes, and the number of partitions with common instances can be observed (see Table 7). These measures correspond to a set of pitfalls that could lead to wrong inferences [58,111,114], and they can be detected using the tool OOPS! [111].
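As an illustration of one of these measures, the following minimal sketch counts circularity errors in a class hierarchy. It assumes the hierarchy is available as a plain mapping from each class to its direct superclasses. The function name and the sample classes are hypothetical, and the sketch is not the detection procedure implemented in OOPS! or in any reasoner.

```python
def find_circular_classes(subclass_of):
    """Return the classes that are, transitively, subclasses of themselves.

    `subclass_of` maps a class name to an iterable of its direct superclasses.
    """
    circular = set()
    for start in subclass_of:
        stack = list(subclass_of.get(start, ()))
        seen = set()
        while stack:
            current = stack.pop()
            if current == start:
                # The superclass chain leads back to the starting class.
                circular.add(start)
                break
            if current in seen:
                continue
            seen.add(current)
            stack.extend(subclass_of.get(current, ()))
    return circular


# Hypothetical hierarchy with one cycle: Pest -> Organism -> Insect -> Pest.
hierarchy = {
    "Pest": ["Organism"],
    "Insect": ["Pest"],
    "Organism": ["Insect"],
    "Disease": ["Condition"],
}

circular_classes = find_circular_classes(hierarchy)
number_of_circularity_errors = len(circular_classes)
```

Here the measure is taken as the number of classes involved in a cycle. Counting distinct cycles instead would be an equally plausible reading of the measure.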

In this way, for the rest of the ontology quality requirements, the relevant ontology characteristics can be determined with the support of a quality model. This use case is further explained in the article [148] to illustrate how the other ontology characteristics (i.e., coverage, comprehensibility) and their measures are derived for the ontology requirements. These characteristics can then be measured through ontology development to ensure that the ontology being modeled is of good quality.

4.Ontology quality models

4.1.Quality models in software engineering

It is worthwhile to analyze the related contributions in neighboring research fields, as they help to identify established theories and practices relevant to our study. Moreover, such theories and practices can be reused or adapted rather than developed from scratch. Therefore, the quality models in the software engineering field were explored. Many well-accepted quality models are available in software engineering, such as McCall’s quality model [25], Boehm’s quality model [19], Garvin’s quality model [53], the data quality model ISO/IEC 25012 [75], and the ISO 9126 quality model [78] (see Table 3).

McCall’s quality model [25] proposes eleven quality factors (which can also be considered dimensions) from the product perspective, which have further been divided into a set of characteristics and measures. Thus, it provides a hierarchical model containing three levels. Boehm’s quality model is a hierarchical model that is similar to McCall’s model. However, it provides a broader range of characteristics without defining the measures (see Table 3). The top level of Boehm’s quality model contains the characteristics As-is utility, Maintainability, and Portability. Based on that, the intermediate and low levels describe the related sub-characteristics and primitive characteristics, respectively.

Garvin’s quality model describes eight quality dimensions (i.e., factors) specifically for product quality that can be adapted to software engineering. However, the dimensions have been defined at an abstract level, and some of them are subjective and thus difficult to measure.

The data quality model ISO/IEC 25012 has defined fifteen characteristics that have to be taken into account when assessing the quality of data of products [75]. The characteristics further have been categorized into two types namely inherent data quality and system-dependent data quality. The inherent data quality describes the characteristics of data such as accuracy, completeness, consistency, credibility, and currentness which have the intrinsic potential to satisfy stated and implied needs. The rest of the characteristics such as accessibility, compliance, confidentiality, efficiency, precision, traceability, understandability, availability, portability, and recoverability, come under the system-dependent data quality that must be preserved within a computer system when data is used under specified conditions.

Table 3

Comparison of software quality models

| Model | Levels | Characteristics |
| --- | --- | --- |
| McCall’s quality model [25] | Factor, characteristics, measures | correctness, reliability, efficiency, integrity, usability, maintainability, testability, flexibility, portability, reusability and interoperability |
| Boehm’s quality model [19] | Primary, intermediate, primitive | Intermediate level: portability, reliability, efficiency, usability (human engineering), testability, understandability and modifiability |
| Garvin’s quality model [53] | Product quality characteristics | performance, features, reliability, conformance, durability, serviceability, aesthetics, and perceived quality |
| ISO/IEC 25012 [75] | Inherent data and system-dependent data quality characteristics | accuracy, completeness, consistency, credibility, currentness, accessibility, compliance, confidentiality, efficiency, precision, traceability, understandability, availability, portability and recoverability |
| ISO/IEC 25010 [78] | Factors (quality in use, external quality, internal quality), characteristics, sub-characteristics and measures | functional suitability, performance efficiency, compatibility, usability, security, maintainability, portability, effectiveness in use, efficiency in use, satisfaction, free from risk, context coverage |

The ISO 9126 model (ISO/IEC 9126:1991) is a widely accepted software product quality model that was produced in 1991 by the International Organization for Standardization (ISO). Later, it was extended as ISO/IEC 9126-1:2001, which includes four parts:

– Part 1: Quality model

– Part 2: External Measures

– Part 3: Internal Measures

– Part 4: Quality in use Measures

Part 1, the quality model, comprises six characteristics related to internal and external software product quality, which have further been decomposed into sub-characteristics. The measures of each characteristic/sub-characteristic have been defined under Parts 2, 3, and 4 of the standard.

Furthermore, SQuaRE (Systems and software Quality Requirements and Evaluation), ISO/IEC 25010, was introduced by redesigning the ISO 9126 model together with the ISO 14598 series of standards. The ISO 9126 model was redesigned because it had developed issues as a result of the advancement of information technologies and the changes in its environment [78]. SQuaRE comprises the same set of characteristics that were defined in ISO 9126, with several amendments as described in [78].

From the ontological perspective, there are no agreed quality models of the kind that exist in software engineering. However, significant contributions have been made to the field, and some quality models have been developed by adopting theories from software engineering. For instance, OQuaRE [40] and SemQuaRE [113] are quality models related to ontologies, built upon the theories described in the system and software standard SQuaRE (ISO/IEC 25010) [78]. Additionally, we realized that the findings of our survey can be classified in the same way as data quality is classified in ISO/IEC 25012. Moreover, it has been identified that some of the characteristics of the data quality model, such as credibility, timeliness, recoverability and availability, can be adopted for ontology quality. This is further discussed in Section 5. The subsequent section discusses the existing quality models for ontologies.

4.2.Quality models for ontologies

Initially, a framework was proposed in [57] to verify that developers are building a correct ontology. The proposed framework consists of a set of characteristics, namely soundness, correctness, consistency, completeness, conciseness, expandability, and sensitiveness, which have been adopted in later research works and developments [96,114,142,150]. Thereafter, significant methods [65,103,138] and tools such as OntoTrack [82], OntoClean [65], OntoQA [132], OntoMetric [90], and SWOOP [82] were proposed, enabling quality assessment with several sets of characteristics that mainly focus on the intrinsic aspect. Afterward, several attempts were made to provide a generalized quality model, most notably the semiotic metric suite [24], the quality model of Gangemi et al. [48,49], and OQuaRE [40].

Semiotic metric suite [24] Semiotic theory [122] has been taken as the foundation for this model. According to [122], the semiotic theory is “the study of the interpretations of signs and norms” and it defines six levels namely syntactic, semantic, pragmatic, social, physical, and empiric. The semiotic metric suite has been developed by classifying a set of ontology quality attributes under the aforementioned levels, excluding physical and empiric levels. These aspects can also be considered as quality dimensions, which are as follows.

– Syntactic: Lawfulness, Richness

– Semantic: Interpretability, Consistency, Clarity

– Pragmatic: Comprehensiveness, Accuracy, Relevancy

– Social: Authority, History

Even though the authors have mentioned that this model has been constructed solely by focusing on the intrinsic aspect, the attributes accuracy and relevancy, and all attributes in the social dimension, take on an extrinsic nature. This is because, under the pragmatic and social levels, the ontology is assessed as a whole and measures are evaluated with reference to the domain experts’ knowledge [5] and to external documents such as usage logs, page ranking, and details of external links with other ontologies [96]. Accordingly, the semiotic metric suite provides a set of quality attributes related to both intrinsic and extrinsic aspects of an ontology.

The quality model of Gangemi et al. [48, 49] By considering an ontology as a semiotic object “including graph objects, formal semantic spaces, conceptualizations, and annotation profiles”, the three main dimensions: structural, functional, and usability-related have been proposed. The structural dimension consists of thirty-two measures related to the topological, logical, and meta-logical characteristics of an ontology [50]. Primarily, it covers syntactic and formal semantics. Under the functional dimension, the possible attributes: precision, recall (i.e., coverage), and accuracy have been proposed to assess the conceptualization specified by the ontology with respect to the intended use. The measures of the usability-related dimension focus on the metadata (i.e., annotations) about the ontology and the elements related to the communication context. To this end, the attributes: presence, amount, completeness, and reliability have been described under three levels: recognition annotation, efficiency annotation, and interfacing annotation by Gangemi et al. [48,49].

OQuaRE [40] By adopting the standard SQuaRE (ISO/IEC 25000:2005) [77], the OQuaRE quality model has been proposed to evaluate ontology quality. It comprises the same set of characteristics defined in the standard ISO/IEC 25000:2005, such as functional adequacy, reliability, operability, maintainability, compatibility, and transferability. In addition, the structural characteristic has been included in the model to assess the inherent topological characteristics of an ontology. Furthermore, these characteristics have been decomposed into several sub-characteristics from the ontological point of view, including a set of associated measures which are available online.

Based on our survey, OQuaRE [40] has not considered the semantic features of an ontology such as coherency and coverage of the domain knowledge. These features are essential for ontologies to support meaningful interpretations. In addition, the authors in [114,142,153] have highlighted issues related to OQuaRE. They have stated that the proposed sub-characteristics are subjective and difficult to apply in practice. Consequently, OQuaRE [40] has not appeared in later research. However, noticeable studies are available related to the other two models: the semiotic metric suite [24] and the quality model of Gangemi et al. [48,49].

OntoKeeper [5] and DoORS [98] are semiotic-metric-suite-driven initiatives. For instance, OntoKeeper has automated the semiotic metric suite by taking into account the intrinsic aspect of an ontology. DoORS is a web-based tool for evaluating and ranking ontologies. It has been developed by extending the semiotic metric suite [24] with a set of additional characteristics: structure, precision (i.e., instead of clarity), adaptability, ease of use, and recognition.

Table 4

The existing quality models for ontologies identified through the survey

| Model | Description | Dimensions | Characteristics and sub-characteristics/attributes |
| --- | --- | --- | --- |
| Quality model in ONTO-EVOAL [38], 2010 | Structure: hierarchical model. Base model: Gangemi et al.’s model. Purpose: to assess the quality of evolving ontology. Approach: top-down; the characteristics have been derived from [50,132,142]. Evaluation: empirical evaluation has not been presented. | Content | Complexity; Cohesion; Conceptualization; Semantic Richness; Attribute Richness; Inheritance Richness; Abstraction |
| | | Usage | Completeness; Precision; Recall; Comprehension |
| OQuaRE [40], 2011 | Structure: hierarchical model. Base model: the SQuaRE standard. Purpose: to rank, select, compare and assess the ontologies. Approach: top-down. Evaluation: empirical evaluation has been performed on two applications: Ontologies of Units of Measurement and Bio ontologies. | – | Structural; Functional Adequacy; Reliability; Operability; Maintainability; Compatibility; Transferability (more sub-characteristics of these are available online) |
| OntoQualitas [114], 2014 | Structure: hierarchical model. Base model: no specific model is used. Purpose: to evaluate the quality of an ontology whose purpose is information interchange between heterogeneous systems. Approach: top-down; adopted the ROMEO methodology [150], then the criteria have been derived from [24,58,65]. Evaluation: empirical evaluation has been performed on ontologies of enterprises that interchange Electronic Business Documents. | – | Language conformance; Completeness; Conciseness; Correctness; Syntactic Correctness; Semantic Correctness; Representation Correctness; Usefulness |
| Quality model in OOPS! [111], 2014 | Structure: hierarchical model. Base model: the model of Gangemi et al. [48,49]. Purpose: to classify the identified pitfalls. Approach: top-down. Evaluation: the model of Gangemi et al. [48,49] has been extended to classify the pitfalls; thus, no evaluation has been provided. | Structural | Correctness; Modeling Completeness; Ontology Language conformance |
| | | Functional | Requirement Completeness; Content Adequacy |
| | | Usability-related | Ontology Understanding; Ontology Clarity |
| Quality model of Zhu et al. [153], 2017 | Structure: hierarchical model. Base model: no specific model is used. Purpose: to evaluate ontologies for semantic descriptions of web services. Approach: top-down. Evaluation: empirical evaluation has been performed on five ontologies underlying five web services in applications for reporting weather forecasts. The evaluation has been performed against the standard weather ontology. | Content | Correctness; Internal Consistency; External Consistency; Compatibility; Completeness; Syntactic Completeness; Semantic Completeness |
| | | Presentation | Well-formedness; Conciseness; Non-redundancy; Structural Complexity; Size; Relation; Modularity; Cohesion; Coupling |
| | | Usage | Applicability; Definability; Description Complexity; Adaptability; Tailorability; Composability; Extendibility; Transformability; Efficiency; Search Efficiency; Composition Efficiency; Invocation Efficiency; Comprehensibility |
| Quality model of McDaniel et al. [98], 2018 | Structure: hierarchical model. Base model: the semiotic metric suite. Purpose: to rank ontologies. Approach: top-down. Evaluation: empirical evaluation has been performed by selecting ontologies from the BioPortal ontology repository. | Syntactic | Lawfulness; Richness; Structure |
| | | Semantic | Consistency; Interpretability; Precision |
| | | Pragmatic | Accuracy; Adaptability; Comprehensiveness; Ease of use; Relevance |
| | | Social | Authority; History; Recognition |

The quality model of Gangemi et al. [48,49] has been adopted in multiple studies [38,54,111,142]. OOPS! [111] has used that model to classify the proposed common pitfalls which can occur during ontology development. Furthermore, OOPS! has extended the dimensions to another level as presented in Table 4. The authors in [54] have used the structural measures defined in the model of Gangemi et al. [48,49] to evaluate the cognitive ergonomics of an ontology. Moreover, a theoretical model proposed in [142] contains eight characteristics: Accuracy, Adaptability, Clarity, Completeness, Computational efficiency, Conciseness, Consistency, and Organizational fitness, which have been derived through literature reviews including the set of characteristics proposed in Gangemi et al.’s list [48,49]. The quality model proposed in the ONTO-EVOAL approach has been constructed mainly based on Gangemi et al.’s model that contains two dimensions: content and usage with six characteristics (see Table 4) [38].

In addition, a few efforts have been made to design quality models for a specific purpose, namely OntoQualitas [114], the quality model for semantic descriptions of web services [153], and SemQuaRE [113]. OntoQualitas provides a set of characteristics and related measures to evaluate the quality of ontologies that are built for the purpose of exchanging information between heterogeneous systems. OntoQualitas follows the ROMEO methodology [150] to derive the measures relating to the quality characteristics. The applicability of the characteristics in OntoQualitas to the intended context was empirically evaluated. Focusing on the context of semantic descriptions of web services, the authors in [153] have presented a quality model which comprises four levels, namely aspects, attributes, factors, and metrics (see Table 4). These levels can be considered as dimensions, characteristics, sub-characteristics/attributes, and measures, respectively, as per our definitions in Section 3.

The SemQuaRE [113] quality model has not been defined specifically for ontologies. Instead, it is intended for assessing the quality of semantic technologies, for instance ontology engineering tools, ontology matching tools, reasoning systems, semantic search tools, and semantic web services.

Notably, two strategies have been followed in constructing models for software quality [39], namely the top-down approach and the bottom-up approach. The top-down approach starts constructing the model from the characteristics and then decomposes them into further levels which contain sub-characteristics and attributes, respectively. Finally, the corresponding measures for each characteristic/attribute are identified. In contrast, the bottom-up approach first observes the related measures which have been defined in the existing studies and classifies them until reaching the top-level characteristics [39]. Among the discussed models related to ontologies, only the SemQuaRE [113] model has been constructed following the bottom-up approach. All the other models [24,38,40,48,98,111,114,153] have followed the top-down approach, in which the characteristics related to ontologies were first determined and, from those, the possible sub-characteristics/attributes and measures were derived. The quality models for ontologies that we have explored through the survey are summarized in Table 4. All of them are hierarchical quality models with the structure <characteristics, sub-characteristics, attributes, measures>, and no significant work was identified on constructing a non-hierarchical (i.e., relational) quality model that shows the correlation between characteristics.
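The hierarchical structure described above can be sketched as a small tree in which measures are attached to the nodes of a characteristic hierarchy. The class name `QualityNode`, the function `collect_measures`, and the grouping of internal and external consistency under a parent named "Consistency" are assumptions made only for illustration. They do not reproduce any of the surveyed models.

```python
from dataclasses import dataclass, field


@dataclass
class QualityNode:
    """A characteristic, sub-characteristic or attribute in a hierarchical model."""
    name: str
    measures: list = field(default_factory=list)
    children: list = field(default_factory=list)


def collect_measures(node, path=()):
    """Walk the hierarchy top-down and list each measure with its path."""
    path = path + (node.name,)
    found = [(" > ".join(path), measure) for measure in node.measures]
    for child in node.children:
        found.extend(collect_measures(child, path))
    return found


# Hypothetical fragment built top-down: characteristic first, measures last.
model = QualityNode(
    "Consistency",
    children=[
        QualityNode("Internal consistency",
                    measures=["number of logical inconsistencies"]),
        QualityNode("External consistency", measures=["precision"]),
    ],
)

measures = collect_measures(model)
```

A bottom-up construction would start from the list of measures and group them into nodes until the top-level characteristic is reached. A relational model would additionally need links between sibling nodes, which this tree does not represent.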

In Table 5, the characteristics/attributes proposed in the ontology quality models in [38,98,114,153] have been mapped to the respective aspects (i.e., structural intrinsic, domain intrinsic, extrinsic (domain/application), and quality in use). However, OQuaRE [40] and the quality model in OOPS! [111] have not been included in Table 5. This is because the OQuaRE [40] characteristics have been defined by considering the ontology as a software artifact. Thus, it is difficult to map its characteristics distinctly to the ontological evaluation aspects. OOPS!, in turn, has adopted an existing model to classify a set of pitfalls and does not specifically provide a set of characteristics concerning the quality requirements.

Among the other quality models, OntoQualitas and the model in ONTO-EVOAL have defined characteristics mostly related to the intrinsic aspects. Of these, the characteristics completeness (coverage), conciseness (precision), and representational correctness are associated with the domain for which the ontology is considered (see Table 5). Additionally, OntoQualitas has taken the quality in use of an ontology into account by defining the characteristic usefulness, i.e., the usefulness of the ontology for heterogeneous information interchange. The quality model of Zhu et al. [153] concerns both intrinsic and extrinsic aspects. However, the sub-characteristics of applicability and efficiency have been defined specifically for the semantic web services context. When comparing these models, the characteristics proposed in the quality model of McDaniel et al. [98] cover many aspects of ontology quality evaluation. Among them, authority, history, and recognition reflect user satisfaction. For instance, authority considers the number of linkages with other ontologies, and history considers the number of revisions made to the ontology and how long it has been actively public. Recognition considers the number of times the ontology has been downloaded and the reviews given to the ontology. Thus, these attributes are useful for understanding to what extent the ontology is accepted by the community, and positive values of the attributes imply user satisfaction with the ontology.

Table 5

The characteristics/attributes of the existing ontology quality models mapped to the evaluation aspects

| Model | Structural intrinsic | Domain intrinsic | Extrinsic (domain/application) | Quality in use |
| --- | --- | --- | --- | --- |
| Quality model in ONTO-EVOAL [38], 2010 | Complexity; Cohesion; Conceptualization; Abstraction; Comprehension | Completeness (Precision and recall) | | |
| OntoQualitas [114], 2014 | Language conformance; Completeness: is-a/non-isa; Conciseness: is-a/non-isa; Syntactic Correctness; Semantic Correctness: is-a/non-isa | Completeness: Coverage; Conciseness: Precision; Semantic Correctness: interpretability, clarity; Representation correctness | | Usefulness |
| Quality model of Zhu et al. [153], 2017 | Internal consistency; Well-formedness; Structural Complexity; Modularity | External Consistency; Compatibility; Completeness; Conciseness | Applicability; Adaptability; Efficiency; Comprehensibility | |
| Quality model of McDaniel et al. [98], 2018 | Lawfulness; Richness; Structure | Consistency; Interpretability; Precision; Comprehensiveness | Accuracy; Adaptability; Ease of use; Relevance | Authority; History; Recognition |

In addition, by adopting the GQM (Goal-Question-Metric) methodology [11], the study in [150] provides approaches for deriving the measures of quality characteristics by tracing them from ontology requirements. In these approaches, goals are the ontology requirements, which are gradually refined into questions/sub-questions that reflect the respective quality characteristics to be measured. In this case, quality models act as a complementary component that supports deriving measures with respect to the characteristics reflected in each question. Thus, the proposed approaches would not be effective without a quality model that presents a set of characteristics and corresponding measures.
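The role of the quality model in this refinement can be sketched as a simple lookup. The following is a minimal illustration that assumes the quality model is a mapping from characteristics to measures and that each question has already been associated with one characteristic. The names `QUALITY_MODEL`, `derive_measures`, and the question "Q3" are hypothetical and are not part of ROMEO or GQM as published.

```python
# Hypothetical quality model fragment: characteristic -> measures.
QUALITY_MODEL = {
    "external consistency": ["precision"],
    "internal consistency": [
        "number of logical inconsistencies",
        "number of circularity errors",
    ],
}

# Goal (ontology requirement) and the questions refined from it,
# each tagged with the characteristic it reflects.
goal = "Provide correct pest and disease knowledge for user queries"
questions = {
    "Q1": "external consistency",
    "Q2": "internal consistency",
    "Q3": "comprehensibility",  # characteristic missing from the model above
}


def derive_measures(questions, quality_model):
    """Map each question to the measures of the characteristic it reflects."""
    return {
        question: list(quality_model.get(characteristic, []))
        for question, characteristic in questions.items()
    }


plan = derive_measures(questions, QUALITY_MODEL)
unresolved = [q for q, found in plan.items() if not found]
```

Questions whose characteristic is absent from the quality model end up with no measures, which mirrors the point made above: the refinement from goals to questions cannot reach measures without a quality model that covers the relevant characteristics.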

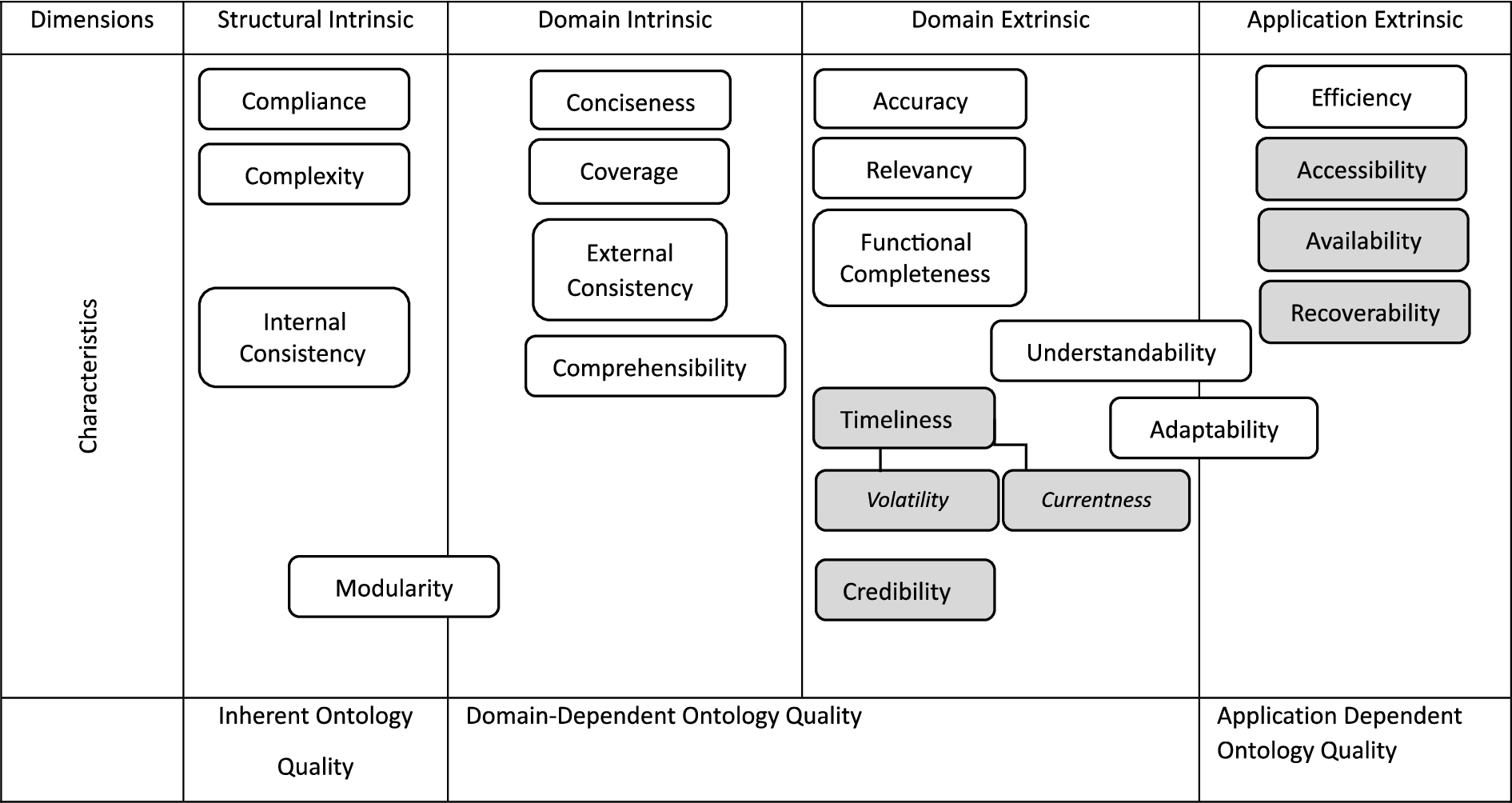

5.Classification of ontology quality characteristics

Several characteristics and measures have been discussed in the selected papers. From these, fourteen (14) significant characteristics were identified: compliance, complexity, internal consistency, modularity, conciseness, coverage, external consistency, comprehensibility (i.e., intrinsic point of view), accuracy, relevancy, functional completeness, understandability (i.e., extrinsic point of view), adaptability, and efficiency. The identified characteristics were then grouped under the four evaluation aspects, which can also be defined as dimensions, namely structural intrinsic, domain intrinsic, domain extrinsic and application extrinsic. These dimensions were derived based on the ontology evaluation space as described in Section 3. Moreover, this model has the same nature as the ISO/IEC 25012 data quality model [75], which consists of only two categories, namely inherent data quality and system-dependent data quality. However, we identified and defined three main categories for ontology quality: inherent ontology quality, domain-dependent ontology quality, and application-dependent ontology quality (see Table 6). The structural intrinsic aspect corresponds to the inherent ontology quality. The domain intrinsic and domain extrinsic aspects are grouped under the domain-dependent ontology quality. Finally, the application extrinsic aspect is mapped to the application-dependent ontology quality.

Table 6

Ontology quality model (the gray-colored characteristics were adopted from the data quality standard: ISO/IEC 25012)

In addition to the characteristics identified through the survey, a set of characteristics that can be applied to ontologies was adopted from ISO/IEC 25012, namely currentness, credibility, accessibility, availability, and recoverability [75]. Moreover, two other time-related characteristics, timeliness and volatility, were identified alongside currentness; timeliness depends on both currentness and volatility [152]. Altogether, twenty-one (21) characteristics were mapped to the ontology evaluation space. Each of them is explained in the following sections, and the definitions were derived from the discussed theories and the standard ISO/IEC 25012. The associated measures for each characteristic are presented in tables in the respective sections.

5.1.Structural intrinsic characteristics (i.e., inherent ontology quality)

The structural intrinsic aspect considers the characteristics related to the language that is used to represent knowledge and the associated inherent quality of an ontology, i.e., ontology structural properties and internal consistency. The evaluation of the characteristics under this aspect does not depend on knowledge of the domain that the ontology models. This is because the structural intrinsic characteristics are evaluated against the rules, specifications, and guidelines defined in the ontology representation language, not against the domain knowledge that the ontology models. Moreover, many of these characteristics have quantitative measures. As a result, many tools such as OntoQA [132], OOPS! [111], OntoMetrics [90], XD analyzer [30], ontologyAnalyzer [7,126] and Delta [86] have automated the measures of structural intrinsic characteristics. Thus, ontology developers can readily use these tools for the structural intrinsic evaluation of an ontology (see Table 13).

Table 7

Measures of characteristics related to the structural intrinsic aspect

| Characteristic | Attribute | Measures |
| --- | --- | --- |
| Compliance | Lawfulness | The total number of breached rules in the ontology divided by the number of statements in the ontology [5,24,98,114]. |
| | Richness | The total number of syntactical features used in the ontology divided by the total number of possible features in the ontology [5,24,98,114]. |
| | Pitfalls | The number of pitfalls related to the ontology languages (i.e., as explained in OOPS!) [111]. |
| Complexity | Size | The number of classes, number of attributes, number of binary relationships, and number of instances [153]. The number of nodes in the ontology graph, the maximal length of the path from a root node to a leaf node, the number of leaves in the ontology graph, the number of nodes that have leaves among their children, and the number of arcs in the ontology graph [50,54]. |
| | Depth | Absolute depth, average depth, minimal depth, maximal depth; dispersion of depth; dispersion of depth divided by the average depth [50,54]. |
| | Breadth | Average breadth; average relation of adjacent levels breadth; maximal relation of adjacent levels breadth; the ratio of dispersion of relations of adjacent levels breadth to the average relation of adjacent levels breadth [50,54]. |
| | Fan-outness | The average number of leaf-children in a node, the maximal number of leaf-children in a node, the minimal number of leaf-children in a node; dispersion of the number of leaf-children in a node [54]. |
| | Tangledness | The number of nodes with several parents, the ratio of the number of nodes with several parents to the number of all nodes of an ontology graph; the average number of parent nodes of a node [50,54]. |
| | Cycles | The number of cycles in an ontology, the number of nodes that are members of any of the cycles divided by the number of all nodes of an ontology graph [50,54]. |
| | Relationship Richness | The number of non-inheritance relationships (P) divided by the total number of relationships defined in the schema (the sum of the number of inheritance relationships (H) and non-inheritance relationships (P)) [10,97,133]. |
| | | The ratio of the number of relationships that are being used by instances Ii that belong to Ci (P(Ii, Ij)) to the number of relationships that are defined for Ci at the schema level (P(Ci, Cj)) [133]. |
| | Inheritance Richness | The average number of subclasses per class [133]. |
| | Attribute Richness | The average number of attributes (slots) per class [10,133]. |
| | Class Richness | The number of non-empty classes (classes with instances) (C′) divided by the total number of classes defined in the ontology schema (C) [133]. |
| | Semantic Variance | Given an ontology O, which models in a taxonomic way a set of concepts C, the semantic variance of O is computed as the average of the squared semantic distance d(·, ·) between each concept ci ϵ C in O and the taxonomic root node of O. The mathematical expression of the semantic variance can be found in [116]. |
| Internal Consistency | – | The number of subclass partitions with common classes, the number of subclass partitions with common instances, the number of exhaustive subclass partitions with common classes, and the number of exhaustive subclass partitions with common instances [58,114]. |
| | | The number of logical consistencies (i.e., using reasoners) [68,152]. |
| Modularity | Cohesion | The number of ontology partitions, the number of minimally inconsistent subsets, and the average value of axiom inconsistencies [94]. The number of root nodes, the maximal length of simple paths, the total number of reachable nodes from roots, and the average depth of all leaf nodes [153]. The average number of connected components (classes and instances) [38,133]. |
| | Coupling | The ratio of the number of hierarchical relations that are disconnected after modularization to the total number of relations, and the ratio of the number of disconnected non-hierarchical relations to the total number of relations after ontology modularization [104]; the total number of relationships that instances of the class have with instances of other classes [133]; the number of classes in external ontologies that are referenced by the discussed ontology [107]. |
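To make the schema-level richness measures in Table 7 concrete, the following minimal Python sketch computes relationship, inheritance, attribute, and class richness for a toy ontology. The `ToyOntology` container, its field names, and the example data are hypothetical and introduced only for illustration; they are not taken from OntoQA [133] or any other tool cited here.

```python
from dataclasses import dataclass, field


@dataclass
class ToyOntology:
    """Hypothetical in-memory summary of an ontology (illustration only)."""
    classes: set                       # class names (C)
    subclass_of: set                   # (child, parent) pairs: inheritance relationships (H)
    object_properties: set             # (name, domain, range): non-inheritance relationships (P)
    attributes: set                    # (class, attribute name) pairs
    instances: dict = field(default_factory=dict)  # instance name -> class name


def relationship_richness(o: ToyOntology) -> float:
    """|P| / (|H| + |P|), following the wording of Table 7."""
    p, h = len(o.object_properties), len(o.subclass_of)
    return p / (p + h) if (p + h) else 0.0


def inheritance_richness(o: ToyOntology) -> float:
    """Average number of subclasses per class."""
    return len(o.subclass_of) / len(o.classes) if o.classes else 0.0


def attribute_richness(o: ToyOntology) -> float:
    """Average number of attributes per class."""
    return len(o.attributes) / len(o.classes) if o.classes else 0.0


def class_richness(o: ToyOntology) -> float:
    """Share of classes that have at least one instance."""
    populated = set(o.instances.values()) & o.classes
    return len(populated) / len(o.classes) if o.classes else 0.0


# Assumed example data, not drawn from the surveyed papers.
toy = ToyOntology(
    classes={"Person", "Student", "Teacher", "Course"},
    subclass_of={("Student", "Person"), ("Teacher", "Person")},
    object_properties={
        ("teaches", "Teacher", "Course"),
        ("enrolledIn", "Student", "Course"),
        ("advises", "Teacher", "Student"),
    },
    attributes={("Person", "name"), ("Course", "title"), ("Course", "credits")},
    instances={"alice": "Student", "cs101": "Course"},
)

metrics = {
    "relationship_richness": relationship_richness(toy),
    "inheritance_richness": inheritance_richness(toy),
    "attribute_richness": attribute_richness(toy),
    "class_richness": class_richness(toy),
}
```

For this toy input, three of the five schema relationships are non-inheritance ones and two of the four classes are populated, so relationship richness is 0.6 and class richness is 0.5. A real evaluation would extract these counts from an OWL file rather than from hand-written sets.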

5.1.1.Compliance

In this study, the term compliance is used to denote language conformity (i.e., syntactic correctness) and adherence to the guidelines and specifications provided by the ontology language. Language conformity refers to “how the syntax of the ontology representation conforms to an ontology language” [58]. To this end, Rico et al. [114] defined the term syntactic correctness by adopting the definition provided in [24] as “the quality of the ontology according to the way it is written”. Moreover, they used the measures of the attributes lawfulness and richness proposed by Burton-Jones et al. [24] to gauge syntactic correctness (see Table 7). Similarly, Zhu et al. [153] described the term well-formedness as “syntactic correctness with respect to the rules of the language in which it is written”. Furthermore, Neuhaus et al. [102] stated that syntactic well-formedness is an important criterion for a well-built ontology. They discussed syntactic correctness as a part of the craftsmanship dimension, which considers “whether an ontology is well-built in a way that adheres to established best practices”. In addition, Poveda-Villalón et al. [111] discussed ontology compliance by considering not only syntactic correctness but also the standards, i.e., specifications and guidelines/styles, introduced for the ontology language (i.e., OWL [99,106]). For instance, in addition to syntactic correctness, the authors in [111] considered whether developers use the primitives provided in the ontology implementation languages correctly. For example, if we consider the Web Ontology Language (OWL), there are a few pitfalls (i.e., style issues [99,106]) related to the ontology primitives such as defining “

Definition 1 (Compliance).

Compliance refers to the degree to which the ontology being constructed is in accordance with the rules, specifications and guidelines defined in the ontology representation language.
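As a rough illustration of how the lawfulness and richness ratios listed in Table 7 could be computed, consider the Python sketch below. The function names, the feature list, and the input counts are assumptions made for this example; in practice, the number of breached rules would come from a syntax validator or a tool such as OOPS!, whose output format is not modeled here.

```python
def lawfulness_ratio(breached_rules: int, statements: int) -> float:
    """Breached rules per statement, following the wording of Table 7.

    A lower value indicates fewer language-rule violations per statement.
    """
    if statements <= 0:
        raise ValueError("the ontology must contain at least one statement")
    return breached_rules / statements


def syntactic_richness(features_used: set, features_available: set) -> float:
    """Share of the language's syntactic features that the ontology uses."""
    if not features_available:
        raise ValueError("the set of available features must not be empty")
    return len(features_used & features_available) / len(features_available)


# Hypothetical feature inventory; a real one would be taken from the
# specification of the ontology language being assessed.
AVAILABLE = {
    "Class", "ObjectProperty", "DatatypeProperty", "subClassOf",
    "disjointWith", "inverseOf", "FunctionalProperty", "equivalentClass",
}
USED = {"Class", "ObjectProperty", "subClassOf", "disjointWith"}

lawfulness = lawfulness_ratio(breached_rules=3, statements=120)
richness = syntactic_richness(USED, AVAILABLE)
```

With these assumed inputs, three breached rules across 120 statements give a ratio of 0.025, and four of the eight listed features are used, giving a richness of 0.5.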

5.1.2.Complexity

Complexity describes the topological properties of an ontology [48,49]. No definition was found in the selected papers; nevertheless, several attributes, such as depth, breadth, fan-outness, category size, and semantic variance, have been described to measure the complexity of ontologies (see Table 7). Moreover, complexity is also referred to as cognitive complexity in [81,124], because it influences how well users can understand the ontological structure and interpret the knowledge that the ontology models. For instance, it is difficult for humans to understand an ontology with a thousand terms [81], which consequently tends to have high depth, breadth, and tangledness [50,54]. To this end, the authors in [50] defined depth, breadth, and tangledness as a set of parameters (i.e., attributes) that adversely affect ontology cognitive ergonomics. Cognitive ergonomics has been defined as a principle related to the quality of an ontology that considers whether “an ontology can be easily understood, manipulated, and exploited by end-users” [49,50]. The same principle and structural attributes (i.e., depth, breadth, fan-out, circularity) have been adopted to assess the structure of educational ontologies in order to observe the balance of the ontology structure and its perception by users [54]. Moreover, complexity is an important characteristic that provides evidence of the redundancy, reliability, and efficiency of an ontology [54,81,116,153]. For instance, Sánchez et al. [116] stated that “the larger the topological features (i.e., average and variance of the taxonomic depth, and the maximum and variance of the taxonomic breadth), the higher the probability that the ontology is a reliable one”. On the other hand, increased complexity affects searching efficiency [41,81]. For instance, Evermann et al. [41] showed that searching for instances takes longer as the number of category (i.e., concept) levels increases.
Based on these observations, complexity can be defined as in Definition 2.

Definition 2 (Complexity).

Complexity refers to the extent to which the ontology is complicated.
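The structural attributes discussed above (depth, breadth, and tangledness) can be sketched on a small taxonomy as follows. This is a simplified illustration under stated assumptions: the taxonomy is a hypothetical acyclic graph with a single root, depth is counted as the number of nodes on each root-to-leaf path, and levels for breadth are assigned by shortest distance from the root. The precise formulations in [50,54] may differ in these conventions.

```python
from collections import Counter, deque

# Hypothetical taxonomy: parent -> list of direct subclasses (assumed acyclic).
CHILDREN = {
    "Thing": ["Person", "Course"],
    "Person": ["Student", "Teacher"],
    "Student": ["TeachingAssistant"],
    "Teacher": ["TeachingAssistant"],
}


def root_to_leaf_paths(children: dict, root: str) -> list:
    """Enumerate every path from the root to a leaf (requires an acyclic graph)."""
    paths, stack = [], [[root]]
    while stack:
        path = stack.pop()
        kids = children.get(path[-1], [])
        if not kids:
            paths.append(path)
        for kid in kids:
            stack.append(path + [kid])
    return paths


def level_sizes(children: dict, root: str) -> list:
    """Number of nodes per level, with levels set by shortest distance from the root."""
    level = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for kid in children.get(node, []):
            if kid not in level:
                level[kid] = level[node] + 1
                queue.append(kid)
    sizes = Counter(level.values())
    return [sizes[i] for i in range(max(sizes) + 1)]


def tangledness(children: dict) -> float:
    """Ratio of nodes with more than one parent to all nodes."""
    parent_count = Counter(kid for kids in children.values() for kid in kids)
    nodes = set(children) | set(parent_count)
    multi_parent = sum(1 for node in nodes if parent_count[node] > 1)
    return multi_parent / len(nodes)


depths = sorted(len(path) for path in root_to_leaf_paths(CHILDREN, "Thing"))
absolute_depth = sum(depths)                   # sum over all root-to-leaf paths
average_depth = absolute_depth / len(depths)
maximal_depth = max(depths)
breadths = level_sizes(CHILDREN, "Thing")
average_breadth = sum(breadths) / len(breadths)
tangled_ratio = tangledness(CHILDREN)
```

In this toy taxonomy only `TeachingAssistant` has several parents, so one of six nodes is tangled. The path enumeration grows quickly with the number of multi-parent nodes, so this approach is only suitable for small graphs.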

5.1.3.Internal consistency