Abstract

Delivery robots and personal cargo robots increasingly share space with incidentally co-present persons (InCoPs) on pedestrian ways and face the challenge of socially adequate and safe navigation. Humans effortlessly negotiate this shared space by signalling their skirting intentions via non-verbal gaze cues. In two online experiments, we investigated whether this phenomenon of gaze cuing can be transferred to human–robot interaction. In the first study, participants (n = 92) watched short videos in which either a human, a humanoid robot, or a non-humanoid delivery robot moved towards the camera. In each video, the counterpart either looked straight towards the camera or made an eye movement to the right or left. The results showed that when the counterpart gaze cued to their left, participants also skirted more often to the left from their perspective, so that both parties walked past each other and avoided a collision. Since the participants were recruited in a right-hand driving country, we replicated the study in left-hand driving countries (n = 176). Results showed that participants skirted more often to the right when the counterpart gaze cued to the right, and to the left in the case of eye movements to the left, extending our previous result. In both studies, skirting behavior did not differ with regard to the type of counterpart. Hence, gaze cues increase the chance of triggering complementary skirting behavior in InCoPs independently of robot morphology. Equipping robots with eyes can help to indicate moving direction through gaze cues and thereby improve interactions between humans and robots on pedestrian ways.

1 Introduction

Imagine you are on the pedestrian way to walk your dog or you are on your way to buy groceries. You greet your neighbours but also meet delivery robots, which typically look like autonomously driving boxes with six wheels [1, 2]. In Estonia, the USA, or the UK, such a scenario is not merely imagined but can happen daily, at least in certain areas. The robots drive on the pedestrian way to deliver goods or food within a range of up to 5 km, navigating autonomously using, e.g., cameras, radar, and ultrasonic sensors [3, 4]. Unavoidably, they encounter pedestrians, as in the scenario described above. Such encounters raise the question of how delivery robots and pedestrians can interact easily and intuitively so that they do not run into or even hurt each other.

The requirements for safe, successful, and pleasant human–robot interaction (HRI) can differ considerably depending on the context and modality of the interaction [5]. Most HRI scenarios consider users who willingly engage in the interaction because they work in a company or factory that uses robots for specific tasks or because they bought a robot to help them with household chores. However, on pedestrian ways, the interaction with a robot is often unintended, unplanned, and spontaneous. Rosenthal-von der Pütten and colleagues called people who find themselves in an unplanned HRI InCoPs (incidentally copresent persons). InCoPs are confronted with unplanned interactions with robots in hotels and at airports, where robots serve as receptionists and information kiosks, and increasingly also on pedestrian ways when they encounter autonomously driving delivery robots [6,7,8]. An InCoP can be a vulnerable road user, i.e., someone using the road who is not shielded by a car, but can also be a person sitting in a cafe or a child playing on a nearby playground. InCoPs are characterized as people engaging in or observing an HRI just because they “happen to be there” [9, p. 272] and not because of specific usage intentions. In this paper, we consider the case in which InCoPs encounter a delivery robot driving autonomously on the pedestrian way and are suddenly confronted with the task of negotiating space with an unknown counterpart with which they have potentially never interacted before.

Different field studies revealed that InCoPs often intuitively avoid approaching mobile robots, such as autonomous delivery robots on pedestrian ways [10, 11], and misinterpret the driving behavior of approaching service robots even when these robots attempt to avoid the InCoPs by changing their trajectory, leading to unsuccessful HRI. Babel and colleagues conclude from their observations of these misunderstandings that “an autonomous robot in public should make its current and future actions transparent and expectable” [7, p. 1633]. To foster safe and effortless navigation, we need to explore the effectiveness of different communication styles with which mobile robots can communicate their actions.

As the development of delivery robots and autonomous vehicles (AVs) proceeds, traffic research has investigated different communication styles between vulnerable road users and delivery robots or AVs, spanning both verbal and non-verbal cues [12,13,14]. Previous work found that gaze cues from AVs influence pedestrians’ safety perception [15]. A virtual head implemented on a delivery robot can improve the interaction between the robot and a human it encounters [14]. Flashing lights can be used by AVs to warn pedestrians not to cross the street when the AV is approaching [16]. Text written on an AV or delivered as a verbal message can also improve perceived safety in a pedestrian–AV interaction [17]. However, this research has mainly focused on interactions between vulnerable road users and AVs on traffic lanes, especially at crossroads, and not on shared pedestrian ways.

One approach to improving HRI is to transfer interaction mechanisms from human–human interaction (HHI) [18]. When humans approach and pass each other on pedestrian ways, their visual field is an indication of their walking direction, and gaze cues are used to intuitively avoid collision [19,20,21]. To facilitate HRI, gaze cues have been successfully implemented in different non-navigational scenarios [22]. For instance, joint attention can improve hand-over tasks between humans and robots [23]. In a collaborative hand-over task, participants were able to react faster when a robot was looking at the object than when it was looking somewhere else, similar to the concept of shared attention in HHI [23]. People recall more of the content of a story told by a robot when it looks at the conversational partner than when it looks away [24]. Also, in an online quiz, participants used gaze cues from a virtual robot to answer multiple-choice questions. Participants scored significantly better when the robot’s gaze cues led to the correct answer [25]. Even gaze aversion can be used by a robot to influence a conversation with a human [26]. However, in these examples the human interactants willingly participated in the interaction with the robots, and the scenarios do not involve navigation in potentially narrow and crowded places. InCoPs, in contrast, are usually unprepared to meet a robot. In this regard, it is interesting that recent work demonstrated that robots can influence InCoPs’ evaluation of a robot in passer-by situations. In a hallway, participants passed a robot with a head. If the robot raised its head as a cue of noticing and turned its head as a cue of movement direction, participants’ perception of the ease of passing improved [27]. However, it is unclear how InCoPs would react intuitively to gaze cues shown by approaching robots.
In the current study, we therefore explore the potential of gaze cues to convey the delivery robot’s planned trajectory and to trigger complementary navigation behavior in InCoPs for safe passing situations on pedestrian ways. In particular, we want to know whether gaze cues are perceived differently when provided by a human or by robots of different morphology (humanoid vs. non-humanoid delivery robot).

2 Related Work

2.1 Human Navigation on Pedestrian Ways

When people walk on pedestrian ways, their visual field is crucial for their walking direction [20, 21] and serves multiple purposes. When people walk straight, their eyes and head are also kept straight ahead, and when they make a turn, their visual field is turned before they start walking in the new direction [20]. People also follow the gaze of those walking ahead of them [28]. To prevent collisions with obstacles and other pedestrians, people fixate their eyes on them [21]. Furthermore, a person’s gaze behavior is influenced by the behavior of other pedestrians. In a virtual reality setting, participants looked more often at a pedestrian walking straight towards them than at two other pedestrians who changed their direction away from the participant [29]. Additionally, in a study in which participants walked through university corridors, Hessels and colleagues observed that confederates who ignored the participants were looked at the least, while confederates who tried to interact with the participants were looked at the most [30]. Hence, we visually attend to the way we are currently taking or are about to take as well as to obstacles and people in our surroundings.

Moreover, we use gaze cues to communicate with others in passing situations [14, 19]. Nummenmaa and colleagues found that gaze cues can indicate the walking direction. In a simulation study, participants skirted more often in the opposite direction, evading their counterpart, depending on the gaze cues the simulated counterpart showed (for a visual representation see Fig. 1). This means that gaze cues from an approaching human, such as staring in one direction or moving the eyes to one direction, are used to interpret the walking direction and therefore to avoid collision by skirting in the opposite direction [19]. Furthermore, when the gaze direction of the counterpart pedestrian does not match the walking direction, the chance of navigational conflicts increases [14]. In an experiment conducted in a university hallway, Hart et al. investigated whether people use gaze cues from approaching confederates to pass by. They found that the chance of a collision between a confederate and a participant increased when the confederate’s gaze direction and moving direction did not match. However, when the confederate did not show any gaze cue, e.g., because she was looking at her phone, the chance of collision did not increase. Pedestrians’ gaze behavior therefore serves two functions. On the one hand, through their visual field pedestrians are able to see whether the space in front of them is free to walk, and obstacles and other pedestrians can be identified and avoided [20, 29]. On the other hand, pedestrians are able to use gaze cues from approaching pedestrians to predict their walking direction and avoid collision on this basis [14, 19].

2.2 Gaze Cues in Human–Robot Interaction

Research has shown that gaze cues from robots can influence the interaction between humans and robots (see [22, 31] for reviews of gaze cues in HRI). Humans receive an object faster if the robot looks at the object or at the person while handing the object over in comparison to looking away [32]. In collaborative tasks, gaze cues from robots influence the perception of the robots, such as the perceived likability or perceived social presence [33]. Robots can even influence the group feeling of participants and comfortable distance between humans and robots via gaze cues [34, 35].

Gaze cues have also been used in spatial interactions between humans and robots. For situations in which a human passes a robot, Yamashita and colleagues found that participants recognize the robot’s driving direction more easily when it turns its head towards the participants and afterwards towards the driving direction [27]. In this case, the interaction was in general smoother and the robot was perceived as recognizing the participant. Angelopoulos et al. investigated how people encountering the humanoid robot Pepper in a hallway interpret its intended direction [36]. A comparison of different communication styles showed that legible navigation cues, such as head movements or deictic gestures, are necessary to increase the chance of collision avoidance. To compare different types of non-verbal communication, Hart et al. [14] conducted a laboratory study in which a robot and a participant encountered each other in a narrow hallway. The robot showed its intended driving direction using either LEDs or a virtual head on a screen mounted on top of the robot. They found that the direction cues from the virtual head decreased the number of collisions in comparison to the LED cues.

However, deictic gestures [36] and head movements [14, 36] might not be a feasible approach for delivery robots, since adding arms or a head unnecessarily increases the mechanical and control complexity of the robot as well as its vulnerability: these parts are more sensitive to weather conditions and break more easily in case of collision or vandalism. Hence, integrating just a display with eyes that provide gaze cues might be a good approach. Related to this, Chang et al. [15] explored in a VR study how human-like gaze cues from an autonomous car (with eyes on the front) affect safe interaction at a pedestrian crossing. The car either used gaze cues or did not use them to communicate the intention to yield. Participants’ task was to stop walking across the street if they felt it was unsafe to cross. Confronted with gaze cues, pedestrians made more correct decisions about whether it was safe to cross the street than when confronted with a car that did not use gaze cues.

In summary, HRI research on gaze cues has mostly focused on interactions in which the human and the robot worked together, for instance, in collaborative tasks. For passing situations, HRI research is still scarce; it has mostly focused on the influence of head movements and explored the social perception of the robot rather than passing behavior. For delivery robots, however, adding a head might be counterproductive to the purpose of the robot, i.e., to be weather-resistant, robust, and durable. Hence, adding just eyes that provide gaze cues might be more feasible and still offer the possibility of intuitive, human-like interaction.

2.3 Delivery Robots and AVs on the Streets

With the progressive development of autonomously driving delivery robots and AVs, research on interactions between these technologies and vulnerable road users is growing, investigating possible communication styles that can be implemented to facilitate interactions [15, 17, 37]. Not surprisingly, people want delivery robots whose behavior is transparent and expectable [7, p. 1633]; they behave cautiously [10, 38, 39] or report being cautious because of the lack of information on how the delivery robots might behave [40]. Meeting a delivery robot on a pedestrian way is still something new and unique, causing interest and curiosity in many InCoPs and often leading to explorative behavior [7, 39]. However, these observational field studies also show that many pedestrians are in a hurry or simply not overly interested in delivery robots. In these situations, it is essential for InCoPs to quickly assess the robot’s movement intentions in order to pass it quickly and safely, but they lack a mental model of delivery robot behavior and often also direct information provided by the robot. Yu et al. [41] addressed the first issue, the missing mental model, by providing path predictions via augmented reality elements. In a VR study, they showed participants their own predicted path as well as the path of the robot, thereby giving participants the chance to develop a mental model of the robot’s behavior in relation to their own behavior. For everyday interactions this approach does not seem feasible because it would require pedestrians to wear some kind of device. However, the researchers highlight the importance of “helping people develop a mental model of how mobile robots operate through dynamic path visualisations” [41, p. 218].

A simpler way is to equip AVs and delivery robots with communication signals that convey their intentions. Navigation communication for AVs and delivery robots is realized predominantly using light concepts (e.g., flashing lights) or verbal commands. For instance, Deb et al. [17] investigated different verbal or visual communication styles displayed on the front of an AV in a virtual reality (VR) study. Participants encountered an AV with a person sitting in the driver’s seat at a crosswalk. Results showed that participants felt safer with, and preferred, some kind of visual communication style compared to no communication at all. Furthermore, the command “walk”, either written on the front of the car or given as a verbal message, was preferred the most. As discussed above, autonomous cars can also use gaze cues to indicate whether or not they intend to yield at an intersection, as was explored in a VR study [15]. AVs are, however, different from delivery robots: most of the time they do not share the same space as pedestrians and usually meet them only at intersections and crosswalks, while delivery robots are mostly envisioned to operate on pedestrian ways and would thus be in constant contact with multiple vulnerable road users sharing the same space. Verbal commands to every single pedestrian could become annoying very quickly in a crowded space. Regarding the communication of trajectories of mobile robots on pedestrian ways, Hetherington and colleagues investigated different light concepts in a video-based online study [42]. They found that participants preferred robots that projected arrows on the ground over robots that used flashing lights. Similarly, Kannan et al. [43] investigated in an online study which kind of communication style participants would prefer if they had to interact with a delivery robot. Results showed that participants preferred a display with text or signs over lights on the delivery robot for presenting the driving direction.

The presented studies on communication signals for delivery robots explored different signal types; however, gaze cues have not been addressed so far. These studies also rely on people’s preferences and attitudes in online studies instead of actual behavior. Since interaction studies are resource-intensive and passing situations are a potential safety risk, this approach is understandable. However, as the work by Nummenmaa et al. [19] shows, online studies can also be used to study humans’ immediate reactions to video stimuli instead of only assessing preferences, which should make them a better predictor of real-world behavior.

2.4 Summary and Research Objectives

Field studies with mobile service robots have demonstrated that the navigation behavior of robots is often misinterpreted by InCoPs. Even when the robot detects InCoPs and intends to avoid the person, people often simultaneously start a (conflicting) evading maneuver, thereby causing the robot to stop to avoid a collision [7] and the humans to pass the robot circuitously. Missing transparent communication about moving intentions has been identified as the main cause of this phenomenon [7, 10, 38,39,40]. Looking at how humans solve the task of passing each other might be a fruitful approach to solving this problem for robots. Previous work explored head movements or deictic gestures, but these would require the delivery robot to actually have a head or arms/hands, which adds unnecessary complexity to the robot. Moreover, although human-like features are intuitive to humans, too many of them can evoke increased interest in pedestrians, possibly leading to unwanted extended interactions with the delivery robot and thereby hindering its purpose of quick delivery. Equipping delivery robots with basic eyes to provide gaze cues for navigation might be a good middle way, especially since gaze cues have proven useful in other HRI scenarios, often involving humanoid robots. To close the gap between the different strands of previous work, we investigated whether gaze cues from humans and from humanoid and non-humanoid robots influence people’s intuitive skirting decisions, using short videos in which different counterparts move towards the camera and show different eye movements. We are interested in whether gaze cuing effects transfer to HRI and whether these anthropomorphic cues in non-humanoid robots influence their social perception by InCoPs.

- RQ1: Can the effect of gaze cuing also be found in HRI with non-humanoid delivery robots?

- RQ2: Does equipping a non-humanoid delivery robot with anthropomorphic cues (eyes and gaze cues) influence the social perception of the robot?

If a gaze cuing effect is present, we expect, based on the work by Nummenmaa et al. [19], that:

- H1: Eye movements to the right or left from a human increase the probability to skirt to the opposite direction.

- H2: Eye movements to the right or left from a humanoid robot increase the probability to skirt to the opposite direction.

To investigate the research questions and hypotheses, we conducted two online studies: Study 1 in a right-hand driving country and Study 2 in left-hand driving countries, in order to control for automatism effects caused by left-/right-hand traffic.

3 Study 1

To investigate the above-mentioned hypotheses and research questions, we conducted a 3 (Counterpart) \(\times\) 3 (Gaze Cues) online experiment as an extension of Nummenmaa et al.’s work [19]. Participants watched short videos in which a counterpart walked or drove towards the camera in a hallway. The Counterpart (between-subject factor) was either a human, the humanoid robot Pepper, or a non-humanoid delivery robot. In each video, the counterpart showed different Gaze Cues (within-subject factor): they either looked straight ahead or made an eye movement to the right or left.

3.1 Methods

3.1.1 Videos

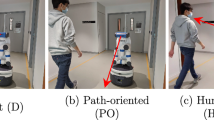

All videos showed the same scenario of a narrow corridor (cf. Fig. 2). The camera was at eye level, and the picture zoomed in over time to give participants the impression of walking forwards. In each video, one of the three counterparts came towards the camera (see Fig. 3 for an example). The human was a young Northern European woman with no walking disabilities. She walked straight towards the camera without moving her head. In the video with straight eyes, she did not move her eyes but looked straight into the camera. In the videos with an eye movement, she started with straight eyes and moved her eyes to the left or right and back to straight while walking straight ahead. She continued until the end of the video. The humanoid robot was Pepper, a white robot with movable arms and head. It has one leg with a broad foot that has small wheels underneath to move around [44]. Since Pepper normally cannot move its eyes, we edited moving eyes into the video at the position of its fixed eyes. To avoid distracting the participants, we also edited the video so that the display on Pepper’s chest appeared to be turned off. The non-humanoid delivery robot is a mock-up with six wheels designed and built at the Chair Individual and Technology (iTec) at RWTH Aachen University. Using a Wizard-of-Oz design, we drove the delivery robot towards the camera by remote control [45]. A tablet attached to the front of the delivery robot showed the eyes. In the straight-eyes condition, both robots had straight eyes. In the eye-movement conditions, the eyes on Pepper’s face and on the tablet of the delivery robot started out looking straight and moved to the right or left while the robot was driving, similar to the human condition. The two robots approached at a velocity of approx. 1.3 km/h. The human walked at average walking speed. The camera focus was on the counterparts’ eyes. Since Pepper and the delivery robot were smaller than the woman, the camera perspective differed slightly. Each video lasted approx. 5 s.

3.1.2 Measurements

While watching the videos, participants were asked to indicate in which direction they would skirt by pressing one of two keys on the keyboard (F = skirt to the participant’s left, J = skirt to the participant’s right, see Fig. 4). Hence, skirting direction was a binary measure. Additionally, as a quality measure, we recorded the reaction time from the moment the video started until the participant pressed a key. If participants needed too much time to indicate the skirting direction (more than 5 s) or responded too fast (under 2 s), the trial counted as a fail. The threshold of 2 s was set because watching the video for only 2 s did not ensure that participants had noticed the eye movement serving as gaze cue. Reaction times higher than 5 s mean that participants did not react while watching the video but only afterwards.
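
The trial-validity rule described above can be sketched in a few lines of Python. This is only an illustrative sketch and not the study's analysis code (which was written in R); the function and constant names are hypothetical. Responses at exactly 2 s or 5 s are treated as valid here, following the wording "under 2 s" and "more than 5 s".

```python
# Illustrative sketch only; all names are hypothetical and not taken from the study's scripts.
MIN_RT_S = 2.0  # responses faster than this may precede the gaze cue
MAX_RT_S = 5.0  # responses slower than this occur after the video has ended

# Key mapping as described in the text (directions from the participant's perspective).
KEY_TO_DIRECTION = {"F": "left", "J": "right"}


def classify_trial(key, reaction_time_s):
    """Return the skirting direction for a valid trial, or None for a failed trial."""
    if key not in KEY_TO_DIRECTION:
        return None  # no usable key press
    # Boundary values (exactly 2 s or 5 s) are kept, matching "under 2 s" / "more than 5 s".
    if not (MIN_RT_S <= reaction_time_s <= MAX_RT_S):
        return None
    return KEY_TO_DIRECTION[key]
```

For example, `classify_trial("F", 3.2)` yields a valid left skirt, whereas `classify_trial("J", 1.4)` is counted as a fail.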

Additionally, participants completed several questionnaires. To investigate participants’ social attributions towards the two robots, they filled in the Robotic Social Attributes Scale (RoSAS) [46]. It has three subscales, warmth (\(\alpha =.91\)), competence (\(\alpha =.84\)), and discomfort (\(\alpha =.82\)), with six items each; for example, the items happy and social belong to the subscale warmth. For each item, participants indicated on a 9-point Likert scale how much they associated the robot they had seen with the item.

To further explore the characteristics of the sample, participants were asked about their attitudes towards robots using the Negative Attitudes towards Robots Scale (NARS) [47], a scale assessing their expectations with regard to the robots seen in the videos [48, 49], and their prior experience with robots [50]. The English version of the NARS (\(\alpha =.80\)) [47] has three subscales: future/social influence (3 items, e.g., “I feel that if I depend on robots too much, something bad might happen”), relational attitudes (5 items, e.g., “I would feel uneasy if robots really had emotions”), and actual interactions and situations (3 items, e.g., “I would feel very nervous just standing in front of a robot”), each rated on a 5-point Likert scale (“I do not agree at all.” to “I agree completely.”). The expectations towards robots were assessed using the subscale Expectedness (4 items, e.g., “The robot’s behavior is appropriate.”, 5-point Likert scale from strongly disagree to strongly agree, \(\alpha =.75\)) [48, 49]. Where necessary, we changed the entity’s name to “robot”. We measured participants’ previous experiences with robots using the subscale Robot-related experiences from MacDorman and colleagues (5 items, e.g., “How many times in the past one (1) year have you read robot-related stories, comics, news articles, product descriptions, conference papers, journal papers, blogs, or other material?”, 6-point Likert scale from 0 to 5 or more, \(\alpha =.82\)) [50].

3.1.3 Procedure

The experiment was conducted online using the survey platform SoSci-Survey [51]. After participants gave their consent, the experiment started with an explanation of the procedure and one video as a test trial. Then, the videos showing the counterparts and gaze cues started. Since Counterpart was a between-subjects factor and Gaze Cues a within-subjects factor, participants watched either the human, Pepper, or the delivery robot presenting the different gaze cues. Each video was shown 5 times, meaning that each participant watched 15 videos in total. While watching the randomly ordered videos, participants were asked to indicate in which direction they would skirt by pressing one of two keys on the keyboard (F = skirt to the participant’s left, J = skirt to the participant’s right, see Fig. 4). Participants were informed that the experiment was not a speed test. However, they were instructed to press one of the keys as soon as they had decided in which direction to skirt. The video ended either when the participant pressed one of the keys or when it finished after 5 s.
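
The within-subject trial structure (three gaze cues, each shown five times, in random order) can be illustrated with a short sketch. It assumes an unconstrained shuffle of all 15 trials; the randomization actually performed by the survey platform may differ, and all names are hypothetical.

```python
import random

# Hypothetical labels for the three within-subject gaze-cue conditions.
GAZE_CUES = ("straight", "left", "right")
REPETITIONS = 5  # each video was shown 5 times


def build_trial_order(seed=None):
    """Return a randomly ordered list of 15 gaze-cue trials for one participant."""
    rng = random.Random(seed)
    trials = [cue for cue in GAZE_CUES for _ in range(REPETITIONS)]
    # Assumption: a plain shuffle without constraints on consecutive repeats.
    rng.shuffle(trials)
    return trials
```

Passing a `seed` makes the order reproducible for a given participant, which is a design choice of this sketch and not something the study reports.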

After all 15 videos were shown, the participants completed the questionnaires described above. We intentionally asked these questions after showing the videos to increase the participants’ feeling of a spontaneous encounter with one of the counterparts while watching the videos. Participants who saw a robot in their videos (Pepper or delivery robot) were asked questions regarding their perception of and attitudes towards robots using RoSAS [46], NARS [47] and their expectations regarding the seen robots [49, 52]. These questionnaires were not administered to the participants in the human condition. Furthermore, we asked all participants about their prior experience with robots [50]. Additionally, we assessed demographic data (age, gender, highest level of education), and participants were able to freely comment on the study. Finally, participants were thanked for their participation and debriefed about the purpose of the study.

3.1.4 Participants

Ninety-two participants were recruited via a course on inferential statistics at RWTH Aachen University over the period from 16th to 18th May 2021. Participation was voluntary, and about 50 percent of course attendees completed the study. After completion of the study and debriefing, we used the resulting data for a hands-on analysis in the same statistics course that was used for recruiting. Thirteen participants did not complete the survey and were therefore excluded, leaving 79 participants (27 women, 48 men, 2 diverse people, and 2 people who did not specify their gender) for data analysis. The average age was \(M=22.94\) (\(SD=3.11\)). Forty-six participants had a high-school degree. Twenty-three participants were students with a Bachelor’s degree and ten participants had a Master’s degree.

Twenty-eight participants saw the human counterpart approaching. In 31 cases, Pepper drove towards the participants, and 20 participants watched the non-humanoid delivery robot driving towards them. Participants did not significantly differ in their prior knowledge of robots (\(n=79\), \(F(2,76) = 1.2\), \(p = 0.3\)). Additionally, participants who watched the two robots (\(n=51\)) did not significantly vary in their attitudes towards robots (NARS; future/social influence: \(t(42.53) = -\,0.40\), \(p = 0.69\); relational attitudes: \(t(45.71) = -\,0.14\), \(p = 0.89\); actual interactions and situations: \(t(35.69) = 0.04\), \(p = 0.96\)) and participants’ expectations did not significantly vary between conditions (\(n=51\), \(t(47.71)=0.15\), \(p=0.88\)).

3.2 Results

3.2.1 Data Cleansing Procedure

We only included data points with a reaction time between 2 and 5 s. At the beginning of the videos, the counterpart is far down the hallway and approaches slowly, so it is still too far away for the eyes to be seen. Reaction times higher than 5 s mean that the video had already ended, indicating that participants did not decide spontaneously or were distracted. Hence, for reaction times outside the range of 2–5 s, participants’ perception of the gaze cues cannot be ensured, and these trials were excluded from data analysis. After the cleansing procedure, a total of \(n=645\) data points were included in the analysis.

3.2.2 Skirting Direction

For data analysis, we used the statistical environment R [53] and the package lme4 [54], which allows for mixed-effects modeling. To investigate the influence of Gaze Cues and the type of Counterpart on the skirting direction, we fitted a generalized linear mixed-effects model for binary data with Gaze Cues and Counterpart as fixed effects, participant ID as a random effect (random intercepts, SD = 1.71), and skirting direction as the binary criterion; this was the maximal model that converged [55]. Since both fixed effects were nominally scaled with three levels each, we compared the human condition with the other two counterparts and straight eyes with the two eye movements.
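
Written out, a random-intercept logistic model of this kind takes the following form. The notation is introduced here for illustration only and is an assumption: \(Y_{ij}=1\) denotes skirting to the right in trial \(i\) of participant \(j\), the predictors are dummy variables relative to the reference levels straight eyes and human, and only main effects are included, as the description above suggests.

\[
\operatorname{logit} P(Y_{ij}=1) = \beta_0 + \beta_1\,\mathrm{GazeLeft}_{ij} + \beta_2\,\mathrm{GazeRight}_{ij} + \beta_3\,\mathrm{Pepper}_{j} + \beta_4\,\mathrm{Delivery}_{j} + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
\]

Here \(u_j\) is the participant-specific random intercept, whose estimated standard deviation corresponds to the reported SD of 1.71.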

Results showed that if the counterpart moved their eyes to the left, the probability of skirting to the right decreased significantly (\(b=-\,0.94\), \(p<0.001\)). Hence, the probability of skirting to the left increased significantly. However, there was no significant influence of gazing to the right (\(b=0.10\), \(p=0.69\)). If the counterpart moved their eyes to the left, participants skirted to the left in 59% of the trials, whereas if the counterpart moved their eyes to the right or looked straight, participants skirted to the left in 45% of the trials or fewer (cf. Table 1 and Fig. 5). The type of counterpart also had no significant influence on the probability of skirting to the right (Human vs. Pepper: \(b=-\,0.90\), \(p=0.11\); Human vs. Delivery Robot: \(b=0.14\), \(p=0.82\)). However, it is worth mentioning that, descriptively, participants showed quite similar skirting behavior when the human (47% skirts to the left) or the delivery robot (40% skirts to the left) approached, whereas participants skirted to the left in 61% of all cases when Pepper came towards the camera (cf. Table 1).
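
Because the model is logistic, the reported coefficients \(b\) are on the log-odds scale, and exponentiating them gives odds ratios relative to the reference condition. The following minimal sketch illustrates this conversion for the two gaze coefficients reported above; the function name is hypothetical, and the resulting odds ratios hold for a given participant, i.e., conditional on the random intercept.

```python
import math


def odds_ratio(b):
    """Convert a logistic-regression coefficient (log-odds) into an odds ratio."""
    return math.exp(b)


# Gaze coefficients as reported in the text (outcome: skirting to the right).
gaze_left_or = odds_ratio(-0.94)   # approx. 0.39
gaze_right_or = odds_ratio(0.10)   # approx. 1.11
```

An odds ratio of about 0.39 means that, for a given participant, the odds of skirting to the right after a gaze cue to the left were roughly 0.39 times the odds in the straight-eyes condition.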

3.2.3 Further Results

For the analysis of the social attributions to robots (RoSAS), only participants who had watched a robot were included (\(n=51\)). We found no significant differences on the sub-scales warmth (\(t(40.14) = -\,0.66\), \(p = 0.51\)) and competence (\(t(45.52) = -\,0.63\), \(p = 0.53\)). However, there was a significant difference between Pepper (\(M = 3.57\)) and the delivery robot (\(M = 2.66\)) on the sub-scale discomfort (\(t(46.43) = -\,2.14\), \(p = 0.04\)). The data and R scripts are available through the Open Science Framework storage [56].
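The fractional degrees of freedom reported above suggest Welch's unequal-variances t-test, which is the default of R's `t.test`; the text does not name the test, so this is an assumption. The Python sketch below shows how such a statistic and its degrees of freedom are computed. The function name and the ratings are made up for illustration and are not the study's data.

```python
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return (t, df) for Welch's unequal-variances t-test on two independent samples."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / (se2_a + se2_b) ** 0.5
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Made-up discomfort ratings, for illustration only.
delivery_robot = [2.0, 3.0, 2.5, 3.5, 2.0]
pepper = [3.0, 4.5, 3.5, 4.0, 2.5, 4.0]
t, df = welch_t(delivery_robot, pepper)
print(f"t({df:.2f}) = {t:.2f}")
```

The degrees of freedom are generally not an integer and lie between the smaller group's \(n-1\) and the pooled \(n_1+n_2-2\), which is why values such as 46.43 can appear for 51 participants.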

3.3 Discussion

In this first study, we investigated whether gaze cues have an influence on the decision of skirting in HHI and HRI. In an online-experiment participants watched short videos in which either a human, the humanoid robot Pepper, or a non-humanoid delivery robot moved towards the camera showing either an eye-movement to the left or right or looking straight.

3.3.1 Skirting Direction

Results showed that gaze cues partly influence the skirting direction. Only if a counterpart looked to the left did participants skirt to the left more often. If a counterpart showed gaze cues to the right, however, the probability of skirting to the right did not increase compared to the case of straight eyes. This partially fits the results of Nummenmaa et al. [19], who found that people skirt to the opposite direction in case of gaze cues shown by a human (for a visual representation see Fig. 1). However, they compared left and right gaze cues without a condition of straight eyes. In contrast, we used a third condition as control condition, showing that in case of straight eyes participants tend to show similar skirting behavior as in case of gaze cues to the right (cf. Fig. 5). The participant sample in the first study was recruited from a university course at RWTH Aachen University in Germany, a right-hand driving country in which people might tend to skirt intuitively to the right when no other direction cues are presented. Moreover, the majority of the participants were presumably right-handed and thereby might have had a stronger tendency to press the right button instead of the left button, which makes it difficult to attribute our findings solely to a tendency to skirt right because of being used to right-hand traffic. Study 2 therefore addresses the question whether the effect in skirting behavior is due to right-hand driving in Germany.

Moreover, we did not find a significant influence of the counterpart on the skirting direction. Specifically, the skirting behavior was quite similar when the human or the delivery robot was seen in the video (cf. Table 1). However, even though results were not significant (\(p=0.11\)), descriptive statistics showed differences between Pepper and the human. While participants skirted to the right in 53% of the cases if the human approached, they did so in only 39% of the cases when Pepper drove towards the camera.

3.3.2 Social Attributions to Robots

Regarding the social attributions of warmth and competence, results showed no significant difference between the non-humanoid delivery robot and the humanoid robot Pepper. However, participants reported significantly more discomfort when watching Pepper. Usually, Pepper does not have moving eyes. For this study, we edited moving eyes, similar to those of the delivery robot, onto Pepper. The video editing might have limited the believability of the eye movement, resulting in possible disappointment [57].

4 Study 2

4.1 Research Plan and Hypotheses

After conducting the first study, the question remains whether the results regarding the gaze cues are due to the fact that the first sample was recruited in Germany, a right-hand driving country, or due to the handedness of the participants. Therefore, we addressed this question in a follow-up study for which we recruited participants from countries with left-hand driving. In left-hand driving countries, people should have the natural tendency to skirt to the left (not to the right). Hence, we expected the reverse of the result found in Study 1: participants should be influenced by gaze cues to the right, increasing the probability of skirting to the right. We thus pose the following hypothesis:

- H3: Eye movements from (a) a human, (b) a humanoid robot and (c) a non-humanoid delivery robot to the right increase the probability to skirt to the right in left-hand driving countries.

4.2 Methods

4.2.1 Online-Experiment

The design and procedure of the second experiment were similar to those of the first experiment: a 3 (Counterpart, between-subject) \(\times \) 3 (Gaze-Cues, within-subject) online-experiment. We used the same videos as in the first study to be able to compare the results of the two studies. However, we included two questions to check whether participants were attentive and recognized the gaze cues: “What did the robot/human do in the videos?” and “What did the eyes of the robot/human do?”. Again, participants watched 15 videos of one of the counterparts and had to decide when and where to skirt. The same measurement instruments were used. Additionally, participants were asked for their country of origin and handedness. The inclusion criterion for this study was that participants live in a left-hand driving country. Thus, recruiting was performed in 22 English-speaking left-hand driving countries (e.g., Australia, Jamaica and the United Kingdom). Furthermore, participants completed attention checks. They were asked to describe the scenario of the video (“Please briefly describe the scenery of the videos you just watched.”) and the clothes of the human (“Please briefly describe what the person was wearing in the videos you just watched.”) or the design of the robot (“Please briefly describe the robot you saw in the videos.”). As compensation, participants received $3 for their participation.

4.2.2 Participants

Participants (\(N=176\)) were recruited via Amazon Mechanical Turk in the period from 21st December 2021 to 2nd January 2022 [58]. Forty-eight participants had to be excluded based on the following exclusion criteria: failing the included attention check, not recognizing the counterparts’ eyes, not finishing the study, or not currently living in a left-hand driving country. The remaining 128 participants included in the data analysis were 88 men and 40 women. The average age was \(M=34\) \((SD=10.58)\). Ninety-five participants had a University degree, 14 participants had some kind of technical training, and 34 participants had no or a high school degree. 86% of the participants identified themselves as European, 4% as Asian, and 3% as South-American; 2% came from Australia, and 1% identified as African. 2% of the participants had two nationalities and 2% did not indicate their nationality. 80% of the participants were right-handed, 16% were left-handed, and 3% reported being ambidextrous.

Participants did not significantly differ in their negative attitudes towards robots (NARS; \(n=84\); future/social influence: \(t(75.37) = -\,0.68\), \(p = 0.50\); relational attitudes: \(t(79.33) = -\,0.18\), \(p = 0.86\); actual interactions and situations: \(t(78.99) = -\,0.61\), \(p = 0.54\)) between robot conditions. Furthermore, participants did not significantly differ in their expectations towards the robots (\(n=84\), \(t(67.81)=1.09\), \(p=0.28\)). The participants’ prior knowledge was not significantly different between the three counterparts (\(n=128\), \(\chi ^2(2)=4.27\), \(p=0.12\)).

4.3 Results

4.3.1 Skirting Direction

We only included data points with a reaction time between 2 and 5 s; for the reasoning, see Study 1. After the cleansing procedure, a total of \(n=1330\) videos were part of the analysis. Similar to the first study, we used the maximal converging generalized linear mixed model for binary data with counterpart and gaze cues as fixed effects and participant ID as random intercept (SD = 1.31), utilizing the R package lme4 [53, 54]. The conditions human and straight eyes were used as references for their respective fixed effects. Results showed that gaze cues to the right (\(b=0.37\), \(p=0.02\)) and to the left (\(b=-\,0.34\), \(p=0.04\)) had a significant influence on the skirting direction. If the human or robots moved their eyes to the right, participants skirted to the right in 44% of the cases. In comparison, if the counterpart moved their eyes to the left, the chance to skirt to the right was 31%, and if they looked straight forward, the chance was 37% (cf. Table 2 and Fig. 6). However, there was no significant influence of the counterpart (Human vs. Pepper: \(b=0.18\), \(p=0.61\); Human vs. Delivery Robot: \(b=-\,0.21\), \(p=0.34\)). Participants skirted to the right in 37% of the trials with an approaching human, in 35% of the trials in which Pepper came towards the camera, and in 40% of the trials in which the delivery robot approached (cf. Table 2).

4.3.2 Further Results

Regarding the participants’ (\(n=128\)) social attributions towards robots (RoSAS), there were no significant differences on the sub-scales warmth (\(t(81.47)=-\,0.02\), \(p=0.98\)), competence (\(t(67.76)=0.20\), \(p=0.84\)) and discomfort (\(t(79.04)=-\,0.53\), \(p=0.59\)). The data and R scripts are available through the Open Science Framework storage [56].

5 Overall Discussion

In the empirical work described above, we investigated whether gaze cues from approaching humans and robots influence participants’ skirting decisions to avoid collision. In two online-experiments, participants watched short videos in which either a human, the humanoid robot Pepper, or a non-humanoid delivery robot moved towards the camera, showing either an eye-movement to the left or right or looking straight. The aim was to explore whether gaze cues can be used to facilitate safe passing situations between autonomous delivery robots and InCoPs. In HHI, gaze cues are used to signal to other pedestrians whether someone wants to skirt left or right to pass by, as shown in an online study by Nummenmaa et al. [19]. Our studies conceptually replicate this work and investigate whether this mechanism can be transferred to HRI.

5.1 Gaze Cues

Within the two conducted studies we observed that participants use gaze cues to analyse the counterpart’s walking or driving direction. While Nummenmaa et al. discovered that gaze cues influence participants’ skirting decision in general [19], we further scrutinized this effect by implementing a third condition with straight eyes. Results from our first study suggested that gaze cues might be interpreted differently depending on the country people live in, i.e. gaze cues might be differently effective depending on whether cars drive on the right or on the left side of the road. Specifically, results from inferential statistics only partly confirmed our hypotheses and research questions from the first study (H1, H2 and RQ1, cf. Sect. 2.4). Namely, eye movements significantly decreased the tendency to skirt to the right only in the case of gaze cues to the left. However, there was no significant increase of skirting to the right when the counterpart looked to the right in comparison to looking straight forward (cf. Sect. 3.2.2). A further inspection of the descriptive results of the first study showed that participants had a similar skirting behavior when the counterpart looked straight forward or looked to the right (cf. Fig. 5). This was consistent for all counterparts, i.e. a human counterpart (partial support for H1), a humanoid robot (partial support for H2), and a delivery robot (RQ1). This indicates an intuitive skirting direction to the right in case of no gaze cues in right-hand driving countries, which is not further increased in case of gaze cues to the right. However, if counterparts showed gaze cues to the left, the chance that participants skirted to the left increased. We identified two possible causes for this effect: results could have been due to the right-hand traffic in Germany, or they might have been an artifact because the majority of people are right-handed and might therefore have preferred pressing the right button. Therefore, we repeated our study with participants in left-hand driving countries to be able to clearly attribute our effect to the gaze cues.

Contrary to our expectations based on Study 1 (H3, cf. Sect. 4.1), the influence of gaze cues on skirting behavior was not limited to left gaze cues in the second study. Gaze cues to the left and to the right increased the participants’ chance to skirt to the left and right, respectively (cf. Fig. 6). In contrast to Study 1, results of Study 2 suggest that gaze cues generally influence the skirting behavior, as suggested by Nummenmaa et al. [19], and could therefore be used as an effective mechanism to navigate safely in shared public spaces such as pedestrian ways.

An open question is, however, why results differ between our two online studies. We identified enhanced data quality management in Study 2 as a possible cause. While Study 1 was conducted as part of a study course, the execution of Study 2 was more systematic. Here, we included attention and manipulation checks to ensure that only participants who actually recognized the eyes were part of the data analysis. Furthermore, with \(n=1330\) data points, the sample that entered the analysis of the skirting direction in Study 2 was more than twice as large as that of Study 1 (\(n=645\)). Taken together, these two aspects increase the chance that extraneous influences, such as missing the eye movements in the videos or individual participant characteristics, affected our data less in Study 2 than in Study 1. Therefore, specifically Study 2 replicated and extended the results from Nummenmaa et al. [19], showing that gaze cues to the left and right do increase the tendency to skirt to the left or right, not only in comparison to the other direction but also in comparison to looking straight. Furthermore, our results suggest that equipping especially delivery robots with eyes can increase the chance of successful intuitive interaction between humans and robots.

5.2 Type of Counterpart and Social Perception

Because research regarding gaze cues in passing situations is still scarce, we asked ourselves whether equipping a non-humanoid delivery robot with eyes via a tablet on the front of the robot might influence the social perception of the robot (RQ2, cf. Sect. 2.4).

Therefore, participants were asked about their social attributions towards the approaching robots. Results showed no significant difference on the sub-scales warmth and competence in either study. However, in the first study we found a significantly higher feeling of discomfort towards the humanoid robot Pepper in comparison to the non-humanoid delivery robot (cf. Sect. 3.2.3). On the one hand, these results show that equipping a non-humanoid robot with eyes should not negatively influence the social perception. On the other hand, they raised the question why the gaze cues negatively influenced the social perception of the humanoid robot Pepper. Originally, we explained that phenomenon with the possibility of participants being disappointed due to the limited believability of the eye movement (cf. Sect. 3.3.2). In our second study, these results were not replicated, although descriptive statistics indicate the same tendency (Pepper M = 3.11, delivery robot M = 2.92). Since we included a manipulation check regarding the eye movement in Study 2, our assumption is that the differences in Study 1 arose because some participants actually missed the gaze cues when Pepper drove towards the camera and therefore felt uncomfortable, intuitively sensing that something was off without being able to point it out. We assume that Pepper’s video-edited eyes do not perfectly fit its overall appearance, causing this effect.

These results go hand in hand with the differences in skirting behavior between the two studies. In both studies, there was no significant difference between the robots and the human counterpart with regard to the effectiveness of gaze cues (cf. Sects. 3.2.2 and 4.3.1). However, in Study 1, participants’ skirting behavior was different on a descriptive level when Pepper approached in comparison to the human or the delivery robot (cf. Table 1). In Study 2, which included a manipulation check, this pattern was not replicated (cf. Table 2). Therefore, we believe that if the eye movements of robots are clearly visible, they can positively influence the skirting direction of InCoPs and can function as gaze cues in situations where robots and humans pass each other in narrow hallways or on pedestrian ways [36].

5.3 Limitations and Implications

We conducted online studies, which eliminated the risk of infection in times of Covid-19. Moreover, an online study is a reasonable first step to investigate the potential effectiveness of gaze cues in different robot types before conducting resource-intensive laboratory and field experiments, but this approach also has its downsides. By creating videos in which the human and robots came towards the camera, we enabled participants to imagine a pedestrian scenario. However, imagining an encounter with a robot or human and pressing a key as a reaction leaves the possibility of decreased external validity. Here, further research in interaction laboratory studies and observational field studies investigating humans and robots passing each other is needed [10, 11, 27]. Since people only pressed a key as reaction and did not actually evade a counterpart, we decided to only utilize the binary reaction of left or right and not to interpret the timing of the reaction because, among other issues, this depends on the visibility of the gaze cues. Participants were asked to use a computer to participate in the studies, but the screen size was not controlled, and therefore reaction time was not interpreted. Since the timing of avoiding might be essential for successful passing, future research should investigate whether gaze cues influence the reaction time.

In the two presented studies, we only compared different types of eye movements in HHI and HRI in a hallway to see whether gaze cues can help in interacting with passing robots and humans. However, we did not consider other possible cues to show moving direction, such as head movements or signs. This leaves space for future research to compare gaze cues with other possible cues [14] to benchmark their effectiveness. Additionally, depending on the robot’s task, spontaneous interactions with robots can happen in hallways (e.g., in hospitals [59]) but also outside on the streets [10]. Therefore, future research should investigate how gaze cues in HRI work in different kinds of contexts and situations.

As mentioned above, by utilizing videos we were able to let the participants imagine the scenario of walking down the corridor and meeting either a person or a robot. The counterparts walked and drove straight towards the camera. As a potential limitation, we identified that the body position of Pepper might look not completely straight but slightly turned to its right (cf. Fig. 2). If this were the case and had indeed influenced our data, participants should generally have skirted to the right when watching Pepper, independently of the gaze cues. However, this was not the case (cf. Tables 1, 2). Therefore, we can assume that participants did indeed see the robot as driving straight towards the camera. Nevertheless, future research with alternative video material and alternative study methods, such as laboratory studies or field studies, should be conducted to generalize the present results.

Since delivery robots are increasingly used to deliver goods, pedestrians and robots need to share space on pedestrian ways. Equipping non-humanoid delivery robots with eyes offers the possibility to positively influence pedestrians’ skirting behavior. Therefore, future designs could implement moving eyes as visual non-verbal cues to indicate the robot’s driving behavior and decrease the risk of collision because people are able to interpret the robot’s planned direction [27, 36].

6 Conclusion

Results from two online studies support that gaze cues can be used to facilitate safe HRI passing situations. While in the first study only gaze cues to the left increased the chance to skirt to the left, results in the second study showed a significant increase of skirting in the cued direction for both directions. This difference can be explained by higher power and a cleaner data set due to manipulation and attention checks. Both results hold irrespective of who provides the gaze cues, a human or different kinds of robots, hinting at the possibility that gaze cues can be transferred from HHI to HRI.

References

Starship Technologies. Starship. https://www.starship.xyz/. Accessed 12 May 2023

Lennartz T. UrbANT. https://urbant.de/de/index.htm. Accessed 12 May 2023

Deutsche Presse-Agentur. Starship-Lieferroboter werden in Europa getestet. https://www.zeit.de/news/2016-07/06/computer-starship-lieferroboter-werden-in-europa-getestet-06164019. Accessed 12 May 2023

Marr B. The future of delivery robots. https://www.forbes.com/sites/bernardmarr/2021/11/05/the-future-of-delivery-robots/. Accessed 12 May 2023

Onnasch L, Roesler E (2021) A taxonomy to structure and analyze human–robot interaction. Int J Soc Robot 13(4):833–849. https://doi.org/10.1007/s12369-020-00666-5

Rosenthal-von der Pütten A, Sirkin D, Abrams A, Platte L (2020) The forgotten in hri: incidental encounters with robots in public spaces. In: Companion of the 2020 ACM/IEEE international conference on human–robot interaction. HRI ’20. Association for Computing Machinery, New York, NY, USA, pp 656–657. https://doi.org/10.1145/3371382.3374852

Babel F, Kraus J, Baumann M (2022) Findings from a qualitative field study with an autonomous robot in public: exploration of user reactions and conflicts. Int J Soc Robot 14(7):1625–1655. https://doi.org/10.1007/s12369-022-00894-x

Nielsen S, Skov MB, Hansen KD, Kaszowska A (2022) Using user-generated Youtube videos to understand unguided interactions with robots in public places. J Hum Robot Interact. https://doi.org/10.1145/3550280

Abrams AMH, Dautzenberg PSC, Jakobowsky C, Ladwig S, Rosenthal-von der Pütten AM (2021) A theoretical and empirical reflection on technology acceptance models for autonomous delivery robots. In: Proceedings of the 2021 ACM/IEEE international conference on human–robot interaction. HRI ’21. Association for Computing Machinery, New York, NY, USA, pp 272–280. https://doi.org/10.1145/3434073.3444662

Abrams AMH, Platte L, Rosenthal-von der Pütten A (2020) Field observation: interactions between pedestrians and a delivery robot. In: IEEE international conference on robot & human interactive communication ROMAN-2020. Crowdbot workshop: robots from pathways to crowds, ethical, legal and safety concerns of robot navigating human environments. http://crowdbot.eu/wp-content/uploads/2020/09/Short-Talk-1-Workshop_Abstract_Field-Observation_final.pdf

van Mierlo S (2021) Field observations of reactions of incidentally copresent pedestrians to a seemingly autonomous sidewalk delivery vehicle: an exploratory study. Master’s thesis, Universiteit Utrecht. http://mwlc.global/wp-content/uploads/2021/08/Thesis_Shianne_van_Mierlo_6206557.pdf

Mahadevan K, Somanath S, Sharlin E (2018) Communicating awareness and intent in autonomous vehicle-pedestrian interaction. In: Proceedings of the 2018 CHI conference on human factors in computing systems. CHI ’18. Association for Computing Machinery, New York, NY, USA, pp 1–12. https://doi.org/10.1145/3173574.3174003

Stanciu SC, Eby DW, Molnar LJ, Louis RMS, Zanier N, Kostyniuk LP (2018) Pedestrians/bicyclists and autonomous vehicles: how will they communicate? Transp Res Rec 2672(22):58–66. https://doi.org/10.1177/0361198118777091

Hart J, Mirsky R, Xiao X, Tejeda S, Mahajan B, Goo J, Baldauf K, Owen S, Stone P (2020) Using human-inspired signals to disambiguate navigational intentions. In: Wagner AR, Feil-Seifer D, Haring KS, Rossi S, Williams T, He H, Sam Ge S (eds) Social robotics. Springer, Cham, pp 320–331

Chang C-M, Toda K, Gui X, Seo SH, Igarashi T (2022) Can eyes on a car reduce traffic accidents? In: Proceedings of the 14th international conference on automotive user interfaces and interactive vehicular applications. AutomotiveUI ’22. Association for Computing Machinery, New York, NY, USA, pp 349–359. https://doi.org/10.1145/3543174.3546841

Li Y, Dikmen M, Hussein TG, Wang Y, Burns C (2018) To cross or not to cross: urgency-based external warning displays on autonomous vehicles to improve pedestrian crossing safety. In: Proceedings of the 10th international conference on automotive user interfaces and interactive vehicular applications. AutomotiveUI ’18. Association for Computing Machinery, New York, NY, USA, pp 188–197. https://doi.org/10.1145/3239060.3239082

Deb S, Carruth DW, Hudson CR (2020) How communicating features can help pedestrian safety in the presence of self-driving vehicles: virtual reality experiment. IEEE Trans Hum Mach Syst 50(2):176–186. https://doi.org/10.1109/THMS.2019.2960517

Reeves B, Nass C (1996) The media equation: how people treat computers, television, and new media like real people. Cambridge, UK, vol 10, p 236605

Nummenmaa L, Hyönä J, Hietanen JK (2009) I’ll walk this way: eyes reveal the direction of locomotion and make passersby look and go the other way. Psychol Sci 20(12):1454–1458. https://doi.org/10.1111/j.1467-9280.2009.02464.x

Hollands MA, Patla AE, Vickers JN (2002) “Look where you’re going!”: gaze behaviour associated with maintaining and changing the direction of locomotion. Exp Brain Res 143(2):221–230. https://doi.org/10.1007/s00221-001-0983-7

Kitazawa K, Fujiyama T (2010) Pedestrian vision and collision avoidance behavior: investigation of the information process space of pedestrians using an eye tracker. In: Klingsch WWF, Rogsch C, Schadschneider A, Schreckenberg M (eds) Pedestrian and evacuation dynamics 2008. Springer, Berlin, pp 95–108

Admoni H, Scassellati B (2017) Social eye gaze in human–robot interaction: a review. J Hum Robot Interact 6(1):25–63. https://doi.org/10.5898/JHRI.6.1.Admoni

Moon AJ, Troniak DM, Gleeson B, Pan MKXJ, Zheng M, Blumer BA, MacLean K, Croft EA (2014) Meet me where i’m gazing: how shared attention gaze affects human-robot handover timing. In: 2014 9th ACM/IEEE international conference on human–robot interaction (HRI), pp 334–341

Mutlu B, Forlizzi J, Hodgins J (2006) A storytelling robot: modeling and evaluation of human-like gaze behavior. In: 2006 6th IEEE-RAS international conference on humanoid robots, pp 518–523. https://doi.org/10.1109/ICHR.2006.321322

Lee W, Park CH, Jang S, Cho H-K (2020) Design of effective robotic gaze-based social cueing for users in task-oriented situations: how to overcome in-attentional blindness? Appl Sci 10(16):5413. https://doi.org/10.3390/app10165413

Andrist S, Tan XZ, Gleicher M, Mutlu B (2014) Conversational gaze aversion for humanlike robots. In: Proceedings of the 2014 ACM/IEEE international conference on human–robot interaction. HRI ’14. Association for Computing Machinery, New York, NY, USA, pp 25–32. https://doi.org/10.1145/2559636.2559666

Yamashita S, Kurihara T, Ikeda T, Shinozawa K, Iwaki S (2020) Evaluation of robots that signals a pedestrian using face orientation based on analysis of velocity vector fluctuation in moving trajectories, vol 34. Taylor & Francis, pp 1309–1323. https://doi.org/10.1080/01691864.2020.1811763

Gallup AC, Chong A, Couzin ID (2012) The directional flow of visual information transfer between pedestrians. Biol Let 8(4):520–522. https://doi.org/10.1098/rsbl.2012.0160

Bhojwani TM, Lynch SD, Bühler MA, Lamontagne A (2022) Impact of dual tasking on gaze behaviour and locomotor strategies adopted while circumventing virtual pedestrians during a collision avoidance task. Exp Brain Res 240(10):2633–2645. https://doi.org/10.1007/s00221-022-06427-2

Hessels RS, Benjamins JS, van Doorn AJ, Koenderink JJ, Holleman GA, Hooge ITC (2020) Looking behavior and potential human interactions during locomotion. J Vis 20(10):5–5. https://doi.org/10.1167/jov.20.10.5

Ruhland K, Peters CE, Andrist S, Badler JB, Badler NI, Gleicher M, Mutlu B, McDonnell R (2015) A review of eye gaze in virtual agents, social robotics and hci: behaviour generation, user interaction and perception. In: Computer graphics forum, vol 34. Wiley Online Library, pp 299–326

Zheng M, Moon A, Croft EA, Meng MQ-H (2015) Impacts of robot head gaze on robot-to-human handovers. Int J Soc Robot 7(5):783–798. https://doi.org/10.1007/s12369-015-0305-z

Terzioğlu Y, Mutlu B, Şahin E (2020) Designing social cues for collaborative robots: The role of gaze and breathing in human-robot collaboration. In: Proceedings of the 2020 ACM/IEEE international conference on human–robot interaction. HRI ’20. Association for Computing Machinery, New York, NY, USA, pp 343–357. https://doi.org/10.1145/3319502.3374829

Mutlu B, Shiwa T, Kanda T, Ishiguro H, Hagita N (2009) Footing in human–robot conversations: how robots might shape participant roles using gaze cues. In: Proceedings of the 4th ACM/IEEE international conference on human robot interaction. HRI ’09. Association for Computing Machinery, New York, NY, USA, pp 61–68. https://doi.org/10.1145/1514095.1514109

Takayama L, Pantofaru C (2009) Influences on proxemic behaviors in human–robot interaction. In: 2009 IEEE/RSJ international conference on intelligent robots and systems. IEEE, pp 5495–5502

Angelopoulos G, Rossi A, Napoli CD, Rossi S (2022) You are in my way: non-verbal social cues for legible robot navigation behaviors. In: 2022 IEEE/RSJ international conference on intelligent robots and systems (IROS), pp 657–662. https://doi.org/10.1109/IROS47612.2022.9981754

Che Y, Okamura AM, Sadigh D (2020) Efficient and trustworthy social navigation via explicit and implicit robot-human communication. IEEE Trans Robot 36(3):692–707. https://doi.org/10.1109/TRO.2020.2964824

Gehrke SR, Russo BJ, Phair CD, Smaglik EJ (2022) Evaluation of sidewalk autonomous delivery robot interactions with pedestrians and bicyclists. Technical report

van Mierlo S (2021) Field observations of reactions of incidentally copresent pedestrians to a seemingly autonomous sidewalk delivery vehicle: an exploratory study. Master’s thesis

Hardeman K (2021) Encounters with a seemingly autonomous sidewalk delivery vehicle: interviews with incidentally copresent pedestrians. Master’s thesis

Yu X, Hoggenmueller M, Tomitsch M (2023) Your way or my way: improving human-robot co-navigation through robot intent and pedestrian prediction visualisations. In: Proceedings of the 2023 ACM/IEEE international conference on human–robot interaction, pp 211–221

Hetherington NJ, Croft EA, Van der Loos HFM (2021) Hey robot, which way are you going? nonverbal motion legibility cues for human–robot spatial interaction. IEEE Robot Autom Lett 6(3):5010–5015. https://doi.org/10.1109/LRA.2021.3068708

Kannan SS, Lee A, Min B-C (2021) External human–machine interface on delivery robots: expression of navigation intent of the robot. In: 2021 30th IEEE international conference on robot & human interactive communication (RO-MAN), pp 1305–1312. https://doi.org/10.1109/RO-MAN50785.2021.9515408

SoftBank Robotics. For better business just add pepper. https://us.softbankrobotics.com/pepper. Accessed 23 May 2023

Riek LD (2012) Wizard of oz studies in hri: a systematic review and new reporting guidelines. J Hum Robot Interact 1(1):119–136. https://doi.org/10.5898/JHRI.1.1.Riek

Carpinella CM, Wyman AB, Perez MA, Stroessner SJ (2017) The robotic social attributes scale (rosas): development and validation. In: 2017 12th ACM/IEEE international conference on human–robot interaction (HRI), pp 254–262

Syrdal DS, Dautenhahn K, Koay KL, Walters ML (2009) The negative attitudes towards robots scale and reactions to robot behaviour in a live human–robot interaction study. Adaptive and emergent behaviour and complex systems

Horstmann AC, Krämer NC (2020) When a robot violates expectations: the influence of reward valence and expectancy violation on people’s evaluation of a social robot. In: Companion of the 2020 ACM/IEEE international conference on human–robot interaction. HRI ’20. Association for Computing Machinery, New York, NY, USA, pp 254–256. https://doi.org/10.1145/3371382.3378292

Burgoon JK, Walther JB (1990) Nonverbal expectancies and the evaluative consequences of violations. Hum Commun Res 17(2):232–265. https://doi.org/10.1111/j.1468-2958.1990.tb00232.x

MacDorman KF, Vasudevan SK, Ho C-C (2009) Does Japan really have robot mania? Comparing attitudes by implicit and explicit measures. AI Soc 23(4):485–510. https://doi.org/10.1007/s00146-008-0181-2

Leiner DJ (2019) SoSci Survey (version 3.3.17). https://www.soscisurvey.de

Horstmann AC, Krämer NC (2019) Great expectations? Relation of previous experiences with social robots in real life or in the media and expectancies based on qualitative and quantitative assessment. Front Psychol 10:939. https://doi.org/10.3389/fpsyg.2019.00939

R Core Team (2020) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Bates D, Mächler M, Bolker B, Walker S (2015) Fitting linear mixed-effects models using lme4. J Stat Soft. https://doi.org/10.18637/jss.v067.i01

Barr DJ, Levy R, Scheepers C, Tily HJ (2013) Random effects structure for confirmatory hypothesis testing: keep it maximal. J Mem Lang 68(3):255–278. https://doi.org/10.1016/j.jml.2012.11.001

Jakobowsky CS, Abrams AMH, Rosenthal-von der Pütten AM. Gaze-cues of humans and robots on pedestrian ways—supplementary material. https://doi.org/10.17605/OSF.IO/NCWT5

Rosenthal-von der Pütten AM, Krämer NC (2015) Individuals’ evaluations of and attitudes towards potentially uncanny robots. Int J Soc Robot 7(5):799–824. https://doi.org/10.1007/s12369-015-0321-z

Amazon Mechanical Turk. https://www.mturk.com/. Accessed 23 May 2023

Mutlu B, Forlizzi J (2008) Robots in organizations: the role of workflow, social, and environmental factors in human–robot interaction. In: 2008 3rd ACM/IEEE international conference on human-robot interaction (HRI), pp 287–294. https://doi.org/10.1145/1349822.1349860

Acknowledgements

We thank our students Mathias Kilcher, Jayadev Madyal, and Marlon Spangenberg for developing the mock-up of the delivery robot, and Max Jung, Simon Kroes, and Lena Plum for helping to create the stimulus material.

Funding

Open Access funding enabled and organized by Projekt DEAL. The authors have no relevant financial or non-financial interests to disclose. The research leading to these results received funding from the German Federal Ministry of Education and Research (BMBF) under Grant Agreement No. 16SV8232.

Author information

Contributions

AA and CJ contributed to the study conception and design. Material preparation and data collection were performed by CJ and AA. Analysis was performed by CJ and AR-vdP. The first draft of the manuscript was written by CJ and AR-vdP and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical standard

The studies were conducted guaranteeing respect for the participating volunteers and human dignity. We followed the national, EU and international ethical guidelines and conventions as laid down in the following documents: The Ethical guidelines of the German Psychological Association; The Charter of Fundamental Rights of the EU; Helsinki Declaration in its latest version. Specifically, participants gave informed consent prior to the study and were properly debriefed after completion of the study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jakobowsky, C.S., Abrams, A.M.H. & Rosenthal-von der Pütten, A.M. Gaze-Cues of Humans and Robots on Pedestrian Ways. Int J of Soc Robotics 16, 311–325 (2024). https://doi.org/10.1007/s12369-023-01064-3

DOI: https://doi.org/10.1007/s12369-023-01064-3