Abstract

Neural circuits with multiple discrete attractor states could support a variety of cognitive tasks according to both empirical data and model simulations. We assess the conditions for such multistability in neural systems using a firing rate model framework, in which clusters of similarly responsive neurons are represented as single units, which interact with each other through independent random connections. We explore the range of conditions in which multistability arises via recurrent input from other units while individual units, typically with some degree of self-excitation, lack sufficient self-excitation to become bistable on their own. We find many cases of multistability—defined as the system possessing more than one stable fixed point—in which stable states arise via a network effect, allowing subsets of units to maintain each others' activity because their net input to each other when active is sufficiently positive. In terms of the strength of within-unit self-excitation and standard deviation of random cross-connections, the region of multistability depends on the response function of units. Indeed, multistability can arise with zero self-excitation, purely through zero-mean random cross-connections, if the response function rises supralinearly at low inputs from a value near zero at zero input. We simulate and analyze finite systems, showing that the probability of multistability can peak at intermediate system size, and connect with other literature analyzing similar systems in the infinite-size limit. We find regions of multistability with a bimodal distribution for the number of active units in a stable state. Finally, we find evidence for a log-normal distribution of sizes of attractor basins, which produces Zipf’s Law when enumerating the proportion of trials within which random initial conditions lead to a particular stable state of the system.

Data availability

MATLAB codes used to produce the results in this paper are available for public download at https://github.com/primon23/Multistability-Paper.

References

Abeles M, Bergman H, Gat I, Meilijson I, Seidemann E, Tishby N, Vaadia E (1995) Cortical activity flips among quasi-stationary states. Proc Natl Acad Sci U S A 92(19):8616–8620

Ahmadian Y, Fumarola F, Miller KD (2015) Properties of networks with partially structured and partially random connectivity. Phys Rev E Stat Nonlin Soft Matter Phys 91(1):012820. https://doi.org/10.1103/PhysRevE.91.012820

Amit DJ, Gutfreund H, Sompolinsky H (1985a) Spin-glass models of neural networks. Phys Rev A Gen Phys 32(2):1007–1018. https://doi.org/10.1103/physreva.32.1007

Amit DJ, Gutfreund H, Sompolinsky H (1985b) Storing infinite numbers of patterns in a spin-glass model of neural networks. Phys Rev Lett 55:1530–1531

Anishchenko A, Treves A (2006) Autoassociative memory retrieval and spontaneous activity bumps in small-world networks of integrate-and-fire neurons. J Physiol Paris 100(4):225–236. https://doi.org/10.1016/j.jphysparis.2007.01.004

Ballintyn B, Shlaer B, Miller P (2019) Spatiotemporal discrimination in attractor networks with short-term synaptic plasticity. J Comput Neurosci 46(3):279–297. https://doi.org/10.1007/s10827-019-00717-5

Battaglia FP, Treves A (1998) Stable and rapid recurrent processing in realistic autoassociative memories. Neural Comput 10(2):431–450

Benozzo D, La Camera G, Genovesio A (2021) Slower prefrontal metastable dynamics during deliberation predicts error trials in a distance discrimination task. Cell Rep 35(1):108934. https://doi.org/10.1016/j.celrep.2021.108934

Boboeva V, Pezzotta A, Clopath C (2021) Free recall scaling laws and short-term memory effects in a latching attractor network. Proc Natl Acad Sci U S A. https://doi.org/10.1073/pnas.2026092118

Bourjaily MA, Miller P (2011) Excitatory, inhibitory, and structural plasticity produce correlated connectivity in random networks trained to solve paired-stimulus tasks. Front Comput Neurosci 5:37. https://doi.org/10.3389/fncom.2011.00037

Brunel N (2003) Dynamics and plasticity of stimulus-selective persistent activity in cortical network models. Cereb Cortex 13(11):1151–1161

Cabana T, Touboul JD (2018) Large deviations for randomly connected neural networks: II. State-dependent interactions. Adv Appl Probab 50(3):983–1004

Chen B, Miller P (2020) Attractor-state itinerancy in neural circuits with synaptic depression. J Math Neurosci 10(1):15. https://doi.org/10.1186/s13408-020-00093-w

Daelli V, Treves A (2010) Neural attractor dynamics in object recognition. Exp Brain Res 203(2):241–248. https://doi.org/10.1007/s00221-010-2243-1

David HA, Nagaraja HN (2003) Order statistics, 3rd edn. Wiley, Hoboken. https://doi.org/10.1002/0471722162

Escola S, Fontanini A, Katz D, Paninski L (2011) Hidden Markov models for the stimulus-response relationships of multistate neural systems. Neural Comput 23(5):1071–1132. https://doi.org/10.1162/NECO_a_00118

Folli V, Leonetti M, Ruocco G (2016) On the maximum storage capacity of the Hopfield model. Front Comput Neurosci 10:144. https://doi.org/10.3389/fncom.2016.00144

Fuster JM (1973) Unit activity in prefrontal cortex during delayed-response performance: neuronal correlates of transient memory. J Neurophysiol 36(1):61–78. https://doi.org/10.1152/jn.1973.36.1.61

Goldberg JA, Rokni U, Sompolinsky H (2004) Patterns of ongoing activity and the functional architecture of the primary visual cortex. Neuron 42(3):489–500

Golos M, Jirsa V, Dauce E (2015) Multistability in large scale models of brain activity. PLoS Comput Biol 11(12):e1004644. https://doi.org/10.1371/journal.pcbi.1004644

Hebb DO (1949) The organization of behavior; a neuropsychological theory. Wiley, Hoboken

Hopfield JJ (1982) Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci 79(8):2554–2558. https://doi.org/10.1073/pnas.79.8.2554

Hopfield JJ (1984) Neurons with graded response have collective computational properties like those of two-state neurons. Proc Natl Acad Sci USA 81:3088–3092

Jones LM, Fontanini A, Sadacca BF, Miller P, Katz DB (2007) Natural stimuli evoke dynamic sequences of states in sensory cortical ensembles. Proc Natl Acad Sci U S A 104(47):18772–18777. https://doi.org/10.1073/pnas.0705546104

Ksander J, Katz DB, Miller P (2021) A model of naturalistic decision making in preference tests. PLoS Comput Biol 17(9):e1009012. https://doi.org/10.1371/journal.pcbi.1009012

La Camera G, Fontanini A, Mazzucato L (2019) Cortical computations via metastable activity. Curr Opin Neurobiol 58:37–45. https://doi.org/10.1016/j.conb.2019.06.007

Lerner I, Bentin S, Shriki O (2012) Spreading activation in an attractor network with latching dynamics: automatic semantic priming revisited. Cogn Sci 36(8):1339–1382. https://doi.org/10.1111/cogs.12007

Lerner I, Bentin S, Shriki O (2014) Integrating the automatic and the controlled: strategies in semantic priming in an attractor network with latching dynamics. Cogn Sci 38(8):1562–1603. https://doi.org/10.1111/cogs.12133

Lerner I, Shriki O (2014) Internally- and externally-driven network transitions as a basis for automatic and strategic processes in semantic priming: theory and experimental validation. Front Psychol 5:314. https://doi.org/10.3389/fpsyg.2014.00314

Linkerhand M, Gros C (2013) Generating functionals for autonomous latching dynamics in attractor relict networks. Sci Rep 3:2042. https://doi.org/10.1038/srep02042

Mazzucato L, Fontanini A, La Camera G (2015) Dynamics of multistable states during ongoing and evoked cortical activity. J Neurosci 35(21):8214–8231. https://doi.org/10.1523/JNEUROSCI.4819-14.2015

Mazzucato L, La Camera G, Fontanini A (2019) Expectation-induced modulation of metastable activity underlies faster coding of sensory stimuli. Nat Neurosci 22(5):787–796. https://doi.org/10.1038/s41593-019-0364-9

Miller P (2013) Stimulus number, duration and intensity encoding in randomly connected attractor networks with synaptic depression. Front Comput Neurosci 7:59. https://doi.org/10.3389/fncom.2013.00059

Miller P (2016) Itinerancy between attractor states in neural systems. Curr Opin Neurobiol 40:14–22. https://doi.org/10.1016/j.conb.2016.05.005

Miller P, Katz DB (2010) Stochastic transitions between neural states in taste processing and decision-making. J Neurosci 30(7):2559–2570. https://doi.org/10.1523/jneurosci.3047-09.2010

Miller P, Katz DB (2011) Stochastic transitions between states of neural activity. In: Ding M, Glanzman DL (eds) The dynamic brain: an exploration of neuronal variability and its functional significance. Oxford University Press, Oxford, pp 29–46

Mitzenmacher M (2004) A brief history of generative models for power law and lognormal distributions. Internet Math 1(2):226–251

Morcos AS, Harvey CD (2016) History-dependent variability in population dynamics during evidence accumulation in cortex. Nat Neurosci 19(12):1672–1681. https://doi.org/10.1038/nn.4403

Moreno-Bote R, Rinzel J, Rubin N (2007) Noise-induced alternations in an attractor network model of perceptual bistability. J Neurophysiol 98(3):1125–1139. https://doi.org/10.1152/jn.00116.2007

Perin R, Berger TK, Markram H (2011) A synaptic organizing principle for cortical neuronal groups. Proc Natl Acad Sci U S A 108(13):5419–5424. https://doi.org/10.1073/pnas.1016051108

Perline R (2005) Strong, weak and false inverse power laws. Stat Sci 20(1):68–88

Ponce-Alvarez A, Nacher V, Luna R, Riehle A, Romo R (2012) Dynamics of cortical neuronal ensembles transit from decision making to storage for later report. J Neurosci 32(35):11956–11969. https://doi.org/10.1523/JNEUROSCI.6176-11.2012

Rabinovich M, Volkovskii A, Lecanda P, Huerta R, Abarbanel HD, Laurent G (2001) Dynamical encoding by networks of competing neuron groups: winnerless competition. Phys Rev Lett 87(6):068102. http://www.ncbi.nlm.nih.gov/pubmed/11497865

Rabinovich MI, Varona P, Tristan I, Afraimovich VS (2014) Chunking dynamics: heteroclinics in mind. Front Comput Neurosci 8:22. https://doi.org/10.3389/fncom.2014.00022

Rainer G, Miller EK (2000) Neural ensemble states in prefrontal cortex identified using a hidden Markov model with a modified EM algorithm. Neurocomputing 32:961–966. https://doi.org/10.1016/S0925-2312(00)00266-6

Rajan K, Abbott LF (2006) Eigenvalue spectra of random matrices for neural networks. Phys Rev Lett 97(18):188104

Recanatesi S, Pereira U, Murakami M, Mainen Z, Mazzucato L (2022) Metastable attractors explain the variable timing of stable behavioral action sequences. Neuron 110:139–153

Russo E, Treves A (2012) Cortical free-association dynamics: distinct phases of a latching network. Phys Rev E Stat Nonlin Soft Matter Phys 85(5 Pt 1):051920. https://doi.org/10.1103/PhysRevE.85.051920

Sadacca BF, Mukherjee N, Vladusich T, Li JX, Katz DB, Miller P (2016) The behavioral relevance of cortical neural ensemble responses emerges suddenly. J Neurosci 36(3):655–669. https://doi.org/10.1523/jneurosci.2265-15.2016

Seidemann E, Meilijson I, Abeles M, Bergman H, Vaadia E (1996) Simultaneously recorded single units in the frontal cortex go through sequences of discrete and stable states in monkeys performing a delayed localization task. J Neurosci 16(2):752–768

Sompolinsky H, Crisanti A (2018) Path integral approach to random neural networks. Phys Rev E 98:062120

Sompolinsky H, Crisanti A, Sommers HJ (1988) Chaos in random neural networks. Phys Rev Lett 61(3):259–262. https://doi.org/10.1103/PhysRevLett.61.259

Sompolinsky H, Kanter I (1986) Temporal association in asymmetric neural networks. Phys Rev Lett 57(22):2861–2864. https://doi.org/10.1103/PhysRevLett.57.2861

Song S, Sjöström PJ, Reigl M, Nelson S, Chklovskii DB (2005) Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol 3(3):e68. https://doi.org/10.1371/journal.pbio.0030068

Song S, Yao H, Treves A (2014) A modular latching chain. Cogn Neurodyn 8(1):37–46. https://doi.org/10.1007/s11571-013-9261-1

Stepanyants A, Chklovskii DB (2005) Neurogeometry and potential synaptic connectivity. Trends Neurosci 28:387–394

Stern M, Sompolinsky H, Abbott LF (2014) Dynamics of random neural networks with bistable units. Phys Rev E Stat Nonlin Soft Matter Phys 90(6):062710. https://doi.org/10.1103/PhysRevE.90.062710

Strogatz SH (2015) Nonlinear dynamics and chaos, 2nd edn. Westview Press, Boulder

Taylor JD, Chauhan AS, Taylor JT, Shilnikov AL, Nogaret A (2022) Noise-activated barrier crossing in multiattractor dissipative neural networks. Phys Rev E 105(6–1):064203. https://doi.org/10.1103/PhysRevE.105.064203

Touboul JD, Ermentrout GB (2011) Finite-size and correlation-induced effects in mean-field dynamics. J Comput Neurosci 31(3):453–484. https://doi.org/10.1007/s10827-011-0320-5

Treves A (1990) Graded-response neurons and information encodings in autoassociative memories. Phys Rev A 42(4):2418–2430

Treves A (2005) Frontal latching networks: a possible neural basis for infinite recursion. Cogn Neuropsychol 22(3):276–291. https://doi.org/10.1080/02643290442000329

Wills TJ, Lever C, Cacucci F, Burgess N, O’Keefe J (2005) Attractor dynamics in the hippocampal representation of the local environment. Science 308(5723):873–876. https://doi.org/10.1126/science.1108905

Wilson H, Cowan J (1973) A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Kybernetik 13:55–80. https://doi.org/10.1007/BF00288786

Zurada JM, Cloete I, van der Poel E (1996) Generalized Hopfield networks for associative memories with multi-valued stable states. Neurocomputing 13:135–149

Acknowledgements

The authors are grateful to NIH-NINDS for support of this work via R01 NS104818 and to the Swartz Foundation for a fellowship to SQ. We acknowledge computational support from the Brandeis HPCC which is partially supported by the NSF through DMR-MRSEC 2011846 and OAC-1920147. SM is grateful to Merav Stern for helpful conversations in the early stages of this work.

Author information

Contributions

All authors contributed to the writing and editing of the manuscript and approved the final version. Simulations were carried out by JB, analysis by SM, SQ, and PM. The first draft was written by PM, who also conceived of the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Communicated by Benjamin Lindner.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Monte Carlo simulation method

Our standard procedure is to simulate 100 different realizations of the connectivity matrix to produce 100 random networks for a given parameter combination. For each connectivity matrix, we then complete sets of multiple trials, each trial with a distinct initial condition (100 trials for perturbation analysis in Fig. 2 and for scaling \(g\) in Fig. 3; 200 trials for parameter grids in Fig. 4; and \({10}^{6}\) or \({10}^{5}\) trials, respectively, for the networks with binary units in Figs. 8 and 9). For the small (\(N\le 25\)) networks with binary units in Fig. 8, all \({2}^{N}\) combinations of initial conditions are used, with each unit at an initial rate of its minimum or maximum.
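As an illustration of the exhaustive case for small binary networks, the following minimal sketch enumerates all \({2}^{N}\) initial conditions. The function name and the rate values 0 and 1 for the minimum and maximum are assumptions made for the example; the published code is in MATLAB and may be organized differently.

```python
from itertools import product

def all_binary_initial_conditions(n_units, r_min=0.0, r_max=1.0):
    """Return an iterator over all 2**n_units initial states.

    Each state is a tuple with one entry per unit, set to either the
    minimum or the maximum rate (hypothetical values 0 and 1 here).
    """
    return product((r_min, r_max), repeat=n_units)

states = list(all_binary_initial_conditions(3))
print(len(states))  # 8 states for N = 3
```

Because the number of states doubles with each added unit, this exhaustive approach is only practical for small \(N\), which is consistent with the switch to random sampling of initial conditions for larger networks.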

The continuous models are simulated using MATLAB’s “ode45” function. Each trial is simulated until either a maximum simulation time is reached (5000 \(\tau \) for Fig. 3 and 10,000 \(\tau \) for Fig. 4), or until a stopping condition is reached in the case that the maximum \({\dot{x}}_{i}\) at a given timestep is less than \({2\times10}^{-6}\). If this stopping condition is reached, then the activity is considered to have reached a stable state because the network possesses a point attractor at that set of firing rates. Logistic units are classified as active if their firing rate exceeds 0.5. Tanh units are considered active if the absolute value of their rate exceeds 0.001. For the continuous models, typically the first trial is initialized with inputs near zero, to test if the quiescent state is stable. For all subsequent trials, the initial rates of the units are set to a uniform random distribution over 0–1 and transformed by a logistic function with \({x}_{th}=0.5\) and \(\Delta =0.1\).
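The trial loop and stopping condition can be sketched as follows. This is only an illustration under stated assumptions: the rate equation \(\tau \dot{x}_i=-x_i+sf(x_i)+g\sum_{j\ne i}J_{ij}f(x_j)\) with \(\tau =1\), the \(1/\sqrt{N}\) scaling of the connections, the value \(x_{th}=0.531\), and the use of forward Euler integration in place of MATLAB's "ode45" are all choices made for the example and are not taken from the published code.

```python
import math
import random

def logistic(x, x_th=0.531, delta=0.2):
    """Logistic response function (x_th is an illustrative assumption)."""
    return 1.0 / (1.0 + math.exp((x_th - x) / delta))

def random_network(n, seed=0):
    """Zero-mean Gaussian connections, scaled by 1/sqrt(n) (assumed scaling)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) / math.sqrt(n) for _ in range(n)] for _ in range(n)]

def simulate_trial(J, x0, s=0.0, g=1.0, dt=0.05, t_max=5000.0, tol=2e-6):
    """Forward-Euler integration (tau = 1) until max |dx/dt| < tol or t_max.

    Returns (final x, converged flag).
    """
    n = len(x0)
    x = list(x0)
    t = 0.0
    while t < t_max:
        r = [logistic(xi) for xi in x]
        dx = [-x[i] + s * r[i]
              + g * sum(J[i][j] * r[j] for j in range(n) if j != i)
              for i in range(n)]
        if max(abs(d) for d in dx) < tol:
            return x, True   # stopping condition: treat as a point attractor
        x = [x[i] + dt * dx[i] for i in range(n)]
        t += dt
    return x, False          # maximum simulation time reached

def count_active(x, threshold=0.5):
    """Logistic units count as active if their rate exceeds 0.5."""
    return sum(1 for xi in x if logistic(xi) > threshold)
```

A trial would then be run as `simulate_trial(random_network(10), x0, g=1.5)` for some initial state `x0`, with `count_active` applied to the final state. An adaptive Runge-Kutta solver is preferable in practice, since a fixed Euler step can be inaccurate for strongly coupled networks.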

For the perturbation analysis, each trial of each network is simulated for a duration of 21,000 \(\tau \). Then, at each of 100 linearly spaced time points between 20,000 and 20,800 \(\tau \), 10% of the units’ firing rates are randomly perturbed upwards or downwards by \({10}^{-5}\) and the simulation is then continued from each such perturbed state for 200 \(\tau \). The root mean squared (RMS) deviation of the perturbed simulation from the original simulation quantifies the extent to which the perturbation causes a divergence in activity. The median RMS deviation over the 100 perturbations is then used to classify each trial as a point attractor, a limit cycle, or chaotic. The median RMS deviation exponentially decays for point attractors, exponentially increases for chaos, and increases but reaches a plateau at a low level for limit cycles. Classification thresholds are determined by the square of the correlation (\({R}^{2}\)) of a linear fit to the exponential RMS deviation and the magnitude of the RMS deviation averaged between 190 and 200 \(\tau \) post-perturbation. Trials with final RMS deviations below half the magnitude of the initial perturbation and with no units having a change in their firing rate exceeding \({10}^{-4}\) in the last 10 \(\tau \) of the unperturbed simulation are classified as point attractors. To classify trials as chaotic vs limit cycles, a classification boundary is determined as a function of each trial’s linear fit \({R}^{2}\) and final RMS deviation. Trials above the line \(\mathrm{RMS \, deviation}=0.025{e}^{(-0.125 {R}^{2})}\) are classified as chaotic. This boundary allows the separation between these two dynamics because it accounts for both chaotic trials that very quickly converge to a large RMS deviation (large RMS deviation and low \({R}^{2}\)) and chaotic trials that have a slower exponential increase in their RMS deviation (lower RMS deviation at 190–200 \(\tau \) but high \({R}^{2}\)). Final activity states of the unperturbed simulations are used to confirm these classifications.
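The classification rule described above can be written compactly. The sketch below is a hedged illustration: the function and argument names are hypothetical, and the way the "magnitude of the initial perturbation" is measured (passed in here as `initial_rms`) is an assumption, since the text does not specify whether it is the per-unit perturbation or its RMS over all units.

```python
import math

def classify_trial(final_rms, r_squared, max_rate_change, initial_rms=1e-5):
    """Classify one trial from its perturbation statistics.

    final_rms:       RMS deviation averaged over 190-200 tau post-perturbation
    r_squared:       R^2 of the linear fit to the RMS deviation
    max_rate_change: largest change in any unit's rate over the last 10 tau
                     of the unperturbed simulation
    initial_rms:     magnitude of the initial perturbation (assumed measure)
    """
    # Point attractor: deviation has shrunk and the rates are stationary.
    if final_rms < 0.5 * initial_rms and max_rate_change <= 1e-4:
        return "point attractor"
    # Boundary separating chaos from limit cycles, as given in the text.
    boundary = 0.025 * math.exp(-0.125 * r_squared)
    return "chaotic" if final_rms > boundary else "limit cycle"

print(classify_trial(1e-7, 0.9, 1e-6))   # point attractor
print(classify_trial(0.03, 0.1, 0.1))    # chaotic
print(classify_trial(0.001, 0.9, 0.1))   # limit cycle
```

Keeping the boundary as a single function of \({R}^{2}\) makes it easy to check how sensitive the classification is to the two constants, 0.025 and 0.125.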

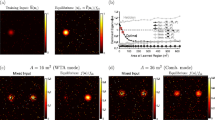

The dynamic regimes of individual networks change as g is scaled. a Similar to Fig. 3b, but for networks simulated over a smaller range of g. A total of 100 random networks of logistic units (Δ = 0.2) with no self-connections (s = 0) of varying size (N = 10, 50, 100, 500, 1000) are simulated at each value of g. The same network can gain and lose multistability as g varies. Color scale indicates number of point attractors found within 100 trials. White indicates values of g that are not simulated due to computational limits. b The same simulations as in (a), but where color now indicates the classification of the set of activity observed across the 100 simulated trials. 1, no trials converge; 2, stable quiescence + some trials fail to converge; 3, only stable quiescence; 4, only a single stable active state; 5, some trials fail to converge + stable quiescence + at least one stable active state; 6, stable quiescence + a single stable active state; 7, multiple stable active states + no stable quiescence; 8, multiple stable active states + stable quiescence; 9, some trials fail to converge + a single stable active state; 10, some trials fail to converge + multistable

Appendix 2: Choice of single-unit input threshold

For comparison across systems with distinct single-unit response functions, \(f\left(x\right)\), we adjust the offset, \({x}_{th}\), such that a single unit becomes bistable with self-connection strength of \(s=1\), in all cases.

For the logistic response function, such a requirement means that a saddle-node bifurcation occurs at \(s=1\), with unstable and stable fixed points colliding at \({x}^{*}\) given by \({-x}^{*}+sf\left({x}^{*}\right)=0\) such that \({x}^{*}=f\left({x}^{*}\right)\) and \(\frac{\text d}{{\text{d}}x}{\left[-x+sf\left(x\right)\right]}_{{x}^{*}}=0\) such that \({\left.\frac{{\text{d}}f\left(x\right)}{{\text{d}}x}\right|}_{{x}^{*}}=1\). Combining these equations and using the result for the logistic function that \(\frac{{\text{d}}f\left(x\right)}{{\text{d}}x}=\frac{1}{\Delta }f\left(x\right)\left[1-f\left(x\right)\right]\) gives \({x}^{*}\left(1-{x}^{*}\right)=\Delta \), where the collision occurs on the upper branch, \({x}^{*}>\frac{1}{2}\), and leads to the requirement:

\[{x}_{th}={x}^{*}+\Delta \,{\text{ln}}\left(\frac{1-{x}^{*}}{{x}^{*}}\right),\quad {x}^{*}=\frac{1+\sqrt{1-4\Delta }}{2}. \qquad (13)\]

For the binary response function, \(f\left(x\right)={\text{Heaviside}}(x-{x}_{th})\), we have \({x}_{th}=1\), which can be seen from Eq. (13) in the limit \(\Delta \to 0\).

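For the hyperbolic tangent response function, \(f\left(x\right)={\text{tanh}}\left(\frac{x-{x}_{th}}{\Delta }\right)\), a similar derivation (with \(\frac{{\text{d}}f\left(x\right)}{{\text{d}}x}=\frac{1}{\Delta }\left[1-{f}^{2}\left(x\right)\right]\), so that \({x}^{*}=\sqrt{1-\Delta }\)) leads to

\[{x}_{th}=\sqrt{1-\Delta }-\Delta \,{\text{artanh}}\left(\sqrt{1-\Delta }\right),\]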

which yields \({x}_{th}=0\) if \(\Delta =1\), matching the simplest response function, \(f\left(x\right)={\text{tanh}}\left(x\right)\), and as with the binary response function, \({x}_{th}=1\) if \(\Delta =0\).
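The two conditions stated in this appendix, \({x}^{*}=f\left({x}^{*}\right)\) and \(f^{\prime}\left({x}^{*}\right)=1\) at \(s=1\), can be solved in closed form and checked numerically. The sketch below is a worked example under that reading of the text, taking the root on the upper branch of the logistic function as an assumption; the function names are hypothetical and \(0<\Delta \le 1/4\) (logistic) or \(0<\Delta \le 1\) (tanh) is required.

```python
import math

def logistic_threshold(delta):
    """x_th for logistic units so that a single unit is bistable at s = 1.

    From x* = f(x*) and f'(x*) = f(1 - f)/delta = 1 we get x*(1 - x*) = delta.
    The root with x* > 1/2 is assumed (tangency on the concave part of f).
    """
    x_star = 0.5 * (1.0 + math.sqrt(1.0 - 4.0 * delta))
    x_th = x_star + delta * math.log((1.0 - x_star) / x_star)
    return x_th, x_star

def tanh_threshold(delta):
    """x_th for tanh units: f' = (1 - f^2)/delta = 1 gives x* = sqrt(1 - delta)."""
    x_star = math.sqrt(1.0 - delta)
    x_th = x_star - delta * math.atanh(x_star)
    return x_th, x_star

x_th, x_star = logistic_threshold(0.2)
print(round(x_th, 3))               # 0.531
print(tanh_threshold(1.0)[0])       # 0.0, the plain tanh(x) case
```

For small \(\Delta \) both thresholds approach 1, in agreement with the binary-unit limit quoted in the text.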

Appendix 3: Networks with multiple states and no self-connections

Here, we verify that the majority of simulated networks up to a size of \(N=1000\) show multiple point attractors, while the range of \(g\) in which such multistability exists converges with increasing \(N\) to the narrow range found in our infinite-\(N\) analysis (\(1.53<g<1.57\)).

Appendix 4: General mean-field methods

4.1 Self-consistency of solutions

The self-consistent solution of Eqs. 2–6 is straightforward when \(x-sf\left(x\right)\) is a monotonic function such that there is a one-to-one mapping from \(\eta \) to \(x\) following Eq. 5. The variance, \({\sigma }^{2}\), of the Gaussian distribution of \(\eta \), is the only parameter to be calculated, so the iteration is one-dimensional and in our experience always converges using the MATLAB solver “fzero.” The procedure is as follows. Given an initial value of \({\sigma }^{2}\), which defines \(P\left(\eta \right)=\frac{1}{\sqrt{2\pi {\sigma }^{2}}}{\text{exp}}\left(-\frac{{\eta }^{2}}{2{\sigma }^{2}}\right)\) (from Eq. 6), we numerically integrate over \(\eta \). At each value of \(\eta \), we calculate \(x\left(\eta \right)\) by numerically inverting \(x-sf\left(x\right)=\eta \) (from Eq. 5), and hence calculate \(f\left(x\left(\eta \right)\right)\) and \({f}^{2}\left(x\left(\eta \right)\right)\). Multiplication of \({f}^{2}\left(x\left(\eta \right)\right)\) by \(P\left(\eta \right)\) combined with the numerical integration leads to \(\langle {f}^{2}\left( {\sigma }^{2}\right)\rangle \) (where we indicate its dependence on \({\sigma }^{2}\) because \(P\left(\eta \right)\) depends on \({\sigma }^{2}\)). For a self-consistent solution \({\sigma }^{2}={g}^{2}\langle {f}^{2}\left( {\sigma }^{2}\right)\rangle \) (Eq. 4), so we require the solver to find the zero-crossing of \({g}^{2}\langle {f}^{2}\left( {\sigma }^{2}\right)\rangle -{\sigma }^{2}\) when calculated in this manner. We use standard numerical integration grids of \(5\times {10}^{4}\) points and test integration grids that are tenfold finer for select parameters to ensure the results are numerically accurate. Since multiple solutions for \({\sigma }^{2}\) are possible, we systematically vary initial conditions and the bounds of \({\sigma }^{2}\) to find zero crossings, to ensure we reach all solutions. We only ever find one solution or three solutions. With three solutions, we only count the lowest and highest values of \({\sigma }^{2}\), as the intermediate value corresponds to an unstable solution of Eq. 2.
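The procedure for the monotonic case can be sketched in a few lines. This is a minimal illustration, not the authors' MATLAB implementation: it substitutes a scan plus bisection for "fzero", uses a much coarser trapezoidal grid than the one quoted above, and assumes logistic units with \(\Delta =0.2\) and an illustrative \({x}_{th}=0.531\). All function names are hypothetical.

```python
import math

def logistic(x, x_th=0.531, delta=0.2):
    """Logistic response function (x_th is an illustrative assumption)."""
    return 1.0 / (1.0 + math.exp((x_th - x) / delta))

def invert_x(eta, s, lo=-50.0, hi=50.0):
    """Solve x - s*f(x) = eta by bisection; valid only in the monotonic case."""
    if s == 0.0:
        return eta
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if mid - s * logistic(mid) < eta:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def mean_f2(sigma2, s, n_grid=801, z_max=8.0):
    """<f^2> under a zero-mean Gaussian P(eta) of variance sigma2 (trapezoid rule)."""
    sigma = math.sqrt(sigma2)
    h = 2.0 * z_max * sigma / (n_grid - 1)
    total = 0.0
    for k in range(n_grid):
        eta = -z_max * sigma + k * h
        p = math.exp(-eta * eta / (2.0 * sigma2)) / math.sqrt(2.0 * math.pi * sigma2)
        weight = 0.5 if k in (0, n_grid - 1) else 1.0
        total += weight * p * logistic(invert_x(eta, s)) ** 2
    return total * h

def self_consistent_sigma2(g, s=0.0, n_scan=200):
    """Return all zero crossings of g^2 <f^2>(sigma2) - sigma2 found by scanning."""
    def residual(v):
        return g * g * mean_f2(v, s) - v
    grid = [1e-6 + k * (1.5 * g * g - 1e-6) / (n_scan - 1) for k in range(n_scan)]
    values = [residual(v) for v in grid]
    roots = []
    for k in range(n_scan - 1):
        a, b, fa = grid[k], grid[k + 1], values[k]
        if fa * values[k + 1] > 0.0:
            continue                       # no sign change in this interval
        for _ in range(50):                # refine the crossing by bisection
            m = 0.5 * (a + b)
            fm = residual(m)
            if fa * fm <= 0.0:
                b = m
            else:
                a, fa = m, fm
        roots.append(0.5 * (a + b))
    return roots
```

Calling `self_consistent_sigma2(1.55)` returns the list of candidate values of \({\sigma }^{2}\) for \(s=0\). Scanning the whole interval up to \(1.5{g}^{2}\) is a simple way to avoid missing solutions, since \(\langle {f}^{2}\rangle \le 1\) bounds any solution by \({g}^{2}\).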

In situations where \(x-sf\left(x\right)\) is non-monotonic, across a range of values of \(\eta \) the solution \(x\left(\eta \right)\) is not single-valued. In this case, an additional parameter is the point of the range of \(\eta \) at which we switch from one branch of \(x\) to another when calculating \(x\left(\eta \right)\). We test for solutions with different switching points in order to assess whether any solution is stable, as described in the later subsection, Multiple solutions for \(x\left(\eta \right)\). In such non-monotonic situations, we use finer integration grids of \(5\times {10}^{5}\) points because of the sensitivity of \(x\left(\eta \right)\) near turning points of the curve. We also test integration grids that are tenfold finer for select parameters to ensure the results are numerically accurate. (See https://github.com/primon23/Multistability-Paper/ for full code.)

4.2 Stability of solutions

To test whether a distribution of the interacting variables, \(x\), produces a stable fixed point, it is necessary to obtain information about the eigenvalues of the Jacobian matrix of the dynamical equations expanded linearly about the fixed point (Strogatz 2015). If all such eigenvalues have a negative real part then the fixed point is stable. Linearization around a fixed point, \({x}^{*}\), yields

where \(D\) is a diagonal matrix with elements equal to the corresponding derivatives of the response function, \(f^{\prime}\left({x}^{*}\right)\), and \(J\) is the unit variance, zero mean, Gaussian connectivity matrix.

We follow the methods of others (Ahmadian et al. 2015; Stern et al. 2014) who showed that eigenvalues of such a system are found at the complex values, \(z\), where

with

In the large-\(N\) limit, the sum within the Trace becomes an integral over the distribution of \(x\), to yield the criterion (Ahmadian et al. 2015; Stern et al. 2014):

As noted by Stern et al. (2014), for the system to be stable it is necessary that Eq. (16) is not satisfied for any \(z\) with \(Re\left[z\right]>0\). It therefore suffices to assess the boundary case \(Re\left[z\right]=0\). Any nonzero contribution to \(Im\left[z\right]\) increases the absolute value of the denominator in Eq. (16), so if there is no eigenvalue at \(z=0\) there cannot be any elsewhere on the imaginary axis. Therefore, in general we require, for there to be no eigenvalues with positive real part, that

where we have substituted for \(P\left(\eta \right)\) and \({\sigma }^{2}={g}^{2}\langle {f}^{2}\left(x\right)\rangle .\) We have also assumed that the function in the denominator, \(1-sf^{\prime}\left(x\left(\eta \right)\right)\), is positive, as any negative portion of the function means there is a divergent positive contribution to the integral for some \(z\) with \(Re\left[z\right]=\) \(sf^{\prime}\left(x\left(\eta \right)\right)-1>0\).

4.3 Verification of analytic methods

While we follow exactly the methods of others (Stern et al. 2014) in the infinite-\(N\) analysis, the validity of ignoring the index of units in a mean-field manner has not been established rigorously for static solutions of the dynamical system. In this subsection, we justify our method.

First we explain why, for a specific set of firing rates, each unit experiences network input that corresponds to a random sample, from a Gaussian distribution of zero mean and variance equal to \({g}^{2}\langle {f}^{2}\left(x\right)\rangle \): Each input from one presynaptic unit to one postsynaptic unit is a random sample (independent of that of other units given that connections are independent) from a Gaussian of zero mean, and the sum of such Gaussian samples (to provide the total input) is equal to a Gaussian sample from a distribution with zero mean and variance equal to the sum of variances of individual samples. Since self-connections are treated differently, total input to each unit is sampled from a slightly different distribution of \(N-1\) connections, so for small-\(N\) the variance of the Gaussian distribution used in sampling is slightly different for each unit. However, the impact of one input is negligible in the large-\(N\) limit (Fig. 10).

If one input distribution satisfies the self-consistency requirements of Eqs. 2–6 and is stable, then the population activity is stable in the statistical sense (as an ensemble of rates), but that does not mean that each unit has a stable fixed firing rate. While the methods of Ahmadian et al. (2015) described in the preceding subsection allow us to determine whether a fixed point of the dynamical system is stable, we must still establish the existence of such a fixed point. We use the rest of this subsection to justify our claim of multiple stable fixed points, first in the case that the rates of individual units are single-valued in their network input (\(x\) is monotonic in \(\eta \)), and to show that multiple stable fixed points are possible if the circuit has two stable distributions of \(\eta \).

(1) In Fig. 11, we show that the self-consistent solutions for the mean squared firing rates, \(\langle {f}^{2}\left(x\right)\rangle \), and hence the variance of the zero-mean input distribution, of stable fixed points in simulated networks approach those of the infinite-\(N\) calculations as \(N\) increases (see also Cabana and Touboul (2018), e.g., Theorem 5, for justification).

(2) As \(N\) increases, the phase diagrams in Fig. 4 indicating multiple stable fixed points approach the corresponding infinite-\(N\) limits in Figs. 6 and 12.

(3) As \(N\) increases, the range of \(g\) at which we find multistability without self-connections (Fig. 3) in simulations matches the range found using the infinite-\(N\) methods (Fig. 6b, x-axis).

(4) As a heuristic argument, for a given stable distribution of inputs, there are \(N!\) combinations for ordering the units by firing rate. For the system to have a stable fixed point, one of those \(N!\) combinations must provide inputs that are ordered in the same manner. The probability of any individual order matching the required order is \(1/N!\). Therefore, for large \(N\) this leads to a probability of \(1-{\left(1-\frac{1}{N!}\right)}^{N!}\cong 1-{e}^{-1}\) of there being one or more stable fixed points, with the number of fixed points following a Poisson distribution of mean 1. Indeed in Fig. 2e, the number of networks with at least one stable active attractor state is not significantly different from the expected number from this argument (58 out of 100 from simulations versus 63 out of 100 expected, but note that the sampling in simulations can be an undercount). Moreover, if we count the number of distinct stable active attractor states in each network, we find the numbers 42, 40, 13, 4, 1 for 0, 1, 2, 3, or 4 states, respectively, a result which is not significantly different from a Poisson distribution with mean of 1 (\(p=0.46\), Kolmogorov–Smirnov test).

(5) In cases where the firing rates are always single-valued in the external inputs (\(s<4\Delta \) for networks of logistic units), the stable solutions may not be fixed points, as discussed in item (4). However, for parameters whereby a finite fraction, \(\frac{M}{N}\), of units have two possible stable firing rates given their external inputs, even allowing for correlations, the number of extra state combinations that can produce the desired ordering of inputs, \(o\left({2}^{M}\right)\), becomes infinite in the large-\(N\) limit. This suggests that the probability of multistability is on the order of \(1-{e}^{-{2}^{M}}\), which approaches 1 with increasing \(N\) and \(M\).

(6) When we use these methods to analyze networks in which units have the response function \(f\left(x\right)={\text{tanh}}(x)\), our results exactly match those of prior work, including the well-established transition to chaos at \(g=1\) if \(s=0\) (Fig. 12a).
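The numbers quoted in the heuristic argument of item (4) can be reproduced with a short calculation. The sketch below evaluates \(1-{\left(1-\frac{1}{N!}\right)}^{N!}\) and the Poisson probabilities with mean 1, and lists them next to the observed counts from the text. The function names are hypothetical, and no significance test is attempted here.

```python
import math

def prob_at_least_one(n_units):
    """1 - (1 - 1/N!)^(N!), evaluated stably with log1p (requires 2 <= N <= 170)."""
    m = math.factorial(n_units)
    return 1.0 - math.exp(m * math.log1p(-1.0 / m))

def poisson_pmf(k, mean=1.0):
    """Probability of exactly k fixed points under a Poisson distribution."""
    return math.exp(-mean) * mean ** k / math.factorial(k)

observed = [42, 40, 13, 4, 1]                       # networks with 0..4 states (from the text)
expected = [100 * poisson_pmf(k) for k in range(5)]  # expected counts out of 100 networks

print(round(prob_at_least_one(10), 3))               # 0.632
for k, (obs, exp) in enumerate(zip(observed, expected)):
    print(k, obs, round(exp, 1))
```

The expected counts are about 36.8, 36.8, 18.4, 6.1 and 1.5 for 0 to 4 states, and the limiting probability of at least one fixed point is about 0.632, which is the origin of the "63 out of 100" figure.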

Simulation results for networks with increasing \(N\) converge to the infinite-\(N\) analytic results. a–b Data for all simulations reaching fixed points from 25 simulations of 100 networks are averaged in the first column and separated out into states in which all units have low rate (quiescent, middle column) or one or more units are active (right column). Network size of simulations is indicated by the color of the curve, with the root mean square of firing rates, \(\sqrt{\langle {f}^{2}\left(x\right)\rangle }\), taken across units in each network. These curves are compared with \(\sigma /g\) calculated for the infinite-\(N\) network (black curves). Units have logistic response functions with a \(\Delta =0.2\), \(s=0\), and b \(\Delta =0.2\), \(s=0.5\)

Fig. 12 Phase diagram for networks with tanh units. a Results with \(\Delta =1\) replicate those of Stern et al. (2014). b–f Region of multistability increases to lower \(s\) while remaining only for \(s\ge 1\) on the y-axis. (Black = chaos; cyan = quiescent only; orange = multiple active stable states.)

4.4 Multiple solutions for \(x\left(\eta \right)\)

\(x\left(\eta \right)\) can have more than one value for some values of \(\eta \), because it is given by the solutions of \(x-sf\left(x\right)=\eta \). Multiple solutions require that \(x-sf\left(x\right)\) be a non-monotonic function of \(x\), which occurs if \({\text{max}}\left[f^{\prime}\left(x\right)\right]>1/s\), so that \(x-sf\left(x\right)\) has a region of negative slope. The need for a region of negative slope arises because, in all cases considered here, \({f}^{\prime}\left(x\right)\) approaches 0 at large positive or negative values of \(x\), where \(x-sf\left(x\right)\) has a slope of +1. In cases of multiple solutions for \(x\left(\eta \right)\), care must be taken in the choice of \(x\left(\eta \right)\). Stability is enhanced by choosing the solution with the lower value of \(f^{\prime}\left(x\left(\eta \right)\right)\), but for some response functions (though not for \(f\left(x\right)={\text{tanh}}\left(x\right)\)) such a choice can also yield the lower value of \({f}^{2}\left(x\right)\). This in turn can cause the self-consistent solution for the distribution of \(\eta \) to become too narrow to support multistability, as discussed below.
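As an illustration of how multiple values of \(x\left(\eta \right)\) arise, the sketch below finds all solutions of \(x-sf\left(x\right)=\eta \) for a logistic unit by scanning for sign changes and refining each with bisection. It is a minimal example under stated assumptions rather than the method used in the paper: the threshold value `x_th=1.0`, the search interval, the grid size, and the function names are all hypothetical choices made for illustration.

```python
import numpy as np

def logistic(x, x_th=1.0, delta=0.2):
    """Logistic response function; x_th = 1.0 is an assumed, illustrative threshold."""
    return 1.0 / (1.0 + np.exp((x_th - x) / delta))

def can_be_multivalued(s, delta):
    """max f'(x) = 1/(4*delta) for the logistic, so max f' > 1/s means s > 4*delta."""
    return s > 4.0 * delta

def solutions_x_of_eta(eta, s, x_th=1.0, delta=0.2, lo=-10.0, hi=10.0, n_grid=20001):
    """Return every x in [lo, hi] satisfying x - s*f(x) = eta."""
    def h(x):
        return x - s * logistic(x, x_th, delta) - eta

    xs = np.linspace(lo, hi, n_grid)
    vals = h(xs)
    roots = []
    for a, b, va, vb in zip(xs[:-1], xs[1:], vals[:-1], vals[1:]):
        if va == 0.0:
            roots.append(float(a))          # grid point is itself a solution
        elif va * vb < 0.0:
            for _ in range(60):             # bisection on the bracketing interval
                m = 0.5 * (a + b)
                vm = h(m)
                if va * vm <= 0.0:
                    b, vb = m, vm
                else:
                    a, va = m, vm
            roots.append(float(0.5 * (a + b)))
    return roots

# s = 1.0 exceeds 4*delta = 0.8, so some eta values give three solutions.
print(can_be_multivalued(1.0, 0.2), solutions_x_of_eta(0.5, s=1.0))
# s = 0.5 is below 4*delta, so x - s*f(x) is monotonic and x(eta) is single-valued.
print(can_be_multivalued(0.5, 0.2), solutions_x_of_eta(0.5, s=0.5))
```

When three solutions exist, the middle one lies on the negative-slope branch of \(x-sf\left(x\right)\); the outer two are the candidates between which the choice discussed above must be made.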

In networks with the logistic response function, the firing rate \(f\left(x\right)=\frac{1}{1+{\text{exp}}\left(\frac{{x}_{th}-x}{\Delta }\right)}\approx {\text{exp}}\left(\frac{{x-x}_{th}}{\Delta }\right)\) for \({x\ll x}_{th}\) is never exactly zero. Therefore, the Gaussian distribution of \(\eta \) will always have nonzero variance for \(g>0\) and, even if the distribution is narrow with very small variance, it always retains some vanishingly small but nonzero density at the values of \(\eta \) required to support multiple solutions of \(x\left(\eta \right)\) if \(s>4\Delta \). However, if bifurcation points in \(x\left(\eta \right)\) require levels of the Gaussian-distributed \(\eta \) that are many standard deviations from its mean of zero, such solutions give an exponentially small probability of multistability in a finite network, so are unlikely to be observed in practice. Therefore, we set a threshold, \({Z}_{{\text{max}}}\sigma \), in terms of the number, \({Z}_{{\text{max}}}\), of standard deviations, \(\sigma \), of the distribution of inputs, \(\eta \), such that if both bifurcation points, \({\eta }^{*}\), are beyond the threshold (\({\eta }^{*}<-{Z}_{{\text{max}}}\sigma \) or \({\eta }^{*}>{Z}_{{\text{max}}}\sigma \)) we ignore both the extra solutions and any instability they cause. To clarify the result of such a limit, we show results with multiple values of \({Z}_{{\text{max}}}\) in Fig. 13 (for logistic response functions) and Fig. 14 (for tanh response functions), while using a default value of \({Z}_{{\text{max}}}=6\) in other figures. In this manner, we have used the results for an infinite system in which correlations are absent, but applied them to a system in which the number of units could range from \({10}^{3}\) to \({10}^{6}\) to \({10}^{15}\) (as \({Z}_{{\text{max}}}\) changes from 3 to 6 to 9), with the results expected to be accurate for 999 networks in 1000 of that size. For further explanation, see also the text in Sect. 3.1.
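For logistic units the bifurcation points \({\eta }^{*}\) have a closed form, which makes the threshold rule easy to sketch. Setting \(f^{\prime}\left(x\right)=f\left(1-f\right)/\Delta =1/s\) gives \(f=\frac{1}{2}\left(1\pm \sqrt{1-4\Delta /s}\right)\), and inverting the logistic gives the corresponding \(x\) and hence \({\eta }^{*}=x-sf\). The code below is a minimal illustration under assumptions, not the authors' implementation: the function names, the value of \({x}_{th}\), and the example values of \(\sigma \) are hypothetical, and the rule is applied exactly as worded above (extra solutions are ignored only when both bifurcation points lie beyond \({Z}_{{\text{max}}}\sigma \)).

```python
import math

def logistic_bifurcation_etas(s, delta, x_th):
    """Return (eta_low, eta_high) where x - s*f(x) has zero slope, or None if s <= 4*delta."""
    disc = 1.0 - 4.0 * delta / s
    if disc <= 0.0:
        return None                          # x - s*f(x) is monotonic: no bifurcation points
    etas = []
    for f in ((1.0 - math.sqrt(disc)) / 2.0, (1.0 + math.sqrt(disc)) / 2.0):
        x = x_th + delta * math.log(f / (1.0 - f))   # invert the logistic for x
        etas.append(x - s * f)
    return min(etas), max(etas)

def extra_solutions_counted(s, delta, x_th, sigma, z_max=6.0):
    """Apply the Z_max rule: ignore extra solutions only if both eta* exceed z_max*sigma."""
    points = logistic_bifurcation_etas(s, delta, x_th)
    if points is None:
        return False
    return any(abs(eta) <= z_max * sigma for eta in points)

# Illustrative values only: x_th = 1.0 and the sigmas below are assumptions.
print(logistic_bifurcation_etas(1.0, 0.2, 1.0))
print(extra_solutions_counted(1.0, 0.2, 1.0, sigma=0.10))   # threshold 0.6
print(extra_solutions_counted(1.0, 0.2, 1.0, sigma=0.05))   # threshold 0.3
```

In this sketch, raising `z_max` widens the range of \(\sigma \) over which the extra solutions are counted, which is the mechanism by which a larger \({Z}_{{\text{max}}}\) enlarges the multistable region.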

Fig. 13 Impact of the criterion for multistability in networks with logistic units. a–d Results with \(\Delta =0.2\) with varying threshold, \({Z}_{{\text{max}}}\), for the number of standard deviations from the mean input that a unit must receive before considering a unit in the network to switch state. The mathematical limit is shown in (d), while (a)–(c) indicate the multistable region growing with increased \({Z}_{{\text{max}}}\). Note that in all cases the system with \(g=0\) on the y-axis cannot be multistable for \(s<1\). e–h Results for \(\Delta =0.1\). (Black = chaos; dark blue = chaos + quiescent stable; cyan = quiescent only; yellow = quiescent + active stable state; orange = multiple active stable states; red = stable quiescent + multiple active stable states; crimson = chaos + multiple stable active states.)

Fig. 14 Impact of the criterion for multistability in networks with tanh units. a–d Results with \(\Delta =0.8\) with varying threshold, \({Z}_{{\text{max}}}\), for the number of standard deviations from the mean input that a unit must receive before considering a unit in the network to switch state. The mathematical limit is shown in (d), which in this case is minimally different from the results with lower \({Z}_{{\text{max}}}\) in (a–c). Note that in all cases the system with \(g=0\) on the y-axis cannot be multistable for \(s<1\). e–h Equivalent results for \(\Delta =0.4\), with a tiny but observable dependence on \({Z}_{{\text{max}}}\). (Black = chaos; cyan = quiescent only; orange = multiple active stable states.)

About this article

Cite this article

Breffle, J., Mokashe, S., Qiu, S. et al. Multistability in neural systems with random cross-connections. Biol Cybern 117, 485–506 (2023). https://doi.org/10.1007/s00422-023-00981-w

DOI: https://doi.org/10.1007/s00422-023-00981-w