Abstract

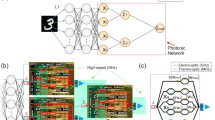

Innovations in deep learning technology have recently focused on photonics as a computing medium. Integrating an electronic and photonic approach is the main focus of this work utilizing various photonic architectures for machine learning applications. The speed, power, and reduced footprint of these photonic hardware accelerators (HA) are expected to greatly enhance inference. In this work, we propose a hybrid design of an electronic and photonic integrated circuit (EPIC) hardware accelerator (EPICHA), an electronic–photonic framework that uses architecture-level integrations for better performance. The proposed EPICHA has a systematic structure of reconfigurable directional couplers (RDCs) to build a scalable, efficient machine learning accelerator for inference applications. In the simulation framework, the input and output layers of a fully integrated photonic neural network use the same integrated photodetector and RDC technology as the activation function. Our system only compromises on latency because of the electro–optical conversion process and the hand-off between the electronic and photonic domains. Insertion losses in photonic components have a small negative impact on accuracy when using more deep learning stages. Our proposed EPICHA utilizes coherent operation, and hence uses a single wavelength of λ = 1550 nm. We used the interoperability feature of the Ansys Lumerical MODE, DEVICE, and INTERCONNECT tools for component modeling in the photonic and electrical domain, and circuit-level simulation using S-parameters with MATLAB. The electronic component acts as the controller, which generates the required analog voltage control signals for each RDC present in the photonic processing engine. We employed MathWorks MATLAB 2022b for the classification of handwritten digits from the MNIST database; the proposed coherent EPICHA achieved accuracy of 94%.

Similar content being viewed by others

Data availability

Enquiries about data availability should be directed to the authors.

References

Gokhale, V., Jin, J., Dundar, A., Martini, B., Culurciello, E.: A 240 G-ops/s Mobile Coprocessor for Deep Neural Networks, CVPR Workshop (2014).

Du, Z., Fasthuber, R., Chen, T., Ienne, P., Li, L., Luo, T., Feng, X., Chen, Y., Temam, O.: ShiDianNao: shifting vision processing closer to the sensor. In: International Symposium on Computer Architecture (ISCA), (2015)

Zhang, C., Li, P., Sun, G., Guan, Y., Xiao, B., Cong, J.: Optimizing FPGA-based accelerator design for deep convolutional neural networks. FPGA, (2015).

Chen, Y.-H., Yang, T.-J., Emer, J., Sze, V.: Eyeriss v2: a flexible accelerator for emerging deep neural networks on mobile devices. IEEE J. Emerg. Sel. Top. Circuits Syst (JETCAS) 9(2), 292–308 (2019)

Parashar, A., Rhu, M., Mukkara, A., Puglielli, A., Venkatesan, R., Khailany, B., Emer, J., Keckler, S.W., Dally, W.J.: SCNN: an accelerator for compressed-sparse convolutional neural networks. Int. Symp. Comput. Arch. (ISCA) (2017).

Markidis, S., Der Chien, S.W., Laure, E., Peng, I. B., Vetter, J.S.: Nvidia tensor core programmability, performance & precision, pp. 522–531 (2018).

Sodani, A., Gramunt, R., Corbal, J., Kim, H.-S., Vinod, K., Chinthamani, S., Hutsell, S., Agarwal, R., Liu, Y.-C.: Knights landing: second-generation Intel Xeon Phi product. IEEE Micro 36(2), 3446 (2016)

Amravati, A., Nasir, S.B., Thangadurai, S., Yoon, I., Raychowdhury, A: A 55nm time-domain mixed-signal neuromorphic accelerator with stochastic synapses and embedded reinforcement learning for autonomous micro-robots, pp. 124126, 2018

Valavi, H., Ramadge, P.J., Nestler, E., Verma, N.: A mixed-signal binarized convolutional-neural-network accelerator integrating dense weight storage and multiplication for reduced data movement, pp. 141–142 (2018)

Amaravati, A., Nasir, S.B., Ting, J., Yoon, I., Raychowdhury, A.: A 55-nm, 1.00.4 v, 125-pj/mac time-domain mixed-signal neuromorphic accelerator with stochastic synapses for reinforcement learning in autonomous mobile robots. IEEE J. Solid-State Circuits 54(1), 75–87 (2018)

Sunny, F.P., et al.: A survey on silicon photonics for deep learning. ACM J. Emerg. Technol. Comput. Syst. 17, 1–57 (2021)

Song, L., Qian, X., Li, H., Chen, Y.: Pipelayer: a pipelined reram-based accelerator for deep learning. In: 2017 IEEE International Symposium on High Performance Computer Architecture (HPCA), pp. 541552 (2017)

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. The MIT Press (2016)

Marquez, B.A., Filipovich, M.J., Howard, E.R., Bangari, V., Guo, Z., Morison, H.D., De Lima, T.F., Tait, A.N., Prucnal, P.R., Shastri, B.J.: Silicon photonics for artificial intelligence applications. Photoniques 104, 40–44 (2020)

Sunny, F.P., Mirza, A., Nikdast, M., Pasricha, S.: ROBIN: a robust optical binary neural network accelerator. ACM Trans. Embed. Comput. Syst. (TECS) 20, 1–24 (2021)

Waldrop, M.M.: The chips are down for Moore’s law. Nature 530, 144–147 (2016)

Bai, B., Shu, H., Wang, X., Zou, W.: Towards silicon photonic neural networks for artificial intelligence. Sci. China Inf. Sci. 63, 1–4 (2020)

Misra, J., Saha, I.: Artificial neural networks in hardware: a survey of two decades of progress. Neurocomputing 74(1), 239255 (2010)

Intel delivers Real Time AI in Microsoft’s accelerated deep learning platform. [Online]. Available: https://newsroom.intel.com/news/inteldelivers-real-time-aimicrosofts-accelerated-deep-learning-platform/

Rajendran, B., et al.: Low-power neuromorphic hardware for signal processing applications: A review of architectural and system-level design approaches. IEEE Signal Process. Mag. 36(6), 97–110 (2019)

Schwartz, R., Dodge, J., Smith, N. A., Etzioni, O.: Green AI, arXiv preprint arXiv:1907.10597, (2019)

Strubell, E., Ganesh, A., McCallum, A.: Energy and policy considerations for deep learning in nlp, arXiv preprint arXiv:1906.02243, (2019)

Lacoste, A., Luccioni, A., Schmidt, V., Dandres, T.: Quantifying the carbon emissions of machine learning, arXiv preprint arXiv:1910.09700, (2019)

De Marinis, L., Catania, A., Castoldi, P., Contestabile, G., Bruschi, P., Piotto, M., Andriolli, N.: A codesigned integrated photonic electronic neuron. IEEE J. Quantum Electron. 58, 1–10 (2022)

Mittal, S.: A survey of ReRAM-based architectures for processing in-memory and neural networks. Mach. Learn. Knowl. Extract. 1(1), 75–114 (2019)

Shafiee, A., Nag, A., Muralimanohar, N., Balasubramonian, R., Strachan, J.P., Hu, M., Williams, R.S., Srikumar, V.: Isaac: a convolutional neural network accelerator with in-situ analog arithmetic in crossbars. ACM SIGARCH Comput. Arch. News 44(3), 1426 (2016)

Thomas, A., Niehorster, S., Fabretti, S., Shepheard, N., Kuschel, O., Kupper, K., Wollschlager, J., Krzysteczko, P., Chicca, E.: Tunnel junction based memristors as artificial synapses. Front. Neurosci. Neurosci. 9, 241 (2015)

Kalikka, J., Akola, J., Jones, R.O.: Simulation of crystallization in Ge2Sb2Te5: a memory effect in the canonical phase-change material. Phys. Rev. B 90, 184109 (2014)

Morozovska, A.N., Kalinin, S.V., Yelisieiev, M.E., Yang, J., Ahmadi, M., Eliseev, E.A., Evans, D.R.: Dynamic control of ferroionic states in ferroelectric nanoparticles. Acta Mater. Mater. 237, 118–138 (2022)

Zheng, Y., Wu, Y., Li, K., Qiu, J., Han, G., Guo, Z., Luo, P., An, L., Liu, Z., Wang, L., et al.: Magnetic random access memory (MRAM). J. Nanosci. Nanotechnol. Nanosci. Nanotechnol. 7, 117–137 (2007)

Tsai, H., Ambrogio, S., Narayanan, P., Shelby, R.M., Burr, G.W.: Recent progress in analog memory-based accelerators for deep learning. J. Phys. D Appl. Phys. 51(28), 283001 (2018)

Xu, B., Huang, Y., Fang, Y., Wang, Z., Yu, S., Xu, R.: Recent progress of neuromorphic computing based on silicon photonics: Electronic–Photonic co-design, device, and architecture. InPhotonics 9(10), 698 (2022)

Waldrop, M.M.: The chips are down for Moore’s law. Nat. News 530(7589), 144 (2016)

Pasricha, S., Dutt, N.: On-Chip Communication Architectures, Morgan Kauffman, ISBN 978-0-12-373892-9, Apr (2008).

Li, C., Hu, M., Li, Y., Jiang, H., Ge, N., Montgomery, E., Zhang, J., Song, W., Davila, N., Graves, C.E., Li, Z., Strachan, J.P., Lin, P., Wang, Z., Barnell, M., Wu, Q., Williams, R.S., Yang, J.J., Xia, Q.: Analogue signal and image processing with large memristor crossbars. Nat. Electron. 1, 5259 (2018)

Yao, P., Wu, H., Gao, B., Tang, J., Zhang, Q., Zhang, W.: Fully hardware implemented memristor convolutional neural network. Nature 577, 641–646 (2020)

Feng, C., Gu, J., Zhu, H., Ying, Z., Zhao, Z., Pan, D.Z., Chen, R.T.: Silicon photonic subspace neural chip for hardware-efficient deep learning. arXiv preprint arXiv:2111.06705. (2021).

Williams, R.S.: What’s next? [the end of Moore’s law]. Comput. Sci. Eng. 19, 713 (2017). https://doi.org/10.1109/MCSE.2017.31

Hamerly, R., Sludds, A., Bernstein, L., Prabhu, M., Roques-Carmes, C., Carolan, J., Yamamoto, Y., Soljacicť, M., Englund, D.: Towards large-scale photonic neural-network accelerators. In: 2019 IEEE International Electron Devices Meeting (IEDM), pp 22.8.122.8.4 (2019). https://doi.org/10.1109/IEDM19573.2019.8993624

Schuman, C.D., Potok, T.E., Patton, R.M., Birdwell, J.D., Dean, M.E., Rose, G.S., Plank, J.S.: A survey of neuromorphic computing and neural networks in hardware. arXiv preprint arXiv:1705. 06963 (2017)

Shin, D., Yoo, H.-J.: The heterogeneous deep neural network processor with a non-von Neumann architecture. Proc. IEEE 108, 12451260 (2020). https://doi.org/10.1109/JPROC.2019.2897076

Paolini, E., De Marinis, L., Cococcioni, M., Valcarenghi, L., Maggiani, L., Andriolli, N.: Photonic-aware neural networks. Neural Comput. Appl. 1–3 (2022).

Ohno, S., Tang, R., Toprasertpong, K., Takagi, S., Takenaka, M.: Si microring resonator crossbar array for on-chip inference and training of the optical neural network. ACS Photonics 9, 2614–2622 (2022)

Al-Qadasi, M.A., Chrostowski, L., Shastri, B.J., Shekhar, S.: Scaling up silicon photonic based accelerators: Challenges and opportunities. APL Photonics, 020902 (2022).

Mourgias-Alexandris, G., Moralis-Pegios, M., Tsakyridis, A., Simos, S., Dabos, G., Totovic, A., Passalis, N., Kirtas, M., Rutirawut, T., Gardes, F.Y., Tefas, A.: Noise-resilient and high-speed deep learning with coherent silicon photonics. Nat. Commun.Commun. 13, 1–7 (2022)

Zhou, H., Dong, J., Cheng, J., Dong, W., Huang, C., Shen, Y., Zhang, Q., Gu, M., Qian, C., Chen, H., Ruan, Z.: Photonic matrix multiplication lights up photonic accelerator and beyond. Light Sci. Appl. 11, 1–21 (2022)

Sunny, F.P., Mirza, A., Nikdast, M.: High-performance deep learning acceleration with silicon photonics. In: Silicon Photonics for High-Performance Computing and Beyond 2021 Nov 16 (pp. 367–382). CRC Press (2021).

Guo, K., Zeng, S., Yu, J., Wang, Y., Yang, H.: [DL] A survey of FPGA-based neural network inference accelerators. ACM Trans. Reconfig. Technol. Syst. 12, 126 (2019). https://doi.org/10.1145/3289185

Ali, M.M., Madhupriya, G., Indhumathi, R., Krishnamoorthy, P.: Performance enhancement of 8×8 dilated banyan network using crosstalk suppressed GMZI crossbar photonic switches. Photonic Netw. Commun. 42, 123–133 (2021)

Mubarak Ali, M., Madhupriya, G., Indhumathi, R., Krishnamoorthy, P, Photonic processing core for reconfigurable electronic–photonic integrated circuit. In: Arunachalam, V., Sivasankaran, K. (eds.) Microelectronic Devices, Circuits and Systems. ICMDCS 2021. Communications in Computer and Information Science, vol. 1392. Springer, Singapore (2021)

Meerasha, M.A., Ganesh, M., Pandiyan, K.: Reconfigurable quantum photonic convolutional neural network layer utilizing photonic gate and teleportation mechanism. Opt. Quant. Electron. 54, 770 (2022). https://doi.org/10.1007/s11082-022-04168-8

Meerasha, M.A., Meetei, T.S., Madhupriya, G., et al.: The design and analysis of a CMOS-compatible silicon photonic ON–OFF switch based on a mode-coupling mechanism. J. Comput. Electron.Comput. Electron. 19, 1651–1659 (2020). https://doi.org/10.1007/s10825-020-01550-1

Xu, C.L., Huang, W.P., Stern, M.S., Chaudhuri, S.K.: Full-vectorial mode calculations by finite difference method. IEE Proc. Optoelectron. 141(5), 281–286 (1994). https://doi.org/10.1049/ip-opt:19941419

Wang, Y., Chen, Z., Hu, H.: Analysis of waveguides on lithium niobate thin films. Crystals 8(5), 191 (2018)

Acknowledgements

The authors wish to acknowledge Ansys/Lumerical, Siemens, and The MathWorks, Inc.

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mosses, A., Joe Prathap, P.M. Analysis and codesign of electronic–photonic integrated circuit hardware accelerator for machine learning application. J Comput Electron 23, 94–107 (2024). https://doi.org/10.1007/s10825-023-02123-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10825-023-02123-8