Abstract

Marketing interviews are widely used to acquire information on the behaviour, satisfaction, and/or needs of customers. Although online surveys are broadly available, one of the major challenges is to collect high-quality data, which is fundamental for marketing. Since online surveys are mostly unsupervised, the possibility of providing false answers is high, and large numbers of participants do not finish interviews, yet the reasons behind this pattern remain unclear. Here, we examined the possible factors influencing response rates and investigated the impact of technical and demographic information on the probability of interview completion in multiple surveys. We applied survival analysis and proportional hazards models to statistically evaluate the associations between the probability of survey completion and the technical and demographic information of the respondents. More complex surveys had lower completion probabilities, whereas completion was more likely when respondents used desktop computers rather than mobile devices, and when surveys were translated into their native language. Meanwhile, age and gender did not influence completion rates, but the pool of respondents invited to complete the survey did. These findings can be used to improve online surveys to achieve higher completion rates and collect more accurate data.

Introduction

One of the major problems in market research is associated with the collection of high-quality data from respondents. On average, just 15–20%, or fewer, of invited people complete online surveys (e.g. Deutskens et al. 2004; Wright and Schwager 2008; Pan 2010; Pedersen and Nielsen 2016; Pan et al. 2022), and it requires huge efforts to raise this figure above 60% (e.g. Van Selm and Jankowski 2006; Liu and Wronski 2018; Sammut et al. 2021). Furthermore, a notable proportion of completed interviews contain low-quality data due to false answers (e.g. Motta et al. 2017; Kato and Miura 2021) and a variety of other factors influencing the overall experience of respondents during interviews (Couper 2000; Deutskens et al. 2004).

Different practices are available to identify respondents providing low quality data, but such practices are only suitable for cleaning the dataset before further usage, and not for increasing the number of completed interviews. To find possible ways of increasing people’s motivation to complete online surveys, and provide high-quality answers, we need to understand the factors influencing interview completion.

Online surveys have become increasingly popular over the last few decades (Couper 2000; Van Selm and Jankowski 2006; Fan and Yan 2010; Pan 2010), which has been facilitated by the inclusion of new technological developments. The entire process of completing surveys online can be divided into four major parts: (1) survey development, (2) survey delivery, (3) survey completion, and (4) survey return, with each part playing a role in decreasing completion rates (Fan and Yan 2010; Sammut et al. 2021). Several ways of increasing the completion rate of online surveys have been identified and proposed, most of which have been increasingly used in the past few years; however, their effectiveness remains poorly understood.

In addition, it is worth noting that the completion rate of a survey can refer either to the proportion of respondents who complete the survey, i.e. those who answered all of its questions, or to the proportion of answers given to a single question that are neither empty nor 'don't know'. In this study, we focus on the problems associated with low completion rates of online surveys, since the completion rate is an important aspect of surveys which marketers ubiquitously aim to increase (e.g. Deutskens et al. 2004; Wright and Schwager 2008). We also focus on factors influencing this phenomenon which remain unclear (see below). Hereafter, we use completion rate and response rate as synonyms, referring to survey completion rates.

The response rate of online surveys is highly associated with the quality of data (Van Selm and Jankowski 2006; Kato and Miura 2021; Sammut et al. 2021; Pan et al. 2022) which in turn is influenced by (1) incentives, (2) personalised invitations, (3) reminders, (4) timing, and (5) the length of the interview. These can all increase the motivation of participants, as confirmed in experimental studies (e.g. Deutskens et al. 2004; Van Selm and Jankowski 2006). Nevertheless, both the completion rate of online surveys and the quality of the data obtained in those surveys are related to the device used for participating in surveys (e.g. Mavletova 2013; Tourangeau et al. 2017), thus further complicating the situation with respect to obtaining high-quality data.

Other factors, such as behavioural, cultural (even religious), or ethnic differences, can also impact response rates (Van Selm and Jankowski 2006; Pan et al. 2022) and data quality (Tourangeau et al. 2017) in online surveys. The perceptions and preconceptions of people can also determine their level of interest in completing surveys. For example, ethnic differences, either related to the nationality or the affiliation of people who either set the study or are the recipients asked to complete the survey, are associated with lower response rates (Van Selm and Jankowski 2006; Pan et al. 2022). Finally, the timing of the invitation and the number of reminders (if any) have varying impacts on the probability of completing online surveys, even among surveys with similar topics (Pan 2010; Pan et al. 2022).

Despite there being an increasing number of studies examining the factors influencing the completion rates of online surveys (e.g. LaRose and Tsai 2014, Saleh and Bista 2017, Liu and Wronski 2018), studies examining behavioural, cultural, or technical factors are lacking. It is also unclear why some respondents start a survey, but never finish it. The survey completion rate can be considered as "interview survival". This idea originated from a widely used technique in medical sciences, known as "survival analysis" (Kaplan and Meier 1958; Kartsonaki 2016), which is a useful approach to estimate mortality and survival processes.

Here, we use survival analysis and proportional hazards models to investigate factors affecting the response rate of online surveys in market research, after defining the necessary concepts and describing the methods in detail (see the next section, Methodology). This approach allows us to statistically evaluate the associations between interview completion probability and the technical settings of the interviews, whilst also including the behavioural and demographic characteristics of the respondents. To achieve this goal, we used data from three online surveys that differed in complexity, but were programmed in the same tool (Dub InterViewer v7), ensuring the same technical conditions during data collection. It is worth noting that the complexity of surveys can originate from two sources: (1) they contain simple questions, but many of them (survey length) and, (2) they require complicated tasks to be completed, such as looped questions, best–worst scaling or conjoint tasks (such as advanced survey components). When we refer to ‘complex’ hereafter, we will not distinguish between survey length and the presence of complicated tasks.

We examine how best to optimise online surveys to increase their completion probability. To address this question, we need to acquire a better understanding of which factors influence the completion rate of surveys. First, we predict that modifying the survey (e.g. changing some functions or adding new questions) during the data collection phase will lower the proportion of surveys completed. Second, more complex surveys, containing many background calculations (i.e. a higher number of hidden questions), will also decrease the completion rate of surveys. Further, our third prediction is that although mobile devices will be more commonly used for completing surveys, complex surveys will have lower completion rates on mobile devices because some questions may not be compatible with mobile devices. Fourth, we predict that the interview completion probability will be lower when the survey language is English in contrast to the native languages of the respondents (e.g. Graddol 2003; Rao 2019). Panel services may provide different-quality sources for surveys and so our fifth prediction is that these sources influence the probability of surveys being completed. Finally, our sixth prediction is that younger respondents will be more likely to finish surveys, but gender will not significantly influence the completion rates of online surveys (e.g. Zhang 2005; Thayer and Ray 2006; Zickuhr and Madden 2012).

The findings of our study will benefit stakeholders and decision makers in marketing industries where online surveys are used to collect data. More specifically, our study may influence fieldwork planning, sample size optimisation, cost reduction, and higher quality data collection in online surveys.

Methodology

Kaplan–Meier estimation for the time function of survey progression

Survival or event history analysis is a widely used method in demographic or medical research and is used to estimate mortality and healing/survival processes. The original terms describe the occurrence of an event of interest (referred to as death) and previous occurrences of other events (referred to as loss), where the former is a known event, whilst the latter may be accidental (unknown), or controlled (Kaplan and Meier 1958).

In marketing research, surveys (whether online, telephonic, or face-to-face) that are used for data collection can be described in the same terms. First, the position (the ith question answered) at which the interview ends corresponds to death and is referred to as the interview lifetime hereafter; here it is assumed that the interviewee has stopped the interview. Second, a lack of information that prevents us from identifying the reason for the interview ending, e.g. the loss of internet connection, corresponds to loss and is referred to as a censored record hereafter. Assuming that the interview lifetime is independent of the censored records, S(i) is the estimated proportion of interviews in which the interview lifetime exceeds the ith question. Following Kaplan and Meier (1958), the product-limit estimate for N interviews is defined by listing the interview lifetimes in increasing order, giving \(0\le {i}_{1}^{\prime}\le {i}_{2}^{\prime}\le \dots \le {i}_{N}^{\prime}\). Then,

\[S(i)=\prod_{r}\frac{N-r}{N-r+1},\]

where r assumes values for which \({i}_{r}^{\prime}\le i\) and \({i}_{r}^{\prime}\) measures the length of the interview lifetime. This distribution function maximises the likelihood of observations. When censored records are not present before the ith question, the estimate of S(i) is the binomial estimate, i.e. the proportion of interviews that passed the ith question (Kaplan and Meier 1958, see also James et al. 2021).

A hypothetical example

For simplicity, we use data from 10 interviews in which we can record the question (position) at which the interview stopped, as well as the occurrence of censored records in an interval of 1–16 (the number/position of questions), distinguishing censored records by inserting a hashtag (#) after the numbers. A stop is an event with a known outcome, i.e. the respondent completed the survey, or decided to terminate the survey prior to completion, which is defined here as the last question to have been answered. In contrast, a censored record is an event with no information as to why the respondent did not answer the remaining questions in the survey. Such unknown outcomes occur as a result of technical issues, or respondents getting bored during the course of the survey. Then, O is a set containing all positions when any event occurs, e.g. \(O=\{{1,2}\#,3\#,{4,5}\#,{10,12}\#,{13,14,16}\#\}\). In this case, censored records occurred in the 2nd, 3rd, 5th, 12th, and 16th positions and the interview stopped in the 1st, 4th, 10th, 13th, and 14th positions. Multiple events may take place in any of the positions, which should be distinguished as separate events.

We can count all events during this survey (Table 1). It is worth noting the events that occurred in the zero and the 17th positions were unknown, but these positions have no information content regarding the analysis. If more than one event happened in the same position, the number of total events replaced the current values. For simplicity, let us assume that zero or one event occurs in each of the positions.

The cumulated proportion of active interviews is the Kaplan–Meier estimate of the survival function. From a medical perspective, its values indicate the chance of surviving a disease or illness, so greater values represent a greater chance that an individual survives. In our case, greater values are also better because they indicate that a larger proportion of interviews remains active and is eventually completed. The Kaplan–Meier function allows us to investigate and interpret the stops and censored records during the interview (Fig. 1).

The Kaplan–Meier estimate function. The horizontal axis indicates the position of questions in the survey, and the vertical axis indicates the cumulated proportion of active interviews. The steps in the function curve correspond to the interview stops, whilst the vertical bars indicate the occurrence of censored records in each position
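The product-limit calculation for the hypothetical set O can be sketched in a few lines of Python. This is an illustrative sketch and not the code used in the study (the analyses were run in R); the function name `kaplan_meier` and the `(position, is_stop)` record layout are assumptions made for this example, and stops are processed before censored records that share a position.

```python
from collections import Counter

# Events from the hypothetical example: (position, is_stop).
# is_stop=True marks an interview stop; False marks a censored record ("#").
events = [(1, True), (2, False), (3, False), (4, True), (5, False),
          (10, True), (12, False), (13, True), (14, True), (16, False)]


def kaplan_meier(records):
    """Return [(position, S)] for every position with at least one stop."""
    stops = Counter(pos for pos, is_stop in records if is_stop)
    leaving = Counter(pos for pos, _ in records)  # stops and censored records
    at_risk = len(records)                        # interviews still active
    survival = 1.0
    curve = []
    for pos in sorted(leaving):
        if stops[pos]:
            # Product-limit step: share of active interviews that continue.
            survival *= (at_risk - stops[pos]) / at_risk
            curve.append((pos, survival))
        # Stops and censored records both leave the set of active interviews.
        at_risk -= leaving[pos]
    return curve


km_curve = kaplan_meier(events)
for pos, s in km_curve:
    print(f"position {pos:2d}: S = {s:.4f}")
```

Under these assumptions the estimate falls from 0.9 after the first stop to roughly 0.21 after the stop at position 14; censored records reduce the number of active interviews without producing a step in the curve.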

Comparison of groups

The demographic and cultural characteristics of participants, or the infrastructural differences among countries are only two examples of factors that may impact upon interview lifetimes. Therefore, any demographic data or technical information available during the interviews are useful. We used the Mantel-Haenszel log-rank test to estimate the differences between two survival curves (Hoffman 2019; James et al. 2021):

where O and E are the observed and expected events in group g when an event occurs (see also the next subsection). This test can be extended to multiple groups. According to its null hypothesis, the survival experience in the different groups is the same at every position (Hazra and Gogtay 2017; James et al. 2021; Fig. 2). Therefore, assuming a \(\chi^{2}\) distribution, a p-value < 0.05 at the significance level of α = 0.05 suggests that the compared survival functions are significantly different. The Mantel-Haenszel log-rank test is non-parametric, and is named after its relation to a test using the logarithm of the ranks assigned to the data (Hazra and Gogtay 2017). Every figure in our study that shows at least two groups, each represented by its own survival curve, visualises a group comparison based on the Mantel-Haenszel log-rank test.
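To make the comparison concrete, the following Python sketch implements one common two-group formulation of the log-rank test, in which the expected number of stops and a hypergeometric variance are accumulated over all positions where a stop occurs. It is an assumption that this matches the exact variant used in the study; the function name, the `(position, is_stop)` record layout, and the two example groups are hypothetical.

```python
import math


def logrank_two_groups(group_a, group_b):
    """Two-group log-rank test; each record is (position, is_stop)."""
    records = [(pos, stop, 0) for pos, stop in group_a]
    records += [(pos, stop, 1) for pos, stop in group_b]
    stop_positions = sorted({pos for pos, stop, _ in records if stop})
    observed_minus_expected = 0.0
    variance = 0.0
    for pos in stop_positions:
        n = sum(1 for p, _, _ in records if p >= pos)               # active, both groups
        n_a = sum(1 for p, _, g in records if p >= pos and g == 0)  # active, group A
        d = sum(1 for p, s, _ in records if p == pos and s)         # stops at this position
        d_a = sum(1 for p, s, g in records if p == pos and s and g == 0)
        observed_minus_expected += d_a - d * n_a / n
        if n > 1:
            variance += d * (n_a / n) * (1 - n_a / n) * (n - d) / (n - 1)
    chi2 = observed_minus_expected ** 2 / variance if variance > 0 else 0.0
    # Upper tail of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


early = [(pos, True) for pos in range(1, 7)]    # hypothetical group stopping early
late = [(pos, True) for pos in range(10, 16)]   # hypothetical group stopping late
chi2_diff, p_diff = logrank_two_groups(early, late)
chi2_same, p_same = logrank_two_groups(early, early)
```

With these made-up groups, comparing a group with itself gives a statistic of zero, whereas the early and late groups differ clearly at α = 0.05.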

Estimation of expected events

First, we introduce a special case, offering sufficient background to describe further characteristics of the survival function. Assuming that the probabilities of the occurrences of any event are equal, then the probability values will be uniformly distributed. If we consider a 20-position long section of a hypothetical survey between the 10th and 30th questions, the probability of an event in any position of this interval is \(1/20\) but zero outside of the interval.

In this case, the number of active interviews decreases proportionally as the interview progresses because people stop completing the interview, so that the proportion of interviews still active at the ith position equals \((30-i)/20\), which corresponds to the values of the survival curve. In other words, the Kaplan–Meier estimate of the survival function provides the probability of an event occurring after the ith question.

Now, we can estimate the expected proportion of the next event in each upcoming position by dividing the probability of an event occurring in the ith position by the proportion of active interviews. In our example, this value is \((1/20)/((30-i)/20)\), which equals \(1/(30-i)\). The value is called the hazard rate (hereafter, HR) and is described by the hazard function (Kartsonaki 2016). Note that this ratio does not express a probability, and its values can be higher than 1. As the endpoint of the interval (i.e. 30) is approached, the proportion of active interviews approaches zero, and thus the HR tends to infinity. Therefore, the relative interview completion probability function is

\[h(i)=\frac{f(i)}{S(i)},\]

where the probability density function \(f(i)\) is the frequency of events at each position and \(S(i)\) is the survival function.

In the given example, five events occurred, and we can estimate each of their probabilities (Table 2). Applying the above equation (which represents the values of the probability density function divided by the values of the cumulated probability of active interviews in Table 1), the values of the relative interview completion probability function can also be calculated (Table 2).
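The uniform special case described earlier in this subsection can be checked numerically. The sketch below uses exact fractions; the function names and the treatment of position as a continuous quantity (so that a half position can be evaluated) are assumptions made for illustration.

```python
from fractions import Fraction

START, END = 10, 30      # interval of the hypothetical survey section
LENGTH = END - START     # 20 positions


def density(i):
    """f(i): probability of an event at position i (uniform on the interval)."""
    return Fraction(1, LENGTH) if START <= i < END else Fraction(0)


def survival(i):
    """S(i): proportion of interviews still active at position i."""
    if i < START:
        return Fraction(1)
    if i >= END:
        return Fraction(0)
    return Fraction(END - i, LENGTH)


def hazard(i):
    """h(i) = f(i) / S(i); only defined while some interviews remain active."""
    return density(i) / survival(i)


hazards = {i: hazard(i) for i in range(START, END)}
for i in (10, 20, 29):
    print(i, hazards[i])
```

Every value equals 1/(30 - i), and evaluating the function at position 29.5 with `hazard(Fraction(59, 2))` gives 2, which illustrates why the hazard is not a probability.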

These values are only known at discrete points of both the probability density function and the relative interview completion probability function. However, we can sum these values up to the ith position, resulting in a cumulative version of the probability density function, \(F(i)\), and a cumulative version of the relative interview completion function, \(H(i)\). Then, the probability of active interviews in the ith position can be calculated as 1 minus the cumulative frequency of events up to the ith position:

\[S(i)=1-F(i)\]

Similarly, the probability of active interviews in the ith position can be estimated by raising Euler’s number to the power of −1 times the cumulative relative interview completion in the ith position:

\[S(i)=e^{-H(i)}\]

This also means that the cumulative relative interview completion in the ith position can be derived as −1 times the natural logarithm of the probability of active interviews in the ith position:

\[H(i)=-\ln S(i)\]
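The three relations between \(S(i)\), \(F(i)\) and \(H(i)\) can be illustrated with a short Python sketch. The survival values below are an assumption: they are the product-limit values implied by the 10-interview hypothetical example, not figures copied from Table 1 or Table 2.

```python
import math

# Assumed product-limit values S(i) at the stop positions of the hypothetical example.
survival = {
    1: 9 / 10,
    4: 9 / 10 * 6 / 7,
    10: 9 / 10 * 6 / 7 * 4 / 5,
    13: 9 / 10 * 6 / 7 * 4 / 5 * 2 / 3,
    14: 9 / 10 * 6 / 7 * 4 / 5 * 2 / 3 * 1 / 2,
}

# F(i) = 1 - S(i): cumulative proportion of interviews that have stopped.
cumulative_stops = {i: 1 - s for i, s in survival.items()}

# H(i) = -ln S(i): cumulative relative interview completion (cumulative hazard).
cumulative_hazard = {i: -math.log(s) for i, s in survival.items()}

# Round trip: S(i) = exp(-H(i)) recovers the survival values.
recovered = {i: math.exp(-h) for i, h in cumulative_hazard.items()}

for i in survival:
    print(f"i={i:2d}  S={survival[i]:.4f}  F={cumulative_stops[i]:.4f}  "
          f"H={cumulative_hazard[i]:.4f}")
```

As the proportion of active interviews falls, the cumulative hazard rises monotonically, and converting it back with the exponential reproduces the original survival values.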

Cox’s regression for investigating the factors associated with survey progression

We were also interested in identifying factors influencing the progression of surveys, in addition to estimating the magnitude and direction of these influences. Because time-dependent processes mostly change non-linearly, we applied Cox’s proportional hazards model (Cox 1972; see also James et al. 2021) to estimate the effects of different factors on the changes of the hazard function.

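Let us assume that the values \(({x}_{1},{x}_{2},\dots ,{x}_{n})\) of the factors \({X}_{1},{X}_{2},\dots ,{X}_{n}\) affect the changes in the relative interview completion probability function and that each factor \({X}_{k}\) is time-independent; then

\[h(i\mid {x}_{1},\dots ,{x}_{n})={h}_{0}(i)\exp \left({b}_{1}{x}_{1}+{b}_{2}{x}_{2}+\dots +{b}_{n}{x}_{n}\right),\]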

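where \({h}_{0}(i)\) is the unspecified baseline value. Next, we substitute the values of two observations, p and q, into the previous equation and divide one by the other; then

\[\frac{h(i\mid {x}_{p1},\dots ,{x}_{pn})}{h(i\mid {x}_{q1},\dots ,{x}_{qn})}=\exp \left({b}_{1}({x}_{p1}-{x}_{q1})+\dots +{b}_{n}({x}_{pn}-{x}_{qn})\right).\]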

Consequently, the ratio of two relative interview completion probability values of two observations is time-independent, and independent of the baseline values in Cox’s model. This ratio depends exclusively on the values of the influencing factors, which are characterised by a linear function, where the coefficients \({b}_{1},{b}_{2},\dots ,{b}_{n}\) give both the direction and the magnitude of those influences.
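A small numerical sketch may help to show why the baseline cancels. The coefficients and the two binary factors below are hypothetical values chosen for illustration only; they are not estimates from any of the datasets.

```python
import math

# Hypothetical coefficients for two binary factors:
# x1 = 1 if a desktop device is used, x2 = 1 if the survey is in the native language.
coefficients = [-0.4, -0.2]


def relative_hazard(b, x):
    """exp(b1*x1 + ... + bn*xn): the hazard relative to the baseline."""
    return math.exp(sum(b_k * x_k for b_k, x_k in zip(b, x)))


def hazard(baseline, b, x):
    """Cox model: baseline value multiplied by the relative hazard."""
    return baseline * relative_hazard(b, x)


desktop_native = (1, 1)
mobile_english = (0, 0)

# Ratio of the two observations, computed without any baseline value.
ratio = (relative_hazard(coefficients, desktop_native)
         / relative_hazard(coefficients, mobile_english))

# The same ratio is obtained whatever the (hypothetical) baseline value is.
ratios_by_baseline = [
    hazard(h0, coefficients, desktop_native) / hazard(h0, coefficients, mobile_english)
    for h0 in (0.01, 0.05, 0.3)
]
print(round(ratio, 4), [round(r, 4) for r in ratios_by_baseline])
```

Because the baseline appears in both the numerator and the denominator, the ratio reduces to the exponential of the weighted differences between the two observations, here exp(-0.6).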

Data sources

We used data, collected between 2019 and 2023, from three market research surveys of differing complexity, targeting customers’ travelling habits, shopping-related behaviours, and decisions whilst travelling (Table 3). The same survey-programming platform (Dub InterViewer v7), designed for creating online surveys (i.e. enabling the inclusion of custom-built solutions), was used for data collection in all three cases.

We never used any sensitive information from any of the respondents, nor used any core data from the surveys. We only collected variables that could be potentially linked to survey completion rates, when available, as follows: technical information (metadata version, device type, operating system, browser type, invitation source, survey language, question type), and demographic data (age, gender). All data processing and analyses were performed in R v4.2.2 (R Core Team 2022). Functions available in the ‘survival’ (Therneau 2022), ‘gtsummary’ (Daniel et al. 2021), ‘ggsurvfit’ (Daniel et al. 2023), and ‘patchwork’ (Pedersen 2022) packages were used for the analyses and the visualisation of the results.

Results

Dataset 1

Our first dataset was obtained from an online survey that was open to anyone to complete, provided they saw its advertisement globally. We used data collected from three regions (Europe, Mexico, and USA), which gave a total of 20,013 records. The three sub-surveys differed slightly in length (n = 50 ± 6, but 143 when counting all additional or technical forms hidden from respondents) and so we present the results pooled together. The length of the interviews varied between 86 and 3070 s (mean ± SD: 589.32 ± 235.45 s) from respondents who completed the surveys (n = 15,843); however, 20.84% of all records (n = 4170) were never finished. We considered a record as completed if it reached at least the 130th position (including technical variables) in the survey, because answering the final questions was optional.

The overall survival probability, i.e. the interview completion probability, was 0.81 close to the end of the survey (Fig. 3a). The highest impact on those completion rates was mostly observed for technical variables (Table 4; Fig. 3b–h); however, the behaviour of respondents did not differ with regard to their age (HR = 1, p = 0.744) and gender (compared to women: men, HR = 0.93, p = 0.601, prefer not to say, HR = 1.69, p = 0.601). However, it is prudent to acknowledge that demographic information was only available for ~ 1% of the records because the corresponding questions were asked at the end of the survey which is after most people had completed the survey. Therefore, their effects on survival rates cannot be clearly measured using this dataset.

Interviews were more likely to be finished under earlier metadata versions of the survey, and newer versions decreased the probability of completion (Table 4). The completion rate was higher when the survey was taken on desktop computers than on mobile devices. The different operating systems showed varying performance, and among browsers, interview completion rates were significantly higher in Edge and Internet Explorer than in Chrome (Table 4). Surveys from the USA, or extended to Mexico, showed significantly higher hazard ratios compared to European records. Respondents who filled in the survey in Finnish or in Russian were more likely to complete it than respondents who chose to take the survey in English. Finally, most respondents were found to be more likely to stop at a particular position when a technical (hidden) question followed next during the course of the interview (Table 4; Fig. 3).

Dataset 2

The second dataset contained records from a closed survey inviting respondents through four different sources. After removing those respondents (i) who failed a live quality check of the honesty or accuracy of their information (3.65% of all respondents), i.e. giving different answers when asked their age at both the beginning and end of the survey, (ii) who were screened out (37.37%), i.e. did not belong to any target group of the survey, and (iii) who were placed in a closed quota (target) group (30.32%), we had a total of 6558 records.

This survey contained 26 questions, but some of them were asked multiple times in loops. Although this survey had fewer questions than the previous one, it was more complex, and thus contained 190 positions in total, counting all additional and technical variables. In this case, 27.45% of the records were marked as complete (n = 1800) and the rest (n = 4758) were never finished. Once outlier records with more than 1.5 h of interview length—which was considered an extremely long time for completing this type of survey—were removed, the length of the completed interviews varied between 140 and 5165 s (1019.24 ± 623.60 s).

For the survival analysis, we used 45.59% of the records (n = 2990) due to the extremely high number of invited respondents who never started the survey (n = 3568). In other words, those respondents never got past the first question; they distorted the survival curve (the lowest interview completion probability was 0.27 with these records and 0.60 without them, Fig. 4) and had missing data for all other variables. Unfortunately, we do not have the necessary information to explicitly determine what caused this pattern. Two of the main causes could be that (1) the respondents received the invitation and decided not to enter the survey, or (2) they entered the survey, but closed it after reading the introduction. Depending on the options of the data collection tool, both possibilities could equally be marked as “survey was not started”.
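The effect of excluding respondents who never started the survey can be reproduced from the counts reported for Dataset 2. The sketch below is a simple proportion check under the simplifying assumption that censoring is ignored, so the lowest point of the survival curve is approximated by completed records divided by retained records; the function name is hypothetical.

```python
def completion_shares(completed, total, never_started):
    """Compare completion proportions with and without never-started records."""
    started = total - never_started
    return {
        "started": started,
        "share_started": started / total,
        "completion_all": completed / total,
        "completion_started": completed / started,
    }


# Counts reported for Dataset 2.
dataset2 = completion_shares(completed=1800, total=6558, never_started=3568)
for name, value in dataset2.items():
    print(name, round(value, 4))
```

With these counts, 2990 records remain (45.59% of all records), and the approximate completion proportion rises from about 0.27 to about 0.60 once the never-started records are excluded.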

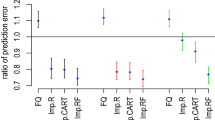

Technical variables significantly affected the completion rate of this survey, even after controlling for demographic characteristics of the respondents (Table 5; Fig. 5). Only age showed a significant effect (HR = 0.96, p = 0.001), when demographic variables were included in the model.

Interviews were more likely to be completed on desktop devices than on mobile devices and respondents were more likely to stop when answering technical (hidden) questions (Table 5). Some sources provided more respondents who completed the survey than other sources, and these effects remained significant even after controlling for demographic variables. In contrast, the metadata version of the survey and survey language became non-significant after including age and gender terms in the model, although all languages other than English showed a trend towards having higher completion rates before that step was taken.

Dataset 3

The third dataset constituted a closed survey inviting respondents through five different sources. After removing respondents (i) who did not meet the criteria of the survey or who answered the questions very quickly and thus, were considered as invalid (39.14%), and (ii) who were placed in a closed quota (target) group (28.75%), we had a total of 29,053 records.

This survey was the most complex, containing 61 questions, including loops and custom forms developed for showing specific tasks to the respondents. When considering all technical variables, it had 225 positions in total. Although the highest number of respondents participated in this survey, just 10.84% of the surveys were marked as complete (n = 3150) and the rest (n = 25,903) were left incomplete by the respondents. The length of the completed interviews varied between 249 and 4036 s (901.71 ± 409.65 s).

We kept 22.26% of all records due to the extremely high number of invited respondents (n = 22,587) who never proceeded past question one; these records distorted the survival curve (the lowest interview completion probability was 0.11 with them and 0.49 without them, Fig. 6) and had missing data for all other variables.

Demographic characteristics alone did not influence the probability of survey completion. Neither older (HR = 0.81, p = 0.147) nor younger respondents (HR = 0.90, p = 0.485) differed significantly from middle-aged respondents, nor did women and men differ (HR = 0.87, p = 0.259). Later versions (metadata version) of the survey had lower probabilities of being completed, as did surveys taken on mobile devices (Table 6; Fig. 7). In contrast, all languages other than English had lower hazard ratios and, compared to source one, three of the other sources also resulted in a higher probability of the survey being completed by the respondents.

Most of the results outlined above showed the same pattern when we included age groups and gender in the models. In this case, however, both older and younger people were more likely to complete the surveys than middle aged respondents, whilst women and men were still statistically similar (Table 6; Fig. 7).

Discussion

We found that technical conditions, such as the survey language, the type of the device used for giving answers, and the complexity of the survey were major factors influencing the probability of respondents completing online surveys. Meanwhile, the demographic characteristics of the respondents only marginally influenced the probability of online surveys being completed by respondents. Together, these results advance our understanding of the factors influencing the likelihood of online surveys being completed by respondents.

We examined if different versions of surveys impacted the completion rates. We found that changing some functions or adding new questions during the data collection phase, which was seen as an increased version number of the survey, can be risky. Although we were unable to clearly identify those cases in which a live interview was stopped due to the ongoing modifications of the survey, if there were any, we found that later versions of surveys were less likely to be completed than earlier versions of surveys. It is therefore recommended that sufficient time is scheduled to finalise surveys before programming/publishing them.

This is especially valid for more complex surveys, with many background calculations and a higher number of hidden questions. We found that the probability of surveys being completed was lower for more complex surveys among our three datasets. We therefore suggest keeping online surveys as simple as possible if high completion rates are desired (see also Deutskens et al. 2004). For example, if the questions focus on different aspects of a wider topic, then we recommend that the questions are split into multiple sections. These sections can be presented to the same respondent at different times in subsequent surveys, when asking the same person is an important criterion. Alternatively, each section could have its own sample of respondents. Nevertheless, we recommend that clear information and instructions should be provided at the beginning of surveys, and that respondents are provided with the possibility of stopping the survey at any point and then resuming the survey at a later time. Re-inviting (or reminding) respondents is also an opportunity to increase the number of completed interviews (Deutskens et al. 2004; Van Selm and Jankowski 2006; Sammut et al. 2021).

Web surveys were available before the turn of the last millennium, but only really became popular during the early stage of this millennium, which is when issues over their completion rates began to emerge. Couper (2000) and Van Selm and Jankowski (2006) revealed some possible solutions to increase the probability of respondents completing surveys, although the technology has developed rapidly since, allowing respondents to answer online surveys on the go.

We also showed that the type of device used to complete the online survey influenced the probability of survey completion (Tourangeau et al. 2017). Although mobile devices are more commonly used during our daily activities, complex surveys resulted in lower completion rates on mobile devices (Mavletova 2013). Several factors may have caused this pattern. First, mobile devices have some technical limitations (Tourangeau et al. 2017), e.g. smaller screen size and different software composition, so that optimising online surveys is still challenging. Despite the responsive survey designs available today that are specifically developed for mobile devices, the stability of the connection to internet service providers and internet access may cause further issues when respondents are trying to complete the survey. Some of the questions are designed to add more interactivity to the surveys and thus to increase the motivation of respondents; however, such questions can become problematic if they are displayed incorrectly, and thus have the opposite effect. For instance, compared to the textual version, the visual presentation of the same survey had a lower response rate (Deutskens et al. 2004). However, we were unable to rule out the effect of novelty, and less developed technical background in those years, on our results. In general, then, simple survey designs strongly encourage completion (Van Selm and Jankowski 2006; Saleh and Bista 2017; Sammut et al. 2021; Pan et al. 2022), and mobile devices can also produce data of similar quality to surveys launched on desktop devices (Tourangeau et al. 2017). Our results further suggest that when being asked to complete interactive and complex surveys, respondents should be made aware of the extra time needed to complete those surveys, whilst also being encouraged to complete the surveys on desktop devices.

Dataset 1 contained information on the influence of browser identity on the completion probabilities of online surveys and showed that survey completion rates differed among browsers. Our analyses may provide the opportunity to test the optimal technical conditions during a soft launch, when the survey is opened for a small portion of the planned number of respondents. Based on the information gathered, the survey programming can be further improved, or recommendations can be shown to respondents before they start the survey to encourage completion.

We found partial support for our prediction that the probability of survey completion would be lower in English compared to the native languages of the respondents. The completion probability was lower in surveys filled out in English than in some of the native languages. However, when we controlled for the demography of respondents in the multi-variable statistical comparisons, some of these relationships were masked. Overall, this suggests that, as expected, respondents feel more comfortable when filling out the survey in their native languages. Consequently, we recommend that surveys are provided in the language that is native to the respondents because it is likely to substantially increase the probability of survey completion. However, it is important to provide translations not only for the core survey, but also for all additional text, including invitations, follow-up advertisements, and web pages (Van Selm and Jankowski 2006; Pan et al. 2022).
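For reference, a multi-variable proportional hazards model of the kind referred to above can be written in the general form introduced by Cox (1972). The covariates shown here (language, age, gender) are illustrative assumptions and not necessarily the exact specification fitted to our datasets:

```latex
h(t \mid \mathbf{x}) = h_0(t)\,\exp\!\left(\beta_{\text{lang}}\,x_{\text{lang}} + \beta_{\text{age}}\,x_{\text{age}} + \beta_{\text{gender}}\,x_{\text{gender}}\right)
```

Here $h(t \mid \mathbf{x})$ is the hazard of abandoning the survey at question $t$ for a respondent with covariates $\mathbf{x}$, $h_0(t)$ is the baseline hazard, and $\exp(\beta_{\text{lang}})$ is the hazard ratio between the two language versions. If $x_{\text{lang}} = 1$ denotes the English version, a hazard ratio above 1 would indicate a higher risk of dropping out in English. When demographic covariates are correlated with survey language, adding them to the model can change the estimate of $\beta_{\text{lang}}$, which is one way in which a relationship seen in a single-variable comparison may be masked.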

Whilst translating surveys may require additional financial investments, the costs may be reduced by selecting more reliable data sources. For example, panel services may provide respondents with higher quality survey experiences (e.g. Mavletova 2013; Pedersen and Nielsen 2016), and so investigating their influence on the probability of survey completion has direct benefits for marketing firms. It is not necessarily the best strategy to use multiple sources, particularly if they are unable to provide the highest quality sample regarding the topic of research. Further, these sources can also be tested in an initial phase of the data collection period.

Moreover, the demographic characteristics of respondents played only a minor role in affecting the completion rates of online surveys. In most cases, neither age nor gender had a significant effect on the completion rates of surveys. Therefore, our prediction that younger participants are more likely to finish surveys (e.g. Van Selm and Jankowski 2006, but see also Saleh and Bista 2017) was only partially supported. We found some support for this prediction in Dataset 3, where both younger and older people were more likely to complete surveys than middle-aged respondents. Meanwhile, women and men never differed in their probability of completing online surveys. The absence of sex-specific completion rates may reflect the absence of sex-specific familiarity levels with mobile devices and online technologies.

Finally, incentives are proven to be important determinants of high survey completion probabilities (e.g. Deutskens et al. 2004; Van Selm and Jankowski 2006; LaRose and Tsai 2014; Pedersen and Nielsen 2016; Kato and Miura 2021; Sammut et al. 2021; Pan et al. 2022). Unfortunately, we did not have the chance to test their effect in our datasets. Nevertheless, we suggest that further studies focus on data quality because that ultimately encourages respondents to provide more accurate answers.

Conclusions

In summary, we conclude that survival analysis and proportional hazards models are suitable tools for investigating the probability of online surveys being completed by respondents. These analyses are relatively easy to perform and can provide useful information for survey optimisation. Such optimisation will not only directly increase the completion probability of online surveys, but also decrease the costs of the data collection phase, which can be one of the most expensive parts of the research process. However, high data quality is necessary to make decisions, and can also influence the cost–benefit ratio of online data collection.
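To illustrate how little is needed to get started, the following is a minimal sketch of a Kaplan-Meier estimator (Kaplan and Meier 1958) written in plain Python. It is not the code used in this study: the function name, the toy data, and the choice to use the last question reached as the time axis and to treat respondents who finished the survey as censored are all assumptions made for the example.

```python
from collections import Counter


def kaplan_meier(last_question, dropped_out):
    """Return [(question, survival_probability)] at each question with a drop-out.

    last_question: last question index each respondent reached (the "time" axis).
    dropped_out:   True if the respondent quit there (event); False if the
                   respondent finished the survey (assumed censored in this sketch).
    """
    events = Counter(t for t, d in zip(last_question, dropped_out) if d)
    leaving = Counter(last_question)  # everyone who leaves the risk set at t
    at_risk = len(last_question)
    survival = 1.0
    curve = []
    for t in sorted(leaving):
        if events[t]:
            # Multiply by the share of at-risk respondents who did not quit at t.
            survival *= 1.0 - events[t] / at_risk
            curve.append((t, survival))
        at_risk -= leaving[t]
    return curve


# Hypothetical toy data: a 10-question survey answered by 8 respondents.
last_question = [1, 1, 3, 5, 10, 10, 10, 10]
dropped_out = [True, True, True, True, False, False, False, False]

curve = kaplan_meier(last_question, dropped_out)
for question, prob in curve:
    print(f"still responding after question {question}: {prob:.3f}")
```

Running the same function separately for each device type, browser, or language would give one curve per group, which can then be compared visually or with a formal test.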

Limitations and further research directions

Our findings should be interpreted in light of some limitations. For example, we did not have information on the demographic characteristics of many of the respondents in Dataset 1, since the survey asked for demographic characteristics towards the end, when many respondents had already quit. Including demographic variables can influence the model results, as demonstrated for Datasets 2 and 3, and so the lack of those variables in Dataset 1 is a limitation. Therefore, including the demographic section at the beginning of surveys would greatly increase the probability of that data being collected.

Meanwhile, a substantial proportion of interviews in Datasets 2 and 3 remained on question one and never went on to the second question. Whilst we are unsure why this pattern occurred, comparing multiple data collection platforms and using more elaborate invitation processes may help to increase survey completion rates.

Furthermore, our study is based on several surveys, yet these are not representative of the full population of the large number of ongoing surveys. We believe that the surveys we analysed cover the most typical characteristics of regular surveys, and that the results are therefore useful. Nevertheless, meta-analytic research based on systematic and balanced designs may yield more accurate results. For instance, periodically repeated surveys with the same or slightly modified question sets, or experiments incorporating alternative versions of questions (e.g. text vs. media content shown in two separate groups of respondents), could reveal additional factors influencing completion rates beyond our findings.
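A two-group experiment of the kind described above (e.g. text vs. media versions) could be analysed with a log-rank test, a standard companion to survival curves. The sketch below is a plain-Python illustration and not an analysis performed in this study; the function name, the group labels, and the toy data are hypothetical, and respondents who finished the survey are again assumed to be censored.

```python
import math


def logrank_test(times_a, events_a, times_b, events_b):
    """Two-group log-rank test; returns (chi_square, p_value) with 1 df.

    times_*:  last question reached by each respondent in the group.
    events_*: True if the respondent dropped out there, False if censored.
    """
    data = [(t, e, 0) for t, e in zip(times_a, events_a)]
    data += [(t, e, 1) for t, e in zip(times_b, events_b)]
    observed_a = expected_a = variance = 0.0
    for t in sorted({t for t, e, _ in data if e}):
        n = sum(1 for u, _, _ in data if u >= t)               # at risk, both groups
        n_a = sum(1 for u, _, g in data if u >= t and g == 0)  # at risk, group A
        d = sum(1 for u, e, _ in data if u == t and e)         # drop-outs at t
        d_a = sum(1 for u, e, g in data if u == t and e and g == 0)
        observed_a += d_a
        expected_a += d * n_a / n
        if n > 1:
            # Hypergeometric variance of the number of group-A drop-outs at t.
            variance += d * (n_a / n) * (1 - n_a / n) * (n - d) / (n - 1)
    if variance == 0:
        return 0.0, 1.0
    chi_square = (observed_a - expected_a) ** 2 / variance
    # Upper tail of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi_square / 2))
    return chi_square, p_value


# Hypothetical toy data: group A saw a text version, group B a media version.
text_times, text_events = [10] * 10, [False] * 10   # all finished (censored)
media_times, media_events = [2] * 10, [True] * 10   # all quit at question 2

chi_square, p_value = logrank_test(text_times, text_events, media_times, media_events)
print(f"chi-square = {chi_square:.2f}, p = {p_value:.5f}")
```

With more than two versions, or when other covariates need to be controlled for, a proportional hazards model would be the more appropriate tool.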

As incentives are divisive, but certainly have a huge influence on the completion rates of online surveys, studies using different incentives could benefit from the application of the survival analysis proposed here to identify the rewards that most strongly encourage respondents to complete online surveys.

Data availability

The authors were given permission to analyse the data, but not to provide it in a publicly available space. However, please contact the corresponding author to request access to the data set.

References

Couper, M.P. 2000. Web surveys: A review of issues and approaches. The Public Opinion Quarterly 64 (4): 464–494.

Cox, D.R. 1972. Regression models and life-tables. Journal of the Royal Statistical Society: Series B 34 (2): 187–202.

Daniel, D.S., M. Baillie, S. Haesendonckx, and T. Treis. 2023. ggsurvfit: Flexible time-to-event figures. https://CRAN.R-project.org/package=ggsurvfit.

Daniel, D.S., K. Whiting, M. Curry, J.A. Lavery, and J. Larmarange. 2021. Reproducible summary tables with the gtsummary package. The R Journal 13 (1): 570–580.

Deutskens, E., K. De Ruyter, M. Wetzels, and P. Oosterveld. 2004. Response rate and response quality of internet-based surveys: An experimental study. Marketing Letters 15 (1): 21–36.

Fan, W., and Z. Yan. 2010. Factors affecting response rates of the web survey: A systematic review. Computers in Human Behavior 26 (2): 132–139.

Graddol, D. 2003. The decline of the native speaker. In Translation today: Trends and perspectives, ed. G. Anderman and M. Rogers, 152–167. Multilingual Matters.

Hazra, A., and N. Gogtay. 2017. Biostatistics series module 9: Survival analysis. Indian Journal of Dermatology 62 (3): 251–257.

Hoffman, J.I.E. 2019. Chapter 35—Survival analysis. In Basic biostatistics for medical and biomedical practitioners (2nd edition), ed. J.I.E. Hoffman, 599–619. Academic Press.

James, G., D. Witten, T. Hastie, and R. Tibshirani. 2021. Survival analysis and censored data. In An introduction to statistical learning with applications in R. Springer texts in statistics, ed. G. James, D. Witten, T. Hastie, and R. Tibshirani, 461–495. New York: Springer.

Kaplan, E.L., and P. Meier. 1958. Nonparametric estimation from incomplete observations. Journal of the American Statistical Association 53 (282): 457–481.

Kartsonaki, C. 2016. Survival analysis. Diagnostic Histopathology 22 (7): 263–270.

Kato, T., and T. Miura. 2021. The impact of questionnaire length on the accuracy rate of online surveys. Journal of Marketing Analytics 9 (2): 83–98.

LaRose, R., and H.S. Tsai. 2014. Completion rates and non-response error in online surveys: Comparing sweepstakes and pre-paid cash incentives in studies of online behavior. Computers in Human Behavior 34: 110–119.

Liu, M., and L. Wronski. 2018. Examining completion rates in web surveys via over 25,000 real-world surveys. Social Science Computer Review 36 (1): 116–124.

Mavletova, A. 2013. Data quality in PC and mobile web surveys. Social Science Computer Review 31 (6): 725–743.

Motta, M.P., T.H. Callaghan, and B. Smith. 2017. Looking for answers: Identifying search behavior and improving knowledge-based data quality in online surveys. International Journal of Public Opinion Research 29 (4): 575–603.

Pan, B. 2010. Online travel surveys and response patterns. Journal of Travel Research 49 (1): 121–135.

Pan, B., W.W. Smith, S.W. Litvin, Y. Yuan, and A. Woodside. 2022. Ethnic bias and design factors impact response rates of online travel surveys. Journal of Global Scholars of Marketing Science 32 (2): 129–144.

Pedersen, T. 2022. Patchwork: The composer of plots. https://CRAN.R-project.org/package=patchwork.

Pedersen, M.J., and C.V. Nielsen. 2016. Improving survey response rates in online panels: Effects of low-cost incentives and cost-free text appeal interventions. Social Science Computer Review 34 (2): 229–243.

R Core Team. 2022. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/.

Rao, P.S. 2019. The role of English as a global language. Research Journal of English 4 (1): 65–79.

Saleh, A., and K. Bista. 2017. Examining factors impacting online survey response rates in educational research: Perceptions of graduate students. Journal of MultiDisciplinary Evaluation 13 (29): 63–74.

Sammut, R., O. Griscti, and I.J. Norman. 2021. Strategies to improve response rates to web surveys: A literature review. International Journal of Nursing Studies 123: 104058.

Thayer, S.E., and S. Ray. 2006. Online communication preferences across age, gender, and duration of internet use. CyberPsychology & Behavior 9 (4): 432–440.

Therneau, T.M. 2022. A package for survival analysis in R. https://CRAN.R-project.org/package=survival.

Tourangeau, R., A. Maitland, G. Rivero, H. Sun, D. Williams, and T. Yan. 2017. Web surveys by smartphone and tablets: Effects on survey responses. Public Opinion Quarterly 81 (4): 896–929.

Van Selm, M., and N.W. Jankowski. 2006. Conducting online surveys. Quality and Quantity 40: 435–456.

Wright, B., and P.H. Schwager. 2008. Online survey research: Can response factors be improved? Journal of Internet Commerce 7 (2): 253–269.

Zhang, Y. 2005. Age, gender, and internet attitudes among employees in the business world. Computers in Human Behavior 21 (1): 1–10.

Zickuhr, K., and M. Madden. 2012. Older adults and internet use. Pew Internet & American Life Project 6: 1–23.

Acknowledgements

We are grateful to the market research agency, who wished to remain anonymous during this project, for providing us with access to their datasets, and DataExpert Services Ltd, who participated in data collection.

Funding

Open access funding provided by University of Debrecen.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Münnich, Á., Kocsis, M., Mainwaring, M.C. et al. Interview completed: the application of survival analysis to detect factors influencing response rates in online surveys. J Market Anal (2024). https://doi.org/10.1057/s41270-023-00282-y

DOI: https://doi.org/10.1057/s41270-023-00282-y