Abstract

We study the stability of accuracy during the training of deep neural networks (DNNs). In this context, the training of a DNN is performed via the minimization of a cross-entropy loss function, and the performance metric is accuracy (the proportion of objects that are classified correctly). While training results in a decrease of loss, the accuracy does not necessarily increase during the process and may sometimes even decrease. The goal of achieving stability of accuracy is to ensure that if accuracy is high at some initial time, it remains high throughout training. A recent result by Berlyand, Jabin, and Safsten introduces a doubling condition on the training data, which ensures the stability of accuracy during training for DNNs using the absolute value activation function. For training data in \(\mathbb {R}^n\), this doubling condition is formulated using slabs in \(\mathbb {R}^n\) and depends on the choice of the slabs. The goal of this paper is twofold. The first goal is to make the doubling condition uniform, that is, independent of the choice of slabs. This leads to sufficient conditions for stability in terms of the training data only. In other words, for a training set \(T\) that satisfies the uniform doubling condition, there exists a family of DNNs such that a DNN from this family with high accuracy on the training set at some training time \(t_0\) will have high accuracy for all times \(t>t_0\). Moreover, establishing uniformity is necessary for the numerical implementation of the doubling condition. We demonstrate how to numerically implement a simplified version of this uniform doubling condition on a dataset and apply it to achieve stability of accuracy using a few model examples. The second goal is to extend the original stability results from the absolute value activation function to a broader class of piecewise linear activation functions with finitely many critical points, such as the popular Leaky ReLU.
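The claim that loss can decrease while accuracy also decreases can be illustrated with a small two-class toy computation. This is only a sketch: the probabilities below are made-up numbers, not data from the paper, and the helper names `cross_entropy` and `accuracy` are hypothetical.

```python
import math

def cross_entropy(p_correct):
    """Mean cross-entropy, given the probability assigned to each object's true class."""
    return -sum(math.log(p) for p in p_correct) / len(p_correct)

def accuracy(p_correct):
    """Two-class accuracy: an object is classified correctly iff its true class gets p > 1/2."""
    return sum(p > 0.5 for p in p_correct) / len(p_correct)

# Illustrative (assumed) probabilities of the true class for four objects.
# At t0: three objects barely correct, one badly misclassified.
p_t0 = [0.51, 0.51, 0.51, 0.01]
# At t1: the bad object is fixed, but the three marginal ones slip below 1/2.
p_t1 = [0.49, 0.49, 0.49, 0.90]

loss_t0, acc_t0 = cross_entropy(p_t0), accuracy(p_t0)
loss_t1, acc_t1 = cross_entropy(p_t1), accuracy(p_t1)

print(f"t0: loss={loss_t0:.3f}, accuracy={acc_t0:.2f}")
print(f"t1: loss={loss_t1:.3f}, accuracy={acc_t1:.2f}")
```

In this toy case the loss drops because one very poorly classified object improves a lot, while several objects that were correct with a small margin cross the decision boundary. Excluding this kind of configuration is what a condition on the training data is meant to achieve.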

Code Availability

The code in this work is available and will be provided upon request.

References

LeCun, Y., Boser, B., Denker, J., Henderson, D., Howard, R., Hubbard, W., Jackel, L.: Handwritten digit recognition with a back-propagation network. Adv. Neural Inf. Process. Syst. 2 (1989)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. Commun. ACM 60(6), 84–90 (2017)

Hinton, G., Deng, L., Yu, D., Dahl, G.E., Mohamed, A.-R., Jaitly, N., Senior, A., Vanhoucke, V., Nguyen, P., Sainath, T.N., et al.: Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 29(6), 82–97 (2012)

Sutskever, I., Vinyals, O., Le, Q.V.: Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 27 (2014)

Berlyand, L., Jabin, P.-E., Safsten, A.: Stability for the training of deep neural networks and other classifiers. Math. Models Methods Appl. Sci. 31(11), 2345–2390 (2021)

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press (2016)

Soudry, D., Hoffer, E., Nacson, M.S., Gunasekar, S., Srebro, N.: The implicit bias of gradient descent on separable data. J. Mach. Learn. Res. 19(1), 2822–2878 (2018)

Shalev-Shwartz, S., Singer, Y., Srebro, N.: Pegasos: Primal estimated sub-gradient solver for SVM. In: Proceedings of the 24th International Conference on Machine Learning, pp. 807–814 (2007)

Zhang, C., Bengio, S., Hardt, M., Recht, B., Vinyals, O.: Understanding deep learning (still) requires rethinking generalization. Commun. ACM 64(3), 107–115 (2021)

Ma, S., Bassily, R., Belkin, M.: The power of interpolation: Understanding the effectiveness of SGD in modern over-parametrized learning. In: International Conference on Machine Learning, pp. 3325–3334. PMLR (2018)

Kawaguchi, K., Kaelbling, L.P., Bengio, Y.: Generalization in deep learning. arXiv:1710.05468 (2017)

Cohen, O., Malka, O., Ringel, Z.: Learning curves for overparametrized deep neural networks: A field theory perspective. Phys. Rev. Res. 3(2), 023034 (2021)

Xu, Y., Li, Y., Zhang, S., Wen, W., Wang, B., Dai, W., Qi, Y., Chen, Y., Lin, W., Xiong, H.: Trained rank pruning for efficient deep neural networks. In: 2019 Fifth Workshop on Energy Efficient Machine Learning and Cognitive Computing-NeurIPS Edition (EMC2-NIPS), pp. 14–17. IEEE (2019)

Yang, H., Tang, M., Wen, W., Yan, F., Hu, D., Li, A., Li, H., Chen, Y.: Learning low-rank deep neural networks via singular vector orthogonality regularization and singular value sparsification. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 678–679 (2020)

Xue, J., Li, J., Gong, Y.: Restructuring of deep neural network acoustic models with singular value decomposition. In: Interspeech, pp. 2365–2369 (2013)

Cai, C., Ke, D., Xu, Y., Su, K.: Fast learning of deep neural networks via singular value decomposition. In: Pacific Rim International Conference on Artificial Intelligence, pp. 820–826. Springer (2014)

Anhao, X., Pengyuan, Z., Jielin, P., Yonghong, Y.: SVD-based DNN pruning and retraining. J. Tsinghua Univ. Sci. Technol. 56(7), 772–776 (2016)

Berlyand, L., Sandier, E., Shmalo, Y., Zhang, L.: Enhancing accuracy in deep learning using random matrix theory. arXiv:2310.03165 (2023)

Shmalo, Y., Jenkins, J., Krupchytskyi, O.: Deep learning weight pruning with RMT-SVD: Increasing accuracy and reducing overfitting. arXiv:2303.08986 (2023)

Staats, M., Thamm, M., Rosenow, B.: Boundary between noise and information applied to filtering neural network weight matrices. Phys. Rev. E 108, L022302 (2023)

Gavish, M., Donoho, D.L.: The optimal hard threshold for singular values is \(4/\sqrt{3}\). IEEE Trans. Inf. Theory 60(8), 5040–5053 (2014)

Goodfellow, I.J., Shlens, J., Szegedy, C.: Explaining and harnessing adversarial examples. arXiv:1412.6572 (2014)

Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I., Fergus, R.: Intriguing properties of neural networks. arXiv:1312.6199 (2013)

Zheng, S., Song, Y., Leung, T., Goodfellow, I.: Improving the robustness of deep neural networks via stability training. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4480–4488 (2016)

Thulasidasan, S., Chennupati, G., Bilmes, J.A., Bhattacharya, T., Michalak, S.: On mixup training: Improved calibration and predictive uncertainty for deep neural networks. Adv. Neural Inf. Process. Syst. 32 (2019)

Acknowledgements

I am very grateful to my advisor Dr. Berlyand for the formulation of the problem and many useful discussions and suggestions. I am also grateful to Dr. Jabin, Dr. Sodin, Dr. Golovaty, Alex Safsten, Oleksii Krupchytskyi, and Jon Jenkins for their useful discussions and suggestions that led to the improvement of the manuscript. Your help and time are truly appreciated. Finally, I would like to express my sincere gratitude to the referees who provided detailed feedback and valuable insights, which not only helped to improve the quality of this work but also deepened my understanding of the topic.

Ethics declarations

Competing interests

I hereby declare that I have no competing interests or financial interests.

Appendix A

1.1 Proof of Lemma 6.2

Suppose \(\mu (P_1) \ne \varnothing \). If either \((1+\sigma )\,\mu (\varepsilon \,\bar{S})\geqslant \delta \) or \((1+\sigma )\,\mu (\varepsilon \,\bar{S}')\geqslant \delta \), we are done.

If instead both \( (1+\sigma )\,\mu (\varepsilon \,\bar{S})<\delta \) and \( (1+\sigma )\,\mu (\varepsilon \,\bar{S}')<\delta \), then

Here we use an averaging trick to deal with the complication that we have only one \(S_\varepsilon ^0\) and one \(S_{\kappa \varepsilon }^0\). Applying (42) and (43) then proves the doubling condition with \(\sigma '=\sigma /2\). That is, for \(\varepsilon =\ell _S \kappa ^i< \beta \) we have

The critical point of \(\lambda _1\) at \(x_1=0\) would then be the only complicated part of \(\lambda _1\).\(\square \)
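To make the quantities in this proof more concrete, the following is a minimal sketch of how a doubling-type inequality could be checked on a finite dataset for one fixed slab. It rests on several assumptions that are not taken from the paper: \(\mu\) is the empirical measure of the data, the slab is \(\{x : |\langle v, x\rangle - c| \leqslant \varepsilon \}\), and the inequality tested is the simplified form \(\mu (\kappa \varepsilon S)\geqslant \min \{\delta , (1+\sigma )\mu (\varepsilon S)\}\) along the scales \(\varepsilon =\ell \kappa ^i<\beta \). The function names `slab_mass` and `doubling_holds` are hypothetical.

```python
def slab_mass(points, normal, center, half_width):
    """Empirical measure of the slab {x : |<normal, x> - center| <= half_width}."""
    inside = sum(
        abs(sum(n * x for n, x in zip(normal, p)) - center) <= half_width
        for p in points
    )
    return inside / len(points)

def doubling_holds(points, normal, center, ell, kappa, sigma, delta, beta):
    """Check the assumed inequality mu(kappa*eps*S) >= min(delta, (1+sigma)*mu(eps*S))
    for every scale eps = ell * kappa**i with eps < beta."""
    eps = ell
    while eps < beta:
        small = slab_mass(points, normal, center, eps)
        large = slab_mass(points, normal, center, kappa * eps)
        if large < min(delta, (1 + sigma) * small):
            return False
        eps *= kappa
    return True

# Assumed toy data in R^2: evenly spread points versus a small cluster with a wide gap.
grid = [(i / 10, 0.0) for i in range(-10, 11)]
gapped = [(0.0, 0.0)] * 2 + [(5.0, 0.0)] * 9 + [(-5.0, 0.0)] * 9

params = dict(normal=(1.0, 0.0), center=0.0, ell=0.125, kappa=2.0,
              sigma=0.5, delta=0.5, beta=1.0)
grid_ok = doubling_holds(grid, **params)
gapped_ok = doubling_holds(gapped, **params)
print(grid_ok, gapped_ok)
```

The evenly spread data gains mass each time the slab is widened, while the gapped data does not: widening the slab around the small cluster captures no new points, so the inequality fails at the first scale. A uniform version of the condition would have to hold over a whole family of slabs rather than the single one fixed here.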

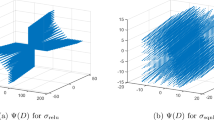

Classification confidence for the toy DNN in Example 2.1 at different stages of training

1.2 Proof of Lemma 6.5: upper bound on the loss

Proof

We know the DNN \(\phi \) is of the form

Thus, (63) gives

For each \(k\ne i(s)\), \(X_k(s,\alpha )-X_{i(s)}(s,\alpha )\leqslant \max _{k\ne i(s)} X_k(s,\alpha )- X_{i(s)}(s,\alpha )=-\delta X(s,\alpha )\). Using (6) and (64), we obtain the following estimates on \(p_{i(s)}(s,\alpha )\):

Finally, (65) gives estimates on loss:

From (66) we get

\(\square \)
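The mechanism behind this proof, bounding the probability of the correct class and hence the loss through the margin \(\delta X(s,\alpha )\), can be checked numerically. The sketch below assumes that (6) is the usual softmax and that \(\delta X = X_{i(s)} - \max _{k\ne i(s)} X_k\), as in the inequality above. The bounds it tests are the standard softmax estimates for \(K\) classes, \(1/(1+(K-1)e^{-\delta X}) \leqslant p_{i(s)} \leqslant 1/(1+e^{-\delta X})\), which are not claimed to match (65) and (66) term by term. All function names are hypothetical.

```python
import math
import random

def softmax(x):
    """Numerically stable softmax of a list of scores."""
    m = max(x)
    e = [math.exp(v - m) for v in x]
    z = sum(e)
    return [v / z for v in e]

def margin(x, i):
    """delta X: score of class i minus the largest score among the other classes."""
    return x[i] - max(v for k, v in enumerate(x) if k != i)

def bounds_hold(x, i, tol=1e-12):
    """Check the assumed margin bounds on p_i and the resulting upper bound on -log p_i."""
    K = len(x)
    d = margin(x, i)
    p = softmax(x)[i]
    lower = 1.0 / (1.0 + (K - 1) * math.exp(-d))
    upper = 1.0 / (1.0 + math.exp(-d))
    loss_upper = math.log(1.0 + (K - 1) * math.exp(-d))
    return lower - tol <= p <= upper + tol and -math.log(p) <= loss_upper + tol

# Random scores for K = 4 classes, with class 0 playing the role of i(s).
random.seed(0)
all_ok = all(
    bounds_hold([random.uniform(-5, 5) for _ in range(4)], i=0)
    for _ in range(1000)
)
print(all_ok)
```

The lower bound on \(p_{i(s)}\) follows from replacing every term \(e^{X_k - X_{i(s)}}\) with \(k\ne i(s)\) by its maximum \(e^{-\delta X}\); the upper bound keeps only that maximal term. Taking \(-\log \) of the lower bound gives the upper bound on the per-object loss.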

About this article

Cite this article

Shmalo, Y. Stability of accuracy for the training of DNNs via the uniform doubling condition. Ann Math Artif Intell 92, 439–483 (2024). https://doi.org/10.1007/s10472-023-09919-1

DOI: https://doi.org/10.1007/s10472-023-09919-1

Keywords

- Deep Neural Networks (DNNs)

- Training stability

- Cross-entropy loss function

- Accuracy in machine learning

- Doubling condition

- Training data