Abstract

State preparation is a necessary component of many quantum algorithms. In this work, we combine a method for efficiently representing smooth differentiable probability distributions using matrix product states with recently discovered techniques for initializing quantum states to approximate matrix product states. Using this, we generate quantum states encoding a class of normal probability distributions in a trapped ion quantum computer for up to 20 qubits. We provide an in depth analysis of the different sources of error which contribute to the overall fidelity of this state preparation procedure. Our work provides a study in quantum hardware for scalable distribution loading, which is the basis of a wide range of algorithms that provide quantum advantage.

Similar content being viewed by others

Introduction

Quantum state preparation is an important step in many quantum algorithms such as Monte-Carlo methods for quantum computers1, quantum algorithms for systems of linear equations2, various quantum machine learning algorithms3,4 and Hamiltonian simulation5,6 which are expected to have broad applications in a variety of fields. In order for these algorithms to provide quantum advantage, this state preparation procedure must be performed efficiently and with sufficiently low noise.

Previously, in the literature, many near-term techniques have been proposed to realize quantum state preparation, including numerical integration7,8 and quantum generative adversarial networks9,10. Reference 8,9,10 also experimentally implemented their state preparation protocols. However, there is a lack of study into their efficiency. There have also been fault-tolerant proposals for quantum state preparation11,12,13,14, with theoretical guarantees in terms of convergence, circuit depth, and the number of ancillas. However, due to their high resource requirements, these techniques cannot be realized experimentally on near-term devices. On the other hand, refs. 15,16 considered black-box state preparation which assumes the existence of an oracle that produces target coefficients. This unfortunately does not allow an end-to-end implementation.

In this work, we make use of matrix product states (MPS) which are an interesting class of quantum wave functions whose probability amplitudes can be described using a specific tensor structure. MPS based algorithms are some of the most powerful classical numerical techniques for manipulating large scale quantum states17. These states were originally studied in the context of the density matrix renormalization group algorithm18,19 and used mainly for simulating the ground states of local many-body quantum systems. The key property is that the amount of entanglement is the relevant quantity which controls the efficiency of the MPS representation20,21. Since their initial discovery, these tensor network structures and their associated algorithms have grown in popularity and have been applied to many different areas of quantum information theory22,23.

In ref. 24, it was realized that when the amplitudes of a quantum state can be described by a smooth function, the entanglement of the state grows very slowly with increasing qubit number. These properties of MPS states and smooth wave functions were used in ref. 25, to describe an efficient method of approximating probability distributions using MPS and quantum circuits with a single layer of two-qubit entangling unitaries. Recently, efforts have been made to prepare matrix product state wave functions to greater accuracy with more general short-depth quantum circuits. In particular, ref. 26 developed an iterative algorithm which can approximate high bond dimension MPS states using short-depth circuits composed of two-qubit unitary gates.

In this paper, we propose and experimentally demonstrate preparation of quantum states which encode normal distributions as amplitudes. Importantly, we do a detailed analysis of the theoretical and experimental error of our protocol. Our general procedure involves several steps. First we approximate the normal distribution with a low degree piece-wise polynomial function given by the Irwin-Hall distributions. We then use the procedure given in ref. 27 to exactly prepare a low bond dimension matrix product state which encodes this piece-wise polynomial distribution. Next, use the iterative MPS loading procedure described in ref. 26 to create a controlled low depth quantum circuit which prepares an approximation to the MPS circuit. Finally, we execute the circuit and measure the resulting probability distribution.

The rest of this paper is organized as follows. In section “Quantum State Preparation” to “Distance Measures and Statistical Analysis”, we review the matrix product state formalism and the iterative method which allows us to prepare MPS states with low depth quantum circuits. In section “Irwin-Hall error analysis” to “Resource Estimations” we provide a rigorous theoretical analysis of the sources of error contributing to the difference between the ideal probability distribution and the one produced by our procedure. In section “Applicability to Monte Carlo Integration”, we analyze the applicability to Monte Carlo integration and discuss the limitations in asymptotic scaling in terms of the error tolerance. In section “Experimental Demonstration”, we show the result of applying our state preparation procedure to prepare quantum wave functions on up to 20 qubits using a state-of-the-art trapped ion quantum computer. Finally, we conclude by summarizing our results and discussing potential applications of this work in section “Discussion”.

Results

Quantum state preparation

The main objective of the state preparation procedure is to generate a quantum circuit which initializes a wavefunction on N qubits,

whose amplitudes cx are set to a specified value. It is known that in general, such a circuit must include a number of gates that scales exponentially with N28. This is true even if the goal is to only approximately prepare a state \(\left\vert {{{\Psi }}}^{{\prime} }\right\rangle\) to some fixed precision ϵ such that \(| | \left\vert {{{\Psi }}}^{{\prime} }\right\rangle -\left\vert {{\Psi }}\right\rangle | | \le \epsilon\), where ∥ ⋅ ∥ is understood as the L2 norm.

However, if we restrict ourselves to certain families of wave functions with specific structure, it becomes possible to efficiently prepare the state with a circuit which contains only a number of quantum gates that scales polynomially with N. For instance, Grover and Rudolf7 gave a procedure for efficiently preparing wavefunctions whose amplitudes are described by an efficiently integrable probability distribution. However, ref. 29 pointed out that the Grover-Rudolf method does not give the quadratic speedup in quantum Monte-Carlo algorithm when classical Monte-Carlo integration is used to determine the gate angles in the state preparation circuit. Reference30 presented a quantum algorithm to efficiently prepare normal distributions using Mid-Circuit Measurement and Reuse. Reference12 presented a quantum algorithm that prepares any N-qubit quantum state with Θ(N)-depth circuit, with an exponential amount of ancillary qubits. Reference14 described a state preparation algorithm that uses quantum eigenvalue transformation, and obtains promising gate complexity. However, the actual Toffoli gates count of O(104) means it will remain unreachable in the near future.

In ref. 25, it was shown that if the probability distribution to be encoded is a smooth differentiable function, then there exists an efficient preparation scheme based on an encoding of the probability distribution as a matrix product state tensor network. Matrix product state wave functions are a class of quantum states on which efficient classical computations can be performed even when the number of qubits in the system is large. As we will describe in this section, there exist efficient methods of generating MPS representations of low degree polynomial functions, as well as methods for approximately preparing MPS wave functions using low depth quantum circuits. In this work, we use this encoding scheme to prepare states which approximate normal probability distributions. With this technique, we are also able to make use of the well known family of piece-wise polynomial functions known as the Irwin-Hall distributions which approximate the normal distribution to arbitrary accuracy.

MPS formalism

A Matrix Product State (MPS) is a wave function of the form

where the terms \({M}_{{\alpha }_{i-1},{\alpha }_{i}}^{[i],{\sigma }_{i}}\) are N different 3-index tensors, and we use the Einstein summation convention that repeated indices are summed over. Each tensor contains a ‘physical’ index σi ∈ [1,d], and ‘bond’ indices αi ∈ [1,χ]17. Here, d is the local dimension of the quantum state, so that d = 2 for qubits.

We call the maximum value of the bond index, χ, the bond dimension of the MPS. Wave functions which can be represented by a bond dimension χ MPS can be completely defined using only d*N matrices of dimension χ × χ and therefore can be stored using only \({d*N*{\chi}^{2}}\) complex numbers instead of storing all 2N complex amplitudes directly.

Only a small subset of wave functions can be represented exactly with a finite bond dimension MPS. In particular, MPS wave functions are very good approximations for quantum states with low entanglement. The entanglement entropy, \(S=-Tr[{\rho }_{A}\log {\rho }_{A}]\), of a MPS wave function with fixed bond dimension χ is bounded by \(S\le \log (\chi )\).

In general, if we let χ = 2N, we can represent any wavefunction \(\left\vert \psi \right\rangle\) using the MPS form given by Eq (2). One of the most important features of MPS is the ability to compress such a wavefunction in a controlled manner to create a fixed small χ approximation to \(\left\vert \psi \right\rangle\). The most straightforward approach to this is the singular value decomposition (SVD) compression scheme, described in ref. 17. We start with the wave function whose coefficients are given by the N component tensor \({\psi }_{{\sigma }_{1},{\sigma }_{2},\ldots ,{\sigma }_{N}}\) such that

This tensor is reshaped into a rectangular matrix \({\psi }_{{\sigma }_{1},({\sigma }_{2}\ldots {\sigma }_{N})}\). A SVD on this matrix allows us to write

We restrict the sum to include only the largest m singular values of the diagonal matrix S. We can now reshape the remaining tensor to form the matrix \({\psi }_{({a}_{1}{\sigma }_{2}),({\sigma }_{3}\ldots {\sigma }_{N})}\). A SVD is applied to this matrix and the process is repeated for all qubits i, resulting in the decomposition given above, where each matrix \(U={M}_{{\alpha }_{i},{\alpha }_{i+1}}^{[i],{\sigma }_{i}}\). For matrix dimension (m × n) with m > n, the cost of the SVD is \(\tilde{O}(m{n}^{2})\), which implies that the SVD costs \({{{\mathcal{O}}}}(N{\chi }^{2}{\chi }^{{\prime} })\) when truncating from bond dimension χ to bond dimension \({\chi }^{{\prime} }\). There also exist more complex compression techniques17 which may be more effective in certain cases. For example, the iterative variational compression algorithm which solves a series of \({\chi }^{{\prime} 2}\times {\chi }^{{\prime} 2}\) linear equations which depends only on the truncated bond dimension \({{{\mathcal{O}}}}({\chi }^{{\prime} })\). Although deriving these equation involves contracting over the original bonds of size χ, which has cost \({{{\mathcal{O}}}}({\chi }^{{\prime} }{\chi }^{2})\), this method can lead to a large practical speedup in certain situations.

For each matrix, the so called truncation error is given by the sum of the squares of the discarded singular values \({\epsilon }^{2}=\mathop{\sum }\nolimits_{i = m+1}^{{2}^{N}}{\lambda }_{i}^{2}\), and controls the fidelity of this compression method17. For a state \(\left\vert \psi \right\rangle\) and allowed error ϵ, we say that it can be approximately represented as a MPS if, for arbitrary N, there exists a fixed χ MPS, \(\left\vert \tilde{\psi }\right\rangle\) such that

In this case, efficient classical computations can be performed on \(\left\vert \tilde{\psi }\right\rangle\).

Smooth differentiable functions

It turns out that many states that are input to quantum algorithms inherently possess a low degree of entanglement and, therefore, can be represented using MPS. For example, common distributions used in Monte Carlo methods include the uniform distribution, the normal distribution, and the log-normal distribution.

Most importantly for our purposes, smooth differentiable real-valued functions which are appropriately encoded into the amplitude of quantum states satisfy this low entanglement property. Consider a normalized real smooth probability distribution f(x) defined on an interval [a, b]. A discretized version of this function can be encoded into the amplitudes of an N-qubit quantum register. Specifically, throughout this work, we use the big-endian binary encoding on the interval [a, b], such that

where h = (2N − 1)/(b − a) and k = k(σ) can be represented by a binary bit-string σ = σ1σ2…σN so that

Therefore, the discretized amplitude encoded wave function takes the form

where \(\left\vert k\right\rangle\) is the integer representation of the computational basis state \(\left\vert {{{\boldsymbol{\sigma }}}}\right\rangle\) in big-endian notation. For a fixed number of qubits N, the wave function \(\left\vert \psi \right\rangle\) encodes a discretized version of the probability distribution f(x), sampled at the discrete points xk.

Consider the effect of adding one additional qubit to the state \(\left\vert \psi \right\rangle\) so that \(\left\vert {\sigma }_{1}{\sigma }_{2}\ldots {\sigma }_{N}\right\rangle \to \left\vert {\sigma }_{1}{\sigma }_{2}\ldots {\sigma }_{N}{\sigma }_{N+1}\right\rangle\). Within the big-endian binary encoding scheme, adding one additional quantum register doubles the density of the discretized points in f(xk). Furthermore, since the added qubit encodes the least significant bit of k, the location of the previous 2N points is not changed by adding one more qubit to the system.

In ref. 24, the entanglement entropy of these discretized amplitude encoded wave functions was carefully analyzed. It was found that adding one additional qubit leads to only a small increase in the entanglement of the state \(\left\vert \psi \right\rangle\), and that this change is controlled by the maximum value of the derivative of f(x)

Specifically, adding one additional qubit increases the entanglement entropy S(ρ) of the final state as

Therefore, for each additional qubit, we add to our encoding, the discretization error is cut in half, but only a vanishingly small amount of entanglement is added to the system.

As shown in ref. 25, the slow scaling of the entanglement entropy with system size open up the possibility of efficiently representing wave functions of the form in Eq. (8) using MPS. For a given probability distribution f(x), we can create a MPS representation of \(\left\vert \psi \right\rangle\) by setting the elements of \({M}_{{\alpha }_{i},{\alpha }_{j}}^{{\sigma }_{j}}\) in Eq. (2) to appropriate values. The elements of M can be exactly determined by performing the repeated SVD on the wave function amplitudes \({\psi }_{{\sigma }_{1},{\sigma }_{2},\ldots {\sigma }_{N}}=f({x}_{(k({{{\boldsymbol{\sigma }}}}))})\). However, for large N, storing the tensor for original ψ becomes computationally intractable.

Instead, we can efficiently determine the elements of M for arbitrarily large N if our function f(xk) is a degree-p polynomial function \(f(x)=\mathop{\sum }\nolimits_{i = 0}^{p}{a}_{i}{x}_{i}\). In this case, ref. 27 explicitly gives the elements of the matrices M in terms of the coefficients ai. For this, first we define the auxiliary variables ti(σi), defined for a given bit-string k = k(σ).

Now we can write the elements of \({M}_{{\alpha }_{i},{\alpha }_{j}}^{[j]{\sigma }_{j}}\) as

where \({C}_{i}^{j}\) is the binomial coefficient and where all bond indices αi ∈ (0, …, p). These equations are directly adapted from Theorem 6 of ref. 27. Notice that for a degree-p polynomial, we must calculate only 2 × N × p2 coefficients, where half of these coefficients are zero, giving us an efficient representation of the function f(x) in the MPS representation.

Furthermore, in ref. 25, a method was presented for extending this encoding to piece-wise degree-p polynomial functions when the domain is a fraction of the full domain [a, b]. In this case, we wish to encode the function into 2k separate regions, where the function of the ℓth region is given by

To encode this function, we first represent fℓ(x) as an MPS on the full domain [a, b], using Eqs. (13)–(16), and then ‘zero out’ elements of the tensors \({M}_{{\alpha }_{i}{\alpha }_{j}}^{[j]{\sigma }_{j}}\) corresponding to regions outside the domain of region ℓ. To do this, first let ℓ be represented by a binary bit-string ℓ = b1b2…bk. Then, we set

All matrices \({M}_{{\alpha }_{i},{\alpha }_{j}}^{[j],{\sigma }_{j}}\) for j > k are left unchanged. In other words, if we replace all the matrices in Eq. (2), \({M}_{{\alpha }_{i},{\alpha }_{j}}^{[j],{\sigma }_{j}}\), with a zero matrix of equal size if bj ≠ σj, it will have the effect of setting all amplitudes outside the domain of region ℓ to zero.

Finally, we can put all these pieces together to write an efficient encoding scheme for representing a piece-wise degree-p polynomial function of 2k separate domains as a low bond dimension MPS state. For each sub-region on the full domain [a, b], we encode fℓ(x) into the MPS Mℓ. We then use the property that two MPS, M1 and M2 with bond dimensions χ and \({\chi }^{{\prime} }\) can be added together to form a MPS M3 = M1 + M2 of bond dimension \(\chi +{\chi }^{{\prime} }\). Therefore, we may add all MPS on the 2k sub-domains together to get a final representation \({{{{\bf{M}}}}}_{T}=\mathop{\sum }\nolimits_{\ell = 0}^{{2}^{k}}{M}_{\ell }\), which is a MPS of bond dimension 2k(p + 1). MT is an efficient approximation to the target quantum state which we wish to prepare using a quantum device. The procedure for generating MT is summarized in Algorithm 1.

Algorithm 1

MPS Encoding Procedure

Input: A degree-p piece-wise function \({f}_{\ell }(x)=\mathop{\sum }\nolimits_{j = 0}^{p}{a}_{j}^{(\ell )}{x}^{j}\). System size N. Domain [a,b]. Support bit k.

Output: A χ≤2k(p + 1) MPS, MT which encodes fℓ(x)

1: for ℓ ← 1 to 2k do

2: Encode fℓ(x) into Mℓ on domain [a,b]

3: Zero out Mℓ outside domain Dℓ

4: end for

5: return \({{{{\bf{M}}}}}_{T}\leftarrow \mathop{\sum }\nolimits_{\ell = 0}^{{2}^{k}}{{{{\bf{M}}}}}_{\ell }\)

Quantum circuits for MPS states

We now describe how we can generate low depth quantum circuits which prepare MPS wave functions. A bond dimension χ MPS state can be exactly created with a quantum circuit which is composed of N local unitary operators each acting on \(m=\log (\chi )+1\) qubits. While in principle this allows us to efficiently map a given MPS to a polynomial depth quantum circuit, the constant factor overhead of compiling arbitrary m-qubit quantum gates to a basic set of one and two-qubit gates quickly becomes infeasible for NISQ devices.

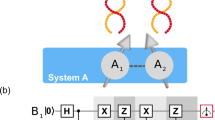

Consequently, a number of proposals for approximately generating bond dimension = χ MPS states using low depth quantum circuits have been put forward26,31,32,33,34. In this work, we take the iterative approach of ref. 26. In this method, a high bond dimension MPS wave function is iteratively approximated by applying D layers of local unitary operators. Each layer of gates is designed to prepare a χ = 2 MPS wave function and can therefore be efficiently prepared using only two-qubit unitary operators. The procedure for constructing the specific set two-qubit unitary operators which exactly prepares a χ = 2 MPS state is described in ref. 26, and results in a circuit architecture of the form shown in Fig. 1a. When several of these layers are combined together, a circuit in the form of Fig. 1b is able to approximately prepare a χ ≫ 2 MPS wave function. The key idea of the algorithm is that each layer of gates also acts as a “disentangler” operator which can be applied to the original wavefunction. The algorithm proceeds as follows

a A MPS state with all bond dimensions equal to 2 can exactly be contructed using (N-1) 2-qubit unitary operators plus one single qubit rotation, arranged in the pictured order. b A higher bond dimension MPS state can be approximately prepared by repeatedly applying single layer MPS approximations, following the construction of ref. 26. c If the entries of the MPS state are real, then all 2-qubit gates U are O(4) operators and can be implemented using single qubit rotations and only 2 CNOT gates if \(\det (U)=+1\), or (d) 2 CNOT gates plus a SWAP operator if \(\det (U)=-1\).

Algorithm 2

Iterative Circuit Preparation

Input: A target MPS function \(\left\vert {\psi }_{0}\right\rangle\)

Output: A quantum circuit Utot = U0U1…UD−1

1: for i ← 0 to D − 1 do

2: Truncate \(\left\vert {\psi }_{i}\right\rangle\) to form a bond dimension 2 MPS \(\left\vert {\tilde{\psi }}_{i}\right\rangle\)

3: Generate Ui s.t. \({U}_{i}\left\vert 0\right\rangle =\left\vert {\tilde{\psi }}_{i}\right\rangle\)

4: Generate \(\left\vert {\psi }_{i+1}\right\rangle ={U}_{i}^{{\dagger} }\left\vert {\psi }_{i}\right\rangle\)

5: end for

6: return Utot = U0U1…UD−1

The truncation procedure applied in Algorithm 2 is the SVD compression method described in section “MPS formalism”. Each layer of unitary gates, Ui approximately prepares the target wave function \(\left\vert {\psi }_{i-1}\right\rangle\). Therefore, the adjoint circuit layer \({U}_{i}^{{\dagger} }\) will approximately take the target \(\left\vert {\psi }_{i-1}\right\rangle\) to the product state \(\left\vert 0\right\rangle\). After each iteration, the entanglement of the wave function \(\left\vert {\psi }_{i}\right\rangle\) is therefore lower than the previous wave function \(\left\vert {\psi }_{i-1}\right\rangle\). In this way, the bond dimension two approximations \(\left\vert \tilde{\psi }\right\rangle\) become increasingly accurate. The final state we produce is

The fidelity of this state preparation procedure is given by the expression.

Therefore, the accuracy of this preparation method depends on the ability of the unitary operators to disentangle the wave function. In section “Distance Measures and Statistical Analysis”, we look at how the approximation error decreases as the number of layers increases for wave functions described by a normal distribution. For these wave functions, a small number of layers is generally sufficient to prepare a good approximate wave function. Therefore, using the techniques described in this section, we can efficiently create low depth quantum circuits which approximately prepare the target normal distribution wave functions on a large number of qubits. After executing this circuit, a projective measurement on all qubits generates a single bit-string, which can converted, using Eq. (6), to one of 2N discrete values on the interval [a, b]. Therefore, sampling many output bit-strings generated by the quantum circuit allows one to sample real numbers from the probability distribution encoded in the quantum wave function.

Irwin-Hall distribution

The Irwin-Hall distribution is the continuous probability distribution for the sum of n i.i.d. \({{{\mathcal{U}}}}(0,1)\) random variables,

Here \({{{\mathcal{U}}}}(0,1)\) is the uniform random variable over [0, 1].

The probability density function (pdf) is given by

where \({{{\rm{sgn}}}}(x-k)\) denotes the sign function

From here, we see that the pdf of Xn is piece-wise polynomial, with n pieces, and each polynomial is of degree n − 1.

By the Central Limit Theorem, as n increases, the Irwin-Hall distribution converges to a normal distribution with mean μ = n/2 and variance σ2 = n/12. Formally, it means

where \({{{\mathcal{N}}}}(\mu ,{\sigma }^{2})\) denotes the normal distribution with mean μ and variance σ2. For a sequence of real-valued random variables, convergence in distribution means

at which F is continuous. Here Fn, F denote cumulative distribution functions (cdf). In Section “Irwin-Hall error analysis”, we present a more rigorous convergence result in pdf.

Distance measures and statistical analysis

We use common distance measures such as the L1 and sup norm between the pdf/cdf of the Irwin-Hall distributions and the corresponding normal distributions. This can be done when the pdf/cdf is available analytically or numerically. When experiments are performed, there is no access to the intrinsic quantum state, as only measurement samples are collected. In this case, we use the 1-sided Kolmogorov-Smirnov test and obtain the KS statistics, defined as

Here Fn(x) is the empirical cdf derived from the samples, and \(\tilde{F}\) is the cdf of the ideal distribution to which it is compared. The KS statistics can be thought of as an approximation of the sup norm between the cdfs. This KS statistic can also be used to test whether the two underlying distributions Fn(x) and \(\tilde{F}(x)\) differ from each other with statistical significance. When a finite number of samples s are taken from both distibutions, the null hypothesis that \({F}_{n}(x)=\tilde{F}(x)\) is accepted if

where α is the significance level which is commonly taken to be α = 0.05.

We note that there are alternative statistical test methods such as the Shapiro-Wilk test or the Anderson-Darling test. However, their test statistics are not as useful as the KS statistics.

A thorough understanding of the error analysis associated with state preparation is important. In an application such as Monte Carlo methods, one often needs to obtain results to a desired accuracy. Therefore, the state preparation needs to prepare the corresponding distribution to a precision that is compatible with the desired application.

Irwin-Hall error analysis

Define the random variable

Let \({f}_{{Z}_{n}}\) be the pdf of Zn and \({f}_{{{{\mathcal{N}}}}}\) be the pdf of \({{{\mathcal{N}}}}(0,1)\).

Theorem 1

\(\parallel {f}_{{Z}_{n}}-{f}_{{{{\mathcal{N}}}}}{\parallel }_{\infty }\le O\left(\frac{1}{n}\right)\).

The proof is given in Appendix.

The error between pdfs of Irwin-Hall distributions and the corresponding normal distribution is also analyzed numerically. Since \({f}_{{Z}_{n}}\) has bounded support on \([-\sqrt{3n},\sqrt{3n}]\), and \({f}_{{{{\mathcal{N}}}}}(x)\to 0\) monotonically as ∣x∣ → ∞, it is sufficient to consider \(\parallel {f}_{{Z}_{n}}-{f}_{{{{\mathcal{N}}}}}{\parallel }_{\infty }\) on \([-\sqrt{3n},\sqrt{3n}]\). We take points with spacing \(\frac{\sqrt{3}}{50\sqrt{n}}\) in this domain and numerically evaluate \({f}_{{Z}_{n}}\) and \({f}_{{{{\mathcal{N}}}}}\) on these points. We numerically estimate the gradient of the max error to be −1.57 and that of the average error to be −2.06. Therefore, based on numerical calculation shown in Fig. 2a,

We also list similar results for the cdf.

Lemma 2

35\(\parallel {F}_{{Z}_{n}}-{F}_{{{{\mathcal{N}}}}}{\parallel }_{\infty }\le O\left(\frac{1}{n}\right)\).

The error between cdf of Irwin-Hall distributions and the corresponding normal distribution is analyzed numerically as well. Based on numerical calculation show in Fig. 2b,

We also compare the theoretical error scaling of our method against other methods. In ref. 30, it was numerically studied that the KL divergence between the distribution produced by the algorithm and the corresponding exact analytical normal distribution scales as Θ(1/t2), where t counts the iterations and can be thought of as proportional to the circuit depth. Since \(\log (p/q)\approx (p-q)/q\) for p ≈ q, the 1-norm between the distribution produced by ref. 30 and the corresponding exact analytical normal distribution also scales as Θ(1/t2). However, this algorithm uses the Mid-Circuit Measurement and Reuse scheme and is thus not suitable for implementation with quantum Monte Carlo algorithms1.

Discretization error

We also discuss briefly the discretization error. The normal distribution is a continuous probability distribution. However, in modern computers, decimal numbers are typically represented as as 32/64/128 bit floats and there is some inherent discretization. When the distribution is loaded onto a quantum state, there is further discretization due to the finite number of qubits. As an example, let us assume the Irwin-Hall order of 16. We discretize \({{{\mathcal{N}}}}(0,1)\) and Zn (Irwin-Hall of order n) for n = 16 over the domain of \([-4\sqrt{3},4\sqrt{3}]\) over qubits up to 23, and compared against the corresponding \({{{\mathcal{N}}}}(0,1)\) over (−∞, ∞) in Fig. 3.

As we can see, the error from the discretized Irwin-Hall distribution and from the discretized normal coincides for less than nine qubits. But for the discretized Irwin-Hall distribution, the error plateaus after 12–13 qubits, indicating that the error now mostly comes from the Irwin-Hall approximation to the normal distribution and not the discretization.

MPS circuit approximation error analysis

We also look at the convergence of our iterative MPS loading scheme to the ideal normal distribution as circuit depth is increased. First, we make a distinction between the target Irwin-Hall distribution, which is the state we load into the untruncated MPS wave function, and the ideal normal distribution which is the ultimate target distribution. As the number of layers in the iterative MPS circuit construction is increased, the approximation error between the output of the quantum circuit, \(\left\vert \psi \right\rangle\), and target Irwin-Hall distribution \(\left\vert \phi \right\rangle\), strictly decreases. This behavior can be seen in Fig. 4a, where we prepare Irwin-Hall distributions with n = 8 and 16. We find that the infidelity, \(I=1-| \left\langle \psi | \phi \right\rangle |\), empirically decays as \(I \sim {\left(\frac{1}{D}\right)}^{\alpha }\) for large D with α = 1.08 for n = 8 and α ≈ 1.22 for n = 16. We also fit the average decay rate for all n in Appendix. We note that in both cases, the infidelity appears to decay more rapidly for the first few layers before slowing down as more layers are added. Also, it has recently been proposed that a gate-by-gate optimization method discussed in ref. 31 may lead to a more rapid decay of the infidelity, especially as the number of layers in the MPS circuits is increased.

a The error between the distribution of the prepared wave function and target Irwin-Hall distribution as the number of MPS layers increases. We show both the wave function infidelity and the KS statistic between the output probability distributions. b The KS statistic between the prepared distributions and the ideal normal distributions for different Irwin-Hall orders as a function of MPS circuit depth. These plots are based on simulations of the quantum circuits with N = 14 qubits.

We also look at the KS statistic between output and target distributions, which measures the maximum difference between the cumulative density functions of the two distributions. This metric has additional experimental relevance as it can be directly estimated from a finite number of measurements taken in the computational basis on the prepared quantum state. In Fig. 4a, we show the behavior of the KS statistic between \(\left\vert \psi \right\rangle\) and the target Irwin-Hall distribution as the number of MPS layers increases. Again, we see that the general trend also decreases as a power law with increasing layer depth. However, in this case the behavior of KS statistic is not a smooth function and is not strictly decreasing. This simply demonstrates that state fidelity is not always in one-to-one correspondence with all measures of distance between the prepared and target output distributions.

This fact is further emphasized when we compare distributions prepared by our quantum circuit simulation and the ideal normal distribution. We plot this comparison in Fig. 4b, for n = 8, 16, 32 and 64 and N = 14. At high circuit depth, where we apply many circuit layers of the iterative MPS state preparation procedure, we very closely reproduce the exact Irwin-Hall distribution in the amplitudes of the quantum state. We find that in all cases, a single layer of the MPS circuit already achieves a fairly low value for the KS statistic, comparable with the error originating solely from the Irwin-Hall approximation as shown in Fig. 3. We also find that differences between the target Irwin-Hall distribution and the target ideal distribution in many cases offset the differences between the prepared distribution and the target Irwin-Hall distribution. This results in relatively strong fluctuations in the KS statistic value with increasing circuit depth. In all cases, the Irwin-Hall error dominates the MPS approximation error beyond depth D = 5. In fact, it is only for n = 32 and n = 64, that there appears to be any significant benefit in going beyond D = 1.

In summary, in this section we studied the error introduced to our state preparation routine by the Irwin-Hall approximation, qubit discretization and the MPS circuit preparation routine. We find that for the Irwin-Hall orders which we consider, a circuit with gates linear in the number of qubits N and with only a small number of layers D, is sufficient to prepare a probability distribution with error comparable to that introduced by the Irwin-Hall approximation. We believe these values of n, N, and D will be sufficient for most practical applications for the near future. Of course, a more accurate approximation of the normal distribution will require a higher circuit depth to prepare. In the next subsection we provide an analysis of how these quantities must scale in this higher precision limit. However, any easy-to-prepare probability distribution which approximates the exact normal distribution will likely be well approximated by a finite depth MPS circuit.

Resource estimations

Here we list the computational resources of our algorithm, both from classical and quantum point of view in Table 1, with respect to ϵ, as defined in Eq. (5) as \(| | \left\vert \psi \right\rangle -\left\vert \tilde{\psi }\right\rangle | | \le \epsilon\), where \(\left\vert \psi \right\rangle\) is the wave functions with amplitudes proportional to the square root of ideal normal distribution and \(\left\vert \tilde{\psi }\right\rangle\) our MPS state. Note that we assume both the Irwin-Hall approximation and the MPS approximation introduces error of O(ϵ). First, we numerically study the relation between ϵ and the Irwin-Hall order n as shown in Fig. 5. We derive ϵ = O(n−1.07), and therefore n = O(ϵ−1/1.07). In Appendix, we showed that classically computing all the coefficients of an Irwin-Hall distribution of order n has complexity O(n2) = O(ϵ−1.87).

Distance between the ideal state and Irwin-Hall approximated state in L2 norm (ϵ as defined in Eq. (5)).

For an Irwin-Hall distribution of order n, our MPS is a sum of n piece-wise MPS components each with bond dimension n + 1. Each piece-wise MPS component involves the calculation of Nn2 coefficients so that the total classical cost of this calculation is \(\tilde{O}(N{n}^{3})\). The sum of these component is then a MPS with χ = n(n + 1), and the cost of generating this MPS state is Nχ2 ~ Nn4, which is the dominant contribution in this portion of the calculation. This scaling can be reduced by truncating the MPS bond dimension χ after each addition of the piece-wise MPS components which each has χp = (n + 1), instead of only truncating after summing all components. In this case, there are n computations each with complexity \({\chi }_{p}^{2}={n}^{2}\), leading to an overall complexity of Nn3. The classical processing cost associated with truncating the MPS to bond dimension \({\chi }^{{\prime} } < \chi\) is \(N{\chi }^{{\prime} 3}\) using the variational compression scheme and \(N{\chi }^{2}{\chi }^{{\prime} }\) using the SVD compression method17. When generating the quantum circuit, we must repeatedly disentangle the MPS state D times, then truncate to bond dimension \({\chi }^{{\prime} }=2\). This has complexity \(\tilde{O}(N{\chi }^{{\prime} 2}D)\). Therefore overall, the cost associated with generating the quantum circuit for preparing an Irwin-Hall distribution of order n is \(\tilde{O}(N{n}^{4}+N+ND)\), where we ignore the constant cost associated with \({\chi }^{{\prime} }\). We saw that n = O(ϵ−1/1.07). We also saw that the discretization error decays exponentially with the number of qubits N, so that ϵ ~ e−γN, for some constant γ, so that \(N \sim \log (1/\epsilon )\). In Appendix, we show that ϵ2 ~ 1/Db for some power b, which we numerically estimate to be b ≥ 1.15 for the Irwin-Hall functions we studied in this work. Therefore, we can write D ~ ϵ−1.74, so that the classical processing of the MPS manipulations scales as \(\tilde{O}(\log (1/\epsilon )[{\epsilon }^{-4/1.07}+{\epsilon }^{-1.74}])=\tilde{O}({\epsilon }^{-3.74})\) after dropping polylog terms and subleading terms.

The quantum circuit corresponding to D rounds of MPS approximations has circuit depth O(DN). In Appendix, we show that for a given ϵ, the error associated with the MPS circuit approximation to the exact Irwin-Hall wave function is essentially independent of both the number of qubits N and the Irwin-Hall order n. Furthermore, we show that ϵ decays like a power of the circuit depth D, so that ϵ2 ~ 1/Db for some constant b. We numerically show that b≥1.15. Therefore the quantum circuit complexity is given by \(\tilde{O}(ND)=\tilde{O}(\log (1/\epsilon ){\epsilon }^{-1.74})=\tilde{O}({\epsilon }^{-1.74})\).

We expect it is possible to improve the complexity scaling of both the classical MPS approximation and the MPS circuit depth D. For example, if we truncate the MPS bond dimension χ after each addition of the piece-wise MPS components, we will get the MPS approximation to scale as \(\tilde{O}({\epsilon }^{-2.80})\) instead of \(\tilde{O}({\epsilon }^{-3.74})\). We believe it is possible to improve the MPS circuit depth D through better approximation methods for low depth circuit construction, for example using ref. 31. In this sense, the exponent b in the scaling \(\tilde{O}(ND)=\tilde{O}({\epsilon }^{-2/b})\) should be thought of as an upper bound for this analysis. Furthermore, we see in Appendix, that if we were to use the exact circuit construction for an MPS of bond dimension \({\chi }^{{\prime} }\), instead of the low depth circuit approximation, the error appears to decrease exponentially as \(\epsilon \sim {e}^{-b{\chi }^{{\prime} }}\) for some constant b. The depth of an exact MPS circuit construction scales like \(D \sim \tilde{O}({\chi }^{{\prime} 2})\), which would imply that the circuit complexity would scale like \(\tilde{O}({\log }^{2}(1/\epsilon ))\). While the constant overhead of the exact MPS circuit implementation implies it is less practical at moderate values of ϵ than the low depth approximations, it may provide a large advantage when trying to prepare states with very small approximation error ϵ.

Note that normal distributions of different parameters can all be obtained from the standard one by rescaling and shifting. So parameters of the normal distribution do not contribute to the circuit complexity here.

Applicability to Monte Carlo integration

The resource estimation of our algorithm allows an end-to-end error analysis of applications where our algorithm can be a subroutine. As an example we discuss in detail how our algorithm would affect the results of a Monte Carlo integration.

As a reminder we assume \(| | \left\vert \psi \right\rangle -\left\vert \tilde{\psi }\right\rangle | | \le \epsilon\), where \(\left\vert \psi \right\rangle\) is the wave functions with amplitudes proportional to the square root of ideal normal distribution and \(\left\vert \tilde{\psi }\right\rangle\) our MPS state. We also implicitly assume both the Irwin-Hall approximation and the MPS approximation introduces error of O(ϵ).

In classical Monte Carlo integration, we estimate \({\mathbb{E}}[g]\) by \(\frac{1}{s}\mathop{\sum }\nolimits_{i = 0}^{s}g({x}_{i})\), with xi sampled from the desired distribution. The central limit theorem states that

where s is the number of times that we sample the data. So the error of Monte Carlo integration is \(O(\sigma /\sqrt{s})\), where σ2 = Var[g].

This assumes xi is sampled from the desired distribution. In our case, we are using the Irwin-Hall distribution together with MPS to approximate \({{{\mathcal{N}}}}\), so it will introduce additional error as g(xi)’s are biased estimators now. The additional error is O(ϵ), as we show in Appendix.

We may also express the complexities of classical and quantum Monte Carlo integration in terms of δ, which is the accuracy that we want a Monte Carlo integration to achieve. Classically, assuming sampling from a normal distribution takes O(1), the complexity of a Monte Carlo simulation is proportional to the number of samples s, which is O(δ−2). We note here that our assumption on the sampling complexity of normal distribution is fairly strong and unlikely to be true. More analysis on the error dependence of classical sampling algorithms for normal distributions, such as the Box-Muller transform36 or Ziggurat algorithm37, is needed.

In quantum Monte Carlo integration, we have \(t=\tilde{O}({\delta }^{-1})\). Assuming the additive error introduced by the state preparation algorithm is comparable to δ, we have O(ϵ) = δ. Thus we can express the computational resources of our algorithm in terms of δ as.

The end-to-end complexity of a quantum Monte Carlo algorithm leveraging our state preparation subroutine will be \(\tilde{O}({\delta }^{-2.74})\), as the state preparation subroutine is used t times. The one-time classical pre-processing of our state preparation subroutine is dominated by the MPS approximation, which scales as \(\tilde{O}({\delta }^{-3.74})\). In reality, it’s possible to complete the classical pre-processing before-hand, and have the quantum subroutine as part of a standard state preparation toolkit.

As seen from this analysis (Table 2), quantum Monte Carlo integration using our current implementation of state preparation does not provide an asymptotic advantage over classical Monte Carlo integration. However, as pointed in Section “Resource Estimations”, exact MPS constructions can be used in the low δ limit, which should improve the complexity.

Experimental demonstration

In this section, we show the experimental demonstration of our state preparation procedure on the IonQ Aria generation of trapped ion quantum computer. First, in Fig. 6, we demonstrate our ability to prepare normal probability distributions with different variances on quantum states with 10 to 20 qubits. This involves preparing all 210 to 220 amplitudes of the quantum wave function. By using the MPS state preparation method, all amplitudes can be set by applying only between 20–120 CNOT gates. We also highlight that to the best of our knowledge, this is the largest demonstration of state preparation for applications in quantum Monte Carlo to date.

Experiments were run on IonQ Aria on circuits with between 10 to 20 qubits, with MPS depth D between 1 to 3. Each circuit uses 2 × (N − 1) × D CX gates and 10000 shots were taken on each circuit. Here we show three illustrative implementations, with (a) N = 8, n = 8, D = 1, (b) N = 20, n = 16, D = 1 and (c) N = 10, n = 16, D = 3. In all figures, samples taken from the QPU are shown in orange and can be compared to an equal number of samples drawn from the noiseless simulator in blue and to the ideal pdf shown in gray.

Demonstration of the full algorithm on the quantum hardware requires compiling the generic 4 × 4 unitary gates generated by the iterative MPS encoding procedure into a set of fundamental gates, which we choose to be arbitrary single qubit rotations plus the 2-qubit CNOT gate. Note that when running on the IonQ hardware, each CNOT gate is automatically transpiled to a single native 2-qubit Molmer-Sorensen gate, so that the total number of basic 2-qubit gates remains the same. Also note that the output of the MPS encoding are real 4x4 unitary matrices, implying that each gate is an O(4) transformation. We therefore use the result of ref. 38, in order to compile each unitary to 2 CNOT gates plus a potential SWAP gate, as show in Fig. 1. On the IonQ architecture, the all-to-all connectivity of the lattice implies that SWAP gates can be removed at the cost of a reordering of the qubits in software. Therefore, each O(4) transformation is implemented using only 2 CNOT gates.

We show three illustrative examples of the output distributions of the prepared wave functions. For each set of hyper-parameters, we make 10000 measurements and plot the histogram of the measured bit strings, converted to the scale of the original Irwin-Hall distribution Xn (So that μ = n/2). The standard deviation of the distributions is \(\sigma =\sqrt{n/24}\). (Note, while Xn is an approximation to \({{{\mathcal{N}}}}(n/12,\sqrt{n/12})\), we are comparing to \({{{\mathcal{N}}}}(n/12,\sqrt{n/24})\). This is because we are preparing quantum state with probabilities proportional to the pdf of Xn, and this corresponds to a quantum state with probability amplitudes the square root of the pdf of Xn. Because the square root of the pdf of a normal distribution \({{{\mathcal{N}}}}(\mu ,\sigma )\) is the pdf of \({{{\mathcal{N}}}}(\mu ,\sigma /\sqrt{2})\), and the fact that \({X}_{n}\to {{{\mathcal{N}}}}(n/12,\sqrt{n/12})\), the standard deviation of the distribution is \(\sqrt{n/24}\)). We see that the experimental measurements give good visual agreement with the clean simulation and the ideal pdf in all cases. As the quantum gate noise is the largest source of error in the algorithm, we find that the best results are always obtained for a single layer of the MPS iterative preparation procedure. In general, the fewer gates applied the higher the fidelity of the output distribution. We can visualize this behavior in Fig. 6. The shortest depth circuits we apply prepare 10-qubit systems with MPS depth 1. We show the case when n = 8 and see very strong agreement with the expected histogram on 10000 samples using the noiseless simulator. The effect of the noise is to slightly broaden the normal peak and to create additional samples at the tails of the distribution. The same general behavior is seen when preparing a 20 qubit system with MPS depth 1. We show the case when n = 16. In this case, the number of basic gates is roughly doubled, resulting in a higher rate of noise and a broader measured output distribution. However, we still see a strong visual overlap with the simulated noiseless distribution and the ideal pdf function. Finally, in order to better visualize the effects of noise, we also show the output for preparing a 10 qubit system at depth 3. We see that in this case, the noise is significantly higher.

We now quantify the effect of noise over the full set of prepared normal distributions. We show the results in Fig. 7. We evaluate the quality of our experimental state preparation by comparing the finite number of measurements from the quantum circuit to measurements sampled from an ideal normal distribution using the Kolmogorov-Smirnov statistic. In Fig. 7a we show the KS statistic for the n = 16 normal distributions for 10-20 qubits and 1–3 MPS layers. As mentioned, the best results are found for a single MPS layer. The best KS statistic value we find is Dn ≈ 0.09, and increases to around Dn ≈ 0.15 for the largest system sizes. We can compare these values to the value required to pass the KS test of the equality of the measured and target distributions given by Eq. (28). Using this equation, we can expect to pass this test with statistical significance α = 0.05, when the number of samples, s, taken from both distributions is s ≤ 450 for Dn = 0.09, and s ≤ 150 for Dn = 0.15. In contrast to the noiseless case, on the noisy quantum computer, the value of the KS statistic increases with number of layers and number of qubits. That is, the noise generated from the additional gates overcomes the higher fidelity of the underlying normal approximation when preparing states using higher number of MPS layers. There is a clear increase in the KS statistic both as the number of qubits and the number of MPS layers increases. In general, the largest source of noise on near-term quantum computers comes from the execution of two-qubit gates. The overall effect of this noise can be clearly seen in Fig. 7b, where we plot KS statistic vs the total number of two-qubit gates in the associated quantum circuit. While there can be significant variance in the noise from circuit to circuit, likely originating from small fluctuations in system performance with time, the overall trend is consistent. The KS statistic ranges from 0.09 to 0.25 in the worst case and appears to increase linearly with the total number of two-qubit gates in the circuit.

a Scaling of the KS statistic with circuit width (Number of Qubits) for MPS depths D = 1, 2, 3, and n = 16. As explained in the text, with current device noise levels, optimal state preparation occurs with D = 1. b Scaling of the KS statistic with number of two-qubit gates demonstrates that these circuit operations are the dominant source of noise in the state preparation procedure.

Note that while we did not extend this pattern down to zero gates, the y-intercept is expected to be nonzero due to the presence of state preparation and measurement (SPAM) errors. While we expect SPAM errors to be relatively low on ion trap quantum computers, they still likely make a nontrivial contribution to the overall error. Also, we cannot reliable apply our state preparation procedure in the zero gate limit and so the approximation error may be a large contributor to the overall error in this limit. Therefore the zero-gate extrapolation error is likely a combination of both SPAM errors and the state preparation approximation error.

Discussion

Our paper gives a procedure that produces U such that \(U\left\vert {0}^{N}\right\rangle\) has probability amplitudes proportional to a normal distribution. Our circuit for U does not involve any ancilla qubit and succeeds with certainty. This has a number of advantages over other methods in applications where U needs to be applied iteratively1. If an algorithm only applies U with probability 1 − δ13,30, then m sequential applications of U would succeed with probability (1−δ)m, which may be not sufficient for a practical application. If an algorithm uses k ancillary qubits12,13,30 such that the ancillary qubits cannot be discarded or reused between successive applications of U, then m sequential applications of U would need km ancillary qubits, which is undesirable since quantum computers will be resource-constrained in the foreseeable future.

Our Matrix Product State based procedure, on the other hand, uses only N qubits to prepare a discretized probability distribution on 2N points, and succeeds with probability 1 in the limit of noiseless quantum gates. The trade-off for these advantages is that we can only approximately prepare the distribution to fixed accuracy for a quantum circuit with gates linear in the number of qubits. The main sources of this error originate from approximating our target function with a piece-wise polynomial function and from approximately preparing the low bond dimension MPS wave function with a short depth quantum circuit.

Throughout this work, we carefully analyzed the error originating from both these sources. We chose to approximate the normal distribution using the order n Irwin-Hall distribution, which gives a fast, deterministic method for generating the appropriate unitary circuit U. We found that the error from this approximation decreases polynomially with n. In order to approximately encode this Irwin-Hall distribution into the wave function amplitudes using a short depth circuit, we use the iterative circuit construction method of ref. 26. While our method does not give the best theoretical complexity in terms of ϵ (as defined in Section “Quantum State Preparation”), the short circuit depth makes it far more appealing than other methods with better asymptotic complexity. Also, intrinsic restrictions on hardware accuracy and discretization errors arising from floating-point arithmetic mean that it may not be possible to go down to infinitesimal ϵ in a practical application.

We also note that the circuit structure of our algorithm, as depicted in Fig. 1, only assumes nearest-neighbor interaction of the underlying device. This implies that our algorithm can be implemented on a wide range of hardware architecture, such as ion trap devices, superconducting devices and so on, without any additional swap gate.

We experimentally validated this loading technique on a trapped ion quantum computer for a range of circuits with varying width and depth. We were able to successfully generate the target wave functions on circuits with up to 20 qubits. Furthermore, the measured KS statistics were comparable to previous state preparation experiments9, while acting on circuits with many more qubits. We also studied at the scaling of the KS statistic with circuit depth, and found that, as expected, with the hardware noise is currently the largest source of error in this state preparation procedure, limiting the effectiveness of applying higher-depth loading circuits. With our current experiments, we are approaching the capacity of generating single precision random quantum variables, although we expect a large improvement in gate-error rates is still required to take full advantage of such high precision numbers.

A number of possible directions stemming from this work immediately present themselves for future research. One possibility is to study improvements to the MPS approximation method for low depth circuit construction. Another is to extend this work to study more general probability distributions. This involves searching for simple piece-wise polynomial representations of these distributions with controllable approximation errors. Finally, we leave to future research a full end-to-end implementation of a quantum amplitude estimation algorithms which makes use of this state preparation subroutine, and an associated analysis of the error rates required to achieve quantum advantage using these approaches.

Data availability

The data supporting the study’s findings are available from the corresponding author upon reasonable request.

Code availability

The code supporting the study’s findings are available from the corresponding author upon reasonable request.

References

Montanaro, A. Quantum speedup of Monte Carlo methods. Proc. R. Soc. A Math. Phys. Eng. Sci. 471, 20150301 (2015).

Harrow, A. W., Hassidim, A. & Lloyd, S. Quantum algorithm for linear systems of equations. Phys. Rev. Lett. 103, 150502 (2009).

Kerenidis, I. & Prakash, A. Quantum Recommendation Systems. In 8th Innovations in Theoretical Computer Science Conference (ITCS 2017), vol. 67 of Leibniz International Proceedings in Informatics (LIPIcs), (ed. Papadimitriou, C. H.) 49:1–49:21, https://doi.org/10.4230/LIPIcs.ITCS.2017.49 (Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik, Dagstuhl, Germany, 2017).

Mitarai, K., Negoro, M., Kitagawa, M. & Fujii, K. Quantum circuit learning. Phys. Rev. A 98, 032309 (2018).

Childs, A. M., Maslov, D., Nam, Y., Ross, N. J. & Su, Y. Toward the first quantum simulation with quantum speedup. Proc. Natl Acad. Sci. 115, 9456–9461 (2018).

Low, G. H. & Chuang, I. L. Hamiltonian simulation by qubitization. Quantum 3, 163 (2019).

Grover, L. & Rudolph, T. Creating superpositions that correspond to efficiently integrable probability distributions. Preprint at arXiv:quant-ph/0208112 (2002).

Carrera Vazquez, A. & Woerner, S. Efficient state preparation for quantum amplitude estimation. Phys. Rev. Appl. 15, 034027 (2021).

Zoufal, C., Lucchi, A. & Woerner, S. Quantum generative adversarial networks for learning and loading random distributions. npj Quantum Inf. 5, 103 (2019).

Zhu, E. Y. et al. Generative quantum learning of joint probability distribution functions. Phys. Rev. Res. 4, 043092 (2022).

Sun, X., Tian, G., Yang, S., Yuan, P. & Zhang, S. Asymptotically optimal circuit depth for quantum state preparation and general unitary synthesis. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 1–1, https://doi.org/10.1109/TCAD.2023.3244885 (2023).

Zhang, X.-M., Li, T. & Yuan, X. Quantum state preparation with optimal circuit depth: Implementations and applications. Phys. Rev. Lett. 129, 230504 (2022).

Rattew, A. G. & Koczor, B. Preparing arbitrary continuous functions in quantum registers with logarithmic complexity. Preprint at arXiv:quant-ph/2205.00519 (2022).

McArdle, S., Gilyén, A. & Berta, M. Quantum state preparation without coherent arithmetic. Preprint at arXiv:quant-ph/2210.14892 (2022).

Sanders, Y. R., Low, G. H., Scherer, A. & Berry, D. W. Black-box quantum state preparation without arithmetic. Phys. Rev. Lett. 122, 020502 (2019).

Bausch, J. Fast black-box quantum state preparation. Quantum 6, 773 (2022).

Schollwöck, U. The density-matrix renormalization group in the age of matrix product states. Ann. Phys. 326, 96–192 (2011).

White, S. R. Density matrix formulation for quantum renormalization groups. Phys. Rev. Lett. 69, 2863–2866 (1992).

White, S. R. Density-matrix algorithms for quantum renormalization groups. Phys. Rev. B 48, 10345–10356 (1993).

Vidal, G., Latorre, J. I., Rico, E. & Kitaev, A. Entanglement in quantum critical phenomena. Phys. Rev. Lett. 90, 227902 (2003).

Latorre, J., Rico, E. & Vidal, G. Ground state entanglement in quantum spin chains. Quantum Inf. Computation 4, 48–92 (2004).

Vidal, G. Efficient classical simulation of slightly entangled quantum computations. Phys. Rev. Lett. 91, 147902 (2003).

Perez-Garcia, D., Verstraete, F., Wolf, M. M. & Cirac, J. I. Matrix product state representations. Quantum Info. Comput. 7, 401–430 (2007).

García-Ripoll, J. J. Quantum-inspired algorithms for multivariate analysis: from interpolation to partial differential equations. Quantum 5, 431 (2021).

Holmes, A. & Matsuura, A. Y. Efficient quantum circuits for accurate state preparation of smooth, differentiable functions. In Proc. IEEE International Conference on Quantum Computing and Engineering (QCE), 169–179, https://doi.org/10.1109/QCE49297.2020.00030 (2020).

Ran, S.-J. Encoding of matrix product states into quantum circuits of one-and two-qubit gates. Phys. Rev. A 101, 032310 (2020).

Oseledets, I. Constructive representation of functions in low-rank tensor formats. Constr. Approx. 37, 1–18 (2013).

Knill, E. Approximation by quantum circuits. Preprint at arXiv:quant-ph/9508006 (1995).

Herbert, S. No quantum speedup with grover-rudolph state preparation for quantum Monte Carlo integration. Phys. Rev. E 103, 063302 (2021).

Rattew, A. G., Sun, Y., Minssen, P. & Pistoia, M. The efficient preparation of normal distributions in quantum registers. Quantum 5, 609 (2021).

Rudolph, M. S., Chen, J., Miller, J., Acharya, A. & Perdomo-Ortiz, A. Decomposition of matrix product states into shallow quantum circuits. Quantum Sci. Technol. 9, 015012 (2023).

Shirakawa, T., Ueda, H. & Yunoki, S. Automatic quantum circuit encoding of a given arbitrary quantum state, Preprint at arXiv:quant-ph/2112.14524 (2021).

Haghshenas, R., Gray, J., Potter, A. C. & Chan, G. K.-L. Variational power of quantum circuit tensor networks. Phys. Rev. X 12, 011047 (2022).

Lin, S.-H., Dilip, R., Green, A. G., Smith, A. & Pollmann, F. Real- and imaginary-time evolution with compressed quantum circuits. PRX Quantum 2, 010342 (2021).

Sherman, R. Error of the normal approximation to the sum of n random variables. Biometrika 58, 396–398 (1971).

Box, G. E. P. & Muller, M. E. A note on the generation of random normal deviates. Ann. Math. Stat. 29, 610–611 (1958).

Marsaglia, G. Expressing a random variable in terms of uniform random variables. Ann. Math. Stat. 32, 894–898 (1961).

Vatan, F. & Williams, C. Optimal quantum circuits for general two-qubit gates. Phys. Rev. A 69, 032315 (2004).

Acknowledgements

This work is a collaboration between Fidelity Center for Applied Technology, Fidelity Labs, LLC., and IonQ Inc. The Fidelity publishing approval number for this paper is 1075809.1.0.

Author information

Authors and Affiliations

Contributions

J.I. proposed using matrix product states for state preparation, designed the quantum circuits, performed experiments, and carried out the error analysis. S.J. contributed to the design of the algorithm and experimental error analysis. E.Y.Z. initiated the idea of using Irwin-Hall polynomials, and contributed to theoretical error analysis.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Iaconis, J., Johri, S. & Zhu, E.Y. Quantum state preparation of normal distributions using matrix product states. npj Quantum Inf 10, 15 (2024). https://doi.org/10.1038/s41534-024-00805-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-024-00805-0