Abstract

In several observational contexts where different raters evaluate a set of items, it is common to assume that all raters draw their scores from the same underlying distribution. However, a plenty of scientific works have evidenced the relevance of individual variability in different type of rating tasks. To address this issue the intra-class correlation coefficient (ICC) has been used as a measure of variability among raters within the Hierarchical Linear Models approach. A common distributional assumption in this setting is to specify hierarchical effects as independent and identically distributed from a normal with the mean parameter fixed to zero and unknown variance. The present work aims to overcome this strong assumption in the inter-rater agreement estimation by placing a Dirichlet Process Mixture over the hierarchical effects’ prior distribution. A new nonparametric index \(\lambda\) is proposed to quantify raters polarization in presence of group heterogeneity. The model is applied on a set of simulated experiments and real world data. Possible future directions are discussed.

Similar content being viewed by others

1 Introduction

In several contexts, decision-making relies heavily (or exclusively) on expert ratings, especially in situations where a direct quantification of quality of an object or a subject is either impossible or unavailable. Examples include applicant selection procedures, grading of student assignments in education, or risk evaluation in emergencies, all of which rely on observational ratings made by experts. For ease of exposition, throughout this paper we will refer to evaluation of students’ work in an educational context as the primary example. To ensure consistency across different teachers, harmonization of marking criteria is often used to improve inter-rater agreement and homogeneity (Gisev et al. 2013; Gwet 2008); however, discrepancies between grades assigned by different teachers may still persist (Bygren 2020; Barneron et al. 2019; Makransky et al. 2019; Zupanc and Štrumbelj 2018), reflecting each teacher’s approach to evaluation. Therefore, statistical models that can capture inter-rater agreement (or disagreement) can shed light on heterogeneity between teachers and aid the mark moderation process (Bygren 2020; Barneron et al. 2019; Crimmins et al. 2016).

The specific context that we are considering in this work is the observational setting where a set of raters are evaluating different sets of itemsFootnote 1 out of a total population of items; these sets may be completely disjoint (i.e., each item is evaluated by exactly one rater). Each item is represented by a set of covariates, assumed to follow some distribution. Within a hierarchical statistical model, a common assumption is that raters (who may or may not include covariates) may each be characterized through a latent variable capturing e.g. whether an evaluator is generous or how they assess different aspects of the work. In the simplest setting, in an evaluation context where there is no space for subjectivity, these latent variables will be identical for all raters, in the sense that their view of the item is identical and as a result their evaluation style is assumed to be the same. However, it is well-known in many scientific fields, e.g., cognitive neuroscience (Barneron et al. 2019; Makransky et al. 2019; Briesch et al. 2014), statistics (Agresti 2015; Gelman et al. 2013) and psychometrics (Bartoš et al. 2020; Nelson and Edwards 2015; Hsiao et al. 2011), that individual variability in rating tasks (Wirtz 2020) needs to be accounted for when aggregating or interpreting individual raters’ recommendations.

Existing works account for heterogeneity between raters through a latent variable within a mixed-effects model (Martinková et al. 2023; Bartoš et al. 2020; Nelson and Edwards 2015, 2008). In other words, a regression model is used where the rating is modelled conditionally on covariates with a random effect that varies across raters. However, the distribution of the latent variable is typically assumed to be unimodal, and cannot capture eg. polarisation or clustering of rater types. The present work aims to extend these models to account for clustered variability between raters. Through a Bayesian approach, a Dirichlet process mixture prior is placed over the hierarchical rater effects in a linear model. This flexible prior naturally accommodates different clusters among raters (i.e., different distributions for the rater effects). A multiple-level model is specified in which observations (i.e., ratings) are nested within raters, and in turn these are nested within clusters. These clusters reflect distinct groups of raters in terms of their decision-making, and can be used to characterise the level of (dis)agreement. The level of multimodality (i.e., how separated the latent group densities are) quantifies the polarization of the latent groups. For instance, a large variance between teacher scores might be due to both the presence of two main divergent latent trends among them or to a high level of noise in their assessing (Koudenburg and Kashima 2022). It is important to differentiate the two cases and quantify the group polarization both for theoretical and practical purposes. Differentiate systematic differences of opinion against high level of noise might be needed (Koudenburg et al. 2021). They are two very different cases and much attention must be paid in distinguishing one another. The former is a case of high group polarization (Esteban and Ray 1994): two different teachers clusters emerge with a small within-cluster variance and a large variance between different clusters. In the second case only one cluster emerge with a large variance. It might be argue that in the first case, even that the overall agreement might be quite low since there are two main different trends among raters, there might be a high agreement within the same trend (Tang et al. 2022). Assuming the latent agreement among raters as the degree of latent similarity in rating, an index regarding the polarization of the different possible groups of raters might be informative (Koudenburg and Kashima 2022; Tang et al. 2022; Koudenburg et al. 2021). In this work we introduce a novel index to quantify the latent polarization among raters through the posterior distribution of the hierarchical effects (DiMaggio et al. 1996). It naturally derives from the nonparametric model and overcomes some strong assumptions (e.g., the number of latent groups, the ratings distribution) of the previous indices (Koudenburg et al. 2021; Esteban and Ray 1994). This nonparametric index, referred to as \(\lambda\) index, is based on the shape of the posterior distribution of the hierarchical effects. It connects two different research lines: it relates the works on distribution polarization of opinions (Koudenburg and Kashima 2022; Tang et al. 2022; Koudenburg et al. 2021) with those about the inter-rater agreement analysis (Martinková et al. 2023; Bartoš et al. 2020; Nelson and Edwards 2015; Gisev et al. 2013; Gwet 2008; Nelson and Edwards 2008).

The paper proceeds as follows: Sect. 2 is devoted to the general psychometric framework, the key concepts of inter-rater agreement, inter-rater reliability are introduced; the statistical model is specified in Sect. 3 and the adopted Gibbs sampler in Sect. 4; the novel rater similarity index is described in Sect. 5; simulation studies are reported in Sect. 6, as an illustrative example, a real data analysis is described in Sect. 8; it is followed by conclusion and future directions in Sect. 1.

2 Existing work in inter-rater agreement and hierarchical effects models

Several methods and statistical models that aim to account for inter-rater variability have appeared in the literature (Nelson and Edwards 2015; Gwet 2008; Cicchetti 1976). Models such as the Cultural Consensus Theory (Oravecz et al. 2014), which explores individuals’ shared cultural knowledge, have been proposed to capture unobserved agreement and similar trends in groups of raters (Dressler et al. 2015). Two related but different concepts have been introduced: inter-rater agreement and inter-rater reliability. The former refers to the extent to which different raters’ evaluations are concordant (i.e, they assign the same value to the same item), whereas the latter refers to the extent to which their evaluations consistently distinguish different items (Gisev et al. 2013). In other words, while the inter-rater agreement indices quantify the observed concordance, the inter-rater reliability indices aim to quantify the consistency of their evaluations (e.g., despite assigning different values, the distinction among the items is the same). The present work focuses on latent agreement intended as homogeneity in the evaluators’ point of view (Tang et al. 2022; Esteban and Ray 1994).

A number of methods are available to quantify both inter-rater agreement and inter-rater reliability. Indices for pairs (Nelson and Edwards 2008; McHugh 2012) or multiple raters (Jang et al. 2018), for binary (Gwet 2008), polytomous (Nelson and Edwards 2015) or continuous (Liljequist et al. 2019) ratings are commonly used in different contexts. Recent developments using the framework of Hierarchical Linear Models (i.e., HLMs) provide a more accurate estimation of inter-rater reliability accounting for different sources of variability (Martinková et al. 2023).

Despite the popularity of work on this issue, less attention has been paid to possible latent similarities of the raters (Wirtz 2020). From a psychometric point of view, it can be appealing to assess the extent to which different raters might be heterogeneous in their ratings (Martinková et al. 2023; Bartoš et al. 2020; Koudenburg et al. 2021; Casabianca et al. 2015; Nelson and Edwards 2015; Gisev et al. 2013; DeCarlo 2008; Gwet 2008; Nelson and Edwards 2008).

There are certain situations in which the subjective opinion of the raters is very informative; as a simple example, the type of teachers’ training or experience can be thought of as latent states which affect a range of evaluations differently (Childs and Wooten 2023; Barneron et al. 2019; Bonefeld and Dickhäuser 2018; Dee 2005). Sometimes the major interest is not on the mere consistency between raters, but on their actual evaluation. For instance, in a selection process the actual students’ scores are very relevant for their admission (Zupanc and Štrumbelj 2018). Even if a strict standardization of teachers evaluation is not feasible, some statistical methods can tackle these issues. In all these contexts the assessment of uniformity among raters could be useful and would provide further information about the rating process.

To this aim, existing work, e.g. Martinková et al. (2023); Nelson and Edwards (2015); Casabianca et al. (2015); Hsiao et al. (2011); Cao et al. (2010); DeCarlo (2008), adopts an hierarchical approach where correlations between ratings are naturally captured through an hierarchical Bayesian model. Each rater \(i = 1,\ldots ,I\) is assumed to be rating a different set of items \({\mathscr {J}}_i \in {\mathscr {J}}\), \({\mathscr {J}}_i \cap {\mathscr {J}}_{i+1} = \emptyset\).Footnote 2 The rating \(y_{ij}\) of the item \(j \in {\mathscr {J}}_i\) carried out by rater \(i = 1,\ldots ,I\), is modelled as follows:

Here \({\textbf{x}}_{ij}\) and \({\textbf{z}}_{ij}\) are, respectively, \(1 \times p\) and \(1 \times q\) vectors of distinct explanatory variables of rating \(y_{ij}\); \({\beta }\) is a \(p \times 1\) vector of non varying effects and \({\textbf{u}}_i\) is a \(q \times 1\) vector the hierarchical effects of rater i.

In the standard HLM formulation, the following distribution is specified for the rater effects:

Where \(N_q(\cdot )\) stands for a q-variate normal distribution; Here \({\textbf{0}}\) is a \(q \times 1\) zero vector and \(\pmb {\varSigma }\) is a \(q \times q\) positive semi-definite covariance matrix. For the hierarchical normal linear model \(\varepsilon _{ij} \sim N(0,\sigma _\varepsilon^2 )\), with \({\textbf{u}}_i\) and \(\varepsilon _{ij}\) typically assumed independent. The distribution of each vector-valued hierarchical effects \({\textbf{u}}_i\) is then assumed to follow some distribution and captures variability across different raters.

In the above mentioned example, \(y_{ij}\) is the score given to student \(j \in {\mathscr {J}}_i\)’s essay by teacher i. Since an observational approach is adopted (i.e., each raters rates a different set of items), the effect of the student is not identifiable (each student is rated only by one rater). Assuming that students effects are i.i.d., their variance is added to that of the residuals. In the univariate case (i.e., when \(z_i=1\), varying intercept model) the relevance of the raters effect \(u_i \sim N(0,\sigma ^2_u)\), where \(\sigma ^2_u>0\) is the variance, might be quantified through the intraclass correlation coefficient (i.e., ICC):

It is the ratio between the variance of the raters effect and the total variability of the model, i.e., the proportion of variance of the score due to the teacher, which reflects the correlation of two ratings given by the same rater. Smaller values of ICC indicate a small effect of the rater on the student’s score.

3 Dirichlet process mixture and hierarchical effects

The HLM assumption regarding the distribution of the hierarchical effects is crucial in characterising different possible clusters or latent patterns of heterogeneity among raters (Dorazio 2009). The common Gaussian assumption for the distribution of the these effects may obscure skewness and multimodality present in the data. A more flexible specification of the hierarchical effects distribution can help capture more complex patterns of variability. Models that account for skew-normal (Lin and Lee 2008), skew-normal-cauchy (Kahrari et al. 2019), multivariate t (Wang and Lin 2014), extreme values (McCulloch and Neuhaus 2021) effects distributions have been proposed (Schielzeth et al. 2020). Nevertheless, they poorly account for the possible presence of multimodality in those distributions. In this regard, a mixture distribution has been proposed as a potential solution (Heinzl and Tutz 2013; Kyung et al. 2011; Kim et al. 2006). Each mode can then correspond to a cluster with a similar pattern (e.g., the same deviation from the population mean). Several works have explored this issue in the past two decades (Villarroel et al. 2009; Tutz and Oelker 2017). For instance, Verbeke and Lesaffre (Verbeke and Lesaffre 1996) proposed a standard normal mixture distributions for the hierarchical effects. James and Sugar (James and Sugar 2003) explored this approach in the context of functional data. De la Cruz-Mesía (De la Cruz-Mesia and Marshall 2006) proposed a mixture distribution for non-linear hierarchical effects in modelling continuous time autoregressive errors. A heteroscedastic normal mixture model in the hierarchical effects distribution was considered in linear (Komárek et al. 2010) and generalized hierarchical linear (Komárek and Komárková 2013) models. Despite the breadth of specifications for the mixture model, in all the aforementioned models, the number of mixture components needs to be specified. Although this may not be a critical assumption in certain contexts, it may be questionable or detrimental in settings with a lack of a priori information on the level of multimodality, especially in cases where the characterisation of the multimodality is of direct interest.

When the number of components of the mixture is unknown, a Dirichlet Process Mixture (hereafter DPM) for the hierarchical effects is a natural extension (Gill and Casella 2009; Navarro et al. 2006; Verbeke and Lesaffre 1996). This nonparametric extension allows the model to capture an unknown marginal distribution of the hierarchical effects through the Dirichlet Process (Antoniak 1974; Ferguson 1973). Modeling the hierarchical effect \({\textbf{u}}_i\) as an infinite mixture of some distribution family (e.g., Normal) enables the model to account for possible multimodality without specifying the number of mixture components. Some existing works adopted this nonparametric approach and pose a DPM prior over the hierarchical effects (e.g., Heinzl and Tutz (2013); Heinzl et al. (2012); Kyung et al. (2011)).

The HLM of Eq. (1) is then specified in the same way as before through:

The following hierarchical prior distribution is placed over the raters effects:

where \(\mathbf {\mu }_i\) and \({\textbf{Q}}_i\) are, respectively, the \(q \times 1\) a location parameter vector and the \(q \times q\) positive semi-definite covariance matrix for the hierarchical effects \({\textbf{u}}_i\) of rater \(i=1,\ldots ,I\). Here \(\varepsilon _{ij} \sim N(0,\sigma _\varepsilon^2 )\), \(i = 1,\ldots ,I\), \(j \in {\mathscr {J}}_i\); \({\textbf{u}}_i\) and \(\varepsilon _{ij}\) are assumed independent as before.

3.1 DPM as a generative process for the hierarchical effects

Here, \(DP(\alpha , G_0)\) is a DPM with \(\alpha >0\) precision parameter and base measure \(G_0\). These specify the mixing distribution G (Heinzl and Tutz 2013), so that each realization of G is almost surely a discrete probability measure on the space \((\Omega , {\mathscr {F}})\) (Blackwell 1973). Thus, since the DPM is a discrete generative process with non-zero probability of ties, some of the realizations might be identical to each other with probability determined by the precision parameter \(\alpha\). Therefore, specifying this hierarchical model on the components location parameters \(\mathbf {\mu }\) induces a clustering in the hierarchical effects (i.e., the raters) (Kyung et al. 2011); hierarchical effects belonging to the same c-th cluster with location parameter \(\mathbf {\mu }_c\) are then independent and identically distributed. In other words, in the context of the HLM, the DPM specifies the component-specific location parameter \(\mathbf {\mu }_c\), so that each rater has each has their own unique hierarchical effects value \({\textbf{u}}_i\) (Heinzl and Tutz 2013).

The DPM is a generative process commonly used in conjunction with a parametric family of distributions (e.g., Normal, Poisson), and the base measure parameter \(G_0\) denotes this specified distribution. Thus, for any element \(A_n\), \(n=1,\ldots ,N\), of \({\mathscr {A}}\), a finite measurable partition of \(\Omega\),

where \(Dir(\cdot )\) stands for the Dirichlet distribution, and \(G_0\) defines the expectation of GFootnote 3, therefore they have the same support. The parameter \(\alpha\), a multiplicative constant of the vector-valued Dirichlet parameter, determines the probability of a new realization of the process to be different of the previous ones (Blackwell and MacQueen 1973). In other words, it governs the probability that the DPM generates a new cluster. Formally, the generative property of the DPM is that, for \(i=1,\ldots ,I\), with I being for instance the total number of raters:

the probability that the new I-th realization \(\mu _I\) of G assumes a different values than the previous ones is described by the well known Pólya Urn Model:

with \(C \in {\mathbb {N}}\) being the number of already observed distinct clusters among the realizations of G (i.e., the number of the different values of \(\mathbf {\mu }\) already observed, in other words the number of clusters) and \(r_c\) counts the elements in the c-th cluster. Basically, since G is a discrete probability measure, the C clusters represent different point masses (or different sets of point masses in the multivariate case) and \(r_c\) is the frequency of each of them. Considering the conditional distribution of \(\mu _I\) as a mixture distribution, the probability that \(\mathbf {\mu }_I\) is a new point mass sampled from \(G_0\) is proportional to \(\alpha\), the probability that it is equal to the already observed c-th point mass is proportional to \(r_c\). In this notation, the role of \(\alpha\) in sampling a new (not already observed) value of \(\mathbf {\mu }_I\) (i.e., a new point mass, a new cluster) is interpretable.

To this regard, Sethuraman (1994) described a stick-breaking construction of the DP.Footnote 4 In this formulation G is equivalent to:

where \(\delta\) is the Dirac measure on \(\mathbf {\mu }_c\) and \(\mathbf {\mu }_c \,{\mathop {\sim }\limits ^{iid}}\, G_0\) is assumed. The weights \(\{\pi _c\}_{c=1}^{\infty }\) of the infinite mixture result from the stick-breaking procedure as follows:

with \(Be(\cdot )\) indicating the Beta distribution and \(\{\nu _c\}_{c=1}^{\infty }\) being reparameterized weights. It is even more explicit in this construction that the random measure G is a mixture of point masses. The distribution of the random weights \(\mathbf {\pi }\) (i.e., the probability of different allocation to the clusters) is governed through the stick-breaking process by the precision parameter \(\alpha\). Further details are given in the Appendix.

In practice, one of the established approximations to the stick-breaking process is to truncate the infinite number of components to a large, finite value:

for large enough value of R (Tutz and Oelker 2017; Gelman et al. 2013).

In summary, the hierarchical effects distribution considering a stick breaking construction of the DPM might be then specified as follow:

With this nonparametric model specification, latent common tendencies among raters might emerge through the components of the model (Heinzl and Tutz 2013; Heinzl et al. 2012; Kyung et al. 2011). The Bayesian approach allows us to characterize the shape of the distribution of the rater effects, as well as explore the effect of uncertainty on these (Gelman et al. 2013). For example, in an applied context, strict vs. accommodating are very common latent states that drive students’ essays grading process (Zupanc and Štrumbelj 2018; Briesch et al. 2014; Dee 2005).

4 Prior distributions and estimation procedure

The DPM mixture model has been well studied in the literature in a variety of different settings, especially within Bayesian inference (Canale and Prünster 2017; Müller et al. 2015). Several sampling schemes have been proposed both in the Bayesian context (e.g., Canale and Dunson (2011); Dahlin et al. (2016); Kyung et al. (2011)) and in the frequentist one (e.g., Tutz and Oelker (2017)). Within the Bayesian framework, Gibbs sampling (Dahlin et al. 2016), slice sampler (Kyung et al. 2011; Walker 2007), Sequential Monte Carlo algorithms (Ulker et al. 2010), split-merge algorithms (Bouchard-Côté et al. 2017), have been proposed among others.

In this work, the model specification permits the use of conjugate priors, so that a blocked Gibbs sampling can be used (Heinzl and Tutz 2013; Heinzl et al. 2012; Kyung et al. 2011)., with details shown below.

4.1 Prior specification

Several of the parameters in the model have conjugate prior distributions which allow easier computation.

-

For the effects \({\beta }\) the following hierarchical prior is assigned:

$$\begin{aligned}&{\beta }|{\textbf{b}}_{\beta }, {\textbf{B}}_{\beta } \sim N_p({\textbf{b}}_{\beta }, {\textbf{B}}_{\beta })\\&{\textbf{b}}_{\beta } \sim N_p({\textbf{b}}_0, {\textbf{S}}_0)\\&{\textbf{B}}_{\beta }=diag(\sigma _{\beta _1}^2,\ldots ,\sigma _{\beta _p}^2)\\&\sigma _{\beta _m}^2 \,{\mathop {\sim }\limits ^{iid}}\, IG(a_{\beta _0}, b_{\beta _0} ) \end{aligned}$$for \(m =1,\ldots ,p\), where p is the number of covariates associated to the effects \({\beta }\). Here, \(IG(\cdot )\) stands for inverse-gamma with shape parameters \(a_{\beta _0}>0\) and rate parameters \(b_{\beta _0}>0\). Where \({\textbf{b}}_0\) and \({\textbf{S}}_0\) are, respectively, the \(p \times 1\) vector of location parameters and the \(p \times p\) positive semi-definite covariance matrix of \({\textbf{b}}_\beta\) (i.e, the location parameter vector of the non varying effect \({\beta }\)); \({\textbf{b}}_\beta\) and \({\textbf{S}}_\beta\) are, respectively, the \(p \times 1\) location parameter and the \(p \times p\) positive semi-definite covariance matrix for \({\beta }\) (i.e., the non varying effect). The set \(\{{\textbf{b}}_0, {\textbf{S}}_0,a_{\beta _0}, b_{\beta _0}\}\) of the hyperparameters are specified by the user. A diagonal matrix is suggested for \({\textbf{S}}_0\) as showed by Heinzl et al. (2012).

-

A diagonal structure for the \(q \times q\) prior covariance matrix \({\textbf{Q}}_r\) for the hierarchical effects is specified as follows for each mixture component \(r = 1,\ldots ,R\) and each each related covariate \(d =1,\ldots , q\):

$$\begin{aligned} {\textbf{Q}}_r= & {} diag(\sigma _{Q_{1r}}^2,\ldots ,\sigma _{Q_{qr}}^2) \end{aligned}$$For the base measure \(G_0\)Footnote 5 and the precision parameter \(\alpha\) of the DP mixture model the following priors are specified:

$$\begin{aligned} G_0= & {} N_q(\mathbf {\mu }_0,{\textbf{D}}_0) \times IG(a_{Q_0},b_{Q_0})^q \\ \mathbf {\mu }_0\sim & {} N_q({\textbf{m}}_0, {\textbf{W}}_0)\\ {\textbf{D}}_0= & {} diag(\sigma _{{D_0}_1}^2,\ldots ,\sigma _{{D_0}_q}^2)\\ \sigma _{{D_0}_d}^2\sim & {} IG(a_{D_0},b_{D_0})\\ \alpha\sim & {} Ga(c_0^{\alpha },C_0^{\alpha }) \end{aligned}$$for \(d=1,\ldots ,q\), where q is the number of covariates associated to the hierarchical effects \({\textbf{u}}_i\), and for \(a_{D_0},a_{Q_0}>0\) and \(b_{Q_0}, b_{D_0}>0\). Here \(Ga(\cdot )\) stands for Gamma distribution with \(c_0^{\alpha }>0\) and \(C_0^{\alpha }>0\) respectively the shape and the rate parameters. Where \({\textbf{m}}_0\) and \({\textbf{W}}_0\) are, respectively, the \(q \times 1\) location parameter vector and the \(q \times q\) positive semi-definite covariance matrix of \(\mathbf {\mu }_0\) (i.e. the location parameter of the base measure \(G_0\)); \(\mathbf {\mu }_0\) and \({\textbf{D}}_0\) are, respectively, the \(q \times 1\) location parameter vector and the \(q \times q\) positive semi-definite covariance matrix of the base measure \(G_0\). The set \(\{a_{Q_0},b_{Q_0}, {\textbf{m}}_0, {\textbf{W}}_0,a_{D_0}, b_{D_0}, c_0^{\alpha }, C_0^{\alpha }\}\) of the hyperparameters need to be fixed. A diagonal structure is suggested for \({\textbf{W}}_0\) as above.

-

The following prior is assigned to the noise variance:

$$\begin{aligned} \sigma _\varepsilon\sim & {} Ga(a_\varepsilon ,b_\varepsilon ) \end{aligned}$$with \(a_\varepsilon >0\) and \(b_\varepsilon >0\) hyperparameters fixed by the user as well.

4.2 Posterior sampling

Since most of the parameters in the model have conjugate prior distributions, a blocked Gibbs sampling algorithm was used for the posterior sampling (Ishwaran and James 2001).

The parameter vector for the model is \(\theta =\{ {\beta }, {\textbf{b}}_\beta ,{\textbf{B}}_\beta , \mathbf {\mu }_0,{\textbf{D}}_0, {\textbf{Q}}, \sigma _{\varepsilon }, \alpha , \mathbf {\pi }, {\textbf{c}} \}\) which is updated at each state of the Markov chain of the Gibbs sampling. Here \({\textbf{c}}= (c_1,\ldots ,c_I)\) is the allocation parameter of the raters to the clusters and. Further details on the following sampling are given in the Appendix. The closed-form posteriors are as follows.

-

1.

Update parameters referring to effects \({\beta }\):

$$\begin{aligned} {\beta }| {\textbf{b}}_\beta , {\textbf{B}}_\beta , {\textbf{u}}, \sigma _\varepsilon , {\textbf{y}}\sim & {} N_p({\textbf{b}}_\beta ^{*},{\textbf{B}}_\beta ^{*}) \end{aligned}$$For each covariate \(m=1,\ldots ,p\) associated with a non varying effect \(\beta _m\),

$$\begin{aligned} b_{\beta _m}|\sigma _{\beta _m}^2, \beta _m\sim & {} N \left( \left( \frac{1}{\sigma _{\beta _m}^2} + \frac{1}{s_{0_m}^2}\right) ^{-1} \left( \frac{\beta _m}{\sigma _{\beta _m}^2} + \frac{m_{\beta _m}}{s_{0_m}^2} \right) , \left( \frac{1}{\sigma _{\beta _m}^2} + \frac{1}{s_{0_m}^2}\right) ^{-1}\right) \\ \sigma _{\beta _m}^2| b_{\beta _m}, \beta _m\sim & {} IG\left( a_{b_0} + \frac{1}{2}, \frac{1}{2} (\beta _m-b_{\beta _m})\right) \end{aligned}$$ -

2.

Update parameters referring to hierarchical effects:

-

For each rater \(i = 1,\ldots ,I\):

$$\begin{aligned} {\textbf{u}}_i|\mathbf {\mu }_{c_i}, \mathbf {\mu }_0, {\textbf{Q}}_0, {\beta }, \sigma _\varepsilon , {\textbf{y}}_i\sim & {} N_q(\mathbf {\mu }_{c_i}^*,{\textbf{Q}}_{c_i}^*), \end{aligned}$$where \(\mathbf {\mu }_{c_i}\) is the location parameter vector of the cluster where the i-th rater is allocated.

-

For each component \(r=1,\ldots ,R\) of the truncated mixture: - If \(\not \exists i: c_i = r\) (if no rater are currently allocated into cluster r), for each covariate \(d =1,\ldots ,q\) associated to an hierarchical effect (independently):

$$\begin{aligned} \mathbf {\mu }_r | \mathbf {\mu }_0, {\textbf{D}}_0\sim & {} N_q(\mathbf {\mu }_0, {\textbf{D}}_0) \\ \sigma _{Q_{dr}}^2\sim & {} IG(a_{Q_0},b_{Q_0}) \end{aligned}$$- If \(\exists i: c_i = r\) (if at least one rater assigned to component r), for each covariate \(d =1,\ldots ,q\) associated to an hierarchical effect (independently):

$$\begin{aligned} \mu _{r_d} | \sigma _{Q_m}^2, \mu _{0_r}, \sigma _{D_{0_m}}^2, {\textbf{u}}, {\textbf{c}}\sim & {} N(\mu _{0_r}^*, \sigma _{D_{0_m}}^{2*}) \\ \sigma _{Q_{dr}}^2|\mathbf {\mu }, {\textbf{u}}\sim & {} IG \left( a_{Q_0}^*, b_{Q_0}^* \right) \end{aligned}$$Essentially, at each iteration t, if the r-th cluster is empty the component location parameters \(\mu _r\) are sampled from the prior as suggested by (Gelman et al. 2013), otherwise they are drawn from the above mentioned closed-form posterior.

-

Each rater \(i =1,\ldots ,I\) is re-allocated into a cluster:

$$\begin{aligned} c_i| \mathbf {\pi }, \mathbf {\mu }, {\textbf{Q}},{\textbf{u}}_i\sim & {} Cat(\mathbf {\omega }_i^*) \end{aligned}$$where \(Cat(\cdot )\) stands for Categorical distribution, and \(\mathbf {\omega }_i^*\) is reported in the Appendix. A truncated approximation for the DPM mixture model was used (Gelman et al. 2013; Heinzl et al. 2012) for a large value of R. The stick-breaking construction was used to generate the mixture weights \(\pi _{1:R}\).

-

For each component \(r = 1,\ldots ,R-1\):

$$\begin{aligned} v_r|\mathbf {\phi }, \alpha\sim & {} Be \left( 1+c_r,\alpha +\displaystyle \sum _{l=c+1}^{R} r_l \right) \end{aligned}$$and \(v_R=1\) for the last cluster. Here \(c_r\) is the number of raters assigned to the cluster r, and \(r_l\) is the number of raters assigned to the cluster l.

-

The precision parameter is updated as follows:

$$\begin{aligned} \alpha |v_1,\ldots ,v_{R-1}\sim & {} Ga\left( R-1+a_\alpha ,b_\alpha -\displaystyle \sum _{c=1}^{R-1} ln(1-v_r) \right) \end{aligned}$$ -

For each covariate \(d =1,\ldots ,q\) associated with an hierarchical effect the base measure parameters are updated:

$$\begin{aligned} \mu _{0_d}|\sigma _{D_{0_d}}^2, \mathbf {\mu }\sim & {} N\left( \left( \frac{I}{\sigma _{D_{0_d}}^2} + \frac{1}{\sigma _{W_{0_d}}^2} \right) ^{-1} \left( \frac{I}{\sigma _{D_{0_d}}^2} {\overline{\mu }}_d + \frac{m_{0_d}}{\sigma _{W_{0_d}}^2} \right) , \left( \frac{I}{\sigma _{D_{0_d}}^2} + \frac{1}{\sigma _{W_{0_d}}^2} \right) ^{-1} \right) \end{aligned}$$where \({\overline{\mu }}_d\) is the mean of the location parameters related to the d-th covariate over all the clusters.

$$\begin{aligned} \sigma _{D_{0_m}}^2|\mu _{0_m},\mathbf {\mu }\sim & {} IG \left( a_{D_0} + \frac{I}{2}, b_{D_0}+\frac{1}{2}\displaystyle \sum _{i=1}^{I} (\mu _{i_m}-\mu _{0_m})^2 \right) \end{aligned}$$

-

-

3.

Update the error variance:

$$\begin{aligned} \sigma _\varepsilon ^2|{\beta }, {\textbf{u}}, {\textbf{y}}\sim & {} IG \left( a_\varepsilon + \frac{1}{2}I |{\mathscr {J}}|, b_\varepsilon +\frac{1}{2}\displaystyle \sum _{i=1}^{I} \displaystyle \sum _{j \in {\mathscr {J}}_i}\left( y_{ij}-{\textbf{x}}_{ij}' \beta -{\textbf{z}}_{ij}' {\textbf{u}}_i \right) ^2 \right) . \end{aligned}$$Here \(|{\mathscr {J}}|\) is the cardinality of the set of all the rated items \({\mathscr {J}}\), it equals the number of observations.

5 The nonparametric \(\lambda\) index

The marginal posterior distribution of the hierarchical effects in the model outlined above captures information about the polarization or disagreement among raters (on the assumption that the model captures the data adequately). The ICC (i.e., intraclass correlation coefficient, (Martinková et al. 2023; Bartoš et al. 2020; Agresti 2015; Gelman et al. 2013)) might adequately quantify inter-rater variability if the normal distributional assumption of the rater hierarchical effect holds. Two assumptions are made computing the standard ICC considering a normal distributed hierarchical effect. Firstly, that the raters are sampled from the same population. Secondly, that possible different latent trends among raters are not interesting or eventually regarded as disagreement ratings. This might be a good first approximation of the rating process. Nevertheless, when more detailed considerations are needed, or subtle heterogeneity among raters is expected, the standard ICC might be less informative and inaccurate. Besides the latter issue, further information about the shape of the posterior might be quantified. For instance, in presence of a bimodal hierarchical effects distribution with two very distant modes (for example, when opinions are polarised), considering the posterior distribution of \(\sigma _u\) as an index of variability among raters might be misleading.

Several indexes have been proposed to quantify group opinion polarization (e.g., (Tang et al. 2022; Koudenburg and Kashima 2022; Koudenburg et al. 2021; Esteban and Ray 1994)) and to measure distribution bimodality (e.g., the Ashman’s D (Forchheimer et al. 2015) or the bimodal separation index (Zhang et al. 2003)). The strong assumptions behind their use limit them to be valid options only in the parametric context or when the number of clusters is known. A model based nonparametric index is here proposed to overcome these limitations.

To this end the full estimated distribution of \({\textbf{u}}\) resulting from the model might be useful. At each iteration t, the density of \({\textbf{u}}\) is given by the corresponding mixture model given the parameters at iteration t. Following the formulation of (Gelman et al. 2013), the set of modes and antimodes (i.e., the lowest frequent value between two modes) is identified. When the distribution of \({\textbf{u}}\) is multimodal, the latent polarization (disagreement) \(\lambda\) is then defined as the log ratio between the mean density of the modes and the that of the anti-modes, it is zero when it is unimodal:

Where M is the number of modes \(\gamma _m\), \(m=1,\ldots ,M\) and the number of antimodes \(\zeta _m\), \(m=1,\ldots ,M-1\) of the density of \({\textbf{u}}\); \(f_{u}(\cdot )\) denotes the density at a specific point. Larger values of \(\lambda\) indicate strongly multimodal distribution of the hierarchical effects, whereas smaller values are evidence of weak multimodality, thus the estimated hierarchical effects are less concentrated.

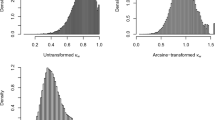

As it is shown in Fig. 1 larger values of \(\lambda\) indicate distribution polarization, whereas smaller values indicate a less concentrated and more spread density distribution. The \(\lambda\) index is strongly affected by both location and scale parameters of the mixture components. For this reason it might be very informative in presence of multimodal distributions. Assuming such a raters’ group polarization as a result of low latent agreement among raters, the \(\lambda\) index might be a useful diagnostic tool.

Different values of \(\lambda\) indicate different polarization levels. Three different values of \(\lambda\) were computed for three different mixture distributions, respectively. The realizations of these distribution are here referred to as u. Black dotted lines indicate the mean mode density, red dotted lines indicate the mean antimode density. a High polarization: the mixture components are highly and clearly separate, the mean density values of the modes is far larger then the mean density value of the antimodes; the log-density ratio between these two quantities is \(\lambda =2.55\). b Medium polarization: the mixture components are clearly separated, but the mean density values of the modes is closer to the mean density value of the antimodes; the log-density ratio between these two quantities is \(\lambda =0.81\). c Low polarization: the mixture components are not clearly separated, the mean density values of the modes is very close to the mean density value of the antimodes; the log-density ratio between these two quantities is \(\lambda =0.19\). d No polarization: the mixture distribution has only one mode (i.e., \(\gamma _1\)) and \(\lambda =0\) since the number of mode is not greater then one

6 Simulation studies

The following simulations aim to evidence how the values of \(\lambda\) varying across different polarization settings. The first simulation investigates the role of the precision parameter \(\alpha\) and the variance of the mixture components in determining the values of \(\lambda\). The second one shows the complementary role of \(\lambda\) in the inter-rater agreement analysis and how this index varies across different settings.

6.1 Simulation 1: DPM and \(\lambda\)

6.1.1 Simulation setting

The first simulation study explores the role that the precision parameter of the Dirichlet Process and the variance of the components have in determining the values of the log-density index \(\lambda\). For simplicity purpose the mixture components are assumed to have the same variance Q in this simulation, so the component subscription will be omitted. The objective is to study the effect of \(\alpha\) and Q, on \(\lambda\) conditional on all the other variables. Since the former has a crucial role in the determination on the point masses of G, and thus the concentration of its realizations, an inverse relation between \(\alpha\) and \(\lambda\) is expected if Q is fixed. Likewise, an inverse relation between Q and \(\lambda\) is expected if \(\alpha\) is fixed. It is interpretable as an index of the sharpness of the modes. For this reason both the precision parameter of the DPM and the variance of its components are expected to have an effect on \(\lambda\). Controlling for Q (i.e., keeping it fixed), the expected relation is: the smaller \(\alpha\), i.e. the precision of the DPM mixture, the larger \(\lambda\), i.e. the relative density around the modes; controlling for \(\alpha\) (i.e., keeping it fixed), the expected relation is: the smaller Q, i.e. the variance of the components of the DPM, the larger \(\lambda\). The parameters of the base measures \(G_0\) have a non-negligible role in determining \(\lambda\), so in this section focus is devoted to the relation between the precision parameter \(\alpha\), the mixture components variance Q and the index \(\lambda\). Indeed, in all the study simulations the values of the other parameters involved in the DPM have been kept fixed across the scenarios.

Data generating process

The experimental design is as follows. For 4 different values of \(\alpha = (0.1, 1, 5, 20)\) and 2 different values of \(Q = (0.1, 1.5)\) a set of independent observations \(u=1,\ldots ,n\) are drawn from the following DPM:

Where \(\mu _c\) and \(\pi _c\) are the location parameter and the mixing proportion of the component c, respectively; \(G_0\) is the base measure; and \(\nu _c\) is the parameter of the stick-breaking. Following the above mentioned truncated stick-breaking construction, here R is the maximum number of observable cluster. Across the eighth scenarios the following quantities are assigned: the number of observations \(n=500\), the maximum number of clusters \(R=50\), the base measure \(G_0:\) \(U(-6,6)\). Here, \(U(\cdot )\) stands for uniform distribution. The use of these distributions in the present experimental context aims to highlight the effect of different values of \(\alpha\) and Q on \(\lambda\) in a more evident and interpretable manner.

6.1.2 Results

As shown in Tables 1 and 2 as \(\alpha\) increases, and so the number of point masses of G increases as well, \(\lambda\) decreases. The density of the observations \(u=1,\ldots ,n\) is concentrated around few point masses (few modes) for lower value of \(\alpha\) and is spread out the larger. Note also the change of density of the antimodes. As expected, it is proportional to the precision parameter in a positive fashion. As the observations are more spread as \(\alpha\) increases, there are fewer intervals in the support with relative small density: \(\lambda\) index decreases at larger values of \(\alpha\) (column-wise Tables 1 and 2). A similar proportional relation is observed between the variance of the mixture components Q and \(\lambda\) when \(\alpha\) is kept fixed (row-wise Tables 1 and 2). Smaller values of both \(\alpha\) and Q result in a high polarized distribution of \(u=1,\ldots ,n\) and correspond to larger values of \(\lambda\). Whereas larger values of both \(\alpha\) and Q result in a low polarized distribution and correspond to smaller values of \(\lambda\). It is is an index of how spread the density is over the support of the hierarchical effects.

From an interpretative point of view, \(\lambda\) indicates the degree of overlap between the infinitely many clusters. It might be informative of the separation between them. Since this quantification is based on a non-parametric density, \(\lambda\) is not directly related to the number of the group, or to the cluster location. It indicates the degree to which the independent observations drawn from a DPM overlap; the variance of the cluster Q also plays a crucial role. The index thus quantifies the combined effect of the parameters to assess the extent to which possible different opinions (i.e., the modes) might be strongly shared among the raters (i.e., the modes are sharp pick of density). To this regard, \(\lambda\) is a polarization index in presence of heterogeneity. The higher the polarization levels, the larger the values of the index. The practical interpretation and the operational decisions must be guided by the field of application.

6.2 Simulation 2: inter-rater agreement and \(\lambda\)

6.2.1 Simulation setting

The following simulation study aims to highlight the complementary role of \(\lambda\) as an additional summary metric in inter-rater agreement analysis. The varying intercept parametrization is hereafter adopted as univariate case for the raters effects. To this aim the standard modelling approach (i.e., the normal distributed varying intercept and the resulting ICC) is compared with the nonparametric proposed above (i.e., the DPM prior over the varying intercept and \(\lambda\)). The experiment evaluates the performance of both standard ICC and \(\lambda\) in the presence of heterogeneity between raters’ evaluations due to a multimodal distribution of the hierarchical effects.

Data generating process

Three experimental scenarios were planned, in which a different clustering on the raters’ intercept parameter was specified in the generative model. In each scenario the rater’s intercept \(u_i\) was generated from a bimodal Gaussian mixture. The location parameters of the mixture components were fixed across the scenarios, \(\mu _1=-3\) and \(\mu _2=3\); whereas decreasing values (1, 0.5, 0.1) were assigned to the components scale parameters \(Q_1\) and \(Q_2\) (see Table 3). This resulted in different polarization scenarios. The mixture components were kept equiprobable (\(\pi _c=0.5\)), \(c \in \{1,2\}\) throughout. The number of raters \(I=100\) and the number of items \(J=250\) were fixed across the scenarios. One continuous covariate \(x_{ij}\) with an effect \(\beta =2\) was used and it was the same across the scenarios.

Standard model approach

The following priors were specified for the standard hierarchical effect model (i.e., the varying intercepts are assumed to be i.i.d. normal distributed):

for \(i =1,\ldots ,I\); \(Exp(\cdot )\) stands fro the exponential distribution and \(\beta\) is the non-hierarchical effect, \(\sigma _u\) and \(\sigma _\varepsilon\) are the hierarchical effect and the noise variances parameters, respectively. A logic of complexity penalization was used in the choice of the above mentioned priors distributions (Simpson et al. 2017). The posterior of each standard hierarchical effect model were sampled using NUTS-Hamiltonian MCMC in Stan language (Stan Development Team 2022).

Nonparametric model approach

The set of priors introduce in Sect. 4 were elicited for the DPM models with the following hyperparameters as suggested by (Heinzl et al. 2012): \({\textbf{b}}_0= {\textbf{0}}, {\textbf{S}}_0= 1000{\textbf{I}}_p\), \(a_{\beta _0}=0.005, b_{\beta _0}=0.005, {\textbf{m}}_0= {\textbf{0}}\), \({\textbf{W}}_0=100{\textbf{I}}_q, a_{D_0}=0.5, b_{D_0}=0.5\), \(a_{Q_0}=0.001, b_{Q_0}=0.001, a_\alpha =2, b_\alpha =2,a_\varepsilon =0.005, b_\varepsilon =0.005\). As result of some preliminary analysis, a dense grid of 481 equally-spaced values from − 12 to 12 (i.e., with a fixed interval of 0.05) was used to monitoring the mixture density of the nonparametric varying intercept \(u_i\) at each iteration. The posterior distribution of the nonparametric hierarchical effect is obtained as the set of the mean density of each point of the grid over the iterations (Gelman et al. 201320132013).

In all the computations for both the models 55,000 iteration with 5000 burn-in were used, the Markov chains were thinned the by a factor of 50, resulting in samples of size 1000 (Heinzl et al. 2012).

6.2.2 Results

As shown in Table 4 the standard model (i.e., that in which hierarchical raters intercepts are assumed to be i.i.d. normally distributed) due to the rigid distributional assumption of the hierarchical parameters is not able to capture the possible multimodal distribution and it resulted in a large value of the hierarchical effect variance \(\sigma _u\) (see Table 4 and Fig. 2). As a result, the ICC didn’t capture almost any difference among the three different scenarios (see Table 4 and Fig. 3). On the contrary, the DPM model, due to the flexible nonparametric specification of the intercepts prior, showed a good performance. As evident from Fig. 4 and Table 4 the DPM model was far more able to reproduce the data generating process. The different mixture used to generate the data emerged clearly from the posterior of the grid adopted to monitoring \({\textbf{u}}\) (Fig. 4). Since the DPM model properly learn the multi-modalities of the raters intercepts density, the index \(\lambda\), being based on the ratio between the mean density of the modes and that of the antimodes present in the grid at each iteration, showed to be able to differentiate the three different polarization scenarios. It gives some interesting information regarding the shape of the non-parametric mixture distribution. The 95% credible interval of \(\lambda\) (see Table 5 and Fig. 5) as estimated in the three different scenarios highlighted different degrees of amplitude and separation (i.e., different degrees of polarization) along them. The index \(\lambda\) is computed as a logarithm of the ratio of the average mode density against the density of the antimodes at each iteration t of the posterior sampler. So, in the first scenario, the values of the 95% credible interval are smaller, indicating that in most of the iterations the difference between the mean density at the modes and that of the antimodes was very small. In terms of the third scenario, \(\lambda\) assumed rather larger values along the iterations as evidence that the mode density is far larger than that of the antimodes. In other words, the rater clusters were separated and clearly distinct. The parameters of the DPM are the most influential with regard to \(\lambda\). Specifically, the location parameters \(\mu _c\), \(c=1,\ldots ,R\), and the scale parameters \(Q_c\), \(c=1,\ldots ,R\), showed to have a combined effect of the proposed index. The 95% HDP intervals of the parameters of both the DPM prior model and that with normal distributional assumption are reported in Tables 6 and 7, respectively.

95% HPD intervals of \(\sigma _u\) from the standard models (i.i.d. normal distributed varying intercepts): a Scenario 1: lower polarization. b Scenario 2: medium polarization. c Scenario 3: higher polarization. Scenario 3: higher polarization. These models, due to their rigid distributional assumption, poorly differentiate the three different polarization scenarios

7 Large scale performance assessment

The evaluation heterogeneity of teachers is a long-standing issue in psychometrics (Uto 2022; Shirazi 2019; Bonefeld and Dickhäuser 2018; Casabianca et al. 2015; DeCarlo 2008). Highly biased scored might have a detrimental effect on students proficiency and education (Chin et al. 2020; Paredes 2014; Cooper 2003). The proposed nonparametric model and the index \(\lambda\) might be valuable tools to address this issue. They might help to shed light on very biased assessment contexts and to provide fairer scores. The estimated hierarchical effect of each teacher (which may be interpreted as the teacher’s bias) might be used to adjust the observed score. The index \(\lambda\) might quantify teachers polarization in their grading.

The Matura data set

As an illustrative real data application, a large scale performance assessment data set was analysed (Zupanc and Štrumbelj 2018). The DPM-model was applied to a large-scale essay assessment data obtained during the nation-wide external examination conducted by the National Examination Centre in upper secondary schools in Slovenia also known as Matura and analyzed in Zupanc and Štrumbelj (2018). These data were related to the spring term argumentative essays for years between 2010 and 2014. Particular attention is devoted to the distinction between two main aspects of essay writing: the language correctness (i.e., the presence of grammatical or syntactic errors) and the the good argumentation of the content (i.e., a good and clear presentation of all the arguments). Regarding the data structure, students are nested within the teachers. So that each student’s essay is evaluated by one trained teacher, who is asked to grade it concerning two different rubrics. An essay can receive a score between 0 and 20 for the language-related rubric and between 0 and 30 for the content-related one. Prior analysis of these data (Zupanc and Štrumbelj 2018) revealed that heterogeneity among teachers was broadly down to two types: strict and lenient. The two different trends might be captured by the model and their polarization quantified by the \(\lambda\) index.

For this reason N=2616 students’ essays, each scored by one of I=18 different teachers, were considered for the analysis.Footnote 6 The objective of this application is to analyze teachers’ individual differences in scoring the essay content, controlling for its language correctness. How lenient or strict they are in scoring the quality of an essay content, without the effect of the language correctness. The content score is commonly ways more susceptible to idiosyncrasies or biases of the teacher than the language-related score, which is generally more objective (Childs and Wooten 2023; Zhu et al. 2021; Shirazi 2019). Accordingly, the content-related score was specified as outcome variable and the language-related score as covariate with a non varying effect. A DPM hierarchical prior was specified over the teachers’ intercepts. All the scores were re-scaled for this analysis to get a easier parameters value interpretationFootnote 7 (Gelman et al. 2013).

7.1 Results

The language-related score showed a posterior mean effect of 0.27 on the content-related score, with a (0.16, 0.38) 95% credible interval. The language correctness of the essay writing had moderate role in predicting the evaluation of the its content. As shown by Fig. 6a the DPM-model learned the presence of two main trends from the data. The bimodal non-parametric distribution over the grid suggested that the teachers were rather heterogeneous in the essay scoring process. More precisely, they seemed to be slightly polarized around two main tendencies. Some teacher showed a slightly more lenient or stricter than the others (i.e., who had a larger or smaller hierarchical effect posterior mean, respectively), see Fig. 6. The \(\lambda\) index showed a posterior mean of 1.87 which suggested a low polarization. The \(\lambda\) 95% HPD interval was (0.0, 6.83) which indicated a non negligible occurrence of quite high values of \(\lambda\). All the other parameters credible intervals are reported in Table 8

Assuming this latent group polarization as a low latent agreement among raters, the \(\lambda\) index might be used in a diagnostic manner. Considering the present application, some solutions might be suggested for a fairer assessment process. Firstly, assuming a very negligible noise term, the teacher’s estimated bias might be removed from the actual score. Another practical solution might be the implementation ad hoc training aimed to a much more shared point of view in essay scoring.

a 95% HPD of the monitoring grid for the teachers’ hierarchical effects \(u=1,\ldots ,I\). b Posterior mean of the hierarchical effect of each teacher. Two different clusters emerged from the analysis, as expected: the more lenient (to the right-hand side) and the stricter (to the left-hand side). c \(\lambda\)’ 95% credible intervals. It indicate a moderate polarized posterior distribution of the posterior hierarchical effects

8 Conclusions

Most of the statistical models commonly used to analyze data from such observational contexts haven’t shown to be very flexible to certain types of heterogeneity among raters. The common HLMs with a normal (or unimodal) distributional assumption for the hierarchical effects cannot capture any possible latent clusters, i.e. any multimodality. Indeed, the residual covariance modelled through the hierarchical effects might be informative about different latent similarities among raters. In this regard, incorporating a DPM in the prior of the hierarchical effects distribution is a flexible choice to address this issue.

Consequently, the estimation of the agreement among the raters should take into account the possible multimodal distribution of the hierarchical effects. Interest might not be exclusively on the proportion of variance attributable to the hierarchical effects over the total variance (i.e., the main interpretation of the ICC); instead, it might be more appealing to explore the entire multimodal density. Since the DPM naturally accommodates clusters among hierarchical effects (i.e., among raters), it is natural to consider the extent to which the mixture components are separated. Since \(\lambda\) is based on the density approximated through the grid approach \(f({\textbf{u}})\), it reflects both the clustering induced by the Dirichlet process and the variance of the mixture components. Due to the particular information carried by \(\lambda\) it might be more informative about the latent agreement among raters than the solely ICC. The latter is very useful when the normal distributional assumption of the hierarchical effects holds. However, in the presence of multimodality the estimate of the variance of the hierarchical effect \(\sigma _u\) is not accurate (it might be over-estimated) and the related ICC might be non-informative.

In contexts in which strong beliefs about the exact number of cluster are present or it is supported by some sort of evidence, an hierarchical model with a prior finite mixture distribution over the hierarchical effects is expected to have comparably good performance as well. The parametric variance of a mixture might be take into account in the ICC formula in these cases. For the above mentioned reasons, added flexibility and the shrinkage property the DPM was here preferred.

Many other studies are needed to fully understand the performance of \(\lambda\) across different combinations of the Dirichlet process parameters. Future works might highlight the role of \(\lambda\) when the rating is either expressed on a dichotomous or on a polytomous scale. Further studies might highlight the computation of \(\lambda\) when multivariate hierarchical effects are specified. Comparisons between this index and the others widely used in these cases (Tang et al. 2022; Forchheimer et al. 2015; Zhang et al. 2003) might be a focus of future studies. Further application of \(\lambda\) in a non-parametric context might be studied (Canale and Prünster 2017).

Notes

Commonly referred to as subjects in the rating context.

The multiple rating case (i.e., raters rate the same set of items, \({\mathscr {J}}_i = {\mathscr {J}}\), \(i=1,\ldots ,I\)) is addressed in Appendix.

Considering the partition \((A,A^c)\) of \(\Omega\) and thus that \(G(A) \sim Be(\alpha G_0(A),\alpha G_0(A^c))\) the expectation of G(A) is defined as: \({\mathbb {E}}[G(A)]=\frac{\alpha G_0(A)}{\alpha G_0(A)+\alpha G_0(A^c)}= \frac{\alpha G_0(A)}{\alpha (G_0(A)+G_0(A^c))}=G_0(A).\)

Assuming independence between the location and the scale parameters of each mixture component, and between all the scale parameters for each covariate \(d=1,\ldots ,q\), \(G_0\) is then the product of the q-variate normal and the q inverse gamma distributions.

For illustrative purposes, only the variables related to the first teachers were considered.

The following transformation was applied to standardize the score: \(f(x)=\frac{x-{\overline{x}}}{\hat{\sigma _x}}\), where \({\overline{x}}=\frac{1}{N}\sum _{n=1}^{N}x_n\) was the sample mean and \(\hat{\sigma _x}=\sqrt{\frac{1}{N}\sum _{n=1}^{N-1}(x_n-{\overline{x}})^2}\) the sample standard deviation.

References

Agresti A (2015) Foundations of linear and generalized linear models

Antoniak CE (1974) Mixtures of Dirichlet processes with applications to Bayesian nonparametric problems. Ann Stat 2(6):1152–1174

Barneron M, Allalouf A, Yaniv I (2019) Rate it again: using the wisdom of many to improve performance evaluations. J Behav Dec Mak 32(4):485–492

Bartoš F, Martinkova P, Brabec M (2020) Testing heterogeneity in inter-rater reliability, pp 347–364

Blackwell D (1973) Discreteness of Ferguson selections. Ann Stat 1(2):356–358

Blackwell D, MacQueen JB (1973) Ferguson distributions via Polya urn schemes. Ann Stat 1(2):353–355

Bonefeld M, Dickhäuser O (2018) (Biased) grading of students’ performance: students’ names, performance level, and implicit attitudes. Front Psychol 9:481

Bouchard-Côté A, Doucet A, Roth A (2017) Particle Gibbs split-merge sampling for Bayesian inference in mixture models. J Mach Learn Res 18:1–39

Briesch A, Hemphill E, Volpe R, Daniels B (2014) An evaluation of observational methods for measuring response to Classwide intervention. In: School psychology quarterly: the official journal of the Division of School Psychology, American Psychological Association, vol 30

Bygren M (2020) Biased grades? Changes in grading after a blinding of examinations reform. Assess Eval Higher Educ 45(2):292–303

Canale A, Dunson DB (2011) Bayesian kernel mixtures for counts. J Am Stat Assoc 106(496):1528–1539 (PMID: 22523437)

Canale A, Prünster I (2017) Robustifying Bayesian nonparametric mixtures for count data. Biometrics 73(1):174–184

Cao J, Stokes SL, Zhang S (2010) A Bayesian approach to ranking and rater evaluation: an application to grant reviews. J Educ Behav Stat 35(2):194–214

Casabianca JM, Lockwood JR, Mccaffrey DF (2015) Trends in classroom observation scores. Educ Psychol Meas 75:311–337

Childs TM, Wooten NR (2023) Teacher bias matters: an integrative review of correlates, mechanisms, and consequences. Race Ethn Educ 26(3):368–397

Chin MJ, Quinn DM, Dhaliwal TK, Lovison VS (2020) Bias in the air: a nationwide exploration of teachers’ implicit racial attitudes, aggregate bias, and student outcomes. Educ Res 49(8):566–578

Cicchetti DV (1976) Assessing inter-rater reliability for rating scales: resolving some basic issues. Br J Psychiatry 129(5):452–456

Cooper CW (2003) The detrimental impact of teacher bias: lessons learned from the standpoint of African American mothers. Teach Educ Quart 30(2):101–116

Crimmins G, Nash G, Oprescu F, Alla K, Brock G, Hickson-Jamieson B, Noakes C (2016) Can a systematic assessment moderation process assure the quality and integrity of assessment practice while supporting the professional development of casual academics? Assess Evaluat Higher Educ 41(3):427–441

Dahlin J, Kohn R, Schön TB (2016) Bayesian inference for mixed effects models with heterogeneity

De la Cruz-Mesia R, Marshall G (2006) Non-linear random effects models with continuous time autoregressive errors: a Bayesian approach. Stat Med 25(9):1471–1484

DeCarlo LT (2008) Studies of a latent-class signal-detection model for constructed-response scoring. ETS Res Rep Ser. https://doi.org/10.1002/j.2333-8504.2008.tb02149.x

Dee TS (2005) A teacher like me: does race, ethnicity, or gender matter? Am Econ Rev 95(2):158–165

DiMaggio P, Evans J, Bryson B (1996) Have American’s social attitudes become more polarized? Am J Sociol 102(3):690–755

Dorazio RM (2009) On selecting a prior for the precision parameter of Dirichlet process mixture models. J Stat Plann Inference 139(9):3384–3390

Dressler WW, Balieiro MC, dos Santos JE (2015) Finding culture change in the second factor: stability and change in cultural consensus and residual agreement. Field Methods 27(1):22–38

Esteban J-M, Ray D (1994) On the measurement of polarization. Econometrica 62(4):819–851

Ferguson TS (1973) A Bayesian analysis of some nonparametric problems. Ann Stat 1(2):209–230

Forchheimer D, Forchheimer R, Haviland D (2015) Improving image contrast and material discrimination with nonlinear response in bimodal atomic force microscopy. Nat Commun 6:6270

Gelman A, Carlin J, Stern H, Dunson D, Vehtari A, Rubin D (2013) Bayesian data analysis. Chapman and Hall/CRC, Boca Raton

Gill J, Casella G (2009) Nonparametric priors for ordinal Bayesian social science models: specification and estimation. J Am Stat Assoc 104(486):453–454

Gisev N, Bell JS, Chen TF (2013) Interrater agreement and interrater reliability: key concepts, approaches, and applications. Res Soc Adm Pharm 9(3):330–338

Gwet KL (2008) Computing inter-rater reliability and its variance in the presence of high agreement. Br J Math Stat Psychol 61(1):29–48

Heinzl F, Tutz G (2013) Clustering in linear mixed models with approximate Dirichlet process mixtures using EM algorithm. Stat Model 13(1):41–67

Heinzl F, Kneib T, Fahrmeir L (2012) Additive mixed models with Dirichlet process mixture and p-spline priors. AStA Adv Stat Anal 96:47–68

Hsiao CK, Chen P-C, Kao W-H (2011) Bayesian random effects for interrater and test-retest reliability with nested clinical observations. J Clin Epidemiol 64(7):808–814

Ishwaran H, James L (2001) Gibbs sampling methods for stick-breaking priors. J Am Stat Assoc 96:161–173

James GM, Sugar CA (2003) Clustering for sparsely sampled functional data. J Am Stat Assoc 98(462):397–408

Jang JH, Manatunga AK, Taylor AT, Long Q (2018) Overall indices for assessing agreement among multiple raters. Stat Med 37(28):4200–4215

Kahrari F, Ferreira CS, Arellano-Valle RB (2019) Skew-normal-Cauchy linear mixed models. Sankhya B Indian J Stat 81(2):185–202

Kim S, Tadesse MG, Vannucci M (2006) Variable selection in clustering via Dirichlet process mixture models. Biometrika 93(4):877–893

Komárek A, Komárková L (2013) Clustering for multivariate continuous and discrete longitudinal data. Ann Appl Stat 7(1):177–200

Komárek A, Hansen BE, Kuiper EMM, van Buuren HR, Lesaffre E (2010) Discriminant analysis using a multivariate linear mixed model with a normal mixture in the random effects distribution. Stat Med 29(30):3267–3283

Koudenburg N, Kashima Y (2022) A polarized discourse: effects of opinion differentiation and structural differentiation on communication. Personal Soc Psychol Bull 48(7):1068–1086

Koudenburg N, Kiers HAL, Kashima Y (2021) A new opinion polarization index developed by integrating expert judgments. Front Psychol 12:738258

Kyung M, Gill J, Casella G (2011) New findings from terrorism data: Dirichlet process random-effects models for latent groups. J R Stat Soc Ser C (Appl Stat) 60(5):701–721

Liljequist D, Elfving B, Skavberg Roaldsen K (2019) Intraclass correlation—a discussion and demonstration of basic features. PLoS ONE 14(7):1–35

Lin TI, Lee JC (2008) Estimation and prediction in linear mixed models with skew-normal random effects for longitudinal data. Stat Med 27(9):1490–1507

Makransky G, Terkildsen T, Mayer R (2019) Role of subjective and objective measures of cognitive processing during learning in explaining the spatial contiguity effect. Learn Instr 61:23–34

Martinková P, Bartoš F, Brabec M (2023) Assessing inter-rater reliability with heterogeneous variance components models: flexible approach accounting for contextual variables. J Educ Behav Stat 48(3):349–383

McCulloch CE, Neuhaus JM (2021) Improving predictions when interest focuses on extreme random effects. J Am Stat Assoc 118(541):504–513

McHugh M (2012) Interrater reliability: the kappa statistic. Biochemia medica : časopis Hrvatskoga društva medicinskih biokemičara /HDMB 22:276–82

Müller P, Quintana FA, Jara A, Hanson T (2015) Bayesian nonparametric data analysis, vol 1. Springer, Berlin

Navarro DJ, Griffiths TL, Steyvers M, Lee MD (2006) Modeling individual differences using Dirichlet processes. J Math Psychol, 50(2):101–122. Special Issue on Model Selection: Theoretical Developments and Applications

Nelson KP, Edwards D (2008) On population-based measures of agreement for binary classifications. Canad J Stat 36:411–426

Nelson K, Edwards D (2015) Measures of agreement between many raters for ordinal classifications. Stat Med 34:3116–3132

Oravecz Z, Vandekerckhove J, Batchelder WH (2014) Bayesian cultural consensus theory. Field Methods 26(3):207–222

Paredes V (2014) A teacher like me or a student like me? Role model versus teacher bias effect. Econ Educ Rev 39:38–49

Rigon T, Durante D (2021) Tractable Bayesian density regression via logit stick-breaking priors. J Stat Plann Inference 211:131–142

Rodriguez A, Dunson D (2011) Nonparametric Bayesian models through probit stick-breaking processes. Bayesian Anal 6:145–178

Schielzeth H, Dingemanse NJ, Nakagawa S, Westneat DF, Allegue H, Teplitsky C, Réale D, Dochtermann NA, Garamszegi LZ, Araya-Ajoy YG (2020) Robustness of linear mixed-effects models to violations of distributional assumptions. Methods Ecol Evolut 11(9):1141–1152

Sethuraman J (1994) A constructive definition of Dirichlet priors. Stat Sin 4(2):639–650

Shirazi MA (2019) For a greater good: Bias analysis in writing assessment. SAGE Open 9(1):2158244018822377

Simpson D, Rue H, Riebler A, Martins TG, Sørbye SH (2017) Penalising model component complexity: a principled, practical approach to constructing priors. Stat Sci 32(1):1–28

Stan Development Team (2022) RStan: the R interface to Stan. R package version 2.21.7

Stefanucci M, Canale A (2021) Multiscale stick-breaking mixture models. Stat Comput 31:13

Tang T, Ghorbani A, Squazzoni F, Chorus CG (2022) Together alone: a group-based polarization measurement. Qual Quant 56:3587–3619

Tutz G, Oelker M-R (2017) Modelling clustered heterogeneity: fixed effects, random effects and mixtures. Int Stat Rev 85(2):204–227

Ulker Y, Günsel B, Cemgil T (2010) Sequential Monte Carlo samplers for Dirichlet process mixtures. In: Teh YW, Titterington M (eds), Proceedings of the 13th international conference on artificial intelligence and statistics, volume 9 of proceedings of machine learning research, pp 876–883, Chia Laguna Resort, Sardinia, Italy. PMLR

Uto M (2022) A Bayesian many-facet Rasch model with Markov modeling for rater severity drift. Behav Res Methods 55(7):3910–3928

Verbeke G, Lesaffre E (1996) A linear mixed-effects model with heterogeneity in the random-effects population. J Am Stat Assoc 91(433):217–221

Villarroel L, Marshall G, Barón AE (2009) Cluster analysis using multivariate mixed effects models. Stat Med 28(20):2552–2565

Walker SG (2007) Sampling the Dirichlet mixture model with slices. Commun Stat Simul Comput 36(1):45–54

Wang W-L, Lin T-I (2014) Multivariate t nonlinear mixed-effects models for multi-outcome longitudinal data with missing values. Stat Med 33(17):3029–3046

Wirtz MA (2020) Interrater reliability. Springer, Cham, pp 2396–2399

Zhang C, Mapes BE, Soden BJ (2003) Part a no. 594 q. J R Meteorol Soc 129:2847–2866

Zhu Y, Fung AS-L, Yang L (2021) A methodologically improved study on raters’ personality and rating severity in writing assessment. SAGE Open 11(2):21582440211009476

Zupanc K, Štrumbelj E (2018) A Bayesian hierarchical latent trait model for estimating rater bias and reliability in large-scale performance assessment. PLoS ONE 13(4):1–16

Funding

Open access funding provided by Università degli Studi di Padova. The authors have no relevant financial or non-financial interests to disclose.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article. All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript. The authors have no financial or proprietary interests in any material discussed in this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 Remarks for multiple ratings

When raters rate the same set of items \({\mathscr {J}}_i = {\mathscr {J}}\), \(i=1,\ldots ,I\) a varying intercept can be identified for each item (Martinková et al. 2023; Agresti 2015; Nelson and Edwards 2015). These term might be added to Eq. (1) (which is the same in both the standard and nonparametric formulation):

In both the standard HLM (i.e., assuming a multivariate normal distributed hierarchical rater effect) and the nonparametric HLM (i.e., specifying a DPM over the rater effect) the following distribution might be specified:

where \(\sigma _\delta >0\) is the scale parameter of \(\delta\) and \(J=|{\mathscr {J}}|\). See Sects. 2 and 3 for the other quantities and their distribution assumption. Specifying a conjugate prior for \(\sigma _\delta\) additional steps might be added to the Gibbs sampling for the nonparametric HLM.

The main results of the present work and the interpretation of \(\lambda\) (see Sect. 5) still hold for this model specification.

1.2 Details on Dirichlet process mixture

As noticed above, \(\alpha\) is proportional to the concentration of the realizations of G in point masses. Indeed, considering the partition \((A,A^c)\) of \(\Omega\), the variance of G(A) is defined as

Thus, larger values of \(\alpha\), conditioning on the number of raters I, reduce the variability of the DP, i.e. the process samples most of the time from \(G_0\), G tends to be an infinite number of point masses: the empirical distribution of G tends to become a discrete approximation of the parametric \(G_0\). In this case there is no a strong clustering since the probability of ties is very low. On the contrary, smaller values of \(\alpha\) induce a strong clustering, the random weights distribution concentrate the probability mass to few points of the support of G and the probability of ties is higher. Which in the present model means that several \(u_i\) will be independent and identically distributed from a normal distribution indexed by the same parameters. Moreover, Antoniak (Antoniak 1974) demonstrated that

where C is the number of clusters. Thus, the expected number of point masses of G is proportional to both the \(\alpha\) and the number of raters I. Every consideration regarding the role of the precision parameter on the distribution of G should be conditioned to I.

1.3 Details on the Gibbs sampling

Further details regarding some parameters of the posterior sampling are showed as follow.

The following matrix notation is here adopted: \({\textbf{X}}_i=({\textbf{x}}_{i1}',\ldots ,{\textbf{x}}_{i|{\mathscr {J}}_i|}')\), \({\textbf{Z}}_i=({\textbf{z}}_{i1}',\ldots ,{\textbf{z}}_{i|{\mathscr {J}}_i|}')\), are the design matrices for each rater \(i=1,\ldots ,I\); and \({\textbf{X}}=({\textbf{X}}_1,\ldots ,{\textbf{X}}_I)\) and \({\textbf{Z}}=diag({\textbf{Z}}_1,\ldots ,{\textbf{Z}}_I)\) are the full design matrices.

-

1.

Referring to the non varying effects:

$$\begin{aligned} {\textbf{b}}_\beta ^{*}= & {} \left( {\textbf{B}}_\beta ^{-1} + \frac{1}{\sigma _\varepsilon ^2} {\textbf{X}}'{\textbf{X}}\right) ^{-1} \left( {\textbf{B}}_\beta ^{-1}{\textbf{b}}_\beta + \frac{1}{\sigma _\varepsilon ^2} {\textbf{X}}'\left( {\textbf{y}}-\textbf{Zu}\right) \right) \\ {\textbf{B}}_\beta ^{*}= & {} \left( {\textbf{B}}_\beta ^{-1} + \frac{1}{\sigma _\varepsilon ^2} {\textbf{X}}'{\textbf{X}}\right) ^{-1} \end{aligned}$$ -

2.

Referring to hierarchical effects:

-

For each rater \(i =1,\ldots ,I\):

$$\begin{aligned} \mathbf {\mu }_{c_i}^*= & {} \left( {\textbf{D}}_0^{-1} + \frac{1}{\sigma _\varepsilon ^2} {\textbf{Z}}'_i{\textbf{Z}}_i\right) ^{-1} \left( {\textbf{D}}_0^{-1}\mathbf {\mu }_{c_i} + \frac{1}{\sigma _\varepsilon ^2} {\textbf{Z}}'_i \left( {\textbf{y}}_i-{\textbf{X}}_i{\beta }\right) \right) \\ {\textbf{Q}}_{c_i}^*= & {} \left( {\textbf{D}}_0^{-1} + \frac{1}{\sigma _\varepsilon ^2} {\textbf{Z}}'_i{\textbf{Z}}_i \right) ^{-1} \end{aligned}$$Here \(\mathbf {\mu }_{c_i}\) is the location parameter vector of the cluster where the rater i is allocated.

-

For each component \(r = 1,\ldots ,R\) and each variable \(d=1,\ldots ,q\), associated with an hierarchical effect:

$$\begin{aligned} \mu _{0_r}^*= & {} \left( \frac{c_r}{\sigma _{Q_d}^2} + \frac{1}{\sigma _{D_{0_d}}^2} \right) ^{-1} \left( \frac{c_r}{\sigma _{Q_d}^2} {\overline{u}}_{d,r} + \frac{\mu _{0_r}}{\sigma _{D_{0_d}}^2} \right) \\ \sigma _{D_{0_d}}^{2*}= & {} \left( \frac{c_r}{\sigma _{Q_d}^2} + \frac{1}{\sigma _{D_{0_d}}^2} \right) ^{-1} \\ \sigma _{Q_{dr}}^2|\mathbf {\mu }, {\textbf{u}}\sim & {} IG \left( a_{Q_0} + \frac{r_c}{2}, b_{Q_0}+\frac{1}{2}\displaystyle \sum _{i=1}^{r_c} (u_{i_d}-\mu _{dr})^2 \right) \end{aligned}$$Here \({\overline{u}}_{dr}\) is the mean of the d-th hierarchical effect in the cluster r.

-

For rater \(i =1,\ldots ,I\) and each component \(r =1,\ldots ,R\):

$$\begin{aligned} \mathbf {\omega }_{i_r}^*= & {} \frac{\pi _r N_q({\textbf{u}}_i|\mathbf {\mu }_r, {\textbf{Q}}_r)}{\sum _{r=1}^{R} \pi _r N_q({\textbf{u}}_i|\mathbf {\mu }_r, {\textbf{Q}}_r)}. \end{aligned}$$

-

1.4 Some trace plots

See Fig. 7.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mignemi, G., Calcagnì, A., Spoto, A. et al. Mixture polarization in inter-rater agreement analysis: a Bayesian nonparametric index. Stat Methods Appl (2024). https://doi.org/10.1007/s10260-023-00741-x

Accepted:

Published:

DOI: https://doi.org/10.1007/s10260-023-00741-x