Abstract

We investigate the effect of omnipresent cloud storage on distributed computing. To this end, we specify a network model with links of prescribed bandwidth that connect standard processing nodes, and, in addition, passive storage nodes. Each passive node represents a cloud storage system, such as Dropbox, Google Drive etc. We study a few tasks in this model, assuming a single cloud node connected to all other nodes, which are connected to each other arbitrarily. We give implementations for basic tasks of collaboratively writing to and reading from the cloud, and for more advanced applications such as matrix multiplication and federated learning. Our results show that utilizing node-cloud links as well as node-node links can considerably speed up computations, compared to the case where processors communicate either only through the cloud or only through the network links. We first show how to optimally read and write large files to and from the cloud in general graphs using flow techniques. We use these primitives to derive algorithms for combining, where every processor node has an input value and the task is to compute a combined value under some given associative operator. In the special but common case of “fat links,” where we assume that links between processors are bidirectional and have high bandwidth, we provide near-optimal algorithms for any commutative combining operator (such as vector addition). For the task of matrix multiplication (or other non-commutative combining operators), where the inputs are ordered, we present tight results in the simple “wheel” network, where processing nodes are arranged in a ring, and are all connected to a single cloud node.

1 Introduction

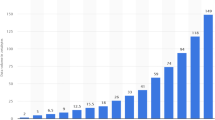

In 2018 Google announced that the number of users of Google Drive is surpassing one billion [28]. Earlier that year, Dropbox stated that in total, more than an exabyte (\(10^{18}\) bytes) of data has been uploaded by its users [15]. Other cloud-storage services, such as Microsoft’s OneDrive, Amazon’s S3, or Box, are thriving too. The driving force of this paper is our wish to let other distributed systems take advantage of the enormous infrastructure that makes up the complexes called “clouds.” Let us explain how.

The computational and storage capacities of servers in cloud services are well advertised. A lesser known fact is that a cloud system also entails a massive component of communication, that makes it appear close to almost everywhere on the Internet. (This feature is particularly essential for cloud-based video conferencing applications, such as Zoom, Cisco’s Webex and others.) In view of the existing cloud services, our fundamental idea is to abstract a complete cloud system as a single, passive storage node.

To see the benefit of this approach, consider a network of the “wheel” topology: a single cloud node is connected to n processing nodes arranged in a cycle (see Fig. 1). Suppose each processing node has a wide link of bandwidth n bits per time unit to its cycle neighbors, and a narrower link of bandwidth \(\sqrt{n}\) to the cloud node. Further suppose that each processing node has an n-bit vector, and that the goal is to calculate the sum of all vectors. Without the cloud (Fig. 1, left), such a task requires at least \(\Omega (n)\) time units—to cover the distance; on the other hand, without using the cycle links (Fig. 1, middle), transmitting a single vector from any processing node (and hence computing the sum) requires \(\Omega (n/\sqrt{n}) = \Omega (\sqrt{n})\) time units—due to the limited bandwidth to the cloud. But using both cloud links and local links (Fig. 1, right), the sum can be computed in \({\tilde{\Theta }} (\root 4 \of {n} )\) time units, as we show in this paper.
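One rough way to see where the fourth root comes from, offered as intuition and not as the algorithm developed in this paper: suppose a group of k consecutive cycle nodes cooperates. Moving an n-bit vector across the group takes about k rounds over the wide links, and writing n bits through the group's k cloud links of bandwidth \(\sqrt{n}\) takes about \(\sqrt{n}/k\) rounds. The Python sketch below balances the two terms. It ignores constants, logarithmic factors, and the cost of combining across groups; the function name and the cost expression are assumptions made for illustration.

```python
import math

def balanced_group_size(n):
    """Return (k, cost) minimizing the heuristic cost k + sqrt(n)/k.

    k          ~ rounds to move an n-bit vector across k nodes (bandwidth n)
    sqrt(n)/k  ~ rounds to push n bits through k cloud links of bandwidth sqrt(n)
    Both terms are rough estimates assumed for this illustration.
    """
    root = math.sqrt(n)
    best_k = min(range(1, n + 1), key=lambda k: k + root / k)
    return best_k, best_k + root / best_k

k, cost = balanced_group_size(65536)
print(k, cost)
```

For n = 65536 the balance point is k = 16, which equals \(\root 4 \of {n}\), whereas the cloud alone needs on the order of \(\sqrt{n} = 256\) rounds and the cycle alone on the order of n rounds.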

More generally, in this paper we initiate the study of the question of how to use an omnipresent cloud storage to speed up computations, if possible. We stress that the idea here is to develop a framework and tools that facilitate computing with the cloud, as opposed to computing in the cloud.

Specifically, in this paper we introduce the computing with the cloud model (CWC), and present algorithms to compute schedules that efficiently combine distributed inputs to compute various functions, such as vector addition and matrix multiplication. To this end, we first show how to implement (using dynamic flow techniques) primitive operations that allow for the efficient exchange of large messages (files) between processing nodes and cloud nodes. Finally, we show how to use the combining schedules to implement some popular applications, including federated learning [34] and file de-duplication (dedup) [35].

1.1 Model specification

The “Computing with the Cloud” (CWC) model is a synchronous network whose underlying topology is described by a weighted directed graph \(G=(V, E, w)\). The node set consists of two disjoint subsets: \(V=V_p\cup V_c\), where \(V_p\) is the set of processing nodes, and \(V_c\) is the set of cloud nodes. Cloud nodes are passive nodes that function as shared storage: they support read and write requests, and do not perform any other computation.

We use n to denote the number of processing nodes (the number of cloud nodes is typically constant).

The set of links connecting processing nodes is denoted by \(E_L\) (“local links”), and \(E_C\) (“cloud links”) denotes the set of links that connect processing nodes to cloud nodes. Each link \(e\in E=E_L\cup E_C\) has a prescribed bandwidth \(w(e)>0\) (there are no links between different cloud nodes). We denote by \(G_p{\mathop {=}\limits ^\textrm{def}}(V_p,E_L)\) the graph \(G-V_c\), i.e., the graph spanned by the processing nodes.

Our execution model is the standard synchronous network model, based on CONGEST [36]. Executions proceed in synchronous rounds, where each round consists of processing nodes receiving messages sent in the previous round, doing an arbitrary local computation, and then sending messages. The size of a message sent over a link e in a round is at most w(e) bits.

Cloud nodes do not perform any computations: they can only receive requests we denote by FR and FW (file read and write, respectively), to which they respond in the following round. More precisely, each cloud node has unbounded storage space; to write, a processing node \(v_i\) invokes \(\texttt {FW} \) with arguments that describe the filename f, a bit string S, and the location (index) within f that is the starting point for writing S. It is assumed that \(|S|\le w(v_i,v_c)\) bits (longer writes are implemented by a sequence of FW operations). To read, a processing node \(v_i\) invokes \(\texttt {FR} \) with arguments that describe a filename f and the range of indices to fetch from f. Again, we assume that the size of the range in any single FR invocation by node \(v_i\) is at most \(w(v_i,v_c)\).

FW operations are exclusive, i.e., when an FW operation is executing, no other operation (read or write) referring to the same file locations is allowed to take place concurrently. Concurrent FR operations reading from the same location are allowed.
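The FR/FW interface can be pictured with a minimal Python sketch. The class and helper names are hypothetical, a single bandwidth value per processing node stands in for \(w(v_i,v_c)\) in both directions, unwritten positions are assumed to read as 0, and the exclusivity rule for concurrent FW operations is not modeled.

```python
class CloudNode:
    """Passive storage node: answers FW/FR requests and computes nothing else."""

    def __init__(self, bandwidth):
        # bandwidth[v] plays the role of w(v, v_c); using one value per node
        # is a simplification (the model allows asymmetric links).
        self.bandwidth = dict(bandwidth)
        self.files = {}  # filename -> list of bits, unbounded

    def fw(self, node, filename, start, bits):
        """Write `bits` into `filename` beginning at index `start`."""
        if len(bits) > self.bandwidth[node]:
            raise ValueError("FW larger than the cloud-link bandwidth")
        data = self.files.setdefault(filename, [])
        # Assumption: positions never written read as 0.
        data.extend([0] * (start + len(bits) - len(data)))
        data[start:start + len(bits)] = bits

    def fr(self, node, filename, start, end):
        """Return the bits at indices start..end-1 of `filename`."""
        if end - start > self.bandwidth[node]:
            raise ValueError("FR larger than the cloud-link bandwidth")
        return list(self.files.get(filename, [])[start:end])


def write_file(cloud, node, filename, bits):
    """Write a long string as a sequence of FW calls; return the call count."""
    step = cloud.bandwidth[node]
    calls = 0
    for start in range(0, len(bits), step):
        cloud.fw(node, filename, start, bits[start:start + step])
        calls += 1
    return calls
```

With a cloud link of bandwidth 4, a 9-bit string needs three FW invocations, which corresponds to three rounds when the node writes alone.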

Discussion. We believe that our model is fairly widely applicable. A processing node in our model may represent anything from a computer cluster with a single gateway to the Internet, to cellphones or even smaller devices—anything with a non-shared Internet connection. The local links can range from high-speed fibers to Bluetooth or infrared links. Typically in this setting the local links have bandwidth much larger than the cloud links (and cloud downlinks in many cases have larger bandwidth than cloud uplinks). Another possible interpretation of the model is a private network (say, in a corporation), where a cloud node represents a storage device or a file server. In this case the cloud link bandwidth may be as large as the local link bandwidth.

1.2 Problems considered and main results

In this paper we address the question of how to efficiently move information around a CWC network (the model described above). To avoid confusion, we start by distinguishing between schedules, which specify what string of bits to send over each link in every step (cf. Definition 2.3), and algorithms, which compute these schedules. While the schedules are inherently parallel because they concern all links in each step, the algorithms we present in this paper are typically centralized. Our main objective is to find optimal or near-optimal schedules (i.e., whose timespan is close to the best possible). For the positive results, we present efficient (i.e., polynomial-time) algorithms that produce such schedules.

Our main results are algorithms to compute schedules that allow the users to combine values stored at nodes. These schedules use building blocks that facilitate efficient transmission of large messages between processing nodes and cloud nodes. These building block schedules, in turn, are computed in a straightforward way using dynamic flow techniques. Finally, we show how to use the combining schedules to derive new algorithms for federated learning and file de-duplication (dedup) in the CWC model. More specifically, we provide implementations of the following tasks.

Basic cloud operations: Let \(s\in {\mathbb {N}}\) be a given parameter. We use \(v_c\) to denote a cloud node below.

- \(\textsf {cW} _i\) (cloud write): write an s-bits file f stored at node \(i\in V_p\) to node \(v_c\).

- \(\textsf {cR} _i\) (cloud read): fetch an s-bits file f from node \(v_c\) to node \(i\in V_p\).

- \(\textsf {cAW} \) (cloud all write): for each \(i\in V_p\), write an s-bits file \(f_i\) stored at node i to node \(v_c\).

- \(\textsf {cAR} \) (cloud all read): for each \(i\in V_p\), fetch an s-bits file \(f_i\) from node \(v_c\) to node i.

Combining and dissemination operations:

- \(\textsf {cComb} \) (cloud combine): Each node \(i\in V_p\) has an s-bits input string \(S_i\), and there is a binary associative operator \(\otimes :\left\{ 0,1 \right\} ^s\times \left\{ 0,1 \right\} ^s\rightarrow \left\{ 0,1 \right\} ^s\). The requirement is to write to a cloud node \(v_c\) the s-bits string \(S_1\otimes S_2\otimes \cdots \otimes S_n\). Borrowing from Group Theory, we call the operation \(\otimes \) multiplication, and \(S_1\otimes S_2\) is the product of \(S_1\) by \(S_2\). In general, \(\otimes \) is not necessarily commutative. We assume the existence of a unit element for \(\otimes \), denoted \(\overline{{\textbf{1}}}\), such that \(\overline{{\textbf{1}}}\otimes S=S\otimes \overline{{\textbf{1}}}=S\) for any s-bits string S. The unit element is represented by a string of O(1) bits. Examples of commutative operators include vector (or matrix) addition over a finite field, logical bitwise operations, leader election, and the top-k problem. Examples of non-commutative operators include matrix multiplication (over a finite field) and function composition. Note that we require that the result of applying \(\otimes \) is as long as each of its operands.

- cCast (cloudcast): All the nodes \(i\in V_p\) simultaneously fetch a copy of an s-bits file f from node \(v_c\). (Similar to network broadcast.)
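To make the role of associativity and commutativity in cComb concrete, here is a small Python sketch with two toy operators over a prime field. The modulus `P`, the function names, and the block-wise regrouping are illustrative assumptions and not the schedules developed later in the paper.

```python
from functools import reduce

P = 7  # hypothetical small prime; all arithmetic is over the field Z_P

def vec_add(a, b):
    """Commutative operator: component-wise vector addition mod P."""
    return tuple((x + y) % P for x, y in zip(a, b))

def mat_mul(a, b):
    """Non-commutative operator: square matrix multiplication mod P."""
    n = len(a)
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(n)) % P for j in range(n))
        for i in range(n)
    )

def combine(values, op):
    """Left-to-right product S_1 (x) S_2 (x) ... (x) S_n."""
    return reduce(op, values)

def combine_in_blocks(values, op, block):
    """Combine consecutive blocks first, then combine the partial results.

    Associativity alone guarantees the same answer as `combine`, provided
    the blocks are consecutive and kept in their original order.
    """
    partial = [reduce(op, values[i:i + block])
               for i in range(0, len(values), block)]
    return reduce(op, partial)
```

Regrouping consecutive operands never changes the product, which is what lets groups of nodes combine their inputs locally; reordering operands is safe only for commutative operators such as `vec_add`.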

Applications. cComb and cCast can be used directly to provide matrix multiplication, matrix addition, and vector addition. We also outline the implementation of the following.

Federated learning (FL) [34]: In FL, a collection of agents collaborate in training a neural network to construct a model of some concept, but the agents want to keep their data private. Unlike [34], in our model the central server is a passive storage device that does not carry out computations. We show how elementary secure computation techniques, along with our combining algorithm, can efficiently help training an ML model in the federated scheme implemented in CWC, while maintaining privacy.

File deduplication: Deduplication (or dedup) is a task in file stores, where redundant identical copies of data are identified (and possibly unified)—see, e.g., [35]. Using cComb and cCast, we implement file dedup in the CWC model on collections of files stored at the different processing nodes. The algorithm keeps a single copy of each file and pointers instead of the other replicas.

Special topologies. The complexity of the schedules we present depends on the given network topology. We study a few cases of interest.

First, we consider the s-fat-links network, defined, for a given parameter \(s\in {\mathbb {N}}\), as the CWC model with the following additional assumptions:

- All links are symmetric, i.e., \(w(u,v)=w(v,u)\) for every link \((u,v) \in E\).

- Local links have bandwidth at least s.

- There is only one cloud node \(v_c\).

The fat links model seems suitable in many real-life cases where local links are much wider than cloud links (uplinks to the Internet), as is the intuition behind the HYBRID model [6].

Another topology we consider is the wheel network, depicted schematically in Fig. 1 (right). In a wheel system there are n processing nodes arranged in a ring, and a cloud node connected to all processing nodes. The wheel network is motivated by non-commutative combining operations, where the order of the operands induces a linear order on the processing nodes, i.e., we view the nodes as a line, where the first node holds the first input (operand), the second node holds the second input etc. The wheel is obtained by connecting the first and the last nodes for symmetry, and then connecting all to a cloud node.

Overview of techniques. As mentioned above, schedules for the basic file operations (cW, cR, cAW and cAR) are computed optimally using dynamic flow techniques, or more specifically, quickest flow (Sect. 2), which have been studied in numerous papers in the past (cf. [10, 37]). We present closed-form bounds on cW and cR for the wheel topology with general link bandwidths in Sect. 4.

We present tight bounds for cW and cR in the s-fat-links network, where s is the input size at all nodes. We then continue to consider the tasks cComb with commutative operators and cCast, and prove nearly-tight bounds on their time complexity in the s-fat-links network (Theorems 3.7, 3.8, 3.10). The idea is to first find, for every processing node i, a cluster of processing nodes that allows it to perform cW in an optimal number of rounds. We then perform cComb by combining the values within every cluster using convergecast [36], and then combining the results in a computation-tree fashion. We perform the described procedure in near-optimal time.

Non-commutative operators are explored in the natural wheel topology. We present algorithms to compute schedules for wheel networks with arbitrary bandwidth (both cloud and local links). We prove an upper bound for cComb (Theorem 4.6) and a nearly-matching lower bound (Theorem 4.10).

Paper organization. In Sect. 2 we study the implementation of basic primitives in the CWC model. In Sect. 3 we study combining schedules for general topologies in fat-links networks. In Sect. 4 we consider combining for non-commutative operators in the wheel topology. In Sect. 5 we discuss application-level usage of the CWC model, such as federated learning and deduplication. Conclusions and open problems are presented in Sect. 6.

1.3 Related work

Our model is based on, and inspired by, a long history of theoretical models in distributed computing. To gain some perspective, we offer here a brief non-comprehensive review.

Historically, distributed computing is split along the dichotomy of message passing vs shared memory [18]. While message passing is deemed the “right” model for network algorithms, the shared memory model is the abstraction of choice for programming multi-core machines.

The prominent message-passing models are LOCAL [31], and its derived CONGEST [36]. (Some models also include a broadcast channel, e.g. [2].) In both LOCAL and CONGEST, a system is represented by a connected (typically undirected) graph, in which nodes represent processors and edges represent communication links. In LOCAL, message size is unbounded, and in CONGEST, message size is restricted, typically to \(O(\log n)\) bits. Thus, CONGEST accounts not only for the distance information has to traverse, but also for information volume and the bandwidth available for its transportation.

While most algorithms in the LOCAL and CONGEST models assume fault-free (and hence synchronous) executions, in the distributed shared memory model, asynchrony and faults are the primary source of difficulty. Usually, in the shared memory model one assumes that there is a collection of “registers,” accessible by multiple threads of computation that run at different speeds and may suffer crash or even Byzantine faults (see, e.g., [5]). The main issues in this model are coordination and fault-tolerance. Typically, the only quantitative hint to communication cost is the number and size of the shared registers.

The CONGESTED CLIQUE (CC) model [32] is a special case of CONGEST, where the underlying graph is assumed to be fully connected. The CC model is appropriate for computing in the cloud, as it has been shown that under some relatively mild conditions, algorithms designed for the CC model can be implemented in the MapReduce model, i.e., run in datacenters [22]. Another model for computing in the cloud is the MPC model [24]. Recently, the HYBRID model [6] was proposed as a combination of CC with classical graph-based communication. More specifically, the HYBRID model assumes the existence of two communication networks: one for local communication between neighbors, where links are typically of infinite bandwidth (exactly like LOCAL); the other network is a node-congested clique, i.e., a node can communicate with every other node directly via “global links,” but there is a small upper bound (typically \(O(\log n)\)) on the total number of messages a node can send or receive via these global links in a round. Even though the model was presented only recently, there is already a line of algorithmic work in it, in particular for computing shortest paths [4, 6, 11, 25, 26].

Another classical model of easily accessible shared memory is the PRAM [17], whose original focus was on detailed complexity of parallel computation. An early attempt to incorporate communication bandwidth constraints in PRAM, albeit indirectly, is due to Mansour et al. [33], who considered PRAM with m words of shared memory, p processors and input length n, in the regime where \(m\ll p\ll n\). The LogP model [13] by Culler et al. aimed at adjusting the PRAM model to network-based realizations. Another proposal that models shared memory explicitly is the QSM model of Gibbons et al. [20], in which there is an explicit (typically uniform) limit on the bandwidth connecting processors to shared memory. QSM is similar to our CWC model, except that there is no network connecting processors directly. A thorough comparison of bandwidth limitations in variants of the BSP model [38] and of QSM is presented by Adler et al. [1]. We note that LogP and BSP do not provide shared memory as a primitive object: the idea is to describe systems in a way that allows implementations of abstract shared memory.

Recently, Aguilera et al. [3] introduced the “m&m” model that combines message passing with shared memory. The m&m model assumes that processes can exchange messages via a fully connected network, and there are shared registers as well, where each shared register is accessible only by a subset of the processes. The focus in [3] is on solvability of distributed tasks in the presence of failures, rather than performance.

Discussion. Intuitively, our CWC model can be viewed as the classical CONGEST model over the processors, augmented by special cloud nodes (object stores) connected to some (typically, many) compute nodes. To reflect modern demands and availability of resources, we relax the very stringent bandwidth allowance of CONGEST, and usually envision networks with much larger link bandwidth (e.g., \(n^\epsilon \) for some \(\epsilon >0\)).

Considering previous network models, it appears that HYBRID is the closest to CWC, even though HYBRID was not expressly designed to model the cloud. In our view, CWC is indeed more appropriate for computation with the cloud. First, in most cases, global communication (modeled by clique edges in HYBRID) is limited by link bandwidth, unlike HYBRID’s node capacity constraint, which models computational bandwidth. Second, HYBRID is not readily amenable to model multiple clouds, while this is a natural property of CWC.

Regarding shared memory models, we are unaware of topology-based bandwidth restriction on shared memory access in distributed models. In some general-purpose parallel computation models (based on BSP [38]), communication capabilities are specified using a few global parameters such as latency and throughput, but these models deliberately abstract topology away. In distributed (asynchronous) shared memory, the number of bits that need to be transferred to and from the shared memory is seldom explicitly analyzed.

2 Implementation of basic communication primitives in CWC

In this section we consider the basic operations of reading or writing to the cloud, by one or all processors. First, we give optimal results using standard dynamic flow techniques. While optimal, the resulting schedules are somewhat opaque in the sense that they do not give much intuition about the construction. We then consider the special case of networks with fat links, where we introduce the notion of “cloud clusters” that are both intuitive and can be used in a straightforward way to give good approximate solutions to the basic tasks.

We first review dynamic flows in Sect. 2.1, and then apply them to the CWC model in Sect. 2.2. Cloud clusters for fat-links networks are introduced in Sect. 2.3.

2.1 Dynamic flows

The concept of quickest flow [10], a variant of dynamic flow [37], is defined as follows. A flow network consists of a directed weighted graph \(G=(V,E,c)\) where \(c:E\rightarrow {\mathbb {N}}\), with a distinguished source node and a sink node, denoted \(s,t\in V\), respectively. A dynamic flow with time horizon \(T\in {\mathbb {N}}\) and flow value F is a mapping \(f:E\times [1,T]\rightarrow {\mathbb {N}}\) that specifies for each edge e and time step j, how much flow e carries between steps \(j-1\) and j, subject to the natural constraints:

- Edge capacities. For all \(e\in E, j\in [1,T]\):

$$ f(e,j)\le c(e) \tag{1} $$

- Only arriving flow can leave. For all \( v\in V{\setminus }\left\{ s \right\} , j\in [1,T-1]\):

$$ \sum _{i=1}^{j}\sum _{(u,v)\in E} f((u,v),i) \ge \sum _{i=1}^{j+1}\sum _{(v,w)\in E} f((v,w),i) \tag{2} $$

- No leftover flow at time T. For all \(v\in V\setminus \left\{ s,t \right\} \):

$$ \sum _{j=1}^{T}\sum _{(u,v)\in E} f((u,v),j) = \sum _{j=1}^{T}\sum _{(v,w)\in E} f((v,w),j) \tag{3} $$

- Flow value (source).

$$ \sum _{j=1}^T\left( \sum _{(s,v)\in E} f((s,v),j) - \sum _{(u,s)\in E} f((u,s),j)\right) = F \tag{4} $$

- Flow value (sink).

$$ \sum _{j=1}^T\left( \sum _{(t,v)\in E} f((t,v),j) - \sum _{(u,t)\in E} f((u,t),j)\right) = -F \tag{5} $$

Usually in dynamic flows, T is given and the goal is to maximize F. In the quickest flow variant, the roles are reversed:

Definition 2.1

Given a flow network, the quickest flow for a given value F is a dynamic flow f satisfying (1–5) above with flow value F, such that the time horizon T is minimal.
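As a reading aid, constraints (1)–(5) can be checked mechanically. The Python sketch below is a literal transcription under an assumed encoding: `cap` maps each edge to its capacity, and `f` maps each edge to a list of T values whose entry `j-1` is \(f(e,j)\). The function name and the encoding are hypothetical.

```python
def is_dynamic_flow(cap, f, s, t, T, F):
    """Check constraints (1)-(5) for a candidate dynamic flow.

    cap : dict mapping edge (u, v) -> capacity c(u, v)
    f   : dict mapping edge (u, v) -> list of T values; f[e][j-1] is f(e, j)
          (edges missing from f are assumed to carry no flow)
    """
    nodes = {x for e in cap for x in e}
    flow = {e: f.get(e, [0] * T) for e in cap}

    def inflow(v, upto):   # total flow entering v during steps 1..upto
        return sum(sum(flow[e][:upto]) for e in cap if e[1] == v)

    def outflow(v, upto):  # total flow leaving v during steps 1..upto
        return sum(sum(flow[e][:upto]) for e in cap if e[0] == v)

    # (1) edge capacities (nonnegativity follows from f mapping into N)
    if any(not 0 <= x <= cap[e] for e in cap for x in flow[e]):
        return False
    # (2) only arriving flow can leave
    for v in nodes - {s}:
        for j in range(1, T):
            if inflow(v, j) < outflow(v, j + 1):
                return False
    # (3) no leftover flow at time T
    if any(inflow(v, T) != outflow(v, T) for v in nodes - {s, t}):
        return False
    # (4) and (5): net flow out of the source is F, out of the sink is -F
    return (outflow(s, T) - inflow(s, T) == F
            and outflow(t, T) - inflow(t, T) == -F)
```

For example, on the path s → a → t with both capacities equal to 2, shipping F = 3 units with T = 3 is valid when a forwards each unit one step after receiving it, and invalid when a tries to forward in the same steps in which s sends.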

Theorem 2.1

([10]) The quickest flow can be computed in strongly polynomial time.

The Evacuation Problem [23] is a variant of dynamic flow that we use, specifically the case of a single sink node [7]. In this problem, each node v has an initial volume of \(F(v)\ge 0\) flow units, and the goal is to ship all the flow volume to a single sink node t in shortest possible time (every node v with \(F(v)>0\) is considered a source). Similarly to the single source case, shipment is described by a mapping \(f:E\times [1,T]\rightarrow {\mathbb {N}}\) where T is the time horizon. The dynamic flow is subject to the edge capacity constraints (Eq. 1), and the following additional constraints:

- Only initial and arriving flow can leave. For all \( v\in V, j\in [1,T-1]\):

$$ F(v)+\sum _{i=1}^{j}\sum _{(u,v)\in E} f((u,v),i) \ge \sum _{i=1}^{j+1}\sum _{(v,w)\in E} f((v,w),i) \tag{6} $$

- Flow value (sources). For all \(v\in V\setminus \left\{ t \right\} \):

$$ \sum _{j=1}^T\left( \sum _{(v,w)\in E} f((v,w),j) - \sum _{(u,v)\in E} f((u,v),j)\right) = F(v) \tag{7} $$

- Flow value (sink).

$$ \sum _{j=1}^T\left( \sum _{(u,t)\in E} f((u,t),j) - \sum _{(t,w)\in E} f((t,w),j) \right) = \sum _{v\in V}{F(v)} \tag{8} $$

Formally, we use the following definition and result.

Definition 2.2

Given a flow network in which each node v has value F(v), a solution to the evacuation problem is a dynamic flow f with multiple sources satisfying (1) and (6–8). The solution is optimal if the time horizon T of f is minimal.

Theorem 2.2

([7]) An optimal solution to the evacuation problem can be computed in strongly polynomial time.

2.2 Using dynamic flows in CWC

In this section we show how to implement (i.e., compute schedules for) basic cloud access primitives using dynamic flow algorithms. These are the tasks of reading and writing to or from the cloud, invoked by a single node (cW and cR), or by all nodes (cAW and cAR). Our goal in all the tasks and algorithms is to find a schedule that implements the task in the minimum amount of time.

Definition 2.3

Given a CWC model, a schedule for time interval I is a mapping that assigns, for each time step in I and each link (u, v): a send (or null) operation if (u, v) is a local link, and an FW or FR (or null) operation if (u, v) is a cloud link.

We present optimal solutions to these problems in general directed graphs, using the quickest flow algorithm.

2.2.1 Serving a single node

Let us consider cW first. We start with a lemma stating the close relation between schedules as defined above and dynamic flows.

Lemma 2.3

Let \(G=(V,E,w)\) be a graph in the CWC model. There exists a schedule implementing \(\textsf {cW} _i\) from processing node i to cloud node \(v_c\) with string S of size s in T rounds if and only if there exists a dynamic flow of value s and time horizon T from source node i to sink node \(v_c\).

Proof

Converting a schedule to a dynamic flow is trivial, as send and receive operations between processing nodes and FW and FR operations directly translate to a dynamic flow that transports the same amount of flow satisfying bandwidth constraints. For the other direction, let f be a dynamic flow of time horizon T and value s from node i to the cloud \(v_c\). We construct a schedule implementing \(\textsf {cW} _i\) as follows.

First, we construct another dynamic flow \(f'\) which is the same as f, except that no flow leaves the sink node \(v_c\). Formally, given a dynamic flow g, a node v and a time step t, let \(\textrm{stored}_g(v,t)\) be the volume of flow stored in v at time t according to g. Flow \(f'\) is constructed by induction. At time step 1, \(f'\) is defined to be the same as f, except for setting \(f'(e,1) = 0\) for every edge e that leaves \(v_c\). Let \(t\in \left\{ 1,\ldots ,T-1 \right\} \). For step \(t+1\), \(f'\) is defined to be the same as f, except for capping the total flow that leaves any node v by \(\textrm{stored}_{f'}(v,t)\), and setting \(f'(e,t+1) = 0\) for every edge e that leaves the sink.

Let \(G_p = G-\{v_c\}\), and let \(\textrm{stored}_g(G_p, t)\) denote \(\sum _{v\in V_p}\!\textrm{stored}_g(v,t)\) for some dynamic flow g. Initially, \(\textrm{stored}_f(G_p, 0) = \textrm{stored}_{f'}(G_p, 0) = s\), and by the induction, in every step \(t\in \left\{ 1,\ldots ,T \right\} \), \(\textrm{stored}_f(G_p, t) \ge \textrm{stored}_{f'}(G_p, t)\) due to flow that was not sent from \(v_c\) to \(G_p\). Also, in time step T, \(\textrm{stored}_f(G_p, T) = 0\) since all flow was sent to the sink, and thus \(\textrm{stored}_{f'}(G_p, T) = 0\) as well and \(\textrm{stored}_{f'}(v_c,T) = s\) due to flow conservation. Therefore, \(f'\) is a dynamic flow with time horizon (at most) T and value s.

Given \(f'\), we construct a schedule \({{\mathcal {S}}}\) to implement \(\textsf {cW} _i\) by encoding flow using the operations of message send, message receive, and FW (no FR operations are required because in \(f'\) flow never leaves \(v_c\)). To specify which data is sent in every operation of the schedule, refer to all FW operations of the schedule. Let K be the number of FW operations in \({{\mathcal {S}}}\), let \(i_k\) be the node initiating the k-th call to FW and let \(l_k\) be the size of the message in that call. We assign the data transferred on each link during \({{\mathcal {S}}}\) so that when node \(i_k\) runs the k-th FW, it writes the \(l_k\) bits of S that immediately follow the first \(\sum _{j=1}^{k-1}{l_j}\) bits, i.e., the bits written by the preceding \(k-1\) calls. Note that processing nodes do not need to exchange indices of the data they transfer, as all nodes can calculate the schedule during preprocessing and thus “know in advance” the designated indices of the transferred data.

Correctness of the schedule \({{\mathcal {S}}}\), as well as its time complexity, follows from the validity of \(f'\). \(\square \)
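The index assignment used in the proof can be sketched in a few lines of Python. The sketch assumes 0-based offsets, so that the k-th FW call starts right after the bits written by calls 1 through k-1; the function names and the `(node, length)` encoding of FW calls are hypothetical.

```python
def fw_offsets(lengths):
    """Starting offset of each FW call, given the call sizes l_1, ..., l_K."""
    offsets, written = [], 0
    for l_k in lengths:
        offsets.append(written)   # bits covered by all earlier calls
        written += l_k
    return offsets

def replay(S, calls):
    """Replay FW calls given as (node, l_k) pairs and return the cloud file.

    Every node is assumed to know the whole schedule in advance, so the
    offsets are computed locally and never have to be communicated.
    """
    cloud_file = [None] * len(S)
    sizes = [l_k for _, l_k in calls]
    for (node, l_k), off in zip(calls, fw_offsets(sizes)):
        cloud_file[off:off + l_k] = S[off:off + l_k]
    return cloud_file
```

When the call sizes sum to s, the assigned ranges are disjoint and cover S exactly, so the file in the cloud equals S no matter which node issued which call.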

Theorem 2.4

Given any instance \(G=(V,E,w)\) of the CWC model, an optimal schedule realizing \(\textsf {cW} _i\) can be computed in polynomial time.

Proof

Consider a \(\textsf {cW} \) issued by a processing node i, wishing to write s bits to cloud node \(v_c\). We construct an instance of quickest flow as follows. The flow network is G where w is the link capacity function, node i is the source and \(v_c\) is the sink. The requested flow value is s. The solution, computed by Theorem 2.1, is directly translatable to a schedule, after assigning index ranges to flow parts according to Lemma 2.3. Optimality of the resulting schedule follows from the optimality of the quickest flow algorithm. \(\square \)
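For small instances, the reduction can be tried out with the classical time-expanded network: make one copy of every node per time step, turn each link into edges between consecutive layers, add holdover edges for storage, and look for the smallest T whose maximum flow reaches s. The Python sketch below does this by linear search with a plain Edmonds–Karp routine. It is a pseudo-polynomial stand-in chosen for brevity, not the strongly polynomial algorithm behind Theorem 2.1, and all names in it are hypothetical.

```python
from collections import defaultdict, deque

def max_flow(cap, src, dst):
    """Edmonds-Karp on residual capacities cap[u][v] (modified in place)."""
    total = 0
    while True:
        parent = {src: None}
        queue = deque([src])
        while queue and dst not in parent:      # BFS for an augmenting path
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if dst not in parent:
            return total
        path, v = [], dst
        while parent[v] is not None:            # walk back to the source
            path.append((parent[v], v))
            v = parent[v]
        push = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= push
            cap[v][u] += push
        total += push

def quickest_flow_time(edges, src, dst, F, max_T=1000):
    """Smallest T such that F units can move from src to dst in T rounds.

    edges : dict mapping link (u, v) -> bandwidth w(u, v)
    Returns None if no horizon up to max_T (an arbitrary cutoff) suffices.
    """
    nodes = {x for e in edges for x in e}
    for T in range(1, max_T + 1):
        cap = defaultdict(lambda: defaultdict(int))
        for j in range(1, T + 1):
            for v in nodes:
                cap[(v, j - 1)][(v, j)] += F    # storing flow at a node
            for (u, v), w in edges.items():
                cap[(u, j - 1)][(v, j)] += w    # one round on link (u, v)
        if max_flow(cap, (src, 0), (dst, T)) >= F:
            return T
    return None
```

As a usage example, take node `i` with a cloud link of bandwidth 1 and a neighbor `a` reachable over a local link of bandwidth 4, where `a` also has a cloud link of bandwidth 1. Writing 4 bits takes 4 rounds over the cloud link of `i` alone, and 3 rounds when `a` relays part of the file.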

\(\blacktriangleright \) Remarks.

- Note that in the presence of multiple cloud nodes, it may be the case that while writing to one cloud node, another cloud node is used as a relay station.

- Schedule computation can be carried out off-line: we can compute a schedule for each node i and for each required file size s (possibly consider only powers of \(1+\epsilon \) for some \(\epsilon >0\)), so that in run-time, the initiating node would only need to tell all other nodes which schedule to use.
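One possible reading of the off-line remark, sketched in Python: index the precomputed schedules by size class k, and serve a request of size s with the schedule computed for the smallest power of \(1+\epsilon \) that is at least s. Padding the file up to that size is an assumption of this sketch, and the function names are hypothetical.

```python
import math

def size_class(s, eps):
    """Smallest integer k >= 0 with (1 + eps)**k >= s, for s >= 1."""
    k = max(0, math.ceil(math.log(s) / math.log1p(eps)))
    # Correct possible floating-point drift in the logarithm estimate.
    while (1 + eps) ** k < s:
        k += 1
    while k > 0 and (1 + eps) ** (k - 1) >= s:
        k -= 1
    return k

def padded_size(s, eps):
    """File size (in bits) of the precomputed schedule used for a size-s request."""
    return math.ceil((1 + eps) ** size_class(s, eps))
```

Under this reading, a file of up to S bits needs only about \(\log _{1+\epsilon } S\) precomputed schedules per node, and the padded size exceeds s by a factor of at most roughly \(1+\epsilon \).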

Next, we observe that the reduction sketched in the proof of Theorem 2.4 works for reading just as well: the only difference is reversing the roles of source and sink, i.e., pushing s flow units from the cloud node \(v_c\) to the requesting node i. We therefore have the following theorem.

Theorem 2.5

Given any instance of the CWC model, an optimal schedule realizing \(\textsf {cR} _i\) can be computed in polynomial time.

2.2.2 Serving multiple nodes

Consider now operations with multiple invocations. Let us start with \(\textsf {cAW} \) (cAR is analogous, as above). Recall that in this task, each node has a (possibly empty) file to write to a cloud node. If all nodes write to the same cloud node, then the evacuation problem variant of the quickest flow algorithm solves the problem (see Definition 2.2). Specifically, we have the following theorem.

Theorem 2.6

Given any instance \(G=(V,E,w)\) of the CWC model, an optimal schedule realizing cAW (cAR) in which every node i needs to write (read) a message of size \(s_i\) to (from) cloud node \(v_c\) can be computed in strongly polynomial time.

Proof

Similarly to Lemma 2.3, it is easy to see that there is such a schedule for cAW if and only if there is a dynamic flow solving the evacuation problem (Definition 2.2). Thus in order to solve cAW, we can construct a flow network as in Theorem 2.4, apply Theorem 2.2 to get the solution, and then translate it into a schedule. A schedule for cAR can be obtained by reversing the schedule for cAW, similarly to Theorem 2.5. \(\square \)

However, in the case of multiple cloud nodes, we resort to the quickest multicommodity flow, defined as follows [37]. We are given a flow network as described in Sect. 2.1, but with k source-sink pairs \(\left\{ (s_i,t_i) \right\} _{i=1}^k\), and k demands \(d_1,\ldots ,d_k\). We seek k flow functions \(f_i\), where \(f_i\) describes the flow of \(d_i\) units of commodity i from its source \(s_i\) to its sink \(t_i\), subject to the usual constraints: the edge capacity constraints (1) apply to the sum of all k flows, and the node capacity constraints (2–3), as well as the source and sink constraints (4–5), are specified for each commodity separately.

It is known that determining whether there exists a feasible quickest multicommodity flow with a given time horizon T is NP-hard, but on the positive side, there exists an FPTAS for it [16], i.e., we can approximate the optimal T to within a factor of \(1+\epsilon \), for any constant \(\epsilon > 0\). Extending Theorem 2.6 in the natural way, we obtain the following result.

Theorem 2.7

Given any instance of the CWC model and \(\epsilon >0\), a schedule realizing \(\textsf {cAW} \) or \(\textsf {cAR} \) can be computed in time polynomial in the instance size and \(\epsilon ^{-1}\). The length of the schedule is at most \((1+\epsilon )\) times the optimal length.

2.3 Cloud clusters in fat-links networks

Recall that in the case of an s-fat-links network, all local links have bandwidth at least s, and all links are symmetric. For this case we develop a rather intuitive framework for the basic tasks, introducing the concept of cloud clusters.

Consider \(\textsf {cW} _i\), where i wishes to write s bits to a given cloud node. The basic tension in finding an optimal schedule for \(\textsf {cW} _i\) is that in order to use more cloud bandwidth, more nodes need to be enlisted. But while more bandwidth reduces the transmission time, reaching remote nodes (that provide the extra bandwidth) increases the traversal time. Our algorithm looks for the sweet spot where the conflicting effects are more-or-less balanced.

For example, consider a simple path of n nodes with infinite local bandwidth, where each node is connected to the cloud with bandwidth x (Fig. 2). Suppose that the leftmost node l needs to write a message of s bits to the cloud. By itself, writing requires s/x rounds. Using all n nodes, uploading would take O(s/nx) rounds, but \(n-1\) rounds are needed to ship the messages to the fellow-nodes. The optimal solution in this case is to use only \(\sqrt{s/x}\) nodes: the time to ship the file to all these nodes is \(\sqrt{s/x}\), and the upload time is \(\frac{s/\sqrt{s/x}}{x} = \sqrt{s/x}\), because each node needs to upload only \(s/\sqrt{s/x}\) bits.
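The trade-off in the path example can be checked numerically. The sketch below uses a simplified cost model that is an assumption of this illustration, not the paper's exact schedule: using the m leftmost nodes costs \(m-1\) rounds of shipping plus \(s/(mx)\) rounds of parallel upload. The function names are hypothetical.

```python
def path_write_time(s, x, m):
    """Rounds to write s bits using the m leftmost nodes of the path.

    Simplified cost model (an assumption of this sketch): m - 1 rounds to
    ship the data along the path, then s / (m * x) rounds of parallel upload.
    """
    return (m - 1) + s / (m * x)


def best_node_count(s, x, n):
    """Number of nodes m in 1..n minimizing path_write_time(s, x, m)."""
    return min(range(1, n + 1), key=lambda m: path_write_time(s, x, m))
```

For instance, with \(s=10000\), \(x=1\) and \(n=1000\), the minimizer under this model is \(m=100=\sqrt{s/x}\), in line with the discussion above.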

In general, we define “cloud clusters” to be node sets that optimize the ratio between their diameter and their total bandwidth to the cloud. The schedules produced by our algorithms for cW and cR use nodes of cloud clusters. We prove that the running-time of our implementation is asymptotically optimal. Formally, we have the following.

Definition 2.4

Let \(G=(V,E,w)\) be a CWC system with processor nodes \(V_p\) and cloud nodes \(V_c\). The cloud bandwidth of a processing node \(i \in V_p\) w.r.t. a given cloud node \(v_c\in V_c\) is \(b_c(i){\mathop {=}\limits ^\textrm{def}}w(i,v_c)\). A cluster \(B \subseteq V_p\) in G is a connected set of processing nodes. The cloud (up or down) bandwidth of cluster B w.r.t. a given cloud node, denoted \(b_c(B)\), is the sum of the cloud bandwidth to \(v_c\) over all nodes in B: \(b_c(B){\mathop {=}\limits ^\textrm{def}}\sum _{i\in B}b_c(i)\). The (strong) diameter of cluster B, denoted \(\textrm{diam}(B)\), is the maximum distance between any two nodes of B in the induced graph G[B]: \(\textrm{diam}(B) = \max _{u,v \in B} \textrm{dist}_{G[B]}(u, v)\).

We use the following definition for the network when ignoring the cloud. Note that the metric here is hop-based—w indicates link bandwidths.

Definition 2.5

Let \(G=(V,E,w)\) be a CWC system with processing nodes \(V_p\) and cloud nodes \(V_c\). The ball of radius r around node \(i\in V_p\), denoted \(B_{r}({i})\) is the set of nodes at most r hops away from i in \(G_p\).

Finally, we define the concept of cloud cluster of a node.

Definition 2.6

Let \(G=(V,E,w)\) be a CWC system with processing nodes \(V_p\) and cloud node \(v_c\), and let \(i\in V_p\).

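Given \(s\in {\mathbb {N}}\), the s-cloud radius of node i, denoted \(k_{s}({i})\), is defined to be

$$\begin{aligned} k_{s}({i}) {\mathop {=}\limits ^\textrm{def}}\min \left( \left\{ \textrm{diam}(G_p) \right\} \,\cup \, \left\{ k\mid \left( k\!+\!1\right) \cdot b_c(B_{k}({i})) \ge s \right\} \right) ~. \end{aligned}$$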

The ball \(B_{i}{\mathop {=}\limits ^\textrm{def}}B_{k_{s}({i})}({i})\) is the s-cloud cluster of node i. The timespan of the s-cloud cluster of i is denoted \(Z_{i}{\mathop {=}\limits ^\textrm{def}}k_{s}({i}) + \frac{s}{b_c(B_{i})}\).

We sometimes omit the s qualifier when it is clear from the context.
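The s-cloud cluster of a node can be computed by a breadth-first search that grows the ball one hop at a time. The Python sketch below follows the characterization of \(k({i})\) used in the proof of Lemma 2.10: the minimal radius \(\ell \le \textrm{diam}(G_p)\) with \((\ell +1)\cdot b_c(B_{\ell }({i}))\ge s\), and \(\textrm{diam}(G_p)\) if no such radius exists. The function names, the adjacency-dictionary representation, and the choice to pass the diameter as a parameter are assumptions of this illustration.

```python
from collections import deque


def hop_distances(adj, src):
    """BFS hop distances from src in the processor graph G_p."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def cloud_cluster(adj, cloud_bw, i, s, diam):
    """Return (k, ball, timespan) for node i and message size s.

    k is the smallest radius with (k + 1) * b_c(B_k(i)) >= s, capped at
    diam (the hop diameter of G_p, supplied by the caller).
    """
    dist = hop_distances(adj, i)
    for k in range(diam + 1):
        ball = {v for v, d in dist.items() if d <= k}
        bw = sum(cloud_bw[v] for v in ball)
        if (k + 1) * bw >= s or k == diam:
            timespan = k + s / bw if bw > 0 else float("inf")
            return k, ball, timespan
```

On a path of five nodes with unit cloud bandwidth and \(s=6\), the leftmost node gets radius 2, since \(3\cdot 3\ge 6\) while \(2\cdot 2<6\), and its timespan is \(2+6/3=4\).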

In words, \(B_{i}\) is a cluster of radius \(k({i}) \) around node i, where \(k({i})\) is the smallest radius that allows writing s bits to \(v_c\) by using all cloud bandwidth emanating from \(B_{i}\) for \(k({i})+1\) rounds. \(Z_{i}\) is the time required (1) to send s bits from node i to all nodes in \(B_{i}\), and (2) to upload s bits to \(v_c\) collectively by all nodes of \(B_{i}\). Note that \(B_{i}\) is easy to compute. We can now state our upper bound.

Theorem 2.8

Given a fat-links CWC system, Algorithm 1 solves the s-bits \(\textsf {cW} _i\) problem in \(O(Z_{i})\) rounds on \(B_{i}\).

Proof

The algorithm broadcasts all s bits to all nodes in \(B_{i}\), and then each node writes a subrange of the data whose size is proportional to its cloud bandwidth. Correctness is obvious. As for the time analysis: Steps 1–2 require \(O(k({i}))\) rounds. In the loop of steps 4–5, \(b_c(B_{i})\) bits are sent in every round, and thus it terminates in \(O({s}/{b_c(B_{i})})\) rounds. The theorem follows from the definition of \(Z_{i}\). \(\square \)
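The assignment of subranges in the proof above can be illustrated as follows. This is a sketch under stated assumptions rather than the pseudocode of Algorithm 1 (which is not reproduced in this section): the helper name `split_ranges` and the cumulative rounding rule are choices made here for illustration.

```python
def split_ranges(s, cloud_bw):
    """Split bit indices 0..s-1 into consecutive half-open ranges, one per node,
    with lengths proportional to the nodes' cloud bandwidths (up to rounding).

    cloud_bw: list of (node, bandwidth) pairs with positive total bandwidth.
    """
    total = sum(bw for _, bw in cloud_bw)
    ranges, start, cum = {}, 0, 0
    for node, bw in cloud_bw:
        cum += bw
        end = (s * cum) // total  # cumulative rounding keeps ranges contiguous
        ranges[node] = (start, end)
        start = end
    return ranges
```

Because the last boundary equals s, the ranges cover the whole string, and each node's share is within one bit of its proportional share, so all nodes finish uploading in roughly \(s/b_c(B_{i})\) rounds.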

Next, we show that our solution for \(\textsf {cW} _i\) is optimal, up to a constant factor. We consider the case of an incompressible input string: such a string exists for any size \(s\in {\mathbb {N}}\) (see, e.g., [30]). As a consequence, in any execution of a correct algorithm, s bits must cross any cut that separates i from the cloud node, giving rise to the following lower bound.

Theorem 2.9

Any algorithm solving \(\textsf {cW} _i\) in a fat-links CWC requires \(\Omega (Z_{i})\) rounds.

Proof

By definition, \(Z_{i} = k({i}) + {s}/{b_c(B_{i})}\). Lemmas 2.10 and 2.11 show that each term of \(Z_{i}\) is a lower bound on the running time of any algorithm for \(\textsf {cW} _i\). \(\square \)

Lemma 2.10

Any algorithm solving \(\textsf {cW} _i\) in a fat-links CWC system requires \(\Omega \left( k({i})\right) \) rounds.

Proof

Let A be an algorithm for \(\textsf {cW} _i\) that writes string S in \(t_A\) rounds. If \(t_A \ge \textrm{diam}(G_p)\) then \(t_A \ge k({i})\) and we are done. Otherwise, we count the number of bits of S that can get to the cloud in \(t_A\) rounds. Since S is initially stored in i, in a given round t, only nodes in \(B_{t-1}({i})\) can write pieces of S to the cloud. Therefore, overall, A writes to the cloud at most \(\sum _{t=1}^{t_A}b_c(B_{t-1}({i})) \le t_A\cdot b_c(B_{t_A-1}({i}))\) bits. Hence, by the assumption that A solves \(\textsf {cW} _i\), we must have \(t_A\cdot b_c(B_{t_A-1}({i})) \ge s\). The lemma now follows from the definition of \(k({i})\) as the minimal integer \(\ell \le \textrm{diam}(G_p)\) such that \((\ell \!+\!1) \cdot b_c( B_{\ell }({i}) ) \ge s\) if it exists (otherwise, \(k({i})=\textrm{diam}(G_p)\) and \(t_A>\textrm{diam}(G_p)\)). Either way, we are done. \(\square \)

Lemma 2.11

Any algorithm solving \(\textsf {cW} _i\) in a fat-links CWC system requires \(\Omega \left( {s}/{b_c({B_{i}})} \right) \) rounds.

Proof

Let A be an algorithm that solves \(\textsf {cW} _i\) in \(t_A\) rounds. If \(k({i})=\textrm{diam}(G_p)\) then \(B_{i}\) contains all processing nodes \(V_p\), and the claim is obvious, as no more than \(b_c({V_p})\) bits can be written to the cloud in a single round. Otherwise, \(k({i}) \ge {s}/{b_c({B_{i}})} - 1\) by Definition 2.6, and we are done since \(t_A = \Omega (k({i}))\) by Lemma 2.10. \(\square \)

By reversing time (and hence information flow) in a schedule of cW, one gets a schedule for cR. Hence we have the following immediate corollaries.

Theorem 2.12

\(\textsf {cR} _i\) can be executed in \(O(Z_{i})\) rounds in a fat-links CWC.

Theorem 2.13

\(\textsf {cR} _i\) in a fat-links CWC requires \(\Omega (Z_{i})\) rounds.

\(\blacktriangleright \) Remark: The lower bound of Theorem 2.9 and the definition of cloud clusters (Definition 2.6) show an interplay between the message size s, cloud bandwidth, and the network diameter. For large enough s, the cloud cluster of a node includes all processing nodes (because the time spent crossing the local network is negligible relative to the upload time), and for small enough s, the cloud cluster includes only the invoking node, rendering the local network redundant.

\(\blacktriangleright \) Large operands. Suppose that a given CWC system S is s-fat-links, and we need to implement an operation (e.g., cW) with operand size \(s'>s\). We can still use the algorithms from this section, at the cost of increasing the running time by a “scaling factor” of \(\lceil s'/s \rceil \). The idea is to first compute a schedule for the operation in a system \(S'\) which is identical to S except that all link bandwidths are multiplied by the scaling factor \(\lceil s'/s \rceil \). The schedule for \(S'\) is then emulated in S by executing each step of \(S'\) by \(\lceil s'/s \rceil \) steps of S.

We note that in this case the resulting schedule of S may be non-optimal, because our optimality arguments do not apply to the emulation. More concretely, suppose that the schedule produced for a certain operation in \(S'\) is T steps long. Then, by the lower bounds in this section, we know that the operation cannot be implemented in o(T) steps in \(S'\), implying the same lower bound for S (because S is less powerful than \(S'\)). The emulation outlined above shows that the operation can be implemented in S in \(\lceil s'/s \rceil T\) steps, so the best implementation time in S may be anywhere in the intersection of \(\Omega (T)\) and \(O\left( \lceil s'/s \rceil T\right) \).
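The range left open by the emulation argument can be written out as simple arithmetic. The sketch below is illustrative only: the function name is hypothetical, and the returned values are meaningful only up to the constant factors hidden in the \(\Omega \) and O notation.

```python
def emulation_bounds(T, s, s_prime):
    """Return (lower, upper) for the step count in S, up to constant factors.

    T: schedule length computed for the scaled system S'.
    s: the fat-links parameter of S; s_prime: the operand size, s_prime > s.
    """
    factor = -(-s_prime // s)  # integer ceiling of s_prime / s
    return T, factor * T
```

For example, with \(T=10\), \(s=4\) and \(s'=10\) the scaling factor is 3, so the emulated schedule takes 30 steps, while the lower bound remains on the order of 10 steps.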

3 Computing and writing combined values

In this section we consider combining operations, reminiscent of convergecast in CONGEST [36]. Note that flow-based techniques are not applicable in the case of writing a combined value, because the very essence of combining violates conservation constraints (i.e., the number of bits entering a node may be different from the number of bits leaving it).

In Sect. 3.1 we explain how to implement cComb in the general case using cAW and cAR. The implementation is simple and generic, but may be inefficient. We offer partial remedy in Sect. 3.2, where we present one of our main results: an algorithm to compute schedules for cComb in the special (but common) case, where \(\otimes \) is commutative and the local network has “fat links,” i.e., all local links have capacity at least s. For this important case, we show how to complete the task in time larger than the optimum by an \(O(\log n)\) factor.

3.1 Combining non-commutative operators in general graphs

We now present algorithms to compute schedules for cComb and for cCast on general graphs, using the primitives treated in Sect. 2. Note that with a non-commutative operator, the operands must be ordered; using renaming if necessary, we assume w.l.o.g. that in such cases the nodes are indexed by the same order as their operands.

Theorem 3.1

Let \(T_s\) be the time required for cAW (and cAR) when all files have size s. Then Algorithm 2 produces a schedule for cComb whose timespan is in \(O(T_s \log {n})\) rounds.

Proof

The idea is to do the combining over a binary “computation tree” by using the cloud to store the partial results. The computation tree is defined as follows (see Fig. 3). Let \(X_i^j\) denote the i-th node at level j, as well as the value of that node. The leaves \(X_i^0\) are the input values, and the value of an internal node \(X_{i}^{j+1}\) at level \(j+1\) with left child \(X_{2i}^{j}\) and right child \(X_{2i+1}^{j}\) is \(X_{2i}^{j}\otimes X_{2i+1}^{j}\). Pseudocode is provided in Algorithm 2. Correctness of the algorithm follows from the observation that after each execution of Step 4, there are m files of size s written in the cloud, whose product is the required output, and that m is halved in every iteration. If at any iteration m is odd, then node \(\lceil m/2 \rceil -1\) only needs to read one file, and therefore we set the other file that it reads to be \(\overline{{\textbf{1}}}\). When m reaches 1, there is only 1 file left, which is the required result.

As for the time analysis: Clearly, a single iteration of the while loop takes \(3T_s = O(T_s)\) rounds (see Footnote 3). There are \(\lceil \log n \rceil \) iterations due to Step 11. Step 15 is completed in \(O(T_s)\) rounds, and thus the total schedule time is \(O(T_s \log {n})\). \(\square \)
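The data flow of the computation tree can be simulated sequentially. The sketch below is not Algorithm 2 itself: it models the files stored in the cloud as a Python list, ignores the cAW and cAR schedules entirely, and only shows that pairing neighbours (padding with the unit element when the count is odd) preserves operand order and finishes in \(\lceil \log n \rceil \) iterations. The function name is hypothetical.

```python
def tree_combine(values, op, identity):
    """Combine values in order with an associative operator op.

    Returns (result, iterations). Each iteration halves the number of
    stored files, mimicking one level of the computation tree.
    """
    files = list(values)
    iterations = 0
    while len(files) > 1:
        if len(files) % 2 == 1:
            files.append(identity)  # the odd node reads the unit element
        files = [op(files[2 * j], files[2 * j + 1])
                 for j in range(len(files) // 2)]
        iterations += 1
    return files[0], iterations
```

String concatenation is a convenient non-commutative test operator: combining the five strings a, b, c, d, e yields abcde after three iterations.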

In a way, cCast is the “reverse” problem of cComb, since it starts with s bits in the cloud and ends with s bits of output in every node. However, cCast is easier than cComb because our model allows concurrent reads and disallows concurrent writes to the same memory location. We have the following result.

Theorem 3.2

Let \(T_s\) be the time required to solve cAR when all files have size s. Then cCast can be solved in \(T_s\) rounds.

Proof

First note that if there were n copies of the input file S in the cloud, then cCast and cAR would have been the exact same problem. The theorem follows from the observation that any algorithm for cAR with n inputs of size s in the cloud can be modified so that each invocation of \(\texttt {FR} \) with argument \(S_i\) is converted to FR with argument S (the input of cCast). \(\square \)

3.2 Combining commutative operators in fat links network

In some cases, we can implement cComb more efficiently than the promise of Theorem 3.1. Specifically, if the combining operator is commutative and the network is an s-fat-links network, we use the cloud clusters (as defined in Sect. 2.3) to do the combining in time which is at most a polylogarithmic factor larger than optimum. The saving is a result of using multiple concurrent cW and cR operations instead of cAW and cAR.

The high-level idea is simple. Assume that we are given a partition \({\mathcal {C}}=\left\{ B_1,\ldots ,B_k \right\} \) of the nodes set into clusters, i.e., \(\cup _i B_i=V_p\) and \(B_i\cap B_j=\emptyset \) for \(i\ne j\). We first compute the result of combining within each cluster \(B_i\) using the local network and then combine the cluster results using the cloud operations. Below, we first explain how to implement cComb using any given partition \({\mathcal {C}}\) of the nodes. We later show how to find good partitions.

Consider first combining within clusters. We assume that we are given a distinguished leader node \(r(B)\in B\) for each cluster \(B\in {\mathcal {C}}\). We proceed as follows (see Algorithm 3). First we construct, in each cluster \(B\in {\mathcal {C}}\), a spanning tree rooted at r(B). We then apply convergecast using \(\otimes \) over the tree. Clearly (see, e.g., [36]) we have:

Lemma 3.3

Algorithm 3 computes \(P_B = \bigotimes _{i\in B} S_i\) at node r(B) in \(O(\textrm{diam}(B))\) rounds.

When Algorithm 3 terminates in all clusters, the combined result of every cluster is stored in its leader. We combine the cluster results by filling in the values of a virtual computation tree defined over the clusters (see Fig. 3). The leaves of the tree are the combined values of the clusters of \({\mathcal {C}}\), as computed by Algorithm 3. To fill the values of other nodes in the computation tree, we use the clusters of \({\mathcal {C}}\): Each node in the tree is assigned a cluster which computes its value using the cR and cW primitives.

Specifically, in Algorithm 4 we consider a binary tree with \(|{\mathcal {C}}|\) leaves, where each non-leaf node has exactly two children. The tree is constructed from a complete binary tree with \(2^{\lceil \log |{\mathcal {C}}| \rceil }\) leaves, after deleting the rightmost \(2^{\lceil \log |{\mathcal {C}}| \rceil }-|{\mathcal {C}}|\) leaves. (If after this deletion the rightmost leaf is the only child of its parent, we delete the rightmost leaf and repeat until this is not the case.)

We associate each node y in the computation tree with a cluster \(\textrm{cl}(y)\in {\mathcal {C}}\) and a value \(\textrm{vl}(y)\), computed by the processors in \(\textrm{cl}(y)\). Clusters are assigned to leaves by index: The i-th leaf from the left is associated with the i-th cluster of \({\mathcal {C}}\). For internal nodes, we assign the clusters arbitrarily except that we ensure that no cluster is assigned to more than one internal node. (This is possible because in a tree where every node has two or no children, the number of internal nodes is smaller than the number of leaves.)

The clusters assigned to tree nodes compute the values as follows (see Algorithm 4). The value associated with a leaf \(y_{B}\) corresponding to cluster B is \(\textrm{vl}(y_{B})=P_B\). This way, every leaf x has \(\textrm{vl}(x)\), stored in the leader of \(\textrm{cl}(x)\), which can write it to the cloud using cW. For an internal node y with children \(y_l\) and \(y_r\), the leader of \(\textrm{cl}(y)\) obtains \(\textrm{vl}(y_l)\) and \(\textrm{vl}(y_r)\) using cR, computes their product \(\textrm{vl}(y)=\textrm{vl}(y_l)\otimes \textrm{vl}(y_r)\) and invokes \(\textsf {cW} \) to write it to the cloud. The executions of cW and cR in a cluster B are done by the processing nodes of B.

Computation tree values are filled layer by layer, bottom up. To analyze the running time we use the following definition.

Definition 3.1

Let \({\mathcal {C}}\) be a partition of the processing nodes into connected clusters, and let B be a cluster in \({\mathcal {C}}\). The timespan of node i in B, denoted \(Z_B(i)\), is the minimum number of rounds required to perform \(\textsf {cW} _i\) (or \(\textsf {cR} _i\)), using only nodes in B. The timespan of cluster B, denoted Z(B), is given by \(Z(B) = \min _{i\in B}Z_B(i)\). The timespan of the partition \({\mathcal {C}}\), denoted \({Z}({\mathcal {C}})\), is the maximum timespan of its clusters.

In words, the timespan of cluster B is the minimum time required for any node in B to write an s-bit string to the cloud using only nodes of B.

With these definitions, we can state the following result.

Lemma 3.4

Let \(\left\{ P_1,\ldots ,P_m \right\} \) be the values stored at the leaders of the clusters when Algorithm 4 is invoked. Then Algorithm 4 computes \(\bigotimes _{i=1}^m{P_i}\) in \(O({Z}({\mathcal {C}}) \cdot \log {|{\mathcal {C}}|})\) rounds.

Proof

Computing all values in a tree layer requires a constant number of cW and cR invocations in a cluster, i.e., by Definition 3.1, at most \(O({Z}({\mathcal {C}}))\) rounds of work in every layer. The number of layers is \(\lceil \log |{\mathcal {C}}| \rceil \). The result follows. \(\square \)

Combining Lemma 3.3 and Lemma 3.4, we can give an upper bound for any given partition \({\mathcal {C}}\).

Theorem 3.5

Given a partition \({\mathcal {C}}\) of the processing nodes where all clusters have diameter at most \(D_{\textrm{max}}\), cComb can be solved in a fat-links CWC in \(O\left( D_{\textrm{max}} + {Z}({\mathcal {C}}) \cdot \log |{\mathcal {C}}|\right) \) rounds.

\(\blacktriangleright \) Remark. We note that in Algorithm 4, Lines 6, 7 and 12 essentially compute cAR and cAW in which only the relevant cluster leaders have inputs. Therefore, these calls can be replaced with a collective call for appropriate cAR and cAW. By using optimal schedules for cAW and cAR, the running-time can only improve beyond the upper bound of Theorem 3.5.

Partitioning the nodes. We now arrive at the problem of designing a good partition. This is done as follows (see Algorithm 5). We consider the set of all s-cloud clusters (recall that a cloud cluster is defined for each processing node in Definition 2.6). From these clusters we pick a maximal set \({\mathcal {C}}_1\) of disjoint clusters, say by the greedy algorithm. We then extend the clusters of \({\mathcal {C}}_1\) to cover all nodes while keeping them disjoint, in a breadth-first fashion: each iteration of the while loop (line 4) adds another layer of uncovered nodes to each cluster. We have the following:

Lemma 3.6

The output of Algorithm 5 is a collection of disjoint clusters whose union is \(V_p\). Furthermore, the diameter of the largest cluster in the output is at most \(3\cdot D_{\max }\), where \(D_{\max }\) is the largest s-cluster diameter.

Proof

The disjointness of the sets in the output follows by induction: The initial clusters are disjoint by line 2, and thereafter, clusters are extended only by nodes which are not already members in other clusters in \({\mathcal {C}}_1\) (line 5). Clearly, by line 7, a node is added to a single cluster.

It is easy to see that when the algorithm terminates, the sets in the output cover all nodes: the distance of a node from \({\mathcal {C}}_1\) decreases by 1 in each iteration of the while loop. Finally, regarding the diameter of clusters in \({\mathcal {C}}_1\), note that by maximality of the input \({\mathcal {C}}_0\), each node not covered by \({\mathcal {C}}_1\) after the execution of line 2 is at distance at most \(D_{\max }\) from some node in \({\mathcal {C}}_1\). Since the diameter of each cluster increases by at most 2 in each iteration of the while loop, and since there are at most \(D_{\max }\) iterations, the lemma follows. \(\square \)
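The two phases of the partitioning procedure described above (a greedy choice of disjoint cloud clusters, followed by layer-by-layer growth) can be sketched in Python as follows. This is an illustration under stated assumptions, not the pseudocode of Algorithm 5: the function name, the dictionary-based inputs, and the tie-breaking rule (a node joins the cluster of its first covered neighbour) are choices made here.

```python
def partition_nodes(adj, cloud_clusters):
    """Partition the processing nodes into disjoint connected clusters.

    adj: dict node -> list of neighbours in the processor graph.
    cloud_clusters: dict node -> set of nodes (the cloud cluster of that node).
    """
    owner, clusters = {}, []
    # Phase 1: greedily pick a maximal set of pairwise disjoint cloud clusters.
    for ball in cloud_clusters.values():
        if all(v not in owner for v in ball):
            for v in ball:
                owner[v] = len(clusters)
            clusters.append(set(ball))
    # Phase 2: add one layer of uncovered nodes per iteration.
    while len(owner) < len(adj):
        layer = {}
        for u in adj:
            if u not in owner:
                for v in adj[u]:
                    if v in owner:
                        layer[u] = owner[v]  # u joins exactly one cluster
                        break
        if not layer:
            break  # remaining nodes are unreachable from any cluster
        for u, idx in layer.items():
            owner[u] = idx
            clusters[idx].add(u)
    return clusters
```

Each new layer is computed from the ownership at the start of the iteration, so a cluster grows by at most one hop in every direction per iteration, which matches the diameter increase of at most 2 used in the proof.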

Conclusion. We now arrive at our main result for this section. We use the following definition.

Definition 3.2

Let \(G=(V,E,w)\) be a CWC system with fat links. \({Z_{\max }}{\mathop {=}\limits ^\textrm{def}}\max _{i\in V_p} Z_{i}\) is the maximal timespan in G.

In words, \({Z_{\max }}\) is the maximal number of rounds that is required for any node in G to write an s-bit message to the cloud, up to a constant factor (cf. Theorem 2.9).

Theorem 3.7

Let \(G=(V,E,w)\) be a CWC system with fat links.

Then cComb with a commutative combining operator can be solved in \(O({Z_{\max }}\log n)\) rounds.

Proof

Let \({\mathcal {C}}\) be the partition output by Algorithm 5. By Lemma 3.6, the diameter of any cluster in \({\mathcal {C}}\) is at most 3 times the maximal diameter of any s-cloud cluster of the system. It follows from Lemma 3.3 that we can apply Algorithm 3 in each cluster \(B\in {\mathcal {C}}\) and compute its combined value in time proportional to its diameter, which is bounded by Z(B) (cf. Definition 2.6). Since the clusters are disjoint by Lemma 3.6, we can compute all these values in parallel in time \(O(Z({\mathcal {C}}))\). Finally, we invoke Algorithm 4, which produces the desired result in additional \(O({Z}({\mathcal {C}}) \cdot \log {|{\mathcal {C}}|})\) rounds by Lemma 3.4. \(\square \)

Our algorithm produces schedules which are optimal to within a logarithmic factor, as stated in the following theorem.

Theorem 3.8

Let \(G=(V,E,w)\) be a CWC system with fat links.

Then cComb requires \(\Omega (Z_{\max })\) rounds.

Proof

By reduction from cW. Let i be any processing node. Given a bit string S, assign \(S_i=S\) as the input of node i in cComb, and for every other node \(j \ne i\), assign \(S_j = \overline{{\textbf{1}}}\). Clearly, any algorithm for cComb that runs with these inputs solves \(\textsf {cW} _i\) with input S. The result follows from Theorem 2.9. \(\square \)

cCast. To implement cCast, one can reverse the schedule of cComb. However, a slightly better implementation is possible, because there is no need to ever write to the cloud node. More specifically, let \({\mathcal {C}}\) be a partition of \(V_p\). In the algorithm for cCast, each cluster leader invokes cR, and then the leader disseminates the result to all cluster members. The time complexity for a single cluster B is O(Z(B)) for the cR operation, and \(O(\textrm{diam}(B))\) rounds for the dissemination of S throughout B (similarly to Lemma 3.3). We obtain the following result.

Theorem 3.9

Let \(G=(V,E,w)\) be a CWC system with fat links. Then cCast can be performed in \(O({Z_{\max }})\) rounds.

Finally, we note that since any algorithm for cCast also solves the \(\textsf {cR} _i\) problem for every node i, we get from Theorem 2.13 the following result.

Theorem 3.10

Let \(G=(V,E,w)\) be a CWC system with fat links. Any algorithm solving cCast requires \(\Omega (Z_{\max })\) rounds.

4 Non-commutative operators and the wheel settings

In this section we consider cComb for non-commutative operators in the wheel topology (Fig. 1).

Trivially, Theorem 3.5 applies in the non-commutative case if the ordering of the nodes happens to match an ordering induced by the algorithm, but this need not be the case in general. However, it seems reasonable to assume that processing nodes are physically connected according to their combining order. Neglecting other possible connections, assuming that the last node is also connected to the first node for symmetry, and connecting a cloud node to all processors, we arrive at the wheel topology, which we study in this section.

Our main result in this section is an algorithm for cComb in the wheel topology that works in time which is a logarithmic factor larger than optimal. In contrast to the result of Theorem 3.7, which applies to any topology but requires fat links, here we analyze a particular topology with arbitrary bandwidths. We note that by using standard methods [27], the algorithm presented in this section can be extended to compute, with the same asymptotic time complexity, all prefix sums, i.e., compute \(\bigotimes _{i=0}^jS_i\) for each \(0\le j<n\).

4.1 Cloud intervals and the complexity of cW and cR in the wheel topology

We start by defining cloud intervals. Cloud intervals in the wheel settings correspond to cloud clusters in general topologies, defined in Sect. 2.3.

Definition 4.1

The cloud bandwidth of a processing node \(i \in V_p\) in a given wheel graph is \(b_c(i){\mathop {=}\limits ^\textrm{def}}w(i,v_c)\). An interval \([{i}, {i\!+\!k}]{\mathop {=}\limits ^\textrm{def}}\left\{ i,i\!+\!1,\ldots ,i\!+\!k \right\} \subseteq V\) is a path of processing nodes in the ring. Given an interval \(I=[i,i+k]\), \(|I|=k+1\) is its size, and k is its length. The cloud bandwidth of I, denoted \(b_c(I)\), is the sum of the cloud bandwidth of all nodes in I: \(b_c(I)=\sum _{i\in I}b_c(i)\). The bottleneck bandwidth of I, denoted \(\phi (I)\), is the smallest bandwidth of a link in the interval: \(\phi (I)=\min \left\{ w(i,i\!+\!1)\mid i,i\!+\!1\in I \right\} \). If \(|I|=1\), define \(\phi (I) = \infty \).

For ease of presentation we consider the “one sided” case in which node i does not use one of its incident ring links. As we shall see, this limitation does not increase the time complexity by more than a constant factor.

Definition 4.2

Let i be a processing node in the wheel settings. We define the following quantities for clockwise intervals; counterclockwise intervals are defined analogously.

-

\(k_c(i)\) is the length of the smallest interval starting at i, for which the product of its size by the total bandwidth to the cloud along the interval is at least s, i.e.,

$$\begin{aligned} k_c(i) = \min \left( \left\{ n \right\} \,\cup \, \left\{ k\mid \left( k\!+\!1\right) \cdot b_c([i,i\!+\!k]) \ge s \right\} \right) ~. \end{aligned}$$

-

\(k_\ell (i)\) is the length of the smallest clockwise interval starting at node i, for which the bandwidth of the clockwise-boundary link is smaller than the total cloud bandwidth of the interval, i.e.,

$$\begin{aligned} k_\ell (i) = \min \left( \left\{ n \right\} \,\cup \, \left\{ k\mid w(i\!+\!k,i\!+\!k\!+\!1) < b_c([{i}, {i\!+\!k}]) \right\} \right) ~. \end{aligned}$$

-

\(k({i})= \min \left\{ k_c(i), k_\ell (i) \right\} \).

-

\(I_{i} = [{i}, {i\!+\!k({i})}] \). The interval \(I_{i}\) is called the (clockwise) cloud interval of node i.

-

\(Z_{i} = |I_{i}| + \dfrac{s}{\phi (I_{i})} + \dfrac{s}{b_c(I_{i})}\). \(Z_{i}\) is the timespan of the (clockwise) cloud interval of i.
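The quantities of Definition 4.2 can be computed in a single clockwise scan starting at node i. The Python sketch below is an illustration under stated assumptions: nodes are numbered \(0,\ldots ,n-1\) clockwise, all bandwidths are positive, the list-based inputs and the function name are hypothetical, and when \(k({i})=n\) the interval is taken to contain each processing node once.

```python
def cloud_interval(cloud_bw, ring_bw, i, s):
    """Clockwise cloud interval of node i in a wheel with n processing nodes.

    cloud_bw[j]: bandwidth of the link between node j and the cloud.
    ring_bw[j]:  bandwidth of the ring link (j, j+1 mod n).
    Returns (k, nodes, timespan).
    """
    n = len(cloud_bw)
    k_c = k_l = n          # default value n when no k qualifies
    bw = 0                 # running value of b_c([i, i+k])
    for k in range(n):
        bw += cloud_bw[(i + k) % n]
        if k_c == n and (k + 1) * bw >= s:
            k_c = k
        if k_l == n and ring_bw[(i + k) % n] < bw:
            k_l = k
    k = min(k_c, k_l)
    size = min(k + 1, n)   # assumption: an interval never repeats a node
    nodes = [(i + j) % n for j in range(size)]
    links = [ring_bw[(i + j) % n] for j in range(size - 1)]
    phi = min(links) if links else float("inf")  # bottleneck bandwidth
    b_c = sum(cloud_bw[j] for j in nodes)
    return k, nodes, size + s / phi + s / b_c
```

In a uniform wheel with 8 nodes, cloud bandwidth 1, ring bandwidth 10 and \(s=16\), the scan stops at \(k=3\) because \(4\cdot 4\ge 16\), giving the interval \([0,3]\) and timespan \(4+16/10+16/4=9.6\).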

The concept of cloud intervals is justified by the following results.

Theorem 4.1

Given the cloud interval \(I_i\) of node i, Algorithm 1 solves the s-bits \(\textsf {cW} _i\) problem in \(O(Z_{i})\) rounds.

Proof

The BFS tree of the interval would be a simple line graph, that is the whole interval. Step 2 of Algorithm 1 requires \(O\left( |I_{i}| + {\frac{s}{\phi \left( I_{i} \right) }} \right) \) rounds: there are s bits to send over \(\Theta (|I_i|)\) hops with bottleneck bandwidth \(\phi (I_i)\). The rest of the time analysis is the same as in Theorem 2.8. \(\square \)

We have the following immediate consequence.

Theorem 4.2

Let \(Z_i^\ell \) and \(Z_i^r\) denote the timespans of the counterclockwise and the clockwise cloud intervals of i, respectively. Then \(\textsf {cW} _i\) can be solved in \(O(\min (Z_i^\ell ,Z_i^r))\) rounds.

The upper bounds are essentially tight, as we show next. We start with one sided intervals.

Theorem 4.3

In the wheel settings, any algorithm for \(\textsf {cW} _i\) which does not use link \(({i\!-\!1}, i)\) requires \(\Omega (Z_{i})\) rounds.

Proof

We show that each term of \(Z_{i}\) is a lower bound on the running time of any algorithm solving \(\textsf {cW} _i\). First, note that any algorithm for \(\textsf {cW} _i\) that does not use edge \(({i\!-\!1}, i)\) requires \(\Omega (k_c(i)) \ge \Omega \left( k({i})\right) = \Omega \left( |I_{i}|\right) \) rounds, due to the exact same arguments as in Lemma 2.10.

Next, we claim that any algorithm for \(\textsf {cW} _i\) which does not use edge \(({i\!-\!1}, i)\) requires \(\Omega ({s}/{\phi (I_{i})})\) rounds. To see this, note first that if \(k({i})=0\), then \(\phi (I_{i})=\infty \) and the claim is trivial. Otherwise, let \((j,j\!+\!1)\in E\) be any link in \(I_{i}\) with \(w({{j}, {j\!+\!1}}) = \phi \left( I_{i} \right) \). Note that \(j-i<k({i})\) because \(j+1\in I_i\). Consider the total bandwidth of links emanating from the interval \(I' {\mathop {=}\limits ^\textrm{def}}[{i}, {j}] \). Since we assume that the link \((i\!-\!1,i)\) is not used, the number of bits that can leave \(I'\) in t rounds is at most \(t\cdot \left( b_c(I')+ w({{j}, {j\!+\!1}})\right) \). Notice that at least s bits have to leave \(I'\). Observe that \(b_c(I') \le w({{j}, {j\!+\!1}})\), because otherwise we would have \(k_\ell (i)=j-i\), contradicting the fact that \(k(i)>j-i\). Therefore, any algorithm A that solves \(\textsf {cW} _i\) in \(t_A\) rounds satisfies

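$$\begin{aligned} s ~\le ~t_A\cdot \left( b_c(I')+ w({{j}, {j\!+\!1}})\right) ~\le ~ 2t_A \cdot w({{j}, {j\!+\!1}}) ~=~ 2t_A\cdot \phi \left( I_{i} \right) ~, \end{aligned}$$

and the claim follows.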

Finally, we claim that any algorithm A for \(\textsf {cW} _i\) which does not use edge \(({i\!-\!1}, i)\) requires \(\Omega \left( {s}/{b_c({I_{i}})} \right) \) rounds. To see that, recall that \(k({i}) = \min (k_c(i), k_\ell (i))\). If \(k({i})=n\) then \(I_{i}\) contains all processor nodes \(V_p\), and the claim is obvious, as no more than \(b_c({V_p})\) bits can be written to the cloud in a single round. Otherwise, we consider the two cases: If \(k({i}) = k_c(i)\), then \(k({i}) \ge {s}/{b_c({I_{i}})} - 1\) by definition, and we are done since \(t_A = \Omega (k({i}))\). Otherwise, \(k({i}) = k_\ell (i)\). Let us denote \(w_R=w({{i\!+\!k({i})}, {i\!+\!k({i})\!+\!1}})\). In this case we have \(w_R < b_c(I_{i})\). We count how many bits can leave \(I_{i}\). In a single round, at most \(b_c({I_{i}})\) bits can leave through the cloud links, and at most \(w_R\) bits can leave through the local links. Since A solves \(\textsf {cW} _i\), we must have \( s ~\le ~t_A\cdot \left( b_c({I_{i}}) +w_R\right) ~\le ~ 2t_A \cdot b_c({I_{i}}) ~,\) and hence \(t_A = \Omega \left( {s}/{b_c({I_{i}})} \right) \). \(\square \)

Theorem 4.4

Let \(Z_i^\ell \) and \(Z_i^r\) denote the timespans of the counterclockwise and the clockwise cloud intervals of i, respectively. Then \(\textsf {cW} _i\) requires \(\Omega (\min (Z_i^\ell ,Z_i^r))\) rounds in the wheel settings.

Proof

Let T be the minimum time required to perform \(\textsf {cW} _i\). Due to Lemma 2.3, we know that there is a dynamic flow mapping with time horizon T and flow value s from node i to the cloud. Let f be such a mapping. We assume that no flow is transferred to the source node i, as we can modify f so that these flow units would not be sent from i at all until the point where they were previously sent back to i. Let \(s_L\) and \(s_R\) be the total amount of flow that is transferred on links \((i-1,i)\) and \((i,i+1)\), respectively, and assume w.l.o.g. that \(s_R \ge s_L\). Let \(f'\) be a new dynamic flow mapping which is the same as f, except that no flow is transferred on link \((i-1,i)\). Since f is a valid dynamic flow that transfers all s flow units from i to the cloud, \(f'\) has flow value at least \(s-s_L\). Let A be a schedule derived from \(f'\). The runtime of A is at most T rounds. Let \(A'\) be a schedule that runs A twice: \(A'\) would transfer \(2(s-s_L)\) bits from node i to the cloud. Since \(s \ge s_R+s_L\), we get that: \(2(s-s_L) = 2\,s -2s_L \ge s + s_L + s_R -2s_L \ge s\), and thus \(A'\) solves \(\textsf {cW} _i\) without using link \((i-1,i)\). From Theorem 4.3, we get a lower bound for 2T of \(\Omega (Z_i^r) = \Omega (\min (Z_i^\ell ,Z_i^r))\). \(\square \)

From Theorem 4.2 and Theorem 4.4 we get the following corollary for the uniform wheel:

Corollary 4.5

Consider the uniform wheel topology, where all cloud links have bandwidth \(b_c\), and all local links have bandwidth \(b_\ell \ge b_c\). In this case cW can be solved in \(\Theta \left( {s\over b_\ell }+\min (\sqrt{s\over b_c},{b_\ell \over b_c})\right) \) rounds. If \(b_\ell <b_c\), the running time is \(\Theta (s/b_c)\) rounds.

Proof

If \(b_\ell <b_c\), then \(k_\ell (i)=0\) and \(\phi (I_i)=\infty \), and the result follows. Otherwise, by definition we have \(k_c(i)=\sqrt{s/b_c}-1\) and \(k_\ell (i)=b_\ell /b_c-1\), hence \(|I_{i}|=O(\min (\sqrt{s/b_c},b_\ell /b_c))\). It follows that \(b_c(I_{i})=O(\min (\sqrt{s\cdot b_c},b_\ell ))\). The result follows by noting that \(\phi \left( I_{i} \right) =b_\ell \). \(\square \)

Recall the example of Fig. 1: there we have \(b_c=\sqrt{s}\) and \(b_\ell \ge s^{3/4}\), and the running time is \(O(s^{1/4})\).
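The arithmetic behind this example can be checked numerically. The following is a minimal sketch that evaluates the expression inside the \(\Theta (\cdot )\) of Corollary 4.5 with all hidden constants dropped; the function name `uniform_wheel_cw_rounds` and the concrete value of s are assumptions made for illustration only.

```python
import math


def uniform_wheel_cw_rounds(s: float, b_c: float, b_l: float) -> float:
    """Expression inside the Theta(.) of Corollary 4.5, constants dropped.

    s   : file size in bits
    b_c : bandwidth of every cloud link
    b_l : bandwidth of every local link
    """
    if b_l < b_c:
        # Local links are too narrow to help; only the cloud link of i matters.
        return s / b_c
    return s / b_l + min(math.sqrt(s / b_c), b_l / b_c)


# Parameters of the Fig. 1 example: b_c = sqrt(s), b_l = s^(3/4).
s = 2.0 ** 20  # hypothetical file size, chosen so that all roots are exact
estimate = uniform_wheel_cw_rounds(s, b_c=math.sqrt(s), b_l=s ** 0.75)
print(estimate, s ** 0.25)
```

With these parameters each of the three quantities \(s/b_\ell \), \(\sqrt{s/b_c}\) and \(b_\ell /b_c\) equals \(s^{1/4}\), so the expression evaluates to \(2s^{1/4}\), in line with the \(O(s^{1/4})\) running time stated above.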

\(\blacktriangleright \) Remark. Notice that the same upper and lower bounds hold for the \(\textsf {cR} _i\) problem as well.

4.2 Combining in the wheel setting

We are now ready to adapt Theorem 3.5 to the wheel settings.

Definition 4.3

Given an n-node wheel, for each processing node i, let \(I_{i}\) be the cloud interval of i with the smaller timespan (clockwise or counterclockwise). Define \(j_{\max } = \operatorname{argmax}_i\left\{ |I_{i}| \right\} \), \(j_c = \operatorname{argmin}_i \left\{ b_c({I_{i}}) \right\} \), and \(j_\ell = \operatorname{argmin}_i \left\{ \phi \left( I_{i} \right) \right\} \). Finally, define \(Z_{\max } = |I_{j_{\max }}| + \frac{s}{\phi \left( I_{j_\ell } \right) } + \frac{s}{b_c({I_{j_c}})}\).

In words: \(j_{\max }\) is the node with the longest cloud interval, \(j_c\) is the node whose cloud interval has the least cloud bandwidth, and \(j_\ell \) is the node whose cloud interval has the narrowest bottleneck.
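To make Definition 4.3 concrete, the sketch below computes \(Z_{\max }\) from per-node interval statistics. The record type `IntervalStats`, the function `z_max`, and the sample numbers are hypothetical and serve only as an illustration; the point is that the three terms may be attained at three different nodes.

```python
import math
from typing import NamedTuple, Sequence


class IntervalStats(NamedTuple):
    """Statistics of the cloud interval I_i of one node (hypothetical record)."""
    size: int          # |I_i|
    bottleneck: float  # phi(I_i); math.inf if the interval has no local link
    cloud_bw: float    # b_c(I_i), total cloud bandwidth of the interval


def z_max(intervals: Sequence[IntervalStats], s: float) -> float:
    """Z_max of Definition 4.3 for files of s bits."""
    longest = max(iv.size for iv in intervals)           # |I_{j_max}|
    narrowest = min(iv.bottleneck for iv in intervals)   # phi(I_{j_l})
    least_cloud = min(iv.cloud_bw for iv in intervals)   # b_c(I_{j_c})
    return longest + s / narrowest + s / least_cloud


stats = [
    IntervalStats(size=4, bottleneck=8.0, cloud_bw=16.0),       # attains j_c
    IntervalStats(size=2, bottleneck=math.inf, cloud_bw=32.0),
    IntervalStats(size=6, bottleneck=4.0, cloud_bw=64.0),       # attains j_max and j_l
]
print(z_max(stats, s=64.0))  # 6 + 64/4 + 64/16
```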

Our upper bound is as follows.

Theorem 4.6

In the wheel settings, cComb can be solved in \(O({Z_{\max }}\log n)\) rounds.

The general approach to prove Theorem 4.6 is similar to the one taken in the fat-links case: partition the nodes into clusters (intervals in the wheel case), compute combined values in the clusters using local links, and then complete the computation tree using cR and cW. The third stage is identical to the fat-links case, but the first two are not. We elaborate on them now.

\(\blacktriangleright \) Partitioning the nodes. Given the set of cloud intervals, we construct a cover, i.e., a (not necessarily disjoint) set of cloud intervals whose union is \(V_p\), the set of all processing nodes. We can do this so that every node is a member in a constant number of intervals in the cover.

Specifically, let \({\mathcal {C}}\) be the set of all cloud intervals \(I_{i}\). We select a cover \({\mathcal {C}}' \subseteq {\mathcal {C}}\) such that every node is a member of either one or two intervals of \({\mathcal {C}}'\). It is straightforward to find such a cover, say, by a greedy algorithm. In fact, a cover with a minimal number of intervals is found by the algorithm in [29] (in \(O(n\log n)\) sequential time). The covers produced by [29] suffice for our purposes, as the following lemma states.

Lemma 4.7

Let \({\mathcal {C}}\) be a collection of intervals and denote \(U=\bigcup _{I\in {\mathcal {C}}}I\). Let \({\mathcal {C}}'\subseteq {\mathcal {C}}\) be a minimal-cardinality cover of U, and let \(\textrm{load}_{{\mathcal {C}}'}(i)= |\{I\in {\mathcal {C}}': I\ni i\}|\). Then for all \(i\in U\), \(1\le \textrm{load}_{{\mathcal {C}}'}(i)\le 2\).

Proof

Clearly \(\textrm{load}_{{\mathcal {C}}'}(i)\ge 1\) for all \(i\in U\) since \({\mathcal {C}}'\) is a cover of U. For the upper bound, first note that by the minimality of \(|{\mathcal {C}}'|\), there are no intervals \(I,I'\in {\mathcal {C}}'\) such that \(I\subseteq I'\), because in this case I could have been discarded. This implies that the right-endpoints of intervals in \({\mathcal {C}}'\) are all distinct, as well as the left-endpoints. Now, assume for contradiction, that there exist three intervals \(I,I',I''\in {\mathcal {C}}'\) such that \(I\cap I'\cap I''\ne \emptyset \) (i.e., there is at least one node which is a member of all three). Then \(I\cup I'\cup I''\) is a contiguous interval. Let \(l=\min (I\cup I'\cup I'')\) and \(r=\max (I\cup I'\cup I'')\). Clearly, for one of the three intervals, say I, no endpoint is l or r. But this means that \(I\subseteq I'\cup I''\), i.e., we can discard I, in contradiction to the minimality of \(|{\mathcal {C}}'|\). \(\square \)
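Lemma 4.7 can be checked on small instances. The sketch below uses the standard greedy rule for intervals on a line (repeatedly pick, among the intervals containing the leftmost uncovered node, the one reaching farthest to the right) and then verifies that every node has load 1 or 2. It is an illustration under simplifying assumptions: it handles linear rather than circular intervals, it is not the algorithm of [29], and the names `minimal_cover` and `load` are hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # closed integer interval (lo, hi)


def minimal_cover(intervals: List[Interval]) -> List[Interval]:
    """Greedy minimum-cardinality sub-collection covering the union of `intervals`."""
    pending = sorted(set(intervals))
    cover: List[Interval] = []
    covered_upto = float("-inf")  # rightmost node covered so far
    idx = 0
    while idx < len(pending):
        lo, hi = pending[idx]
        if hi <= covered_upto:
            idx += 1  # this interval adds no new node
            continue
        target = max(lo, covered_upto + 1)  # leftmost node still uncovered
        best = pending[idx]
        # Among all intervals starting at or before `target`, keep the one
        # that extends farthest to the right.
        while idx < len(pending) and pending[idx][0] <= target:
            if pending[idx][1] > best[1]:
                best = pending[idx]
            idx += 1
        cover.append(best)
        covered_upto = best[1]
    return cover


def load(cover: List[Interval], node: int) -> int:
    """Number of intervals of the cover that contain `node`."""
    return sum(lo <= node <= hi for lo, hi in cover)


collection = [(0, 3), (1, 2), (2, 6), (3, 5), (5, 9), (6, 7), (8, 9)]
cover = minimal_cover(collection)
print(cover, [load(cover, v) for v in range(10)])
```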

After selecting the minimal cover, we need to take care of node 0: We require that the interval that contains node 0 does not contain node \(n-1\). If this is not the case after computing the cover, we split the interval \(I_0 \in {\mathcal {C}}'\) that contains node 0 into two subintervals \(I_0=I_0^L \cup I_0^R\), where \(I_0^L\) is the part that ends with node \(n-1\), and \(I_0^R\) is the part that starts with node 0.

\(\blacktriangleright \) Computing within intervals. Given a cover \({\mathcal {C}}\), we compute the combined value within each cloud interval in \({\mathcal {C}}\). We now explain how this is done. First, we require that each input \(S_i\) is associated with a single interval in \({\mathcal {C}}\). To this end, we use the rule that if a node i is a member of two intervals I and \(I'\), then its input \(S_i\) is associated with the interval I satisfying \(\max (I)<\max (I')\), and a unit input \(\overline{{\textbf{1}}}\) is associated with i in the context of \(I'\), where \(\overline{{\textbf{1}}}\) is the unit (neutral) operand for \(\otimes \). Intuitively, this rule means that the overlapping region of two intervals is associated with the “left” (counterclockwise) interval.

To do the computation, we again apply the computation-tree approach, this time within each interval. Consider an interval I, and let \(p=2^{\lceil \log |I| \rceil }\). We map I to a complete binary tree with p leaves, where the leftmost \(p-|I|\) leaves have the unit input \(\overline{{\textbf{1}}}\). These leaves are emulated by the leftmost node of I (the emulation is trivial). The actual computation proceeds in stages, where each stage \(\ell \) computes all level-\(\ell \) products in parallel. Let \(S_i^j\) denote the product of \(S_i,\ldots ,S_j\). The algorithm maintains the invariant that after \(S_i^j\) is computed, it is stored in node j. Initially, by assumption, for all \(0\le i< n\), we have that \(S_i^i\) is stored at node i. The computation of a stage is performed as follows.

Let \(S_i^{j}=S_i^k\otimes S_{k+1}^j\) be a product we wish to compute at level \(\ell \), and let \(S_i^k, S_{k+1}^j\) be the values held by its children. Note that \(k+1-i=j-k=2^{\ell -1}\). The algorithm forwards \(S_{i}^k\) from node k to node j, which multiplies it by (the locally stored) \(S_{k+1}^j\), thus computing \(S_i^j\), which is stored in node j for the next level. This way, the number of communication rounds is just the time required to forward s bits from k to j. Using pipelining, the number of rounds required is

\( O\left( 2^{\ell -1} + \frac{s}{\phi (I)} \right) ~. \qquad (9) \)

We therefore have the following lemma.

Lemma 4.8

Computing the combined value of an interval I can be done in \(O\left( |I_{j_{\max }}|+\log |I_{j_{\max }}|\cdot {s\over \phi (I_{j_\ell })}\right) \) rounds in the wheel settings, with \(P_I\) stored in the rightmost node of I for each cloud interval I.

Proof

We compute the values as described above. Correctness is obvious. Regarding time complexity, we conclude from Eq. 9 that the total time required to compute the product of all inputs of any interval I is at most

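\( \sum _{\ell =1}^{\lceil \log |I| \rceil } O\left( 2^{\ell -1} + \frac{s}{\phi (I)} \right) ~=~ O\left( |I| + \log |I| \cdot \frac{s}{\phi (I)} \right) ~\subseteq ~ O\left( |I_{j_{\max }}| + \log |I_{j_{\max }}| \cdot \frac{s}{\phi (I_{j_\ell })} \right) ~.\)

\(\square \)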
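The staged computation within a single interval can be simulated sequentially. The sketch below pads the inputs on the left with unit operands, combines level by level while keeping each partial product at the right end of its block, and tallies a round estimate. Two assumptions are made for illustration: the per-level cost is taken to be \(2^{\ell -1}+\lceil s/\phi \rceil \) rounds (a pipelined forwarding cost model, which overestimates the distance for padded leaves), and the function name `combine_interval` is hypothetical. String concatenation is used as a non-commutative operator so that ordering errors would be visible.

```python
import math
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def combine_interval(inputs: List[T], op: Callable[[T, T], T], unit: T,
                     s: int, phi: int) -> Tuple[T, int]:
    """Simulate tree combining in one interval; return (product, round estimate)."""
    size = len(inputs)
    p = 1 << (size - 1).bit_length()             # p = 2^ceil(log |I|)
    held = [unit] * (p - size) + list(inputs)    # held[j]: value stored at leaf j
    rounds = 0
    half = 1                                     # half = 2^(l-1) at level l
    while half < p:
        for j in range(2 * half - 1, p, 2 * half):
            k = j - half
            # S_i^j = S_i^k (x) S_{k+1}^j; the left operand travels from k to j.
            held[j] = op(held[k], held[j])
        # Assumed cost model: hop distance plus pipelined transfer of s bits.
        rounds += half + math.ceil(s / phi)
        half *= 2
    return held[p - 1], rounds


product, rounds = combine_interval(list("abcde"), lambda x, y: x + y, "", s=8, phi=4)
print(product, rounds)
```

In this run \(|I|=5\), so \(p=8\) and there are three levels; the round estimate is \((1+2+4)+3\cdot \lceil 8/4\rceil =13\), and the product preserves the input order.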

Finally, we have to deal with the possible overlap of intervals in the cover. This is done by simple time multiplexing: by Lemma 4.7, each node is contained in at most two intervals, and hence the even-numbered intervals (numbered starting from the interval containing node 0) are pairwise disjoint, and so are the odd-numbered intervals. Time multiplexing is done by activating all even-numbered intervals at every even time slot, and all odd-numbered intervals at every odd time slot. This way we can compute the combined values of all intervals in parallel, with only a constant-factor increase in the asymptotic complexity stated in Lemma 4.8.
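The even/odd split can be illustrated as follows. This is a minimal sketch for intervals on a line (the wrap-around at node 0 is ignored here), with hypothetical helper names `multiplex` and `pairwise_disjoint` and a made-up cover in which every node has load at most two.

```python
from itertools import combinations
from typing import List, Tuple

Interval = Tuple[int, int]  # closed integer interval (lo, hi)


def multiplex(cover: List[Interval]) -> Tuple[List[Interval], List[Interval]]:
    """Split a cover, ordered by left endpoint, into even- and odd-numbered intervals."""
    ordered = sorted(cover)
    return ordered[0::2], ordered[1::2]


def pairwise_disjoint(group: List[Interval]) -> bool:
    """True if no two intervals of the group share a node."""
    return all(a[1] < b[0] or b[1] < a[0] for a, b in combinations(group, 2))


# Hypothetical cover with load at most 2 at every node.
even_slot, odd_slot = multiplex([(0, 3), (2, 6), (5, 9), (9, 12)])
print(even_slot, pairwise_disjoint(even_slot))
print(odd_slot, pairwise_disjoint(odd_slot))
```

Intervals that share a time slot never share a node, so they can run their local computations simultaneously without contending for links.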

\(\blacktriangleright \) Combining the interval values. After computing the combined value of each interval of C, we combine these values in a computation-tree fashion using cW and cR, as in Algorithm 4. Again, we have to deal with the overlap, and we do it using multiplexing. A slight complication here is due to the possible splitting of the cloud interval that contains node 0: the cR and cW operations still require the full cloud interval. To solve this problem, we multiplex the parallel invocations of cR and cW over four time slots: one for the even-numbered intervals excluding the interval containing node 0, two for the interval containing node 0 (one for each of its sub-intervals), and one for the odd-numbered intervals.

We have the following lemma.

Lemma 4.9

Given the interval combined values, the root value of the computation tree is computed in \(O({Z_{\max }}\cdot \log n)\) rounds.

Proof

As explained above, by employing time multiplexing, we can run Algorithm 4 with a constant-factor slowdown. Thus, similarly to Lemma 3.4, completing the computation tree requires \(O({Z_{\max }}(C') \cdot \log |C'|)\) rounds. Noting that \(|C'|\le |C| \le n\) and that all intervals in \(C'\) are cloud intervals, we get an upper bound of \(O({Z_{\max }}\cdot \log n)\) rounds. \(\square \)

The proof of Theorem 4.6 can now be completed as follows. The correctness of the algorithm is derived from the general case (Theorem 3.5). As for the time analysis: The low levels of the algorithm (computing within intervals) require \(O({Z_{\max }}\log n)\) rounds according to Lemma 4.8, noting that \(|I_{j_{\max }}|\le n\) and that \(Z_{\max } = |I_{j_{\max }}| + \frac{s}{\phi \left( I_{j_\ell } \right) } + \frac{s}{b_c({I_{j_c}})}\) by definition. The high levels of the algorithm (completing the computation tree using cR and cW) require \(O({Z_{\max }}\cdot \log {n})\) rounds according to Lemma 4.9. All in all, the algorithm terminates in \(O({Z_{\max }}\cdot \log {n})\) rounds. \(\square \)

We close our treatment of the wheel topology with the lower bound.

Theorem 4.10

Any algorithm for cComb in the wheel topology requires \(\Omega (Z_{\max })\) rounds.

Proof

Similarly to Theorem 3.8, \(\Omega (Z_i)\) is a lower bound for every node i, by reduction from \(\textsf {cW} _i\). Recall that \(Z_{i} = {|I_{i}| + {{s}\over {\phi \left( I_{i} \right) }} + {{s}\over {b_c({I_{i}})}}}\). For index \(j_{\max }\) we get a lower bound of \(\Omega (Z_{j_{\max }})\), which is \(\Omega (|I_{j_{\max }}|)\). For index \(j_\ell \) we get a lower bound of \(\Omega ({{s}/{\phi \left( I_{j_\ell } \right) }})\). For index \(j_c\) we get a lower bound of \(\Omega \left( {s}/{b_c({I_{j_c}}) }\right) \). Since the maximum of these three bounds is at least a third of their sum, we obtain the desired lower bound of \(\Omega (Z_{\max })\). \(\square \)

Remark. We note that if the combining operator can be applied to the operands in a piecewise fashion (as in vector addition, where a coordinate of the sum can be computed based only on the corresponding values of the coordinates of the summands), tighter pipelining is possible, yielding overall complexity which is essentially \(O(Z_{\max }+\log n)\). Details can be found in [21].

5 CWC applications

In this section we briefly explore how the results of this paper apply to two slightly more involved applications, namely Federated Learning (Sect. 5.1) and File Deduplication (Sect. 5.2).

5.1 Federated learning in CWC