Abstract

University rankings are gaining importance worldwide. While the top-ranked universities are the subject of numerous academic publications, very little literature is available on universities in the lower two-thirds of the ranking list. In this study, we analysed the sensitivity of year-to-year changes in position in the Times Higher Education World University Rankings (THE) of universities from the Czech Republic, Hungary, Poland, and Slovakia using hypothetical scenarios of potential changes in their research outputs. A regression model was developed that quantifies the effect on the ranking list of the inclusion of institutions that are new to the ranking, as well as of dropouts. We also developed a method to allow customized sensitivity analysis of the subsequent year's rank from an institution's projected research output, given its current rank and research output, assuming that other ranking indicators remain unchanged over 1–2 years. Most universities in the region have ranked below 800, and although research output has been increasing, ranks worsened for most universities from these four countries. The Field-Weighted Citation Impact and the total volume of publications had the highest effect on rankings, while the proportion of Scimago Q1 publications did not have a consistent effect on the projected next year's ranks. In most universities, the growth in research performance indicators was below the level that would be required to offset the rank changes due to the inclusion of new entrants in the ranking. The findings of this research suggest that universities have to consider complex strategies to improve their ranking, focusing on activities other than research, such as reputation, internationalization, or industry income. With due caution, our results might be generalized to most of the universities ranked below 800 in the THE.
The rank prediction tool presented in this article is available online at https://hecon.uni-obuda.hu/en/rank-calculator/.

Introduction

University rankings attract global attention by serving as a reference point for students (Dearden et al., 2019; Wut et al., 2022). High rankings are recognised by governments and partner institutions, positively influencing student recruitment, research funding, and global partnerships (Anowar et al., 2015; Grewal et al., 2008). Despite some critical voices (Thorp, 2023; Vernon et al., 2018), improving university rank positions has become part of the political agenda in many countries across Asia and the European Union (Dong et al., 2020; Whittle & Rampton, 2020; Galgánková, 2020; Kowalska et al., 2018; Łącka & Brzezicki, 2020; Lannert & Derényi, 2021). The resulting competition drives universities to adopt innovative practices and to invest in research, infrastructure, and facilities to maintain their top ranks (Huenneke et al., 2017). Governments often provide financial incentives for the attainment of top-tier rankings (Benito et al., 2019; OECD, 2007; Marope et al., 2013), motivating universities to launch higher educational excellence programs and improve their ranking positions (Taylor & Braddock, 2007).

Higher education excellence is shaped by a variety of economic, societal and cultural factors (Pietrucha, 2018; Tijssen & Winnink, 2018). The best-known ranking systems, Quacquarelli Symonds (QS) and Times Higher Education (THE) (Ciubotaru, 2022), are based on indicators of research reputation, the learning and teaching environment, citations, internationalisation, and industry income (QS, 2021; THE, 2022). There is a wealth of research on modelling global university ranks using their performance indicator components (Agarwal & Tayal, 2022; Anahideh & Mohabbati-Kalejahi, 2022; De Luna Pamanes et al., 2020; Kaycheng, 2015; Liu et al., 2022; Tabassum et al., 2017), or independent variables including financial, scientific, staff-related metrics, or past ranks (Cantwell & Taylor, 2013; Marconi & Ritzen, 2015; McAleer et al., 2019; Pride & Knoth, 2018). The applied techniques included a range of regression (Cantwell & Taylor, 2013; De Luna Pamanes et al., 2020; Kaycheng, 2015; Liu et al., 2022; Marconi & Ritzen, 2015; McAleer et al., 2019) and machine-learning models (Agarwal & Tayal, 2022; De Luna Pamanes et al., 2020; Liu et al., 2022; Popoola et al., 2018; Tabassum et al., 2017). A number of studies suggest that the performance indicators of THE ranking are highly correlated, and university ranks are mainly predicted by two factors: academic reputation and citations (De Luna Pamanes et al., 2020; Kaycheng, 2015).

University rankings are often analysed in the context of universities that are considered close competitors or peers (Anahideh & Mohabbati-Kalejahi, 2022; Grewal et al., 2008). In our research, we analyse rankings of universities of the Visegrad Group (hereafter V4) countries, namely Poland, Hungary, the Czech Republic, and Slovakia (Table 1) (Schmidt, 2016). Most institutions in this region occupy positions below the top 800 in the World University Rankings (Vašenda, 2019). The V4 countries have started to invest increasingly in higher education and research, aiming to enhance the scientific output, internationalisation, and ranking positions of their universities (‘V4 UniVisibility Program’, 2021). While improving visibility in the global reputation surveys, changing staff composition, internationalisation or building industrial relations may take years, changes in scientific output can impact ranking positions in the short term (Robinson-Garcia et al., 2019). As increasing research output is viewed as the most impactful short-term strategy to improve ranking positions, most V4 universities have increased their publication output over the past years. Despite such efforts, the ranking positions of V4 universities have shown an almost uniformly declining trend over the same time period. Hence, understanding the sensitivity of ranking positions to changes in scientific performance may provide valuable insights to decision-makers when they develop strategic targets for their institutions.

While research on the highest-ranked universities is abundant (Cantwell & Taylor, 2013; De Luna Pamanes et al., 2020; Kaycheng, 2015; Szluka et al., 2023), analysis of the lower end of the list is scarce. Top positions on the ranking lists are relatively stable (Grewal et al., 2008; Safón, 2019), but exact positions below rank 600 are volatile and difficult to predict (Liu et al., 2022; Osipov et al., 2020). The number of universities ranked by THE increased from 200 in 2011 to 1800 in 2023 (World University Rankings, 2023). Several reputational elements, such as the Matthew effect or halo effect, have stabilising effects on the positions of top-ranked universities (Grewal et al., 2008; Safón, 2019), while the positions of lower ranked institutions are challenged by both their incumbent competitors and new entries on the list. Entering the competition for top positions in global university rankings is a priority in many countries’ higher educational policy (Lee et al., 2020), but there is limited research on quantifying the effect of new entrants on ranking positions, which is beyond the control of incumbent universities. Furthermore, while reputation seems to be a key factor in determining the rankings of top universities (Grewal et al., 2008; Safón, 2019), anecdotal evidence suggests that reputation is hardly measurable in the lower end of the ranking lists, leading to a proportionally greater influence of research output, a factor that institutions can control (Pandiella-Dominique et al., 2018).

While research output is increasing, the setback in the rankings of V4 universities represents a concern in the face of growing competition for higher ranking positions. There is a research gap in understanding the evolution and sensitivity of the V4 universities’ rankings to changes in their research output. This motivated our objective to analyse the relationship between the research output of V4 universities and their global rankings. We aimed to understand how the ranks of the V4 region’s universities have evolved over recent years, what the effect of new entries on rankings is, and how changes in the institutions’ research performance indicators (RPIs) relate to their next year’s THE ranking positions. Furthermore, we explored how sensitive next year’s rankings are to various research performance patterns of V4 universities, assuming that other performance indicators remain stable over 1–2 years. Finally, we developed a method to allow customised sensitivity analysis of next year’s rank from an institution’s planned research output, given its current rank and research output.

Methods

We combine three separate datasets in our analysis covering four calendar years from 2019 until 2022: we calculate the exact ranks of V4 universities using performance indicators published by THE, count new entrants and dropouts on the THE list, and collect RPIs of universities from the V4 region. We then visualise the evolution of rankings and RPIs over time, examine the relationship between rankings and RPIs, quantify the effect of new entries on ranking positions, evaluate the sensitivity of THE rankings to changes in RPIs using a regression model, and propose a rank prediction method.

Data sources

We collected published university ranks and THE performance indicator scores for exact rank calculations from the website of Times Higher Education World University Rankings (www.timeshighereducation.com) (THE, 2022). RPIs were collected from the SciVal database (www.scival.com). Both SciVal and THE process data from the Scopus database, so the RPIs used in this research reflect the part of universities’ research output that affects their THE rankings. Our analysis did not include regional or other specialised rankings. The 2023 THE ranking refers to the edition published in 2022.

Exact THE ranks

From ranks 201, 401, 601 and 1201 onwards, the THE list presents rankings in widening bands of 50, 100, 200 and 300 ranks, respectively. Therefore, for all V4 universities on the list, we calculated exact ranks for five years from 2019 until 2023 using the THE method. We validated the accuracy of our method on exact ranks published for the top 200 universities (Pearson r > 0.999, p < 0.001). The THE methodology incorporates five key categories of indicators, namely teaching, research, citations, international outlook, and industry income, with weights of 30%, 30%, 30%, 7.5%, and 2.5%, respectively (THE, 2022). The performance indicator scores were used only for the calculation of exact ranks and were not included among the RPIs used in the sensitivity analysis. Throughout this paper, smaller rank numbers will be referred to as better or higher ranks, and greater rank numbers as worse or lower ranks on the THE World University Ranking list. A decrease in rank numbers will denote improvement in an institution’s ranking position. We note that some universities may share the same exact rank, which is compensated for at the end of each THE rank range. For example, in 2022 in the 601–800 range, six ranks were each shared by two universities. Ranks 795–800 were not assigned in this range, and rank 794 was followed by rank 801. Within the 200 rank positions in this range, 194 ranks were assigned to 200 institutions. Between 2019 and 2022, the correlation between the unique rank positions and assigned THE ranks was near-perfect (r > 0.999) in the 400+ range, so we ignored the effect of shared ranks in our calculations.
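The exact-rank idea can be illustrated with a minimal sketch: combine the five pillar scores using the weights quoted above and order institutions by the resulting overall score. The institution names and scores below are hypothetical, the function names are illustrative, and the sketch assumes that published pillar scores can be combined directly as a weighted sum; it is not a reproduction of the full THE procedure.

```python
# Pillar weights as quoted in the text (THE, 2022).
WEIGHTS = {
    "teaching": 0.30,
    "research": 0.30,
    "citations": 0.30,
    "international_outlook": 0.075,
    "industry_income": 0.025,
}


def overall_score(scores):
    """Weighted sum of the five pillar scores (each assumed to be on a 0-100 scale)."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


def exact_ranks(institutions):
    """Return {institution: rank}, rank 1 being the highest overall score.

    Ties keep their input order, which is a simplification: the text notes
    that tied institutions may share a rank on the real list.
    """
    ordered = sorted(
        institutions,
        key=lambda name: overall_score(institutions[name]),
        reverse=True,
    )
    return {name: position for position, name in enumerate(ordered, start=1)}


# Hypothetical pillar scores, for illustration only.
example = {
    "University A": {"teaching": 20, "research": 15, "citations": 40,
                     "international_outlook": 50, "industry_income": 40},
    "University B": {"teaching": 25, "research": 20, "citations": 30,
                     "international_outlook": 45, "industry_income": 38},
    "University C": {"teaching": 18, "research": 12, "citations": 55,
                     "international_outlook": 60, "industry_income": 35},
}
ranks = exact_ranks(example)
```

Applied to the full list of ranked institutions, an ordering of this kind yields a position inside a published band (for example 601–800) rather than only the band itself.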

Rank shift due to new entrants and dropouts in ranking

Using the lists of ranked institutions from the THE homepage (THE, 2023), we recorded the number of new institutions added to the ranking list (new entrants) and those removed from the list (dropouts) in each year from 2019 to 2022. Institutional name changes were matched, and merged institutions were also identified. We collected data on new entrants and dropouts for each 100-rank interval on the list (e.g., new entrants and dropouts between ranks 1–100, 101–200, etc.). It follows from the properties of ordered sets that new entrants and dropouts in higher positions change the rankings of institutions in lower positions on the list. For example, the rank number of an institution ranked 600 is increased by new entrants and decreased by dropouts between ranks 1–600, while an institution ranked 800 is also affected by new entrants and dropouts between ranks 601–800. We denoted the net change from new entrants and dropouts as “rank shift.” As new institutions typically enter THE rankings anywhere between ranks 200 and 1800, rank shifts at specific rank positions can be modelled via a continuous function. Therefore, we fit a polynomial curve on the rank shifts recorded in 100-rank intervals in each year (see below).
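The cumulative nature of the rank shift can be sketched as follows. The per-band counts are hypothetical and the function name is illustrative; the sketch assumes that the shift at the lower end of a band equals the running total of net new entrants (entrants minus dropouts) in that band and in all better-ranked bands.

```python
from itertools import accumulate


def rank_shift_by_band(new_entrants, dropouts):
    """Cumulative net rank shift at the lower end of each 100-rank band.

    Both arguments list counts per band, ordered from the best band
    (ranks 1-100) downwards. A positive shift means a worse rank number.
    """
    net_change = [entered - left for entered, left in zip(new_entrants, dropouts)]
    return list(accumulate(net_change))


# Hypothetical counts for the bands 1-100, 101-200, ..., 401-500.
new_entrants = [0, 1, 2, 4, 6]
dropouts = [0, 1, 1, 1, 2]
shifts = rank_shift_by_band(new_entrants, dropouts)
```

In this toy example an institution at the end of the fifth band is pushed down by eight positions, although only four net entrants appeared in its own band.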

Research performance indicators

To follow the THE ranking methodology, we collected five-year running average values of the RPIs of each institution from SciVal (2023). For year t, we calculated the average of the preceding five years (denoted with the subscript 5t). Changes in RPIs in year t entered our analyses in the form of growth ratios. The growth ratio for year t was calculated by dividing the 5-year average RPI for year t by the 5-year average for year t − 1. The growth rate refers to the difference between the 5-year average RPIs of two years divided by the 5-year average of the RPI in the base year. (A growth ratio of 1.1 corresponds to a growth rate of 10%.) We use the term year-on-year growth rate when the values of two single years, rather than 5-year averages, are compared.
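A small sketch may make the distinction between growth ratio and growth rate concrete. The publication counts are hypothetical and the function names are illustrative; the sketch also assumes that the 5-year window for year t covers years t − 5 to t − 1, which is one reading of "the preceding five years".

```python
def five_year_average(yearly_values, year):
    """Mean of the five years preceding `year` (assumed window: year-5 .. year-1)."""
    window = [yearly_values[y] for y in range(year - 5, year)]
    return sum(window) / len(window)


def growth_ratio(yearly_values, year):
    """5-year average for `year` divided by the 5-year average for `year - 1`."""
    return five_year_average(yearly_values, year) / five_year_average(yearly_values, year - 1)


def growth_rate(yearly_values, year):
    """Growth rate corresponding to the growth ratio (a ratio of 1.1 is a rate of 0.10)."""
    return growth_ratio(yearly_values, year) - 1.0


# Hypothetical yearly publication counts of one institution.
publications = {2016: 100, 2017: 110, 2018: 120, 2019: 130, 2020: 140, 2021: 150}
ratio_2022 = growth_ratio(publications, 2022)  # 130 / 120
rate_2022 = growth_rate(publications, 2022)
```

Because consecutive 5-year windows share four years, the growth ratio of the running average moves more slowly than the year-on-year change of the underlying yearly values.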

Contributing to 30% of THE ranking (THE, 2022), the citation score is based on the Field-Weighted Citation Impact (FWCI). FWCI is calculated from citations received by an institution’s publications relative to the average number of citations expected from similar publications from the same year, having the same type and discipline in the Scopus database. FWCI reflects the research influence of an institution relative to the average of its scientific field (Scopus, 2022). We denoted the 5-year average FWCI in year t as FWCI5t. Also, as a proxy to research volume and reputation, we included the 5-year average of the total volume of scientific output (VT5t) including all publications indexed in Scopus with the institution’s affiliation. We also collected the 5-year average of the number of Scimago Q1 publications from the institution (VQ5t) (SJR, 2023). To reflect the proportion of top-tier journals in research output, we computed the proportion of Q1 publications within the total volume of publications, as shown in Eq. (1).

\[{P}_{Q5t}=\frac{{V}_{Q5t}}{{V}_{T5t}}\qquad (1)\]

where \({P}_{Q5t}\) denotes the proportion of Q1 publications, \({V}_{Q5t}\) the number of Q1 publications over the last five years in the Scimago database and \({V}_{T5t}\) the total publication volume over the last five years.

Descriptive statistics and data visualisation

We used heatmaps to provide a comprehensive assessment of the ranking performance of universities for the entire V4 region. One heatmap was designed to illustrate the ranking status of each university from 2019 until 2023 (Fig. 1). The exact ranks of the institutions in each year and the number of Q1 papers in the first and last years of the 10-year observed period were provided for each institution. Non-ranked years were depicted in grey. The change in the volume of published Scimago Q1 articles between 2013 and 2022 was shown for each institution on another heatmap (Fig. 2). On both heatmaps, shades from orange to dark red depicted unfavourable trends (e.g., ranking status worse than 800 or declining publication volume), yellow depicted intermediate status at rank 800 or stagnation, and colours from yellowish green to dark green depicted favourable trends (e.g., ranking status better than 800 or rapid growth).

We also tabulated the number of ranked institutions, the minimum, median and maximum ranks and 5-year running averages of the key RPIs by country and by year (Appendix 1, Table 4).

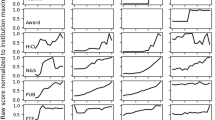

Furthermore, we plotted the growth ratios of the 5-year running averages of RPIs by the actual rank of each institution. The trends are shown by fitting fractional polynomial curves over the scatterplots (Fig. 3).

We assumed that the rank of an institution is impacted by both its scientific output and the dynamic changes to the list caused by new entrants and dropouts. Our rank prediction model is shown in Eq. (2).

\[{R}_{{\text{P}}t}={R}_{{\text{E}}t}+{R}_{{\text{G}}t}\qquad (2)\]

where \({R}_{{\text{P}}t}\) denotes the predicted rank in year t, and \({R}_{{\text{E}}t}\) denotes the expected rank in year t. The expected rank is the rank that the university would achieve if the list were changed only by the new entrants and dropouts compared to the previous ranking cycle, and the ordering of already ranked institutions on the list were preserved. Rank gain in year t, denoted by \({R}_{{\text{G}}t}\), is the difference between the predicted and the expected rank.

Predicted ranks were derived in a two-step process. First, REt was calculated from the institution’s actual rank in the previous year (RA(t−1)) and the rank shift (RSt) due to new entries/dropouts (Eq. (3)).

\[{R}_{{\text{E}}t}={R}_{{\text{A}}(t-1)}+{R}_{{\text{S}}t}\qquad (3)\]

where \({R}_{{\text{E}}t}\) denotes the expected rank, \({R}_{{\text{A}}(t-1)}\) is the institution’s rank in the previous year and \({R}_{{\text{S}}t}\) is the rank shift due to new entries and dropouts. \({R}_{{\text{E}}t}\) of an institution could only be calculated for those years that were preceded by a ranked year.

We estimated RSt as a function of RA(t−1), by fitting a 5th order polynomial curve on the rank shifts in each 100-rank band, as shown in Eq. (4). For the estimation of model parameters to predict RGt values (see below), RSt values were calculated separately for each year between 2020 and 2023, while for the prediction of future ranks, RS(t+1) values were predicted from the average of rank shifts between years 2020 and 2023.

where \({R}_{{\text{St}}}\) is the shift in rank due to new entrants or dropouts, RA(t−1) denotes the actual rank of the institution in the previous year.
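For orientation, a fifth-order polynomial in the previous year's rank has the general form sketched below. The coefficient symbols \(c_0,\dots,c_5\) are placeholder names assumed here for illustration; they are not taken from the source, and no fitted values are implied.

```latex
% General form of a 5th-order polynomial rank-shift curve.
% c_0 ... c_5 are assumed placeholder coefficient names.
R_{\mathrm{S}t} = c_0 + c_1 R_{\mathrm{A}(t-1)} + c_2 R_{\mathrm{A}(t-1)}^{2}
                + c_3 R_{\mathrm{A}(t-1)}^{3} + c_4 R_{\mathrm{A}(t-1)}^{4}
                + c_5 R_{\mathrm{A}(t-1)}^{5}
```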

In the second step, we modelled rank gains (RGt) as a function of scientific output. Our assumptions for this step were the following: the absolute performance level of an institution in year t − 1 predicts its actual rank in year t − 1 and hence its rank shift and its expected rank in year t (Eq. (3)). The ranks of two adjacent institutions will be shifted by nearly the same amount, and if the performance of all ranked institutions increases at the same rate, their rank order is preserved. Therefore, rank gains (RGt) (i.e., deviations of the actual ranks from the expected rank order in year t) depend on the growth ratios of an institution’s performance compared to the growth ratios of others. Institutions with performance growth ratios greater than those of their neighbours on the list improve their position (i.e., their RGt value becomes negative). RGt values were computed as the difference between RAt and REt of each institution in each year t that was preceded by a ranked year (Eq. (5)).

\[{R}_{{\text{G}}t}={R}_{{\text{A}}t}-{R}_{{\text{E}}t}\qquad (5)\]

where \({R}_{{\text{G}}t}\) represents the difference between the actual rank (\({R}_{{\text{A}}t}\)) and the expected rank (\({R}_{{\text{E}}t}\)).

We modelled RGt in year t using the growth ratio of RPIs (i.e., the ratio of 5-year averages of RPIs in year t and preceding year t − 1). The vector of predictors is denoted by xt,

where \(x_{it}^{g}=x_{it}/x_{i(t-1)}\) represents the growth ratio of a single RPI denoted with i in year t (Eq. (6)). (We note that this specification provided a better fit than expressing percentage growth in logarithmic form.)

We assumed that three types of interactions could modify the effect of predictors. First, the effect of a predictor may depend on the growth ratio of other RPIs (e.g., the growth ratio of publication volume may impact ranking positions differently at high or low growth ratios of publication quality). We denoted the vector of all pairwise interactions between the predictors as \(x_{t}^{g}\). A single term is written as \(x_{it}^{g}\cdot x_{jt}^{g}\) (i ≠ j). Second, the absolute levels of the indicators may also modify the effect of predictors (e.g., the growth ratio of FWCI5t may have a different effect at high or low overall publication volume). We denote the vector of interaction terms with the absolute levels of predictors in year t as \(x_{t}^{a}\). A single term in \(x_{t}^{a}\) can be written as \(x_{it}^{g}\cdot x_{jt}\) (i and j are allowed to be the same indicator). Third, to capture the changing importance of scientific performance at different rank levels (e.g., the contribution of the reputation survey is increasing at better rank positions), we included a vector of interactions of all previous terms with RA(t−1), denoted as \(x_{t}^{r}\). The elements of \(x_{t}^{r}\) include \(x_{it}^{g}\cdot {R}_{{\text{A}}(t-1)}\), \(x_{it}^{g}\cdot x_{jt}^{g}\cdot {R}_{{\text{A}}(t-1)}\) and \(x_{it}^{g}\cdot x_{jt}\cdot {R}_{{\text{A}}(t-1)}\). Finally, to reflect the serial correlation of RGt (e.g., strategic initiatives with lasting effect on an institution’s performance), we added the 1-year lag of RGt, RG(t−1). Altogether, our hypothetical model to predict RGt can be written as

where α is the intercept and β denotes coefficients for the predictors.

First, given the non-normal distribution of many predictors, we examined the Spearman rank correlation matrix for all predictors to analyse the bivariate associations between the raw predictors and the constructed interaction terms (Bishara & Hittner, 2015). Then the estimation of model coefficients was performed via OLS regression with errors clustered on the level of institutions. We developed six regression models and present our findings based on the model that was found to be optimal (M1) through the estimation and selection process detailed in Appendix 3. Predicted ranks were calculated by adding the previous year’s rank (RA(t−1)), RSt as calculated using the estimated coefficients of Eq. (4), and RGt, computed using the estimated coefficients of selected variables from Eq. (7).

Exploring change patterns of RPIs via strategic scenarios

To understand if some change patterns of RPIs are associated with better ranking positions, we predicted ranks in various strategic scenarios detailed in Table 2.

In each scenario, we made predictions from baseline rank positions of 800, 1000 and 1200. We computed the mean values of the RPIs for each baseline rank, and applied growth rates of 5%, 10% and 15% to the 5-year averages of RPIs.
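The combinations of baseline ranks and growth rates described above can be enumerated with a short sketch. The names are illustrative; which RPIs grow in each scenario is defined in Table 2 and is not modelled here.

```python
from itertools import product

BASELINE_RANKS = (800, 1000, 1200)
GROWTH_RATES = (0.05, 0.10, 0.15)


def scenario_grid():
    """All baseline-rank and growth-rate combinations, with the matching growth ratio."""
    return [
        {"baseline_rank": rank, "growth_rate": rate, "growth_ratio": 1.0 + rate}
        for rank, rate in product(BASELINE_RANKS, GROWTH_RATES)
    ]


grid = scenario_grid()
```

Each entry carries the growth ratio (1 plus the growth rate), since growth ratios are the form in which RPI changes enter the regression model.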

Results

Descriptive results

The number of ranked institutions in the V4 region increased from 35 in 2019 to 68 in 2023. While the Czech Republic had the most ranked universities in 2019 (N = 14), by 2023, Poland had taken the lead. In 2023, Poland, the Czech Republic, Hungary and Slovakia had 32, 18, 11 and 7 ranked universities, respectively (Appendix 1, Table 4). Most institutions from the region were ranked below 800. This proportion increased from 81.1% in 2019 to 93.7% by 2023, showing an overall declining trend. The median rank worsened from 1054 in 2020 to 1372 in 2023 in the four countries (Appendix 1, Table 4). While the total publication volume of V4 institutions stagnated between 2019 and 2022, both the research influence and the proportion of Q1 publications improved over the same period. Median FWCI5t increased from 0.94 in 2020 to 0.98 in 2023. The median proportion of Q1 publications increased from 0.34 in 2020 to 0.44 in 2023. A detailed summary of ranks and RPIs by country is provided in Appendix 1, Table 4.

The heatmap in Fig. 1 shows rank changes over time for each institution. The colour shift towards dark red tones from 2019 to 2023 illustrates that most universities lost ranks, but a few demonstrated improvements. Semmelweis University from Hungary emerged as the most prominent performer, displaying a consistent upward trajectory across all assessed periods. Additionally, Jagiellonian University from Poland exhibited a favourable trend, albeit with a notable setback in 2023 compared to 2022.

Figure 2 provides insight into the year-on-year growth rates of published Q1 articles from 2013 to 2022. The predominantly green colours of the heatmap indicate a gradual yet steady growth in the number of published Q1 articles in most V4 universities. The pandemic years of 2020 and 2021 were particularly remarkable: the number of published Q1 articles grew rapidly in nearly all V4 universities compared to previous years. Country-specific heatmaps are presented in Figures 7–10 in Appendix 2.

The growth ratios of the 5-year averages of RPIs by rank are shown in Figure 3. For all indicators, we observed the highest growth ratios at the lowest ranks, suggesting that the competitive dynamics of universities change with their ranks and that universities at the lowest ranks can achieve the highest growth ratios, probably due to their lower baseline performance. The association between the growth ratios of FWCI5t and PQ5t and the institutional rank had a reverse J-shape, with stagnation between rank positions of 1000 and 500, and a modest increase in the growth ratios of the institutions at the highest ranks. Growth ratios of total publication volume (VT5t) varied greatly at lower ranks, while the total publication output of institutions ranked better than 1000 tended to show growth ratios between 1.0 and 1.1 (i.e., a single-digit growth rate).

Correlations between variables

The correlation matrix of the variables is shown in Table 5 (in Appendix 3) and supports the assumptions of our rank prediction strategy. REt is nearly perfectly correlated with RA(t−1) (r = 0.97), suggesting that the actual rank of an institution in year t − 1 predicts nearly perfectly its expected rank in year t, shifted by new entries and dropouts in the list. In contrast, RGt shows no significant correlation with REt (r = − 0.04), but it exhibits weak to moderate correlations with the RPIs in the predictor vectors. This suggests that changes in an institution’s scientific performance (partly) predict its position relative to its expected rank. The serial correlation between RG(t−1) and RGt was weak (r = 0.19), suggesting that the previous year’s rank gain has only minimal influence on how the institution will perform in its new position.

Predicted rank shifts due to new entries and dropouts

Between 2020 and 2023, after deducting dropouts, there were no new entries at positions above rank 200, while the number of new entries per year below rank 200 increased linearly by 1.2 per 100-rank band. For example, on average, there were 1, 8.2 and 14.5 new entrants between ranks 201–300, 801–900 and 1201–1300, respectively. The mean rank shift due to new entrants and dropouts per year in the same ranges was 1.3, 38 and 80.5, respectively. The parameters of the rank shift (RSt) calculator at specific rank positions are shown in Eq. (8).

where RSt is the shift in rank due to new entries/dropouts, and RA(t−1) denotes the actual rank of the institution in the previous year.

The fit of the 5th-order polynomial curve was nearly perfect (R2 > 0.999). The prediction error term for RSt was several orders of magnitude smaller than that of rank gain (RGt); therefore, we ignored it in the final rank predictions. Rank shift (RSt) at specific ranks as a continuous function of RA(t−1) is depicted in Fig. 4.

Predicted rank gains

There were 200 observations with RGt values available after at least one ranked year, out of which 191 observations were included in the estimation sample. Intermediate results of the data preparation and variable selection processes are provided in Appendix 4. Altogether, the 31 predictor variables in the full model explained 32.8% of the variance of RGt, while the three predictors selected for the final model explained 22.1%. The growth ratios of all three key RPIs were included in some form of interaction with other predictors. Negative coefficients indicated a contribution to better-than-expected ranks. In line with the THE methodology, the growth of FWCI5t (FWCI5tg) had the greatest influence on RGt, which was enhanced by higher FWCI5t values. The growth ratio of the total publication volume (VT5tg) also improved RGt, and this effect was increased by higher FWCI5t growth ratios and by worse rank positions (i.e., with the same growth ratio in publication volume, more institutions can be overtaken at lower ranks; however, institutions at lower positions also lose more ranks due to new entrants in ranking). Unexpectedly, the positive coefficient of PQ5tg suggested that high growth ratios in the proportion of Q1 publications were associated with poorer rank positions. This effect was enhanced by high FWCI5t values and lower ranks (Table 3). However, in the sensitivity analysis with alternative models, the role of PQ5tg was rather inconsistent (Appendix 4, Table 6).

Predicting next-year-ranks

The final model to predict next year’s ranks (RP(t+1)) is shown in Eq. (9).

\[{R}_{{\text{P}}(t+1)}={R}_{{\text{A}}t}+{R}_{{\text{S}}(t+1)}+{R}_{{\text{G}}(t+1)}\qquad (9)\]

We evaluated the model with in-sample predictions (i.e., predictions using the available historical ranks and RPI data for the estimation of model parameters), using the parameters of Eq. (8) for RS(t+1) and the coefficients of Table 3 for RG(t+1). RP(t+1) predicted actual ranks with an R2 value of 0.9997, a root mean squared error (RMSE) of 54.6, a mean absolute error (MAE) of 42.5, and normally distributed error terms. The RMSE value of 54.6 suggests that about 68% of in-sample predictions were within ± 55 ranks of the actual rank. In-sample predictions of next year’s expected ranks (RE(t+1)), assuming unchanged research output, and predicted ranks (RP(t+1)), considering both rank shifts and changes in research output, were plotted against current ranks in Fig. 5.
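The two error measures quoted above can be computed as in the following minimal sketch. The actual and predicted ranks are hypothetical numbers chosen for illustration, not values from the study, and the function names are illustrative.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between paired actual and predicted ranks."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def mae(actual, predicted):
    """Mean absolute error between paired actual and predicted ranks."""
    absolute_errors = [abs(a - p) for a, p in zip(actual, predicted)]
    return sum(absolute_errors) / len(absolute_errors)


# Hypothetical ranks, for illustration only.
actual_ranks = [900, 1000, 1100, 1200]
predicted_ranks = [930, 960, 1150, 1200]
example_rmse = rmse(actual_ranks, predicted_ranks)
example_mae = mae(actual_ranks, predicted_ranks)
```

RMSE penalises large misses more heavily than MAE, so it is never smaller than MAE on the same data; this is consistent with the reported values of 54.6 and 42.5.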

To aid the interpretation of our results, we calculate the next-year predicted rank of an institution currently ranked 1000 with no change in its scientific performance (i.e., FWCI5tg = PQ5tg = VT5tg = 1). The mean FWCI5t at rank 1000 is 1.15. The predicted rank gain (RG(t+1)) using the coefficients of Table 3 is 9.4 (− 165.3 × 1.15 + 0.06 × 1.15 × 1000 − 0.14 × 1000 + 270.5). Using Eq. (8), the predicted rank shift (RS(t+1)) is 46. Following Eq. (9), the predicted rank is 1055.4 (1000 + 46 + 9.4). Altogether, without change in its performance, the institution is expected to lose 55–56 rank positions by next year. To allow customised sensitivity analysis, we included a calculator as a supplementary file.
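The arithmetic of this worked example can be reproduced with a short sketch. It uses only the rounded coefficients quoted in the text and covers only the no-growth case, in which all growth ratios equal 1 and therefore drop out of the interaction terms. The constant and function names are illustrative, and the rank shift of 46 is taken from the text rather than computed.

```python
# Rounded coefficients as quoted in the worked example above.
INTERCEPT = 270.5
COEF_FWCI = -165.3          # multiplies FWCI5t
COEF_FWCI_X_RANK = 0.06     # multiplies FWCI5t x current rank
COEF_RANK = -0.14           # multiplies current rank


def rank_gain_no_growth(fwci, current_rank):
    """Predicted rank gain when every RPI growth ratio equals 1."""
    return (
        COEF_FWCI * fwci
        + COEF_FWCI_X_RANK * fwci * current_rank
        + COEF_RANK * current_rank
        + INTERCEPT
    )


def predicted_rank(current_rank, rank_shift, rank_gain):
    """Current rank plus rank shift plus rank gain, as in the worked example."""
    return current_rank + rank_shift + rank_gain


gain = rank_gain_no_growth(fwci=1.15, current_rank=1000)   # about 9.4
next_rank = predicted_rank(1000, 46, gain)                  # about 1055.4
```

Most of the predicted loss comes from the rank shift of 46 positions caused by new entrants; the rank gain term adds only about 9 positions.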

Exploring strategic scenarios

Following the strategic scenarios in Table 2, we show rank predictions from various baseline rank positions and growth rates (Fig. 6). To offset the rank shifts, an institution at rank 1200 must increase its overall 5-year average scientific output by approximately 15%, while at rank 1000, 10% and at rank 800, 6% growth may be enough to preserve positions. While the growth of FWCI5t has the most influence on an institution’s ranking, the most successful universities were those that increased both their FWCI5t and total publication volume. Although FWCI5t and PQ5t were strongly correlated (r = 0.62) in our sample, the increasing proportion of Q1 publications did not contribute to better rankings. We note that while high growth rates of PQ5t suggest a rapid evolution of publications in top-tier journals, the observed growth rates of FWCI5t and VT5t remained below the threshold required to offset the rank shifts due to new entries, which explains the overall declining trend of university rank positions in the region.

Discussion

In this paper, we showed that the THE World University Ranking positions of most universities in the V4 region have been declining over the past years, even though the citation impact and the proportion of Q1 publications have been increasing over the same time period. We explored the sensitivity of next year’s ranks of V4 universities to changes in their research performance, assuming that other performance indicators remain stable over 1–2 years. Simultaneous growth in the overall volume of publications and FWCI seems to be the scenario with the greatest positive impact on ranks, while, contrary to expectations, growth in the proportion of Q1 publications did not have a positive impact on rankings. Outside the control of ranked institutions, the large number of new entrants in the THE ranking list shifts the ranks of V4 universities downwards, and the growth in the research output of most universities cannot offset the negative rank shifts. To allow customised sensitivity analysis of next year’s rank from an institution’s planned research output given its current rank and research output, we developed a model with in-sample prediction performance characterised by an R2 of 0.9997 and an RMSE of 54.6.

With the increasing use of international rankings for higher education decision making, more and more studies are examining the reliability and impact of rankings, the weaknesses and strengths of different methodologies, and their potential biases (Kováts, 2015). In addition to these studies, an increasing number of papers have been written on the trends and predictability of the determinants of rankings, such as FWCI (Wagner et al., 2018). Furthermore, models for predicting ranking position represent an active field of research. These approaches are typically based on the performance indicators of existing ranking systems, with a focus on research indicators. In our research, we used THE’s publicly available league tables, scores and weights of the main performance indicators, and publicly available data on the RPIs of Czech, Hungarian, Slovak, and Polish universities from the SciVal database. To the best of our knowledge, our approach is unique as we have considered the dynamic nature of rankings. We assessed the impact of scientific performance and of new entries and dropouts on the THE ranking positions of universities from these four countries between 2019 and 2023. Another unique feature of our study is the rank range examined. Previous studies have attempted to predict the ranking of universities at the top of the rankings; for example, one group developed a predictive model for universities in the top 200 of the THE world list (De Luna Pamanes et al., 2020). In contrast, our research focused on universities of the V4 region, which are typically ranked in the lower range of the THE rankings, with positions below rank 800. These universities also face similar geopolitical challenges that affect their competitiveness in the rankings, such as language barriers (van Raan et al., 2011) and historic policy factors (Antonowicz et al., 2017).

The data suggest that, in terms of overall representation within the THE ranking system, Poland has emerged as the clear leader in the region, followed by the Czech Republic. Hungary and Slovakia lagged behind in the number of ranked universities. On the other hand, the number of universities ranked within the top 800 is approximately the same in three countries: Poland, the Czech Republic, and Hungary. In contrast, Slovakia has no universities ranked within the top 800. While being ranked, or the number of ranked universities, can be considered a significant indicator of the quality of higher education in a country, the positions at which the ranked universities stand are also of great importance (Banász et al., 2023). Poland has the most ranked institutions of the four countries, although the number of universities ranked in the top 800 in Poland, the Czech Republic and Hungary is almost the same. This suggests that a higher number of ranked universities may not mean that a country has a higher quality of higher education across all its universities. It is important for policymakers, decision makers and university executives to focus on enhancing the quality of education and research in all institutions, rather than just a select few, to ensure the long-term sustainability and competitiveness of their higher education systems (Dachyar & Dewi, 2015; OECD, 2007; Simina, 2022).

We chose heatmaps to visualise the performance of these universities in the THE world ranking. The heatmaps showed that, with few exceptions, the ranking of universities of the region followed a declining trend, despite their increasing research prestige and influence. The growth rates of the two most impactful indicators, FWCI and overall publication volume, were insufficient to offset the loss in ranks due to new entries in the ranking. We found that among the examined universities, the growth rates of the proportion of Q1 publications and of FWCI were higher for universities ranked below 1000. It is important to note that for universities with lower publication output, a small change in an already small number of Q1 publications leads to a larger relative growth compared to the previous year. Our results suggest that this effect may weaken at ranks 500–1000, and that growth is more modest for the top universities in the list. Furthermore, our results highlight that the impact of new entrants is stronger in the rank range 1000–1200, where new entries push incumbent institutions downwards by up to 50–70 positions each year. This is a major threat to their ranking position and is largely outside their control. Unless they achieve constant double-digit growth in FWCI and overall publication volume, progress in ranking for universities of the region seems elusive. Accordingly, two pivotal factors must be taken into account. Firstly, the addition of new universities to the ranking each year can lead to a decline in the ranking of other universities. Secondly, universities must strive to make significant enhancements in their RPIs. Hence, universities need to consider the combination of “new entries” and “significant improvement in RPIs” as the two decisive factors.
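
The small-base effect mentioned above can be illustrated with a short Python sketch. The two institutions and all publication counts below are hypothetical and were chosen only to show how the same absolute increase in Q1 publications translates into very different relative growth rates of the Q1 proportion.

```python
def q1_share(q1_papers: int, total_papers: int) -> float:
    """Proportion of publications that appeared in Q1 journals."""
    return q1_papers / total_papers


def relative_growth(before: float, after: float) -> float:
    """Relative change of an indicator between two years."""
    return (after - before) / before


# Hypothetical institutions; the counts are illustrative, not study data.
# Both publish 10 additional papers, all of them in Q1 journals.
small_before, small_after = q1_share(40, 200), q1_share(50, 210)
large_before, large_after = q1_share(2000, 5000), q1_share(2010, 5010)

small_growth = relative_growth(small_before, small_after)
large_growth = relative_growth(large_before, large_after)

print(f"small institution: {small_growth:.1%}")
print(f"large institution: {large_growth:.1%}")
```

Under these assumed counts, the institution with the smaller output shows a far larger relative growth of its Q1 proportion, although both added the same number of Q1 papers.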

In our sample, FWCI was strongly correlated with rank, in line with the THE methodology (FWCI weight of 30%). While universities with higher citations are more likely to have higher publication output, a higher volume of publications does not necessarily result in more citations (De Luna Pamanes et al., 2020). These findings are further nuanced by the results of our strategic scenario Q and VT5t, which suggests that the proportion of Q1 publications did not have a positive effect on ranks. This observation can be partly explained by the Matthew Effect (Merton, 1968), which suggests that entities with more existing recognition, often from developed countries or prestigious universities, tend to receive more attention and citations for their work (Gomez et al., 2022). Consequently, publishing in high-tiered journals may result in fewer citations for less well-known universities or countries due to existing biases in the academic community. On the other hand, increasing the volume of publications, which includes contributions to both high- and low-tiered journals, appears to improve rank and makes it more likely to receive citations. Given that citations carry significant weight in academic rankings, an increase in the overall volume of publications can lead to rank improvement. THE's new methodology aims to moderate the impact of FWCI on ranks to better measure research impact and filter out the potential bias of FWCI (Davis, 2022). Despite this intention, it remains unclear how the new methodology will change the dynamics of Q1 publications and the new citation indicators.

Despite the strong explanatory power of our overall model (R2 = 0.9997), the moderate explanatory power of our rank gain model (R2 = 0.221) suggests that there are elements that we have not taken into account, and the mean absolute error of 42.5 ranks could be further improved. Banász et al. argue that universities should consider an appropriate composite of indicators in order to move up in the rankings, while highlighting the salience of reputation and emphasising its self-reinforcing effect (Banász et al., 2023). The statistical analysis presented by Benito et al. drew attention to the influential power of income. Their work was based on an analysis of the top 300 universities in the QS ranking, and their results showed that funding explained up to 51% of the variability of the positions attained by the universities in the rankings (Benito et al., 2019; OECD, 2007; Marope et al., 2013). While income can be influenced by a targeted strategy, reputation is a challenging factor to control. To address this challenge and gain recognition, the quality of teaching and research has to improve: universities could prioritize investments in research infrastructure and faculty development, attract and retain talented researchers and educators, or collaborate with international institutions to promote cross-border knowledge exchange and innovation (Bilous, 2015; Dachyar & Dewi, 2015).
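
A gap between a very high R2 for predicted ranks and a moderate R2 for predicted rank gains can arise because both share the same residuals, while the variance of rank levels is far larger than the variance of year-to-year changes. The Python sketch below illustrates this with toy numbers. All values are hypothetical and not taken from the study, and the sign convention (a positive change means a worse rank) is an assumption made for the example.

```python
from math import sqrt


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def rmse(actual, predicted):
    """Root mean squared error."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Toy data (hypothetical). A positive change means a worse rank next year.
previous_rank = [400, 600, 800, 1000, 1200]
actual_change = [20, 50, 30, 70, 40]
predicted_change = [30, 40, 40, 50, 50]

# Next year's rank is the previous rank plus the change.
actual_rank = [r + c for r, c in zip(previous_rank, actual_change)]
predicted_rank = [r + c for r, c in zip(previous_rank, predicted_change)]

r2_change = r_squared(actual_change, predicted_change)
r2_rank = r_squared(actual_rank, predicted_rank)

print(f"R2 of rank changes: {r2_change:.3f}")
print(f"R2 of rank levels:  {r2_rank:.4f}")
print(f"RMSE: {rmse(actual_rank, predicted_rank):.1f}")
print(f"MAE:  {mae(actual_rank, predicted_rank):.1f}")
```

The error measures (RMSE, MAE) are identical for levels and changes, which is why they are often more informative than R2 when judging how many rank positions a prediction may be off.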

Some studies seek to provide alternatives to the rankings, such as the classification solution of Banász and colleagues (Banász et al., 2023), which aims to compare universities with similar universities, or national rankings adjusted for local specificities, which are not in line with the results of global rankings (Çakır et al., 2015). Nevertheless, globalisation and the increasing competition between universities, as well as the popularity of international generic rankings as opposed to more narrowly defined (regional or subject) rankings, justify the focus of our research on the methodology of a widely recognised generic ranking, the THE World University Rankings. Understanding the dynamics and limitations of these rankings is of key importance when planning programs based on them and when interpreting published league tables.

Strengths and weaknesses

One potential limitation of our study is that we have focused on a relatively small number of universities, which exhibit homogeneity in their ranks over a five-year period. Including more universities from other regions and other rank ranges could improve the generalisability of our findings beyond the V4 region and beyond rank positions below 800. The explanatory power of our rank gain model could be improved by including additional factors beyond RPIs. However, to aid the prediction of next year’s ranks during strategic planning at universities, it is important that non-standardised values of institution-level indicator data are uniformly available from data sources external to the THE rankings, similarly to the RPIs presented in the SciVal database. Indicators in this form should also provide meaningful input to decision-makers, which can be readily translated into strategic targets. Furthermore, the rank shift model assumed that future rank shifts follow the average of those in the previous years. However, with the expansion of the THE list, rank shifts may change over time in various rank ranges of the list. Modelling the dynamic changes of rank shifts could further improve the predictive power of our model and decrease the mean absolute error. In addition, our model considered only 4 consecutive years. Therefore, we consider that the model results remain valid for the next 1–3 years, after which the stability of the model parameters over time has to be re-examined. Finally, the model performance has been reported for in-sample predictions, while its out-of-sample performance will have to be tested when the 2024 ranks become available for the included institutions.

The main strength of our approach is that we have identified an important factor that has so far been ignored in the literature: the impact of new entrants on the position of already ranked universities. This factor becomes more important as the popularity of rankings increases. Our research also shows that the effect of rank shifts is particularly strong for universities in the lower range of the ranking list. We have also developed an applicable, interactive tool that can predict with high confidence the next year's rank of a given university from its planned RPIs.

Conclusion

This work first analysed the sensitivity of the next year’s Times Higher Education World University Rankings positions of universities from Czech Republic, Hungary, Poland and Slovakia to the changes in their research output. A regression model was developed to quantify the effect of new entrants and dropouts on the ranking list, as well as the sensitivity of ranks to various research performance patterns. A method was developed that allowed customizing the sensitivity analysis of next year’s rank from an institution’s planned research output, given its current rank and research output, assuming that other performance indicators remain unchanged over 1–2 years.

A descending trend was observed in the ranks for most universities from these four countries. We observed that the Field-Weighted Citation Impact and the total volume of publications had the highest effect on ranks, while the proportion of Scimago Q1 publications did not have a consistent effect on projected next year's ranks. In most universities, the growth in RPIs was below the level that would be required to offset the rank changes due to the inclusion of new entrants in ranking. The findings of this research suggest that universities have to consider complex strategies to improve their ranking focusing on other activities than research such as reputation, internationalization, or industry income.

Given the results and findings of this work, we can conclude that establishing and maintaining complex ranking strategies requires awareness that experience and knowledge about ranking might be transferred between different jurisdictions, such as regions, countries and universities, but not necessarily in a direct way and not without difficulties and limitations. Some elements of this complex system are likely to be easier to adopt, while others might be more difficult to transfer. Complementary local data collection and local research are needed to adapt and transfer ranking experiences to the local context. Each region and country must also develop its own well-formulated and evaluated initiatives and strategies to improve ranking positions. It is also proposed that all universities should have trained ranking specialists and that ranking-related training should be available at national level. This study utilizes existing knowledge from other regions and applies relevant findings to the V4 region. To conduct a comprehensive analysis, it was necessary to collect and analyse local data from universities in the lower third of the ranking. With due caution, our results may be generalizable to most of the universities below 800 in the THE.

We recommend that universities conducting similar research incorporate the findings of this study, ensuring the proper utilization of local data. To support this, our rank prediction tool is available at https://hecon.uni-obuda.hu/en/rank-calculator/.

References

Agarwal, N., & Tayal, D. K. (2022). FFT based ensembled model to predict ranks of higher educational institutions. Multimedia Tools and Applications, 81(23), 34129–34162. https://doi.org/10.1007/s11042-022-13180-9

Anahideh, H., & Mohabbati-Kalejahi, N. (2022). Local explanations of global rankings: Insights for competitive rankings. IEEE Access, 10, 30676–30693. https://doi.org/10.1109/ACCESS.2022.3159245

Anowar, F., Helal, M. A., Afroj, S., Sultana, S., Sarker, F., & Mamun, K. A. (2015). A critical review on world university ranking in terms of top four ranking systems. In New trends in networking (pp. 559–566). Springer. https://doi.org/10.1007/978-3-319-06764-3_72

Antonowicz, D., Kohoutek, J., Pinheiro, R., & Hladchenko, M. (2017). The roads of ‘excellence’ in Central and Eastern Europe. European Educational Research Journal, 16(5), 547–567. https://doi.org/10.1177/1474904116683186

Banász, Z., Kosztyán, Z. T., Csányi, V. V., & Telcs, A. (2023). University leagues alongside rankings. Quality & Quantity, 57(1), 721–736. https://doi.org/10.1007/s11135-022-01374-0

Benito, M., Gil, P., & Romera, R. (2019). Funding, is it key for standing out in the university rankings? Scientometrics, 121(2), 771–792. https://doi.org/10.1007/s11192-019-03202-z

Bilous, M. (2015). University ranking improving tools in modern information educational environment. Information Technologies in Education, 23, 90–99. https://doi.org/10.14308/ite000539

Bishara, A. J., & Hittner, J. B. (2015). Reducing bias and error in the correlation coefficient due to nonnormality. Educational and Psychological Measurement, 75(5), 785–804. https://doi.org/10.1177/0013164414557639

Çakır, M. P., Acartürk, C., Alaşehir, O., & Çilingir, C. (2015). A comparative analysis of global and national university ranking systems. Scientometrics, 103(3), 813–848. https://doi.org/10.1007/s11192-015-1586-6

Cantwell, B., & Taylor, B. J. (2013). Global status, intra-institutional stratification and organizational segmentation: A time-dynamic Tobit analysis of ARWU position among U.S. universities. Minerva, 51(2), 195–223. https://doi.org/10.1007/s11024-013-9228-8

Ciubotaru, A. (2022). How to interpret university rankings and what are they good for? https://www.mastersportal.com/articles/3081/how-to-interpret-university-rankings-and-what-are-they-good-for.html#:~:text=Thethreemostpopularuniversity,universitiesbasedondifferentmetrics

Dachyar, M., & Dewi, F. (2015). Improving university ranking to achieve university competitiveness by management information system. IOP Conference Series: Materials Science and Engineering, 83, 012023. https://doi.org/10.1088/1757-899X/83/1/012023

Davis, N. (2022). WUR 3.0 explained. November.

De Luna Pamanes, A., Urbina, J. A. A., Ortiz, F. J. C., & Cancino, H. G. C. (2020a). The world university rankings model validation and a top 50 universities predictive model. In 2020 3rd International conference on computer applications & information security (ICCAIS) (pp. 1–5). https://doi.org/10.1109/ICCAIS48893.2020.9096841

Dearden, J. A., Grewal, R., & Lilien, G. L. (2019). Strategic manipulation of university rankings, the prestige effect, and student university choice. Journal of Marketing Research, 56(4), 691–707. https://doi.org/10.1177/0022243719831258

Dong, X., Maassen, P., Stensaker, B., & Xu, X. (2020). Governance for excellence and diversity? The impact of central and regional affiliation for the strategic positioning of Chinese top universities. Higher Education, 80(5), 823–837. https://doi.org/10.1007/s10734-020-00516-3

Furnival, G. M., & Wilson, R. W. (1974). Regressions by leaps and bounds. Technometrics, 16(4), 499–511. https://doi.org/10.1080/00401706.1974.10489231

Galgánková, V. (2020). Competitiveness of V4 countries using the global competitiveness index. SHS Web of Conferences, 74, 06007. https://doi.org/10.1051/shsconf/20207406007

Gomez, C. J., Herman, A. C., & Parigi, P. (2022). Leading countries in global science increasingly receive more citations than other countries doing similar research. Nature Human Behaviour, 6(7), 919–929. https://doi.org/10.1038/s41562-022-01351-5

Grewal, R., Dearden, J. A., & Lilien, G. L. (2008). The university rankings game. The American Statistician, 62(3), 232–237. https://doi.org/10.1198/000313008X332124

Huenneke, L. F., Stearns, D. M., Martinez, J. D., & Laurila, K. (2017). Key strategies for building research capacity of university faculty members. Innovative Higher Education, 42(5–6), 421–435. https://doi.org/10.1007/s10755-017-9394-y

Hungarian Central Statistical Office, Czech Statistical Office, Statistics Poland, & Statistical Office of the Slovak Republic. (n.d.). Main indicators of the Visegrad four group countries. https://www.ksh.hu/docs/eng/xftp/idoszaki/ev4_fobbadatok.pdf

Kaycheng, S. (2015). Multicolinearity and indicator redundancy problem in world university rankings: An example using times higher education world university ranking 2013–2014 Data: Multicolinearity in university rankings. Higher Education Quarterly, 69(2), 158–174. https://doi.org/10.1111/hequ.12058

Kováts, G. (2015). “New” rankings on the scene: The U21 ranking of national higher education systems and U-Multirank. In The European higher education area (pp. 293–311). Springer. https://doi.org/10.1007/978-3-319-20877-0_20

Kowalska, A., Kovarnik, J., Hamplova, E., & Prazak, P. (2018). The selected topics for comparison in Visegrad four countries. Economies, 6(3), 50. https://doi.org/10.3390/economies6030050

Łącka, I., & Brzezicki, Ł. (2020). Efficiency of the research and development activities of technical universities in Poland. Nierówności Społeczne a Wzrost Gospodarczy, 63(3), 258–274. https://doi.org/10.15584/nsawg.2020.3.13

Lannert, J., & Derényi, A. (2021). Internationalization in Hungarian higher education. Recent developments and factors of reaching better global visibility. Hungarian Educational Research Journal, 10(4), 346–369. https://doi.org/10.1556/063.2020.00034

Lee, J., Liu, K., & Wu, Y. (2020). Does the Asian catch-up model of world-class universities work? Revisiting the zero-sum game of global university rankings and government policies. Educational Research for Policy and Practice, 19(3), 319–343. https://doi.org/10.1007/s10671-020-09261-x

List of All Universities in the World 2023 – AUBSP. (2023). https://www.aubsp.com/universities-in-world/

Liu, X., Chen, G., Wen, S., & Han, X. (2022). Analysis and prediction of QS world university rankings based on data mining technology. In 2022 6th International conference on education and multimedia technology (pp. 60–64). https://doi.org/10.1145/3551708.3556207

Marconi, G., & Ritzen, J. (2015). Determinants of international university rankings scores. Applied Economics, 47(57), 6211–6227. https://doi.org/10.1080/00036846.2015.1068921

Marope, P. T. M., Wells, P. J., & Hazelkorn, E. (2013). Rankings and accountability in Higher Education: Uses and Misuses. UNESCO.

McAleer, M., Nakamura, T., & Watkins, C. (2019). Size, internationalization, and university rankings: Evaluating and Predicting Times Higher Education (THE) data for Japan. Sustainability, 11(5), 1366. https://doi.org/10.3390/su11051366

Merton, R. K. (1968). The Matthew effect in Science: The reward and communication systems of science are considered. Science, 159(3810), 56–63. https://doi.org/10.1126/science.159.3810.56

OECD. (2007). How do rankings impact on higher education. IMHE INFO—Programme on Institutional Management in Higher Education, 1–4 December 2007. OECD.

Osipov, G., Karepova, S., Ponkratov, V., Karaev, A., Masterov, A., & Vasiljeva, M. (2020). Economic and mathematical methods for ranking eastern European universities. Industrial Engineering & Management Systems, 19(1), 273–288. https://doi.org/10.7232/iems.2020.19.1.273

Pandiella-Dominique, A., Moreno-Lorente, L., García-Zorita, C., & Sanz-Casado, E. (2018). Modelo de estimación de los indicadores del Academic ranking of world universities (Shanghai ranking). Revista Española De Documentación Científica, 41(2), 204. https://doi.org/10.3989/redc.2018.2.1462

Pietrucha, J. (2018). Country-specific determinants of world university rankings. Scientometrics, 114(3), 1129–1139. https://doi.org/10.1007/s11192-017-2634-1

Popoola, S. I., Atayero, A. A., & Adeyemi, O. J. (2018). Prediction model for world ranking of young universities using artificial neural network. Scopus, 2018(SEP), 1248–1249. https://www.scopus.com/inward/record.uri?eid=2-s2.0-85067056843&partnerID=40&md5=a60bb658e59ab050b0d8bc50c218e634

Population with tertiary education. (2022). OECD. https://doi.org/10.1787/0b8f90e9-en

Pride, D., & Knoth, P. (2018). Peer review and citation data in predicting university rankings, a large-scale analysis. arXiv Preprint. https://doi.org/10.48550/ARXIV.1805.08529

QS. (2021). Understanding the Methodology: QS World University Rankings. https://www.topuniversities.com/university-rankings-articles/world-university-rankings/understanding-methodology-qs-world-university-rankings#:~:text=TheQSmethodologyhassix,%2Cresearchimpact%2Cinternationalisation.

Robinson-Garcia, N., Torres-Salinas, D., Herrera-Viedma, E., & Docampo, D. (2019). Mining university rankings: Publication output and citation impact as their basis. Research Evaluation, 28(3), 232–240. https://doi.org/10.1093/reseval/rvz014

Safón, V. (2019). Inter-ranking reputational effects: An analysis of the Academic Ranking of World Universities (ARWU) and the Times Higher Education World University Rankings (THE) reputational relationship. Scientometrics, 121(2), 897–915. https://doi.org/10.1007/s11192-019-03214-9

Schmidt, A. (2016). Friends forever? The role of the Visegrad group and European integration. Politics in Central Europe, 12(3), 113–140. https://doi.org/10.1515/pce-2016-0019

SciVal. (2023). SciVal. https://www.scival.com/landing

Scopus. (2022). What is Field-weighted Citation Impact (FWCI)? https://service.elsevier.com/app/answers/detail/a_id/14894/supporthub/scopus/~/what-is-field-weighted-citation-impact-%28fwci%29%3F/

Simina, P. R. A. (2022). The importance of sustainability for university competitiveness. Studies in Business and Economics, 17(1), 187–201. https://doi.org/10.2478/sbe-2022-0013

SJR. (2023). Scimago journal and country rank. https://www.scimagojr.com/journalrank.php

Szluka, P., Csajbók, E., & Győrffy, B. (2023). Relationship between bibliometric indicators and university ranking positions. Scientific Reports, 13(1), 14193. https://doi.org/10.1038/s41598-023-35306-1

Tabassum, A., Hasan, M., Ahmed, S., Tasmin, R., Abdullah, D. Md., & Musharrat, T. (2017). University ranking prediction system by analyzing influential global performance indicators. In 2017 9th International conference on knowledge and smart technology (KST) (pp. 126–131). https://doi.org/10.1109/KST.2017.7886119

Taylor, P., & Braddock, R. (2007). International university ranking systems and the idea of university excellence. Journal of Higher Education Policy and Management, 29(3), 245–260. https://doi.org/10.1080/13600800701457855

THE. (2022). World University Rankings 2023: Methodology. https://www.timeshighereducation.com/world-university-rankings/world-university-rankings-2023-methodology

THE. (2023). World University Rankings 2023. https://www.timeshighereducation.com/world-university-rankings/2023/world-ranking

Thorp, H. H. (2023). Revolt against educational rankings. Science, 379(6631), 419–419. https://doi.org/10.1126/science.adg8723

Tijssen, R. J. W., & Winnink, J. J. (2018). Capturing ‘R&D excellence’: Indicators, international statistics, and innovative universities. Scientometrics, 114(2), 687–699. https://doi.org/10.1007/s11192-017-2602-9

V4 UniVisibility Program. (2021). ÓU. https://uni-obuda.hu/v4-uni-visibility/

van Raan, A. F. J., van Leeuwen, T. N., & Visser, M. S. (2011). Severe language effect in university rankings: Particularly Germany and France are wronged in citation-based rankings. Scientometrics, 88(2), 495–498. https://doi.org/10.1007/s11192-011-0382-1

Vašenda, J. (2019). Visegrad Group countries compared through world university rankings. International Education Journal, 18(3), 100–115.

Vernon, M. M., Balas, E. A., & Momani, S. (2018). Are university rankings useful to improve research? A systematic review. PLOS ONE, 13(3), e0193762. https://doi.org/10.1371/journal.pone.0193762

Wagner, C. S., Whetsell, T., Baas, J., & Jonkers, K. (2018). Openness and impact of leading scientific countries. Frontiers in Research Metrics and Analytics. https://doi.org/10.3389/frma.2018.00010

Whittle, M., & Rampton, J. (CSES). (2020). Towards a 2030 vision on the future of universities in Europe. Policy Report. Centre for Strategy. https://doi.org/10.2777/510530

World University Rankings. (2023, September 25). Times Higher Education (THE). https://www.timeshighereducation.com/world-university-rankings/2024/world-ranking

Wut, T.-M., & XuLee, J. S. W. (2022). Does university ranking matter? Choosing a university in the Digital Era. Education Sciences, 12(4), 229. https://doi.org/10.3390/educsci12040229

www.scival.com. (2023). https://www.scival.com/

www.timeshighereducation.com. (2023). https://www.timeshighereducation.com/

Funding

Open access funding provided by Óbuda University. The research was supported by the International Visegrad Fund (Project ID: 22120009). Project website: https://uni-obuda.hu/v4-uni-visibility/. Horseman Bruce Associates Ltd has received consultant honoraria from Óbuda University.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Country performance in terms of rank and scientific performance

See Table 4.

Appendix 2: Heatmaps of universities of the four examined countries

Appendix 3: Data management and model selection

For institutions with only one ranked year, RG(t−1) values were imputed using a panel regression of RG(t−1) values on RAt, and calendar year (model not shown). As a sensitivity analysis, we also explored results on the dataset without imputation. To detect the effect of outliers, we fitted robust MM regression and ordinary least squares (OLS) regression on RG and the main predictors (Fig. 11). We observed unacceptably high leverage values (> 4/N) for some data points. To mitigate the effect of outliers, we deleted 5% of the cases with greatest Mahalanobis distances from the sample mean for single predictors and the multivariate sample, resulting in similar OLS and robust regression parameters. Scatterplots of RGt and key predictors before and after removing outliers are shown in Figs. 12 and 13.
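
The trimming step described above can be sketched in Python as follows. This is a minimal illustration under simplifying assumptions: it handles only two variables at a time, uses the sample covariance, and the data points are hypothetical. The function names are illustrative and do not reflect the software used in the study.

```python
def mahalanobis_sq_2d(points):
    """Squared Mahalanobis distance of each (x, y) point from the sample mean."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Sample covariance matrix [[sxx, sxy], [sxy, syy]] with n - 1 in the denominator.
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - mean_y) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / (n - 1)
    det = sxx * syy - sxy ** 2
    if det <= 0:
        raise ValueError("covariance matrix is singular")
    distances = []
    for x, y in points:
        dx, dy = x - mean_x, y - mean_y
        # d^2 = [dx dy] * inverse(cov) * [dx dy]^T, written out for the 2x2 case.
        distances.append((syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det)
    return distances


def trim_outliers(points, fraction=0.05):
    """Drop the given fraction of points with the greatest distances."""
    n_drop = round(fraction * len(points))
    distances = mahalanobis_sq_2d(points)
    order = sorted(range(len(points)), key=lambda i: distances[i])
    keep = sorted(order[: len(points) - n_drop])  # restore the original order
    return [points[i] for i in keep]


# Hypothetical data: 19 points near a line plus one clear outlier.
sample = [(i, i + i % 3) for i in range(19)] + [(9, 60)]
trimmed = trim_outliers(sample)
print(len(sample), len(trimmed))
```

With 20 observations and a 5% fraction, one point is removed. In this toy sample it is the point that lies far from the linear trend of the others.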

As a next step, we selected relevant predictors from the hypothetical model. First, the bivariate relations between the dependent and predictor variables were explored via correlation analysis (Table 5). We also calculated the Shapley values to explore the contribution of the main predictor groups to explaining the variance of RG. Next, we used backward, forward, and stepwise selection and the Furnival and Wilson leaps and bounds algorithm (Furnival & Wilson, 1974) to identify candidate models. The final model was selected if the following criteria were met: all coefficients were significant (p ≤ 0.05), the Ramsey RESET test suggested no functional misspecification error, and multicollinearity was absent, with VIF values < 10 for all variables. Among the models meeting these criteria, preferably all three scientific output indicators (FWCI, VT and PQ) had to be included in the model in at least one interaction term, and the model should have higher R2 and/or lower Akaike’s information criterion (AIC) values than its alternatives.
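
The selection criteria listed above can be expressed as a simple filter over candidate models. The Python sketch below is only an illustration: the Candidate structure, the candidate names and all numeric values are hypothetical, and reading "no functional misspecification" as a RESET p-value above 0.05 is an assumption. The preference for models containing all three scientific output indicators is not encoded here.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Candidate:
    name: str
    coef_p_values: Dict[str, float]  # p-value of each coefficient
    reset_p_value: float             # Ramsey RESET test p-value
    vif: Dict[str, float]            # variance inflation factor per variable
    aic: float                       # Akaike's information criterion


def meets_criteria(m: Candidate) -> bool:
    all_significant = all(p <= 0.05 for p in m.coef_p_values.values())
    # Assumption: "no misspecification" is read as a RESET p-value above 0.05.
    no_misspecification = m.reset_p_value > 0.05
    no_multicollinearity = all(v < 10 for v in m.vif.values())
    return all_significant and no_misspecification and no_multicollinearity


def select_model(candidates: List[Candidate]) -> Optional[Candidate]:
    """Return the eligible candidate with the lowest AIC, or None if none qualifies."""
    eligible = [m for m in candidates if meets_criteria(m)]
    return min(eligible, key=lambda m: m.aic) if eligible else None


# Hypothetical candidates; none of these numbers come from the study.
candidates = [
    Candidate("A", {"x1": 0.01, "x2": 0.03}, 0.40, {"x1": 2.1, "x2": 3.4}, 2100.0),
    Candidate("B", {"x1": 0.02, "x2": 0.04}, 0.25, {"x1": 1.8, "x2": 2.0}, 2120.0),
    Candidate("C", {"x1": 0.01, "x2": 0.01}, 0.30, {"x1": 14.0, "x2": 3.0}, 2050.0),
]

best = select_model(candidates)
print(best.name if best else "no eligible model")
```

In this toy setup, candidate C has the lowest AIC but is excluded because one of its VIF values exceeds 10, which mirrors how a model with better fit can still fail the selection criteria.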

Appendix 4: Results of the data preparation and variable selection

Rank positions of two preceding years were not available for 64 observations, therefore RG(t−1) had to be imputed. Nine observations were removed because of outliers. Altogether, 191 observations were included in the full estimation sample, and the dataset was complete for 130 observations without the imputed values of RG(t−1). The scatterplots of RG and the main predictors before and after removing outliers are shown in Figs. 12 and 13, respectively. RPIs explained altogether approximately one third of the variance of RGt. The R2 of the full model with all predictors was 0.328. The Shapley values indicated that the predictor groups RG(t−1), x′, \(x^{\text{g}\prime}\), \(x^{\text{a}\prime}\), and xr explained 1.5%, 14.8%, 14.8%, 28.4% and 40.5% of the variance, respectively.
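
A Shapley decomposition of R2 attributes to each predictor group its average marginal contribution to R2 over all possible orders in which the groups can enter the model. The Python sketch below shows the calculation for three hypothetical groups. The group labels and the R2 values of the subsets are invented for illustration and are unrelated to the values reported above.

```python
from itertools import permutations


def shapley_shares(groups, r2_of):
    """Average marginal R2 contribution of each group over all orderings."""
    shares = {g: 0.0 for g in groups}
    orderings = list(permutations(groups))
    for ordering in orderings:
        included = frozenset()
        for g in ordering:
            with_g = included | {g}
            # Gain in R2 from adding group g to the groups already included.
            shares[g] += r2_of[with_g] - r2_of[included]
            included = with_g
    return {g: total / len(orderings) for g, total in shares.items()}


# Hypothetical R2 values for every subset of three predictor groups.
r2_of = {
    frozenset(): 0.00,
    frozenset("a"): 0.10,
    frozenset("b"): 0.20,
    frozenset("c"): 0.05,
    frozenset("ab"): 0.25,
    frozenset("ac"): 0.15,
    frozenset("bc"): 0.22,
    frozenset("abc"): 0.30,
}

shares = shapley_shares(["a", "b", "c"], r2_of)
full_r2 = r2_of[frozenset("abc")]
for group, share in shares.items():
    print(group, round(share, 3), f"{share / full_r2:.1%} of explained variance")
```

By construction, the shares add up to the R2 of the full model, so they can be reported as percentages of the explained variance.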

The variable selection methods provided a variety of proposed models on the imputed and non-imputed samples. The models are shown in Table 6. Model performance was evaluated on the full sample without outliers in both cases. Based on the selection criteria, M1 was selected as the optimal rank predictor. The explanatory power of M2 was slightly lower. M3 had the best explanatory power among the models selected from the imputed sample, so we included it for comparison, although it did not meet the selection criteria because of multicollinearity. Although performance was similar, different predictors were selected when the sample was used without imputation. In this sample, M4 showed the best performance, followed by M5. While M6 had better explanatory power than the other models, it did not meet the selection criteria because of multicollinearity.

We compared the six models for a hypothetical institution ranked 1000 with 10% growth rate in the strategic scenarios. Combined growth of publication volume and FWCI would provide the greatest rank gains in all models. The results suggest that approximately 10% growth is required across all indicators to preserve the institution’s previous year’s rank. However, the models were inconclusive about the contribution of growing publication quality to better ranking positions. While keeping all metrics unchanged, efforts to increase the proportion of Q1 publications seemed to be counterproductive in models M1, M3 and M4, while in M2 and M5 publication quality was not a significant predictor. In M6 with the highest explanatory power, increasing publication quality seemed to contribute positively to better ranking positions.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tóth, B., Motahari-Nezhad, H., Horseman, N. et al. Ranking resilience: assessing the impact of scientific performance and the expansion of the Times Higher Education Word University Rankings on the position of Czech, Hungarian, Polish, and Slovak universities. Scientometrics 129, 1739–1770 (2024). https://doi.org/10.1007/s11192-023-04920-1

DOI: https://doi.org/10.1007/s11192-023-04920-1

Keywords

- University rankings

- Bibliometric indicators

- Higher education

- Ranking prediction model, predictive factors