Abstract

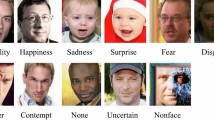

Facial emotion recognition (FER) is primarily used to assess human emotion in real-time applications, including computer–human interfaces, biometrics, forensics, and human–robot collaboration. However, many current techniques fall short of providing accurate predictions with a low error rate. This study models an effective FER system for unconstrained videos using a hybrid SegNet and ConvNet model. SegNet segments the facial-expression regions, and ConvNet analyzes the facial features and predicts emotions such as surprise, sadness, and happiness. The proposed hybrid approach uses a neural network model to classify facial characteristics according to their location. The model aims to recognize facial emotions with a faster convergence rate and improved prediction accuracy. The experiments use publicly available datasets from the FER2013, Kaggle, and GitHub repositories. To generalize and improve prediction quality, the model acts as a multi-modal application. On the available datasets, the proposed model achieves 95% prediction accuracy during testing. The hybrid model is also used to assess the system's significance. The experimental results show that, compared with previous techniques, the proposed model provides superior prediction results and better trade-offs. Other related statistical measures are also assessed and compared, and the simulation is run in the MATLAB 2020a environment.
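The two-stage flow described in the abstract (segment the facial region, then classify it) can be illustrated with a minimal Python sketch. This is not the authors' implementation, which runs in MATLAB: the threshold mask below is only a stand-in for SegNet, the linear scorer with softmax is only a stand-in for ConvNet, and all function names, the `weights` parameter, and the averaging of per-frame probabilities over a video are assumptions made for illustration.

```python
import math
from typing import List, Tuple

# Subset of emotion classes named in the abstract.
EMOTIONS = ["happiness", "sadness", "surprise"]

Frame = List[List[float]]  # grayscale frame, pixel intensities in [0, 1]


def segment_face_region(frame: Frame, threshold: float = 0.5) -> Frame:
    """Stand-in for SegNet (assumption): keep pixels at or above a threshold."""
    return [[p if p >= threshold else 0.0 for p in row] for row in frame]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of class scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_region(region: Frame, weights: List[List[float]]) -> List[float]:
    """Stand-in for ConvNet (assumption): one linear score per class, then softmax."""
    flat = [p for row in region for p in row]
    scores = [sum(w * x for w, x in zip(class_w, flat)) for class_w in weights]
    return softmax(scores)


def predict_frame(frame: Frame, weights: List[List[float]]) -> Tuple[str, List[float]]:
    """Segment first, then classify the segmented region."""
    probs = classify_region(segment_face_region(frame), weights)
    return EMOTIONS[probs.index(max(probs))], probs


def predict_video(frames: List[Frame], weights: List[List[float]]) -> str:
    """Average per-frame class probabilities (assumed aggregation rule)."""
    per_frame = [predict_frame(f, weights)[1] for f in frames]
    mean = [sum(col) / len(per_frame) for col in zip(*per_frame)]
    return EMOTIONS[mean.index(max(mean))]


if __name__ == "__main__":
    # Hypothetical 2x2 frames and hand-picked weights, one weight row per class.
    demo_weights = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
    demo_frames = [[[0.9, 0.1], [0.2, 0.8]], [[0.7, 0.0], [0.1, 0.6]]]
    print(predict_video(demo_frames, demo_weights))
```

In a real system the two stand-ins would be replaced by trained networks; the sketch only shows how the segmentation output feeds the classifier and how frame-level predictions might be pooled for a video.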

Data availability

Enquiries about data availability should be directed to the authors.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

The article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Bhushanam, P.N., Kumar, S.S. Modelling an efficient hybridized approach for facial emotion recognition using unconstraint videos and deep learning approaches. Soft Comput 28, 4593–4606 (2024). https://doi.org/10.1007/s00500-024-09668-1

DOI: https://doi.org/10.1007/s00500-024-09668-1