Abstract

Filter pruning of convolutional neural networks (CNNs) is a common technique to effectively reduce the memory footprint, the number of arithmetic operations, and, consequently, inference time. Recent pruning approaches also consider the targeted device (i.e., graphics processing units) for CNN deployment to reduce the actual inference time. However, simple metrics, such as the \(\ell ^1\)-norm, are used for deciding which filters to prune. In this work, we propose a hardware-aware technique to explore the vast multi-objective design space of possible filter pruning configurations. Our approach incorporates not only the targeted device but also techniques from explainable artificial intelligence for ranking and deciding which filters to prune. For each layer, the number of filters to be pruned is optimized with the objective of minimizing the inference time and the error rate of the CNN. Experimental results show that our approach can speed up inference time by 1.40× and 1.30× for VGG-16 on the CIFAR-10 dataset and ResNet-18 on the ILSVRC-2012 dataset, respectively, compared to the state-of-the-art ABCPruner.

Similar content being viewed by others

1 Introduction

Filter pruning has become one of the dominant techniques for reducing the inference time, memory footprint, and energy consumption of convolutional neural networks (CNNs) to increase their usability in embedded devices such as smartphones and other wearable devices [1]. However, determining the individual number of filters to be pruned in each layer is a challenging problem because CNNs may consist of tens to hundreds of convolutional layers with up to hundreds of filters per layer, resulting in a vast design space [2]. Moreover, evaluating each pruned CNN configuration may take a considerable amount of time. Recent publications address this problem by presenting approaches to reduce the design space while applying exploration-based optimization strategies such as evolutionary algorithms [2,3,4]. This can significantly reduce the number of operations required for inference of the CNN. Reduction is achieved in a number of ways, such as setting bounds for preserved filters or defining a small set of fixed cut rates that are applied to all layers [3]. Unfortunately, however, the approaches above ignore to incorporate also hardware knowledge of the target device, such as the graphics processing unit (GPU) on which the CNN is deployed. In [5], an approach is proposed to reduce the huge design space of possible filter pruning configurations by introducing a hardware-dependent clipping step size S and a step size vector \(\textbf{S}\). In this work, we extend this approach with a novel metric from explainable artificial intelligence (XAI) for ranking filters to decide which filters to prune in each layer and determine \(\textbf{S}\) by performing a sensitivity analysis before the design space exploration (DSE).

The general objective of state-of-the-art filter pruning DSE methods found in the literature, such as [2, 3], and [6], is to minimize the number of floating-point operations (FLOPs) required to compute the convolutional layers of a CNN. Although this objective may indirectly reduce the inference time on the target device, we show that focusing solely on FLOP minimization does not always lead to performance-optimal configurations. Instead, we rather propose to incorporate the objective of inference time directly by performing hardware measurements on the target device.

Efficient sets obtained by a DSE with the objective of minimizing the number of floating-point operations (FLOPs) (red front) and of minimizing the inference time (blue front) on the Nvidia RTX 2080 Ti GPU, respectively. Each point represents a non-dominated pruned CNN configuration of the VGG-11 baseline model, trained on the CIFAR-10 dataset

As a motivating example, consider Fig. 1. Shown are two efficient setsFootnote 1 consisting of pruned CNN configurations (represented by each dot) obtained after performing a DSE, using the multi-objective evolutionary algorithm (MOEA) NSGA-II [7] (see Sect. 5 for the detailed settings) to find optimal pruning configurations on the CIFAR-10 dataset [8] for the VGG-11 benchmark [9]. One efficient set originates from a DSE performed with the objective of minimizing inference time (blue front) on an Nvidia RTX 2080 Ti GPU, and the other one from a DSE performed with the objective of minimizing the number of FLOPs (red front). Minimizing the error rate is in both variants the second objective. Each DSE was run for 12 h, and the inference time of the resulting pruned CNN configurations optimized for FLOPs was then measured on the Nvidia RTX 2080 Ti for comparison.

When visually inspecting the two fronts, it can be clearly seen that the minimization of FLOPs does not adequately minimize the inference times. But in order to compensate for the extra effort for the deployment and inference time measurements on a target platform, we present different approaches to reducing the design space, including separating the convolutional layers into groups and reducing the dataset size required for retraining during the DSE (see Sect. 4). Our contributions can be summarized as follows:

-

Introduction of a hardware-aware DSE to systematically explore the vast design space of filter pruning options of a given CNN. Different from previous results presented in [5] to reduce the design space by introducing a global step size S and a step size vector \(\textbf{S}\), we introduce a novel metric from explainable artificial intelligence (XAI) to rank the importance of each filter per layer for pruning.

-

Automatic determination of \(\textbf{S}\), based on a layer sensitivity analysis for a given CNN, requires no user expertise. The more sensitive layers are assigned a small step size to allow for a finer search space.

-

For minimizing both the error rate R and inference time I of a given CNN, an MOEA-based DSE is introduced incorporating the XAI-based filter pruning technique. As a result, a large number of non-dominated configurations with high diversity among the contradicting objectives can be identified.

-

Evaluation: We demonstrate the performance of our hardware-aware DSE for XAI-based filter pruning on an Nvidia RTX 2080 Ti GPU for four different CNN benchmarks. Solutions are found providing considerable reductions in inference time I with similar error rates R compared to the unpruned baseline, to the best pruned CNN configurations found by ABCPruner [3] and previous work that did not use XAI [5]. For VGG-16, we provide an in-depth analysis by comparing one found pruning configuration by the XAI-based approach with the configuration found by ABCPruner.

2 Related Work

In CNN filter pruning or channel pruning (filter pruning “in reverse direction” [10]), entire filters are removed, which leads to (1) a reduction of the memory footprint, as the pruned filters do not have to be stored, and (2) a reduction of the inference time, as the arithmetic operations for the pruned filters are omitted. It appears that CNNs are typically over-parametrized and that the individual filters of each layer differ in importance, such that removing the less important filters has (almost) no impact on the overall error rate of the CNN [11]. The main question here is how to measure the importance of the filters. One area of research in filter pruning is, therefore, the introduction of different criteria for measuring the importance of filters. Here, the most common criteria are the \(\ell ^1\)-norm (importance of a filter corresponds to its absolute weight sum), geometric median, Taylor expansion loss, or Average Percentage of Zero activations (APoZ) [11,12,13]. Another approach to rank filters in order of importance is to use metrics from explainable AI (XAI) with the idea of explaining why a neural network produces the output from the given input image it receives. XAI is commonly used to guide the pruning of DNNs, e.g., [14,15,16,17]. In these works, the contribution of each filter in a CNN to the resulting output prediction is determined by backpropagation. In the work presented in the following, we make use of this method to determine which filters of a layer to prune and which rather not (see Sect. 3.2).

ThiNet [6] reduces the number of filters of a convolutional layer i based on the preserved channels in the subsequent convolutional layer \(i+1\). Here, based on a given compression rate (how many channels of layer \(i+1\) are preserved), the reconstruction error of the resulting feature maps of layer \(i+1\) is minimized by a greedy search algorithm.

Radu et al. [18] extensively study the effect of pruning different numbers of filters on the actual inference time on embedded hardware devices. Their findings show that filter pruning does not always lead to a reduction in inference time when deployed on the target platform, confirming our experimental findings (see Sect. 5.1). Li et al. [19] take into account the actual latency when executing the pruned CNN. Here, the latency is estimated with a heuristic model. However, the model may not be sufficiently accurate when being executed on a different platform or when a different version of a compiler (e.g., TensorRT) is used. Similarly, HALP [20] uses a look-up table acquired from pre-measurements, which may also impair accuracy. Another remaining question is how many measurements are required to get an accurate latency estimation for the vast design space.

However, what all approaches above have in common is that the pruning rates of filters have to be set manually for each layer, so automatic compression of CNNs is not possible.

Several recent works use Evolutionary Algorithms (EAs) for automatic filter pruning of CNNs to optimize for two conflicting objectives: the number of pruned filters and the overall error rate of CNNs [2, 4]. KGEA [4] proposes a genetic algorithm to find the so-called knee solution in the resulting Pareto front, which gives a trade-off between the total number of filters of the CNN and the error rate. KGEA avoids using heuristic criteria and ranks the filters with the genetic algorithm (here NSGA-II). A problem appears for deeper CNNs, having more filters, which leads to a significant increase in the design space. Moreover, the found knee solution for this approach only gives the optimal trade-off between the overall number of filters and the error rate of the CNN, while the real inference time is not evaluated on the hardware, and specific mappings of each convolutional layer on the hardware are not considered. Similar to this approach, ABCPruner [3] ranks the filters with an artificial bee colony algorithm to automatically find the least important filters for each layer. To reduce the design space and evaluate deeper CNNs, a parameter \(\alpha\) is introduced, which represents a lower bound for the number of preserved filters in each layer: For \(\alpha =0.8\), 80% of the filters in each layer are preserved, and thus the design space for each layer is also reduced by 80%. The optimal solution corresponds to the trade-off between the number of FLOPs and the error rate of the CNN. However, \(\alpha\) does not relate to the underlying hardware, and the user has to know which value fits best. \(\alpha\) is set globally for all layers of the CNN, whereas in our approach, setting step sizes, i.e., for a group of layers, is much more fine-granular and takes into account the different importance of layers. IEEpruner [2] uses the NSGA-II algorithm to optimize the pruning criteria such as \(\ell ^1\)-norm, geometric mean, APoZ, that measure the importance of filters. Each convolutional layer i is pruned considering the pruning criteria with the highest reduction of filters that still maintains the accuracy of the CNN. But this does not take into account the underlying hardware and the ultimate goal of minimizing the inference time is not considered by the MOEA. AACP [21] uses the \(\ell ^1\)-norm to determine the importance of filters and introduces a step size vector to reduce the size of the design space. An improved differential evolutionary algorithm (IDE) is used for optimization. However, it does also not incorporate information about the target hardware platform to define the step sizes for a group of layers. Another drawback is that no retraining is performed after each pruning step to maintain the accuracy of a pruned CNN [22].

Finally, another deficiency of the approaches mentioned before is that minimizing the number of floating-point operations, number of filters, or memory space in some cases does not necessarily lead to solutions minimal in the inference time. As our introductory example in Fig. 1 has shown, the selected mapping on the device highly impacts the inference time, which will be analyzed in detail in our experiment section (see Sect. 5). Rather, we are proposing a hardware-aware DSE for filter-pruning to directly minimize the inference time on a target device. Also, in contrast to previous approaches, we introduce techniques for design space reduction with respect to execution properties of the convolutional layers on the target hardware (GPUs in our case), which is explained in more detail in Sect. 3.3.

In DyFiP [16], an XAI-based filter ranking technique is proposed to select filters in a CNN for pruning that are less important for different classes of a given dataset. However, [16] does not perform any exploration of how many filters to prune in each layer. In our MOEA-based approach, the number of filters to be pruned in each layer is encoded in a chromosome and explored during a DSE to analyze inference time and error rate trade-offs. The selection of which filters exactly to prune per layer is determined for each design point based on XAI-based filter ranking similar to [16], thus preferring the pruning of less sensitive filters.

3 Fundamentals

In the following, we briefly describe the idea of filter pruning of convolutional layers (see Sect. 3.1). Then, in Sect. 3.2, we introduce the DeepLIFT algorithm to measure the importance of each filter to direct filter pruning. Finally, in Sect. 3.3, we introduce the sensitivity analysis to automatically determine the step sizes of \(\textbf{S}\).

3.1 Filter Pruning of CNNs

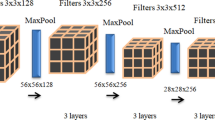

In CNNs, a convolutional layer i transforms so-called input feature maps, using a 3-dimensional tensor of shape \(R_i \times C_i \times N_i\), into a 3-dimensional tensor of shape \(R_{i+1} \times C_{i+1} \times M_i\), called output feature maps. Herein, \(R_i\) and \(C_i\) denote the number of rows and columns of one input feature map. \(N_i\) represents the number of input feature maps. Likewise, \(R_{i+1}\), \(C_{i+1}\), and \(M_i\) represent the number of rows, columns, and output feature maps. The transformation applies \(M_i\) 3-dimensional convolutional filters of shape \(K_i \times K_i \times N_i\) on the input feature maps. In this context, \(K_i\) denotes the kernel size of the ith layer. The number of floating-point operations (FLOPs) to compute a convolutional layer i is obtained as \(FLOPs _i = R_{i+1}\cdot C_{i+1} \cdot M_i \cdot N_i \cdot K_i^2\) [23].

Filter pruning aims to reduce the number of filters \(M_i\). This involves ranking the filters of a convolutional layer, which is explained in more detail in Sect. 3.2. The pruning of filters reduces the number of floating-point operations by a factor of \(\frac{M_i}{m_i}\), where \(1 \le m_i \le M_i\) is the number of preserved filters of convolutional layer i, resulting in a correspondingly reduced number of floating-point operations \(FLOPs _i^\textrm{pruned} = R_{i+1}\cdot C_{i+1} \cdot m_i \cdot N_i \cdot K_i^2\) required for convolutional layer i. Since the number of output feature maps \(M_i\) of convolutional layer i is equal to the number of input feature maps of the subsequent convolutional layer \(i+1\), \(N_{i+1}=M_i\), the number of floating-point operations of layer \(i+1\) is reduced by the same factor, i.e., \(FLOPs _{i+1}^\textrm{pruned} = R_{i+2}\cdot C_{i+2} \cdot m_{i+1} \cdot m_i \cdot K_{i+1}^2\). In a nutshell, filter pruning may indirectly affect the performance (inference time) of a CNN by reducing the number of floating-point operations to be performed. On the other hand, the error rate R generally increases the more filters are pruned.

3.2 XAI-Based Ranking of Filters

Typically, filter pruning is based on ranking the filters in each layer i that cause the smallest increase in the error rate of a given CNN. In this process, each filter gets assigned a concrete value that can be interpreted as the importance of the filter. The best-known methods for determining the importance of filters are the calculation of the \(\ell ^1\)-norm, the geometric median, or the average percentage of zero activations (APoZ) [12]. In [5], the \(\ell ^1\)-norm was used. For each filter within a considered layer i, all weights are summed up. Then, all filter sums are ranked, and the filters with the lowest sums are pruned. In this work, we propose to use the DeepLIFT algorithm for filter ranking that stems from the field of XAI. A recent work by Sabih et al. [16] showed how to extend DeepLIFT and use its importance measures to globally prune the filters of a CNN in order to minimize its latency. The method takes a sample of a dataset as input and outputs so-called saliency maps. For each filter in the CNN, a saliency map is created that has the same dimensions as the corresponding output feature maps and is computed during the backward pass. Let \(\varTheta\) be a set of all saliency maps of a neural network, and let \(I(d, \varTheta )\) denote the importance of all saliency map elements from sample d, with \(0 \le d < |D|\), with \(|D|\) being the number of samples used from the validation data set. Then, we can define the total saliency map importance as the average of importances obtained from \(|D|\) samples:

\(|D|\) varies depending on the type and size of the dataset, and its typical value is 1–2% of the validation dataset. The importance of a filter can then be computed by calculating the \(\ell ^1\)-norm of the corresponding saliency map. For each sample, the XAI method outputs a saliency map O for each convolutional filter in layer i with height \(R_{i+1}\) and width \(C_{i+1}\), where the importance is given by

XAI-based criteria generally outperform the simpler magnitude-based criteria. In the experiments and results reported in Sect. 5, we compare the XAI-based criteria with the magnitude-based criteria for a different amount of filters that were pruned [16].

3.3 Layer Sensitivity Dependent Pruning Step Size

We use this sensitivity analysis methodology to automatically obtain a pruning step size for each layer in a given CNN. We formulate the sensitivity analysis as a classical machine learning problem using different combinations of layer pruning as input features. The error rate objective is used as the output. A classical ML model, in this case, random forest, is then applied, and the importance of the features (layers in our case) is calculated. These importance values correspond to the sensitivity of the layers. The sensitivity analysis requires a certain number of evaluations (\(N_{se}\)), where each evaluation corresponds to a pruning configuration and a corresponding accuracy. The \(N_{se}\) and the number of samples of a dataset to obtain accuracy are hyperparameters that depend on the complexity of the use case and the time budget. For the sensitivity analysis of the VGG-16 model on the CIFAR-10 dataset, we use \(N_{se}=128\) different evaluations.

4 Design Space Exploration

The two conflicting objectives analyzed in the following using a Design Space Exploration (DSE) are the inference time I as well as the error rate R, both to be minimized for a given CNN consisting of a set V of convolutional layers [23]. For each convolutional layer i, consisting of \(M_i\) filters in the unpruned case, the number of unpruned filters \(m_i\), with \(1 \le m_i \le M_i\), is optimized.

By introducing a two-objective cost function f to be minimized and making use of a variable \(x_i\) per layer (i.e., 0 \(\le i < |V|\)), the optimization problem can be formulated as follows:

The vector \(\textbf{x}\) is denoted as pruned configuration or design point. Here, the entries \(x_i\) denote the number of filters pruned for a convolutional layer i of the CNN, such that for each convolutional layer i, the number of remaining filters is obtained as \(m_i = M_i - x_i\). The size of the design space \(\varOmega\) of all possible filter pruning combinations is thus \(|\varOmega | = \prod _{i=0}^{|V| - 1}{M_i}\). However, for many CNNs, this design space is prohibitively large, such that it would take a considerably large amount of time to explore and evaluate all design points. In the case of ResNet-18, including \(|V|=17\) convolutional layers, where the number of filters ranges from \(M_i = 64\) to 512, the number of possible pruned configurations is \(|\varOmega |=10^{38}\). To evaluate the error rate of each pruned configuration, compiling it using TensorRT [24] followed by measuring the inference time on the target hardware amounts to approximately 160 s for ResNet-18; this would result in more than \(10^{32}\) years to explore the entire design space, which is not feasible. The evaluation time may even increase further if retraining is performed for each pruned configuration, which might be necessary to maintain the error rate of a pruned CNN. Therefore, we present two approaches to reduce the design space \(\varOmega\), considering GPUs as target devices (see Sect. 4.1).

4.1 Reduction of Design Space

We propose to reduce the design space by (a) grouping the convolutional layers and by (b) introducing a global step size S and a step size vector \(\textbf{S}\), respectively, for the groups of convolutional layers. The motivation for (a) arises from the insight that layers with an equal number of filters have similar pruning rates in inference time optimal solutions, as could be seen for the VGG-16 layers in ABCPruner [3]. The motivation for (b) arises from the findings in [5], where steps in the inference time I are clearly visible as a function of the number of filters \(m_i\) with a spacing of 32 and 64 and small dips at a spacing of four filters.

We present two approaches, the grouped fixed step size (GFS) approach, where a global step size S is fixed for all groups of convolutional layers, and the more fine-granular grouped variable step size (GVS) approach, where an individual step size can be set for each group, resulting in a step size vector \(\textbf{S}\).

Schematic of the GFS and GVS approaches for VGG-16. First, VGG-16’s 13 convolutional layers i (\(0 \le i \le 12\)) are divided into \(|G|=4\) groups, with \(L_0=64\), \(L_1=128\), \(L_2=256\), and \(L_3=512\) filters. In the case of the GFS approach, a global step size, e.g., \(S=32\), is set. In the case of the GVS approach, different step sizes are assigned to the individual groups, here \(\textbf{S} = (8,8,16,32)\), which leads to a finer granularity for the layers along with a smaller number of filters

Figure 2 illustrates the grouping of the convolutional layers, as well as the GFS and GVS approaches, using the example of VGG-16, including \(|V|=13\) convolutional layers. The resulting size of the design space \(\varOmega\) is shown at the bottom of the figure. First, the grouping of convolutional layers is performed, where all convolutional layers i are partitioned into disjoint groups \(G_j \in G\). The layers in one group always have the same number of filters. In the example, the number of groups is chosen as \(|G|=4\), and the layers with the same number of filters \(M_i\) are grouped together. The number \(|G|\) of groups of convolutional layers is adjustable and influences both the exploration time and the quality of the results obtained by the DSE, which was further investigated in [5].

4.1.1 Grouped Fixed Step Size (GFS)

In the case of the GFS approach, a global step size S is set (see, e.g., \(S=32\) in Fig. 2), which is the same for all groups of convolutional layers. S can be set to \(1 \le S \le L_j\), where \(L_j\) is the number of filters of the grouped convolutional layers \(G_j\). The size of the resulting design space \(|\varOmega |\) is obtained as \(|\varOmega | = \prod _{j=0}^{|G|-1} \left\lceil \frac{L_j}{S}\right\rceil\). For the GFS approach, the optimization problem introduced in Eq. (4.1) therefore refines to

4.1.2 Grouped Variable Step Size (GVS)

In the case of the GVS approach, an individual step size \(S_j\) is defined for each group \(G_j\) of convolutional layers, resulting in a vector \(\textbf{S}\) whose elements are the individual step sizes \(S_j\) for each group of convolutional layers. These step sizes \(S_j\) can be chosen according to \(1 \le S_j \le L_j\). The resulting size of the reduced design space is therefore \(|\varOmega | = \prod _{j=0}^{|G|-1} \left\lceil \frac{L_j}{S_j}\right\rceil\). For the GVS approach, the optimization problem introduced in Eq. (4.1) thus refines to

In [5], the step sizes \(S_j\) were set in such a way that the same number of configurations is achieved for each layer, and it is assumed that input and output layers are more sensitive to pruning. In this work, we perform a sensitivity analysis ahead of the DSE. The more sensitive layers are assigned a smaller step size so that the same number of configurations is achieved for each layer.

For the reduced design space, the EA explores the number of filters for each layer. The decision of which filters to prune is based on a ranking criterion (see Sect. 3.2), which is completely separated from the EA, so different importance criteria may be integrated and even compared. In the upcoming experiments reported in Sect. 5, we analyze and compare filter rankings based on computing the \(\ell ^1\)-norm of weights and the XAI-based filter ranking scheme introduced in Sect. 3.2. The XAI-based filter ranking and the sensitivity analysis have to be done only once before the DSE. During the DSE, for each design point \(\textbf{x}\) (pruned CNN configuration), the resulting error rate \(R(\textbf{x})\) is determined, and after compilation for the GPU, the resulting inference time \(I(\textbf{x})\) is obtained.

5 Experiments

The following experiments were conducted for the VGG-11 and VGG-16 benchmarks [9] on the CIFAR-10 dataset [8], and the ResNet-18 and ResNet-101 benchmarks [25] on the ILSVRC-2012 dataset [26]. As in [5], the optimization problems for the proposed GFS (see Eq. (4.2)) and GVS (see Eq. (4.3)) approaches were solved using the NSGA-II [7] multi-objective evolutionary algorithm (MOEA), embedded in the Opt4J framework [27]. The MOEA was configured with a population size of 100, 25 parents per generation, an offspring of 25 per generation, and a crossover rate of 0.95, and the DSE was performed over 1000 generations. The compilation is done with TensorRT [24], version 7.2.3, the resulting inference time \(I(\textbf{x})\) equals the computed average over 1000 runs on an Nvidia RTX 2080 Ti GPU, a member of the Turing architecture family, which contains 11GB GDDR6 RAM. In the following, we compare the novel XAI-based filter ranking presented in Sect. 3.2 with ranking the filters by computing the \(\ell ^1\)-norm of the filter weights according to [5] for the GFS (see Sect. 4.1.1) and the GVS (see Sect. 4.1.2) approach. For a fair comparison, we use the same step sizes and number of groups.

5.1 VGG-11 and VGG-16 on CIFAR-10

First, the GFS and GVS approaches were applied to VGG-11 and VGG-16. Similar to [5], the entire CIFAR-10 dataset is used for retraining each explored design point during the DSE. The batch size for retraining and inference time measurement is set to \(B=128\). For VGG-11, no grouping of convolutional layers was performed such that \(|L|=13\) convolutional layers of VGG-16 were divided into \(|G|=8\) groups. With this, adjacent layers with the same number of filters are assigned to the same group. Based on prior knowledge that the first layers are more sensitive to pruning [3], we did not group the first four layers of VGG-16, and the layers with \(M_i=256\) and \(M_i=512\) are divided into two groups. The global step size is set to \(S=32\) because the inference time on the RTX 2080 Ti is significantly reduced if the filter number is a multiple of 32 and 64 (see [5]). The size of the design space for the GFS approach is \(|\varOmega |=16,384\).

To compare the convergence of the GFS and the GVS-XAI determining the individual step sizes of \(\textbf{S}\) for VGG-16 with and without sensitivity analysis, the hypervolume indicator [28] is used as a quality indicator. The hypervolume takes into account the volume of the objective space occupied by an efficient set. For the hypervolume as a reference point, the inference time I of the unpruned configuration of VGG-16 and the maximum error rate \(R=90\%\) is chosen, which corresponds to randomly guessing one out of the ten classes of the CIFAR-10 dataset. For each design point \(\textbf{x}\) the inference time \(I(\textbf{x})\) and error rate \(R(\textbf{x})\) are normalized by the reference point’s respective values, and the decrease in inference time and error rate (in percent) is added to the hypervolume. In the first experiment, we show how the determination of the step sizes \(\textbf{S}\) by a previously performed sensitivity analysis leads to faster convergence, indicated by the hypervolume indicator, and compare it with the GFS, where all layers are assigned the same global step size \(S=32\) from [5] and the GVS-XAI approach without sensitivity analysis, and the step size vector \(\textbf{S}=(8,8,8,8,8,16,16,16,16)\) is chosen.

Based on the sensitivities shown in Fig. 3, the step size vector is set to \(\textbf{S}=(8,8,8,8,8,16,64,64)\) so that the last groups of layers, which are less sensitive, are assigned a larger step size. It can be seen that the initial assumption that the input and output layers are more sensitive does not hold for the VGG-16 model on CIFAR-10, as the output layers show low sensitivity. This advantage is evident when comparing the hypervolumes with respect to the evaluations in Fig. 4 for the three approaches. It can be seen that the fixed step size GFS (red) converges as fast as the sensitivity-based GVS (green) but with a lower hypervolume (78.1%), indicating that the search space may be too small. With sensitivity analysis, the GVS approach converged to a hypervolume faster than without sensitivity analysis (blue).

Hypervolume of GFS (red) and GVS, where the step size vector \(\textbf{S}\) is set based on user expertise (blue) determined by sensitivity analysis (green) for VGG-16 trained on the CIFAR-10 with \(|G|=8\), targeting the RTX 2080 Ti GPU. With sensitivity analysis, the hypervolume increases significantly faster than with the other methods. GFS and the GVS version based on sensitivity analysis require about 5,000 evaluations before their hypervolumes converge

To compare the two resulting efficient sets of the GFS-\(\ell ^1\) and the GFS-XAI approach for VGG-11 and VGG-16 as well as the GVS-\(\ell ^1\) and the sensitivity-based GVS-XAI approach, also the hypervolume indicator [28] is used as a quality indicator. For each DSE, 5,000 evaluations of pruned configurations were performed. For each pruned configuration in the resulting efficient set, retraining for 150 epochs was performed, which is the same number of epochs as used by ABCPruner.

In Table 1, one sees that in all cases, the XAI-based filter ranking achieves a higher hypervolume than the \(\ell ^1\)-norm. Comparing the two different approaches, GFS and GVS, the GVS approach exceeds the hypervolume of the GFS approach by finding pruned configurations with a lower error rate and inference time that are not part of the design space of the GFS approach. This shows that the results can be improved with the more fine-granular GVS approach. However, here the trade-off has to be made with respect to the overall time for the DSE [5].

Efficient sets for VGG-16 on CIFAR-10 dataset, determined by DSE using the GVS-\(\ell ^1\) (magenta) and the GVS-XAI (light blue) pruning technique, with \(|G|=8\), targeting the RTX 2080 Ti GPU, after 5000 evaluations. The resulting efficient sets for each approach after retraining for 150 epochs are shown. The found pruning configuration by ABCPruner (ABCPruner-80%) and the three configurations found by GVS-XAI-based (GVS-XAI (a), (b), and (c)) that dominate the ABCPruner configurations in both objectives are indicated by stars and used for the comparison in Table 2

For the GVS approach on VGG-16, the resulting efficient sets are shown in Fig. 5. Overall, the GVS-XAI pruner found 18 configurations, more than found by ABCPruner (up to 10 configurations) or KGEA (only one pruned configuration). This allows the user to select the desired pruned configurations that meet their requirements, i.e., that are within a certain inference time corridor. Comparing the fronts of the efficient sets found by the GVS-\(\ell ^1\) pruner (magenta) with GVS-XAI pruner (light blue), it can be seen that the GVS-XAI pruner finds pruning configurations that dominate the found configurations of GVS-\(\ell ^1\). The pruning configurations highlighted by the stars in light blue in Fig. 5 dominate the ABCPruner-80% configuration [3] in both objectives.

Number of filters \(m_i\) per layer i for the found pruning configurations of ABCPruner-80% and GVS-XAI-based pruning (configuration GVS-XAI (b) in Fig. 5) compared to the baseline for VGG-16 on CIFAR-10. For GVS-XAI pruner the layer \(i=4\) and \(i=5\) as well as layer \(i=7\) to \(i=9\) and \(i=10\) to \(i=12\) are grouped together

In Fig. 6, the found configuration of the GVS-XAI pruner (GVS-XAI (b)), the best-found configuration by ABCPruner-80% and the VGG-16 baseline are compared in terms of the number of filters \(m_i\) for each layer i. One can see that the evolutionary algorithm finds configurations that may differ from the pruning configurations produced by ABCPruner-80%. For ABCPruner-80%, there is more variation in the filter number \(m_i\), as no grouping of layers is performed. Especially layer \(i=10\) and layer \(i=12\) get assigned more filters compared to the GVS-XAI pruner, achieving a speedup of \(1.40\times\) compared with the ABC-Pruner-80% configuration and a speedup of \(3.35\times\) compared to the VGG-16 unpruned model (see Table 2). In addition, the GVS-XAI pruner achieves a significant reduction in FLOPs and the number of parameters (see the rightmost column in Table 2) compared to all other filter pruning approaches such as ABCPruner-80% [3], ThiNet [6] and KGEA [4], which also do not perform hardware-aware filter pruning.

5.2 ResNet-18 and ResNet-101 on ILSVRC-2012

Considering ResNet-18 and ResNet-101, we use 1% of the dataset for retraining each design point for one epoch during the DSE. Again, the batch size is set to \(B=128\) for retraining. The inference time for ResNet-18 was determined for a batch size of \(B=128\), whereas for ResNet-101, the batch size for the inference time measurement was chosen as \(B=1\). For each design point on the resulting efficient set, retraining of 90 epochs with the entire dataset is applied, which is the same number as used by ABCPruner. For the GVS-XAI approach for ResNet-18 and ResNet-101, we choose a step size vector of \(\textbf{S}=(4,4,8,8,16,16,32,32)\) to provide a finer granularity for the first convolutional layers, containing a lower amount of filters. In the case of ResNet-18, which consists of 16 convolutional layers, two convolutional layers are assigned to one group, resulting in a total of \(|G|=8\) groups. For ResNet-18 on ILSVRC-2012, a selected configuration achieves a speedup of \(1.88\times\) compared to 1.44x provided by ABCPruner-70%, as well as an increase of \(0.81\%\) in top1-accuracy compared to the ABCPruner configuration (see Table 2). Here, the number of parameters is slightly higher compared to the found configuration by \(GVS-\ell ^1\) and ABCpruner. The reason is that the GVS-XAI pruner configuration shows higher pruning rates in the first layers, consisting of fewer parameters. However, the last layers, which contain a higher number of pruning parameters, are pruned less because minimizing the number of parameters of the CNN is not one of the goals of DSE. The \(|V|=99\) layers of ResNet-101 were divided into the 8 groups as follows. Group \(j=0\) consists of the first 3 convolutional layers, group \(j=1\) of the following 6 convolutional layers, \(j=2\) of 4, \(j=3\) of 8, \(j=4\) of 23, \(j=5\) of 46, \(j=6\) of 6, and \(j=7\) of 3 convolutional layers. Again, we assume that the first and last layers are more sensitive to pruning, so fewer layers were assigned to one group. On ResNet-101, the GVS-XAI approach found a sample configuration exhibiting a speedup of \(1.35\times\) with respect to the unpruned baseline (\(1.33\times\) for ABCPruner-80%) as well as an increase of \(0.41\%\) in top1-accuracy compared to the ABCPruner configuration. Overall, our GVS-XAI pruner finds configurations that decrease the inference time significantly while achieving equal and sometimes even higher accuracies on the data sets compared to ABCPruner.

Comparing the number of training epochs, ABCPruner needs 12 training epochs for one pruning configuration and, thus, a total of 120 training epochs for obtaining ten different configurations for either the VGG or ResNet model [3]. In contrast, our approaches do not perform any retraining during the DSE in the case of the VGG models. Of course, one has to consider that for evaluating a pruned configuration, the execution time and error rate have to be assessed on the GPU. Here, we need 1000 inferences for one evaluation, which is still much less overhead than ABCPruner, considering that 120 training epochs already require 6,000,000 inferences during training on the CIFAR-10 dataset (compared to the 5,000,000 inferences for 5,000 evaluations). For ResNet models, we use only 1% of the dataset for retraining and perform 5,000 evaluations, resulting in a total of 50 training epochs.

6 Conclusion

We have presented a novel hardware-aware design space exploration (DSE) approach for automatic filter pruning of CNNs based on XAI-based filter ranking. It was shown that the huge design space of pruning options could be significantly reduced. The approach provides knobs to adjust the DSE’s granularity and consider execution properties of the convolutional layers on the target hardware (GPUs in our case). With the integrated XAI-based filter ranking, we are able to find better pruning configurations than with ranking the filters by computing the \(\ell ^1\)-norm of the filter weights. We proposed to utilize sensitivity analysis of layers to automatically specify the step sizes for layers for search space reduction. The resulting efficient set of non-dominated configurations provides great diversity in terms of the two objectives, inference time I and error rate R. Based on the user’s constraints on I and R, the most promising CNN configurations can be selected for further retraining. Overall, for VGG-16 on the CIFAR-10 dataset, our presented GVS-XAI approach can achieve a speedup compared to state-of-the-art ABCPruner by \(1.54\times\) requiring fewer parameters and FLOPs and even showing a slight increase in accuracy. For ResNet-18 and ResNet-101 on the ILSVRC-2012 dataset, we achieved a speedup of \(1.88\times\) and \(1.35\times\), respectively, when deployed on an Nvidia RTX 2080 Ti device with slightly higher accuracy than ABCPruner and GVS-\(\ell ^1\). In this work, we measured the execution time for each pruning configuration on the high-end RTX-2080 Ti GPU. This approach might be too time-consuming for less powerful GPUs, e.g., Nvidia AGX, as the inference time has to be measured for each configuration. Modeling the inference time for the particular GPU using a performance model could speed up the DSE. However, performance modeling of GPUs under certain workloads is a research subject on its own. It is not straightforward since GPU architectures, especially their memory hierarchies, are getting increasingly complex. Lechner and Jantsch [29] proposed a framework that also requires several days for performance modeling of embedded GPUs. Regardless of using measurements or a performance model, the DSE benefits from our presented search space reduction techniques. In the future, we also intend to apply the presented DSE to other accelerator targets, such as FPGAs [30] and custom-tailored architectures for highly parallel CNN acceleration [31].

Notes

Efficient sets are approximations of Pareto sets. Since an evolutionary multi-objective algorithm typically cannot guarantee finding truly Pareto-optimal points, shown is the efficient set of non-dominated points after a DSE run.

References

Hoefler, T., et al.: Sparsity in deep learning: pruning and growth for efficient inference and training in neural networks. J. Mach. Learn. Res. 22, 241:1-241:124 (2021)

Zhang, Y., et al.: Improvement of efficiency in evolutionary pruning . In: Proceedings of the International Joint Conference on Neural Networks (IJCNN), pp. 1–8. IEEE (2021). https://doi.org/10.1109/IJCNN52387.2021.9534055

Lin, M., et al.: Channel pruning via automatic structure search . In: Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI), pp. 673–679 (2020). https://doi.org/10.24963/ijcai.2020/94

Zhou, Y., Yen, G.G., Yi, Z.: A knee-guided evolutionary algorithm for compressing deep neural networks. IEEE Trans. Cybern. 51(3), 1626–1638 (2021). https://doi.org/10.1109/TCYB.2019.2928174

Heidorn, C., et al.: Hardware-aware evolutionary filter pruning . In: Embedded Computer Systems: Architectures, Modeling, and Simulation—22nd International Conference, SAMOS 2022, Samos, Greece, July 3–7, 2022, Proceedings, vol. 13511. Lecture Notes in Computer Science, pp. 283–299. Springer, (2022). https://doi.org/10.1007/978-3-031-15074-6_18

Luo, J.-H., et al.: ThiNet: pruning CNN filters for a thinner net. IEEE Trans. Pattern Anal. Mach. Intell. 41(10), 2525–2538 (2019). https://doi.org/10.1109/TPAMI.2018.2858232

Deb, K., et al.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evolut. Comput. 6(2), 182–197 (2002). https://doi.org/10.1109/4235.996017

Krizhevsky, A.: Learning Multiple Layers of Features from Tiny Images. University of Toronto (2012)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: Proceedings of the 3rd International Conference on Learning Representations (ICLR) (2015)

Ma, X., et al.: Non-structured DNN weight pruning—Is it beneficial in any platform? In: The Computing Research Repository (CoRR) (2020). arXiv: 1907.02124 [cs.LG]

Li, H., et al.: Pruning filters for efficient ConvNets . In: Proceedings of the 5th International Conference on Learning Representations (ICLR) (2017)

Molchanov, P., et al.: Pruning convolutional neural networks for resource efficient inference. In: Proceedings of the 5th International Conference on Learning Representations (ICLR) (2017)

Hu, H., et al.: Network trimming: a data-driven neuron pruning approach towards efficient deep architectures . In: The Computing Research Repository (CoRR) (2016). arXiv: 1607.03250 [cs.NE]

Shrikumar, A., Greenside, P, Kundaje, A.: Learning important features through propagating activation differences . In: Proceedings of the 34th International Conference on Machine Learning (ICML), vol. 70, pp. 3145–3153 (2017)

Sabih, M., Hannig, F., Teich, J.: Utilizing explainable AI for quantization and pruning of deep neural networks . In: The Computing Research Repository (CoRR) (2020). arXiv: 2008.09072 [cs.CV]

Sabih, M., Hannig, F., Teich, J.: DyFiP: explainable AI-based dynamic filter pruning of convolutional neural networks . In: Proceedings of the 2nd European Workshop on Machine Learning and Systems (EuroMLSys), pp. 109–115. ACM (2022). https://doi.org/10.1145/3517207.3526982

Muhammad Sabih et al.: MOSP: Multi-objective sensitivity pruning of deep neural networks. In: The 13th International Green and Sustainable Computing Conference (IGSC), pp 1–8. IEEE (2022). https://doi.org/10.1109/IGSC55832.2022.9969374

Radu, V., et al.: Performance aware convolutional neural network channel pruning for embedded GPUs . In: Proceedings of the IEEE International Symposium on Workload Characterization (IISWC), pp. 24–34. IEEE (2019). https://doi.org/10.1109/IISWC47752.2019.9042000

Li, X., et al.: Partial order pruning: for best speed/accuracy trade-off in neural architecture search . In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 9145–9153. IEEE (2019). https://doi.org/10.1109/CVPR.2019.00936

Shen, M., et al.: HALP: hardware-aware latency pruning . In: The Computing Research Repository (CoRR) (2021). arXiv: 2110.10811 [cs.CV]

Lin, L., Yang, Y., Guo, Z.: AACP: model compression by accurate and automatic channel pruning . In: The Computing Research Repository (CoRR) (2021). arXiv: 2102.00390 [cs.CV]

Han, S., et al.: Learning both weights and connections for efficient neural network . In: Proceedings of the Annual Conference on Neural Information Processing Systems, pp. 1135–1143 (2015)

Schuster, A., et al.: Design space exploration of time, energy, and error rate trade-offs for CNNs using accuracy-programmable instruction set processors . In: Proceedings of the 2nd International Work- shop on IoT, Edge, and Mobile for Embedded Machine Learning (ITEM), pp. 375–389. Springer (2021). https://doi.org/10.1007/978-3-030-93736-2_29

Corp, N.: Nvidia TensorRT documentation. (2021). https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html. visited on 10 Apr 2021

He, K., et al.: Deep residual learning for image recognition . In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778. IEEE (2016). https://doi.org/10.1109/CVPR.2016.90

Russakovsky, O., et al.: ImageNet large scale visual recognition challenge. In: The Computing Research Repository (CoRR) (2014). arXiv: 1409.0575 [cs.CV]

Lukasiewycz, M., et al.: Opt4J—a modular framework for meta-heuristic optimization . In: Proceedings of the Genetic and Evolutionary Computing Conference (GECCO), pp. 1723–1730. ACM (2011). https://doi.org/10.1145/2001576.2001808

Zitzler, E., Thiele, L.: Multiobjective evolutionary algorithms: a comparative case study and the strength pareto approach. IEEE Trans. Evolut. Comput. 3(4), 257–271 (1999). https://doi.org/10.1109/4235.797969

Lechner, M., Jantsch, A.: Blackthorn: latency estimation framework for CNNs on embedded Nvidia platforms. IEEE Access 9, 110074–110084 (2021). https://doi.org/10.1109/ACCESS.2021.3101936

Plagwitz, P., et al.: A Safari through FPGA-based neural network compilation and design automation flows. In: Proceedings of the 29th IEEE International Symposium on Field-Programmable Custom Com- puting Machines (FCCM), pp. 10–19. IEEE (2021). https://doi.org/10.1109/FCCM51124.2021.00010

Heidorn, C., Hannig, F., Teich, J.: Design space exploration for layer-parallel execution of convolutional neural networks on CGRAs . In: Proceedings of the 23rd International Workshop on Software and Compilers for Embedded Systems (SCOPES), pp. 26–31. ACM (2020). https://doi.org/10.1145/3378678.3391878

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was partially funded by the German Federal Ministry for Education and Research (BMBF) within project KISS (01IS19070).

Author information

Authors and Affiliations

Contributions

CH and MS contributed equally to this manuscript. CH wrote the paper and assisted in performing the evaluation. MS integrated the XAI algorithm for measuring filter importance, performed the evaluation, and proofread the paper. NM initially implemented and evaluated the L1-norm-based filter pruning. CS, FH provided valuable guidance during the implementation and contributed to the writing. JT, FH performed the technical review, supervised the work, and contributed to the writing.

Corresponding author

Ethics declarations

Conflict of interest

The authors report no conflicts of interest. The funders had no role in the design of the study; collection, analysis, or interpretation of the data; in writing the manuscript, or in the decision to publish the results.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heidorn, C., Sabih, M., Meyerhöfer, N. et al. Hardware-Aware Evolutionary Explainable Filter Pruning for Convolutional Neural Networks. Int J Parallel Prog 52, 40–58 (2024). https://doi.org/10.1007/s10766-024-00760-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10766-024-00760-5