Abstract

Continuing our earlier work in Nam et al. (One-step replica symmetry breaking of random regular NAE-SAT I, arXiv:2011.14270, 2020), we study the random regular k-nae-sat model in the condensation regime. In Nam et al. (2020), the (1rsb) properties of the model were established with positive probability. In this paper, we improve the result to probability arbitrarily close to one. To do so, we introduce a new framework which is the synthesis of two approaches: the small subgraph conditioning and a variance decomposition technique using Doob martingales and discrete Fourier analysis. The main challenge is a delicate integration of the two methods to overcome the difficulty arising from applying the moment method to an unbounded state space.

Similar content being viewed by others

1 Introduction

Building on the earlier theory of spin-glasses, statistical physicists in the early 2000s developed a detailed collection of predictions for a broad class of sparse random constraint satisfaction problems (rcsp). These predictions describe a series of phase transitions as the constraint density varies, which is governed by one-step replica symmetry breaking (1rsb) ([31, 34]; cf. [4] and Chapter 19 of [33] for a survey). We study one of such rcsp’s, named the random regular k-nae-sat model, which is perhaps the most mathematically tractable among the 1rsb class of rcsp’s. As a continuation of our companion work [37], this paper completes our program to establish that the 1rsb prediction for the random regular nae-sat hold with probability arbitrarily close to one.

The nae-sat problem is a random Boolean cnf formula, where n Boolean variables are subject to constraints in the form of clauses which are the “or” of k of the variables or their negations chosen uniformly at random. The formula itself is the “and” of these clauses. A variable assignment \(\underline{x}\in \{0,1\}^{n}\) is called a nae-sat solution if both \(\underline{ x}\) and \(\lnot \underline{ x}\) evaluate to true. We then choose a uniformly random instance of d-regular (each variable appears d times) k-nae-sat (each clause has k literals) problem, which gives the random d-regular k-nae-sat problem with clause density \(\alpha =d/k\) (see Sect. 2 for the formal definition).

Let \(Z_n\) denote the number of solutions for a given random d-regular k-nae-sat instance. The physics prediction is that for each fixed \(\alpha \), there exists \(\textsf {f}(\alpha )\) called the free energy such that

A direct computation of the first moment \(\mathbb {E}Z_n\) gives that

(\(\textsf {f}^{\textsf {rs}}(\alpha )\) is called the replica-symmetric free energy), so \(\textsf {f}\le \textsf {f}^{\textsf {rs}}\) holds by Markov’s inequality. The work of Ding–Sly–Sun [25] and Sly–Sun–Zhang [43] established some of the physics conjectures on the description of \(Z_n\) and \(\textsf {f}\) given in [31, 36, 45], which are summarized as follows.

-

([25]) For large enough k, there exists the satisfiability threshold \(\alpha _{\textsf {sat}}\equiv \alpha _{\textsf {sat}}(k)>0\) such that

$$\begin{aligned} \lim _{n\rightarrow \infty } \mathbb {P}(Z_n>0) = {\left\{ \begin{array}{ll} 1 &{} \text { for } \alpha \in (0,\alpha _{\textsf {sat}});\\ 0 &{} \text { for }\alpha > \alpha _{\textsf {sat}}. \end{array}\right. } \end{aligned}$$ -

([43]) For large enough k, there exists the condensation threshold \(\alpha _{\textsf {cond}}\equiv \alpha _{\textsf {cond}}(k)\in (0,\alpha _{\textsf {sat}})\) such that

$$\begin{aligned} \textsf {f}(\alpha )= {\left\{ \begin{array}{ll} \textsf {f}^{\textsf {rs}}(\alpha ) &{} \text { for } \alpha \le \alpha _{\textsf {cond}};\\ \textsf {f}^{1\textsf {rsb}}(\alpha ) &{} \text { for } \alpha > \alpha _{\textsf {cond}}, \end{array}\right. } \end{aligned}$$(1)where \(\textsf {f}^{1\textsf {rsb}}(\alpha )\) is the 1rsb free energy. Moreover, \(\textsf {f}^{\textsf {rs}}(\alpha ) > \textsf {f}^{\textsf {1\textsf {rsb}}}(\alpha )\) holds for \(\alpha \in (\alpha _{\textsf {cond}},\alpha _{\textsf {sat}})\). For the explicit formula and derivation of \(\textsf {f}^{1\textsf {rsb}}(\alpha )\) and \(\alpha _{\textsf {cond}}\), we refer to Section 1.6 of [43] for a concise overview.

Furthermore, there are more detailed physics predictions that the solution space of the random regular k-nae-sat is condensed when \(\alpha \in (\alpha _{\textsf {cond}},\alpha _{\textsf {sat}})\) into a finite number of clusters. Here, cluster is defined by the connected component of the solution space, where we connect two solutions if they differ by one variable. Indeed, in [37], we proved that for large enough k, the solution space of random regular k-nae-sat indeed becomes condensed in the condensation regime for a positive fraction of the instances. That is, it holds with probability strictly bounded away from 0.

The following theorem strengthens the aforementioned result and shows that the condensation phenomenon holds with probability arbitrarily close to 1.

Theorem 1.1

Let \(k\ge k_0\) where \(k_0\) is a large absolute constant, and let \(\alpha \in (\alpha _{\textsf {cond}}, \alpha _{\textsf {sat}})\) such that \(d\equiv \alpha k\in \mathbb {N}\). For all \(\varepsilon >0\) and \(M\in \mathbb {N}\), there exist constants \(K\equiv K(\varepsilon ,\alpha ,k)\in \mathbb {N}\) and \(C\equiv C(M,\varepsilon ,\alpha ,k)>0\) such that with probability at least \(1-\varepsilon \), the random d-regular k-nae-sat instance satisfies the following:

-

(a)

The K largest solution clusters, \(\mathcal {C}_1,\ldots ,\mathcal {C}_K\), occupy at least \(1-\varepsilon \) fraction of the solution space;

-

(b)

There are at least \(\exp (n \text {\textsf{f}}^{1 \text {\textsf{rsb}}}(\alpha ) -c^{\star }\log n -C )\) many solutions in \(\mathcal {C}_1,\ldots ,\mathcal {C}_M\), the M largest clusters (see Definition 2.19 for the definition of \(c^\star \)).

Remark 1.2

Throughout the paper, we take \(k_0\) to be a large absolute constant so that the results of [25, 43] and [37] hold. In addition, it was shown in [43, Proposition 1.4] that \((\alpha _{\textsf {cond}}, \alpha _{\textsf {sat}})\) is a subset of \((\alpha _{\textsf {lbd}}, \alpha _{\textsf {ubd}})\), where \(\alpha _{\textsf {lbd}}\equiv (2^{k-1}-2)\log 2\) and \(\alpha _{\textsf {ubd}}\equiv 2^{k-1}\log 2\), so we restrict our attention to \(\alpha \in (\alpha _{\textsf {lbd}}, \alpha _{\textsf {ubd}})\).

1.1 One-step replica symmetry breaking

In the condensation regime \(\alpha \in (\alpha _{\textsf {cond}},\alpha _{\textsf {sat}})\), the random regular k-nae-sat model is believed to possess a single layer of hierarchy of clusters in the solution space. That is, the solutions are fairly well-connected inside each cluster so that no additional hierarchical structure in it. Such behavior is conjectured in various other models such as random graph coloring and random k-sat. We remark that there are also other models such as maximum independent set (or high-fugacity hard-core model) in random graphs with small degrees [10] and Sherrington-Kirkpatrick model [41, 44], which are expected or proven [6] to undergo full rsb, which means that there are infinitely many levels of hierarchy inside the solution clusters.

A way to characterize 1rsb is to look at the overlap between two uniformly drawn solutions. In the condensation regime, there are a bounded number of clusters containing most of the solutions. Thus, the event of two solutions belonging to the same cluster, or different clusters, each happen with a non-trivial probability. According to the description of 1rsb, there is no additional structure inside each cluster, so the Hamming distance between the two solutions is expected to concentrate precisely at two values, depending on whether they came from the same cluster or not.

It was verified in [37] that the overlap concentrates at two values for a positive fraction of the random regular nae-sat instances. Theorem 1.4 below verifies that the overlap concentration happens for almost all random regular nae-sat instances.

Definition 1.3

For \(\underline{x}^1,\underline{x}^2 \in \{0,1\}^n\), let \(\underline{y}^i = 2\underline{x}^i - {\textbf {1}}\). The overlap \(\rho (\underline{x}^1,\underline{x}^2)\) is defined by

In words, the overlap is the normalized difference between the number of variables with the same value and the number of those with different values.

Theorem 1.4

Let \(k\ge k_0\), \(\alpha \in (\alpha _{\textsf {cond}}, \alpha _{\textsf {sat}})\) such that \(d\equiv \alpha k\in \mathbb {N}\), and \(p^\star \equiv p^\star (\alpha ,k)\in (0,1)\) be a fixed constant (for its definition, see Definition 6.8 of [37]). For all \(\varepsilon >0\), there exist constants \(\delta =\delta (\varepsilon ,\alpha ,k)>0\) and \(C\equiv C(\varepsilon ,\alpha ,k)\) such that with probability at least \(1-\varepsilon \), the random d-regular k-nae-sat instance \(\mathscr {G}\) satisfies the following. Let \( {x}^1, {x}^2\in \{0,1\}^n\) be independent, uniformly chosen satisfying assignments of \(\mathscr {G}\). Then, the absolute value \(\rho _{\text {abs}} \equiv |\rho |\) of their overlap \(\rho \equiv \rho (\underline{x}^1,\underline{x}^2)\) satisfies

-

(a)

\(\mathbb {P}(\rho _{\text {abs}}\le n^{-1/3} |\mathscr {G}) \ge \delta \);

-

(b)

\(\mathbb {P}( \big |\rho _{\text {abs}}-p\big | \le n^{-1/3} |\mathscr {G})\ge \delta \);

-

(c)

\(\mathbb {P}( \min \{ \rho _{\text {abs}}, |\rho _{\text {abs}}-p|\} \ge n^{-1/3}|\mathscr {G})\le Cn^{-1/4}\).

1.2 Related works

Many of the earlier works on rcsps focused on determining the satisfiability thresholds and for rcsp models that are known not to exhibit rsb, such goals were established. These models include random linear equations [7], random 2-sat [12, 13], random 1-in-k-sat [1] and k-xor-sat [22, 26, 38]. On the other hand, for the models which are predicted to exhibit rsb, intensive studies have been conducted to estimate their satisfiability threshold (random k-sat [5, 18, 30], random k-nae-sat [2, 17, 21] and random graph coloring [3, 14, 15, 19]).

More recently, the satisfiability thresholds for rcsps in the 1rsb class have been rigorously determined for several models including maximum independent set [24], random regular k-nae-sat [25], random regular k-sat [18] and random k-sat [23]). These works carried out a demanding second moment method to the number of clusters instead of the number of solutions. Although determining the colorability threshold is left open, the condensation threshold for random graph coloring was established in [9], where they conducted a challenging analysis based on a clever “planting" technique, and the results were generalized to other models in [16]. Also, [8] identified the condensation threshold for random regular k-sat, where each variable appears d/2-times positive and d/2-times negative.

Further theory was developed in [43] to establish the 1rsb free energy for random regular k-nae-sat in the condensation regime by applying the second moment method to the \(\lambda \)-tilted partition function. Later, our companion paper [37] made further progress in the same model by giving a cluster level description of the condensation phenomenon. Namely, [37] showed that with positive probability, a bounded number of clusters dominate the solution space and the overlap concentrates on two points in the condensation regime. Our main contribution is to push the probability arbitrarily close to one and show that the same phenomenon holds with high probability.

Lastly, [11] studied the random k-max-nae-sat beyond \(\alpha _{\textsf {sat}}\), where they verified that the 1rsb description breaks down before \(\alpha \asymp k^{-3}4^k\). Indeed, the Gardner transition from 1rsb to full rsb is expected at \(\alpha _{\textsf {Ga}}\asymp k^{-3}4^k >\alpha _{\textsf {sat}}\) [32, 35], and [11] provides evidence of this phenomenon.

1.3 Proof ideas

In [37], the majority of the work was to compute moments of the tilted cluster partition function \(\overline{{{\textbf {Z}}}}_\lambda \) and \(\overline{{{\textbf {Z}}}}_{\lambda ,s}\), defined as

where the sums are taken over all clusters \(\Upsilon \). Moreover, let \(\overline{{{\textbf {N}}}}_{s}\) denote the number of clusters whose size is in the interval \([e^{ns}, e^{ns +1} )\), i.e.

Denote by \(s_\circ \) the size of the solution space in normalized logarithmic scale from Theorem 1.1:

where \(c_\star \) is the constant introduced in Theorem 1.1 and \(C\in \mathbb {R}\). In [37], we obtained the estimates on \(\overline{{\textbf {N}}}_{s_\circ }\) from the second moment method showing that \(\mathbb {E}[\overline{{{\textbf {N}}}}_{s_\circ }^2] \lesssim _{k} (\mathbb {E}\overline{{{\textbf {N}}}}_{s_\circ })^2\) holds, and that \(\mathbb {E}\overline{{{\textbf {N}}}}_{s_\circ }\) decays exponentially as \(C\rightarrow -\infty \). Thus, it was shown in [37] that

However, in order to establish (a) and (b) of the Theorem 1.1, we need to push the probability in the second line to \(1-\varepsilon \).

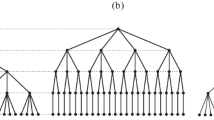

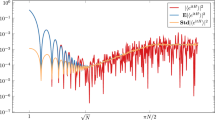

To do so, one may hope to have \(\mathbb {E}[\overline{{{\textbf {N}}}}_{s_\circ }^2] \approx (\mathbb {E}\overline{{{\textbf {N}}}}_{s_\circ })^2\) to deduce \(\mathbb {P}(\overline{{{\textbf {N}}}}_{s_\circ } >0) {\rightarrow } 1\) for large enough C, but this is false in the case of random regular nae-sat. The primary reasons is that short cycles in the graph causes multiplicative fluctuations in \(\overline{{{\textbf {N}}}}_{s_\circ }\). Therefore, our approach is to rescale \(\overline{{{\textbf {N}}}}_{s_\circ }\) according to the effects of short cycles, rescaled partition function \(\widetilde{{{\textbf {N}}}}_{s_\circ }\) concentrate around its expectation. That is, \(\mathbb {E}[\widetilde{{{\textbf {N}}}}_{s_\circ }^2] \approx (\mathbb {E}\widetilde{{{\textbf {N}}}}_{s_\circ })^2\) (to be precise, this will only be true when C is large enough, due to the intrinsic correlations coming from the largest clusters). Furthermore, we argue that the fluctuations coming from the short cycles are not too big, and hence can be absorbed by \(\overline{{{\textbf {N}}}}_{s_\circ }\) if \(\mathbb {E}\overline{{{\textbf {N}}}}_{s_\circ }\) is large. To this end, we develop a new argument that combines small subgraph conditioning [39, 40], which is a widely used tool in problems on random graphs, and the Doob martingale approach used in [24, 25], which are not effective in our model if used alone.

The small subgraph conditioning method ([39, 40]; for a survey, see Chapter 9.3 of [29]) has proven to be useful in many settings [27, 28, 42] to derive the precise distributional limits of partition functions. For example, [27] applied this method to the proper coloring model of bipartite random regular graphs, where they determined the limiting distribution of the number of colorings. However, this method relies much on the algebraic identities specific to the model which are sometimes not tractable, including our case. Roughly speaking, one needs a fairly clear combinatorial formula of the second moment to carry out the algebraic and combinatorial computations.

Another technique that inspired our proof, which we will refer to as the Doob martingale approach, was introduced in [24, 25]. This method rather directly controls the multiplicative fluctuations of \(\overline{\text {{\textbf {N}}}}_{s_\circ }\) by investigating the Doob martingale increments of \(\log \overline{{{\textbf {N}}}}_{s_\circ }\). It has proven to be useful in the study of models like random regular nae-sat, as seen in [25]. However, in the spin systems with infinitely many spins like our model, some of the key estimates in the argument become false, due to the existence of rare spins (or large free components).

Our approach blends the two techniques in a novel way to back up each other’s limitations. Although we could not algebraically derive the identities required for the small subgraph conditioning, we instead deduce them by a modified Doob martingale approach for the truncated model which has a finite spin space. Then, we send the truncation parameter to infinity on these algebraic identities, and show that they converge to the corresponding formulas for the untruncated model. This step requires a more refined understanding on the first and second moments of \(\widetilde{{{\textbf {N}}}}_{s_\circ }\) including the constant coefficient of the leading exponential term, whereas the order of the leading order was sufficient in the earlier works [25, 43]. We then appeal to the small subgraph conditioning method to deduce the conclusion based on those identities. We believe that our approach is potentially applicable to other models with an infinite spin space where the traditional small subgraph conditioning method is not tractable.

1.4 Notational conventions

For non-negative quantities \(f=f_{d,k, n}\) and \(g=g_{d,k,n}\), we use any of the equivalent notations \(f=O_{k}(g), g= \Omega _k(f), f\lesssim _{k} g\) and \(g \gtrsim _{k} f \) to indicate that for each \(k\ge k_0\),

with the convention \(0/0\equiv 1\). We drop the subscript k if there exists a universal constant C such that

When \(f\lesssim _{k} g\) and \(g\lesssim _{k} f\), we write \(f\asymp _{k} g\). Similarly when \(f\lesssim g\) and \(g\lesssim f\), we write \(f \asymp g\).

2 The Combinatorial Model

We begin with introducing the mathematical framework to analyze the clusters of solutions. We follow the formulation derived in [43, Section 2]. In [37], we needed further definitions in addition to those from [43], but in this work it is enough to rely on the concepts of [43]. In this section, we briefly review the necessary definitions for completeness.

There is a natural graphical representation to describe a d-regular k-nae-sat instance by a labelled (d, k)-regular bipartite graph: Let \(V=\{ v_1, \ldots , v_n \}\) and \(F=\{a_1, \ldots , a_m \}\) be the sets of variables and clauses, respectively. Connect \(v_i\) and \(a_j\) by an edge if \(v_i\) is one of the variables contained in the clause \(a_j\). Let \(\mathcal {G}=(V,F,E)\) be this bipartite graph, and let \(\texttt {L}_e\in \{0,1\}\) for \(e\in E\) be the literal corresponding to the edge e. Then, the labelled bipartite graph \(\mathscr {G}=(V,F,E,\underline{\texttt {L}})\equiv (V,F,E,\{\texttt {L}_{e}\}_{e\in E})\) represents a nae-sat instance.

For each \(e\in E\), we denote the variable(resp. clause) adjacent to it by v(e) (resp. a(e)). Moreover, \(\delta v\) (resp. \(\delta a\)) are the collection of adjacent edges to \(v\in V\) (resp. \(a \in F\)). We denote \(\delta v {\setminus } e:= \delta v {\setminus } \{e\}\) and \(\delta a \setminus e:= \delta a \setminus \{e\}\) for simplicity. Formally speaking, we regard E as a perfect matching between the set of half-edges adjacent to variables and those to clauses which are labelled from 1 to \(nd=mk\), and hence a permutation in \(S_{nd}\).

Definition 2.1

For an integer \(l\ge 1\) and \(\underline{{\textbf {x}}}=(\textbf{x}_i) \in \{0,1\}^l\), define

Let \(\mathscr {G}= (V,F,E,\underline{\texttt {L}})\) be a nae-sat instance. An assignment \(\underline{{\textbf {x}}}\in \{0,1\}^V\) is called a solution if

where \(\oplus \) denotes the addition mod 2. Denote \(\textsf {SOL}(\mathscr {G})\subset \{0,1\}^V\) by the set of solutions and endow a graph structure on \(\textsf {SOL}(\mathscr {G})\) by connecting \(\underline{{\textbf {x}}}\sim \underline{{\textbf {x}}}'\) if and only if they have a unit Hamming distance. Also, let \(\textsf {CL}(\mathscr {G})\) be the set of clusters, namely the connected components under this adjacency.

2.1 The frozen configuration

Our first step is to define frozen configuration which is a basic way of encoding clusters. We introduce free variable which we denote by \({\texttt {f}}\), whose Boolean addition is defined as \(\texttt {f}\oplus 0=\texttt {f}\oplus 1=\texttt {f}\). Recalling the definition of \(I^{\textsc {nae}}\) (6), a frozen configuration is defined as follows.

Definition 2.2

(Frozen configuration). For \(\mathscr {G}= (V,F,E, \underline{\texttt {L}})\), \(\underline{x}\in \{0,1,{\texttt {f}}\}^V\) is called a frozen configuration if the following conditions are satisfied:

-

No nae-sat constraints are violated for \(\underline{x}\). That is, \(I^{\textsc {nae}}(\underline{x};\mathscr {G})=1\).

-

For \(v\in V\), \(x_v\in \{0,1\}\) if and only if it is forced to be so. That is, \(x_v\in \{0,1\}\) if and only if there exists \(e\in \delta v\) such that a(e) becomes violated if \(\texttt {L}_e\) is negated, i.e., \(I^{\textsc {nae}} (\underline{x}; \mathscr {G}\oplus \mathbb {1}_e )=0\) where \(\mathscr {G}\oplus \mathbb {1}_e\) denotes \(\mathscr {G}\) with \(\texttt {L}_e\) flipped. \(x_v={\texttt {f}}\) if and only if no such \(e\in \delta v\) exists.

We record the observations which are direct from the definition. Details can be found in the previous works ([25], Section 2 and [43], Section 2).

-

(1)

We can map a nae-sat solution \(\underline{{\textbf {x}}}\in \{0,1 \}^V\) to a frozen configuration via the following coarsening algorithm: If there is a variable v such that \(\textbf{x}_v\in \{0,1\}\) and \(I^{\textsc {nae}}(\underline{{\textbf {x}}};\mathscr {G}) = I^{\textsc {nae}}(\underline{{\textbf {x}}}\oplus \mathbb {1}_v;\mathscr {G})=1\) (i.e., flipping \(\textbf{x}_v\) does not violate any clause), then set \(\textbf{x}_v = \text {{\texttt {f}}}\). Iterate this process until additional modifications are impossible.

-

(2)

All solutions in a cluster \(\Upsilon \in \textsf{CL}(\mathscr {G})\) are mapped to the same frozen configuration \(\underline{x}\equiv \underline{x}[\Upsilon ] \in \{0,1,\text {{\texttt {f}}}\}^V\). However, coarsening algorithm is not necessarily surjective. For instance, a typical instance of \(\mathscr {G}\) does not have a cluster corresponding to all-free (\(\underline{{{\textbf {x}}}}\equiv {\texttt {f}}\)).

2.2 Message configurations

Although the frozen configurations provides a representation of clusters, it does not tell us how to comprehend the size of clusters. The main obstacle in doing so comes from the connected structure of free variables which can potentially be complicated. We now introduce the notions to comprehend this issue in a tractable way.

Definition 2.3

(Separating and forcing clauses). Let \(\underline{x}\) be a given frozen configuration on \(\mathscr {G}= (V,F,E,\underline{\texttt {L}})\). A clause \(a\in F\) is called separating if there exist \(e, e^\prime \in \delta a\) such that \(\texttt {L}_{e}\oplus x_{v(e)} = 0, \quad \texttt {L}_{e^\prime } \oplus x_{v(e^\prime )}=1.\) We say \(a\in F\) is non-separating if it is not a separating clause. Moreover, \(e\in E\) is called forcing if \(\texttt {L}_{e}\oplus x_{v(e)} \oplus 1 = \texttt {L}_{e'}\oplus x_{v(e')}\in \{0,1\}\) for all \(e'\in \delta a(e) {\setminus } e\). We say \(a\in F\) is forcing, if there exists \(e\in \delta a\) which is a forcing edge. In particular, a forcing clause is also separating.

Observe that a non-separating clause must be adjacent to at least two free variables, which is a fact frequently used throughout the paper.

Definition 2.4

(Free cycles). Let \(\underline{x}\) be a given frozen configuration on \(\mathscr {G}= (V,F,E,\underline{\texttt {L}})\). A cycle in \(\mathscr {G}\) (which should be of an even length) is called a free cycle if

-

Every variable v on the cycle is \(x_v = {\texttt {f}}\);

-

Every clause a on the cycle is non-separating.

Throughout the paper, our primary interest is on the frozen configurations which does not contain any free cycles. If \(\underline{x}\) does not have any free cycle, then we can easily extend it to a nae-sat solution in \(\underline{{\textbf {x}}}\) such that \(\textbf{x}_v = x_v\) if \(x_v\in \{0,1\}\), since nae-sat problem on a tree is always solvable.

Definition 2.5

(Free trees). Let \(\underline{x}\) be a frozen configuration in \(\mathscr {G}\) without any free cycles. Consider the induced subgraph H of \(\mathscr {G}\) consisting of free variables and non-separating clauses. Each connected component of H is called free piece of \(\underline{x}\) and denoted by \(\mathfrak {t}^{\text {in}}\). For each free piece \(\mathfrak {t}^{\text {in}}\), the free tree \(\mathfrak {t}\) is defined by the union of \(\mathfrak {t}^{\text {in}}\) and the half-edges incident to \(\mathfrak {t}^{\text {in}}\).

For the pair \((\underline{x}, \mathscr {G})\), we write \(\mathscr {F}(\underline{x},\mathscr {G})\) to denote the collection of free trees inside \((\underline{x}, \mathscr {G})\). We write \(V(\mathfrak {t})=V(\mathfrak {t}^{\text {in}})\), \(F(\mathfrak {t})=F(\mathfrak {t}^{\text {in}})\) and \(E(\mathfrak {t})=E(\mathfrak {t}^{\text {in}})\) to be the collection of variables, clauses and (full-)edges in \(\mathfrak {t}\). Moreover, define \(\dot{\partial } \mathfrak {t}\) (resp. \(\hat{\partial } \mathfrak {t}\)) to be the collection of boundary half-edges that are adjacent to \(F(\mathfrak {t})\) (resp. \(V(\mathfrak {t})\)), and write \(\partial \mathfrak {t}:= \dot{\partial }\mathfrak {t}\sqcup \hat{\partial } \mathfrak {t}\)

We now introduce the message configuration, which enables us to calculate the size of a free tree (that is, number of nae-sat solutions on \(\mathfrak {t}\) that extends \(\underline{x}\)) by local quantities. The message configuration is given by \(\underline{\tau }= (\tau _e)_{e\in E} \in \mathscr {M}^E\) (\(\mathscr {M}\) is defined below). Here, \(\tau _e=(\dot{\tau }_e,\hat{\tau }_e)\), where \(\dot{\tau }\) (resp. \(\hat{\tau }\)) denotes the message from v(e) to a(e) (resp. a(e) to v(e)).

A message will carry information of the structure of the free tree it belongs to. To this end, we first define the notion of joining l trees at a vertex (either variable or clause) to produce a new tree. Let \(t_1,\ldots , t_l\) be a collection of rooted bipartite factor trees satisfying the following conditions:

-

Their roots \(\rho _1,\ldots ,\rho _l\) are all of the same type (i.e., either all-variables or all-clauses) and are all degree one.

-

If an edge in \(t_i\) is adjacent to a degree one vertex, which is not the root, then the edge is called a boundary-edge. The rest of the edges are called internal-edges. For the special case where \(t_i\) consists of a single edge and a single vertex, we regard the single edge to be a boundary-edge.

-

\(t_1,\ldots ,t_l\) are boundary-labelled trees, meaning that their variables, clauses, and internal edges are unlabelled (except we distinguish the root), but the boundary edges are assigned with values from \(\{0,1,{{\texttt {S}}}\}\), where \({{\texttt {S}}}\) stands for ‘separating’.

Then, the joined tree \(t \equiv \textsf {j}(t_1,\ldots , t_l) \) is obtained by identifying all the roots as a single vertex o, and adding an edge which joins o to a new root \(o'\) of an opposite type of o (e.g., if o was a variable, then \(o'\) is a clause). Note that \(t= \textsf {j}(t_1,\ldots ,t_l)\) is also a boundary-labelled tree, whose labels at the boundary edges are induced by those of \(t_1,\ldots ,t_l\).

For the simplest trees that consist of single vertex and a single edge, we use 0 (resp. 1) to stand for the ones whose edge is labelled 0 (resp. 1): for the case of \(\dot{\tau }\), the root is the clause, and for the case of \(\hat{\tau }\), the root is the variable. Also, if its root is a variable and its edge is labelled \({{\texttt {S}}}\), we write the tree as \({{\texttt {S}}}\).

We can also define the Boolean addition to a boundary-labelled tree t as follows. For the trees 0, 1, the Boolean-additions \(0\oplus \texttt {L}\), \(1\oplus \texttt {L}\) are defined as above (\(t\oplus \texttt {L}\)), and we define \({{\texttt {S}}}\oplus \texttt {L}= {{\texttt {S}}}\) for \(\texttt {L}\in \{0,1\}\). For the rest of the trees, \(t \oplus 0:= t\), and \(t\oplus 1\) is the boundary-labelled tree with the same graphical structure as t and the labels of the boundary Boolean-added by 1 (Here, we define \({{\texttt {S}}}\oplus 1 = {{\texttt {S}}}\) for the \({{\texttt {S}}}\)-labels).

Definition 2.6

(Message configuration). Let \(\dot{\mathscr {M}}_0:= \{0,1,\star \}\) and \(\hat{\mathscr {M}}_0:= \emptyset \). Suppose that \(\dot{\mathscr {M}}_t, \hat{\mathscr {M}}_t\) are defined, and we inductively define \(\dot{\mathscr {M}}_{t+1}, \hat{\mathscr {M}}_{t+1}\) as follows: For \(\hat{\underline{\tau }} \in (\hat{\mathscr {M}}_t)^{d-1}\), \(\dot{\underline{\tau }} \in (\dot{\mathscr {M}}_t)^{k-1}\), we write \(\{\hat{\tau }_i \}:= \{\hat{\tau }_1,\ldots ,\hat{\tau }_{d-1} \}\) and similarly for \(\{\dot{\tau }_i \}\). We define

Further, we set \(\dot{\mathscr {M}}_{t+1}:= \dot{\mathscr {M}}_t \cup \dot{T}( \hat{\mathscr {M}}_t^{d-1} ) {\setminus } \{{\texttt {z}}\}\), and \(\hat{\mathscr {M}}_{t+1}:= \hat{\mathscr {M}}_t \cup \hat{T}(\dot{\mathscr {M}}_t^{k-1} )\), and define \(\dot{\mathscr {M}}\) (resp. \(\hat{\mathscr {M}}\)) to be the union of all \(\dot{\mathscr {M}}_t\) (resp. \(\hat{\mathscr {M}}_t\)) and \(\mathscr {M}:= \dot{\mathscr {M}} \times \hat{\mathscr {M}}\). Then, a (valid) message configuration on \(\mathscr {G}=(V,F,E,\underline{\texttt {L}})\) is a configuration \(\underline{\tau }\in \mathscr {M}^E\) that satisfies (i) the local equations given by

for all \(e\in E\), and (ii) if one element of \(\{\dot{\tau }_e,\hat{\tau }_e\}\) equals \(\star \) then the other element is in \(\{0,1\}\).

In the definition, \(\star \) is the symbol introduced to cover cycles, and \({\texttt {z}}\) is an error message. See Figure 1 in Section 2 of [43] for an example of \(\star \) message.

When a frozen configuration \(\underline{x}\) on \(\mathscr {G}\) is given, we can construct a message configuration \(\underline{\tau }\) via the following procedure:

-

1.

For a forcing edge e, set \(\hat{\tau }_e=x_{v(e)}\). Also, for an edge \(e\in E\), if there exists \(e^\prime \in \delta v(e) {\setminus } e\) such that \(\hat{\tau }_{e^\prime } \in \{0,1\}\), then set \(\dot{\tau }_e=x_{v(e)}\).

-

2.

For an edge \(e\in E\), if there exist \(e_1,e_2\in \delta a(e){\setminus } e\) such that \(\{\texttt {L}_{e_1}\oplus \dot{\tau }_{e_1}, \texttt {L}_{e_2}\oplus \dot{\tau }_{e_2}\}=\{0,1\}\), then set \(\hat{\tau }_e = {{\texttt {S}}}\).

-

3.

After these steps, apply the local equations (8) recursively to define \(\dot{\tau }_e\) and \(\hat{\tau }_e\) wherever possible.

-

4.

For the places where it is no longer possible to define their messages until the previous step, set them to be \(\star \).

In fact, the following lemma shows the relation between the frozen and message configurations. We refer to [43], Lemma 2.7 for its proof.

Lemma 2.7

The mapping explained above defines a bijection

Next, we introduce a dynamic programming method based on belief propagation to calculate the size of a free tree by local quantities from a message configuration.

Definition 2.8

Let \(\mathcal {P}\{0,1\} \) denote the space of probability measures on \(\{0,1\}\). We define the mappings \(\dot{{{\texttt {m}}}}:\dot{\mathscr {M}} \rightarrow \mathcal {P}\{0,1\}\) and \(\hat{{{\texttt {m}}}}: \hat{\mathscr {M}} \rightarrow \mathcal {P}\{0,1\}\) as follows. For \(\dot{\tau }\in \{0,1\}\) and \(\hat{\tau }\in \{0,1\}\), let \(\dot{{{\texttt {m}}}}[\dot{\tau }] =\delta _{\dot{\tau }}\), \(\hat{{{\texttt {m}}}}[\hat{\tau }] = \delta _{\hat{\tau }}\). For \(\dot{\tau }\in \dot{\mathscr {M}} {\setminus } \{0,1,\star \}\) and \(\hat{\tau }\in \hat{\mathscr {M}} {\setminus } \{0,1,\star \}\), \(\dot{{{\texttt {m}}}}[\dot{\tau }]\) and \(\hat{{{\texttt {m}}}}[\hat{\tau }]\) are recursively defined:

-

Let \(\dot{\tau } = \dot{T}(\hat{\tau }_1,\ldots ,\hat{\tau }_{d-1})\), with \(\star \notin \{\hat{\tau }_i \}\). Define

$$\begin{aligned} \dot{z}[\dot{\tau }] := \sum _{\textbf{x}\in \{0,1\} } \prod _{i=1}^{d-1} \hat{{{\texttt {m}}}}[\hat{\tau }_i](\textbf{x}), \quad \dot{{{\texttt {m}}}}[\dot{\tau }](\textbf{x}) := \frac{1}{\dot{z}[\dot{\tau }]} \prod _{i=1}^{d-1} \hat{{{\texttt {m}}}}[\hat{\tau }_i](\textbf{x}). \end{aligned}$$(10)Note that these equations are well-defined, since \((\hat{\tau }_1,\ldots , \hat{\tau }_{d-1})\) are well-defined up to permutation.

-

Let \(\hat{\tau } = \hat{T} ( \dot{\tau }_1,\ldots ,\dot{\tau }_{k-1}; \texttt {L})\), with \(\star \notin \{\dot{\tau }_i \}\). Define

$$\begin{aligned} \hat{z}[\hat{\tau }] := 2-\sum _{\textbf{x}\in \{0,1\}} \prod _{i=1}^{k-1} \dot{{{\texttt {m}}}}[\dot{\tau }_i](\textbf{x}), \quad \hat{{{\texttt {m}}}}[\hat{\tau }](\textbf{x}) := \frac{1}{\hat{z}[\hat{\tau }]} \left\{ 1- \prod _{i=1}^{k-1} \dot{{{\texttt {m}}}}[\dot{\tau }_i](\textbf{x}) \right\} . \end{aligned}$$(11)Similarly as above, these equations are well-defined.

Moreover, observe that inductively, \(\dot{{{\texttt {m}}}}[\dot{\tau }], \hat{{{\texttt {m}}}}[\hat{\tau }] \) are not Dirac measures unless \(\dot{\tau }, \hat{\tau }\in \{0,1\}\).

It turns out that \(\dot{{{\texttt {m}}}}[\star ], \hat{{{\texttt {m}}}}[\star ]\) can be arbitrary measures for our purpose, and hence we assume that they are uniform measures on \(\{0,1\}\).

The Eqs. (10) and (11) are known as belief propagation equations. We refer the detailed explanation to [43], Section 2 where the same notions are introduced, or to [33], Chapter 14 for more fundamental background. From these quantities, we define the following local weights which are going to lead us to computation of cluster sizes.

where the last identities in the last two lines hold for any choices of i. These weight factors can be used to derive the size of a free tree. Let \(\mathfrak {t}\) be a free tree in \(\mathscr {F}(\underline{x},\mathscr {G})\), and let \(w^{\text {lit}} (\mathfrak {t}; \underline{x},\mathscr {G})\) be the number of nae-sat solutions that extend \(\underline{x}\) to \(\{0,1\}^{V(\mathfrak {t})}\). Further, let \(\textsf {size}(\underline{x},\mathscr {G})\) denote the total number of nae-sat solutions that extend \(\underline{x}\) to \(\{0,1\}^V.\)

Lemma 2.9

([43], Lemma 2.9 and Corollary 2.10; [33], Ch. 14). Let \(\underline{x}\) be a frozen configuration on \(\mathscr {G}=(V,F,E,\underline{\texttt {L}})\) without any free cycles, and \(\underline{\tau }\) be the corresponding message configuration. For a free tree \(\mathfrak {t}\in \mathscr {F}(\underline{x};\mathscr {G})\), we have that

Furthermore, let \(\Upsilon \in \textsf {CL}(\mathscr {G})\) be the cluster corresponding to \(\underline{x}\). Then, we have

2.3 Colorings

In this subsection, we introduce the coloring configuration, which is a simplification of the message configuration. We give its definition analogously as in [43].

Recall the definition of \(\mathscr {M}=\dot{\mathscr {M}}\times \hat{\mathscr {M}}, \) and let \(\{ {\texttt {F}}\} \subset \mathscr {M}\) be defined by \(\{{\texttt {F}}\}:= \{\tau \in \mathscr {M}: \, \dot{\tau } \notin \{ 0,1,\star \} \text { and } \hat{\tau }\notin \{ 0,1,\star \} \}\).

Note that \(\{{\texttt {F}}\}\) corresponds to the messages on the edges of free trees, except the boundary edges labelled either 0 or 1.

Define \(\Omega := \{{{{\texttt {R}}}}_0, {{{\texttt {R}}}}_1, {{{\texttt {B}}}}_0, {{{\texttt {B}}}}_1\} \cup \{{\texttt {F}}\}\) and let \(\textsf {S}: \mathscr {M}\setminus \{(\star ,\star )\} \rightarrow \Omega \) be the projections given by

Here, we note that a (valid) message configuration \(\underline{\tau }=(\tau _e)_{e\in E} \in \mathscr {M}^{E}\) cannot have an edge e such that \(\tau _e=(\star ,\star )\) (see Definition 2.6), thus we may safely exclude the spin \((\star ,\star )\) from \(\mathscr {M}\).

For convenience, we abbreviate \(\{{{{\texttt {R}}}}\}= \{{{{\texttt {R}}}}_0, {{{\texttt {R}}}}_1 \}\) and \(\{{{{\texttt {B}}}}\} = \{{{{\texttt {B}}}}_0, {{{\texttt {B}}}}_1 \}\), and define the Boolean addition as \({{{\texttt {B}}}}_\textbf{x}\oplus \texttt {L}:= {{{\texttt {B}}}}_{\textbf{x}\oplus \texttt {L}}\), and similarly for \({{{\texttt {R}}}}_\textbf{x}\). Also, for \(\sigma \in \{ {{{\texttt {R}}}},{{{\texttt {B}}}},{{\texttt {S}}}\}\), we set \(\dot{\sigma }:= \sigma =:\hat{\sigma }\).

Definition 2.10

(Colorings). For \(\underline{\sigma }\in \Omega ^d\), let

Also, define \( \hat{I}^{\text {lit}}: \Omega ^k \rightarrow \mathbb {R}\) to be

On a nae-sat instance \(\mathscr {G}= (V,F,E,\underline{\texttt {L}})\), \(\underline{\sigma }\in \Omega ^E\) is a (valid) coloring if \(\dot{I}(\underline{\sigma }_{\delta v})=\hat{I}^{\text {lit}}((\underline{\sigma }\oplus \underline{\texttt {L}})_{\delta a}) =1 \) for all \(v\in V, a\in F\).

Given nae-sat instance \(\mathscr {G}\), it was shown in Lemma 2.12 of [43] that there is a bijection

The weight elements for coloring, denoted by \(\dot{\Phi }, \hat{\Phi }^{\text {lit}}, \bar{\Phi }\), are defined as follows. For \(\underline{\sigma }\in \Omega ^d,\) let

For \(\underline{\sigma }\in \Omega ^k\), let

(If \(\sigma \notin \{{{{\texttt {R}}}}\}, \) then \(\dot{\tau }(\sigma _i)\) is well-defined.)

Lastly, let

Note that if \(\hat{\sigma }={{\texttt {S}}}\), then \(\bar{\varphi }(\dot{\sigma },\hat{\sigma })=2\) for any \(\dot{\sigma }\). The rest of the details explaining the compatibility of \(\varphi \) and \(\Phi \) can be found in [43], Section 2.4. Then, the formula for the cluster size we have seen in Lemma 2.9 works the same for the coloring configuration.

Lemma 2.11

([43], Lemma 2.13). Let \(\underline{x}\in \{0,1,\text {{\texttt {f}}}\}^V\) be a frozen configuration on \(\mathscr {G}=(V,F,E,\underline{\texttt {L}})\), and let \(\underline{\sigma }\in \Omega ^E\) be the corresponding coloring. Define

Then, we have \(\textsf {size}(\underline{x};\mathscr {G}) = w_\mathscr {G}^{\text {lit}}(\underline{\sigma })\).

Among the valid frozen configurations, we can ignore the contribution from the configurations with too many free or red colors, as observed in the following lemma.

Lemma 2.12

([25] Proposition 2.2, [43] Lemma 3.3). For a frozen configuration \(\underline{x}\in \{0,1,\text {{\texttt {f}}}\}^{V}\), let \({{{\texttt {R}}}}(\underline{x})\) count the number of forcing edges and \(\text {{\texttt {f}}}(\underline{x})\) count the number of free variables. There exists an absolute constant \(c>0\) such that for \(k\ge k_0\), \(\alpha \in [\alpha _{\textsf {lbd}}, \alpha _{\textsf {ubd}}]\), and \(\lambda \in (0,1]\),

where \(\textsf {size}(\underline{x};\mathscr {G})\) is the number of nae-sat solutions \(\underline{{\textbf {x}}}\in \{0,1\}^{V}\) which extends \(\underline{x}\in \{0,1,\text {{\texttt {f}}}\}^{V}\).

Thus, our interest is in counting the number of frozen configurations and colorings such that the fractions of red edges and the fraction of free variables are bounded by \(7/2^k\). To this end, we define

where \({{{\texttt {R}}}}(\underline{\sigma })\) count the number of red edges and \(\text {{\texttt {f}}}(\underline{\sigma })\) count the number of free variables. The superscript \(\text {tr}\) is to emphasize that the above quantities count the contribution from frozen configurations which only contain free trees, i.e. no free cycles (Recall that by Lemma 2.7 and (14), the space of coloring has a bijective correspondence with the space of frozen configurations without free cycles). Similarly, recalling the definition of \(\overline{\text {{\textbf {N}}}}_s\) in (3), total number of clusters of size in \([e^{ns},e^{ns+1})\), \({{\textbf {N}}}_s\) is defined to be

Hence, \(e^{-n\lambda s-\lambda }{{\textbf {Z}}}_{\lambda ,s}\le {{\textbf {N}}}_{s}\le e^{-n\lambda s} {{\textbf {Z}}}_{\lambda ,s}\) holds.

Definition 2.13

(Truncated colorings). Let \(1\le L< \infty \), \(\underline{x}\) be a frozen configuration on \(\mathscr {G}\) without free cycles and \(\underline{\sigma }\in \Omega ^E\) be the coloring corresponding to \(\underline{x}\). Recalling the notation \(\mathscr {F}(\underline{x};\mathscr {G})\) (Definition 2.5), we say \(\underline{\sigma }\) is a (valid) L-truncated coloring if \(|V(\mathfrak {t})| \le L\) for all \(\mathfrak {t}\in \mathscr {F}(\underline{x};\mathscr {G})\). For an equivalent definition, let \(|\sigma |:=v(\dot{\sigma })+v(\hat{\sigma })-1\) for \(\sigma \in \{{\texttt {F}}\}\), where \(v(\dot{\sigma })\) (resp. \(v(\hat{\sigma })\)) denotes the number of variables in \(\dot{\sigma }\) (resp. \(\hat{\sigma }\)). Define \(\Omega _L:= \{{{{\texttt {R}}}},{{{\texttt {B}}}}\}\cup \{{\texttt {F}}\}_L\), where \(\{{\texttt {F}}\}_L\) is the collection of \(\sigma \in \{{\texttt {F}}\}\) such that \(|\sigma | \le L\). Then, \(\underline{\sigma }\) is a (valid) L-truncated coloring if \(\underline{\sigma }\in \Omega _L^E\).

To clarify the names, we often call the original coloring \(\underline{\sigma }\in \Omega ^E\) the untruncated coloring.

Analogous to (15), define the truncated partition function

2.4 Averaging over the literals

Let \(\mathscr {G}=(V,F,E,\underline{\texttt {L}})\) be a nae-sat instance and \(\mathcal {G}=(V,F,E)\) be the factor graph without the literal assignment. Let \(\mathbb {E}^{\text {lit}}\) denote the expectation over the literals \(\underline{\texttt {L}}\sim \text {Unif} [\{0,1\}^E]\). Then, for a coloring \(\underline{\sigma }\in \Omega ^{E}\), we can use Lemma 2.11 to write \(\mathbb {E}^{\text {lit}}[w_\mathscr {G}^{\text {lit}}(\underline{\sigma }) ]\) as

To this end, define

We now recall a property of \(\hat{\Phi }^{\text {lit}}\) from [43], Lemma 2.17:

Lemma 2.14

([43], Lemma 2.17). \(\hat{\Phi }^{\text {lit}}\) can be factorized as \(\hat{\Phi }^{\text {lit}}(\underline{\sigma }\oplus \underline{\texttt {L}}) = \hat{I}^{\text {lit}}(\underline{\sigma }\oplus \underline{\texttt {L}}) \hat{\Phi }^{\text {m}}(\underline{\sigma })\) for

As a consequence, we can write \(\hat{\Phi }(\underline{\sigma })^\lambda = \hat{\Phi }^{\text {m}}(\underline{\sigma })^\lambda \hat{v}(\underline{\sigma })\), where

2.5 Empirical profile of colorings

The coloring profile, defined below, was introduced in [43]. Hereafter, \(\mathscr {P}(\mathfrak {X})\) denotes the space of probability measures on \(\mathfrak {X}\).

Definition 2.15

(Coloring profile and the simplex of coloring profile, Definition 3.1 and 3.2 of [43]). Given a nae-sat instance \(\mathscr {G}\) and a coloring configuration \(\underline{\sigma }\in \Omega ^E \), the coloring profile of \(\underline{\sigma }\) is the triple \(H[\underline{\sigma }]\equiv H\equiv (\dot{H},\hat{H},\bar{H}) \) defined as follows.

A valid H must satisfy the following compatibility equation:

The simplex of coloring profile \(\varvec{\Delta }\) is the space of triples \(H=(\dot{H},\hat{H},\bar{H})\) which satisfies the following conditions:

-

\(\dot{H} \in \mathscr {P}(\text {supp}\,\dot{\Phi }), \hat{H} \in \mathscr {P}(\text {supp}\,\hat{\Phi })\) and \(\bar{H} \in \mathscr {P}(\Omega )\).

-

\(\dot{H},\hat{H}\) and \(\bar{H}\) satisfy (18).

-

Recalling the definition of \(\text {{\textbf {Z}}}_{\lambda }\) in (15), \(\dot{H},\hat{H}\) and \(\bar{H}\) satisfy \(\max \{\bar{H}({\texttt {f}}),\bar{H}({{{\texttt {R}}}})\} \le \frac{7}{2^k}\).

For \(L <\infty \), we let \(\varvec{\Delta }^{(L)}\) be the subspace of \(\varvec{\Delta }\) satisfying the following extra condition:

-

\(\dot{H} \in \mathscr {P}(\text {supp}\,\dot{\Phi }\cap \Omega _{L}^{d}), \hat{H} \in \mathscr {P}(\text {supp}\,\hat{\Phi }\cap \Omega _L^{k})\) and \(\bar{H} \in \mathscr {P}(\Omega _L)\).

Given a coloring profile \(H\in \varvec{\Delta }\), denote \({{\textbf {Z}}}_{\lambda }^{\text{ tr }}[H]\) by the contribution to \({{\textbf {Z}}}_{\lambda }^{\text{ tr }}\) from the coloring configurations whose coloring profile is H. That is, \({{\textbf {Z}}}_\lambda ^{\text{ tr }}[H]\!:=\! \sum _{\underline{\sigma }:\; H[\underline{\sigma }] = H} w^{\text{ lit }}(\underline{\sigma })^\lambda \). For \(H \in \varvec{\Delta }^{(L)}\), \({{\textbf {Z}}}^{(L),\text{ tr }}_{\lambda }[H]\) is analogously defined. In [43], they showed that \(\mathbb {E}{{\textbf {Z}}}^{(L),\text{ tr }}_\lambda [H]\) for the L-truncated coloring model can be written as the following formula, which is a result of Stirling’s approximation:

Similar to \(F_{\lambda ,L}(H)\) for \(H\in \varvec{\Delta }^{(L)}\), the untruncated free energy \(F_{\lambda }(H)\) for \(H\in \varvec{\Delta }\) is defined by the same equation \(F_{\lambda }(H):=\Sigma (H)+\lambda s(H)\).

2.6 Belief propagation fixed point and optimal profiles

It was proven in [43] that the truncated free energy \(F_{\lambda ,L}(H)\) is maximized at the optimal profile \(H^\star _{\lambda ,L}\), defined in terms of Belief Propagation(BP) fixed point. In this subsection, we review the necessary notions to define \(H^\star _{\lambda ,L}\) (cf. Section 5 of [43]). To do so, we first define the BP functional: for probability measures \(\dot{\textbf{q}},\hat{\textbf{q}}\in \mathscr {P}(\Omega _L)\), where \(L<\infty \), let

where \(\sigma \in \Omega _L\) and \(\cong \) denotes equality up to normalization, so that the output is a probability measure. Now, restrict the domain to the probability measures with one-sided dependence, i.e. satisfying \(\dot{\textbf{q}}(\sigma )=\dot{f}(\dot{\sigma })\) and \(\hat{\textbf{q}}(\sigma )=\hat{f}(\hat{\sigma })\) for some \(\dot{f}:\dot{\Omega }_L\rightarrow \mathbb {R}_{\ge 0}\) and \(\hat{f}:\hat{\Omega }_L\rightarrow \mathbb {R}_{\ge 0}\). It can be checked that \(\dot{\textbf{B}}_{1,\lambda }, \hat{\textbf{B}}_{1,\lambda }\) preserve the one-sided property, inducing

More precisely, for \(\hat{q}\in \mathscr {P}(\hat{\Omega }_L)\) and \(\dot{q} \in \mathscr {P}(\dot{\Omega }_L)\), define the probability measures \(\dot{\text {BP}}_{\lambda ,L}(\hat{q})\in \mathscr {P}(\dot{\Omega }_L)\) and \(\hat{\text {BP}}_{\lambda ,L}(\dot{q})\in \mathscr {P}(\hat{\Omega }_L)\) as follows. For \(\dot{\sigma }\in \dot{\Omega }_L\) and \(\hat{\sigma }\in \hat{\Omega }_L\), let

where \(\hat{\sigma }^\prime \in \hat{\Omega }_L\) and \(\dot{\sigma }^\prime \in \dot{\Omega }_L\) are arbitrary with the only exception that when \(\dot{\sigma }\in \{{{{\texttt {R}}}},{{{\texttt {B}}}}\}\) (resp. \(\hat{\sigma }\in \{{{{\texttt {R}}}},{{{\texttt {B}}}}\}\)), then we take \(\hat{\sigma }^\prime = \dot{\sigma }\) (resp. \(\dot{\sigma }^\prime = \hat{\sigma }\)) so that the RHS above is non-zero. From the definition of \(\dot{\Phi },\hat{\Phi }\), and \(\bar{\Phi }\), it can be checked that the choices of \(\hat{\sigma }^\prime \in \hat{\Omega }_L\) and \(\dot{\sigma }^\prime \in \dot{\Omega }_L\) do not affect the values of the RHS above (see (12)). The normalizing constants \(\dot{\mathscr {Z}}_{\hat{q}}\) and \(\hat{\mathscr {Z}}_{\dot{q}}\) are given by

Here, \(\hat{\sigma }^\prime \in \hat{\Omega }_L\) and \(\dot{\sigma }^\prime \in \dot{\Omega }_L\) are again arbitrary. We then define the Belief Propagation functional by \(\text {BP}_{\lambda ,L}:= \dot{\text {BP}}_{\lambda ,L}\circ \hat{\text {BP}}_{\lambda ,L}\). The untruncated BP map, which we denote by \(\text {BP}_{\lambda }:\mathscr {P}(\dot{\Omega }) \rightarrow \mathscr {P}(\dot{\Omega })\), is analogously defined, where we replace \(\dot{\Omega }_L\)(resp. \(\hat{\Omega }_L\)) with \(\dot{\Omega }\)(resp. \(\hat{\Omega }\)).

Remark 2.16

In defining the untruncated BP map, note that \(\dot{\Omega }\) and \(\hat{\Omega }\) are not a finite set, thus the normalizing constant, analogue of (22), may be infinite. However, from the definitions of \(\dot{\Phi },\hat{\Phi }\), and \(\bar{\Phi }\), we have that \(\bar{\Phi }(\sigma _1)\dot{\Phi }(\underline{\sigma })\le 2\) and \(\bar{\Phi }(\tau _1)\dot{\Phi }(\underline{\tau })\le 2\) for \(\underline{\sigma }=(\sigma _1,\ldots ,\sigma _d) \in \Omega ^{d}\) and \(\underline{\tau }=(\tau _1,\ldots ,\tau _k) \in \Omega ^k\). Thus, it follows that the normalizing constants for the untruncated BP map are at most 2. We also remark that \(\underline{\sigma }=((\dot{\sigma }_1,\hat{\sigma }_1),\ldots ,(\dot{\sigma }_d,\hat{\sigma }_d))\in \Omega ^{d}\) such that \(\dot{\Phi }(\underline{\sigma })\ne 0\) is fully determined by \((\dot{\sigma }_1,\hat{\sigma }_1)\) and \(\hat{\sigma }_2,\ldots ,\hat{\sigma }_d\). Thus, the second sum \(\underline{\sigma }\in \Omega _L^d\) in the definition of \(\dot{\mathscr {Z}}_{\hat{q}}\) in (22) can be replaced with the sum over \(\sigma _1\in \Omega , \hat{\sigma }_2,\ldots , \hat{\sigma }_d\in \hat{\Omega }\). The analogous remark holds for the \(\hat{\mathscr {Z}}_{\dot{q}}\) and for the untruncated model.

Let \(\mathbf {\Gamma }_C\) be the set of \(\dot{q} \in \mathscr {P}(\dot{\Omega })\) such that

where \(\{{{{\texttt {R}}}}\}\equiv \{{{{\texttt {R}}}}_0,{{{\texttt {R}}}}_1\}\) and \(\{{{{\texttt {B}}}}\}\equiv \{{{{\texttt {B}}}}_0,{{{\texttt {B}}}}_1\}\). The proposition below shows that the BP map contracts in the set \(\mathbf {\Gamma }_C\) for large enough C, which guarantees the existence of Belief Propagation fixed point.

Proposition 2.17

(Proposition 5.5 item a,b of [43]). For \(\lambda \in [0,1]\), the following holds:

-

1.

There exists a large enough universal constant C such that the map \(\text {BP}\equiv \text {BP}_{\lambda ,L}\) has a unique fixed point \(\dot{q}^\star _{\lambda ,L}\in \mathbf {\Gamma }_C\). Moreover, if \(\dot{q}\in \mathbf {\Gamma }_C\), \(\text {BP}\dot{q}\in \mathbf {\Gamma }_C\) holds with

$$\begin{aligned} ||\text {BP}\dot{q}-\dot{q}^\star _{\lambda ,L}||_1\lesssim k^2 2^{-k}||\dot{q}-\dot{q}^\star _{\lambda ,L}||_1. \end{aligned}$$(24)The same holds for the untruncated BP, i.e. \(\text {BP}_{\lambda }\), with fixed point \(\dot{q}^\star _{\lambda }\in \Gamma _C\). \(\dot{q}^\star _{\lambda ,L}\) for large enough L and \(\dot{q}^\star _{\lambda }\) have full support in their domains.

-

2.

In the limit \(L \rightarrow \infty \), \(||\dot{q}^\star _{\lambda ,L}-\dot{q}^\star _{\lambda }||_1 \rightarrow 0\).

For \(\dot{q} \in \mathscr {P}(\dot{\Omega })\), denote \(\hat{q}\equiv \hat{\text {BP}}\dot{q}\), and define \(H_{\dot{q}}=(\dot{H}_{\dot{q}},\hat{H}_{\dot{q}}, \bar{H}_{\dot{q}})\in \varvec{\Delta }\) by

where \(\dot{\mathfrak {Z}}\equiv \dot{\mathfrak {Z}}_{\dot{q}},\hat{\mathfrak {Z}}\equiv \hat{\mathfrak {Z}}_{\dot{q}}\) and \(\bar{\mathfrak {Z}}\equiv \bar{\mathfrak {Z}}_{\dot{q}}\) are normalizing constants.

Definition 2.18

(Definition 5.6 of [43]). The optimal coloring profile for the truncated model and the untruncated model is the tuple \(H^\star _{\lambda ,L}=(\dot{H}^\star _{\lambda ,L},\hat{H}^\star _{\lambda ,L},\bar{H}^\star _{\lambda ,L})\) and \(H^\star _{\lambda }=(\dot{H}^\star _{\lambda },\hat{H}^\star _{\lambda },\bar{H}^\star _{\lambda })\), defined respectively by \( H^\star _{\lambda ,L}:= H_{\dot{q}^\star _{\lambda ,L}}\) and \(H^\star _{\lambda }:=H_{\dot{q}^\star _{\lambda }}\).

Definition 2.19

For \(k\ge k_0,\alpha \in (\alpha _{\textsf {cond}}, \alpha _{\textsf {sat}})\) and \(\lambda \in [0,1]\), define the optimal \(\lambda \)-tilted truncated weight \(s^\star _{\lambda ,L}\equiv s^\star _{\lambda ,L}(\alpha ,k)\) and untruncated weight \(s^\star _{\lambda } \equiv s^\star _{\lambda }(\alpha ,k)\) by

Then, define the optimal tilting constants \(\lambda ^\star _L\equiv \lambda ^\star _L(\alpha ,k)\) and \(\lambda ^\star \equiv \lambda ^\star (\alpha , k)\) by

Finally, we define \(s^\star _L\equiv s^\star _L(\alpha ,k),s^\star \equiv s^\star (\alpha ,k)\) and \(c^\star \equiv c^\star (\alpha ,k)\) by

We remark that \(s^\star = \textsf {f}^{1\textsf {rsb}}(\alpha )\) and \(\lambda ^\star \in (0,1)\) holds for \(\alpha \in (\alpha _{\textsf {cond}}, \alpha _{\textsf {sat}})\) (see Proposition 1.4 of [43]).

To end this section, we define the optimal coloring profile in the second moment (cf. Definition 5.6 of [43]). Define the analogue of \((\dot{\Phi },\hat{\Phi },\bar{\Phi })\) in the second moment \((\dot{\Phi }_2,\hat{\Phi }_2,\bar{\Phi }_2)\) by \(\dot{\Phi }_2:=\dot{\Phi }\otimes \dot{\Phi }\), \(\bar{\Phi }_2:=\bar{\Phi }\otimes \bar{\Phi }\) and

Then, the BP map in the second moment  is defined by replacing \((\dot{\Phi },\hat{\Phi },\bar{\Phi })\) in (20) by \((\dot{\Phi }_2,\hat{\Phi }_2,\bar{\Phi }_2)\). Moreover, analogous to (25), define

is defined by replacing \((\dot{\Phi },\hat{\Phi },\bar{\Phi })\) in (20) by \((\dot{\Phi }_2,\hat{\Phi }_2,\bar{\Phi }_2)\). Moreover, analogous to (25), define  for \(\dot{q}\in \mathscr {P}\big ((\dot{\Omega }_L)^2\big )\) by replacing \((\dot{\Phi },\hat{\Phi },\bar{\Phi })\) in (25) by \((\dot{\Phi }_2,\hat{\Phi }_2,\bar{\Phi }_2)\). Here,

for \(\dot{q}\in \mathscr {P}\big ((\dot{\Omega }_L)^2\big )\) by replacing \((\dot{\Phi },\hat{\Phi },\bar{\Phi })\) in (25) by \((\dot{\Phi }_2,\hat{\Phi }_2,\bar{\Phi }_2)\). Here,  and

and  .

.

Definition 2.20

(Definition 5.6 of [43]). The optimal coloring profile in the second moment for the truncated model is the tuple \(H^{\bullet }_{\lambda ,L}=(\dot{H}^{\bullet }_{\lambda ,L},\hat{H}^{\bullet }_{\lambda ,L},\bar{H}^{\bullet }_{\lambda ,L})\) defined by  .

.

3 Proof Outline

Recall that \({{\textbf {N}}}_s^{\text{ tr }}\equiv {{\textbf {Z}}}_{0,s}^{\text{ tr }}\) counts the number of valid colorings with weight between \(e^{ns}\) and \(e^{ns+1}\), which do not contain a free cycle. Also, recalling the constant \(s_\circ (C)\equiv s_\circ (n,\alpha ,C)\) in (4). It was shown in [37] that for fixed \(C\in \mathbb {R}\), \(\mathbb {E}{{\textbf {N}}}^{\text{ tr }}_{s_{\circ }(C)}\asymp _{k} e^{\lambda ^\star C}\) holds and we have the following:

Hence, the Cauchy-Schwarz inequality shows that there is a constant \(C_k<1\), which only depends on \(\alpha \) and k, such that for \(C>0\),

The remaining work is to push this probability close to 1. The key to proving Theorem 1.1 and 1.4 is the following theorem.

Theorem 3.1

Let \(k\ge k_0\), \(\alpha \in (\alpha _{\textsf {cond}}, \alpha _{\textsf {sat}})\), and set \(\lambda ^\star , s^\star \) as in Definition 2.19. For every \(\varepsilon >0\), there exists \(C(\varepsilon ,\alpha ,k)>0\) and \(\delta \equiv \delta (\varepsilon ,\alpha ,k)>0\) such that we have for \(n\ge n_0(\varepsilon ,\alpha ,k)\) and \(C\ge C(\varepsilon ,\alpha ,k)\),

where \(s_\circ (C)\equiv s_\circ (n,\alpha ,C)\equiv s^\star -\frac{ \log n}{2\lambda ^\star n} - \frac{C}{n}\).

Theorem 3.1 easily implies Theorems 1.1 and 1.4: in [37, Remark 6.11], we have already shown that Theorem 3.1 implies Theorem 1.4, so we are left to prove Theorem 1.1.

Proof of Theorem 1.1

By Theorem 3.22 of [37], \(\mathbb {E}{{\textbf {N}}}^{\text{ tr }}_{s_{\circ }(C)}\asymp e^{\lambda ^\star C}\), so Theorem 3.1 implies Theorem 1.1-(b). Hence, it remains to prove Theorem 1.1-(a). Fix \(\varepsilon >0\) throughout the proof. By Theorem 3.1, there exists \(C_1\equiv C_1(\varepsilon ,\alpha ,k)\) such that

Note that on the event \({{\textbf {N}}}_{s_\circ (C_1)}^{\text{ tr }}>0\), we have

where Z denotes the number of nae-sat solutions in \(\mathscr {G}\). Moreover, it was shown in [37, Theorem 1.1-(a)] that for \(C_2\le n^{1/5}\), we have

where \(C_k\) is a constant that depends only on k and the sum in the lhs is for \(s\in n^{-1}\mathbb {Z}\). Therefore, by Markov’s inequality, we can choose \(C_2\equiv C_2(\varepsilon ,\alpha ,k)\) to be large enough so that

Furthermore, by Theorem 1.1-(a) of [37], there exists \(C_3\equiv C_3(\varepsilon ,\alpha ,k)\) such that

Finally, Theorem 3.24 and Proposition 3.25 of [37] show that for \(|C|\le n^{1/4}\), \(\mathbb {E}{{\textbf {N}}}_{s_{\circ }(C)}\asymp _{k}e^{-\lambda ^\star C}\) holds. Thus, we can choose \(K\equiv K(\varepsilon ,\alpha ,k)\in \mathbb {N}\) large enough so that

Therefore, by (30)–(33), the conclusion of Theorem 1.1-(a) holds with \(K=K(\varepsilon ,\alpha ,k)\). \(\square \)

3.1 Outline of the proof of Theorem 3.1

In this subsection, we discuss the outline of the proof of Theorem 3.1. We begin with a natural way of characterizing cycles in \(\mathscr {G}= (\mathcal {G}, \underline{\texttt {L}})\) which was also used in [20].

Definition 3.2

(\(\zeta \)-cycle). Let \(l>0\) be an integer and for each \({\zeta }\in \{0,1\}^{2l}\), a \(\zeta \)-cycle in \(\mathscr {G}=(\mathcal {G},\underline{\texttt {L}})\) consists of

which satisfies the following conditions:

-

\(v_1,\ldots ,v_l\in [n]\equiv V\) are distinct variables, and for each \(i\in [l]\), \(e_{v_i}^0\) and \(e_{v_i}^1\) are distinct half-edges attached to \(v_i\).

-

\(a_1,\ldots ,a_l\in [m]\equiv F\) are distinct clauses, and for each \(i\in [l]\), \(e_{a_i}^0\) and \(e_{a_i}^1\in [k]\) are distinct half-edges attached to \(a_i\). Moreover,

$$\begin{aligned} a_1 = \min \{a_i:i\in [l] \}, \quad \text {and} \quad e_{a_1}^0<e_{a_1}^1. \end{aligned}$$(34) -

\((e_{v_i}^1,e_{a_{i+1}}^0)\) and \((e_{a_i}^1,e_{v_i}^0)\) are edges in \(\mathcal {G}\) for each \(i\in [l]\). (\(a_{l+1}=a_1\))

-

The literal on the half-edge \(\texttt {L}({e_{a_i}^j})\) is given by \(\texttt {L}({e_{a_i}^j}) = \zeta _{2(i-1)+j }\) for each \(i\in [l]\) and \(j\in \{0,1\}\). (\(\zeta _0=\zeta _{2l}\))

Note that (34) is introduced in order to prevent overcounting. Also, we denote the size of \(\zeta \) by \(||\zeta ||\), defined as

Furthermore, we denote by \(X({\zeta })\) the number of \(\zeta \)-cycles in \(\mathscr {G}=(\mathcal {G},\underline{\texttt {L}})\). For \(\zeta \in \{0,1\}^{2\,l}\), it is not difficult to see that

Moreover, \(\{X({\zeta })\}\) are asymptotically jointly independent in the sense that for any \(l_0>0\),

Both (36) and (37) follow from an application of the method of moments (e.g., see Theorem 9.5 in [29]). Given these definitions and properties, we are ready to state the small subgraph conditioning method, appropriately adjusted to our setting.

Theorem 3.3

(Small subgraph conditioning [39, 40]). Let \(\mathscr {G}= (\mathcal {G}, \underline{\texttt {L}})\) be a random d-regular k-nae-sat instance and let \(X({\zeta })\equiv X({\zeta ,n})\) be the number of \(\zeta \)-cycles in \(\mathscr {G}\) with \(\mu ({\zeta })\) given as (36). Suppose that a random variable \(Z_n\equiv Z_n(\mathscr {G})\) satisfies the following conditions:

-

(a)

For each \(l\in \mathbb {N}\) and \(\zeta \in \{0,1\}^{2l}\), the following limit exists:

$$\begin{aligned} 1+ \delta ({\zeta }) \equiv \lim _{n \rightarrow \infty } \frac{\mathbb {E}[ Z_nX({\zeta })]}{\mu ({\zeta })\mathbb {E}Z_n }. \end{aligned}$$(38)Moreover, for each \(a,l\in \mathbb {N}\) and \(\zeta \in \{0,1 \}^{2\,l}\), we have

$$\begin{aligned} \lim _{n\rightarrow \infty } \frac{\mathbb {E}[Z_n (X(\zeta ))_a] }{ \mathbb {E}Z_n} = (1+\delta (\zeta ))^a \mu (\zeta )^a, \end{aligned}$$where \((b)_a\) denotes the falling factorial \((b)_a:= b(b-1)\cdots (b-a+1)\).

-

(b)

The following limit exists:

$$\begin{aligned} C\equiv \lim _{n \rightarrow \infty } \frac{\mathbb {E}Z_n^2}{(\mathbb {E}Z_n)^2}. \end{aligned}$$ -

(c)

We have \(\sum _{l=1}^\infty \sum _{\zeta \in \{0,1\}^{2\,l}} \mu ({\zeta }) \delta ({\zeta })^2 <\infty .\)

-

(d)

Moreover, the constant C satisfies \(C\le \exp \left( \sum _{l=1}^\infty \sum _{\zeta \in \{0,1\}^{2\,l}} \mu ({\zeta }) \delta ({\zeta })^2 \right) \).

Then, we have the following conclusion:

where \(\bar{X}({\zeta })\) are independent Poisson random variables with mean \(\mu ({\zeta })\).

We briefly explain a way to understand the crux of the theorem as follows. Since \(\{X({\zeta })\}\) jointly converges to \(\{\bar{X}({\zeta })\}\), it is not hard to see that

using the conditions (a),(b),(c),(d) (e.g. see Theorem 9.12 in [29] and its proof). Therefore, conditions (b) and (d) imply that the conditional variance of \(Z_n\) given \(\{X({\zeta })\}\) is negligible compared to \((\mathbb {E}Z_n)^2\), and hence the distribution of \(Z_n\) is asymptotically the same as that of \(\mathbb {E}\big [Z_n\big | \{X({\zeta })\}\big ]\) as addressed in the conclusion of the theorem.

Having Theorem 3.3 in mind, our goal is to (approximately) establish the four assumptions for (a truncated version of) \({{\textbf {Z}}}_{\lambda ,s_\circ (C)}^{\text{ tr }},\) for \(s_{\circ }(C)\equiv s^\star -\frac{\log n}{2\lambda ^\star n}-\frac{C}{n}\). The condition (b) has already been obtained from the moment analysis from [37]. The condition (a) will be derived in Proposition 4.1 below and (c) will be derived in Lemma 4.6 below. The condition (d), however, will be true only in an approximate sense, where the approximation error becomes smaller when we take the constant C larger because of within-cluster correlations.

In the previous works [27, 28, 39, 40, 42], the condition (d) could be obtained through a direct calculation of the second moment in a purely combinatorial way. However, this approach seems to be intractable in our model; for instance, the main contributing empirical measure to the first moment \(H^\star _{\lambda }\) barely has combinatorial meaning.

Instead, we first establish (39) for the L-truncated model, by showing the concentration of the rescaled partition function, introduced in (40) below. The truncated model will be easier to work with since it has a finite spin space unlike the untruncated model. Then, we rely on the convergence results regarding the leading constants of first and second moments, derived in [37], to deduce (d) for the untruncated model in an approximate sense. We then apply ideas behind the proof of Theorem 3.3 to deduce Theorem 3.1 (for details, see Sect. 6).

We now give a more precise description on how we establish (d) for the truncated model. Let \(1\le L,l_0 <\infty \) and \(\lambda \in (0,\lambda ^\star _L)\), where \(\lambda ^\star _L\) is defined in Definition 2.19. Then, define the rescaled partition function \({{\textbf {Y}}}^{(L)}_{\lambda , l_0}\)

Here, \(\delta _{L}(\zeta )\) is the constant defined in (38) for \(Z_n = {{\textbf {Z}}}_{\lambda }^{(L),\text{ tr }}\), assuming its existence. The precise definition of \(\delta _{L}(\zeta )\) is given in (45). The reason to consider \(\widetilde{{{\textbf {Z}}}}^{(L),\text{ tr }}_{\lambda }\) instead of \({{\textbf {Z}}}^{(L),\text{ tr }}_{\lambda }\) is to ignore the contribution from near-identical copies in the second moment. Then, Proposition 3.4 below shows that the rescaled partition function is concentrated for each \(L<\infty \). Its proof is provided in Sect. 5.

Proposition 3.4

Let \(k\ge k_0\), \(L<\infty \), and \(\lambda \in (\lambda ^\star _L-0.01\cdot 2^{-k},\lambda ^\star _L)\). Then we have

Remark 3.5

An important thing to note here is that Proposition 3.4 is not true for \(\lambda =\lambda ^\star _L\). If \(\lambda <\lambda ^\star _L\), then \(s^\star _{\lambda ,L}<s^\star _L\), so there should exist exponentially many clusters of size \(e^{n s^\star _{\lambda ,L}}\). Therefore, the intrinsic correlations within clusters are negligible (that is, when we pick two clusters at random, the probability of selecting the same one is close to 0) and the fluctuation is taken over by cycle effects. However, when there are bounded number of clusters of size \(e^{ns^\star _{\lambda ,L}}\) (that is, when \(\lambda \) is very close to \(\lambda ^\star _L\)), within-cluster correlations become non-trivial. Mathematically, we can see this from (29), where we can ignore the first moment term in the rhs of (29) if (and only if) it is large enough.

Nevertheless, for \(s_\circ (C)\) defined as in Theorem 3.1, we will see in Sect. 6 that if we set C to be large, then (d) holds, and hence the conclusion of Theorem 3.3 holds with a small error.

Further notations. Throughout this paper, we will often use the following multi-index notation. Let \(\underline{a}=(a_\zeta )_{||\zeta ||\le l_0}\), \(\underline{b}=(b_\zeta )_{||\zeta ||\le l_0}\) be tuples of integers indexed by \(\zeta \) with \(||\zeta ||\le l_0\). Then, we write

Moreover, for non-negative quantities \(f=f_{d,k, L, n}\) and \(g=g_{d,k,L, n}\), we use any of the equivalent notations \(f=O_{k,L}(g), g= \Omega _{k,L}(f), f\lesssim _{k,L} g\) and \(g \gtrsim _{k,L} f \) to indicate that \(f\le C_{k,L}\cdot g\) holds for some constant \(C_{k,L}>0\), which only depends on k, L.

4 The Effects of Cycles

In this section, our goal is to obtain the condition (a) of Theorem 3.3 for (truncated versions of) \({{\textbf {Z}}}^{(L),\text{ tr }}_{\lambda }\) and \({{\textbf {Z}}}^{\text{ tr }}_{\lambda ,s_n}\), where \(|s_n-s^\star _{\lambda }|=O(n^{-2/3})\) (see Proposition 4.1 below). To do so, we first introduce necessary notations to define \(\delta (\zeta )\) appearing in Theorem 3.3.

For \(\lambda \in [0,1]\), recall the optimal coloring profile of the untruncated model \(H^\star _{\lambda }\) and truncated model \(H^\star _{\lambda ,L}\) from Definition 2.18. We denote the two-point marginals of \(\dot{H}^\star _{\lambda }\) by

and similarly for \(\dot{H}^\star _{\lambda ,L}\). On the other hand, for \({\underline{\texttt {L}}}\in \{0,1\}^k\), consider the optimal clause empirical measure \(\hat{H}^{\underline{\texttt {L}}}_{\lambda }\) given the literal assignment \(\underline{\texttt {L}}\in \{0,1\}^{k}\) around a clause, namely for \(\underline{\sigma }\in \Omega ^k\),

where \(\hat{\mathfrak {Z}}^{\underline{\texttt {L}}}_{\lambda }\) is the normalizing constant. Note that \(\hat{\mathfrak {Z}}^{\underline{\texttt {L}}}_{\lambda } = \hat{\mathfrak {Z}}_{\lambda }\) is independent of \(\underline{\texttt {L}}\) due to the symmetry \(\dot{q}_\lambda ^\star (\dot{\sigma })=\dot{q}_\lambda ^\star (\dot{\sigma }\oplus 1)\). Similarly, define \(\hat{H}^{\underline{\texttt {L}}}_{\lambda ,L}\) for the truncated model. Given the literals \(\texttt {L}_1,\texttt {L}_2\) at the first two coordinates of a clause, the two point marginal of \(\hat{H}^{\underline{\texttt {L}}}_{\lambda }\) is defined by

where the second equality holds for any \(\underline{\texttt {L}}\in \{0,1\}^k\) that agrees with \(\texttt {L}_1,\texttt {L}_2\) at the first two coordinates, due to the symmetry \(\hat{H}^{\underline{\texttt {L}}}_{\lambda }(\underline{\tau })=\hat{H}_{\lambda }^{\underline{\texttt {L}}\oplus \underline{\texttt {L}}'}(\underline{\tau }\oplus \underline{\texttt {L}}')\). The symmetry also implies that

for any \(\texttt {L}_1, \texttt {L}_2 \in \{0,1\}\) and \(\tau _1\in \Omega \). We also define \(\hat{H}^{\texttt {L}_1,\texttt {L}_2}_{\lambda ,L}\) analogously for the truncated model.

Then, we define \(\dot{A}\equiv \dot{A}_{\lambda },\hat{A}^{\texttt {L}_1,\texttt {L}_2}\equiv \hat{A}^{\texttt {L}_1,\texttt {L}_2}_{\lambda }\) to be the \(\Omega \times \Omega \) matrices as follows:

and \(\Omega _L\times \Omega _L\) matrices \(\dot{A}_{\lambda ,L}\) and \(\hat{A}_{\lambda ,L}^{\texttt {L}_1,\texttt {L}_2}\) are defined analogously using \(\dot{H}^\star _{\lambda ,L}, \hat{H}^{\texttt {L}_1,\texttt {L}_2}_{\lambda ,L}\) and \(\bar{H}^\star _{\lambda ,L}\). Note that both matrices have row sums equal to 1, and hence their largest eigenvalue is 1. For \(\zeta \in \{0,1\}^{2l}\), we introduce the following notation for convenience:

where \(\zeta _0 = \zeta _{2l}\). Moreover, we define \((\dot{A}_L \hat{A}_L)^\zeta \) analogously. Then, the main proposition of this section is given as follows.

Proposition 4.1

Let \(L,l_0>0\) and let \(\underline{X} = \{X({\zeta })\}_{||\zeta ||\le l_0}\) denote the number of \(\zeta \)-cycles in \(\mathscr {G}\). Also, set \(\mu ({\zeta })\) as (36), and for each \(\zeta \in \cup _l\{0,1\}^{2l}\), define

Then, there exists a constant \(c_{\textsf {cyc}}=c_{\textsf {cyc}}(l_0)\) such that the following statements hold true:

-

(1)

For \(\lambda \in (0,1)\) and any tuple of nonnegative integers \(\underline{a}=(a_\zeta )_{||\zeta ||\le l_0}\), such that \(||\underline{a}||_\infty \le c_{\textsf {cyc}} \log n\), we have

$$\begin{aligned} \mathbb {E}\left[ \widetilde{{{\textbf {Z}}}}_{\lambda }^{(L),\text{ tr }} \cdot (\underline{X})_{\underline{a}} \right] = \left( 1+ err(n,\underline{a}) \right) \left( \underline{\mu } ( 1+ \underline{\delta }_L)\right) ^{\underline{a}} \mathbb {E}\widetilde{{{\textbf {Z}}}}_{\lambda }^{(L),\text{ tr }}, \end{aligned}$$(46)where \(\widetilde{{{\textbf {Z}}}}^{(L),\text{ tr }}_{\lambda }\) is defined in (40) and \(err(n,\underline{a}) = O_k \left( ||\underline{a}||_1 n^{-1/2} \log ^2 n \right) \).

-

(2)

For \(\lambda \in (0,\lambda _L^\star )\), where \(\lambda ^\star _{L}\) is defined in Definition 2.19, the analogue of (46) holds for the second moment as well. That is, for \(\underline{a}=(a_\zeta )_{||\zeta ||\le l_0}\) with \(||\underline{a}||_\infty \le c_{\textsf {cyc}} \log n\), we have

$$\begin{aligned} \mathbb {E}\left[ \big (\widetilde{{{\textbf {Z}}}}_{\lambda }^{(L),\text{ tr }}\big )^2 \cdot (\underline{X})_{\underline{a}} \right] = \left( 1+ err(n,\underline{a}) \right) \left( \underline{\mu } ( 1+ \underline{\delta }_L)^2\right) ^{\underline{a}} \mathbb {E}\big (\widetilde{{{\textbf {Z}}}}_{\lambda }^{(L),\text{ tr }}\big )^2. \end{aligned}$$(47) -

(3)

Under a slightly weaker error given by \(err'(n,\underline{a}) = O_k(||\underline{a}||_1 n^{-1/8})\), the analogue of (1) holds the same for the untruncated model with any \(\lambda \in (0,1)\). Namely, analogously to (40), define \(\widetilde{{{\textbf {Z}}}}_{\lambda }^{\text{ tr }}\) and \(\widetilde{{{\textbf {Z}}}}^{\text{ tr }}_{\lambda ,s}\) by

$$\begin{aligned}&\widetilde{{{\textbf {Z}}}}^{\text{ tr }}_{\lambda }:=\sum _{||H-H^\star _{\lambda }||_1\le n^{-1/2}\log ^2 n}{{\textbf {Z}}}^{\text{ tr }}_{\lambda }[H];\nonumber \\ {}&\widetilde{{{\textbf {Z}}}}^{\text{ tr }}_{\lambda ,s}:=\sum _{||H-H^\star _{\lambda }||_1\le n^{-1/2}\log ^2 n}{{\textbf {Z}}}^{\text{ tr }}_{\lambda }[H]\mathbb {1}\big \{s(H)\in [ns,ns+1)\big \}\,. \end{aligned}$$(48)Then, (46) continues to hold when we replace \(\widetilde{{{\textbf {Z}}}}_{\lambda }^{(L),\text{ tr }},err(n,\underline{a})\) and \(\underline{\delta }_L\) by \(\widetilde{{{\textbf {Z}}}}_{\lambda }^{\text{ tr }}, err'(n,\underline{a})\) and \(\underline{\delta }\) respectively. Moreover, (46) continues to hold when we replace \(\widetilde{{{\textbf {Z}}}}_{\lambda }^{(L),\text{ tr }},err(n,\underline{a})\) and \(\underline{\delta }_L\) by \({{\textbf {Z}}}_{\lambda ,s_n}^{\text{ tr }}, err'(n,\underline{a})\) and \(\underline{\delta }\) respectively, where \(|s_n-s^\star _{\lambda }|=O(n^{-2/3})\).

-

(4)

For each \(\zeta \in \cup _l \{0,1\}^{2l}\), we have \(\lim _{L\rightarrow \infty } \delta _L (\zeta ) = \delta (\zeta )\).

In the remainder of this section, we focus on proving (1) of Proposition 4.1. In the proof, we will be able to see that (2) of the proposition follow by an analogous argument (see Remark 4.4). The proofs of (3) and (4) are deferred to Appendix 6, since they require substantial amounts of additional technical work.

For each \(\zeta \in \{0,1\}^{2l}\) and a nonnegative integer \(a_\zeta \), let \(\mathcal {Y}_i \equiv \mathcal {Y}_i(\zeta ) \in \big \{\{v_{\iota },a_{\iota },(e_{v_{\iota }}^{j}, e_{a_{\iota }}^{j})_{j=0,1} \}_{\iota =1}^l\big \}\), \(i\in [a_\zeta ]\) denote the possible locations of \(a_\zeta \) \(\zeta \)-cycles defined as Definition 3.2. Then, it is not difficult to see that

where the summation runs over distinct \(\mathcal {Y}_1,\ldots ,\mathcal {Y}_{a_\zeta }\), and \(\underline{\texttt {L}}(\mathcal {Y}_i;\mathscr {G})\) denotes the literals on \(\mathcal {Y}_i\) inside \(\mathscr {G}\). Based on this observation, we will show (1) of Proposition 4.1 by computing the cost of planting cycles at specific locations \(\{\mathcal {Y}_i\}\). Moreover, in addition to \(\{\mathcal {Y}_i\}\), prescribing a particular coloring on those locations will be useful. In the following definition, we introduce the formal notations to carry out such an idea.

Definition 4.2

(Empirical profile on \(\mathcal {Y}\)). Let \(L,l_0>0\) be given integers and let \(\underline{a}=(a_\zeta )_{||\zeta ||\le l_0}\). Moreover, let

denote the distinct \(a_\zeta \) \(\zeta \)-cycles for each \(||\zeta ||\le l_0\) inside \(\mathscr {G}\) (Definition 3.2), and let \(\underline{\sigma }\) be a valid coloring on \(\mathscr {G}\). We define \({\Delta } \equiv {\Delta }[\underline{\sigma };\mathcal {Y}]\), the empirical profile on \(\mathcal {Y}\), as follows.

-

Let \(V(\mathcal {Y})\) (resp. \(F(\mathcal {Y})\)) be the set of variables (resp. clauses) in \(\cup _{||\zeta ||\le l_0} \cup _{i=1}^{a_\zeta } \mathcal {Y}_i(\zeta )\), and let \(E_c(\mathcal {Y})\) denote the collection of variable-adjacent half-edges included in \(\cup _{||\zeta ||\le l_0} \cup _{i=1}^{a_\zeta } \mathcal {Y}_i(\zeta )\). We write \(\underline{\sigma }_\mathcal {Y}\) to denote the restriction of \(\underline{\sigma }\) onto \(V(\mathcal {Y})\) and \(F(\mathcal {Y})\).

-

\(\Delta \equiv \Delta [\underline{\sigma };\mathcal {Y}] \equiv (\dot{\Delta }, (\hat{\Delta }^{\underline{\texttt {L}}})_{\underline{\texttt {L}}\in \{0,1\}^k}, \bar{\Delta }_c)\) is the counting measure of coloring configurations around \(V(\mathcal {Y}), F(\mathcal {Y})\) and \(E_c(\mathcal {Y})\) given as follows.

$$\begin{aligned} \begin{aligned}&\dot{\Delta } (\underline{\tau }) = |\{v\in V(\mathcal {Y}): \underline{\sigma }_{\delta v} = \underline{\tau } \} |, \quad \text {for all } \underline{\tau } \in \dot{\Omega }_L^d;\\&\hat{\Delta }^{\underline{\texttt {L}}} (\underline{\tau }) = |\{a\in F(\mathcal {Y}): \underline{\sigma }_{\delta a} = \underline{\tau }, \;\underline{\texttt {L}}_{\delta a} = \underline{\texttt {L}}\} |, \quad \text {for all } \underline{\tau } \in \dot{\Omega }_L^k, \; \underline{\texttt {L}}\in \{0,1\}^k;\\&\bar{\Delta }_c ({\tau }) = |\{e\in E_c(\mathcal {Y}): \sigma _{e} = \tau \} |, \quad \text {for all } \tau \in \dot{\Omega }_L. \end{aligned} \end{aligned}$$(50) -

We write \(|\dot{\Delta }| \equiv \langle \dot{\Delta },1 \rangle \), and define \(|\hat{\Delta }^{\underline{\texttt {L}}} |\), \(|\bar{\Delta }_c|\) analogously.

Note that \(\Delta \) is well-defined if \(\mathcal {Y}\) and \(\underline{\sigma }_\mathcal {Y}\) are given.

In the proof of Proposition 4.1, we will fix \(\mathcal {Y}\), the locations of \(\underline{a}\) \(\zeta \)-cycles, and a coloring configuration \(\underline{\tau }_\mathcal {Y}\) on \(\mathcal {Y}\), and compute the contributions from \(\mathscr {G}\) and \(\underline{\sigma }\) that has cycles on \(\mathcal {Y}\) and satisfies \(\underline{\sigma }_\mathcal {Y}= \underline{\tau }_\mathcal {Y}\). Formally, abbreviate \({{\textbf {Z}}}^\prime \equiv \widetilde{{{\textbf {Z}}}}^{(L),\text{ tr }}_{\lambda }\) for simplicity and define

Then, we express that

where the notation in the last equality is introduced for convenience. The key idea of the proof is to study the rhs of the above equation. We follow the similar idea developed in [25], Section 6, which is to decompose \({{\textbf {Z}}}^\prime \) in terms of empirical profiles of \(\underline{\sigma }\) on \(\mathcal {G}\). The main contribution of our proof is to suggest a method that overcomes the complications caused by the indicator term (or the planted cycles).

Proof of Proposition 4.1-(1)

As discussed above, our goal is to understand \(\mathbb {E}[{{\textbf {Z}}}^\prime \mathbb {1}\{\mathcal {Y},\underline{\tau }_\mathcal {Y}\} ]\) for given \(\mathcal {Y}\) and \(\underline{\tau }_\mathcal {Y}\). To this end, we decompose the partition function in terms of coloring profiles. It will be convenient to work with

the non-normalized empirical counts of H. Moreover, if g is given, then the product of the weight, clause, and edge factors is also determined. Let us denote this by w(g), defined by

Recalling the definition of \({{\textbf {Z}}}^\prime \equiv \widetilde{{{\textbf {Z}}}}^{(L),\text{ tr }}_{\lambda }\) in (40), we consider g such that \(||g-g^\star _{\lambda ,L}||_1\le \sqrt{n}\log ^2 n \), where we defined

Now, fix the literal assignment \(\underline{\texttt {L}}_E\) on \(\mathscr {G}\) which agrees with those on the cycles given by \(\mathcal {Y}\). Finally, let \(\Delta =(\dot{\Delta },\hat{\Delta }, \bar{\Delta }_c)\) denote the empirical profile on \(\mathcal {Y}\) induced by \(\underline{\tau }_\mathcal {Y}\). Then, we have

where we wrote \(H^\star = H^\star _{\lambda ,L}\) and the last equality follows from \(||g-g^\star _{\lambda ,L}||\le \sqrt{n}\log ^2 n\).

In the remaining, we sum the above over \(\mathcal {Y}\) and \(\underline{\tau }_\mathcal {Y}\), depending on the structure of \(\mathcal {Y}\). To this end, we define \(\eta \equiv \eta (\mathcal {Y})\) to be

where \(|\hat{\Delta }| = \sum _{\underline{\texttt {L}}} |\hat{\Delta }^{\underline{\texttt {L}}}|\) and noting that \(|\dot{\Delta }|, |\hat{\Delta }|\) and \(|\bar{\Delta }_c|\) are well-defined if \(\mathcal {Y}\) is given. Note that \(\eta \) describes the number of disjoint components in \(\mathcal {Y}\), in the sense that

Firstly, suppose that all the cycles given by \(\mathcal {Y}\) are disjoint, that is, \(\eta (\mathcal {Y})=0\). In other words, all the variable sets \(V(\mathcal {Y}_i (\zeta ))\), \(i\in [a_\zeta ], ||\zeta ||\le l_0\) are pairwise disjoint, and the same holds for the clause sets \(F(\mathcal {Y}_i (\zeta ))\). In this case, the effect of each cycle can be considered to be independent when summing (55) over \(\underline{\tau }_\mathcal {Y}\), which gives us

where \((\dot{A}_L \hat{A}_L)^\zeta \) defined as (44). Also, note that although \({\Delta }\) is defined depending on \(\underline{\tau }_\mathcal {Y}\), \(|\bar{\Delta }_c|\) in the denominator is well-defined given \(\mathcal {Y}\). Thus, averaging the above over all \(\underline{\texttt {L}}_E\) gives

Moreover, setting \(a^\dagger = \sum _{||\zeta ||\le l_0} a_\zeta ||\zeta ||\), the number of ways of choosing \(\mathcal {Y}\) to be \(\underline{a}\) disjoint \(\zeta \)-cycles can be written by

Having this in mind, summing (58) over all \(\mathcal {Y}\) that describes disjoint \(\underline{a}\) \(\zeta \)-cycles, and then over all \(||g-g^\star _{\lambda ,L}||\le n^{2/3}\), we obtain that