Abstract

In recent years, dimensionality reduction has become increasingly important as the dimensionality of data used in tasks such as regression and classification has grown. t-distributed stochastic neighbor embedding (t-SNE) and uniform manifold approximation and projection (UMAP) are popular nonlinear dimensionality reduction methods. However, t-SNE produces only one kind of low-dimensional space, determined by the t-distribution, and with UMAP it is difficult to control the distribution of distances between pairs of samples in the low-dimensional space. To tackle these issues, we propose extensions of t-SNE and UMAP based on the q-Gaussian distribution, called q-Gaussian-distributed stochastic neighbor embedding (q-SNE) and q-Gaussian-distributed uniform manifold approximation and projection (q-UMAP). The q-Gaussian distribution is obtained by maximizing the Tsallis entropy under constraints on the mean and variance of the escort distribution, and it generalizes the Gaussian distribution through a hyperparameter q. Since the shape of the q-Gaussian distribution can be tuned smoothly with q, q-SNE and q-UMAP can intuitively derive different embedding spaces. To evaluate the proposed methods, we compared visualizations of the low-dimensional embedding spaces and the classification accuracy of k-NN in those spaces. Empirical results on MNIST, COIL-20, OlivettiFaces, and FashionMNIST demonstrate that q-SNE and q-UMAP can derive better embedding spaces than t-SNE and UMAP.

1 Introduction

Recently, the dimensionality of data has been increasing, and dimensionality reduction has been widely applied to high-dimensional data for regression, classification, feature analysis, and visualization. Linear dimensionality reduction techniques include principal component analysis (PCA) [9], multidimensional scaling (MDS) [4], Fisher’s linear discriminant analysis (LDA) [8], canonical correlation analysis (CCA) [17], and linear regression. Nonlinear dimensionality reduction techniques include locally linear embedding (LLE) [12], kernel PCA [13], neural networks (NN), Isomap [16], Visualizing Large-scale and High-dimensional Data (LargeVis) [15], stochastic neighbor embedding (SNE) [5], t-distributed SNE (t-SNE) [6], and uniform manifold approximation and projection (UMAP) [7].

For the visualization of high-dimensional data, SNE [5], t-SNE [6], and UMAP [7] are often used. These methods model the proximity between samples in both the high-dimensional and the low-dimensional space with probability distributions. In the high-dimensional space, SNE, t-SNE, and UMAP all use the Gaussian distribution to measure the similarity between samples. In the low-dimensional space, SNE again uses the Gaussian distribution, whereas t-SNE uses the t-distribution instead. As shown in the t-SNE paper [6], t-SNE produces better visualizations than SNE because the t-distribution allows close samples to be embedded closer together and distant samples to be embedded farther apart. However, t-SNE takes a long computational time when the number of samples is large [7], and several algorithms have been proposed to reduce this cost [2, 19, 20]. Moreover, t-SNE cannot control the low-dimensional space, because the distribution used there is fixed. UMAP is faster than t-SNE because it does not use all samples when computing similarities in the high-dimensional space. In the low-dimensional space, UMAP uses a curve similar to a probability distribution; since the shape of this curve can be changed, UMAP can control the low-dimensional space. In short, these methods embed the proximity of high-dimensional data into a low-dimensional space using the Gaussian distribution, the t-distribution, or a curve similar to a probability distribution.

The q-Gaussian distribution [14] is the probability distribution obtained by maximizing the Tsallis entropy under constraints on the mean and variance of the escort distribution. It generalizes the Gaussian distribution with a hyperparameter q: it reduces to the Gaussian distribution as \(q\rightarrow 1\), to the t-distribution with \(\nu\) degrees of freedom when \(q=1+\frac{2}{\nu +1}\), and to the Cauchy distribution when \(q=2\). The advantage of the q-Gaussian distribution is that these distributions, and those in between, can be selected smoothly by tuning q.
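As a short worked check of these special cases, the sketch below substitutes the stated values of q into the unnormalized q-Gaussian kernel with \(\mu=0\) and \(\sigma=1\). It assumes the common parametrization \(\left[1-\frac{1-q}{3-q}s^2\right]^{\frac{1}{1-q}}\) and ignores the normalization constant, so it only compares shapes.

```latex
\[
\begin{aligned}
q = 1+\frac{2}{\nu+1}
  &\;\Rightarrow\; \frac{1}{1-q} = -\frac{\nu+1}{2},
  \qquad \frac{1-q}{3-q} = \frac{-2/(\nu+1)}{2\nu/(\nu+1)} = -\frac{1}{\nu},\\
\left[1-\frac{1-q}{3-q}\,s^2\right]^{\frac{1}{1-q}}
  &= \left(1+\frac{s^2}{\nu}\right)^{-\frac{\nu+1}{2}}
  \quad \text{(Student t kernel; } \nu=1,\ q=2 \text{ gives the Cauchy kernel } (1+s^2)^{-1}\text{)},\\
q\rightarrow 1:\quad \left(1+\frac{q-1}{3-q}\,s^2\right)^{-\frac{1}{q-1}}
  &\rightarrow \exp\left(-\frac{s^2}{2}\right)
  \quad \text{(Gaussian kernel)}.
\end{aligned}
\]
```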

In this paper, we propose new nonlinear dimensionality reduction methods that use the q-Gaussian distribution to improve t-SNE and UMAP. Because the q-Gaussian distribution changes smoothly with q, choosing q gives an intuitive way to operate the embedding, and different visualizations can be generated by changing q. By changing q gradually, users can observe how the local connectivity of the samples changes, and this dynamic visualization helps them understand detailed relationships in the data. We replace the conventional low-dimensional distributions with the q-Gaussian distribution, call the resulting methods q-Gaussian-distributed stochastic neighbor embedding (q-SNE) [1] and q-Gaussian-distributed uniform manifold approximation and projection (q-UMAP), and verify their advantages experimentally. q-SNE can control the visualization and reduces to t-SNE for a suitable choice of q (\(q=2\)). q-UMAP can also control the visualization, and choosing q selects the low-dimensional distribution more intuitively than UMAP does.

In the experiments section, we show the effectiveness of our methods by comparing embedding visualizations and evaluation accuracies on the MNIST, COIL-20, OlivettiFaces, and FashionMNIST datasets. We assess the classification performance of a k-NN classifier in the embedding space obtained by each method; since these datasets have class labels, this performance can be measured as classification accuracy. We expect samples of the same class to form a cluster in the original high-dimensional feature space, and this structure should be preserved in the embedded space. A high classification accuracy in the embedded space therefore indicates that the embedding preserves the class information well. The experiments confirm that q-SNE and q-UMAP achieve better classification accuracies than t-SNE and UMAP.

2 Related works

2.1 Probability distribution

Many dimensionality reduction techniques use probability distributions because they can account for the proximity of high-dimensional data. SNE [5], t-SNE [6], and UMAP [7] use the Gaussian distribution in the high-dimensional space, and SNE [5] also uses it in the low-dimensional space. The Gaussian distribution of a one-dimensional observation s is defined as

\[ f(s) = \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{(s-\mu)^2}{2\sigma^2}\right), \tag{1} \]

where \(\mu\) is the mean and \(\sigma^2\) is the variance.

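t-SNE [6] uses the t-distribution in the low-dimensional space, which is defined as

\[ f_\nu(s) = \frac{\Gamma\left(\frac{\nu+1}{2}\right)}{\sqrt{\nu\pi}\,\Gamma\left(\frac{\nu}{2}\right)}\left(1+\frac{s^2}{\nu}\right)^{-\frac{\nu+1}{2}}, \tag{2} \]

where \(\nu\) is the number of degrees of freedom and \(\Gamma\) is the gamma function.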

2.2 q-Gaussian distribution

Probability distributions such as the Gaussian distribution and the t-distribution are used for dimensionality reduction. The q-Gaussian distribution [14] is derived by maximizing the Tsallis entropy under constraints on the mean and variance of the escort distribution, and it is a generalization of the Gaussian distribution.

Information geometry is a theoretical framework for analyzing the intrinsic geometric structures underlying information systems. Tanaka [14] studies an extended information geometry that can handle distributions outside the exponential family. In this extended information geometry, the q-Gaussian distribution is an example of a distribution, defined as

\[ f_q(s) = \frac{1}{Z_q}\left[1-\frac{1-q}{3-q}\,\frac{(s-\mu)^2}{\sigma^2}\right]_{+}^{\frac{1}{1-q}}, \tag{3} \]

where q is a hyperparameter, \(\mu\) is the mean, \(\sigma^2\) is the variance with respect to the escort distribution, and \([x]_+=\max(x,0)\). The normalization factor \(Z_q\) is given by

\[ Z_q = \begin{cases} \sigma\sqrt{\dfrac{3-q}{1-q}}\,{\textrm{Beta}}\left(\dfrac{1}{2},\dfrac{2-q}{1-q}\right) & (q<1),\\ \sqrt{2\pi}\,\sigma & (q\rightarrow 1),\\ \sigma\sqrt{\dfrac{3-q}{q-1}}\,{\textrm{Beta}}\left(\dfrac{1}{2},\dfrac{3-q}{2(q-1)}\right) & (1<q<3), \end{cases} \tag{4} \]

where \({\textrm{Beta}}(\cdot,\cdot)\) is the beta function. When \(q\rightarrow 1\), the q-Gaussian distribution becomes the Gaussian distribution; when \(q=1+\frac{2}{\nu +1}\), it becomes the t-distribution with \(\nu\) degrees of freedom; and when \(q=2\), it becomes the Cauchy distribution. Thus the q-Gaussian distribution can express various probability distributions intuitively by tuning q.
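The following Python sketch evaluates the q-Gaussian density numerically so that the special cases above can be checked against SciPy's reference densities. It assumes the kernel \(\left[1-\frac{1-q}{3-q}\frac{(s-\mu)^2}{\sigma^2}\right]_+^{\frac{1}{1-q}}\) for \(q<3\), normalized with beta-function integrals; the function name `q_gaussian_pdf` is hypothetical and this is an illustration, not the authors' code.

```python
import numpy as np
from scipy.special import beta


def q_gaussian_pdf(s, q, mu=0.0, sigma=1.0):
    """q-Gaussian density for q < 3 (hypothetical helper, illustration only)."""
    z = (np.asarray(s, dtype=float) - mu) / sigma
    if np.isclose(q, 1.0):
        # Limit q -> 1: the ordinary Gaussian with variance sigma^2.
        return np.exp(-0.5 * z**2) / (np.sqrt(2.0 * np.pi) * sigma)
    if q >= 3.0:
        raise ValueError("the q-Gaussian cannot be normalized for q >= 3")
    # [1 - (1-q)/(3-q) z^2]_+ ; the clipping only matters for q < 1 (compact support)
    base = np.maximum(1.0 - (1.0 - q) / (3.0 - q) * z**2, 0.0)
    kernel = base ** (1.0 / (1.0 - q))
    if q < 1.0:
        z_q = sigma * np.sqrt((3.0 - q) / (1.0 - q)) * beta(0.5, (2.0 - q) / (1.0 - q))
    else:
        z_q = sigma * np.sqrt((3.0 - q) / (q - 1.0)) * beta(0.5, (3.0 - q) / (2.0 * (q - 1.0)))
    return kernel / z_q
```

For example, `q_gaussian_pdf(s, 1 + 2 / (nu + 1))` should agree with `scipy.stats.t.pdf(s, df=nu)`, and `q = 2` with `scipy.stats.cauchy.pdf(s)`.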

2.3 t-SNE

The t-distributed stochastic neighbor embedding (t-SNE) [6] is a nonlinear dimensionality reduction technique that uses probability distributions to embed the proximity of high-dimensional data in a low-dimensional space. t-SNE uses the Gaussian distribution for the high-dimensional data and the t-distribution for the low-dimensional data.

Let \(\{\varvec{x}_i|i=1\ldots N\}\) be a set of samples in the high-dimensional space, where \(\varvec{x}_i = \begin{bmatrix} x_{i1}&x_{i2}&\cdots&x_{iD} \end{bmatrix}^T\) and D (\(D>2\)) is the number of dimensions of the high-dimensional data. The conditional probability in the high-dimensional space is defined using the local Gaussian distribution as

\[ p_{j|i} = \frac{\exp\left(-\|\varvec{x}_i-\varvec{x}_j\|^2/2\sigma_i^2\right)}{\sum_{k\neq i}\exp\left(-\|\varvec{x}_i-\varvec{x}_k\|^2/2\sigma_i^2\right)}, \tag{5} \]

where \(\sigma _i\) is the width (standard deviation) of the local Gaussian distribution around sample \(\varvec{x}_i\). It is determined by binary search so that the entropy of \(p_{\cdot|i}\) satisfies

\[ \log_2 k = -\sum_{j\neq i} p_{j|i}\log_2 p_{j|i}, \tag{6} \]

where k is called the perplexity. In Eq. (5), we set \(p_{i|i}=0\) because we are only interested in pairwise similarities. To make the similarities symmetric, the joint probability is defined as

\[ p_{ij} = \frac{p_{j|i}\,p_i + p_{i|j}\,p_j}{2}, \tag{7} \]

where \(p_{i}=p_{j}=\frac{1}{N}\), \(p_{ii}=0\), and \(p_{ij}=p_{ji}\) for \(\forall i,j\).
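A minimal NumPy sketch of these high-dimensional affinities follows: a binary search for each \(\sigma_i\) so that the entropy (in bits) of \(p_{\cdot|i}\) equals \(\log_2 k\), then the symmetrization with \(p_i=p_j=1/N\). The function names are hypothetical, and the dense \(O(N^2)\) distance matrix restricts it to small datasets.

```python
import numpy as np


def conditional_p(dist2_row, sigma):
    """Gaussian conditional probabilities p_{j|i} (Eq. (5)) for one sample i.

    dist2_row holds squared distances to all j != i (self already removed).
    """
    logits = -dist2_row / (2.0 * sigma**2)
    logits -= logits.max()                 # stabilize exp; cancels in the ratio
    p = np.exp(logits)
    return p / p.sum()


def perplexity_affinities(X, perplexity=30.0, tol=1e-5, max_iter=100):
    """Symmetric joint probabilities p_ij of Eq. (7) with p_i = p_j = 1/N."""
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    sq = np.sum(X**2, axis=1)
    D = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    target = np.log2(perplexity)           # Eq. (6): entropy in bits = log2 k
    P_cond = np.zeros((n, n))
    for i in range(n):
        d_i = np.delete(D[i], i)
        lo, hi, sigma = 1e-10, np.inf, 1.0
        for _ in range(max_iter):
            p = conditional_p(d_i, sigma)
            entropy = -np.sum(p * np.log2(np.maximum(p, 1e-300)))
            if abs(entropy - target) < tol:
                break
            if entropy > target:           # too flat: shrink sigma
                hi = sigma
                sigma = (lo + hi) / 2.0
            else:                          # too peaked: grow sigma
                lo = sigma
                sigma = sigma * 2.0 if np.isinf(hi) else (lo + hi) / 2.0
        P_cond[i, np.arange(n) != i] = p   # row i holds p_{j|i}; p_{i|i} = 0
    return (P_cond + P_cond.T) / (2.0 * n)
```

Because each row of `P_cond` sums to one, the symmetrized matrix sums to one over all pairs, as Eq. (7) requires.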

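Let \(\{\varvec{y}_i|i=1\ldots N\}\) be a set of embeddings in the low-dimensional space, where \(\varvec{y}_i=\begin{bmatrix} y_{i1}&\cdots&y_{id} \end{bmatrix}^T\) and d (\(d<D\)) is the number of dimensions of the low-dimensional space. The joint probability in the low-dimensional space is defined using the t-distribution with one degree of freedom as

\[ r_{ij} = \frac{\left(1+\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{-1}}{\sum_{k\neq l}\left(1+\|\varvec{y}_k-\varvec{y}_l\|^2\right)^{-1}}, \tag{8} \]

where \(r_{ii}=0\) and \(r_{ij}=r_{ji}\) for \(\forall i,j\). The Kullback–Leibler divergence between the two distributions is

\[ C = \sum_{i}\sum_{j\neq i} p_{ij}\log\frac{p_{ij}}{r_{ij}}, \tag{9} \]

and its gradient with respect to the embedding is

\[ \frac{\partial C}{\partial \varvec{y}_i} = 4\sum_{j}\left(p_{ij}-r_{ij}\right)\left(1+\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{-1}\left(\varvec{y}_i-\varvec{y}_j\right). \tag{10} \]

t-SNE provides a more powerful visualization than SNE because the t-distribution is heavy-tailed and narrow near its center, so that close samples are embedded closer together and distant samples are embedded farther apart.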
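The KL objective and its gradient can be sketched densely as below, assuming a symmetric affinity matrix P with zero diagonal that sums to one. The function name is hypothetical; this is an illustration of the formulas, not an optimized t-SNE.

```python
import numpy as np


def tsne_kl_and_grad(P, Y):
    """KL divergence (Eq. (9)) and gradient (Eq. (10)) for t-SNE.

    P: symmetric (N, N) joint probabilities, zero diagonal, summing to 1.
    Y: (N, d) embedding.
    """
    diff = Y[:, None, :] - Y[None, :, :]            # diff[i, j] = y_i - y_j
    W = 1.0 / (1.0 + np.sum(diff**2, axis=-1))      # Student-t kernel, nu = 1
    np.fill_diagonal(W, 0.0)
    R = W / W.sum()                                 # r_ij of Eq. (8)
    mask = P > 0                                    # 0 * log 0 is taken as 0
    kl = np.sum(P[mask] * np.log(P[mask] / R[mask]))
    grad = 4.0 * np.einsum("ij,ij,ijk->ik", P - R, W, diff)
    return kl, grad
```

Comparing the returned gradient with central finite differences of the returned KL value is a cheap way to validate such derivations.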

2.4 UMAP

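t-SNE is well suited to visualization, but it is computationally expensive when the number of samples is large. Uniform manifold approximation and projection (UMAP) [7] has been proposed as a nonlinear dimensionality reduction technique that requires less computation time than t-SNE, since it uses only a subset of samples when calculating the joint probabilities in the high-dimensional space. The conditional probability in the high-dimensional space is defined as

\[ p_{j|i} = \exp\left(-\frac{\|\varvec{x}_i-\varvec{x}_j\|-\rho_i}{\sigma_i}\right), \tag{11} \]

where \(\rho _i\) is the distance from \(\varvec{x}_i\) to its nearest neighbor, \(p_{j|i}=0\) for samples outside the neighbor set \(\beta_i\) defined below, and \(\sigma _i\) is a width determined by binary search so that

\[ \sum_{j\in\beta_i}\exp\left(-\frac{\|\varvec{x}_i-\varvec{x}_j\|-\rho_i}{\sigma_i}\right) = \log_2 u, \tag{12} \]

where u is the number of nearest neighbors and \(\beta_i\) is the set of the u nearest neighbors of \(\varvec{x}_i\). Because only these neighbors are used rather than all samples, UMAP is faster than t-SNE. To make the similarities symmetric, the joint probability is defined as

\[ p_{ij} = p_{j|i}+p_{i|j}-p_{j|i}\,p_{i|j}, \tag{13} \]

where \(p_{ii}=0\) and \(p_{ij}=p_{ji}\) for \(\forall i,j\). The joint probability in the low-dimensional space is defined as

\[ r_{ij} = \left(1+a\|\varvec{y}_i-\varvec{y}_j\|^{2b}\right)^{-1}, \tag{14} \]

where a and b are fitted by nonlinear least squares to the curve

\[ \Psi(\varvec{y}_i,\varvec{y}_j) = \begin{cases} 1 & \text{if } \|\varvec{y}_i-\varvec{y}_j\|\le {\textrm{min}}\_{\textrm{dist}},\\ \exp\left(-\left(\|\varvec{y}_i-\varvec{y}_j\|-{\textrm{min}}\_{\textrm{dist}}\right)\right) & \text{otherwise}, \end{cases} \tag{15} \]

where \({\textrm{min}}\_{\textrm{dist}}\) is a hyperparameter that controls the joint probability function in the low-dimensional space. The loss function is the binary cross-entropy (CE), summed over pairs \(i<j\), instead of the Kullback–Leibler divergence:

\[ CE = \sum_{i<j}\left[p_{ij}\log\frac{p_{ij}}{r_{ij}}+\left(1-p_{ij}\right)\log\frac{1-p_{ij}}{1-r_{ij}}\right]. \tag{16} \]

Writing \(d_{ij}=\|\varvec{y}_i-\varvec{y}_j\|\), the gradient is

\[ \frac{\partial CE}{\partial \varvec{y}_i} = \sum_{j\neq i}\left[\frac{2ab\,d_{ij}^{2(b-1)}}{1+a\,d_{ij}^{2b}}\,p_{ij}-\frac{2b}{d_{ij}^{2}\left(1+a\,d_{ij}^{2b}\right)}\left(1-p_{ij}\right)\right]\left(\varvec{y}_i-\varvec{y}_j\right). \tag{17} \]

Since the joint probability in the low-dimensional space changes with \({\textrm{min}}\_{\textrm{dist}}\), UMAP can produce various embeddings.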
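To make the indirect role of \({\textrm{min}}\_{\textrm{dist}}\) concrete, the sketch below fits a and b with SciPy's `curve_fit`, in the way we understand the umap-learn reference implementation to do it (300 evenly spaced distances on \([0, 3\cdot\text{spread}]\); spread = 1 matches Eq. (15)). The names `umap_curve` and `fit_ab` are hypothetical.

```python
import numpy as np
from scipy.optimize import curve_fit


def umap_curve(x, a, b):
    """Low-dimensional similarity of Eq. (14) as a function of distance x."""
    return 1.0 / (1.0 + a * x ** (2.0 * b))


def fit_ab(min_dist, spread=1.0):
    """Least-squares fit of a, b to the target curve of Eq. (15).

    spread = 1 reproduces Eq. (15); a larger spread slows the exponential decay.
    """
    x = np.linspace(0.0, 3.0 * spread, 300)
    target = np.where(x < min_dist, 1.0, np.exp(-(x - min_dist) / spread))
    (a, b), _ = curve_fit(umap_curve, x, target)
    return float(a), float(b)
```

The user sets only min_dist; a and b follow from this fit, so the resulting curve shape is not chosen directly.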

2.5 Variants of t-SNE

Global t-SNE (GTSNE) [18] and stochastic cluster embedding (SCE) [21] have been proposed as variants of t-SNE. GTSNE adds a loss term based on global k-means centroids to the t-SNE objective. SCE adds cluster-related weights to the low-dimensional similarity. These ideas do not focus on the probability distribution used for the similarity between samples. Because this paper focuses on the probability distribution for the similarity between low-dimensional samples, our proposal can also be applied to these variants.

3 Nonlinear dimensionality reduction with q-Gaussian distribution

Fig. 1 Graphs of the Gaussian distribution and the t-distribution (dotted lines), the q-Gaussian distribution (solid lines), and the joint probability function of UMAP in Eq. (14) (dashed lines) for various values of the hyperparameters q and \(min\_dist\), respectively. When \(q=1\), the graph is close to the usual Gaussian distribution (green solid line and blue dashed line). When \(q=2\), the graph is close to the t-distribution with one degree of freedom (purple solid line and orange dashed line).

In this section, we introduce nonlinear dimensionality reduction methods that use the q-Gaussian distribution instead of the conventional distributions. We use the q-Gaussian distribution because its shape changes smoothly with q, so that choosing q provides an intuitive way to control the embedding.

3.1 q-Gaussian-distributed stochastic neighbor embedding

Since t-SNE produces better visualizations than SNE simply by changing the low-dimensional probability distribution from the Gaussian distribution to the t-distribution [6], the embedding clearly depends on the low-dimensional probability distribution.

However, t-SNE cannot change this distribution, and therefore it cannot change the proximity between samples in the embedding space.

To solve this problem, we propose to use the q-Gaussian distribution in the low-dimensional space instead of the t-distribution, as an extension and improvement of t-SNE. We call this method q-Gaussian-distributed stochastic neighbor embedding (q-SNE) [1]. The symmetric joint probability in the high-dimensional space is the same as in Eq. (7). The joint probability in the low-dimensional space is defined using the local q-Gaussian distribution (Eq. (3) with \(\sigma=1\), up to normalization) as

\[ r_{ij} = \frac{\left(1+\frac{q-1}{3-q}\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{\frac{1}{1-q}}}{\sum_{k\neq l}\left(1+\frac{q-1}{3-q}\|\varvec{y}_k-\varvec{y}_l\|^2\right)^{\frac{1}{1-q}}}, \tag{18} \]

where q is the hyperparameter of the q-Gaussian distribution, \(r_{ii}=0\), and \(r_{ij}=r_{ji}\) for \(\forall i,j\). The Kullback–Leibler divergence is the same as Eq. (9), and its gradient is

\[ \frac{\partial C}{\partial \varvec{y}_i} = \frac{4}{3-q}\sum_{j}\left(p_{ij}-r_{ij}\right)\left(1+\frac{q-1}{3-q}\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{-1}\left(\varvec{y}_i-\varvec{y}_j\right). \tag{19} \]

For \(q=2\), Eqs. (18) and (19) coincide with Eqs. (8) and (10) of t-SNE. q-SNE thus replaces the t-distribution of t-SNE with the q-Gaussian distribution in the low-dimensional space. Since the q-Gaussian distribution can express various probability distributions by tuning q, q-SNE can provide a wider variety of embeddings and visualizations than t-SNE, and the best visualization can be found by choosing q. We show the effectiveness of q-SNE in the experiments.
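The q-SNE objective and gradient can be sketched in the same dense style. This assumes the low-dimensional kernel \(\left(1+\frac{q-1}{3-q}\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{\frac{1}{1-q}}\) with \(1<q<3\), which reduces to the t-SNE kernel at \(q=2\); the function name is hypothetical and this is not the authors' implementation.

```python
import numpy as np


def qsne_kl_and_grad(P, Y, q):
    """KL divergence and gradient for q-SNE (assumes 1 < q < 3).

    Low-dimensional kernel: (1 + c ||y_i - y_j||^2)^(1 / (1 - q)), c = (q-1)/(3-q).
    """
    c = (q - 1.0) / (3.0 - q)
    diff = Y[:, None, :] - Y[None, :, :]
    base = 1.0 + c * np.sum(diff**2, axis=-1)       # >= 1 for 1 < q < 3
    W = base ** (1.0 / (1.0 - q))                   # unnormalized q-Gaussian kernel
    np.fill_diagonal(W, 0.0)
    R = W / W.sum()                                 # normalized r_ij
    mask = P > 0
    kl = np.sum(P[mask] * np.log(P[mask] / R[mask]))
    # (p_ij - r_ij) (1 + c d_ij^2)^(-1), scaled by 4 / (3 - q)
    grad = 4.0 / (3.0 - q) * np.einsum("ij,ijk->ik", (P - R) / base, diff)
    return kl, grad
```

Only the kernel and the factor \(4/(3-q)\) differ from t-SNE, which is consistent with the per-iteration cost being essentially unchanged.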

3.2 q-Gaussian-distributed uniform manifold approximation and projection

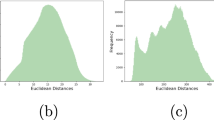

UMAP is faster than t-SNE and can control the low-dimensional space by changing the shape of the curve used there. However, the joint probability function of Eq. (14) cannot be chosen intuitively, since its parameters a and b are determined indirectly by the hyperparameter \(min\_dist\), which makes the embedding results difficult to control. Fig. 1 shows q-Gaussian distributions for various values of q and the joint probability function of Eq. (14) with a and b fitted for various values of \(min\_dist\). The q-Gaussian distribution changes smoothly with q, so the shape of the probability distribution can be determined intuitively. In contrast, most of the curves of Eq. (14) have similar shapes (except the green dashed line), and it is difficult to determine their shapes intuitively.

To solve this problem, we propose to use the q-Gaussian distribution in the low-dimensional space instead of the joint probability function of Eq. (14) of UMAP. We call this technique q-Gaussian-distributed uniform manifold approximation and projection (q-UMAP).

The joint probability in the high-dimensional space is the same as Eq. (13). The joint probability in the low-dimensional space is defined as

\[ r_{ij} = \left(1+\frac{q-1}{3-q}\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{\frac{1}{1-q}}, \tag{20} \]

where q is the hyperparameter of the q-Gaussian distribution. As in UMAP, \(r_{ij}\) is not normalized over all pairs. The loss function is the same binary cross-entropy as in Eq. (16), and its gradient is

\[ \frac{\partial CE}{\partial \varvec{y}_i} = \frac{2}{3-q}\sum_{j\neq i}\left(1+\frac{q-1}{3-q}\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{-1}\left(p_{ij}-\left(1-p_{ij}\right)\frac{r_{ij}}{1-r_{ij}}\right)\left(\varvec{y}_i-\varvec{y}_j\right). \tag{21} \]

Since the q-Gaussian distribution is used directly in the low-dimensional space, the fitting of a and b in UMAP is unnecessary. q-UMAP can therefore control the embedding result intuitively without this extra fitting step. We show the effectiveness of q-UMAP in the experiments.
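A dense sketch of the q-UMAP loss and gradient is given below. It assumes the unnormalized low-dimensional similarity \(r_{ij}=\left(1+\frac{q-1}{3-q}\|\varvec{y}_i-\varvec{y}_j\|^2\right)^{\frac{1}{1-q}}\), a symmetric P with entries in [0, 1], the cross-entropy summed over pairs \(i<j\), \(1<q<3\), and distinct embedding points. The function name is hypothetical, and UMAP's negative sampling and small-epsilon guard are omitted.

```python
import numpy as np
from scipy.special import xlogy


def qumap_ce_and_grad(P, Y, q):
    """Binary cross-entropy over pairs i < j and its gradient for q-UMAP.

    Assumes symmetric P in [0, 1], 1 < q < 3, and distinct rows of Y
    (so r_ij < 1 for i != j). No epsilon guard for coincident points.
    """
    n = Y.shape[0]
    c = (q - 1.0) / (3.0 - q)
    diff = Y[:, None, :] - Y[None, :, :]
    base = 1.0 + c * np.sum(diff**2, axis=-1)
    R = base ** (1.0 / (1.0 - q))              # unnormalized q-Gaussian similarity
    iu = np.triu_indices(n, k=1)
    p, r = P[iu], R[iu]
    # xlogy(x, y) = x * log(y) with 0 * log(0) = 0
    ce = np.sum(xlogy(p, p) - xlogy(p, r)
                + xlogy(1.0 - p, 1.0 - p) - xlogy(1.0 - p, 1.0 - r))
    off = ~np.eye(n, dtype=bool)
    coef = np.zeros((n, n))
    # attraction p_ij minus repulsion (1 - p_ij) r_ij / (1 - r_ij), over (1 + c d^2)
    coef[off] = (P[off] - (1.0 - P[off]) * R[off] / (1.0 - R[off])) / base[off]
    grad = 2.0 / (3.0 - q) * np.einsum("ij,ijk->ik", coef, diff)
    return ce, grad
```

The factor 2 rather than 4 reflects summing the loss over unordered pairs; summing over ordered pairs would double both the loss and the gradient.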

4 Experiments

Fig. 2 Embeddings of the Swiss roll dataset obtained by q-SNE, q-UMAP, and UMAP with various hyperparameters. With \(q=2.0\), q-SNE gives the same embedding as t-SNE. The leftmost panel shows the 3D Swiss roll data. The perplexity for q-SNE is 30, and the number of nearest neighbors for q-UMAP and UMAP is 15.

4.1 Preliminary experiment using Swissroll data

To show the effectiveness of dimensionality reduction with the q-Gaussian distribution, we conducted experiments on the Swiss roll dataset, which consists of 1,500 points in 3D. We embedded the data into a two-dimensional space with UMAP, q-SNE, and q-UMAP. For UMAP, we set \(min\_dist\) to 0.005, 0.01, 0.05, 0.1, and 0.5. For q-SNE and q-UMAP, we set q to 1.1, 1.5, 2.0, 2.5, and 2.9. The perplexity of q-SNE was 30, and the number of nearest neighbors of q-UMAP and UMAP was 15. The embeddings are shown in Fig. 2, with the 3D Swiss roll data in the leftmost panel. With \(q=2.0\), q-SNE gives the same embedding as t-SNE, and with \(q=1.1\), the embedding is close to that of SNE. For UMAP, the embeddings are almost the same for \(min\_dist\) between 0.005 and 0.1, because the shapes of the corresponding curves are almost the same, as shown in Fig. 1, and it is difficult to predict the embedding intuitively when \(min\_dist\) is between 0.1 and 0.5. As q increases, the distribution in Fig. 1 becomes sharper and the embeddings in Fig. 2 become more clustered. These results show that q-SNE and q-UMAP can obtain various embeddings by exploiting the characteristics of the q-Gaussian distribution for each q; among the settings tried, \(q=1.5\) gives the best visualization of the Swiss roll. By using the q-Gaussian distribution, we can intuitively create various embedding spaces.
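For readers who want to reproduce this kind of sweep on small data, the following is a compact, self-contained q-SNE sketch using plain gradient descent with momentum. The optimizer settings (early exaggeration 12 for 250 iterations, momentum 0.5 then 0.8, step size \(\max(N/48, 50)\), initialization with standard deviation \(10^{-4}\)) are borrowed from common t-SNE practice and scikit-learn's defaults; they are assumptions, not the authors' settings, and the function names are hypothetical. The dense \(O(N^2)\) computation is only practical for a few thousand points.

```python
import numpy as np


def gaussian_affinities(X, perplexity=30.0, n_steps=64, tol=1e-5):
    """Symmetric t-SNE affinities (Eqs. (5)-(7)); bisection on beta = 1 / (2 sigma_i^2)."""
    n = X.shape[0]
    sq = np.sum(X**2, axis=1)
    D = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    target = np.log2(perplexity)
    P = np.zeros((n, n))
    for i in range(n):
        d = np.delete(D[i], i)
        lo, hi, beta = 0.0, np.inf, 1.0
        for _ in range(n_steps):
            p = np.exp(-(d - d.min()) * beta)
            p /= p.sum()
            h = -np.sum(p * np.log2(np.maximum(p, 1e-300)))
            if abs(h - target) < tol:
                break
            if h > target:          # too flat: sharpen the Gaussian
                lo = beta
                beta = beta * 2.0 if np.isinf(hi) else (lo + hi) / 2.0
            else:                   # too peaked: widen it
                hi = beta
                beta = (lo + hi) / 2.0
        P[i, np.arange(n) != i] = p
    return (P + P.T) / (2.0 * n)


def q_sne(X, q=1.5, perplexity=30.0, n_iter=1000, seed=0):
    """Minimal q-SNE by gradient descent with momentum (assumes 1 < q < 3)."""
    rng = np.random.default_rng(seed)
    P = gaussian_affinities(np.asarray(X, dtype=float), perplexity)
    n = P.shape[0]
    lr = max(n / 48.0, 50.0)                 # scikit-learn style 'auto' step size
    c = (q - 1.0) / (3.0 - q)
    Y = 1e-4 * rng.standard_normal((n, 2))
    V = np.zeros_like(Y)
    for it in range(n_iter):
        exag = 12.0 if it < 250 else 1.0     # early exaggeration of P
        mom = 0.5 if it < 250 else 0.8
        diff = Y[:, None, :] - Y[None, :, :]
        base = 1.0 + c * np.sum(diff**2, axis=-1)
        W = base ** (1.0 / (1.0 - q))
        np.fill_diagonal(W, 0.0)
        R = W / W.sum()
        grad = 4.0 / (3.0 - q) * np.einsum("ij,ijk->ik", (exag * P - R) / base, diff)
        V = mom * V - lr * grad
        Y = Y + V
    return Y
```

For example, `q_sne(make_swiss_roll(n_samples=500, random_state=0)[0], q=1.5)` (with `make_swiss_roll` from `sklearn.datasets`) embeds a 500-point Swiss roll; `q=2.0` corresponds to t-SNE with the same optimizer.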

Fig. 4 Embeddings of the MNIST, COIL-20, OlivettiFaces, and FashionMNIST datasets obtained by PCA, Isomap, t-SNE, UMAP, q-SNE, and q-UMAP. For t-SNE, UMAP, q-SNE, and q-UMAP, the hyperparameters that gave the best accuracy in Table 1 are used.

4.2 Comparison experiments

In this section, we present visualizations of the embeddings of the MNIST, COIL-20, OlivettiFaces, and FashionMNIST datasets. We also assess the classification accuracy in these embedding spaces with the k-nearest neighbor (k-NN) method [11]; since these datasets are labeled, the accuracy can be evaluated directly. We regard a high classification accuracy in the embedded space as an indication that class information is preserved in that space. For the comparison, we used t-SNE, UMAP, q-SNE, and q-UMAP.

The MNIST dataset consists of grayscale images of handwritten digits from 0 to 9. It has 60,000 images of \(28 \times 28\) pixels, from which we randomly selected 10,000 images beforehand. The COIL-20 dataset consists of grayscale images of 20 objects. It has 1,440 images of \(128 \times 128\) pixels. Each object was placed on a motorized turntable against a black background, and the turntable was rotated through 360 degrees to vary the object pose with respect to a fixed camera; images were taken at pose intervals of 5 degrees. The OlivettiFaces dataset consists of grayscale face images of 40 persons. It has 400 images of \(92\times 112\) pixels. The FashionMNIST dataset consists of grayscale images of ten fashion items. It has 60,000 images of \(28 \times 28\) pixels, from which we randomly selected 10,000 images beforehand.

First, Fig. 3 shows embeddings of the MNIST dataset obtained by t-SNE, UMAP, q-SNE, and q-UMAP with different hyperparameters, revealing how the embedding depends on n_neighbors and \(min\_dist\) (UMAP), perplexity and q (q-SNE), and n_neighbors and q (q-UMAP). In Fig. 3a, the embeddings in the top two rows differ little from each other, as do those in the bottom two rows, so it is difficult to adjust the embedding space with UMAP's hyperparameters. Figures 3b and c show that the embedding changes gradually as q changes. Thus, the characteristics of the q-Gaussian distribution allow us to control the embedding easily and intuitively.

Next, Fig. 4 shows the embedding spaces of MNIST, COIL-20, OlivettiFaces, and FashionMNIST obtained by PCA, Isomap, t-SNE, UMAP, q-SNE, and q-UMAP, and Table 1 shows the classification accuracies of k-NN (k = 5) in the embedding spaces generated by t-SNE, UMAP, q-SNE, and q-UMAP. The accuracies are averaged over five trials with different seeds. In Fig. 4, each method uses the hyperparameters that gave its best accuracy in Table 1. Figure 4 shows that q-SNE embeds samples more tightly together than t-SNE. The q-UMAP embedding is almost the same as the UMAP embedding; however, as Fig. 3 shows, q-UMAP can be controlled more intuitively than UMAP. Table 1 shows that q-SNE scores better than the other methods on all datasets. For q-SNE, which considers all pairs of samples in the high-dimensional space, a lower q, which spreads the samples out, gives better results. For q-UMAP, which considers only some pairs of samples in the high-dimensional space, a higher q, which packs the samples together, gives better results.

Table 2 shows the computational times of q-UMAP and q-SNE for each dataset, also averaged over five trials with different seeds; q-UMAP is faster. The differences among datasets are due to the number of samples and the image size of each dataset. The computational times of UMAP and q-UMAP, and of t-SNE and q-SNE, differ only slightly, which is consistent with the equations: changing the low-dimensional distribution does not change the computational cost.
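The k-NN evaluation protocol (k = 5, averaged over five seeds) can be sketched with scikit-learn as follows. The paper does not state how training and test samples are split, so shuffled stratified 5-fold cross-validation is an assumption here, as is the hypothetical `embed(seed)` callable, which should rerun the embedding method with the given seed.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier


def knn_accuracy(embed, labels, k=5, seeds=(0, 1, 2, 3, 4), n_splits=5):
    """Mean k-NN accuracy in embeddings produced by embed(seed), one per seed.

    The cross-validation split is an assumption; the paper only states that
    accuracies are averaged over five trials with different seeds.
    """
    scores = []
    for seed in seeds:
        Y = embed(seed)                      # (N, d) embedding for this trial
        cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
        clf = KNeighborsClassifier(n_neighbors=k)
        scores.append(cross_val_score(clf, Y, labels, cv=cv).mean())
    return float(np.mean(scores))
```

In the setting of the paper, `embed` would wrap a t-SNE, UMAP, q-SNE, or q-UMAP run; any fast stand-in such as PCA is enough to exercise the function.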

q-SNE gives higher classification accuracy than q-UMAP because it uses all samples in the high-dimensional space, whereas q-UMAP is faster than q-SNE because it uses only some of them. These results indicate that the best embedding can be selected by setting the hyperparameter q of the q-Gaussian distribution. For labeled data, one strategy to determine the optimal q is to use k-NN accuracy as in this experiment. The advantage of the proposed methods is that they can provide a highly accurate embedding space even when it is low-dimensional, and that the space can be controlled in various ways.

4.3 Word dataset experiments

We also performed embedding experiments on a word dataset. Words can be converted into vectors by techniques such as word2vec [3], and analyzing the similarity between words is important. The GloVe dataset [10] is one such word-vector dataset; it contains 400,000 words, each represented by a 300-dimensional vector. For our experiments, we randomly chose 10,000 words from the dataset. We show the embeddings of q-SNE and q-UMAP for several values of q. The perplexity of q-SNE was 30, and n_neighbor of q-UMAP was 5; the other settings of q-SNE and q-UMAP were the same as in the preliminary experiments. To examine the ten words nearest to "man" in each embedding, we list them in Table 3 for q-SNE and Table 4 for q-UMAP. The higher q is, the more similar these neighboring words are to "man".
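Lists like those in Tables 3 and 4 can be produced from any embedding with a small helper such as the one below. It assumes Euclidean distance in the embedding space and a word list aligned with the rows of the embedding; the function name is hypothetical, and loading the GloVe vectors is not shown.

```python
import numpy as np


def nearest_words(Y, words, query, n=10):
    """Return the n words closest to `query` in the embedding Y (Euclidean distance)."""
    idx = words.index(query)
    d = np.linalg.norm(Y - Y[idx], axis=1)
    d[idx] = np.inf                          # exclude the query word itself
    order = np.argsort(d)[:n]
    return [words[i] for i in order]
```

For example, `nearest_words(Y, words, "man")` returns the ten nearest words for an embedding `Y` computed with a given q.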

5 Conclusion

In this paper, we proposed to use the q-Gaussian distribution for dimensionality reduction, resulting in q-Gaussian-distributed stochastic neighbor embedding (q-SNE) and q-Gaussian-distributed uniform manifold approximation and projection (q-UMAP). q-SNE uses the q-Gaussian distribution in the low-dimensional space instead of the t-distribution of t-SNE, and q-UMAP uses it instead of the curve of UMAP. The experiments showed that the proposed methods allow the embedding to be controlled and achieve better accuracies than t-SNE and UMAP on the MNIST, COIL-20, OlivettiFaces, and FashionMNIST datasets. Since q-SNE includes t-SNE as a special case, it can be used in place of t-SNE. Since q-UMAP controls the embedding space more intuitively than UMAP and is computationally fast, it can be used in place of UMAP. Our experimental programs, written in Python, are available on Zenodo (DOI: 10.5281/zenodo.10278750 for q-UMAP and 10.5281/zenodo.10277126 for q-SNE).

Data availability

The experimental data that support the findings of this study are available at: Swissroll: https://scikit-learn.org/stable/modules/generated/sklearn.datasets.make_swiss_roll.html, MNIST: http://yann.lecun.com/exdb/mnist/, FashionMNIST: https://github.com/zalandoresearch/fashion-mnist, OlivettiFaces: https://scikit-learn.org/0.19/datasets/olivetti_faces.html, COIL-20: https://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php, and GloVe: https://nlp.stanford.edu/projects/glove/.

References

Abe M, Miyao J, Kurita T (2020) q-SNE: visualizing data using q-Gaussian distributed stochastic neighbor embedding. arXiv:2012.00999

Chan DM, Rao R, Huang F, Canny JF (2018) t-SNE-CUDA: GPU-accelerated t-SNE and its applications to modern data. In: 2018 30th International symposium on computer architecture and high performance computing (SBAC-PAD). IEEE, pp 330–338

Church KW (2017) Word2Vec. Nat Lang Eng 23(1):155–162

Cox MA, Cox TF (2008) Multidimensional scaling. In: Handbook of data visualization. Springer, pp 315–347

Hinton GE, Roweis ST (2003) Stochastic neighbor embedding. In: Advances in neural information processing systems, pp 857–864

van der Maaten L, Hinton G (2008) Visualizing data using t-SNE. J Mach Learn Res 9:2579–2605

McInnes L, Healy J, Melville J (2018) UMAP: uniform manifold approximation and projection for dimension reduction. arXiv:1802.03426

Mika S, Rätsch G, Weston J, Schölkopf B, Müller KR (1999) Fisher discriminant analysis with kernels. In: Neural networks for signal processing IX: proceedings of the 1999 IEEE signal processing society workshop (cat. no. 98th8468). IEEE, pp 41–48

Pearson K (1901) LIII. On lines and planes of closest fit to systems of points in space. Lond Edinb Dublin Philos Mag J Sci 2(11):559–572

Pennington J, Socher R, Manning CD (2014) GloVe: global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pp 1532–1543

Peterson LE (2009) K-nearest neighbor. Scholarpedia 4(2):1883

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290(5500):2323–2326

Schölkopf B, Smola A, Müller KR (1997) Kernel principal component analysis. In: International conference on artificial neural networks. Springer, pp 583–588

Tanaka M (2019) Geometry of entropy. Series on stochastic models in informatics and data science. Corona Publishing Co. Ltd, New York

Tang J, Liu J, Zhang M, Mei Q (2016) Visualizing large-scale and high-dimensional data. In: Proceedings of the 25th international conference on world wide web, pp 287–297

Tenenbaum JB, De Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290(5500):2319–2323

Thompson B (2005) Canonical correlation analysis. In: Encyclopedia of statistics in behavioral science

van der Maaten L (2013) Barnes-Hut-SNE

van der Maaten L (2013) Barnes-Hut-SNE. arXiv:1301.3342

van der Maaten L (2014) Accelerating t-SNE using tree-based algorithms. J Mach Learn Res 15(1):3221–3245

Yang Z, Chen Y, Sedov D, Kaski S, Corander J (2023) Stochastic cluster embedding. Stat Comput 33(12)

Funding

Open Access funding provided by Hiroshima University.

Ethics declarations

Conflict of interest

Dr. Kurita is a professor at Hiroshima University, Japan. All other authors have no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abe, M., Nomura, Y. & Kurita, T. Nonlinear dimensionality reduction with q-Gaussian distribution. Pattern Anal Applic 27, 26 (2024). https://doi.org/10.1007/s10044-024-01210-1

DOI: https://doi.org/10.1007/s10044-024-01210-1