Abstract

This paper proposes a framework for constructing high-order linearly implicit exponential integrators that conserve a quadratic invariant. This is then applied to the scalar auxiliary variable (SAV) approach. Quadratic invariants are significant objects that are present in various physical equations and also in computationally efficient conservative schemes for general invariants. For instance, the SAV approach converts the invariant into a quadratic form by introducing scalar auxiliary variables, which have been intensively studied in recent years. In this vein, Sato et al. (Appl. Numer. Math. 187, 71-88 2023) proposed high-order linearly implicit schemes that conserve a quadratic invariant. In this study, it is shown that their method can be effectively merged with the Lawson transformation, a technique commonly utilized in the construction of exponential integrators. It is also demonstrated that combining the constructed exponential integrators and the SAV approach yields schemes that are computationally less expensive. Specifically, the main part of the computational cost is the product of several matrix exponentials and vectors, which are parallelizable. Moreover, we conduct some mathematical analyses on the proposed schemes.

Similar content being viewed by others

1 Introduction

In this paper, we consider ordinary differential equations (ODEs)

on a finite-dimensional real Hilbert space \( (\mathcal {X}, \langle {\cdot },{\cdot }\rangle _{\mathcal {X}})\), where \( y :[0,T) \rightarrow \mathcal {X}\) is a dependent variable, \( S :\mathcal {X}\rightarrow \mathcal {L} (\mathcal {X}) \) is a “skew-symmetric matrix function” (\( \mathcal {L}(\mathcal {X}) \) denotes the space of linear operators on \(\mathcal {X}\)), i.e., \( {\langle }{x},{S(z)y}{\rangle _{\mathcal {X}}} = - \langle {S(z) x },{y}{\rangle _{\mathcal {X}}} \) holds for all \( x,y,z\in \mathcal {X}\), \( V :\mathcal {X}\rightarrow \mathbb {R}\) is a differentiable function, and \( \nabla V: \mathcal {X}\rightarrow \mathcal {X}\) denotes the gradient of V with respect to the inner product of \(\mathcal {X}\). Although the methods in this paper can be applied even when \( \mathcal {X}\) is infinite-dimensional, we limit ourselves to finite-dimensional cases for the sake of mathematical analysis (see Remark 4).

The class of ODEs in the form (1) includes many examples: Hamiltonian systems, Poisson systems, and spatial discretization of variational partial differential equations (PDEs) (see, e.g., [1]). These ODEs satisfy the conservation law with respect to V:

where the last equality holds due to the skew-symmetry of S(y(t)).

Since this conservation law is an important property of the differential equation (1), numerical methods that preserve it have been studied in the literature, for example, the discrete gradient method [2,3,4] for the gradient ODEs and the discrete variational derivative method [5, 6] (see also [7]) for variational PDEs. In addition, Cohen and Hairer [8] proposed a high-order extension of the discrete gradient method.

Since these conservative numerical schemes are fully implicit, several techniques have been devised to reduce computational cost. For example, Besse [9] and Zhang, Pérez-García, and Vázquez [10] proposed linearly implicit conservative schemes for the nonlinear Schrödinger equation. For polynomial invariants, Matsuo and Furihata [11] proposed multistep linearly implicit DVDM (see also Dahlby and Owren [12]). Recently, Yang and Han [13] proposed the invariant energy quadratization (IEQ) approach, and Shen et al. [14] proposed the scalar auxiliary variable (SAV) approach (see also [15] and the references therein). The SAV approach employs scalar auxiliary variables to convert the invariant into a quadratic form, which is then preserved by a linearly implicit scheme (see Sect. 2.4 for details).

Although the above computationally inexpensive methods differ, they have in common that they attribute the invariant to a quadratic function in some way. Given this, Sato et al. [16] recently proposed a framework for constructing high-order and linearly implicit schemes conserving a quadratic invariant.

Exponential integrators (cf. [17]) are efficient numerical methods for solving semilinear differential equations, and combining it with geometric numerical integration has also been studied. Celledoni et al. [18] deal with \(L^2\) norm conservation for the Schrödinger equation and derive the condition to preserve it in exponential Runge–Kutta methods. Mei et al. [19] deal with (1) with \( V(y) = \frac{1}{2} \langle {y},{y}{\rangle _{\mathcal {X}}} \) and construct conservative exponential integrators. Some researchers deal with the equation in the form \( \dot{y} = J (Ly+\nabla E(y)) \), where J is skew-symmetric, L is symmetric, and E is a function: Li and Wu [20] propose second-order exponential discrete gradient schemes; Mei et al. [21] discuss how to design high-order conservative schemes based on Li and Wu [20], modified differential equation, and order condition in terms of B-series; and Li [22] proposes a multistep linearly implicit conservative exponential scheme based on polarization.

Combinations of exponential integrators and the SAV approach have also been investigated [23,24,25,26]. In particular, Jiang et al. [27] propose high-order linearly implicit structure-preserving exponential integrators for the nonlinear Schrödinger equation based on the SAV approach and the Lawson transformation [28]. They also mention that the same method can be applied to some general differential equations, and numerically confirm the superiority of their method. However, no theoretical guarantee of high accuracy is given. In this paper, we reveal that linearly implicit high-order conservative schemes proposed by Sato et al. [16] can be combined with exponential integrators (Sect. 3). The resulting scheme, although linearly implicit, is computationally not very cheap, since it includes matrix exponentials in the coefficient matrix. However, when combined with the SAV approach, its structure can be exploited to provide a computationally efficient implementation (Sect. 4). Specifically, the main part of the computational cost is a few products of matrix exponential functions and vectors with the size of the dimension of the given differential equation. Furthermore, these products can be computed in parallel. Theoretical guarantees such as accuracy are also provided, albeit being limited to finite dimensions. (The relationship with [27] is discussed in Remark 6.)

Here, we note a limitation of the proposed method: it cannot be applied to dissipative systems. In many cases, if a conservative numerical method can be constructed, a dissipative numerical method, one that replicates the dissipation law, can be constructed correspondingly: [16] can also be applied to dissipative cases. However, this is not the case for the proposed method in the present paper. The proposed method is essentially driven by the fact that under a mild assumption, the quadratic invariants are invariant by the Lawson transformation (see Lemma 4), and the extension to dissipative cases is nontrivial (see Remark 2).

The remainder of the paper is organized as follows. In Sect. 2, we briefly review the canonical Runge–Kutta methods, linearly implicit high-order conservative schemes proposed by Sato et al. [16], the Lawson transformation, and the SAV approach. The contents in Sects. 3 and 4 are already described above. The proposed schemes are numerically examined in Sect. 5.

2 Preliminaries

2.1 Runge–Kutta methods and quadratic invariants

For the autonomous system

general Runge–Kutta methods that compute an approximation \( y_1 \approx y (h) \) from \( y_0 = y(0)\) can be written as

where \( [s]:= \{ 1, 2, \dots , s \} \). Throughout this paper, the fixed step size is considered, but as is common in the context of conservative methods, variable step sizes can also be employed without difficulty.

A subclass of Runge–Kutta methods preserves all quadratic invariants.

Proposition 1

(Cooper [29]) Runge–Kutta methods satisfying

automatically preserve all quadratic invariants.

The Runge–Kutta methods satisfying (2) are said to be canonical (see [30] for details on canonical Runge–Kutta methods). Note that a canonical Runge–Kutta method must be implicit, yet diagonally implicit Runge–Kutta methods can be canonical.

For example, the second-order Gauss, fourth-order Gauss, and third-order diagonally implicit canonical Runge–Kutta methods are as follows:

where \( \alpha = \frac{1}{3} \left( { 2 + \frac{1}{2^{1/3}} + 2^{1/3} }\right) \).

2.2 High-order linearly implicit schemes that conserve quadratic invariants

In this section, we briefly review the high-order linearly implicit schemes for the gradient system (1) with a quadratic invariant V proposed by [16].

Definition 1

- Step 0:

-

Prepare \( Y^{(0)}_i \approx y (c_i h) \) and set \(k = 1\).

- Step 1:

-

Solve the linear equation system

$$\begin{aligned} Y^{(k)}_i = y_0 + h \sum _{ j \in [s]} a_{ij} S \left( {Y^{(k-1)}_j }\right) \nabla V \left( { Y^{(k)}_j }\right) \qquad \big ( i \in [s] \big ) \end{aligned}$$(3)to obtain \( Y_i^{(k)} \)’s. If some criteria hold, go to Step 2. Otherwise, set \(k = k + 1 \) and repeat Step 1.

- Step 2:

-

Output

$$\begin{aligned} y_1^{(k)} = y_0 + h \sum _{j \in [s] } b_j S \left( {Y^{(k-1)}_j }\right) \nabla V \left( { Y^{(k)}_j }\right) . \end{aligned}$$

Theorem 2

Suppose that A and b satisfy (2), and V is quadratic. Then, the solution \(y_1^{(k)}\) of Definition 1 satisfies \( V \left( { y_1^{(k)} }\right) = V(y_0) \) for any \( h > 0\).

Theorem 3

Assume the following conditions:

- (A1):

-

\( Y^{(0)}_i \) satisfies \( \big \Vert { Y^{(0)}_i - y( c_i h ) }\big \Vert _{\mathcal {X}} \le C h^{q} \) for each \( i \in \big [s\big ] \) .

- (A2):

-

\( S :\mathcal {X}\rightarrow \mathcal {L} (\mathcal {X}) \) is Lipschitz continuous.

- (A3):

-

The base Runge–Kutta method is of order p .

Then, the numerical solution \( y^{(k)}_1 \) of the scheme defined by Definition 1 satisfies

for sufficiently small \(h > 0\), i.e., the scheme with k iteration is of order \( \min \{ p, q + k-1\} \). Here, \( C' \) is a constant depending only on the exact solution y, k, S, V, A, b, and the constant C in (A1).

Remark 1

In view of Theorem 3, \( k = p - q + 1\) is a natural choice as the criterion in Step 1 of Definition 1. In addition, there are several other possibilities. As shown in [16, Theorem 4.1], \( Y^{(k)}_i\) linearly coverges to the corresponding inner stage of the base Runge–Kutta method. Based on this fact, one can choose k so that the update \( \big \Vert { Y^{(k)}_i - Y^{(k-1)}_i }\big \Vert _{\mathcal {X}} \) is sufficiently small. Another possibility is to choose k so that \( \big \Vert { y^{(k)}_1 - y^{(k-1)}_1 }\big \Vert _{\mathcal {X}} \), an approximation of the local error, is sufficiently small (note that, when \( k \le p - q + 1 \), \(y^{(k)}_1 \) and \( y^{(k-1)}_1 \) are approximations of y(h) with different order of accuracy, which are known to be useful to approximate the local error). However, in the numerical experiments in Sect. 5, we simply choose some fixed k to verify the theoretical order of accuracy.

2.3 Lawson transformation

We briefly review the Lawson transformation [28] which is useful to construct exponential integrators. For the ODE in the form

we consider the transformation \( w(t) = \exp (-t M) y (t) \) to obtain

where \( \exp \) denotes the matrix exponential function. It is known that, when \( M\) has large eigenvalues, the transformed system (5) is easier to solve numerically than the original ODE. Therefore, a good approach to solve the system (4) is to apply a numerical method to (5) and use the inverse transformation to return to the original variable. If a Runge–Kutta method is adopted as the numerical method, the resulting numerical method is written as

Hereafter, the scheme is designated the Lawson method.

2.4 Scalar auxiliary variable approach

In this section, we review the scalar auxiliary variable (SAV) approach for the Hamiltonian system

on a finite-dimensional real Hilbert space \((\mathcal {V},{\langle }{\cdot },{\cdot }{\rangle })\), where \( u :[0,T) \rightarrow \mathcal {V}\) is a dependent variable, \( J \in \mathcal {L} (\mathcal {V}) \) is a (constant) skew-symmetric linear operator, and \(H :\mathcal {V}\rightarrow \mathbb {R}\) is written in the form

where \( L \in \mathcal {L} (\mathcal {V}) \) is symmetric.

Here, for brevity, we assume that the function \( E :\mathcal {V}\rightarrow \mathbb {R}\) is bounded from below, i.e., \( \alpha := - \inf E (u) + \epsilon \) is finite (see [15] for unbounded cases). Using this assumption, we introduce a scalar auxiliary variable \( r:= \sqrt{ E (u) + \alpha } \). Then, (7) is rewritten as

where \( \phi (u):= \nabla E(u) / \left( { 2 \sqrt{ E(u) + \alpha }}\right) \).

The system (8) has a quadratic modified invariant \( V(u,r) = \frac{1}{2} \langle {Lu},{u}{\rangle } + r^2 - \alpha \). Since quadratic invariants are much easier to preserve in numerical schemes than general invariants, this property enables us to construct computationally efficient conservative schemes (see, e.g., [14]).

A comprehensive way to construct conservative schemes based on the SAV approach is given in [15]; the reformulated system (8) can be rewritten for it to be regarded as a special case of (1). To this end, the inner product space \(\mathcal {X}\) is defined by \( \mathcal {X}= \mathcal {V}\times \mathbb {R}\), and the associated inner product is defined as \( \langle { (x_1,r_1), }{(x_2,r_2)}{\rangle _{\mathcal {X}}} = \langle {x_1,}{x_2}{\rangle } + r_1 r_2 \). Then, the system (8) can be written as

where the superscript \( *\) denotes the adjoint.

Since the scheme in Sect. 2.2 can be applied to this system, such reformulation enables the construction of high-order linearly implicit schemes that conserve the modified invariant V. However, high-order schemes require solving large linear equation systems (see Appendix 4 for details).

3 Exponential Runge–Kutta methods conserving quadratic invariants

Let us consider the system

where \( S :\mathcal {X}\rightarrow \mathcal {L} (\mathcal {X}) \) is a skew-symmetric matrix function, and V is a quadratic function (\( V (y) = \frac{1}{2} \langle {y},{Qy}{\rangle _{\mathcal {X}}} \)). We further assume that V is also an invariant of the linear part \( \dot{y} = My \). In Sect. 3.1, we show that the Lawson method based on the canonical Runge–Kutta method conserves the quadratic invariant V. Then, Sect. 3.2 shows that the combination of the Lawson transformation and the scheme defined by Definition 1 yields a linearly implicit exponential integrators that conserves the quadratic invariant V. Although the linearly implicit exponential integrators are not always computationally efficient, but as we will see in Sect. 4, it works very well with the SAV approach.

3.1 Lawson transformation and quadratic invariants

The following lemma, which is a slight extension of [20, Lemma 2.2], is crucial in constructing conservative schemes (see Remark 2).

Lemma 4

Let \( V :\mathcal {X}\rightarrow \mathbb {R}\) be a quadratic function, i.e., \( V (y) = \frac{1}{2} \langle {y},{Qy}{\rangle _{\mathcal {X}}} \), where \( Q \in \mathcal {L} (\mathcal {X}) \) is symmetric. Then, V is an invariant of the linear ODE \( \dot{y} = My \) if and only if \( \left( { \exp \left( { t M}\right) }\right) ^{*} Q \exp \left( { t M}\right) = Q \) holds for any \( t \in \mathbb {R}\).

Proof

Since \( y (t) = \exp (t M) y (0) \) holds for a solution of the linear ODE \( \dot{y} = My \), V(y(t)) can be written as

Therefore, V is an invarint, i.e., \( V(y(t)) = V(y(0)) \) holds for any \( t \in \mathbb {R}\) and initial value y(0), if and only if \( \left( { \exp \left( { t M}\right) }\right) ^{*} Q \exp \left( { t M}\right) = Q \) holds for any \( t \in \mathbb {R}\). \(\square \)

Assumption that V is an invariant of the linear ODE \( \dot{y} = My \) may seem restrictive, but there are many examples satisfying this assumption. For example, since the difference operators often commute with each other, ODEs obtained by a finite difference discretization of PDEs often satisfy this assumption. Moreover, as shown in Sect. 4, ODEs obtained by the SAV approach satisfy this assumption.

Remark 2

In [20], it is assumed that “\( M= S Q \) holds with a skew-symmetric matrix S” instead of “V is an invariant.” The latter condition is weaker in the sense that, even when V is an invariant of the linear ODE \( \dot{y} = My \), we cannot conclude the existence of a skew-symmetric matrix S that satisfies \( M= S Q \); the pair

is a counterexample.

The lemma in [20] also deals with dissipative cases. Lemma 4 can also be extended to the dissipative cases. Under the setting of Lemma 4, if V is a weak Lyapunov function, then \( \left( { \exp \left( { t M}\right) }\right) ^{*} Q \exp \left( { t M}\right) \le Q \) holds for any \( t > 0 \). However, extending the following theorem to dissipative systems is nontrivial and will be the subject of future research.

By using the lemma above, we show a sufficient condition for conserving quadratic invariants.

Theorem 5

Suppose that V is a quadratic invariant of the semilinear ODE (4). Assume that V is also an invariant of the linear part \( \dot{y} = My \). In addition, we assume (A, b) satisfies (2). Then, the solution \(y_1\) of the Lawson method (6) satisfies \( V(y_1) = V (y_0) \).

Proof

Using the Lawson transformation \( w(t) = \exp \left( { - t M}\right) y (t) \) and Lemma 4, we see

Since this implies the transformed ODE (5) also has a quadratic invariant in the form \( \frac{1}{2} \langle {w},{Qw}{\rangle _{\mathcal {X}}} \), a canonical RK method applied to the system (5) preserves the invariant (Proposition 1). \(\square \)

3.2 Linearly implicit exponential integrators conserving quadratic invariants

As shown in the previous section, a class of Lawson methods automatically preserves quadratic invariants under the additional assumption that the linear part also preserves the invariants. However, since all canonical RK methods are implicit, the resulting scheme must be fully implicit. Therefore, in this section, we construct linearly implicit schemes conserving quadratic invariants. To this end, we apply the schemes introduced in Sect. 2.2 instead of the Lawson methods.

For (10), the Lawson transformation \( w(t) = \exp \left( { - t M}\right) y (t) \) gives

Using Lemma 4 and \( \left( { \exp (t M) }\right) ^{-1} = \exp (-t M) \), we can further rewrite the equation as

Since the map \( w \mapsto \exp (-t M) S \left( { \exp (t M) w }\right) \left( { \exp (-t M) }\right) ^{*} \) is a skew-symmetric matrix function, we can apply the scheme in Sect. 2.2. The inverse transformation reads the following scheme.

Definition 2

- Step 0:

-

Prepare \( Y^{(0)}_i \approx y (c_i h) \) and set \(k = 1\).

- Step 1:

-

Solve the linear equation system

$$\begin{aligned} Y^{(k)}_i = \exp (c_i h M) y_0 + h \sum _{ j \in [s]} a_{ij} \exp \left( { (c_i - c_j) h M}\right) S \left( { Y^{(k-1)}_j }\right) \nabla V \left( { Y^{(k)}_j}\right) \quad ( i \in \big [s\big ] ) \end{aligned}$$(11)to obtain \( Y_i^{(k)} \)’s. If some criteria hold, go to Step 2. Otherwise, set \(k = k + 1 \) and repeat Step 1.

- Step 2:

-

Output

$$\begin{aligned} y_1^{(k)} = \exp ( h M) y_0 + h \sum _{j \in [s] } b_j \exp \left( { (1 - c_j) h M}\right) S \left( {Y^{(k-1)}_j }\right) \nabla V \left( { Y^{(k)}_j }\right) . \end{aligned}$$

Similar to Theorem 5, the discrete conservation law for the scheme above can be proved.

Theorem 6

Suppose that V is a quadratic invariant of the semilinear ODE (4). Assume that V is also an invariant of the linear part \( \dot{y} = My \). In addition, we assume (A, b) satisfies (2). Then, the solution \(y^{(k)}_1\) of the scheme defined by Definition 2 satisfies \( V \left( { y^{(k)}_1}\right) = V (y_0) \).

Moreover, Theorem 3 implies the following theorem showing the accuracy of the scheme defined by Definition 2. Although the proof of Theorem 7 is similar to that for Theorem 3, we present the complete proof here for the reader’s convenience.

Theorem 7

Assume the following conditions:

-

(A1)

\( Y^{(0)}_i \) satisfies \( \big \Vert { Y^{(0)}_i - y( c_i h ) }{\big \Vert _{\mathcal {X}}} \le C h^{q} \) for each \( i \in \big [s\big ] \) .

-

(A2)

\( S :\mathcal {X}\rightarrow \mathcal {L} (\mathcal {X}) \) is \(L_S\)-Lipschitz continuous.

-

(A3)

The base Runge–Kutta method is of order p .

Then, the numerical solution \( y^{(k)}_1 \) of the scheme defined by Definition 2 satisfies

for sufficiently small \(h > 0\), i.e., the scheme with k iteration is of order \( \min \{ p, q + k-1\} \). Here, \( C' \) is a constant depending only on the exact solution y, k, \(M\), S, V, A, b, and the constant C in (A1).

Proof

Due to the assumption (A1), there exists a function \( y^{(0)} :[0,h] \rightarrow \mathcal {X}\) satisfying \( y^{(0)} (c_i h) = Y^{(0)}_i \) and \( \sup _{t \in [0,h] } \big \Vert { y^{(0) } (t) - y(t) }{\big \Vert _{\mathcal {X}}} \le C h^q \). Then, the scheme defined by Definition 2 can be regarded as the usual Runge–Kutta method corresponding to A, b for the system

where \( w^{(0)} (t) = \exp (-t M) y^{(0)} (t) \). Otherwise expressed, the scheme can be regarded as the usual Lawson method for the system

where \( y^{(i)} (t) = \exp (t M) w^{(i)} (t) \) for \(i = 1,\dots , k\). Therefore, it is sufficient to prove \( \big \Vert { y^{(k)} (h) - y (h) }{\big \Vert _{\mathcal {X}}} \le C^{(k)} h^{q+k} \).

To this end, we prove

by induction, where \( C^{(j)}:= \left( { 2 L_S C_y }\right) ^{j} C \ ( C_y:= \sup _{ t \in [0,h] } \big \Vert { \nabla V (y(t)) }{\big \Vert _{\mathcal {X}}} )\). We assume that \( \sup _{ t \in [0,h]} \big \Vert { y^{(j-1)} (t) - y (t) }{\big \Vert _{\mathcal {X}}} \le C^{(j-1)} h^{q+j-1} \) holds so that

where \( \big \Vert {S(y_0)}{\big \Vert _{\mathcal {X}}} \) denotes the operator norm of \( S(y_0) \) with respect to the inner product of \(\mathcal {X}\). Then, we see

Since we assume that h is sufficiently small, in particular, \( h \le ( 2 \big \Vert {M}{\big \Vert _{\mathcal {X}}} + 2 M_S^{(j-1)} \big \Vert {Q}{\big \Vert _{\mathcal {X}}} )^{-1}\) holds, we see

Thus, by induction, we obtain \(\sup _{ t \in [0,h]} \big \Vert { y^{(j)} (t) - y (t) }{\big \Vert _{\mathcal {X}}} \le C^{(j)} h^{q+j} \ ( j = 1,\dots , k )\). Since it implies \( {\big \Vert }{ y^{(k)} (h) - y(h) }{\big \Vert _{\mathcal {X}}} \le C^{(k)} h^{q+k} \), the theorem holds. \(\square \)

Remark 3

In the above and subsequent theorems, we assume the global Lipschitz continuity of S for the sake of brevity. However, this can be relaxed to the local Lipschitz continuity.

Remark 4

As can be seen from the proof above, even if \(\mathcal {X}\) is infinite-dimensional, the same theorem holds if \( {\big \Vert }{S(y_0)}{\big \Vert _{\mathcal {X}}} \), \( {\big \Vert }{ M}{\big \Vert _{\mathcal {X}}} \), and \( {\big \Vert }{ Q }{\big \Vert _{\mathcal {X}}} \) are bounded.

4 Application to the SAV approach

The scheme defined in Definition 2 generally requires solving linear equations that involve matrix exponential functions, which is computationally somewhat more expensive than the usual linearly implicit schemes from [16]. However, combining it to the SAV system (9) produces a scheme that is computationally extremely inexpensive. Specifically, the main part of each iteration is the product of a matrix exponential function and a vector O(s) times, which can be computed in parallel. The computational cost of the scheme can be the same level as that of explicit exponential integrators (see Appendix 3). When an efficient implementation of the matrix exponential function is available, the proposed scheme in this section overwhelms the linearly implicit scheme defined in Definition 1 in terms of computational efficiency (see Fig. 2).

4.1 Simple case (9)

The SAV system (9) can be rewritten as

Recall that \( J \in \mathcal {L} (\mathcal {V}) \), \( L \in \mathcal {L} (\mathcal {V}) \), \( \phi :\mathcal {V}\rightarrow \mathcal {V}\) is a function defined as \( \phi (u) = \nabla E (u) / \left( { 2 \sqrt{ E (u) + \alpha } }\right) \), and this system has the quadratic invariant \( V (u,r) = \frac{1}{2} {\big \langle }{ Lu},{u }{\big \rangle } + r^2 - \alpha \). Since the skew-symmetry of J implies \( \big \langle { \phi (u) },{ J \phi (u) }\rangle = 0 \), it can be rewritten as

which is a special case of (10) with

Note that the linear part \( \dot{y} = M y \) preserves V:

Therefore, we can apply the scheme defined by Definition 2 for (12). In this case, the linear equation system (11) reads

where \( U^{(k)}_i \in \mathcal {V}\) and \( R^{(k)}_i \in \mathbb {R}\) are the inner stages with respect to u and r, respectively. This linear equation, expressed element by element, is as follows:

By substituting the first equation into the second equation, we obtain a linear equation

only on \( \{ R^{(k)}_i \}_{i=1}^s \). To reduce the number of computations of the matrix exponential function, we use Lemma 4, i.e., the relation \( L \exp \left( { t JL }\right) = \left( { \exp \left( { - t JL }\right) }\right) ^{*} L \), and obtain

where \( \psi ^{(k-1)}_j \!:=\! \exp \left( { - c_j h JL }\right) J \phi \left( { U^{(k-1)}_j }\right) \). By introducing \( R^{(k)} \!=\! \begin{bmatrix} R^{(k)}_1&\dots&R^{(k)}_s \end{bmatrix}^{\textsf {T}} \), the linear equation can be simplified to

where \( I_s \in \mathbb {R}^{ s \times s }\) denotes the identity matrix, \(A = (a_{ij}) \in \mathbb {R}^{s \times s } \), \( \circ \) denotes the Hadamard product, \( \Psi \in \mathbb {R}^{s \times s } \) is defined by \( \Psi _{i,j} = \Big \langle {\psi _i^{(k-1)} },{ L \psi _j^{(k-1)} }\Big \rangle \), \( \textbf{1}_s \in \mathbb {R}^s \) denotes all one vector of size s, and \( \nu \in \mathbb {R}^{s} \) is defined by \( \nu _i = \Big \langle {\psi _i^{(k-1)}},{L u_0}\Big \rangle \).

Another note on the implementation should address the fact that \( r^{(k)}_1 \) can be computed without \( \left\{ U^{(k)}_i \right\} _{i=1}^s \):

where \( \omega _{ij} \) denotes the (i, j) element of the inverse matrix of A. Consequently, we propose the following scheme.

Definition 3

-

Step 0

Prepare \( U^{(0)}_i \approx u (c_i h) \) and set \(k = 1\).

-

Step 1

Compute \( \{ \psi ^{(k-1)}_i \}_{i=1}^s \) by

$$\begin{aligned} \psi ^{(k-1)}_i = \exp \left( { - c_i h JL }\right) J \phi \left( { U^{(k-1)}_i }\right) . \end{aligned}$$(14)Solve the linear equation (13) to obtain \( R^{(k)} \). If some criteria hold, go to Step 2. Otherwise, compute \( \{ U_i^{(k)} \}_{i=1}^s \) by

$$\begin{aligned} U_i^{(k)} = \exp \left( { c_i h JL }\right) \left( { u_0 + 2 h \sum _{j \in [s]} a_{ij} R^{(k)}_j \psi ^{(k-1)}_j }\right) , \end{aligned}$$(15)set \(k = k + 1 \) and repeat Step 1.

-

Step 2

Output

$$\begin{aligned} u_1^{(k)}&= \exp \left( { h JL }\right) \left( { u_0 + 2 h \sum _{j \in [s]} b_j R^{(k)}_j \psi ^{(k-1)}_j }\right) , \\ r_1^{(k)}&= r_0 + \sum _{i,j \in [s]} b_i \omega _{ij} \left( { R^{(k)}_j - r_0 }\right) . \end{aligned}$$

Note that, in many cases, the computation of (14) and (15) is the most computationally expensive part. However, efficient algorithms for computing the matrix exponential and vector products are known. Moreover, since the computation of \( \psi ^{(k-1)}_1, \psi ^{(k-1)}_2, \dots , \psi ^{(k-1)}_s \) by using (14) can be done independently, they can be trivially parallelized. Similarly, the computation of \( U^{(k)}_i \)’s by (15) can be parallelized. See Appendix 3 for more details on the computational cost.

The scheme defined by Definition 3 has a unique solution if and only if the linear equation (13) has a unique solution, which is satisfied for small step size h. The step size restriction depends largely on the nature of J and L (see Remark 5).

Theorem 8

Suppose that the step size h satisfies

where \( \lambda _{\max } (LJ-JL)\) denotes the maximum eigenvalue of \(LJ-JL\), \( c_{\max } = \max _{i} c_i \), and \( \Vert {A}\Vert _2 \) denotes the spectral norm of \(A \in \mathbb {R}^{s\times s}\). Then, the linear (13) has a unique solution.

Proof

The linear (13) has a unique solution if and only if the matrix \( I_s + 2 h^2 A \left( { A \circ \Psi }\right) \) is nonsingular. Then, \( \big \Vert { 2 h^2 A \left( { A \circ \Psi }\right) }\big \Vert _2 < 1 \) is a sufficient condition for the solution’s unique existence.

Since we have \( \big \Vert { A \circ \Psi }\big \Vert _2 \le \big \Vert {A}\big \Vert _2 \big \Vert {\Psi }\big \Vert _2\) (cf. [31]), we evaluate the norm \( \big \Vert {\Psi }\big \Vert _2 \):

Note that \( \Vert { \exp \left( { t (-JL) }\right) }\Vert \le \exp \left( { t \omega (-JL) }\right) \) holds for any \(t \ge 0\) (cf. [32]), where \(\omega (-JL) \) denotes the numerical abscissa of the matrix \(-JL\). Since \( \omega (-JL) = \lambda _{\max } \left( { \frac{1}{2} \left( { -JL + (-JL)^{*} }\right) }\right) = \lambda _{\max } \left( { \frac{1}{2} \left( { L J -JL }\right) }\right) \) holds, we have

which implies the theorem. \(\square \)

Remark 5

The step size restriction (16) may seem severe. Of course, this is true when \( \lambda _{\max } \left( {LJ-JL}\right) \) is positive, and the above argument only shows the unique existence for very small step sizes. However, when L and J commute, we have \( \lambda _{\max } \left( {LJ-JL}\right) = 0 \), and the step size restriction is rather mild. The commutativity of L and J is sometimes assumed in the literature (cf. [33], see also Sect. 5).

Theorem 6 implies the following theorem showing the discrete conservation law with respect to the modified invariant V.

Theorem 9

(Conservation law) The solution \( \left( { u^{(k)}_1, r^{(k)}_1}\right) \) of the scheme defined by Definition 3 satisfies \( V \left( { u^{(k)}_1, r^{(k)}_1 }\right) = V (u_0,r_0) \).

Theorem 7 implies the following theorem showing the accuracy of the scheme defined by Definition 3.

Theorem 10

(Accuracy) Assume the following conditions:

-

(A1)

\( U^{(0)}_i \) satisfies \( \big \Vert { U^{(0)}_i - u( c_i h ) }\big \Vert \le C h^{q} \) for each \( i \in [s] \) .

-

(A2)

\( \phi :\mathcal {V}\rightarrow \mathcal {V}\) is Lipschitz continuous.

-

(A3)

The base Runge–Kutta method is of order p .

Then, the numerical solution \( \left( { u^{(k)}_1, r^{(k)}_1}\right) \) of the scheme defined by Definition 3 satisfies

for sufficiently small \(h > 0\), i.e., the scheme with k iteration is of order \( \min \{ p, q + k-1\} \). Here, \( C' \) is a constant depending only on the exact solution u, k, J, L, \(\phi \), A, b, and the constant C in (A1).

Proof

It is sufficient to prove

In general, for any \( v \in \mathcal {V}\), we see

which proves (17). \(\square \)

Remark 6

The scheme defined by Definition 3 includes the schemes proposed in [27] as special cases. In [27], they focus on the cases \( U_i^{(0)} \) computed by the extrapolation or \( U_i^{(0)} = u_0 \). In addition, the intended implementation is also somewhat different: for example, their algorithm requires \( k s (s-1) \) computations of the product of the matrix exponential functions and vectors, while it is reduced to \((2k-1)s\) in Definition 3 by introducing \(\psi ^{(k-1)}\).

Moreover, the consequences of Theorem 10 are consistent with the accuracy confirmed numerically in [27]. For example, from numerical experiments in [27, Remark 3.6], the authors predict that the scheme has fourth-order accuracy when the base RK method is a fourth-order Gauss method, \( U^{(0)}_i = u_0 \) (i.e., \(q=1\)), and \(k=4\).

4.2 Multiple scalar auxiliary variables

As shown in [15], when E is unbounded, two scalar auxiliary variables are needed. The scheme described in the previous section can be extended to this case.

Let us consider the Hamiltonian system (7) with

where \( E_{\textrm{X}} :\mathcal {V}\rightarrow \mathbb {R}\) are bounded from below, i.e., \( \alpha _{\textrm{X}}:= - \inf E_{\textrm{X}} (u) + \epsilon _{\textrm{X}} \) is finite (\( \textrm{X} \in \{ \textrm{L}, \textrm{U} \} \)). Then, by introducing \( r_{\textrm{X}}:= \sqrt{ E_{\textrm{X}} (u) + \alpha _{\textrm{X}} } \), \( \phi _{\textrm{X}} (u):= \nabla E_{\textrm{X}} (u) / \left( { 2 \sqrt{ E_{\textrm{X}} (u) + \alpha _{\textrm{X}} }}\right) \), and \( V (u,r_{\textrm{L}},r_{\textrm{U}}) = \frac{1}{2} \langle {Lu},{u}\rangle + r_{\textrm{L}}^2 - r_{\textrm{U}}^2 \), we obtain

which is a special case of (10) (\( \phi _{\textrm{X}} (u) \) is abbreviated to \( \phi _{\textrm{X}} \)).

Therefore, we can apply the scheme defined by Definition 2. In addition, by introducing \(\psi ^{\textrm{X}}_i:= \exp \left( { - c_i h JL }\right) J \phi _{\textrm{X}} \left( { U^{(k-1)}_i }\right) \), the techniques to reduce the computational cost in Sect. 4.1 can also be used in this case (see Appendix 1 for details). Then, the linear equation system with respect to \( R^{(k)}_{\textrm{L}} = \begin{bmatrix} R^{(k)}_{\textrm{L},1}&\dots&R^{(k)}_{\textrm{L},s} \end{bmatrix}^{\textsf {T}} \) and \( R^{(k)}_{\textrm{U}} = \begin{bmatrix} R^{(k)}_{\textrm{U},1}&\dots&R^{(k)}_{\textrm{U},s} \end{bmatrix}^{\textsf {T}} \) can be written as

where \( \Psi ^{\textrm{LL}}, \Psi ^{\textrm{LU}}, \Psi ^{\textrm{UL}}, \Psi ^{\textrm{UU}} \in \mathbb {R}^{s \times s } \) are defined by \( \Psi ^{\textrm{XY}}_{i,j} = \big \langle {\psi ^{\textrm{X}}_i},{ L \psi ^{\textrm{Y}}_j }\big \rangle \) for \({\textrm{X}}, {\textrm{Y}} \in \{ \textrm{L}, \textrm{U} \} \) (note that \( \Psi ^{\textrm{LU}} = \left( { \Psi ^{\textrm{LU}}}\right) ^{\textsf {T}} \) holds), \( \Phi \in \mathbb {R}^{s \times s } \) is a diagonal matrix defined by \( \Phi _{ii} = \Big \langle { \phi _{\textrm{L}} \left( { U_i^{(k-1)} }\right) },{ J \phi _{\textrm{U}} \left( { U_i^{(k-1)} }\right) }\Big \rangle \), and \( \nu ^{\textrm{L}}, \nu ^{\textrm{U}} \in \mathbb {R}^{s} \) are defined by \( \nu ^{{\textrm{X}}}_i = \Big \langle {\psi _i^{\textrm{X}}},{L u_0}\Big \rangle \). Consequently, we obtain the following scheme:

Definition 4

-

Step 0

Prepare \( U^{(0)}_i \approx u (c_i h) \) and set \(k = 1\).

-

Step 1

Compute \( \{ \psi ^{\textrm{X}}_i \}_{i=1}^s \) by

$$\begin{aligned} \psi ^{\textrm{X}}_i = \exp \left( { - c_i h JL }\right) J \phi _{\textrm{X}} \left( { U^{(k-1)}_i }\right) . \end{aligned}$$Solve the linear equation system (19) to obtain \( R_{ \textrm{L}}^{(k)} \) and \( R_{ \textrm{U}}^{(k)} \). If some criteria hold, go to Step 2. Otherwise, compute \( \{ U_{i}^{(k)} \}_{i=1}^s \) by

$$\begin{aligned} U_i^{(k)} = \exp \left( { c_i h JL }\right) \left( { u_0 + 2 h \sum _{j \in [s]} a_{ij} \left( { R^{(k)}_{ \textrm{L}, j} \psi ^{\textrm{L}}_j - R^{(k)}_{ \textrm{U}, j} \psi ^{\textrm{U}}_j }\right) }\right) , \end{aligned}$$set \(k = k + 1 \) and repeat Step 1.

-

Step 2

Output

$$\begin{aligned} u_1^{(k)}&= \exp \left( { h JL }\right) \left( { u_0 + 2 h \sum _{j \in [s]} b_j \left( { R^{(k)}_{ \textrm{L} ,j} \psi ^{\textrm{L}}_j - R^{(k)}_{ \textrm{U} ,j} \psi ^{\textrm{U}}_j }\right) }\right) , \\ r_{\textrm{X},1}^{(k)}&= r_{\textrm{X},0} + \sum _{i,j \in [s]} b_i \omega _{ij} \left( { R^{(k)}_{ \textrm{X}, j} - r_0 }\right) . \end{aligned}$$

The scheme defined by Definition 4 has properties similar to the scheme defined by Definition 3. Here, we only provide the results, and the proof is given in Appendix 2. In particular, for the uniqueness and existence, only the case \(LJ=JL\) is presented here, while the general case is presented in Theorem 14.

Theorem 11

Suppose that \(LJ=JL\) holds. If h satisfies

the linear equation (19) has a unique solution.

Theorem 12

(Conservation law) The solution \( \left( { u^{(k)}_1, r^{(k)}_{\textrm{L},1}, r^{(k)}_{\textrm{U},1} }\right) \) of the scheme defined by Definition 4 satisfies \( V \left( { u^{(k)}_1, r^{(k)}_{\textrm{L},1}, r^{(k)}_{\textrm{U},1} }\right) = V (u_0,r_{\textrm{L},0}, r_{\textrm{U},0}) \).

Theorem 13

(Accuracy) Assume the following conditions:

-

(A1)

\( U^{(0)}_i \) satisfies \( \big \Vert { U^{(0)}_i - u( c_i h ) }\big \Vert \le C h^{q} \) for each \( i \in [s] \) .

-

(A2)

\( \phi _{\textrm{X}} :\mathcal {V}\rightarrow \mathcal {V}\) is Lipschitz continuous and bounded.

-

(A3)

The base Runge–Kutta method is of order p .

Then, the numerical solution \( \left( { u^{(k)}_1, r^{(k)}_{\textrm{L},1}, r^{(k)}_{\textrm{U},1} }\right) \) of the scheme defined by Definition 4 satisfies

for a sufficiently small \(h > 0\), i.e., the scheme with k iteration is of order \( \min \{ p, q + k-1\} \). Here, \( C' \) is a constant depending only on the exact solution u, k, J, L, \(\phi _{\textrm{X}}\), A, b, and the constant C in (A1).

5 Numerical experiments

We employ the sixth-order Gauss method as the base RK method. The initial approximation \( U^{(0)}_i \approx u (c_i h) \) is computed by the Lawson transformation and the continuous explicit RK (CERK) method with order \(1, 2,\dots , 5\) (\( q = 2, 3,\dots , 6\)). All numerical experiments are performed in Julia (Version 1.8.0) on a PC with Apple M1 Ultra and 128GB RAM. The numerical experiments in this paper are not parallelized. We leave it to future work.

5.1 Modified Korteweg–de Vries equation

Let us consider the modified Korteweg–de Vries (mKdV) equation (on \(\mathbb {S}:= \mathbb {R}/ L\mathbb {Z}\)):

We employ an energy-preserving spatial discretization

where \( \delta _x \) denotes the Fourier-spectral difference operator (see, e.g., [34] for details on the difference operator). The inner product is defined as \( \langle {v},{w}\rangle = \sum _{k=1}^Nv_k w_k \Delta x \). Then, \( \nabla H (u) = \delta _x^2 u_k + 2 (u_k)^3 \) holds.

Then, \( E(u):= \frac{1}{2} \sum _{k=1}^N(u_k)^4 \Delta x \) is bounded from below so that we can use the scheme defined by Definition 3. Note that the product of the matrix exponential function \( e^{ t \delta _x^3} \) and a vector can be computed by the FFT. In numerical experiments, we use the exact solution \( \textrm{dn} (x-(2-m)t \mid m) \) with the spatial period \(L=2K(m)\) and temporal period \( T = L/\vert {2-m}\vert \), where \(\textrm{dn}\) is one of the Jacobi elliptic functions and K denotes the complete elliptic integral of the first kind. We choose the parameters \(m=0.1\) (\(L\approx 3.225\), \(T\approx 1.697\)) and \(N= 16\).

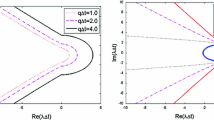

Figure 1 summarizes the relative errors. They decrease along the reference lines drawn based on Theorem 10. Figure 2 compares the computational cost of the proposed scheme with the scheme defined by [16] (see Appendix 4 for details) and an explicit Lawson method (corresponds to a seven stage sixth-order explicit Runge–Kutta method [35]). Note that all schemes have sixth order. The proposed scheme is more efficient than the scheme defined by [16]. However, the proposed scheme is less efficient than the explicit Lawson method for such a short time interval. As shown in the next example, the proposed scheme is more efficient than the explicit Lawson method for a long time interval (this advantage is typical in the comparison between conservative and non-conservative schemes).

Comparison of the computational efficiency of several schemes for the mKdV equation. In the legend, “proposedi” denotes the sixth-order scheme defined by Definition 3 with \(q=i\) and \(k=6-i\), “LI5” denotes the sixth-order scheme defined by [16] (1) with \(q=5\) and \(k=2\), and “Lawson6” denotes a seven stage sixth-order explicit Lawson method. Note that, the proposed schemes and the scheme defined by [16] are applied to the SAV systems (12) and (9), respectively, and the explicit Lawson method is directly applied to the system (20). Each scheme is computed 30 times, and the mean values and the standard deviations of the computational times are shown

5.2 Korteweg–de Vries equation

Let us consider the Korteweg–de Vries (KdV) equation (on \(\mathbb {S}:= \mathbb {R}/ L\mathbb {Z}\)):

We employ an energy-preserving spatial discretization

Since \( \sum _{k=1}^N(u_k)^3 \Delta x \) is unbounded, we employ the scheme defined by Definition 4. We define

similarly to [15]. In the numerical experiments below, we use the exact solution \( 2 m \left( { \textrm{cn} (x-ct \mid m) }\right) ^2 \), where \( c = 4 ( 2 m - 1 ) \) and \(\textrm{cn}\) is a Jacobi elliptic function. We choose the parameters \(m=0.1\) (\(L = 2K(m) \approx 3.225\), \(T = L/\vert {c}\vert \approx 1.008\)) and \(N= 64\).

Evolution of the relative errors of invariants of the KdV equation. In the figure, H, V, and I denote the invariant, the modified invariant, and the other invariant, respectively. The proposed scheme employed the step size \(h=T/64\approx 0.01575\), and the Lawson method employed the step size \(h=T/512\approx 0.001968\)

Figure 3 summarizes the relative errors. They decrease along the reference lines.

To confirm the long-term behavior of the proposed scheme, we conducted the numerical experiments over 32 periods. Figure 4 shows the evolution of relative errors of the invariant H, modified invariant V, and \( I (u):= \frac{1}{2} \sum \left( { u_k }\right) ^2 \Delta x \) corresponding to the another invariant \( \mathcal {I} (u):= \int \left( { \frac{1}{2} u^2 }\right) \textrm{d}x \) of the KdV equation.

As for the modified invariant V, as expected, it is very well preserved. The relative errors for the original invariants H and I are also small.

There, the proposed scheme (\(q=6\) and \(k=1\)) with \(h=T/64\approx 0.01575\) is applied to the SAV system (12), and the explicit Lawson method with \(h=T/512\approx 0.001968\) is directly applied to the system (21). We employ the small step size for the Lawson method because numerical solutions computed with \(h=T/256\) diverged. Even in the step size employed in the figure, the behavior of the relative errors of H suggests that it is expected to diverge after a slightly long run. In the current setup, the relative errors of numerical solutions itself at the final time are approximately \( 5.39 \times 10^{-7} \) for the proposed scheme and \( 8.33 \times 10^{-7} \) for the Lawson method. The computational time of the proposed scheme is 0.678 s, and that of the Lawson method is 0.882 s (they are mean values of 30 computations, with a standard deviation of about 0.01 for both methods).

5.3 Sine-Gordon equation

Let us consider the sine-Gordon (sG) equation (on \(\mathbb {S}:= \mathbb {R}/ L\mathbb {Z}\)):

By introducing a new variable \( v:= u_t \), we obtain the following first-order system:

We employ an energy-preserving spatial discretization

Since \( E (u) = \sum _{k=1}^N\cos u_k \Delta x \) is bounded, we employ the scheme defined by Definition 3.

In this case, since we employ the Fourier spectral difference \( \delta _x \) which can be diagonalized by the Fourier transform matrix F, i.e., \( \delta _x = F^{*} \left( { \textrm{i}\Lambda }\right) F \) (\( \Lambda \in \mathbb {R}^{N\times N} \) is a diagonal matrix), we have

for all \( m = 0,1,\dots \). Therefore, we have

where \( f_{\textrm{sG}} (t, \Lambda ) \) is a diagonal matrix defined by \( \left( { f_{\textrm{sG}} (t, \Lambda ) }\right) _{ii} = \Lambda _{ii}^{-1} \sin \left( { t \Lambda _{ii} }\right) \) if \( \Lambda _{ii} \ne 0 \) and \( \left( { f_{\textrm{sG}} (t, \Lambda ) }\right) _{ii} = t \) otherwise.

In numerical experiments, we use the exact solution [36]

where \( k_1^2 = \frac{\gamma ^2}{1+\gamma ^2} \left( { 1 + \frac{1}{\beta _1 ( 1 + \gamma ^2) } }\right) \), \( k_2^2 = \frac{\gamma ^2}{1+\gamma ^2} \left( { 1 - \frac{1}{\beta _2 ( 1 + \gamma ^2) } }\right) \), \( \beta _2^2 = \beta _1^2 + \frac{1-\gamma ^2}{1+\gamma ^2}\), \( \beta _1\) and \(\gamma \) are parameters (we choose \( \gamma = 0.1 \) and \( \beta _1 = 1 \) in the numerical experiments below). This solution has the spatial period \( L = 4 K (k_1^2) \) and the temporal period \( T = 4 K (k_2^2) \). In the numerical experiment, we choose \( N= 16\).

Figrure 5 summarizes the relative errors. The errors achieved higher orders of convergence than expected by Theorem 10. From the numerical results, the order of accuracy seems to be \( \min \{ p, q + 2k -1 \} \). In fact, this can be proved by using the specific form of the sine-Gordon equation (see Appendix 5). There, we show that the order of accuracy with respect to u is \( \min \{ p, q + 2k -1 \} \), while that with respect to v and r is \( \min \{ p, q + 2k -2 \} \).

Data Availability

The code and data that support the findings of this paper are available from the corresponding author upon reasonable request.

References

Celledoni, E., Grimm, V., McLachlan, R.I., McLaren, D., O’Neale, D., Owren, B., Quispel, G.R.: Preserving energy resp. dissipation in numerical PDEs using the “average vector field’’ method. J. Comp. Phys. 231, 6770–6789 (2012)

Gonzalez, O.: Time integration and discrete Hamiltonian systems. J. Nonlinear Sci. 6, 449–467 (1996)

McLachlan, R.I., Quispel, G.R.W., Robidoux, N.: Unified approach to Hamiltonian systems, Poisson systems, gradient systems with Lyapunov functions or first integrals. Phys. Rev. Lett. 81, 2399–2403 (1998)

McLachlan, R.I., Quispel, G.R.W., Robidoux, N.: Geometric integration using discrete gradients. Philos. Trans. R. Soc. Lond. A Math. Phys. Eng. Sci. 357, 1021–1045 (1999)

Furihata, D.: Finite difference schemes for \(\partial u/\partial t=(\partial /\partial x)^\alpha \delta G/\delta u\) that inherit energy conservation or dissipation property. J. Comput. Phys. 156(1), 181–205 (1999). https://doi.org/10.1006/jcph.1999.6377

Furihata, D., Mori, M.: General derivation of finite difference schemes by means of a discrete variation (in Japanese). Trans. Japan Soc. Indust. Appl. 8(3), 317–340 (1998)

Furihata, D., Matsuo, T.: Discrete variational derivative method-a structure-preserving numerical method for partial differential equations. CRC Press, Boca Raton (2011)

Cohen, D., Hairer, E.: Linear energy-preserving integrators for Poisson systems. BIT 51(1), 91–101 (2011). https://doi.org/10.1007/s10543-011-0310-z

Besse, C.: A relaxation scheme for the nonlinear Schrödinger equation. SIAM J. Numer. Anal. 42(3), 934–952 (2004). https://doi.org/10.1137/S0036142901396521

Zhang, F., Pérez-García, V.M., Vázquez, L.: Numerical simulation of nonlinear Schrödinger systems: a new conservative scheme. Appl. Math. Comput. 71(2–3), 165–177 (1995)

Matsuo, T., Furihata, D.: Dissipative or conservative finite-difference schemes for complex-valued nonlinear partial differential equations. J. Comput. Phys. 171(2), 425–447 (2001). https://doi.org/10.1006/jcph.2001.6775

Dahlby, M., Owren, B.: A general framework for deriving integral preserving numerical methods for PDEs. SIAM J. Sci. Comput. 33(5), 2318–2340 (2011). https://doi.org/10.1137/100810174

Yang, X., Han, D.: Linearly first- and second-order, unconditionally energy stable schemes for the phase field crystal model. J. Comput. Phys. 330, 1116–1134 (2017). https://doi.org/10.1016/j.jcp.2016.10.020

Shen, J., Xu, J., Yang, J.: The scalar auxiliary variable (SAV) approach for gradient flows. J. Comput. Phys. 353, 407–416 (2018). https://doi.org/10.1016/j.jcp.2017.10.021

Kemmochi, T., Sato, S.: Scalar auxiliary variable approach for conservative/dissipative partial differential equations with unbounded energy functionals. BIT 62, 903–930 (2022)

Sato, S., Miyatake, Y., Butcher, J.C.: High-order linearly implicit schemes conserving quadratic invariants. Appl. Numer. Math. 187, 71–88 (2023). https://doi.org/10.1016/j.apnum.2023.02.005

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010). https://doi.org/10.1017/S0962492910000048

Celledoni, E., Cohen, D., Owren, B.: Symmetric exponential integrators with an application to the cubic Schrödinger equation. Found. Comput. Math. 8, 303–317 (2008)

Mei, L., Huang, L., Huang, S.: Exponential integrators with quadratic energy preservation for linear Poisson systems. J. Comput. Phys. 387, 446–454 (2019)

Li, Y.-W., Wu, X.: Exponential integrators preserving first integrals or Lyapunov functions for conservative or dissipative systems. J. Sci. Comput. 38(3), 1876–1895 (2016)

Mei, L., Huang, L., Wu, X.: Energy-preserving exponential integrators of arbitrarily high order for conservative or dissipative systems with highly oscillatory solutions. J. Comput. Phys. 442, 110429 (2021). https://doi.org/10.1016/j.jcp.2021.110429

Li, L.: A new symmetric linearly implicit exponential integrator preserving polynomial invariants or Lyapunov functions for conservative or dissipative systems. J. Comput. Phys. 449, 110800 (2022). https://doi.org/10.1016/j.jcp.2021.110800

Jiang, C., Wang, Y., Cai, W.: A linearly implicit energy-preserving exponential integrator for the nonlinear Klein-Gordon equation. J. Comput. Phys. 419, 109690 (2020)

Cui, J., Xu, Z., Wang, Y., Jiang, C.: Mass- and energy-preserving exponential Runge-Kutta methods for the nonlinear Schrödinger equation. Appl. Math. Lett. 112, 106770 (2021). https://doi.org/10.1016/j.aml.2020.106770

Xu, Z., Cai, W., Song, Y., Wang, Y.: Explicit high-order energy-preserving exponential time differencing method for nonlinear Hamiltonian PDEs. Appl. Math. Comput. 404, 126208 (2021)

Fu, Y., Hu, D., Xu, Z.: High-order explicit conservative exponential integrator schemes for fractional Hamiltonian PDEs. Appl. Numer. Math. 172, 315–331 (2022). https://doi.org/10.1016/j.apnum.2021.10.011

Jiang, C., Cui, J., Qian, X., Song, S.: High-order linearly implicit structure-preserving exponential integrators for the nonlinear Schrödinger equation. J. Sci. Comput. 90(1), 66–27 (2022). https://doi.org/10.1007/s10915-021-01739-x

Lawson, J.D.: Generalized Runge-Kutta processes for stable systems with large Lipschitz constants. SIAM J. Numer. Anal. 4(3), 372–380 (1967)

Cooper, G.J.: Stability of Runge-Kutta methods for trajectory problems. IMA J. Numer. Anal. 7(1), 1–13 (1987). https://doi.org/10.1093/imanum/7.1.1

Butcher, J.C.: B-series—algebraic analysis of numerical methods. Springer Series in Computational Mathematics, vol. 55, p. 310. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-70956-3

Horn, R.A., Johnson, C.R.: Topics in matrix analysis, p. 607. Cambridge University Press, Cambridge (1991). https://doi.org/10.1017/CBO9780511840371

Trefethen, L.N., Embree, M.: Spectra and pseudospectra, p. 606. Princeton University Press, Princeton, NJ (2005)

Shen, X., Leok, M.: Geometric exponential integrators. J. Comput. Phys. 382, 27–42 (2019). https://doi.org/10.1016/j.jcp.2019.01.005

Fornberg, B.: A practical guide to pseudospectral methods. Cambridge Monographs on Applied and Computational Mathematics, vol. 1, p. 231. Cambridge University Press, Cambridge (1996). https://doi.org/10.1017/CBO9780511626357

Butcher, J.C.: On Runge-Kutta processes of high order. J. Austral. Math. Soc. 4, 179–194 (1964)

Marchesoni, F.: Exact solutions of the sine-Gordon equation with periodic boundary conditions. Progr. Theoret. Phys. 77(4), 813–824 (1987). https://doi.org/10.1143/PTP.77.813

Zhang, D.K.: Discovering new Runge-Kutta methods using unstructured numerical search (2019)

Owren, B., Zennaro, M.: Continuous explicit Runge-Kutta methods. In: Computational Ordinary Differential Equations (London, 1989). Inst. Math. Appl. Conf. Ser. New Ser., vol. 39, pp. 97–105. Oxford Univ. Press, New York, ??? (1992)

Verner, J.H.: Differentiable interpolants for high-order Runge-Kutta methods. SIAM J. Numer. Anal. 30(5), 1446–1466 (1993). https://doi.org/10.1137/0730075

Hairer, E., Wanner, G.:Solving ordinary differential equations. II, Stiff and Differential-algebraic Problems. Springer Series in Computational Mathematics, vol. 14, p. 614. Springer, Berlin (2010). https://doi.org/10.1007/978-3-642-05221-7

Acknowledgements

We would like to thank Editage (www.editage.com) for English language editing. The author would also like to thank the anonymous referees for their helpful comments.

Funding

Open Access funding provided by The University of Tokyo. The author is supported by a Japan Society for the Promotion of Science (JSPS) Grant-in-Aid for Scientific Research (B) (No. 20H01822) and a JSPS Grant-in-Aid for Early-Career Scientists (No. 22K13955).

Author information

Authors and Affiliations

Contributions

S.S. wrote the whole manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The author declares no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: The image of Figure 4 was not replaced during proof correction stage.

Appendices

Appendix 1. Derivation of the scheme defined by Definition 4

When we apply the scheme defined by (2) to the equation (18), the linear equation system (11) reads

By introducing \( \psi ^{{\textrm{X}}}_i = \exp \left( { - c_i h JL }\right) J \phi _{\textrm{X}} \left( { U^{(k-1)}_i }\right) \), we see

Therefore, we obtain the linear equation system

Appendix 2. Proofs of theorems in 4.2

Theorem 11 is a corollary of the following theorem.

Theorem 14

Suppose that the step size h satisfies

Then, the linear equation (19) has a unique solution.

Proof

Since the coefficient matrix of the linear equation (19) can be written as

it is sufficient to evaluate the spectral norms of the second and the third terms. The second term can be evaluated as

The third term can be evaluated as

and

holds, which can be shown in a manner similar to the proof of Theorem 8. \(\square \)

Next, we prove Theorem 13.

Proof of Theorem 13

It is sufficient to prove that the skew-symmetric matrix function

is Lipschitz continuous.

For any \( v_1, v_2 \in \mathcal {V}\) and \( \rho \in \mathbb {R}\), we have

Therefore, we see

which shows the Lipschitz continuity of \( S_{\textrm{MSAV}} \) under the assumption (A2). \(\square \)

Appendix 3. Computational efficiency of the scheme defined by Definition 3

In this section, we evaluate the computational efficiency of the scheme defined by Definition 3 in terms of the number of the computation of the product of matrix exponential and vector. To this end, we consider the explicit Lawson method and the four types of the initial approximation. Before evaluating the computational efficiency, we summarize the number of stages of the explicit Runge–Kutta and continuous explicit Runge–Kutta (CERK) methods achieving the order p in Table 1.

Let us list the four types of initial approximation. To achieve the order p, we consider the Gauss method with \( s = \lceil p/2 \rceil \) stages as the base Runge–Kutta method. Then, to minimize the number of iterations in Definition 3, we choose \( k = p - q + 1\). Recall that Steps 1 and 2 in Definition 3 require the computation of the product of matrix exponential and vector \( ( 2 k - 1 )s + 1 \) times in sequential computation and 2k times in parallel computation. Below, \( N_{\textrm{S}}\) and \( N_{\textrm{P}}\) denote the number of the computation of the product of matrix exponential and vector in sequential and parallel computation, respectively.

-

Proposed (I): the simplest initial approximation \( U^{(0)}_i = u_0 \). In this case, since \( q = 1 \) holds, we choose \( k = p\). Then, \( N_{\textrm{S}}= (2p-1)s + 1 \) and \( N_{\textrm{P}}= 2p \) hold.

-

Proposed (II): an accurate initial approximation using continuous explicit Runge–Kutta methods with the Lawson transformation. In this case, since \( q = p \) holds, we choose \(k =1\). Then, \( N_{\textrm{S}}= 2(s^{*} - 1) + 2 s + 1 \) and \( N_{\textrm{P}}= 2(s^{*} - 1) + 3 \) hold, where \( s^{*} \) is the number of stages of the continuous explicit Runge–Kutta method achieving the order \( p - 1 \) (see Table 1).

-

Proposed (III): initial approximation using extrapolation of inner stages in the previous step (\(p\ge 2\)). The inner stages of the Gauss method are \( (s + 1 )\)th order approximations, the order of approximation is at most \((s+1)\)th order. Therefore, let us construct the extrapolation satisfying \( q = s + 1\) and \( k = p - s \). However, since \( U^{(i)}_k \) is not computed at the last iteration in Definition 3, the situation is a bit complicated. First, when \( p = 2 \), we have \( s = 1 \) and \(k =1 \). In this case, to construct a second-order approximation, we employ the extrapolation using the input \(u_0\) and \(u_1 \) in the previous step. Therefore, \( N_{\textrm{S}}= N_{\textrm{P}}= 2 \) hold. Next, when \( p = 3 \), we have \( s = 2 \) and \( k = 1 \). In this case, to construct a third-order approximation, we employ the extrapolation using the input \(u_0\), \(u_1\), and one of the inner stages in the previous step. Therefore, \( N_{\textrm{S}}= 4 \) and \( N_{\textrm{P}}= 3 \) hold. Finally, when \( p \ge 4 \), we have \( k \ge 2 \). In this case, we can use \( U^{(k-1)}_i \)’s which are computed in the previous step. Therefore, \( N_{\textrm{S}}= (2 p - 2 s - 1) s + 1 \) and \( N_{\textrm{P}}= 2 (p - s) \) hold.

-

Proposed (IV): initial approximation using extrapolation of several previous steps. In this case, by using sufficiently many previous steps, we can construct the extrapolation satisfying \( q = p \) so that \( k = 1 \). Then, we have \( N_{\textrm{S}}= s + 1 \) and \( N_{\textrm{P}}= 2 \) hold.

We compare the computational efficiency of the proposed methods with that of the explicit Lawson method in Table 2. Note that the explicit Lawson method requires the product of the matrix exponential and vector \( 2s-1 \) times.

As shown in Table 2, the computational efficiency of the proposed method largely depends on the choice of the initial approximation. In particular, the extrapolation methods (III) and (IV) are much more efficient than the other methods. However, as reported in [27], the extrapolation method (III) causes the instability in the numerical solution. We expect worse stability for the extrapolation method (IV). In this sense, we believe the methods (I) and (II) (numerically tested in Sect. 5) are better to use in practice. As summarized in Table 2, the proposed method (I) is better in parallel computation, while the proposed method (II) is better in sequential computation.

Appendix 4. Application of the scheme by [16] to the SAV system (18)

In this section, we consider the scheme by [16] (i.e., Definition 1) applied to the SAV system (18).

In this case, the (3) reads

where \( \hat{\phi }^{(k-1)}_{\textrm{X},j} = \phi _{\textrm{X}} \left( { U^{(k-1)}_j }\right) \) for \( \textrm{X} \in \{ \textrm{L}, \textrm{U} \} \). Let \( U^{(k)} \) denote the vector \( \begin{bmatrix} U^{(k)}_1&\cdots&U^{(k)}_s \end{bmatrix}^{\textsf {T}}\). Then, the first equation in the above equation can be written as

where \( \otimes \) denotes the Kronecker product, \(I_s \in \mathbb {R}^{s \times s }\) is the identity matrix, \( I_{\mathcal {V}} \in \mathcal {L} ( \mathcal {V}) \) is the identity operator, \( A \in \mathbb {R}^{s \times s} \) is the matrix with the entries \( a_{ij} \), \( A_j = \begin{bmatrix} a_{1j}&\cdots&a_{s j} \end{bmatrix}^\textsf{T}\in \mathbb {R}^{s} \) is the vector, and \( \textbf{1}_s \in \mathbb {R}^s \) is the vector with the entries 1. Since the linear equation in the form

can be efficiently solved (cf. [40, IV.8]), it is computationally inexpensive to compute

By using this, \( U^{(k)}_i \) can be written as

Then, by using the above equation, the second and the third equations in (22) can be simplified into

where \( R^{(k)}_{\textrm{X}} = \begin{bmatrix} R^{(k)}_{ \textrm{X} ,1 }&\cdots&R^{(k)}_{ \textrm{X},s } \end{bmatrix}^\textsf {T}\), \( \tilde{\Phi }_{ \textrm{XY} } \in \mathbb {R}^{s \times s} \) is defined by \( \left( {\tilde{\Phi }_{\textrm{XY}_{ij}}}\right) = \left\langle { L \hat{\phi }_{ \textrm{X},i } },{ \tilde{\phi }^{\textrm{Y}}_{ji} }\right\rangle \), \( \Phi \in \mathbb {R}^{s \times s} \) is a diagonal matrix defined by \( \Phi _{ii} = \left\langle { \hat{\phi }_{ \textrm{L},i }, }{ J \hat{\phi }_{ \textrm{U},i} }\right\rangle \), \( \nu _{\textrm{X}} \in \mathbb {R}^{s} \) is defined by \( \left( { \nu _{\textrm{X}} }\right) _i = \left\langle { L \hat{\phi }_{ \textrm{X},i } },{ \tilde{u}_i }\right\rangle \). Consequently, we obtain the following scheme:

Definition 5

-

Step 0

Prepare \( U^{(0)}_i \approx u (c_i h) \) and set \(k = 1\).

-

Step 1

Compute \( \tilde{u}_i \) and \( \tilde{\phi }^{\textrm{X}}_{ij} \) for \( i,j \in [s] \) by (23) and (24). Solve the linear equation system (26) to obtain \( R_{ \textrm{L}}^{(k)} \) and \( R_{ \textrm{U}}^{(k)} \). Compute \( \{ U_{i}^{(k)} \}_{i=1}^s \) by (25). If some criteria hold, go to Step 2. Otherwise, set \(k = k + 1 \) and repeat Step 1.

-

Step 2

Output

$$\begin{aligned} \begin{bmatrix} u_1 \\ r_{ \textrm{L}, 1 } \\ r_{ \textrm{U}, 1} \end{bmatrix} = \begin{bmatrix} u^{(k)}_0 \\ r^{(k)}_{\textrm{L},0} \\ r^{(k)}_{\textrm{U},0} \end{bmatrix} + h \sum _{j \in [s]} b_{j} \begin{bmatrix} JL U^{(k)}_j + 2 R^{(k)}_{\textrm{L},j} J \hat{\phi }_{\textrm{L},j} - 2 R^{(k)}_{\textrm{U},j} J \hat{\phi }_{\textrm{U},j} \\ - \langle { J \hat{\phi }_{\textrm{L},j} ,}{ L U^{(k)}_j}\rangle - 2 R_{\textrm{U},j} \langle {\hat{\phi }_{\textrm{L},j}, }{ J \hat{\phi }_{\textrm{U},j} }\rangle \\ - \langle {J \hat{\phi }_{\textrm{U},j}, }{ L U^{(k)}_j}\rangle - 2 R_{\textrm{L},j} \langle {\hat{\phi }_{\textrm{L},j}, }{ J \hat{\phi }_{\textrm{U},j} } \rangle \end{bmatrix}. \end{aligned}$$

Appendix 5. The error behavior of the proposed scheme for the sine-Gordon equation

In this section, we consider the ODE

given by a spatial discretization of the sine-Gordon equation.

Theorem 15

Let (u, v, r) be the solution of the ODE (27). Assume the following conditions:

-

(A1)

\( U^{(0)}_i \) satisfies \( \big \Vert { U^{(0)}_i - u( c_i h ) }\big \Vert \le C h^{q} \) for each \( i \in [s] \) .

-

(A2)

\( \alpha \) in the definition of \(\phi \) satisfies \( \alpha \ge 2 N\Delta x \) .

-

(A3)

The base Runge–Kutta method is of order p .

Then, the solution \( (u^{(k)}_1, v^{(k)}_1, r^{(k)}_1) \) of the scheme defined by Definition 3 satisfies

for a sufficiently small step size \(h > 0\), Here, \( C' \) is a constant depending only on the exact solution (u, v, r) , k, \(N\), A, b, and the constant C in (A1).

Proof

In this case, the coupled system considered in the proof of Theorem 7 reads

for \( i = 1,2,\dots , k\). According to the proof of Theorem 7, it is sufficient to show that \(\sup _{t \in [0,h]} \big \Vert { u^{(i)} (t) - u (t) }\big \Vert \le C'' h^{ q + 2k }\), \(\sup _{t \in [0,h]} \big \Vert { v^{(i)} (t) - v (t) } \big \Vert \le C'' h^{ q + 2k - 1 }\) and \(\sup _{t \in [0,h]} \big \vert { r^{(i)} (t) - r (t) }\big \vert \le C'' h^{ q + 2k - 1 }\).

First, we introduce several bounds with respect to \(\phi \). Since \(E \left( {u^{(i)} (t) }\right) = \sum _{k=1}^N\) \(\cos \left( { u^{(i)}_k (t) }\right) \Delta x \ge - N\Delta x \) and \( \big \Vert { \sin \left( {u^{(i)} (t) }\right) }\big \Vert = \sqrt{ \sum ^N_{k=1} \Big \vert { \sin \left( {u^{(i)}_k }\right) }\Big \vert \Delta x } \le \sqrt{N\Delta x}\) hold, we see

which implies \( \sup _{ t \in [0,h]} \Vert { \phi \left( { u^{(i)} (t) }\right) }\Vert \le 1/2 \) by choosing \( \alpha = 2 N\Delta x \). In addition, under the setting \( \alpha = 2 N\Delta x \), for any \( v, w \in \mathbb {R}^N\), we see

Therefore, the map \( \phi \) is Lipschitz continuous with the Lipschitz constant \( L_{\phi }:= 5 / (4 \sqrt{ N\Delta x }) \).

Let us introduce \( E_u^{(i)}:= \sup _{t \in [0,h]} \big \Vert { u^{(i)} (t) - u (t) }\big \Vert \), \( E_v^{(i)}:= \sup _{t \in [0,h]} \big \Vert v^{(i)} (t)\) \(- v (t) \big \Vert \) and \( E_r^{(i)}:= \sup _{t \in [0,h]} \big \vert r^{(i)} (t)- r (t) \big \vert \). Then, by introducing \( C_v:= \sup _{t \in [0,h]} \big \Vert { v(t) }\big \Vert \) and \( C_r:= \sup _{t \in [0,h]} \big \vert { r(t) }\big \vert \), we see

The inequalities on \( E_v^{(i)} \) and \( E_r^{(i)} \) imply

Therefore, when \( h \le 1 \), we see

This inequality and \( E_u^{(i)} \le h E_v^{(i)} \) imply

Therefore, when \( h \le \left( { 4 \big \Vert { \delta _x^2 }\big \Vert }\right) ^{-1} \), we see

which implies \( E_u^{(i)} \le C^{(k)} h^{q+2k} \), where \( C^{(k)} = \left( { 4 L_\phi \left( {2C_r + C_v}\right) }\right) ^k \). This fact and inequalities (29) and (28) show the theorem. \(\square \)

As indicated in the proof above, the same argument holds true for the nonlinear Klein–Gordon equation with a general potential function when \( \phi \) is Lipschitz continuous.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sato, S. High-order linearly implicit exponential integrators conserving quadratic invariants with application to scalar auxiliary variable approach. Numer Algor (2024). https://doi.org/10.1007/s11075-024-01781-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11075-024-01781-4

Keywords

- Ordinary differential equations

- Quadratic invariants

- Geometric numerical integration

- Exponential integrators

- Scalar auxiliary variable approach