Abstract

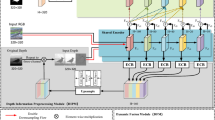

RGB-D salient object detection (SOD) has attracted considerable research interest in recent years because of its ability to handle complex and challenging scenes. Despite substantial progress, two notable challenges remain. The first is the efficient extraction of saliency-relevant features from both modalities, which requires capturing the intricate features present in the RGB and depth views. To address this, a novel approach is proposed that integrates a convolutional neural network (CNN) and a transformer architecture into a unified framework. This framework captures spatial hierarchies and intricate dependencies, enabling better feature extraction and deeper contextual understanding. The second challenge is devising an effective fusion strategy for the RGB and depth views, which is handled by a specialized cross-interactive multi-modal (CIMM) feature fusion module. Built from two stages of self-attention, this module generates a unified feature representation that bridges the gap between the two modalities. Further, to sharpen the boundaries of salient objects, an edge enhancement module (EEM) is incorporated, which enhances the visual distinctiveness of salient objects and thereby improves the quality and accuracy of salient object detection in complex scenes. Extensive experiments on seven benchmark datasets demonstrate that the proposed model performs favourably against CNN- and transformer-based state-of-the-art methods under standard saliency evaluation metrics. Notably, on the NJU-2K test set, it achieves an S-measure of 0.933, an F-measure of 0.930, an E-measure of 0.951 and a low mean absolute error of 0.025, underscoring the efficacy of the proposed model.
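To make two of the reported metrics concrete, the following is a minimal sketch of how the mean absolute error and the F-measure are commonly computed for a predicted saliency map against a binary ground-truth mask. It is not the authors' evaluation code: the function names, the fixed binarization threshold of 0.5, and the toy 2x2 maps are assumptions made for illustration, and the weighting of 0.3 for the squared beta term is the value conventionally used in the saliency literature rather than one stated in this abstract.

```python
from typing import Sequence

# A saliency map is modelled here as a 2-D grid of values in [0, 1].
Map = Sequence[Sequence[float]]


def mean_absolute_error(pred: Map, gt: Map) -> float:
    """Average per-pixel absolute difference between prediction and ground truth."""
    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            total += abs(p - g)
            count += 1
    return total / count


def f_measure(pred: Map, gt: Map, threshold: float = 0.5,
              beta_sq: float = 0.3) -> float:
    """Weighted harmonic mean of precision and recall after binarizing `pred`.

    The fixed threshold is an assumption of this sketch; benchmarks often
    sweep or adapt the threshold instead.
    """
    tp = fp = fn = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            predicted = p >= threshold
            actual = g >= 0.5
            if predicted and actual:
                tp += 1
            elif predicted:
                fp += 1
            elif actual:
                fn += 1
    if tp == 0:
        # No true positives: precision and recall are both zero or undefined.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return (1 + beta_sq) * precision * recall / (beta_sq * precision + recall)


if __name__ == "__main__":
    # Toy maps for illustration only, not data from the paper.
    pred = [[0.9, 0.2], [0.6, 0.1]]
    gt = [[1.0, 0.0], [0.0, 0.0]]
    print("MAE:", mean_absolute_error(pred, gt))
    print("F-measure:", f_measure(pred, gt))
```

Lower is better for the mean absolute error, while higher is better for the F-measure, which is why the abstract reports a low value for the former alongside high values for the other three metrics.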

Data availability

The code and data are shared via GitFront and can be accessed at https://gitfront.io/r/user-9759679/eoWpi4HW5B72/SalFormer_GitFront/.

Acknowledgements

This work was partly supported by Rashtriya Uchchatar Shiksha Abhiyan (RUSA) 2.0 of India under “Research, Innovation and Quality Improvement” for Project ID. T4G

About this article

Cite this article

Abraham, S.E., Kovoor, B.C. Unifying convolution and transformer: a dual stage network equipped with cross-interactive multi-modal feature fusion and edge guidance for RGB-D salient object detection. J Ambient Intell Human Comput 15, 2341–2359 (2024). https://doi.org/10.1007/s12652-024-04758-2

DOI: https://doi.org/10.1007/s12652-024-04758-2