Abstract

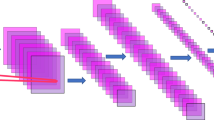

Neural networks based on convolution are now widely used for image classification and recognition. For real-time implementation, a video buffer is required to store the image temporarily. However, traditional buffers such as the CLSB (content line shift buffer) can stall during the read process, particularly at line breaks and image changes. For an N × N convolution, the delay is N − 1 clock cycles at every row change; for an image of width W, the delay is 2W + N clock cycles at every frame change. These delays reduce the efficiency and performance of the neural network. To overcome this problem, this paper presents a novel buffer design that avoids the delay at line ends and frame changes. By fetching data ahead of time, the buffer can dynamically schedule the read operation and ensure that the subsequent data are correctly placed for processing. The resulting reduction in read latency improves performance and makes better use of the computational resources in the hardware system. A full convolutional network accelerator based on the LeNet model is then implemented with the fast buffer design and a common computational kernel to reduce hardware cost. The results show that the accuracy reaches 99.1% when verified on the MNIST dataset. By eliminating the waiting time, the modified buffer processes the image more efficiently, and the frame rate for computer vision reaches 46 frames per second, which meets the real-time requirement.
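The stall figures quoted in the abstract can be turned into a rough per-frame estimate. The following is a minimal sketch, not the authors' model: it assumes one row change between each pair of consecutive image rows (H − 1 per frame), one frame change per frame, and one pixel read per clock otherwise. The function names and the 28 × 28 image with a 5 × 5 kernel are illustrative choices only.

```python
def conventional_stall_cycles(width: int, height: int, kernel: int) -> int:
    """Estimate stall clocks per frame for a conventional line buffer.

    Uses the figures from the abstract: N - 1 clocks per row change and
    2W + N clocks per frame change. Counting H - 1 row changes per frame
    is an assumption made for this sketch.
    """
    row_change_stall = (kernel - 1) * (height - 1)
    frame_change_stall = 2 * width + kernel
    return row_change_stall + frame_change_stall


def stall_overhead(width: int, height: int, kernel: int) -> float:
    """Stall clocks as a fraction of the W * H pixel-read clocks (assumed 1 pixel/clock)."""
    return conventional_stall_cycles(width, height, kernel) / (width * height)


if __name__ == "__main__":
    # Illustrative sizes only: a 28 x 28 image and a 5 x 5 kernel.
    w, h, n = 28, 28, 5
    print(conventional_stall_cycles(w, h, n), "stall clocks per frame")
    print(f"{stall_overhead(w, h, n):.1%} overhead relative to pixel reads")
```

Under these assumptions the stall cost grows with both the kernel size and the image width, which is the overhead a buffer that prefetches across line and frame boundaries aims to remove.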

Data availability

Data openly available in a public repository.

Funding

This work was supported in part by the National Science and Technology Council under Grant NSTC 112-2221-E-224-021.

Author information

Contributions

Shih-Chang Hsia wrote the main manuscript text and planned the architecture. Yu-Xiang Zhang carried out the simulation and the FPGA Verilog programming.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hsia, SC., Zhang, YX. Neural network accelerator with fast buffer design for computer vision. J Real-Time Image Proc 21, 47 (2024). https://doi.org/10.1007/s11554-024-01423-x

DOI: https://doi.org/10.1007/s11554-024-01423-x