Abstract

Given the current pace of technological advancement and its pervasive impact on society, understanding public sentiment is essential. The usage of AI in social media, facial recognition, and driverless cars has been scrutinized using the data collected by a complex survey. To extract insights from data, a descriptive-prescriptive hybrid data processing method is proposed. It includes graphical visualization, cross-tabulation to identify patterns and correlations, clustering using K-means, principal component analysis (PCA) enabling 3D cluster representation, analysis of variance (ANOVA) of clusters, and forecasting potential leveraged by Random Forest to predict clusters. Three well-separated clusters with a silhouette score of 0.828 provide the profile of the respondents. The affiliation of a respondent to a particular cluster is assessed by an F1 score of 0.99 for the test set and 0.98 for the out-of-sample set. With over 5000 respondents answering over 120 questions, the dataset reveals interesting opinions and concerns regarding AI technologies that have to be handled to facilitate AI acceptance and adoption. Its findings have the potential to shape meaningful dialog and policy, ensuring that the evolution of technology aligns with the values and needs of the people.

Similar content being viewed by others

Introduction

The current society increasingly depends on AI technologies. Most people possess at least one social media account, exposing them to diverse information sources. When traveling, we depend on security measures like facial recognition. Furthermore, automated transportation, such as driverless cars, has been in use for some time; for example, Narita AirportFootnote 1 has operated such commuting trains for over 20 years. Other assistants in our homes are tasked with performing mundane activities, gathering data, and consequently enhancing our quality of life. Developments in AI technologies have the potential to reshape and enhance society in the upcoming decades. Recently, the promise of these technologies in enhancing daily life and human capabilities has been acknowledged (Choung et al., 2023; Kopalle et al., 2022; Wang et al., 2023a; Yu and Helwig, 2022). Nonetheless, public perspectives are heavily influenced by considerations such as the intended use of these technologies, the regulation in place, and the beneficiaries or potential losses when AI advancements become implemented on a large scale. As expected, a sense of caution is prevalent in public attitudes toward AI and human enhancement applications, often revolving around concerns related to personal autonomy, lack of human connections, loss of jobs, unintended consequences, and the substantial societal changes that these innovations might entail (Querci et al., 2022; Lambert and Stevens, 2023). People might be apprehensive that economic inequalities may worsen as certain advancements emerge, and they also express concerns about the potential for increased surveillance through technologies like facial recognition software and driverless cars.

According to a recent Pew Research CenterFootnote 2 (PRC) survey applied in February 2021 to over 10,000 Americans, it revealed that they perceived potential in how AI technologies could enhance life and society capabilities. The survey delves into a wide spectrum of scientific and technological advancements, testing the perceptions of respondents. It was mainly focused on public opinions regarding three AI developments that are frequently debated in social media: (1) The utilization of facial recognition technology (Smith and Miller, 2022), (Andrejevic and Selwyn, 2020); (2) The use of algorithms by social media companies to detect false information (Tseng et al., 2023); (3) The advancement of driverless passenger vehicles (Karmakar et al., 2021). The dataset is a subset of a larger survey that approached both technological and science-related issues. The investigated survey aims to gather opinions on the impact of technology on society, specifically looking at perceptions of fairness related to algorithms (Giovanola and Tiribelli, 2023) and the interplay between technology and issues of discrimination (Kim, 2022). It also seeks to understand how these views correlate with demographic factors such as income, political affiliation, personal ideologies, etc. The survey addresses several themes, including the overall impact of technology on daily life and societal well-being, concerns and excitement about future technological developments and their implications, views on the possibility of algorithms functioning in a fair and unbiased manner, etc. The distribution of responses suggests that the survey targeted a diverse adult population, likely with a broad age range, covering various income levels, political affiliations, and ideological beliefs. The findings extracted from answers could be used to inform policymakers and business leaders about public concerns and expectations regarding AI and its governance, guide the development of more equitable and fair technology policies and practice, enhance understanding of the relationship between socioeconomic status and perspectives on AI (van Noordt and Misuraca, 2022).

The survey has been structured to provide quantitative data through a series of closed-ended questions. This approach allows for a clear analysis of trends and patterns within the responses, potentially supporting a wide range of statistical and comparative analyses. Given the analysis of the dataset and the prevalence of topics related to technology and algorithms, the survey may have sought to capture public sentiment on AI and its acceptance. The survey data we have analyzed offers a window into this complex landscape, capturing the multifaceted attitudes toward AI and technology as a whole. Respondents were asked to qualify the impact of AI technologies (question code: TECH1_W99) as mostly positive, negative, or equally mixed. The distribution of responses indicates a general optimism towards technology, with many participants recognizing its benefits. However, a significant portion of the populace retains a level of skepticism or concern, highlighting the need for ongoing dialog about AI’s role in society. The nuanced balance of concern and excitement regarding technological advances (CNCEXC_W99) suggests that while the public is hopeful about the potential of AI, there is an underlying caution about its implications, ethical, privacy-related, and socioeconomic. This duality is a clear sign that acceptance of AI is not unconditional and is tempered by realistic appraisals of its potential downsides. Questions regarding the fairness of algorithms (ALGFAIR_W99) delve into the heart of AI ethics. The responses reflect a divided opinion, with a notable portion of participants unsure about whether algorithms can be truly fair. This uncertainty underscores a pivotal aspect of AI acceptance: trust in the systems’ design and the transparency of their decision-making processes. The dataset incorporates demographic variables like political affiliation (F_PARTYSUM_FINAL) and income (F_INC_SDT1), which could be correlated with AI acceptance levels. For instance, one might hypothesize that individuals with higher incomes, who may benefit more from AI services, could show greater acceptance, or that political leanings might influence trust in technology providers and regulatory approaches.

The columns that start with POSNEGAI relate to questions where respondents were asked about their levels of concern or excitement. These questions are designed to measure the respondents’ attitudes towards certain issues, gauging their emotional responses on a spectrum from concern to excitement. The SMALG columns in the codification file correspond to questions about social media, algorithms, and their impact or perceptions among the respondents. The FACEREC prefix in these column names indicates that they are related to questions about facial recognition technology. These questions cover a range of issues related to facial recognition technology, including public sentiment, expected outcomes, societal impacts, and regulatory opinions. The available responses suggest that respondents can express a variety of opinions, from concerns about privacy and fairness to support for the potential benefits of the technology in areas such as law enforcement and crime prevention. The DCARS prefix in the column names indicates that these questions are related to the topic of driverless cars (autonomous vehicles) and the issues surrounding them. These questions cover a broad spectrum of issues related to autonomous vehicles (general feeling, societal and economic impact, regulation, and standards), from their potential societal and economic impacts to ethical considerations and personal comfort levels with the technology. This nuanced perspective on AI acceptance is important for stakeholders in the AI ecosystem. As AI continues to evolve, monitoring and understanding public sentiment is significant in navigating its integration into the fabric of society.

In the current research, we propose a combined descriptive and prescriptive model that integrates cross-tabulation, K-means clustering, 3D graphical visualization using PCA, analysis of variance (ANOVA) of clusters, and the predictive capabilities of Random Forest for cluster prediction. The proposed model combines supervised and unsupervised Machine Learning (ML) algorithms and contributes to the state of the art in the field of survey data processing, gaining insights that are essential for promoting the acceptance and adoption of AI. Our primary objective is to conduct a comprehensive analysis of survey data on the excitement and concerns surrounding AI technology in areas like social media, facial recognition, and driverless cars, using a descriptive-prescriptive method. Our research motivation consists of understanding relevant groups of individuals, identifying their concerns, and predicting new instances of individuals into a cluster using prediction algorithms. This approach will enable us to delve deeply into the public perception and attitudes towards these technologies. Thus, the contribution of this paper lies in extracting meaningful insights from this data, which may lead to advancements in both humanity and social sciences.

Our research questions are “How do different groups of individuals perceive AI technologies such as social media, facial recognition, and driverless cars, and what are their primary concerns, and how can these perceptions and concerns be predicted for new instances of individuals?” This paper is structured as follows: in the “Literature review” section, a brief literature survey is presented focusing on similar research, the “Methodology” section is dedicated to the proposed methodology, and in the section “Results”, the results are presented. Section “Discussion” is designed for discussions, whereas section “Conclusion” is designed for conclusions.

Literature review

The acceptance and adoption of AI have been investigated in many fields, such as: agriculture (Mohr and Kühl, 2021), manufacturing, health, education, transportation, social media (Chung et al., 2021), security, etc. Moreover, the ML and AI-induced technology-related stress in organizations was analyzed in (Kumar et al., 2023). The study on the practice of AI in manufacturing firms in Malaysia, focusing on its role in driving digital transformation, revealed significant insights (Ahmad et al., 2022). Integration of digital technologies into business processes is essential for digital transformation, a trend underscored by the emergence of “Industry 4.0”. In this context, AI is a key component in enhancing performance and stimulating demand and productivity in the manufacturing sector. The research also highlighted several barriers and challenges faced by manufacturing firms in Malaysia, primarily due to the nascent stage of AI development. These include a lack of skilled talent, insufficient incentives, and a dearth of innovation. To gather data, managers from various manufacturing companies were selected as respondents. From the 93 answers, descriptive analysis was employed to pinpoint the specific barriers and challenges. The findings indicate that the most significant barrier to AI implementation in these firms is the lack of talent. Furthermore, the primary challenge identified is the absence of in-house experts. This study underscores the need for focused strategies to overcome these issues, such as investing in education and training, fostering a culture of innovation, and creating incentives to attract and retain AI experts. The researchers focused on exploring the mediating role of perceptions on the relationship between technology readiness and the adoption of AI in the field of accounting (Damerji and Salimi, 2021). Specifically, it aimed to determine how Perceived Ease Of Use (PEOU) and Perceived Usefulness (PU) influence the correlation between the technology readiness of accounting students and their inclination to adopt AI technologies. This quantitative research involved assessing individual accounting students’ perceptions of their readiness for technology and their attitudes toward adopting it. To collect data, a questionnaire with 31 items was used. This questionnaire also gathered demographic information. The findings of the study revealed that technology readiness significantly impacts technology adoption. However, more nuanced insights were obtained through mediation analysis using hierarchical regression. This analysis showed that the link between technology readiness and the adoption of AI is significantly influenced by PEOU and PU.

Patients’ views on the implementation of AI in radiology were investigated by a questionnaire (Ongena et al., 2020). The study aimed to gauge patients’ perspectives on the implementation of AI in radiology. A questionnaire was developed based on insights from previous qualitative research. The questionnaire was administered to 150 patients scheduled for various radiographies. The primary analytical tool used in this research was exploratory factor analysis (EFA) with principal axis factoring and oblique promax rotation. This approach helped to uncover latent variables underlying patients’ responses. To ensure the reliability of these factors, internal consistency was measured using Cronbach’s alpha and composite reliability. The EFA identified 5 distinct factors concerning patients’ views on AI in radiology: distrust and accountability, procedural knowledge, personal interaction, efficiency, and being informed. The internal consistency of the responses was good for the first three factors and acceptable for the last two. Moreover, in (Xuan et al., 2023), the readiness towards AI among undergraduate medical students in Malaysia was analyzed using frequency tables, percentages, standard deviation, unpaired t-tests, and ANOVA. Significant correlations were found between the age and academic year of participants and their readiness in the ability, vision, and ethics domains of medical AI. Additionally, a notable association was identified between prior training and all four domains of medical AI readiness. Additionally, other studies investigated AI impact and adoption factors in health systems (Kim et al., 2022; Kosan et al., 2022; Patrzyk et al., 2022; Vorisek et al., 2023).

Researchers explored the use of natural language processing (NLP) to analyze patient experiences (van Buchem et al., 2022). The data was primarily gathered through 5 open-ended questions, allowing for a more nuanced understanding of patient perspectives. An NLP pipeline, incorporating sentiment analysis and topic modeling, was employed to process the responses, and a visualization tool was developed to help physicians navigate the results. The research involved an iterative process of developing and validating both the questionnaire and the NLP pipeline within a clinical setting. The questionnaire focused on various aspects of patient care, including the information provided, personal approach, collaboration among healthcare professionals, organization of care, and other experiences. A total of 534 patients participated by responding to this questionnaire. The performance of the sentiment analysis model was notable, achieving an F1 score of 0.97 for identifying positive texts and 0.63 for negative texts. The use of NLP proved efficient in reducing the time required by healthcare professionals to assess and prioritize patient experiences. This approach offers a significant advantage over traditional methods that rely on closed-ended questions, as it captures a broader and more detailed range of patient feedback.

The researchers explored the implementation of AI in talent acquisition by human resource managers (Pillai and Sivathanu, 2020). A survey involving 562 managers was conducted. The collected data was analyzed through Partial Least Squares Structural Equation Modeling (PLS-SEM). The findings indicate that factors such as cost-effectiveness, relative advantage, support from top management, HR readiness, competitive pressure, and backing from AI vendors have a positive impact on the adoption of AI for talent acquisition. Conversely, concerns regarding security and privacy serve as deterrents. The research also identified that the characteristics of tasks and technology affect the suitability of AI for talent acquisition tasks. Furthermore, the adoption and appropriateness of AI technology are linked to its actual usage in talent acquisition. Interestingly, the study uncovers that a preference for traditional methods of talent acquisition can weaken the relationship between AI adoption and its actual usage in this field.

Almarashda et al. (2021) focused on identifying factors influencing the implementation of AI in the United Arab Emirates’ energy sector. A comprehensive analysis was conducted using data from 350 respondents. The study found that the primary drivers for adopting AI in organizations are its potential to foster effective business innovation, align with business strategies, and enhance production levels. Key aspects of AI, such as user-friendliness, the ability to improve work quality, and compatibility with existing tasks, were identified as critical factors for its adoption. Additionally, the research highlighted the importance of human resource management factors, including the need for a knowledgeable referral person when encountering difficulties with AI and expert support in utilizing AI technology.

The factors influencing the adoption of AI in banking services were examined (Rahman et al., 2022). A survey was conducted, yielding 302 opinions from Malaysian banking customers. This research aimed to identify the key determinants of customers’ intentions to adopt AI in banking services. From the qualitative analysis, it was found that AI is essential for detecting fraud and preventing risks. However, concerns about data privacy and security, lack of regulatory frameworks, and deficiencies in relevant skills and IT infrastructure pose significant challenges to AI adoption. The quantitative analysis revealed that several factors significantly impact customers’ intentions to adopt AI. These include their attitudes towards AI, PU, perceived risk, perceived trust, and subjective norms. Interestingly, factors like PEOU and awareness were not found to influence this intention. Moreover, the study highlighted the role of attitude towards AI as a mediator in the relationship between PU and the intention to adopt AI.

Another research presented an innovative approach to exploring the dynamics between digital technology and the adoption of AI in the context of electronic manufacturing enterprises (Binsaeed et al., 2023). Specifically, the study aimed to (a) investigate the direct relationship between digital technology and AI adoption; (b) examine the mediating role of Knowledge Sharing (KS) in this relationship; (c) assess how privacy and security considerations might moderate the link between digital technology and AI adoption. A questionnaire survey was distributed among electronic firms in Saudi Arabia. The analysis is based on data from 298 respondents. The research methods include multi-level correlation and regression analysis to test the hypotheses. The findings from this analysis are noteworthy. There is a positive direct influence of digital technology on AI adoption within electronics manufacturing enterprises. KS acts as a significant mediator in the relationship between digital technology and AI adoption. Privacy and security considerations play a moderating role in this relationship. Moreover, (Qiu et al., 2022) delved into the influence of AI-enabled services in the hospitality industry, particularly focusing on how AI technology supports Frontline Employees (FE) physically, mentally, and emotionally, thereby enhancing their ability to provide hospitable service. A total of 342 valid questionnaires were collected to examine various aspects related to AI-enabled services. The study employed factor analyses and measurement model evaluation to understand the construct of AI-enabled service better. Four key factors were identified as central to this construct: anthropomorphic attributes, entertainment attributes, functional attributes, and information attributes. While entertainment does not reduce the physical and mental fatigue experienced by FE, it has a notable positive impact on their emotional well-being.

The adoption of AI and related technologies by public and academic librarians in North America was investigated (Yoon et al., 2022). Quantitative analysis of the survey responses was conducted using various chi-square tests and crosstab analyses. It was observed that academic librarians reported higher usage and awareness of AI and related technologies. Conversely, public librarians exhibited more positive attitudes towards AI technologies. Overall, 67% of the participants believed that AI would significantly change library functions, and 68% of the librarians expressed interest in receiving training in AI. These findings suggest a growing recognition of the transformative potential of AI in the library sector, coupled with a strong interest in training and development to leverage these technologies effectively.

Another research contributed to the field of AI education by introducing a novel AI curriculum framework (Chiu et al., 2022). The effectiveness of this curriculum in enhancing AI learning was established through a multifactorial pre-post-test evaluation, focusing on students’ perceptions of AI learning. The curriculum’s development involved a collaborative co-creation process, with a team of 14 professors working alongside 17 principals and teachers from 6 secondary schools. The study participants included 335 students and 8 teachers from these schools. The research employed a mixed-methods approach, combining quantitative data from pre- and post-questionnaires with qualitative insights highlighting teachers’ views on the co-creation process. Data analysis was conducted using paired t-tests and ANCOVAs for quantitative data and thematic analysis for qualitative data. Post-intervention, students reported an increased sense of competence in AI and a more positive attitude towards learning AI. Additionally, other studies investigated AI in education (Rauf et al., 2021; Wang et al., 2023b; Nouraldeen, 2023) in Lebanese universities. Another study investigated an integrated model designed to predict elementary school students’ acceptance of AI robots serving as teachers (Chen et al., 2023). This research area has garnered significant interest due to AI teachers’ potential to address the global teacher shortage. Participants were students from Chinese elementary schools. The study utilized descriptive statistics and SEM. The research identified several key factors influencing the acceptance of AI teachers, including Robot Use Anxiety (RUA), PU, PEOU, and Robot Instructional Task Difficulty (RITD). The findings indicated that students had positive attitudes towards AI teachers, and these attitudes could be predicted based on their perceptions of the AI teachers’ PU, PEOU, and the difficulty of the tasks they were programmed to perform. Furthermore, the study discovered that the relationship between RITD and the acceptance of AI teachers was mediated by factors such as RUA, PEOU, and PU. This indicated that the difficulty of tasks assigned to AI teachers, the anxiety students feel about using robots, and their PEOU of AI teachers all play important roles in determining how well these AI teachers are accepted by students.

The study (Amichai-Hamburger et al., 2020) focused on the adoption of personal autonomous cars, particularly how tailoring the vehicle’s operations to the user’s personality could improve user experience. A questionnaire was created, targeting various features of a proposed information system for autonomous cars. Analysis of the responses identified two key factors influencing user preferences: the willingness to share personal information and the desire to maintain control over the vehicle. Additionally, a regression analysis examining the relationship between preferences for autonomous car features and factors such as personality traits, gender, and age revealed that traits like openness, conscientiousness, and age significantly influenced these preferences. Another study (Rowthorn, 2019) investigated the ethical decision-making processes in autonomous vehicles, examining aspects of machine ethics, AI in driving, and algorithms used during crash scenarios. Utilizing data from multiple sources, including the Pew Research Center, the researcher conducted a series of analyses and estimations. These included assessing the percentage of U.S. adults willing or not willing to travel in a driverless car, evaluating how safe these adults feel as pedestrians in cities where Autonomous Vehicles (AVs) operate, determining which countries are best equipped for the advent of autonomous vehicles, and identifying the key data infrastructure needs in smart cities that are essential for the testing and implementation of AVs. More studies focused on identifying the behavioral factors influencing the adoption of AVs (Acheampong and Cugurullo, 2019). The primary objective was to develop conceptual frameworks and measurement models to forecast trends in public transportation, sharing, and ownership of self-driving cars. These proposed frameworks incorporated socio-demographic variables along with key latent behavioral factors. They included PEOU of AVs, public concerns and apprehensions about AVs, subjective norms, perceived behavioral control, and attitudes related to the environment, technology, shared consumption, public transport, and car ownership. Utilizing survey data with CFA, the research aimed to establish and validate the reliability of scale indicators. This process involved confirming both the convergent and discriminant validity of the relationships among the latent variables. As a result of this comprehensive analysis, four distinct measurement models were developed and presented.

Passengers’ perceptions and satisfaction levels with the digital technology implemented by airlines during the COVID-19 pandemic, focusing specifically on the Chinese market, were explored (Shiwakoti et al., 2022). An online survey was conducted to assess passengers’ views on 11 different digital technology-based services provided by airlines, including facial recognition. A total of 365 valid responses were collected and analyzed using ANOVA tests and stepwise multiple linear regression analysis. The findings revealed a generally positive attitude among passengers towards the adoption of new technologies by airlines. From the regression analysis, 6 technologies were identified as having a statistically significant impact on passenger satisfaction. These include AI customer service, electronic luggage tags, cleaning robots, ultraviolet light and antimicrobial cabin cleaning, an app-controlled in-flight entertainment system, and an electronic library. Conversely, three technologies were found to be less favorable among passengers: facial recognition, digital documentation, and AI customer service.

Other recent research focused on public sentiment extracted from texts from social platforms such as Twitter (Liu, Zhou, et al., 2023b) and emotions classification involving ML algorithms and complex data processing pipelines (Liu et al., 2023a). Autonomous cars, driving, and traffic issues were debated in (Xiao et al., 2023), addressing the safety challenges posed by occlusions in autonomous driving by proposing a multi-tier perception task offloading framework that leverages collaborative computing between autonomous vehicles and roadside units. Federated learning in autonomous cars (Fu et al., 2023), technology in of moving trajectory of autonomous cars (Ding et al., 2023), and human activity recognition (Jannat et al., 2023) were further examined by exploring the use of Wi-Fi Channel State Information (CSI) signals for recognizing human activities, leveraging the insight that human movement alters Wi-Fi signal propagation, evident in CSI signal variations. Other intriguing aspects such as management in traffic with autonomous vehicles (Yue et al., 2023) and policies related to technological innovations in vehicles and their manufacturers (Jiang and Xu, 2023) were investigated.

Comparing the brief findings extracted in Table 1, we can conclude that AI acceptance and adoption were investigated in various countries (Malaysia, U.S.A., Netherlands, Malaysia, India, United Arab Emirates, and Saudi Arabia. North America, China (Hong-Kong), China-other regions, Israel, Ireland (Dublin). The number of respondents varied from 93 to 5,400, showing a wide range of cases. Furthermore, the methods used in these studies vary widely, ranging from descriptive analysis, quantitative research, and hierarchical regression in the Malaysian industry ([17]) and U.S. accounting fields ([18]), to EFA and CFA in health-related studies in the Netherlands ([19], [25]), PLS-SEM in human resources and banking system research in India and Malaysia ([26], [28]), and more advanced techniques like NLP and multi-level regression analysis in health and manufacturing sectors in the Netherlands and Saudi Arabia ([25], [29]). Other methods include OLS regression in transportation studies in Israel ([37]), and a mix of ANOVA, chi-square tests, and thematic analysis in various fields across different countries. This diversity in methods reflects the unique requirements and complexities of each field and research question.

Methodology

Proposed data processing method

Survey data can be analyzed in various ways to gain insights and make informed decisions: descriptive analysis, cross-tabulation (Warwas et al., 2022), hypothesis testing (t-tests and ANOVA, Chi-square tests, regression analysis) (Ko et al., 2021), (Sampurna et al., 2023), factor analysis (Bâra and Oprea, 2023), (Oprea and Bâra, 2022), cluster analysis, sentiment analysis (Lepelaar et al., 2022), principal component analysis (PCA) to reduce the dimensionality of the data and identify the most important components; latent class analysis (LCA), Bayesian analysis, text mining and topic modeling like latent dirichlet allocation (LDA) or word cloud visualizations. To investigate the responses, we propose a descriptive-prescriptive hybrid model that includes cross-tabulation, clustering using K-means, analysis of variance (ANOVA), and prediction ensemble algorithm (Random Forest) to estimate clusters. The process flow of the methodology is described in Fig. 1.

Algorithms and statistical approach background

K-means is an unsupervised ML algorithm used for clustering similar data points into groups or clusters. The algorithm aims to partition a dataset into K distinct, non-overlapping clusters, with each data point belonging to the cluster with the nearest mean value. The number of clusters is the most important parameter in K-Means. Thus, it is important to determine the optimal number of clusters using the elbow method or silhouette score. It represents the number of centroids (cluster centers) to be generated. K-means starts by randomly initializing K cluster centroids. These centroids serve as the initial cluster centers. Each data point is assigned to the nearest centroid based on a distance metric, commonly Euclidean distance. The data points are grouped into clusters based on their proximity to the centroids. After assigning data points to clusters, the algorithm calculates new centroids for each cluster. The new centroids are computed as the mean of all data points within the cluster. The assignment and update steps are repeated iteratively until one of the stopping criteria is met. K-Means is guaranteed to converge to a solution, but it may not always find the global optimum. The final clustering result depends on the initial random centroid selection. It has several advantages, such as: simple and easy to understand, scales well to large datasets, and can be used for various types of data, including numerical and categorical. Nonetheless, it has also disadvantages, such as: requires specifying the number of clusters (K) in advance; being sensitive to the initial placement of centroids, which leads to different results; assuming that clusters are spherical and equally sized, which may not be the case in real complex datasets. There are several applications where K-means can be applied alone or in combination with other ML algorithms providing solutions for customer segmentation for marketing, image compression, anomaly detection, document classification, genomic data analysis, recommendation systems, etc.

The first step consists of random initialization of K cluster centroids μ1, μ2,…,μK, where K is the number of clusters. Then, the assignment of the data points to clusters is performed. For each data point xi, where i = 1,2, …,N (N is the number of data points), the clusters are selected based on Eq. (1):

Each data point xi is assigned to the cluster with the nearest centroid, where ci is the cluster assignment for xi. After each assignment, the cluster centroids are updated. For each cluster k = 1,2,…,K, the cluster centroids are calculated as in Eq. (2):

The centroid μk is updated as the mean of all data points xi assigned to cluster k, where |Ck| is the number of data points in cluster k. The assignment and update steps are repeated until a stopping criterion is met. Common stopping criteria include a maximum number of iterations reached or centroids no longer changing significantly (leading to convergence).

Finally, the clusters are evaluated in terms of size, centroids, distributions, and other characteristics. Therefore, the K-means algorithm involves iteratively assigning data points to clusters and updating cluster centroids until convergence. The algorithm aims to minimize the sum of squared distances between data points and their assigned centroids. The choice of distance metric and the initialization method (e.g., random initialization or K-means) are essential aspects of the K-means algorithm. Additionally, the algorithm’s performance is sensitive to the choice of K, the number of clusters, which requires evaluation techniques.

ANOVA is a statistical technique used to analyze and compare the means of two or more groups or populations to determine whether there are statistically significant differences among them. ANOVA is a tool for understanding the variability within and between groups. It can be combined with K-means to further analyze the quality of clusters. Two-way ANOVA extends the one-way ANOVA to handle situations with two independent categorical variables or factors. It allows us to simultaneously investigate the effects of two factors on a response variable. To formalize the two-way ANOVA, let’s consider two independent factors A and B, where I is the number of levels or categories in the first factor (Factor A) and J is the number of levels or categories in the second factor (Factor B), N is the total number of observations, nij is the number of observations in the i-th level of Factor A and the j-th level of Factor B. \({\bar{x}}_{{ij}}\) is the mean of observations in the i-th level of Factor A and the j-th level of Factor B. \(\bar{\bar{x}}\) is the overall mean, SST is the total sum of squares (variation of all data points from the overall mean), SSA is the sum of squares for Factor A (variation due to Factor A), SSB is the sum of squares for Factor B (variation due to Factor B), SSAB is the sum of squares for the interaction between Factors A and B (joint effect of A and B) and SSW is the within-group sum of squares (residual or error variation). The total sum of squares is defined in Eq. (3):

It calculates the total variability in the data, measuring how far individual data points are from the overall mean. The sum of squares for Factor A is formulated in Eq. (4):

The variation due to Factor A, measuring how much the group means (across levels of Factor A) differ from the overall mean, is calculated. The sum of squares for Factor B is defined in Eq. (5):

The variation due to Factor B, measuring how much the group means (across levels of Factor B) differ from the overall mean, is calculated. The sum of squares for interaction is provided in Eq. (6):

Equation (6) calculates the variation due to the interaction between Factors A and B, measuring the combined effect of A and B. Within-group sum of squares is provided in Eq. (7):

Equation (7) calculates the variation within each group, measuring how far individual data points are from their respective group means. The total degrees of freedom is defined in Eq. (8):

The degrees of freedom for Factor A and for Factor B are formulated in Eq. (9):

The degrees of freedom for the interaction and within-group are defined as in Eq. (10):

The mean squares for Factor A and for Factor B are provided in Eq. (11):

The mean squares for the interaction and within groups are formulated in Eq. (12):

F-statistics for Factor A, for Factor B, and for the interaction are provided in Eq. (13):

The p-values are calculated based on the respective F-statistics and the F-distribution. They indicate the probability of observing such extreme F-statistic values under the null hypothesis for each factor and the interaction. Null Hypotheses (H0) implies that H0A: No significant effect on Factor A, H0B: No significant effect of Factor B, and H0AB: No significant interaction between Factors A and B. Alternative Hypotheses (H1): implies that H1A Significant effect on Factor A, \({H}_{1A}\) a significant effect on Factor B and \({H}_{1{AB}}\): Significant interaction between Factors A and B. If the p-value for a factor or interaction is smaller than a chosen significance level (e.g., 0.05), we reject the null hypothesis for that factor or interaction. Otherwise, we fail to reject it. These equations formalize the process of conducting a two-way ANOVA to analyze the effects of two categorical factors (Factor A and Factor B) and their interaction on a response variable. Two-way ANOVA helps determine whether variations in the response variable are influenced by one or both factors, as well as their interaction. Variation analysis is applied to the groups obtained with K-means to estimate the performance of the clustering process.

The aim of cluster prediction is to categorize a new instance within a dataset into a predefined cluster. For instance, when an individual responds to survey questions, they can be assigned to a specific cluster based on their answers. While it is acknowledged that perceptions and attitudes in technology and innovation are subject to change, such allocation can still be effectively done using existing classifications. However, to ensure accuracy and relevance in understanding technology adoption, it is necessary to periodically repeat the survey, thereby updating the responses and refining the calibration of adoption measures.

Classification methods focus on identifying variables that take on discrete values, which are represented as categories or classes (in our particular case cluster number). These classes can be binary, such as y ∈ {0,1}, or multi-class, such as y ∈{0,1,2,3,4,…,p}, where p represents the total number of distinct classes. In a binary classification scenario, the value 1 typically signifies the “positive” class, while 0 indicates the “negative” class. The core of classification techniques lies in the construction of a function that predicts the probability that a given instance belongs to a particular class. This is achieved by modeling a function based on independent variables x and computing the likelihood of belonging to a specific class. For instance, in binary classification, if the function \({h}_{\theta }(x)\) yields a value equal to or greater than 0.5, it is predicted that \(\hat{y}=1\), meaning the instance is classified into the positive class. Conversely, if \({h}_{\theta }(x)\) is less than 0.5, \(\hat{y}=0\) is predicted, placing the instance in the negative class. The function \({h}_{\theta }(x)\), which ranges from 0 to 1 (i.e., \(0\le {h}_{\theta }(x)\le 1\)), is used to model this classification decision. To effectively model the function \({h}_{\theta }(x)\), specific notations and methods are employed as in Eq. (14):

Where \({\rm{\theta }}=\left[\begin{array}{ccc}{\theta }_{0} & \ldots & {\theta }_{n}\end{array}\right]\)- the vector of weights that is multiplied by X; X—the matrix of input variables; n—number of variables; m—number of observations; \(x=\left[\begin{array}{ccc}{x}_{1}^{\left(i\right)} & \ldots & {x}_{n}^{\left(i\right)}\end{array}\right]\)—an observation. The following probability function is calculated as in Eq. (15):

The sigmoid function approximates: \(\hat{y}=1\), if \({h}_{\theta }\left(x\right)\ge 0.5\) (or \(q\ge 0\)) and \(\hat{y}=0\), if \({h}_{\theta }\left(x\right) < 0.5\) (or \(q < 0\)), whereas the function \({h}_{\theta }\left(x\right)\) estimates the probability that \(y=1\) as in Eq. (16):

The relation between the two probabilities \(P\left(y=1{|x;}\theta \right)\) and \(P\left(y=0{|x;}\theta \right)\) is defined in Eq. (17):

Let’s consider \({q=\theta }_{0}+{\theta }_{1}{x}_{1}+{\theta }_{2}{x}_{2}\), where \({\theta }_{0}=-2,{\theta }_{1}=1\) and \({\theta }_{2}=1\). Thus, \(q=-2+{x}_{1}+{x}_{2}\) and \({h}_{\theta }\left(x\right)=\sigma \left(q\right)=\frac{1}{1+{e}^{-(-2+{x}_{1}+{x}_{2})}}\). \(y=1\), if \(-2+{x}_{1}+{x}_{2}\ge 0\) or \({x}_{1}+{x}_{2}\ge 2\), creating a decision boundary (as in Fig. 2).

The cost function for classification depends on the dependent variable y as it can be either \(y=1\) or \(y=0\) as in Eq. (18):

If \(y=1\), when \({h}_{\theta }\left(x\right)\) tends 1, the \({Cost}\left(\theta \right)\) is very small and tends to 0, whereas when \({h}_{\theta }\left(x\right)\) tends 0, the cost is very high \({Cost}\left(\theta \right)\) and tends to infinite. Furthermore, if \(y=0\), when \({h}_{\theta }\left(x\right)\) is closer to 0, the cost tends to 0, then \({Cost}\left(\theta \right)\to 0\), whereas if \({h}_{\theta }\left(x\right)\) tends to 1, then the cost is rocketing to infinite: \({Cost}\left(\theta \right)\to \infty\) as the estimation is far from the target and it has to be significantly penalized (as in Fig. 3).

In the same way as with linear regression, where overfitting is a potential issue, a regularization term denoted by γ can be incorporated into the cost function. This addition aims to mitigate the problem of overfitting by decreasing the variance. Consequently, the cost function is modified to include this regularization term as in eq. (19).

The goal is to fine-tune the model’s weights to achieve the lowest possible value of the cost function. The gradient descent algorithm is a commonly used method for tackling this optimization challenge. By iteratively updating the weights θ, the algorithm works towards minimizing the overall cost, defined in Eq. (20).

The partial derivative of the cost function is calculated to obtain Eq. (21):

If the classification has multiple classes, \(y\epsilon \left\{\mathrm{0,1,2},\ldots ,p\right\}\), then the classification problem can be decomposed in p + 1 problems as in Eq. (22):

To evaluate the performance of classification, Accuracy (A), Precision (P), Recall (R), F1 score, and AUC are calculated as in Eq. (23):

Where y – real value, \(\hat{y}\) – estimated value.

Recall (R) or True Positive Rate (TPR) is the ratio between True Positive (TP) and the sum of TP and False negative (FN). Precision (P) is the ratio between TP and the sum of TP and False Positive (FP). Usually, F1score is calculated mostly as it represents a combination of R and P. They are defined in Eq. (24):

Area under the curve (AUC) represents the performance metric of a classifier. This metric ranges from 0.5 to 1, where 1 represents the ideal score for excellent model performance. An AUC significantly greater than 0.5, typically above 0.7, indicates strong predictive accuracy.

Results

Input data

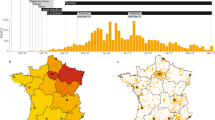

This research examines a subset of a comprehensive, publicly accessible survey, the details of which are available in a GitHub repository and on the Pew Research Center (PRC) website. The analyzed data originates from a survey panel that took place between November 1st and November 7th, 2021. Out of the 11,492 individuals selected for the survey, 10,260 completed their responses, resulting in an 89% participation rate. Therefore, the survey was designed mainly by PCR in consultation with Ipsos and responses were collected online and pre-processed (including the data quality checks) by the same institution. According to PRC methodology, when considering non-responses to initial recruitment efforts and subsequent dropouts, the overall response rate was 3%. Among those panelists who began the survey and answered at least one question, the break-off rate was 1%. For the entire sample of 10,260 respondents, the margin of error in sampling was estimated to be ±1.6 percentage points.

This survey aimed to gather data from non-institutionalized adults aged 18 and over who reside in the United States, encompassing those living in Alaska and Hawaii. Within each household, the survey methodology involved selecting the adult who had the nearest upcoming birthday to participate by completing the survey online. Each participant was provided with a pre-arranged reward for their involvement in the survey.

While the original survey explored both technological and scientific impacts, our analysis in this paper is confined to the technological aspects (that refer to 5,153 out of the 10,260 records of the dataset). This decision is due to the dataset’s complexity and its extensive range of variables. For reader convenience and to support data transparency, we included a link to the dataset on GitHub in the data availability section. This allows for the replication of our analysis.

Addressing potential biases in survey data is essential for the validity of a study’s outcomes. Concerns such as response bias, where respondents may provide socially desirable answers, can be mitigated by ensuring anonymity and using neutral, non-leading questions. Sampling bias, which occurs when the sample is not representative of the population, can be minimized through randomized sampling methods and, if necessary, stratified sampling to include underrepresented groups. We used data from the PRC. It is an organization renowned for conducting surveys, sampling, data analysis, and pre-processing. We considered their pre-processed survey data, which comes with an array of explanatory read-me files. PRC employs a rigorous survey methodology and provides a wealth of resources for each survey, including files in both CSV and PDF formats. Their surveys feature questions labeled with full descriptions and answers presented in various formats, ranging from all numeric to mixed formats. Additionally, the PRC offers detailed information on its methodology and pre-processing stages. The answers were not linked to the respondents’ names being pre-processed by the PRC.

The exploratory data analysis (EDA) provides some initial insights into the dataset sample: The QKEY column is a unique identifier for respondents, ranging from 2 to 10,260, with a total count of 5,153 entries. This indicates that the dataset is a subset of a larger survey that approached both technological and science-related issues. TECH1_W99: Responses suggest that the majority of participants (2627 out of 5153) perceive the impact of technology as “Mostly positive”, with a significant number (2036) seeing equal positive and negative effects. A smaller group (482) views it as “Mostly negative”. CNCEXC_W99: The sentiment about concern vs. excitement is fairly even, with 2,267 respondents being “Equally concerned and excited” and 1,981 being “More concerned than excited”. A smaller portion of 889 is “More excited than concerned”. ALGFAIR_W99: A plurality of respondents (2046) is “Not sure” about the fairness of algorithms, with almost equal numbers finding it “Not possible” (1580) and “Possible” (1480) for algorithms to be fair. F_PARTYSUM_FINAL: Political affiliations are split closely between “Dem/Lean Dem” (2589) and “Rep/Lean Rep” (2445), with a small number (119) not leaning towards any party or refusing to disclose. F_INC_SDT1: Income distribution varies, with the highest number of respondents (1427) reporting an income of “$100,000 or more”. The next largest group earns “Less than $30,000” (812), followed by a fairly even distribution across other income ranges. F_IDEO: Ideological self-identification is mostly “Moderate” (1,853), with “Conservative” (1,344) and “Liberal” (913) also represented. Fewer respondents identify as “Very conservative” (541) or “Very liberal” (424).

This dataset is designed to capture a diverse array of opinions on technology, societal concerns, perceptions of fairness, and personal beliefs, alongside demographic information such as political affiliation and income. The dataset in focus offers a compiled array of survey responses, designed to capture a snapshot of public opinion on a spectrum of contemporary issues. The data is organized into 126 columns, each representing a unique question or data point, and spans 5153 rows, corresponding to individual participants. The survey’s breadth covers topics as varied as technological impact (TECH1_W99), concerns and excitement about future developments (CNCEXC_W99), and perceptions of algorithmic fairness (ALGFAIR_W99). Additionally, it delves into personal beliefs and demographic details, including political leanings (F_PARTYSUM_FINAL), income brackets (F_INC_SDT1), and self-identified ideology (F_IDEO). The columns cover responses to questions regarding technology, fairness of algorithms, discrimination, and personal beliefs. Moreover, the dataset includes demographic information such as political affiliation, income brackets, and frequency of internet use, among other variables. Given the presence of certain columns such as TECH1_W99 and CNCEXC_W99, it can be inferred that the survey explores attitudes towards technological advancements and their perceived impact on society. Additionally, columns like DISCRIM1_a_W99 to DISCRIM1_f_W99 suggest an assessment of perceived discrimination across various facets of life. The survey data is rich with individual viewpoints that are categorized to facilitate a nuanced analysis of public opinion. The diversity of topics within the dataset indicates a multifaceted approach to understanding complex societal issues, ranging from technology and privacy to socio-political dynamics and personal ideology.

An EDA was performed, and it revealed a dataset that is complete and free from common data entry errors such as duplicates or missing values. This level of data integrity allows for a robust analysis of the survey responses. The data showcases a diversity of viewpoints, with no single perspective dominating the responses. For instance, opinions on technology’s impact range broadly from “Mostly positive” to “Mostly negative,” reflecting a spectrum of individual experiences and attitudes. The demographic information suggests a balanced representation of political affiliations and income levels, providing a rich ground for examining correlations between socioeconomic status and opinions on the surveyed topics. Similarly, the distribution of ideological self-identification points to a nuanced political landscape, which could be pivotal in interpreting the subtleties of the participant’s views on the fairness of algorithms and other technology-related issues.

Americans’ attitudes toward the large-scale use of facial recognition technology by law enforcement to monitor crowds and search for potential crime suspects lean more toward the positive side. Specifically, 46% of U.S. adults view this as a beneficial concept for society, while 27% consider it unfavorable, and another 27% are uncertain about it. Regarding the use of computer algorithms by social media companies to detect false information on their platforms, public opinion slightly favors it being a good idea for society (38%) rather than a bad one (31%). Ambivalence is another notable theme in the survey data: 45% indicate they feel both excitement and concern about the increased use of AI programs in daily life, while 37% express more concern than excitement, and 18% report feeling more excited than concerned.

The greatest concern relates to the loss of jobs, followed by the loss of privacy (as in Fig. 4). Top of FormBottom of Form This discussion contextualizes the survey data within the broader topic of AI acceptance, highlighting the complexity of public sentiment and the factors that influence it. The dataset encompasses a comprehensive collection of survey responses, potentially aimed at gauging public sentiment on a variety of topics.

Distributions and cross-tabulation

Education and AI technologies

To focus on the education level of the respondents and analyze its relationship with the acceptance of AI and technology, the dataset contains two columns that relate to the education of the respondents: ‘F_EDUCCAT’ and ‘F_EDUCCAT2’. These columns contain categorical data reflecting the highest level of education attained by the survey participants. We analyze the responses in these columns and cross-reference them with other columns related to attitudes toward technology and AI. This helps us understand if there are any discernible patterns or correlations between education levels and opinions on these topics. We start by examining the distribution of educational attainment among the respondents and then look at the relationship with their views on AI technology.

The analysis of the dataset with respect to the education of the respondents reveals the following education distribution: College Graduate or Higher 2620 respondents; Some College 1640 respondents; High School Graduate or Less 877 respondents; Refused 16 respondents. When broken down further (‘F_EDUCCAT2’), we notice: College Graduate/Some Postgraduate 1443 respondents; Postgraduate 1177 respondents; Some College, No Degree 1083 respondents; High School Graduate 717 respondents; Associate’s Degree 557 respondents; Less than High School 160 respondents; Refused 16 respondents (as in Fig. 5).

To determine opinion on technology’s impact (TECH1_W99), we cross-tabulate education levels with opinions on the impact of technology. Respondents with a higher education level (College Graduate+) tend to have a more positive view of technology, with 1,527 considering it “Mostly positive”. Those with a high school education or less have a greater tendency to see technology’s effects as “Equally positive and negative” (359) or “Mostly negative” (124). Some college education respondents also exhibit a balanced perspective, with significant numbers viewing technology’s impact as both positive (736) and mixed (724). Looking at perceptions of algorithm fairness (ALGFAIR_W99), college graduates and postgraduates show a higher degree of skepticism or uncertainty, with 976 “Not sure” about the fairness of algorithms and 839 finding it “Not possible” for algorithms to be fair. Those with less education also show uncertainty but with fewer respondents (398 “Not sure” for high school education or less). Across all education levels, a significant number of respondents are unsure about the fairness of algorithms, indicating a general ambiguity regarding this aspect of AI. As education often plays a role in technological acceptance and understanding, a bar plot for the opinion on algorithm fairness by the highest level of education attained is showcased in Fig. 6.

Figure 6 shows the perception of algorithm fairness by the respondents’ education level. The education levels are on the x-axis, and the number of respondents is on the y-axis. The colors differentiate the respondents’ views on whether they believe it is possible for algorithms to be fair, not possible, or if they are not sure. These provide an image of how education correlates with opinions on technology’s impact and perceptions of algorithm fairness, which are key indicators of AI acceptance.

Age and AI technologies

To focus on the age of the respondents, we analyze the distribution of respondents’ ages and examine how different age groups’ opinions vary on technology’s impact, excitement and concerns about future developments, and perceptions of algorithmic fairness. The column ‘F_AGECAT’ represents the age categories of the respondents. We further analyze the distribution of respondents’ ages and then look at how these age groups relate to their views on technology’s impact (TECH1_W99), excitement and concerns about future developments (CNCEXC_W99) and perceptions of algorithmic fairness (ALGFAIR_W99). Age distribution is as follows: Ages 30–49 1,642 respondents; Ages 65 + 1,527 respondents; Ages 50–64 1,509 respondents; Ages 18–29 457 respondents; Refused to specify 18 respondents. Analyzing these age segments and opinions on technology’s impact (TECH1_W99), younger respondents (ages 18–29) show a more positive view of technology’s impact, with the majority (237) considering it “Mostly positive”. The 30–49 age group also leans toward a “Mostly positive” view (774) but with a substantial number seeing equal positive and negative effects (702). Older age groups (50–64 and 65+) show a balanced perspective, with a significant number viewing technology’s impact as both positive (754 and 857, respectively) and mixed (603 and 546, respectively). Furthermore, analyzing the age groups and the concern vs. excitement about future developments (CNCEXC_W99), the youngest cohort (18–29) exhibits a higher level of excitement than concern, with a notable proportion equally concerned and excited (215). As age increases, the level of concern seems to rise, with the 50–64 and 65+ groups showing the highest number of respondents more concerned than excited (643 and 651, respectively). Moreover, considering the perception of algorithm fairness (ALGFAIR_W99), the following aspects were extracted. There is a fair degree of uncertainty across all age groups regarding the fairness of algorithms, with “Not sure” being a common response. The perception of algorithms as “Not possible” to be fair is consistently high across all age groups, indicating a widespread skepticism about AI fairness.

Gender, race, and AI technologies

To focus on the gender and race of the respondents and their relationship with attitudes toward AI and technology, we analyze how opinions on technology’s impact, excitement and concerns about future developments, and perceptions of algorithmic fairness vary by these demographic factors. The columns ‘F_GENDER’, ‘F_RACECMB’, and ‘F_RACETHNMOD’ correspond to the respondents’ gender and race/ethnicity. First, we analyze the distribution of respondents’ gender and race and then look at how these demographic groups relate to their views on technology’s impact (TECH1_W99), excitement and concerns about future developments (CNCEXC_W99), and perceptions of algorithmic fairness (ALGFAIR_W99). The gender distribution reveals: Women 2,840 respondents; Men: 2,270 respondents; Other/Non-binary: 31 respondents; Refused: 12 respondents, while the race distribution distinguished among White: 4,072 respondents; Black or African-American 436 respondents; Mixed Race: 186 respondents; Asian or Asian-American 180 respondents; Other Races 177 respondents; Refused: 102 respondents.

Their opinions on technology’s impact (TECH1_W99) reflect that both men and women predominantly see technology’s impact as either “Mostly positive” or “Equal positive and negative effects.” However, women are slightly more likely than men to see the impact as “Mostly negative”. Respondents who identify as Asian or Asian-American are most likely to view technology’s impact as “Mostly positive,” followed by White respondents. Regarding the concern vs. excitement about future developments (CNCEXC_W99), women are slightly more likely than men to be “More concerned than excited” about future technological developments. Black or African-American respondents are the most likely to be “More concerned than excited,” whereas Asian or Asian-American respondents are more likely to be “More excited than concerned.” The perception of algorithm fairness (ALGFAIR_W99) that a higher proportion of women than men believe it is “Not possible” for algorithms to be fair, whereas men are more likely to believe it is “Possible”. White respondents show the greatest skepticism about the possibility of fair algorithms, with the highest count of respondents who believe it is “Not possible” (as in Fig. 7).

Religion, ideology, and AI technologies

To analyze the survey data based on the religion and ideology of the respondents, we explore how different religious and ideological groups perceive the impact of technology, their concerns and excitement about future developments, and their views on the fairness of algorithms. The columns ‘F_RELIG’ and ‘F_RELIGCAT1’ correspond to the respondents’ religious affiliations, and ‘F_PARTYSUMIDEO_FINAL’ and ‘F_IDEO’ to their ideological leanings. We analyze the distribution of respondents’ religion and ideology and then look at how these demographic groups relate to their views on technology’s impact (TECH1_W99), excitement and concerns about future developments (CNCEXC_W99), and perceptions of algorithmic fairness (ALGFAIR_W99).

Examining the distribution of religion among the respondents, we find that the largest group of respondents identified as Protestant (2,204), followed by Roman Catholic (1,075), and those with no particular religious affiliation (826). Smaller religious groups included Agnostics (307), Atheists (279), and other faiths like Jewish (108), Mormon (100), Buddhist (34), Hindu (26), and Muslim (24). Regarding ideology distribution, Moderates represent the largest ideological group (1,853), followed by Conservatives (1,344), Liberals (913), Very Conservatives (541), and Very Liberals (424). A small number refused to specify their ideology (78). Their opinions on technology’s impact (TECH1_W99) reveal that across most religious groups, there’s a balance between seeing technology’s effects as equally positive and negative. However, Atheists and Agnostics tend to have a more positive view. Ideologically, Moderates and Conservatives are more inclined to see technology’s impact as equally positive and negative, while Liberals and Very Liberals are more likely to see it as mostly positive. Concerns and excitement about future technological developments (CNCEXC_W99) are distributed relatively evenly across religious affiliations, with no significant outliers. Ideologically, Moderates and Conservatives tend to be more concerned than excited, while Liberals and Very Liberals show more excitement than concern. The perception of algorithm fairness (ALGFAIR_W99) reflects that skepticism about the possibility of algorithms being fair is high across all religious groups, with Protestants and Roman Catholics having the highest number of respondents who think it is not possible. Ideologically, Conservatives and Very Conservatives are more likely to believe that it is not possible for algorithms to be fair, while Liberals and Very Liberals are more divided between “Not possible” and “Not sure.”

Income and AI technologies

To analyze the survey data based on the income levels of the respondents, represented by the columns ‘F_INC_SDT1’ and ‘F_INC_TIER2’, we look into how income correlates with attitudes toward technology, concerns about future developments, and views on the fairness of algorithms.

A summary of the analyses focusing on the income levels (‘F_INC_SDT1’ and ‘F_INC_TIER2’) of the respondents is provided. The highest number of respondents have an income of $100,000 or more (1427). The next largest group earns less than $30,000 (812). Other income ranges have smaller counts, with the least being those earning $80,000 to less than $90,000 (277). Some respondents refused to disclose their income (244). Middle income is the largest group (2560). Upper income is next (1222), followed by lower income (1090). A number of respondents refused to disclose their income tier (281).

Their opinions on technology’s impact (TECH1_W99) show that in the ‘F_INC_SDT1’ category, those earning $100,000 or more have the highest number of respondents viewing technology’s impact as “Mostly positive” (846), while also having a considerable portion seeing it as “Equal positive and negative effects” (481). The ‘F_INC_TIER2’ category shows similar patterns, with upper-income respondents more likely to view technology’s impact as “Mostly positive” compared to other income tiers. Regarding concern vs. excitement about future developments (CNCEXC_W99), for ‘F_INC_SDT1’, lower-income respondents have a higher count of being “More concerned than excited” (445) compared to those excited (176), whereas upper-income individuals are more balanced with a considerable number being “More excited than concerned” (268). ‘F_INC_TIER2’ analysis shows that middle-income respondents are more evenly split between concern and excitement, with a notable number being “Equally concerned and excited” (1119). Concerning the perception of algorithm fairness (ALGFAIR_W99), respondents across all income levels in the ‘F_INC_SDT1’ category show skepticism about the possibility of algorithms being fair, with high-income earners being the most doubtful (440 believe it’s “Not possible”). The ‘F_INC_TIER2’ category also reflects skepticism across all tiers, with middle-income individuals showing the highest number of respondents who think it is “Not possible” for algorithms to be fair (812).

In Fig. 8, the opinion on AI technology’s impact on income is showcased.

The income tiers are represented on the x-axis, and the number of respondents is on the y-axis. Different colors indicate whether respondents view technology’s impact as mostly positive, mostly negative, or equally positive and negative.

Clustering using K-means

The columns QKEY, SMALG3_W99, F_REG, and F_INTFREQ that contain identifiers or strings are removed and the values are scaled using a standard scaler, which centers the data around zero with a standard deviation of one. The majority of data points are grouped into Cluster 4 (using 5 clusters setting) and Cluster 1 (using 3 clusters), while the other clusters contain significantly fewer points.

After rerunning the K-means clustering with 5 and 3 clusters, the respondents’ distribution is presented in Table 2.

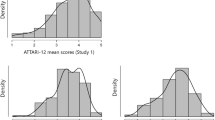

With 3 clusters, the majority of the respondents are in Cluster 1, while Clusters 0 and 2 are much smaller. To visualize the clusters, a dimensionality reduction technique such as Principal Component Analysis (PCA) is applied to reduce the dataset to 2 dimensions for a 2D plot, or to 3 dimensions for a 3D plot (as in Fig. 9). The silhouette score, with the clusters assigned by K-Means, is approximately 0.828. This score suggests a good separation between the clusters, indicating that the clustering configuration is well-defined for the sampled data. In our research, we explored various datasets that contained both numeric and non-numeric variables. We applied multiple clustering techniques, including K-prototypes, DBSCAN, and hierarchical and spectral clustering. However, we found that the K-means clustering algorithm yielded the most effective results in terms of segmentation quality.

Each color represents one of the three clusters: Red: Cluster 0; Green: Cluster 1; Blue: Cluster 2. ANOVA is performed for each feature across the three clusters. For most features, the p-values are significantly low (often below 0.05), suggesting that there are statistically significant differences in the mean values of those features across the clusters. This indicates that the clustering has effectively separated the respondents into groups with distinct characteristics. ANOVA is useful for identifying features that contribute significantly to clustering, which can help in interpreting the clusters or in feature selection for further modeling.

The centroids of the clusters represent the mean value for each feature within the cluster. These are the centroids in the original feature space for each of the three clusters: Cluster 0: This cluster has higher average values for several features compared to Cluster 1 but is generally lower than Cluster 2. Features like TECH1_W99, CNCEXC_W99, and ALGFAIR_W99 are notably higher than Cluster 1 but less than Cluster 2. This cluster might represent a middle ground between the other two clusters in terms of features; Cluster 1: This cluster tends to have the lowest average values across most features. It suggests that this cluster may represent a group with less pronounced characteristics in terms of the variables measured or a baseline group in comparison to the other clusters; Cluster 2: This cluster has the highest average values for the features, particularly for TECH1_W99, CNCEXC_W99, ALGFAIR_W99, DISCRIM1_a_W99, and DISCRIM1_b_W99.

This indicates that this cluster’s characteristics are quite distinct and more pronounced in comparison to the other two clusters. These interpretations are general and based on the average values of the features; individual cluster members may vary. The centroids can help in understanding the profiles of the respondents in each cluster, which could be useful for targeted analysis, marketing, or further study. The feature with the most variation across the centroids of the three clusters is DCARS11_b_W99: A feature with high variance among the clusters, suggesting that respondents have significantly different values for this feature across clusters; DCARS11_a_W99: Similar to the previous feature, indicating that this feature strongly differentiates the clusters. The feature-specific analysis for the selected features shows the following mean responses for each cluster are presented in Table 3.

From these observations, we notice that Cluster 2 is characterized by a strong engagement with the survey’s topics, particularly concerning technology and societal issues. Cluster 0 appears to have moderate views, while Cluster 1 seems less engaged or concerned about these topics. To understand the profiles of the respondents in each cluster, we can look at the centroids for each cluster and interpret them based on the features that stand out. A general profile summary based on the centroids provided earlier is provided: Cluster 0 - The Moderates. It has moderate average values for most features, neither extremely high nor low. This could indicate a more balanced or moderate perspective on various issues. Respondents in this cluster might not hold extreme views and could represent a general population in terms of the survey’s topics; Cluster 1—The Baseline. It shows the lowest average values for most of the features. This suggests that respondents in this cluster may be less engaged or have less intense opinions on the surveyed topics. This could be the most common profile, possibly representing the average respondent with no strong inclinations toward the topics surveyed; Cluster 2—The Intensives. It exhibits the highest values across nearly all features, indicating strong opinions, higher engagement, or more pronounced behaviors in the topics covered by the survey. Respondents in this group might be more passionate, involved, or affected by the survey’s topics, which could make them stand out in specific areas.

For a more detailed profile, we map the questions’ code with their description. With that information, we can interpret the clusters’ profiles more precisely in the context of the survey and refine our understanding of the three clusters: Cluster 0 - The Cautious Moderates. This group appears to be moderate in their acceptance of new technologies like driverless vehicles and facial recognition, possibly favoring some regulations like dedicated lanes for driverless cars (‘DCARS11_a_W99’) and identifiable labels (‘DCARS11_b_W99’). They might hold moderate views on the role of companies and federal agencies in setting standards for facial recognition technology used by the police (‘FACEREC6_b_W99’ and ‘FACEREC6_a_W99’). Their opinions on social media’s role in filtering false information (‘SMALG4_d_W99’ and ‘SMALG12_W99’) might be balanced, suggesting they see both benefits and drawbacks in technology’s role in public discourse. Cluster 1—The Baseline or Technology Skeptics. Members of this cluster could be less inclined towards the adoption of new technologies, perhaps less excited about the prospect of enhancements like advanced vision (‘POSNEGHE_e_W99’), or less accepting of driverless vehicles under various conditions (‘DCARS11’ series). They might also be more skeptical of the effectiveness of computer programs in filtering false information on social media (‘SMALG4_d_W99’ and ‘SMALG12_W99’). This cluster could be more conservative in its views on how facial recognition technology is regulated and used by law enforcement (‘FACEREC6’ series). Cluster 2—The Technologically Enthusiastic or Concerned. This group likely has strong opinions about technology, possibly showing a high level of acceptance for driverless vehicles (‘DCARS11’ series) and new human abilities through technological advancements (‘POSNEGHE_e_W99’). They may advocate for a significant role of both companies and government agencies in setting standards for the use of facial recognition technology by the police (‘FACEREC6_b_W99’ and ‘FACEREC6_a_W99’). Concerns about racial bias in the use of facial recognition technology by the police (‘FACEREC3_c_W99’) and strong views on the role of technology in combating false information on social media (‘SMALG4_d_W99’ and ‘SMALG12_W99’) could also be defining characteristics.

These profiles are inferred from the centroids, which represent the average values for each cluster, and are based on the assumption that higher values indicate greater acceptance or concern, as suggested by the descriptions. Each cluster seems to have a unique stance on technology and its implications for society. Corroborating the below features specifically with their description in the survey, significant differences suggest that they are the most influential in distinguishing the groups defined by the clustering algorithm.

-

FACEREC6_b_W99: Another distinctive feature among the clusters, possibly relating to a respondent’s recognition or attitude towards something specific.

-

FACEREC6_a_W99: As with the “b” variant of this feature, it seems to be a strong differentiator between clusters.

-

FACEREC3_c_W99: This feature also shows considerable variance and may represent a unique aspect of the respondent’s profile.

-

SMALG4_d_W99: Indicates a distinctive characteristic or opinion among the clusters.

-

POSNEGHE_e_W99: Could represent a particular positive or negative health aspect that differs significantly among clusters.

-

DCARS11_c_W99: Like the other “DCARS11” features, this one is also a strong differentiator.

-

SMALG12_W99: Another feature that significantly varies among clusters.

The features with the most variation across clusters relate to opinions on emerging technologies such as driverless vehicles, facial recognition, and computer programs to detect false information. They can be used to understand the clusters’ general attitudes toward technology and regulation. For example, a cluster with high values in DCARS11_b_W99 and DCARS11_a_W99 may consist of respondents who are more accepting of driverless vehicles under certain conditions. Conversely, a cluster with low values in FACEREC3_c_W99 might be less concerned about potential racial biases in the use of facial recognition technology by the police. For a more nuanced understanding, it is useful to visualize the distribution of these key features within clusters to see how the responses vary (as in Fig. 10). Figure 10 and Table 3 show the differences in mean responses between the clusters for the selected features. As of TECH1_W99 (Opinions on technology), the mean response for Cluster 1 is approximately 1.5 points lower than that of Cluster 0, indicating less enthusiasm or engagement with technology. Cluster 2’s mean response is significantly higher than Cluster 1’s by over 16 points, reflecting a much stronger sentiment towards technology among respondents in Cluster 2. Regarding CNCEXC_W99 (Views on the concept of excellence), the mean response for Cluster 1 is about 2.6 points lower than Cluster 0’s, suggesting different standards or priorities regarding excellence. Cluster 2’s mean response is dramatically higher than Cluster 1’s by about 40 points, indicating that the concept of excellence is significantly more important to respondents in Cluster 2. In terms of ALGFAIR_W99 (Views on the fairness of algorithms), Cluster 1 has a mean response that is about 0.78 points lower than Cluster 0, suggesting less concern or awareness of algorithmic fairness. The mean response for Cluster 2 is about 23.8 points higher than Cluster 1, which could indicate a strong opinion on the fairness of algorithms, possibly perceiving them as often unfair.

As of DISCRIM1_a_W99 (Views on discrimination), Cluster 1 shows a mean response that is about 6.6 points lower than Cluster 0, indicating that discrimination might not be perceived as a significant issue among this group. Cluster 2 has a mean response that is about 39.6 points higher than Cluster 1, which could signify a heightened awareness or concern regarding discrimination. Related to FACEREC3_c_W99 (Opinions on potential racial bias in the use of facial recognition), the mean response for Cluster 1 is about 41.9 points lower than Cluster 0, suggesting significantly less concern about potential racial bias.